1004ce84a47e8fc0fb885bd038c5c38a.ppt

- Number of slides: 43

Bibliomining for Automated Collection Development in a Digital Library Setting: Using Data Mining to Discover Web-Based Scholarly Research Works

Scott Nicholson, Ph.D., M.L.I.S.
Assistant Professor, Syracuse University School of Information Studies

Research Inspiration
- Many academic research works are published online
  - Publicly accessible e-journals
  - Conference proceedings
  - Research & information centers
  - Course pages
  - Home pages
- ...but are not easy to find using general search tools.

Purpose of Research
To create an information agent that discriminates between Web pages containing scholarly research works and pages that do not contain scholarly research.

Primary Research Tool
- Bibliomining = Data Mining for Libraries
- Data Mining
  - Uses low-level data
  - Searches for patterns
  - Descriptive or predictive
- More information at bibliomining.org

Background
- Purpose of academic library in research
- Nonexistence of automated Internet equivalent of general academic research library
- General search tools not effective
- Production too rapid for human collection
- Collections of e-journals
- Human-collected lists of pages

Similar Tools - Human
- Infomine (http://infomine.ucr.edu)
  - Univ. of California, librarian-selected collections of “academically valuable resources”
- BUBL (http://bubl.ac.uk/)
  - University of Strathclyde, run from Centre for Digital Library Research, aimed at “UK higher education academic and research community”
- Virtual reference resources
- Dynamic and static

Similar Tools - Automated
- ResearchIndex/CiteSeer (Lawrence, Giles, Bollacker)
  - Science (emphasis on CS domain), uses citations
- CORA/JustResearch (McCallum, Nigam, Rennie, and Seymore)
  - Emphasis on CS domain, uses departmental home pages
- Pubsearch (Yulan and Cheung)
  - Uses a seed list of citations to find similar works

Introduction to the Study

Problem Statement
- There is no equivalent of the academic library on the World Wide Web:
  - A large database of Web-based scholarly research not bounded by domain.
- The first step is to create the underlying decision-making model which will identify scholarly research works.
  - In the library, this is the role of collection development.

Scholarly Research Works
- Scholarly - Written by researchers of an academic or non-profit research institution or published in a peer-reviewed academic journal.
- Research - “Systematic investigation towards increasing the sum of knowledge” (Dickinson)
- Works - A Web page that contains the full text of a research report
- Limitations: English, HTML/Text, one-page, “visible”

Accuracy and Return
- Based on traditional Cranfield measures
- Accuracy (Precision) = (number of correctly identified pages) / (total number of pages identified by model)
- Return (Recall) = (number of correctly identified pages) / (total number of scholarly pages in database)
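The two measures can be checked with a few lines of arithmetic. The sketch below is an illustration, not the study's code: the function name is hypothetical, and it assumes the 500 scholarly / 500 non-scholarly test set described later in the deck, where every non-scholarly page the model gets wrong counts as a page wrongly identified as scholarly.

```python
def accuracy_and_return(scholarly_correct, nonscholarly_correct,
                        scholarly_total=500, nonscholarly_total=500):
    """Return (accuracy, return) as fractions, i.e. precision and recall."""
    # Non-scholarly pages wrongly labeled as scholarly (false positives).
    false_positives = nonscholarly_total - nonscholarly_correct
    # Every page the model identified as scholarly, rightly or wrongly.
    identified = scholarly_correct + false_positives
    accuracy = scholarly_correct / identified        # precision
    return_ = scholarly_correct / scholarly_total    # recall
    return accuracy, return_


# Counts reported in the deck for the logistic regression model.
acc, ret = accuracy_and_return(463, 473)
print(f"accuracy={acc:.1%} return={ret:.1%}")  # accuracy=94.5% return=92.6%
```

Under these assumptions the function reproduces the accuracy and return figures reported for each of the four models from their correct-classification counts.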

Problematic Pages
Problematic pages are those that look to this agent like scholarly research works but are not.
- Non-annotated bibliographies
- Syllabi
- Vitae
- Book reviews
- Non-scholarly articles
- Foreign research
- Partial research
- Corporate research
- Research proposals
- Abstracts

Methodology

Methodology
- Collect criteria from experts
- Create tool that can analyze criteria
- Collect sample pages
- Run tool on pages to create datasets
- Use datasets to create and test models

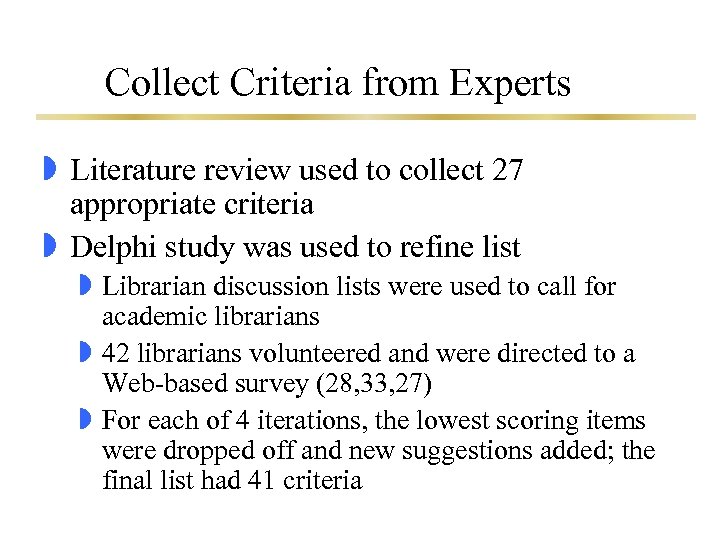

Collect Criteria from Experts
- Literature review used to collect 27 appropriate criteria
- Delphi study was used to refine list
- Librarian discussion lists were used to call for academic librarians
- 42 librarians volunteered and were directed to a Web-based survey (28, 33, 27)
- For each of 4 iterations, the lowest-scoring items were dropped and new suggestions added; the final list had 41 criteria

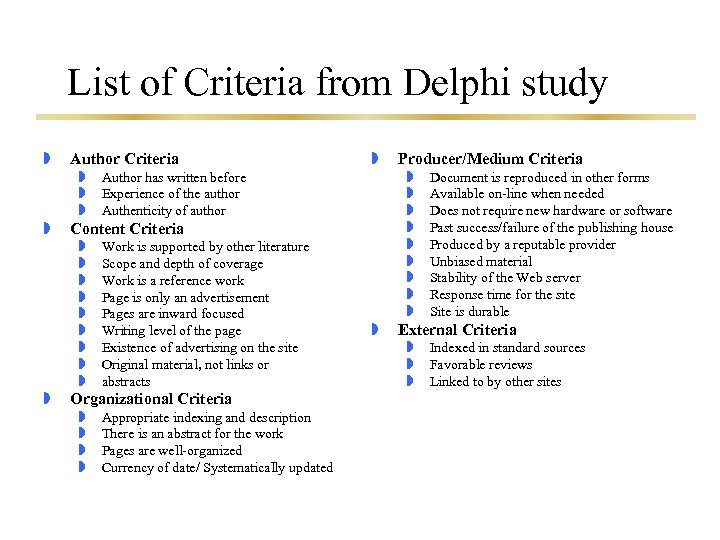

List of Criteria from Delphi study
- Author Criteria
  - Author has written before
  - Experience of the author
  - Authenticity of author
- Content Criteria
  - Work is supported by other literature
  - Scope and depth of coverage
  - Work is a reference work
  - Page is only an advertisement
  - Pages are inward focused
  - Writing level of the page
  - Existence of advertising on the site
  - Original material, not links or abstracts
- Organizational Criteria
  - Appropriate indexing and description
  - There is an abstract for the work
  - Pages are well-organized
  - Currency of date / systematically updated
- Producer/Medium Criteria
  - Document is reproduced in other forms
  - Available on-line when needed
  - Does not require new hardware or software
  - Past success/failure of the publishing house
  - Produced by a reputable provider
  - Unbiased material
  - Stability of the Web server
  - Response time for the site
  - Site is durable
- External Criteria
  - Indexed in standard sources
  - Favorable reviews
  - Linked to by other sites

Create Tool that can Analyze Criteria
- Perl was used for its pattern matching and ease of interfacing with the Internet
- Many criteria were operationalized, programmed, and tested on a set of test pages

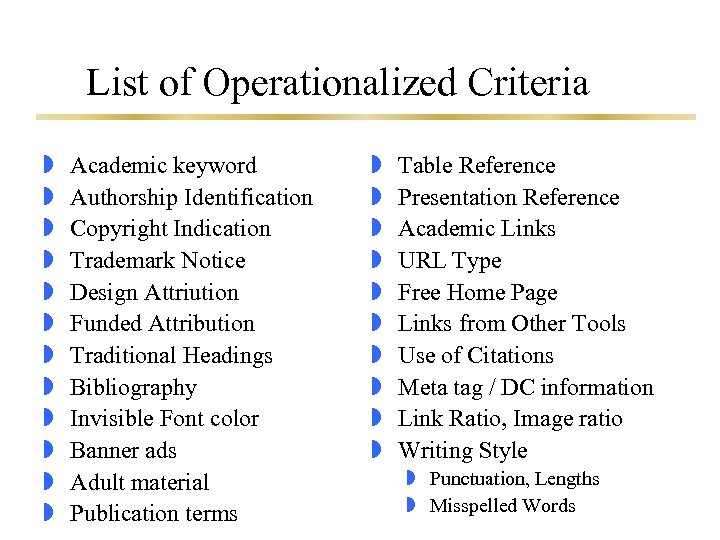

List of Operationalized Criteria
- Academic keyword
- Authorship Identification
- Copyright Indication
- Trademark Notice
- Design Attribution
- Funded Attribution
- Traditional Headings
- Bibliography
- Invisible Font color
- Banner ads
- Adult material
- Publication terms
- Table Reference
- Presentation Reference
- Academic Links
- URL Type
- Free Home Page
- Links from Other Tools
- Use of Citations
- Meta tag / DC information
- Link Ratio, Image ratio
- Writing Style
  - Punctuation, Lengths
  - Misspelled Words
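To make "operationalized" concrete, here is a minimal sketch of how a handful of these criteria might be turned into pattern-matching checks. It is written in Python rather than the Perl the study used, and every pattern, heading list, and name in it is an assumption for illustration; the slides do not give the tool's actual rules.

```python
import re

# Hypothetical heading vocabulary; the study's real list is not given.
HEADINGS = ("abstract", "introduction", "method", "methodology",
            "results", "discussion", "conclusion", "references")
BIBLIOGRAPHY_LABELS = ("references", "bibliography", "works cited")


def analyze_page(text):
    """Compute a few illustrative criteria from the plain text of a page."""
    words = re.findall(r"[A-Za-z']+", text)
    # Rough sentence split on terminal punctuation; abbreviations will
    # inflate the count, so treat it as an approximation.
    sentences = [s for s in re.split(r"[.!?]+\s+", text) if s.strip()]
    lines = [line.strip().lower() for line in text.splitlines()]
    return {
        "num_sentences": len(sentences),
        "avg_word_length": (sum(len(w) for w in words) / len(words)
                            if words else 0.0),
        # A line consisting only of a bibliography label.
        "has_bibliography": any(l in BIBLIOGRAPHY_LABELS for l in lines),
        # In-text reference to "Table 1".
        "table_reference": bool(re.search(r"\bTable\s+1\b", text)),
        # Lines that are exactly one of the traditional headings.
        "traditional_headings": sum(1 for l in lines if l in HEADINGS),
        # Occurrences of academic titles such as Dr., Ph.D., Professor.
        "academic_titles": len(
            re.findall(r"\b(?:Dr\.|Ph\.?\s?D\.?|Professor)", text)),
    }


sample = ("Introduction\n"
          "Dr. Smith studies citation patterns. See Table 1 for data.\n"
          "References\n"
          "Smith, J. (1999).\n")
features = analyze_page(sample)
```

Each page then becomes one row of numeric and yes/no values, which is the form the modeling tools need.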

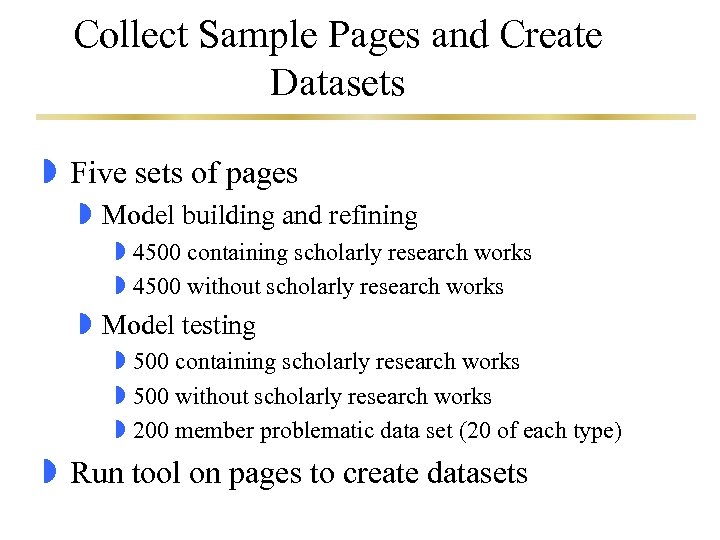

Collect Sample Pages and Create Datasets
- Five sets of pages
  - Model building and refining
    - 4500 containing scholarly research works
    - 4500 without scholarly research works
  - Model testing
    - 500 containing scholarly research works
    - 500 without scholarly research works
  - 200-member problematic data set (20 of each type)
- Run tool on pages to create datasets

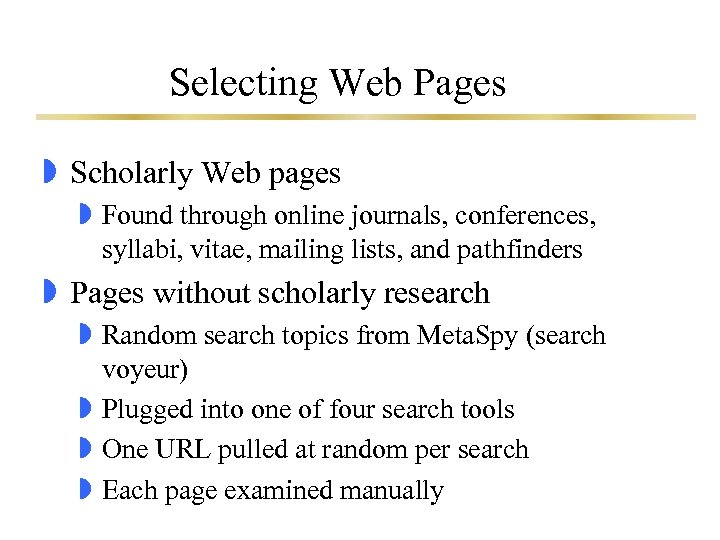

Selecting Web Pages
- Scholarly Web pages
  - Found through online journals, conferences, syllabi, vitae, mailing lists, and pathfinders
- Pages without scholarly research
  - Random search topics from MetaSpy (search voyeur)
  - Plugged into one of four search tools
  - One URL pulled at random per search
- Each page examined manually
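The selection procedure for pages without scholarly research can be sketched as a small sampling loop. This is an illustration under stated assumptions: the search tools are modeled as hypothetical callables that take a topic and return a list of URLs, and the function name and retry limit are invented here. The manual examination step is not automated.

```python
import random


def sample_nonscholarly_urls(topics, search_tools, n, rng=None):
    """Pick up to n candidate URLs: random topic -> random tool -> one random hit."""
    if rng is None:
        rng = random.Random()
    picked = []
    attempts = 0
    # Bounded retries so the loop ends even if searches keep coming back empty.
    while len(picked) < n and attempts < 10 * n:
        attempts += 1
        topic = rng.choice(topics)          # random user search topic
        search = rng.choice(search_tools)   # one of the available search tools
        results = search(topic)
        if results:
            picked.append(rng.choice(results))  # one URL at random per search
    return picked


# Stand-in search tools for demonstration only; real tools would query the Web.
def fake_tool_a(topic):
    return [f"http://a.example/{topic}/{i}" for i in range(3)]


def fake_tool_b(topic):
    return [f"http://b.example/{topic}/{i}" for i in range(3)]


candidates = sample_nonscholarly_urls(
    ["weather", "recipes"], [fake_tool_a, fake_tool_b], n=5,
    rng=random.Random(0))
```

Every candidate returned this way would still need to be examined by hand before it enters a dataset.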

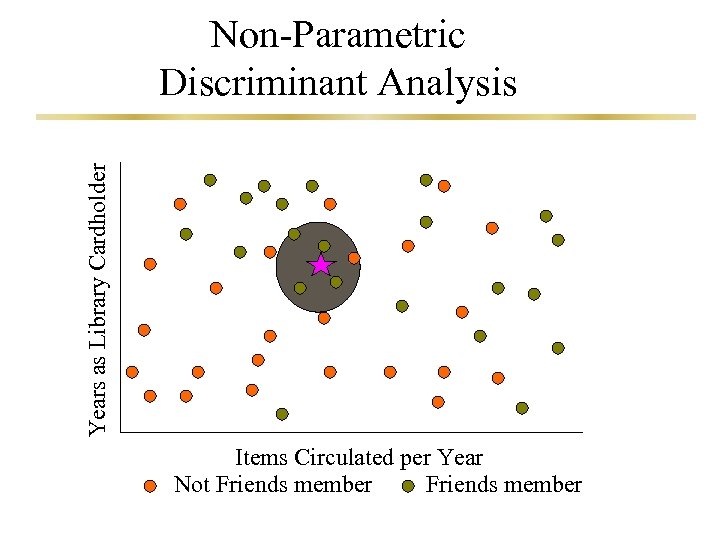

Use Datasets to Create and Test Models
- Four modeling tools
  - Logistic Regression
  - Non-parametric Discriminant Analysis
  - Classification Tree
  - Neural Networks
- Each is used to create a model, modified with a subset of the model-creation group, and then tested against the evaluation groups

Logistic Regression
[Figure: illustrative plot with axes Circulation and Library Visitors]

Non-Parametric Discriminant Analysis
[Figure: illustrative plot with axes Years as Library Cardholder and Items Circulated per Year; label: Not Friends member]

Classification Tree
[Figure: illustrative tree. Split on Years as Library Cardholder (0-10, 11+); split on Items Circulated Per Year (0-10: 90% No; 11-30: 75% Yes; 31+: 82% No)]

Neural Network
[Figure: illustrative network with unlabeled nodes and weights *4, /2, *3]

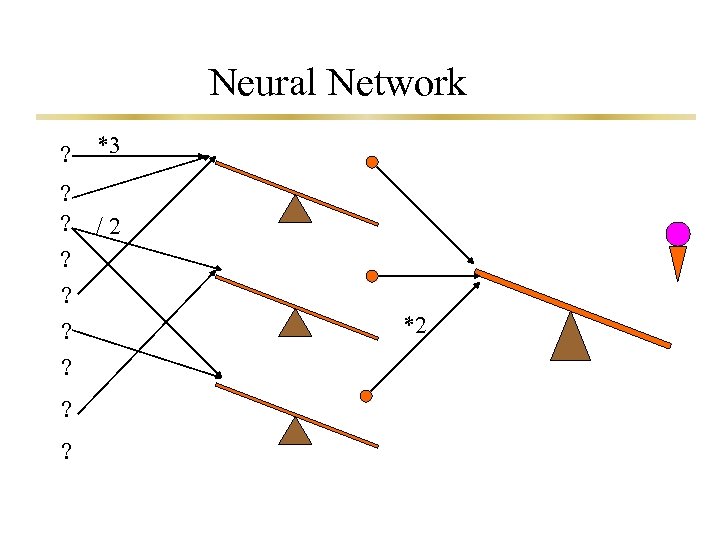

Neural Network
[Figure: illustrative network with unlabeled nodes and weights *3, /2, *2]

Results of the Study

Logistic Regression
- Combined 21 criteria
- 463 scholarly / 473 non-scholarly correct
- 94.5% accuracy / 92.6% return
- Problematic pages:
  - NS: .edu, long text, few links
  - S: .com, no headings, graphics
- Missed 30% of problematic dataset
  - Missed bibliographies, vitae, research proposals
  - Correct on all non-scholarly articles

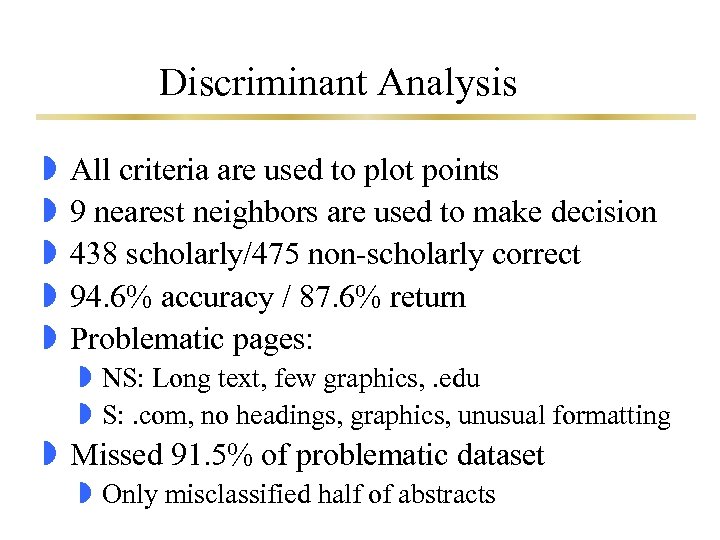

Discriminant Analysis
- All criteria are used to plot points
- 9 nearest neighbors are used to make decision
- 438 scholarly / 475 non-scholarly correct
- 94.6% accuracy / 87.6% return
- Problematic pages:
  - NS: Long text, few graphics, .edu
  - S: .com, no headings, graphics, unusual formatting
- Missed 91.5% of problematic dataset
  - Only misclassified half of abstracts
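The nearest-neighbor decision can be illustrated with a short majority-vote sketch. The slides state only that all criteria are plotted as points and that 9 nearest neighbors decide; the Euclidean distance, the unscaled features, the function name, and the toy data below are all assumptions.

```python
import math
from collections import Counter


def knn_classify(train, query, k=9):
    """Label `query` by majority vote among its k nearest training points.

    train: list of (feature_vector, label) pairs
    query: feature vector of the same length as the training vectors
    """
    # Sort training points by Euclidean distance to the query (assumed metric).
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy two-feature data (hypothetical values, e.g. headings and references).
train = ([((8 + i * 0.2, 20 + i), "S") for i in range(10)]
         + [((i * 0.2, i), "NS") for i in range(10)])
label = knn_classify(train, (9.0, 25.0))  # closest points are all "S"
```

With an odd k and two classes, the vote cannot tie, which is one practical reason to pick a value such as 9.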

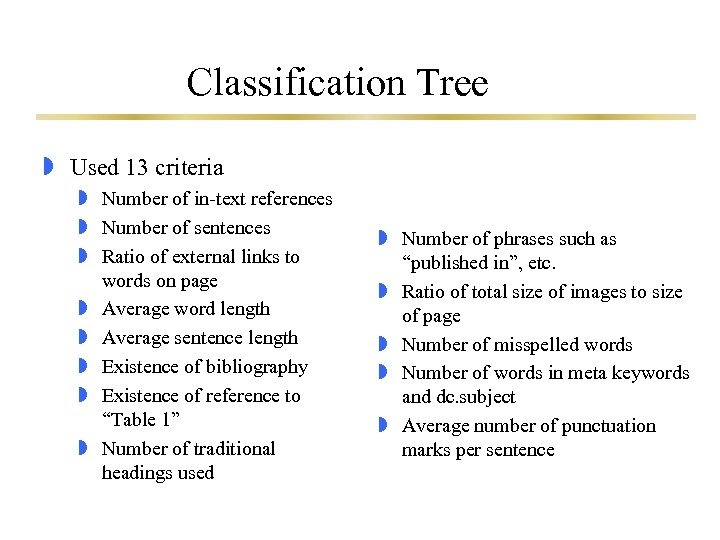

Classification Tree
- Used 13 criteria
  - Number of in-text references
  - Number of sentences
  - Ratio of external links to words on page
  - Average word length
  - Average sentence length
  - Existence of bibliography
  - Existence of reference to “Table 1”
  - Number of traditional headings used
  - Number of phrases such as “published in”, etc.
  - Ratio of total size of images to size of page
  - Number of misspelled words
  - Number of words in meta keywords and dc.subject
  - Average number of punctuation marks per sentence

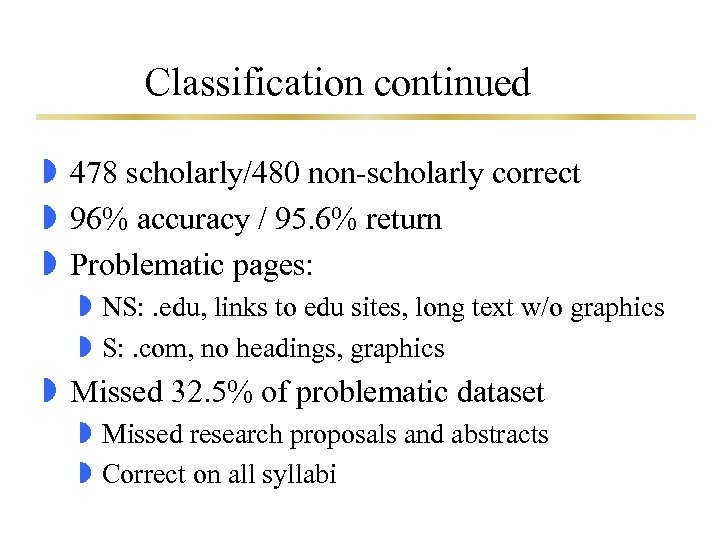

Classification continued
- 478 scholarly / 480 non-scholarly correct
- 96% accuracy / 95.6% return
- Problematic pages:
  - NS: .edu, links to edu sites, long text w/o graphics
  - S: .com, no headings, graphics
- Missed 32.5% of problematic dataset
  - Missed research proposals and abstracts
  - Correct on all syllabi

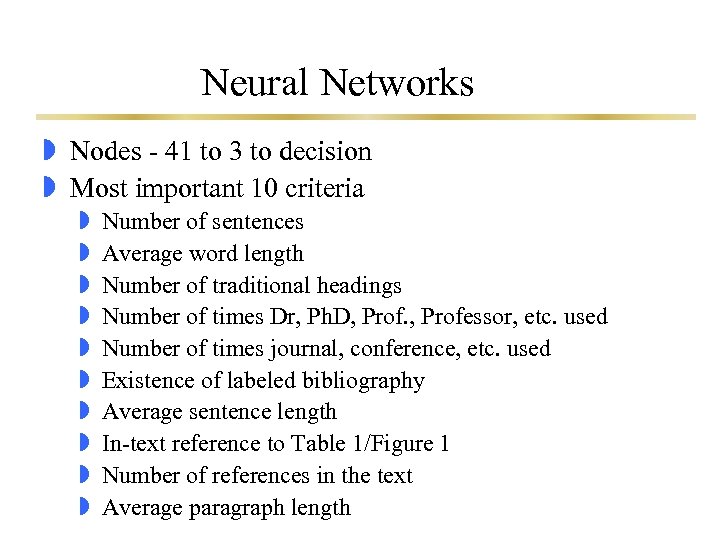

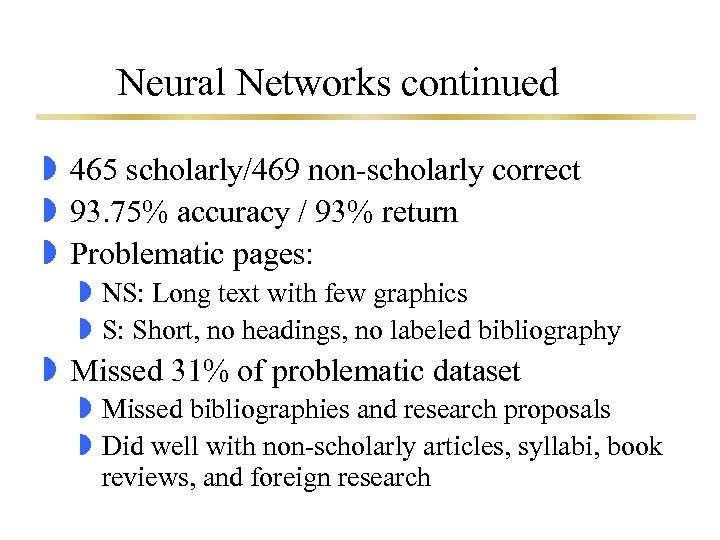

Neural Networks
- Nodes - 41 to 3 to decision
- Most important 10 criteria
  - Number of sentences
  - Average word length
  - Number of traditional headings
  - Number of times Dr, Ph.D, Professor, etc. used
  - Number of times journal, conference, etc. used
  - Existence of labeled bibliography
  - Average sentence length
  - In-text reference to Table 1/Figure 1
  - Number of references in the text
  - Average paragraph length

Neural Networks continued
- 465 scholarly / 469 non-scholarly correct
- 93.75% accuracy / 93% return
- Problematic pages:
  - NS: Long text with few graphics
  - S: Short, no headings, no labeled bibliography
- Missed 31% of problematic dataset
  - Missed bibliographies and research proposals
  - Did well with non-scholarly articles, syllabi, book reviews, and foreign research

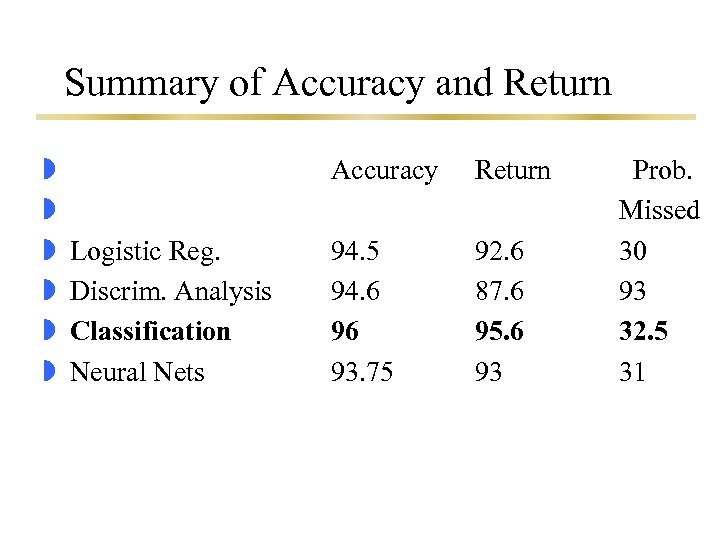

Summary of Accuracy and Return

| Model | Accuracy (%) | Return (%) | Prob. Missed (%) |
|---|---|---|---|
| Logistic Reg. | 94.5 | 92.6 | 30 |
| Discrim. Analysis | 94.6 | 87.6 | 93 |
| Classification | 96 | 95.6 | 32.5 |
| Neural Nets | 93.75 | 93 | 31 |

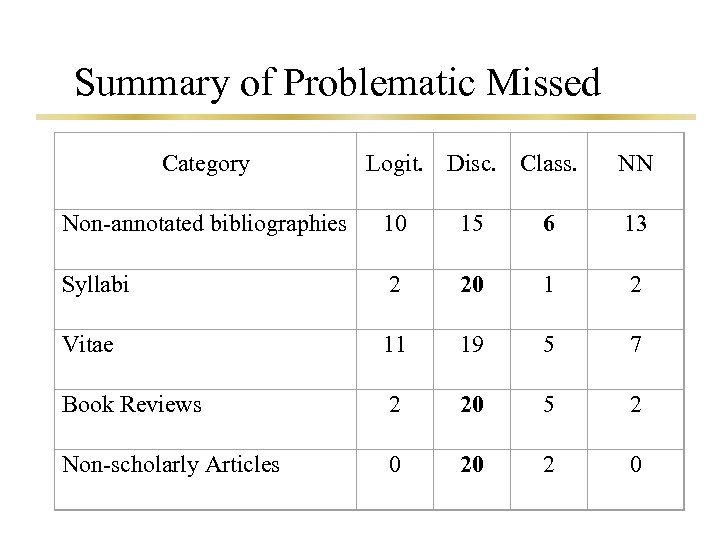

Summary of Problematic Missed

| Category | Logit. | Disc. | Class. | NN |
|---|---|---|---|---|
| Non-annotated bibliographies | 10 | 15 | 6 | 13 |
| Syllabi | 2 | 20 | 1 | 2 |
| Vitae | 11 | 19 | 5 | 7 |
| Book Reviews | 2 | 20 | 5 | 2 |
| Non-scholarly Articles | 0 | 20 | 2 | 0 |

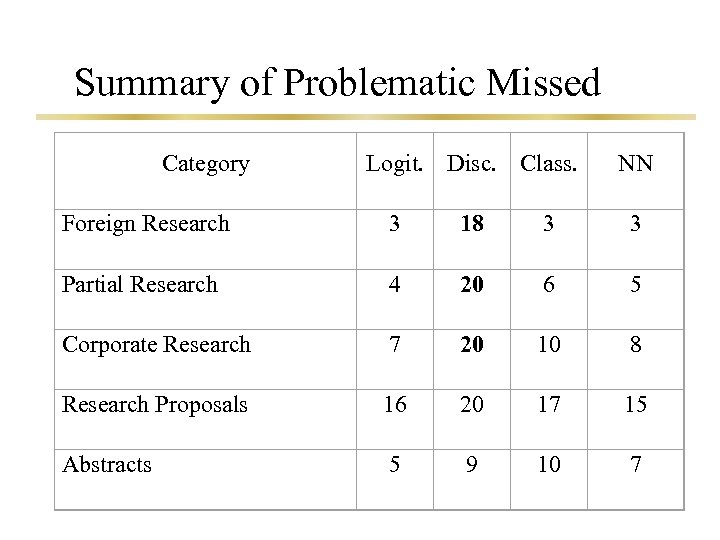

Summary of Problematic Missed

| Category | Logit. | Disc. | Class. | NN |
|---|---|---|---|---|
| Foreign Research | 3 | 18 | 3 | 3 |
| Partial Research | 4 | 20 | 6 | 5 |
| Corporate Research | 7 | 20 | 10 | 8 |
| Research Proposals | 16 | 20 | 17 | 15 |
| Abstracts | 5 | 9 | 10 | 7 |

Conclusions and Future Research

Best Modeling Techniques
- Classification tree had the highest accuracy and return
- Logistic regression misclassified the fewest problematic pages
- A multi-stage model using both methods may return a stronger and more robust result

Contribution to Knowledge
- Information agent created for scholarly research through reduction of criteria
- Four-step technique can be used to create other information agents
- Technique for selecting random Web pages representative of user searches

Contribution continued
- Suggestion of standards to aid in future automated discovery of scholarly research
  1. Entire document contained on single page
  2. Graphics, navigational links, frames, and unusual formatting used only when necessary
  3. Traditional heading structure (using accepted style guide) with labeled bibliography

Future Research
- Remove limitations
  - Discover multi-page scholarly research works
  - PS, PDF, LaTeX, DOC
- Focus on areas of problematic pages
- Two methods:
  - Create new search tool and full-text database of scholarly research
  - Create filter for existing Web search tool results

Future Research continued
- Create search agents for other groups of highly structured documents
  - Databases of online financial reports from private companies
  - Full-text databases of poetry or lyrics
  - Database of genealogical information
  - Threaded discussion boards created by gathering, analyzing, and categorizing posts from many different discussions
  - Statistical sports database with on-demand analysis capabilities
- Most Invisible Web topics = Structured information
  - Candidates for this technique

Conclusion
- Started with traditional librarianship
- Captured knowledge through elicitation technique
- Embedded knowledge into system
- Used system to judge Web resources
- Created and tested decision-making models
- Example of Moving Librarianship Forward
