a14c2a06ab75f0d0bcbdb2e8c6c4f7bd.ppt

- Number of slides: 33

Beyond 2011: Automating the linkage of anonymous data
Pete Jones, Office for National Statistics

The Beyond 2011 Programme
• The Office for National Statistics (ONS) conducted a review (the Beyond 2011 Programme) of the future approach to the census and population statistics in England and Wales
• The National Statistician made a recommendation to Government in March 2014 that there should be a predominantly online census in 2021
• This will be supplemented with increased use of administrative data and surveys to enhance census outputs and annual statistics
• Part of our research leading up to the recommendation was to explore an administrative data option for producing population statistics
• This involved large-scale record linkage with national datasets and surveys
• Lots of research into developing fully automated methods to link anonymous data

Definitions
LA = local authority, between 2,200 and 1 million people, average size = 160,000
Postcode = an alphanumeric code assigned to a postal address to assist the sorting of mail
• Sources used in Beyond 2011 research:
  - PR = Patient Register – list of all patients registered with an NHS doctor in England and Wales
  - CIS = Customer Information System – list of people who have a National Insurance Number – tax register
  - HESA = Higher Education Statistics Agency – list of students registered on a Higher Education course in England and Wales
  - SC = School Census – list of pupils registered at state schools in England and Wales

Matching to produce population estimates
• For the 2011 Census, data matching was undertaken between Census and Census Coverage Survey (CCS)
• By aggregating the number of matched / unmatched records we are able to adjust for non-response
• Requires near perfect matching (zero false positives / false negatives)
• Matching error will result in over-estimate or under-estimate of the population
• To ensure quality a combination of exact matching, probabilistic matching and clerical matching was used
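The link between matching error and population estimates can be illustrated with a short sketch. It assumes a simple dual-system (capture-recapture) estimator, which the slide does not spell out, and all counts are hypothetical.

```python
def dual_system_estimate(census_count, ccs_count, matched_count):
    """Capture-recapture estimate of the population from two lists and their overlap."""
    if matched_count <= 0:
        raise ValueError("need at least one matched record")
    return census_count * ccs_count / matched_count


# Hypothetical counts for one small area (not taken from the slides).
census_count, ccs_count, true_matches = 900, 850, 800

correct = dual_system_estimate(census_count, ccs_count, true_matches)
# 2% of the true matches are missed (false negatives): the overlap looks smaller.
missed = dual_system_estimate(census_count, ccs_count, true_matches * 0.98)
# 2% spurious links are added (false positives): the overlap looks larger.
spurious = dual_system_estimate(census_count, ccs_count, true_matches * 1.02)

print(round(correct, 1), round(missed, 1), round(spurious, 1))
```

Under this estimator, missed matches push the estimate up and spurious matches push it down, which is why both error types need to be close to zero.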

Beyond 2011 Linkage Model
• There are additional challenges associated with the use of admin data
• Data quality – particularly lags in data being up to date
• Efficiency – need to match datasets with 60 million+ records
• Public acceptability – ONS unique in holding multiple admin sources in one place
• Made the decision that names, dates of birth and addresses will be anonymised with a hashing algorithm (SHA-256)
• Converts original identifiers into meaningless hashed values (e.g. John hashes to XY143257461)
• Consistently maps same entities to the same hashed value
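A minimal sketch of the hashing step, using Python's standard `hashlib`. The cleaning rule in `normalise` is an assumption (the slides do not describe the pre-processing), and no key or salt is used because none is mentioned. A real SHA-256 digest is 64 hexadecimal characters, so the value shown on the slide is illustrative only.

```python
import hashlib


def normalise(value: str) -> str:
    """Assumed cleaning step: upper-case and remove all whitespace."""
    return "".join(value.upper().split())


def hash_identifier(value: str) -> str:
    """Return the SHA-256 digest of a cleaned identifier as a hex string."""
    return hashlib.sha256(normalise(value).encode("utf-8")).hexdigest()


# The same entity always maps to the same hashed value ...
print(hash_identifier("John") == hash_identifier(" john "))
# ... but near-identical strings give unrelated hashes, so no string comparison is possible.
print(hash_identifier("John") == hash_identifier("Jon"))
```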

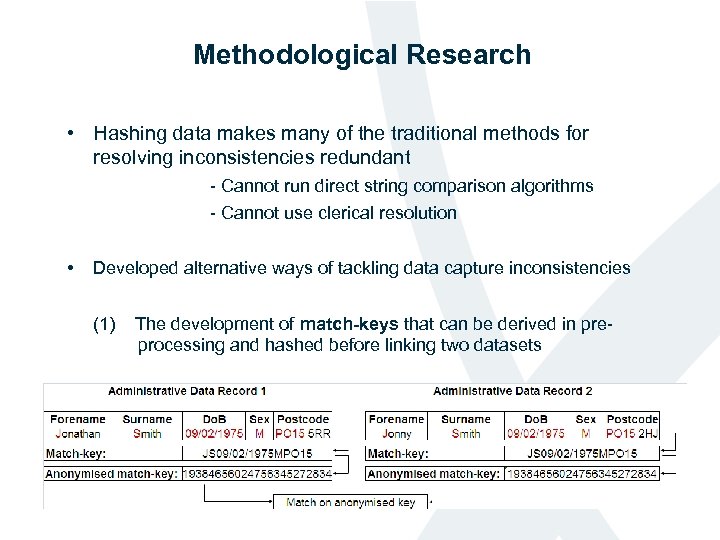

Methodological Research
• Hashing data makes many of the traditional methods for resolving inconsistencies redundant
  - Cannot run direct string comparison algorithms
  - Cannot use clerical resolution
• Developed alternative ways of tackling data capture inconsistencies
(1) The development of match-keys that can be derived in pre-processing and hashed before linking two datasets

Beyond 2011 Match-Keys

| Key | Type | Unique records on EPR (%) |
|---|---|---|
| 1 | Forename, Surname, DoB, Sex, Postcode | 100.00 |
| 2 | Forename initial, Surname initial, DoB, Sex, Postcode District | 99.55 |
| 3 | Forename bi-gram, Surname bi-gram, DoB, Sex, Postcode Area | 99.44 |
| 4 | Forename initial, DoB, Sex, Postcode | 99.84 |
| 5 | Surname initial, DoB, Sex, Postcode | 99.44 |
| 6 | Forename, Surname, Age, Sex, Postcode Area | 99.46 |
| 7 | Forename, Surname, Sex, Postcode | 99.19 |
| 8 | Forename, Surname, DoB, Sex | 98.87 |
| 9 | Forename, Surname, DoB, Postcode | 99.52 |
| 10 | Surname, Forename, DoB, Sex, Postcode (matched on key 1) | 100.00 |
| 11 | Middle name, Surname, DoB, Sex, Postcode (matched on key 1) | 99.90 |
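The sketch below derives three of the match-keys (1, 2 and 4) for a record and hashes them, to show how a record with a misspelt forename can still link on a looser key. The record layout, the `|` separator, the cleaning rule and the example person are all assumptions made for illustration.

```python
import hashlib
from itertools import takewhile


def clean(text: str) -> str:
    """Upper-case and strip all whitespace so trivial variants hash alike."""
    return "".join(text.upper().split())


def postcode_parts(postcode: str) -> dict:
    """Return the full postcode, its district (outward code) and area (leading letters)."""
    full = clean(postcode)
    district = full[:-3]  # the inward code is always the last three characters
    area = "".join(takewhile(str.isalpha, district))
    return {"full": full, "district": district, "area": area}


def hash_key(*parts: str) -> str:
    """Concatenate the key components and hash them with SHA-256."""
    return hashlib.sha256("|".join(parts).encode("utf-8")).hexdigest()


def match_keys(rec: dict) -> dict:
    """Derive hashed match-keys 1, 2 and 4 for one record."""
    fn, sn = clean(rec["forename"]), clean(rec["surname"])
    dob, sex = rec["dob"], rec["sex"].upper()
    pc = postcode_parts(rec["postcode"])
    return {
        1: hash_key(fn, sn, dob, sex, pc["full"]),
        2: hash_key(fn[:1], sn[:1], dob, sex, pc["district"]),
        4: hash_key(fn[:1], dob, sex, pc["full"]),
    }


# Hypothetical records for the same person; the forename is misspelt on the second source.
a = match_keys({"forename": "Jonathan", "surname": "Smith", "dob": "1990-04-12",
                "sex": "M", "postcode": "PO15 5RR"})
b = match_keys({"forename": "Jonathon", "surname": "Smith", "dob": "1990-04-12",
                "sex": "m", "postcode": "po155rr"})
agreeing = [k for k in a if a[k] == b[k]]
print(agreeing)  # keys on which the two hashed records agree
```

Because each key is hashed as a whole, two datasets can be joined on the hashed key values without either side seeing the underlying identifiers.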

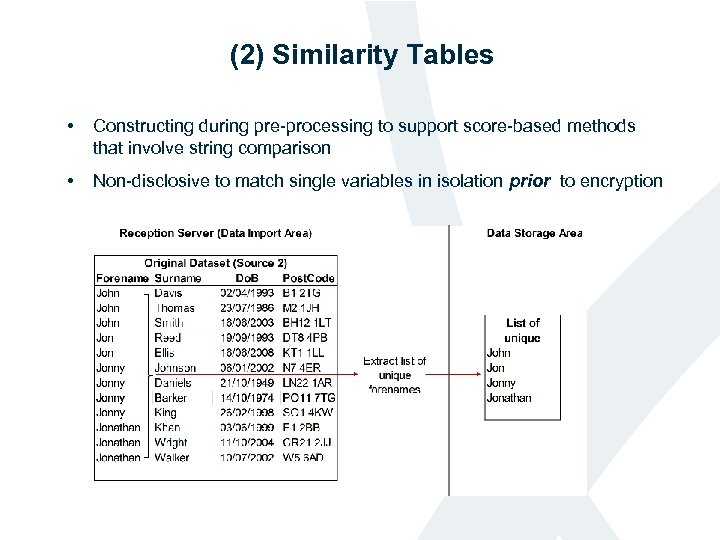

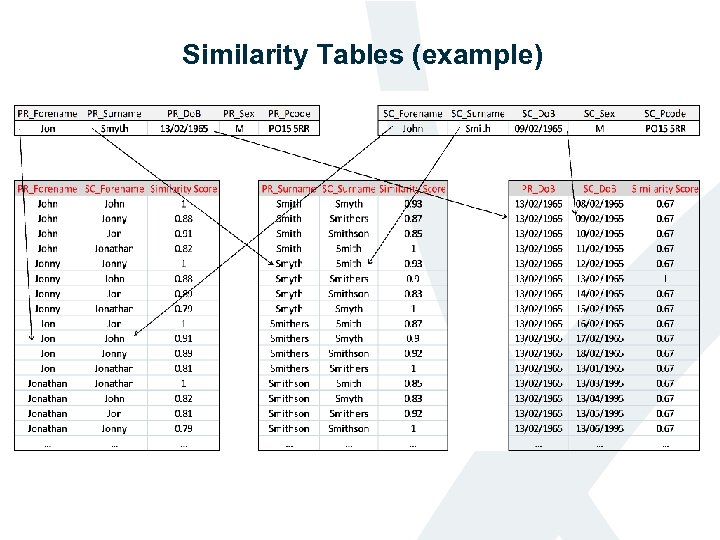

(2) Similarity Tables
• Constructed during pre-processing to support score-based methods that involve string comparison
• Matching single variables in isolation prior to encryption is non-disclosive

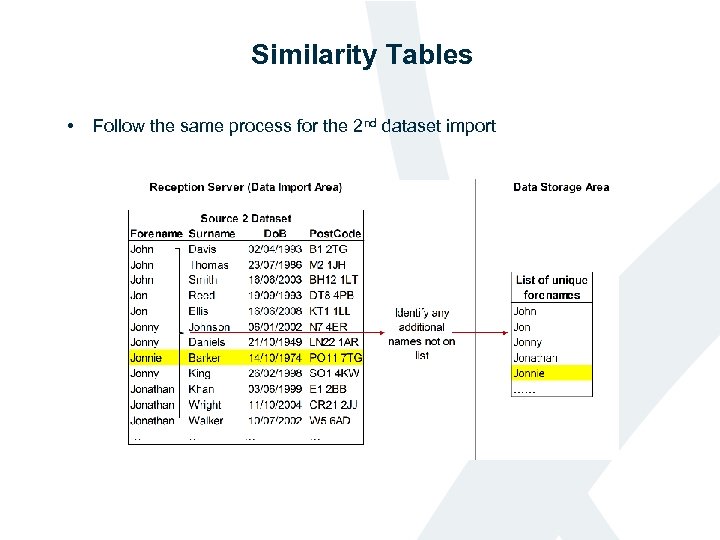

Similarity Tables
• Follow the same process for the 2nd dataset import

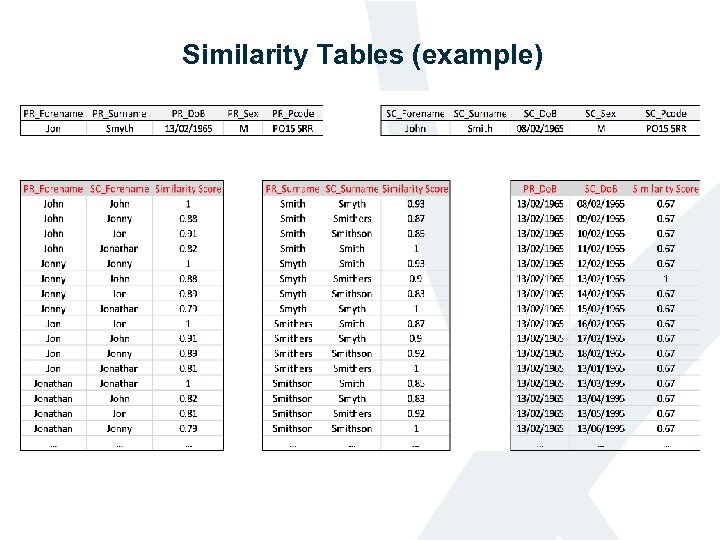

Similarity Tables
• Run string comparison algorithm between all names on the list
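A minimal sketch of building a similarity table for one variable. The names are compared in the clear during pre-processing and only hashed values plus the score are kept. `difflib.SequenceMatcher` stands in for the string comparator here (the slides later refer to SPEDIS scores), and the 0.8 threshold and the name lists are assumptions.

```python
import hashlib
from difflib import SequenceMatcher
from itertools import product


def sha256_hex(value: str) -> str:
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def build_similarity_table(names_a, names_b, threshold=0.8):
    """Return (hash_a, hash_b, score) rows for every name pair scoring at or above the threshold."""
    table = []
    for a, b in product(sorted(set(names_a)), sorted(set(names_b))):
        score = SequenceMatcher(None, a, b).ratio()
        if score >= threshold:
            # Only the hashed names and the score leave the pre-processing step.
            table.append((sha256_hex(a), sha256_hex(b), round(score, 3)))
    return table


# Hypothetical distinct forenames from each dataset, already cleaned and upper-cased.
table = build_similarity_table(["JOHN", "SARAH"], ["JON", "SARA", "PETER"])
for row in table:
    print(row[0][:8], row[1][:8], row[2])
```

At linkage time the hashed names on two candidate records can be looked up in this table to recover a similarity score without exposing either name.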

Similarity Tables (example)

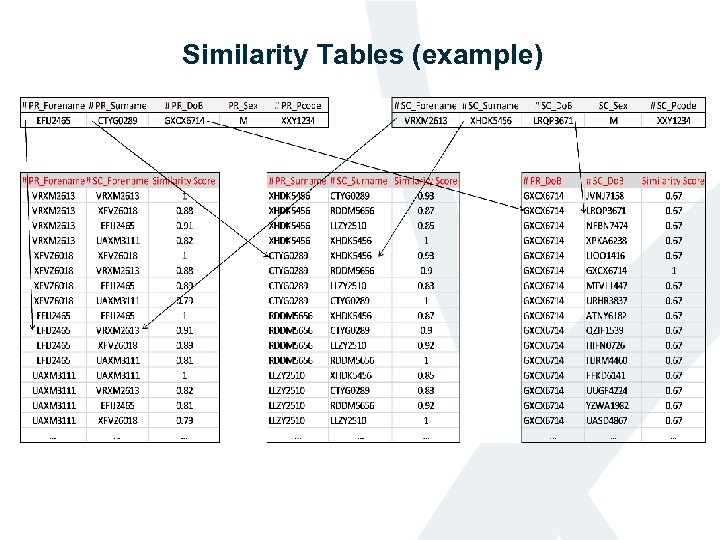

Similarity Tables (example)

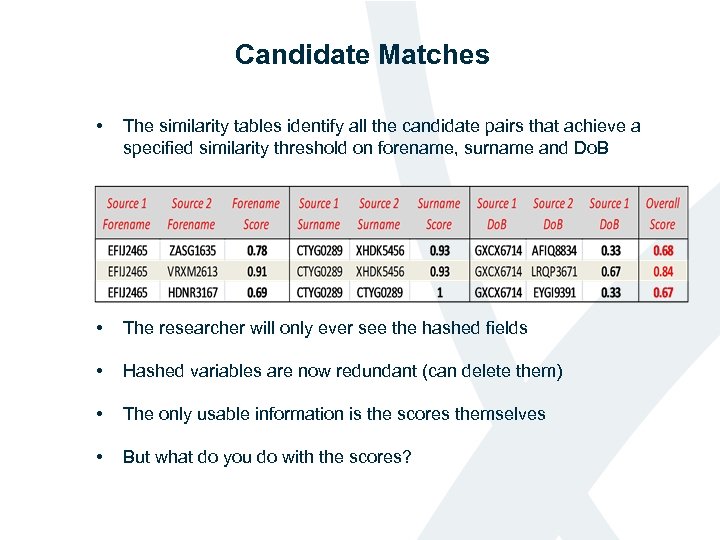

Candidate Matches
• The similarity tables identify all the candidate pairs that achieve a specified similarity threshold on forename, surname and DoB
• The researcher will only ever see the hashed fields
• Hashed variables are now redundant (can delete them)
• The only usable information is the scores themselves
• But what do you do with the scores?

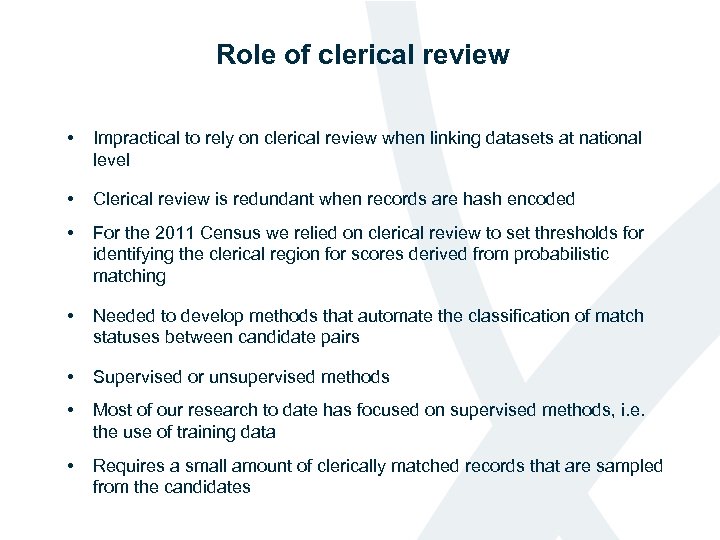

Role of clerical review
• Impractical to rely on clerical review when linking datasets at national level
• Clerical review is redundant when records are hash encoded
• For the 2011 Census we relied on clerical review to set thresholds for identifying the clerical region for scores derived from probabilistic matching
• Needed to develop methods that automate the classification of match statuses between candidate pairs
• Supervised or unsupervised methods
• Most of our research to date has focused on supervised methods, i.e. the use of training data
• Requires a small number of clerically matched records that are sampled from the candidates

Supervised Learning
• Beyond 2011 is unable to undertake large-scale clerical work but will have access to a small sample set of candidate pairs
• Modelling approach that moves away from setting two thresholds – logistic regression
• Clerically match a small sample of unencrypted records:
  - Fit a logistic regression model where the y-variable is the decision to match or not
  - Predictor variables are the similarity scores, name frequencies, geographic distances
• The idea is to substitute the clerical decision with an automated procedure
• Beta coefficients serve as weights for the matching variables
• Generates a single cut-off point (match where p >= 0.5)
• Regression equation can be applied to remaining candidates between the two datasets
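The idea can be sketched in a few lines: fit a logistic regression on a small clerically labelled sample, then apply the fitted equation with a single cut-off of 0.5. The fitting routine below is plain batch gradient descent written for illustration, not the software used in the research, and the training sample is hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X, y, learning_rate=1.0, epochs=5000):
    """Fit weights and intercept by batch gradient descent on the log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            grad_b += err
            for j in range(d):
                grad_w[j] += err * xi[j]
        b -= learning_rate * grad_b / n
        w = [wj - learning_rate * gj / n for wj, gj in zip(w, grad_w)]
    return w, b


def match_probability(x, w, b) -> float:
    """Apply the fitted regression equation to one candidate pair."""
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, x)))


# Hypothetical clerical sample: [forename similarity, surname similarity], each in 0..1.
X = [[0.95, 1.0], [0.9, 0.9], [1.0, 0.85], [0.6, 0.5], [0.5, 0.7], [0.7, 0.4]]
y = [1, 1, 1, 0, 0, 0]  # clerical decision: 1 = match, 0 = non-match

w, b = fit_logistic(X, y)
decisions = [match_probability(x, w, b) >= 0.5 for x in X]
print(decisions)
```

The fitted coefficients in `w` play the role of the beta weights, and `match_probability` is the equation that would then be applied to the remaining candidate pairs.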

Model Design
• Piloted in SC-PR matching (12 year olds)
• Following auto-match, used similarity tables to identify 7303 records
• A clerical decision was made for 5% of records (365 candidate pairs)
• Fitted a logistic regression model with the dependent variable as the clerical match decision (binary outcome ‘Yes’ or ‘No’) and the following variables as predictors:
  - Agreement between forenames (SPEDIS score)
  - Agreement between surnames (SPEDIS score)
  - Forename weight (highest on both sources)
  - Surname weight (highest on both sources)
  - Sex agreement (agree = 2, disagree = 1)
  - Postcode agreement (full=5, sector=4, district=3, area=2, none=1)
  - DoB agreement (full=3, M/Y=2, D/Y=1)
  - Distance between OA centroids
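The ordinal agreement codes listed for the predictors could be derived along the following lines. This sketch works on clear-text values for readability; with hashed data the same comparisons would have to be made on separately hashed components. The code 0 for dates of birth that agree on none of the listed levels is an assumption, since the slide does not give a value for that case.

```python
from datetime import date
from itertools import takewhile


def postcode_levels(postcode: str):
    """Return (full, sector, district, area) for a UK postcode."""
    pc = "".join(postcode.upper().split())
    district = pc[:-3]   # outward code
    sector = pc[:-2]     # outward code plus the first character of the inward code
    area = "".join(takewhile(str.isalpha, district))
    return pc, sector, district, area


def postcode_agreement(a: str, b: str) -> int:
    """full=5, sector=4, district=3, area=2, none=1."""
    for score, (x, y) in zip((5, 4, 3, 2), zip(postcode_levels(a), postcode_levels(b))):
        if x == y:
            return score
    return 1


def dob_agreement(a: date, b: date) -> int:
    """full=3, month and year=2, day and year=1; 0 otherwise (assumed code)."""
    if a == b:
        return 3
    if (a.month, a.year) == (b.month, b.year):
        return 2
    if (a.day, a.year) == (b.day, b.year):
        return 1
    return 0


def sex_agreement(a: str, b: str) -> int:
    """agree=2, disagree=1."""
    return 2 if a.upper() == b.upper() else 1


print(postcode_agreement("PO15 5RR", "PO15 6AB"),
      dob_agreement(date(2001, 5, 17), date(2001, 5, 18)),
      sex_agreement("F", "f"))
```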

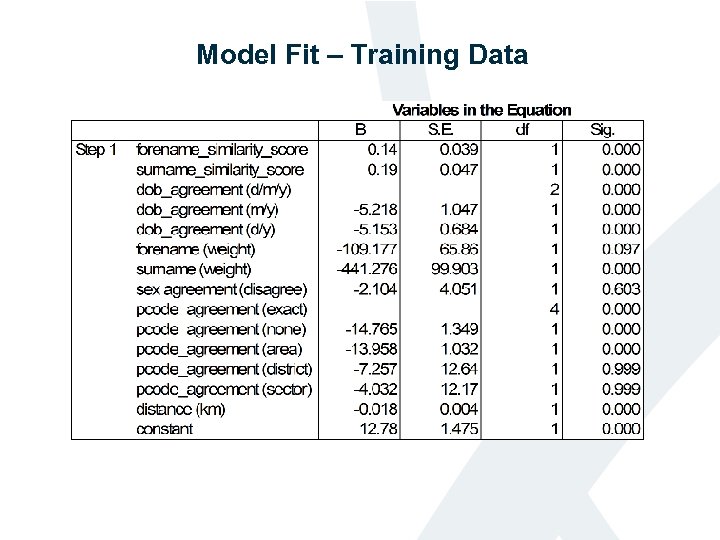

Model Fit – Training Data

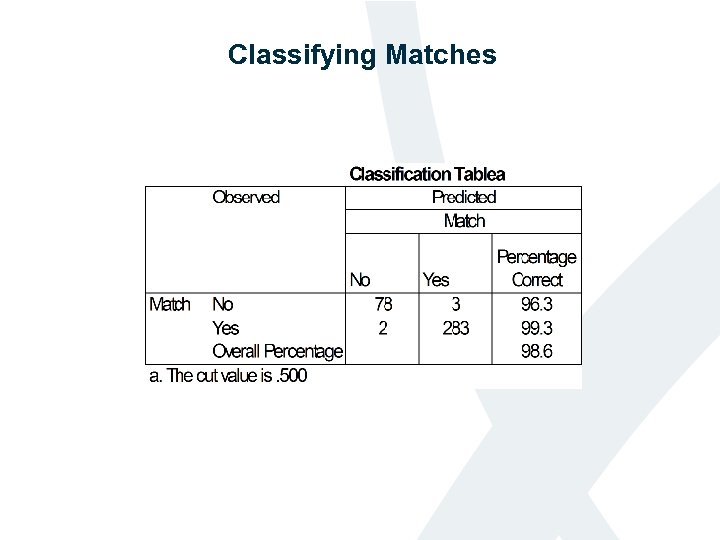

Classifying Matches

Further research on supervised learning
• The rationale behind this approach is to automate decision making for more difficult candidate pairs
• Logistic regression provides an initial method for identifying a single threshold for classifying match candidates
• The optimum method could be something else: Support Vector Machines / Decision Trees / Bayesian methods
• To what extent can we quality assure these matches?
• Can we apply this modelling approach to the match-keys?
• How much training data do we need to produce accurate models?
• Can synthetic data be used as training data?

Unsupervised approaches
• Supervised methods in 2001 Census matching resulted in overfitting
• 2011 Census matching used Expectation-Maximisation (EM algorithm) to calculate m and u probabilities
• Winkler et al 2007 (Data Quality and Record Linkage Techniques) outline the method in detail
  – does not require training data
  – can use data from all of the blocked candidates
  – incorporated into probabilistic framework (Fellegi-Sunter model)
• But still requires clerical review to decide on the threshold score for match / non-match
• Further research is planned to explore ways of setting thresholds that do not involve clerical resolution
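A compact sketch of how EM can estimate m and u probabilities without training data, under the usual Fellegi-Sunter assumption that field agreements are conditionally independent. The agreement patterns, starting values and iteration count are hypothetical, and this is an illustration of the general technique rather than the procedure used for the 2011 Census.

```python
import math


def em_m_u(patterns, iterations=100, p=0.1, m_start=0.9, u_start=0.1):
    """Estimate m, u and the match proportion p from binary agreement patterns."""
    n, n_fields = len(patterns), len(patterns[0])
    m, u = [m_start] * n_fields, [u_start] * n_fields
    clamp = lambda x: min(max(x, 1e-6), 1 - 1e-6)  # keep probabilities off 0 and 1
    for _ in range(iterations):
        # E-step: posterior probability that each candidate pair is a true match.
        g = []
        for gamma in patterns:
            pm, pu = p, 1.0 - p
            for k, agrees in enumerate(gamma):
                pm *= m[k] if agrees else 1.0 - m[k]
                pu *= u[k] if agrees else 1.0 - u[k]
            g.append(pm / (pm + pu))
        # M-step: re-estimate the parameters from the weighted agreement counts.
        total = sum(g)
        p = clamp(total / n)
        m = [clamp(sum(gi * gam[k] for gi, gam in zip(g, patterns)) / total)
             for k in range(n_fields)]
        u = [clamp(sum((1 - gi) * gam[k] for gi, gam in zip(g, patterns)) / (n - total))
             for k in range(n_fields)]
    return m, u, p


def match_weight(gamma, m, u) -> float:
    """Fellegi-Sunter score: sum of log2 agreement / disagreement ratios."""
    return sum(math.log2(m[k] / u[k]) if a else math.log2((1 - m[k]) / (1 - u[k]))
               for k, a in enumerate(gamma))


# Hypothetical agreement patterns (forename, surname, DoB) for blocked candidate pairs.
patterns = ([(1, 1, 1)] * 20 + [(1, 1, 0)] * 5 + [(1, 0, 1)] * 5 + [(1, 0, 0)] * 5
            + [(0, 0, 0)] * 60 + [(0, 0, 1)] * 20 + [(0, 1, 0)] * 10)
m, u, p = em_m_u(patterns)
print([round(x, 3) for x in m], [round(x, 3) for x in u], round(p, 3))
```

The resulting weights rank the candidate pairs, but a threshold on the score is still needed to turn them into match decisions, which is the gap the slide points out.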

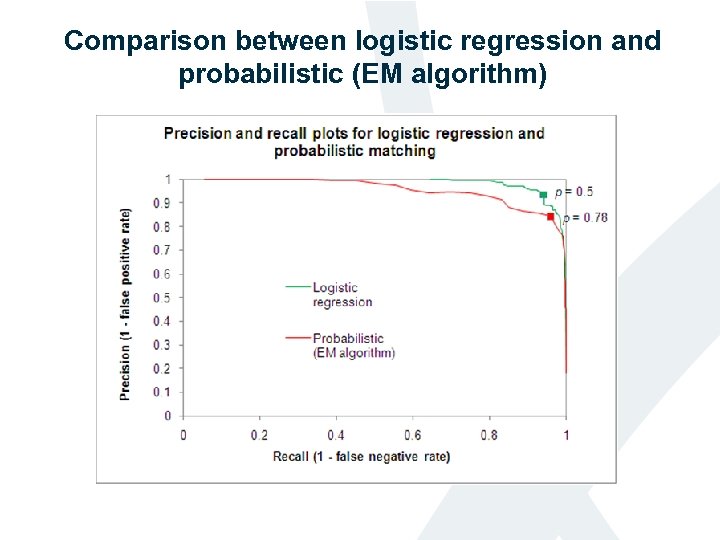

Comparison with synthetic data
• Tested whether probabilistic matching outperforms logistic regression
• Blocked records by postcode and used the EM algorithm to calculate match weights → probabilistic scores
• Good estimates of m and u probabilities
• Sampled 2500 records for clerical review
• Found the optimum cut-off point for the probabilistic score
• Fitted a logistic regression model with the candidates
• Undertook ROC curve analysis and precision / recall plots
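One way to find a cut-off from a clerically reviewed sample is to scan the observed scores and keep the one with the best trade-off between precision and recall. The slides do not say which criterion was used, so the F1 score here is an assumption, and the scores and labels are hypothetical.

```python
def precision_recall(scores, labels, cutoff):
    """Precision and recall when pairs scoring at or above the cutoff are called matches."""
    tp = sum(1 for s, l in zip(scores, labels) if s >= cutoff and l)
    fp = sum(1 for s, l in zip(scores, labels) if s >= cutoff and not l)
    fn = sum(1 for s, l in zip(scores, labels) if s < cutoff and l)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


def best_cutoff(scores, labels):
    """Return (cutoff, f1, precision, recall) for the cutoff with the highest F1."""
    best = None
    for cutoff in sorted(set(scores)):
        p, r = precision_recall(scores, labels, cutoff)
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        if best is None or f1 > best[1]:
            best = (cutoff, f1, p, r)
    return best


# Hypothetical probabilistic scores with clerical decisions (1 = match).
scores = [12.1, 10.4, 9.8, 7.5, 6.9, 5.2, 3.1, 1.0, -2.3, -4.0]
labels = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]

cutoff, f1, precision, recall = best_cutoff(scores, labels)
print(cutoff, round(f1, 3), round(precision, 3), round(recall, 3))
```

Evaluating `precision_recall` over the full range of cut-offs gives the points for a precision / recall plot.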

Comparison between logistic regression and probabilistic (EM algorithm)

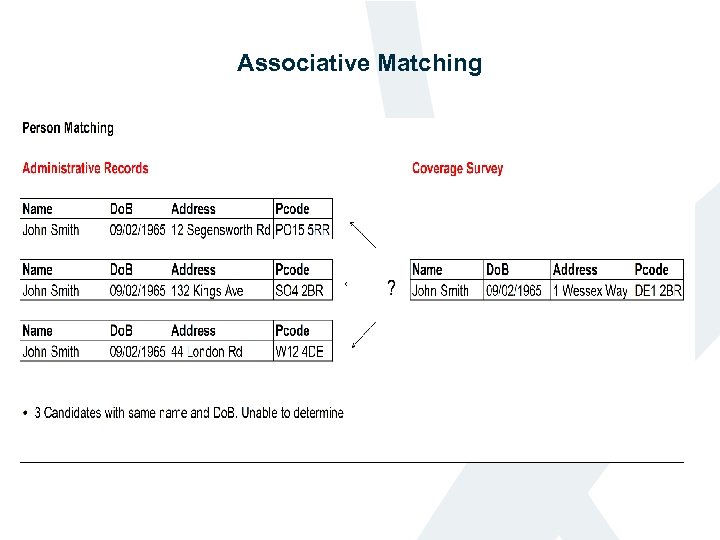

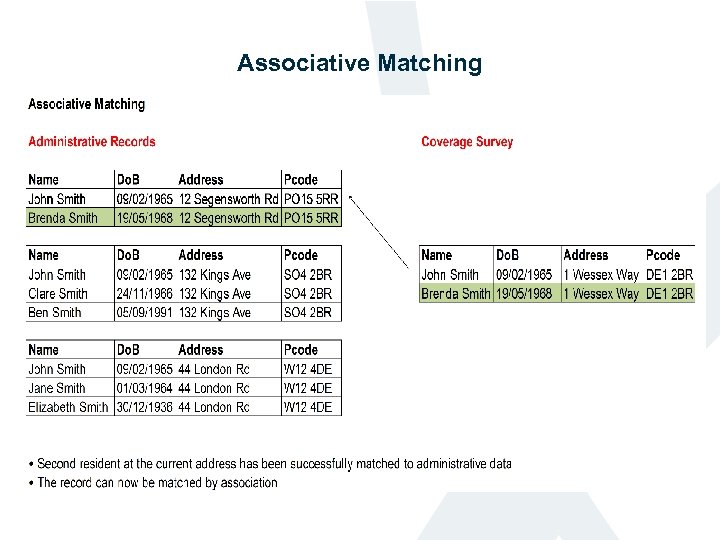

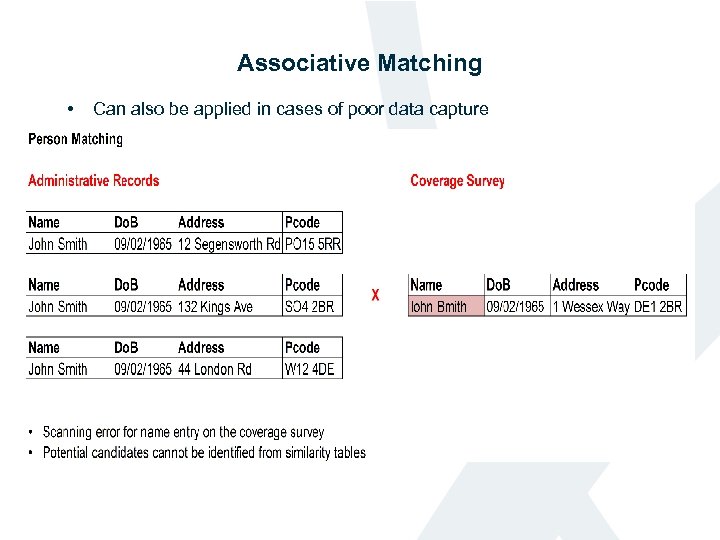

Associative Matching
• Probabilistic algorithms in Beyond 2011 will not identify all matches
(1) People with common names moving address
  Probabilistic algorithms are designed to leave records unmatched where there are multiple candidates of similar likelihood
(2) Where data is missing or of poor quality
  For a candidate pair to qualify for the logistic regression stage they must achieve a similarity threshold
• Having access to broad coverage sources provides opportunities for correctly identifying some of these matches
• By relying on the strength of a match made by someone else at the same address we can match difficult cases by association
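A rough sketch of matching by association. Once one person has been confidently matched, their two addresses are treated as linked, and unmatched people at those addresses are paired if they agree on a weaker criterion. The rule used here (hashed DoB and sex agree, and there is exactly one such candidate) is a hypothetical choice, as are the record layout and the example values.

```python
from collections import defaultdict


def associative_matches(records_a, records_b, matched_pairs):
    """records_*: id -> {"address", "dob", "sex"} (hashed values); matched_pairs: [(id_a, id_b)]."""
    matched_a = {a for a, _ in matched_pairs}
    matched_b = {b for _, b in matched_pairs}

    # Address pairs already connected by someone else's confident match.
    linked = defaultdict(set)
    for a, b in matched_pairs:
        linked[records_a[a]["address"]].add(records_b[b]["address"])

    # Unmatched records on the second source, grouped by address.
    residual_b = defaultdict(list)
    for b, rec in records_b.items():
        if b not in matched_b:
            residual_b[rec["address"]].append(b)

    new_pairs = []
    for a, rec in records_a.items():
        if a in matched_a:
            continue
        candidates = [b
                      for addr in linked.get(rec["address"], ())
                      for b in residual_b[addr]
                      if records_b[b]["dob"] == rec["dob"] and records_b[b]["sex"] == rec["sex"]]
        if len(candidates) == 1:  # accept only an unambiguous candidate
            new_pairs.append((a, candidates[0]))
    return new_pairs


# Hypothetical household that has moved: a1/b1 matched strongly, a2/b2 left unmatched.
records_a = {"a1": {"address": "h1", "dob": "d1", "sex": "F"},
             "a2": {"address": "h1", "dob": "d2", "sex": "M"}}
records_b = {"b1": {"address": "h2", "dob": "d1", "sex": "F"},
             "b2": {"address": "h2", "dob": "d2", "sex": "M"},
             "b3": {"address": "h3", "dob": "d2", "sex": "M"}}
new_pairs = associative_matches(records_a, records_b, [("a1", "b1")])
print(new_pairs)
```

The record `b3` shares the same DoB and sex but is not considered, because no confident match connects its address to the household.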

Associative Matching
• Can also be applied in cases of poor data capture

Testing the Algorithms
• Major requirement to understand quality loss in survey to admin source matching
• To date quality loss has been measured by undertaking controlled comparisons between conventional approaches and SRE simulations
• Number of caveats
  - Sample bias (small scale / DoB blocking)
  - No understanding of geographic variance
  - Limited clerical (compared to Census / Census QA matching)
• Undertaking record level comparison with Census QA to establish a more robust picture of precision and recall of the Beyond 2011 algorithm
Precision = number of true positives / number of B2011 matches
Recall = number of true positives / number of Census QA matches

Census QA Comparison Exercise
• Running it for 8 LAs to start with: Powys, Westminster, Birmingham, Mid-Devon, Lambeth, Southwark, Aylesbury Vale, Newham
• Adjusting the Beyond 2011 matching strategy to make it comparable with Census QA
• Subset PR by CCS cluster in LA
• Comparisons in cross LA matching to be undertaken at later date
Matching to Census / CCS LA

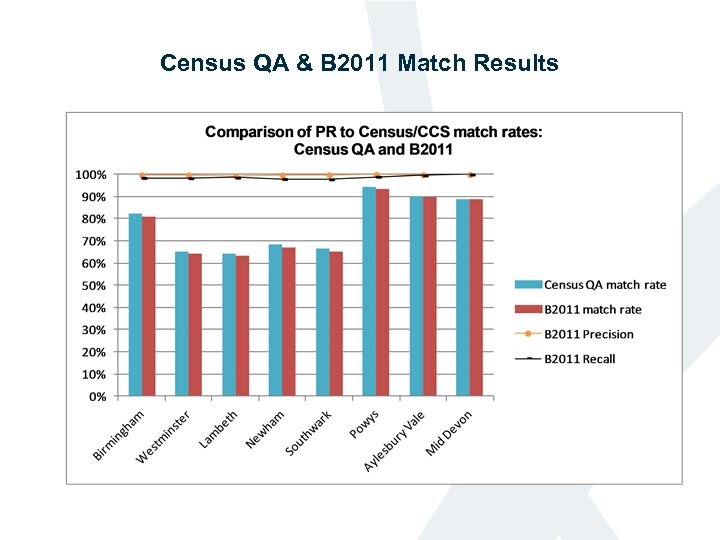

Census QA & B2011 Match Results

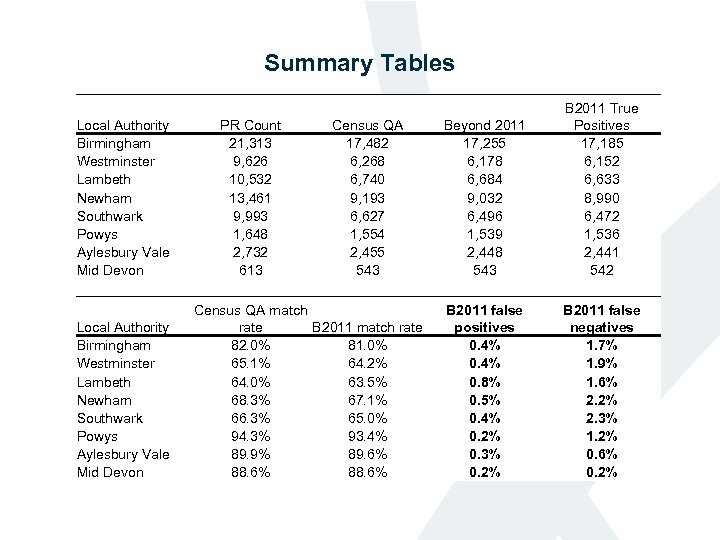

Summary Tables

| Local Authority | PR Count | Census QA | Beyond 2011 | B2011 True Positives |
|---|---|---|---|---|
| Birmingham | 21,313 | 17,482 | 17,255 | 17,185 |
| Westminster | 9,626 | 6,268 | 6,178 | 6,152 |
| Lambeth | 10,532 | 6,740 | 6,684 | 6,633 |
| Newham | 13,461 | 9,193 | 9,032 | 8,990 |
| Southwark | 9,993 | 6,627 | 6,496 | 6,472 |
| Powys | 1,648 | 1,554 | 1,539 | 1,536 |
| Aylesbury Vale | 2,732 | 2,455 | 2,448 | 2,441 |
| Mid Devon | 613 | 543 | 543 | 542 |

| Local Authority | Census QA match rate | B2011 match rate | B2011 false positives | B2011 false negatives |
|---|---|---|---|---|
| Birmingham | 82.0% | 81.0% | 0.4% | 1.7% |
| Westminster | 65.1% | 64.2% | 0.4% | 1.9% |
| Lambeth | 64.0% | 63.5% | 0.8% | 1.6% |
| Newham | 68.3% | 67.1% | 0.5% | 2.2% |
| Southwark | 66.3% | 65.0% | 0.4% | 2.3% |
| Powys | 94.3% | 93.4% | 0.2% | 1.2% |
| Aylesbury Vale | 89.9% | 89.6% | 0.3% | 0.6% |
| Mid Devon | 88.6% | 88.6% | 0.2% | 0.2% |
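The percentages can be recomputed from the counts as a consistency check. The sketch assumes match rates are taken over the PR count, false positives are the share of B2011 matches that are not true positives (1 − precision), and false negatives are the share of Census QA matches that B2011 missed (1 − recall). These denominators are a reading of the slides that agrees with the printed figures, not a stated definition.

```python
# (PR count, Census QA matches, B2011 matches, B2011 true positives) per local authority.
COUNTS = {
    "Birmingham": (21313, 17482, 17255, 17185),
    "Westminster": (9626, 6268, 6178, 6152),
    "Lambeth": (10532, 6740, 6684, 6633),
    "Newham": (13461, 9193, 9032, 8990),
    "Southwark": (9993, 6627, 6496, 6472),
    "Powys": (1648, 1554, 1539, 1536),
    "Aylesbury Vale": (2732, 2455, 2448, 2441),
    "Mid Devon": (613, 543, 543, 542),
}


def derived_rates(pr_count, qa_matches, b2011_matches, true_positives):
    """Return match rates, precision, recall and the two error shares as fractions."""
    precision = true_positives / b2011_matches
    recall = true_positives / qa_matches
    return {
        "qa_match_rate": qa_matches / pr_count,
        "b2011_match_rate": b2011_matches / pr_count,
        "precision": precision,
        "recall": recall,
        "false_positive_share": 1 - precision,
        "false_negative_share": 1 - recall,
    }


for la, counts in COUNTS.items():
    rates = derived_rates(*counts)
    print(f"{la:15s} " + " ".join(f"{100 * v:5.1f}%" for v in rates.values()))
```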

Summary and Future Research
• The B2011 algorithms automate the matching process for anonymised data
• False positives are minimal
• False negatives are currently higher than target (<1%)
• Consider additional methods to improve matching accuracy
  - Longitudinal data
  - Improved data capture
  - Widening the blocking strategy
  - Improving on classification methods
• Research will continue in phase 2 of the programme

Summary and Future Research
• Corporate strategy for record linkage at ONS
• Collaboration with statistics agencies internationally
• ONS partnering with ADRC England
• Working with researchers outside of ONS
• Publishing research
