
- Number of slides: 32

Benchmarking Requirements for NASA Decision CAN Early Warning Project
Kenton Ross, SSAI/SSC
11/29/2006
Kenton Ross - NASA Decision CAN Early Warning Project/Planning Meeting

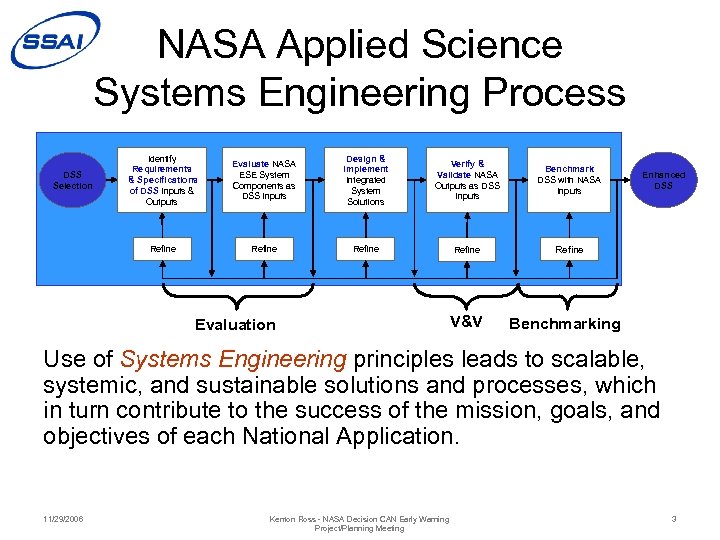

Why Benchmarking?
• Within the context of the NASA Applied Sciences Program, benchmarking has come to be used as shorthand for a comprehensive systems engineering approach to bringing the benefits of NASA science to decision support systems (DSS) of national or international scope.
• More precisely, benchmarking refers to the systems engineering elements described as Evaluation, Verification, Validation and Benchmarking (EVVB).

NASA Applied Science Systems Engineering Process
[Figure: cycle diagram with the steps DSS Selection; Identify Requirements & Specifications of DSS Inputs & Outputs; Evaluate NASA ESE System Components as DSS Inputs; Design & Implement Integrated System Solutions; Verify & Validate NASA Outputs as DSS Inputs; Benchmark DSS with NASA Inputs; Enhanced DSS. Refine loops connect the steps, which are grouped into Evaluation, V&V, and Benchmarking phases.]
Use of systems engineering principles leads to scalable, systemic, and sustainable solutions and processes, which in turn contribute to the success of the mission, goals, and objectives of each National Application.

EVVB Notes
• The systems engineering process is not sequential. It is necessarily iterative, and some components will develop in parallel.
• By definition, in an awarded project, one or more iterations of the systems engineering steps labeled “Evaluation” have already happened. The beginning of the evaluation process is an intrinsic part of the proposal and review process.

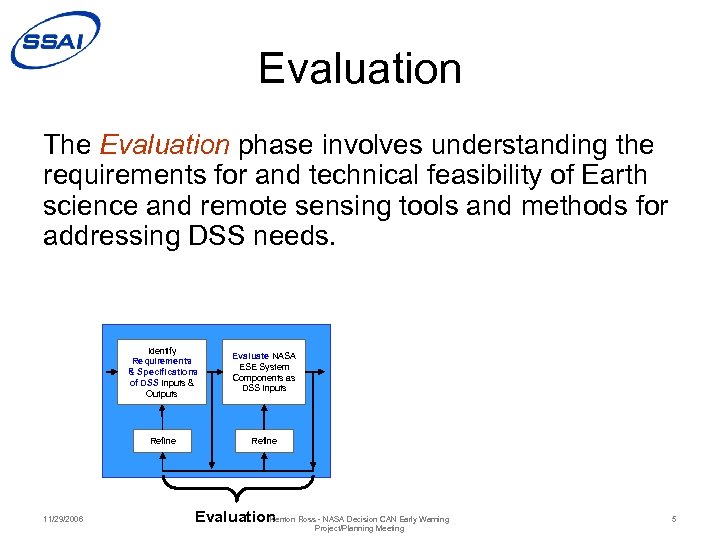

Evaluation
The Evaluation phase involves understanding the requirements for, and technical feasibility of, Earth science and remote sensing tools and methods for addressing DSS needs.
[Figure: Evaluation segment of the process diagram: Identify Requirements & Specifications of DSS Inputs & Outputs and Evaluate NASA ESE System Components as DSS Inputs, each with a Refine loop.]

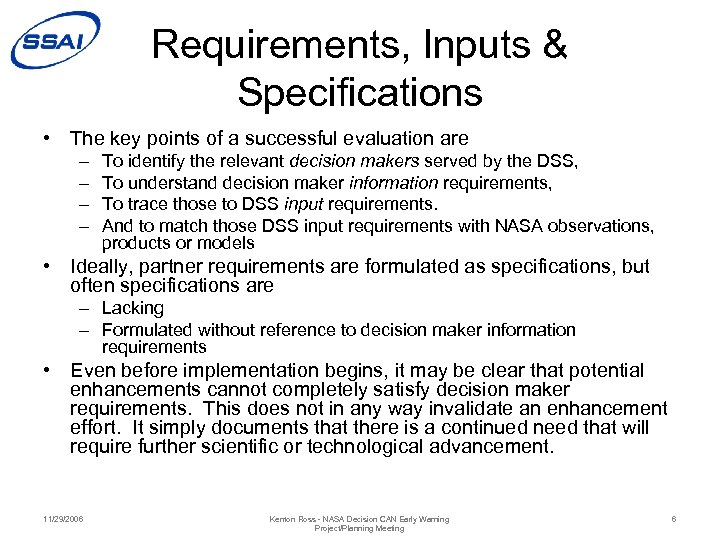

Requirements, Inputs & Specifications
• The key points of a successful evaluation are:
  – To identify the relevant decision makers served by the DSS
  – To understand decision maker information requirements
  – To trace those to DSS input requirements
  – To match those DSS input requirements with NASA observations, products, or models
• Ideally, partner requirements are formulated as specifications, but often specifications are:
  – Lacking, or
  – Formulated without reference to decision maker information requirements
• Even before implementation begins, it may be clear that potential enhancements cannot completely satisfy decision maker requirements. This does not in any way invalidate an enhancement effort. It simply documents that there is a continued need that will require further scientific or technological advancement.
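
To make the trace from decision maker needs to DSS inputs to NASA products concrete, the sketch below records each DSS input requirement with the need that drives it and any matched NASA products, then lists the unmatched requirements (the gaps an Evaluation Report calls attention to). It is a minimal Python illustration, not part of the EVVB process definition; the `InputRequirement` class and `find_gaps` function are hypothetical, and the entries are paraphrased from the PECAD requirement and match tables.

```python
from dataclasses import dataclass, field


@dataclass
class InputRequirement:
    """One DSS input requirement, traced to the need that drives it."""
    name: str    # DSS input (a row of the requirements table)
    driver: str  # decision-maker need the input serves
    nasa_products: list[str] = field(default_factory=list)  # matched NASA inputs


def find_gaps(requirements: list[InputRequirement]) -> list[str]:
    """Return the names of requirements that no NASA product matches yet."""
    return [r.name for r in requirements if not r.nasa_products]


# Hypothetical entries paraphrased from the PECAD tables.
requirements = [
    InputRequirement("Vegetation index numbers", "Relative crop condition assessments",
                     ["MODIS NDVI", "MODIS EVI"]),
    InputRequirement("Leaf area index", "Crop yield models", ["MODIS LAI"]),
    # MODIS offers snow cover, not snow depth, so no product is matched.
    InputRequirement("Snow depth", "Soil moisture model"),
]

print(find_gaps(requirements))  # ['Snow depth']
```

Keeping the driver next to each requirement preserves the link back to the decision maker, so a gap can be reported together with the need it leaves unserved.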

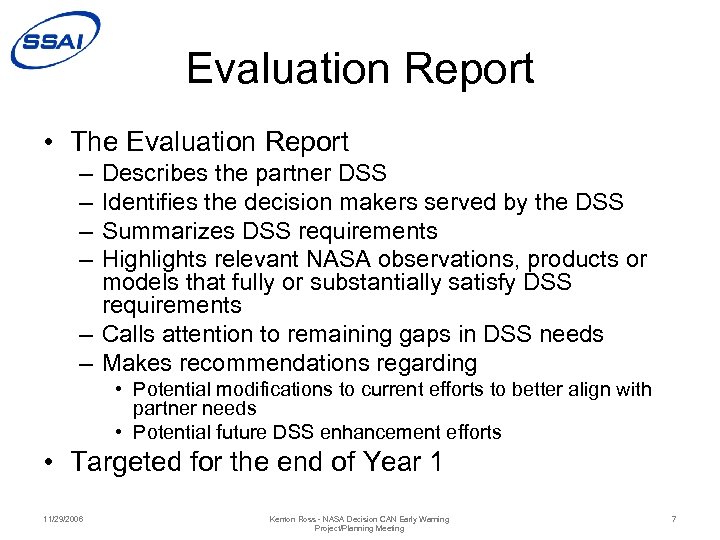

Evaluation Report
• The Evaluation Report:
  – Describes the partner DSS
  – Identifies the decision makers served by the DSS
  – Summarizes DSS requirements
  – Highlights relevant NASA observations, products, or models that fully or substantially satisfy DSS requirements
  – Calls attention to remaining gaps in DSS needs
  – Makes recommendations regarding:
    • Potential modifications to current efforts to better align with partner needs
    • Potential future DSS enhancement efforts
• Targeted for the end of Year 1

PECAD Example: Upper Level Organization of Evaluation
• Executive Summary
• 1.0 Introduction
• 2.0 Description of PECAD’s DSS
• 3.0 Consideration of NASA Inputs
• 4.0 Identified NASA Technology Gaps in Meeting PECAD Needs
• 5.0 Conclusions

PECAD Example: Requirements & Inputs
• 3.0 Consideration of NASA Inputs
  – 3.1 PECAD’s DSS Requirements
  – 3.2 Matching NASA Inputs Against PECAD Requirements
  – 3.3 Planned NASA Inputs
    • 3.3.1 MODIS Rapid Response Products
    • 3.3.2 TRMM Tailored Products and Systems
    • 3.3.3 Satellite Altimetry Products
    • 3.3.4 Synthesized Products and Predictions
  – 3.4 Potential NASA Inputs
    • 3.4.1 Water Balance
    • 3.4.2 Radiation Budget
    • 3.4.3 Bare Earth Elevation

PECAD Example: Requirements
Working assumptions regarding PECAD’s DSS requirements: general properties.

| DSS Property | Application | Requirement | Drivers |
|---|---|---|---|
| Data Frequency (time step) | Monthly reporting | 1 day, with 10-day summaries | Need to establish trends within 1-month cycle, legacy time step of CADRE |
| | Food security | 1 day | Time requirements of emergency response planners |
| Latency | Monthly reporting | 24 hours or less | Relevance of DSS inputs |
| | Food security | 6 hours or less | Time requirements of emergency response planners |
| Spatial Resolution | Monthly reporting | 25 km or better | Legacy spatial resolution of CADRE |
| | Food security | Synoptic analysis: 25 km or better | Legacy spatial resolution of CADRE |
| | | Field-level analysis: 50 m or better | Smallest interesting field dimension |
| | | Port monitoring: 1 m or better | Width of railroad cars/containers |
| Cost | | Moderate resolution product should be free | Budget constraints |
| Format | | Simple (not HDF) | Capabilities of current and next generation of CADRE |
| Accessibility | | Web accessible | Continue greater public benefit leveraging being demonstrated in Crop Explorer |
| Data validation | | Validated and science team in place | Quality assurance |
| Data redundancy | | Multiple sources | Data continuity, convergence of evidence |

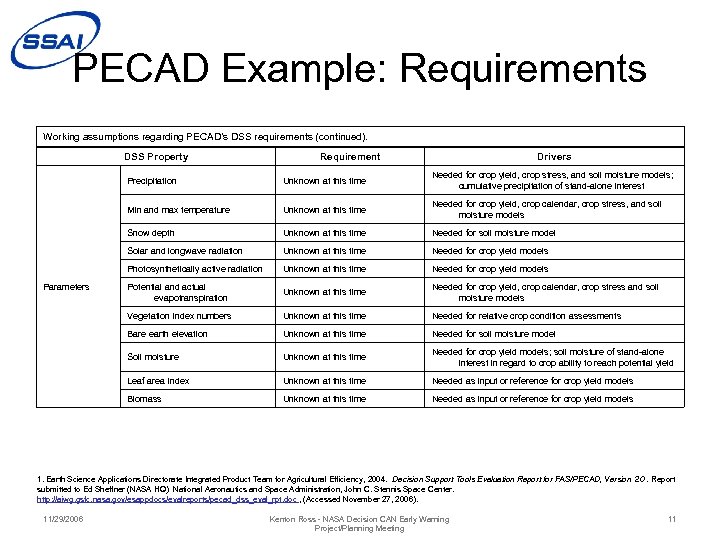

PECAD Example: Requirements
Working assumptions regarding PECAD’s DSS requirements (continued): parameters.

| DSS Property | Requirement | Drivers |
|---|---|---|
| Precipitation | | Needed for crop yield, crop stress, and soil moisture models; cumulative precipitation of stand-alone interest |
| Min and max temperature | Unknown at this time | Needed for crop yield, crop calendar, crop stress, and soil moisture models |
| Snow depth | Unknown at this time | Needed for soil moisture model |
| Solar and longwave radiation | Unknown at this time | Needed for crop yield models |
| Photosynthetically active radiation | Unknown at this time | Needed for crop yield models |
| Potential and actual evapotranspiration | Unknown at this time | Needed for crop yield, crop calendar, crop stress, and soil moisture models |
| Vegetation index numbers | Unknown at this time | Needed for relative crop condition assessments |
| Bare earth elevation | Unknown at this time | Needed for soil moisture model |
| Soil moisture | Unknown at this time | Needed for crop yield models; soil moisture of stand-alone interest in regard to crop ability to reach potential yield |
| Leaf area index | Unknown at this time | Needed as input or reference for crop yield models |
| Biomass | Unknown at this time | Needed as input or reference for crop yield models |

1. Earth Science Applications Directorate Integrated Product Team for Agricultural Efficiency, 2004. Decision Support Tools Evaluation Report for FAS/PECAD, Version 2.0. Report submitted to Ed Sheffner (NASA HQ), National Aeronautics and Space Administration, John C. Stennis Space Center. http://aiwg.gsfc.nasa.gov/esappdocs/evalreports/pecad_dss_eval_rpt.doc (accessed November 27, 2006).

PECAD Example: Requirements/Input Match
Table 6A. DSS requirement/NASA input match (incomplete).

| DSS Required Observation & Predictions | NASA Inputs | Met/Unmet Requirements |
|---|---|---|
| 1. Moderate Resolution Visible/IR Remote Sensing | MODIS data and products | MET: 3-day global coverage → MODIS 1- to 2-day global coverage; MET: 25-km spatial resolution → MODIS 250 m to 1 km; PLANNED: Modify to 10-day time step; PLANNED: Upgrade to 72-hour latency for regular delivery, 2- to 4-hour delivery for Rapid Response |
| a. Surface Reflectance | MODIS Surface Reflectance | MET: Synoptic viewing capability; UNCERTAIN: Accuracy requirement |
| b. Moderate Resolution VIs | MODIS NDVI; MODIS EVI | PLANNED: Continuity to be established by back-processing to year 2000 data; UNCERTAIN: Accuracy requirement; NOTE: MODIS EVI is an enhanced ratio index. PECAD analysts have used an EVI that was a simple difference index (NIR – Red) |
| c. Temperature | MODIS Land Surface Temperature | MET: Measured land surface temperature as opposed to current interpolated temperatures; UNCERTAIN: Accuracy requirement |
| d. Snow Depth | MODIS Snow Cover | ISSUE: MODIS product is only snow cover, not depth; snow depth will be an AMSR Level 3 product. No AMSR Level 3 products will be available before March 2004 |
| e. Leaf Area Index | MODIS LAI | MET: This assumes that PECAD agrees that LAI should be a required parameter; UNCERTAIN: Accuracy requirement |
| f. Actual and Potential ET | MODIS ET | UNMET: There is no plan in writing to deliver the MODIS ET product to PECAD once it is validated. Current PECAD input is based on agrometeorological inputs alone, with no direct sensing of canopies; UNCERTAIN: Accuracy requirement |

1. Earth Science Applications Directorate Integrated Product Team for Agricultural Efficiency, 2004. Decision Support Tools Evaluation Report for FAS/PECAD, Version 2.0. Report submitted to Ed Sheffner (NASA HQ), National Aeronautics and Space Administration, John C. Stennis Space Center. http://aiwg.gsfc.nasa.gov/esappdocs/evalreports/pecad_dss_eval_rpt.doc (accessed November 27, 2006).
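
Where a requirement is quantitative, the MET/UNMET judgment in the match table reduces to comparing the product’s worst-case value with the required threshold. The Python sketch below assumes every listed property is “smaller is better” (finer resolution, shorter coverage interval, lower latency) and treats a missing threshold, such as the undetermined accuracy requirement, as UNCERTAIN. The `classify` function is hypothetical; the numbers come from the table above, except the 6-hour latency threshold, which is taken from the food-security row of the working-assumption requirements.

```python
from typing import Optional


def classify(required_max: Optional[float], offered_worst: float) -> str:
    """Judge a 'smaller is better' property against its requirement.

    Returns UNCERTAIN when no quantitative requirement exists (e.g., accuracy).
    """
    if required_max is None:
        return "UNCERTAIN"
    return "MET" if offered_worst <= required_max else "UNMET"


# (property, required maximum, worst-case offered value)
checks = [
    ("Spatial resolution (m)", 25_000, 1_000),   # 25 km vs MODIS 250 m to 1 km
    ("Global coverage interval (days)", 3, 2),   # 3 days vs MODIS 1 to 2 days
    ("Latency, regular delivery (h)", 6, 72),    # 6 h vs planned 72 h
    ("Latency, Rapid Response (h)", 6, 4),       # 6 h vs 2 to 4 h
]

for prop, required, offered in checks:
    print(f"{prop}: {classify(required, offered)}")
```

Using the worst case of each offered range (1 km, 2 days, 4 hours) keeps the judgment conservative: a MET holds across the whole range the product is advertised to deliver.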

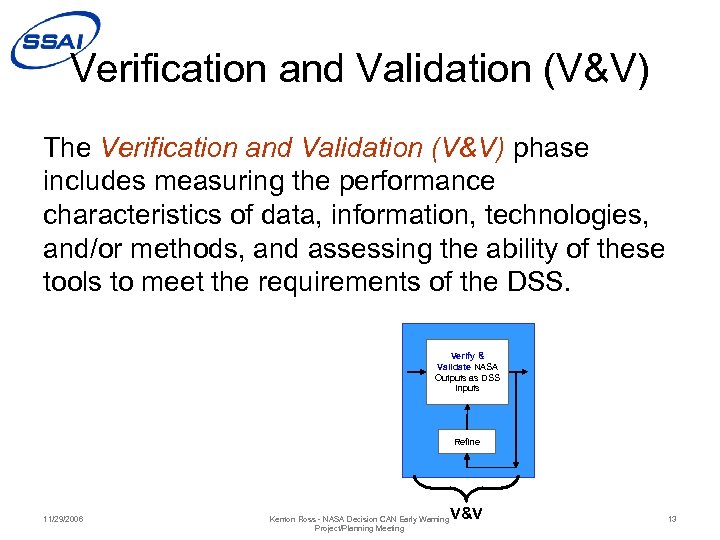

Verification and Validation (V&V)
The Verification and Validation (V&V) phase includes measuring the performance characteristics of data, information, technologies, and/or methods, and assessing the ability of these tools to meet the requirements of the DSS.
[Figure: V&V segment of the process diagram: Verify & Validate NASA Outputs as DSS Inputs, with a Refine loop.]

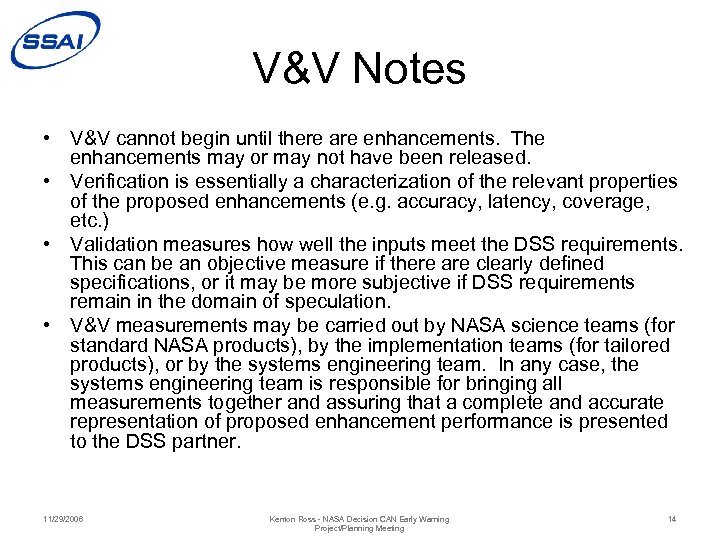

V&V Notes
• V&V cannot begin until there are enhancements. The enhancements may or may not have been released.
• Verification is essentially a characterization of the relevant properties of the proposed enhancements (e.g., accuracy, latency, coverage).
• Validation measures how well the inputs meet the DSS requirements. This can be an objective measure if there are clearly defined specifications, or a more subjective one if DSS requirements remain in the domain of speculation.
• V&V measurements may be carried out by NASA science teams (for standard NASA products), by the implementation teams (for tailored products), or by the systems engineering team. In any case, the systems engineering team is responsible for bringing all measurements together and ensuring that a complete and accurate representation of proposed enhancement performance is presented to the DSS partner.

V&V Report
• The V&V report:
  – Characterizes the performance of proposed DSS enhancing inputs
  – Compares input performance to stated DSS specifications or understood DSS requirements (as captured in the evaluation process)
  – Makes recommendations regarding:
    • Potential modifications to current efforts to better align with partner needs
    • Potential future DSS enhancement efforts
• Targeted for the middle of Year 3

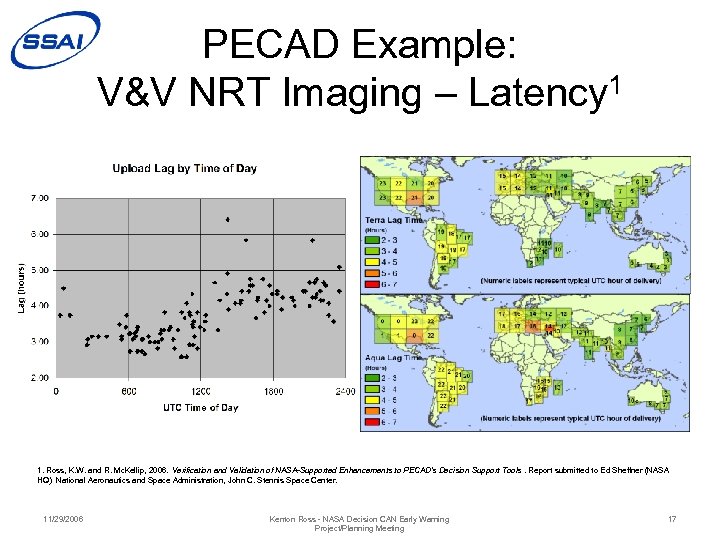

PECAD Example: V&V for Near-Real-Time Imaging
PECAD accuracy, delivery, and coverage requirements for near-real-time imaging.

| Category | Requirement |
|---|---|
| Product accuracy | Undetermined |
| Data time step | 1 day |
| Latency | 6 hours or less |
| Coverage | All land regions important for crop production and food security |

1. Ross, K. W. and R. McKellip, 2006. Verification and Validation of NASA-Supported Enhancements to PECAD’s Decision Support Tools. Report submitted to Ed Sheffner (NASA HQ), National Aeronautics and Space Administration, John C. Stennis Space Center.

PECAD Example: V&V NRT Imaging – Latency¹
1. Ross, K. W. and R. McKellip, 2006. Verification and Validation of NASA-Supported Enhancements to PECAD’s Decision Support Tools. Report submitted to Ed Sheffner (NASA HQ), National Aeronautics and Space Administration, John C. Stennis Space Center.
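
As a hedged illustration of the two V&V steps applied to latency, the Python sketch below first verifies (characterizes) delivery latency from acquisition and delivery timestamps, then validates it by reporting the fraction of deliveries that meet the 6-hour near-real-time requirement. The timestamps are invented for illustration, and `summarize` is a hypothetical helper, not the method used in the cited report.

```python
from datetime import datetime, timedelta
from statistics import median


def latency_hours(acquired: datetime, delivered: datetime) -> float:
    """Elapsed time from acquisition to delivery, in hours."""
    return (delivered - acquired).total_seconds() / 3600


def summarize(pairs, requirement_h: float = 6.0) -> dict:
    """Verification (latency statistics) plus validation (share meeting the requirement)."""
    lat = [latency_hours(a, d) for a, d in pairs]
    met = sum(1 for h in lat if h <= requirement_h)
    return {"median_h": median(lat), "max_h": max(lat), "fraction_met": met / len(lat)}


# Hypothetical acquisition/delivery pairs on four consecutive days.
t0 = datetime(2006, 11, 1, 10, 30)
pairs = [(t0 + timedelta(days=i), t0 + timedelta(days=i, hours=h))
         for i, h in enumerate((2, 3.5, 5, 9))]

print(summarize(pairs))  # {'median_h': 4.25, 'max_h': 9.0, 'fraction_met': 0.75}
```

Reporting the maximum alongside the median matters for emergency-response users: a product can look fast on average while still missing the requirement on some days.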

Benchmarking
In the Benchmarking phase, the adoption of NASA inputs within a DSS and the resulting impacts and outcomes are documented in a Benchmark Report.
[Figure: Benchmarking segment of the process diagram: Benchmark DSS with NASA Inputs, with a Refine loop.]

What Is Benchmarking?
• The term “benchmarking” is used in several ways:
  1. It can mean to compare an entity to best practices found in another entity
  2. It can simply mean to establish a reference or baseline performance
  3. As mentioned earlier, it has been applied to the entire systems engineering process
  4. It can mean to measure current performance against baseline performance
• In applying the EVVB process, we define benchmarking as #4

What to Benchmark?
Is the DSS being benchmarked, or are the proposed NASA enhancements?
• The DSS
  – The benchmarking process is intended to measure the change in the effectiveness of the DSS in response to NASA enhancements
• Measuring the effectiveness of the NASA enhancements is the purpose of validation

What Does Benchmarking Measure?
Or “Can DSS effectiveness be quantified?”
• Ideally, one might measure whether the updated DSS leads to better decisions. In the case of early warning systems, this is so subjective as to be immeasurable.
• Use can be measured directly, and usability can be measured indirectly.

Use and Usability
• Use measures how often content is being used, by whom, for how long, etc. Web content can be tracked through web statistics; hard copy can be tracked through distribution statistics.
• Usability is a quality measure of “the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use.”¹ These qualities can be rated and measured through questionnaires or direct interviews. Relevant topics to measure might include:
  – Ease of decision making
  – Confidence in decision making
1. ISO 9241-11, “Guidance on usability”
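
Web statistics for use can come from ordinary server access logs. The Python sketch below assumes logs in the Common Log Format and counts, per month, successful requests under a path prefix and the number of distinct client hosts. The log lines and the `/maps/` prefix are hypothetical, and distinct hosts only approximate distinct users (proxies and shared addresses blur the count).

```python
import re
from collections import Counter
from datetime import datetime

# host ident authuser [timestamp] "METHOD PATH PROTOCOL" status bytes
CLF = re.compile(r'^(\S+) \S+ \S+ \[([^\]]+)\] "(\S+) (\S+)[^"]*" (\d{3}) \S+')


def monthly_use(lines, path_prefix: str = "/") -> dict:
    """Per month: (successful requests under path_prefix, distinct client hosts)."""
    hits = Counter()
    hosts = {}
    for line in lines:
        m = CLF.match(line)
        if not m:
            continue  # skip malformed lines
        host, stamp, _method, path, status = m.groups()
        if not path.startswith(path_prefix) or not status.startswith("2"):
            continue  # other content, or failed requests
        month = datetime.strptime(stamp, "%d/%b/%Y:%H:%M:%S %z").strftime("%Y-%m")
        hits[month] += 1
        hosts.setdefault(month, set()).add(host)
    return {month: (hits[month], len(hosts[month])) for month in sorted(hits)}


log_lines = [
    '10.0.0.1 - - [01/Nov/2006:08:00:00 -0600] "GET /maps/rainfall.png HTTP/1.1" 200 5120',
    '10.0.0.2 - - [02/Nov/2006:09:15:00 -0600] "GET /maps/ndvi.png HTTP/1.1" 200 4096',
    '10.0.0.1 - - [03/Nov/2006:10:30:00 -0600] "GET /maps/rainfall.png HTTP/1.1" 200 5120',
    '10.0.0.3 - - [04/Dec/2006:11:45:00 -0600] "GET /maps/ndvi.png HTTP/1.1" 404 0',
    '10.0.0.3 - - [05/Dec/2006:12:00:00 -0600] "GET /about.html HTTP/1.1" 200 900',
]

print(monthly_use(log_lines, "/maps/"))  # {'2006-11': (3, 2)}
```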

Benchmarking Process
• The benchmarking process begins with the identification of the relevant decision makers and their requirements in the evaluation stage
• The next step is to establish use and usability baselines
• After the DSS is updated with NASA inputs, use and usability are measured again
• The changes between the baseline and the final measurement are analyzed to gauge the impact of the NASA enhancements
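
Under definition #4 (current performance measured against a baseline), the analysis step reduces to comparing the two measurements. A minimal Python sketch, with invented numbers: percent change for a use metric such as monthly hits, and the mean paired change in 1-to-5 ratings (for example, confidence in decision making) from the same respondents before and after the update. The function names and the 1-to-5 scale are assumptions.

```python
from statistics import mean


def percent_change(baseline: float, after: float) -> float:
    """Relative change from the baseline measurement, in percent."""
    return 100.0 * (after - baseline) / baseline


def rating_shift(baseline_ratings, after_ratings) -> float:
    """Mean paired change in ratings from the same respondents."""
    if len(baseline_ratings) != len(after_ratings):
        raise ValueError("ratings must be paired by respondent")
    return float(mean(after - before for before, after in zip(baseline_ratings, after_ratings)))


# Hypothetical measurements before and after the NASA-enhanced DSS update.
print(percent_change(1200, 1500))                # 25.0 (monthly hits)
print(rating_shift([3, 2, 4, 3], [4, 3, 4, 5]))  # 1.0 (confidence ratings)
```

Pairing ratings by respondent separates a genuine shift in how users judge the DSS from a change in who happened to answer the survey.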

Benchmarking Report
• The Benchmarking Report:
  – Characterizes the change in use and usefulness of the partner DSS
  – Summarizes the overall conclusions of the systems engineering process
  – Makes recommendations regarding:
    • Potential modifications to NASA enhancements as they are transferred from experimental to operational status
    • Potential future DSS enhancement efforts
• Targeted for the end of Year 3

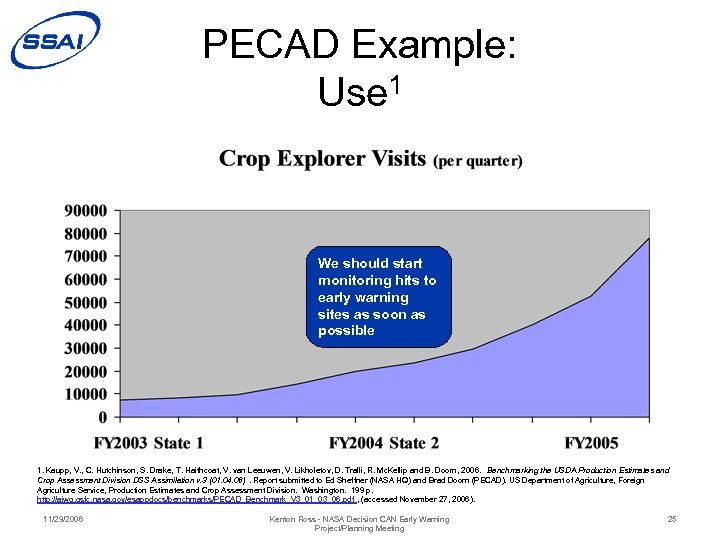

PECAD Example: Use¹
We should start monitoring hits to early warning sites as soon as possible.
1. Kaupp, V., C. Hutchinson, S. Drake, T. Haithcoat, V. van Leeuwen, V. Likholetov, D. Tralli, R. McKellip and B. Doorn, 2006. Benchmarking the USDA Production Estimates and Crop Assessment Division DSS Assimilation v.3 (01.04.06). Report submitted to Ed Sheffner (NASA HQ) and Brad Doorn (PECAD). US Department of Agriculture, Foreign Agriculture Service, Production Estimates and Crop Assessment Division. Washington. 199 p. http://aiwg.gsfc.nasa.gov/esappdocs/benchmarks/PECAD_Benchmark_V3_01_03_06.pdf (accessed November 27, 2006).

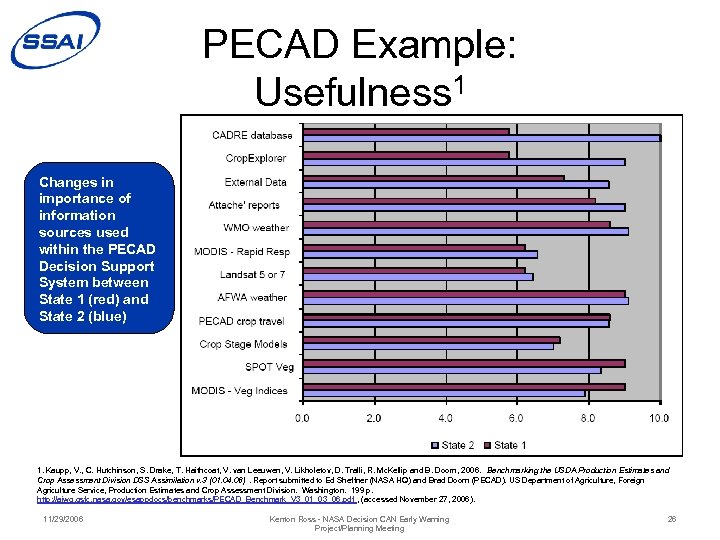

PECAD Example: Usefulness¹
Changes in importance of information sources used within the PECAD Decision Support System between State 1 (red) and State 2 (blue).
1. Kaupp, V., C. Hutchinson, S. Drake, T. Haithcoat, V. van Leeuwen, V. Likholetov, D. Tralli, R. McKellip and B. Doorn, 2006. Benchmarking the USDA Production Estimates and Crop Assessment Division DSS Assimilation v.3 (01.04.06). Report submitted to Ed Sheffner (NASA HQ) and Brad Doorn (PECAD). US Department of Agriculture, Foreign Agriculture Service, Production Estimates and Crop Assessment Division. Washington. 199 p. http://aiwg.gsfc.nasa.gov/esappdocs/benchmarks/PECAD_Benchmark_V3_01_03_06.pdf (accessed November 27, 2006).

EVVB for Early Warning
• Who are the relevant decision makers or, more broadly, users?
  – FEWS NET
  – HealthMapper
• How does the systems engineering team gain access to decision makers/users?
• Are data sources settled, or should alternatives be considered?

Potential Early Warning Precipitation Options
• NOAA NWS Climate Prediction Center
  – RFE (RainFall Estimate)
  – http://www.cpc.ncep.noaa.gov/products/fews/central_america/trmm_rainfall.html
• NOAA NESDIS Satellite Services Division
  – Satellite Precipitation Estimates
  – http://www.ssd.noaa.gov/PS/PCPN/
• NASA GES-DISC DAAC
  – TMPA-RT or 3B42RT (3-hourly Multi-Satellite Precipitation Analysis)
  – http://disc2.nascom.nasa.gov/Giovanni/tovas/realtime.3B42RT.shtml
• NASA Laboratory for Atmospheres
  – Global Precipitation Climatology Project (GPCP)
  – http://precip.gsfc.nasa.gov/
• Navy NRL Monterey
  – NexSat (simulated next-generation satellite precipitation based on infrared imagery tuned with passive microwave)
  – http://www.nrlmry.navy.mil/NEXSAT.html
• UC Irvine
  – HyDIS – PERSIANN System
  – http://hydis8.eng.uci.edu/persiann/
• AFWA AGRMET via UCAR MM5
  – Does not do a true merge; fills between surface observations using SSM/I (DOD passive microwave instrument)
  – http://www.mmm.ucar.edu/mm5v3/data/agrmet.html

Potential Early Warning Humidity Options
• Various assimilation models incorporating AMSU (Advanced Microwave Sounding Unit):
  – NOAA/NASA LDAS
  – GMAO
  – LIS
  – Navy NOGAPS

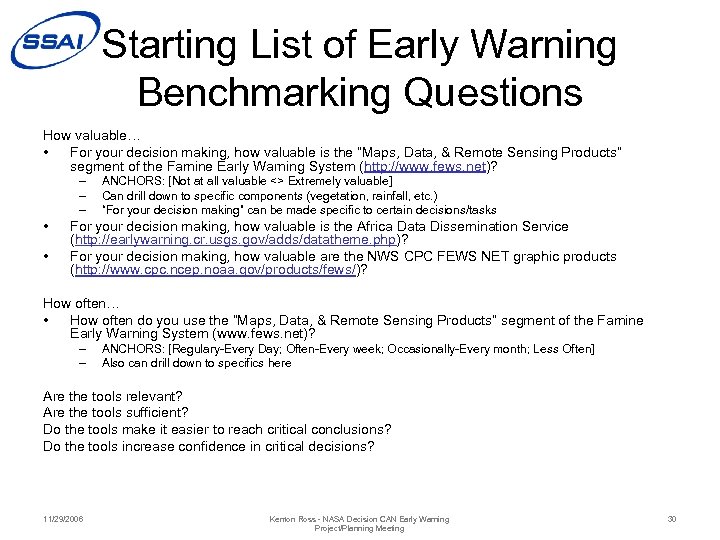

Starting List of Early Warning Benchmarking Questions
How valuable…
• For your decision making, how valuable is the “Maps, Data, & Remote Sensing Products” segment of the Famine Early Warning System (http://www.fews.net)?
  – ANCHORS: [Not at all valuable <> Extremely valuable]
  – Can drill down to specific components (vegetation, rainfall, etc.)
  – “For your decision making” can be made specific to certain decisions/tasks
• For your decision making, how valuable is the Africa Data Dissemination Service (http://earlywarning.cr.usgs.gov/adds/datatheme.php)?
• For your decision making, how valuable are the NWS CPC FEWS NET graphic products (http://www.cpc.ncep.noaa.gov/products/fews/)?
How often…
• How often do you use the “Maps, Data, & Remote Sensing Products” segment of the Famine Early Warning System (www.fews.net)?
  – ANCHORS: [Regularly-Every Day; Often-Every week; Occasionally-Every month; Less Often]
  – Also can drill down to specifics here
• Are the tools relevant?
• Are the tools sufficient?
• Do the tools make it easier to reach critical conclusions?
• Do the tools increase confidence in critical decisions?
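
Responses to the “How often” question arrive as verbal anchors, so they need an ordinal coding before baseline and post-update surveys can be compared. The Python sketch below assumes a 1 to 4 coding with higher meaning more frequent; it tabulates the responses and reports the lower median, which always falls on an actual anchor. The codes and sample responses are hypothetical.

```python
from collections import Counter

# Hypothetical ordinal coding of the "How often" anchors (higher = more frequent).
FREQUENCY_CODES = {
    "Less Often": 1,
    "Occasionally-Every month": 2,
    "Often-Every week": 3,
    "Regularly-Every Day": 4,
}


def tabulate(responses) -> dict:
    """Count responses per anchor, listed from least to most frequent use."""
    counts = Counter(responses)
    unknown = set(counts) - set(FREQUENCY_CODES)
    if unknown:
        raise ValueError(f"unrecognized anchors: {sorted(unknown)}")
    return {anchor: counts[anchor] for anchor in FREQUENCY_CODES}


def median_anchor(responses) -> str:
    """Lower median of the coded responses, mapped back to its anchor label."""
    codes = sorted(FREQUENCY_CODES[r] for r in responses)
    code = codes[(len(codes) - 1) // 2]
    return next(a for a, c in FREQUENCY_CODES.items() if c == code)


responses = ["Often-Every week", "Regularly-Every Day", "Often-Every week",
             "Less Often", "Occasionally-Every month"]
print(tabulate(responses))
print(median_anchor(responses))  # Often-Every week
```

A median (rather than a mean) suits these anchors because the spacing between “Every week” and “Every day” is not the same as between “Every month” and “Every week”.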

Starting List of Early Warning Benchmarking Questions (cont.)

Discussion?
