d64b0980c7d1e2443514ad3793612adf.ppt

- Number of slides: 21

BaBar Data Distribution using the Storage Resource Broker
Adil Hasan, Wilko Kroeger (SLAC Computing Services), Dominique Boutigny (LAPP), Cristina Bulfon (INFN, Rome), Jean-Yves Nief (ccin2p3), Liliana Martin (Paris VI et VII), Andreas Petzold (TUD), Jim Cochran (ISU) (on behalf of the BaBar Computing Group)
IX International Workshop on Advanced Computing and Analysis Techniques in Physics Research, KEK, Japan, 1-5 December 2003

BaBar – the parameters (computing-wise)
• ~80 institutions in Europe and North America.
• 5 Tier A computing centers:
  – SLAC (USA), ccin2p3 (France), RAL (UK), GridKA (Germany), Padova (Italy).
• Processing of data done in Padova.
• Bulk of simulation production done by remote institutions.
• BaBar computing is highly distributed.
• Reliable data distribution is essential to BaBar.

The SRB
• The Storage Resource Broker (SRB) is developed by the San Diego Supercomputer Center (SDSC).
• A client-server middleware for connecting heterogeneous data resources.
• Provides a uniform method to access the resources.
• Provides a relational database backend to record file metadata (the metadata catalog, called MCAT) and access control lists (ACLs).
• Can use Grid Security Infrastructure (GSI) authentication.
• Also provides audit information.

The SRB
• SRB v3:
  – Defines an SRB zone comprising one MCAT and one or more SRB servers.
  – Provides applications to federate zones (synchronize MCATs, create users, handle data belonging to different zones).
  – Within one federation all SRB servers need to run on the same port.
  – Allows an SRB server at one site to belong to more than one zone.

The SRB in BaBar
• The SRB feature-set makes it a useful tool for data distribution.
• The Particle Physics Data Grid (PPDG) effort initiated interest in SRB.
• PPDG and BaBar collaboration effort has gone into testing and deploying the SRB in BaBar.

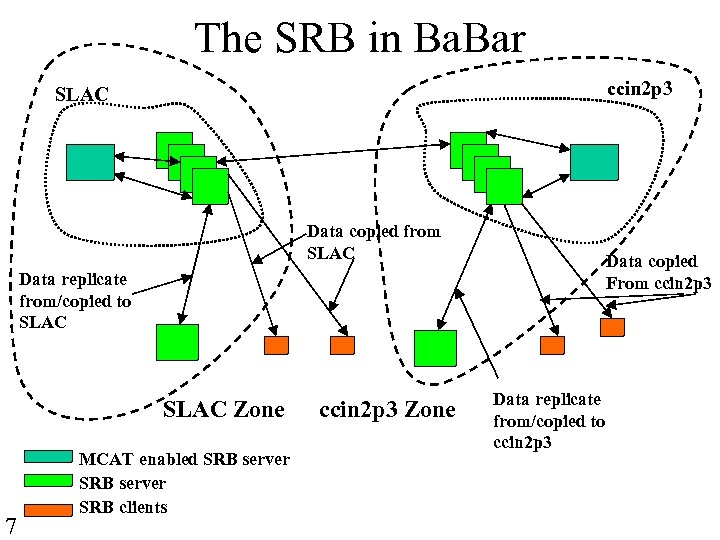

The SRB in BaBar
• The BaBar system has 2 MCATs: one at SLAC and one at ccin2p3.
• SRB v3 is used to create and federate the two zones: the SLAC zone and the ccin2p3 zone.
• The advantage is that a client can connect to either SLAC or ccin2p3 and see the files at the other site.

The SRB in BaBar
[Diagram of the two federated zones. Labels: SLAC Zone; ccin2p3 Zone; MCAT-enabled SRB server; SRB clients; data copied from SLAC; data copied from ccin2p3; data replicated from/copied to SLAC; data replicated from/copied to ccin2p3.]

Data Distribution using SRB
• BaBar data distribution with SRB consists of the following steps:
  – Publish files available for distribution in MCAT (publication I).
  – Locate files to distribute (location).
  – Distribute files (distribution).
  – Publish distributed files (publication II).
• Each of these steps requires the user to belong to some ACL (authorization).

Authorization
• BaBarGrid currently uses the European Data Grid Virtual Organization (VO).
  – Consists of a Lightweight Directory Access Protocol (LDAP) database holding certificate Distinguished Name (DN) strings for all BaBar members.
  – Used to update Globus grid-mapfiles.
• SRB authentication is akin to a grid-mapfile:
  – Maps SRB username to DN string.
  – SRB username doesn't have to map to a UNIX username.
• Developing an application to obtain user DN strings from the VO.
  – App is experiment neutral.
  – Has the ability to include information from the Virtual Organization Management System.
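The username-to-DN mapping described above can be illustrated with a minimal sketch. It assumes a grid-mapfile-style text layout (a quoted DN followed by a user name); the function name `parse_dn_map`, the DNs and the user names are all hypothetical, and nothing here calls SRB or Globus.

```python
import shlex


def parse_dn_map(text):
    """Parse grid-mapfile-style lines: a quoted DN followed by a user name."""
    mapping = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = shlex.split(line)  # honours the quotes around the DN
        if len(parts) != 2:
            raise ValueError(f"malformed line: {line!r}")
        dn, user = parts
        mapping[dn] = user
    return mapping


# Hypothetical entries; these DNs and user names are made up for illustration.
EXAMPLE = '''
# DN -> SRB user name (need not be a UNIX account name)
"/C=US/O=Example Lab/CN=Alice Example" alice_srb
"/C=FR/O=Example Institute/CN=Bob Example" bob_srb
'''

dn_to_user = parse_dn_map(EXAMPLE)
```

Looking up `dn_to_user["/C=US/O=Example Lab/CN=Alice Example"]` then gives the mapped user name for that certificate holder.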

Publication I
• The initial publication step (event store files) entails:
  – Publication of files into the SRB MCAT once files have been produced and published in BaBar bookkeeping.
  – Files are grouped into collections based on run range, release, production type (SRB collection != BaBar collection).
  – Extra metadata information (such as file UUID, BaBar collection name) stored in MCAT.
  – SRB object name contains processing spec, etc. that uniquely identify the object.
  – ~5K event files (or SRB objects) per SRB collection.

Publication I
• Detector conditions files are more complicated, as the files are constantly updated (i.e. not closed).
• As files are updated in SRB, users need to be prevented from taking an inconsistent copy.
• Unfortunately SRB does not currently permit locking of collections.

Publication I
• Have devised a workaround:
  – Register conditions file objects under a date-specified collection.
  – Register a 'locator file' object containing the conditions date-specified collection name.
  – Then, new conditions files are registered under a new date-specified collection.
  – 'Locator file' contents are updated with the new date-specified collection.
• This method prevents users from taking an inconsistent set of files.
• Only two sets kept at any one time.
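The locator-file workaround can be sketched with a toy in-memory model. This is an illustration only: the `Catalog` class, its method names and the `conditions/<date>` naming are assumptions, not the SRB API or the actual BaBar scripts.

```python
class Catalog:
    """Toy in-memory stand-in for a catalog of collections (not the SRB API)."""

    def __init__(self):
        self.collections = {}  # collection name -> {file name: contents}
        self.locator = None    # contents of the 'locator file'

    def publish(self, date, files):
        """Register a new conditions set, then repoint the locator at it."""
        name = f"conditions/{date}"           # hypothetical naming scheme
        self.collections[name] = dict(files)  # 1. register the complete new set
        self.locator = name                   # 2. only then update the locator
        # 3. keep only the two most recent sets (ISO dates sort chronologically)
        for old in sorted(self.collections)[:-2]:
            del self.collections[old]

    def fetch(self):
        """Read the locator first, then copy the whole set it points to."""
        name = self.locator
        return name, dict(self.collections[name])


catalog = Catalog()
catalog.publish("2003-11-01", {"cond.db": "v1"})
catalog.publish("2003-11-08", {"cond.db": "v2"})
catalog.publish("2003-11-15", {"cond.db": "v3"})
current_name, current_files = catalog.fetch()
```

In this toy model a reader never sees a half-written set, because the locator is only updated after the new collection is complete, and the previous set is still present for a reader that resolved the locator just before the update.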

Location & Distribution
• Location and distribution happen in one client application.
• User supplies BaBar collection name from BaBar bookkeeping.
• SRB searches MCAT for files that have that collection name as metadata.
• Files are then copied to target site.
• SRB allows a simple checksum to be performed.
  – But the checksum is not md5 or cksum.
  – Still can be useful.
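The locate-then-copy flow above can be sketched against a local filesystem. Everything here is a stand-in: the catalog is a plain dict, the metadata key `babar_collection` is hypothetical, and CRC-32 is used only as an example of a simple checksum (the slides do not say which algorithm SRB uses).

```python
import shutil
import tempfile
import zlib
from pathlib import Path


def checksum(path):
    """Simple CRC-32 checksum; a stand-in, not the checksum SRB computes."""
    return zlib.crc32(Path(path).read_bytes())


def locate(catalog, collection_name):
    """Return paths whose metadata carries the given collection name."""
    return [path for path, meta in catalog.items()
            if meta.get("babar_collection") == collection_name]


def distribute(paths, target_dir):
    """Copy each file to the target and verify it with the simple checksum."""
    target_dir = Path(target_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in paths:
        dst = target_dir / Path(src).name
        shutil.copyfile(src, dst)
        if checksum(src) != checksum(dst):
            raise IOError(f"checksum mismatch for {src}")
        copied.append(dst)
    return copied


def demo():
    """Build a tiny fake catalog, then locate and distribute one collection."""
    with tempfile.TemporaryDirectory() as tmp:
        source = Path(tmp) / "source"
        source.mkdir()
        catalog = {}
        for name, coll in [("f1.dat", "collA"), ("f2.dat", "collA"),
                           ("f3.dat", "collB")]:
            path = source / name
            path.write_bytes(name.encode())
            catalog[str(path)] = {"babar_collection": coll}
        copied = distribute(locate(catalog, "collA"), Path(tmp) / "target")
        return sorted(p.name for p in copied)
```

Calling `demo()` copies only the files tagged with the requested collection name and raises if any copy fails the checksum comparison.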

Location & Distribution
• SRB allows 3rd-party replication.
  – But most likely we will always run the distribution command from the source or target site.
• Also have the ability to create a logical resource of more than 1 physical resource.
  – Can replicate to all resources with one command.
  – Useful if more than 1 site regularly needs the data.

Publication II
• Optionally can register the copied file in MCAT (decision a matter of policy).
• Extra step for data distribution to ccin2p3:
  – Publication of files in the ccin2p3 MCAT.
  – Required since the current SRB v3 does not allow replication across zones.
• Extra step is not a problem since the data needs an integrity check before publishing anyway.
• Important note: data can be published & accessed at ccin2p3 or SLAC since the MCATs will be synchronized regularly.

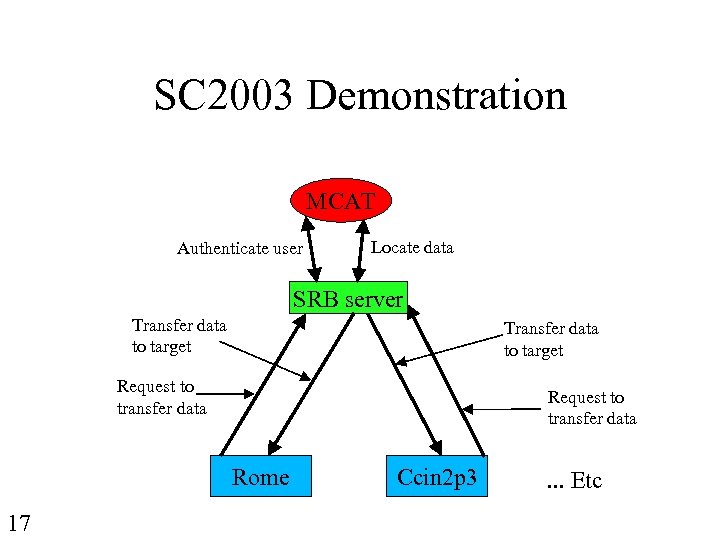

SC2003 demonstration
• Demonstrated distribution of detector conditions files, using the scheme previously described, to 5 sites:
  – SLAC, ccin2p3, Rome, Bristol, Iowa State.
• Data were distributed over 2 servers at SLAC and files were copied in a round-robin manner from each server.
• Files were continuously copied and deleted at the target site.
• Demonstration ran 1 full week continuously without problems.
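The round-robin selection of source servers can be illustrated in a few lines. This is a generic sketch, not the demonstration code: the function name and the server and file names are hypothetical.

```python
from itertools import cycle


def assign_round_robin(files, servers):
    """Pair each file with a source server, cycling through the servers."""
    if not servers:
        raise ValueError("at least one server is required")
    # zip stops when the file list is exhausted; cycle repeats the servers.
    return list(zip(files, cycle(servers)))


# Hypothetical names for two source servers and three files.
plan = assign_round_robin(["a.db", "b.db", "c.db"], ["server1", "server2"])
```

With two servers, consecutive files alternate between them, which spreads the transfer load evenly across the sources.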

SC2003 Demonstration
[Diagram. Labels: MCAT; SRB server; authenticate user; locate data; request to transfer data; transfer data to target; target sites: Rome, ccin2p3, etc.]

SC2003 demonstration

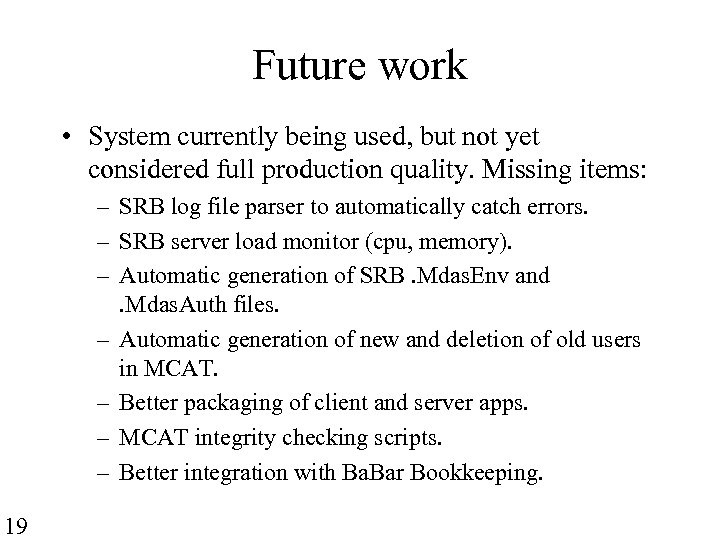

Future work
• System currently being used, but not yet considered full production quality. Missing items:
  – SRB log file parser to automatically catch errors.
  – SRB server load monitor (cpu, memory).
  – Automatic generation of SRB .MdasEnv and .MdasAuth files.
  – Automatic generation of new and deletion of old users in MCAT.
  – Better packaging of client and server apps.
  – MCAT integrity checking scripts.
  – Better integration with BaBar bookkeeping.

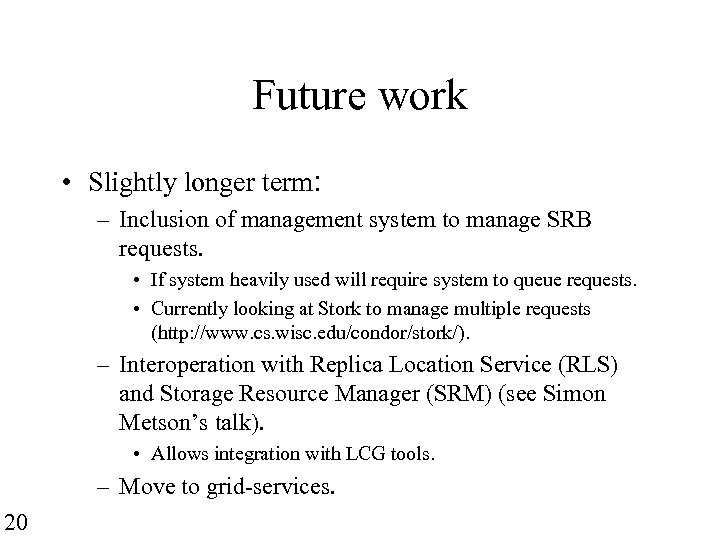

Future work
• Slightly longer term:
  – Inclusion of a management system to manage SRB requests.
    • If the system is heavily used, it will need to queue requests.
    • Currently looking at Stork to manage multiple requests (http://www.cs.wisc.edu/condor/stork/).
  – Interoperation with Replica Location Service (RLS) and Storage Resource Manager (SRM) (see Simon Metson's talk).
    • Allows integration with LCG tools.
  – Move to grid-services.

Summary
• Extensive testing and interaction with SRB developers has allowed BaBar to develop a data distribution system based on existing grid middleware.
• Used for distributing conditions files to 5 sites since October.
• Will be used to distribute:
  – Detector conditions files.
  – Event store files.
  – Random trigger files (used for simulation).
• BaBar's data distribution system is sufficiently modular that it can be adapted to other environments.
