Automatically Generating Custom Instruction Set Extensions
Nathan Clark, Wilkin Tang, Scott Mahlke
Workshop on Application Specific Processors

Problem Statement
- There's a demand for high-performance, low-power special-purpose systems
  - E.g. cell phones, network routers, PDAs
- One way to achieve these goals is augmenting a general-purpose processor with Custom Function Units (CFUs)
  - Combine several primitive operations
- We propose an automated method for CFU generation

System Overview

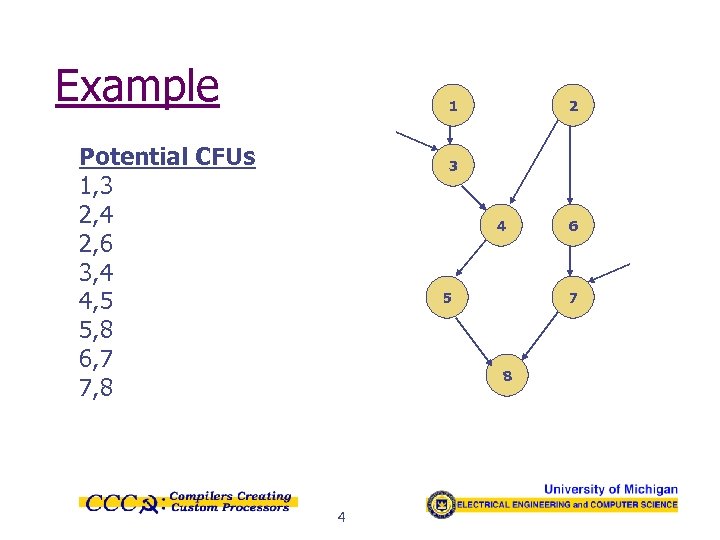

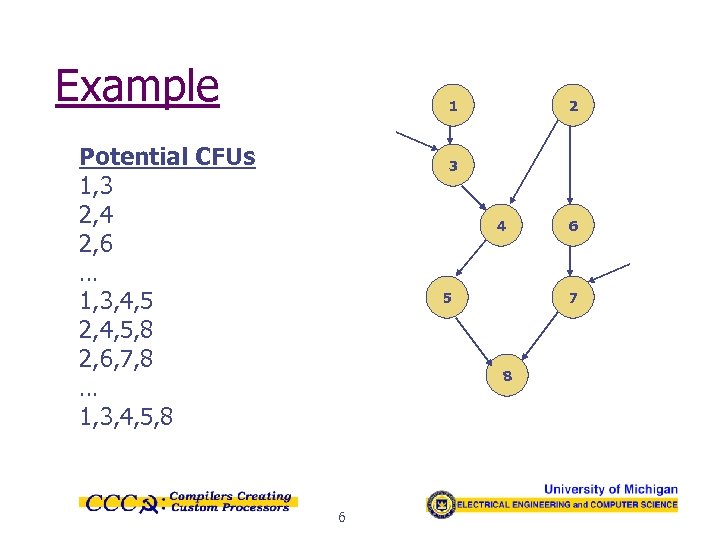

Example
- Potential CFUs: (1, 3), (2, 4), (2, 6), (3, 4), (4, 5), (5, 8), (6, 7), (7, 8)
[Figure: DFG with nodes 1 through 8]

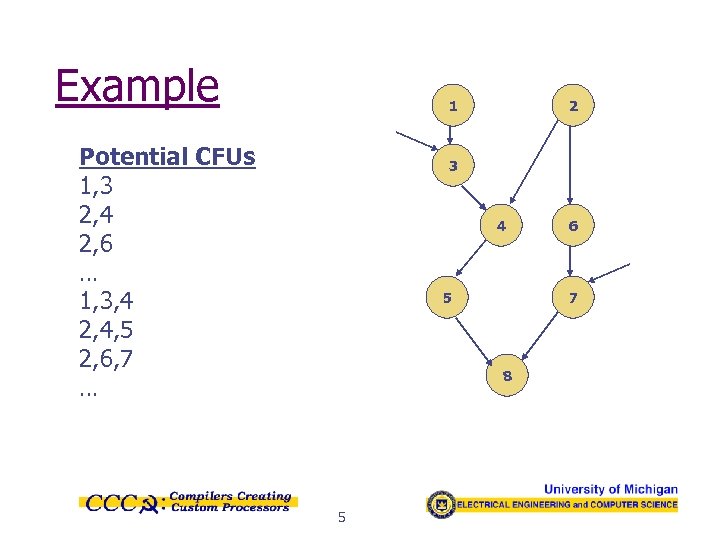

Example
- Potential CFUs: (1, 3), (2, 4), (2, 6), …, (1, 3, 4), (2, 4, 5), (2, 6, 7), …
[Figure: DFG with nodes 1 through 8]

Example
- Potential CFUs: (1, 3), (2, 4), (2, 6), …, (1, 3, 4, 5), (2, 4, 5, 8), (2, 6, 7, 8), …, (1, 3, 4, 5, 8)
[Figure: DFG with nodes 1 through 8]
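The way candidates grow from pairs into larger groups in the example can be sketched roughly as below. This is a minimal illustration, not the authors' discovery algorithm: it assumes the two-operation candidates listed on the slide are the DFG edges, treats connectivity as undirected, and grows each candidate by one adjacent node at a time. The names `EDGES`, `neighbors`, and `grow_candidates` are hypothetical.

```python
# Assumed DFG edges, read off the two-operation candidates in the example.
EDGES = [(1, 3), (2, 4), (2, 6), (3, 4), (4, 5), (5, 8), (6, 7), (7, 8)]


def neighbors(edges):
    """Build an undirected adjacency map from a list of edges."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj


def grow_candidates(edges, max_ops):
    """Return {size: set of frozensets} of connected candidates up to max_ops."""
    adj = neighbors(edges)
    levels = {2: {frozenset(e) for e in edges}}
    for size in range(3, max_ops + 1):
        grown = set()
        for cand in levels[size - 1]:
            # Extend the candidate by any node adjacent to one of its members.
            frontier = set().union(*(adj[n] for n in cand)) - cand
            for node in frontier:
                grown.add(cand | {node})
        levels[size] = grown
    return levels


levels = grow_candidates(EDGES, max_ops=5)
for size, cands in levels.items():
    print(size, len(cands))
```

Because every connected group is kept, this sketch produces more candidates than the slide lists; a real system would presumably prune them, for example by port count or area.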

Characterization
- Use the macro library to get information on each potential CFU
  - Latency is the sum of each primitive's latency
  - Area is the sum of each primitive's macrocell area
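A small sketch of this characterization step, assuming a macro library that maps each primitive to a latency and an area. The `Macro` type, the `MACRO_LIBRARY` table, its units, and all numbers in it are hypothetical and only illustrate the two sums.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Macro:
    latency: float  # assumed unit: fraction of one clock cycle
    area: float     # assumed unit: relative to one adder


# Hypothetical macro library; the numbers are illustrative only.
MACRO_LIBRARY = {
    "ADD": Macro(latency=0.6, area=1.0),
    "AND": Macro(latency=0.1, area=0.1),
    "XOR": Macro(latency=0.1, area=0.2),
    "LSL": Macro(latency=0.1, area=0.3),
}


def characterize(ops):
    """Return (latency, area) of a candidate as plain sums over its primitives."""
    latency = sum(MACRO_LIBRARY[op].latency for op in ops)
    area = sum(MACRO_LIBRARY[op].area for op in ops)
    return latency, area


latency, area = characterize(["AND", "ADD", "XOR"])
print(f"latency={latency:.2f} cycles, area={area:.2f} adders")
```

Summing latencies matches the rule stated on the slide; for candidates whose operations run in parallel, the sum is a conservative estimate.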

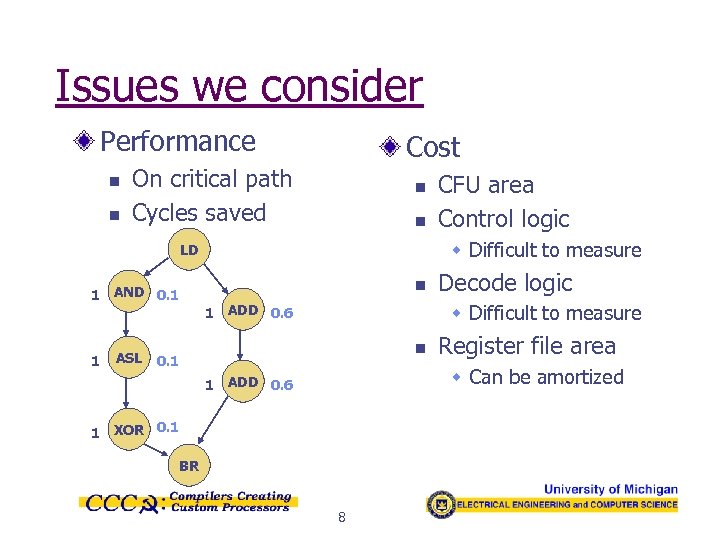

Issues we consider
- Performance
  - On critical path
  - Cycles saved
- Cost
  - CFU area
  - Control logic
    - Difficult to measure
  - Decode logic
    - Difficult to measure
  - Register file area
    - Can be amortized
[Figure: DFG with LD, AND, ASL, ADD, ADD, XOR, and BR operations, annotated with values such as 1, 0.1, and 0.6]

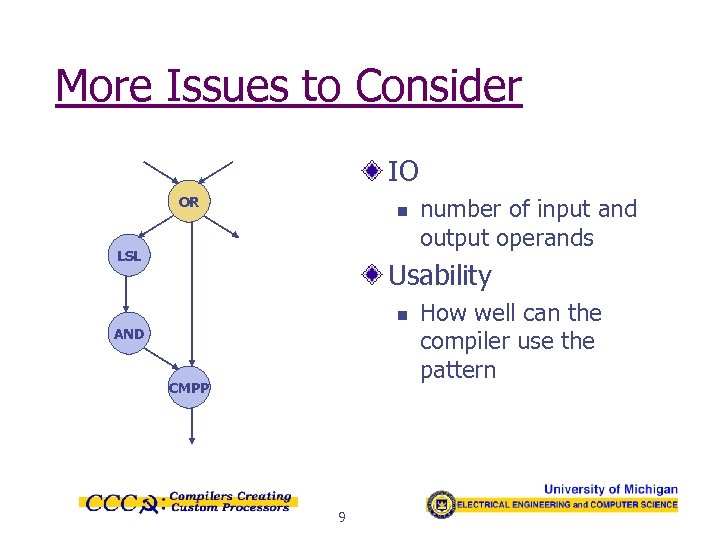

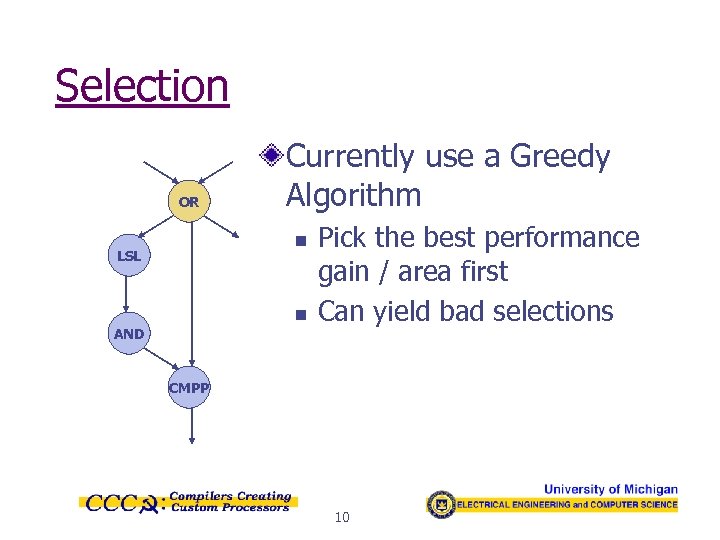

More Issues to Consider
- IO
  - Number of input and output operands
- Usability
  - How well can the compiler use the pattern
[Figure: DFG with OR, LSL, AND, and CMPP operations]
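The IO concern can be made concrete by counting the values that cross a candidate's boundary. The sketch below assumes a DFG stored as a map from each operation to its operand sources, plus a set of values that are live after the block. The function `count_io` and the tiny graph are hypothetical.

```python
def count_io(operands, live_out, candidate):
    """Count distinct inputs and outputs of a candidate set of operations.

    operands: {op: [sources]} where a source is another op or an external value.
    live_out: ops whose results are needed after the block.
    """
    candidate = set(candidate)
    # Inputs: operand sources produced outside the candidate.
    inputs = {src for op in candidate for src in operands[op] if src not in candidate}
    # Outputs: results consumed by an op outside the candidate, or live-out.
    outputs = set()
    for op in candidate:
        used_outside = any(
            op in srcs for other, srcs in operands.items() if other not in candidate
        )
        if used_outside or op in live_out:
            outputs.add(op)
    return len(inputs), len(outputs)


# Hypothetical four-operation DFG over external values x, y, z.
operands = {
    "t1": ["x", "y"],
    "t2": ["t1", "z"],
    "t3": ["t2", "x"],
    "t4": ["t1", "t3"],
}
live_out = {"t4"}

print(count_io(operands, live_out, {"t1", "t2"}))
print(count_io(operands, live_out, {"t1", "t2", "t3", "t4"}))
```

A candidate whose counts exceed the register file's available read or write ports would be a natural one to reject, although the slides do not state the exact limits used.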

Selection
- Currently use a greedy algorithm
  - Pick the best performance gain / area first
  - Can yield bad selections
[Figure: DFG with OR, LSL, AND, and CMPP operations]
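A greedy pick by performance gain per unit area might look like the following. This is a sketch under assumptions: candidates are reduced to a hypothetical `(name, cycles_saved, area)` tuple, an area budget is imposed, and overlap between candidates is ignored. The numbers are made up to show how greedy can go wrong.

```python
def greedy_select(candidates, area_budget):
    """Pick candidates in decreasing gain/area order while they fit the budget.

    candidates: list of (name, cycles_saved, area) tuples with area > 0.
    Overlap between candidates is ignored in this sketch.
    """
    ranked = sorted(candidates, key=lambda c: c[1] / c[2], reverse=True)
    chosen, remaining = [], area_budget
    for name, cycles_saved, area in ranked:
        if area <= remaining:
            chosen.append(name)
            remaining -= area
    return chosen


# Hypothetical numbers chosen to show a bad greedy pick.
candidates = [("X", 7, 6), ("Y", 5, 5), ("Z", 5, 5)]
picked = greedy_select(candidates, area_budget=10)
print(picked)
```

Here X has the best ratio and is taken first, leaving too little area for Y or Z. The greedy result saves 7 cycles, while choosing Y and Z together would save 10 within the same budget.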

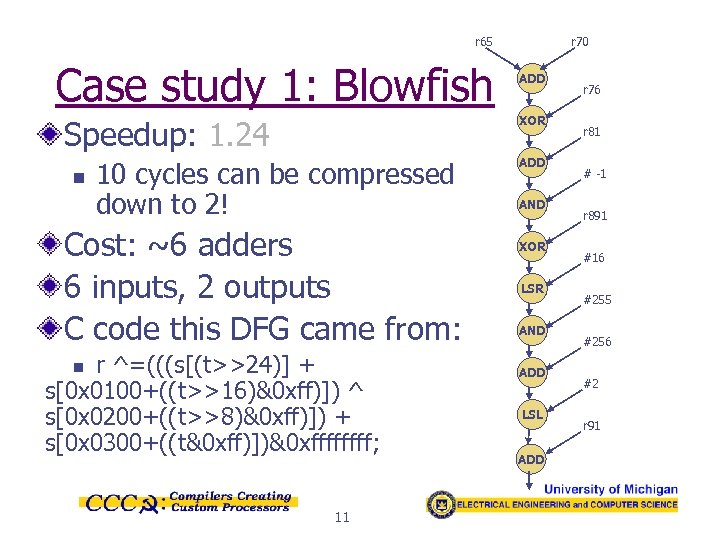

Case study 1: Blowfish
- Speedup: 1.24
  - 10 cycles can be compressed down to 2!
- Cost: ~6 adders
- 6 inputs, 2 outputs
- C code this DFG came from:
  - r ^= (((s[(t>>24)] + s[0x0100+((t>>16)&0xff)]) ^ s[0x0200+((t>>8)&0xff)]) + s[0x0300+(t&0xff)])&0xffff;
[Figure: DFG of ADD, XOR, AND, LSR, and LSL operations over registers such as r65 and r70 and constants such as #16, #255, and #256]
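To make the primitive operations in this expression easier to see, here is a software model of the C statement, split into its table lookups and the ADD/XOR/AND chain. It assumes `t` is an unsigned 32-bit value and `s` is a table of at least 1024 entries. The function name is hypothetical, and the sketch does not decide which of these operations end up inside the CFU.

```python
def blowfish_round_update(r, t, s):
    """Model of the slide's statement: r ^= (((a + b) ^ c) + d) & 0xffff."""
    a = s[t >> 24]                      # LSR, then load
    b = s[0x0100 + ((t >> 16) & 0xff)]  # LSR, AND, ADD, then load
    c = s[0x0200 + ((t >> 8) & 0xff)]   # LSR, AND, ADD, then load
    d = s[0x0300 + (t & 0xff)]          # AND, ADD, then load
    return r ^ ((((a + b) ^ c) + d) & 0xffff)


# Toy table where each entry equals its index, for easy checking by hand.
table = list(range(1024))
print(blowfish_round_update(0, 0x01020304, table))
```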

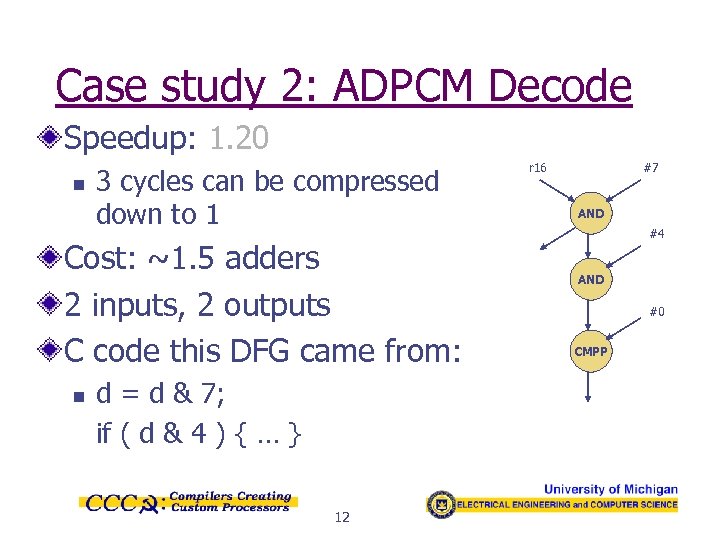

Case study 2: ADPCM Decode
- Speedup: 1.20
  - 3 cycles can be compressed down to 1
- Cost: ~1.5 adders
- 2 inputs, 2 outputs
- C code this DFG came from:
  - d = d & 7; if ( d & 4 ) { … }
[Figure: DFG in which r16 and #7 feed an AND, whose result feeds an AND with #4, followed by a CMPP against #0]
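As a rough software model of what fusing these three operations computes, the sketch below returns both the masked value and the branch predicate in one call. The function name `and_and_cmpp` is hypothetical, and this only mirrors the C fragment, not the hardware.

```python
def and_and_cmpp(d):
    """Model of AND #7, AND #4, CMPP #0 fused into a single operation."""
    masked = d & 7            # d = d & 7
    pred = (masked & 4) != 0  # predicate for: if (d & 4)
    return masked, pred


print(and_and_cmpp(13))
print(and_and_cmpp(3))
```

The two return values correspond to the two outputs listed on the slide: the updated `d` and the predicate that guards the `if` body.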

Experimental Setup
- CFU recognition implemented in the Trimaran research infrastructure
- Speedup shown is with CFUs relative to a baseline machine
  - Four-wide VLIW with predication
  - Can issue at most one integer, floating-point, memory, and branch instruction per cycle
  - 300 MHz clock
- CFU latency is estimated using standard cells from Synopsys' design library

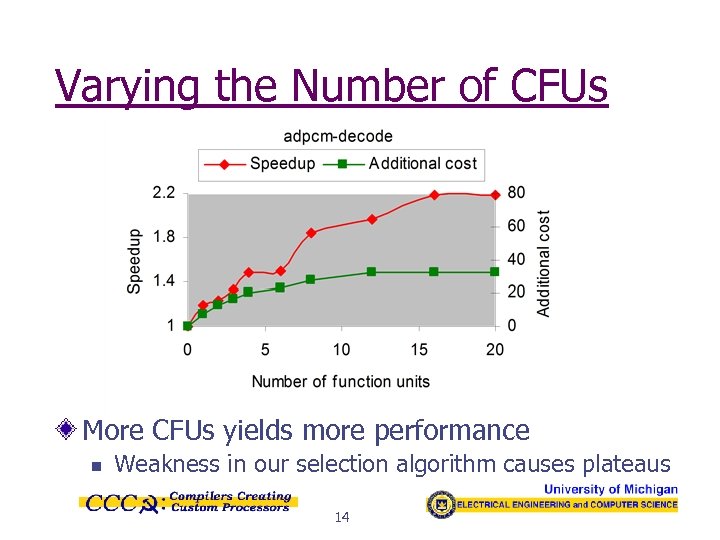

Varying the Number of CFUs
- More CFUs yield more performance
  - Weakness in our selection algorithm causes plateaus

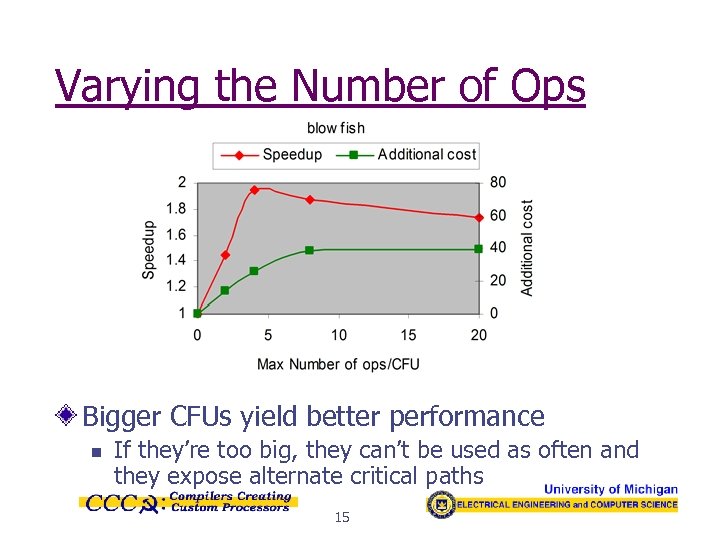

Varying the Number of Ops
- Bigger CFUs yield better performance
  - If they're too big, they can't be used as often and they expose alternate critical paths

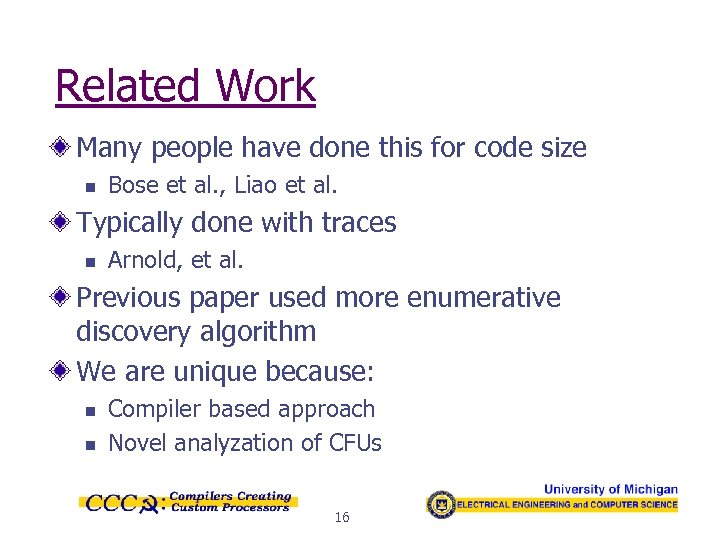

Related Work
- Many people have done this for code size
  - Bose et al., Liao et al.
- Typically done with traces
  - Arnold et al.
- Previous paper used a more enumerative discovery algorithm
- We are unique because:
  - Compiler-based approach
  - Novel analysis of CFUs

Conclusion and Future Work
- CFUs have the potential to offer big performance gain for small cost
- Recognize more complex subgraphs
  - Generalized acyclic/cyclic subgraphs
- Develop our system to automatically synthesize application-tailored coprocessors
