437df23415ff1560ab4f611dc7bdeba5.ppt

- Number of slides: 26

Automatic Tuning of Two-Level Caches to Embedded Applications

Ann Gordon-Ross and Frank Vahid*
Department of Computer Science and Engineering
University of California, Riverside
*Also with the Center for Embedded Computer Systems, UC Irvine

Nikil Dutt
Center for Embedded Computer Systems
School for Information and Computer Science
University of California, Irvine

This work was supported by the U.S. National Science Foundation and by the Semiconductor Research Corporation.

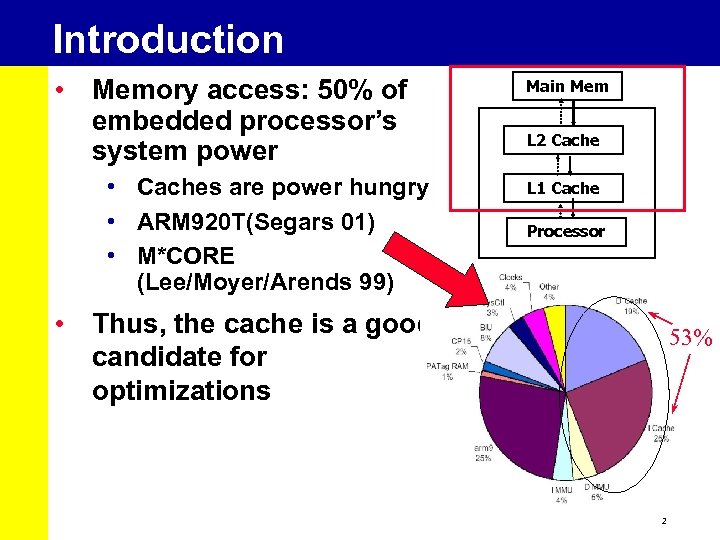

Introduction
• Memory access: 50% of an embedded processor’s system power
• Caches are power hungry
  – ARM920T (Segars ’01)
  – M*CORE (Lee/Moyer/Arends ’99)
• Thus, the cache is a good candidate for optimization
[Figure: memory hierarchy (Processor, L1 Cache, L2 Cache, Main Mem); chart label: 53%]

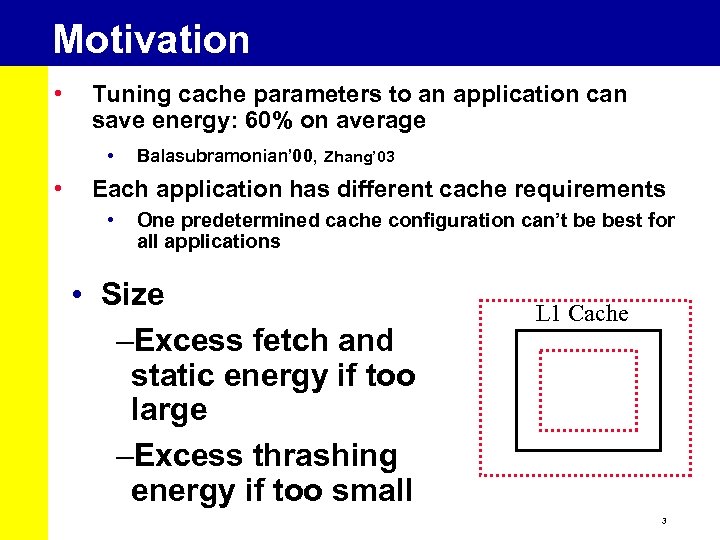

Motivation
• Tuning cache parameters to an application can save energy: 60% on average
  – Balasubramonian ’00, Zhang ’03
• Each application has different cache requirements
• One predetermined cache configuration can’t be best for all applications
• Size
  – Excess fetch and static energy if too large
  – Excess thrashing energy if too small

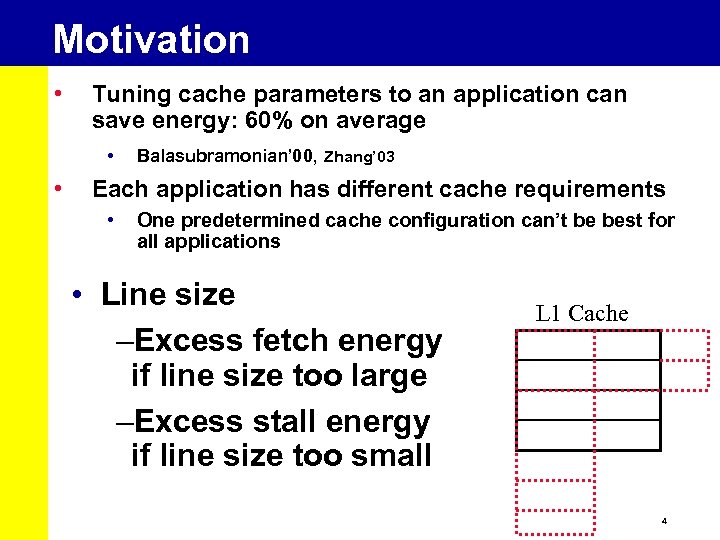

Motivation
• Line size
  – Excess fetch energy if line size too large
  – Excess stall energy if line size too small

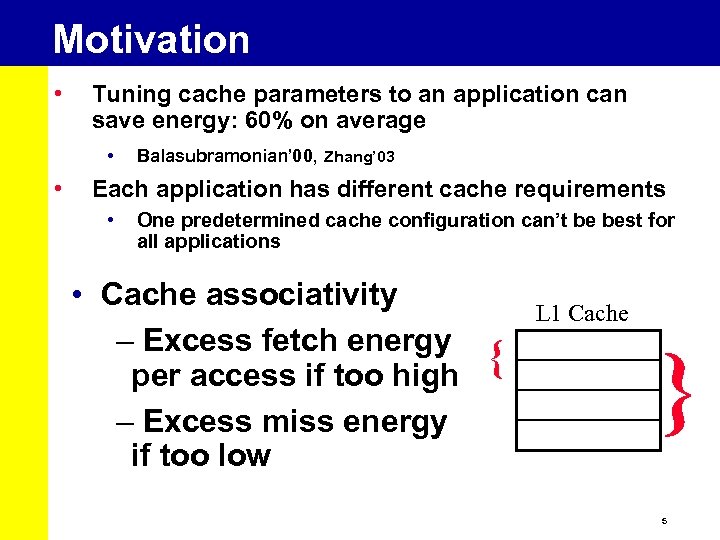

Motivation
• Cache associativity
  – Excess fetch energy per access if too high
  – Excess miss energy if too low

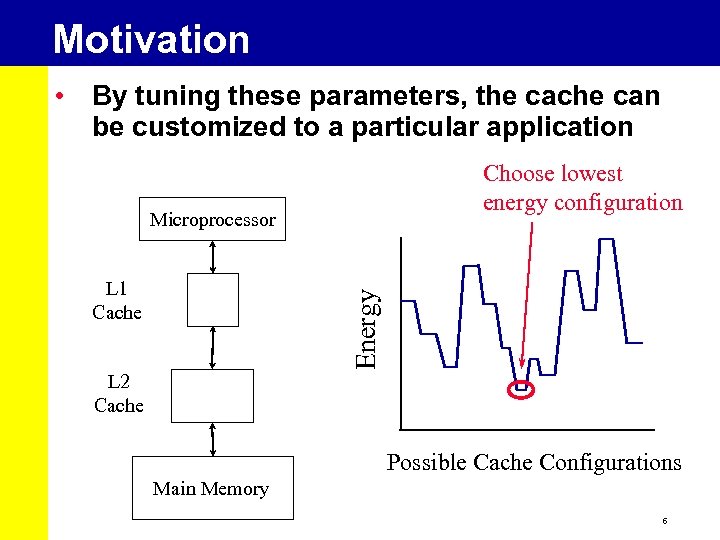

Motivation
• By tuning these parameters, the cache can be customized to a particular application
[Figure: tuning of the L1 and L2 caches between the microprocessor and main memory; energy plotted over the possible cache configurations; choose the lowest-energy configuration]

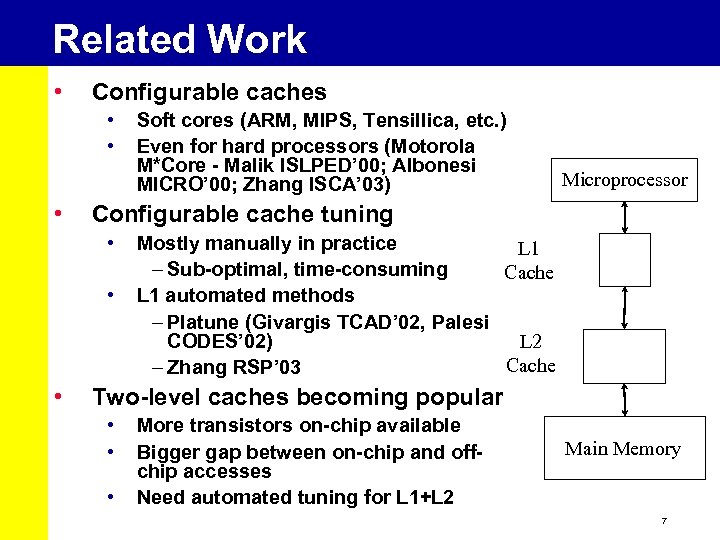

Related Work
• Configurable caches
  – Soft cores (ARM, MIPS, Tensilica, etc.)
  – Even for hard processors (Motorola M*CORE: Malik ISLPED ’00; Albonesi MICRO ’00; Zhang ISCA ’03)
• Configurable cache tuning
  – Mostly manual in practice: sub-optimal, time-consuming
  – L1 automated methods: Platune (Givargis TCAD ’02, Palesi CODES ’02); Zhang RSP ’03
• Two-level caches becoming popular
  – More transistors available on-chip
  – Bigger gap between on-chip and off-chip accesses
  – Need automated tuning for L1+L2

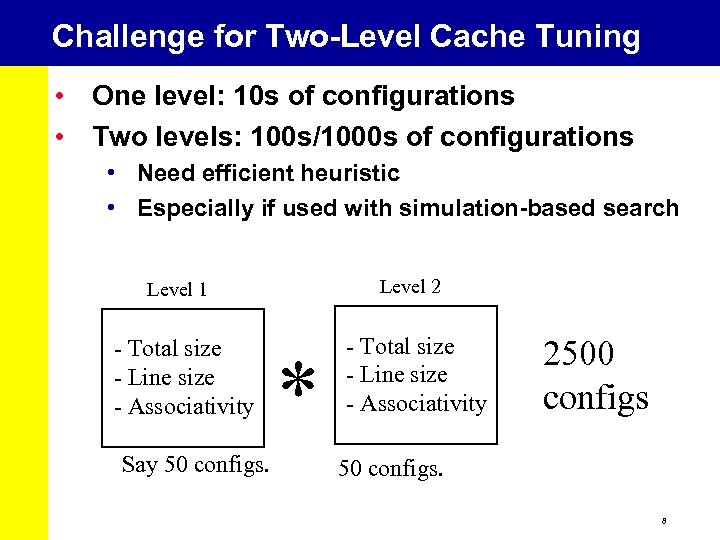

Challenge for Two-Level Cache Tuning
• One level: 10s of configurations
• Two levels: 100s/1000s of configurations
• Need an efficient heuristic
  – Especially if used with simulation-based search
[Figure: Level 1 (total size, line size, associativity), say 50 configs. × Level 2 (total size, line size, associativity), 50 configs. = 2500 configs]

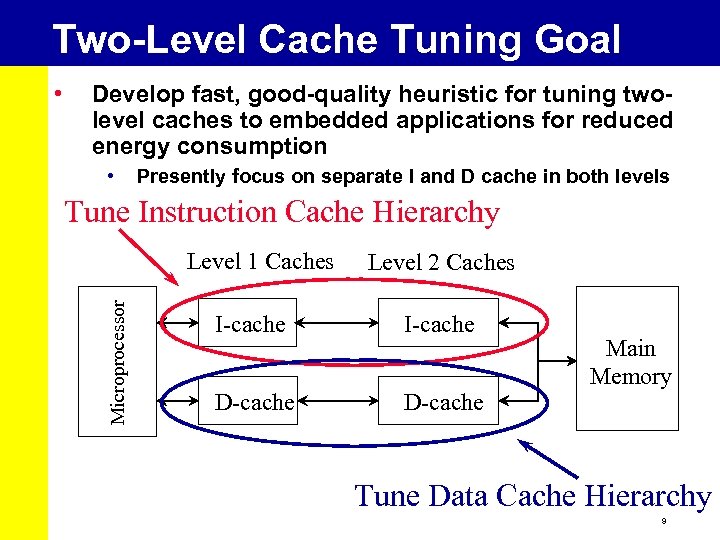

Two-Level Cache Tuning Goal
• Develop a fast, good-quality heuristic for tuning two-level caches to embedded applications for reduced energy consumption
• Presently focus on separate I and D caches at both levels
[Figure: Microprocessor with an I-cache and a D-cache at Level 1 and at Level 2, backed by Main Memory; tune the instruction cache hierarchy; tune the data cache hierarchy]

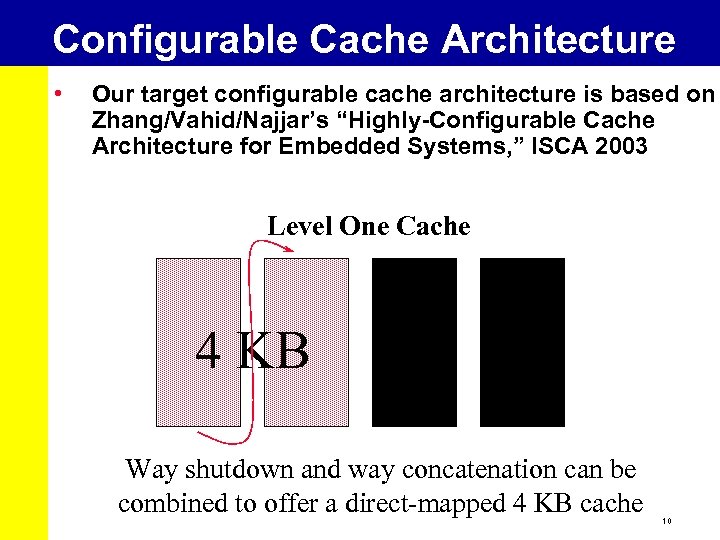

Configurable Cache Architecture
• Our target configurable cache architecture is based on Zhang/Vahid/Najjar’s “A Highly-Configurable Cache Architecture for Embedded Systems,” ISCA 2003
• Base level one cache: an 8 KB cache consisting of four 2 KB banks that can operate as 4 ways
  – Way concatenation: the banks can be concatenated to form a 2-way or direct-mapped 8 KB cache
  – Way shutdown: offers a 2-way 4 KB cache or a direct-mapped 2 KB cache
  – Way shutdown and way concatenation can be combined to offer a direct-mapped 4 KB cache

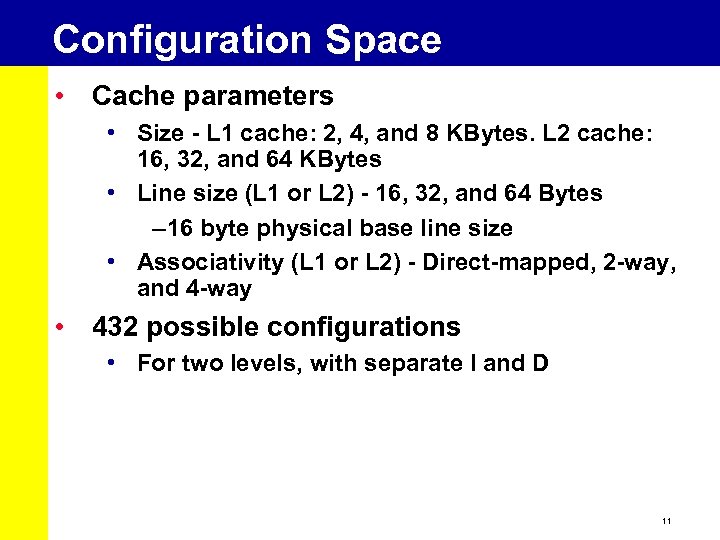

Configuration Space
• Cache parameters
  – Size: L1 cache: 2, 4, and 8 KBytes; L2 cache: 16, 32, and 64 KBytes
  – Line size (L1 or L2): 16, 32, and 64 Bytes (16-byte physical base line size)
  – Associativity (L1 or L2): direct-mapped, 2-way, and 4-way
• 432 possible configurations
  – For two levels, with separate I and D caches
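The 432 count can be reproduced by enumeration, but only under two assumptions this slide does not state: the L2 cache uses the same four-bank arrangement as the L1 (smallest size direct-mapped only, middle size up to 2 ways, largest size up to 4 ways), and an L2 line is never smaller than the L1 line. Counting the instruction and data hierarchies separately and adding them then gives 2 × 216 = 432. This is a hypothetical check of the arithmetic, not the authors’ tool.

```python
from itertools import product

L1_SIZES_KB = (2, 4, 8)
L2_SIZES_KB = (16, 32, 64)
LINE_SIZES_B = (16, 32, 64)


def valid_assocs(size_index):
    """Assumed four-bank constraint: the smallest size is direct-mapped only,
    the middle size allows up to 2 ways, the largest allows up to 4 ways."""
    return (1, 2, 4)[: size_index + 1]


def level_configs(sizes):
    """All (size, line, assoc) triples allowed at one cache level."""
    return [
        (size, line, assoc)
        for i, size in enumerate(sizes)
        for line in LINE_SIZES_B
        for assoc in valid_assocs(i)
    ]


def hierarchy_configs():
    """(L1, L2) pairs, assuming the L2 line is at least as large as the L1 line."""
    return [
        (l1, l2)
        for l1, l2 in product(level_configs(L1_SIZES_KB), level_configs(L2_SIZES_KB))
        if l2[1] >= l1[1]
    ]


per_hierarchy = len(hierarchy_configs())
total = 2 * per_hierarchy  # separate instruction and data hierarchies, counted additively
print(per_hierarchy, total)
```

Each level has 18 valid configurations, and the line-size rule keeps 6 of the 9 line-size pairings, which is where 216 per hierarchy comes from.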

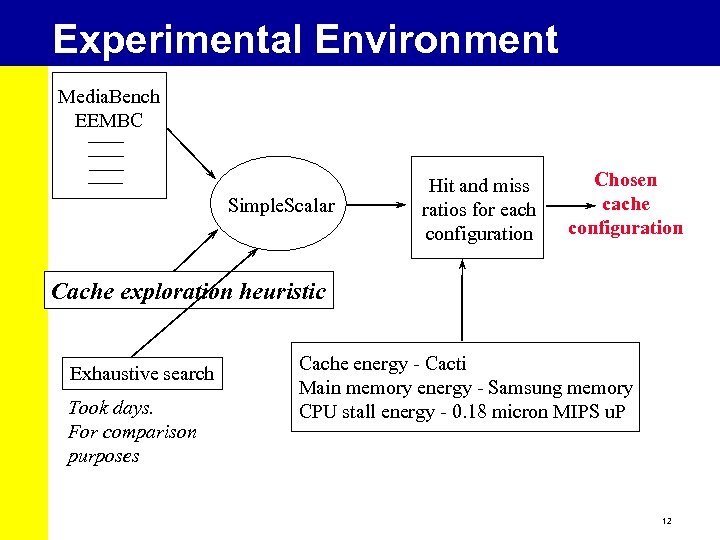

Experimental Environment
• Benchmarks: MediaBench and EEMBC
• SimpleScalar provides hit and miss ratios for each configuration
• Energy model
  – Cache energy: Cacti
  – Main memory energy: Samsung memory
  – CPU stall energy: 0.18 micron MIPS µP
• The cache exploration heuristic outputs the chosen cache configuration
• Exhaustive search, for comparison purposes, took days
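The slide names the inputs to the energy estimate but not the formula that combines them. As a hypothetical sketch only, one simple way to turn per-configuration hit/miss ratios into a single energy number is to charge every access to L1, every L1 miss to L2, and every L2 miss to main memory plus a CPU stall. The per-event energies are placeholders (not Cacti, Samsung, or MIPS figures), and the model ignores static energy and line-fill energy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnergyParams:
    """Hypothetical per-event energies in nJ (placeholder values, not from the paper)."""
    l1_access: float
    l2_access: float
    mem_access: float
    stall_per_mem_access: float


def hierarchy_energy(accesses, l1_miss_ratio, l2_miss_ratio, p):
    """Simplified dynamic energy of one cache hierarchy.

    Every access reaches L1, L1 misses reach L2, and L2 misses reach main
    memory and stall the CPU. l2_miss_ratio is the local L2 miss ratio.
    Static energy and line-fill energy are ignored in this sketch.
    """
    l2_accesses = accesses * l1_miss_ratio
    mem_accesses = l2_accesses * l2_miss_ratio
    return (accesses * p.l1_access
            + l2_accesses * p.l2_access
            + mem_accesses * (p.mem_access + p.stall_per_mem_access))


params = EnergyParams(l1_access=0.5, l2_access=2.0, mem_access=20.0, stall_per_mem_access=10.0)
example = hierarchy_energy(1_000_000, 0.05, 0.2, params)
```

A search heuristic only needs such a function to rank configurations, so the same loop works whether the ratios come from simulation or from hardware counters.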

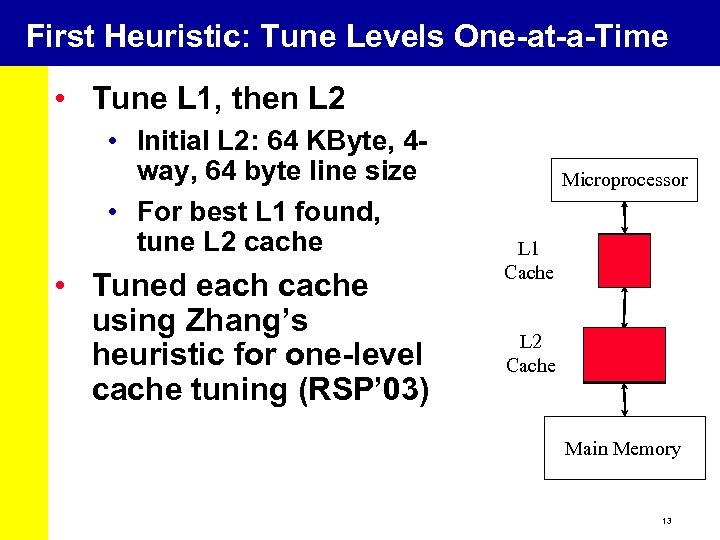

First Heuristic: Tune Levels One-at-a-Time
• Tune L1, then L2
  – Initial L2: 64 KByte, 4-way, 64 byte line size
  – For the best L1 found, tune the L2 cache
• Tuned each cache using Zhang’s heuristic for one-level cache tuning (RSP ’03)

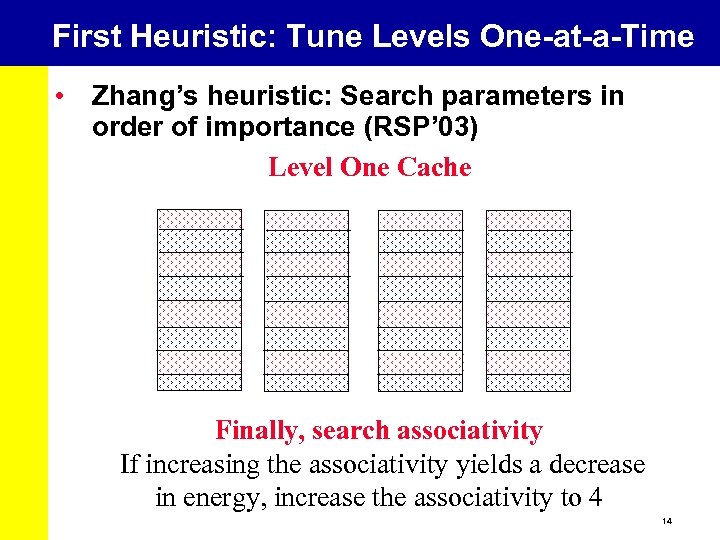

First Heuristic: Tune Levels One-at-a-Time
• Zhang’s heuristic: search parameters in order of importance (RSP ’03)
• Level one cache
  – First, search size: begin with a 2 KByte, direct-mapped cache with a 16 Byte line size. Increase the size to 4 KB. If the increase in cache size yields a decrease in energy, increase the cache size to 8 KB.
  – Next, search line size: for the lowest-energy cache size, increase the line size to 32 Bytes. If the increase in line size yields a decrease in energy, increase the line size to 64 Bytes.
  – Finally, search associativity: for the lowest-energy line size, increase the associativity to 2. If increasing the associativity yields improvements in energy, increase the associativity to 4.
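The one-level search order above, and the first two-level heuristic built on it, can be sketched as a greedy loop. This is a hypothetical illustration: `energy` stands in for whatever per-configuration estimate is used (simulation or hardware measurement) and should return `float("inf")` for configurations the bank arrangement does not allow. The L2 starting point (smallest size, line, and associativity) is an assumption, since the slides only give the initial L2 used while tuning L1.

```python
from typing import Callable, Dict, Sequence, Tuple

Config = Dict[str, int]
Search = Sequence[Tuple[str, Sequence[int]]]

# Parameters in order of importance, with candidate values (KB, bytes, ways).
L1_SEARCH: Search = [("size", (2, 4, 8)), ("line", (16, 32, 64)), ("assoc", (1, 2, 4))]
L2_SEARCH: Search = [("size", (16, 32, 64)), ("line", (16, 32, 64)), ("assoc", (1, 2, 4))]


def tune_one_level(energy: Callable[[Config], float], search: Search, start: Config) -> Config:
    """Greedy one-level search: one parameter at a time, in the given order.

    Each parameter is increased step by step while energy keeps decreasing;
    the lowest-energy value is kept before moving to the next parameter.
    """
    best = dict(start)
    best_e = energy(best)
    for name, values in search:
        for value in values[values.index(best[name]) + 1:]:
            trial = {**best, name: value}
            e = energy(trial)
            if e >= best_e:  # no improvement: stop increasing this parameter
                break
            best, best_e = trial, e
    return best


def tune_levels_one_at_a_time(energy: Callable[[Config, Config], float]) -> Tuple[Config, Config]:
    """First heuristic: tune L1 with a fixed L2, then tune L2 for the best L1."""
    l2_initial = {"size": 64, "line": 64, "assoc": 4}  # initial L2 from the slide
    l1 = tune_one_level(lambda c: energy(c, l2_initial), L1_SEARCH,
                        {"size": 2, "line": 16, "assoc": 1})
    # Hypothetical L2 starting point: smallest size, line, and associativity.
    l2 = tune_one_level(lambda c: energy(l1, c), L2_SEARCH,
                        {"size": 16, "line": 16, "assoc": 1})
    return l1, l2
```

Because L1 is fixed before L2 is searched, any effect an L2 choice would have had on the best L1 is never seen, which is the weakness the next slides describe.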

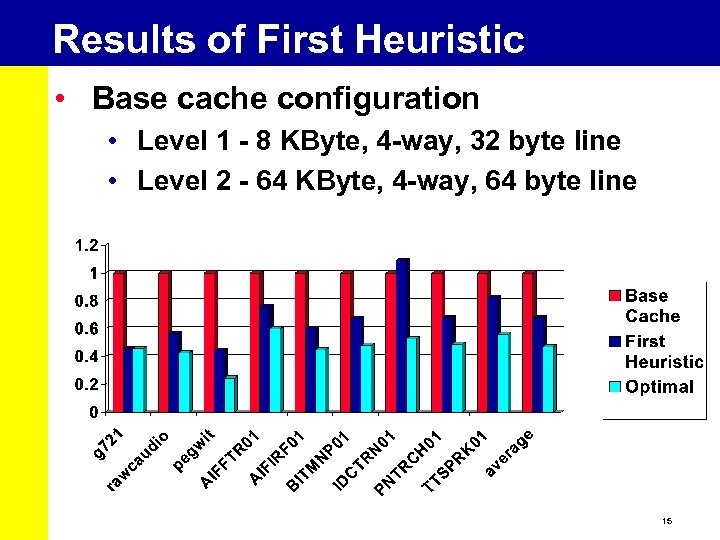

Results of First Heuristic
• Base cache configuration
  – Level 1: 8 KByte, 4-way, 32 byte line
  – Level 2: 64 KByte, 4-way, 64 byte line

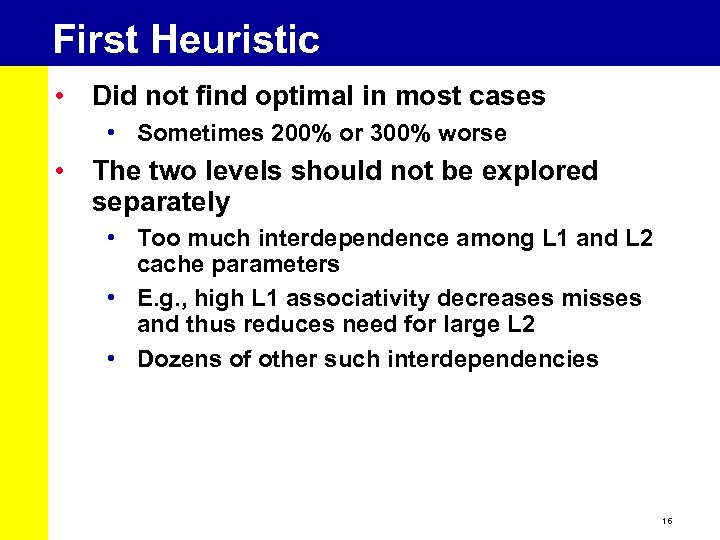

First Heuristic
• Did not find the optimal configuration in most cases
  – Sometimes 200% or 300% worse
• The two levels should not be explored separately
  – Too much interdependence among L1 and L2 cache parameters
  – E.g., high L1 associativity decreases misses and thus reduces the need for a large L2
  – Dozens of other such interdependencies

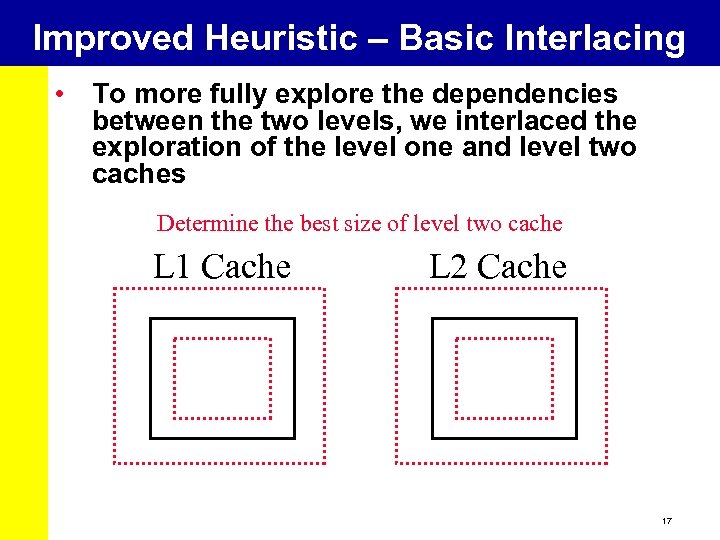

Improved Heuristic – Basic Interlacing
• To more fully explore the dependencies between the two levels, we interlaced the exploration of the level one and level two caches
  – Determine the best size of the level one cache, then of the level two cache

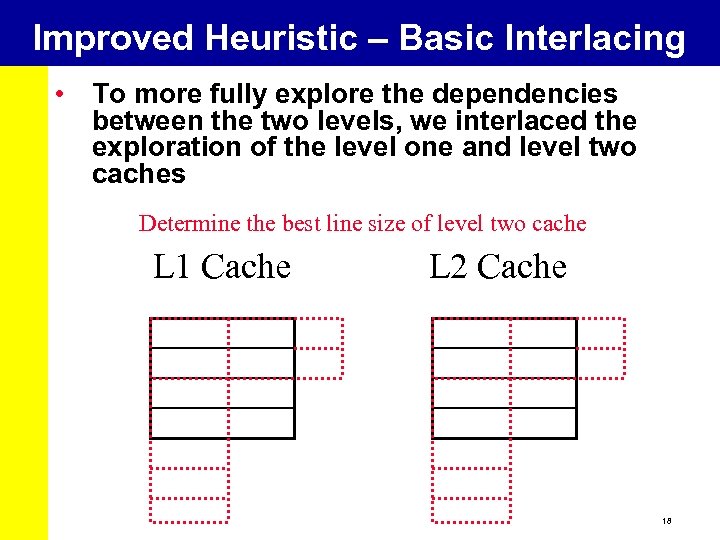

Improved Heuristic – Basic Interlacing
  – Determine the best line size of the level one cache, then of the level two cache

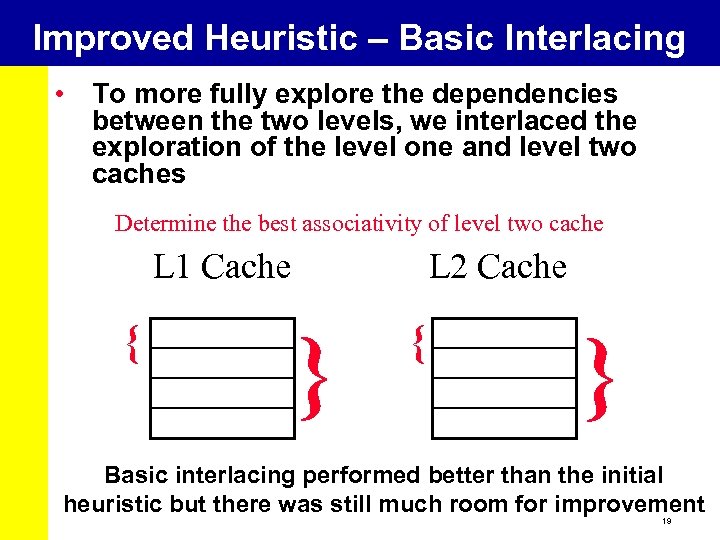

Improved Heuristic – Basic Interlacing
  – Determine the best associativity of the level one cache, then of the level two cache
• Basic interlacing performed better than the initial heuristic, but there was still much room for improvement
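Basic interlacing reorders the greedy steps so that each parameter is searched at L1 and then at L2 before moving on to the next parameter. The sketch below is a hypothetical illustration, not the authors’ code: `energy(l1, l2)` stands in for the per-configuration estimate of the whole hierarchy, and the search is assumed to start from the smallest value of every parameter at both levels.

```python
from typing import Callable, Dict

Config = Dict[str, int]
Energy = Callable[[Config, Config], float]

# Candidate values per level and parameter (sizes in KB, lines in bytes, ways).
CANDIDATES = {
    "L1": {"size": (2, 4, 8), "line": (16, 32, 64), "assoc": (1, 2, 4)},
    "L2": {"size": (16, 32, 64), "line": (16, 32, 64), "assoc": (1, 2, 4)},
}


def search_param(energy: Energy, caches: Dict[str, Config], level: str, name: str) -> Dict[str, Config]:
    """Increase one parameter of one level while total hierarchy energy decreases."""
    best_e = energy(caches["L1"], caches["L2"])
    values = CANDIDATES[level][name]
    for value in values[values.index(caches[level][name]) + 1:]:
        trial = {**caches, level: {**caches[level], name: value}}
        e = energy(trial["L1"], trial["L2"])
        if e >= best_e:  # no improvement: stop increasing this parameter
            break
        caches, best_e = trial, e
    return caches


def tune_interlaced(energy: Energy):
    """Basic interlacing: for each parameter, search L1 and then L2."""
    # Hypothetical start: smallest value of every parameter at both levels.
    caches = {"L1": {"size": 2, "line": 16, "assoc": 1},
              "L2": {"size": 16, "line": 16, "assoc": 1}}
    for name in ("size", "line", "assoc"):  # order of importance
        for level in ("L1", "L2"):          # interlace the two levels
            caches = search_param(energy, caches, level, name)
    return caches["L1"], caches["L2"]
```

Every energy evaluation here scores the full L1+L2 pair, so a change at one level is always judged against the current state of the other.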

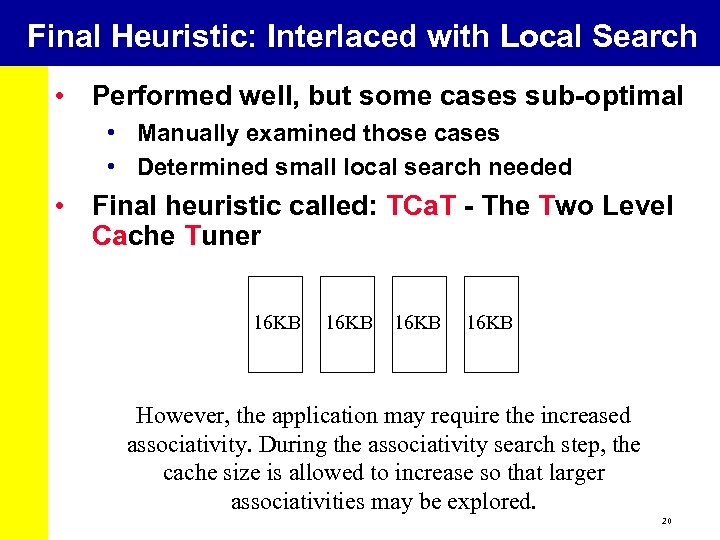

Final Heuristic: Interlaced with Local Search
• Interlacing performed well, but some cases were sub-optimal
  – Manually examined those cases
  – Determined that a small local search was needed
• Final heuristic called TCaT: The Two-Level Cache Tuner
• Local search example (16 KB cache): because of the bank arrangements, if a 16 KB cache is determined to be the best size, the only associativity option is direct-mapped. However, the application may require increased associativity. During the associativity search step, the cache size is allowed to increase so that larger associativities may be explored.
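The local-search rule for the 16 KB case can be folded into the associativity step. This is a hypothetical reading of the slide, not TCaT’s published algorithm: it assumes the L2 bank arrangement allows only direct-mapped at 16 KB, up to 2 ways at 32 KB, and up to 4 ways at 64 KB, and that the size is raised to the smallest larger size that supports the next associativity. `energy(l1, l2)` is a stand-in for the per-configuration estimate.

```python
from typing import Callable, Dict

Config = Dict[str, int]

# Assumed bank arrangement for the L2: 16 KB direct-mapped only,
# 32 KB up to 2-way, 64 KB up to 4-way.
L2_SIZES = (16, 32, 64)
MAX_ASSOC = {16: 1, 32: 2, 64: 4}
ASSOCS = (1, 2, 4)


def search_l2_assoc_with_local_search(energy: Callable[[Config, Config], float],
                                      l1: Config, l2: Config) -> Config:
    """Associativity step that may also enlarge the L2 cache.

    If the next associativity is not available at the current size, the
    smallest larger size that supports it is tried instead.
    """
    best, best_e = dict(l2), energy(l1, l2)
    for assoc in ASSOCS[ASSOCS.index(l2["assoc"]) + 1:]:
        size = best["size"]
        if assoc > MAX_ASSOC[size]:
            larger = [s for s in L2_SIZES if s > size and MAX_ASSOC[s] >= assoc]
            if not larger:
                break
            size = larger[0]
        trial = {**best, "size": size, "assoc": assoc}
        e = energy(l1, trial)
        if e >= best_e:  # no improvement: stop increasing associativity
            break
        best, best_e = trial, e
    return best
```

For example, if the size search settles on a 16 KB direct-mapped L2 but a 32 KB 2-way L2 has lower energy, this step moves to 32 KB 2-way, a configuration a plain associativity search fixed at 16 KB could never reach.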

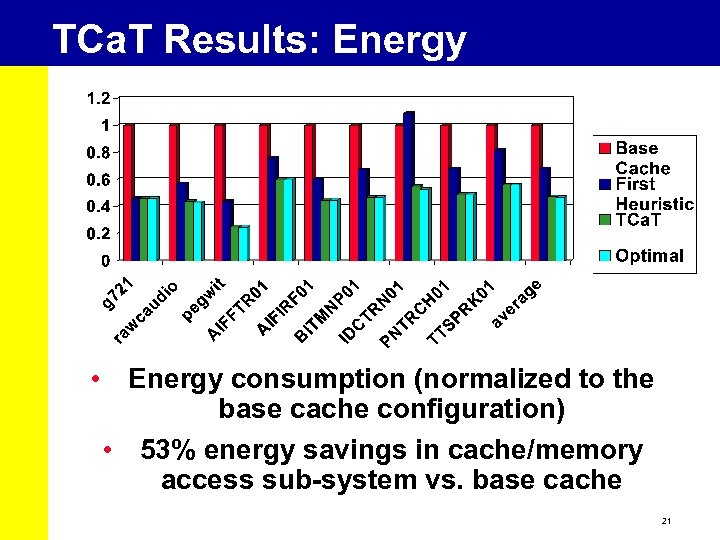

TCaT Results: Energy
• Energy consumption (normalized to the base cache configuration)
• 53% energy savings in the cache/memory access sub-system vs. the base cache

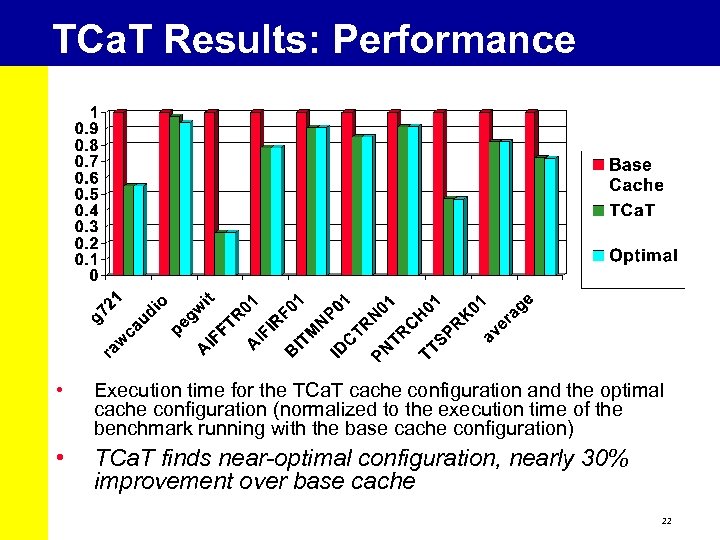

TCaT Results: Performance
• Execution time for the TCaT cache configuration and the optimal cache configuration (normalized to the execution time of the benchmark running with the base cache configuration)
• TCaT finds a near-optimal configuration, with nearly 30% improvement over the base cache

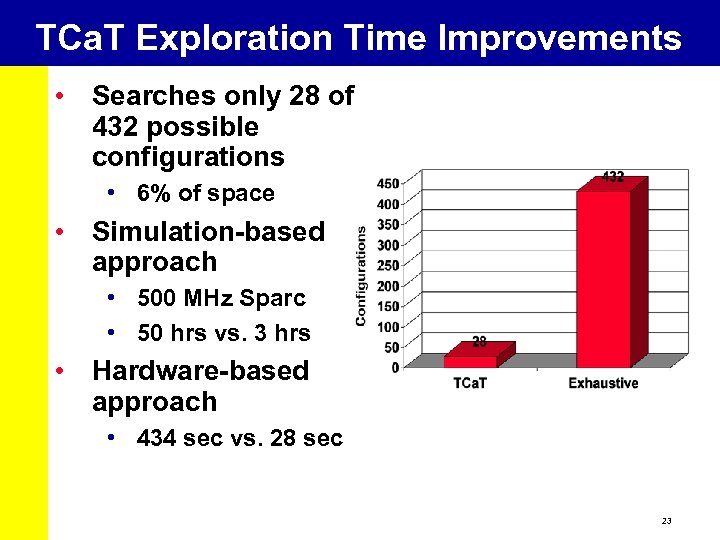

TCaT Exploration Time Improvements
• Searches only 28 of 432 possible configurations
  – 6% of the space
• Simulation-based approach
  – 500 MHz Sparc
  – 50 hrs vs. 3 hrs
• Hardware-based approach
  – 434 sec vs. 28 sec
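The slide’s percentages and time ratios follow directly from its own numbers. A short check, reading each “vs.” as exhaustive search versus TCaT: 28/432 ≈ 6.5% (shown as 6% on the slide), which also gives the 94% pruning figure in the conclusions.

```python
searched, total = 28, 432   # configurations examined by TCaT vs. the whole space
sim_hours = (50, 3)         # simulation on a 500 MHz Sparc: exhaustive vs. TCaT
hw_seconds = (434, 28)      # hardware-based: exhaustive vs. TCaT

fraction = searched / total
pruned = 1 - fraction
sim_speedup = sim_hours[0] / sim_hours[1]
hw_speedup = hw_seconds[0] / hw_seconds[1]

print(f"{fraction:.1%} of the space searched, {pruned:.1%} pruned")
print(f"{sim_speedup:.1f}x faster (simulation), {hw_speedup:.1f}x faster (hardware)")
```

Both approaches give roughly a 15 to 17 times reduction in exploration time, close to the ratio of configurations examined.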

TCaT in Presence of Hw/Sw Partitioning
• Hardware/software partitioning may become common in SOC platforms
  – On-chip FPGA
  – Program kernels moved to FPGA
  – Greatly reduces the temporal and spatial locality of the program
• Does TCaT still work well on programs with very low locality?

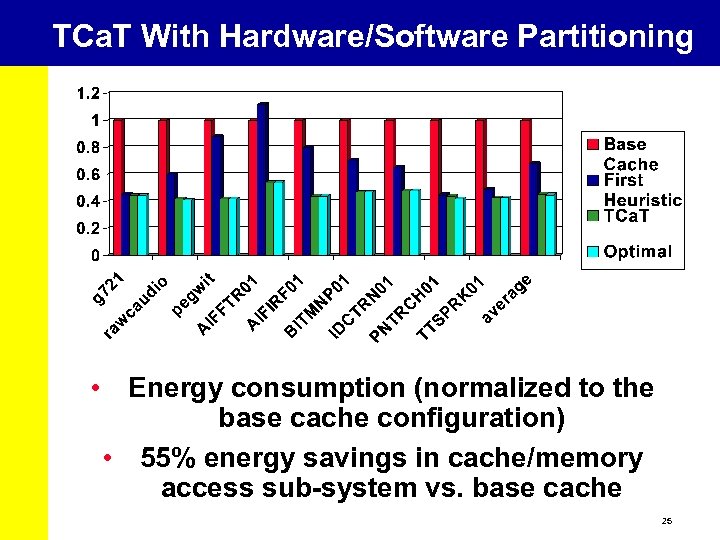

TCaT With Hardware/Software Partitioning
• Energy consumption (normalized to the base cache configuration)
• 55% energy savings in the cache/memory access sub-system vs. the base cache

Conclusions
• TCaT is an effective heuristic for two-level cache tuning
  – Prunes 94% of the search space for a given two-level configurable cache architecture
  – Near-optimal performance results: 30% improvement vs. base cache
  – Near-optimal energy results: 53% improvement vs. base cache
  – Robust in the presence of hw/sw partitioning
• Future work
  – More cache parameters, unified L2 cache: even larger search space
  – Dynamic in-system tuning: must avoid cache flushes
