- Number of slides: 60

Automatic Text Summarization
Instructor: Prof. Nachum Dershowitz
Presented by: Dan Cohen

Contents
• Introduction to summarization
• Motivation
• Types of summarization tasks
• Basic paradigms
• Single document summarization
• Evaluation methods
• Advanced topics
  – Multiple document summarization
  – Summarization for PDAs
  – Recent approaches
• References

Introduction
• The problem – information overload
  – 4 billion URLs indexed by Google
  – 200 TB of data on the Web [Lyman and Varian 03]
  – Information is created every day in enormous amounts
• One solution – summarization [Borko and Bernier 75]
  – Abstracts promote current awareness
  – Abstracts save reading time
  – Abstracts facilitate selection
  – Abstracts facilitate literature searches
  – Abstracts improve indexing efficiency
  – Abstracts aid in the preparation of reviews
• But what is an abstract?

Introduction
• Abstract: a brief but accurate representation of the contents of a document
• Goal: take an information source, extract the most important content from it, and present it to the user in a condensed form and in a manner sensitive to the user’s needs
• Compression: the amount of text to present, or the ratio of the length of the summary to the length of the source

Introduction • Ashworth: • “To take an original article, understand it and pack it neatly into a nutshell without loss of substance or clarity presents a challenge which many have felt worth taking up for the joys of achievement alone. These are the characteristics of an art form”. • He is quite weird

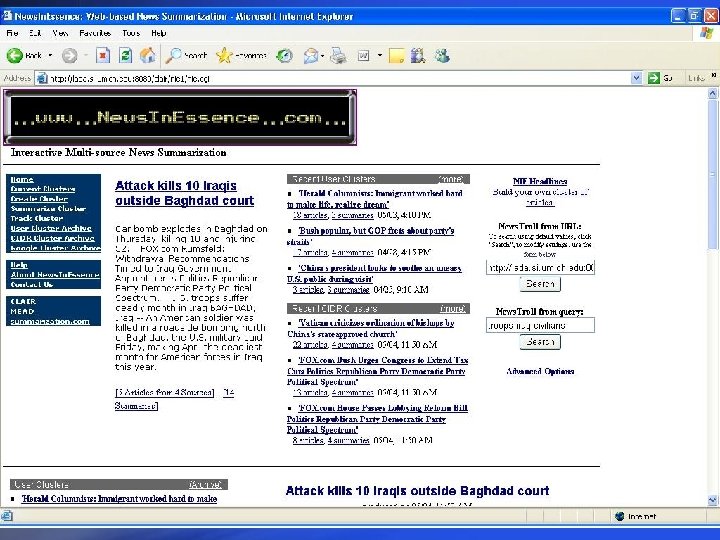

Introduction - History
• The problem has been addressed since the 1950s [Luhn 58]
• Numerous methods are currently being suggested
• [In my opinion] most methods still rely on algorithms from the 1950s–1970s
• The problem is still hard, yet there are many commercial applications (MS Word, www.newsinessence.com, etc.)

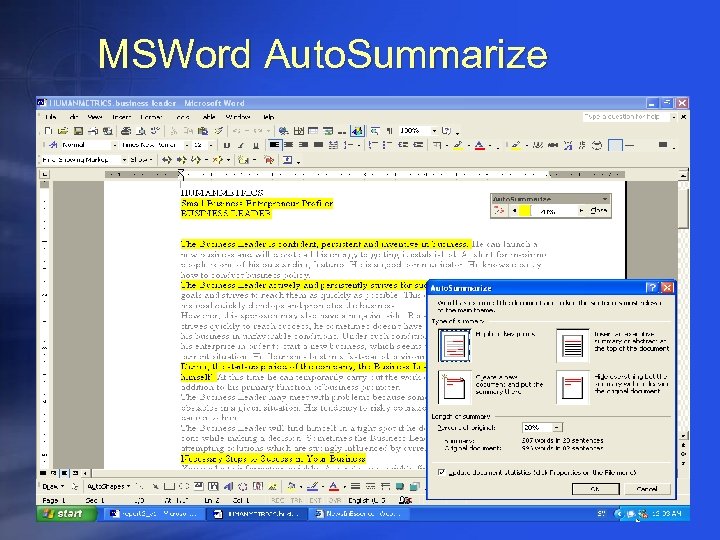

MS Word AutoSummarize

Motivation
• Abstracts for scientific and other articles
• News summarization (mostly multiple document summarization)
• Classification of articles and other written data
• Web pages for search engines
• Web access from PDAs and cell phones
• Question answering and data gathering

Types of summarization tasks
• Purpose: what is the summary intended for
• Input: what is summarized
• Output: how is the summary built

Types of summarization tasks: Purpose
• Indicative vs. informative
  – Indicative: indicates types of information (“alerts”)
    • “The work of Consumer Advice Centres is examined…”
  – Informative
    • “The work of Consumer Advice Centres was found to be a waste of resources due to low availability…”
  – Critic
    • Evaluates the content of the document
• Background
  – Does the reader have the needed prior knowledge?
  – Expert reader vs. novice reader
• Query-based or general
  – Query-based: a form is being filled in, and its questions should be answered
  – General-purpose summarization

Types of summarization input
• Single document vs. multiple documents
• Domain specific (chemistry) or general
• Genre specific (newspaper items) or general

Types of summarization: Output
• Extract vs. abstract
  – Extracts: representative paragraphs/sentences/phrases/words, fragments of the original text
  – Abstracts: a concise summary of the central subjects in the document
  – Research shows that sometimes readers prefer extracts!
• Language chosen for summarization
• Format of the resulting summary (table/paragraph/key words)

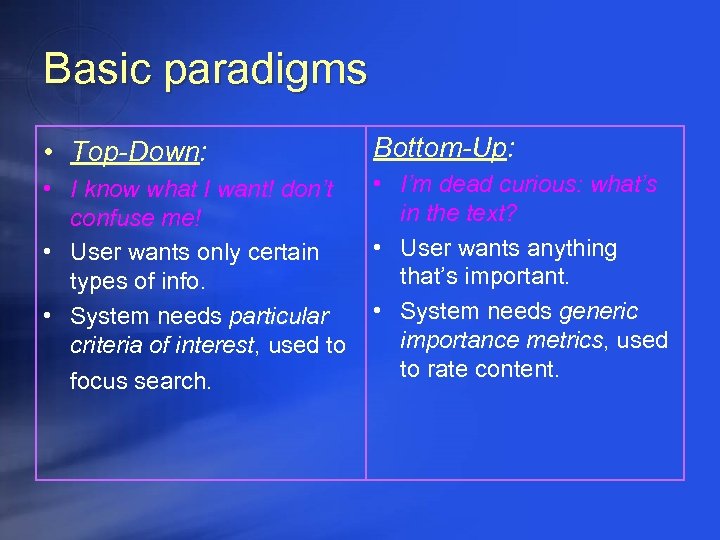

Basic paradigms
• Top-Down:
  – I know what I want! Don’t confuse me!
  – User wants only certain types of info.
  – System needs particular criteria of interest, used to focus search.
• Bottom-Up:
  – I’m dead curious: what’s in the text?
  – User wants anything that’s important.
  – System needs generic importance metrics, used to rate content.

Summarization in 3 steps
• Content/Topic Identification: find/extract the most important material
• Conceptual/Topic Interpretation: compress it
• Summary Generation: say it in your own words
  – Simple if extraction is performed

Methods
• (Pseudo) Statistical scoring methods
• Higher semantic/syntactic structures
  – Network (graph) based methods
  – Other methods (rhetorical analysis, lexical chains, coreference chains)
• AI methods

(Pseudo) Statistical scoring
• General method: score each entity (sentence, word); combine scores; choose best sentence(s)
• Scoring techniques:
  – Word frequencies throughout the text (Luhn 58)
  – Position in the text (Edmundson 69, Lin & Hovy 97)
  – Title method (Edmundson 69)
  – Cue phrases in sentences (Edmundson 69)
  – Bayesian classifier (Kupiec et al. 95)

Word frequencies (Luhn 58)
• Very first work in automated summarization
• Claim: words which are frequent in a document indicate the topic discussed
  – Frequent words indicate the topic
  – Frequent with reference to the corpus frequency
  – Clusters of frequent words indicate a summarizing sentence
• Stemming should be used
• “Stop words” (e.g. “the”, “a”, “for”, “is”) are ignored

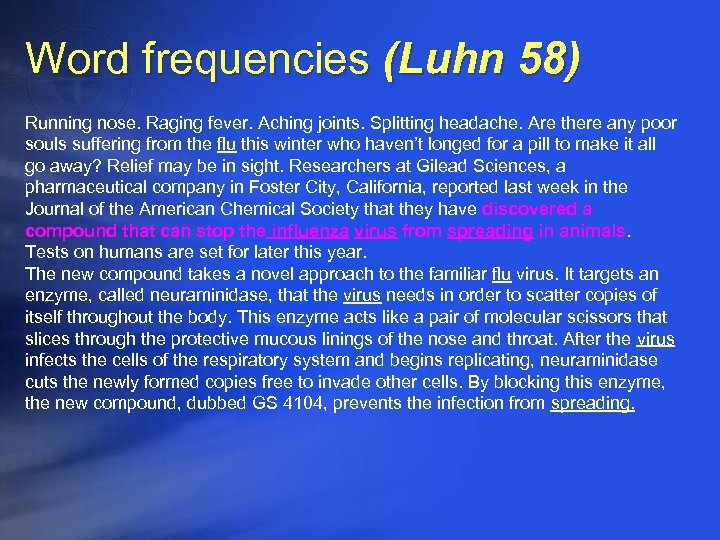

Word frequencies (Luhn 58) Running nose. Raging fever. Aching joints. Splitting headache. Are there any poor souls suffering from the flu this winter who haven’t longed for a pill to make it all go away? Relief may be in sight. Researchers at Gilead Sciences, a pharmaceutical company in Foster City, California, reported last week in the Journal of the American Chemical Society that they have discovered a compound that can stop the influenza virus from spreading in animals. Tests on humans are set for later this year. The new compound takes a novel approach to the familiar flu virus. It targets an enzyme, called neuraminidase, that the virus needs in order to scatter copies of itself throughout the body. This enzyme acts like a pair of molecular scissors that slices through the protective mucous linings of the nose and throat. After the virus infects the cells of the respiratory system and begins replicating, neuraminidase cuts the newly formed copies free to invade other cells. By blocking this enzyme, the new compound, dubbed GS 4104, prevents the infection from spreading.

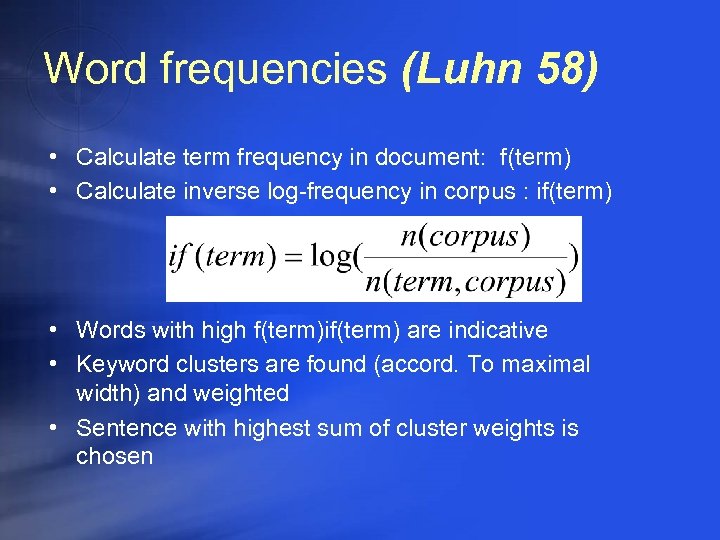

Word frequencies (Luhn 58)
• Calculate term frequency in document: f(term)
• Calculate inverse log-frequency in corpus: if(term)
• Words with high f(term) · if(term) are indicative
• Keyword clusters are found (according to maximal width) and weighted
• Sentence with highest sum of cluster weights is chosen
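The steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Luhn's original procedure: the stop-word list, the smoothed form of if(term), the `top_k` cutoff, and the simplification to a single cluster per sentence (scored as hits squared over cluster width) are all choices made here, and no stemming is applied.

```python
import math
import re
from collections import Counter

# Assumed, tiny stop-word list; a real system would use a longer one.
STOP_WORDS = {"the", "a", "an", "for", "is", "of", "to", "in", "and", "that"}

def tokenize(text):
    """Lowercase, keep alphabetic tokens, drop stop words."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]

def keyword_weights(document, corpus):
    """Return f(term) * if(term) for every term of the document.

    if(term) is taken here as log((N + 1) / (df + 1)), a smoothed
    inverse document frequency (an assumption of this sketch).
    """
    tf = Counter(tokenize(document))
    doc_sets = [set(tokenize(d)) for d in corpus]
    weights = {}
    for term, f in tf.items():
        df = sum(term in s for s in doc_sets)
        weights[term] = f * math.log((len(corpus) + 1) / (df + 1))
    return weights

def best_sentence(sentences, weights, top_k=5):
    """Pick the sentence whose keyword cluster scores highest."""
    keywords = set(sorted(weights, key=weights.get, reverse=True)[:top_k])

    def score(sentence):
        words = tokenize(sentence)
        hits = [i for i, w in enumerate(words) if w in keywords]
        if not hits:
            return 0.0
        width = hits[-1] - hits[0] + 1   # one cluster: first to last keyword
        return len(hits) ** 2 / width

    return max(sentences, key=score)
```

Calling `keyword_weights` on a document and a background corpus, then `best_sentence` on the document's sentences, returns one extract sentence; repeating the selection without replacement would give a longer extract.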

Position in the text (Edmundson 69, Lin & Hovy 97)
• Claim: important sentences occur in specific positions
  – “Lead-based” summary (Brandow 95)
  – Inverse of position in document works well for news
  – Important information occurs in specific sections of the document (introduction/conclusion)

Position in the text (Edmundson 69, Lin & Hovy 97)
• Assign score to sentences according to location in paragraph
• Assign score to paragraphs and sentences according to location in entire text
• Definition of important sections might help
• Position evidence (Baxendale 58)
  – First/last sentences in a paragraph are topical
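A position score along these lines might look as follows. The specific numbers are assumptions made for illustration: paragraphs are weighted by the inverse of their position in the document, and first/last sentences of a paragraph get a weight of 1.0 versus 0.5 for the rest. `lead_summary` is the lead-based baseline.

```python
def position_scores(paragraphs):
    """Score sentences by position.

    paragraphs: list of paragraphs, each a list of sentence strings.
    Returns a list of (sentence, score) pairs in text order.
    """
    scored = []
    for p_idx, paragraph in enumerate(paragraphs):
        paragraph_score = 1.0 / (p_idx + 1)      # inverse of position in document
        for s_idx, sentence in enumerate(paragraph):
            is_edge = s_idx == 0 or s_idx == len(paragraph) - 1
            sentence_score = 1.0 if is_edge else 0.5   # assumed weights
            scored.append((sentence, paragraph_score * sentence_score))
    return scored

def lead_summary(paragraphs, n=2):
    """Lead-based baseline: the first n sentences of the document."""
    flat = [sentence for paragraph in paragraphs for sentence in paragraph]
    return flat[:n]
```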

Position in the text - OPP (Edmundson 69, Lin & Hovy 97)
• Position depends on the type (genre) of text
• The “Optimum Position Policy” (Lin & Hovy 97) method is used to learn “positions” which contain relevant information
• The “learning” method uses documents + abstracts + keywords provided by authors
• OPP is learned for each genre (problematic when the number of abstracted publications is not large)

Title method (Edmundson 69)
• Claim: the title of a document indicates its content (Duh!)
• Words in the title help find relevant content
• Create a list of title words, remove “stop words”
• Use those as keywords in order to find important sentences (for example with Luhn’s methods)
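As a rough sketch of the title method: the stop-word list is an assumption, and the score here is simply the number of title keywords a sentence contains, which is one of several reasonable choices.

```python
import re

# Assumed stop-word list for this sketch.
STOP_WORDS = {"the", "a", "an", "for", "is", "of", "to", "in", "and"}

def title_keywords(title):
    """Title words with stop words removed."""
    words = re.findall(r"[a-z]+", title.lower())
    return {w for w in words if w not in STOP_WORDS}

def title_score(sentence, keywords):
    """Number of tokens in the sentence that are title keywords."""
    words = re.findall(r"[a-z]+", sentence.lower())
    return sum(w in keywords for w in words)
```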

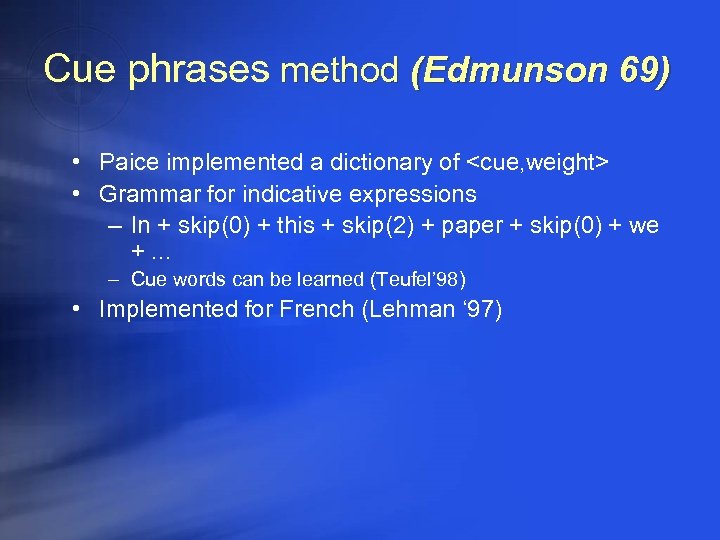

Cue phrases method (Edmundson 69)
• Claim: important sentences contain cue words/indicative phrases
  – “The main aim of the present paper is to describe…” (IND)
  – “The purpose of this article is to review…” (IND)
  – “In this report, we outline…” (IND)
  – “Our investigation has shown that…” (INF)
• Some words are considered bonus, others stigma
  – Bonus: comparatives, superlatives, conclusive expressions, etc.
  – Stigma: negatives, pronouns, etc.
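A cue-based score could be sketched as below. The word lists and the weight of 2 per indicative phrase are hypothetical placeholders chosen for the example; they are not Edmundson's actual dictionaries.

```python
import re

# Hypothetical word lists, for illustration only.
BONUS = {"significant", "best", "greatest", "conclude"}
STIGMA = {"hardly", "impossible", "he", "she", "it"}
CUE_PHRASES = (
    "the main aim of the present paper",
    "the purpose of this article",
    "in this report, we",
    "our investigation has shown that",
)

def cue_score(sentence):
    """Bonus words add 1, stigma words subtract 1, each cue phrase adds 2."""
    text = sentence.lower()
    words = re.findall(r"[a-z]+", text)
    score = sum(w in BONUS for w in words) - sum(w in STIGMA for w in words)
    score += 2 * sum(phrase in text for phrase in CUE_PHRASES)
    return score
```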

Cue phrases method (Edmundson 69) • Paice implemented a dictionary of

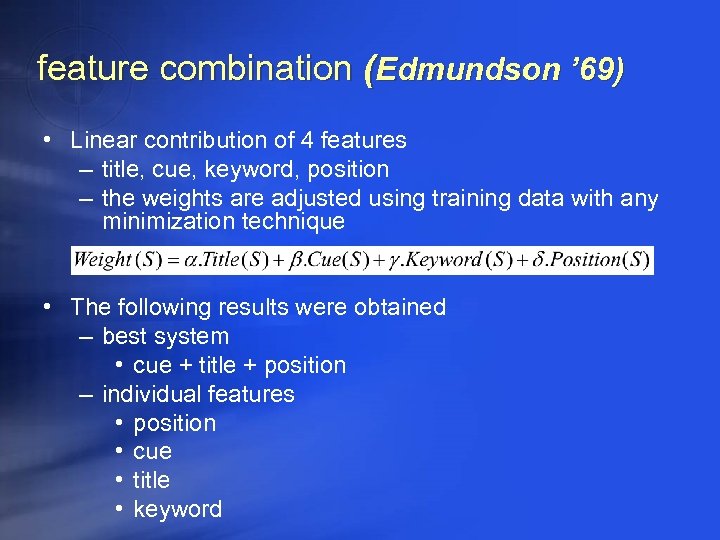

Feature combination (Edmundson 69)
• Linear contribution of 4 features
  – title, cue, keyword, position
  – the weights are adjusted using training data with any minimization technique
• The following results were obtained
  – best system
    • cue + title + position
  – individual features
    • position
    • cue
    • title
    • keyword
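The linear combination can be written down directly. In this sketch the feature values and weights are made-up inputs; in practice the weights would be fitted on training data, which is not shown here.

```python
def combined_score(features, weights):
    """Weighted sum of the per-sentence feature scores."""
    return sum(weights[name] * features[name] for name in weights)

def rank_sentences(feature_rows, weights):
    """Return sentence indices ordered from highest to lowest score."""
    return sorted(
        range(len(feature_rows)),
        key=lambda i: combined_score(feature_rows[i], weights),
        reverse=True,
    )
```

Setting the keyword weight to zero reproduces the shape of the reported best system (cue + title + position).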

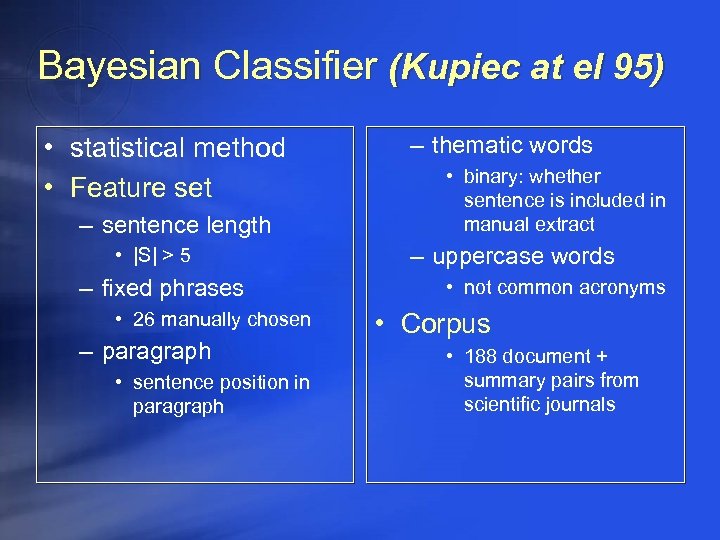

Bayesian Classifier (Kupiec et al. 95)
• Statistical method
• Feature set
  – Sentence length
    • |S| > 5
  – Fixed phrases
    • 26 manually chosen
  – Paragraph
    • Sentence position in paragraph
  – Thematic words
    • Binary: whether sentence is included in manual extract
  – Uppercase words
    • Not common acronyms
• Corpus
  – 188 document + summary pairs from scientific journals

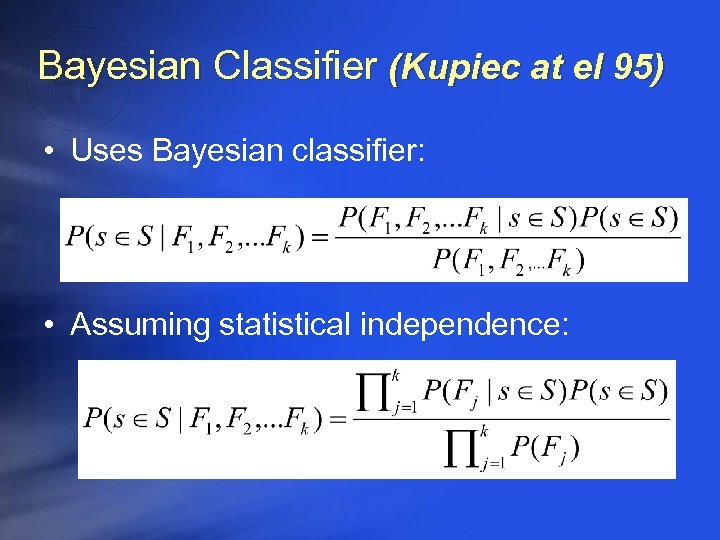

Bayesian Classifier (Kupiec et al. 95) • Uses Bayesian classifier: • Assuming statistical independence:

Bayesian Classifier (Kupiec et al. 95)
• Each probability is calculated empirically from a corpus
• Higher-probability sentences are chosen to be in the summary
• Performance:
  – For 25% summaries, 84% precision
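A naive Bayes scorer in this spirit can be sketched as follows. Under the independence assumption, the score of a sentence s with features F_1..F_k is taken as P(s in S) multiplied by the product over j of P(F_j | s in S) / P(F_j). The binary features and the add-one smoothing are assumptions of this sketch, and the result is meant for ranking sentences rather than as a calibrated probability.

```python
def train(examples):
    """Estimate probabilities empirically.

    examples: list of (features, in_summary) pairs, where features is a
    tuple of booleans and in_summary is a boolean label.
    """
    n = len(examples)
    n_pos = sum(label for _, label in examples)
    k = len(examples[0][0])
    prior = n_pos / n
    # P(F_j = True | s in S) and P(F_j = True), with add-one smoothing.
    p_f_given_s = [
        (sum(f[j] for f, label in examples if label) + 1) / (n_pos + 2)
        for j in range(k)
    ]
    p_f = [(sum(f[j] for f, _ in examples) + 1) / (n + 2) for j in range(k)]
    return prior, p_f_given_s, p_f

def score(features, model):
    """Prior times the product of per-feature likelihood ratios."""
    prior, p_f_given_s, p_f = model
    result = prior
    for value, pj_s, pj in zip(features, p_f_given_s, p_f):
        result *= (pj_s if value else 1 - pj_s) / (pj if value else 1 - pj)
    return result
```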

Higher semantic/syntactic structures
• Claim: important sentences/paragraphs are the most highly connected entities in more or less elaborate semantic structures
• Classes of approaches
  – word co-occurrences;
  – co-reference;
  – lexical similarity (WordNet, lexical chains);
  – combinations of the above.

Coreference method
• Build co-reference chains (noun/event identity, part-whole relations) between
  – query and document (in the context of query-based summarization)
  – title and document
  – sentences within document
• Important sentences are those traversed by a large number of chains:
  – a preference is imposed on chains (query > title > doc)

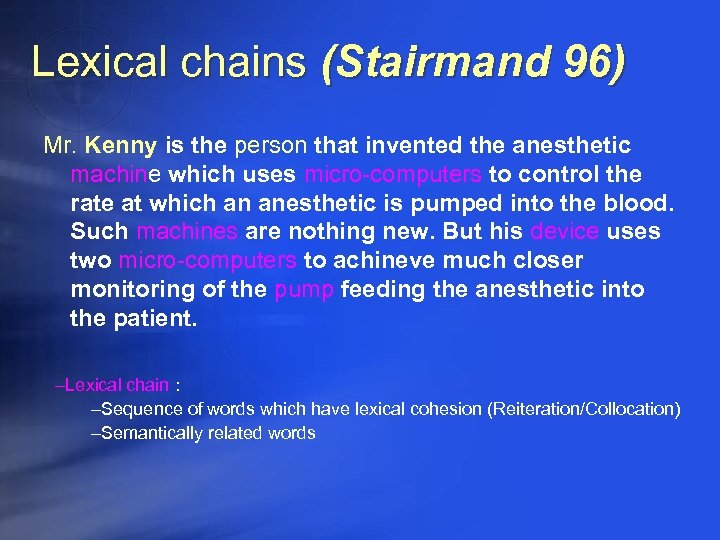

Lexical chains (Stairmand 96)
Mr. Kenny is the person that invented the anesthetic machine which uses micro-computers to control the rate at which an anesthetic is pumped into the blood. Such machines are nothing new. But his device uses two micro-computers to achieve much closer monitoring of the pump feeding the anesthetic into the patient.
• Lexical chain:
  – Sequence of words which have lexical cohesion (reiteration/collocation)
  – Semantically related words

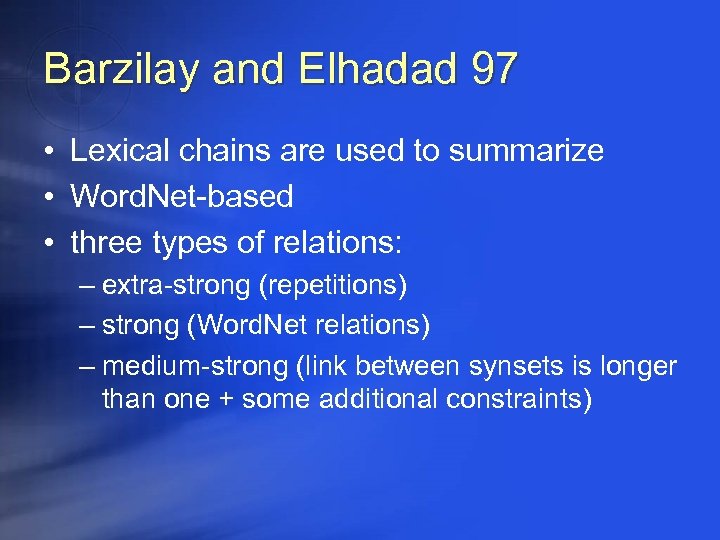

Barzilay and Elhadad 97
• Lexical chains are used to summarize
• WordNet-based
• Three types of relations:
  – extra-strong (repetitions)
  – strong (WordNet relations)
  – medium-strong (link between synsets is longer than one + some additional constraints)

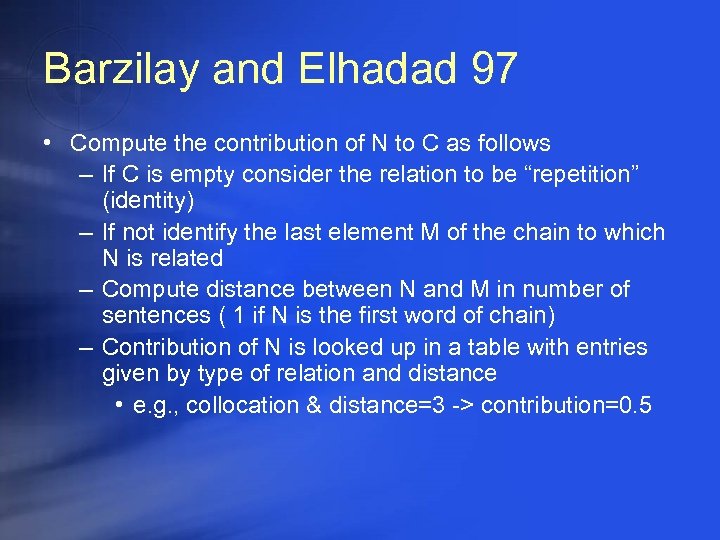

Barzilay and Elhadad 97
• Compute the contribution of N to C as follows
  – If C is empty, consider the relation to be “repetition” (identity)
  – If not, identify the last element M of the chain to which N is related
  – Compute the distance between N and M in number of sentences (1 if N is the first word of the chain)
  – The contribution of N is looked up in a table with entries given by type of relation and distance
    • e.g., collocation & distance = 3 -> contribution = 0.5

Barzilay and Elhadad 97 • After inserting all nouns in chains there is a second step • For each noun, identify the chain where it most contributes; delete it from the other chains and adjust weights

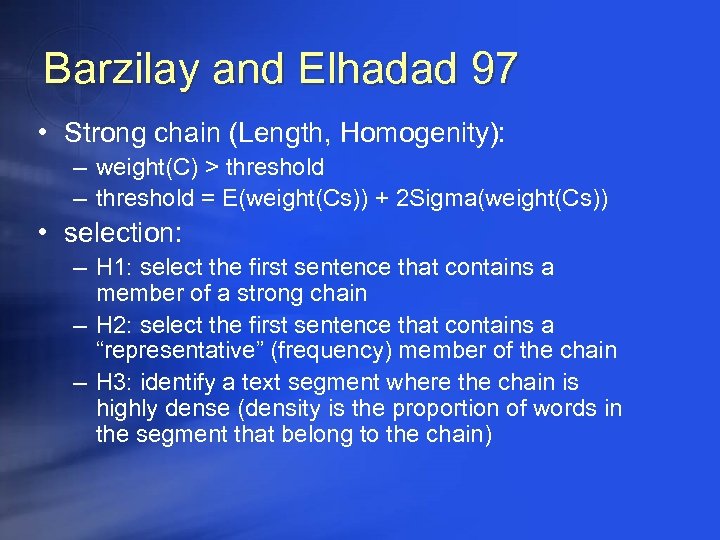

Barzilay and Elhadad 97
• Strong chain (Length, Homogeneity):
  – weight(C) > threshold
  – threshold = E(weight(Cs)) + 2 · σ(weight(Cs)), i.e. the mean chain weight plus two standard deviations
• Selection:
  – H1: select the first sentence that contains a member of a strong chain
  – H2: select the first sentence that contains a “representative” (frequency) member of the chain
  – H3: identify a text segment where the chain is highly dense (density is the proportion of words in the segment that belong to the chain)
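The strong-chain criterion is easy to state in code. In this sketch the chain weights are given as input (computing them from Length and Homogeneity is not shown), and the use of the population standard deviation is an assumption.

```python
from statistics import mean, pstdev

def strong_chains(chain_weights):
    """Return names of chains whose weight exceeds mean + 2 * std.

    chain_weights: dict mapping a chain name to its weight.
    """
    values = list(chain_weights.values())
    threshold = mean(values) + 2 * pstdev(values)
    return [name for name, w in chain_weights.items() if w > threshold]
```

With only a handful of chains no weight can exceed the mean by more than two population standard deviations, so the criterion is only meaningful when a text yields a reasonable number of chains.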

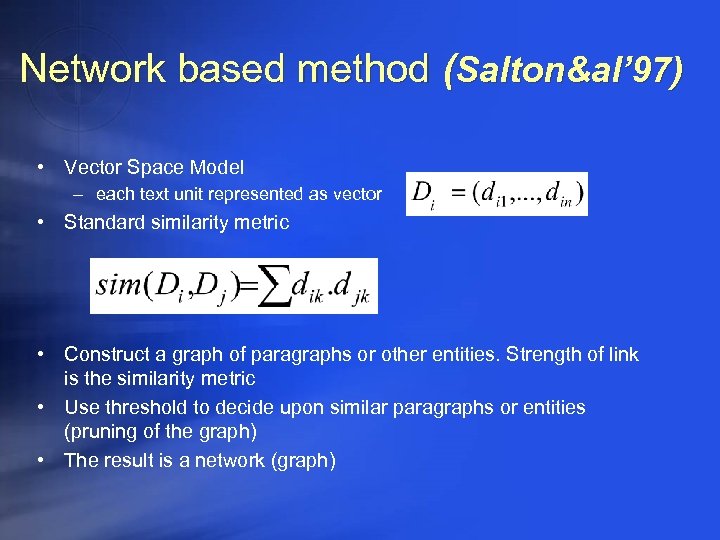

Network based method (Salton et al. 97)
• Vector Space Model
  – each text unit is represented as a vector
• Standard similarity metric
• Construct a graph of paragraphs or other entities; the strength of a link is the similarity metric
• Use a threshold to decide upon similar paragraphs or entities (pruning of the graph)
• The result is a network (graph)
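A thresholded similarity graph of this kind can be sketched as below. Raw term counts, cosine similarity, and the threshold value of 0.2 are assumptions made for the example; the slides do not fix a weighting scheme or threshold.

```python
import math
import re
from collections import Counter

def vectorize(text):
    """Bag-of-words vector with raw term counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[t] * v[t] for t in u if t in v)
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(
        sum(x * x for x in v.values())
    )
    return dot / norm if norm else 0.0

def relation_map(paragraphs, threshold=0.2):
    """Adjacency sets: paragraphs i and j are linked when sim > threshold."""
    vectors = [vectorize(p) for p in paragraphs]
    edges = {i: set() for i in range(len(paragraphs))}
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            if cosine(vectors[i], vectors[j]) > threshold:
                edges[i].add(j)
                edges[j].add(i)
    return edges
```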

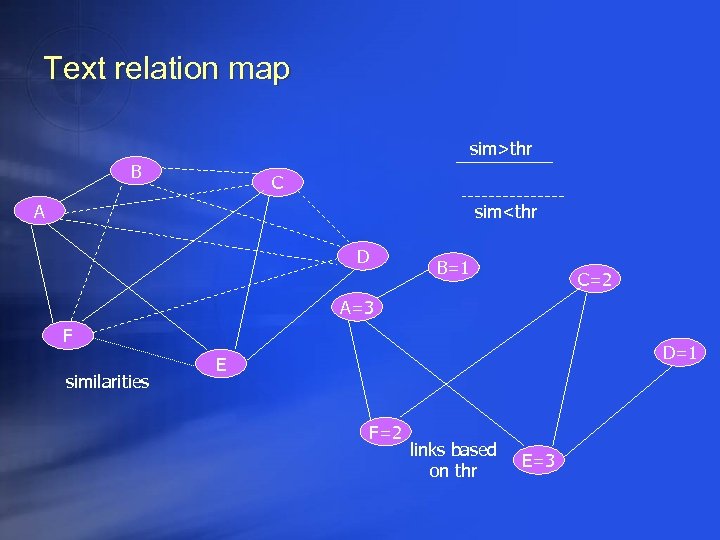

Text relation map (figure: paragraphs A, B and C as nodes; links are labeled with sim and kept when sim > thr)

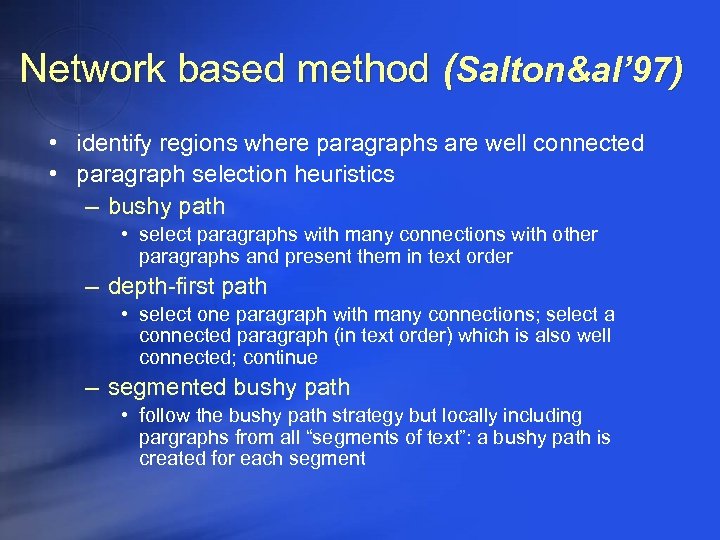

Network based method (Salton et al. 97)
• Identify regions where paragraphs are well connected
• Paragraph selection heuristics
  – Bushy path
    • select paragraphs with many connections with other paragraphs and present them in text order
  – Depth-first path
    • select one paragraph with many connections; select a connected paragraph (in text order) which is also well connected; continue
  – Segmented bushy path
    • follow the bushy path strategy but locally, including paragraphs from all “segments of text”: a bushy path is created for each segment
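The bushy-path heuristics can be sketched on top of an adjacency structure. Here `edges` is assumed to be a dict from paragraph index to the set of linked paragraph indices, and `segments` a list of index lists; how segments are found is not shown. Ties are broken by text order, which is a choice of this sketch.

```python
def bushy_path(edges, k):
    """The k most connected paragraphs, presented in text order."""
    by_degree = sorted(edges, key=lambda node: len(edges[node]), reverse=True)
    return sorted(by_degree[:k])

def segmented_bushy_path(edges, segments, k_per_segment=1):
    """Apply the bushy-path selection inside each text segment."""
    selected = []
    for segment in segments:
        ranked = sorted(segment, key=lambda node: len(edges[node]), reverse=True)
        selected.extend(ranked[:k_per_segment])
    return sorted(selected)
```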

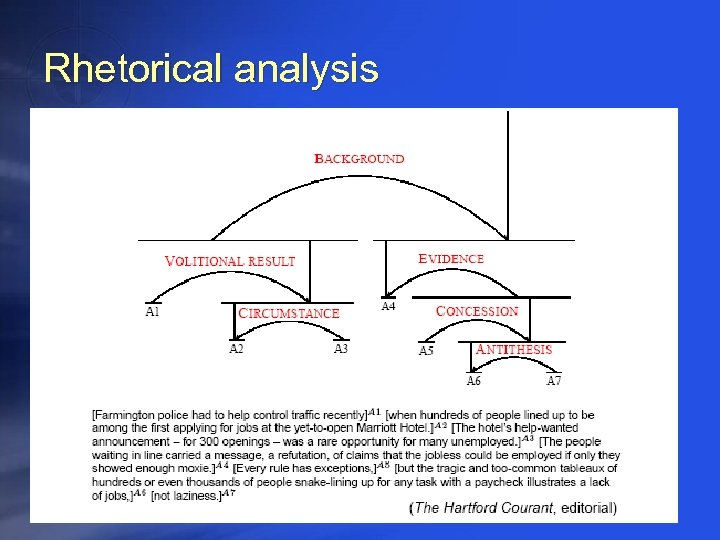

Rhetorical analysis
• Rhetorical Structure Theory (RST) – Mann & Thompson 88
• Descriptive theory of text organization
• Relations between two text spans
  – nucleus & satellite
  – nucleus & nucleus
  – Relations such as
    • Background text
    • Preparation
    • Concession (“Even though”)

Rhetorical analysis

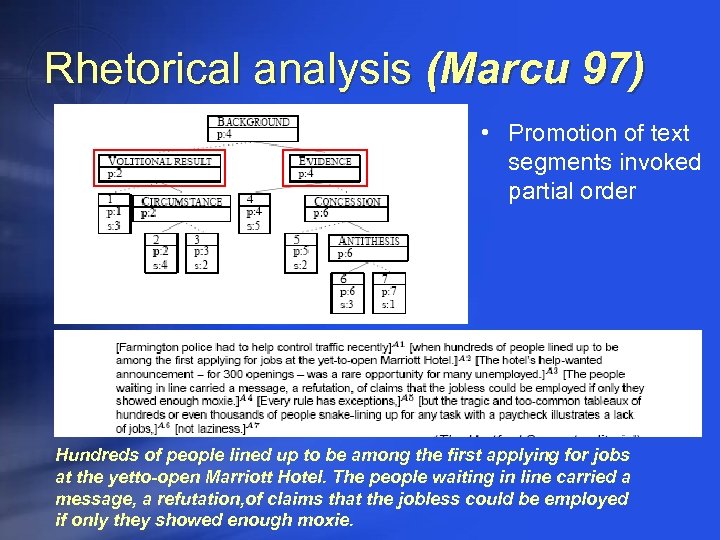

Rhetorical analysis (Marcu 97)
• Promotion of text segments induces a partial order
Hundreds of people lined up to be among the first applying for jobs at the yet-to-open Marriott Hotel. The people waiting in line carried a message, a refutation, of claims that the jobless could be employed if only they showed enough moxie.

Rhetorical analysis
• Once built, an RST tree captures relations in the text and can be used for high-quality summarization
• It yields a spectrum of summaries, thanks to the partial ordering induced on the text parts
• Building the RST tree automatically is still hard
• Not suitable for question answering (targeted summarization)

Evaluation metrics
• Precision – ratio of correct summary sentences
  – (length S) / (length T)
• Recall – ratio of relevant sentences included in summary
  – (info in S) / (info in T)
• Measuring information:
  – Shannon Game: quantify information content.
  – Question Game: test reader’s understanding.
  – Classification Game: compare classifiability.

Evaluation methods
• When a human-written summary is available
  1. choose a granularity (clause; sentence; paragraph),
  2. create a similarity measure for that granularity (word overlap; multi-word overlap; perfect match),
  3. measure the similarity of each unit in the new summary to the most similar unit(s) in the human-written one,
  4. measure recall and precision.
• Otherwise
  1. Evaluation – expensive, inconclusive
  2. Intrinsic – evaluate how good the summary is as a summary
  3. Extrinsic – how well does the summary help the user
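For the simplest case (sentence granularity with perfect match as the similarity measure) precision and recall against a human-written extract can be computed as in this sketch. Treating sentences as exact strings is an assumption; softer similarity measures such as word overlap would need a matching step that is not shown.

```python
def precision_recall(system_sentences, reference_sentences):
    """Sentence-level precision and recall with exact matching.

    precision = shared sentences / sentences in the system summary
    recall    = shared sentences / sentences in the reference summary
    """
    system = set(system_sentences)
    reference = set(reference_sentences)
    overlap = len(system & reference)
    precision = overlap / len(system) if system else 0.0
    recall = overlap / len(reference) if reference else 0.0
    return precision, recall
```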

Intrinsic measures
• Intrinsic measures (glass-box): how good is the summary as a summary?
  – Problem: how do you measure the goodness of a summary?
  – Studies: compare to an ideal summary (Edmundson, 69; Kupiec et al., 95; Salton et al., 97; Marcu, 97) or supply criteria such as fluency, informativeness, and coverage (Brandow et al., 95).
• The summary is evaluated on its own or by comparing it with the source:
  – Is the text cohesive and coherent?
  – Does it contain the main topics of the document?
  – Are important topics omitted?

Extrinsic measures
• Extrinsic measures (black-box): how well does the summary help a user with a task?
  – Problem: does summary quality correlate with task performance?
  – Studies: GMAT tests (Morris et al., 92); news analysis (Miike et al., 94); IR (Mani and Bloedorn, 97); text categorization (SUMMAC 98; Sundheim, 98).
• Evaluation in a specific task:
  – Can the summary be used instead of the document?
  – Can the document be classified by reading the summary?
  – Can we answer questions by reading the summary?

Multiple document summarization
• A large number of documents tell the same story
• Analysis is mostly done at the paragraph level or the document level
• General summary: eliminate most of the noise without losing information
• Question-based summary: return all documents or paragraphs that are relevant (and sometimes summarize them)
• Based on the same similarity measures we saw before

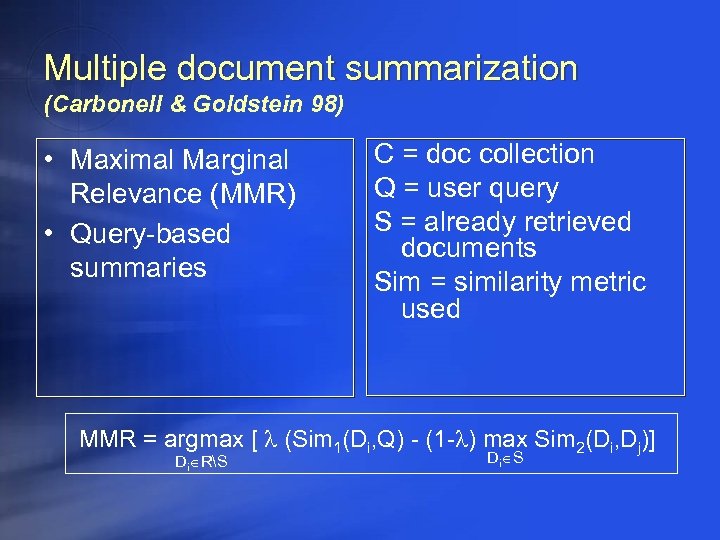

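Multiple document summarization (Carbonell & Goldstein 98)
• Maximal Marginal Relevance (MMR)
• Query-based summaries
  – C = document collection
  – Q = user query
  – R = documents retrieved from C for Q
  – S = documents already selected (a subset of R)
  – Sim_1, Sim_2 = similarity metrics used
  – λ = weight in [0, 1] that trades relevance to the query against novelty

$$\mathrm{MMR} = \arg\max_{D_i \in R \setminus S} \left[ \lambda \, \mathrm{Sim}_1(D_i, Q) - (1 - \lambda) \max_{D_j \in S} \mathrm{Sim}_2(D_i, D_j) \right]$$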
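
The MMR criterion lends itself to a greedy loop: repeatedly pick the candidate that is most relevant to the query and least similar to what has already been selected. The sketch below is a minimal illustration, not Carbonell and Goldstein's implementation. The names `mmr_select` and `jaccard`, the use of word-overlap similarity for both Sim 1 and Sim 2, and the example documents are all assumptions made for the example.

```python
def jaccard(a, b):
    """Word-overlap similarity between two strings (assumed metric)."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    if not wa or not wb:
        return 0.0
    return len(wa & wb) / len(wa | wb)


def mmr_select(candidates, query, sim1, sim2, lam=0.7, k=3):
    """Greedily select up to k candidates by Maximal Marginal Relevance.

    lam = 1.0 ranks purely by relevance to the query;
    lam = 0.0 ranks purely by dissimilarity to earlier selections.
    """
    selected = []
    remaining = list(candidates)
    while remaining and len(selected) < k:
        def score(d):
            # Redundancy is the similarity to the closest selected item;
            # it is 0 while nothing has been selected yet.
            redundancy = max((sim2(d, s) for s in selected), default=0.0)
            return lam * sim1(d, query) - (1 - lam) * redundancy

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical retrieved set: the first two documents are near-duplicates.
docs = [
    "earthquake hits city center",
    "earthquake hits city centre today",
    "rescue teams arrive in city",
]
picked = mmr_select(docs, "earthquake city rescue", jaccard, jaccard,
                    lam=0.5, k=2)
print(picked)
```

With `lam=0.5` the second pick is the rescue report rather than the near-duplicate earthquake report, because the redundancy penalty outweighs the duplicate's relevance score.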

Application of summarization for a PDA browser (Buyukkokten et al. 02)
• Because a PDA screen is small, summarization is crucial for easy browsing
• Summarization is based on a mix of sentence extraction and keyword extraction, using the statistical methods presented earlier
• User profiles are supported (a type of question answering)
• With WWW data it is hard to create an exhaustive corpus, so words with zero probability will be encountered and must be handled
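
The slide does not say how zero-probability words are handled. One common option, shown here purely as an assumption, is add-one (Laplace) smoothing of unigram estimates, which reserves a small amount of probability mass for words that never appeared in the corpus. The function name and the toy corpus are hypothetical.

```python
from collections import Counter


def smoothed_unigram_model(corpus_tokens):
    """Return a function giving add-one smoothed unigram probabilities."""
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    # One extra vocabulary slot stands in for any unseen word.
    vocab_size = len(counts) + 1

    def prob(word):
        # Counter returns 0 for missing keys, so unseen words get
        # 1 / (total + vocab_size) instead of zero.
        return (counts[word] + 1) / (total + vocab_size)

    return prob


# Hypothetical toy corpus.
prob = smoothed_unigram_model("the cat sat on the mat".split())
print(prob("the"), prob("zebra"))
```

An unseen word such as "zebra" receives a small non-zero probability, so statistical sentence or keyword scores built on these estimates do not collapse to zero when a web page uses vocabulary outside the corpus.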

And now, a human summary of this lecture…
• There are a large number of algorithms, articles, and applications
• Most modern methods are not implemented in software (only as proofs of concept)
• It is very hard to create a large corpus that has "correct answers", so it is very hard to evaluate results
• "Less is more…" so maybe I should just stop talking

Links and articles
• The Automatic Creation of Literature Abstracts (H. P. Luhn)
• New Methods in Automatic Extracting (H. P. Edmundson)
• A Trainable Document Summarizer (J. Kupiec, J. Pedersen, and F. Chen)
• Automated Text Summarization in SUMMARIST (E. Hovy and C. Lin)
• Using Lexical Chains for Text Summarization (R. Barzilay and M. Elhadad)
• Discourse Trees Are Good Indicators of Importance in Text (D. Marcu)
• Automatic Text Structuring and Summarization (G. Salton, A. Singhal, M. Mitra, and C. Buckley)
• www.summarization.com
