3e4e801767147c010d7807ef1fc650e8.ppt

- Number of slides: 20

Automatic Synthesis of Efficient Intrusion Detection Systems on FPGAs, by Zachary K. Baker and Viktor K. Prasanna, University of Southern California, Los Angeles, CA, USA. FPL 2004. Review 12-9-04, presented by Jack Meier.

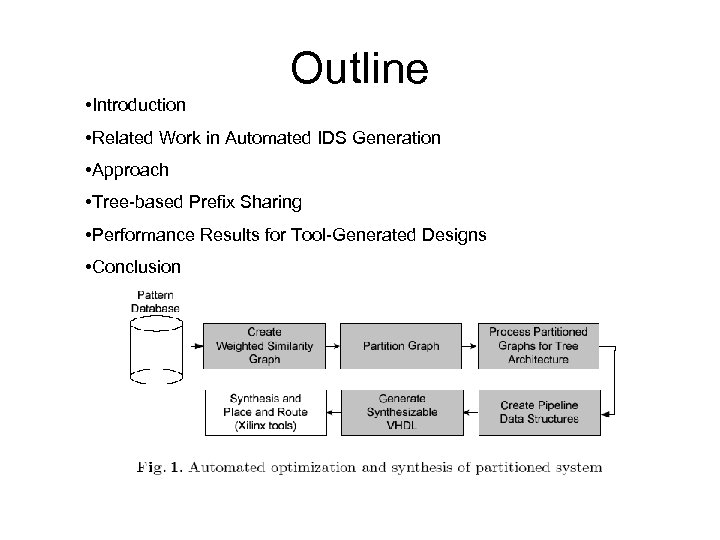

Outline

- Introduction
- Related Work in Automated IDS Generation
- Approach
- Tree-based Prefix Sharing
- Performance Results for Tool-Generated Designs
- Conclusion

Background on network security and intrusion detection

- Performance: ability to match against a large set of patterns
- Features: automatically optimize and synthesize large designs
- Application: intrusion detection software
  - Snort
  - Hogwash
- Trends
  - Move away from general-purpose microprocessors
    - Admins remove rules from the databases when performance is limited
  - Move string matching to FPGA-based reconfigurable hardware

Related Work in Automated IDS Generation

- Open-source intrusion detection software
  - Snort
  - Hogwash
- String matching is just one component of the many functions used in Snort and Hogwash

Related work in FPGA-based network scanning

- First reassemble the TCP stream (Wash. U: Schuehler)
  - Demultiplex the TCP/IP stream into substreams
- Sort packets based on rules (Wash. U: Mike Attig)
- Washington University research approach
  - Deterministic finite automata pattern matchers (Wash. U: Reg. Ex)
    - Spread the load over several parallel matching units
    - Four parallel units reduce the runtime by 4x
    - Limited number of regular expression patterns supported
  - Bloom filters
    - Provide a large amount of string matching
- Pre-decoded shift-and-compare
  - Shift registers
- Alternative approaches
  - This paper lacks references to much of the related work

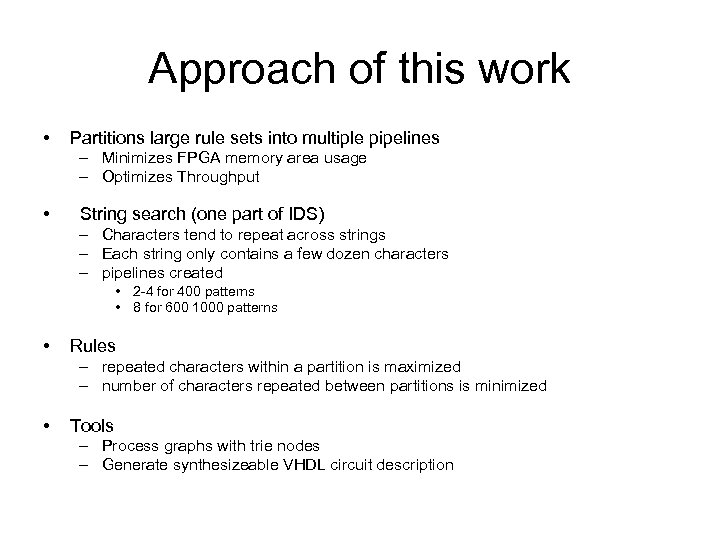

Approach of this work

- Partitions large rule sets into multiple pipelines
  - Minimizes FPGA memory area usage
  - Optimizes throughput
- String search (one part of IDS)
  - Characters tend to repeat across strings
  - Each string contains only a few dozen characters
  - Pipelines created
    - 2-4 for 400 patterns
    - 8 for 600-1000 patterns
- Rules
  - Number of repeated characters within a partition is maximized
  - Number of characters repeated between partitions is minimized
- Tools
  - Process graphs with trie nodes
  - Generate synthesizable VHDL circuit description

Approach

Contribution of this work

- Tool
  - Accepts rule strings
  - Creates pipelined distribution networks
  - Converts template-generated Java to netlists with JHDL
- Reduces amount of routing required
- Reduces complexity of finite automata state machines
- Proposed tool combines common prefixes to form matching trees
  - Adds pre-decoded wide parallel inputs

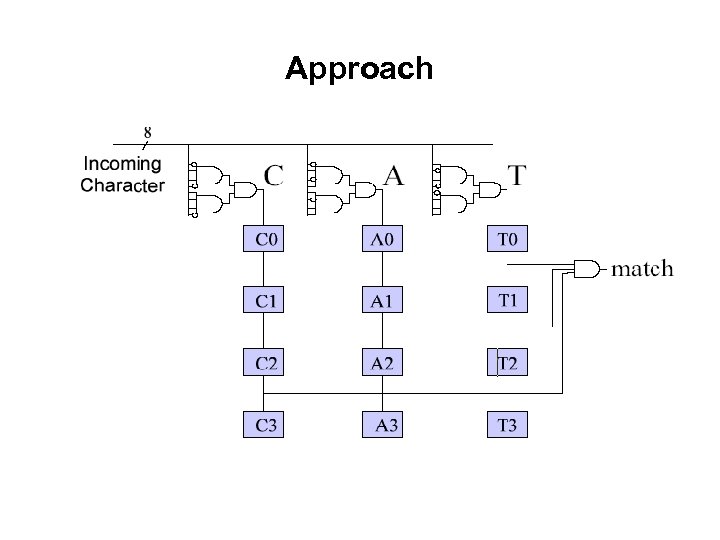

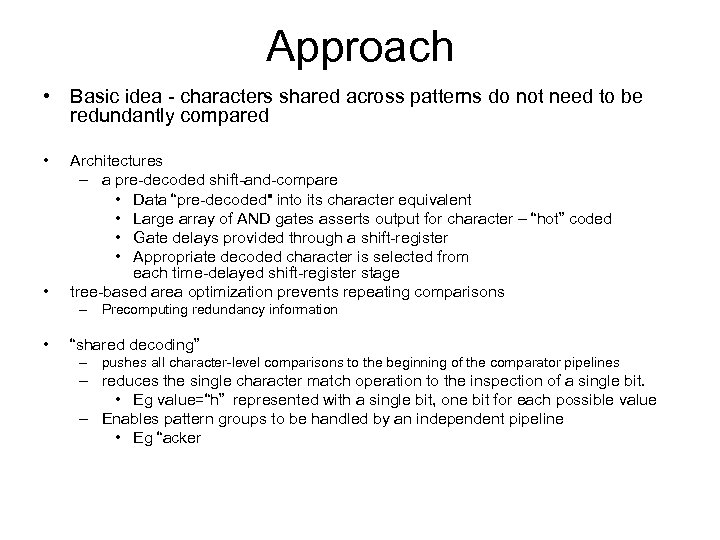

Approach

- Basic idea: characters shared across patterns do not need to be redundantly compared
- Architectures
  - Pre-decoded shift-and-compare
    - Data is “pre-decoded” into its character equivalent
    - Large array of AND gates asserts the output for each character (“hot” coded)
    - Gate delays are provided through a shift register
    - Appropriate decoded character is selected from each time-delayed shift-register stage
  - Tree-based area optimization prevents repeating comparisons
    - Precomputing redundancy information
- “Shared decoding”
  - Pushes all character-level comparisons to the beginning of the comparator pipelines
  - Reduces the single-character match operation to the inspection of a single bit
    - E.g. value = “h” is represented with a single bit, one bit for each possible value
  - Enables pattern groups to be handled by an independent pipeline
    - E.g. “acker
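The pre-decoded shift-and-compare idea can be sketched in software. This is only a behavioral illustration under stated assumptions, not the generated hardware: the function names `predecode` and `find_matches` are hypothetical, each character is decoded once into a 256-bit one-hot word, the decoded words travel through a shift register, and a pattern match then only inspects one bit per stage.

```python
from collections import deque

def predecode(byte):
    """One-hot decode: return an integer with only bit number `byte` set."""
    return 1 << byte

def find_matches(stream, pattern):
    """Return the end offsets where `pattern` occurs in `stream` (both bytes).

    Each incoming character is decoded once; matching only tests single bits
    of the delayed, decoded words held in the shift register.
    """
    n = len(pattern)
    # Shift register of decoded words; index 0 holds the newest character.
    stages = deque([0] * n, maxlen=n)
    hits = []
    for pos, byte in enumerate(stream):
        stages.appendleft(predecode(byte))
        # pattern[i] must appear in the stage delayed by (n - 1 - i) cycles.
        if all((stages[n - 1 - i] >> pattern[i]) & 1 for i in range(n)):
            hits.append(pos)
    return hits

if __name__ == "__main__":
    print(find_matches(b"a hacker hacks", b"hack"))
```

In hardware the per-stage bit tests are AND gates over the selected decoded lines; the Python `all(...)` stands in for that AND.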

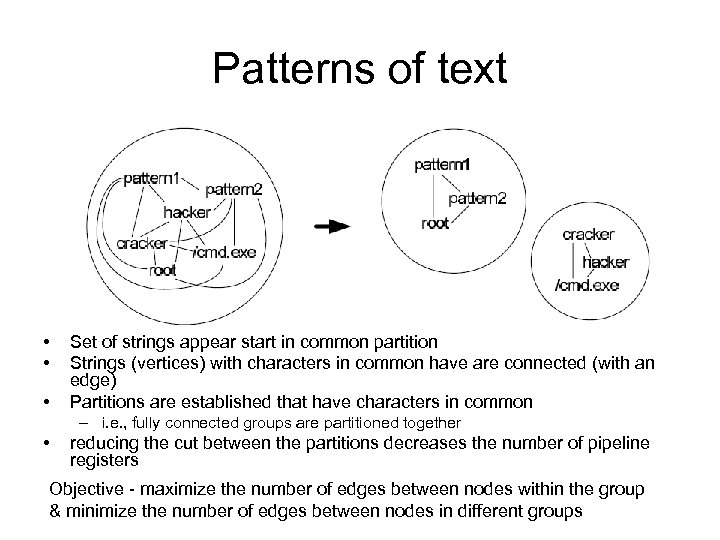

Patterns of text

- The set of strings starts in a common partition
- Strings (vertices) with characters in common are connected (with an edge)
- Partitions are established that have characters in common
  - i.e., fully connected groups are partitioned together
  - Reducing the cut between the partitions decreases the number of pipeline registers
- Objective: maximize the number of edges between nodes within a group and minimize the number of edges between nodes in different groups

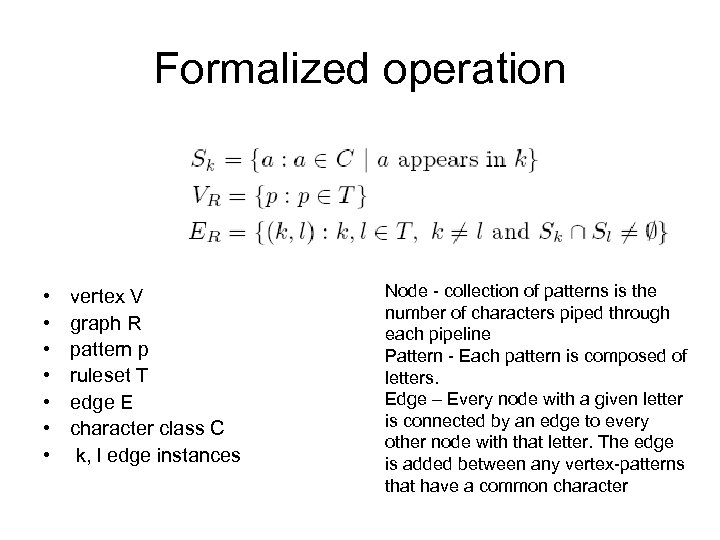

Formalized operation

- vertex V, graph R, pattern p, ruleset T, edge E, character class C, k, l edge instances
- Node - collection of patterns is the number of characters piped through each pipeline
- Pattern: each pattern is composed of letters
- Edge: every node with a given letter is connected by an edge to every other node with that letter; an edge is added between any vertex-patterns that have a common character
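The edge rule above (connect any two patterns that share a character) can be sketched as follows. The function name `build_pattern_graph` is hypothetical, and using the count of shared characters as the edge weight is an assumption made for illustration; the slides do not give the exact weighting.

```python
from itertools import combinations

def build_pattern_graph(patterns):
    """Return {(k, l): weight} for every pair of patterns sharing a character.

    Vertices are the patterns themselves. The weight is the number of distinct
    characters the two patterns have in common (an illustrative assumption).
    """
    char_sets = {p: set(p) for p in patterns}
    edges = {}
    for k, l in combinations(patterns, 2):
        shared = char_sets[k] & char_sets[l]
        if shared:  # an edge exists only when at least one character is shared
            edges[(k, l)] = len(shared)
    return edges

if __name__ == "__main__":
    for edge, weight in build_pattern_graph(["abc", "bcd", "xyz"]).items():
        print(edge, weight)
```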

Tree-based Prefix Sharing

- Efficient search of matching rules
  - Boyer-Moore algorithm
  - Aho-Corasick algorithm
  - Hashing mechanism utilizing the Bloom filter
- Pre-decoding strategy: converts characters to single bit lines in the cycle before they are required in the state machine
  - Reduces the area of designs
  - Allows more patterns to be packed in the FPGA
- Customized for the 4-bit blocks of characters
  - Four-character prefixes map to four decoded bits
  - Fits the Xilinx Virtex 4-bit lookup table
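A minimal sketch of prefix sharing over four-character blocks, assuming a plain dictionary trie: the helper names (`blocks`, `build_block_trie`, `count_nodes`) and the two sample patterns are hypothetical and are not taken from the tool. Each trie node stands for one four-character comparison, so shared prefixes are compared only once.

```python
def blocks(pattern, size=4):
    """Split a pattern into consecutive blocks of `size` characters."""
    return [pattern[i:i + size] for i in range(0, len(pattern), size)]

def build_block_trie(patterns, size=4):
    """Insert every pattern block by block; common prefixes share nodes."""
    root = {}
    for p in patterns:
        node = root
        for b in blocks(p, size):
            node = node.setdefault(b, {})
        node[None] = p  # terminal marker; None cannot collide with a block key
    return root

def count_nodes(trie):
    """Count block-comparison nodes, ignoring terminal markers."""
    return sum(1 + count_nodes(child)
               for key, child in trie.items() if key is not None)

if __name__ == "__main__":
    pats = ["/cgi-bin/unsafe.cgi", "/cgi-bin/insecure.cgi"]  # hypothetical patterns
    unshared = sum(len(blocks(p)) for p in pats)
    shared = count_nodes(build_block_trie(pats))
    print(unshared, shared)
```

With these two sample patterns the blocks "/cgi" and "-bin" are shared, so the trie needs 9 block comparators instead of 11.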

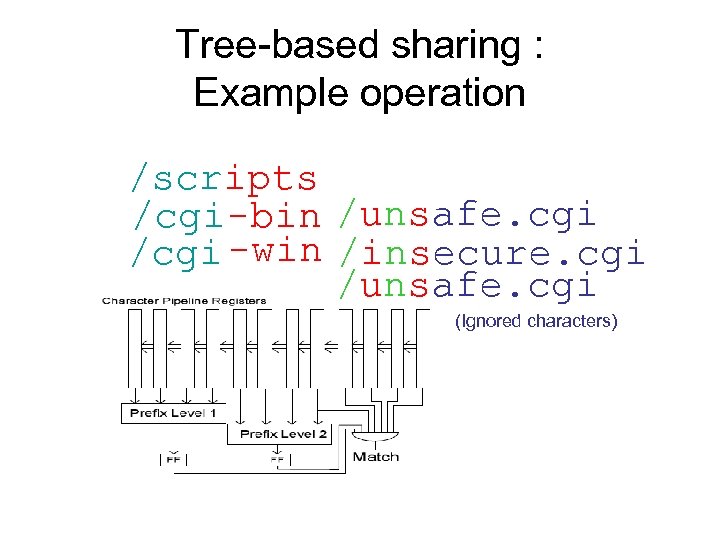

Tree-based sharing: example operation /scripts /cgi-bin /unsafe.cgi /cgi-win /insecure.cgi /unsafe.cgi (ignored characters)

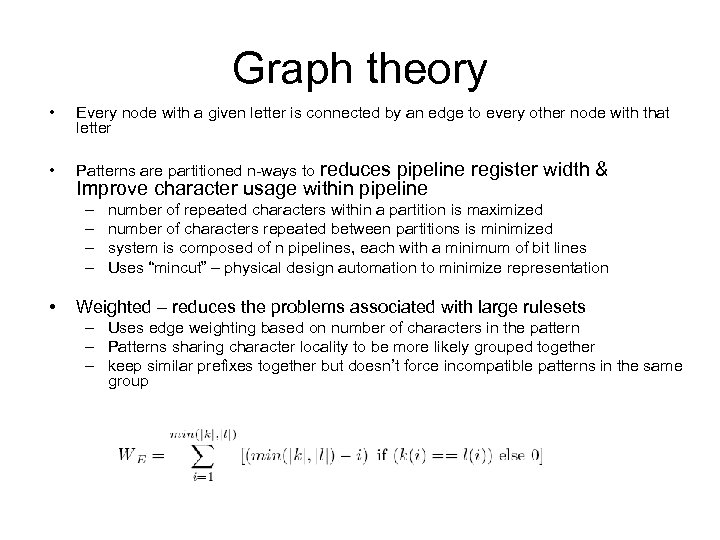

Graph theory

- Every node with a given letter is connected by an edge to every other node with that letter
- Patterns are partitioned n ways to reduce pipeline register width and improve character usage within each pipeline
  - Number of repeated characters within a partition is maximized
  - Number of characters repeated between partitions is minimized
- System is composed of n pipelines, each with a minimum of bit lines
- Uses “mincut”: physical design automation to minimize representation
- Weighted: reduces the problems associated with large rulesets
  - Uses edge weighting based on number of characters in the pattern
  - Patterns sharing character locality are more likely to be grouped together
  - Keeps similar prefixes together but doesn’t force incompatible patterns into the same group
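To make the partitioning objective concrete, the sketch below scores a candidate partition rather than computing a min-cut; the actual flow uses a partitioning toolset for that step. All names (`shared_chars`, `cut_weight`, `bit_lines`) are hypothetical, and weighting an edge by the number of shared characters is an assumption. A lower cut weight means fewer characters repeated between pipelines, and `bit_lines` reports how many decoded character lines each pipeline would carry.

```python
from itertools import combinations

def shared_chars(a, b):
    """Number of distinct characters two patterns have in common."""
    return len(set(a) & set(b))

def cut_weight(partitions):
    """Total weight of edges whose endpoints lie in different partitions."""
    total = 0
    for group_a, group_b in combinations(partitions, 2):
        for a in group_a:
            for b in group_b:
                total += shared_chars(a, b)
    return total

def bit_lines(partitions):
    """Distinct characters per partition, i.e. decoded lines per pipeline."""
    return [len(set("".join(group))) for group in partitions]

if __name__ == "__main__":
    good = [["abc", "bcd"], ["xyz", "yzw"]]  # similar patterns grouped together
    bad = [["abc", "xyz"], ["bcd", "yzw"]]   # similar patterns split apart
    print(cut_weight(good), bit_lines(good))
    print(cut_weight(bad), bit_lines(bad))
```

Grouping similar patterns gives a cut of 0 and 4 lines per pipeline in this toy case, while splitting them gives a cut of 4 and 6 lines per pipeline.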

On-line Tools

- Tools on-line at
  - http://halcyon.usc.edu/~zbaker/idstools
- Tool package
  - Partitioning tools: KMETIS toolset
  - The trie data structure module: http://search.cpan.org/~avif/Tree-Trie-0.4/Trie.pm
  - IDS database: Hogwash

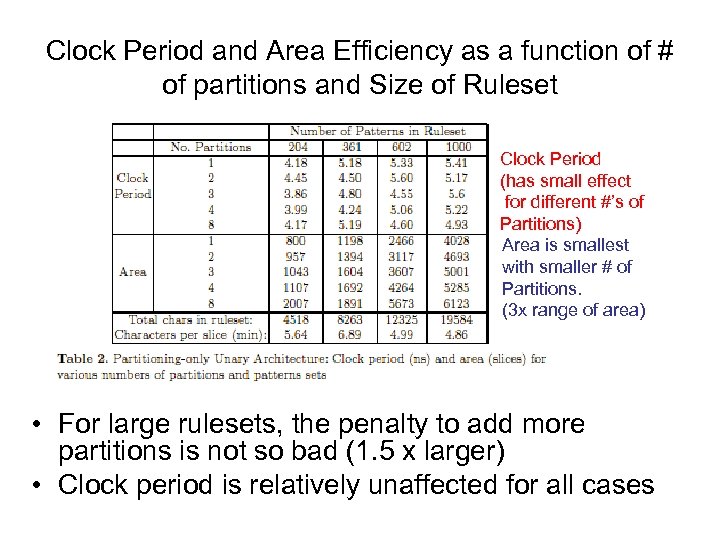

Clock period and area efficiency as a function of number of partitions and size of ruleset

- Clock period: the number of partitions has only a small effect
- Area is smallest with a smaller number of partitions (3x range of area)
- For large rulesets, the penalty for adding more partitions is modest (1.5x larger)
- Clock period is relatively unaffected in all cases

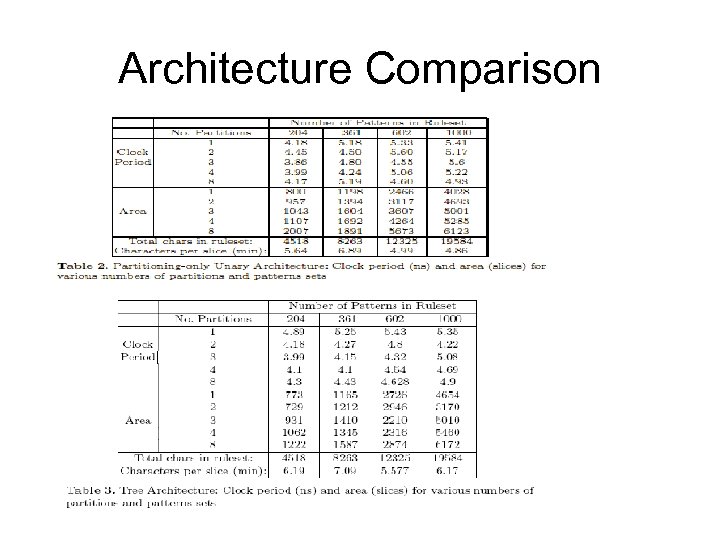

Architecture Comparison

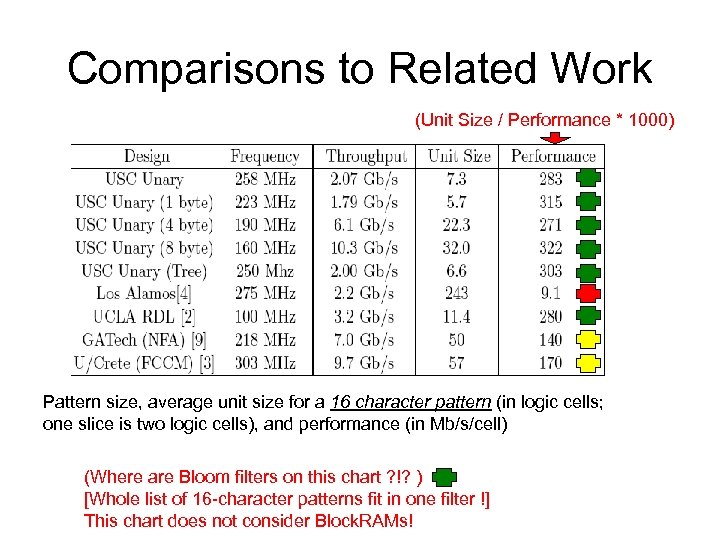

Comparisons to Related Work (Unit Size / Performance * 1000)

- Pattern size, average unit size for a 16-character pattern (in logic cells; one slice is two logic cells), and performance (in Mb/s/cell)
- Where are Bloom filters on this chart?!? [A whole list of 16-character patterns fits in one filter!]
- This chart does not consider BlockRAMs!

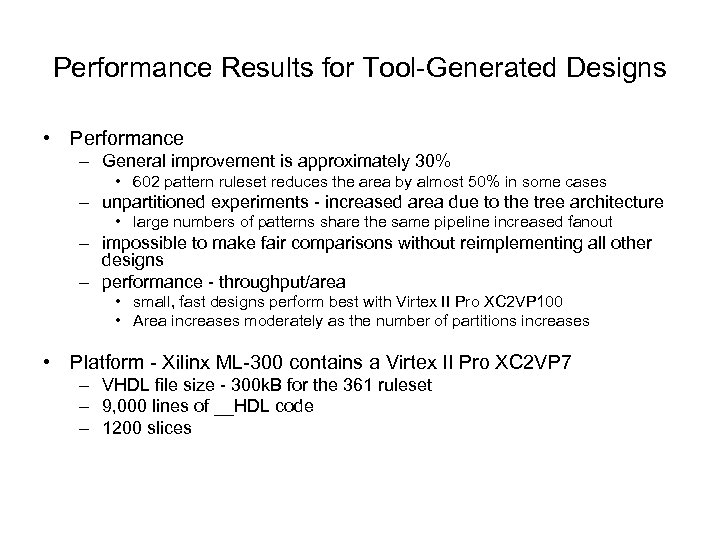

Performance Results for Tool-Generated Designs

- Performance
  - General improvement is approximately 30%
    - Area for the 602-pattern ruleset is reduced by almost 50% in some cases
  - Unpartitioned experiments: increased area due to the tree architecture
    - Large numbers of patterns sharing the same pipeline increase fanout
  - Impossible to make fair comparisons without reimplementing all other designs
  - Performance metric: throughput/area
    - Small, fast designs perform best with Virtex II Pro XC2VP100
    - Area increases moderately as the number of partitions increases
- Platform: Xilinx ML-300, which contains a Virtex II Pro XC2VP7
  - VHDL file size: 300 kB for the 361 ruleset
  - 9,000 lines of __HDL code
  - 1200 slices

Conclusion

- Throughput (proportional to 1 / clock period) is not greatly affected by the number of partitions
- Area increases with the number of partitions
- Performance of approach (speed/area)
  - Comparable to that achieved by UCLA RDL
  - About 2x better than GaTech and U. Crete
  - About 30x better than Los Alamos
  - Not compared to Bloom filters
