- Number of slides: 47

Automatic Music Classification
Cory McKay

Introduction
- Many areas of research in music information retrieval (MIR) involve using computers to classify music in various ways
  - Genre or style classification
  - Mood classification
  - Performer or composer identification
  - Music recommendation
  - Playlist generation
  - Hit prediction
  - Audio to symbolic transcription
  - etc.
- Such areas often share similar central procedures

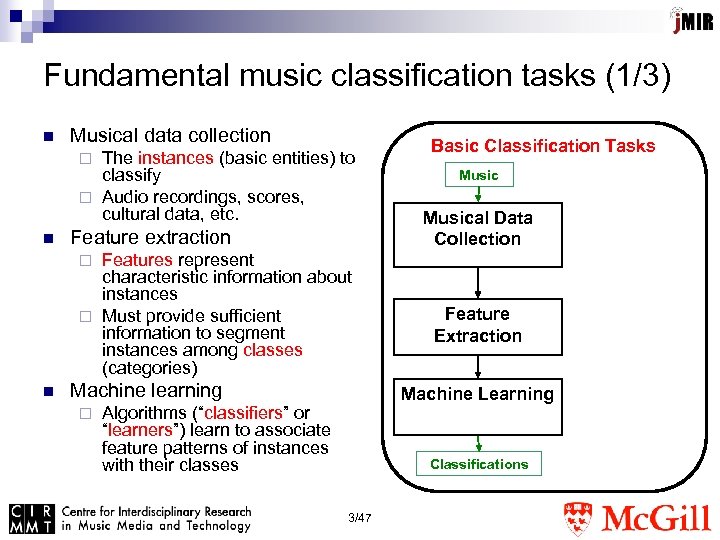

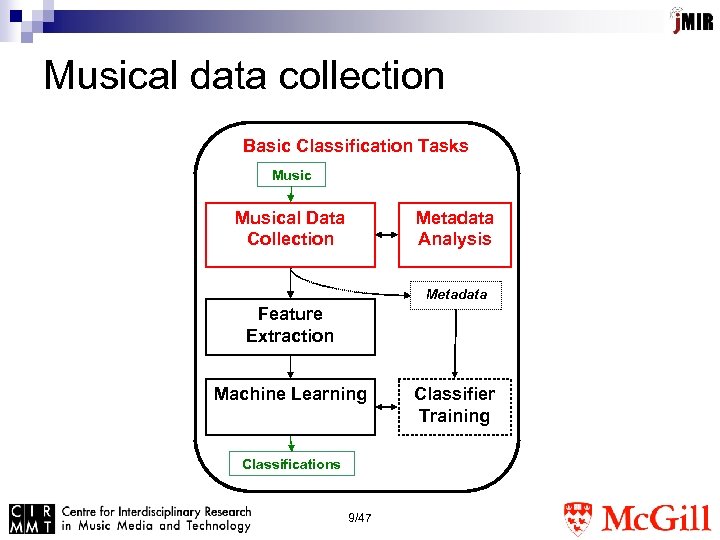

Fundamental music classification tasks (1/3)
- Musical data collection
  - The instances (basic entities) to classify
  - Audio recordings, scores, cultural data, etc.
- Feature extraction
  - Features represent characteristic information about instances
  - Must provide sufficient information to segment instances among classes (categories)
- Machine learning
  - Algorithms (“classifiers” or “learners”) learn to associate feature patterns of instances with their classes

Diagram (Basic Classification Tasks): Musical Data Collection, Feature Extraction, Machine Learning, Classifications.

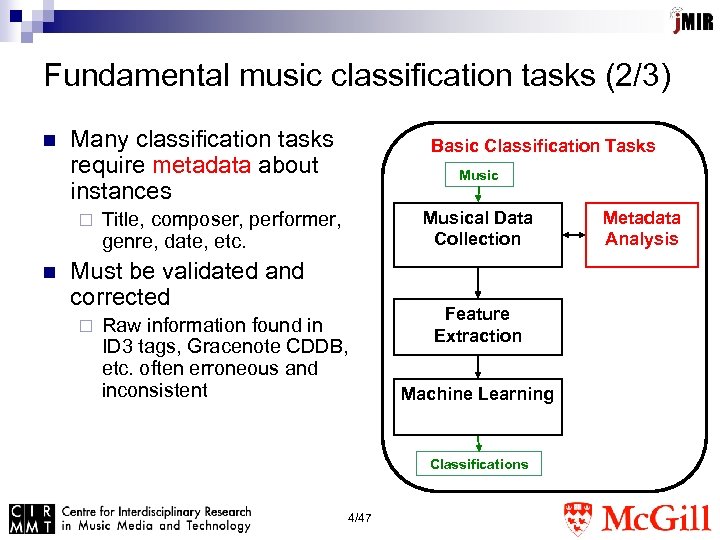

Fundamental music classification tasks (2/3)
- Many classification tasks require metadata about instances
  - Title, composer, performer, genre, date, etc.
- Must be validated and corrected
  - Raw information found in ID3 tags, Gracenote CDDB, etc. often erroneous and inconsistent

Diagram (Basic Classification Tasks): Musical Data Collection, Metadata Analysis, Feature Extraction, Machine Learning, Classifications.

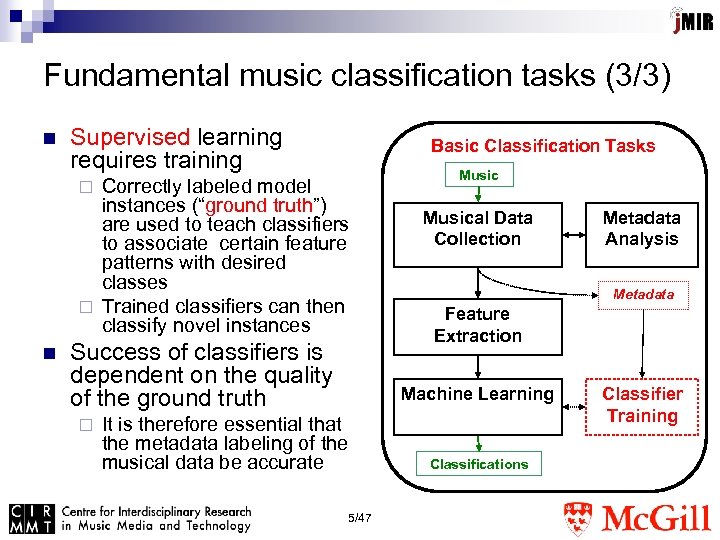

Fundamental music classification tasks (3/3)
- Supervised learning requires training
  - Correctly labeled model instances (“ground truth”) are used to teach classifiers to associate certain feature patterns with desired classes
  - Trained classifiers can then classify novel instances
- Success of classifiers is dependent on the quality of the ground truth
  - It is therefore essential that the metadata labeling of the musical data be accurate

Diagram (Basic Classification Tasks): Musical Data Collection, Metadata Analysis, Metadata, Feature Extraction, Machine Learning, Classifier Training, Classifications.

Consolidating fundamental tasks
- Properly performing these tasks requires significant effort and knowledge in (at least):
  - Data mining
  - Signal processing
  - Musicology
- Result:
  - Naïve or improperly performed research
  - Duplication of effort
  - Reluctance to use automatic music classification in musicological or other research where it could be useful
- Solution: standardized MIR research software
  - Makes automatic music classification technology available to researchers in many disciplines

Existing MIR software
- Only a few MIR software systems have been built for use by other researchers
  - e.g. Marsyas and M2K
  - Tend to focus primarily on particular sub-tasks
    - e.g. audio feature extraction
  - Not typically well integrated with other systems
  - Do not sufficiently emphasize extensibility
  - Typically have usability problems
    - Installation and licensing issues, poor documentation
- Result:
  - Emphasis on existing techniques rather than development of new approaches
  - Difficulties in integrating research between labs
  - Inaccessible to non-technical music researchers

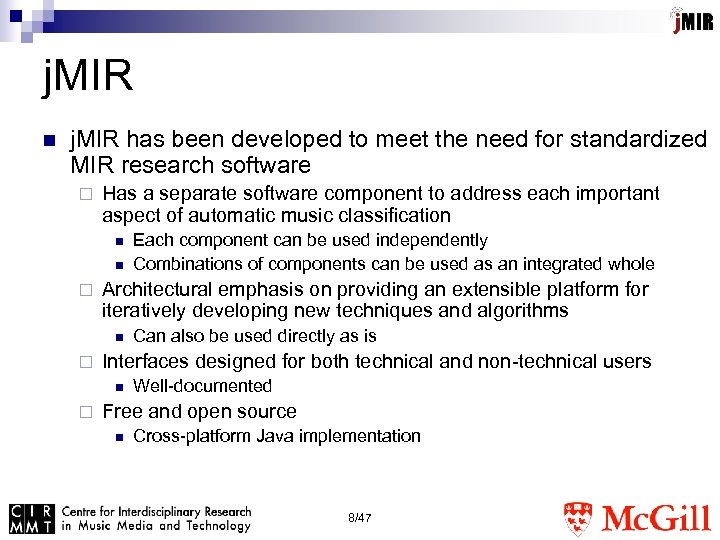

jMIR
- jMIR has been developed to meet the need for standardized MIR research software
  - Has a separate software component to address each important aspect of automatic music classification
    - Each component can be used independently
    - Combinations of components can be used as an integrated whole
  - Architectural emphasis on providing an extensible platform for iteratively developing new techniques and algorithms
    - Can also be used directly as is
  - Interfaces designed for both technical and non-technical users
    - Well-documented
  - Free and open source
    - Cross-platform Java implementation

Musical data collection

Diagram (Basic Classification Tasks): Musical Data Collection, Metadata Analysis, Metadata, Feature Extraction, Machine Learning, Classifier Training, Classifications.

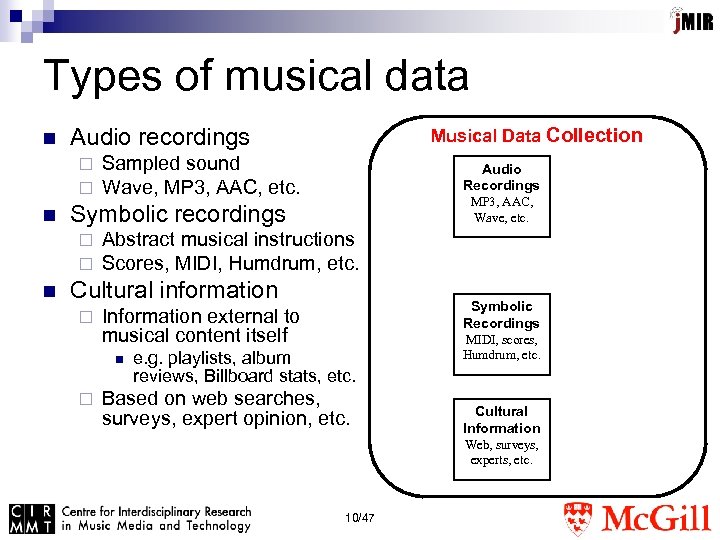

Types of musical data
- Audio recordings
  - Sampled sound
  - Wave, MP3, AAC, etc.
- Symbolic recordings
  - Abstract musical instructions
  - Scores, MIDI, Humdrum, etc.
- Cultural information
  - Information external to musical content itself
  - e.g. playlists, album reviews, Billboard stats, etc.
  - Based on web searches, surveys, expert opinion, etc.

Diagram (Musical Data Collection): Audio Recordings (MP3, AAC, Wave, etc.); Symbolic Recordings (MIDI, scores, Humdrum, etc.); Cultural Information (Web, surveys, experts, etc.).

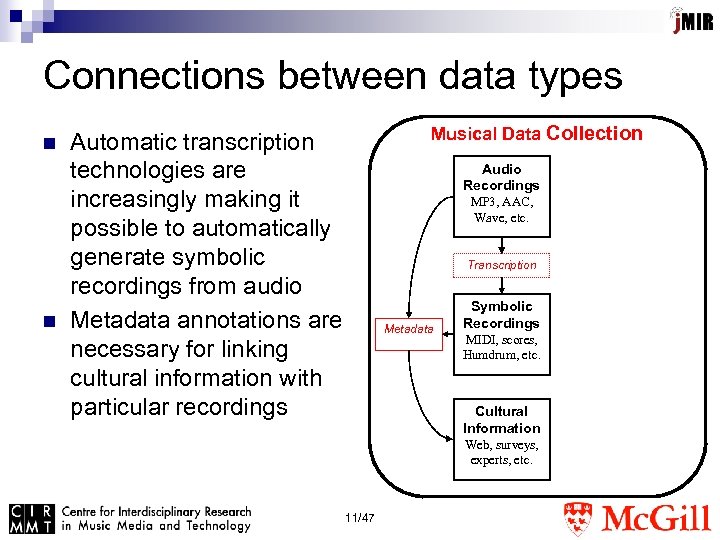

Connections between data types
- Automatic transcription technologies are increasingly making it possible to automatically generate symbolic recordings from audio
- Metadata annotations are necessary for linking cultural information with particular recordings

Diagram (Musical Data Collection): Audio Recordings (MP3, AAC, Wave, etc.); Symbolic Recordings (MIDI, scores, Humdrum, etc.); Cultural Information (Web, surveys, experts, etc.); links labeled Transcription and Metadata.

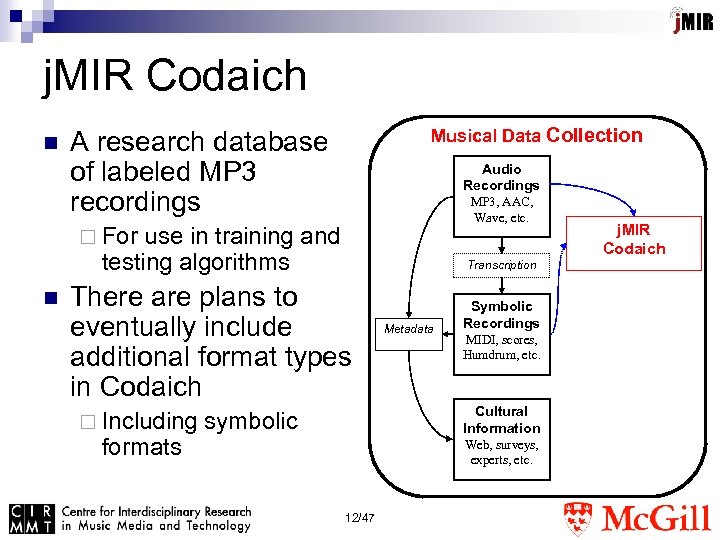

jMIR Codaich
- A research database of labeled MP3 recordings
  - For use in training and testing algorithms
- There are plans to eventually include additional format types in Codaich
  - Including symbolic formats

Diagram (Musical Data Collection): Audio Recordings, Symbolic Recordings, Cultural Information, Transcription, Metadata, jMIR Codaich.

Sharing Codaich
- Codaich is intended to provide a common knowledge base that can be used by researchers in different labs to compare the effectiveness of their varying approaches
- Overcoming copyright limitations on distributing music:
  - On-demand Feature Extraction Network (OMEN)
    - Implemented by Daniel McEnnis
  - Researchers use distributed computing and the jMIR jAudio feature extractor to request local feature extraction at sites (e.g., libraries) that have legal access to individual recordings
  - jAudio and OMEN allow custom original features and extraction parameters

Statistics on Codaich
- 27 305 MP3 recordings
  - Constantly growing
- 2247 artists
- 55 genres
  - Popular, classical, jazz and “world”
- 19 metadata fields

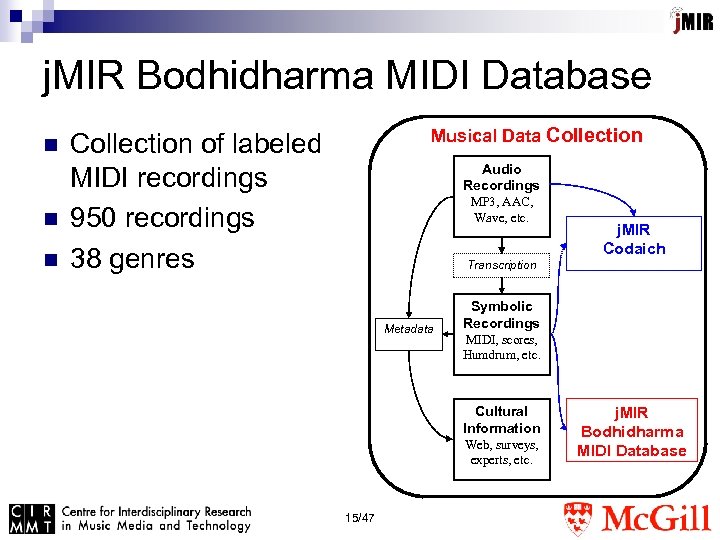

jMIR Bodhidharma MIDI Database
- Collection of labeled MIDI recordings
- 950 recordings
- 38 genres

Diagram (Musical Data Collection): Audio Recordings, Symbolic Recordings, Cultural Information, Transcription, Metadata, jMIR Codaich, jMIR Bodhidharma MIDI Database.

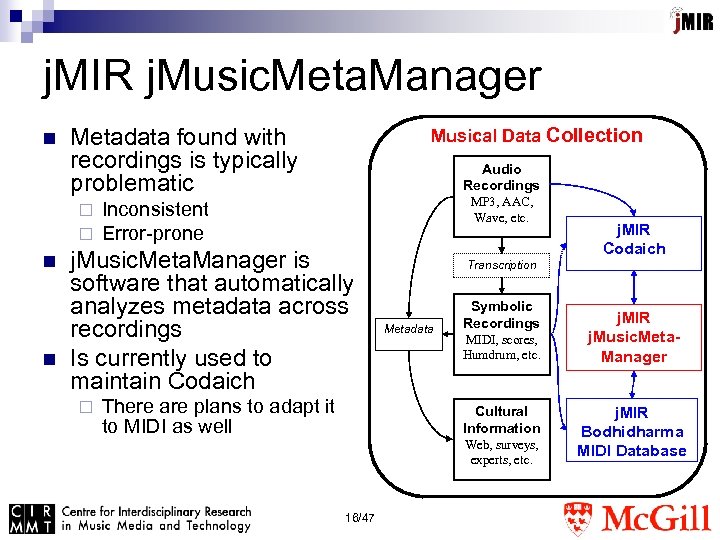

jMIR jMusicMetaManager
- Metadata found with recordings is typically problematic
  - Inconsistent
  - Error-prone
- jMusicMetaManager is software that automatically analyzes metadata across recordings
- Is currently used to maintain Codaich
  - There are plans to adapt it to MIDI as well

Diagram (Musical Data Collection): Audio Recordings, Symbolic Recordings, Cultural Information, Transcription, Metadata, jMIR Codaich, jMIR jMusicMetaManager, jMIR Bodhidharma MIDI Database.

Tasks performed by jMusicMetaManager
- Detects differing metadata values that should in fact be the same
  - e.g. in a performer identification task, “Charlie Mingus” should not be misclassified as a different performer than “Mingus, Charles”
- Detects redundant copies of recordings
  - Could contaminate test sets
- Generates inventory and statistical profile reports
  - 39 reports in all

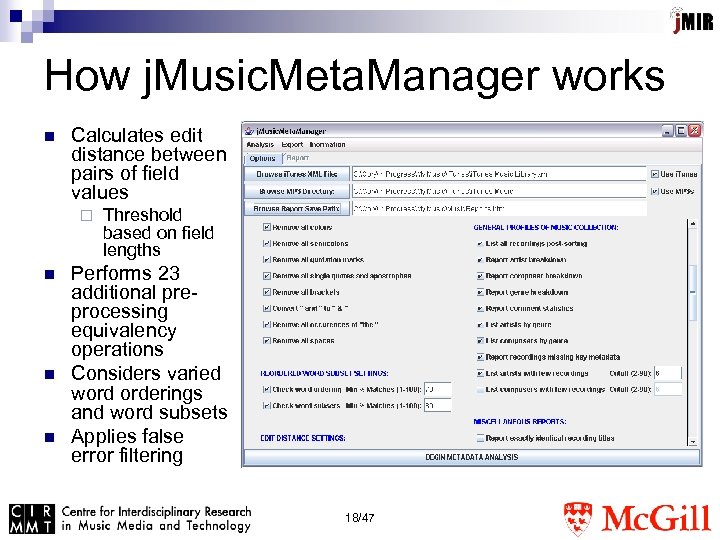

How jMusicMetaManager works
- Calculates edit distance between pairs of field values
  - Threshold based on field lengths
- Performs 23 additional preprocessing equivalency operations
- Considers varied word orderings and word subsets
- Applies false error filtering

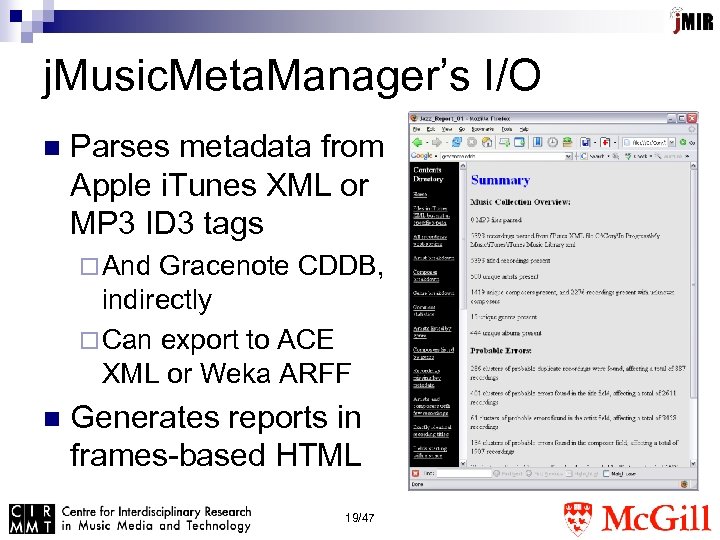
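
The matching steps listed on the slide above can be sketched roughly as follows. This is a minimal illustration and not jMusicMetaManager's actual code: the function names, the single normalisation step (lowercasing, dropping punctuation, sorting words) and the 0.25 length ratio are all assumptions made for the example.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance using the standard two-row dynamic programme."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def normalize(value: str) -> str:
    """Hypothetical preprocessing: lowercase, drop punctuation, sort the words."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " "
                      for ch in value.lower())
    return " ".join(sorted(cleaned.split()))


def probably_same(a: str, b: str, ratio: float = 0.25) -> bool:
    """Flag two field values as likely variants of one another.

    The threshold grows with the longer field; the 0.25 ratio is an assumption.
    """
    na, nb = normalize(a), normalize(b)
    threshold = ratio * max(len(na), len(nb))
    return edit_distance(na, nb) <= threshold
```

Sorting the words before comparing is one simple way to make “Mingus, Charles” and “Charlie Mingus” comparable despite the different word order; a real system would presumably need further rules for word subsets and for filtering false positives.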

jMusicMetaManager’s I/O
- Parses metadata from Apple iTunes XML or MP3 ID3 tags
  - And Gracenote CDDB, indirectly
  - Can export to ACE XML or Weka ARFF
- Generates reports in frames-based HTML

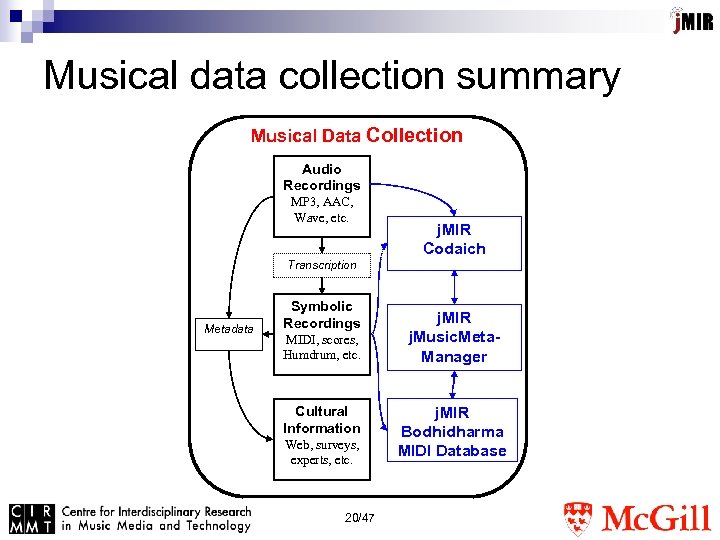
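
For readers unfamiliar with Weka ARFF, the sketch below writes a tiny file in that text format: a relation name, one `@attribute` line per column, then comma-separated rows under `@data`. It illustrates the format only and is not jMusicMetaManager's exporter; the function name, relation name and attribute names are hypothetical, and attribute names are assumed to contain no spaces (ARFF would otherwise require quoting).

```python
def to_arff(relation, feature_names, rows, classes):
    """Serialise numeric feature vectors plus a nominal class label as ARFF text.

    rows is a list of (feature_values, class_label) pairs.
    """
    lines = [f"@relation {relation}", ""]
    for name in feature_names:
        lines.append(f"@attribute {name} numeric")
    # A nominal attribute lists its permitted values in braces.
    lines.append("@attribute class {" + ",".join(classes) + "}")
    lines += ["", "@data"]
    for values, label in rows:
        lines.append(",".join(str(v) for v in values) + "," + label)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    demo = to_arff(
        "codaich_demo",                      # hypothetical relation name
        ["rms", "spectral_flux"],            # hypothetical feature names
        [([0.12, 0.5], "jazz"), ([0.3, 0.9], "classical")],
        ["jazz", "classical"],
    )
    print(demo)
```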

Musical data collection summary

Diagram (Musical Data Collection): Audio Recordings (MP3, AAC, Wave, etc.); Symbolic Recordings (MIDI, scores, Humdrum, etc.); Cultural Information (Web, surveys, experts, etc.); Transcription; Metadata; jMIR Codaich; jMIR jMusicMetaManager; jMIR Bodhidharma MIDI Database.

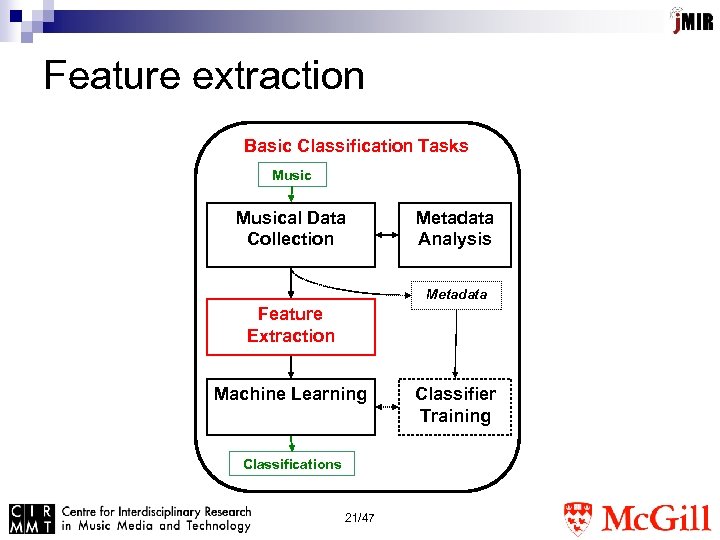

Feature extraction

Diagram (Basic Classification Tasks): Musical Data Collection, Metadata Analysis, Metadata, Feature Extraction, Machine Learning, Classifier Training, Classifications.

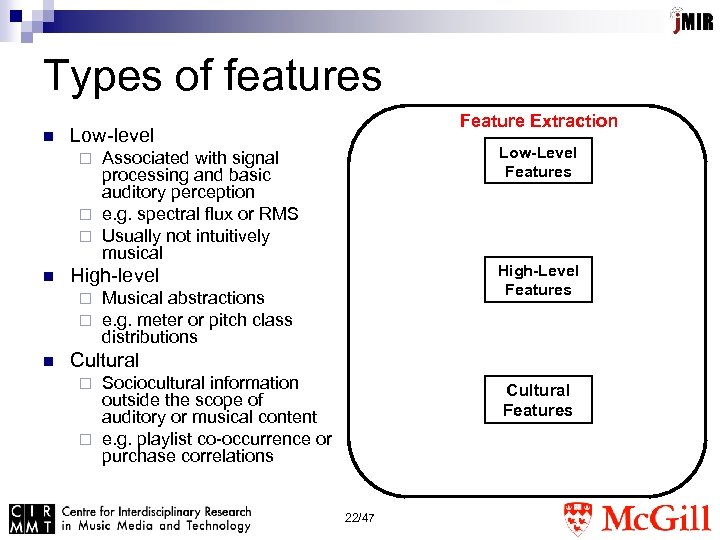

Types of features
- Low-level
  - Associated with signal processing and basic auditory perception
  - e.g. spectral flux or RMS
  - Usually not intuitively musical
- High-level
  - Musical abstractions
  - e.g. meter or pitch class distributions
- Cultural
  - Sociocultural information outside the scope of auditory or musical content
  - e.g. playlist co-occurrence or purchase correlations

Diagram (Feature Extraction): Low-Level Features, High-Level Features, Cultural Features.

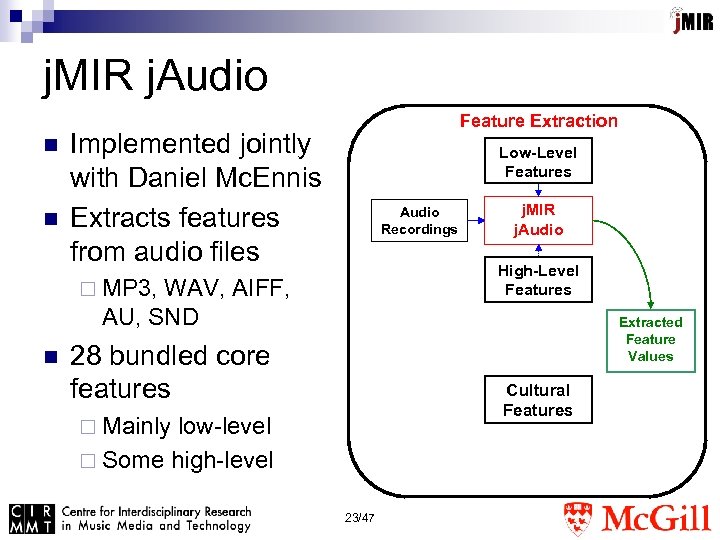

jMIR jAudio
- Implemented jointly with Daniel McEnnis
- Extracts features from audio files
  - MP3, WAV, AIFF, AU, SND
- 28 bundled core features
  - Mainly low-level
  - Some high-level

Diagram (Feature Extraction): Audio Recordings, jMIR jAudio, Low-Level Features, High-Level Features, Cultural Features, Extracted Feature Values.

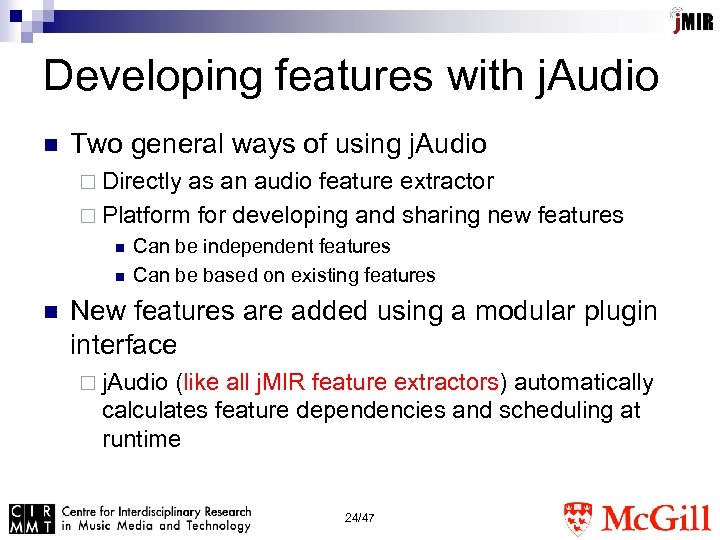

Developing features with jAudio
- Two general ways of using jAudio
  - Directly as an audio feature extractor
  - Platform for developing and sharing new features
    - Can be independent features
    - Can be based on existing features
- New features are added using a modular plugin interface
  - jAudio (like all jMIR feature extractors) automatically calculates feature dependencies and scheduling at runtime
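
One common way to schedule features that depend on other features is a topological sort of the dependency graph. The sketch below shows that general idea with Python's standard `graphlib` module; it is not a description of how jAudio does it internally, and the feature names and the dependency table are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency table: each feature maps to the features
# that must be computed before it.
dependencies = {
    "magnitude_spectrum": set(),
    "rms": set(),
    "spectral_centroid": {"magnitude_spectrum"},
    "spectral_flux": {"magnitude_spectrum"},
    "rms_derivative": {"rms"},
}


def extraction_order(deps):
    """Return an order in which every feature follows its prerequisites."""
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    print(extraction_order(dependencies))
```

`TopologicalSorter` raises `graphlib.CycleError` if two features depend on each other, which is a convenient way to reject an invalid plugin configuration early.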

Metafeatures and aggregators
- jAudio automatically calculates “metafeatures” of new or existing features
  - e.g. running means, standard deviations or derivatives across sample windows
- jAudio automatically calculates “aggregators” for new or existing features
  - Functions that collapse a sequence of feature vectors into a single vector or smaller sequence of vectors
  - Useful for representing in a low-dimensional way how different features change together
  - e.g. the Area of Moments aggregator transforms a set of feature vectors into a two-dimensional image matrix and calculates two-dimensional moments

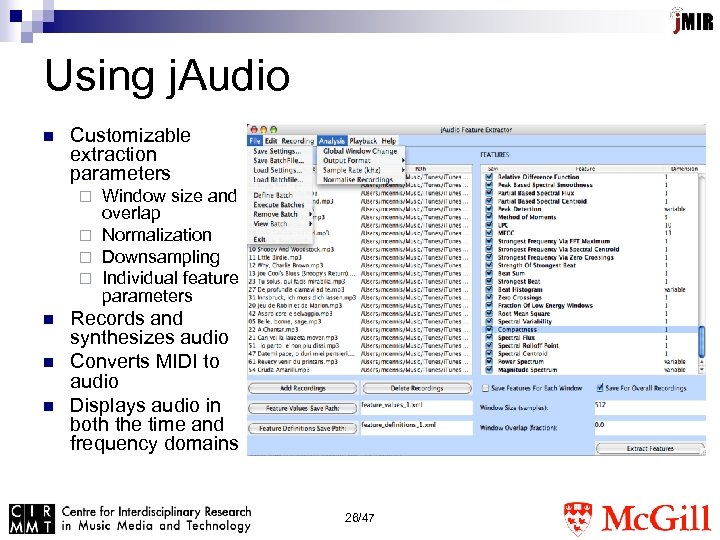
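
As a rough illustration of the two ideas on the slide above, the sketch below computes three metafeatures (running mean, running standard deviation, first difference) over a sequence of per-window feature values, plus a very simple aggregator that collapses a sequence of feature vectors into one mean vector. The function names and the `span` parameter are assumptions; the Area of Moments aggregator mentioned on the slide is not reproduced here.

```python
from statistics import mean, pstdev


def running_mean(values, span):
    """Mean of each run of `span` consecutive per-window values."""
    return [mean(values[i - span + 1:i + 1]) for i in range(span - 1, len(values))]


def running_std(values, span):
    """Population standard deviation of each run of `span` consecutive values."""
    return [pstdev(values[i - span + 1:i + 1]) for i in range(span - 1, len(values))]


def derivative(values):
    """First difference between successive windows."""
    return [b - a for a, b in zip(values, values[1:])]


def mean_aggregator(vectors):
    """Collapse a sequence of feature vectors into one vector of per-dimension means."""
    return [mean(column) for column in zip(*vectors)]
```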

Using jAudio
- Customizable extraction parameters
  - Window size and overlap
  - Normalization
  - Downsampling
  - Individual feature parameters
- Records and synthesizes audio
- Converts MIDI to audio
- Displays audio in both the time and frequency domains

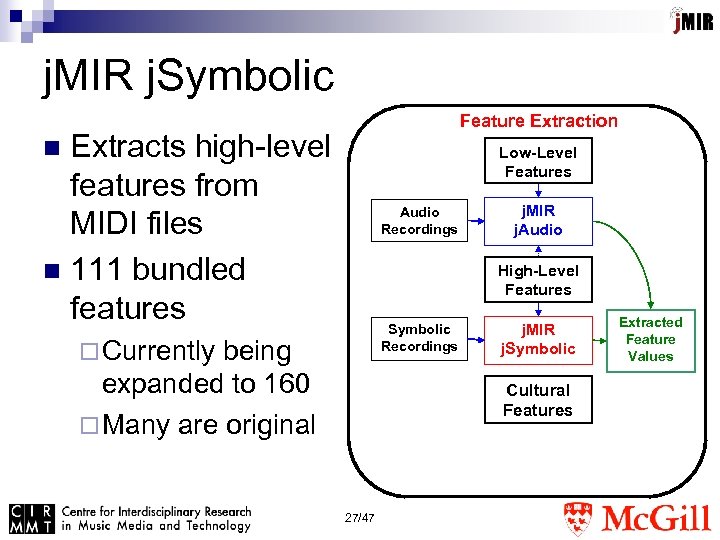
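
To make the window size and overlap parameters concrete, here is a minimal sketch that slices a sample sequence into overlapping windows and computes RMS, one of the low-level features named earlier, for each window. The function names and the convention that overlap is given as a fraction of the window are assumptions, not jAudio's actual parameter definitions.

```python
import math


def windows(samples, size, overlap):
    """Yield successive windows of `size` samples.

    `overlap` is assumed to be a fraction in [0, 1) of the window length.
    Trailing samples that do not fill a whole window are dropped.
    """
    hop = max(1, round(size * (1 - overlap)))
    for start in range(0, len(samples) - size + 1, hop):
        yield samples[start:start + size]


def rms(window):
    """Root mean square amplitude of one window."""
    return math.sqrt(sum(x * x for x in window) / len(window))


if __name__ == "__main__":
    signal = [math.sin(2 * math.pi * n / 16) for n in range(64)]
    print([round(rms(w), 3) for w in windows(signal, size=16, overlap=0.5)])
```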

jMIR jSymbolic
- Extracts high-level features from MIDI files
- 111 bundled features
  - Currently being expanded to 160
  - Many are original

Diagram (Feature Extraction): Audio Recordings, jMIR jAudio, Symbolic Recordings, jMIR jSymbolic, Low-Level Features, High-Level Features, Cultural Features, Extracted Feature Values.

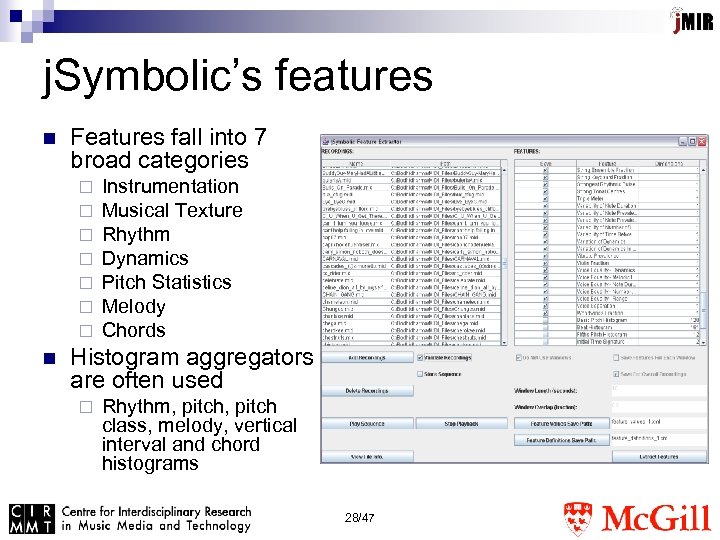

jSymbolic’s features
- Features fall into 7 broad categories
  - Instrumentation
  - Musical Texture
  - Rhythm
  - Dynamics
  - Pitch Statistics
  - Melody
  - Chords
- Histogram aggregators are often used
  - Rhythm, pitch class, melody, vertical interval and chord histograms

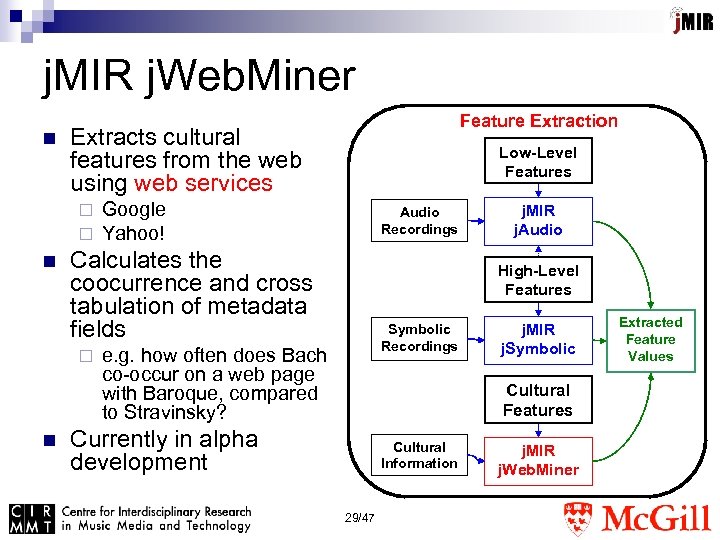
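
A pitch class histogram is perhaps the easiest of these to illustrate. The sketch below folds MIDI note numbers onto the 12 pitch classes and normalises the counts; the function name and the choice to normalise to fractions are assumptions, and jSymbolic's own histograms may be weighted or defined differently.

```python
def pitch_class_histogram(midi_notes):
    """Fraction of notes falling on each of the 12 pitch classes (index 0 = C)."""
    counts = [0] * 12
    for note in midi_notes:
        counts[note % 12] += 1  # MIDI note 60 is middle C, so 60 % 12 == 0
    total = sum(counts)
    return [c / total for c in counts] if total else counts


if __name__ == "__main__":
    # C4, E4, G4, C5: a C major arpeggio
    print(pitch_class_histogram([60, 64, 67, 72]))
```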

jMIR jWebMiner
- Extracts cultural features from the web using web services
  - Google
  - Yahoo!
- Calculates the co-occurrence and cross tabulation of metadata fields
  - e.g. how often does Bach co-occur on a web page with Baroque, compared to Stravinsky?
- Currently in alpha development

Diagram (Feature Extraction): Audio Recordings, jMIR jAudio, Symbolic Recordings, jMIR jSymbolic, Cultural Information, jMIR jWebMiner, Low-Level Features, High-Level Features, Cultural Features, Extracted Feature Values.

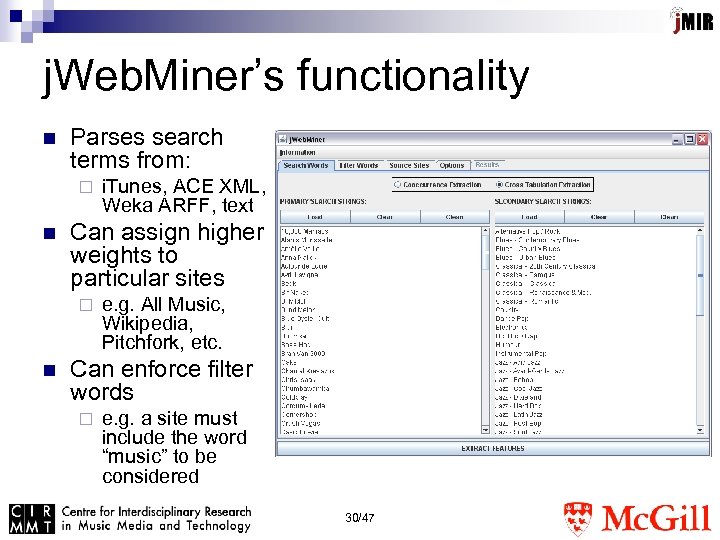

jWebMiner’s functionality
- Parses search terms from:
  - iTunes, ACE XML, Weka ARFF, text
- Can assign higher weights to particular sites
  - e.g. All Music, Wikipedia, Pitchfork, etc.
- Can enforce filter words
  - e.g. a site must include the word “music” to be considered

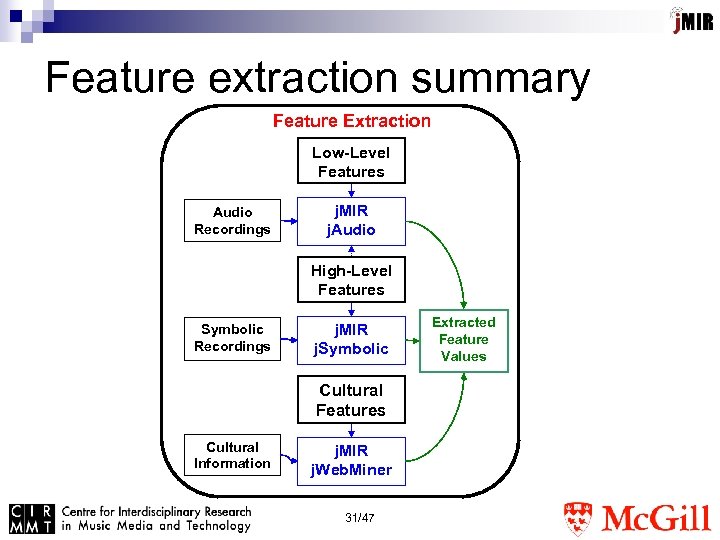
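
The combination of co-occurrence counting, site weights and filter words can be sketched on an in-memory list of pages, with no web service involved. Everything here is an assumption made for illustration: the function name, the `(site, text)` page representation, the simple substring matching, and the choice to score co-occurrence as a weighted fraction. jWebMiner itself works from search engine results and may score things differently.

```python
def cooccurrence_score(pages, term_a, term_b, filter_words=(), site_weights=None):
    """Weighted fraction of pages mentioning term_a that also mention term_b.

    pages is a list of (site, text) pairs. Pages missing any filter word are
    ignored; pages from sites in site_weights count with that weight.
    """
    site_weights = site_weights or {}
    hits_a = hits_both = 0.0
    for site, text in pages:
        lowered = text.lower()
        if any(word.lower() not in lowered for word in filter_words):
            continue  # page fails a required filter word
        weight = site_weights.get(site, 1.0)
        if term_a.lower() in lowered:
            hits_a += weight
            if term_b.lower() in lowered:
                hits_both += weight
    return hits_both / hits_a if hits_a else 0.0


# Hypothetical pages standing in for search results.
PAGES = [
    ("wikipedia.org", "Bach was a Baroque composer of music"),
    ("example.com", "Bach music festival"),
    ("example.com", "Stravinsky wrote ballet music"),
    ("spam.net", "Bach Baroque"),
]
```

Comparing `cooccurrence_score(PAGES, "Bach", "Baroque", ...)` with the same call for “Stravinsky” gives the kind of relative measure the Bach/Stravinsky example describes.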

Feature extraction summary

Diagram (Feature Extraction): Audio Recordings, jMIR jAudio, Low-Level Features; Symbolic Recordings, jMIR jSymbolic, High-Level Features; Cultural Information, jMIR jWebMiner, Cultural Features; Extracted Feature Values.

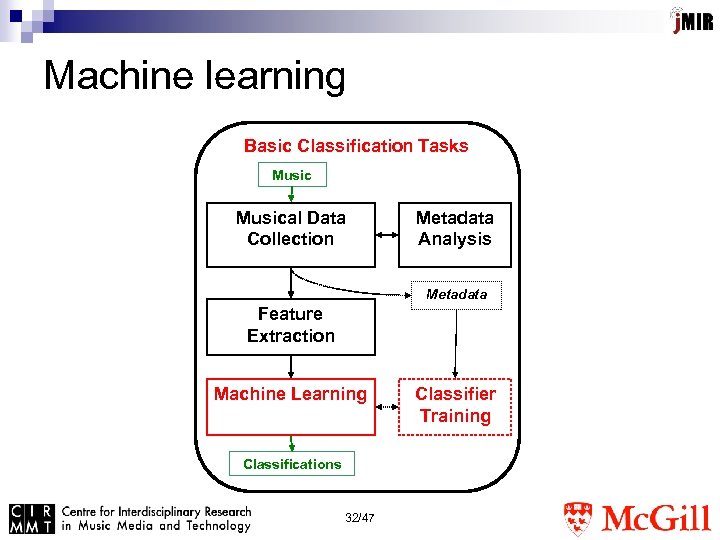

Machine learning

Diagram (Basic Classification Tasks): Musical Data Collection, Metadata Analysis, Metadata, Feature Extraction, Machine Learning, Classifier Training, Classifications.

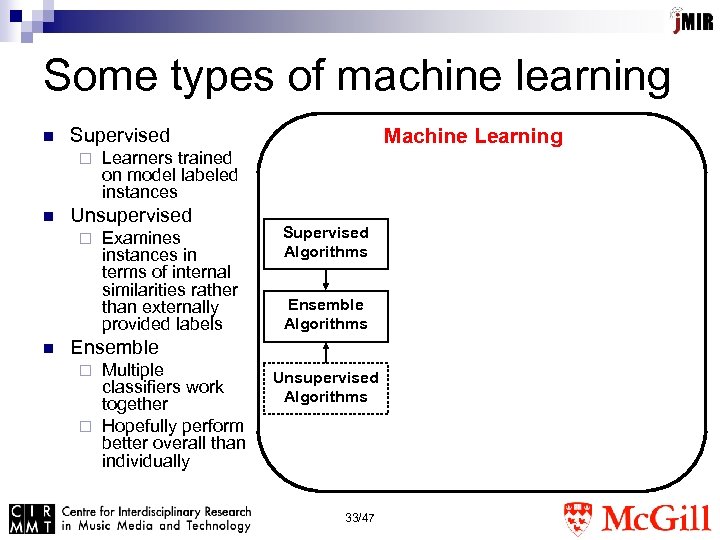

Some types of machine learning
- Supervised
  - Learners trained on model labeled instances
- Unsupervised
  - Examines instances in terms of internal similarities rather than externally provided labels
- Ensemble
  - Multiple classifiers work together
  - Hopefully perform better overall than individually

Diagram (Machine Learning): Supervised Algorithms, Unsupervised Algorithms.

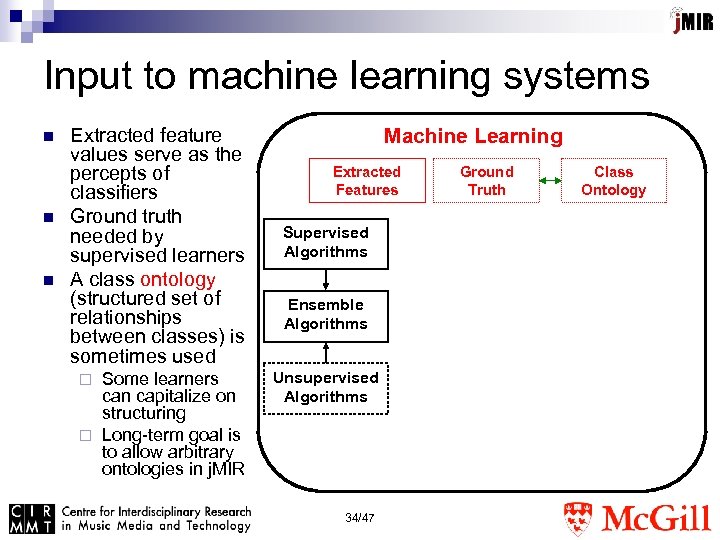

Input to machine learning systems
- Extracted feature values serve as the percepts of classifiers
- Ground truth needed by supervised learners
- A class ontology (structured set of relationships between classes) is sometimes used
  - Some learners can capitalize on structuring
  - Long-term goal is to allow arbitrary ontologies in jMIR

Diagram (Machine Learning): Extracted Features, Ground Truth, Class Ontology, Supervised Algorithms, Ensemble Algorithms, Unsupervised Algorithms.

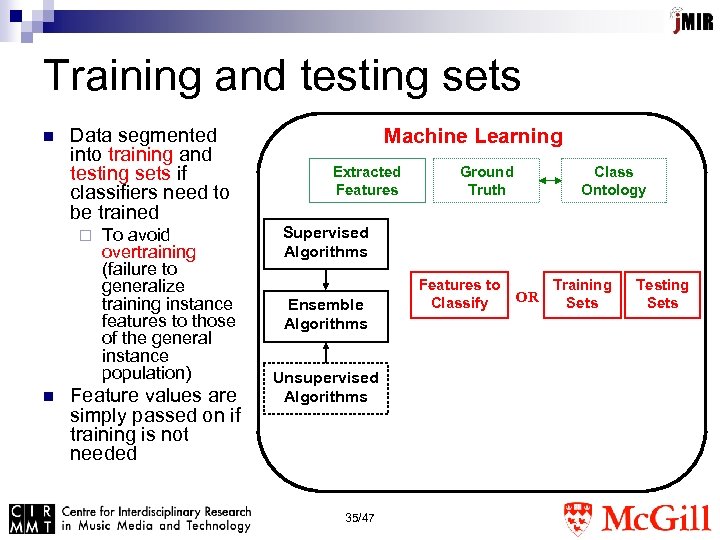

Training and testing sets
- Data segmented into training and testing sets if classifiers need to be trained
  - To avoid overtraining (failure to generalize training instance features to those of the general instance population)
- Feature values are simply passed on if training is not needed

Diagram (Machine Learning): Extracted Features, Ground Truth, Class Ontology; Features to Classify OR Training Sets and Testing Sets; Supervised Algorithms, Ensemble Algorithms, Unsupervised Algorithms.

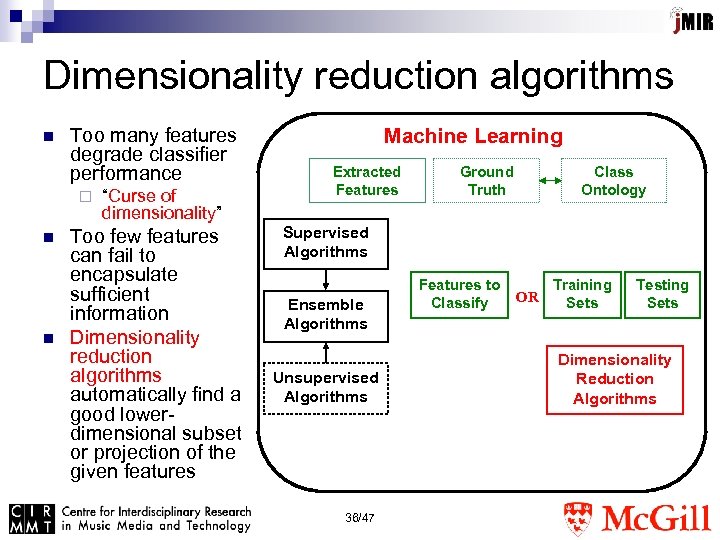
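
A minimal sketch of segmenting instances into training and testing sets follows. The function name, the default 20% test fraction and the fixed random seed are assumptions chosen for the example.

```python
import random


def split_instances(instances, test_fraction=0.2, seed=0):
    """Shuffle the instances and return (training_set, testing_set).

    A fixed seed keeps the split reproducible between runs.
    """
    shuffled = list(instances)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]
```

Evaluating only on the held-out testing set is what reveals overtraining: a classifier that has merely memorised its training instances will score well on them and poorly on instances it has not seen.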

Dimensionality reduction algorithms
- Too many features degrade classifier performance
  - “Curse of dimensionality”
- Too few features can fail to encapsulate sufficient information
- Dimensionality reduction algorithms automatically find a good lower-dimensional subset or projection of the given features

Diagram (Machine Learning): Extracted Features, Ground Truth, Class Ontology; Features to Classify OR Training Sets and Testing Sets; Dimensionality Reduction Algorithms; Supervised Algorithms, Ensemble Algorithms, Unsupervised Algorithms.

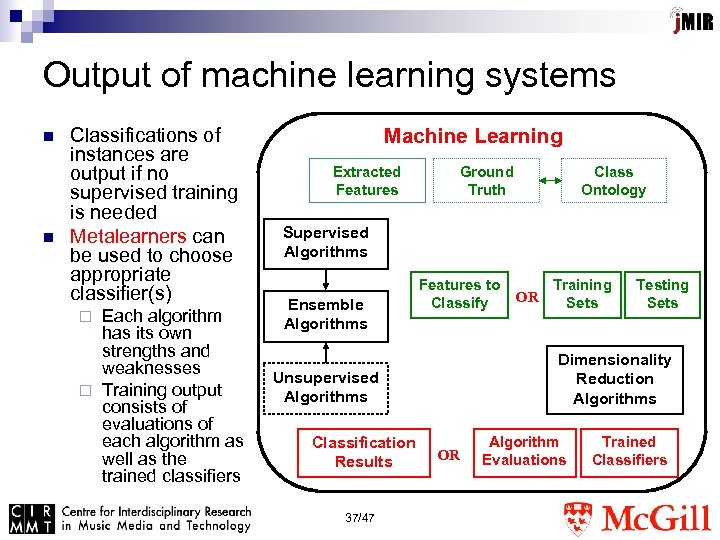
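
To illustrate the "subset" half of that statement, the sketch below performs an exhaustive search over feature subsets, scoring each subset by the leave-one-out accuracy of a 1-nearest-neighbour rule. The scoring rule, the function names and the tie-breaking toward smaller subsets are assumptions for this example; exhaustive search is only practical for a handful of features, since the number of subsets doubles with each one added.

```python
from itertools import combinations


def loo_accuracy(rows, labels, subset):
    """Leave-one-out accuracy of 1-nearest-neighbour on the chosen feature columns."""
    correct = 0
    for i, row in enumerate(rows):
        nearest = min(
            (j for j in range(len(rows)) if j != i),
            key=lambda j: sum((row[k] - rows[j][k]) ** 2 for k in subset),
        )
        correct += labels[nearest] == labels[i]
    return correct / len(rows)


def best_subset(rows, labels):
    """Try every non-empty subset of feature indices and return the best one."""
    n = len(rows[0])
    candidates = [c for size in range(1, n + 1)
                  for c in combinations(range(n), size)]
    # max() keeps the first maximum and candidates are ordered smallest first,
    # so ties are resolved in favour of fewer features.
    return max(candidates, key=lambda c: loo_accuracy(rows, labels, c))
```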

Output of machine learning systems
- Classifications of instances are output if no supervised training is needed
- Metalearners can be used to choose appropriate classifier(s)
  - Each algorithm has its own strengths and weaknesses
  - Training output consists of evaluations of each algorithm as well as the trained classifiers

Diagram (Machine Learning): Extracted Features, Ground Truth, Class Ontology; Features to Classify OR Training Sets and Testing Sets; Dimensionality Reduction Algorithms; Supervised Algorithms, Ensemble Algorithms, Unsupervised Algorithms; Classification Results OR Algorithm Evaluations and Trained Classifiers.

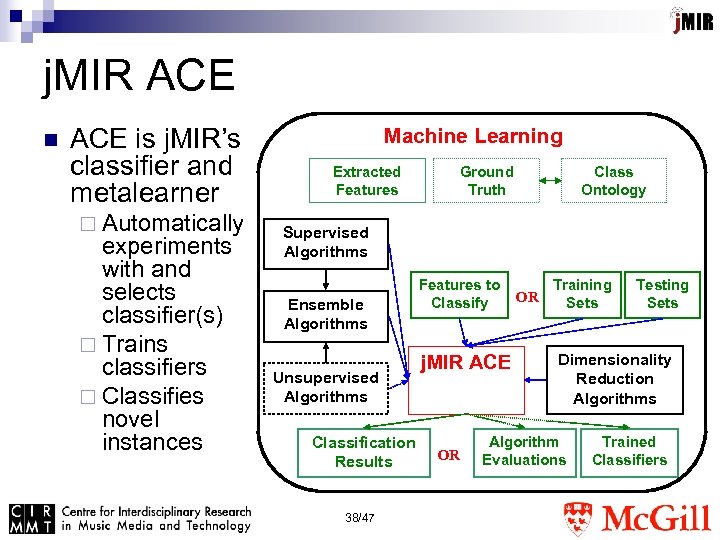

jMIR ACE
- ACE is jMIR’s classifier and metalearner
  - Automatically experiments with and selects classifier(s)
  - Trains classifiers
  - Classifies novel instances

Diagram (Machine Learning): jMIR ACE; Extracted Features, Ground Truth, Class Ontology; Features to Classify OR Training Sets and Testing Sets; Dimensionality Reduction Algorithms; Supervised Algorithms, Ensemble Algorithms, Unsupervised Algorithms; Classification Results OR Algorithm Evaluations and Trained Classifiers.

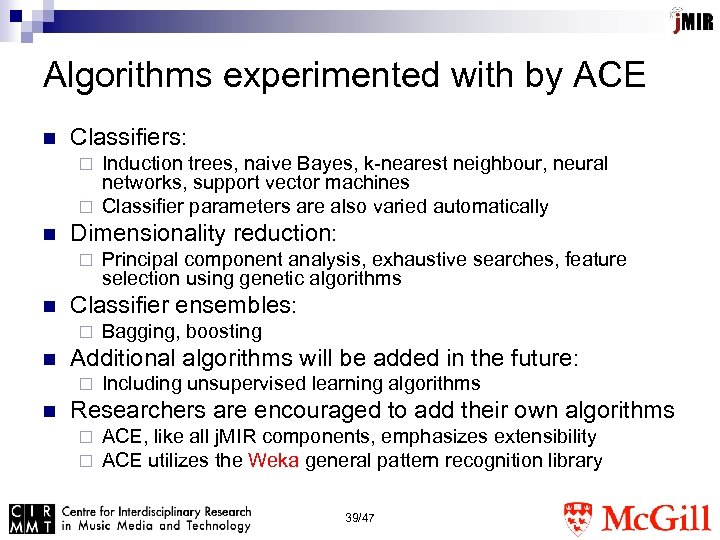

Algorithms experimented with by ACE
- Classifiers:
  - Induction trees, naive Bayes, k-nearest neighbour, neural networks, support vector machines
  - Classifier parameters are also varied automatically
- Dimensionality reduction:
  - Principal component analysis, exhaustive searches, feature selection using genetic algorithms
- Classifier ensembles:
  - Bagging, boosting
- Additional algorithms will be added in the future:
  - Including unsupervised learning algorithms
- Researchers are encouraged to add their own algorithms
  - ACE, like all jMIR components, emphasizes extensibility
  - ACE utilizes the Weka general pattern recognition library

Details of ACE

- ACE evaluates algorithms in terms of
  - Classification accuracy
  - Performance consistency
  - Training complexity / time
  - Classification complexity / time
- There are future plans to utilize distributed computing to spread out the computational burden
  - Will also add the ability to impose limits on the time available for the ACE metalearner to come up with algorithm selections
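
The four evaluation criteria above can be sketched as a small cross-validation loop. This is a minimal illustration, not ACE's implementation. The two toy classifiers, the fold scheme, the use of the standard deviation of per-fold accuracy as a stand-in for "performance consistency", and the ranking rule in `select_best` are all assumptions, since the slides do not say how ACE weighs the criteria against each other.

```python
import statistics
import time


class NearestCentroid:
    """Toy classifier: assign the class whose mean feature vector is closest."""

    def fit(self, X, y):
        sums, counts = {}, {}
        for features, label in zip(X, y):
            acc = sums.setdefault(label, [0.0] * len(features))
            for i, value in enumerate(features):
                acc[i] += value
            counts[label] = counts.get(label, 0) + 1
        self.centroids = {
            label: [v / counts[label] for v in acc] for label, acc in sums.items()
        }
        return self

    def predict(self, features):
        def dist(label):
            return sum((a - b) ** 2 for a, b in zip(self.centroids[label], features))
        return min(self.centroids, key=dist)


class MajorityClass:
    """Baseline: always predict the most frequent training class."""

    def fit(self, X, y):
        self.label = max(set(y), key=y.count)
        return self

    def predict(self, features):
        return self.label


def evaluate(make_classifier, X, y, folds=4):
    """Cross-validate one candidate and report the four criteria."""
    accuracies, train_time, classify_time = [], 0.0, 0.0
    for fold in range(folds):
        test_idx = set(range(fold, len(X), folds))
        train_X = [x for i, x in enumerate(X) if i not in test_idx]
        train_y = [c for i, c in enumerate(y) if i not in test_idx]
        start = time.perf_counter()
        model = make_classifier().fit(train_X, train_y)
        train_time += time.perf_counter() - start
        start = time.perf_counter()
        hits = sum(model.predict(X[i]) == y[i] for i in test_idx)
        classify_time += time.perf_counter() - start
        accuracies.append(hits / len(test_idx))
    return {
        "accuracy": statistics.mean(accuracies),
        "consistency": statistics.pstdev(accuracies),  # lower is steadier
        "train_seconds": train_time,
        "classify_seconds": classify_time,
    }


def select_best(candidates, X, y):
    """Rank by accuracy, then by steadier folds, then by shorter training time."""
    reports = {name: evaluate(make, X, y) for name, make in candidates.items()}
    best = max(reports, key=lambda n: (reports[n]["accuracy"],
                                       -reports[n]["consistency"],
                                       -reports[n]["train_seconds"]))
    return best, reports


# Hypothetical data: 12 instances, 2 features, 2 classes.
X = [(0.1 + 0.01 * i, 0.2) for i in range(6)] + [(0.9 - 0.01 * i, 0.8) for i in range(6)]
y = ["classical"] * 6 + ["rock"] * 6
CANDIDATES = {"nearest_centroid": NearestCentroid, "majority": MajorityClass}

if __name__ == "__main__":
    best, reports = select_best(CANDIDATES, X, y)
    print(best, reports[best])
```

A time limit of the kind mentioned in the future plans could be approximated by stopping the loop over candidates once a budget is exceeded, though the slides give no detail on how ACE would do this.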

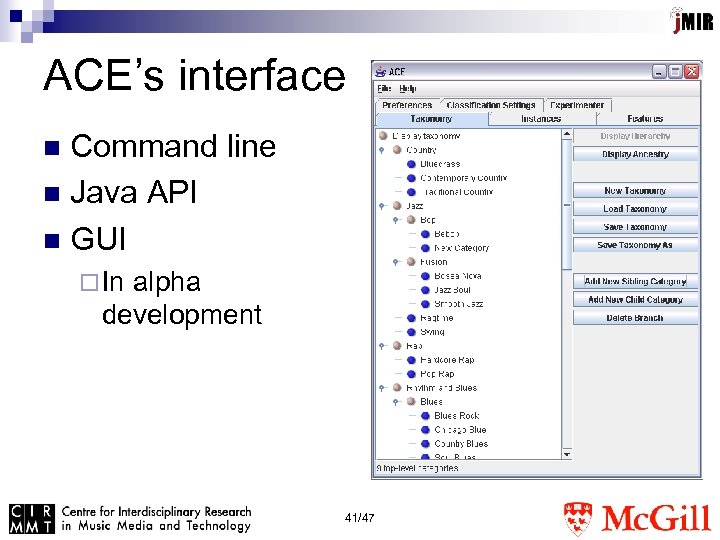

ACE’s interface

- Command line
- Java API
- GUI
  - In alpha development

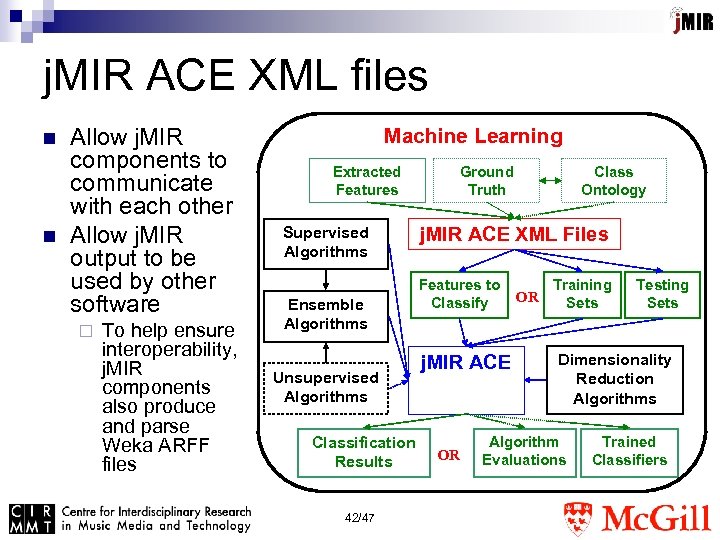

jMIR ACE XML files

- Allow jMIR components to communicate with each other
- Allow jMIR output to be used by other software
  - To help ensure interoperability, jMIR components also produce and parse Weka ARFF files

[Diagram: Machine Learning. Labels: Extracted Features, Ground Truth, Class Ontology, jMIR ACE XML Files, Features to Classify, jMIR ACE, Supervised Algorithms, Ensemble Algorithms, Unsupervised Algorithms, Dimensionality Reduction Algorithms, Training Sets, Testing Sets, Classification Results, Algorithm Evaluations, Trained Classifiers]

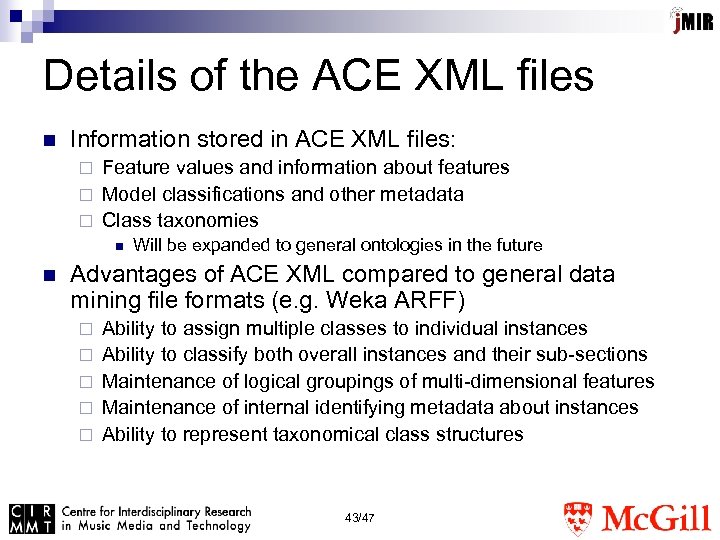

Details of the ACE XML files

- Information stored in ACE XML files:
  - Feature values and information about features
  - Model classifications and other metadata
  - Class taxonomies
    - Will be expanded to general ontologies in the future
- Advantages of ACE XML compared to general data mining file formats (e.g. Weka ARFF)
  - Ability to assign multiple classes to individual instances
  - Ability to classify both overall instances and their sub-sections
  - Maintenance of logical groupings of multi-dimensional features
  - Maintenance of internal identifying metadata about instances
  - Ability to represent taxonomical class structures
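
To make the listed advantages more concrete, the sketch below models the kind of information involved and shows what is lost when it is flattened into a single-table row. It is an illustrative data model only and does not reproduce the actual ACE XML schema. The class names `Instance` and `Section`, the `TAXONOMY` mapping, the feature names, and the flattening rule in `to_flat_row` are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Section:
    """A sub-section of an instance (e.g. a time window) with its own labels."""
    start: float
    stop: float
    classes: list = field(default_factory=list)


@dataclass
class Instance:
    identifier: str                                # identifying metadata
    classes: list = field(default_factory=list)    # more than one class allowed
    features: dict = field(default_factory=dict)   # feature name -> list of values
    sections: list = field(default_factory=list)   # labelled sub-sections


# Hypothetical taxonomy: child class -> parent class.
TAXONOMY = {"Bebop": "Jazz", "Swing": "Jazz", "Jazz": None, "Blues": None}


def ancestors(label, taxonomy):
    """Return the chain of parent classes above `label`, nearest first."""
    chain = []
    parent = taxonomy.get(label)
    while parent is not None:
        chain.append(parent)
        parent = taxonomy.get(parent)
    return chain


def to_flat_row(instance):
    """Flatten to a single-table row: one value per column, one class only."""
    row = {}
    for name, values in instance.features.items():
        for i, value in enumerate(values):
            row[f"{name}_{i}"] = value  # the grouping of dimensions is lost
    # Only the first class survives; sections and the identifier are dropped.
    row["class"] = instance.classes[0] if instance.classes else "?"
    return row


track = Instance(
    identifier="track_001",
    classes=["Bebop", "Blues"],
    features={"mfcc": [1.5, -0.3, 0.7], "tempo": [172.0]},
    sections=[Section(0.0, 30.0, ["Blues"]), Section(30.0, 60.0, ["Bebop"])],
)

if __name__ == "__main__":
    print(to_flat_row(track))
    print(ancestors("Bebop", TAXONOMY))
```

In the flattened row, the second class, the per-section labels, the identifier, and the link between the three `mfcc` dimensions are no longer recoverable, which is the gap the slide attributes to general data mining formats.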

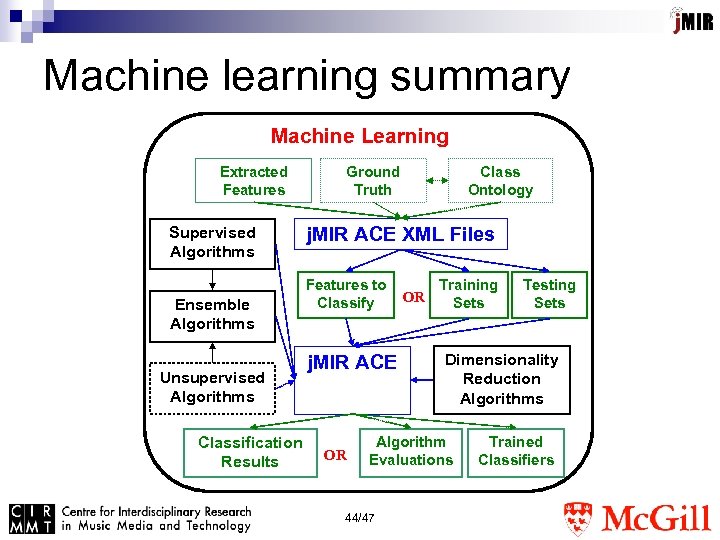

Machine learning summary

[Diagram: Machine Learning. Labels: Extracted Features, Ground Truth, Class Ontology, jMIR ACE XML Files, Features to Classify, jMIR ACE, Supervised Algorithms, Ensemble Algorithms, Unsupervised Algorithms, Dimensionality Reduction Algorithms, Training Sets, Testing Sets, Classification Results, Algorithm Evaluations, Trained Classifiers]

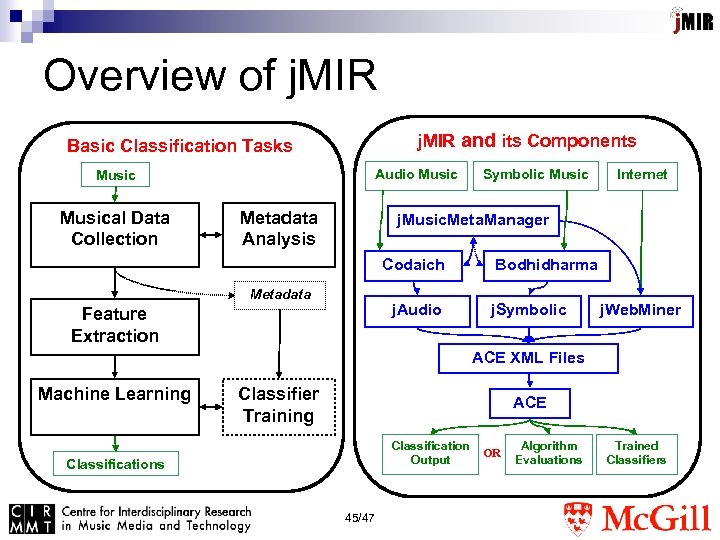

Overview of jMIR and its Components

[Diagram labels. Basic Classification Tasks: Musical Data Collection, Feature Extraction, Machine Learning, Classifications. Data types: Audio, Symbolic Music, Internet, Metadata. Components: jMusicMetaManager (Metadata Analysis), Codaich, Bodhidharma, jAudio, jSymbolic, jWebMiner, ACE XML Files, ACE (Classifier Training, Classification). Output: Classifications, Algorithm Evaluations, Trained Classifiers]

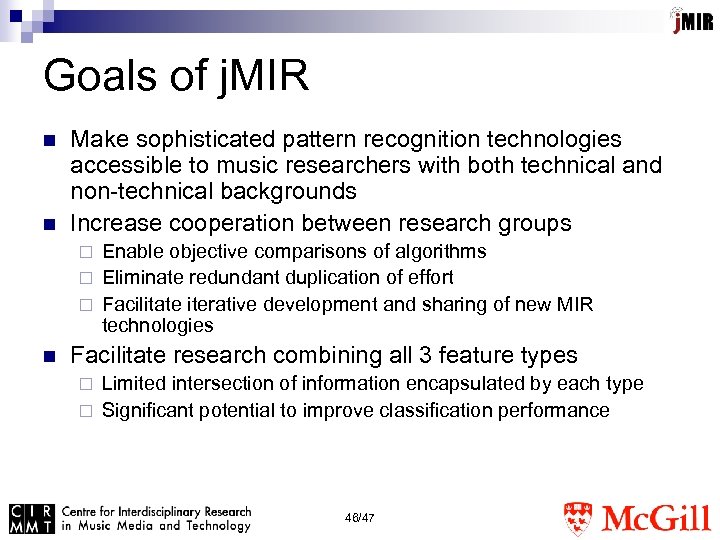

Goals of jMIR

- Make sophisticated pattern recognition technologies accessible to music researchers with both technical and non-technical backgrounds
- Increase cooperation between research groups
  - Enable objective comparisons of algorithms
  - Eliminate redundant duplication of effort
  - Facilitate iterative development and sharing of new MIR technologies
- Facilitate research combining all 3 feature types
  - Limited intersection of information encapsulated by each type
  - Significant potential to improve classification performance

Contact information

- Software available at:
  - http://sourceforge.net/projects/jmir
- E-mail:
  - cory.mckay@mail.mcgill.ca
