
- Number of slides: 28

Automatic Acquisition of Synonyms Using the Web as a Corpus

3rd Annual South East European Doctoral Student Conference (DSC 2008): Infusing Knowledge and Research in South East Europe

Svetlin Nakov, Sofia University "St. Kliment Ohridski", nakov@fmi-uni-sofia.bg

DSC 2008 – 26-27 June 2008, Thessaloniki, Greece

Introduction

- We want to automatically extract all pairs of synonyms in a given text
- Our goal:
  - Design an algorithm that can distinguish between synonyms and non-synonyms
- Our approach:
  - Measure semantic similarity using the Web as a corpus
  - Synonyms are expected to have higher semantic similarity than non-synonyms

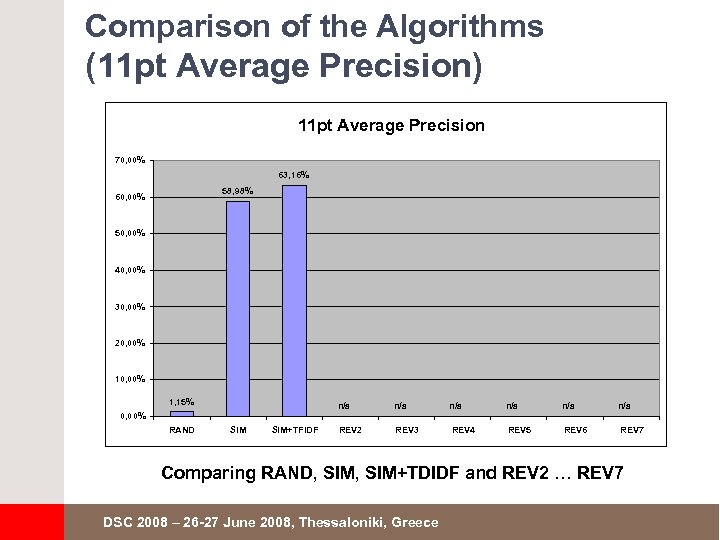

The Paper in One Slide

- Measuring semantic similarity
  - Analyze the words' local contexts
  - Use the Web as a corpus
  - Similar contexts imply similar words
  - TF.IDF weighting and reverse context lookup
- Evaluation
  - 94 words (Russian fine arts terminology)
  - 50 synonym pairs to be found
  - 11-pt average precision: 63.16%

Contextual Web Similarity

- What is local context?
  - A few words before and after the target word
  - Example: "Same day delivery of fresh flowers, roses, and unique gift baskets from our online boutique. Flower delivery online by local florists for birthday flowers."
- The words in the local context of a given word are semantically related to it
- Stop words (prepositions, pronouns, conjunctions, etc.) need to be excluded
  - Stop words appear in all contexts
- A sufficiently large corpus is needed
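
A minimal sketch of local context extraction, assuming a simple regex tokenizer and a tiny illustrative stop-word list (both are assumptions; the slides do not specify them). Stop words are dropped before the window is applied, so the window counts content words only. Lemmatization is omitted here, so "flower" and "flowers" are treated as different words.

```python
import re
from collections import Counter

# Hypothetical, tiny stop-word list for illustration only.
STOP_WORDS = {"of", "and", "from", "our", "by", "for", "the", "a", "in", "to"}

def local_context(text, target, window=3, stop_words=STOP_WORDS):
    """Count the words found within `window` positions of each `target` occurrence."""
    # Lowercase, split into word tokens, and drop stop words.
    tokens = [t for t in re.findall(r"\w+", text.lower()) if t not in stop_words]
    counts = Counter()
    for i, tok in enumerate(tokens):
        if tok != target:
            continue
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            # Skip the target itself (and repeated occurrences of it).
            if tokens[j] != target:
                counts[tokens[j]] += 1
    return counts

snippet = ("Same day delivery of fresh flowers, roses, and unique gift baskets "
           "from our online boutique. Flower delivery online by local florists "
           "for birthday flowers.")
ctx = local_context(snippet, "flowers")
```

Here `ctx` maps each context word of "flowers" to its frequency in the snippet; in the approach described on the slide, such counts would be accumulated over many Web snippets.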

Contextual Web Similarity

- Web as a corpus
  - The Web can be used as a corpus to extract the local context of a given word
  - The Web is the largest available corpus
  - Contains large corpora in any language
  - Searching for a word in Google can return up to 1,000 text snippets
  - The target word is returned along with its local context: a few words before and after it
  - The target language can be specified

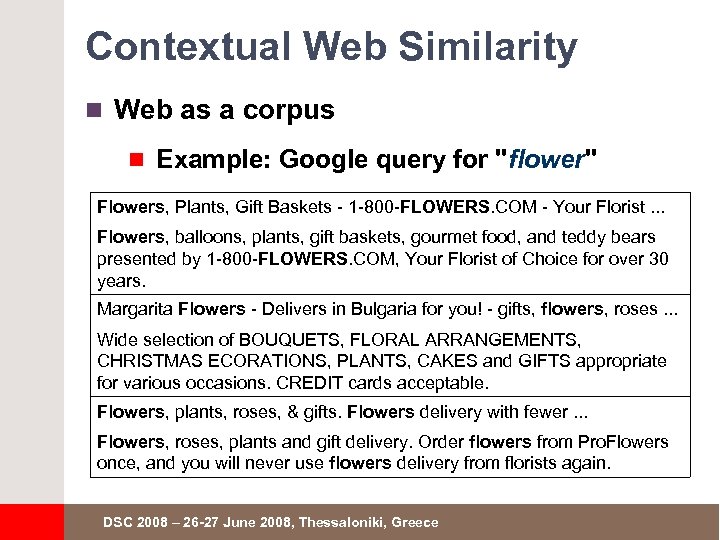

Contextual Web Similarity

- Web as a corpus
  - Example: Google query for "flower"

- Flowers, Plants, Gift Baskets - 1-800-FLOWERS.COM - Your Florist...
  - Flowers, balloons, plants, gift baskets, gourmet food, and teddy bears presented by 1-800-FLOWERS.COM, Your Florist of Choice for over 30 years.
- Margarita Flowers - Delivers in Bulgaria for you! - gifts, flowers, roses...
  - Wide selection of BOUQUETS, FLORAL ARRANGEMENTS, CHRISTMAS DECORATIONS, PLANTS, CAKES and GIFTS appropriate for various occasions. CREDIT cards acceptable.
- Flowers, plants, roses, & gifts. Flowers delivery with fewer...
  - Flowers, roses, plants and gift delivery. Order flowers from ProFlowers once, and you will never use flowers delivery from florists again.

Contextual Web Similarity

- Measuring semantic similarity
  - For two given words, their local contexts are extracted from the Web
    - Each context is a set of words and their frequencies
  - Semantic similarity is measured as the similarity between these local contexts
  - Local contexts are represented as frequency vectors over a given set of words
  - The cosine between the frequency vectors in Euclidean space is calculated
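
The cosine measure can be sketched as follows, assuming each local context is stored as a sparse dictionary from context word to frequency (the storage format is an assumption). The counts in the usage lines are small made-up values for illustration, not measured data.

```python
import math

def cosine(v1, v2):
    """Cosine of the angle between two sparse frequency vectors (dicts)."""
    # Only words present in both vectors contribute to the dot product.
    dot = sum(freq * v2.get(word, 0) for word, freq in v1.items())
    norm1 = math.sqrt(sum(f * f for f in v1.values()))
    norm2 = math.sqrt(sum(f * f for f in v2.values()))
    if norm1 == 0 or norm2 == 0:
        return 0.0  # an empty context is treated as similar to nothing
    return dot / (norm1 * norm2)

# Illustrative (made-up) context counts for two words.
flower = {"fresh": 3, "order": 2, "rose": 5}
computer = {"pc": 4, "order": 1, "technology": 6}
similarity = cosine(flower, computer)
```

Since all frequencies are non-negative, the result lies between 0 (no shared context words) and 1 (proportional vectors).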

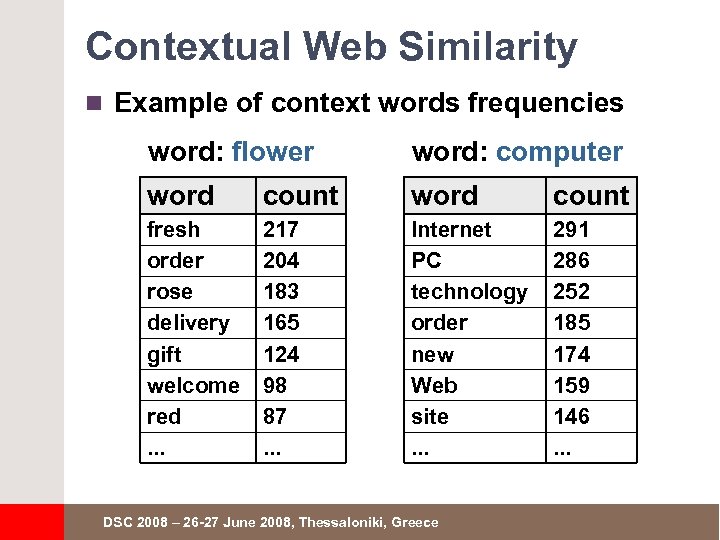

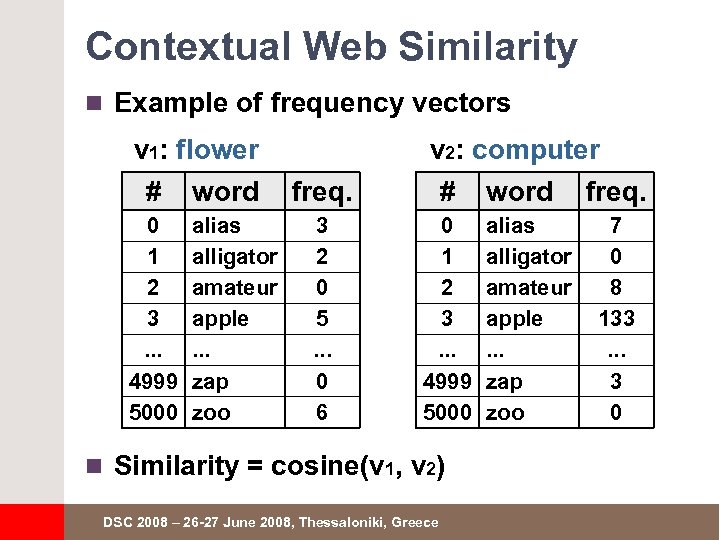

Contextual Web Similarity

- Example of context word frequencies

| word: flower | count | word: computer | count |
|---|---|---|---|
| fresh | 217 | Internet | 291 |
| order | 204 | PC | 286 |
| rose | 183 | technology | 252 |
| delivery | 165 | order | 185 |
| gift | 124 | new | 174 |
| welcome | 98 | Web | 159 |
| red | 87 | site | 146 |
| ... | ... | ... | ... |

Contextual Web Similarity

- Example of frequency vectors

| # | word | freq. in v1 (flower) | freq. in v2 (computer) |
|---|---|---|---|
| 0 | alias | 3 | 7 |
| 1 | alligator | 2 | 0 |
| 2 | amateur | 0 | 8 |
| 3 | apple | 5 | 133 |
| ... | ... | ... | ... |
| 4999 | zap | 0 | 3 |
| 5000 | zoo | 6 | 0 |

- Similarity = cosine(v1, v2)

TF.IDF Weighting

- TF.IDF (term frequency times inverse document frequency)
  - A statistical measure used in information retrieval
  - Shows how important a certain word is for a given document in a set of documents
  - Increases proportionally to the number of the word's occurrences in the document
  - Decreases as the number of documents containing the word grows
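
A minimal sketch of TF.IDF weighting, assuming the common form tf × log(N / df) and treating the local context of each target word as one "document". Both choices are assumptions; the slides do not give the exact formula used.

```python
import math

def tf_idf(context_counts):
    """Re-weight context frequencies with TF.IDF.

    context_counts: {target_word: {context_word: frequency}}
    Returns the same structure with TF.IDF weights instead of raw counts.
    """
    n_docs = len(context_counts)
    # Document frequency: in how many contexts does each word occur?
    df = {}
    for counts in context_counts.values():
        for word in counts:
            df[word] = df.get(word, 0) + 1
    return {
        target: {w: tf * math.log(n_docs / df[w]) for w, tf in counts.items()}
        for target, counts in context_counts.items()
    }

# Illustrative (made-up) counts: "order" occurs in every context.
contexts = {
    "flower": {"fresh": 3, "order": 2},
    "computer": {"pc": 4, "order": 1},
}
weights = tf_idf(contexts)
```

With this form, a word that occurs in every context (here "order") receives weight 0, which is the intended effect for words that do not discriminate between target words.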

Reverse Context Lookup

- The local context extracted from the Web can contain arbitrary parasite words such as "online", "home", "search", "click", etc.
  - Internet terms appear in any Web page
  - Such words are not likely to be associated with the target word
- Example (for the word "flowers")
  - "send flowers online", "flowers here", "order flowers here"
  - Will the word "flowers" appear in the local context of "send", "online" and "here"?

Reverse Context Lookup

- If two words are semantically related, then both of them should appear in the local contexts of each other
- Let #(x, y) = number of occurrences of x in the local context of y
- For any word w and a word wc from its local context, we define their strength of semantic association p(w, wc) as follows:
  - p(w, wc) = min{ #(w, wc), #(wc, w) }
- We use p(w, wc) as vector coordinates
- We introduce a minimal occurrence threshold (e.g. 5) to filter out words appearing just by chance
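
The definition of p(w, wc) can be sketched as below. The nested-dictionary layout `contexts[y][x] = #(x, y)` and the choice to apply the threshold to p itself are assumptions made for illustration; the slides do not state where exactly the threshold is applied. The counts are made up.

```python
def reverse_context_vector(word, contexts, threshold=5):
    """Build the vector of p(word, wc) = min(#(word, wc), #(wc, word)).

    contexts[y][x] holds #(x, y): occurrences of x in the local context of y.
    Coordinates below `threshold` are dropped (assumed filtering rule).
    """
    vector = {}
    for wc, forward in contexts.get(word, {}).items():
        # forward  = #(wc, word): wc seen in the context of word
        # backward = #(word, wc): word seen in the context of wc
        backward = contexts.get(wc, {}).get(word, 0)
        p = min(forward, backward)
        if p >= threshold:
            vector[wc] = p
    return vector

# Illustrative (made-up) counts.
contexts = {
    "flowers": {"roses": 12, "online": 40, "send": 9},
    "roses": {"flowers": 8},
    "online": {"flowers": 2},
    "send": {},
}
vector = reverse_context_vector("flowers", contexts)
```

In this toy example "online" is frequent in the context of "flowers", but "flowers" is rare in the context of "online", so the coordinate is filtered out and only "roses" remains.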

Data Set

- We use a list of 94 Russian words:
  - Terms extracted from texts on the subject of fine arts
  - Limited to nouns only
- The data set: абрис, адгезия, алмаз, алтарь, амулет, асфальт, беломорит, битум, бородки, ваятель, вермильон, ..., шлифовка, штихель, экспрессивность, экспрессия, эстетизм, эстетство
- There are 50 synonym pairs among these words
  - We expect our algorithms to find them

Experiments

- We tested several modifications of our contextual Web similarity algorithm
  - Basic algorithm (without modifications)
  - TF.IDF weighting
  - Reverse context lookup with different frequency thresholds

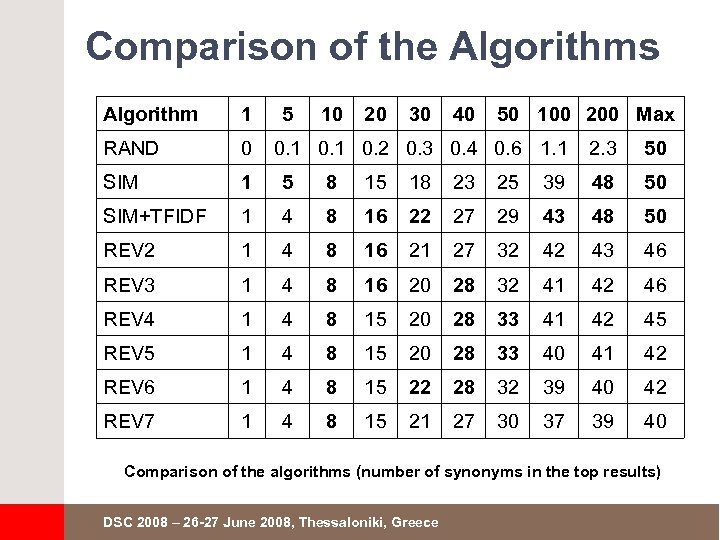

Experiments

- RAND: random ordering of all the pairs
- SIM: the basic algorithm for extraction of semantic similarity from the Web
  - Context size of 3 words
  - Without analyzing the reverse context
  - With lemmatization
- SIM+TFIDF: modification of the SIM algorithm with TF.IDF weighting
- REV2, REV3, REV4, REV5, REV6, REV7: the SIM algorithm + "reverse context lookup" with frequency thresholds of 2, 3, 4, 5, 6 and 7

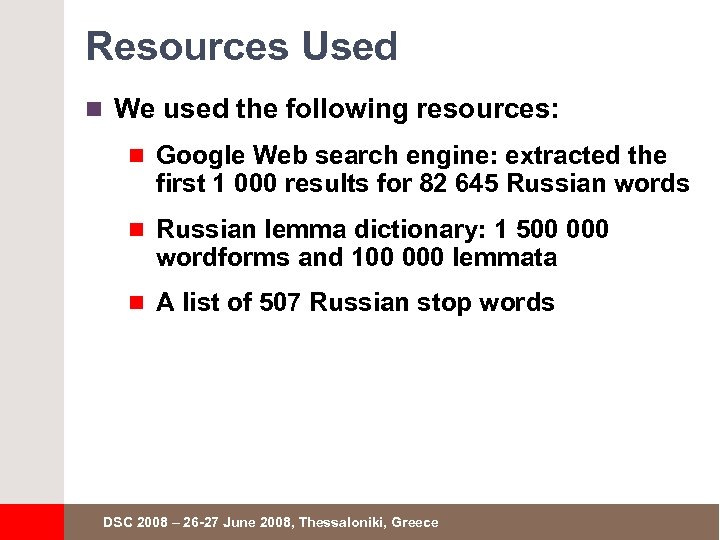

Resources Used

- We used the following resources:
  - Google Web search engine: extracted the first 1,000 results for 82,645 Russian words
  - Russian lemma dictionary: 1,500,000 wordforms and 100,000 lemmata
  - A list of 507 Russian stop words

Evaluation

- Our algorithms rank all pairs of words according to their semantic similarity
  - We expect the 50 synonym pairs to be at the top of the result list
  - We count how many synonyms are found in the top N results (e.g. top 5, top 10, etc.)
- We measure precision and recall
- We measure 11-pt average precision to evaluate the results
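
A sketch of 11-pt average precision, assuming the usual interpolated definition from information retrieval: the mean, over recall levels 0.0, 0.1, ..., 1.0, of the best precision achieved at any rank whose recall is at least that level. The slides do not spell out the variant used, so this is an assumption.

```python
def eleven_point_average_precision(ranked_relevance, total_relevant):
    """Mean of interpolated precision at recall levels 0.0, 0.1, ..., 1.0.

    ranked_relevance: relevance judgements (truthy = synonym pair),
                      ordered from the most to the least similar pair.
    total_relevant:   number of synonym pairs that should be found.
    """
    hits = 0
    points = []  # (hits so far, precision at this rank)
    for rank, is_relevant in enumerate(ranked_relevance, start=1):
        hits += bool(is_relevant)
        points.append((hits, hits / rank))
    total = 0.0
    for level in range(11):  # level / 10 is the recall level
        # Best precision at any rank whose recall reaches this level;
        # integer comparison avoids floating-point rounding issues.
        reached = [p for h, p in points if h * 10 >= level * total_relevant]
        total += max(reached, default=0.0)
    return total / 11

# Toy ranking: synonym, non-synonym, synonym; two synonym pairs in total.
score = eleven_point_average_precision([True, False, True], total_relevant=2)
```

A ranking that places all synonym pairs first scores 1.0, and recall levels that are never reached contribute 0 to the average.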

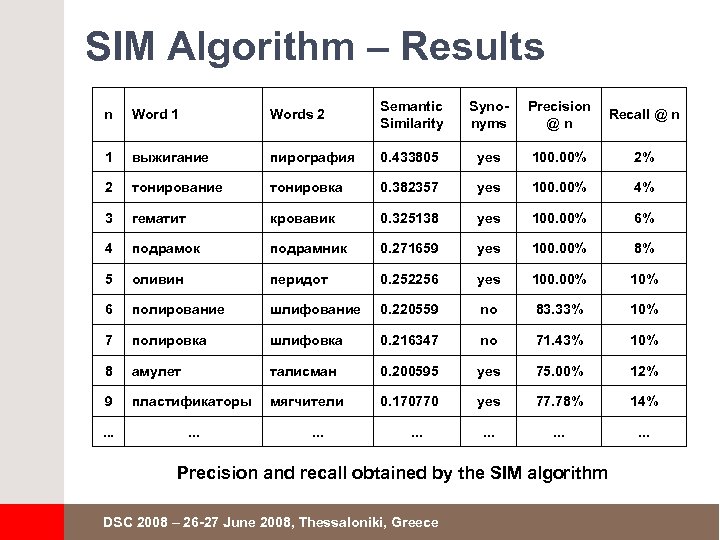

SIM Algorithm – Results

| n | Word 1 | Word 2 | Semantic similarity | Synonyms | Precision@n | Recall@n |
|---|---|---|---|---|---|---|
| 1 | выжигание | пирография | 0.433805 | yes | 100.00% | 2% |
| 2 | тонирование | тонировка | 0.382357 | yes | 100.00% | 4% |
| 3 | гематит | кровавик | 0.325138 | yes | 100.00% | 6% |
| 4 | подрамок | подрамник | 0.271659 | yes | 100.00% | 8% |
| 5 | оливин | перидот | 0.252256 | yes | 100.00% | 10% |
| 6 | полирование | шлифование | 0.220559 | no | 83.33% | 10% |
| 7 | полировка | шлифовка | 0.216347 | no | 71.43% | 10% |
| 8 | амулет | талисман | 0.200595 | yes | 75.00% | 12% |
| 9 | пластификаторы | мягчители | 0.170770 | yes | 77.78% | 14% |
| ... | ... | ... | ... | ... | ... | ... |

Precision and recall obtained by the SIM algorithm
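
The precision and recall columns follow directly from the yes/no judgements and the total of 50 synonym pairs. A short sketch, with function and variable names chosen here for illustration:

```python
def precision_recall_at_n(ranked_relevance, total_relevant):
    """Return (n, precision@n, recall@n) for every prefix of the ranking."""
    rows, hits = [], 0
    for n, is_synonym in enumerate(ranked_relevance, start=1):
        hits += bool(is_synonym)
        rows.append((n, hits / n, hits / total_relevant))
    return rows

# Judgements for the first nine pairs ranked by the SIM algorithm.
judgements = [True, True, True, True, True, False, False, True, True]
rows = precision_recall_at_n(judgements, total_relevant=50)
for n, precision, recall in rows:
    print(f"{n}: precision={precision:.2%} recall={recall:.0%}")
```

For example, at n = 6 five of the six top pairs are synonyms, giving a precision of 5/6 (83.33%) and a recall of 5/50 (10%).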

Comparison of the Algorithms

| Algorithm | 1 | 5 | 10 | 20 | 30 | 40 | 50 | 100 | 200 | Max |
|---|---|---|---|---|---|---|---|---|---|---|
| RAND | 0 | | | 0.2 | 0.3 | 0.4 | 0.6 | 1.1 | 2.3 | 50 |
| SIM | 1 | 5 | 8 | 15 | 18 | 23 | 25 | 39 | 48 | 50 |
| SIM+TFIDF | 1 | 4 | 8 | 16 | 22 | 27 | 29 | 43 | 48 | 50 |
| REV2 | 1 | 4 | 8 | 16 | 21 | 27 | 32 | 42 | 43 | 46 |
| REV3 | 1 | 4 | 8 | 16 | 20 | 28 | 32 | 41 | 42 | 46 |
| REV4 | 1 | 4 | 8 | 15 | 20 | 28 | 33 | 41 | 42 | 45 |
| REV5 | 1 | 4 | 8 | 15 | 20 | 28 | 33 | 40 | 41 | 42 |
| REV6 | 1 | 4 | 8 | 15 | 22 | 28 | 32 | 39 | 40 | 42 |
| REV7 | 1 | 4 | 8 | 15 | 21 | 27 | 30 | 37 | 39 | 40 |

Comparison of the algorithms (number of synonyms in the top results)

Comparison of the Algorithms (11-pt Average Precision)

[Bar chart. Y-axis: 11-pt average precision, 0% to 70%. X-axis: RAND, SIM+TFIDF, REV2 ... REV7. Data labels: 1.15%, 58.98%, 63.16%; the others are n/a.]

Comparing RAND, SIM+TFIDF and REV2 ... REV7

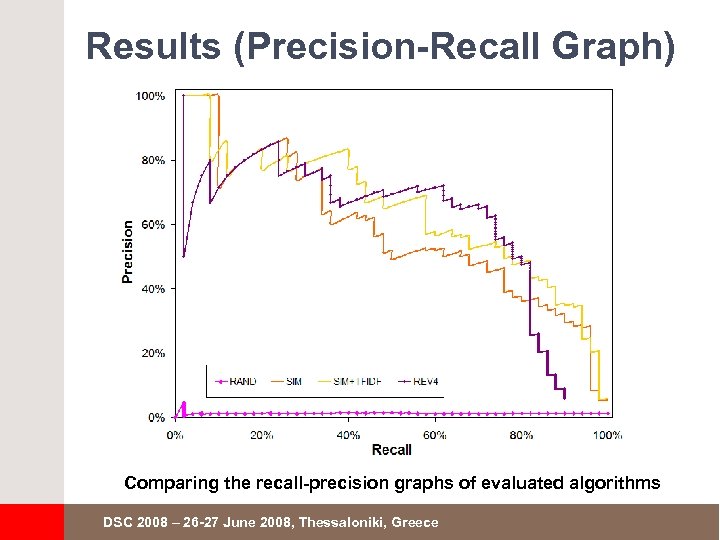

Results (Precision-Recall Graph)

Comparing the recall-precision graphs of the evaluated algorithms

Discussion

- Our approach is original because it:
  - Measures semantic similarity automatically
  - Uses the Web as a corpus
    - Does not rely on any preexisting corpora
    - Does not require semantic resources like WordNet and EuroWordNet
  - Works for any language
    - Tested for Bulgarian and Russian
  - Uses reverse context lookup and TF.IDF
    - Significant improvement in quality

Discussion

- Good accuracy, but still far from 100%
- Known problems of the proposed algorithms:
  - Semantically related words are not always synonyms
    - red – blue
    - wood – pine
    - apple – computer
  - Similar contexts do not always mean similar words (distributional hypothesis)
  - The Web as a corpus introduces noise
  - Google returns only the first 1,000 results

Discussion

- Known problems of the proposed algorithms:
  - Google ranks news portals, travel agencies and retail sites higher than books, articles and forum messages
  - The local context always contains noise
  - The algorithms work with single words and do not capture phrases

Conclusion and Future Work

- Conclusion
  - Our algorithms can distinguish between synonyms and non-synonyms
  - Accuracy should be improved
- Future Work
  - Additional techniques to distinguish between synonyms and semantically related words
  - Improve the semantic similarity measure


Automatic Acquisition of Synonyms Using the Web as a Corpus

Questions?
