80a434680a291cb88908af1c75b50daa.ppt

- Number of slides: 64

Automated Testing of Massively Multi-Player Games: Lessons Learned from The Sims Online

Larry Mellon, Spring 2003

Context: What Is Automated Testing?

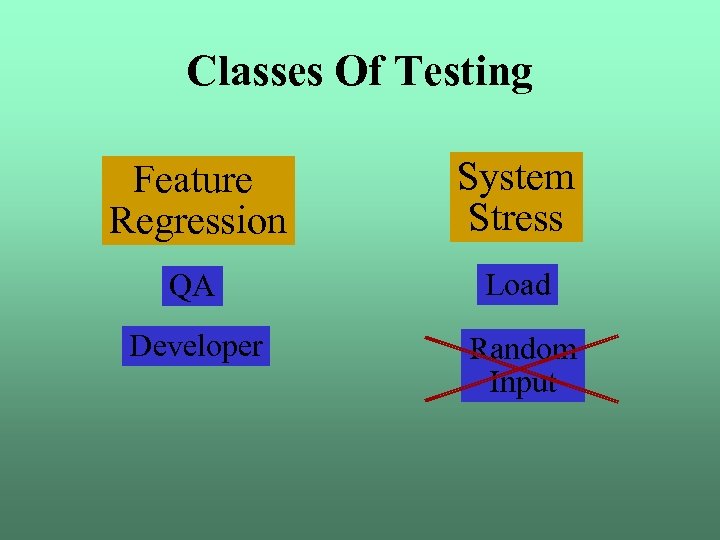

Classes Of Testing

(Diagram labels: Feature Regression; System Stress; Load; Random Input; QA; Developer)

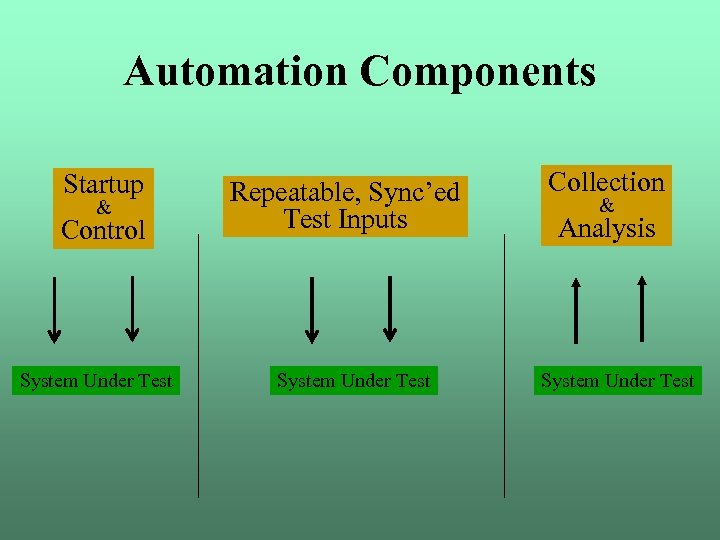

Automation Components

- Startup & Control
- Repeatable, Synchronized Test Inputs
- Collection & Analysis

(Diagram: each component is shown against the System Under Test.)

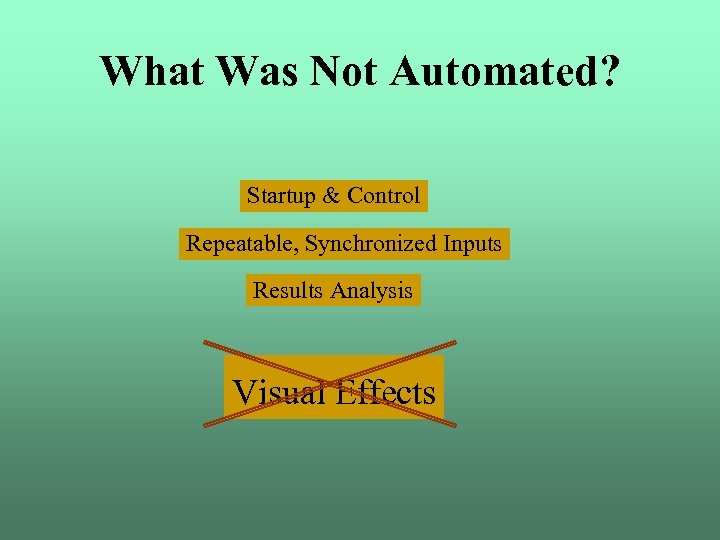

What Was Not Automated?

(Diagram labels: Startup & Control; Repeatable, Synchronized Inputs; Results Analysis; Visual Effects)

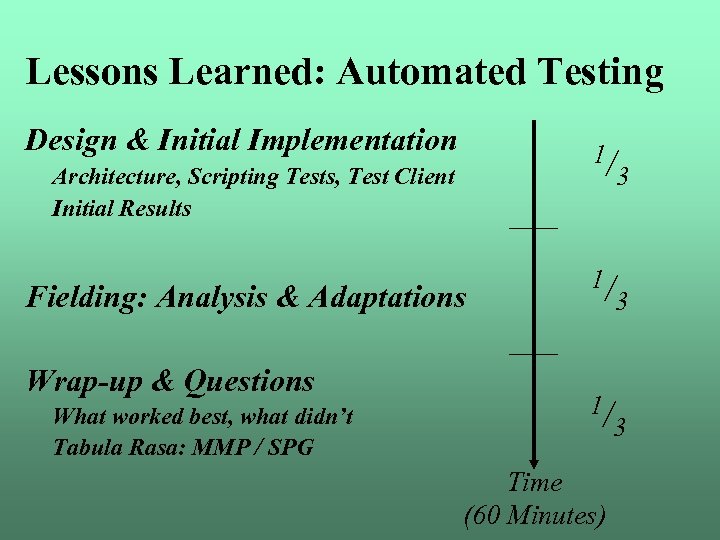

Lessons Learned: Automated Testing (Time: 60 Minutes)

- Design & Initial Implementation (1/3): Architecture, Scripting Tests, Test Client, Initial Results
- Fielding: Analysis & Adaptations (1/3)
- Wrap-up & Questions (1/3): What worked best, what didn’t; Tabula Rasa: MMP / SPG

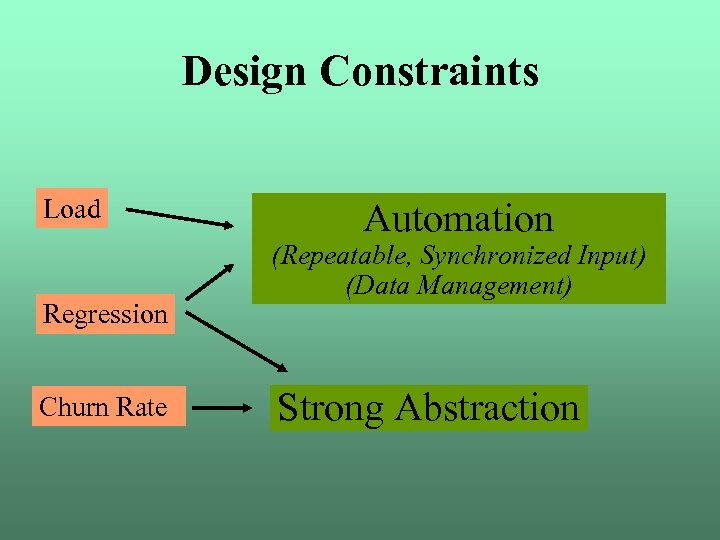

Design Constraints

(Diagram labels: Load; Regression; Churn Rate; Automation (Repeatable, Synchronized Input); (Data Management); Strong Abstraction)

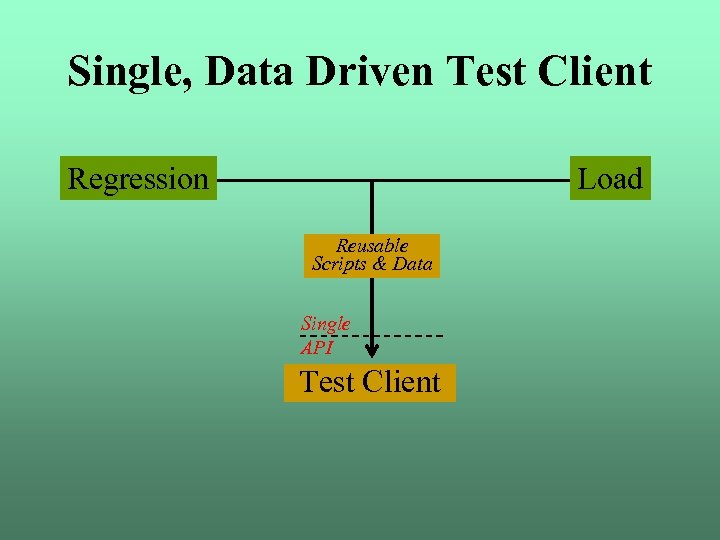

Single, Data-Driven Test Client

(Diagram labels: Load; Regression; Reusable Scripts & Data; Single API; Test Client)

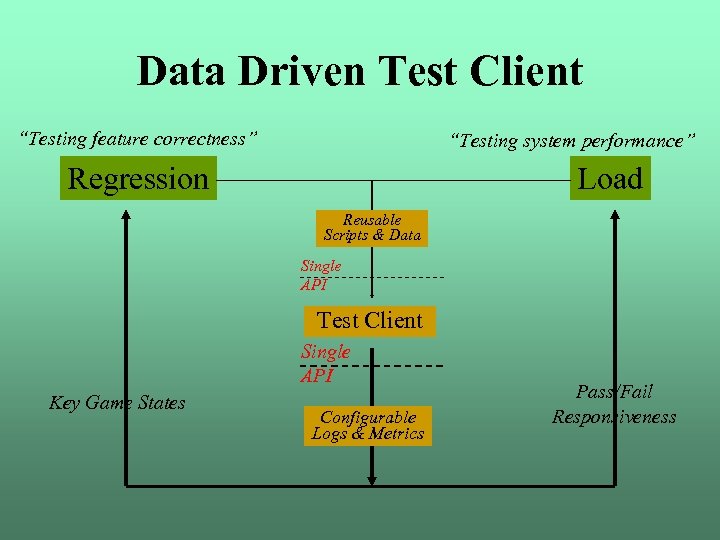

Data-Driven Test Client

(Diagram labels: “Testing feature correctness”; “Testing system performance”; Load; Regression; Reusable Scripts & Data; Single API; Test Client; Key Game States; Configurable Logs & Metrics; Pass/Fail; Responsiveness)

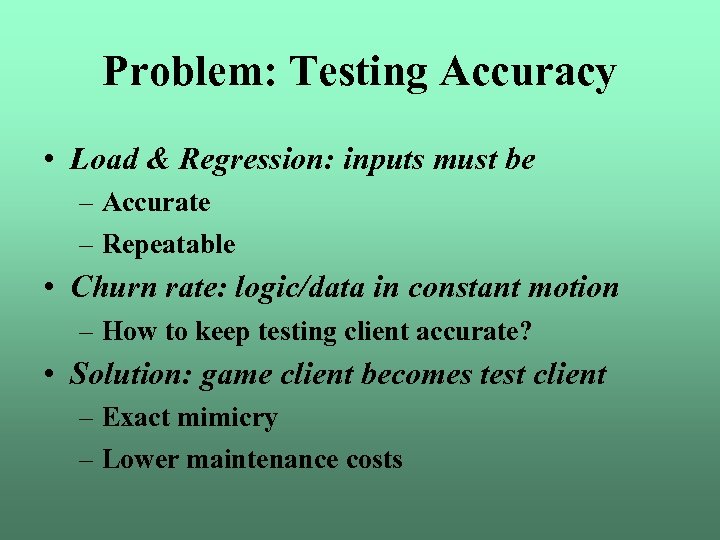

Problem: Testing Accuracy

- Load & Regression: inputs must be
  - Accurate
  - Repeatable
- Churn rate: logic/data in constant motion
  - How to keep the test client accurate?
- Solution: game client becomes test client
  - Exact mimicry
  - Lower maintenance costs

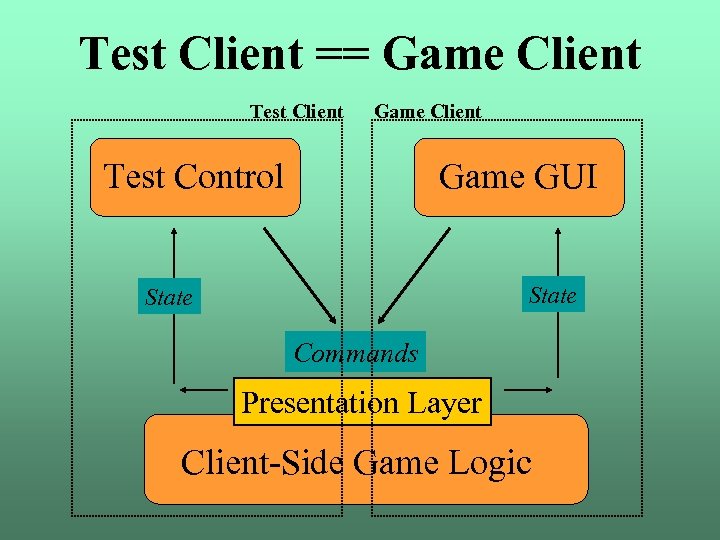

Test Client == Game Client

(Diagram labels: Test Client with Test Control; Game Client with Game GUI; State and Commands exchanged with the Presentation Layer; Client-Side Game Logic)

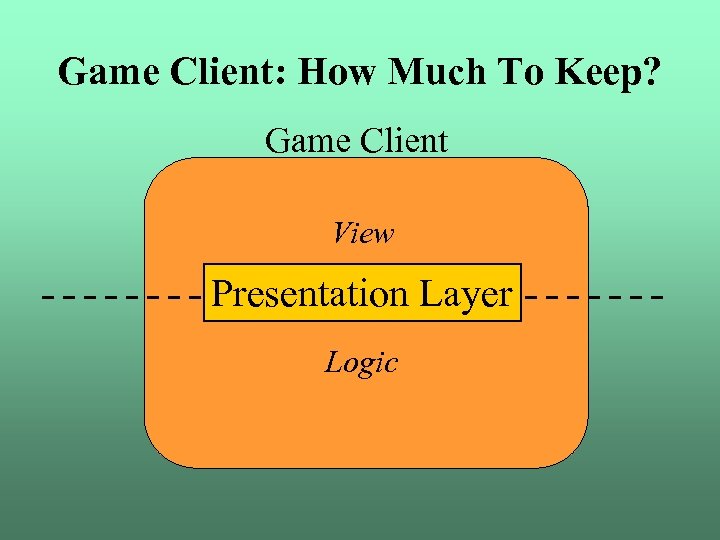

Game Client: How Much To Keep?

(Diagram: the Game Client as View, Presentation Layer and Logic.)

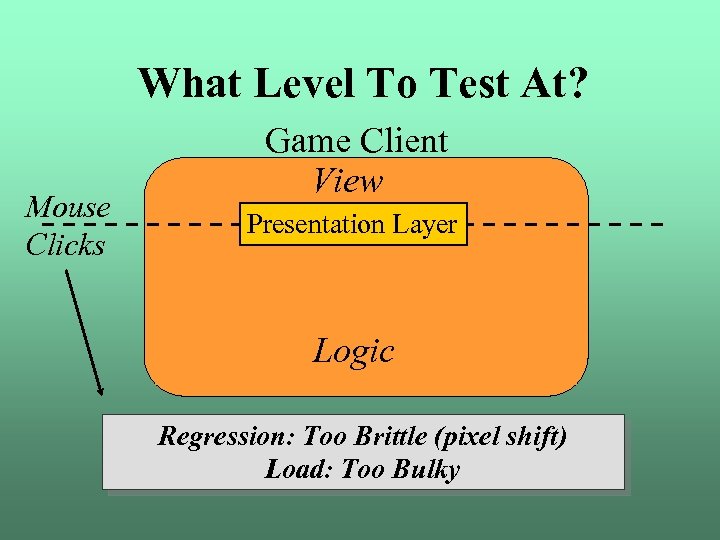

What Level To Test At?

(Diagram labels: Mouse Clicks; Game Client: View, Presentation Layer, Logic)

- Regression: too brittle (pixel shift)
- Load: too bulky

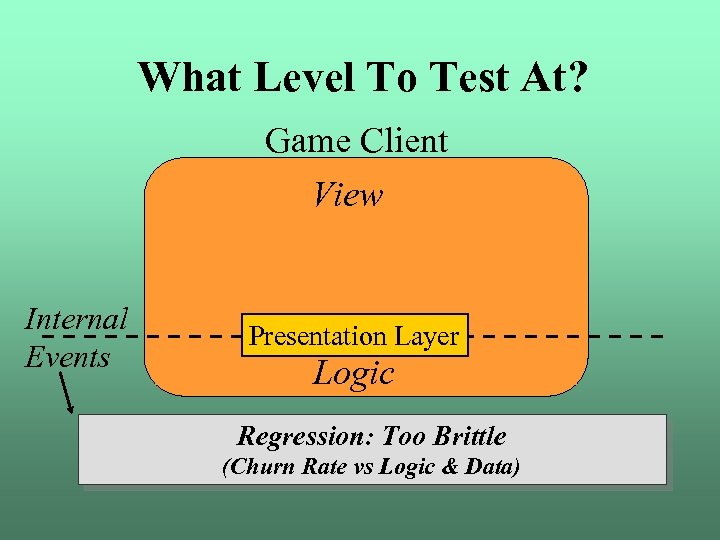

What Level To Test At?

(Diagram labels: Internal Events; Game Client: View, Presentation Layer, Logic)

- Regression: too brittle (churn rate vs. logic & data)

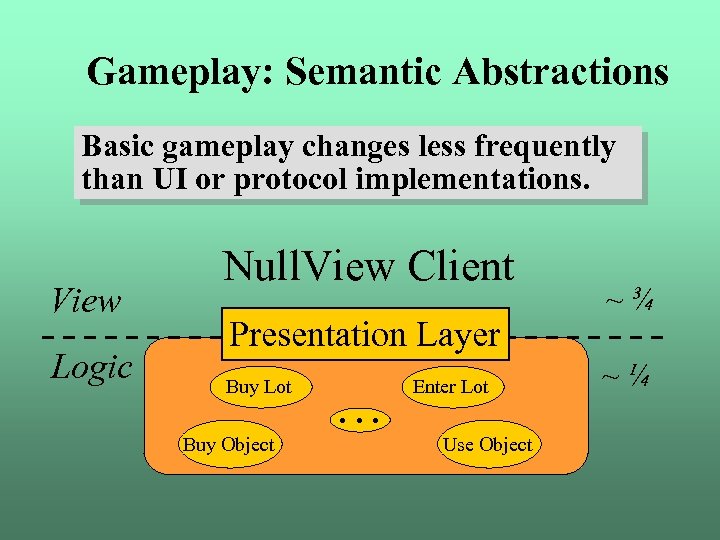

Gameplay: Semantic Abstractions

Basic gameplay changes less frequently than UI or protocol implementations.

(Diagram labels: View; Logic; NullView Client; Presentation Layer; Buy Lot, Buy Object, …, Enter Lot, Use Object; ~¾; ~¼)
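
The semantic layer can be pictured as a small interface of gameplay verbs that both the full client and a NullView client implement. The following is a minimal Python sketch with hypothetical names (`PresentationLayer`, `NullViewClient`, `enter_lot`, `buy_object`, `use_object`), modelled on the primitives above. The dictionary stands in for the real client-side game logic.

```python
from abc import ABC, abstractmethod


class PresentationLayer(ABC):
    """Hypothetical semantic API: gameplay verbs, not mouse clicks or packets."""

    @abstractmethod
    def enter_lot(self, lot_id: str) -> None: ...

    @abstractmethod
    def buy_object(self, name: str, x: int, y: int) -> None: ...

    @abstractmethod
    def use_object(self, name: str, action: str) -> None: ...


class NullViewClient(PresentationLayer):
    """Implements the verbs with no rendering attached.

    The dict below is a stand-in; a real client would forward each call
    to the shared client-side game logic.
    """

    def __init__(self) -> None:
        self.state = {"lot": None, "objects": [], "last_action": None}

    def enter_lot(self, lot_id: str) -> None:
        self.state["lot"] = lot_id

    def buy_object(self, name: str, x: int, y: int) -> None:
        if self.state["lot"] is None:
            raise RuntimeError("must enter a lot before buying objects")
        self.state["objects"].append((name, x, y))

    def use_object(self, name: str, action: str) -> None:
        if not any(obj[0] == name for obj in self.state["objects"]):
            raise RuntimeError(f"no {name} on this lot")
        self.state["last_action"] = (name, action)


client = NullViewClient()
client.enter_lot("lot_1")
client.buy_object("chair", 10, 10)
client.use_object("chair", "sit")
```

Tests written against these verbs do not depend on pixels or packets. A UI or protocol change therefore touches only the class behind the interface, not the test scripts.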

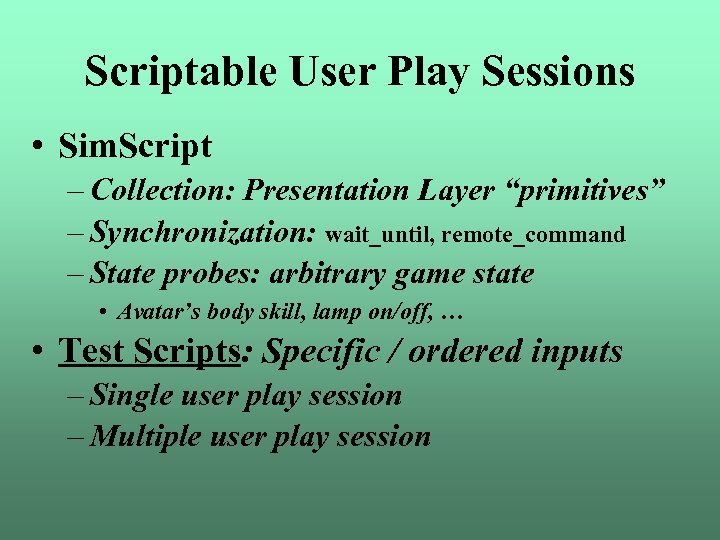

Scriptable User Play Sessions

- SimScript
  - Collection: Presentation Layer “primitives”
  - Synchronization: wait_until, remote_command
  - State probes: arbitrary game state
    - Avatar’s body skill, lamp on/off, …
- Test Scripts: specific / ordered inputs
  - Single user play session
  - Multiple user play session

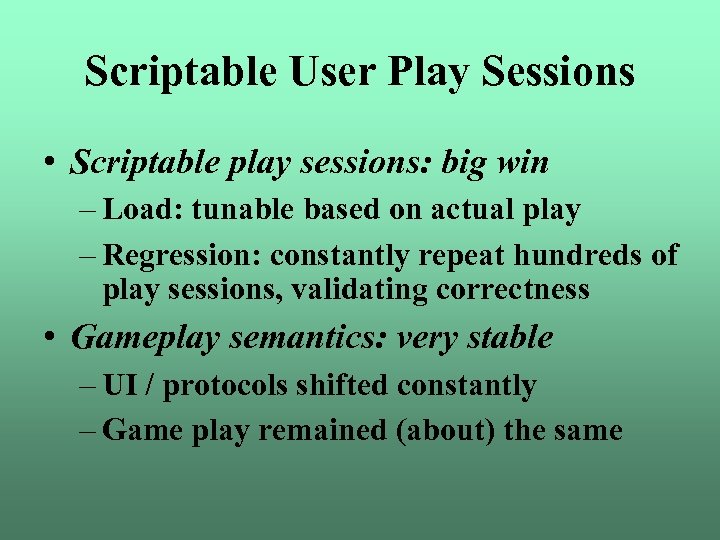

Scriptable User Play Sessions

- Scriptable play sessions: big win
  - Load: tunable based on actual play
  - Regression: constantly repeat hundreds of play sessions, validating correctness
- Gameplay semantics: very stable
  - UI / protocols shifted constantly
  - Gameplay remained (about) the same

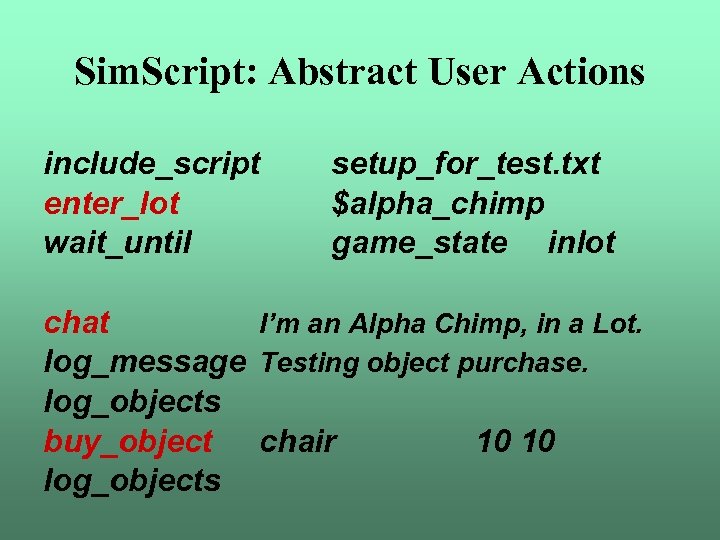

SimScript: Abstract User Actions

- `include_script setup_for_test.txt`
- `enter_lot $alpha_chimp`
- `wait_until game_state inlot`
- `chat I’m an Alpha Chimp, in a Lot.`
- `log_message Testing object purchase.`
- `log_objects`
- `buy_object chair 10 10`
- `log_objects`
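
Each SimScript line is a verb followed by arguments. A line-oriented interpreter that dispatches verbs to presentation-layer calls is therefore enough to run scripts like the one above. The following is a minimal Python sketch, assuming a hypothetical `SimScriptRunner` with a verb-to-handler table and `$name` variable substitution. It illustrates the idea and does not reproduce the TSO implementation.

```python
from typing import Callable, Dict, List

# A handler receives the argument tokens of one script line, after
# $variable substitution. Verb names mirror the SimScript example.
Handler = Callable[[List[str]], None]


class SimScriptRunner:
    def __init__(self, variables: Dict[str, str]) -> None:
        self.variables = variables
        self.handlers: Dict[str, Handler] = {}
        self.log: List[str] = []

    def register(self, verb: str, handler: Handler) -> None:
        self.handlers[verb] = handler

    def _substitute(self, token: str) -> str:
        # "$alpha_chimp" is looked up in the per-test variable table.
        return self.variables[token[1:]] if token.startswith("$") else token

    def run(self, script: str) -> None:
        for lineno, raw in enumerate(script.splitlines(), start=1):
            line = raw.strip()
            if not line or line.startswith("#"):
                continue  # skip blank lines and comments
            verb, *args = line.split()
            if verb not in self.handlers:
                raise ValueError(f"line {lineno}: unknown command {verb!r}")
            self.handlers[verb]([self._substitute(tok) for tok in args])


runner = SimScriptRunner({"alpha_chimp": "lot_42"})
runner.register("enter_lot", lambda args: runner.log.append(f"enter {args[0]}"))
runner.register("chat", lambda args: runner.log.append("chat: " + " ".join(args)))
runner.register(
    "buy_object",
    lambda args: runner.log.append(f"buy {args[0]} at ({args[1]}, {args[2]})"),
)
runner.run("""
# simplified excerpt of the script above
enter_lot $alpha_chimp
chat I'm an Alpha Chimp, in a Lot.
buy_object chair 10 10
""")
```

In this sketch, synchronization verbs such as `wait_until` and `remote_cmd` would be registered as additional handlers.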

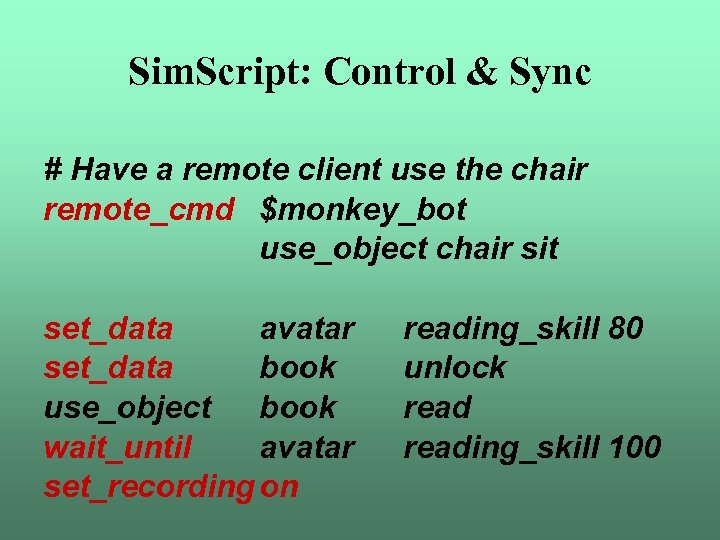

SimScript: Control & Sync

- `# Have a remote client use the chair`
- `remote_cmd $monkey_bot use_object chair sit`
- `set_data avatar reading_skill 80`
- `set_data book unlock`
- `use_object book read`
- `wait_until avatar reading_skill 100`
- `set_recording on`
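
`wait_until` keeps scripted sessions repeatable because the script blocks on observed game state instead of sleeping for a guessed interval. The following is a minimal Python sketch, assuming a polling implementation with a timeout. The names `probe`, `timeout_s`, `poll_s` and `WaitTimeout` are hypothetical. On timeout, the sketch reports the last observed value, which is useful when no view is attached.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class WaitTimeout(Exception):
    """Raised when a wait_until condition never becomes true."""


def wait_until(probe: Callable[[], T], expected: T,
               timeout_s: float = 5.0, poll_s: float = 0.01) -> float:
    """Poll a game-state probe until it returns `expected`.

    Returns the elapsed seconds. On timeout, raises WaitTimeout carrying the
    last observed value, so a headless run still reports what it saw.
    """
    start = time.monotonic()
    last = probe()
    while last != expected:
        if time.monotonic() - start > timeout_s:
            raise WaitTimeout(
                f"expected {expected!r}, last saw {last!r} after {timeout_s}s"
            )
        time.sleep(poll_s)
        last = probe()
    return time.monotonic() - start


# Stand-in for the game: each probe advances the avatar's reading skill by 5,
# as if the avatar kept reading the book between polls.
skill = {"reading": 80}


def probe_reading_skill() -> int:
    skill["reading"] = min(100, skill["reading"] + 5)
    return skill["reading"]


elapsed = wait_until(probe_reading_skill, 100)  # like: wait_until avatar reading_skill 100
```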

Client Implementation

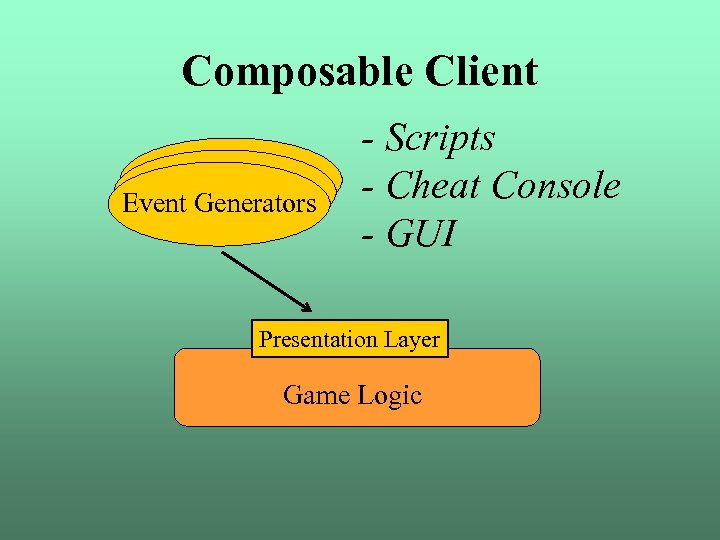

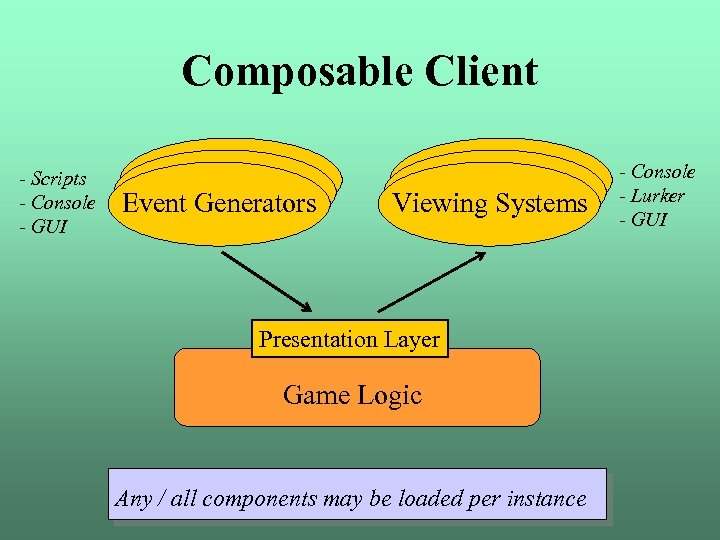

Composable Client

(Diagram: Event Generators (Scripts, Cheat Console, GUI) send events to the Presentation Layer, above the Game Logic.)

Composable Client

(Diagram: Event Generators (Scripts, Console, GUI) and Viewing Systems (Console, Lurker, GUI) attached to the Presentation Layer, above the Game Logic.)

Any / all components may be loaded per instance.
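
Per-instance composition can be expressed as configuration. The following is a minimal Python sketch, assuming a hypothetical `ClientConfig` type and hypothetical example profiles. Only the component names come from the slide. A client with no viewing system attached is the NullView case.

```python
from dataclasses import dataclass, field
from typing import List

# Component names from the slide; any subset may be loaded per instance.
EVENT_GENERATORS = {"scripts", "console", "gui"}
VIEWING_SYSTEMS = {"console", "lurker", "gui"}


@dataclass
class ClientConfig:
    """Hypothetical per-instance composition of a client."""
    generators: List[str] = field(default_factory=list)
    viewers: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        unknown = (set(self.generators) - EVENT_GENERATORS) | (
            set(self.viewers) - VIEWING_SYSTEMS
        )
        if unknown:
            raise ValueError(f"unknown components: {sorted(unknown)}")

    @property
    def is_null_view(self) -> bool:
        # No viewing system attached: a headless (NullView) instance.
        return not self.viewers


# Example profiles (hypothetical): a headless scripted load-test client, and a
# developer client driven by scripts and the console and watched through the GUI.
load_client = ClientConfig(generators=["scripts"])
dev_client = ClientConfig(generators=["scripts", "console"], viewers=["gui"])
```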

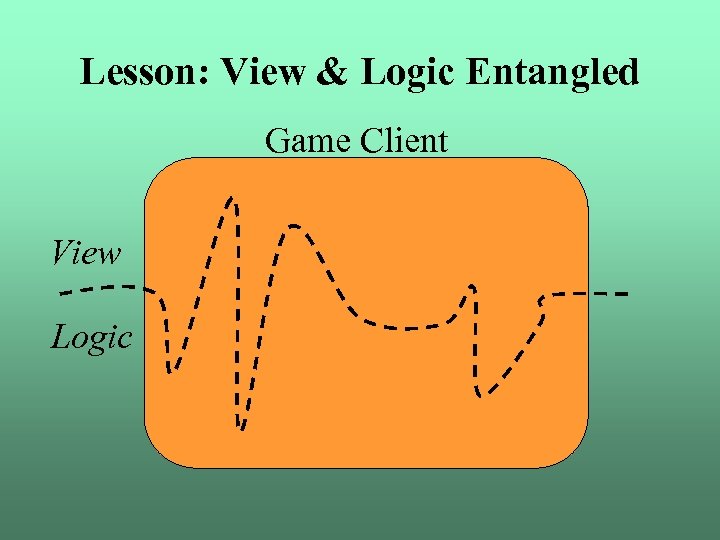

Lesson: View & Logic Entangled

(Diagram labels: Game Client; View; Logic)

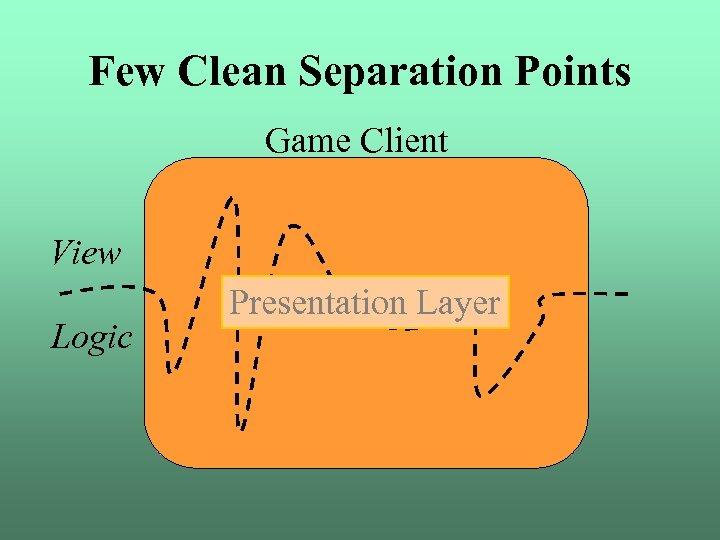

Few Clean Separation Points

(Diagram labels: Game Client; View; Logic; Presentation Layer)

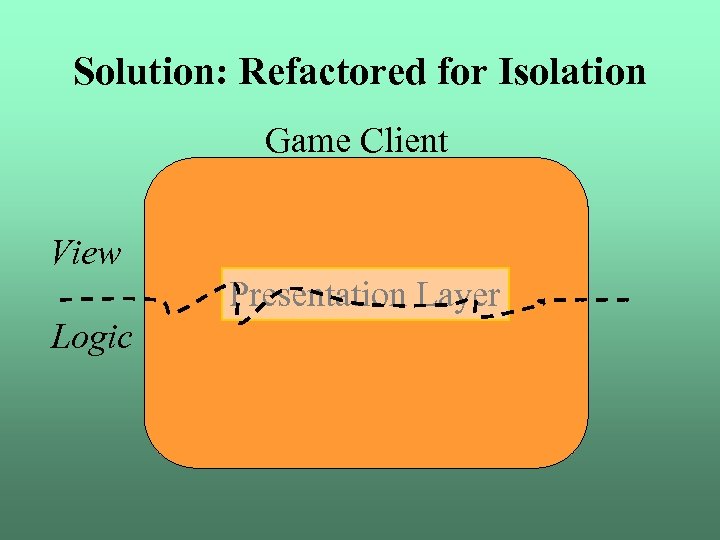

Solution: Refactored for Isolation

(Diagram: the Game Client separated into View, Presentation Layer and Logic.)

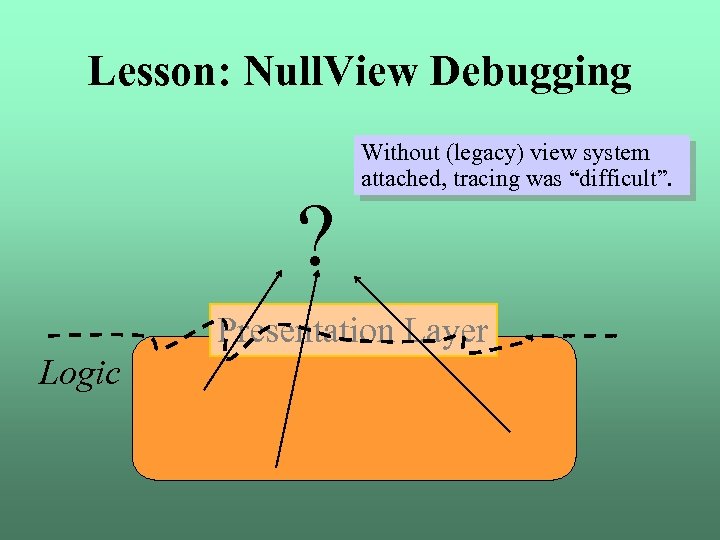

Lesson: NullView Debugging

Without the (legacy) view system attached, tracing was “difficult”.

(Diagram labels: ?; Presentation Layer; Logic)

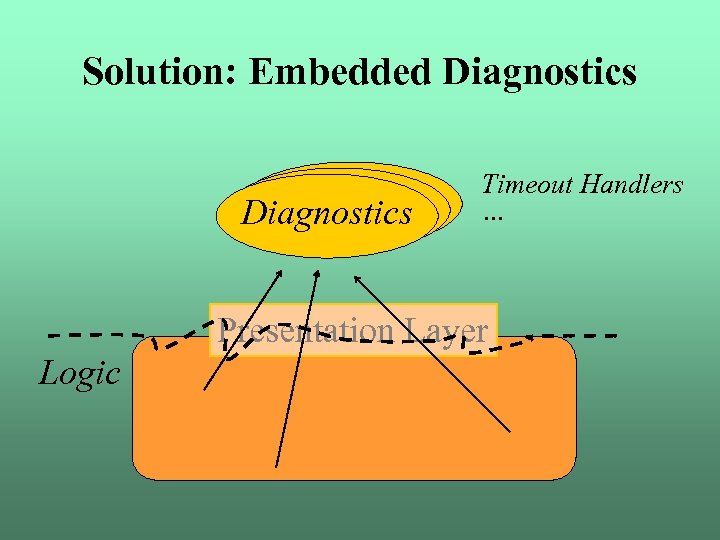

Solution: Embedded Diagnostics

(Diagram labels: Timeout Handlers; …; Presentation Layer; Logic)

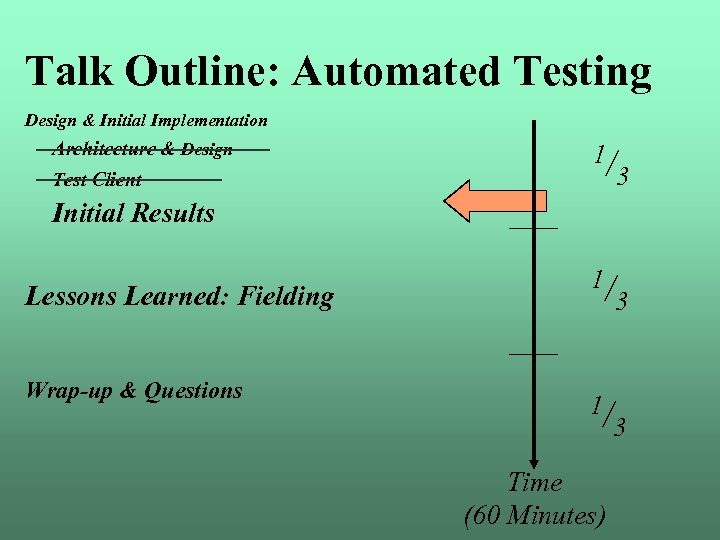

Talk Outline: Automated Testing (Time: 60 Minutes)

- Design & Initial Implementation (1/3): Architecture & Design, Test Client, Initial Results
- Lessons Learned: Fielding (1/3)
- Wrap-up & Questions (1/3)

Mean Time Between Failure

- Random Event, Log & Execute
- Record client lifetime / RAM
- Worked: just not relevant in early stages of development
  - Most failures / leaks found were not high-priority at that time, when weighed against server crashes
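
“Random event, log & execute” can be sketched as a seeded random choice over client actions. Each action is logged before it runs, so a crash always records the action that caused it. The seed plus the log is enough to replay the run. The following Python sketch uses a hypothetical action table and a simulated memory leak. The length of the log serves as the client’s lifetime.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple


def random_session(actions: Dict[str, Callable[[], None]], seed: int,
                   max_steps: int) -> Tuple[List[str], Optional[str]]:
    """Pick actions at random, log each before executing it, stop on failure.

    Returns (log, error); len(log) is the client's lifetime in actions.
    """
    rng = random.Random(seed)
    names = sorted(actions)  # fixed order so the seed alone determines choices
    log: List[str] = []
    for _ in range(max_steps):
        name = rng.choice(names)
        log.append(name)  # log first, so the failing action is recorded
        try:
            actions[name]()
        except Exception as exc:  # a crash ends this client's lifetime
            return log, f"{name}: {exc}"
    return log, None


ram: List[int] = []  # simulated client memory


def leaky_use_object() -> None:
    ram.append(1)  # simulated leak: never freed
    if len(ram) > 20:
        raise MemoryError("simulated client RAM budget exceeded")


actions = {"chat": lambda: None, "use_object": leaky_use_object, "walk": lambda: None}
log, error = random_session(actions, seed=7, max_steps=1000)
```

A rerun with the same seed and a fresh client (`ram.clear()`) reproduces the identical log and failure.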

Monkey Tests

- Constant repetition of simple, isolated actions against servers
- Very useful:
  - Direct observation of servers while under constant, simple input
  - Server processes “aged” all day
- Examples:
  - Login / Logout
  - Enter House / Leave House

QA Test Suite Regression

- High false positive rate & high maintenance
  - New bugs / old bugs
  - Shifting game design
  - “Unknown” failures

Not helping in day-to-day work.

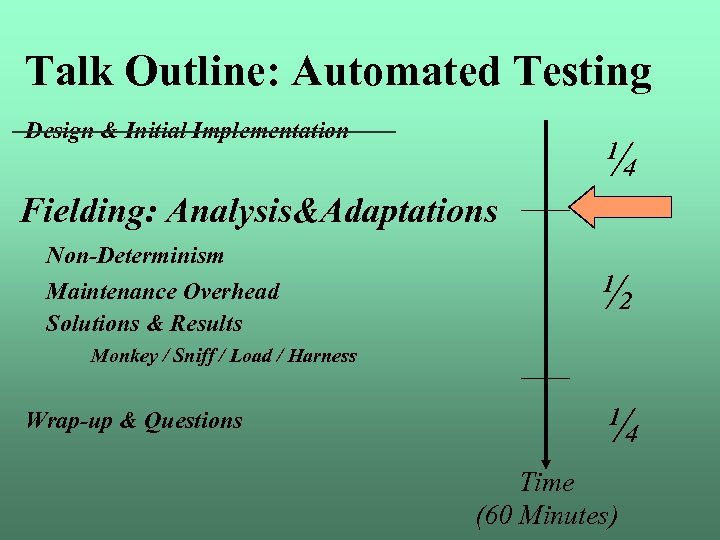

Talk Outline: Automated Testing (Time: 60 Minutes)

- Design & Initial Implementation (¼)
- Fielding: Analysis & Adaptations (½): Non-Determinism, Maintenance Overhead, Solutions & Results (Monkey / Sniff / Load / Harness)
- Wrap-up & Questions (¼)

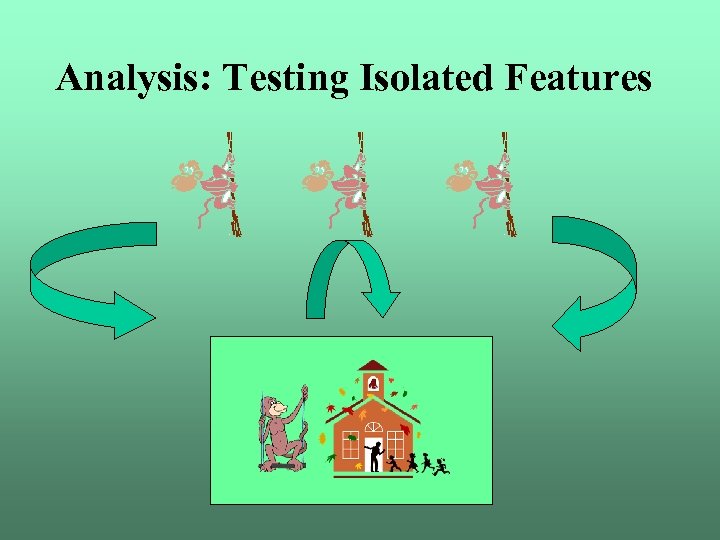

Analysis: Testing Isolated Features

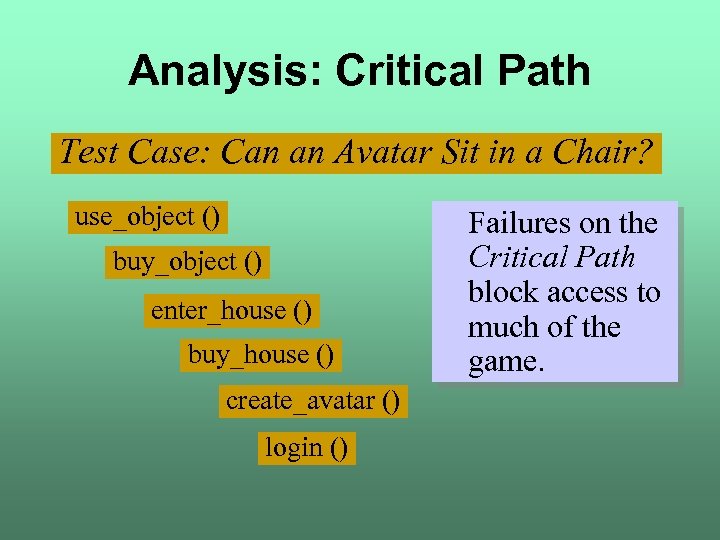

Analysis: Critical Path

Test Case: Can an Avatar Sit in a Chair?

`login()` → `create_avatar()` → `buy_house()` → `enter_house()` → `buy_object()` → `use_object()`

Failures on the Critical Path block access to much of the game.
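
The cost of a critical-path failure follows from the dependency chain. A defect in an early step blocks every step after it, even if those later features work. The following is a small Python illustration, assuming each step reports a simple pass/fail through a hypothetical `step_ok` callback.

```python
from typing import Callable, List, Tuple

# Critical-path steps in dependency order, from the slide's test case.
CRITICAL_PATH = ["login", "create_avatar", "buy_house",
                 "enter_house", "buy_object", "use_object"]


def run_critical_path(
    step_ok: Callable[[str], bool],
) -> Tuple[List[str], List[str], List[str]]:
    """Run steps in order; once one fails, every later step is blocked.

    Returns (passed, failed, blocked).
    """
    passed: List[str] = []
    for i, step in enumerate(CRITICAL_PATH):
        if step_ok(step):
            passed.append(step)
        else:
            return passed, [step], CRITICAL_PATH[i + 1:]
    return passed, [], []


# Simulated defect in buy_house: the chair test never gets to run.
passed, failed, blocked = run_critical_path(lambda step: step != "buy_house")
```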

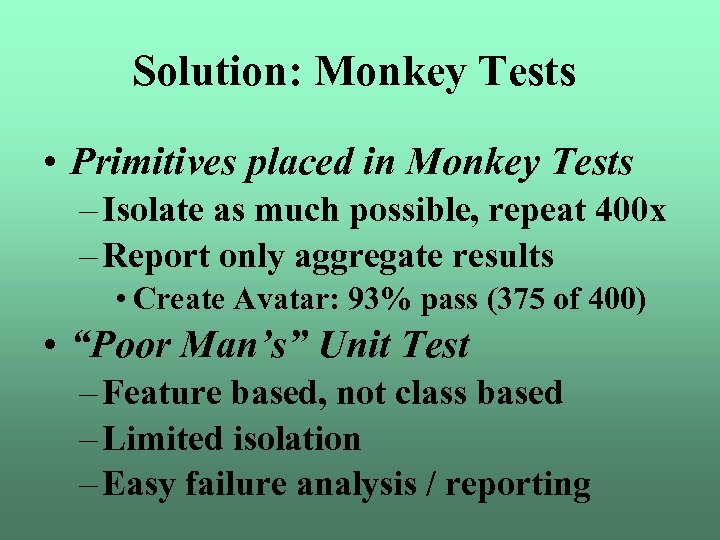

Solution: Monkey Tests

- Primitives placed in Monkey Tests
  - Isolate as much as possible, repeat 400×
  - Report only aggregate results
- Create Avatar: 93% pass (375 of 400)
- “Poor Man’s” Unit Test
  - Feature based, not class based
  - Limited isolation
  - Easy failure analysis / reporting
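
A monkey test repeats one isolated primitive many times and keeps only the aggregate. The following is a minimal Python sketch with hypothetical names (`monkey_test`, `MonkeyResult`). The simulated failure rate is chosen to reproduce the slide’s 375 of 400, which is exactly 93.75%.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class MonkeyResult:
    name: str
    passes: int
    runs: int

    def summary(self) -> str:
        rate = self.passes / self.runs
        return f"{self.name}: {rate:.2%} pass ({self.passes} of {self.runs})"


def monkey_test(name: str, primitive: Callable[[int], bool],
                runs: int = 400) -> MonkeyResult:
    """Repeat one isolated primitive `runs` times; keep only the aggregate.

    The run index is passed only so the demo below can be deterministic.
    """
    passes = sum(1 for i in range(runs) if primitive(i))
    return MonkeyResult(name, passes, runs)


# Simulated create_avatar that fails on every 16th attempt (25 of 400).
result = monkey_test("Create Avatar", lambda i: i % 16 != 0)
print(result.summary())
```

Reporting one line per primitive, rather than one line per run, is what keeps failure analysis easy.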

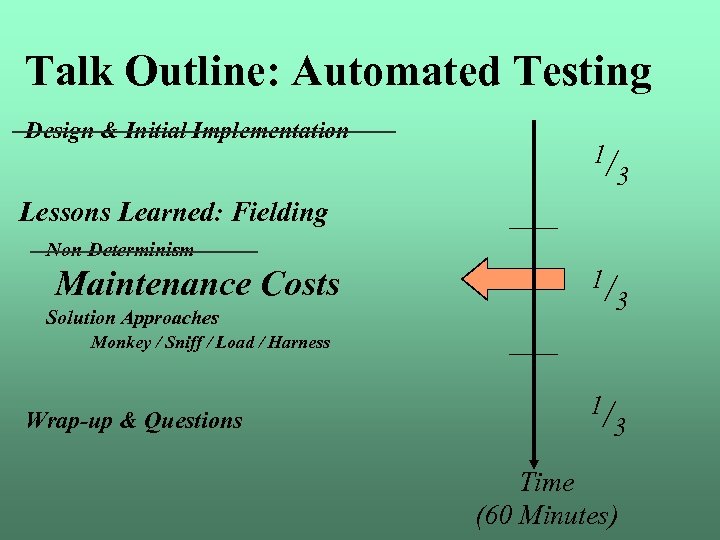

Talk Outline: Automated Testing (Time: 60 Minutes)

- Design & Initial Implementation (1/3)
- Lessons Learned: Fielding (1/3): Non-Determinism, Maintenance Costs, Solution Approaches (Monkey / Sniff / Load / Harness)
- Wrap-up & Questions (1/3)

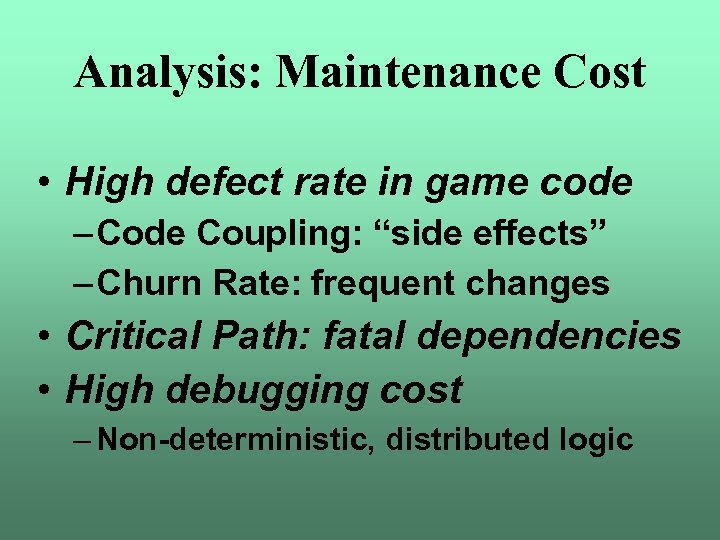

Analysis: Maintenance Cost

- High defect rate in game code
  - Code coupling: “side effects”
  - Churn rate: frequent changes
- Critical Path: fatal dependencies
- High debugging cost
  - Non-deterministic, distributed logic

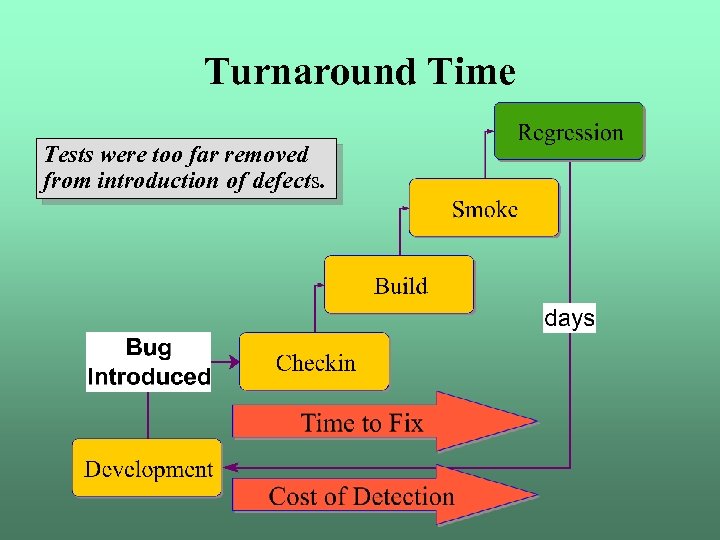

Turnaround Time

Tests were too far removed from the introduction of defects.

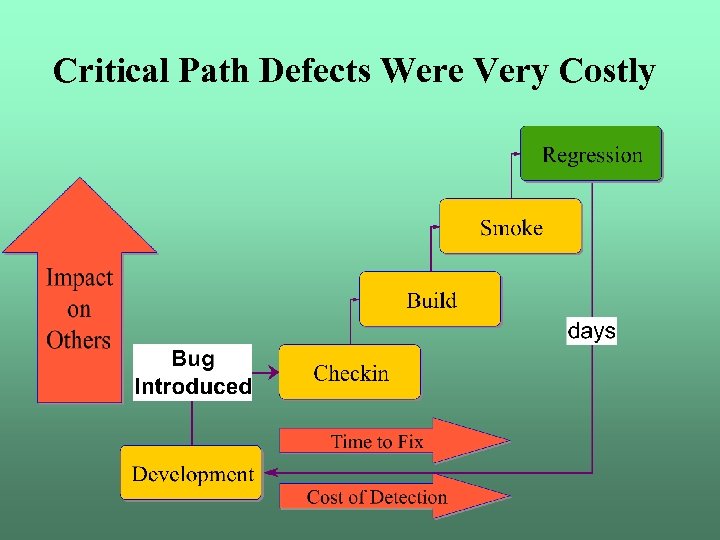

Critical Path Defects Were Very Costly

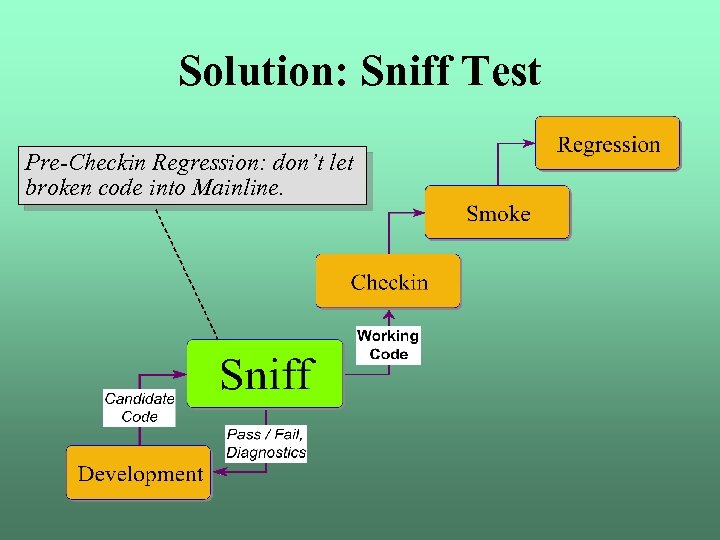

Solution: Sniff Test

Pre-Checkin Regression: don’t let broken code into Mainline.
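
A pre-checkin gate can be as simple as running the critical-path checks and refusing the check-in if any of them fails. The following is a Python sketch, assuming each check is an external command that exits with status 0 on success. The `sniff_test` function is hypothetical, and the two commands are stand-ins for real monkey tests run against a local build.

```python
import subprocess
import sys
from typing import List, Sequence


def sniff_test(checks: Sequence[Sequence[str]],
               timeout_s: float = 600.0) -> List[str]:
    """Run each critical-path check as a subprocess; return the ones that failed.

    An empty result means the change may go into Mainline.
    """
    failures: List[str] = []
    for cmd in checks:
        try:
            result = subprocess.run(list(cmd), capture_output=True, text=True,
                                    timeout=timeout_s)
            passed = result.returncode == 0
        except subprocess.TimeoutExpired:
            passed = False  # a hung check counts as a failure
        if not passed:
            failures.append(" ".join(cmd))
    return failures


# Stand-in checks: one passes, one simulates a broken feature.
checks = [
    [sys.executable, "-c", "print('login ok')"],
    [sys.executable, "-c", "import sys; sys.exit(1)"],
]
failed = sniff_test(checks)
if failed:
    print("Check-in blocked; failing checks:", failed)
```

The same function, run on a timer after an automatic sync and build, would approximate the hourly stability checker that emulates a developer.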

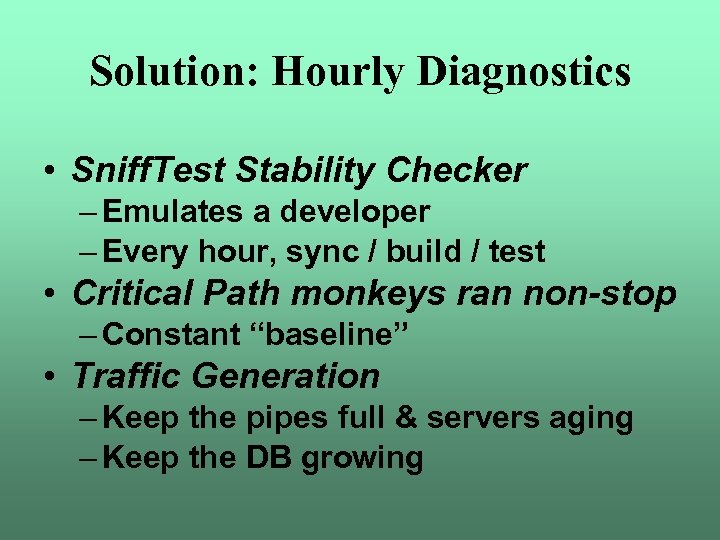

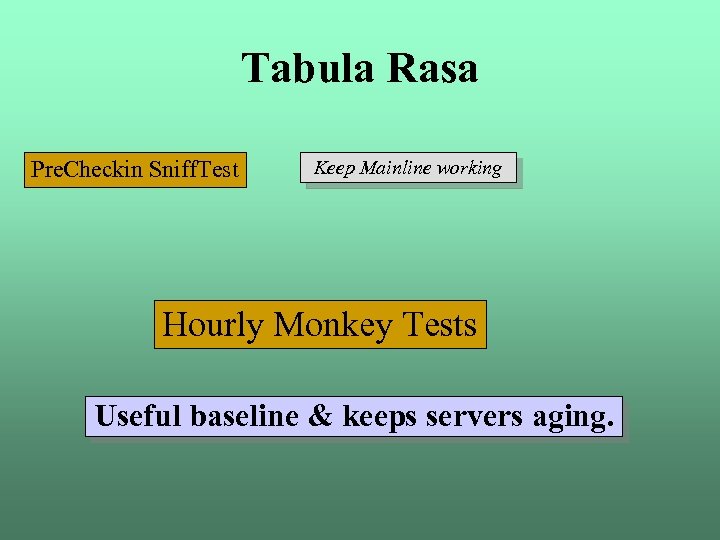

Solution: Hourly Diagnostics

- Sniff Test Stability Checker
  - Emulates a developer
  - Every hour: sync / build / test
- Critical Path monkeys ran non-stop
  - Constant “baseline”
- Traffic Generation
  - Keep the pipes full & servers aging
  - Keep the DB growing

Analysis: CONSTANT SHOUTING IS REALLY IRRITATING

- Bugs spawned many emails
- Solution: Report Managers
  - Aggregate / correlate across tests
  - Filter known defects
  - Translate common failure reports to their root causes
- Solution: Data Managers
  - Information overload: automated workflow tools mandatory
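
The report-manager idea collapses many raw failures into one entry per distinct cause and hides causes that are already known. The following is a Python sketch, assuming each failure has already been reduced to a text signature. The `build_report` function, the signatures and the known-defect table are all hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Iterable, List, Tuple

Failure = Tuple[str, str]  # (test name, failure signature)


def build_report(
    failures: Iterable[Failure], known: Dict[str, str]
) -> Tuple[Dict[str, List[str]], Dict[str, int]]:
    """Group failures by signature and split off already-known defects.

    Returns (new_issues, suppressed): new_issues maps each unknown signature
    to the tests that hit it; suppressed counts hits per known root cause.
    """
    new_issues: DefaultDict[str, List[str]] = defaultdict(list)
    suppressed: DefaultDict[str, int] = defaultdict(int)
    for test, signature in failures:
        if signature in known:
            suppressed[known[signature]] += 1
        else:
            new_issues[signature].append(test)
    return dict(new_issues), dict(suppressed)


failures = [
    ("sit_in_chair", "timeout: enter_house"),
    ("buy_lamp", "timeout: enter_house"),
    ("chat_monkey", "timeout: enter_house"),
    ("read_book", "assert: reading_skill"),
]
known = {"timeout: enter_house": "lot server not responding (already filed)"}
new_issues, suppressed = build_report(failures, known)
```

Four raw failures become one new issue to report plus a count against a known defect, instead of four separate emails.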

Toolkit Usability

- Workflow automation
- Information management
- Developer / Tester “push button” ease of use
- XP flavour: increasingly easy to run tests
  - Must be easier to run than to avoid running
  - Must solve problems “on the ground now”

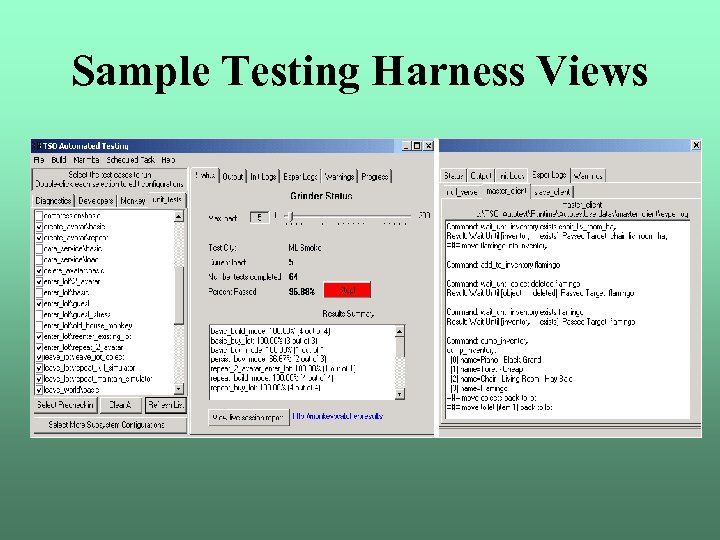

Sample Testing Harness Views

Load Testing: Goals

- Expose issues that only occur at scale
- Establish hardware requirements
- Establish that response is playable at scale
- Emulate user behaviour
  - Use server-side metrics to tune test scripts against observed Beta behaviour
- Run full-scale load tests daily
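
One way to tune test scripts against observed behaviour is to turn server-side counts of user actions into a probability mix and draw synthetic sessions from it. The following is a minimal Python sketch with hypothetical functions (`action_weights`, `generate_session`) and made-up example counts, not real Beta data.

```python
import random
from collections import Counter
from typing import Dict, List


def action_weights(observed_counts: Dict[str, int]) -> Dict[str, float]:
    """Turn server-side counts of user actions into a probability mix."""
    total = sum(observed_counts.values())
    return {action: n / total for action, n in observed_counts.items()}


def generate_session(weights: Dict[str, float], length: int,
                     seed: int) -> List[str]:
    """Draw a synthetic play session whose action mix follows `weights`."""
    rng = random.Random(seed)
    actions = sorted(weights)
    return rng.choices(actions, weights=[weights[a] for a in actions], k=length)


# Hypothetical metrics: counts per action over some observation window.
beta_counts = {"chat": 600, "use_object": 300, "buy_object": 100}
weights = action_weights(beta_counts)
session = generate_session(weights, length=10_000, seed=1)
mix = Counter(session)
```

Re-deriving the weights from fresh metrics keeps the load profile close to what real players are doing as the game changes.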

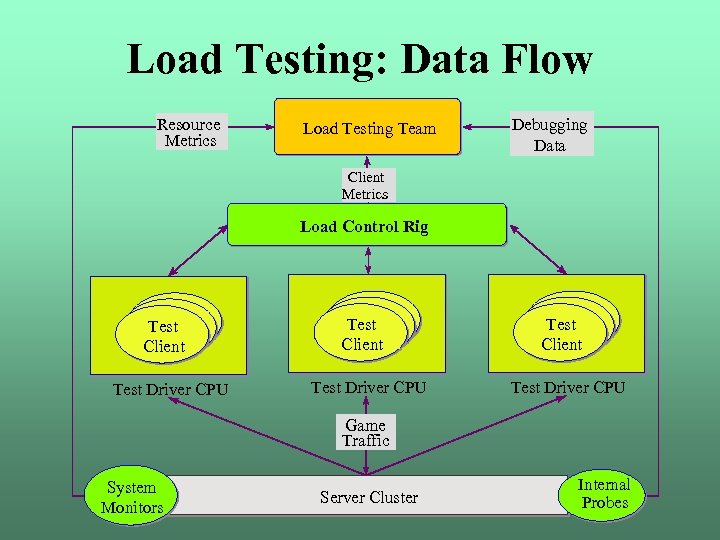

Load Testing: Data Flow

(Diagram labels: Load Testing Team; Resource Metrics; Debugging Data; Client Metrics; Load Control Rig; Test Driver CPU running multiple Test Clients; Game Traffic; Server Cluster; System Monitors; Internal Probes)

Load Testing: Lessons Learned

- Very successful
  - “Scale & Break”: up to 4,000 clients
- Some conflicting requirements with Regression
  - Continue on fail
  - Transaction tracking
  - NullView client a little “chunky”

Current Work

- QA test suite automation
- Workflow tools
- Integrating testing into the new features design/development process
- Planned work
  - Extend Esper Toolkit for general use
  - Port to other Maxis projects

Talk Outline: Automated Testing (Time: 60 Minutes)

- Design & Initial Implementation (1/3)
- Lessons Learned: Fielding (1/3)
- Wrap-up & Questions (1/3): Biggest Wins / Losses, Reuse, Tabula Rasa: MMP & SSP

Biggest Wins

- Presentation Layer Abstraction
  - NullView client
  - Scripted play sessions: powerful for regression & load
- Pre-Checkin Sniff Test
- Load Testing
- Continual Usability Enhancements
- Team
  - Upper Management Commitment
  - Focused Group, Senior Developers

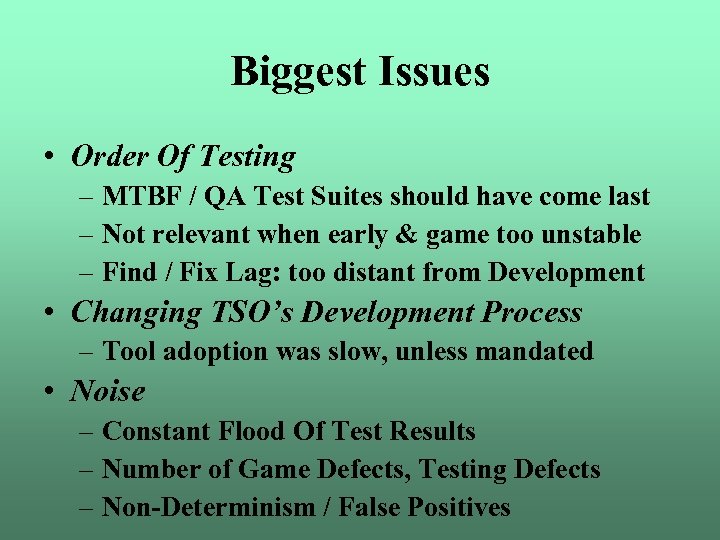

Biggest Issues

- Order Of Testing
  - MTBF / QA Test Suites should have come last
  - Not relevant when early & game too unstable
  - Find / Fix Lag: too distant from Development
- Changing TSO’s Development Process
  - Tool adoption was slow, unless mandated
- Noise
  - Constant flood of test results
  - Number of game defects, testing defects
  - Non-determinism / false positives

Tabula Rasa: How Would I Start The Next Project?

Tabula Rasa

- Pre-Checkin Sniff Test: there’s just no reason to let code break.

Tabula Rasa

- Pre-Checkin Sniff Test: keep Mainline working
- Hourly Monkey Tests: useful baseline & keeps servers aging

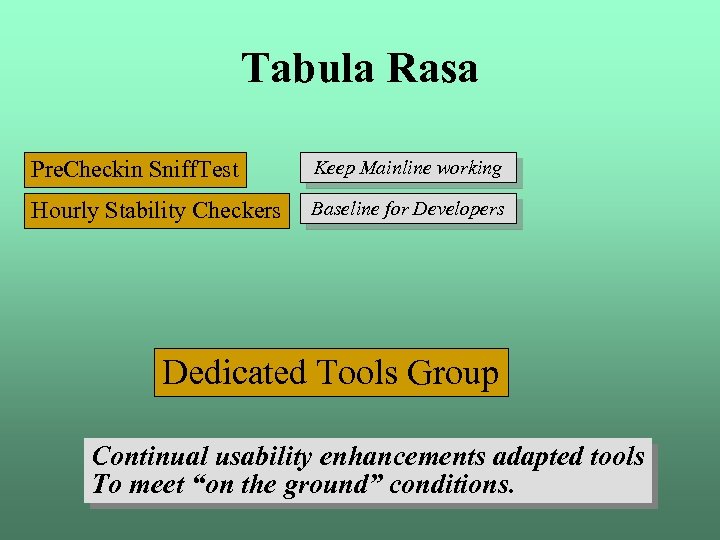

Tabula Rasa

- Pre-Checkin Sniff Test: keep Mainline working
- Hourly Stability Checkers: baseline for developers
- Dedicated Tools Group: continual usability enhancements adapted tools to meet “on the ground” conditions

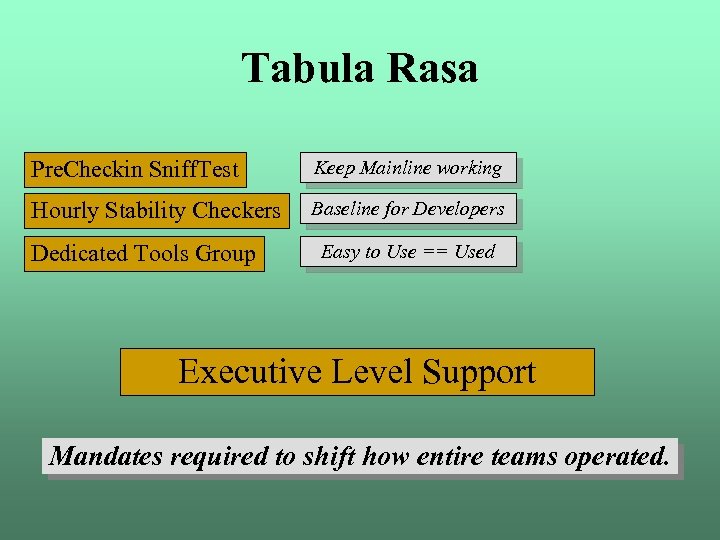

Tabula Rasa

- Pre-Checkin Sniff Test: keep Mainline working
- Hourly Stability Checkers: baseline for developers
- Dedicated Tools Group: easy to use == used
- Executive Level Support: mandates required to shift how entire teams operated

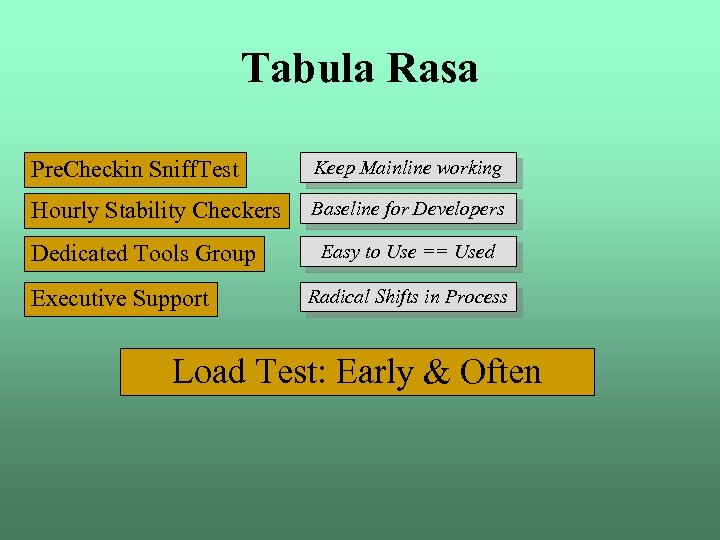

Tabula Rasa

- Pre-Checkin Sniff Test: keep Mainline working
- Hourly Stability Checkers: baseline for developers
- Dedicated Tools Group: easy to use == used
- Executive Support: radical shifts in process
- Load Test: early & often

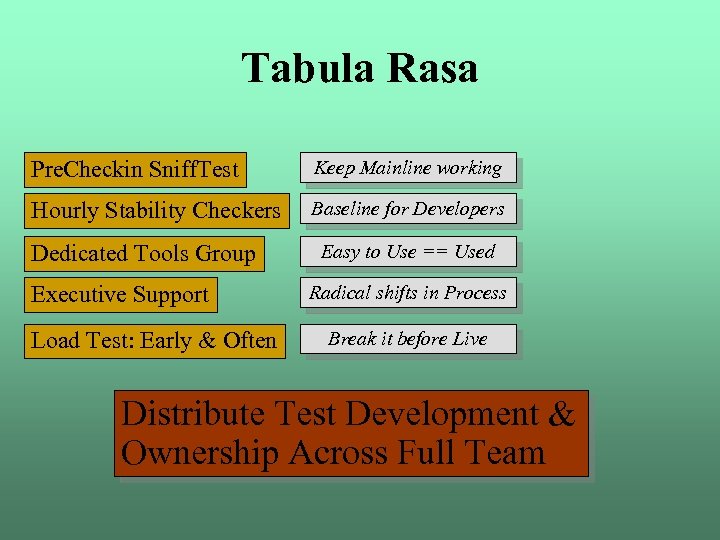

Tabula Rasa

- Pre-Checkin Sniff Test: keep Mainline working
- Hourly Stability Checkers: baseline for developers
- Dedicated Tools Group: easy to use == used
- Executive Support: radical shifts in process
- Load Test, early & often: break it before Live
- Distribute test development & ownership across the full team

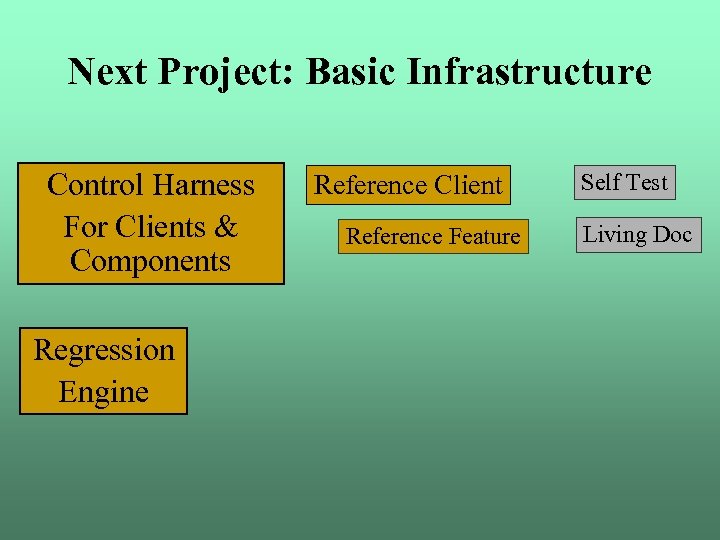

Next Project: Basic Infrastructure

(Diagram labels: Control Harness For Clients & Components; Regression Engine; Reference Client; Reference Feature; Self Test; Living Doc)

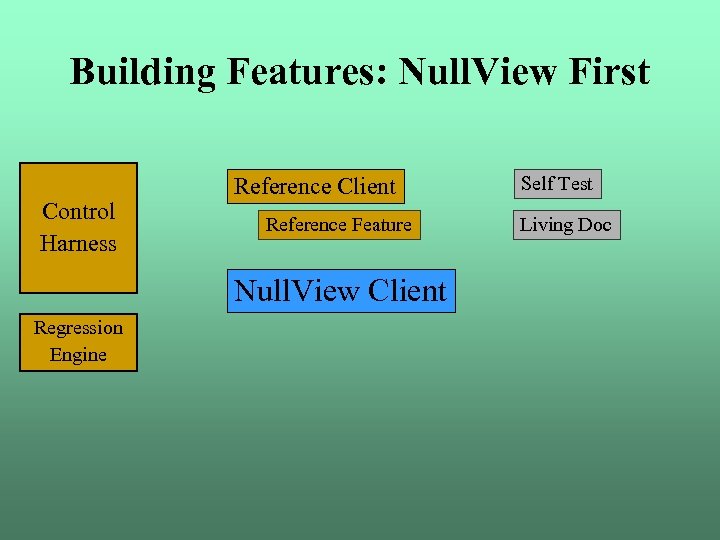

Building Features: NullView First

(Diagram labels: Control Harness; Reference Client; Reference Feature; NullView Client; Regression Engine; Self Test; Living Doc)

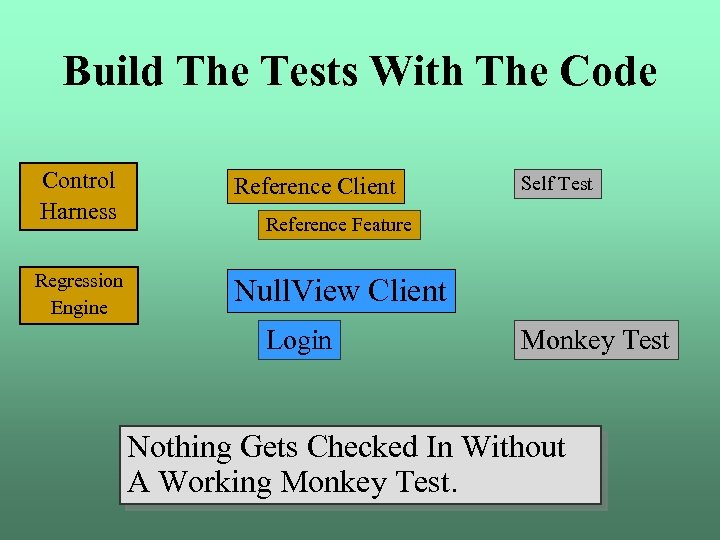

Build The Tests With The Code

(Diagram labels: Control Harness; Reference Client; Regression Engine; NullView Client; Self Test; Reference Feature; Login Monkey Test)

Nothing Gets Checked In Without A Working Monkey Test.

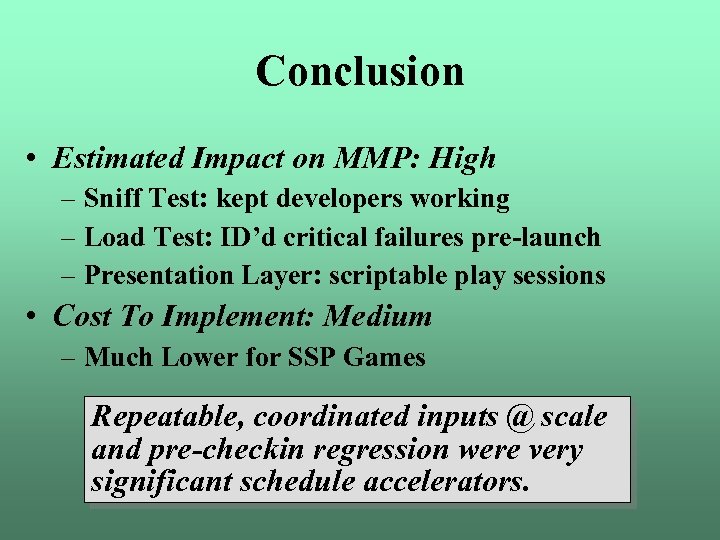

Conclusion

- Estimated Impact on MMP: High
  - Sniff Test: kept developers working
  - Load Test: ID’d critical failures pre-launch
  - Presentation Layer: scriptable play sessions
- Cost To Implement: Medium
  - Much lower for SSP games

Repeatable, coordinated inputs @ scale and pre-checkin regression were very significant schedule accelerators.

Conclusion: Go For It…

Talk Outline: Automated Testing (Time: 60 Minutes)

- Design & Initial Implementation (1/3)
- Lessons Learned: Fielding (1/3)
- Wrap-up & Questions (1/3)