50b2855f649119fb213aadb5099d0cb0.ppt

- Number of slides: 35

Automated administration for storage system

Presentation by Amitayu Das, 13th September, 2005

CSE 598 B: Fall 2005 Presentation

Introduction

Major challenges in storage management:

- System design and configuration (device management)
- Capacity planning (space management)
- Performance tuning (performance management)
- High availability (availability management)
- Automation (all of the above, in a self-managing manner)

Motivation

- Large disk arrays and networked storage provide huge storage capacities and high-bandwidth access, which facilitates consolidated storage systems. Enterprise-scale storage systems contain hundreds of host computers and storage devices and up to tens of thousands of disks.
- Designing, deploying and managing such systems at runtime is very costly (often costing more than procuring the hardware).

Look at the problems in greater detail…

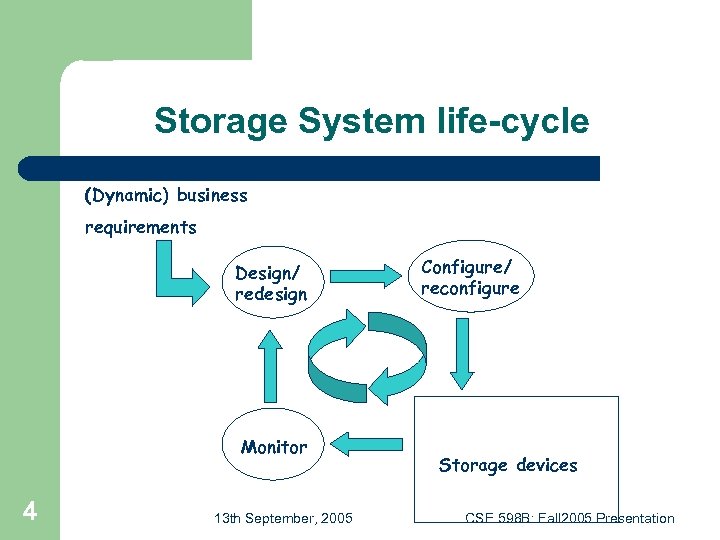

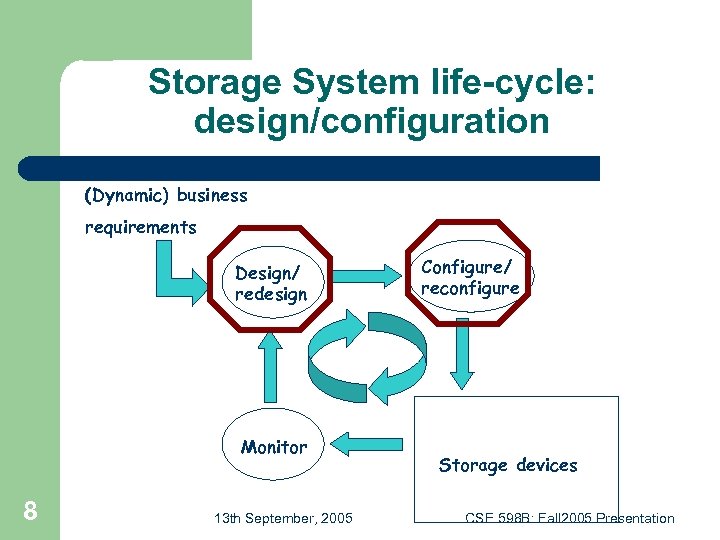

Storage System life-cycle

Diagram labels: (Dynamic) business requirements; Design/redesign; Configure/reconfigure; Monitor; Storage devices.

Storage administration functions

- Data protection
- Performance tuning
- Planning and deployment
- Monitoring and record-keeping
- Diagnosis and repair

Few notable attempts

- System-managed storage (IBM)
- Attribute-managed storage (HP)
- Replication
  - RAID
  - Online snapshot support
  - Remote replication
  - Online archival
- Interposed request routing
- Smart file-system switches

Designing problem

- Given a pool of resources and a workload, determine an appropriate choice of devices, configure them, and assign the workload to the configured storage.
- The solution is not straightforward because:
  - The system is huge, there are thousands of design choices, and many choices have unforeseen consequences.
  - Personnel with detailed knowledge of applications’ storage behavior are in short supply and hence quite expensive.
  - The design process is tedious and complicated to do by hand, usually leading to solutions that are grossly over-provisioned, substantially under-performing or, in the worst case, both.
  - Once a design is in place, implementing it is time-consuming, tedious and error-prone.
  - A mistake in any of these steps is difficult to identify and can result in a failure to meet the performance requirements.

Storage System life-cycle: design/configuration

Diagram labels: (Dynamic) business requirements; Design/redesign; Configure/reconfigure; Monitor; Storage devices.

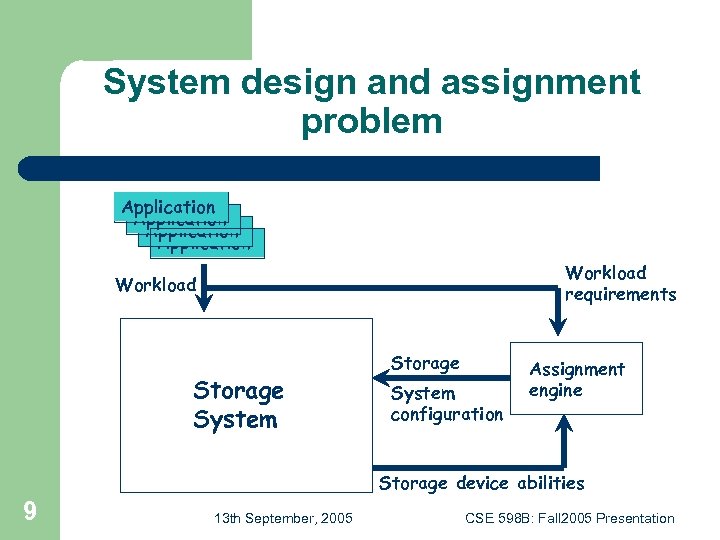

System design and assignment problem

Diagram labels: Application; Workload requirements; Workload; Storage device abilities; Assignment engine; Storage System configuration.

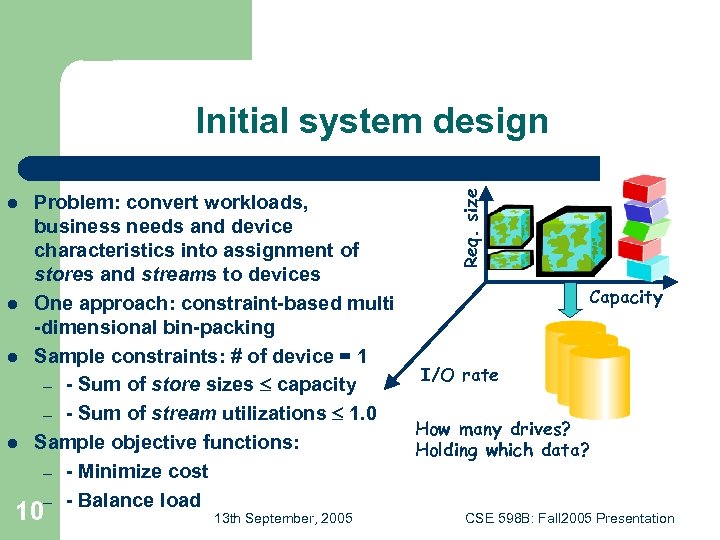

Initial system design

- Problem: convert workloads, business needs and device characteristics into an assignment of stores and streams to devices.
- One approach: constraint-based multi-dimensional bin-packing.
- Sample constraints:
  - # of devices = 1
  - Sum of store sizes ≤ capacity
  - Sum of stream utilizations ≤ 1.0
- Sample objective functions:
  - Minimize cost
  - Balance load

Diagram labels: Req. size; Capacity; I/O rate; How many drives? Holding which data?
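The bin-packing formulation on this slide can be illustrated with a small sketch. It is not the solver used by any of the tools discussed here. It assumes a simple first-fit-decreasing heuristic, identical devices, and hypothetical names (`Store`, `Device`, `assign`), and it enforces only the two sample constraints: total store size within capacity and total stream utilization at most 1.0.

```python
from dataclasses import dataclass, field


@dataclass
class Store:
    name: str
    size_gb: float       # space the store needs
    utilization: float   # fraction of one device's I/O capability its streams consume


@dataclass
class Device:
    capacity_gb: float
    stores: list = field(default_factory=list)

    def fits(self, store):
        """Check both dimensions: capacity and utilization."""
        used_gb = sum(s.size_gb for s in self.stores)
        used_util = sum(s.utilization for s in self.stores)
        return (used_gb + store.size_gb <= self.capacity_gb
                and used_util + store.utilization <= 1.0)


def assign(stores, capacity_gb):
    """First-fit decreasing: place the most demanding stores first,
    opening a new device only when no existing one can hold the store."""
    def demand(s):
        # Rank a store by its tighter dimension (assumed ordering rule).
        return max(s.size_gb / capacity_gb, s.utilization)

    devices = []
    for store in sorted(stores, key=demand, reverse=True):
        if store.size_gb > capacity_gb or store.utilization > 1.0:
            raise ValueError(f"{store.name} cannot fit on a single device")
        target = next((d for d in devices if d.fits(store)), None)
        if target is None:
            target = Device(capacity_gb)
            devices.append(target)
        target.stores.append(store)
    return devices
```

Minimizing the number of devices opened stands in for the "minimize cost" objective. A "balance load" objective would instead pick the least-utilized device that fits.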

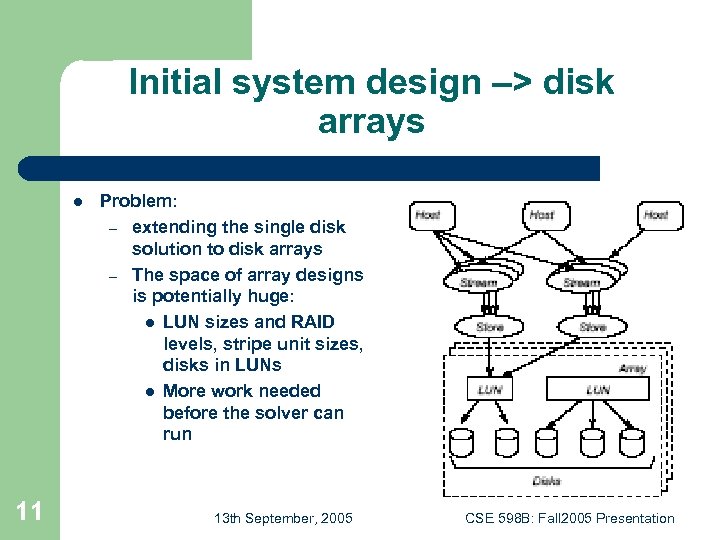

Initial system design -> disk arrays

- Problem:
  - Extending the single-disk solution to disk arrays
  - The space of array designs is potentially huge:
    - LUN sizes and RAID levels, stripe unit sizes, disks in LUNs
    - More work needed before the solver can run
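To give a feel for how quickly the array design space grows, here is a rough counting sketch. The parameter ranges (`RAID_LEVELS`, `STRIPE_UNITS_KB`, `DISKS_PER_LUN`) and the validity rules are illustrative assumptions, not values taken from the slides or from any particular array.

```python
from itertools import product

# Hypothetical parameter ranges, chosen only for illustration.
RAID_LEVELS = ("RAID 1/0", "RAID 5")
STRIPE_UNITS_KB = (8, 16, 32, 64, 128)
DISKS_PER_LUN = range(2, 17)


def is_valid(raid_level, disks):
    """Assumed rules: RAID 1/0 needs mirrored pairs, RAID 5 needs at least 3 disks."""
    if raid_level == "RAID 1/0":
        return disks % 2 == 0
    return disks >= 3


def lun_designs():
    """Enumerate every valid (RAID level, stripe unit, disk count) choice for one LUN."""
    return [
        (raid, stripe, disks)
        for raid, stripe, disks in product(RAID_LEVELS, STRIPE_UNITS_KB, DISKS_PER_LUN)
        if is_valid(raid, disks)
    ]


def design_space_size(num_luns):
    """Each LUN is configured independently, so the choices multiply."""
    return len(lun_designs()) ** num_luns
```

Even with these small ranges, a handful of LUNs already yields far more combinations than can be inspected by hand, which is why the search has to be pruned before a solver runs.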

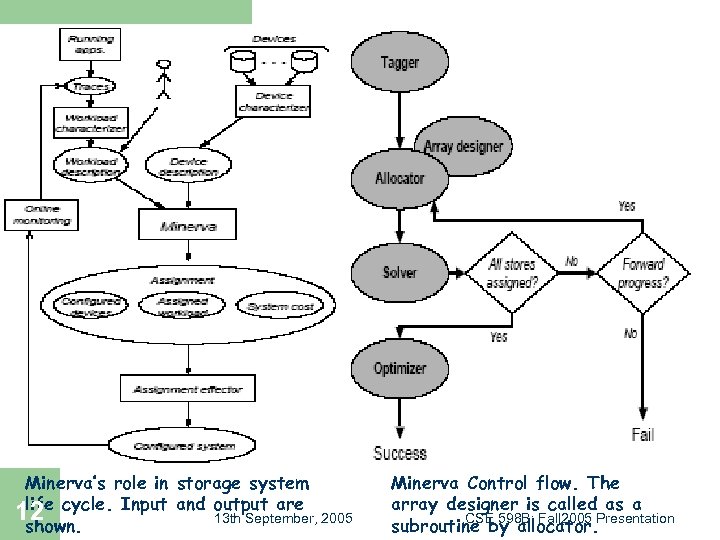

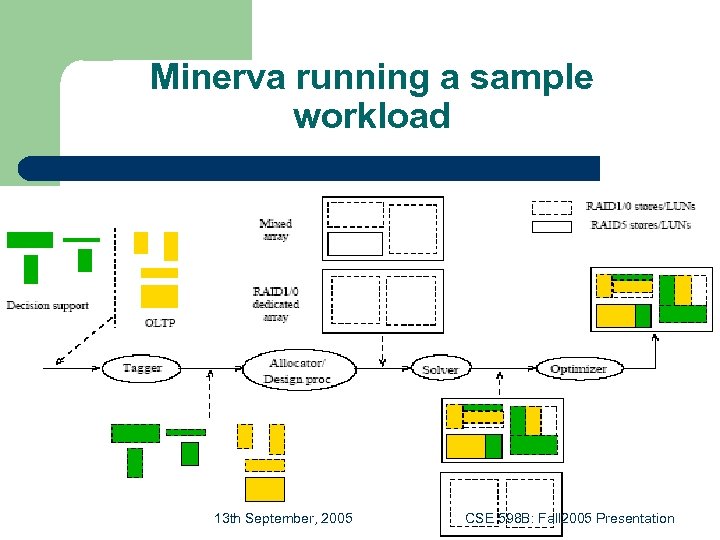

Minerva’s role in storage system life cycle. Input and output are shown.

Minerva control flow. The array designer is called as a subroutine by the allocator.

Minerva running a sample workload

Merits/demerits

- Merits:
  - Reasonable automation
- Demerits:
  - Requires accurate models of workloads, performance requirements, and devices
  - Addresses only the mechanisms, not the policy

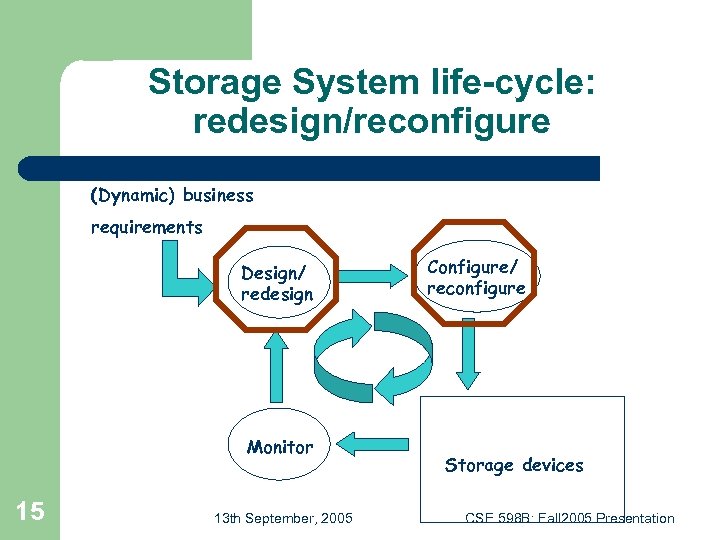

Storage System life-cycle: redesign/reconfigure

Diagram labels: (Dynamic) business requirements; Design/redesign; Configure/reconfigure; Monitor; Storage devices.

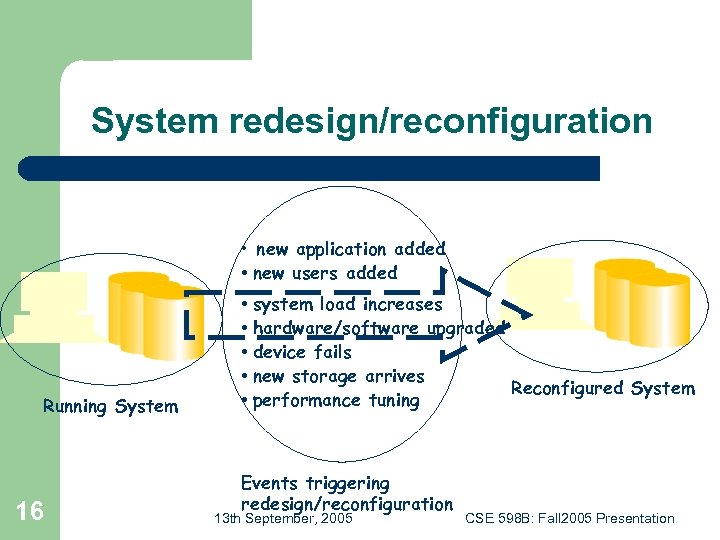

System redesign/reconfiguration

Events triggering redesign/reconfiguration (Running System -> Reconfigured System):

- new application added
- new users added
- system load increases
- hardware/software upgraded
- device fails
- new storage arrives
- performance tuning

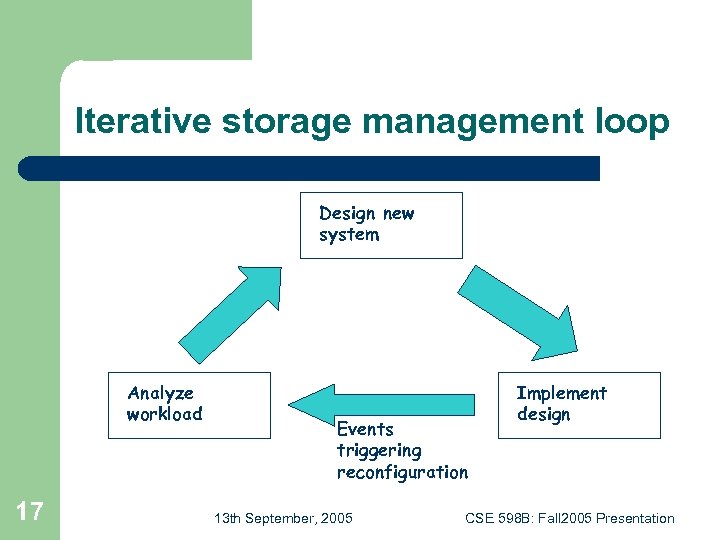

Iterative storage management loop

Diagram labels: Analyze workload; Design new system; Implement design; Events triggering reconfiguration.

Hippodrome

Two objectives:

- The automated loop must converge on a viable design that meets the workload’s requirements without over- or under-provisioning.
- It must converge to a stable final system as quickly as possible, with as little input as possible from its users.

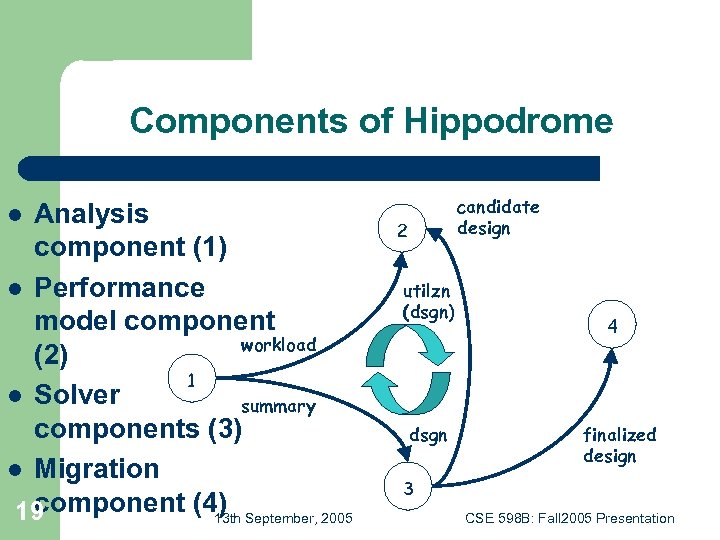

Components of Hippodrome

- Analysis component (1)
- Performance model component (2)
- Solver component (3)
- Migration component (4)

Diagram labels: workload summary; candidate design; utilization (design); finalized design.
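The way these four components could cooperate in an iterative loop is sketched below as a toy simulation. It is not Hippodrome's actual implementation. The single-number workload summary, the linear performance model, and the constants `DEVICE_IOPS` and `target_utilization` are all simplifying assumptions made for illustration.

```python
DEVICE_IOPS = 100.0  # hypothetical throughput of one device


def run_workload(true_demand_iops, num_devices):
    """The observed request rate is capped by what the current system can deliver."""
    return min(true_demand_iops, num_devices * DEVICE_IOPS)


def analyze(observed_iops):
    """(1) Analysis: summarize the observed workload."""
    return {"iops": observed_iops}


def model_utilization(summary, num_devices):
    """(2) Performance model: predicted utilization of a candidate design."""
    return summary["iops"] / (num_devices * DEVICE_IOPS)


def solve(summary, target_utilization=0.5):
    """(3) Solver: smallest design whose predicted utilization meets the target."""
    num_devices = 1
    while model_utilization(summary, num_devices) > target_utilization:
        num_devices += 1
    return num_devices


def iterate(true_demand_iops, start_devices=1, max_rounds=20):
    """Repeat analyze -> design -> migrate until the design stops changing."""
    devices = start_devices
    history = [devices]
    for _ in range(max_rounds):
        summary = analyze(run_workload(true_demand_iops, devices))
        design = solve(summary)
        if design == devices:
            break              # converged: no reconfiguration needed
        devices = design       # (4) Migration: move to the finalized design
        history.append(devices)
    return history
```

Starting from an undersized system, each round reveals more of the true demand, so the design grows until the workload is no longer throttled. Starting from an oversized system, the first round shrinks it. In this toy model both cases end at the same stable design.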

Issues in system design and allocation

- What optimization algorithms are most effective?
- What optimization objectives and constraints produce reasonable designs?
  - ex: cost of reconfiguring the system
- What's the right part of the storage design space to explore?
  - ex: RAID level vs. stripe unit size vs. cache management parameters
- What are reasonable general guidelines for tagging a store's RAID level?
- What (other) decompositions of the design and allocation problem are reasonable?
- How to generalize system design?
  - for SAN environments
  - for hosts and applications

Issues in reconfiguration

- How to do system discovery?
  - e.g., existing state, presence of new devices
  - Dealing with inconsistent information
  - In a scalable fashion
- How to abstractly describe storage devices?
  - For system discovery output
  - For input to tools that perform changes
- How to automate the physical redesign process?
  - e.g., physical space allocation etc.
- Events trigger the redesign decision
  - How do we decide when to reconfigure?
- Reconfiguration inputs:
  - current system configuration/assignment
  - desired system configuration/assignment

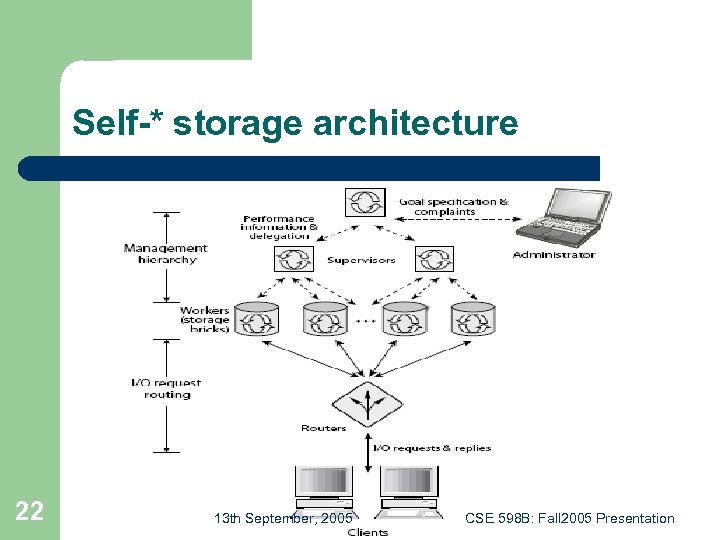

Self-* storage architecture

Administration and organization

- Administrative interface
- Supervisors
- Administrative assistants

Data access and storage

- Routers
- Workers

Merits

- Simpler storage administration
  - Data protection
  - Performance tuning
  - Planning and deployment
  - Monitoring and record-keeping
  - Diagnosis and repair

Demerits

- The proposed solution is too simplistic to handle the issues raised.
- The authors provide a solution from a high-level viewpoint, but it is not complete in any sense.
- The implementation and evaluation are not convincing enough.
- Not all aspects of “self-*” have been addressed as claimed.

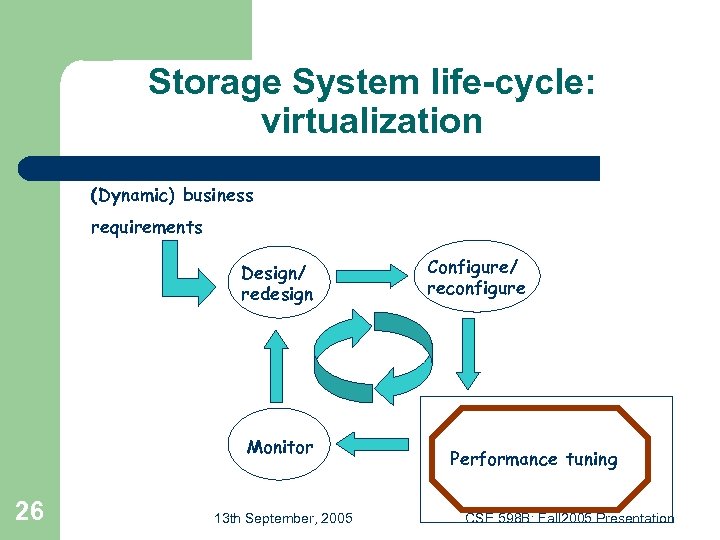

Storage System life-cycle: virtualization

Diagram labels: (Dynamic) business requirements; Design/redesign; Configure/reconfigure; Monitor; Performance tuning.

Runtime management problem

- Often, enterprise customers outsource their storage needs to data centers. At data centers, different workloads, applications and services share the underlying storage infrastructure.
- Sharing (of disk drives, storage caches, network links, controllers etc.) can lead to interference between users/applications, possibly violating performance-based QoS guarantees. To prevent that, data centers need to insulate users from each other: virtualization.

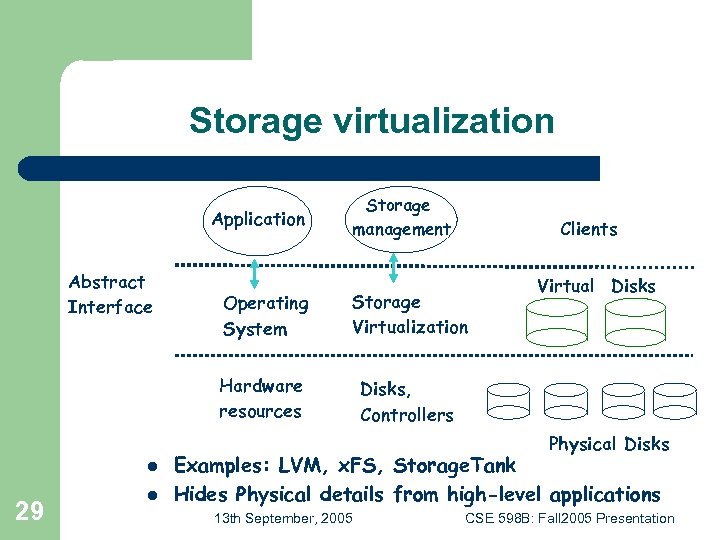

Need for virtualization

- At data centers, many different enterprise servers that support different business processes, such as Web servers, file servers and database servers, may have very different performance requirements on their backend storage server.
- Sophisticated resource allocation and scheduling technology is required to effectively isolate these logical storage servers as if they were separate physical storage servers.
- Storage virtualization refers to the technology that allows creation of a set of logical storage devices from

Storage virtualization

Diagram labels: Application; Abstract Interface; Storage management; Clients; Operating System; Storage Virtualization; Hardware resources; Virtual Disks, Controllers; Physical Disks.

- Examples: LVM, xFS, StorageTank
- Hides physical details from high-level applications

Dimensions of virtualization

- Commercial storage virtualization systems are rather limited because they can virtualize only storage capacity.
- However, from the standpoint of storage clients or enterprise servers, virtual storage devices should be as tangible as physical disks.
- There is a need to efficiently virtualize any standard attribute associated with a physical disk, such as capacity, bandwidth, latency, availability etc.
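One way to picture virtualizing more than capacity is an admission check that treats every attribute of a virtual disk as a resource to be reserved. The sketch below is a hypothetical illustration, not the mechanism of any system cited in this presentation. The class names, the additive bandwidth model and the fixed `base_latency_ms` are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class VirtualDisk:
    name: str
    capacity_gb: float
    bandwidth_mbps: float   # throughput the client expects
    max_latency_ms: float   # latency bound the client expects


@dataclass
class PhysicalDisk:
    capacity_gb: float
    bandwidth_mbps: float
    base_latency_ms: float  # assumed best-case service latency
    hosted: list = field(default_factory=list)

    def admit(self, vdisk):
        """Accept the virtual disk only if every attribute can be honored."""
        used_gb = sum(v.capacity_gb for v in self.hosted)
        used_bw = sum(v.bandwidth_mbps for v in self.hosted)
        ok = (used_gb + vdisk.capacity_gb <= self.capacity_gb
              and used_bw + vdisk.bandwidth_mbps <= self.bandwidth_mbps
              and vdisk.max_latency_ms >= self.base_latency_ms)
        if ok:
            self.hosted.append(vdisk)
        return ok
```

A capacity-only system would accept any request that fits in the remaining space. Here a request can also be refused because the remaining bandwidth or the achievable latency is insufficient.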

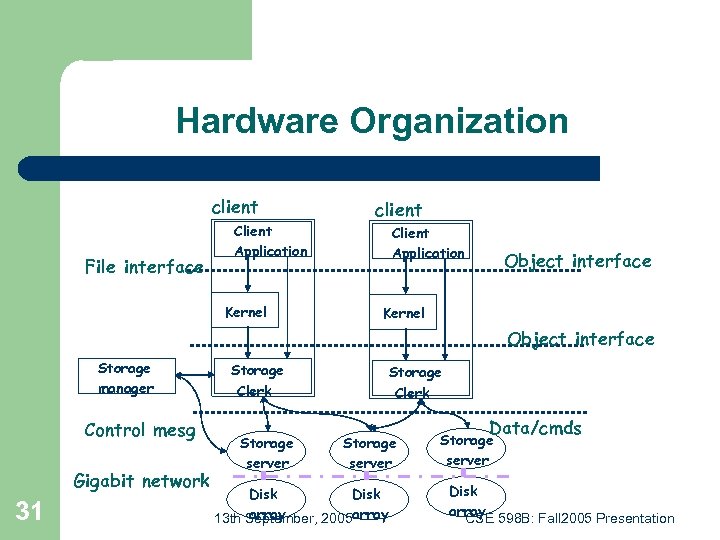

Hardware Organization

Diagram labels: Client (Client Application, Kernel; File interface, Object interface); Storage manager (control messages); Gigabit network; Storage Clerk; Storage servers with disk arrays (data/commands).

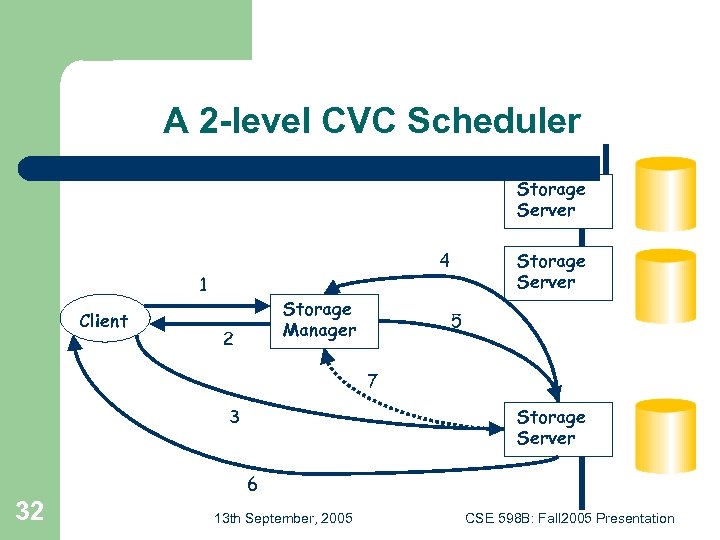

A 2-level CVC Scheduler

Diagram labels: Client; Storage Manager; three Storage Servers; numbered steps 1 to 7.

References

- Hippodrome: running circles around storage administration. Eric Anderson et al., FAST ’02, pp. 175-188, January 2002.
- Minerva: an automated resource provisioning tool for large-scale storage systems. G. Alvarez et al., ACM Transactions on Computer Systems 19(4): 483-518, November 2001.
- Ergastulum: quickly finding near-optimal storage system designs. Eric Anderson et al., Technical Report, HP Laboratories.
- Disk Array Models in Minerva. Arif Merchant et al., Technical Report, HP Laboratories.
- Self-* Storage: Brick-based Storage with Automated Administration. G. Ganger et al., Technical Report, 2003.

References

- SIGMETRICS ’00 Tutorial, HP Laboratories.
- Optimization algorithms
  - Bin-packing Heuristics [Coffman 84]
  - Toyoda Gradient [Toyoda 75]
  - Simulated Annealing [Drexl 88]
  - Relaxation Approaches [Pattipati 90, Trick 92]
  - Genetic Algorithms [Chu 97]
- Multidimensional Storage Virtualization. Lan Huang et al., SIGMETRICS ’04, New York, June 2004.
- An Interposed 2-Level I/O Scheduling Framework for Performance Virtualization. J. Zhang et al., SIGMETRICS ’05.
- Efficiency-aware disk schedulers:
  - Cello, Prism, YFQ

