
- Number of slides: 65

Audit, Security Evaluation, Information Assurance Nicolas T. Courtois - University College London


Comp. Sec COMPGA 01 Audit 2 Nicolas T. Courtois, December 2009


Audit: a Major Source of Jobs

Audit comes in several different types:

- quality audits;
- in project management: progress audits;
- in accounting: audits check the accounts and compliance with certain rules, such as nobody claiming 200% of their time;
- internal audit: compliance with the internal rules of an organization;
- external audit: an authority checks compliance with laws and regulations.


IT and Audit

- In IT: the Certified Information Systems Auditor (CISA) certification covers the whole of IT, not only security.
- In information security: audit = analysis of past events to determine whether security breaches have occurred or have been attempted. It is done offline, after the fact, and it is about security.


Residual Risk

Residual risk =def what remains after the defences are in place.


Audit is Needed

Audit is needed because of this residual risk: things are going to happen. Sometimes audit is part of the whole system of defences. Certain security violations will be detected ONLY much later. That is acceptable if we can:

- trace the culprit,
- cancel certain transactions,
- revoke certain keys,
- etc.


Audit Logs

Example: in access control we record:

- the subject making the request,
- the object of the request,
- the operation requested,
- the time and date,
- the location / IP address of the request,
- the status of the request:
  - granted / denied,
  - physical resources used (like CPU time).

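A minimal sketch of one such access-control audit record, assuming a Python dataclass serialised as one JSON line per event. The class and field names (`AuditRecord`, `obj`, `cpu_ms`, ...) and the JSON-lines format are illustrative choices, not part of any particular logging standard.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    """One access-control event; the field names are illustrative."""
    subject: str      # who made the request
    obj: str          # object of the request ("object" would shadow a builtin)
    operation: str    # operation requested, e.g. "read"
    timestamp: str    # time and date, ISO 8601, UTC
    source_ip: str    # location / IP address of the request
    granted: bool     # status: granted (True) or denied (False)
    cpu_ms: float     # physical resources used: CPU time in milliseconds


def make_record(subject, obj, operation, source_ip, granted, cpu_ms):
    """Build a record stamped with the current UTC time."""
    now = datetime.now(timezone.utc).isoformat()
    return AuditRecord(subject, obj, operation, now, source_ip, granted, cpu_ms)


def to_log_line(record):
    """Serialise a record as one JSON line, e.g. for an append-only log file."""
    return json.dumps(asdict(record), sort_keys=True)


rec = make_record("alice", "/payroll/2009.xls", "read", "10.0.0.7", False, 1.5)
print(to_log_line(rec))
```

Freezing the dataclass is a small design choice: once built, a record cannot be edited in memory before it is written out.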

Why Not More?

Choices are specific to each application. If memory is critical, for example in bank cards, it is very important to log only the minimum. Otherwise, storage is getting cheaper and cheaper nowadays. But even then, the following will prevent us from logging too much information:

- legal or contractual obligations (e.g. credit card merchants are NOT allowed to store the CVC),
- privacy-friendly design considerations,
- “proportionality principles”.

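One way to apply such constraints is an allow-list filter run before anything is written to the log. This is a sketch under stated assumptions: the field names, the `ALLOWED_FIELDS` / `FORBIDDEN_FIELDS` sets and the choice to keep only the last four digits of the card number are hypothetical, chosen to illustrate data minimisation rather than to reproduce any specific regulation.

```python
# Hypothetical allow-list: only fields the audit purpose actually needs.
ALLOWED_FIELDS = frozenset({"merchant_id", "amount", "timestamp", "status"})
# Fields that must never reach the log, e.g. the CVC (assumed list).
FORBIDDEN_FIELDS = frozenset({"cvc", "pin", "pan"})

# Configuration sanity check: the two lists must never overlap.
if ALLOWED_FIELDS & FORBIDDEN_FIELDS:
    raise ValueError("a forbidden field is on the allow-list")


def minimise(event):
    """Return a log-safe copy of `event`: allow-listed fields only.

    The full card number (PAN) is reduced to its last four digits;
    everything else not on the allow-list, including the CVC, is dropped.
    """
    record = {k: v for k, v in event.items() if k in ALLOWED_FIELDS}
    if "pan" in event:
        record["card_last4"] = str(event["pan"])[-4:]
    return record


payment = {
    "merchant_id": "M42", "amount": 19.99, "timestamp": "2009-12-01T10:00:00Z",
    "status": "approved", "pan": "4111111111111111", "cvc": "123",
}
print(minimise(payment))
```

An allow-list fails safe: a new field added upstream is dropped by default instead of being logged by accident.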

Intrusion Detection


Intrusion Detection

Audit:

- usually offline, after the fact.

Intrusion detection:

- usually active, real-time, online;
- otherwise we speak of “passive intrusion detection”.


Types of Intrusion Detection

- Threshold-based,
- Anomaly-based,
- Rule-based,
- State transition-based.


Most Basic Intrusion Detection

Notion of “security events”.


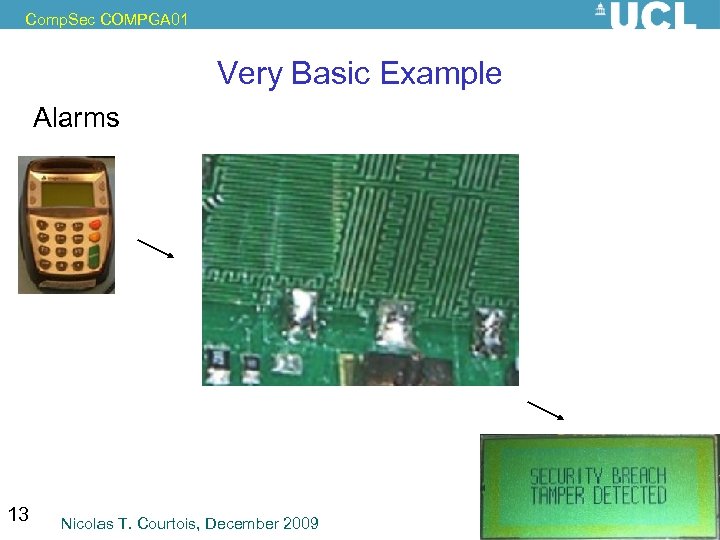

Very Basic Example

Alarms.


Types of Intrusion Detection

- Threshold-based = detect abnormal use. Examples:
  - try 4 different PINs at an ATM: the card will stop working forever;
  - high CPU load, a lot of data sent out.
- Cons: false positives (for the other methods too).

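The PIN example can be sketched as a simple counter with a threshold. This is an illustrative model, not how any real card applet is written: `PinGuard` and the threshold `MAX_WRONG = 3` are assumptions.

```python
import hmac


class PinGuard:
    """Threshold-based detection for PIN entry (a sketch, not a real card applet).

    MAX_WRONG = 3 is an assumed threshold. A correct PIN resets the counter;
    once the threshold is reached the card is blocked for good.
    """

    MAX_WRONG = 3

    def __init__(self, correct_pin):
        self._pin = correct_pin
        self.wrong = 0
        self.blocked = False

    def try_pin(self, pin):
        if self.blocked:
            return False  # the card has stopped working forever
        # Constant-time comparison, so timing does not leak matching digits.
        if hmac.compare_digest(pin, self._pin):
            self.wrong = 0
            return True
        self.wrong += 1
        if self.wrong >= self.MAX_WRONG:
            self.blocked = True  # threshold reached: block and raise the alarm
        return False


card = PinGuard("1234")
print([card.try_pin(p) for p in ("0000", "1111", "2222", "1234")], card.blocked)
```

With three wrong guesses the card is blocked, so the fourth attempt is refused even though it is the correct PIN.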

Types of Intrusion Detection (2)

- Anomaly-based: defines profiles of what is normal behavior in terms of:
  - key security events,
  - CPU/memory usage by a user,
  - their timing.
- How to measure the deviation?
  - Each profile defines acceptable intervals; outside them, an alarm is raised.
    - Example: a bank employee is expected to do between 10 and 40 credit history checks per day in the office, at intervals of at least 10 minutes.
  - One can also use a time series model:
    - events occurring in circumstances where the historical probability is VERY low (say, a credit check during the lunch break in a big French bank) will raise an alert.

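Both measures from the bank example can be sketched in a few lines. The interval profile uses the slide's figures (10 to 40 checks per day, at least 10 minutes apart). For the time series idea, a per-hour-of-day frequency count stands in as a deliberately simple model, and the 1% cut-off `RARE_HOUR_PROB` is an assumption.

```python
from collections import Counter
from datetime import datetime, timedelta

# Profile from the slide: 10-40 credit checks per day, at least 10 minutes apart.
MIN_PER_DAY, MAX_PER_DAY = 10, 40
MIN_GAP = timedelta(minutes=10)
# Assumed cut-off for "VERY low" historical probability of an hour of day.
RARE_HOUR_PROB = 0.01


def interval_alerts(day_events):
    """Alerts for one employee's credit checks on one day (sorted datetimes)."""
    alerts = []
    n = len(day_events)
    if not MIN_PER_DAY <= n <= MAX_PER_DAY:
        alerts.append(f"count {n} outside [{MIN_PER_DAY}, {MAX_PER_DAY}]")
    for earlier, later in zip(day_events, day_events[1:]):
        if later - earlier < MIN_GAP:
            alerts.append(f"gap too short at {later:%H:%M}")
    return alerts


def rare_hour_alerts(history, new_events):
    """New events falling in an hour of day that was historically very rare."""
    counts = Counter(t.hour for t in history)
    total = sum(counts.values())
    if total == 0:
        return []  # no history, hence no profile to deviate from
    return [t for t in new_events if counts[t.hour] / total < RARE_HOUR_PROB]


start = datetime(2009, 12, 1, 9, 0)
day = [start + timedelta(minutes=30 * i) for i in range(12)]
print(interval_alerts(sorted(day + [start + timedelta(minutes=5)])))
```

A real deployment would estimate the profile per employee and per weekday; the fixed constants here only mirror the example.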

Key Latency Control

- A powerful mechanism to detect anomalies in the behavior of employees and to detect malware.

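Assuming “key latency” refers to the delays between successive keystrokes, a profile can store the mean and spread of each delay for a fixed phrase and score new samples by their average z-score. The threshold `Z_THRESHOLD` and the floor `MIN_STD_MS` are hypothetical tuning values.

```python
from statistics import mean, stdev

Z_THRESHOLD = 3.0   # assumed alarm threshold on the average z-score
MIN_STD_MS = 1.0    # assumed floor, so perfectly regular training data cannot divide by zero


def latencies(press_times_ms):
    """Delays between successive key presses of a fixed phrase (ms)."""
    return [b - a for a, b in zip(press_times_ms, press_times_ms[1:])]


def build_profile(samples):
    """Per-position (mean, std) of the latencies over >= 2 enrollment samples."""
    columns = zip(*(latencies(s) for s in samples))
    return [(mean(c), max(stdev(c), MIN_STD_MS)) for c in columns]


def anomaly_score(profile, sample):
    """Average absolute z-score of a new typing sample against the profile."""
    lat = latencies(sample)
    return mean(abs(x - m) / s for x, (m, s) in zip(lat, profile))


def is_anomalous(profile, sample):
    return anomaly_score(profile, sample) > Z_THRESHOLD


profile = build_profile([[0, 120, 250, 370], [0, 110, 240, 360], [0, 130, 260, 380]])
print(is_anomalous(profile, [0, 118, 248, 368]), is_anomalous(profile, [0, 40, 80, 120]))
```

The second sample types with machine-like regularity and speed, which is the kind of deviation that could point to a different person or to injected keystrokes.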

Types of Intrusion Detection (2, 3)

- Anomaly-based:
  - does not require a lot of technical knowledge;
  - it is just doing statistics on apples and bananas.
- Rule-based, can be based on:
  - past experience of intrusions / breaches,
  - known system vulnerabilities / attacks.
  - Cons: requires a lot of expert knowledge.


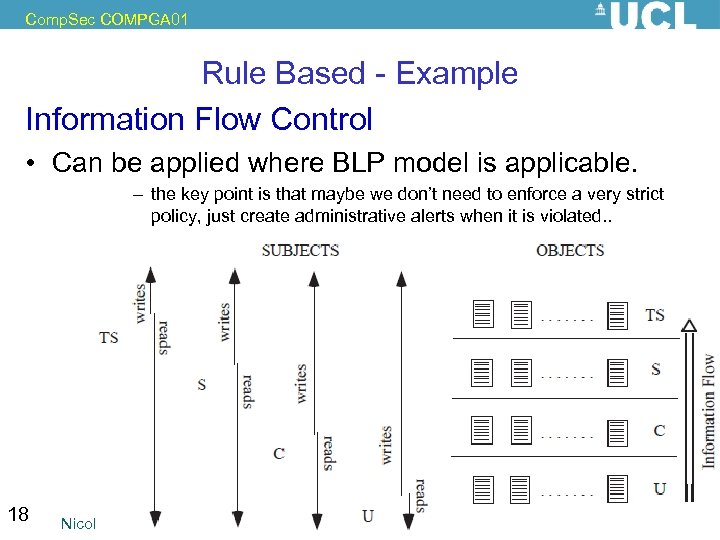

Rule-Based Example: Information Flow Control

- Can be applied wherever the BLP (Bell-LaPadula) model is applicable.
  - The key point is that we may not need to enforce a very strict policy; we can just raise administrative alerts when it is violated.

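A rule-based monitor in this spirit lets every access through but reports violations of the two BLP rules. For brevity this sketch assumes a linear ordering of four levels and ignores categories/compartments; the level names and event tuples are illustrative.

```python
from enum import IntEnum


class Level(IntEnum):
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


def blp_alerts(subject_level, object_level, operation):
    """Check one access against the two BLP rules; return alerts, never block."""
    alerts = []
    if operation == "read" and subject_level < object_level:
        alerts.append("read up: simple security property violated")
    if operation == "write" and subject_level > object_level:
        alerts.append("write down: *-property violated")
    return alerts


def monitor(events):
    """Administrative alerting: collect (subject, object, alert) for violations."""
    report = []
    for subject, s_level, obj, o_level, op in events:
        for alert in blp_alerts(s_level, o_level, op):
            report.append((subject, obj, alert))
    return report


events = [
    ("alice", Level.SECRET, "plan.doc", Level.TOP_SECRET, "read"),
    ("bob", Level.SECRET, "memo.txt", Level.UNCLASSIFIED, "write"),
    ("carol", Level.SECRET, "memo.txt", Level.CONFIDENTIAL, "read"),
]
for line in monitor(events):
    print(line)
```

Carol's read down is permitted by BLP, so only Alice's and Bob's accesses are reported.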

Types of Intrusion Detection (4)

- State transition-based: requires a sort of “security model” where:
  - each state is characterized by assertions that evaluate whether certain conditions hold in the system;
  - for example, checking whether a user has the right privileges for a given object.

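A toy version of this idea: the state records privileges and open handles, each transition updates it, and every assertion is re-evaluated after each transition. The `State` model, the single assertion and the three transition functions are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class State:
    # privileges[user] = set of objects the user may access (hypothetical model)
    privileges: dict = field(default_factory=dict)
    # currently open (user, object) pairs
    open_handles: set = field(default_factory=set)


def every_handle_is_authorised(state):
    """Assertion: each open handle is backed by a current privilege."""
    return all(obj in state.privileges.get(user, set())
               for user, obj in state.open_handles)


ASSERTIONS = [every_handle_is_authorised]


def grant(state, user, obj):
    state.privileges.setdefault(user, set()).add(obj)


def revoke(state, user, obj):
    state.privileges.get(user, set()).discard(obj)


def open_obj(state, user, obj):
    state.open_handles.add((user, obj))


def run(state, transitions):
    """Apply transitions in order; after each one, evaluate every assertion."""
    alerts = []
    for step, (action, user, obj) in enumerate(transitions):
        action(state, user, obj)
        for check in ASSERTIONS:
            if not check(state):
                alerts.append((step, action.__name__, check.__name__))
    return alerts


print(run(State(), [(grant, "alice", "ledger"), (open_obj, "alice", "ledger"),
                    (revoke, "alice", "ledger")]))
```

The revocation leaves Alice with an open handle she is no longer entitled to, so the transition into that state raises the alert.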

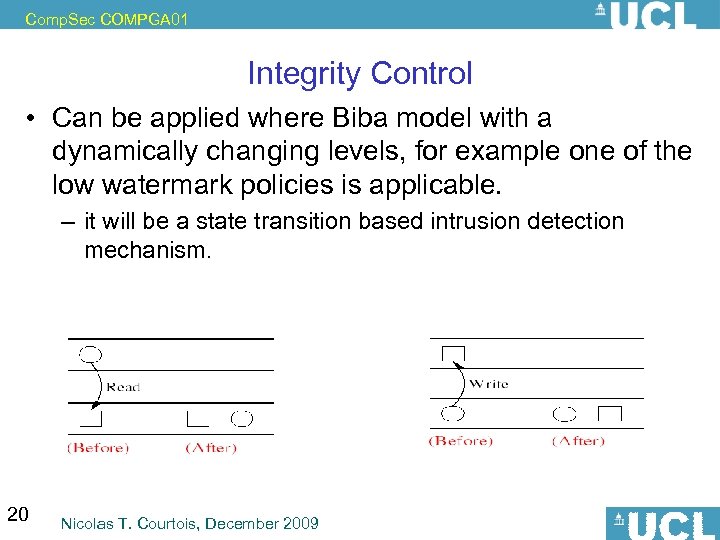

Integrity Control

- Can be applied wherever the Biba model with dynamically changing levels (for example, one of the low watermark policies) is applicable.
  - It will then be a state transition-based intrusion detection mechanism.

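A sketch of the subject low-watermark variant used as a detector: reading lowers the subject's integrity level to the minimum of its own and the object's, and a later write above the current level is reported instead of blocked. Integer levels (higher = more trusted) and the class name are assumptions.

```python
class LowWatermarkMonitor:
    """Biba subject low-watermark policy, used for alerting (a sketch)."""

    def __init__(self):
        self.level = {}      # current integrity level of each subject
        self.alerts = []     # (subject, object, subject level, object level)

    def login(self, subject, level):
        self.level[subject] = level

    def read(self, subject, obj, obj_level):
        # State transition: the subject drops to the low watermark.
        self.level[subject] = min(self.level[subject], obj_level)

    def write(self, subject, obj, obj_level):
        # Biba "no write up": record an alert rather than deny the write.
        if self.level[subject] < obj_level:
            self.alerts.append((subject, obj, self.level[subject], obj_level))


m = LowWatermarkMonitor()
m.login("updater", 2)
m.write("updater", "kernel.img", 2)   # fine: same level
m.read("updater", "email.eml", 0)     # updater is now contaminated (level 0)
m.write("updater", "kernel.img", 2)   # write up after contamination: alert
print(m.alerts)
```

Because the alert depends on the sequence of reads before the write, the check is naturally a state transition-based one.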

Information Assurance


Security vs. Safety

Security: intentional damages.


Scope for Today

Both: safety and security!


Who to Trust?

⇒ pejorative: “commercial security”: it works if you believe it.

Imagine a banker who wants to buy a product which is “secure”. How can he know it is secure? Security usually cannot be seen; only the opposite (a failure) is visible.


What Makes a Product Safe?

- Inherent to the technology:
  - trains and lifts are inherently MUCH safer than cars;
  - bad news: computers + networks are by nature a security nightmare.
- Market regulation:
  - example: making an airbag a legal obligation;
  - no such legal obligations for computers yet.
- “Nobody is in charge”.


Open Source vs. Closed Source

Open source can be much easier to attack! Will many people looking at the source code find the bugs?

- Bugs are VERY HARD to find:
  - people rarely get paid for that, so far fewer hours are spent.
- Security problems can result from a combination of:
  - code + compiler (e.g. buffer overflow),
  - code + hardware (side-channel attacks on crypto),
  - good programmers + poor security background (prevalent).


![Boeing vs. Airbus](https://present5.com/presentation/1803a26ba8b8c9aec080df4ec48d07af/image-27.jpg)

Boeing vs. Airbus

- Airbus [factories wide open to visitors]: 60 recorded crashes for 12 million flights.
- Boeing [factories closed, for security!]: 194 crashes for 35 million flights.
- Almost the same rate: about 1 crash per 0.2 million flights.
- Beware of statistical manipulation: how many crashes per mile? Per hour of flight? Deaths per passenger-hour? Etc.
- Cars vs. planes: a similar rate of about 1.5 deaths per 100 million miles traveled.

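A quick check of the “almost the same rate” claim, using only the crash and flight counts quoted on the slide (the dictionary and function names are illustrative):

```python
# Figures quoted on the slide: (recorded crashes, flights).
FLEETS = {"Airbus": (60, 12_000_000), "Boeing": (194, 35_000_000)}


def flights_per_crash(crashes, flights):
    """Average number of flights per recorded crash."""
    return flights / crashes


for name, (crashes, flights) in FLEETS.items():
    print(f"{name}: 1 crash per {flights_per_crash(crashes, flights):,.0f} flights")
```

This gives 200,000 flights per crash for Airbus and about 180,412 for Boeing: both round to roughly 1 in 0.2 million, yet Boeing's per-flight rate is about 11% higher on these figures. Per-mile or per-hour comparisons would need distance and duration data that the slide does not give, which is exactly where the choice of metric can change the story.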

**Which Model is Better?

Open and closed security are more or less equivalent, i.e. more or less equally secure:

- opening the system helps both the attackers and the defenders.

Ross Anderson: Open and Closed Systems are Equivalent (that is, in an ideal world). In Perspectives on Free and Open Source Software, MIT Press, 2005, pp. 127–142.


Airlines: What They Do

A “high assurance” industry, always obsessed with safety.

What is assurance?

1. Building confidence that systems meet the security criteria, based on the application of “assurance” techniques:
   - design methodology,
   - expert design analysis,
   - assessment/testing.
2. Estimating the likelihood that bad things will happen.


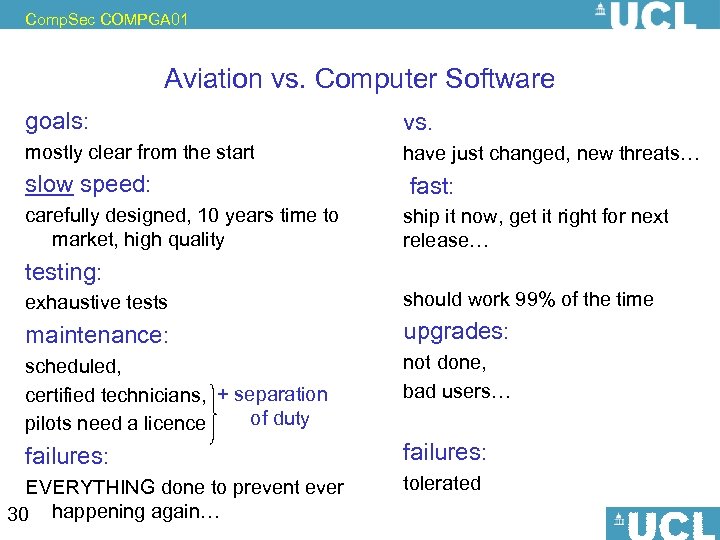

Aviation vs. Computer Software

| | Aviation | Computer Software |
|---|---|---|
| goals | mostly clear from the start | have just changed, new threats |
| speed | slow: carefully designed, 10 years time to market, high quality | fast: ship it now, get it right for the next release |
| testing | exhaustive tests | should work 99% of the time |
| maintenance, upgrades | scheduled, certified technicians + separation of duty; pilots need a licence | not done; bad users |
| failures | EVERYTHING done to prevent them from ever happening again | tolerated |


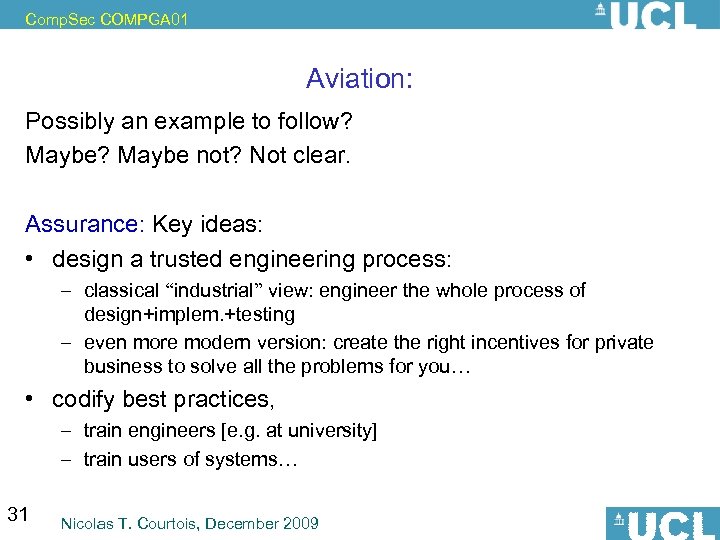

Aviation: Possibly an Example to Follow?

Maybe not? Not clear.

Assurance, key ideas:

- design a trusted engineering process:
  - classical “industrial” view: engineer the whole process of design + implementation + testing;
  - more modern version: create the right incentives for private business to solve all the problems for you.
- codify best practices:
  - train engineers (e.g. at university),
  - train users of systems.


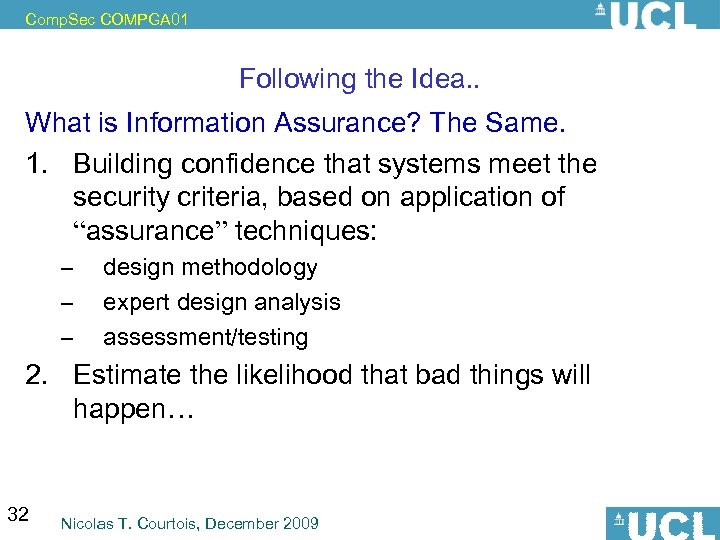

Following the Idea...

What is information assurance? The same:

1. Building confidence that systems meet the security criteria, based on the application of “assurance” techniques:
   - design methodology,
   - expert design analysis,
   - assessment/testing.
2. Estimating the likelihood that bad things will happen.


Evidence-Based Assurance

Sullivan: there should be sufficient credible evidence that the system meets the requirements.


Ross Anderson:

“Fundamentally, assurance comes down to the question of whether capable, motivated people have beat up on the system enough.

- But how do you define enough?
- And how do you define the system?
- How do you deal with people who protect the wrong thing, … out of date or plain wrong? …
- allow for human failures?”


Failures


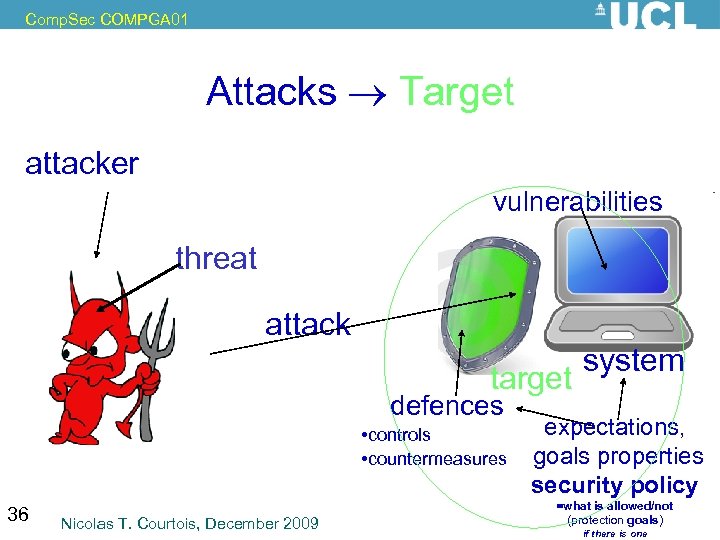

Attacks

Diagram labels: attacker; threat potential; attack scenario; vulnerabilities; attack target; defences (controls, countermeasures); system expectations, goals, properties; security policy = what is allowed/not (protection goals), if there is one.


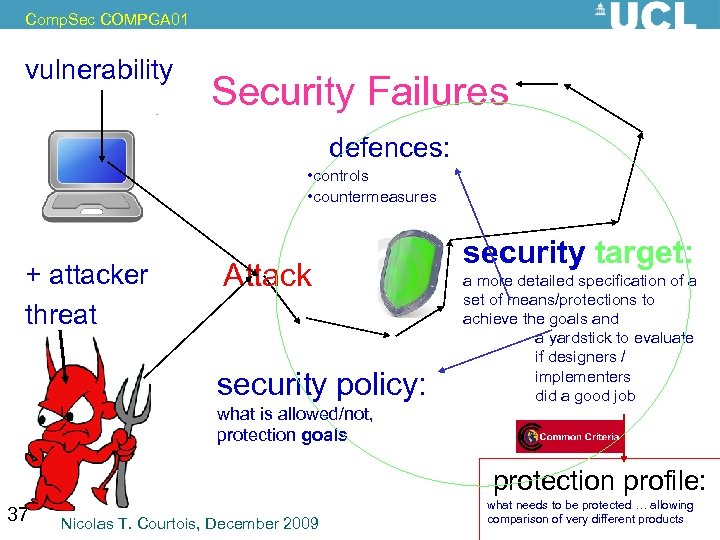

Security Failures

Diagram labels: vulnerability; defences (controls, countermeasures); attacker + threat potential; actual attack scenario.

- Security policy: what is allowed/not, protection goals.
- Security target: a more detailed specification of a set of means/protections to achieve the goals, and a yardstick to evaluate whether designers / implementers did a good job.
- Protection profile: what needs to be protected … allowing comparison of very different products; famous for omissions.


![Types of Failures](https://present5.com/presentation/1803a26ba8b8c9aec080df4ec48d07af/image-38.jpg)

Types of Failures [Sullivan]

- Failure in design (addressed by Design Assurance):
  - omissions/mistakes in the spec,
  - bad engineering / faulty design.
- Failure in implementation (addressed by Implementation Assurance):
  - hardware/software.
- Failure in operation (addressed by Operational Assurance):
  - operator errors,
  - willful misuse,
  - random failures:
    - hardware or comm/network malfunction,
    - natural causes: “Acts of God”;
  - failure in upgrade / maintenance.


Design Assurance

Requirements should determine the security policy, or the security model (= the space of possible security policies).


Policy Assurance

Evidence that the set of security requirements is:

- Complete:
  - every situation is either “safe” or “unsafe”;
  - ambitious: are we sure we need this one?
- Consistent:
  - free of (formal) contradiction. We want it!
- Technically sound:
  - the policy captures what we wanted,
  - unlike a Biba policy where all subjects are downgraded immediately: everybody is secure in name, but not in any meaningful way.

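For a small, finite state space, completeness and consistency can be checked by brute force: every state must receive at least one verdict, and no state may receive both “safe” and “unsafe”. The toy users, operations and rules below are hypothetical; realistic policies need formal methods rather than enumeration.

```python
from itertools import product

# Toy state space (hypothetical): which user performs which operation.
USERS = ("clerk", "manager")
OPERATIONS = ("read", "approve")


# Each rule maps a state to "safe", "unsafe", or None (rule does not apply).
def clerk_may_read(state):
    return "safe" if state == ("clerk", "read") else None


def clerk_may_not_approve(state):
    return "unsafe" if state == ("clerk", "approve") else None


def manager_may_do_anything(state):
    return "safe" if state[0] == "manager" else None


RULES = [clerk_may_read, clerk_may_not_approve, manager_may_do_anything]


def check_policy(rules, states):
    """Return (unclassified states, contradictory states)."""
    incomplete, inconsistent = [], []
    for s in states:
        verdicts = {rule(s) for rule in rules} - {None}
        if not verdicts:
            incomplete.append(s)       # completeness violated
        elif len(verdicts) > 1:
            inconsistent.append(s)     # consistency violated
    return incomplete, inconsistent


STATES = list(product(USERS, OPERATIONS))
print(check_policy(RULES, STATES))
```

Dropping the manager rule makes the policy incomplete, while adding a rule such as “nobody may approve” makes it inconsistent for the manager.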

Design Assurance

Show that the policy requirements will be met.


Implementation Assurance

Considerations:

- implemented correctly;
- tools and best practices used to avoid introducing extra implementation vulnerabilities (e.g. side channels, backdoors, etc.);
- testing;
- proof of correctness? Hard;
- document the product well!


Operational Assurance

- The system should sustain the security policy requirements during:
  - installation/configuration,
  - day-to-day operation,
  - upgrades/maintenance.
- Usability testing is needed too.


Following the Idea...

What is information assurance? It is also basically a method of argument:

- can I convince myself it is secure?


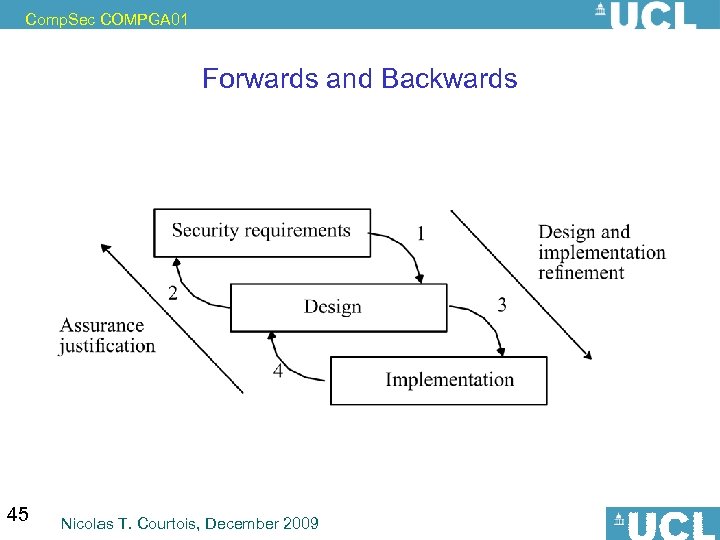

Forwards and Backwards


***Life Cycle Assurance

- Conception:
  - focus on policy and requirements.
- Building:
  - select mechanisms to enforce the policies,
  - give evidence that they are appropriate.
- Deployment:
  - provide delivery mechanisms that assure integrity,
  - initial setup and key management,
  - appropriate configuration.
- Fielded product life:
  - update and patch mechanism,
  - customer support,
  - product decommissioning and end of life.


Orange Book, CC


History

- 1983–1999: Orange Book = Trusted Computer System Evaluation Criteria = TCSEC
  - designed mostly for operating systems; emphasis on confidentiality.
- 1998–present: Common Criteria (which superseded TCSEC and the European ITSEC) = ISO 15408
  - designed for “everything”.

Both are deeply rooted in military circles: DoD, NSA, GCHQ, etc. Both give a single linear scale – right answer:


Orange Book: D, C

- Division D: Minimal Protection.
- Division C: Discretionary Protection.
  - C1: DAC, identification and authentication, protected from external tampering, …
  - C2: controls access to objects, object reuse, has auditing, more security testing.


Orange Book: B

Division B: Mandatory Protection

- B1: labeled security, MAC for named objects, informal security policy.
- B2: structured protection: consistent formal security policy/model; MAC for all objects, labeling; trusted path; least privilege; covert channel analysis, admin tools, configuration management, pen-tested.
- B3: structured design, security domains, full reference monitor, increased trusted path requirements, constrained code development, real-time monitoring, good documentation.


Orange Book: A

Division A: Verified Protection

- A1: as B3, but designed from scratch with formal methods.

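Because the Orange Book classes form a single linear scale, they can be modelled as an ordered enumeration. The `TCSEC` enum and the `meets` helper are illustrative names, not part of any official tooling.

```python
from enum import IntEnum


class TCSEC(IntEnum):
    """Orange Book classes in increasing order of assurance."""
    D = 0
    C1 = 1
    C2 = 2
    B1 = 3
    B2 = 4
    B3 = 5
    A1 = 6


def meets(evaluated, required):
    """On a linear scale, a rating meets a requirement iff it is at least as high."""
    return evaluated >= required


print(meets(TCSEC.B2, TCSEC.C2), meets(TCSEC.C2, TCSEC.B1))
```

A single `>=` comparison is all that a linear scale allows: a product is simply above or below a requirement.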

Common Criteria

- 1998: a Common Criteria Recognition Agreement:
  - US, UK, Canada, France, Germany.
- Joined in 2002 by:
  - Australia, Finland, Greece, Israel, Italy, Netherlands, New Zealand, Norway, Spain, Sweden; India, Japan, Russia, South Korea.
- Later became an international standard: ISO/IEC 15408.

http://www.commoncriteria.org/


Common Criteria

What does it do? It describes a language and a framework in which:

- the security requirements are specified,
  - goals can be claimed to be achieved,
  - and this can be evaluated;
- claims can later be shown to hold, or shown not to hold!


Common Criteria Concepts
• Target of Evaluation (TOE): the product or system that is the subject of the evaluation.
• Protection Profile (PP): a document that identifies security requirements relevant to a user community for a particular purpose.
• Security Target (ST): a document that identifies the security properties against which the TOE is evaluated.
• Evaluation Assurance Level (EAL): a numerical rating (1-7) reflecting the assurance requirements fulfilled during the evaluation.
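
One way to picture how these four concepts fit together is as a small data model. This is a sketch under assumptions: the class and field names are hypothetical, requirements are plain strings rather than CC components, and an ST's conformance claim to a PP is reduced to a set-inclusion check:

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ProtectionProfile:
    """Implementation-independent security requirements for a product category."""
    name: str
    requirements: frozenset


@dataclass
class SecurityTarget:
    """Security requirements and claims for one identified TOE."""
    toe: str  # Target of Evaluation: the product or system being evaluated
    requirements: frozenset  # security properties the TOE is evaluated against
    eal: int  # claimed Evaluation Assurance Level
    claimed_pps: list = field(default_factory=list)  # PPs the ST claims to meet

    def __post_init__(self) -> None:
        # The CC defines exactly seven assurance levels.
        if not 1 <= self.eal <= 7:
            raise ValueError(f"EAL must be between 1 and 7, got {self.eal}")

    def unmet(self, pp: ProtectionProfile) -> frozenset:
        """Requirements of the PP that this ST does not cover."""
        return pp.requirements - self.requirements

    def conforms(self) -> bool:
        """True if every claimed PP is fully covered by the ST."""
        return all(not self.unmet(pp) for pp in self.claimed_pps)
```

With a PP requiring authentication, user data protection and prevention of audit loss, an ST that omits the last one gets `conforms() == False`, and `unmet(pp)` names the gap: the PP acts as the yardstick, the ST as the claim.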

TOE
• Target of Evaluation (TOE): the product or system that is the subject of the evaluation.

PP
• Protection Profile (PP): a document that identifies security requirements relevant to a user community for a particular purpose.
  – “an implementation-independent set of security requirements for a category of products or systems that meet specific consumer needs”
  – a more detailed specification of a set of means/protections to achieve the goals
  – and a yardstick to evaluate whether designers/implementers did a good job
  – subject to review and certified

PP - Example
• Controlled Access PP == CAPP_V1.d
  – Security functional requirements
    • Authentication,
    • User Data Protection,
    • Prevent Audit Loss
  – Security assurance requirements
    • Security testing,
    • Admin guidance,
    • Life-cycle support, …
  – Assumes non-hostile and well-managed users
  – Does not consider malicious system developers
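
In the CC catalogues each requirement is a component with an identifier of the form CLASS_FAMILY.N; functional classes (CC Part 2) start with F and assurance classes (Part 3) with A, e.g. FIA (identification and authentication), FDP (user data protection), FAU (security audit), ATE (tests), AGD (guidance), ALC (life-cycle support). The sketch below sorts identifiers into the two kinds; the sample identifiers in the test are illustrative picks matching the headings above, not a quotation of CAPP_V1.d, and `parse`/`split_requirements` are hypothetical helpers:

```python
import re
from typing import NamedTuple

# CC component identifiers look like CLASS_FAMILY.N, e.g. FAU_STG.4.
# Functional classes start with "F", assurance classes with "A".
COMPONENT_RE = re.compile(r"^([FA][A-Z]{2})_([A-Z]{3})\.(\d+)$")


class Component(NamedTuple):
    cc_class: str
    family: str
    number: int

    @property
    def kind(self) -> str:
        return "functional" if self.cc_class.startswith("F") else "assurance"


def parse(ident: str) -> Component:
    """Split an identifier such as 'FIA_UAU.2' into class, family and number."""
    m = COMPONENT_RE.match(ident)
    if m is None:
        raise ValueError(f"not a CC component identifier: {ident!r}")
    return Component(m.group(1), m.group(2), int(m.group(3)))


def split_requirements(idents: list[str]) -> dict[str, list[str]]:
    """Group identifiers into functional and assurance requirements."""
    groups: dict[str, list[str]] = {"functional": [], "assurance": []}
    for ident in idents:
        groups[parse(ident).kind].append(ident)
    return groups
```

For instance, FAU_STG.4 (prevention of audit data loss) lands among the functional requirements, while ATE_IND.2 (independent testing) lands among the assurance requirements.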

ST
• Security Target (ST): a document that identifies the security properties against which the TOE is evaluated.
  – “a set of security requirements and specifications to be used for evaluation of an identified product or system”
  – describes specific security functions and mechanisms
  – allows comparison of very different products

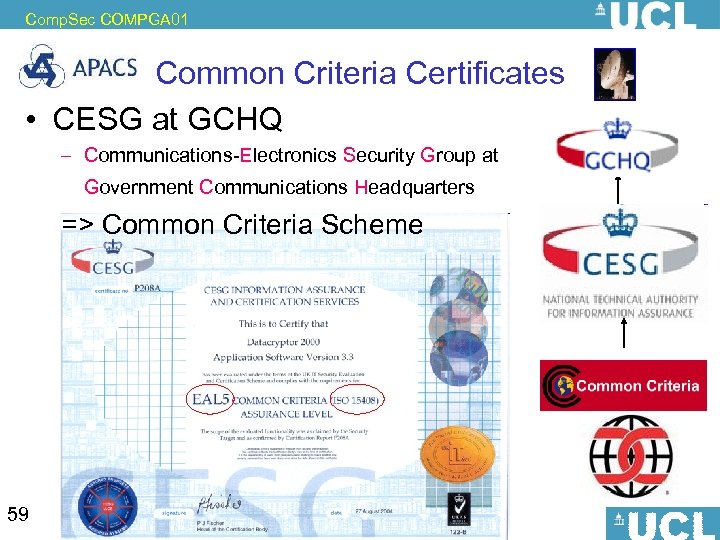

Common Criteria Certificates
• CESG at GCHQ
  – Communications-Electronics Security Group at Government Communications Headquarters
  => runs the UK Common Criteria Scheme

EAL = Evaluation Assurance Level
Commercial:
• EAL1: Functionally Tested
  – no need to disclose the design/sources to government agencies…
• EAL2: Structurally Tested
  – 6 months, 150 K$
• EAL3: Methodically Tested and Checked
• EAL4: Methodically Designed, Tested, and Reviewed
  – EAL4+: flaw remediation, better crypto, etc…
  – 24 months, 150 K$ - 2.5 M$ per product
  – Microsoft Windows 2000 was certified EAL4+ for an undisclosed amount
Military:
• EAL5: Semi-formally Designed and Tested
• EAL6: Semi-formally Verified Design and Tested
• EAL7: Formally Verified Design and Tested
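
“EAL4+” denotes EAL4 augmented with assurance components beyond the standard package, such as the flaw-remediation family ALC_FLR. A minimal sketch of such a claim, using the level names listed above; the `AssuranceClaim` class is hypothetical and ALC_FLR.3 is only an example augmentation:

```python
from dataclasses import dataclass

# Level names as listed on the EAL slide.
EAL_NAMES = {
    1: "Functionally Tested",
    2: "Structurally Tested",
    3: "Methodically Tested and Checked",
    4: "Methodically Designed, Tested, and Reviewed",
    5: "Semi-formally Designed and Tested",
    6: "Semi-formally Verified Design and Tested",
    7: "Formally Verified Design and Tested",
}


@dataclass(frozen=True)
class AssuranceClaim:
    """An EAL, optionally augmented with extra assurance components."""
    level: int
    augmentations: frozenset = frozenset()  # e.g. frozenset({"ALC_FLR.3"})

    def __post_init__(self) -> None:
        if self.level not in EAL_NAMES:
            raise ValueError(f"EAL must be between 1 and 7, got {self.level}")

    def __str__(self) -> str:
        # A "+" marks a package augmented beyond the standard EAL.
        return f"EAL{self.level}{'+' if self.augmentations else ''}"

    def describe(self) -> str:
        base = f"{self} ({EAL_NAMES[self.level]})"
        if not self.augmentations:
            return base
        return f"{base} augmented with {', '.join(sorted(self.augmentations))}"
```

Note that the “+” alone says nothing about *which* components were added; the certificate and the ST must be read to know what the augmentation actually covers.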

Microsoft and EAL4+
Schneier on Security: “Microsoft Windows Receives EAL4+ Certification”
“Microsoft announced that all the products earned the EAL4+ (Evaluation Assurance Level), which is the highest level granted to a commercial product. The products receiving CC certification include Windows XP Professional with Service Pack 2 and Windows XP Embedded with Service Pack 2. Four different versions of Windows Server 2003 also received certification.”
Is this true?
“. . . director of security engineering strategy at Microsoft Steve Lipner said the certifications are a significant proof point of Redmond's commitment to creating secure software.”
Bad publicity for EAL4+… Target of evaluation: switch the network off.

CC Recognition
Only EAL1-4 are mutually recognized by other countries under the CCRA… Higher levels are national evaluations; EAL6-7 in particular are closed military and national stuff…

CC Criticism
• the people who do these evaluations are more or less the national military or intelligence agencies (GCHQ, NSA), but also US NIST,
  – all willing to help defend the national industry…
  – but also with conflicting interests (signals intelligence interception etc.)
• top-down culture, not listening to the industry
• slow: the product is often obsolete by the time it has gone through evaluation…
• bureaucratic process, mostly about writing things in a certain way…
  – used to be a major source of jobs for cryptologists…
• there is no evidence that EAL made products more secure in practice.
  – Ross Anderson suggests that some governments were permissive/easy on business, or maybe cynical: they certified the products they could break…
• evaluation depends on the PP, which is often too narrow,
  – chosen by the vendor in his favor,
  – whereas it should be the one required by the customer!

Good Points
Some countries such as France (DCSSI) have developed extra evaluations and require stronger mechanisms, especially in cryptography.
  – products can be evaluated as “high level” (niveau élevé).
  – It is recognized that CC contributed a lot to the development of MUCH stronger cryptography in the industry of countries such as France, Germany, UK etc…

CC: Decline?
– today, since 2000 or so…
• the profitability of security industries suffered,
• fewer ‘legal’ reasons to ever evaluate products…
• time to market became very important,
• CC got some bad publicity
• since 2000, CC evaluations have been less widely used (banks still continue: e.g. bank cards and terminals…)
New U.S. DoD strategy: COTS = Commercial Off The Shelf,
  » can add custom configuration / setup
