- Number of slides: 106

Auctions

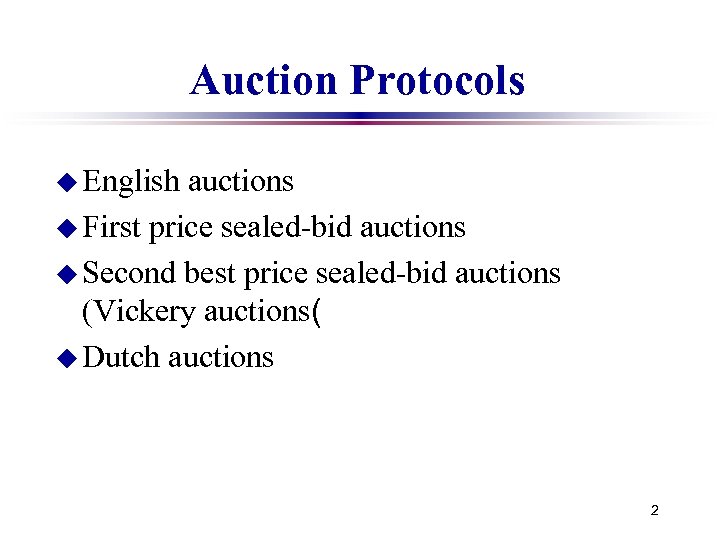

Auction Protocols
- English auctions
- First-price sealed-bid auctions
- Second-price sealed-bid auctions (Vickrey auctions)
- Dutch auctions
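
A minimal Python sketch of how each of the four protocols selects a winner and a price. It assumes each bidder has a known private value, that sealed bids equal those values, and that the clock auctions move in fixed steps (`step` and `start` are hypothetical parameters). In practice, first-price and Dutch bidders would shade their bids below their values, and this sketch ignores that.

```python
from typing import Dict, Optional, Tuple

# Hypothetical private values: bidder name -> the most it is willing to pay.
Values = Dict[str, float]


def first_price_sealed(bids: Values) -> Tuple[str, float]:
    """Highest sealed bid wins and pays its own bid."""
    winner = max(bids, key=bids.get)
    return winner, bids[winner]


def vickrey(bids: Values) -> Tuple[str, float]:
    """Highest sealed bid wins but pays the second-highest bid (needs >= 2 bids)."""
    ranked = sorted(bids, key=bids.get, reverse=True)
    return ranked[0], bids[ranked[1]]


def english(values: Values, step: float = 1.0) -> Tuple[str, float]:
    """Ascending clock: raise the price while at least two bidders would pay the next step."""
    price = 0.0
    while sum(1 for v in values.values() if v >= price + step) >= 2:
        price += step
    return max(values, key=values.get), price


def dutch(values: Values, start: float, step: float = 1.0) -> Optional[Tuple[str, float]]:
    """Descending clock: the first bidder whose value reaches the price accepts it."""
    price = start
    while price >= 0:
        takers = [b for b, v in values.items() if v >= price]
        if takers:
            return max(takers, key=values.get), price
        price -= step
    return None  # nobody accepted before the price fell below zero
```

With values a = 10, b = 7, c = 3, the English and Vickrey auctions both end at 7.0 (the second-highest value). First-price and Dutch both end at 10.0, because the sketch assumes no bid shading.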

The Contract Net: R. G. Smith and R. Davis

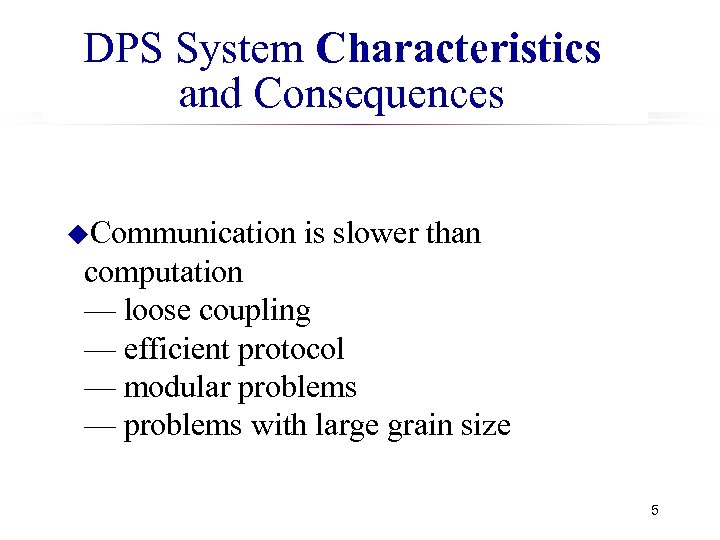

DPS System Characteristics and Consequences
- Communication is slower than computation:
  - loose coupling
  - efficient protocol
  - modular problems
  - problems with large grain size

More DPS System Characteristics and Consequences
- Any unique node is a potential bottleneck:
  - distribute data
  - distribute control
  - organized behavior is hard to guarantee (since no one node has the complete picture)

The Contract Net
- An approach to distributed problem solving, focusing on task distribution
- Task distribution viewed as a kind of contract negotiation
- "Protocol" specifies the content of communication, not just its form
- Two-way transfer of information is a natural extension of transfer-of-control mechanisms

Four Phases to Solution, as Seen in Contract Net
1. Problem decomposition
2. Sub-problem distribution
3. Sub-problem solution
4. Answer synthesis

The contract net protocol deals with phase 2.

Contract Net
- The collection of nodes is the "contract net".
- Each node on the network can, at different times or for different tasks, be a manager or a contractor.
- When a node gets a composite task (or for any reason can't solve its present task), it breaks it into subtasks (if possible) and announces them (acting as a manager), receives bids from potential contractors, then awards the job (example domain: network resource management, printers, …).

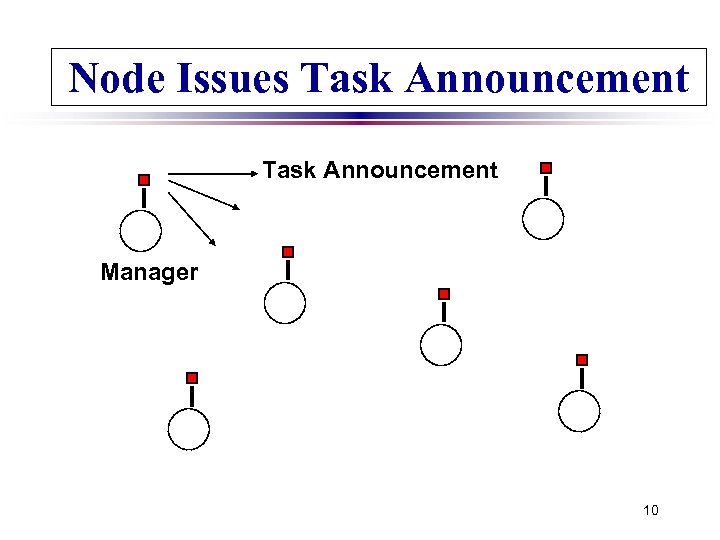

Node Issues Task Announcement (diagram: a manager broadcasting)

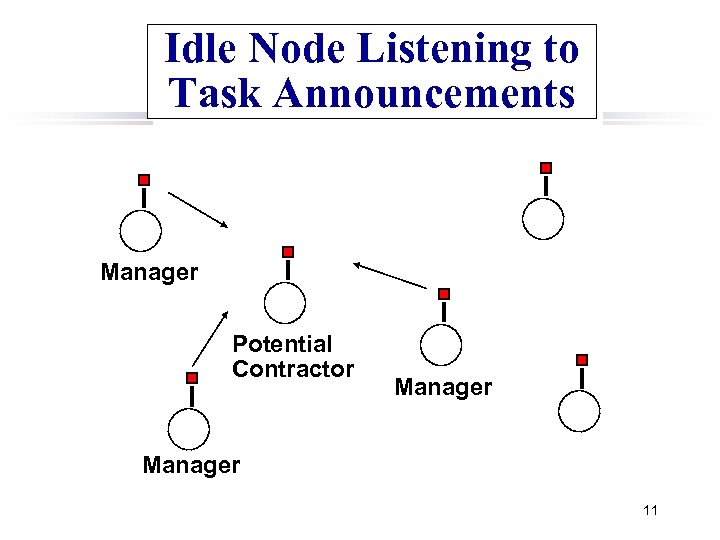

Idle Node Listening to Task Announcements (diagram: two managers and a potential contractor)

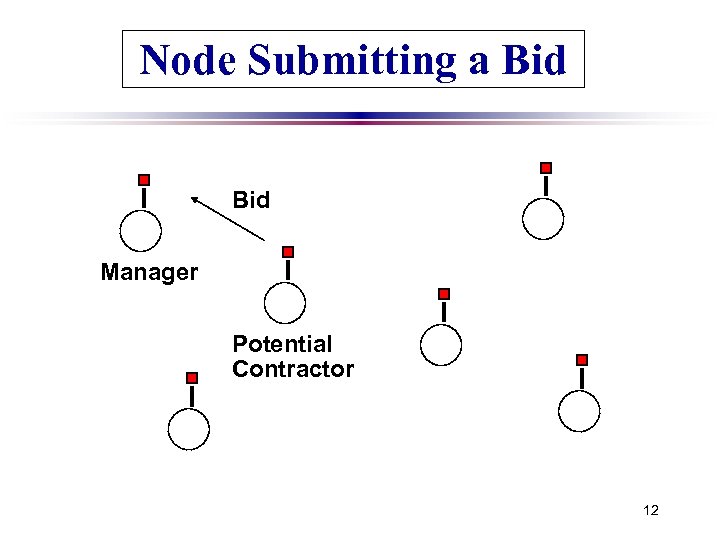

Node Submitting a Bid (diagram: manager and potential contractor)

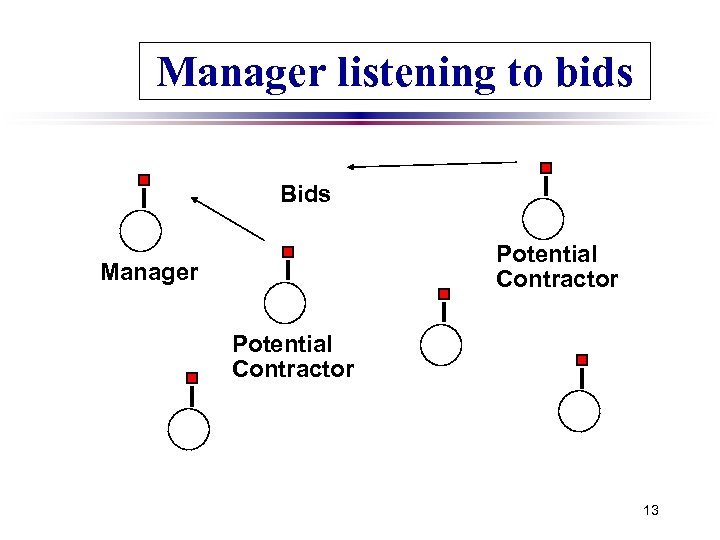

Manager Listening to Bids (diagram: bids from two potential contractors to the manager)

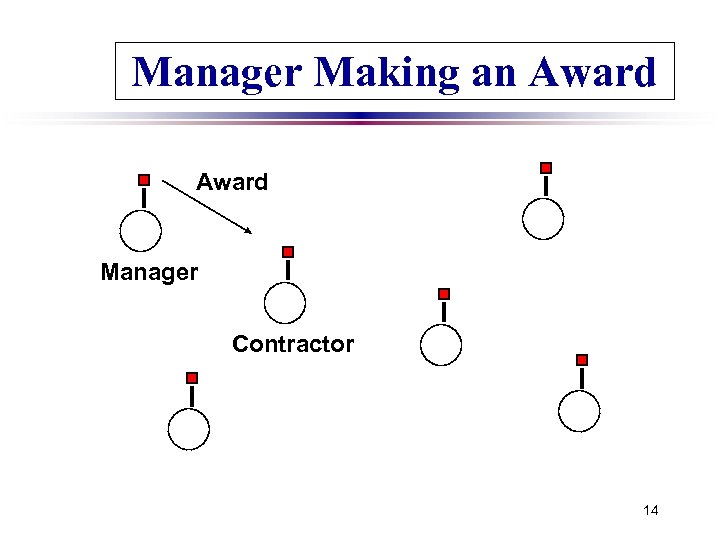

Manager Making an Award (diagram: manager and contractor)

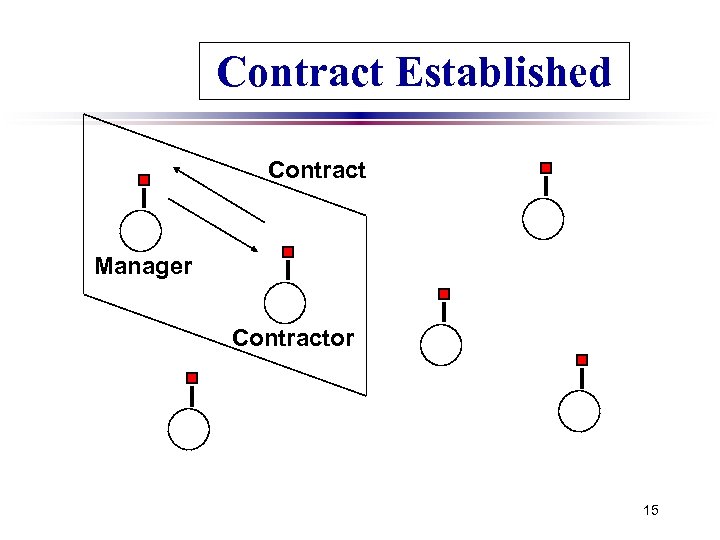

Contract Established (diagram: a contract linking manager and contractor)
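
The announce, bid, award sequence in the diagrams above can be sketched as a single round in Python. This is a minimal sketch under stated assumptions. The `Node` class, its `queued_tasks` load measure, and the bid formula (estimated completion time) are all hypothetical stand-ins for the domain-specific evaluation procedures. The manager here awards the contract to the earliest completion time.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Node:
    name: str
    capabilities: Set[str] = field(default_factory=set)
    queued_tasks: int = 0  # hypothetical load measure

    def evaluate_task(self, must_have: str) -> Optional[float]:
        """Hypothetical domain-specific task evaluation: bid an estimated completion time."""
        if must_have not in self.capabilities:
            return None  # not eligible, so no bid
        return 10.0 + 5.0 * self.queued_tasks


def contract_net_round(manager: str, must_have: str, nodes: List[Node]) -> Optional[str]:
    """One announce-bid-award cycle; returns the contractor's name or None."""
    bids: Dict[str, float] = {}
    # Task announcement: broadcast to every other node.
    for node in nodes:
        if node.name == manager:
            continue
        bid = node.evaluate_task(must_have)  # potential contractor decides whether to bid
        if bid is not None:
            bids[node.name] = bid
    if not bids:
        return None  # no suitable contractor at present
    # Hypothetical bid evaluation: the earliest completion time wins the award.
    return min(bids, key=bids.get)
```

For example, if node A has two queued tasks and node B has none, B wins node 25's FFTBOX task. Node C, which lacks an FFTBOX, never bids.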

Domain-Specific Evaluation
- A task announcement message prompts potential contractors to use domain-specific task evaluation procedures; there is deliberation going on, not just selection (perhaps no tasks are suitable at present).
- The manager considers submitted bids using a domain-specific bid evaluation procedure.

Types of Messages
- Task announcement
- Bid
- Award
- Interim report (on progress)
- Final report (including result description)
- Termination message (if the manager wants to terminate the contract)
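
These message types can be read as transitions in the lifecycle of one contract. The Python sketch below makes that concrete under our own assumptions, which the slide does not state: bids arrive only after an announcement, interim reports arrive only after an award, and either a final report or a termination message ends the contract. The `State` names are hypothetical.

```python
from enum import Enum, auto
from typing import Iterable


class Msg(Enum):
    TASK_ANNOUNCEMENT = auto()
    BID = auto()
    AWARD = auto()
    INTERIM_REPORT = auto()
    FINAL_REPORT = auto()
    TERMINATION = auto()


class State(Enum):  # hypothetical lifecycle states
    IDLE = auto()
    ANNOUNCED = auto()
    AWARDED = auto()
    COMPLETED = auto()
    TERMINATED = auto()


# Allowed (state, message) -> next state; anything else is a protocol error.
TRANSITIONS = {
    (State.IDLE, Msg.TASK_ANNOUNCEMENT): State.ANNOUNCED,
    (State.ANNOUNCED, Msg.BID): State.ANNOUNCED,
    (State.ANNOUNCED, Msg.AWARD): State.AWARDED,
    (State.AWARDED, Msg.INTERIM_REPORT): State.AWARDED,
    (State.AWARDED, Msg.FINAL_REPORT): State.COMPLETED,
    (State.AWARDED, Msg.TERMINATION): State.TERMINATED,
}


def run(messages: Iterable[Msg]) -> State:
    """Replay a message sequence for one contract and return its final state."""
    state = State.IDLE
    for m in messages:
        if (state, m) not in TRANSITIONS:
            raise ValueError(f"{m.name} not allowed in state {state.name}")
        state = TRANSITIONS[(state, m)]
    return state
```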

Efficiency Modifications
- Focused addressing: when a general broadcast isn't required
- Directed contracts: when the manager already knows which node is appropriate
- Request-response mechanism: for simple transfer of information without the overhead of contracting
- Node-available message: reverses the initiative of the negotiation process

Message Format
- Task announcement slots:
  - Eligibility specification
  - Task abstraction
  - Bid specification
  - Expiration time

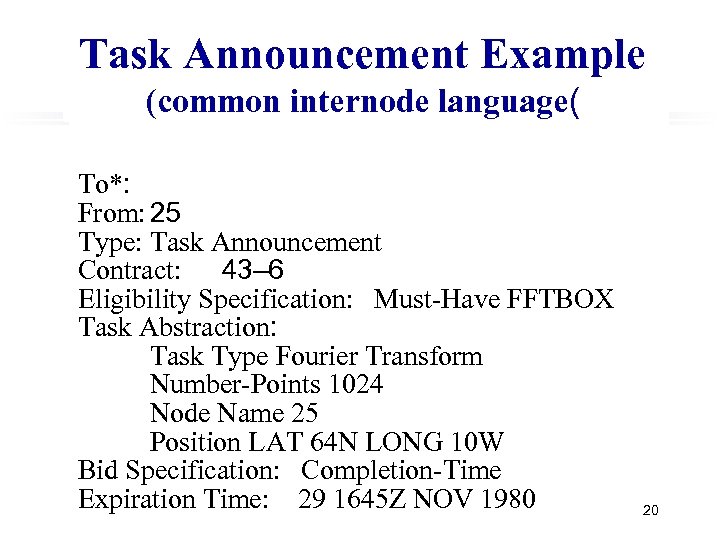

Task Announcement Example (common internode language)
- To: *
- From: 25
- Type: Task Announcement
- Contract: 43-6
- Eligibility Specification: Must-Have FFTBOX
- Task Abstraction:
  - Task Type: Fourier Transform
  - Number-Points: 1024
  - Node Name: 25
  - Position: LAT 64N LONG 10W
- Bid Specification: Completion-Time
- Expiration Time: 29 1645Z NOV 1980
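
The four announcement slots map naturally onto a record type. The Python sketch below encodes the example announcement and adds two checks that a receiving node might plausibly run. Both checks are our own illustration, not a format defined on the slides: eligibility means meeting every Must-Have requirement, and a complete bid fills in every field named in the bid specification. The class, field, and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class TaskAnnouncement:
    to: str                       # "*" means broadcast
    sender: str
    contract: str
    eligibility: List[str]        # Must-Have requirements
    task_abstraction: Dict[str, object]
    bid_specification: List[str]  # fields every bid must supply
    expiration: str


def is_eligible(ann: TaskAnnouncement, capabilities: Set[str]) -> bool:
    """Hypothetical check: a node may bid only if it meets every Must-Have requirement."""
    return all(req in capabilities for req in ann.eligibility)


def bid_is_complete(ann: TaskAnnouncement, bid: Dict[str, object]) -> bool:
    """Hypothetical check: a bid must fill in every field named in the bid specification."""
    return all(f in bid for f in ann.bid_specification)


announcement = TaskAnnouncement(
    to="*",
    sender="25",
    contract="43-6",
    eligibility=["FFTBOX"],
    task_abstraction={
        "Task Type": "Fourier Transform",
        "Number-Points": 1024,
        "Node Name": "25",
        "Position": "LAT 64N LONG 10W",
    },
    bid_specification=["Completion-Time"],
    expiration="29 1645Z NOV 1980",
)
```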

The existence of a common internode language allows new nodes to be added to the system modularly, without the need for explicit linking to others in the network (e.g., as needed in standard procedure calling).

Applications of the Contract Net
- Sensing
- Task allocation (Malone)
- Delivery companies (Sandholm)
- Market-oriented programming (Wellman)

Bidding Mechanisms for Data Allocation

A user sends its query directly to the server where the needed document is stored.

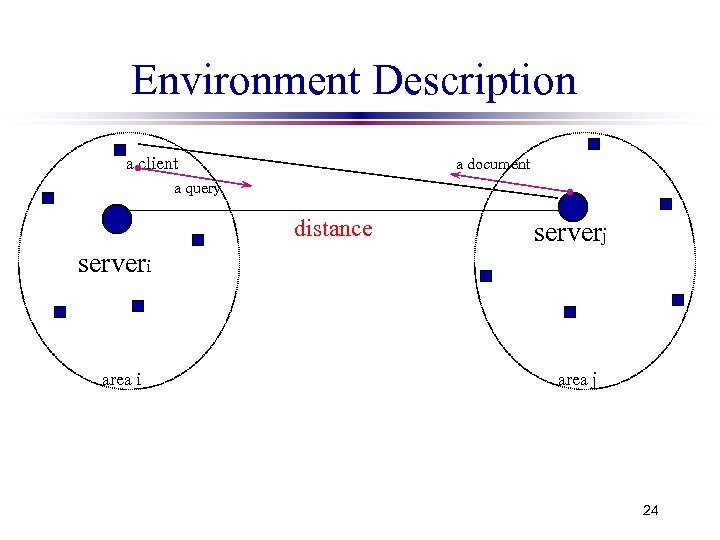

Environment Description (diagram: a client, a document, a query, server i, server j, area j, and the distance between servers)

Utility Function
- Each server is concerned only with whether a dataset is stored locally or remotely; it is indifferent among different remote locations of the dataset.

The Trading Mechanism
- Bidding sessions are carried out during predefined time periods.
- In each bidding session, the location of the new datasets is determined, and the location of each old dataset can be changed.
- Until a decision is reached, the new datasets are stored in a temporary buffer.

The Trading Mechanism - cont.
- Each dataset has an initial owner, called contractor(ds), according to the static allocation:
  - For an old dataset: the server that stores it.
  - For a new dataset: the server with the nearest topics (defined according to the topics of the datasets stored by this server).

The Bidding Steps
1. Each server broadcasts an announcement for each new dataset it owns, and also for some of its old local datasets.
2. For each such announcement, each server sends the price it is willing to pay in order to store the dataset locally.
3. The winner of each dataset is determined by its contractor, which broadcasts a message including the winner, the price it has to pay, and the server that bid this price.

Cost of Reallocating Old Datasets
- move_cost(ds, bidder): the cost for contractor(ds) of moving ds from its current location to bidder (for new datasets, move_cost = 0).
- obtain_cost(ds, bidder): the cost for bidder of moving ds from its current location to bidder.

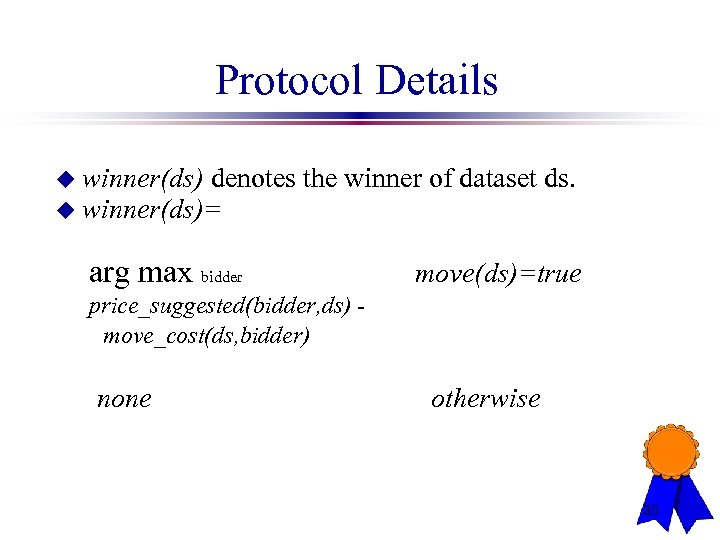

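Protocol Details
- winner(ds) denotes the winner of dataset ds:

$$
\text{winner}(ds) =
\begin{cases}
\arg\max_{bidder \in SERVERS} \{\text{price\_suggested}(bidder, ds) - \text{move\_cost}(ds, bidder)\} & \text{if } \text{move}(ds) = \text{true} \\
\text{none} & \text{otherwise}
\end{cases}
$$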

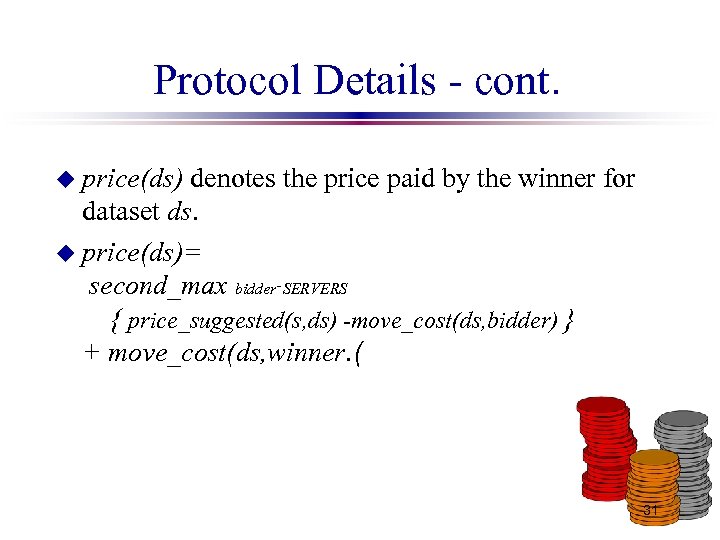

Protocol Details - cont.
- price(ds) denotes the price paid by the winner for dataset ds:

$$
\text{price}(ds) = \text{second\_max}_{bidder \in SERVERS} \{\text{price\_suggested}(bidder, ds) - \text{move\_cost}(ds, bidder)\} + \text{move\_cost}(ds, \text{winner}(ds))
$$
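
The two definitions above amount to a second-price rule applied to each bid's net value to the contractor, that is, the offered price minus the cost of moving the dataset there. A minimal Python sketch, assuming at least two bidders and plain dictionaries keyed by server name (the function name is hypothetical):

```python
from typing import Dict, Optional, Tuple


def winner_and_price(
    price_suggested: Dict[str, float],  # bidder -> price_suggested(bidder, ds)
    move_cost: Dict[str, float],        # bidder -> move_cost(ds, bidder), paid by contractor(ds)
    move: bool,                         # move(ds)
) -> Tuple[Optional[str], Optional[float]]:
    """Compute winner(ds) and price(ds); assumes at least two bidders."""
    if not move:
        return None, None  # winner(ds) = none: the dataset stays where it is
    # Net value of each bid to the contractor.
    net = {b: price_suggested[b] - move_cost[b] for b in price_suggested}
    ranked = sorted(net, key=net.get, reverse=True)
    winner = ranked[0]           # arg max of the net values
    second_max = net[ranked[1]]  # second-highest net value
    return winner, second_max + move_cost[winner]
```

With offers 50, 40, 30 from s1, s2, s3 and moving costs 20, 5, 0, the net values are 30, 35, 30. Server s2 therefore wins and pays 30 + 5 = 35.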

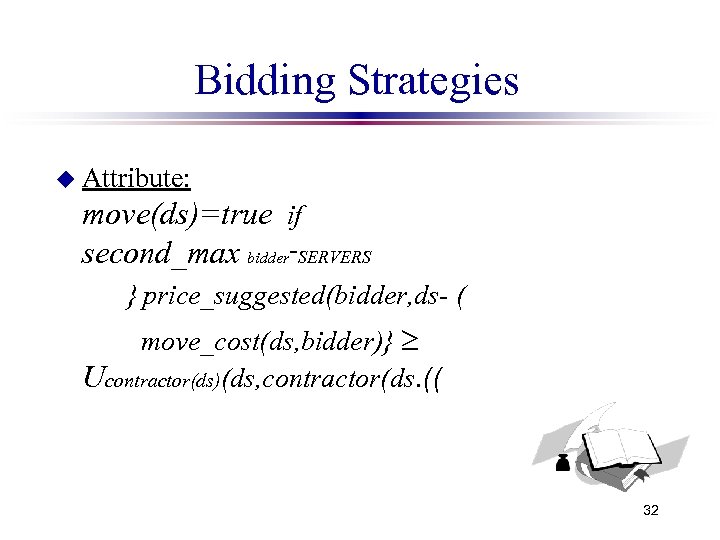

Bidding Strategies
- Attribute: move(ds) = true if

$$
\text{second\_max}_{bidder \in SERVERS} \{\text{price\_suggested}(bidder, ds) - \text{move\_cost}(ds, bidder)\} \ge U_{\text{contractor}(ds)}(ds, \text{contractor}(ds))
$$
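
Why is the threshold the second-highest net value? By the pricing rule, the contractor receives price(ds) = second_max + move_cost(ds, winner) and pays move_cost(ds, winner) to move the dataset, so its net revenue is exactly second_max. The rule therefore moves ds only when that revenue covers the contractor's utility from keeping it. A minimal Python sketch (the function name is hypothetical; it assumes at least two bidders):

```python
from typing import Dict


def should_move(
    price_suggested: Dict[str, float],
    move_cost: Dict[str, float],
    contractor_utility: float,  # U_contractor(ds)(ds, contractor(ds)): value of keeping ds
) -> bool:
    """move(ds): reallocate only if the second-highest net bid covers the value of keeping ds."""
    net = sorted((price_suggested[b] - move_cost[b] for b in price_suggested), reverse=True)
    return net[1] >= contractor_utility
```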

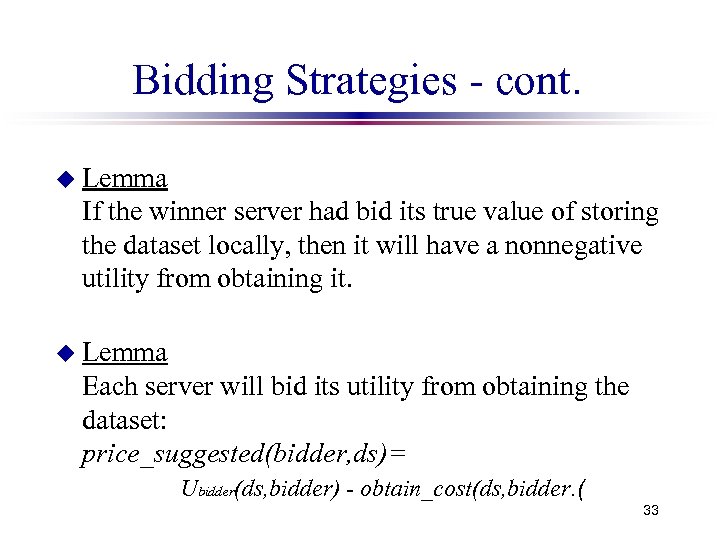

Bidding Strategies - cont.
- Lemma: If the winning server bids its true value of storing the dataset locally, then it will have a nonnegative utility from obtaining it.
- Lemma: Each server will bid its utility from obtaining the dataset:

$$
\text{price\_suggested}(bidder, ds) = U_{bidder}(ds, bidder) - \text{obtain\_cost}(ds, bidder)
$$
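
The first lemma follows from the pricing rule. With truthful bids, the winner's utility equals its own net value minus the second-highest net value, and that difference cannot be negative because the winner has the highest net value. The Python sketch below runs the rule on truthful bids and checks the lemma on random integer instances. Integer values keep the arithmetic exact. All names and value ranges are hypothetical.

```python
import random
from typing import Dict, Tuple


def truthful_bid(u_local: float, obtain_cost: float) -> float:
    """price_suggested(bidder, ds) = U_bidder(ds, bidder) - obtain_cost(ds, bidder)."""
    return u_local - obtain_cost


def winner_utility(
    u_local: Dict[str, float], obtain_cost: Dict[str, float], move_cost: Dict[str, float]
) -> Tuple[str, float]:
    """Apply the pricing rule to truthful bids; return the winner and its net utility."""
    bids = {b: truthful_bid(u_local[b], obtain_cost[b]) for b in u_local}
    net = {b: bids[b] - move_cost[b] for b in bids}
    ranked = sorted(net, key=net.get, reverse=True)
    winner = ranked[0]
    price = net[ranked[1]] + move_cost[winner]
    # Utility from obtaining ds: true value minus obtain_cost minus the price paid.
    return winner, bids[winner] - price


def random_check(trials: int = 1000, seed: int = 0) -> bool:
    """Empirically check the first lemma on random integer instances."""
    rng = random.Random(seed)
    for _ in range(trials):
        servers = [f"s{i}" for i in range(rng.randint(2, 6))]
        u = {s: rng.randint(0, 100) for s in servers}
        oc = {s: rng.randint(0, 30) for s in servers}
        mc = {s: rng.randint(0, 30) for s in servers}
        _, utility = winner_utility(u, oc, mc)
        if utility < 0:
            return False
    return True
```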

Bidding Strategies - cont.
- Theorem: If announcing and bidding are free, then the allocation reached by the bidding protocol gives each server a utility better than or equal to that of the static policy. The utility function is evaluated according to the expected profits of the server from the allocation.

Usage Estimation
- Each server knows only the usage of datasets stored locally.
- For new datasets and remote datasets, the server has no information about past usage.
- It estimates the future usage of new and remote datasets using the past usage of local datasets that contain similar topics.

Query Structure
- We assume that a query sent to a server contains a list of required documents.
- This is the situation if the search mechanism for finding the required documents is installed locally by the client.
- In this situation, the server has to learn, from the queries about its local documents, the expected usage of other documents, in order to decide whether it needs them or not.

Usage Prediction
- We assume that a dataset contains several keywords ($k_1, \ldots, k_n$).
- For each local dataset ds and each server d, the server saves the past usage of ds by d in the last period.
- Then it has to predict the future usage of ds by d; it assumes the same behavior as in the past.

Usage Prediction - cont.
- It is assumed that users are interested in keywords, so the usage of a dataset is a function of the keywords it contains.
- The simplest model: a dataset's usage is the sum of the usages of its keywords. However, the relationship between the keywords and the dataset may be different.
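
The simplest (additive) model is a one-line computation. A minimal Python sketch, assuming per-keyword usages are already known and that keywords with no recorded usage contribute 0. Both the function name and the zero default are our own assumptions.

```python
from typing import Dict, Iterable


def additive_usage(keyword_usage: Dict[str, float], keywords: Iterable[str]) -> float:
    """Simplest model: a dataset's usage is the sum of its keywords' usages.

    Keywords with no recorded usage contribute 0 (an assumption of this sketch);
    duplicate keywords are counted once.
    """
    return sum(keyword_usage.get(k, 0.0) for k in set(keywords))
```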

Usage Prediction - cont.
- The server has to learn about the usage of datasets not stored locally.
- We suggest that it build a neural network for learning the usage template of each area.

What Is a Neural Network
- A neural network is composed of a number of nodes, or units, connected by links.
- Each link has a numeric weight associated with it.
- The weights are modified so as to bring the network's input/output behavior more into line with that of the environment providing the input.

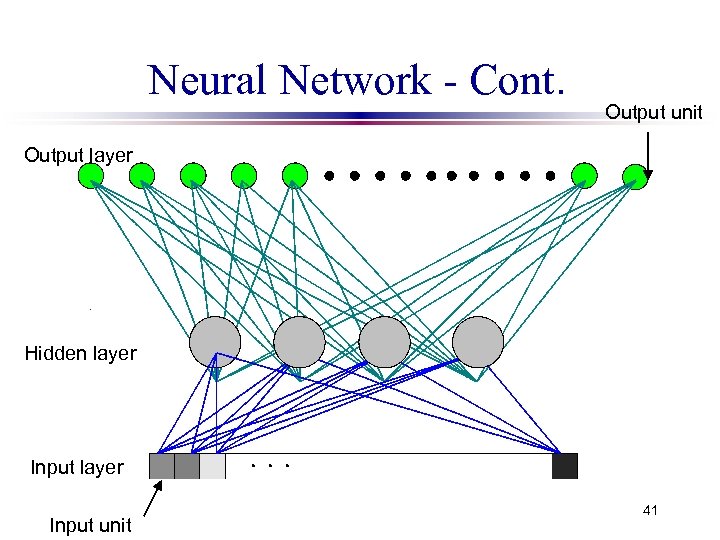

Neural Network - Cont. (diagram: input units, a hidden layer, and an output layer with an output unit)

Structure of the Neural Network
- For each area d, we build a neural network.
- Each dataset stored by the server in area d is one example for the neural network of d.
- The inputs of each example indicate, for each possible keyword, whether it exists in this dataset or not.

Structure of the Neural Network - cont.
- The output unit of the neural network for area d is the past usage of this dataset by d.
- To find the expected usage of another dataset, ds2, by d, we provide the network with the keywords of ds2.
- The output of the network is the predicted usage of ds2 by area d.

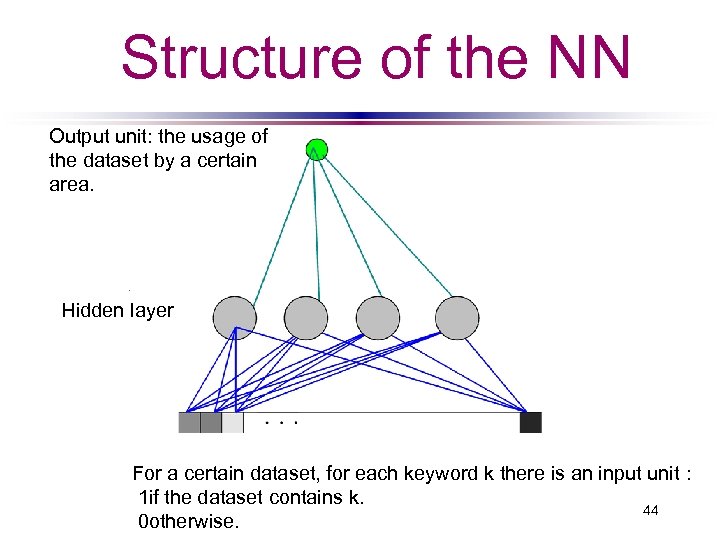

Structure of the NN
- Output unit: the usage of the dataset by a certain area.
- Hidden layer.
- Input units: for a certain dataset, for each keyword k there is an input unit that is 1 if the dataset contains k and 0 otherwise.
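
A minimal Python sketch of one area's network as described above: one 0/1 input unit per keyword, a hidden layer, and a single output unit for usage. The slides do not specify the architecture details or the training method. The hidden-layer size, sigmoid hidden units, linear output, learning rate, epoch count, and plain stochastic gradient descent (backpropagation on squared error) are all our assumptions, as are the class and function names.

```python
import math
import random
from typing import List, Sequence, Tuple


def encode(dataset_keywords: Sequence[str], vocabulary: Sequence[str]) -> List[int]:
    """One input unit per keyword: 1 if the dataset contains it, 0 otherwise."""
    present = set(dataset_keywords)
    return [1 if k in present else 0 for k in vocabulary]


class AreaUsageNet:
    """Hypothetical one-hidden-layer network predicting a dataset's usage by one area d."""

    def __init__(self, n_inputs: int, n_hidden: int = 4, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _hidden(self, x: Sequence[int]) -> List[float]:
        # Sigmoid hidden units.
        return [1.0 / (1.0 + math.exp(-(sum(w * xi for w, xi in zip(row, x)) + b)))
                for row, b in zip(self.w1, self.b1)]

    def predict(self, x: Sequence[int]) -> float:
        # Linear output unit: the predicted usage.
        h = self._hidden(x)
        return sum(w * hj for w, hj in zip(self.w2, h)) + self.b2

    def train(self, examples: List[Tuple[List[int], float]],
              epochs: int = 2000, lr: float = 0.05) -> None:
        """Stochastic gradient descent on squared error (backpropagation)."""
        for _ in range(epochs):
            for x, y in examples:
                h = self._hidden(x)
                err = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2 - y
                # Hidden gradients use the output weights before they are updated.
                grad_h = [err * w * hj * (1.0 - hj) for w, hj in zip(self.w2, h)]
                self.w2 = [w - lr * err * hj for w, hj in zip(self.w2, h)]
                self.b2 -= lr * err
                for j, g in enumerate(grad_h):
                    self.w1[j] = [w - lr * g * xi for w, xi in zip(self.w1[j], x)]
                    self.b1[j] -= lr * g


def mse(net: AreaUsageNet, examples: List[Tuple[List[int], float]]) -> float:
    return sum((net.predict(x) - y) ** 2 for x, y in examples) / len(examples)
```

To use it, train one network per area on that area's usage of the server's local datasets, then call `predict(encode(keywords_of_ds2, vocabulary))` to estimate the area's usage of a dataset ds2 that the server does not store.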

Experimental Evaluation - Results Measurement
- vcosts(alloc): the variable cost of an allocation, which consists of the transmission costs due to the flow of queries.
- vcost_ratio: the ratio of the variable costs when using the bidding mechanism to the variable costs of the static allocation.
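
A small worked sketch of the two measures in Python. The slide does not give the exact cost model. Here we assume that each query from an area for a dataset costs the distance between that area and the server storing the dataset. A vcost_ratio below 1 then means the bidding allocation is cheaper than the static one. All names are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical cost model: each query from `area` for dataset ds costs
# distance[area][server storing ds] to transmit.
Usage = Dict[Tuple[str, str], float]    # (area, dataset) -> number of queries
Distance = Dict[str, Dict[str, float]]  # area -> server -> distance
Allocation = Dict[str, str]             # dataset -> server storing it


def vcosts(alloc: Allocation, usage: Usage, distance: Distance) -> float:
    """Variable cost of an allocation: transmission costs of all queries."""
    return sum(q * distance[area][alloc[ds]] for (area, ds), q in usage.items())


def vcost_ratio(bidding: Allocation, static: Allocation, usage: Usage, distance: Distance) -> float:
    """Below 1 means the bidding allocation is cheaper than the static one."""
    return vcosts(bidding, usage, distance) / vcosts(static, usage, distance)
```

For example, suppose area s1 sends 5 queries for ds1, area s2 sends 1, and the two servers are 10 apart. Storing ds1 at s2 (static) costs 50, storing it at s1 (bidding) costs 10, and the ratio is 0.2.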

Experimental Evaluation
- Complete information concerning previous queries (there is still uncertainty):
  - The bidding mechanism reaches results near those of the optimal allocation (reached by a central decision maker).
  - The bidding mechanism yields a lower standard deviation of the servers' utilities than the optimal allocation.
- Incomplete information:
  - The results of the bidding mechanism are better than those of the static allocation.

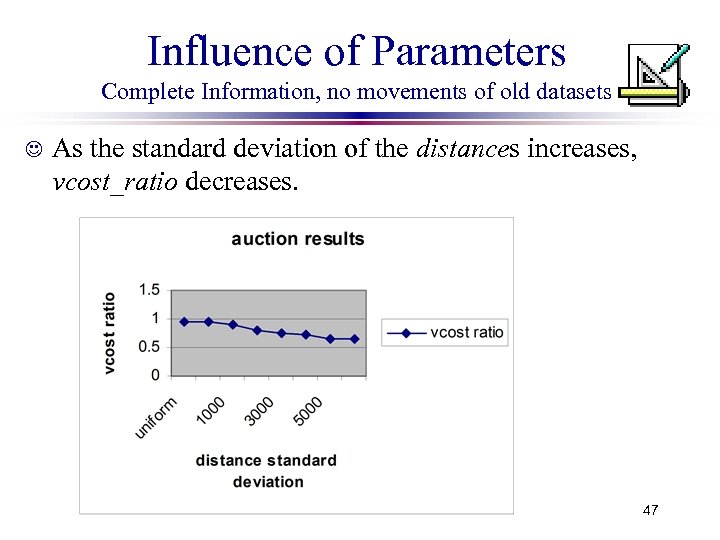

Influence of Parameters

Complete information, no movements of old datasets:
- As the standard deviation of the distances increases, vcost_ratio decreases.

Influence of Parameters - cont.
- Increasing the number of servers and the number of datasets does not influence vcost_ratio.
- query_price, answer_cost, storage_cost, dataset_size and retrieve_cost do not influence vcost_ratio.
- usage, std. usage and distance do not influence vcost_ratio.

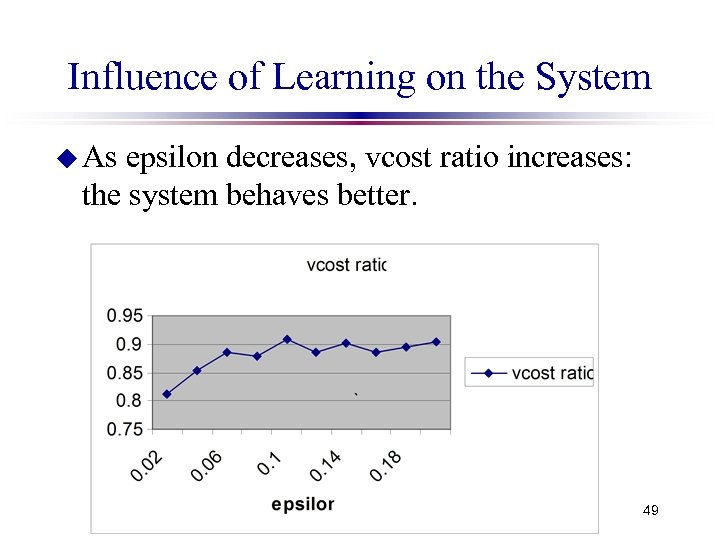

Influence of Learning on the System
- As epsilon decreases, vcost_ratio increases: the system behaves better.

Conclusion
- We have considered the data allocation problem in a distributed environment.
- We have presented the utility function of the servers, which expresses their preferences over the data allocation.
- We have proposed using a bidding protocol for solving the problem.

Conclusion - cont.
- We have considered complete as well as incomplete information.
- For the complete information case, we have proved that the results obtained by the bidding mechanism are better than those of the static allocation, and close to the optimal results.

Conclusion - cont.
- For the incomplete information environment, we have developed a neural-network-based learning mechanism.
- For each area d, we build a neural network, trained by the server of d.
- Using this network, we estimate the expected usage of other datasets not currently stored by d.
- We found, by simulation, that the results obtained are still significantly better than those of the static allocation.

Future Work
- Datasets can be stored in more than one server.
- Bounded rationality.
- Repeated game.

Reaching Agreements Through Argumentation

Collaborators: Katia Sycara, Madhura Nirkhe, Amir Evenchik, and Ariel Stolman

Introduction
- Argumentation: an iterative process emerging from exchanges among agents to persuade each other and bring about a change in intentions.
- A logical model of the mental states of the agents: beliefs, desires, intentions, goals.
- The logic is used to specify argument formulation and as a basis for an Automated Negotiation Agent.

Agents as Belief, Desire, Intention Systems
- Beliefs:
  - information about the current world state
  - subjective
- Desires:
  - preferences over future world states
  - can be inconsistent (in contrast to goals)
- Intentions:
  - set of goals the agent is committed to achieve
  - the agent's "runtime stack"
- Formal models: mostly modal logics with possible-worlds semantics

Logic Background
- Modal logics; Kripke structures
- Syntactic approaches
- Bayesian networks

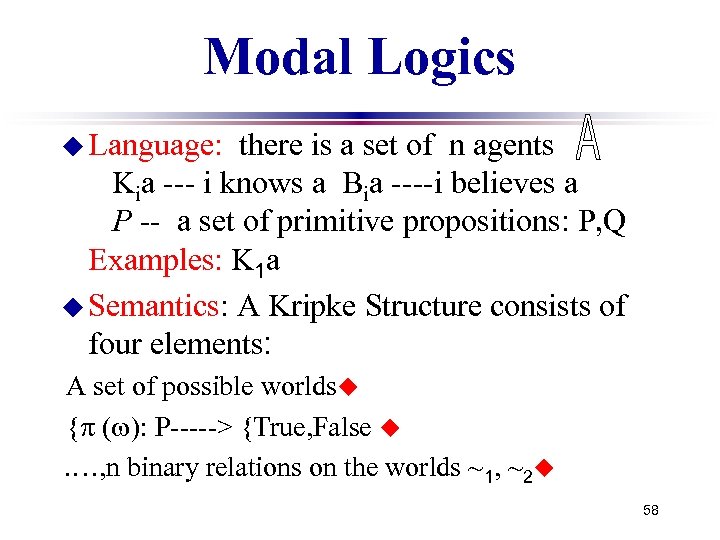

Modal Logics
- Language: there is a set of n agents.
  - $K_i\alpha$: agent i knows $\alpha$
  - $B_i\alpha$: agent i believes $\alpha$
  - P: a set of primitive propositions (P, Q, …)
  - Example: $K_1\alpha$
- Semantics: a Kripke structure $M = (W, \pi, \sim_1, \ldots, \sim_n)$ consists of:
  - a set of possible worlds W
  - a truth assignment $\pi(w): P \to \{\mathit{True}, \mathit{False}\}$ for each world w
  - n binary relations on the worlds, $\sim_1, \ldots, \sim_n$

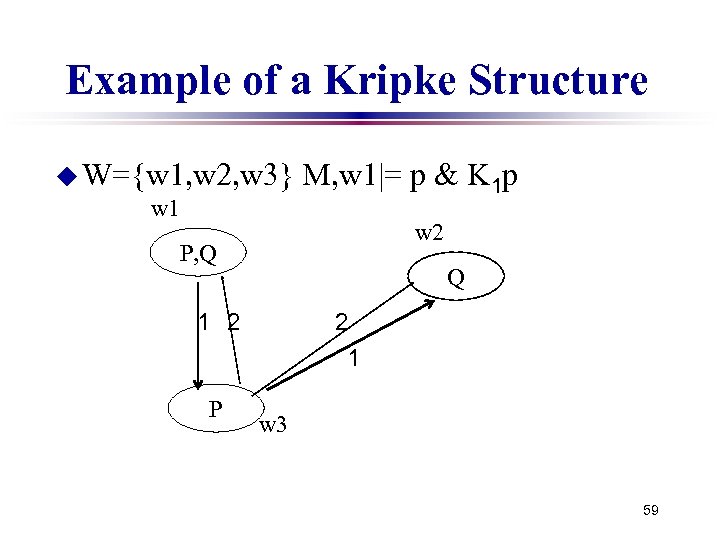

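Example of a Kripke Structure
- $W = \{w_1, w_2, w_3\}$; $M, w_1 \models P \land K_1 P$
- (diagram: worlds $w_1$, $w_2$, $w_3$ labeled with the propositions P and Q true in them, linked by accessibility edges for agents 1 and 2)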
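
A minimal Python evaluator for the semantics above. $K_i\alpha$ holds at w iff $\alpha$ holds at every world agent i considers possible from w. The slide's diagram did not survive extraction, so the structure below is hypothetical: P holds at $w_1$ and $w_2$, Q holds at $w_2$ and $w_3$, agent 1 cannot distinguish $w_1$ from $w_2$, and agent 2 cannot distinguish $w_1$ from $w_3$. It reproduces the slide's claim $M, w_1 \models P \land K_1 P$. Formulas are represented as Python functions, which is a design choice of this sketch.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Set, Tuple

World = str


@dataclass
class Kripke:
    worlds: Set[World]
    valuation: Dict[World, Set[str]]                # pi: propositions true at each world
    relations: Dict[int, Set[Tuple[World, World]]]  # agent i -> ~i


Formula = Callable[[Kripke, World], bool]


def atom(p: str) -> Formula:
    return lambda m, w: p in m.valuation[w]


def conj(a: Formula, b: Formula) -> Formula:
    return lambda m, w: a(m, w) and b(m, w)


def neg(a: Formula) -> Formula:
    return lambda m, w: not a(m, w)


def knows(i: int, a: Formula) -> Formula:
    """K_i a holds at w iff a holds at every world agent i considers possible from w."""
    return lambda m, w: all(a(m, v) for (u, v) in m.relations[i] if u == w)


# Hypothetical example structure (reflexive, symmetric relations).
WS = ("w1", "w2", "w3")
R1 = {(w, w) for w in WS} | {("w1", "w2"), ("w2", "w1")}
R2 = {(w, w) for w in WS} | {("w1", "w3"), ("w3", "w1")}
M = Kripke(set(WS), {"w1": {"P"}, "w2": {"P", "Q"}, "w3": {"Q"}}, {1: R1, 2: R2})
```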

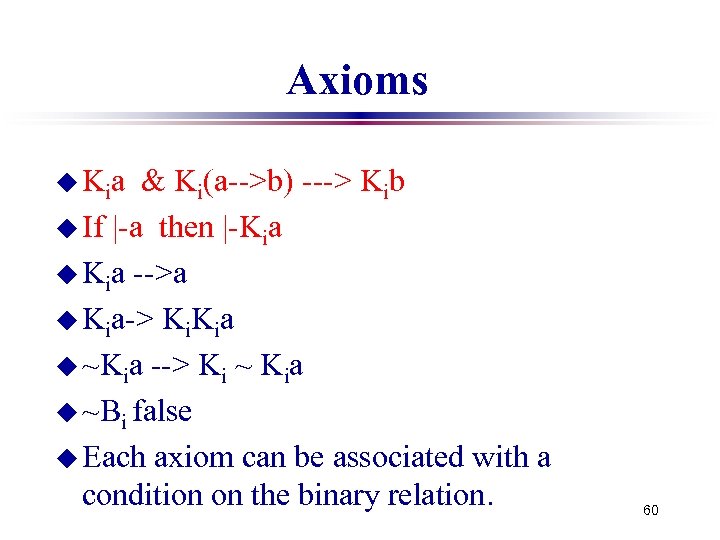

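Axioms
- $K_i\alpha \land K_i(\alpha \to \beta) \to K_i\beta$
- If $\vdash \alpha$ then $\vdash K_i\alpha$
- $K_i\alpha \to \alpha$
- $K_i\alpha \to K_i K_i\alpha$
- $\neg K_i\alpha \to K_i \neg K_i\alpha$
- $\neg B_i\,\mathit{false}$
- Each axiom can be associated with a condition on the binary relation.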
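
The last bullet can be made concrete in Python. The first axiom and the rule hold in every Kripke structure. The remaining four correspond, by the standard correspondence results, to the relation being reflexive, transitive, Euclidean, and serial respectively. The sketch below checks these frame conditions. Under them, a full equivalence relation (knowledge) satisfies all four, while a typical belief relation satisfies every condition except reflexivity, so beliefs need not be true. The example relations are hypothetical.

```python
from typing import Callable, Dict, List, Set, Tuple

Relation = Set[Tuple[str, str]]


def reflexive(W: Set[str], R: Relation) -> bool:
    return all((w, w) in R for w in W)


def transitive(W: Set[str], R: Relation) -> bool:
    return all((u, x) in R for (u, v) in R for (y, x) in R if v == y)


def euclidean(W: Set[str], R: Relation) -> bool:
    return all((v, x) in R for (u, v) in R for (y, x) in R if u == y)


def serial(W: Set[str], R: Relation) -> bool:
    return all(any((w, v) in R for v in W) for w in W)


# Axiom -> frame condition under which it is valid.
AXIOM_CONDITIONS: Dict[str, Callable[[Set[str], Relation], bool]] = {
    "K_i a -> a": reflexive,
    "K_i a -> K_i K_i a": transitive,
    "~K_i a -> K_i ~K_i a": euclidean,
    "~B_i false": serial,
}


def satisfied_axioms(W: Set[str], R: Relation) -> List[str]:
    return [ax for ax, cond in AXIOM_CONDITIONS.items() if cond(W, R)]
```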

Problems in Using Possible-Worlds Semantics
- Logical omniscience: the agent believes all the logical consequences of its beliefs.
- The agent believes all tautologies.
- Philosophers: possible worlds do not exist.

Minimal Models: A Partial Solution
- The intension of a sentence: the set of possible worlds in which the sentence is satisfied.
- Note: if two sentences have the same intension, then they are semantically equivalent.
- A sentence is a belief at a given world if its intension is belief-accessible.
- According to this definition, the agent's beliefs are not closed under inference; the agent may even believe contradictions.
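
A minimal Python sketch of this definition over the four worlds generated by two propositions P and Q. A sentence is believed iff its intension is in a given collection of belief-accessible sets of worlds. The belief-accessible collections used in the test are hypothetical. The test shows three effects: believing P does not force believing P or Q, a sentence with the same intension as P is believed alike, and an agent can believe both P and not P.

```python
from itertools import product
from typing import Callable, FrozenSet, Set, Tuple

World = Tuple[bool, bool]  # truth values of (P, Q)
Sentence = Callable[[World], bool]
WORLDS = list(product([True, False], repeat=2))


def intension(s: Sentence) -> FrozenSet[World]:
    """The set of possible worlds in which the sentence is satisfied."""
    return frozenset(w for w in WORLDS if s(w))


def believes(belief_accessible: Set[FrozenSet[World]], s: Sentence) -> bool:
    """A sentence is believed iff its intension is belief-accessible."""
    return intension(s) in belief_accessible


def P(w: World) -> bool:
    return w[0]


def Q(w: World) -> bool:
    return w[1]
```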

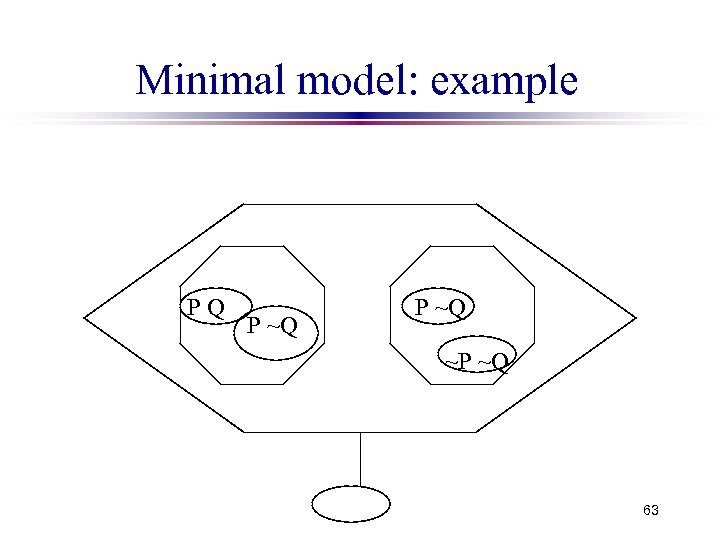

Minimal model: example (diagram: worlds labeled P Q, P ~Q, and ~P ~Q)

Beliefs, Desires, Goals and Intentions
- We use time lines rather than possible worlds.
- An agent's belief set includes beliefs concerning the world and beliefs concerning the mental states of other agents. An agent may be mistaken in both kinds of beliefs, and its beliefs may be inconsistent. The beliefs are used to generate arguments in the negotiations.
- Desires: may be inconsistent.
- Goals: a consistent subset of the set of desires.
- Intentions serve to contribute to one or more of the agent's desires.

Intentions
- Two types: Intention-To and Intention-That.
- Intention-To: refers to actions that are within the direct control of the agent.
- Intention-That: refers to propositions that are not directly within the agent's realm of control and that it must rely on other agents to satisfy; these can be achieved through argumentation.

Argumentation Types
- A promise of a future reward.
- A threat.
- An appeal to past promise.
- An appeal to precedents as "counter example".
- An appeal to "prevailing practice".
- An appeal to self-interest.

Example: 2 Robots
- Two mobile robots on Mars, each built to maximize its own utility.
- R1 requests R2 to dig for a mineral. R2 refuses. R1 responds with a threat: "If you do not dig for me, I will break your antenna." R2 needs to evaluate this threat.
- Another possibility: R1 promises a reward: "If you dig for me today, I will help you move your equipment tomorrow." R2 needs to evaluate the promise of a future reward.

Usage of the Logic
- Specification for agent design: the model constrains certain planning and negotiation processes; axioms for argumentation types.
- The logic is used by the agents themselves: ANA (Automated Negotiation Agent).

ANA
- Complies with the definition of an Agent Oriented Programming (AOP) system (Shoham):
  - The agent is represented using notions of mental states;
  - The agent's actions depend on these mental states;
  - The agent's mental state may change over time;
  - Mental state changes are driven by inference rules.


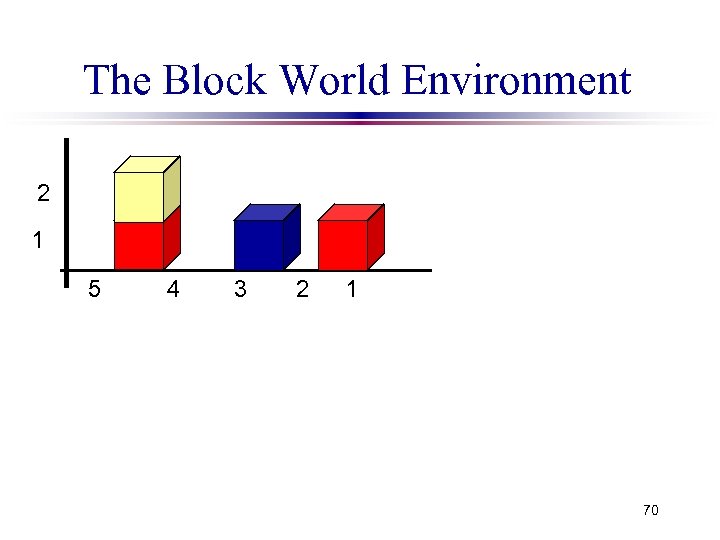

The Block World Environment

[Figure: the block world, with numbered board positions and stack levels]


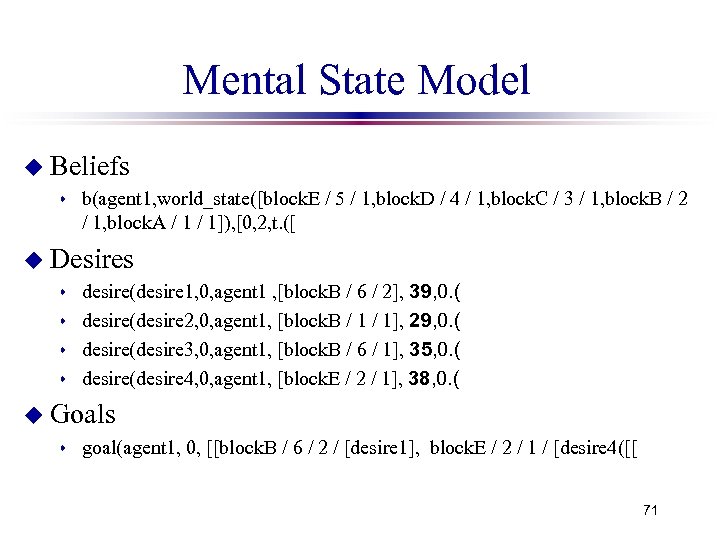

Mental State Model
- Beliefs:
  - `b(agent1, world_state([blockE/5/1, blockD/4/1, blockC/3/1, blockB/2/1, blockA/1]), [0, 2, t]).`
- Desires:
  - `desire(desire1, 0, agent1, [blockB/6/2], 39, 0).`
  - `desire(desire2, 0, agent1, [blockB/1], 29, 0).`
  - `desire(desire3, 0, agent1, [blockB/6/1], 35, 0).`
  - `desire(desire4, 0, agent1, [blockE/2/1], 38, 0).`
- Goals:
  - `goal(agent1, 0, [[blockB/6/2/[desire1], blockE/2/1/[desire4]]]).`

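The goal term keeps desire1 (39) and desire4 (38) and drops desire2 (29) and desire3 (35), all three of which want blockB somewhere. One plausible reading, which the slide does not state, is that the fifth argument of `desire/6` is a preference value and that goal selection picks the subset of mutually consistent desires with the highest total preference. A brute-force Python sketch under that assumption (positions are written as hypothetical `(column, level)` tuples; desire2 gives only a column):

```python
from itertools import combinations

# Desires from the slide: name -> (block, target position, preference).
# Assumptions: the 5th argument of desire/6 is the preference value, and a
# position is a (column, level) tuple; desire2 only specifies a column.
DESIRES = {
    "desire1": ("B", (6, 2), 39),
    "desire2": ("B", (1,), 29),
    "desire3": ("B", (6, 1), 35),
    "desire4": ("E", (2, 1), 38),
}


def consistent(names, desires):
    """No block may be wanted in two places, and no place may hold two blocks."""
    blocks = [desires[n][0] for n in names]
    places = [desires[n][1] for n in names]
    return len(set(blocks)) == len(blocks) and len(set(places)) == len(places)


def select_goal(desires):
    """Exhaustively search for the consistent subset with the highest total preference."""
    best, best_value = (), 0
    for size in range(1, len(desires) + 1):
        for subset in combinations(sorted(desires), size):
            if consistent(subset, desires):
                value = sum(desires[n][2] for n in subset)
                if value > best_value:
                    best, best_value = subset, value
    return best, best_value


print(select_goal(DESIRES))  # → (('desire1', 'desire4'), 77)
```

The search is exponential in the number of desires, which is harmless for four desires but not in general.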

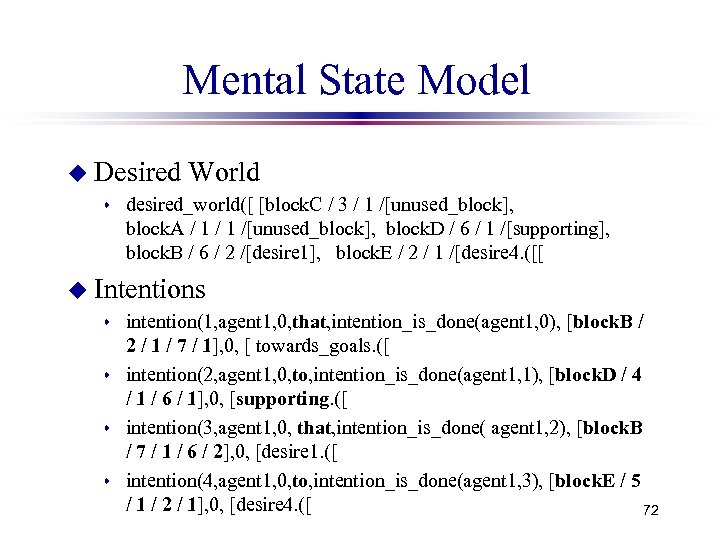

Mental State Model
- Desired world:
  - `desired_world([[blockC/3/1/[unused_block], blockA/1/[unused_block], blockD/6/1/[supporting], blockB/6/2/[desire1], blockE/2/1/[desire4]]]).`
- Intentions:
  - `intention(1, agent1, 0, that, intention_is_done(agent1, 0), [blockB/2/1/7/1], 0, [towards_goals]).`
  - `intention(2, agent1, 0, to, intention_is_done(agent1, 1), [blockD/4/1/6/1], 0, [supporting]).`
  - `intention(3, agent1, 0, that, intention_is_done(agent1, 2), [blockB/7/1/6/2], 0, [desire1]).`
  - `intention(4, agent1, 0, to, intention_is_done(agent1, 3), [blockE/5/1/2/1], 0, [desire4]).`

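Read as moves, each `intention` term carries `[block/from_column/from_level/to_column/to_level]`, and intention k waits on `intention_is_done(agent1, k-1)`. The Python sketch below replays the four moves in that order with simple block-world checks and confirms that they turn the believed world state into the desired world. Assumptions: `blockA/1` is taken to mean column 1, level 1; the mode (`to`/`that`) and reason fields are carried along but not acted on; all helper names are hypothetical.

```python
# World state: block -> (column, level).
# Assumption: the slide's blockA/1 is read as column 1, level 1.
INITIAL = {"E": (5, 1), "D": (4, 1), "C": (3, 1), "B": (2, 1), "A": (1, 1)}
DESIRED = {"C": (3, 1), "A": (1, 1), "D": (6, 1), "B": (6, 2), "E": (2, 1)}

# (id, mode, block, from, to, reason), transcribed from the intention/8 terms.
INTENTIONS = [
    (1, "that", "B", (2, 1), (7, 1), "towards_goals"),
    (2, "to", "D", (4, 1), (6, 1), "supporting"),
    (3, "that", "B", (7, 1), (6, 2), "desire1"),
    (4, "to", "E", (5, 1), (2, 1), "desire4"),
]


def move(world, block, src, dst):
    """Return a new world with block moved from src to dst, or raise ValueError."""
    occupied = {pos: b for b, pos in world.items()}
    if world[block] != src:
        raise ValueError(f"{block} is not at {src}")
    if (src[0], src[1] + 1) in occupied:
        raise ValueError(f"{block} has a block on top of it")
    if dst in occupied:
        raise ValueError(f"{dst} is occupied")
    if dst[1] > 1 and (dst[0], dst[1] - 1) not in occupied:
        raise ValueError(f"nothing supports {dst}")
    new_world = dict(world)
    new_world[block] = dst
    return new_world


def execute(world, intentions):
    """Perform intentions in order; each requires the previous one to be done."""
    done = 0  # mirrors intention_is_done(agent1, done)
    for ident, _mode, block, src, dst, _reason in intentions:
        if ident != done + 1:
            raise ValueError(f"intention {ident} is not enabled yet")
        world = move(world, block, src, dst)
        done = ident
    return world


print(execute(INITIAL, INTENTIONS) == DESIRED)  # → True
```

Moving B straight to (6, 2) from the initial state fails, because D has not yet been placed at (6, 1) to support it; that is presumably why intention 2 is tagged `supporting`.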

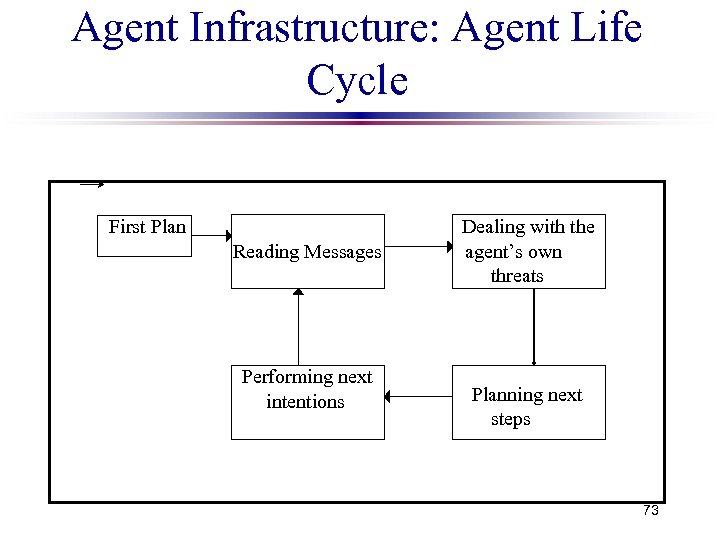

Agent Infrastructure: Agent Life Cycle
- First plan
- Reading messages
- Performing next intentions
- Dealing with the agent's own threats
- Planning next steps

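The life cycle can be pictured as a loop. The Python skeleton below is hypothetical: the class and method names are illustrative, the stage bodies only record what ran, and the order inside the loop (read messages, deal with own threats, plan the next step, perform the next intention) is assumed from the order of the slides that follow rather than stated on this slide.

```python
from collections import deque


class LifeCycleAgent:
    """Hypothetical ANA-style agent loop; each stage only records that it ran."""

    def __init__(self, name):
        self.name = name
        self.inbox = deque()  # incoming messages, processed in arrival order
        self.trace = []

    def first_plan(self):
        self.trace.append("first plan")

    def read_messages(self):
        while self.inbox:
            self.trace.append(f"read {self.inbox.popleft()}")

    def deal_with_own_threats(self):
        self.trace.append("deal with own threats")

    def plan_next_step(self):
        self.trace.append("plan next step")

    def perform_next_intention(self):
        self.trace.append("perform next intention")

    def run(self, cycles):
        """Plan once, then repeat the four stages for a fixed number of cycles."""
        self.first_plan()
        for _ in range(cycles):
            self.read_messages()
            self.deal_with_own_threats()
            self.plan_next_step()
            self.perform_next_intention()
        return self.trace


agent = LifeCycleAgent("agent1")
agent.inbox.append("request from agent2")
print(agent.run(cycles=1))
```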

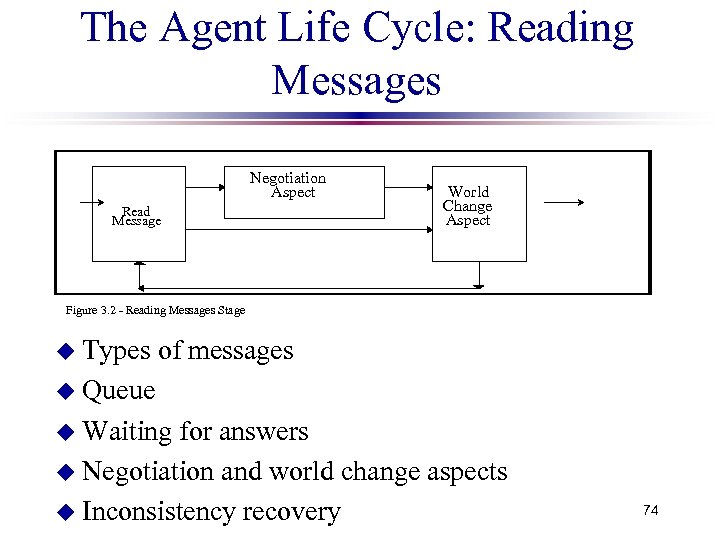

The Agent Life Cycle: Reading Messages

[Figure 3.2 - Reading Messages Stage: Read Message; Negotiation Aspect; World Change Aspect]

- Types of messages
- Queue
- Waiting for answers
- Negotiation and world-change aspects
- Inconsistency recovery


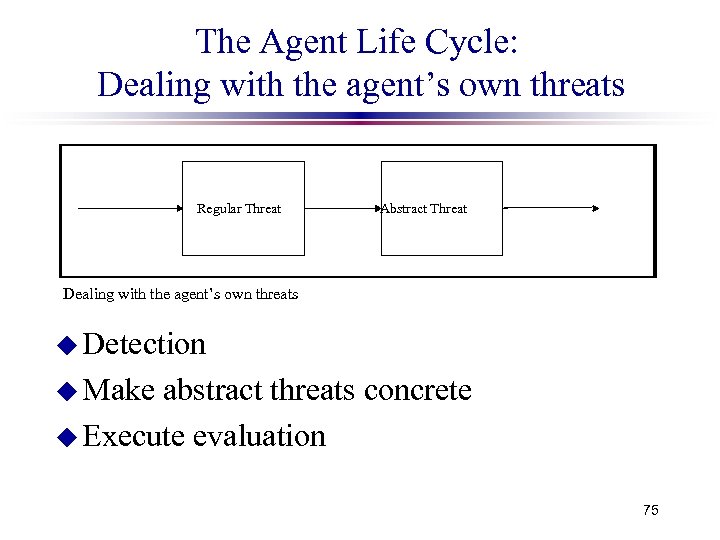

The Agent Life Cycle: Dealing with the Agent's Own Threats

[Figure: Dealing with the agent's own threats (Regular Threat; Abstract Threat)]

- Detection
- Making abstract threats concrete
- Executing the evaluation


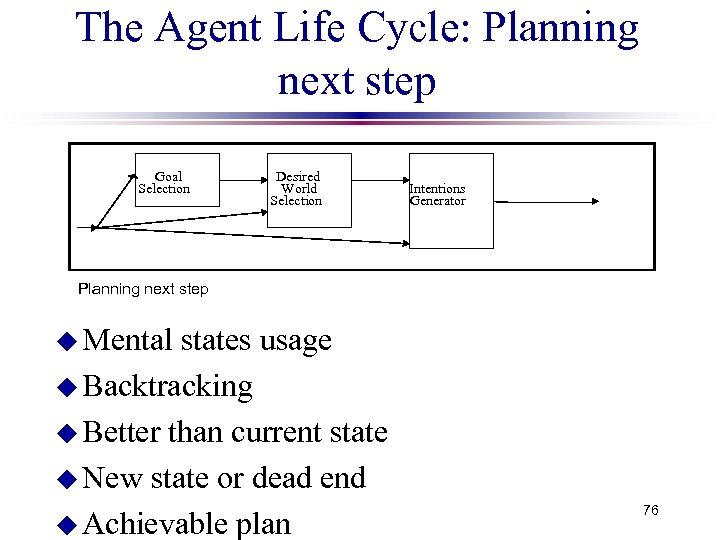

The Agent Life Cycle: Planning the Next Step

[Figure: Planning next step (Goal Selection; Desired World Selection; Intentions Generator)]

- Usage of mental states
- Backtracking
- Better than the current state
- New state or dead end
- Achievable plan


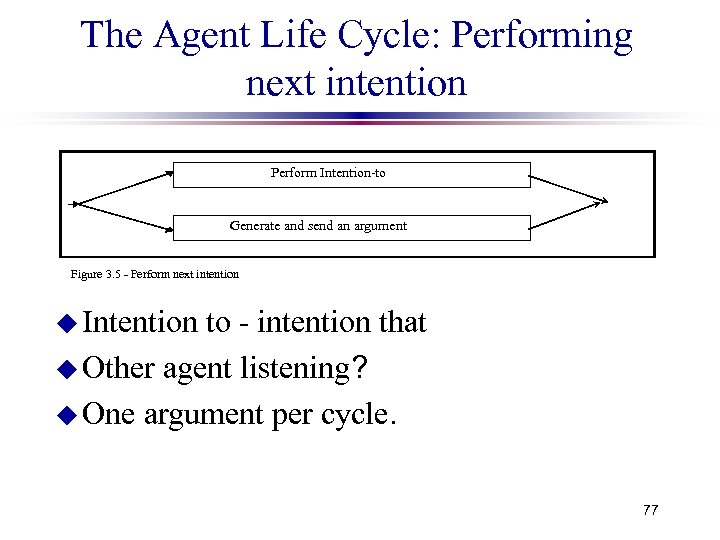

The Agent Life Cycle: Performing the Next Intention

[Figure 3.5 - Perform next intention: Perform Intention-to; Generate and send an argument]

- Intention-to vs. intention-that
- Is the other agent listening?
- One argument per cycle

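A hypothetical Python sketch of this stage, assuming that an intention-to is something the agent performs itself and an intention-that needs another agent to act, so the agent has to argue for it. It encodes only the three rules on the slide: perform an intention-to directly, send an argument for an intention-that only if the other agent is listening, and send at most one argument per cycle. The names `Intention` and `perform_next_intention` are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Intention:
    ident: int
    mode: str  # "to": the agent acts itself; "that": another agent should act (assumed)
    action: str


def perform_next_intention(intention, other_is_listening, arguments_sent):
    """Return (outcome, number of arguments sent so far in this cycle)."""
    if intention.mode == "to":
        return f"perform {intention.action}", arguments_sent
    if intention.mode == "that":
        if not other_is_listening:
            return "wait: other agent not listening", arguments_sent
        if arguments_sent >= 1:
            return "wait: one argument per cycle", arguments_sent
        return f"send argument for {intention.action}", arguments_sent + 1
    raise ValueError(f"unknown intention mode {intention.mode!r}")


# Two intention-that items in one cycle: only the first gets an argument.
sent = 0
for item in [Intention(1, "that", "move B"), Intention(3, "that", "move B again")]:
    outcome, sent = perform_next_intention(item, other_is_listening=True, arguments_sent=sent)
    print(outcome)
```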

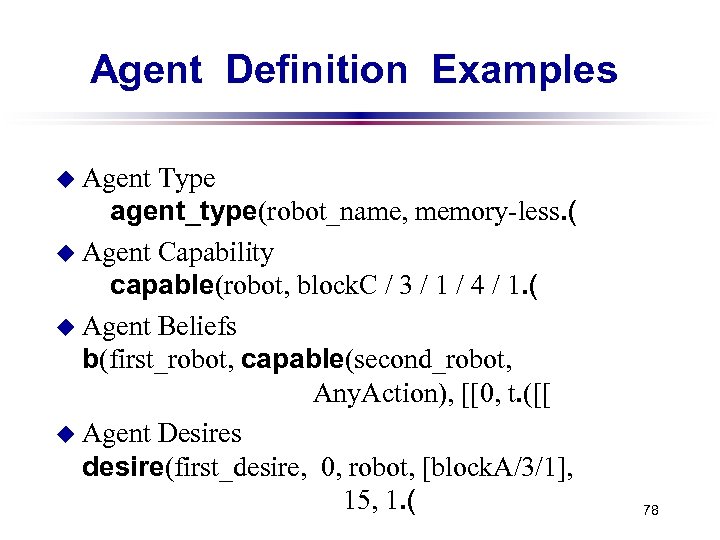

Agent Definition Examples
- Agent type: `agent_type(robot_name, memory-less).`
- Agent capability: `capable(robot, blockC/3/1/4/1).`
- Agent beliefs: `b(first_robot, capable(second_robot, AnyAction), [[0, t]]).`
- Agent desires: `desire(first_desire, 0, robot, [blockA/3/1], 15, 1).`

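In `b(first_robot, capable(second_robot, AnyAction), [[0, t]])`, `AnyAction` is an unbound Prolog variable, so the belief presumably covers every action over the interval from 0 to t. A minimal Python sketch of the lookup, assuming a move is written as `(block, from, to)`, `None` stands in for the unbound variable, and `t` is passed in as a hypothetical `game_end` parameter:

```python
ANY = None  # stands in for an unbound Prolog variable such as AnyAction

# capable(robot, blockC/3/1/4/1): robot can move C from (3, 1) to (4, 1).
CAPABLE = {("robot", ("C", (3, 1), (4, 1)))}


def beliefs(game_end):
    """b(first_robot, capable(second_robot, AnyAction), [[0, t]]) with t = game_end."""
    return [("first_robot", "second_robot", ANY, (0, game_end))]


def is_capable(agent, action):
    return (agent, action) in CAPABLE


def believes_capable(holder, agent, action, time, game_end):
    """True if `holder` believes `agent` can do `action` at `time`."""
    for who, about, pattern, (start, end) in beliefs(game_end):
        if who == holder and about == agent and start <= time <= end:
            if pattern is ANY or pattern == action:
                return True
    return False


print(is_capable("robot", ("C", (3, 1), (4, 1))))  # → True
print(believes_capable("first_robot", "second_robot", ("A", (1, 1), (2, 1)), 3, game_end=10))  # → True
```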

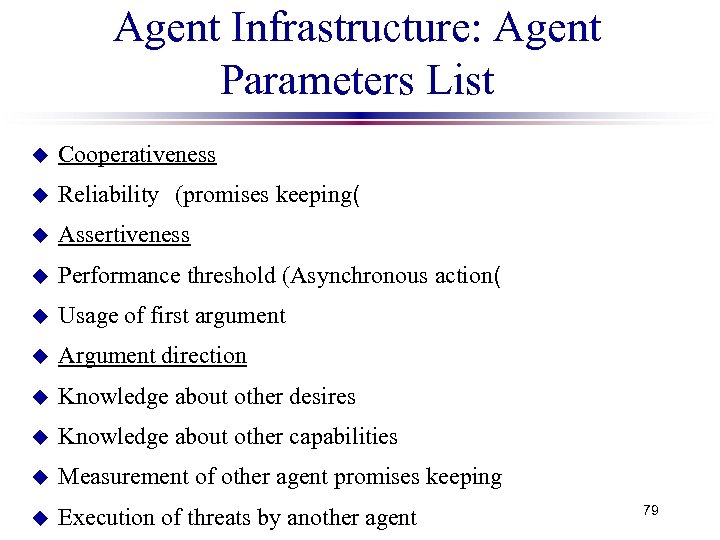

Agent Infrastructure: Agent Parameters List
- Cooperativeness
- Reliability (promise keeping)
- Assertiveness
- Performance threshold (asynchronous action)
- Usage of the first argument
- Argument direction
- Knowledge about other agents' desires
- Knowledge about other agents' capabilities
- Measurement of the other agent's promise keeping
- Execution of threats by another agent


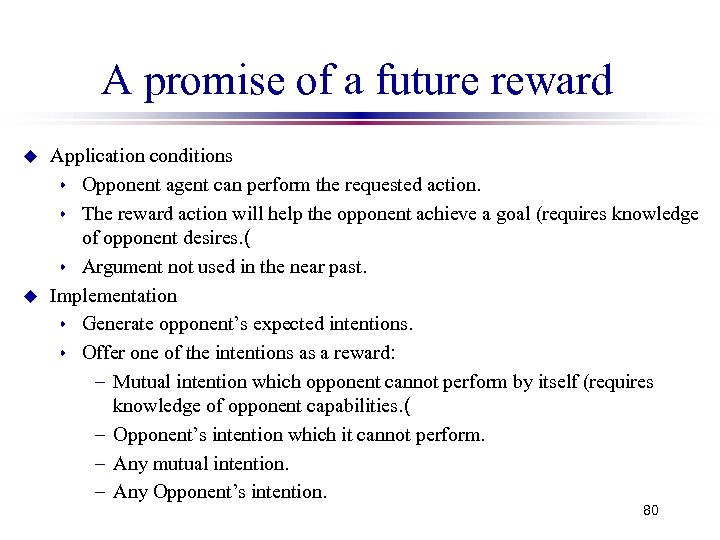

A Promise of a Future Reward
- Application conditions:
  - The opponent agent can perform the requested action.
  - The reward action will help the opponent achieve a goal (requires knowledge of the opponent's desires).
  - The argument has not been used in the recent past.
- Implementation:
  - Generate the opponent's expected intentions.
  - Offer one of these intentions as a reward:
    - A mutual intention that the opponent cannot perform by itself (requires knowledge of the opponent's capabilities).
    - An intention of the opponent's that it cannot perform.
    - Any mutual intention.
    - Any intention of the opponent's.

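The reward options read as a fallback list, from the most persuasive offer to the most generic. A Python sketch assuming that the options are tried in the listed order, that a mutual intention is one held by both agents, and that the caller supplies a predicate for the opponent's capabilities; all names are hypothetical.

```python
def promise_applicable(opponent_can_perform_request, reward_helps_goal, used_recently):
    """The three application conditions listed on the slide."""
    return opponent_can_perform_request and reward_helps_goal and not used_recently


def choose_reward(opponent_intentions, own_intentions, opponent_can_do):
    """Pick a reward from the opponent's expected intentions, or None.

    opponent_can_do(intention) -> bool says whether the opponent can perform
    the intention by itself (knowledge of the opponent's capabilities).
    """
    mutual = [i for i in opponent_intentions if i in own_intentions]
    candidates = [
        [i for i in mutual if not opponent_can_do(i)],               # mutual, opponent cannot do alone
        [i for i in opponent_intentions if not opponent_can_do(i)],  # opponent's, it cannot do
        mutual,                                                      # any mutual intention
        list(opponent_intentions),                                   # any opponent intention
    ]
    for tier in candidates:
        if tier:
            return tier[0]
    return None


print(choose_reward(["move_E", "move_D", "move_A"], ["move_D", "move_A"],
                    lambda i: i != "move_A"))  # → move_A
```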

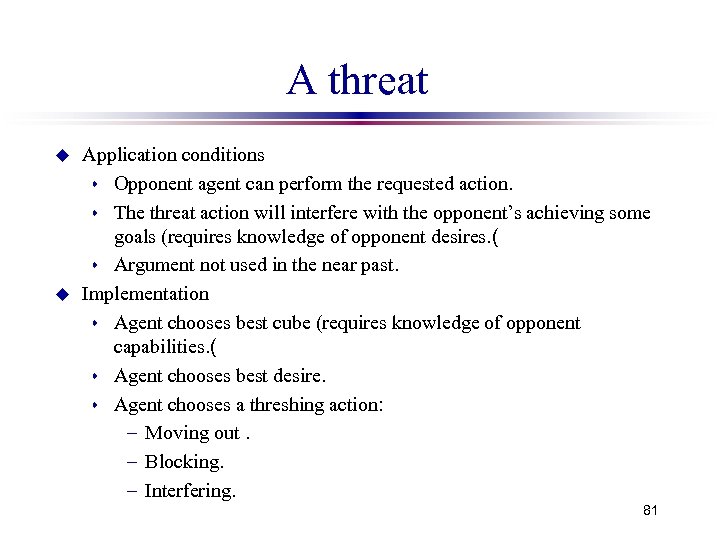

A Threat
- Application conditions:
  - The opponent agent can perform the requested action.
  - The threat action will interfere with the opponent achieving some of its goals (requires knowledge of the opponent's desires).
  - The argument has not been used in the recent past.
- Implementation:
  - The agent chooses the best cube (requires knowledge of the opponent's capabilities).
  - The agent chooses the best desire.
  - The agent chooses a threatening action:
    - Moving out.
    - Blocking.
    - Interfering.


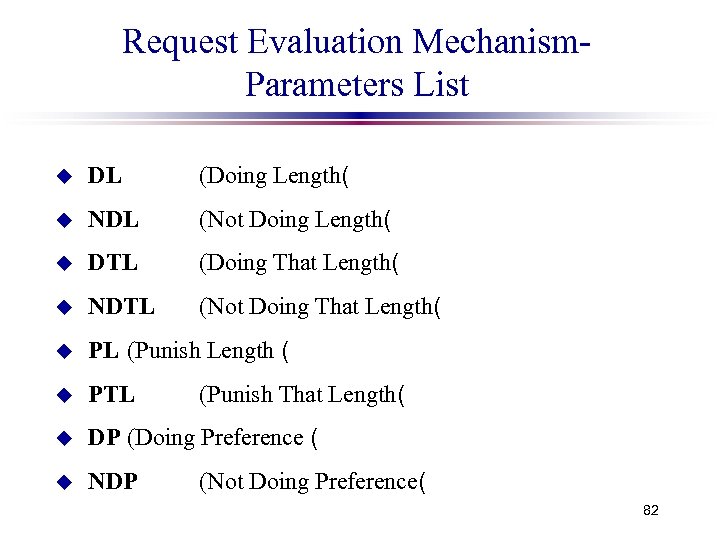

Request Evaluation Mechanism: Parameters List
- DL (Doing Length)
- NDL (Not Doing Length)
- DTL (Doing That Length)
- NDTL (Not Doing That Length)
- PL (Punish Length)
- PTL (Punish That Length)
- DP (Doing Preference)
- NDP (Not Doing Preference)


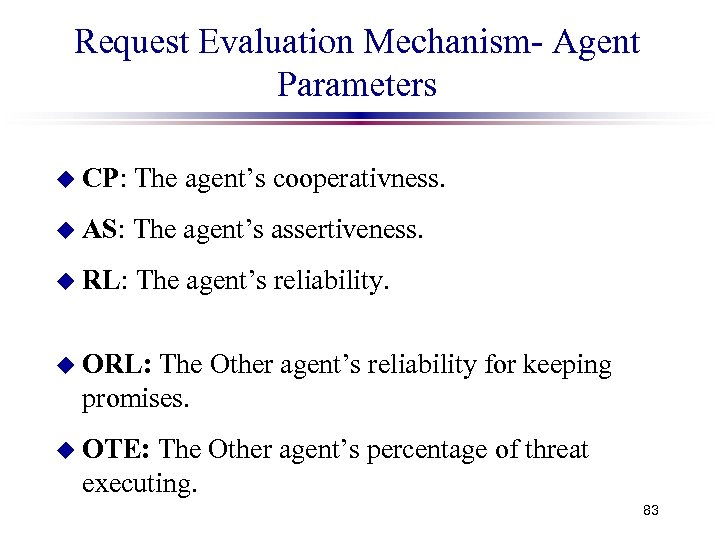

Request Evaluation Mechanism: Agent Parameters
- CP: the agent's cooperativeness.
- AS: the agent's assertiveness.
- RL: the agent's reliability.
- ORL: the other agent's reliability in keeping promises.
- OTE: the other agent's percentage of executed threats.


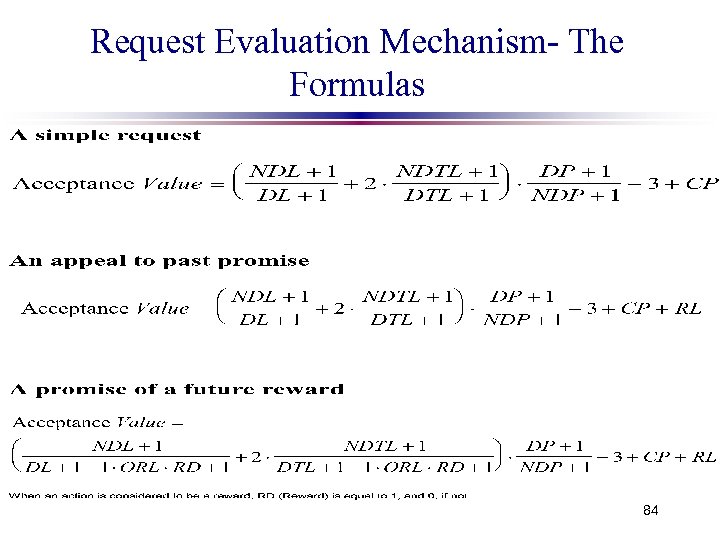

Request Evaluation Mechanism: The Formulas


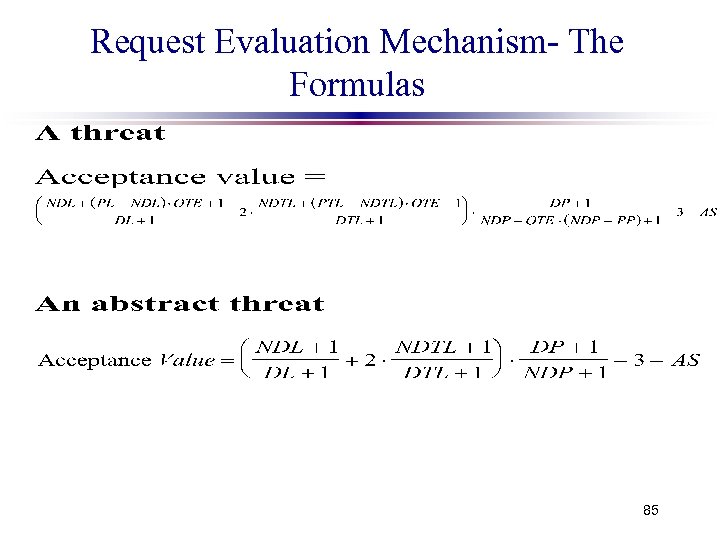

Request Evaluation Mechanism: The Formulas (continued)


Experimental Results
- Negotiating is better than not negotiating only when each agent has particular expertise.
- Negotiating is better than not negotiating only when the agents have complete information.
- Negotiating is better than not negotiating only for mutually cooperative agents, or for an aggressive agent facing a cooperative opponent.
- The environment (game time, resources) affects the negotiation results.


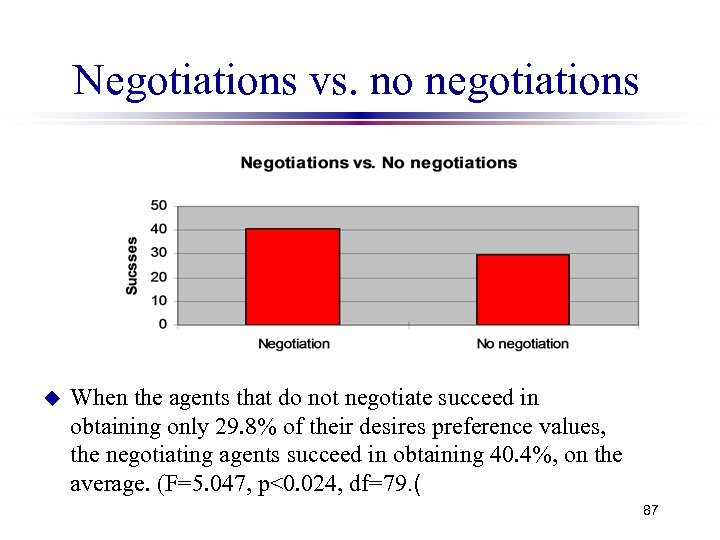

Negotiation vs. No Negotiation
- Agents that do not negotiate obtain only 29.8% of their desires' preference values on average, while negotiating agents obtain 40.4% (F=5.047, p<0.024, df=79).


Complete Information vs. No Information
- Agents with no information achieve a success rate of only 30.8%, while agents with full information achieve 40.4% on average (F=4.326, p<0.04, df=38).


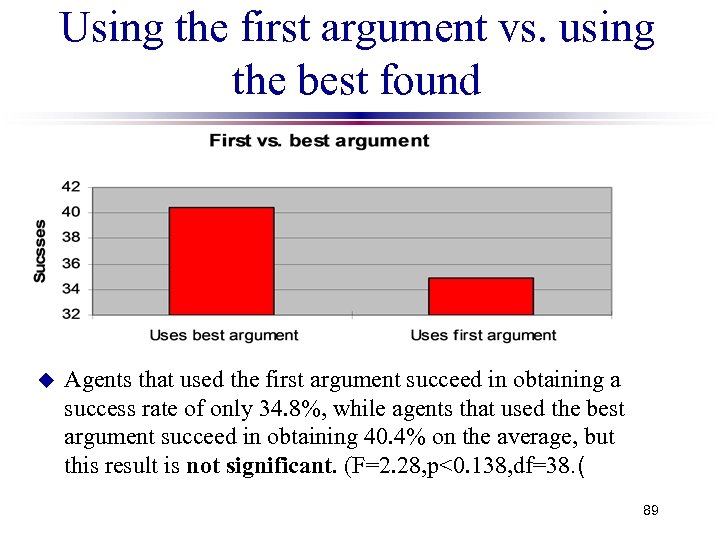

Using the First Argument vs. Using the Best One Found
- Agents that used the first argument achieve a success rate of 34.8%, while agents that used the best argument achieve 40.4% on average; however, this difference is not significant (F=2.28, p<0.138, df=38).


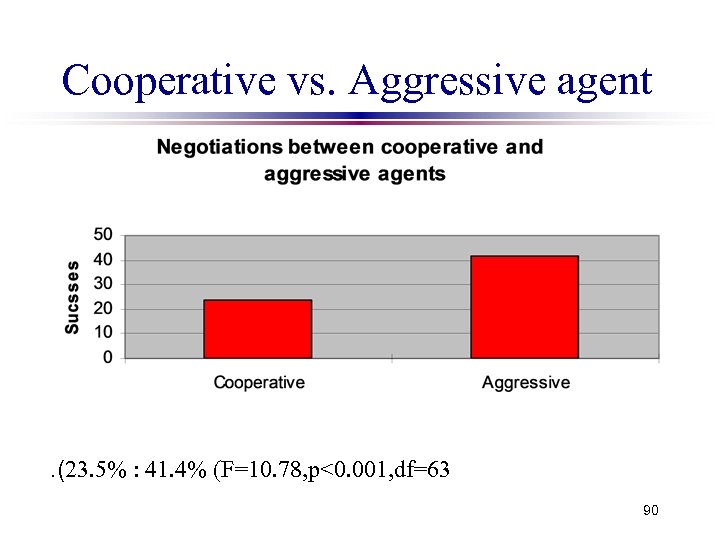

Cooperative vs. Aggressive Agent
- 23.5% : 41.4% (F=10.78, p<0.001, df=63)


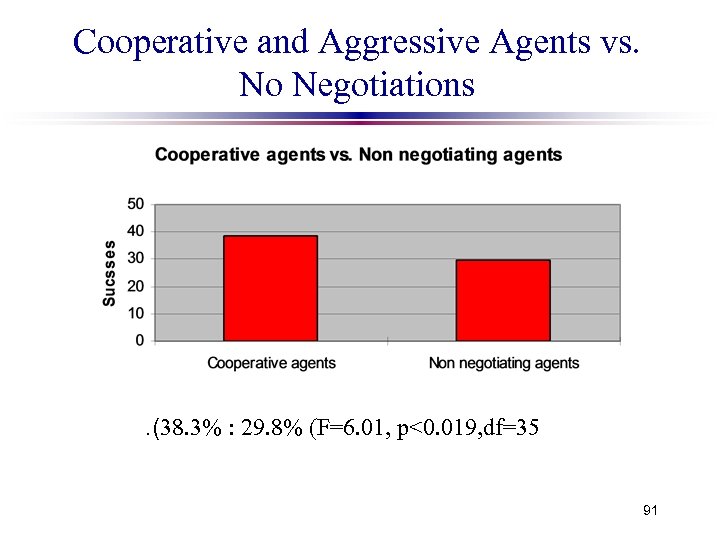

Cooperative and Aggressive Agents vs. No Negotiation
- 38.3% : 29.8% (F=6.01, p<0.019, df=35)


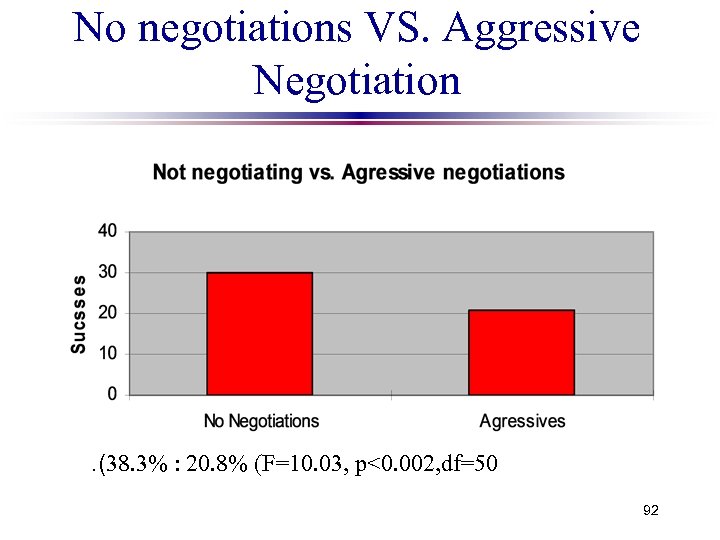

No Negotiation vs. Aggressive Negotiation
- 38.3% : 20.8% (F=10.03, p<0.002, df=50)


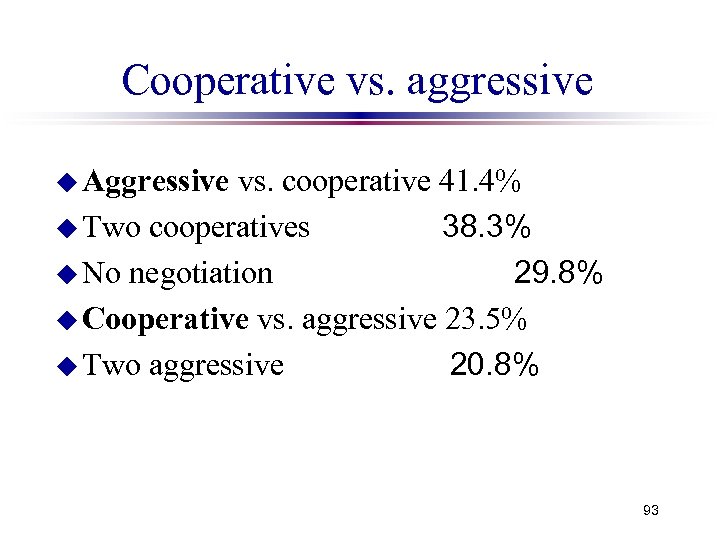

Cooperative vs. Aggressive: Success Rates
- Aggressive vs. cooperative: 41.4%
- Two cooperative agents: 38.3%
- No negotiation: 29.8%
- Cooperative vs. aggressive: 23.5%
- Two aggressive agents: 20.8%


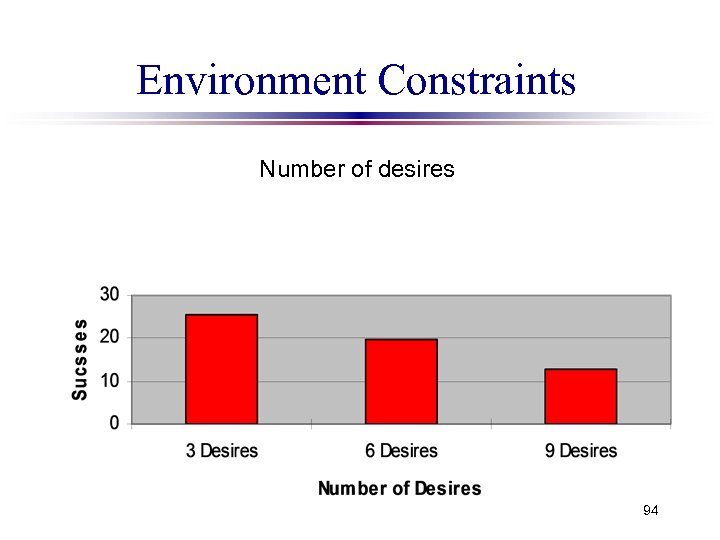

Environment Constraints: Number of Desires


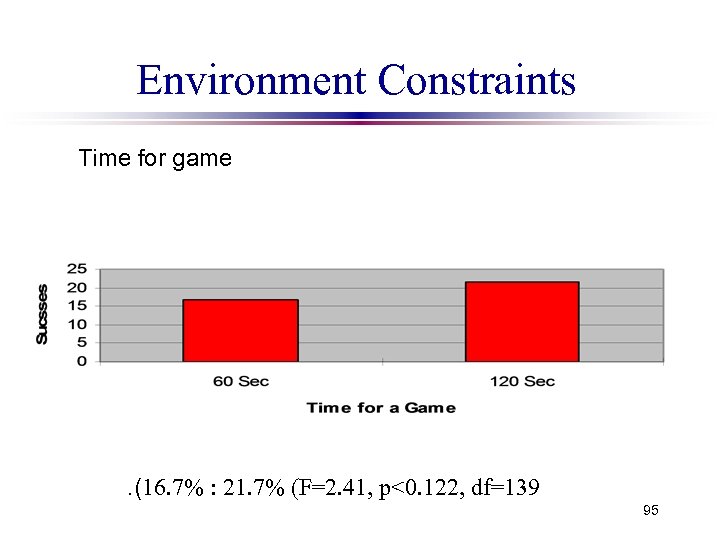

Environment Constraints: Time for the Game
- 16.7% : 21.7% (F=2.41, p<0.122, df=139)


Is it worth using formal methods for multi-agent systems in general, and for negotiation in particular?


Game-Theory Based Frameworks (Non-cooperative Models)
- Strategic-negotiation model based on Rubinstein's alternating-offers model. Applications:
  - Data allocation (Schwartz & Kraus AAAI 97)
  - Resource allocation, task distribution (Kraus, Wilkenfeld & Zlotkin AIJ 95; Kraus AMAI 97)
  - Hostage crisis (Kraus & Wilkenfeld TSMC 93)

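For reference, the textbook two-player, complete-information version of Rubinstein's alternating-offers model (splitting a pie of size 1, with per-period discount factors d1 and d2 and player 1 proposing first) has a unique subgame-perfect equilibrium in which player 1 proposes to keep (1 - d2) / (1 - d1*d2) and player 2 accepts immediately. The Python sketch below computes that share and checks it against backward induction over a long finite horizon; it illustrates the underlying model only, not the strategic-negotiation extensions in the cited papers.

```python
def spe_share(d1, d2):
    """Player 1's equilibrium share in the infinite-horizon alternating-offers game."""
    return (1 - d2) / (1 - d1 * d2)


def finite_horizon_share(d1, d2, periods):
    """Player 1's share when the game ends after `periods` rounds (backward induction).

    Player 1 proposes in odd rounds, player 2 in even rounds. In the last round
    the proposer takes the whole pie; in earlier rounds the proposer offers the
    responder exactly the discounted value of proposing in the next round.
    """
    share = 1.0  # proposer's share in the final round
    for k in range(periods - 1, 0, -1):
        responder_discount = d2 if k % 2 == 1 else d1
        share = 1.0 - responder_discount * share
    return share


print(round(spe_share(0.9, 0.8), 4))  # → 0.7143
print(round(finite_horizon_share(0.9, 0.8, 200), 4))  # → 0.7143
```

The more patient a player is (the closer its discount factor is to 1), the larger its share, which is the intuition the strategic-negotiation model builds on.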

Advantages and Difficulties: Negotiation on Data Allocation
- Beneficial results; proved to be better than current methods; simple strategies.
- Problems:
  - Need to develop utility functions;
  - Finding possible actions: identifying optimal allocations is NP-complete;
  - Incomplete information: game theory provides limited solutions.


Game-Theory Based Frameworks (Non-cooperative Models)
- Auctions. Applications:
  - Data allocation (Schwartz & Kraus ATAL 97)
  - Electronic commerce
- Subcontracting based on principal-agent models. Applications:
  - Task allocation (Kraus AIJ 96)


Advantages and Difficulties: Auctions for Data Allocation
- Beneficial results; proved to be better than current methods.
- Problems:
  - Utility functions;
  - Applicable only when a server is concerned only with the data stored locally;
  - Bidding strategies are difficult to find when there is incomplete information and the evaluations depend on each other: there are no procedures.


Game-Theory Based Frameworks (Cooperative Models)
- Coalition theories. Applications:
  - Group and team formation (Shehory & Kraus CI 99)
- Benefits: well-defined concepts of stability; mechanisms to divide benefits.
- Difficulties: utility functions; no procedures for coalition formation; exponential problems.
- DPS model: combinatorial theories & operations research (Shehory & Kraus AIJ 98).

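One way to see the "exponential problems" is to count the search space. With n agents there are 2^n - 1 non-empty coalitions, and the number of ways to partition the agents into coalitions (coalition structures) is the Bell number B_n. A short Python sketch that computes Bell numbers with the Bell triangle; it only sizes the search space and is not a coalition-formation procedure.

```python
def bell_numbers(n_max):
    """Return [B_0, ..., B_n_max] using the Bell triangle.

    Each row starts with the last entry of the previous row; every further
    entry is the entry to its left plus the entry above that one.
    The first entry of row n is B_n.
    """
    bells = [1]
    row = [1]
    for _ in range(n_max):
        new_row = [row[-1]]
        for value in row:
            new_row.append(new_row[-1] + value)
        row = new_row
        bells.append(row[0])
    return bells


bells = bell_numbers(12)
for n in (4, 8, 12):
    print(f"{n} agents: {2 ** n - 1} coalitions, {bells[n]} coalition structures")
```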

Decision-Theory Based Frameworks
- Multi-attribute decision making (MADM). Application:
  - Intention reconciliation in SharedPlans (Grosz & Kraus 98)
- Benefits: using results of MADM, e.g., the specific method is not so important; standardization techniques.
- Problems: choosing attributes; assigning values; choosing weights.

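Simple additive weighting (SAW) is perhaps the most basic MADM method: standardize each attribute to [0, 1] and rank the options by a weighted sum. The Python sketch below is a generic illustration of that method, not the procedure of Grosz & Kraus; the option names, attribute values and weights are hypothetical, and choosing them is exactly the difficulty listed above.

```python
def normalize(column, benefit=True):
    """Min-max standardization; cost attributes are flipped so higher is better."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [1.0] * len(column)
    if benefit:
        return [(v - lo) / (hi - lo) for v in column]
    return [(hi - v) / (hi - lo) for v in column]


def saw_rank(options, values, weights, benefit):
    """Rank options by the weighted sum of their standardized attribute values."""
    columns = [
        normalize([row[j] for row in values], benefit[j]) for j in range(len(weights))
    ]
    scores = {
        option: sum(w * col[i] for w, col in zip(weights, columns))
        for i, option in enumerate(options)
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical example: two attributes, expected benefit (higher is better)
# and cost (lower is better), weighted 0.6 and 0.4.
options = ["continue_group_task", "take_new_task", "do_nothing"]
values = [(5, 2), (8, 6), (3, 1)]
ranking = saw_rank(options, values, weights=[0.6, 0.4], benefit=[True, False])
print([name for name, _ in ranking])  # → ['take_new_task', 'continue_group_task', 'do_nothing']
```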

Logical Models
- Modal logic, BDI models. Applications:
  - Automated argumentation (Kraus, Sycara & Evenchik AIJ 99)
  - Specification of SharedPlans (Grosz & Kraus AIJ 96)
  - Bounded agents (Nirkhe, Kraus & Perlis JLC 97)
  - Agents reasoning about other agents (Kraus & Lehmann TCT 88; Kraus & Subrahmanian IJIS 95)


Advantages and Difficulties: Logical Models
- Formal models with well-studied properties: excellent for specification.
- Problems:
  - Some assumptions are not valid (e.g., omniscience);
  - Complexity problems;
  - There are no procedures for actions, which requires a lot of programming; decision making; developing preferences.


Physics-Based Models
- Physical models of particle dynamics. Applications:
  - Cooperation in large-scale multi-agent systems: freight deliveries within a metropolitan area (Shehory & Kraus ECAI 96; Shehory, Kraus & Yadgar ATAL 98).
- Benefits: efficient; inherits the properties of the physical model.
- Problems: adjustments; potential functions.


Summary
- Benefits: formal models that have already been studied; they lead to efficient results. No need to reinvent the wheel.
- Problems:
  - Restrictions and assumptions made by game theory are not valid in real-world MAS situations: extensions are needed.
  - It is difficult to develop utility functions.
  - Complexity problems.
