- Number of slides: 24

ATLAS Grid Computing in the Real World

Taipei, 27th April 2005. Roger Jones (ATLAS International Computing Board Chair; GridPP Applications Co-ordinator)

Slide 2: Overview

- The ATLAS Computing Model
  - Distribution of data and jobs, roles of sites
  - Required resources
  - Testing the model
    - See Gilbert Poulard’s Data Challenge talk next
- Observations about the Real World (taken from ATLAS DC experiences and those of GridPP)
  - Matching the global view with local expectations
  - Sharing with other user communities
  - Working across several Grid deployments
  - Resources required > resources available?

RWL Jones, Lancaster University

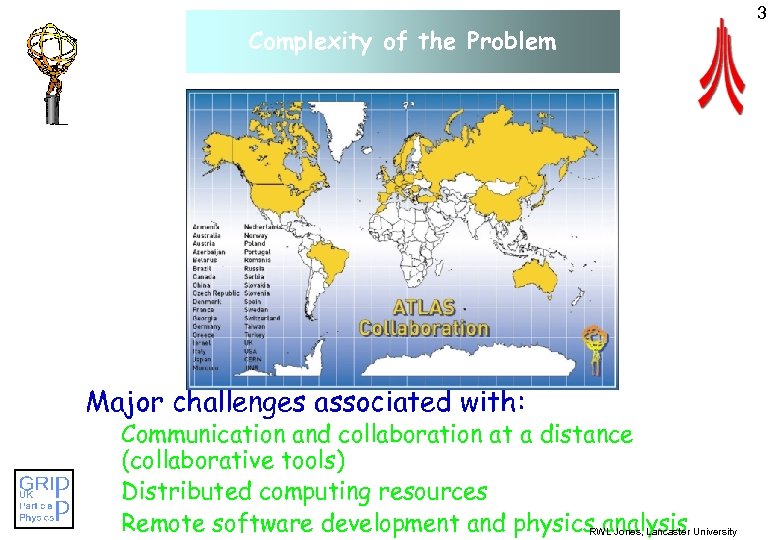

Slide 3: Complexity of the Problem

Major challenges associated with:

- Communication and collaboration at a distance (collaborative tools)
- Distributed computing resources
- Remote software development and physics analysis

Slide 4: ATLAS is not one experiment

- Extra Dimensions
- Higgs
- Heavy Ion Physics
- SUSY
- Electroweak
- B physics
- QCD

Slide 5: Computing Resources

- Computing Model well evolved
  - Externally reviewed in January 2005
- There are (and will remain for some time) many unknowns
  - Calibration and alignment strategy is still evolving
  - Physics data access patterns MAY start to be exercised soon
    - Unlikely to know the real patterns until 2007/2008!
  - Still uncertainties on the event sizes
- If there is a problem with resources, e.g. disk, the model will have to change
- Lesson from the previous round of experiments at CERN (LEP, 1989-2000)
  - Reviews in 1988 underestimated the computing requirements by an order of magnitude!

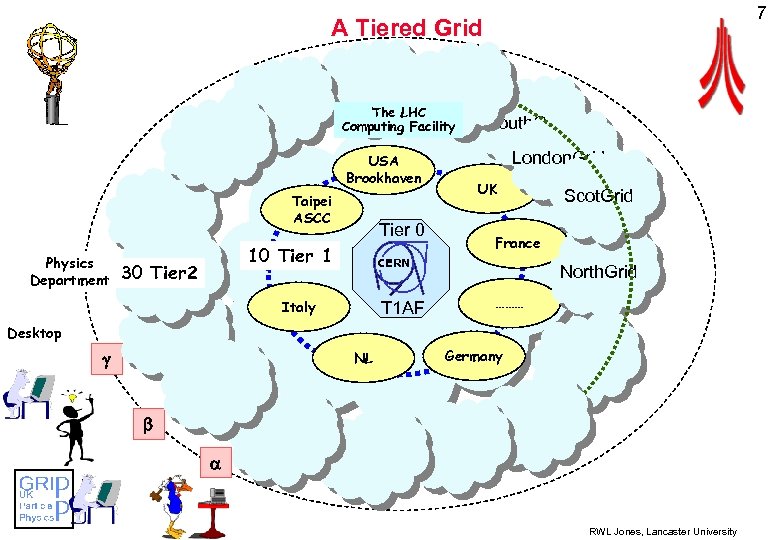

Slide 7: A Tiered Grid

[Diagram labels: The LHC Computing Facility; Tier 0 (CERN); 10 Tier 1 (USA Brookhaven, Taipei ASCC, UK, France, Italy, NL, Germany, …); 30 Tier 2 (SouthGrid, LondonGrid, ScotGrid, NorthGrid, …); T1 AF; Physics Department; Desktop]

Slide 8: Processing Roles

- Tier-0:
  - First pass processing on express/calibration physics stream
  - 24-48 hours later, process full physics data stream with reasonable calibrations
  - Curate the full RAW data and curate the first pass processing
  - → Getting good calibrations in a short time is a challenge
  - → There will be large data movement from T0 to T1s
- Tier-1s:
  - Reprocess 1-2 months after arrival with better calibrations
  - Curate and reprocess all resident RAW at year end with improved calibration and software
  - Curate and allow access to all the reprocessed data sets
  - → There will be large data movement from T1 to T1 and significant data movement from T1 to T2
- Tier-2s:
  - Simulation will take place at T2s
  - Simulated data stored at T1s
  - → There will be significant data movement from T2s to T1s
  - Need partnerships to plan networking
  - Must have fail-over to other sites

Slide 9: Analysis Roles

Analysis model broken into two components:

- Scheduled central production of augmented AOD, tuples & TAG collections from ESD
  - → Done at T1s
  - → Derived files moved to other T1s and to T2s
- Chaotic user analysis of (shared) augmented AOD streams, tuples, new selections etc. and individual user simulation and CPU-bound tasks matching the official MC production
  - → Done at T2s and local facility
  - → Modest job traffic between T2s

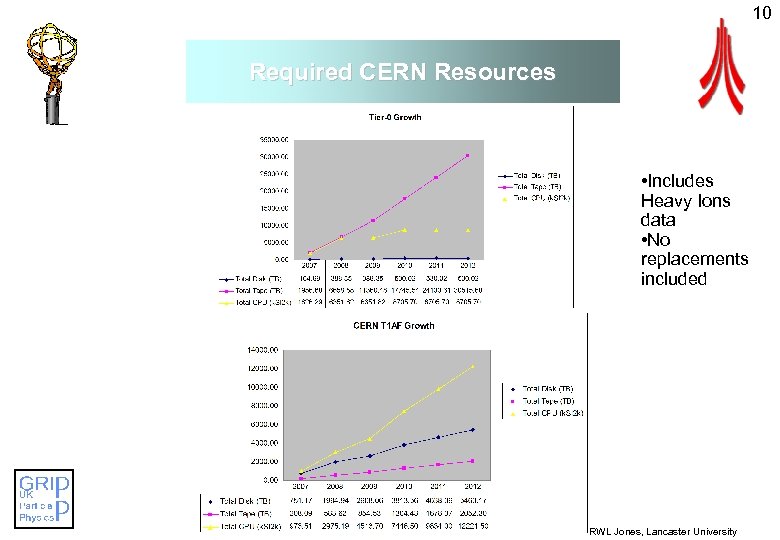

Slide 10: Required CERN Resources

- Includes Heavy Ions data
- No replacements included

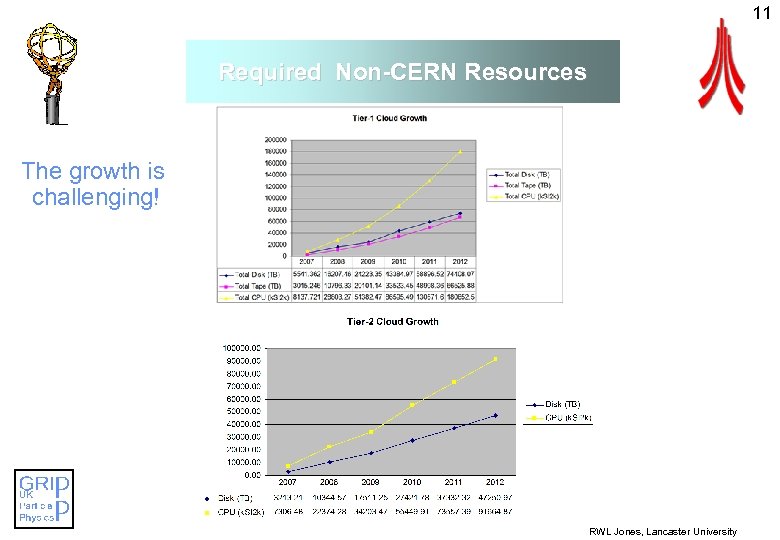

Slide 11: Required Non-CERN Resources

The growth is challenging!

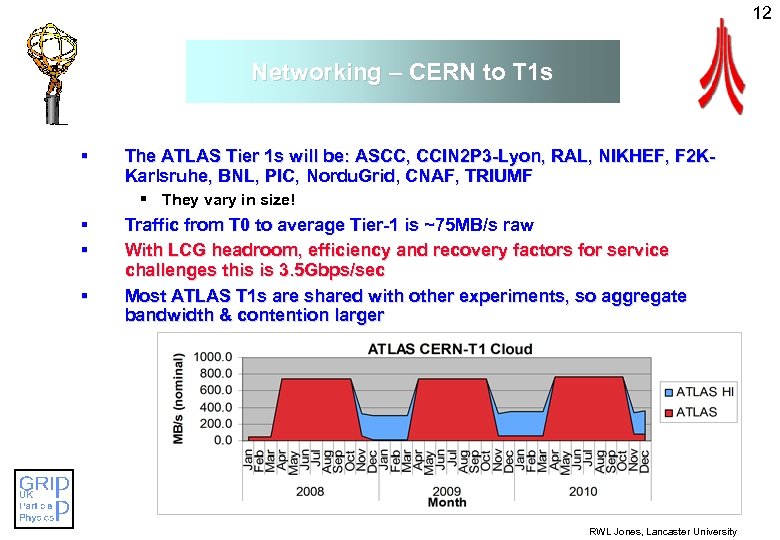

Slide 12: Networking - CERN to T1s

- The ATLAS Tier 1s will be: ASCC, CCIN2P3-Lyon, RAL, NIKHEF, FZK-Karlsruhe, BNL, PIC, NorduGrid, CNAF, TRIUMF
  - They vary in size!
- Traffic from T0 to average Tier-1 is ~75 MB/s raw
- With LCG headroom, efficiency and recovery factors for service challenges this is 3.5 Gbps
- Most ATLAS T1s are shared with other experiments, so aggregate bandwidth & contention larger

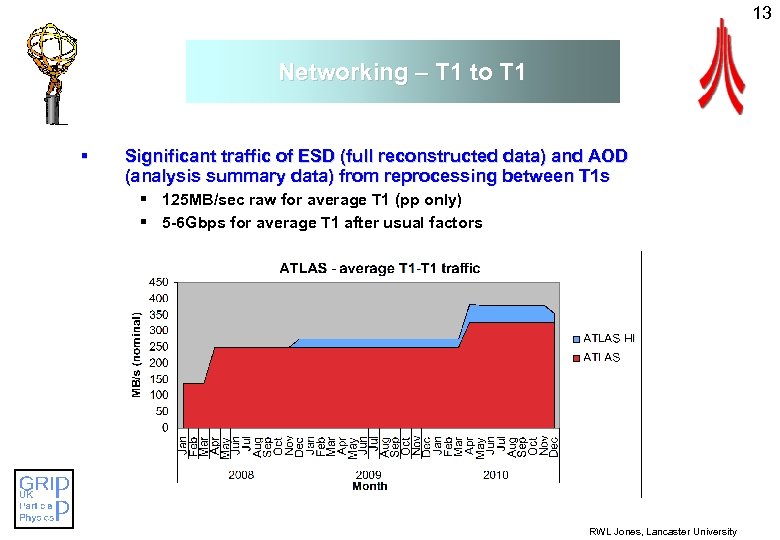

Slide 13: Networking - T1 to T1

- Significant traffic of ESD (full reconstructed data) and AOD (analysis summary data) from reprocessing between T1s
  - 125 MB/s raw for average T1 (pp only)
  - 5-6 Gbps for average T1 after usual factors

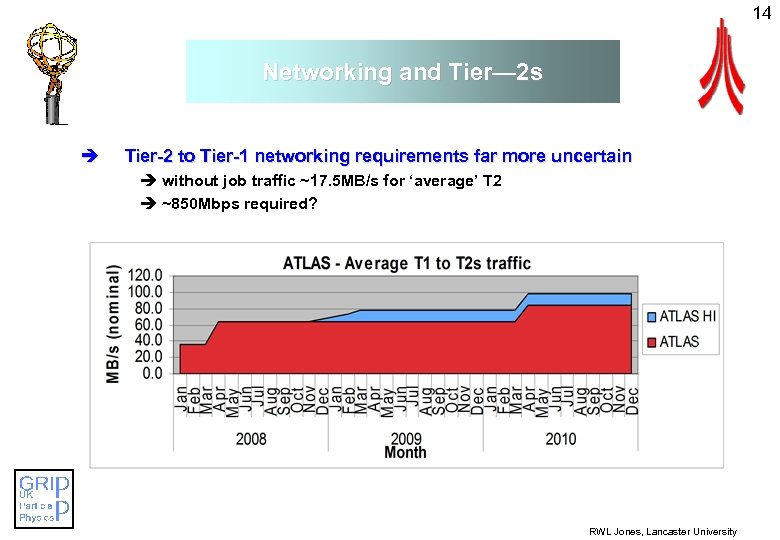

Slide 14: Networking and Tier-2s

- → Tier-2 to Tier-1 networking requirements far more uncertain
- → Without job traffic ~17.5 MB/s for ‘average’ T2
- → ~850 Mbps required?
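
The networking slides quote a raw data rate and a provisioned bandwidth "after usual factors" for each link type, but not the factor itself. The following is a minimal arithmetic sketch that converts the quoted raw rates to Gbps and prints the ratio implied by the quoted figures. It assumes 1 MB = 10^6 bytes and uses the midpoint of the quoted 5-6 Gbps range; the function names are illustrative and the resulting factor is an inference, not a number stated in the talk.

```python
# Raw rates (MB/s) and provisioned bandwidths (Gbps) as quoted on slides 12-14.
# Assumptions: 1 MB = 10**6 bytes, 8 bits per byte; 5.5 Gbps is the midpoint
# of the quoted "5-6 Gbps" range.
LINKS = {
    "T0 -> average T1": (75.0, 3.5),
    "T1 <-> T1, average T1 (pp only)": (125.0, 5.5),
    "average T2, no job traffic": (17.5, 0.85),
}


def mb_per_s_to_gbps(rate_mb_s: float) -> float:
    """Convert megabytes per second to gigabits per second."""
    return rate_mb_s * 8 / 1000


def implied_factor(rate_mb_s: float, provisioned_gbps: float) -> float:
    """Ratio of provisioned bandwidth to raw rate (headroom, efficiency, recovery)."""
    return provisioned_gbps / mb_per_s_to_gbps(rate_mb_s)


for name, (raw_mb_s, provisioned) in LINKS.items():
    raw_gbps = mb_per_s_to_gbps(raw_mb_s)
    factor = implied_factor(raw_mb_s, provisioned)
    print(f"{name}: raw {raw_gbps:.2f} Gbps, provisioned {provisioned} Gbps, factor ~{factor:.1f}")
```

Under these assumptions all three links imply a combined factor of roughly 5.5 to 6 between the raw rate and the bandwidth that has to be provisioned.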

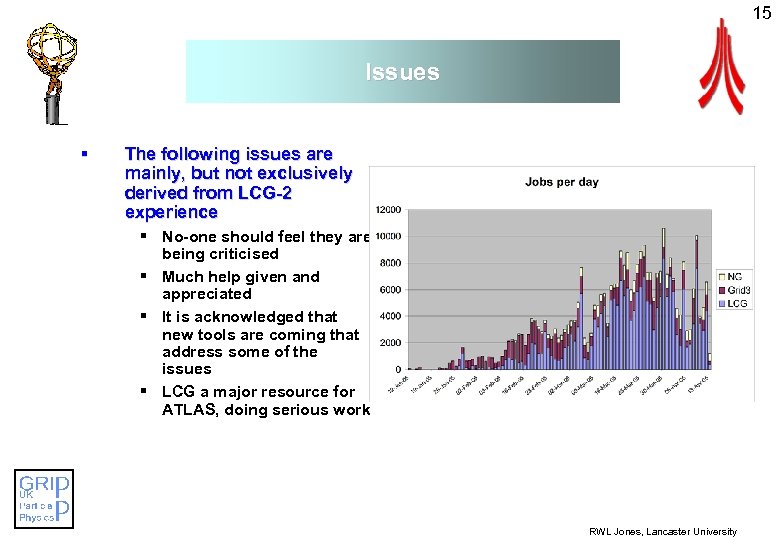

Slide 15: Issues

- The following issues are mainly, but not exclusively, derived from LCG-2 experience
  - No-one should feel they are being criticised
  - Much help given and appreciated
  - It is acknowledged that new tools are coming that address some of the issues
- LCG is a major resource for ATLAS, doing serious work

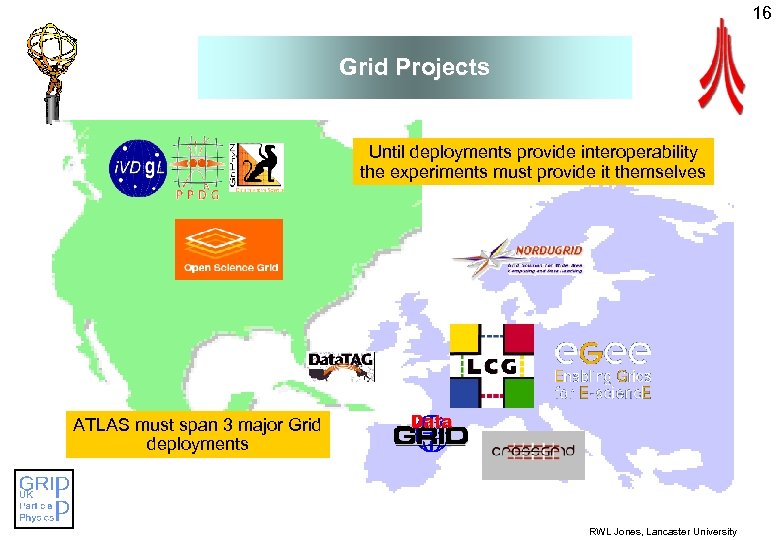

Slide 16: Grid Projects

- Until deployments provide interoperability, the experiments must provide it themselves
- ATLAS must span 3 major Grid deployments

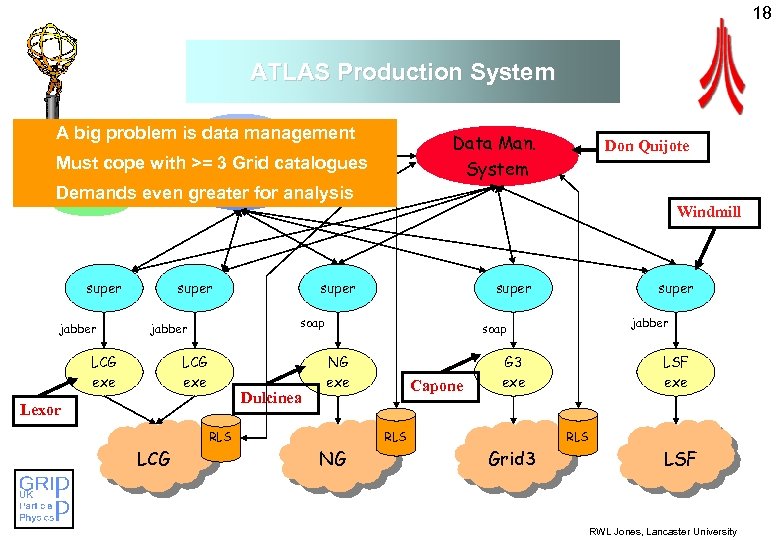

Slide 18: ATLAS Production System

- A big problem is data management
  - Must cope with >= 3 Grid catalogues
  - Demands even greater for analysis

[Diagram labels: Prod DB, AMI and the Data Management System (Don Quijote) connect to Windmill supervisors, which talk over jabber/soap to the executors Lexor (LCG), Dulcinea (NG), Capone (Grid3) and an LSF executor; LCG, NG and Grid3 each have their own RLS catalogue]

Slide 19: Data Management

- Multiple deployments currently means multiple file catalogues
  - ATLAS build a meta-catalogue on top to join things up
  - Convergence would really help!
- Existing data movement tools are not sophisticated in all three deployments
  - Anything beyond a single point-to-point movement has required user-written tools, e.g. Don Quijote, PhEDEx…
  - Data transfer scheduling is vital for large transfers
    - Should also take care of data integrity and retries
  - File transfer service tools are emerging from deployments but…
  - Tools must exist in and/or span all three Grids
- Other observations
  - Existing DM tools are too fragile: they don't have timeouts (so can hang a node) or retries and are not resilient against information system glitches
  - They must also handle race conditions if there are multiple writes to a file
  - Catalogue failures and inconsistencies need to be well-handled
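
The three properties the slide asks of a data movement tool (a timeout so a hung copy cannot block a node, retries, and an integrity check) can be sketched in a few lines. This is an illustration only, not any of the tools named above: `robust_copy`, `checksum` and `COPY_CMD` are hypothetical names, and a child Python process doing a local file copy stands in for a real Grid copy client.

```python
import hashlib
import subprocess
import sys
import time

# Stand-in for a real Grid copy client (assumption): a child process doing a local copy.
COPY_CMD = [sys.executable, "-c",
            "import shutil, sys; shutil.copyfile(sys.argv[1], sys.argv[2])"]


def checksum(path: str) -> str:
    """SHA-256 of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def robust_copy(src, dst, timeout_s=60.0, retries=3, backoff_s=1.0, copy_cmd=COPY_CMD):
    """Copy src to dst; return the attempt number that succeeded.

    Each attempt is killed after timeout_s seconds, the destination checksum
    is compared with the source, and failures are retried with a growing delay.
    """
    expected = checksum(src)
    last_error = "no attempt made"
    for attempt in range(1, retries + 1):
        try:
            subprocess.run([*copy_cmd, src, dst], timeout=timeout_s, check=True)
            if checksum(dst) == expected:
                return attempt
            last_error = "checksum mismatch"
        except subprocess.TimeoutExpired:
            last_error = f"timed out after {timeout_s}s"
        except (subprocess.CalledProcessError, OSError) as exc:
            last_error = str(exc)
        if attempt < retries:
            time.sleep(backoff_s * attempt)  # linear back-off between attempts
    raise RuntimeError(f"transfer failed after {retries} attempts: {last_error}")
```

A scheduler for large transfers would sit above a primitive like this, queueing many such copies. Catalogue updates and concurrent writes to the same file are not handled here.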

Slide 20: Workload Management

- Resource brokerage is still an important idea when serving multiple VOs
  - ATLAS has tried to use the Resource Broker ‘as intended’
  - However, submission to Resource Broker in LCG is slow (although much improved thanks to work on both sides)
  - Bulk job submission is needed in cases of shared input
- Fair usage policies need to be clearly defined and enforced
  - Example: using a dummy job to obtain slots then pulling in a real job is very smart and helps get around RB submission problems
  - But is it reasonable? Pool-table analogy…
- Ranking is an art not a science!
  - Default LCG-2 ranking is not very good!
  - Matchmaking with input files is not optimal
    - Would want to add information on replicas in the site ranking
    - Currently, if input files are specified, sites with at least one replica of any of them are always preferred, even if they have much less CPU than sites with no replicas
    - Important to know if file is on tape or disk
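
One way to read the matchmaking complaint is that replica information should adjust a site's rank rather than act as a hard filter. The sketch below shows such a ranking under invented assumptions: the `Site` fields, the weights and the formula are hypothetical and do not describe the LCG-2 Resource Broker or any ATLAS tool.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    name: str
    free_cpus: int
    disk_replicas: set = field(default_factory=set)  # input files already on disk
    tape_replicas: set = field(default_factory=set)  # input files only on tape


def rank(site: Site, input_files: set, tape_weight: float = 0.5,
         locality_weight: float = 1.0) -> float:
    """Free CPUs, boosted by the fraction of input files held locally.

    A replica on tape counts for less than one on disk (tape_weight < 1).
    """
    if not input_files:
        return float(site.free_cpus)
    on_disk = len(input_files & site.disk_replicas)
    on_tape = len((input_files & site.tape_replicas) - site.disk_replicas)
    locality = (on_disk + tape_weight * on_tape) / len(input_files)
    return site.free_cpus * (1.0 + locality_weight * locality)


inputs = {"f1", "f2", "f3", "f4"}
sites = [
    Site("small-with-one-replica", free_cpus=5, disk_replicas={"f1"}),
    Site("large-no-replicas", free_cpus=400),
]
best = max(sites, key=lambda s: rank(s, inputs))
print(best.name)
```

With these made-up numbers the large site wins even though it holds no replicas, which is the opposite of the behaviour the slide criticises. At equal CPU counts a site holding the files on disk ranks above one holding them on tape.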

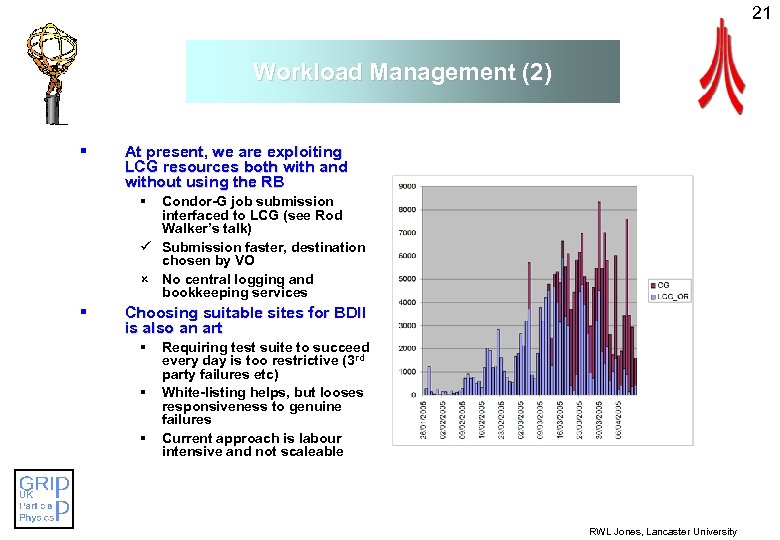

Slide 21: Workload Management (2)

- At present, we are exploiting LCG resources both with and without using the RB
  - Condor-G job submission interfaced to LCG (see Rod Walker’s talk)
    - ✓ Submission faster, destination chosen by VO
    - ✗ No central logging and bookkeeping services
- Choosing suitable sites for BDII is also an art
  - Requiring test suite to succeed every day is too restrictive (3rd party failures etc.)
  - White-listing helps, but loses responsiveness to genuine failures
  - Current approach is labour intensive and not scalable
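
The tension described above, between demanding a daily test pass and keeping a static white-list, suggests an automated middle ground. The following sketch accepts a site if it passed most of its recent daily tests and has not failed several days in a row. The function name, window and thresholds are hypothetical choices for illustration, not a procedure used by ATLAS or LCG.

```python
def site_is_usable(history, window=7, min_pass_rate=0.7, max_recent_failures=2):
    """Decide whether to keep a site in the selection list.

    history: daily test-suite results, oldest first (True = passed).
    A site is dropped if its pass rate over the last `window` days is too low,
    or if its most recent consecutive failures exceed `max_recent_failures`.
    """
    recent = list(history)[-window:]
    if not recent:
        return False  # no evidence yet, so do not trust the site
    trailing_failures = 0
    for passed in reversed(recent):
        if passed:
            break
        trailing_failures += 1
    if trailing_failures > max_recent_failures:
        return False  # stay responsive to a genuine, ongoing failure
    return sum(recent) / len(recent) >= min_pass_rate


# One isolated failure (e.g. a third-party outage) does not remove the site.
print(site_is_usable([True, True, False, True, True, True, True]))
# Three failures in a row do.
print(site_is_usable([True, True, True, True, False, False, False]))
```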

Slide 22: User Interaction

- Running jobs
  - User needs global query commands for all her submitted jobs
  - Really need to be able to tag jobs into groups
  - Needs access to STDOUT/STDERR while job is running
- Information system
  - Should span Grids!
  - Should be accurate (e.g. evaluate free CPUs correctly)
  - Should be robust
  - Should have better user interface than ldapsearch!
- Debugging
  - When a job fails, information is not sufficient
  - Logging info is unfriendly and incomplete
  - Log files on RB and CE are not accessible to ordinary user and difficult to access by support team
  - There is huge scope for development here!
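
The first two requests, a global query over all of a user's jobs and the ability to tag jobs into groups, amount to a small bookkeeping structure on the user side. A minimal sketch follows; the `JobRegistry` class, its methods and the status strings are hypothetical and do not correspond to any existing Grid command or service.

```python
from collections import Counter, defaultdict


class JobRegistry:
    """Client-side record of submitted jobs, with free-form group tags."""

    def __init__(self):
        self._status = {}                # job id -> latest known status
        self._groups = defaultdict(set)  # tag -> set of job ids

    def submit(self, job_id, *tags):
        """Record a newly submitted job under zero or more tags."""
        self._status[job_id] = "SUBMITTED"
        for tag in tags:
            self._groups[tag].add(job_id)

    def update(self, job_id, status):
        """Store the latest status reported for a known job."""
        if job_id not in self._status:
            raise KeyError(job_id)
        self._status[job_id] = status

    def summary(self, tag=None):
        """Count jobs per status, for one tag or for all jobs."""
        ids = self._status if tag is None else self._groups.get(tag, set())
        return Counter(self._status[job_id] for job_id in ids)


registry = JobRegistry()
for i in range(3):
    registry.submit(f"job-{i}", "simulation")
registry.submit("job-3", "analysis")
registry.update("job-0", "DONE")
print(registry.summary("simulation"))
print(registry.summary())
```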

Slide 23: Site Issues

- Sysadmin education and communication
  - Many people need to be trained
    - Example: storage element usage
    - Site defaults to ‘permanent’, but many sysadmins think storage is effectively a scratch area
  - Need clear lines of communication to VOs and to Grid Operations
  - Unannounced down times! Policies!
- Site misconfiguration
  - It is the cause of most problems
  - Debugging site problems is very time-consuming
  - Common misconfigurations:
    - Wrong information published to Information system
    - Worker Node disk space becomes full
    - Incorrect firewall settings, GLOBUS_TCP_RANGE, ssh keys not synchronized
    - NFS stale mounts, etc.
    - Incorrect configuration of the experiment software area
    - Bad middleware installation

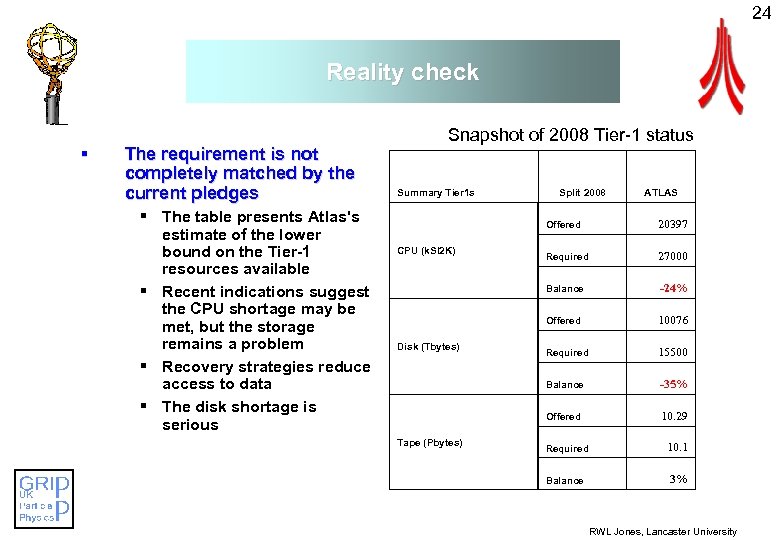

Slide 24: Reality check

- The requirement is not completely matched by the current pledges
  - The table presents ATLAS's estimate of the lower bound on the Tier-1 resources available
  - Recent indications suggest the CPU shortage may be met, but the storage remains a problem
  - Recovery strategies reduce access to data
  - The disk shortage is serious

Snapshot of 2008 Tier-1 status (Summary Tier 1s, Split 2008, ATLAS):

| Resource | Offered | Required | Balance |
| --- | --- | --- | --- |
| CPU (kSI2K) | 20397 | 27000 | -24% |
| Disk (Tbytes) | 10076 | 15500 | -35% |
| Tape (Pbytes) | 10.29 | 10.1 | 3% |
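
The balance column in the Tier-1 snapshot appears to be the relative difference between offered and required resources. The short check below recomputes it; the assignment of each number to a resource (CPU 20397 offered against 27000 required, disk 10076 against 15500, tape 10.29 against 10.1) is read from the flattened slide table and should be treated as an assumption, as should the formula.

```python
# (offered, required) per resource, as read from the 2008 Tier-1 snapshot.
# The pairing of numbers to resources is an assumption based on the slide text.
RESOURCES = {
    "CPU (kSI2K)": (20397, 27000),
    "Disk (Tbytes)": (10076, 15500),
    "Tape (Pbytes)": (10.29, 10.1),
}


def balance(offered: float, required: float) -> float:
    """Relative surplus (+) or shortfall (-) of offered versus required."""
    return (offered - required) / required


for name, (offered, required) in RESOURCES.items():
    print(f"{name}: {balance(offered, required):+.1%}")
```

Under this reading the CPU and disk balances come out at about -24% and -35%, matching the slide. The tape figure computed from the rounded values is nearer +2% than the quoted 3%, presumably because the slide's percentage was derived from unrounded numbers.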

Slide 25: Conclusions

- The Grid is the only practical way to function as a world-wide collaboration
- First experiences have inevitably had problems, but we have real delivery
  - Slower than desirable
  - Problems of coherence
  - But a PhD student can submit 2500 simulation jobs at once and have them complete within 48 hours with 95.3% success rate
    - (all failures were /nfs not accessible)
- Real tests of the computing models continue
  - Analysis on the Grid is still to be seriously demonstrated
  - Calibration and alignment procedures need to be brought in
- Resources are an issue
  - There are shortfalls with respect to the initial requirements
  - The requirements grow with time
  - This may be difficult for Tier-2s especially

Slide 26: A Final Thought

“It is amazing what can be achieved when you do not care who will get the credit” (Harry S. Truman)
