96f81b56b0c2c072f04db21e41e2756f.ppt

- Number of slides: 15

ATLAS DC2 & Continuous production
ATLAS Physics Plenary, 26 February 2004
Gilbert Poulard, CERN PH-ATC
February 26, 2004 G. Poulard - ATLAS Physics plenary

Outline

- Goals
- Operation; scenario; time scale
- Resources
- Production; Grid and Tools
- Role of Tiers
- Comments on schedule
- Analysis will be covered in tomorrow’s talk and in the next software week.

DC2: goals

- At this stage the goals include:
  - Full use of Geant4; POOL; LCG applications
  - Pile-up and digitization in Athena
  - Deployment of the complete Event Data Model and the Detector Description
  - Simulation of full ATLAS and 2004 combined Testbeam
  - Test the calibration and alignment procedures
  - Make wide use of the GRID middleware and tools
  - Large scale physics analysis
  - Computing model studies (document end 2004)
  - Run as much as possible of the production on LCG-2

DC2 operation

- Consider DC2 as a three-part operation:
  - part I: production of simulated data (May-June 2004)
    - needs Geant4, digitization and pile-up in Athena, POOL persistency
    - “minimal” reconstruction just to validate simulation suite
    - will run on any computing facilities we can get access to around the world
  - part II: test of Tier-0 operation (July 2004)
    - needs full reconstruction software following RTF report design, definition of AODs and TAGs
    - (calibration/alignment and) reconstruction will run on Tier-0 prototype as if data were coming from the online system (at 10% of the rate)
    - output (ESD+AOD) will be distributed to Tier-1s in real time for analysis
  - part III: test of distributed analysis on the Grid (August-Oct. 2004)
    - access to event and non-event data from anywhere in the world both in organized and chaotic ways
  - in parallel: run distributed reconstruction on simulated data

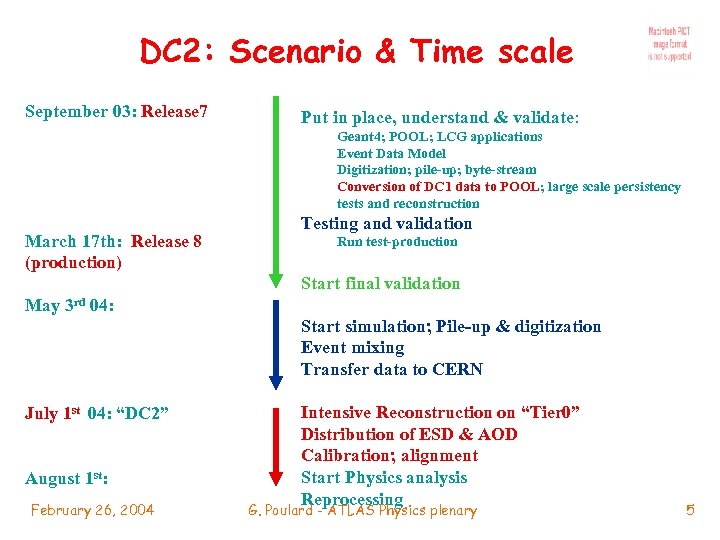

DC2: Scenario & Time scale

- September 03: Release 7
  - Put in place, understand & validate:
    - Geant4; POOL; LCG applications
    - Event Data Model
    - Digitization; pile-up; byte-stream
    - Conversion of DC1 data to POOL; large scale persistency tests and reconstruction
- March 17th: Release 8 (production)
  - Testing and validation
  - Run test-production
  - Start final validation
- May 3rd 04:
  - Start simulation; Pile-up & digitization
  - Event mixing
  - Transfer data to CERN
- July 1st 04: “DC2”
  - Intensive Reconstruction on “Tier 0”
  - Distribution of ESD & AOD
  - Calibration; alignment
- August 1st:
  - Start Physics analysis
  - Reprocessing

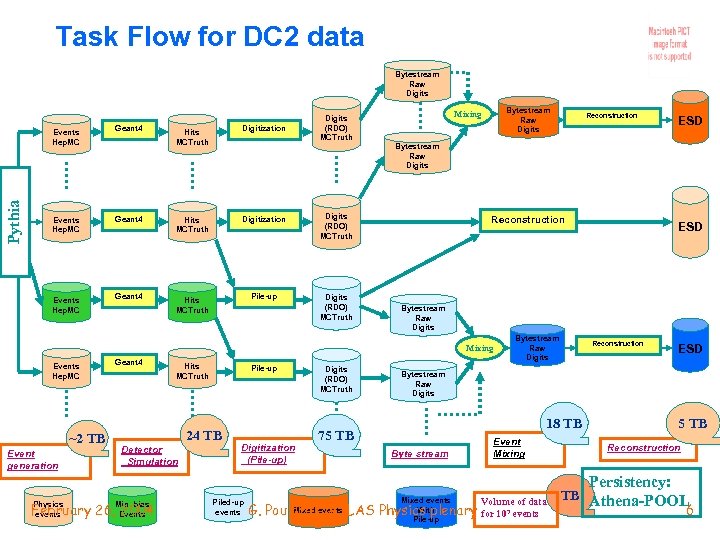

Task Flow for DC2 data (diagram)

- Event generation (Pythia): Events (HepMC)
- Detector simulation (Geant4): Hits + MCTruth, for physics events and min. bias events
- Digitization (Pile-up): Digits (RDO) + MCTruth; piled-up events
- Event mixing: Bytestream Raw Digits; mixed events, with pile-up
- Reconstruction: ESD
- Persistency: Athena-POOL
- Volume of data for 10^7 events, as marked on the diagram: ~2 TB, 24 TB, 75 TB, 18 TB, 5 TB
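
The task flow can be read as a chain in which each step consumes the data product of the previous one. The sketch below is only an illustration of that reading: it flattens the diagram into a single linear chain (the separate physics, min. bias and mixing branches are ignored), and the names `Stage`, `DC2_CHAIN` and `is_consistent` are hypothetical, not part of any ATLAS software.

```python
from typing import List, NamedTuple, Optional


class Stage(NamedTuple):
    """One processing step with the data product it reads and writes."""
    name: str
    consumes: Optional[str]  # None for the first step, which has no input
    produces: str


# Simplified linear reading of the task-flow diagram (an assumption).
DC2_CHAIN: List[Stage] = [
    Stage("Event generation (Pythia)", None, "HepMC events"),
    Stage("Detector simulation (Geant4)", "HepMC events", "Hits + MCTruth"),
    Stage("Digitization (pile-up)", "Hits + MCTruth", "Digits (RDO) + MCTruth"),
    Stage("Event mixing", "Digits (RDO) + MCTruth", "Bytestream raw digits"),
    Stage("Reconstruction", "Bytestream raw digits", "ESD"),
]


def is_consistent(chain: List[Stage]) -> bool:
    """True if every stage reads exactly what the previous stage wrote."""
    return all(nxt.consumes == prev.produces
               for prev, nxt in zip(chain, chain[1:]))


if __name__ == "__main__":
    for stage in DC2_CHAIN:
        print(f"{stage.name}: {stage.consumes} -> {stage.produces}")
    print("chain consistent:", is_consistent(DC2_CHAIN))
```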

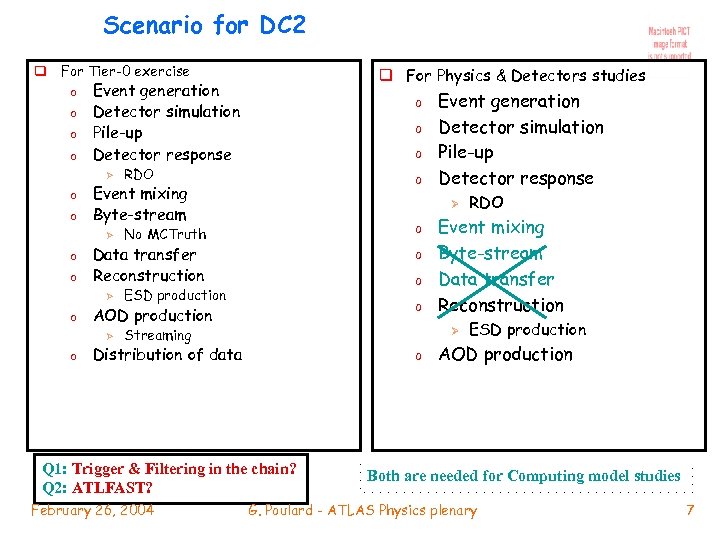

Scenario for DC2

- For Tier-0 exercise
  - Event generation
  - Detector simulation
  - Pile-up
  - Detector response
    - RDO
  - Event mixing
  - Byte-stream
    - No MCTruth
  - Data transfer
  - Reconstruction
    - ESD production
    - AOD production
  - Distribution of data
- For Physics & Detectors studies
  - Event generation
  - Detector simulation
  - Pile-up
  - Detector response
    - RDO
  - Event mixing
  - Byte-stream
  - Data transfer
  - Reconstruction
    - ESD production
    - AOD production
- Both are needed for Computing model studies
- Streaming
- Q1: Trigger & Filtering in the chain?
- Q2: ATLFAST?

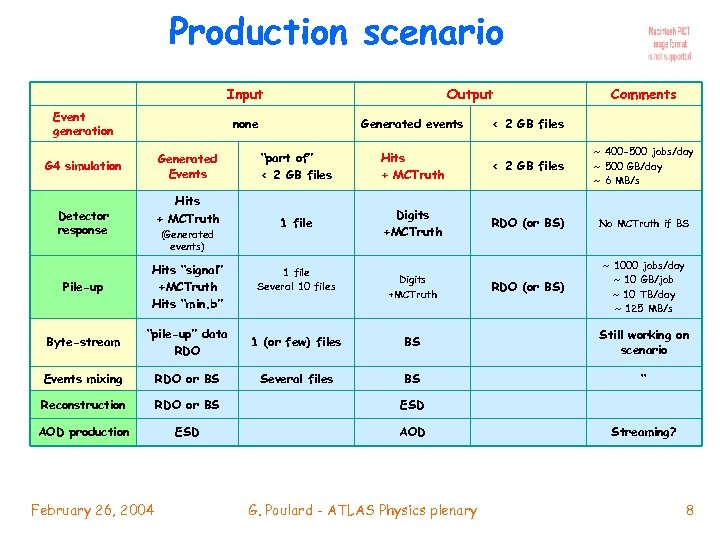

Production scenario

| Process | Input | No. of files | Output | Comments |
|---|---|---|---|---|
| Event generation | none | | Generated events | < 2 GB files |
| G4 simulation | Generated events (“part of”) | | Hits + MCTruth | < 2 GB files; ~400-500 jobs/day; ~500 GB/day; ~6 MB/s |
| Detector response | Hits + MCTruth (Generated events) | 1 file | Digits + MCTruth; RDO (or BS) | No MCTruth if BS |
| Pile-up | Hits “signal” + MCTruth; Hits “min. b” | 1 file; several 10 files | Digits + MCTruth; RDO (or BS) | ~1000 jobs/day; ~10 GB/job; ~10 TB/day; ~125 MB/s |
| Byte-stream | “pile-up” data; RDO | 1 (or few) files | BS | Still working on scenario |
| Events mixing | RDO or BS | Several files | BS | as above |
| Reconstruction | RDO or BS | | ESD | |
| AOD production | ESD | | AOD | Streaming? |
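
The rates quoted in the production scenario follow from simple arithmetic: jobs per day times output per job gives a daily volume, and dividing by the seconds in a day gives a sustained rate. The sketch below reproduces that arithmetic under two assumptions that the slide does not state: decimal units (1 GB = 1000 MB) and production running evenly over 24 hours. The function names are illustrative only.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # assumes production is spread over the full day


def daily_volume_gb(jobs_per_day: float, gb_per_job: float) -> float:
    """Daily output volume in GB for a given job rate and output size per job."""
    return jobs_per_day * gb_per_job


def sustained_rate_mb_per_s(gb_per_day: float) -> float:
    """Average rate in MB/s for a daily volume, using 1 GB = 1000 MB (assumption)."""
    return gb_per_day * 1000 / SECONDS_PER_DAY


if __name__ == "__main__":
    # G4 simulation: ~500 GB/day quoted on the slide.
    print(f"simulation: {sustained_rate_mb_per_s(500):.1f} MB/s")
    # Pile-up: ~1000 jobs/day at ~10 GB/job quoted on the slide.
    pileup_gb = daily_volume_gb(1000, 10)
    print(f"pile-up: {pileup_gb / 1000:.0f} TB/day, "
          f"{sustained_rate_mb_per_s(pileup_gb):.0f} MB/s")
```

Under these assumptions 500 GB/day comes out at about 5.8 MB/s, in line with the quoted ~6 MB/s, while 10 TB/day comes out at about 116 MB/s, somewhat below the quoted ~125 MB/s.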

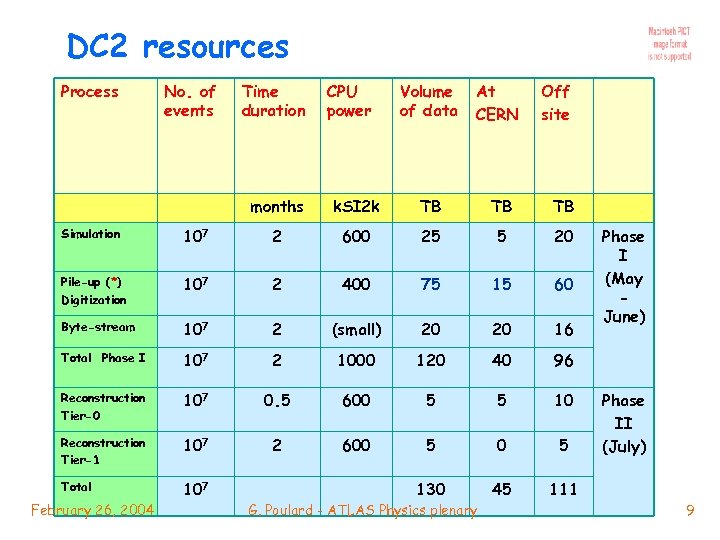

DC2 resources

| Process | No. of events | Time duration (months) | CPU power (kSI2k) | Volume of data (TB) | At CERN (TB) | Off site (TB) |
|---|---|---|---|---|---|---|
| Simulation | 10^7 | 2 | 600 | 25 | 5 | 20 |
| Pile-up (*) Digitization | 10^7 | 2 | 400 | 75 | 15 | 60 |
| Byte-stream | 10^7 | 2 | (small) | 20 | 20 | 16 |
| Total Phase I | 10^7 | 2 | 1000 | 120 | 40 | 96 |
| Reconstruction Tier-0 | 10^7 | 0.5 | 600 | 5 | 5 | 10 |
| Reconstruction Tier-1 | 10^7 | 2 | 600 | 5 | | |
| Total | 10^7 | | | 130 | 45 | 111 |

Phase I (May-June); Phase II (July)
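
The "Total Phase I" row can be cross-checked by summing the three Phase I processes column by column. The sketch below does this with the figures quoted in the table; treating the "(small)" CPU entry for byte-stream as 0 is an assumption made only so the sum can be computed, and the names `PHASE_I_ROWS` and `column_totals` are illustrative.

```python
from typing import Dict

COLUMNS = ("cpu_ksi2k", "volume_tb", "at_cern_tb", "off_site_tb")

# Figures as quoted in the resources table for Phase I.
PHASE_I_ROWS: Dict[str, Dict[str, int]] = {
    "Simulation": {
        "cpu_ksi2k": 600, "volume_tb": 25, "at_cern_tb": 5, "off_site_tb": 20},
    "Pile-up / Digitization": {
        "cpu_ksi2k": 400, "volume_tb": 75, "at_cern_tb": 15, "off_site_tb": 60},
    # CPU is listed as "(small)"; taken as 0 here (assumption).
    "Byte-stream": {
        "cpu_ksi2k": 0, "volume_tb": 20, "at_cern_tb": 20, "off_site_tb": 16},
}

# The "Total Phase I" row as quoted in the table.
QUOTED_PHASE_I_TOTAL: Dict[str, int] = {
    "cpu_ksi2k": 1000, "volume_tb": 120, "at_cern_tb": 40, "off_site_tb": 96}


def column_totals(rows: Dict[str, Dict[str, int]]) -> Dict[str, int]:
    """Sum every numeric column over all rows."""
    return {col: sum(row[col] for row in rows.values()) for col in COLUMNS}


if __name__ == "__main__":
    totals = column_totals(PHASE_I_ROWS)
    for col in COLUMNS:
        print(f"{col}: computed {totals[col]}, quoted {QUOTED_PHASE_I_TOTAL[col]}")
```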

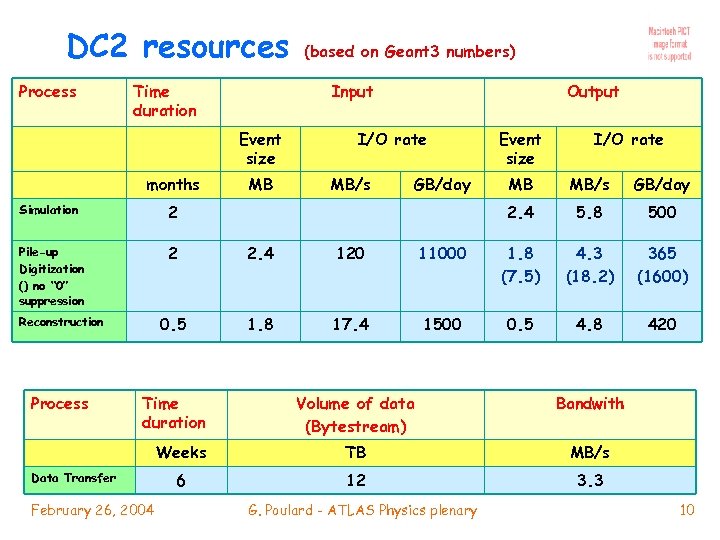

DC2 resources (based on Geant3 numbers)

| Process | Time duration (months) | Input event size (MB) | Input I/O rate (MB/s) | Input I/O rate (GB/day) | Output event size (MB) | Output I/O rate (MB/s) | Output I/O rate (GB/day) |
|---|---|---|---|---|---|---|---|
| Simulation | 2 | | | | 2.4 | 5.8 | 500 |
| Pile-up Digitization | 2 | 2.4 | 120 | 11000 | 1.8 (7.5) | 4.3 (18.2) | 365 (1600) |
| Reconstruction | 0.5 | 1.8 | 17.4 | 1500 | 0.5 | 4.8 | 420 |

Values in parentheses: no “0” suppression.

| Process | Time duration (weeks) | Volume of data (Bytestream) (TB) | Bandwidth (MB/s) |
|---|---|---|---|
| Data Transfer | 6 | 12 | 3.3 |
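
The data-transfer bandwidth is the byte-stream volume divided by the transfer period, and the GB/day columns are the MB/s columns scaled by the seconds in a day. The sketch below shows both conversions, assuming decimal units (1 TB = 10^6 MB, 1 GB = 1000 MB) and a continuous transfer; neither assumption is stated on the slide, and the function names are illustrative.

```python
SECONDS_PER_DAY = 86_400


def transfer_bandwidth_mb_per_s(volume_tb: float, weeks: float) -> float:
    """Average bandwidth needed to move volume_tb in the given number of weeks."""
    seconds = weeks * 7 * SECONDS_PER_DAY
    return volume_tb * 1_000_000 / seconds  # 1 TB = 10^6 MB (assumption)


def mb_per_s_to_gb_per_day(rate_mb_per_s: float) -> float:
    """Convert a sustained rate in MB/s into a daily volume in GB."""
    return rate_mb_per_s * SECONDS_PER_DAY / 1000  # 1 GB = 1000 MB (assumption)


if __name__ == "__main__":
    # 12 TB of byte-stream data over 6 weeks, as quoted in the table.
    print(f"transfer: {transfer_bandwidth_mb_per_s(12, 6):.1f} MB/s")
    # Simulation output rate of 5.8 MB/s, as quoted in the table.
    print(f"simulation output: {mb_per_s_to_gb_per_day(5.8):.0f} GB/day")
```

With these assumptions 12 TB in 6 weeks gives about 3.3 MB/s and 5.8 MB/s gives about 500 GB/day, matching the quoted figures.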

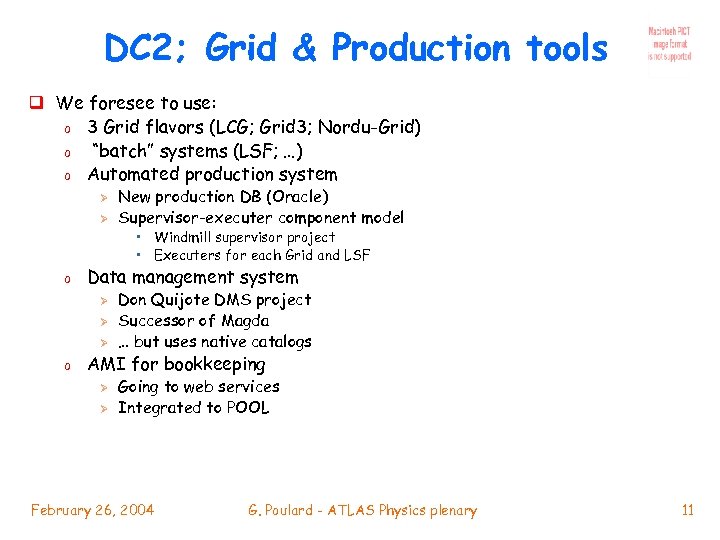

DC2; Grid & Production tools

- We plan to use:
  - 3 Grid flavors (LCG; Grid3; NorduGrid)
  - “batch” systems (LSF; …)
  - Automated production system
    - New production DB (Oracle)
    - Supervisor-executor component model
      - Windmill supervisor project
      - Executors for each Grid and LSF
  - Data management system
    - Don Quijote DMS project
    - Successor of Magda
    - … but uses native catalogs
  - AMI for bookkeeping
    - Going to web services
    - Integrated with POOL

![Atlas Production System schema](https://present5.com/presentation/96f81b56b0c2c072f04db21e41e2756f/image-12.jpg)

Atlas Production System schema (diagram)

- Task = [job]*
- Dataset = [partition]*
- Services: Data Management System; AMI
- Job description:
  - Task (Dataset): Location Hint (Task); Task Transf. Definition + physics signature
  - Job (Partition): Location Hint (Job); Partition Transf. Definition (executable name, release version, signature)
  - Job Run Info
  - Human intervention
- Supervisors 1 to 4 feed the executors:
  - US Grid Executor (Chimera; US Grid)
  - LCG Executor (RB; LCG)
  - NG Executor (RB; NG)
  - LSF Executor (Local Batch)
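
The relation "Task = [job]*" and the supervisor-executor split can be illustrated with a small data-structure sketch. Everything below is hypothetical: the class names, fields, status values and the rule that a supervisor only takes jobs whose location hint matches its executor (or is unset) are assumptions made for illustration, not the actual Windmill or executor interfaces.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Job:
    """One job of a task; produces one partition of the task's dataset."""
    name: str
    transformation: str                   # executable name (hypothetical value)
    release: str                          # software release version
    location_hint: Optional[str] = None   # preferred executor flavour, if any
    status: str = "pending"


@dataclass
class Task:
    """Task = [job]*: a named collection of jobs."""
    name: str
    jobs: List[Job] = field(default_factory=list)


class Executor:
    """Stand-in for a Grid- or batch-specific submission layer."""

    def __init__(self, flavour: str) -> None:
        self.flavour = flavour
        self.submitted: List[str] = []

    def submit(self, job: Job) -> None:
        # A real executor would translate the job for its Grid or batch system.
        self.submitted.append(job.name)


class Supervisor:
    """Picks pending jobs and hands them to its single executor."""

    def __init__(self, executor: Executor) -> None:
        self.executor = executor

    def process(self, task: Task) -> int:
        """Submit all matching pending jobs; return how many were submitted."""
        count = 0
        for job in task.jobs:
            if job.status != "pending":
                continue
            if job.location_hint not in (None, self.executor.flavour):
                continue
            self.executor.submit(job)
            job.status = "submitted"
            count += 1
        return count


if __name__ == "__main__":
    task = Task("dc2.simul", [
        Job("simul.0001", "g4sim", "8", location_hint="LCG"),
        Job("simul.0002", "g4sim", "8", location_hint="NG"),
        Job("simul.0003", "g4sim", "8"),
    ])
    for flavour in ("LCG", "NG"):
        supervisor = Supervisor(Executor(flavour))
        print(flavour, "submitted", supervisor.process(task), "job(s)")
```

Keeping the supervisor free of Grid-specific logic is the point of the split in this sketch: adding another Grid flavour or a local batch system only needs another `Executor`.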

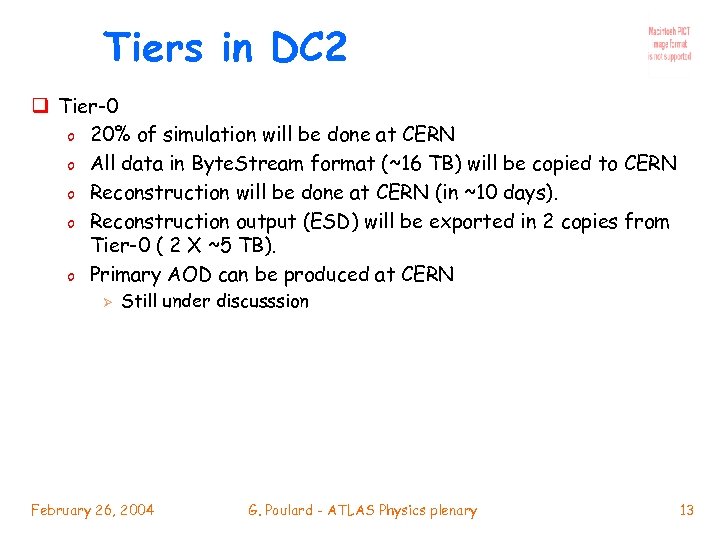

Tiers in DC2

- Tier-0
  - 20% of simulation will be done at CERN
  - All data in ByteStream format (~16 TB) will be copied to CERN
  - Reconstruction will be done at CERN (in ~10 days).
  - Reconstruction output (ESD) will be exported in 2 copies from Tier-0 (2 x ~5 TB).
  - Primary AOD can be produced at CERN
    - Still under discussion

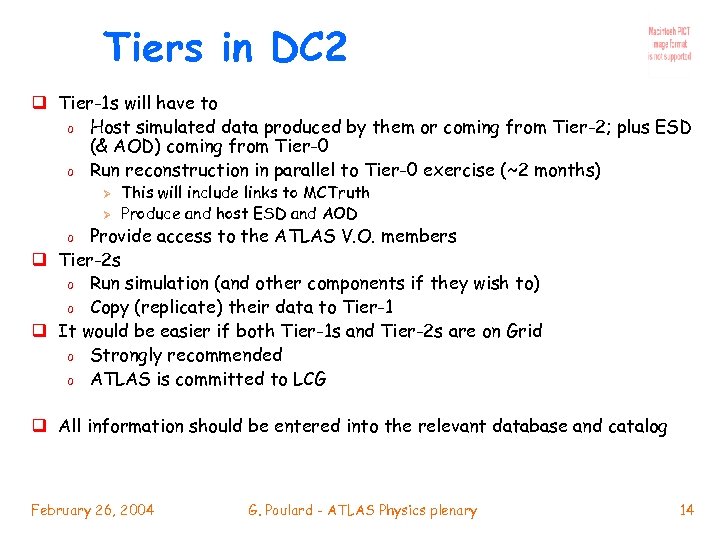

Tiers in DC2

- Tier-1s will have to
  - Host simulated data produced by them or coming from Tier-2; plus ESD (& AOD) coming from Tier-0
  - Run reconstruction in parallel to Tier-0 exercise (~2 months)
    - This will include links to MCTruth
    - Produce and host ESD and AOD
  - Provide access to the ATLAS V.O. members
- Tier-2s
  - Run simulation (and other components if they wish to)
  - Copy (replicate) their data to Tier-1
- It would be easier if both Tier-1s and Tier-2s are on Grid
  - Strongly recommended
  - ATLAS is committed to LCG
- All information should be entered into the relevant database and catalog

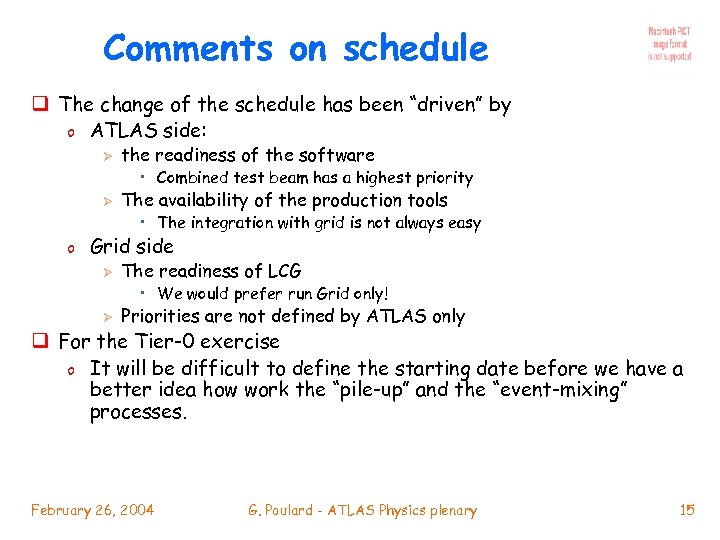

Comments on schedule

- The change of the schedule has been “driven” by
  - ATLAS side:
    - The readiness of the software
      - Combined test beam has a higher priority
    - The availability of the production tools
      - The integration with grid is not always easy
  - Grid side:
    - The readiness of LCG
      - We would prefer to run Grid only!
    - Priorities are not defined by ATLAS only
- For the Tier-0 exercise
  - It will be difficult to define the starting date before we have a better idea of how the “pile-up” and the “event-mixing” processes work.
