Assieme: Finding and Leveraging Implicit References in a Web Search Interface for Programmers Raphael Hoffmann, James Fogarty, Daniel S. Weld University of Washington, Seattle UIST 2007

Programmers Use Search
• To identify an API
• To seek information about an API
• To find examples of how to use an API
Example task: “Programmatically output an Acrobat PDF file in Java.”

Example: General Web Search Interface

Example: Code-Specific Web Search Interface …

Problems
• Information is dispersed: tutorials, API itself, documentation, pages with samples
• Difficult and time-consuming to …
  – locate required pieces,
  – get an overview of alternatives,
  – judge relevance and quality of results,
  – understand dependencies
• Many page visits required

With Assieme we …
• Designed a new Web search interface
• Developed the necessary inference

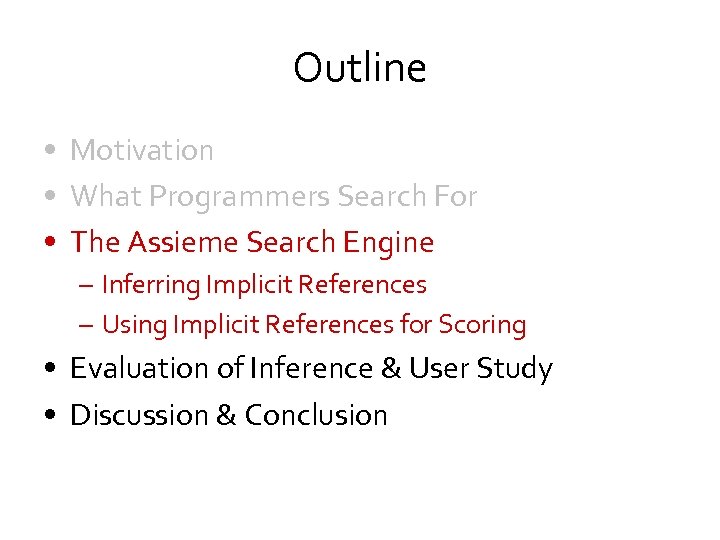

Outline
• Motivation
• What Programmers Search For
• The Assieme Search Engine
  – Inferring Implicit References
  – Using Implicit References for Scoring
• Evaluation of Inference & User Study
• Discussion & Conclusion

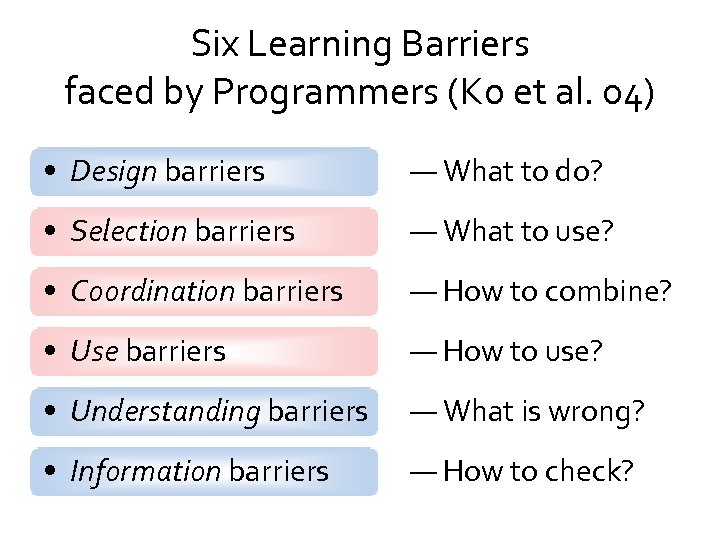

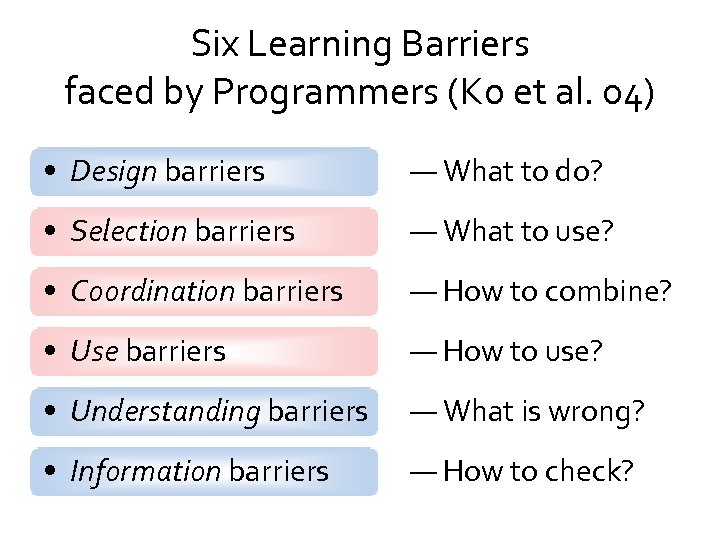

Six Learning Barriers Faced by Programmers (Ko et al. 04)
• Design barriers: What to do?
• Selection barriers: What to use?
• Coordination barriers: How to combine?
• Use barriers: How to use?
• Understanding barriers: What is wrong?
• Information barriers: How to check?

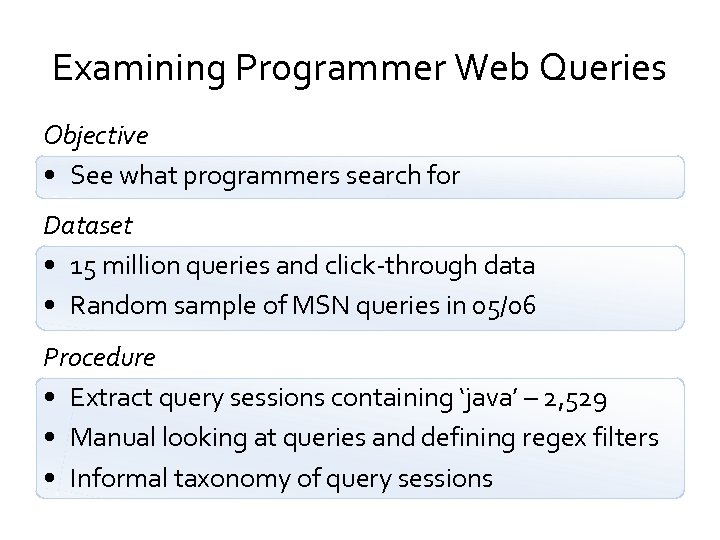

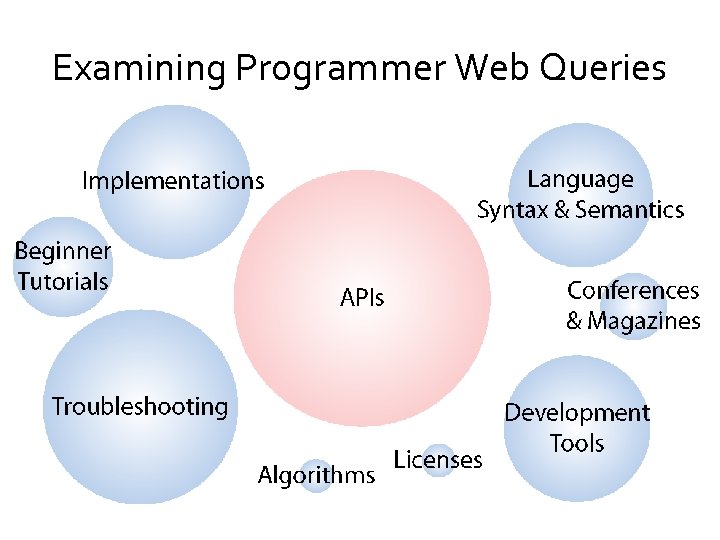

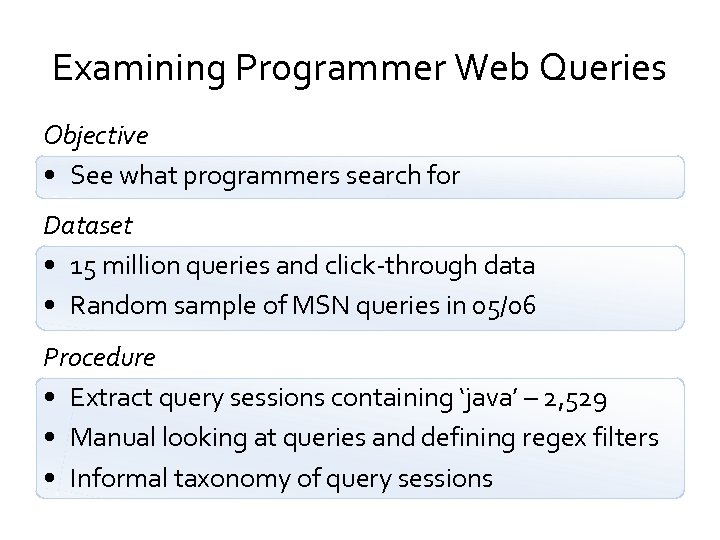

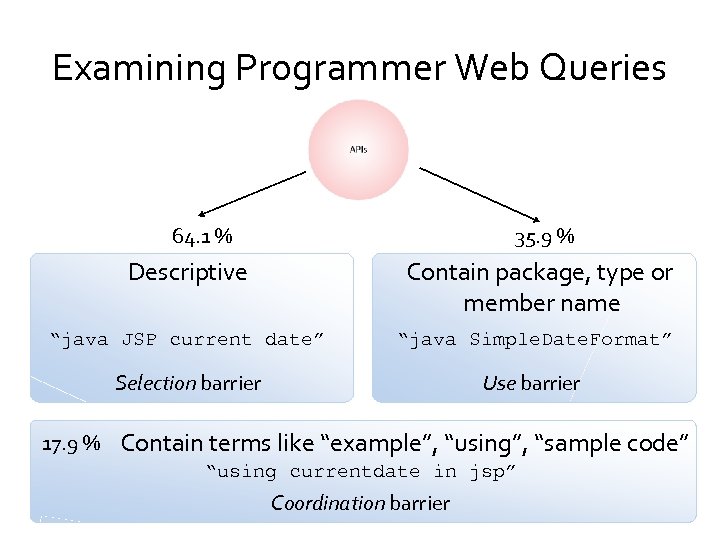

Examining Programmer Web Queries
Objective
• See what programmers search for
Dataset
• 15 million queries and click-through data
• Random sample of MSN queries in 05/06
Procedure
• Extract query sessions containing ‘java’ (2,529 sessions)
• Manually inspect the queries and define regex filters
• Build an informal taxonomy of query sessions

Examining Programmer Web Queries

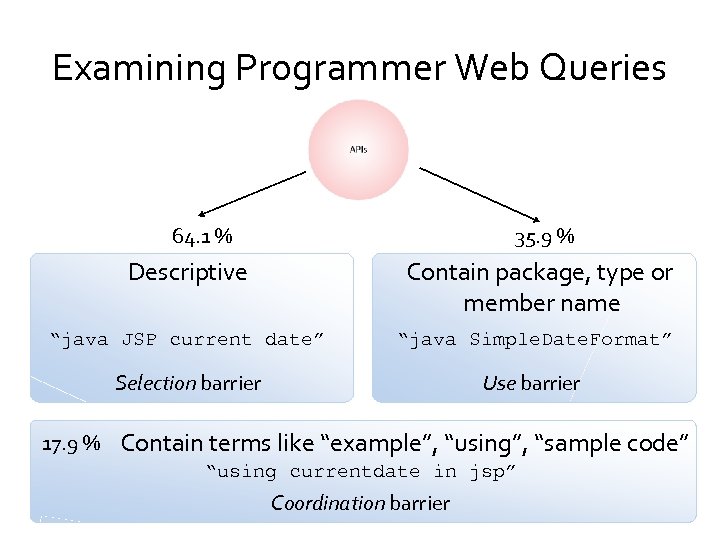

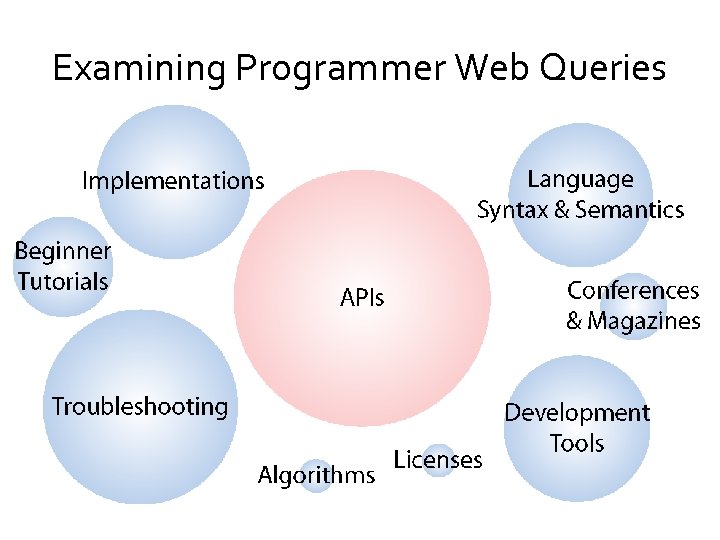

Examining Programmer Web Queries
• 64.1%: descriptive queries, e.g. “java JSP current date” (selection barrier)
• 35.9%: queries containing a package, type, or member name, e.g. “java SimpleDateFormat” (use barrier)
• 17.9%: queries containing terms like “example”, “using”, “sample code”, e.g. “using currentdate in jsp” (coordination barrier)
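The slides do not list the actual regex filters behind this taxonomy. The following is a minimal sketch that assumes three hypothetical patterns:
• a CamelCase word signals a type name;
• dotted lowercase words signal a package;
• the slide's usage terms signal a request for examples.

Under those assumptions, the categories could be assigned like this:

```java
import java.util.EnumSet;
import java.util.Set;
import java.util.regex.Pattern;

/** Hypothetical regex filters that sort programmer queries into the categories above. */
public class QueryClassifier {

    public enum Label { DESCRIPTIVE, NAMES_API, ASKS_FOR_EXAMPLE }

    // Assumed signal for a type name: a CamelCase word such as "SimpleDateFormat".
    private static final Pattern CAMEL_CASE =
            Pattern.compile("\\b[A-Z][a-z0-9]+(?:[A-Z][a-z0-9]*)+\\b");
    // Assumed signal for a package or member path: dotted lowercase words such as "java.util".
    private static final Pattern DOTTED =
            Pattern.compile("\\b[a-z]+(?:\\.[a-z]+)+\\b");
    // Usage-oriented terms taken from the slide.
    private static final Pattern EXAMPLE_TERMS =
            Pattern.compile("\\b(?:examples?|using|sample code)\\b", Pattern.CASE_INSENSITIVE);

    /** A query is either descriptive or names an API; it may additionally ask for an example. */
    public static Set<Label> classify(String query) {
        Set<Label> labels = EnumSet.noneOf(Label.class);
        boolean namesApi = CAMEL_CASE.matcher(query).find() || DOTTED.matcher(query).find();
        labels.add(namesApi ? Label.NAMES_API : Label.DESCRIPTIVE);
        if (EXAMPLE_TERMS.matcher(query).find()) {
            labels.add(Label.ASKS_FOR_EXAMPLE);
        }
        return labels;
    }

    public static void main(String[] args) {
        String[] queries = {"java JSP current date", "java SimpleDateFormat", "using currentdate in jsp"};
        for (String q : queries) {
            System.out.println(q + " -> " + classify(q));
        }
    }
}
```

For the three slide queries, `main` prints `[DESCRIPTIVE]`, `[NAMES_API]` and `[DESCRIPTIVE, ASKS_FOR_EXAMPLE]`. The CamelCase pattern deliberately ignores all-caps acronyms such as JSP. Real filters would need many more such cases.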

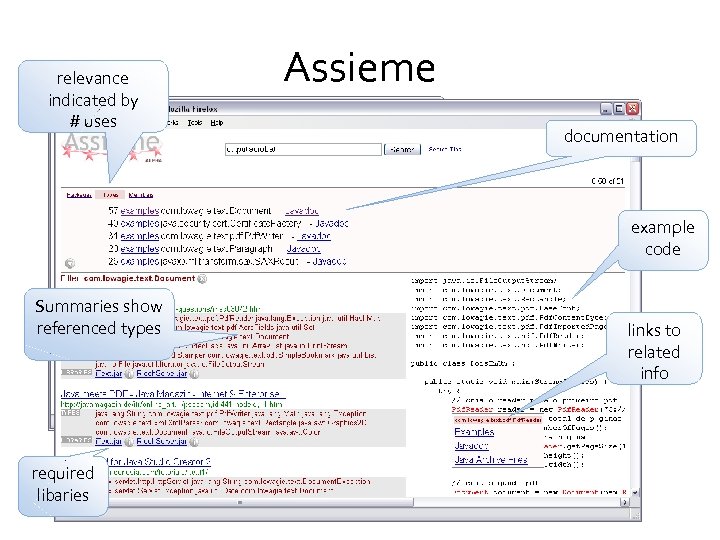

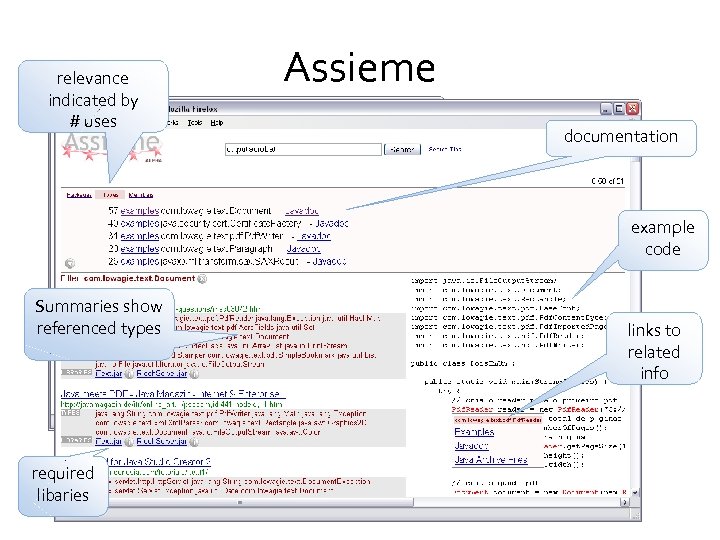

Assieme
• Documentation
• Example code
• Relevance indicated by # uses
• Summaries show referenced types, required libraries, and links to related info

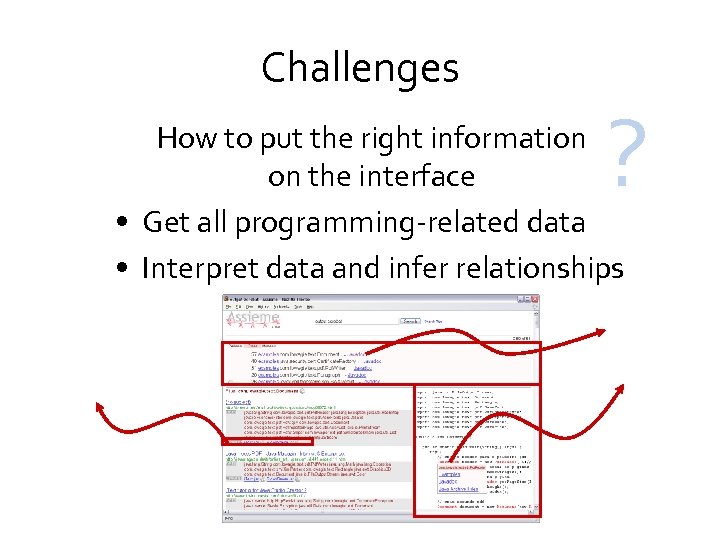

Challenges: how to put the right information on the interface
• Get all programming-related data
• Interpret the data and infer relationships

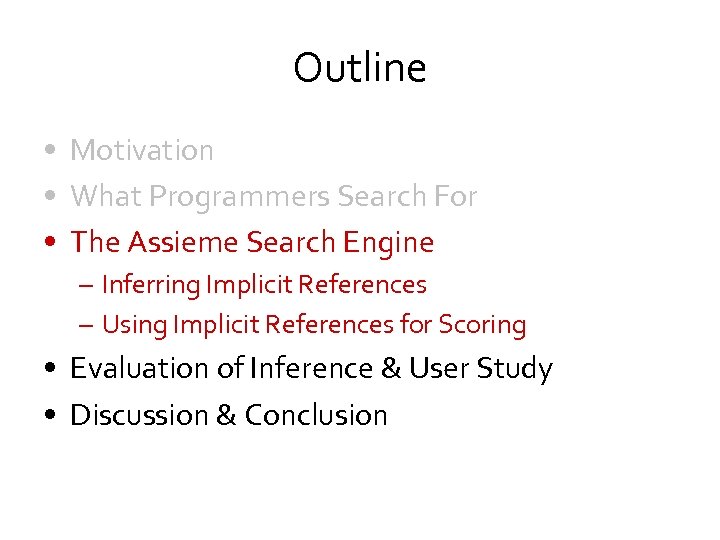

Outline
• Motivation
• What Programmers Search For
• The Assieme Search Engine
  – Inferring Implicit References
  – Using Implicit References for Scoring
• Evaluation of Inference & User Study
• Discussion & Conclusion

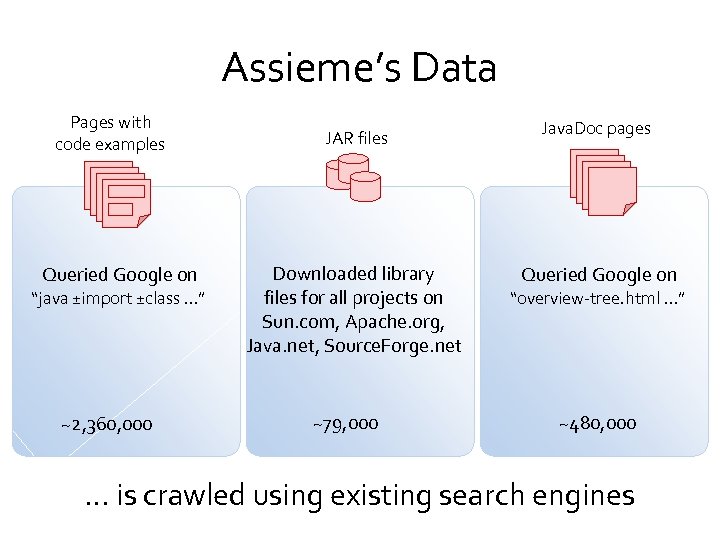

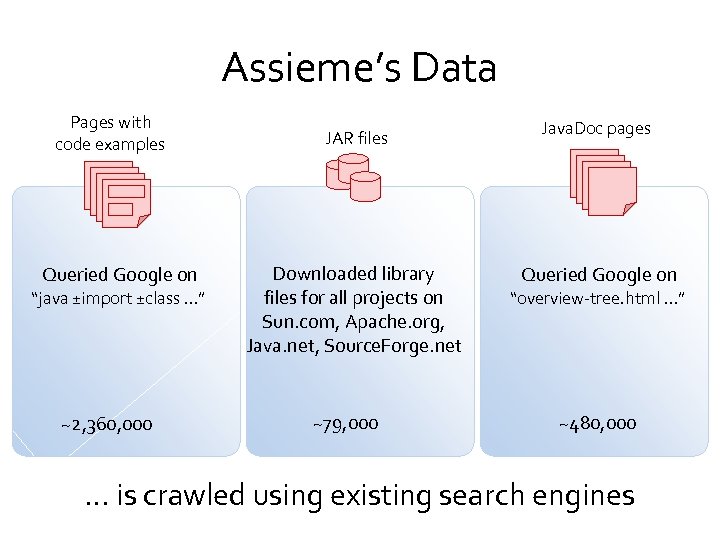

Assieme’s Data
• Pages with code examples: queried Google on “java ±import ±class …” (~2,360,000)
• JAR files: downloaded library files for all projects on Sun.com, Apache.org, Java.net, SourceForge.net (~79,000)
• JavaDoc pages: queried Google on “overview-tree.html …” (~480,000)
… is crawled using existing search engines

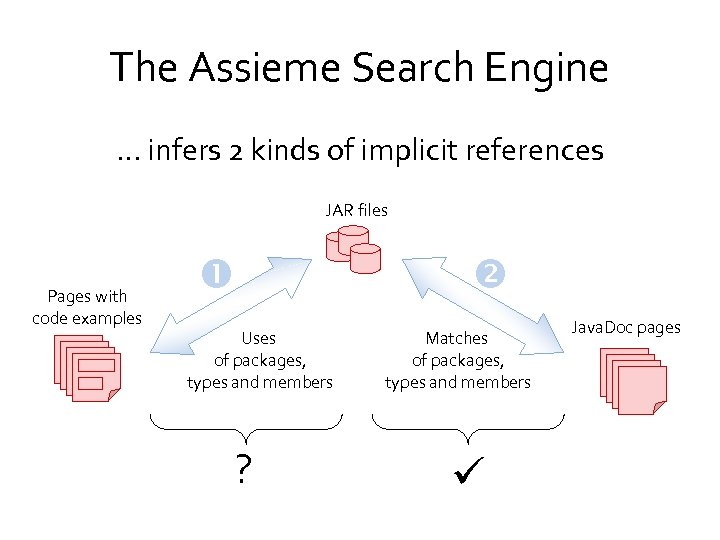

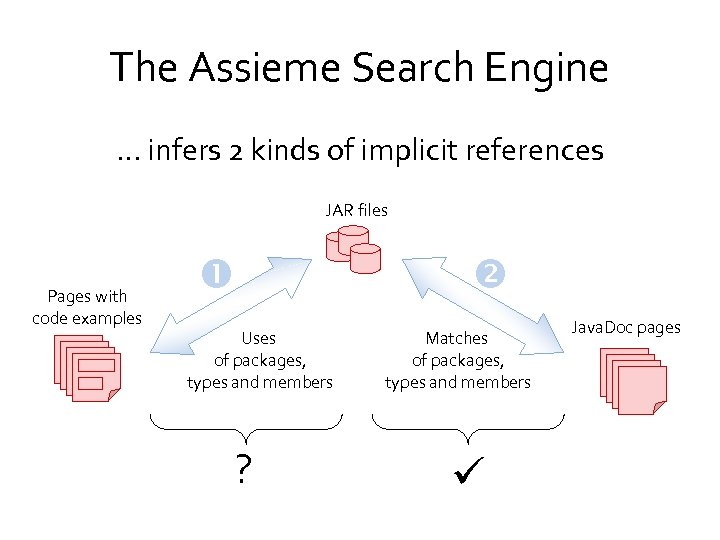

The Assieme Search Engine … infers 2 kinds of implicit references
• Uses of packages, types and members: between pages with code examples and JAR files
• Matches of packages, types and members: between JavaDoc pages and JAR files
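The following is a minimal sketch of one way the two kinds of implicit references could be stored. The goal is an interface that can show a "# uses" count and the matching documentation for an API. The class and method names are hypothetical. Counting distinct pages, rather than individual call sites, as "uses" is also an assumption.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

/** Hypothetical in-memory store for both kinds of implicit references, keyed by fully qualified API name. */
public class ReferenceIndex {
    // "Uses": pages with code examples that reference a package, type, or member.
    private final Map<String, Set<String>> codePagesByApi = new HashMap<>();
    // "Matches": JavaDoc pages that document a package, type, or member found in a JAR file.
    private final Map<String, Set<String>> docPagesByApi = new HashMap<>();

    public void addUse(String codePageUrl, String apiName) {
        codePagesByApi.computeIfAbsent(apiName, k -> new LinkedHashSet<>()).add(codePageUrl);
    }

    public void addMatch(String javadocUrl, String apiName) {
        docPagesByApi.computeIfAbsent(apiName, k -> new LinkedHashSet<>()).add(javadocUrl);
    }

    /** Number of distinct code-sample pages that use the API (each page counts once). */
    public int useCount(String apiName) {
        return codePagesByApi.getOrDefault(apiName, Collections.emptySet()).size();
    }

    public Set<String> codePages(String apiName) {
        return Collections.unmodifiableSet(codePagesByApi.getOrDefault(apiName, Collections.emptySet()));
    }

    public Set<String> docPages(String apiName) {
        return Collections.unmodifiableSet(docPagesByApi.getOrDefault(apiName, Collections.emptySet()));
    }

    public static void main(String[] args) {
        ReferenceIndex index = new ReferenceIndex();
        index.addUse("http://example.org/map-tutorial.html", "java.util.HashMap");
        index.addUse("http://example.org/cache-sample.html", "java.util.HashMap");
        index.addUse("http://example.org/map-tutorial.html", "java.util.HashMap"); // same page again: ignored
        index.addMatch("http://example.org/api/java/util/HashMap.html", "java.util.HashMap");
        System.out.println(index.useCount("java.util.HashMap")); // 2
        System.out.println(index.docPages("java.util.HashMap"));
    }
}
```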

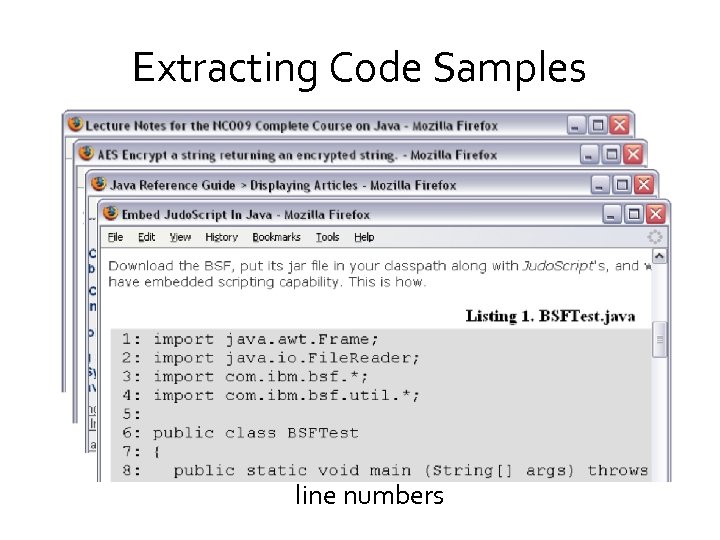

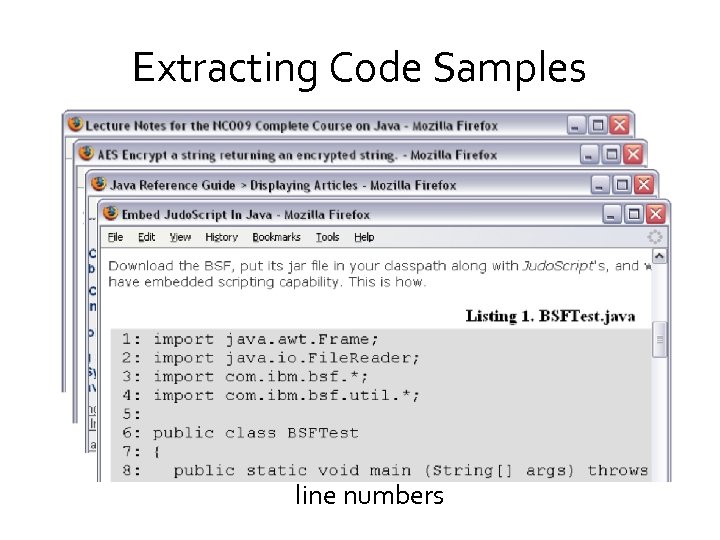

Extracting Code Samples
• Unclear segmentation
• Code in a different language (C++)
• Distracting terms
• ‘…’ in code
• Line numbers

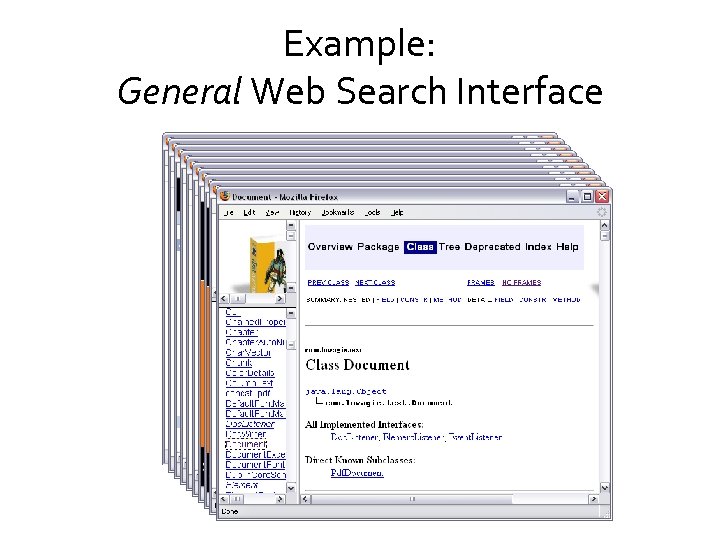

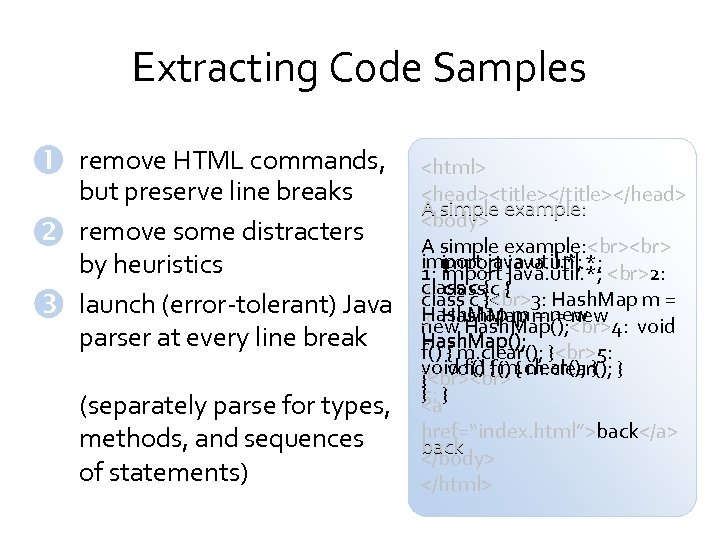

Extracting Code Samples
• Remove HTML tags, but preserve line breaks
• Remove some distractors by heuristics
• Launch an (error-tolerant) Java parser at every line break (separately parse for types, methods, and sequences of statements)
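The first two extraction steps can be sketched as plain string processing. This is an illustration under stated assumptions, not Assieme's implementation. The following are hypothetical choices:
• the list of line-breaking tags;
• the handful of decoded entities;
• the two distractor heuristics (leading line numbers, lines consisting only of an ellipsis).

The third step is represented only by the offsets at which an error-tolerant Java parser would be launched. The parser itself is not shown.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical pre-parsing steps for pulling code samples out of HTML pages. */
public class CodeExtractionPrep {
    // Tags assumed to produce a line break when rendered (not an exhaustive list).
    private static final Pattern BREAK_TAGS =
            Pattern.compile("(?i)<\\s*/?\\s*(?:br|p|div|pre|li|tr)\\b[^>]*>");
    private static final Pattern ANY_TAG = Pattern.compile("<[^>]*>");
    // Heuristic: a line starting with a number, an optional ':' or '.', then whitespace.
    private static final Pattern LINE_NUMBER =
            Pattern.compile("(?m)^[ \\t]*\\d+[ \\t]*[:.]?[ \\t]+");
    // Heuristic: a line that is only an ellipsis (three dots or the single ellipsis character).
    private static final Pattern ELLIPSIS_LINE =
            Pattern.compile("(?m)^[ \\t]*(?:\\.\\.\\.|\\u2026)[ \\t]*$");

    /** Removes HTML tags but keeps the line structure a browser would display. */
    public static String toText(String html) {
        String text = BREAK_TAGS.matcher(html).replaceAll("\n");
        text = ANY_TAG.matcher(text).replaceAll("");
        // Decode a few common entities; &amp; goes last so "&amp;lt;" ends up as "&lt;", not "<".
        return text.replace("&lt;", "<").replace("&gt;", ">")
                .replace("&quot;", "\"").replace("&nbsp;", " ")
                .replace("&amp;", "&");
    }

    /** Strips leading line numbers and blanks out lines that are only an ellipsis. */
    public static String removeDistractors(String text) {
        String withoutNumbers = LINE_NUMBER.matcher(text).replaceAll("");
        return ELLIPSIS_LINE.matcher(withoutNumbers).replaceAll("");
    }

    /** Character offsets of every line start: each is where a parse attempt would begin. */
    public static List<Integer> parseStartOffsets(String text) {
        List<Integer> offsets = new ArrayList<>();
        offsets.add(0);
        for (int i = 0; i < text.length(); i++) {
            if (text.charAt(i) == '\n' && i + 1 < text.length()) {
                offsets.add(i + 1);
            }
        }
        return offsets;
    }

    public static void main(String[] args) {
        String html = "<p>A simple example:</p><p>1: import java.util.*;<br>2: class c {<br>"
                + "3: HashMap m = new HashMap();<br>4: void f() { m.clear(); }<br>5: }</p>"
                + "<a href=\"index.html\">back</a>";
        String text = removeDistractors(toText(html));
        System.out.println(text);
        System.out.println(parseStartOffsets(text));
    }
}
```

On the slide's example page, this leaves the five code lines without their "1:" to "5:" prefixes. The surrounding "A simple example:" and "back" text is still there. Separating that text from the code is left to the parser stage.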

A simple example (page source fragment, as shown on the slide):
</head> <body> A simple example: 1: import java.util.*; 2: class c { 3: HashMap m = new HashMap(); 4: void f() { m.clear(); } 5: } <a href="index.html">back</a> </body> </html>
Extracted code sample:
import java.util.*;
class c {
  HashMap m = new HashMap();
  void f() { m.clear(); }
}
</p>

</div>

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="Resolving External Code References Naïve approach of finding term matches does not work: 1" src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-19.jpg" alt="Resolving External Code References Naïve approach of finding term matches does not work: 1" />

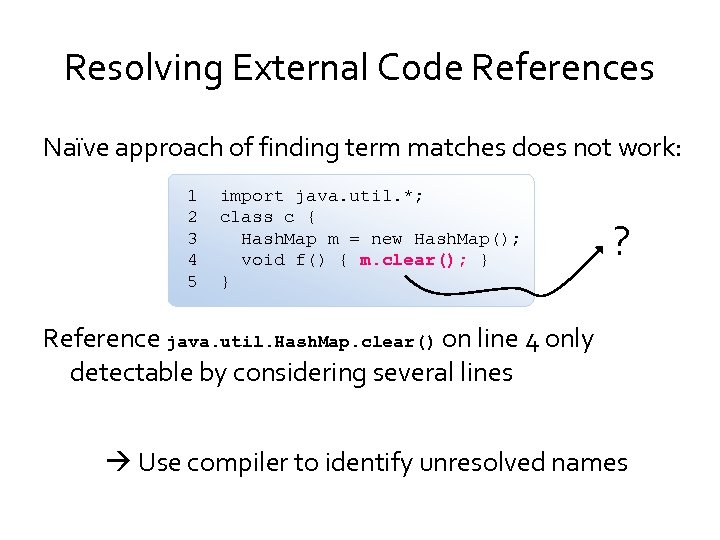

Resolving External Code References
Naïve approach of finding term matches does not work:
1 import java.util.*;
2 class c {
3   HashMap m = new HashMap();
4   void f() { m.clear(); }
5 }
The reference to java.util.HashMap.clear() on line 4 is only detectable by considering several lines.
Use the compiler to identify unresolved names.
</p>

</div>
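The "use the compiler" step can be sketched with the JDK's standard javax.tools API. The snippet is compiled with nothing extra on the classpath, and the names javac reports as unresolved are collected. This sketch makes two assumptions:
• A full JDK is available at runtime.
• javac's message keys (those starting with compiler.err.cant.resolve, plus compiler.err.doesnt.exist) identify unresolved names. These keys are javac implementation details, not part of the API.

The slide's own java.util.HashMap example would compile cleanly, because java.util ships with the JDK. The sketch therefore imports iText 2.x's com.lowagie.text package, used purely as an example of a library that is not on the classpath.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.URI;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Stream;
import javax.tools.Diagnostic;
import javax.tools.DiagnosticCollector;
import javax.tools.JavaCompiler;
import javax.tools.JavaFileObject;
import javax.tools.SimpleJavaFileObject;
import javax.tools.ToolProvider;

/** Sketch: compile an extracted snippet and collect the names javac could not resolve. */
public class UnresolvedNames {

    /** Serves a snippet from memory so it never has to be written to disk. */
    private static final class StringSource extends SimpleJavaFileObject {
        private final String code;

        StringSource(String className, String code) {
            super(URI.create("string:///" + className + Kind.SOURCE.extension), Kind.SOURCE);
            this.code = code;
        }

        @Override
        public CharSequence getCharContent(boolean ignoreEncodingErrors) {
            return code;
        }
    }

    /** Names flagged by "cannot find symbol" or "package ... does not exist" errors. */
    public static List<String> unresolved(String className, String code) {
        JavaCompiler javac = ToolProvider.getSystemJavaCompiler();
        if (javac == null) {
            throw new IllegalStateException("needs a JDK, not just a JRE");
        }
        DiagnosticCollector<JavaFileObject> diagnostics = new DiagnosticCollector<>();
        try {
            Path out = Files.createTempDirectory("snippet-classes"); // scratch dir for class files
            try {
                javac.getTask(null, null, diagnostics, List.of("-d", out.toString(), "-proc:none"),
                        null, List.of(new StringSource(className, code))).call();
            } finally {
                try (Stream<Path> paths = Files.walk(out)) {
                    paths.sorted(Comparator.reverseOrder()).forEach(p -> p.toFile().delete());
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }

        List<String> names = new ArrayList<>();
        for (Diagnostic<? extends JavaFileObject> d : diagnostics.getDiagnostics()) {
            String key = d.getCode(); // javac-specific message key, may be null
            boolean unresolvedError = key != null
                    && (key.startsWith("compiler.err.cant.resolve") || key.equals("compiler.err.doesnt.exist"));
            long start = d.getStartPosition();
            if (unresolvedError && start >= 0 && start < code.length()) {
                names.add(nameAt(code, (int) start));
            }
        }
        return names;
    }

    /** Reads the (possibly dotted) name starting at the given offset, without a trailing dot. */
    private static String nameAt(String code, int start) {
        int end = start;
        while (end < code.length()
                && (Character.isJavaIdentifierPart(code.charAt(end)) || code.charAt(end) == '.')) {
            end++;
        }
        while (end > start && code.charAt(end - 1) == '.') {
            end--;
        }
        return code.substring(start, end);
    }

    public static void main(String[] args) {
        String snippet = "import com.lowagie.text.*;\n"
                + "class c {\n"
                + "  Document d = new Document();\n"
                + "}\n";
        System.out.println(unresolved("c", snippet));
    }
}
```

The resulting names are what a lookup against an index of JAR contents would consume.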

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="Resolving External Code References • Index packages/types/members in Jar files java. util. Hash. Map." src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-20.jpg" alt="Resolving External Code References • Index packages/types/members in Jar files java. util. Hash. Map." />

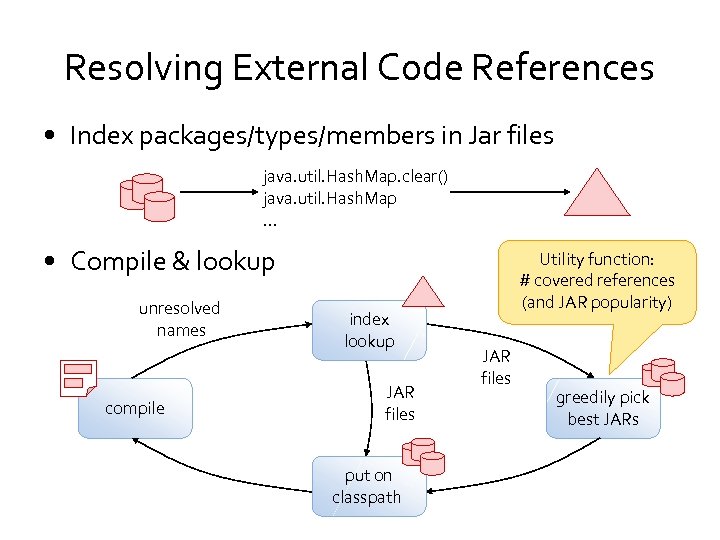

Resolving External Code References
• Index packages/types/members in JAR files (e.g. java.util.HashMap.clear(), java.util.HashMap, …)
• Compile and look up unresolved names: compile → index lookup → put JAR files on classpath
• Greedily pick the best JARs, using as utility function the # of covered references (and JAR popularity)
</p>

</div>
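The greedy JAR selection can be sketched as a set-cover style loop:
1. Pick the JAR that covers the most still-unresolved references.
2. Remove the references it covers.
3. Repeat until nothing remains or no JAR helps.

The slide does not specify how Assieme combines coverage with JAR popularity. Here popularity is assumed to act only as a tie-breaker. The JAR names and index contents are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

/** Greedy JAR selection: repeatedly take the JAR covering the most still-unresolved references. */
public class JarPicker {

    /**
     * @param unresolved fully qualified names the compiler could not resolve
     * @param jarIndex   hypothetical index from JAR file name to the API names it defines
     * @param popularity hypothetical popularity per JAR, used here only to break ties
     */
    public static List<String> pick(Set<String> unresolved, Map<String, Set<String>> jarIndex,
                                    Map<String, Integer> popularity) {
        Set<String> remaining = new HashSet<>(unresolved);
        List<String> chosen = new ArrayList<>();
        // Iterate JARs in name order so that complete ties are broken deterministically.
        Map<String, Set<String>> jars = new TreeMap<>(jarIndex);
        while (!remaining.isEmpty()) {
            String best = null;
            int bestCovered = 0;
            int bestPopularity = Integer.MIN_VALUE;
            for (Map.Entry<String, Set<String>> jar : jars.entrySet()) {
                int covered = 0;
                for (String name : jar.getValue()) {
                    if (remaining.contains(name)) covered++;
                }
                int pop = popularity.getOrDefault(jar.getKey(), 0);
                boolean better = covered > bestCovered
                        || (covered > 0 && covered == bestCovered && pop > bestPopularity);
                if (better) {
                    best = jar.getKey();
                    bestCovered = covered;
                    bestPopularity = pop;
                }
            }
            if (best == null) break; // no JAR covers any remaining reference
            chosen.add(best);
            remaining.removeAll(jars.get(best));
        }
        return chosen;
    }

    public static void main(String[] args) {
        Map<String, Set<String>> index = Map.of(
                "lib-a.jar", Set.of("org.example.pdf.Document", "org.example.pdf.PdfWriter"),
                "lib-b.jar", Set.of("org.example.pdf.Document"),
                "lib-c.jar", Set.of("org.example.io.Files"));
        Map<String, Integer> popularity = Map.of("lib-a.jar", 1, "lib-b.jar", 100, "lib-c.jar", 5);
        Set<String> unresolved = Set.of(
                "org.example.pdf.Document", "org.example.pdf.PdfWriter", "org.example.io.Files");
        System.out.println(pick(unresolved, index, popularity)); // [lib-a.jar, lib-c.jar]
    }
}
```

Here lib-a.jar wins the first round because it covers two references, even though lib-b.jar is more popular. lib-c.jar is then added for the remaining reference.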

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="Scoring • Existing techniques … – Docs modeled as weighted term frequencies – Hypertext" src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-21.jpg" alt="Scoring • Existing techniques … – Docs modeled as weighted term frequencies – Hypertext" />

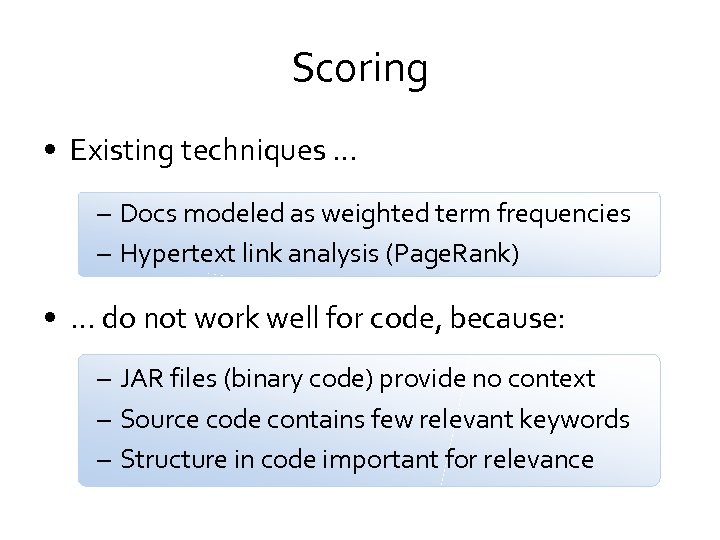

Scoring
• Existing techniques …
  – Docs modeled as weighted term frequencies
  – Hypertext link analysis (PageRank)
• … do not work well for code, because:
  – JAR files (binary code) provide no context
  – Source code contains few relevant keywords
  – Structure in code is important for relevance
</p>

</div>

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="Using Implicit References to Improve Scoring • Assieme exploits structure on Web pages and" src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-22.jpg" alt="Using Implicit References to Improve Scoring • Assieme exploits structure on Web pages and" />

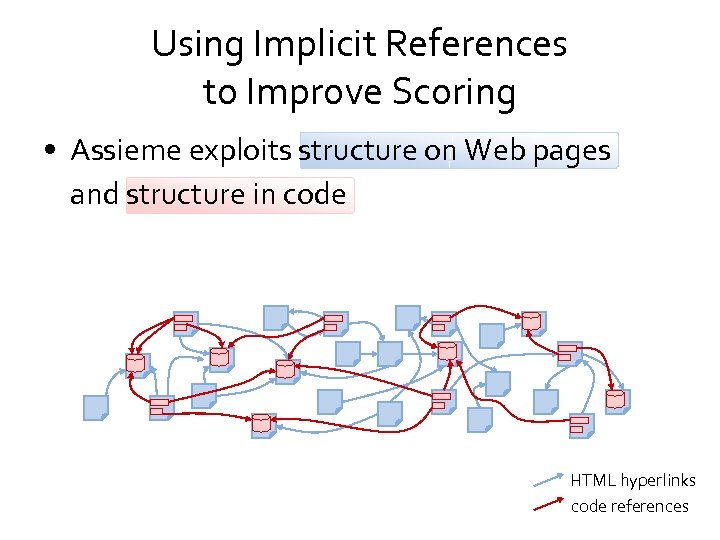

Using Implicit References to Improve Scoring
• Assieme exploits structure on Web pages (HTML hyperlinks) and structure in code (code references)
</p>

</div>

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="Scoring APIs (packages/types/members) Web pages " src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-23.jpg" alt="Scoring APIs (packages/types/members) Web pages " />

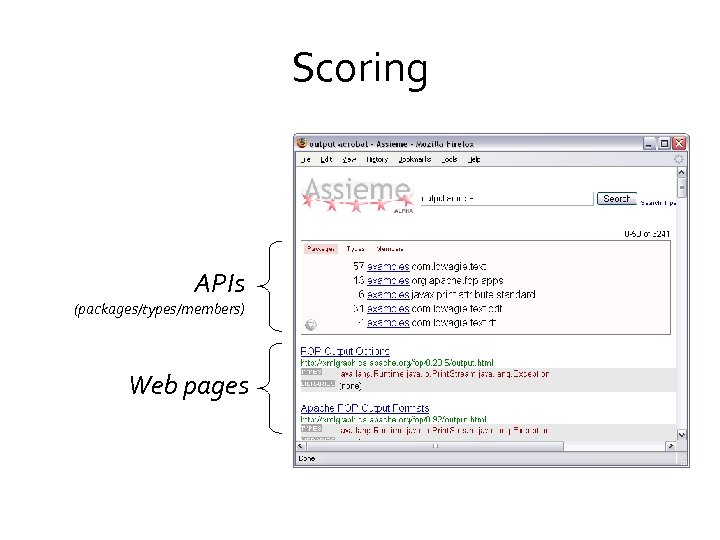

Scoring
• APIs (packages/types/members)
• Web pages
</p>

</div>

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="Scoring APIs • Use text on doc pages and on pages with code samples" src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-24.jpg" alt="Scoring APIs • Use text on doc pages and on pages with code samples" />

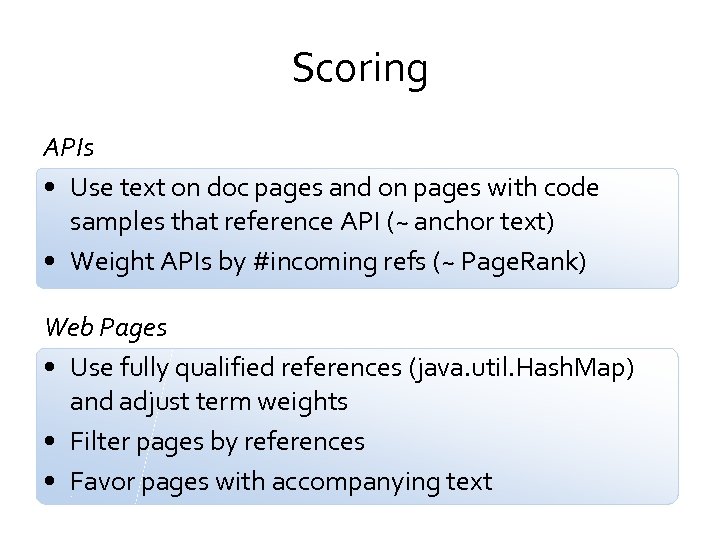

Scoring
APIs
• Use text on doc pages and on pages with code samples that reference the API (~ anchor text)
• Weight APIs by # incoming refs (~ PageRank)
Web Pages
• Use fully qualified references (java.util.HashMap) and adjust term weights
• Filter pages by references
• Favor pages with accompanying text
</p>

</div>
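The API half of these scoring ideas can be sketched as follows:
• Text from pages that reference an API plays the role of anchor text.
• The fully qualified name is split into terms.
• The number of incoming references acts as a PageRank-like prior.

The weights (a name hit counts double, a log(1 + refs) prior) are assumptions for illustration, not Assieme's published formula. The Web-page side (filtering by references, favoring pages with accompanying text) is not shown.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

/** Hypothetical API scorer: anchor-text-like context plus a reference-count prior. */
public class ApiScorer {
    private final Map<String, List<Set<String>>> contextByApi = new HashMap<>();
    private final Map<String, Integer> incomingRefs = new HashMap<>();

    /** Records that a doc page or code-sample page with this text references the API. */
    public void addReference(String api, String pageText) {
        contextByApi.computeIfAbsent(api, k -> new ArrayList<>()).add(terms(pageText, "\\W+"));
        incomingRefs.merge(api, 1, Integer::sum);
    }

    /** Lowercased, non-empty terms of a string split on the given regex. */
    static Set<String> terms(String text, String separator) {
        Set<String> out = new HashSet<>(Arrays.asList(text.toLowerCase(Locale.ROOT).split(separator)));
        out.remove("");
        return out;
    }

    public double score(String api, String query) {
        // Terms of the fully qualified name, e.g. java.util.HashMap -> {java, util, hashmap}.
        Set<String> nameTerms = terms(api, "\\.");
        int matches = 0;
        for (String term : terms(query, "\\s+")) {
            if (nameTerms.contains(term)) matches += 2; // assumed weight: a name hit counts double
            for (Set<String> page : contextByApi.getOrDefault(api, List.of())) {
                if (page.contains(term)) matches++;
            }
        }
        // log(1 + refs) damps the prior so heavily used APIs do not swamp text relevance.
        return matches * Math.log(1 + incomingRefs.getOrDefault(api, 0));
    }

    public static void main(String[] args) {
        ApiScorer scorer = new ApiScorer();
        scorer.addReference("java.util.HashMap", "Example: storing key value pairs in a map");
        scorer.addReference("java.util.HashMap", "HashMap tutorial: put and get values");
        scorer.addReference("java.util.Hashtable", "Legacy synchronized map");
        System.out.println(scorer.score("java.util.HashMap", "map example"));   // 2 * ln 3
        System.out.println(scorer.score("java.util.Hashtable", "map example")); // 1 * ln 2
    }
}
```

An API that no page references scores 0, whatever its name. This mirrors the slide's point that, without context from referencing pages, binary JAR contents alone say little about relevance.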

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="Outline • Motivation • What Programmers Search For • The Assieme Search Engine –" src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-25.jpg" alt="Outline • Motivation • What Programmers Search For • The Assieme Search Engine –" />

Outline
• Motivation
• What Programmers Search For
• The Assieme Search Engine
  – Inferring Implicit References
  – Using Implicit References for Scoring
• Evaluation of Inference & User Study
• Discussion & Conclusion
</p>

</div>

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="Evaluating Code Extraction and Reference Resolution … on 350 hand-labeled pages from Assieme’s data" src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-26.jpg" alt="Evaluating Code Extraction and Reference Resolution … on 350 hand-labeled pages from Assieme’s data" />

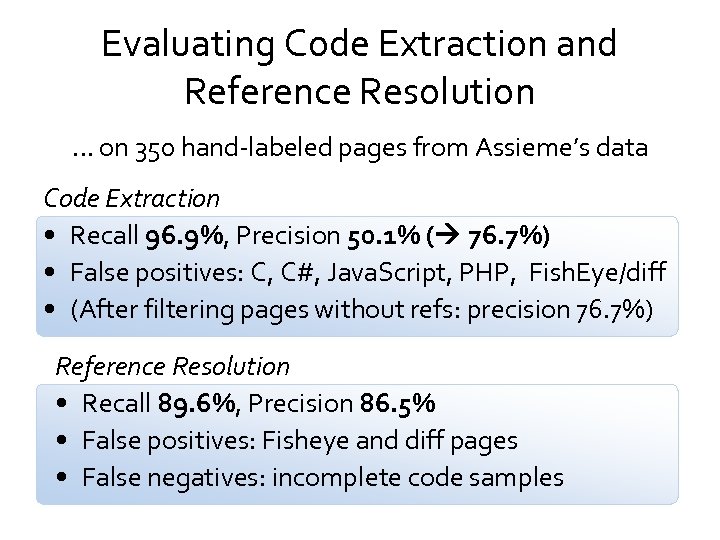

Evaluating Code Extraction and Reference Resolution … on 350 hand-labeled pages from Assieme’s data
Code Extraction
• Recall 96.9%, precision 50.1%
• False positives: C, C#, JavaScript, PHP, FishEye/diff
• After filtering out pages without references: precision 76.7%
Reference Resolution
• Recall 89.6%, precision 86.5%
• False positives: FishEye and diff pages
• False negatives: incomplete code samples
</p>

</div>
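For reading these figures, here are the standard definitions of precision and recall. TP, FP and FN denote true positives, false positives and false negatives. The slide does not state whether the counted units are code lines, code samples, or pages.

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}
$$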

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="User Study Assieme vs. Google Code Search Design • 40 search tasks based on" src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-27.jpg" alt="User Study Assieme vs. Google Code Search Design • 40 search tasks based on" />

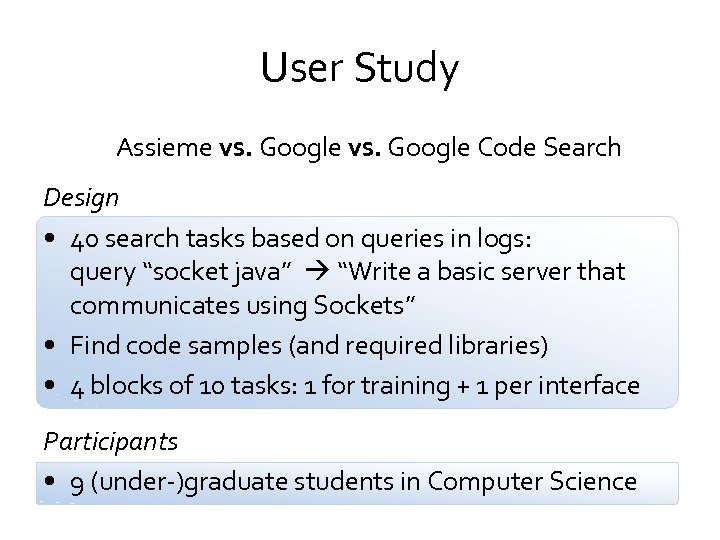

User Study: Assieme vs. Google Code Search
Design
• 40 search tasks based on queries in logs, e.g. query “socket java” → task “Write a basic server that communicates using Sockets”
• Find code samples (and required libraries)
• 4 blocks of 10 tasks: 1 for training + 1 per interface
Participants
• 9 (under-)graduate students in Computer Science
</p>

</div>

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="User Study – Task Time F(1, 258)=5. 74 p ≈. 017 significant * F(1," src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-28.jpg" alt="User Study – Task Time F(1, 258)=5. 74 p ≈. 017 significant * F(1," />

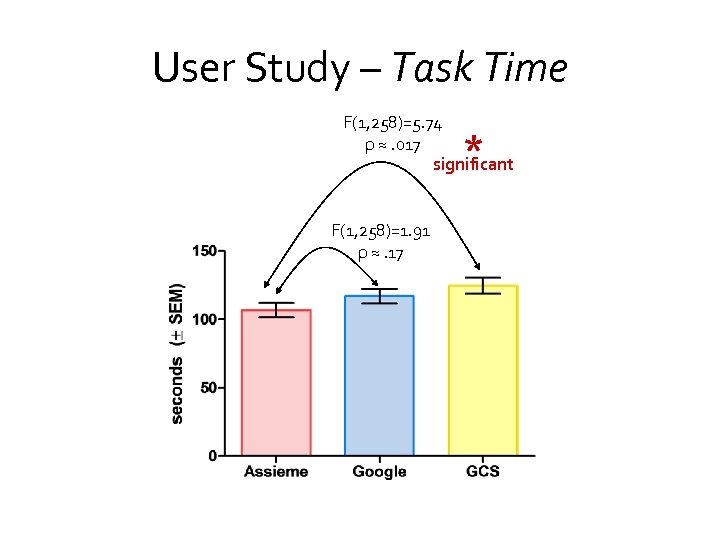

User Study – Task Time
• F(1, 258) = 5.74, p ≈ .017 (significant *)
• F(1, 258) = 1.91, p ≈ .17
</p>

</div>

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="User Study – Solution Quality 0 seriously flawed. 5 generally good but fell short" src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-29.jpg" alt="User Study – Solution Quality 0 seriously flawed. 5 generally good but fell short" />

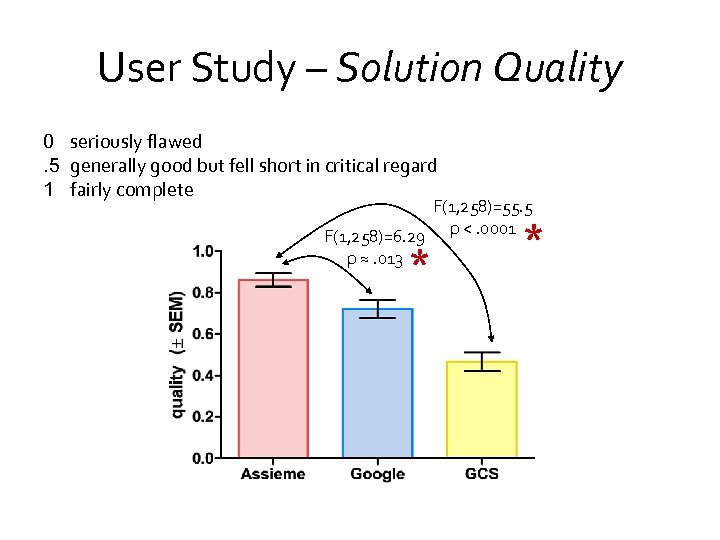

User Study – Solution Quality
Scale: 0 = seriously flawed, .5 = generally good but fell short in a critical regard, 1 = fairly complete
• F(1, 258) = 55.5, p < .0001 *
• F(1, 258) = 6.29, p ≈ .013 *
</p>

</div>

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="User Study – # Queries Issued F(1, 259)=6. 85 p ≈. 001 F(1, 259)=9." src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-30.jpg" alt="User Study – # Queries Issued F(1, 259)=6. 85 p ≈. 001 F(1, 259)=9." />

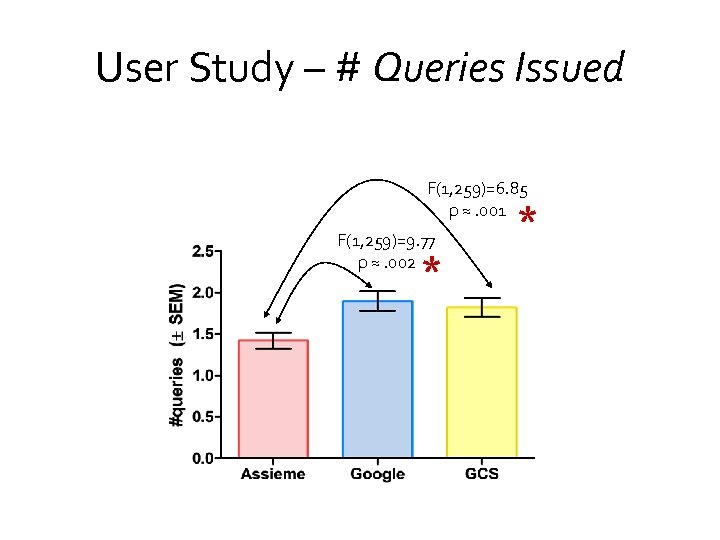

User Study – # Queries Issued
• F(1, 259) = 6.85, p ≈ .001 *
• F(1, 259) = 9.77, p ≈ .002 *
</p>

</div>

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="Outline • Motivation • What Programmers Search For • The Assieme Search Engine –" src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-31.jpg" alt="Outline • Motivation • What Programmers Search For • The Assieme Search Engine –" />

Outline
• Motivation
• What Programmers Search For
• The Assieme Search Engine
  – Inferring Implicit References
  – Using Implicit References for Scoring
• Evaluation of Inference & User Study
• Discussion & Conclusion
</p>

</div>

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="Discussion & Conclusion • Assieme – a novel web search interface • Programmers obtain" src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-32.jpg" alt="Discussion & Conclusion • Assieme – a novel web search interface • Programmers obtain" />

Discussion & Conclusion
• Assieme – a novel Web search interface
• Programmers obtain better solutions, using fewer queries, in the same amount of time
• Using Google, subjects visited 3.3 pages/task; using Assieme, only 0.27 pages, but 4.3 previews
• The ability to quickly view code samples changed participants’ strategies
</p>

</div>

<div style="width: auto;" class="description columns twelve"><p><img class="imgdescription" title="Thank You Raphael Hoffmann Computer Science & Engineering University of Washington raphaelh@cs. washington. edu" src="https://present5.com/presentation/198b2b584817116e954e3a9312dcf7a3/image-33.jpg" alt="Thank You Raphael Hoffmann Computer Science & Engineering University of Washington raphaelh@cs. washington. edu" />

Thank You
Raphael Hoffmann, Computer Science & Engineering, University of Washington, raphaelh@cs.washington.edu
James Fogarty, Computer Science & Engineering, University of Washington, jfogarty@cs.washington.edu
Daniel S. Weld, Computer Science & Engineering, University of Washington, weld@cs.washington.edu
This material is based upon work supported by the National Science Foundation under grant IIS-0307906, by the Office of Naval Research under grant N00014-06-10147, by SRI International under CALO grant 03-000225, and by the Washington Research Foundation / TJ Cable Professorship.
</p>

</div>

</div>

</p>

</div>

</article><!-- .post -->

</section><!-- #content -->

</div>

</div>

</body>

</html>