- Number of slides: 38

Artificial Intelligence Techniques for Misuse and Anomaly Detection

Computer Science Department, University of Wyoming, Laramie, WY 82071

Project 1: Misuse Detection With Semantic Analogy

- Faculty: Diana Spears, William Spears, John Hitchcock (UW, consultant)
- Ph.D. student: Adriana Alina Bilt
- Relevant disciplines: AI planning, AI machine learning, case-based reasoning, complexity theory

Misuse Detection

- Misuse: unauthorized behavior specified by usage patterns called signatures.
- In this project, we are currently working with signatures that are sequences of commands.
- The most significant open problem in misuse detection: false negatives, i.e., errors of omission.

Project Objectives

1. Develop a misuse detection algorithm that dramatically reduces the number of false negatives.
2. (will be specified later)

Our Approach

- Analogy, also called Case-Based Reasoning (CBR), will be used to match a current ongoing intrusion against attack signatures previously stored in a database.
- Uses a flexible match between a new intrusion sequence and a previously stored signature.
- We use semantic, rather than syntactic, analogy.

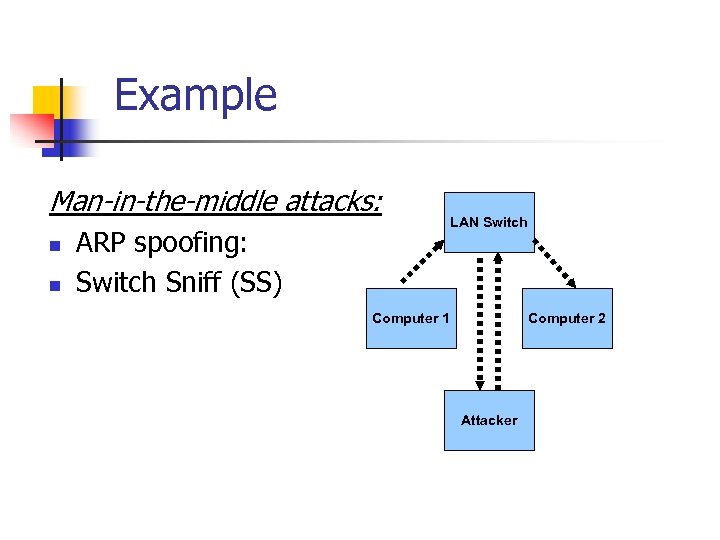

Example

Man-in-the-middle attacks:

- ARP spoofing: Switch Sniff (SS)

Diagram labels: LAN Switch, Computer 1, Computer 2, Attacker

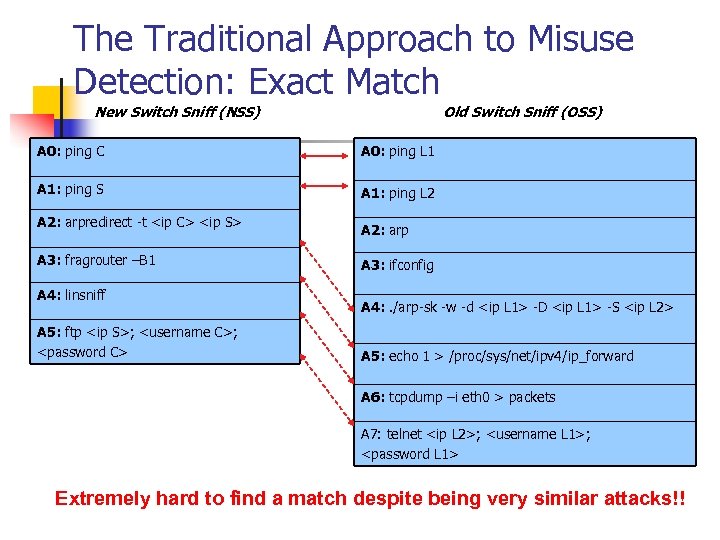

The Traditional Approach to Misuse Detection: Exact Match

Steps shown for New Switch Sniff (NSS) and Old Switch Sniff (OSS):

- A0: ping C
- A0: ping L1
- A1: ping S
- A1: ping L2
- A2: arpredirect -t

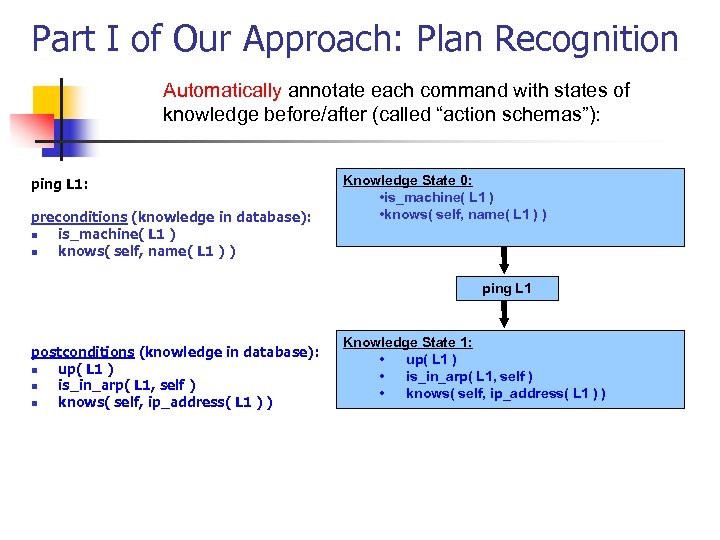

Part I of Our Approach: Plan Recognition

Automatically annotate each command with states of knowledge before/after (called “action schemas”):

ping L1, preconditions (knowledge in database):

- is_machine( L1 )
- knows( self, name( L1 ) )

Knowledge State 0:

- is_machine( L1 )
- knows( self, name( L1 ) )

ping L1, postconditions (knowledge in database):

- up( L1 )
- is_in_arp( L1, self )
- knows( self, ip_address( L1 ) )

Knowledge State 1:

- up( L1 )
- is_in_arp( L1, self )
- knows( self, ip_address( L1 ) )
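
The annotation step on this slide can be sketched in Python. This is a minimal illustration under stated assumptions, not the project's implementation: `ActionSchema` and `apply_schema` are hypothetical names, facts are plain strings copied from the slide, and accumulating postconditions into the existing state (rather than replacing it) is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionSchema:
    """A command annotated with knowledge required before and gained after it."""
    command: str
    preconditions: frozenset
    postconditions: frozenset


def apply_schema(schema, state):
    """Return the next knowledge state, or None if a precondition is missing."""
    if not schema.preconditions <= state:
        return None
    # Assumption: new knowledge is added to what was already known.
    return frozenset(state | schema.postconditions)


# Predicates are the ones listed on the slide, stored as plain strings.
PING_L1 = ActionSchema(
    command="ping L1",
    preconditions=frozenset({"is_machine(L1)", "knows(self, name(L1))"}),
    postconditions=frozenset({
        "up(L1)",
        "is_in_arp(L1, self)",
        "knows(self, ip_address(L1))",
    }),
)

state0 = frozenset({"is_machine(L1)", "knows(self, name(L1))"})
state1 = apply_schema(PING_L1, state0)
```

Chaining `apply_schema` over a command sequence yields the sequence of knowledge states that a later matching step could compare.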

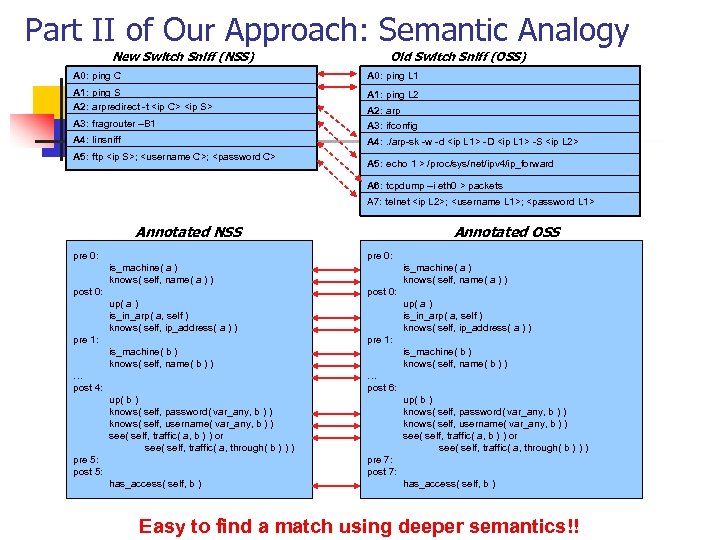

Part II of Our Approach: Semantic Analogy

Steps shown for New Switch Sniff (NSS) and Old Switch Sniff (OSS):

- A0: ping C
- A0: ping L1
- A1: ping S
- A2: arpredirect -t
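
To illustrate the difference between syntactic and semantic matching, here is a hedged Python sketch. It assumes each command is replaced by the effects it has on the attacker's knowledge, with the concrete host name abstracted to a placeholder; the `EFFECTS` table, the function names, and the overlap score are all hypothetical and are not taken from the slides.

```python
# Hypothetical effect templates per command verb; "?host" stands for the argument.
EFFECTS = {
    "ping": {
        ("up", "?host"),
        ("is_in_arp", "?host", "self"),
        ("knows", "self", "ip_address", "?host"),
    },
}


def abstract_effects(command):
    """Map a command to its host-independent effects (empty set if unmodeled)."""
    verb = command.split()[0]
    return EFFECTS.get(verb, set())


def syntactic_match(a, b):
    """Exact match on the command text, as in traditional misuse detection."""
    return a == b


def semantic_similarity(a, b):
    """Fraction of abstract effects shared by the two commands (0.0 to 1.0)."""
    effects_a, effects_b = abstract_effects(a), abstract_effects(b)
    if not effects_a and not effects_b:
        return 0.0
    return len(effects_a & effects_b) / len(effects_a | effects_b)
```

Under these assumptions `ping C` and `ping L1` differ as text but have identical abstract effects, which is the kind of flexible match the slides describe.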

Our Important Contribution to Misuse Detection So Far

- We have already substantially reduced the likelihood of making errors of omission, relative to prior approaches, by:
  - Adding PLAN RECOGNITION to fill in the deeper semantic knowledge.
  - Doing SEMANTIC ANALOGY.

Project Objectives

1. Develop a misuse detection algorithm that dramatically reduces the number of false negatives.
2. Develop a similarity metric (for analogy) that performs well experimentally on intrusions, but is also universal (not specific to one type of intrusion or one computer language).

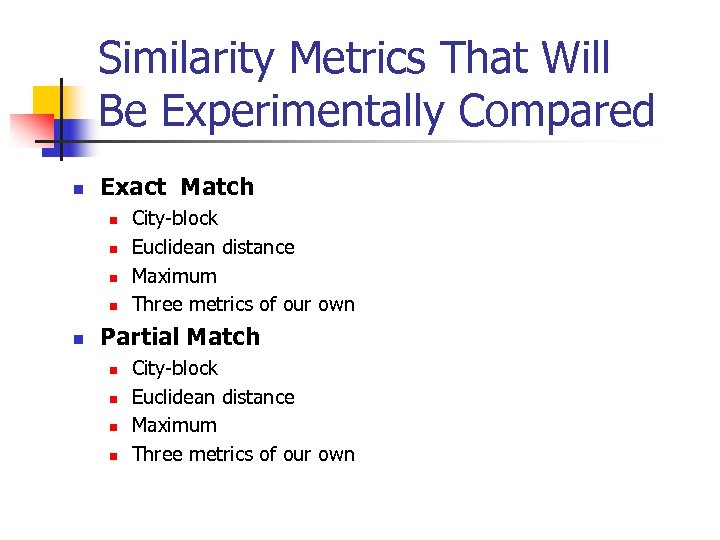

Similarity Metrics That Will Be Experimentally Compared

- Exact match
  - City-block
  - Euclidean distance
  - Maximum
  - Three metrics of our own
- Partial match
  - City-block
  - Euclidean distance
  - Maximum
  - Three metrics of our own
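
The three standard distances named on this slide have textbook definitions, sketched below in Python. How an intrusion sequence is encoded as a numeric vector is not stated in the slides, so the vectors here are an assumption for illustration only.

```python
import math


def city_block(x, y):
    """City-block (L1) distance: sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(x, y))


def euclidean(x, y):
    """Euclidean (L2) distance: square root of the summed squared differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def maximum(x, y):
    """Maximum (L-infinity) distance: largest absolute coordinate difference."""
    return max(abs(a - b) for a, b in zip(x, y))
```

All three assume equal-length vectors; `zip` would silently truncate the longer one, so a real comparison should check lengths first.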

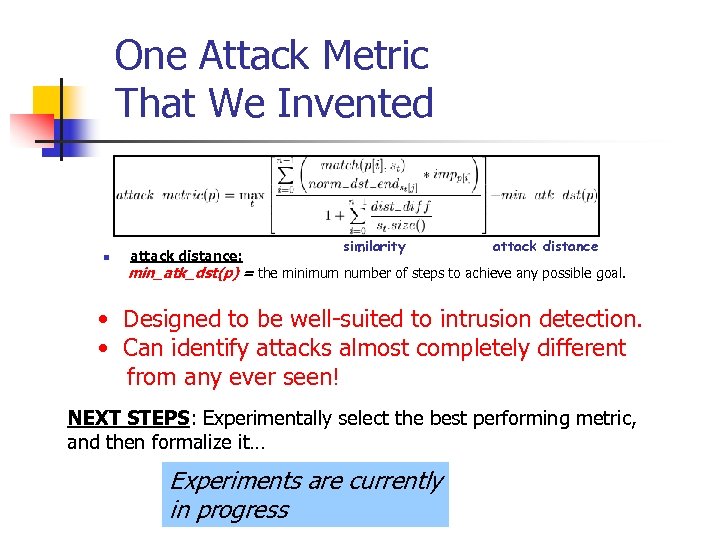

One Attack Metric That We Invented

- Attack distance: min_atk_dst(p) = the minimum number of steps to achieve any possible goal.
- Designed to be well-suited to intrusion detection.
- Can identify attacks almost completely different from any ever seen!

(Diagram labels: similarity, attack distance)

NEXT STEPS: Experimentally select the best-performing metric, and then formalize it. Experiments are currently in progress.
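
One way to read the definition of min_atk_dst(p) is as a shortest-path search from the current knowledge state to any goal state. The sketch below uses breadth-first search; the action list, fact names, and goal sets are hypothetical, and the slides do not say how the project actually computes the metric.

```python
from collections import deque


def min_atk_dst(state, actions, goals):
    """Fewest actions needed to reach any goal from `state`, or None if unreachable.

    actions: list of (preconditions, postconditions) pairs of frozensets.
    goals:   list of frozensets; a goal holds when all its facts are known.
    """
    start = frozenset(state)
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        current, steps = queue.popleft()
        if any(goal <= current for goal in goals):
            return steps
        for preconditions, postconditions in actions:
            if preconditions <= current:
                following = frozenset(current | postconditions)
                if following not in seen:
                    seen.add(following)
                    queue.append((following, steps + 1))
    return None


# Hypothetical three-step attack: learn an address, redirect traffic, capture it.
ACTIONS = [
    (frozenset(), frozenset({"knows_ip"})),
    (frozenset({"knows_ip"}), frozenset({"traffic_redirected"})),
    (frozenset({"traffic_redirected"}), frozenset({"captured_traffic"})),
]
GOALS = [frozenset({"captured_traffic"})]
```

A smaller value means the observed sequence has brought the user closer to completing some attack, even if the exact commands were never seen before.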

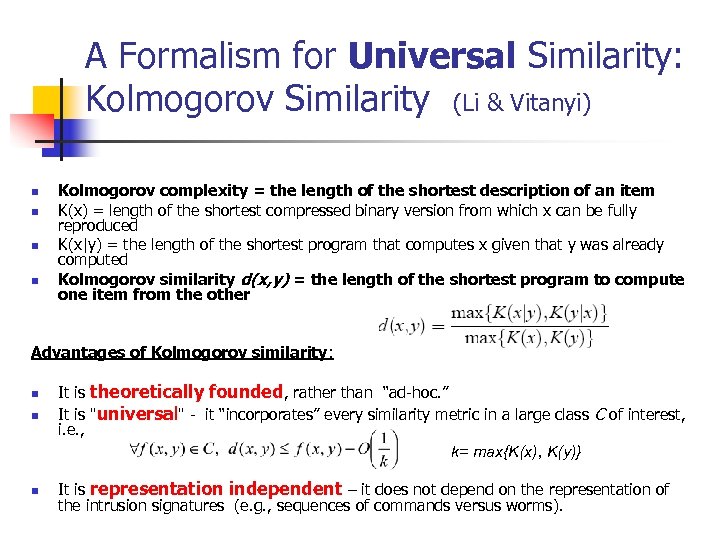

A Formalism for Universal Similarity: Kolmogorov Similarity (Li & Vitanyi)

- Kolmogorov complexity = the length of the shortest description of an item
- K(x) = length of the shortest compressed binary version from which x can be fully reproduced
- K(x|y) = the length of the shortest program that computes x given that y was already computed
- Kolmogorov similarity d(x, y) = the length of the shortest program to compute one item from the other

Advantages of Kolmogorov similarity:

- It is theoretically founded, rather than “ad hoc.”
- It is "universal": it “incorporates” every similarity metric in a large class C of interest, i.e., k = max{K(x), K(y)}
- It is representation independent: it does not depend on the representation of the intrusion signatures (e.g., sequences of commands versus worms).
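
Kolmogorov complexity is not computable, so in practice K is replaced by the output length of a real compressor. The sketch below uses the normalized compression distance, NCD(x, y) = (C(xy) - min{C(x), C(y)}) / max{C(x), C(y)}, with zlib standing in for C. Choosing zlib and NCD is an assumption made for illustration; the slides do not say which approximation, if any, the project uses.

```python
import zlib


def compressed_length(data: bytes) -> int:
    """Approximate K(data) by the length of its zlib-compressed form."""
    return len(zlib.compress(data, 9))


def ncd(x: bytes, y: bytes) -> float:
    """Normalized compression distance: near 0 for similar inputs, near 1 for unrelated ones."""
    cx, cy = compressed_length(x), compressed_length(y)
    cxy = compressed_length(x + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


# Command sequences encoded as bytes (an assumed encoding).
old_signature = b"ping L1\nping L2\narpredirect -t\n" * 20
new_sequence = b"ping C\nping S\narpredirect -t\n" * 20
distance = ncd(old_signature, new_sequence)
```

Because compressor overhead dominates on very short inputs, the approximation is only meaningful for reasonably long sequences.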

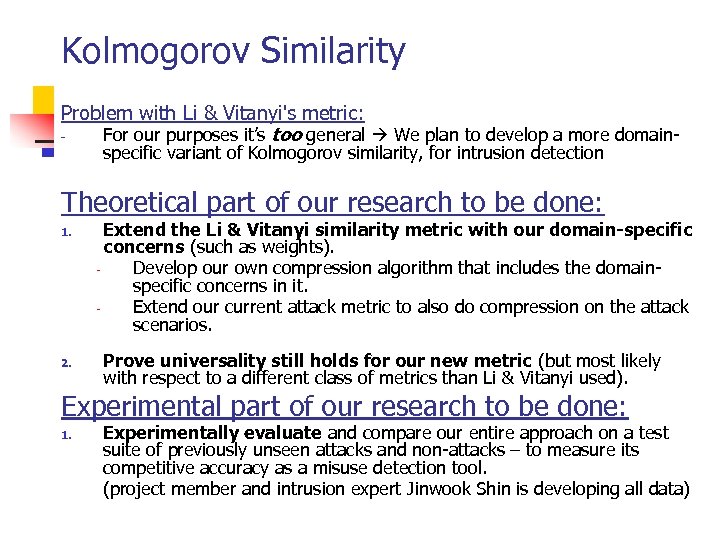

Kolmogorov Similarity

Problem with Li & Vitanyi's metric: for our purposes it’s too general. We plan to develop a more domain-specific variant of Kolmogorov similarity for intrusion detection.

Theoretical part of our research to be done:

- Extend the Li & Vitanyi similarity metric with our domain-specific concerns (such as weights).
- Develop our own compression algorithm that includes the domain-specific concerns in it.
- Extend our current attack metric to also do compression on the attack scenarios.
- Prove universality still holds for our new metric (but most likely with respect to a different class of metrics than Li & Vitanyi used).

Experimental part of our research to be done:

1. Experimentally evaluate and compare our entire approach on a test suite of previously unseen attacks and non-attacks, to measure its competitive accuracy as a misuse detection tool. (Project member and intrusion expert Jinwook Shin is developing all data.)

Project 2: Ensembles of Anomaly NIDS for Classification

- Faculty: Diana Spears, Peter Polyakov (Math Dept, Kelly’s advisor, no cost to ONR)
- M.S. students: Carlos Kelly, Christer Karlson (EPSCoR grant, no cost to ONR)
- Relevant disciplines: mathematical formalization, AI machine learning

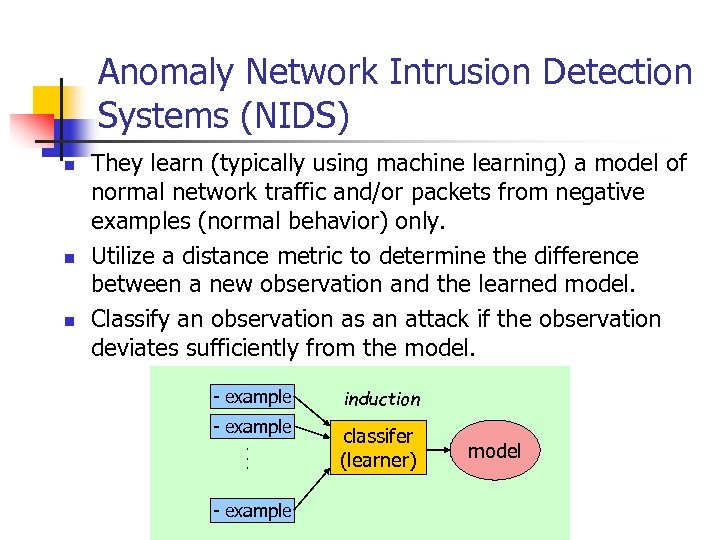

Anomaly Network Intrusion Detection Systems (NIDS)

- They learn (typically using machine learning) a model of normal network traffic and/or packets from negative examples (normal behavior) only.
- They use a distance metric to determine the difference between a new observation and the learned model.
- They classify an observation as an attack if the observation deviates sufficiently from the model.

Diagram labels: negative ("-") examples, induction, classifier (learner), model
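
The three steps on this slide (learn from normal data only, measure distance, threshold) can be sketched with a deliberately simple one-feature model. This is not LERAD or PAYL; the `AnomalyDetector` class, the feature (for example, a packet size), and the threshold of three standard deviations are all assumptions for illustration.

```python
import statistics


class AnomalyDetector:
    """Learns a one-feature model of normal traffic and flags large deviations."""

    def __init__(self, threshold=3.0):
        self.threshold = threshold  # allowed deviation, in standard deviations
        self.mean = 0.0
        self.stdev = 0.0

    def fit(self, normal_values):
        """Learn the model from negative (normal) examples only."""
        self.mean = statistics.mean(normal_values)
        self.stdev = statistics.pstdev(normal_values)

    def distance(self, value):
        """Distance between an observation and the model, in standard deviations."""
        deviation = abs(value - self.mean)
        if self.stdev == 0:
            return 0.0 if deviation == 0 else float("inf")
        return deviation / self.stdev

    def is_attack(self, value):
        """Classify as an attack when the observation deviates sufficiently."""
        return self.distance(value) > self.threshold


detector = AnomalyDetector()
detector.fit([100, 102, 98, 101, 99])
```

The output is a bare attack / not-attack decision, with no indication of what kind of attack was seen.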

A Major Drawback of Current Anomaly NIDS

- Classification as “attack” or “not attack” can be inaccurate and not informative enough, e.g., what kind of attack?
- We would like a front end to a misuse detection algorithm that helps narrow down the class of the attack.

Our Approach

- Combine existing fast and efficient NIDS (which are really machine learning “classifiers”) into an ensemble to increase the information.
- Will employ a combined theoretical and experimental approach.
- Currently working with the classifiers LERAD (Mahoney & Chan) and PAYL (Wang & Stolfo).
- Using data from the DARPA Lincoln Labs Intrusion Database.

Ensembles of Classifiers

- A very popular recent trend in machine learning.
- Main idea: create an ensemble of existing classifiers to increase the accuracy, e.g., by doing a majority vote.
- Our novel twist: create an ensemble of classifiers to increase the information, i.e., don’t just classify as “positive” or “negative”; give a more specific class of attack if “positive.”

How? First we need to look at the “biases” of the classifiers…
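
For reference, the conventional majority-vote ensemble mentioned under "Main idea" can be sketched in a few lines of Python. The function name and label strings are hypothetical; ties are resolved in favor of the label that appears first, which is simply how `Counter.most_common` orders equal counts.

```python
from collections import Counter


def majority_vote(labels):
    """Return the label predicted by the most classifiers."""
    return Counter(labels).most_common(1)[0][0]


verdict = majority_vote(["attack", "normal", "attack"])
```

A vote like this can only raise accuracy on the same two labels; it cannot say which class of attack occurred, which is the gap the "novel twist" targets.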

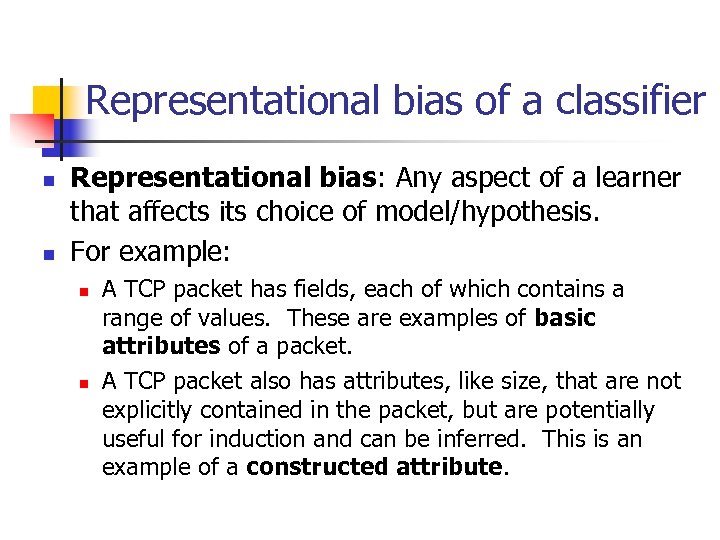

Representational bias of a classifier

- Representational bias: any aspect of a learner that affects its choice of model/hypothesis.
- For example:
  - A TCP packet has fields, each of which contains a range of values. These are examples of basic attributes of a packet.
  - A TCP packet also has attributes, like size, that are not explicitly contained in the packet, but are potentially useful for induction and can be inferred. This is an example of a constructed attribute.

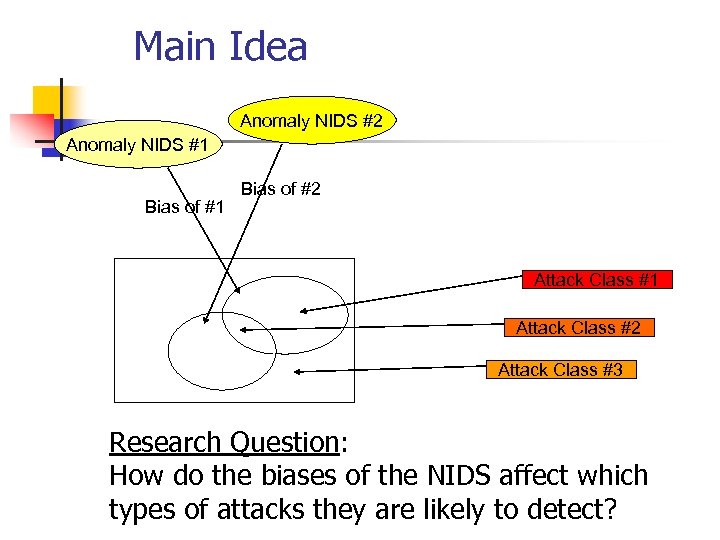

Main Idea

Diagram labels: Anomaly NIDS #1, Anomaly NIDS #2, Bias of #2, Attack Class #1, Attack Class #2, Attack Class #3

Research question: How do the biases of the NIDS affect which types of attacks they are likely to detect?

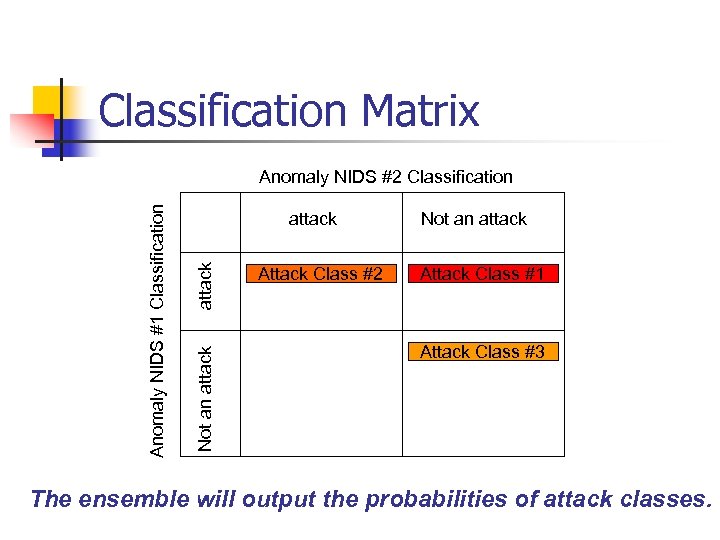

Classification Matrix

Matrix labels: the classification (attack / not an attack) of Anomaly NIDS #1 and of Anomaly NIDS #2 index cells labeled Attack Class #1, Attack Class #2, Attack Class #3, and Not an attack.

The ensemble will output the probabilities of attack classes.
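
A hedged sketch of how such a matrix could be filled in and queried: each labeled experiment records whether each NIDS raised an alarm and the true class, and the ensemble then reports the empirical class distribution for a given pair of alarms. The function names, the tuple layout, and the use of raw frequency counts are assumptions, not the project's design.

```python
from collections import Counter, defaultdict


def build_matrix(observations):
    """Count true classes per (NIDS #1 alarm, NIDS #2 alarm) cell.

    observations: iterable of (alarm1, alarm2, true_class) tuples.
    """
    matrix = defaultdict(Counter)
    for alarm1, alarm2, true_class in observations:
        matrix[(alarm1, alarm2)][true_class] += 1
    return matrix


def class_probabilities(matrix, alarm1, alarm2):
    """Empirical probability of each class given the two detectors' outputs."""
    counts = matrix.get((alarm1, alarm2))
    if not counts:
        return {}  # this combination of alarms was never observed
    total = sum(counts.values())
    return {cls: n / total for cls, n in counts.items()}


# Hypothetical experiment log: (NIDS #1 alarm, NIDS #2 alarm, true class).
LOG = [
    (True, False, "Attack Class #1"),
    (True, False, "Attack Class #1"),
    (True, False, "Attack Class #1"),
    (True, False, "Attack Class #2"),
    (False, False, "Not an attack"),
]
matrix = build_matrix(LOG)
```

With this log, an alarm from NIDS #1 alone would be reported as a distribution over attack classes rather than a single yes/no answer.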

Contributions Completed

- Mathematical formalizations of LERAD and PAYL.
- Explicitly formalized the biases of the two programs.
- Created a testbed for attacks with LERAD and PAYL.

Research To Be Done:

- Run many experiments with LERAD and PAYL in order to fill in the classification matrix. (Experiments are currently in progress.)
- Update and refine the mathematical formalizations of system biases as needed for the classification matrix.
- Revise the standard attack taxonomy to maximize information gain in the classification matrix.
- Program the ensemble to output a probability distribution over classes of attacks associated with each alarm.
- Test the accuracy of our ensemble on a test suite of previously unseen attack and non-attack examples, and compare its accuracy alone against that of LERAD and PAYL.
- Our approach is potentially scalable to many classifiers, but scaling it will not be done within the scope of the current MURI.

Project 3: The Basic Building Blocks of Attacks

- Faculty: Diana Spears (UW), William Spears (UW), Sampath Kannan (UPenn), Insup Lee (UPenn), Oleg Sokolsky (UPenn)
- M.S. student: Jinwook Shin (UW)
- Relevant disciplines: graphical models, compiler theory, AI machine learning

Research Question

- What is an attack? In particular, “What are the basic building blocks of attacks?”
  - Question posed by Insup Lee.
  - To the best of our knowledge, this specific research question has not been previously addressed.
- Motivation:
  - Attack building blocks can be used in misuse detection systems to look for key signatures of an attack.

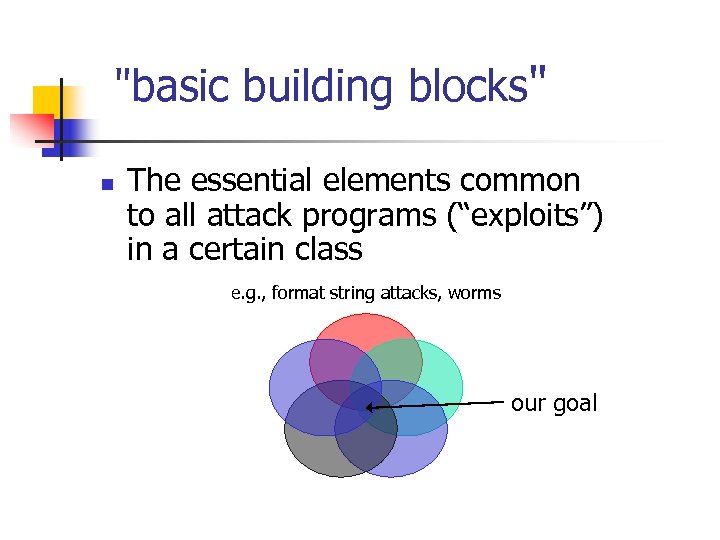

"basic building blocks"

- The essential elements common to all attack programs (“exploits”) in a certain class, e.g., format string attacks, worms.

(Diagram label: our goal)

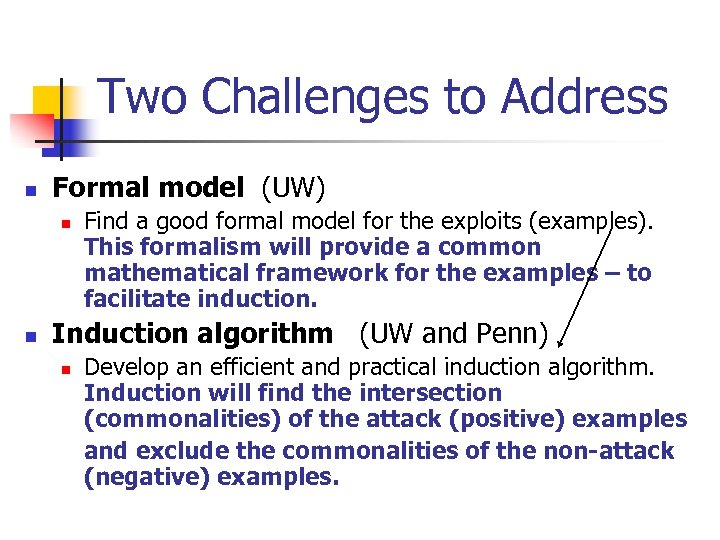

Two Challenges to Address

- Formal model (UW)
  - Find a good formal model for the exploits (examples).
  - This formalism will provide a common mathematical framework for the examples, to facilitate induction.
- Induction algorithm (UW and Penn)
  - Develop an efficient and practical induction algorithm.
  - Induction will find the intersection (commonalities) of the attack (positive) examples and exclude the commonalities of the non-attack (negative) examples.
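
The induction step described above can be illustrated with plain set operations, assuming each example has already been reduced to a set of comparable features (here, hypothetical dependency edges). This is a simplified sketch: real induction over graphs also needs the abstraction and alignment steps, and the edge names are invented for the example.

```python
def induce_building_blocks(positives, negatives):
    """Features shared by every attack example but not by every non-attack example."""
    if not positives:
        return set()
    common_to_attacks = set.intersection(*map(set, positives))
    common_to_benign = set.intersection(*map(set, negatives)) if negatives else set()
    return common_to_attacks - common_to_benign


# Hypothetical examples: each is a set of (source, destination) dependency edges.
ATTACKS = [
    {("read_input", "fmt"), ("fmt", "printf"), ("a", "b")},
    {("read_input", "fmt"), ("fmt", "printf"), ("c", "d")},
]
NON_ATTACKS = [
    {("read_input", "fmt"), ("x", "y")},
    {("read_input", "fmt"), ("z", "w")},
]
blocks = induce_building_blocks(ATTACKS, NON_ATTACKS)
```

Here the edge shared by all examples, attack or not, is excluded, leaving only what distinguishes the attack class.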

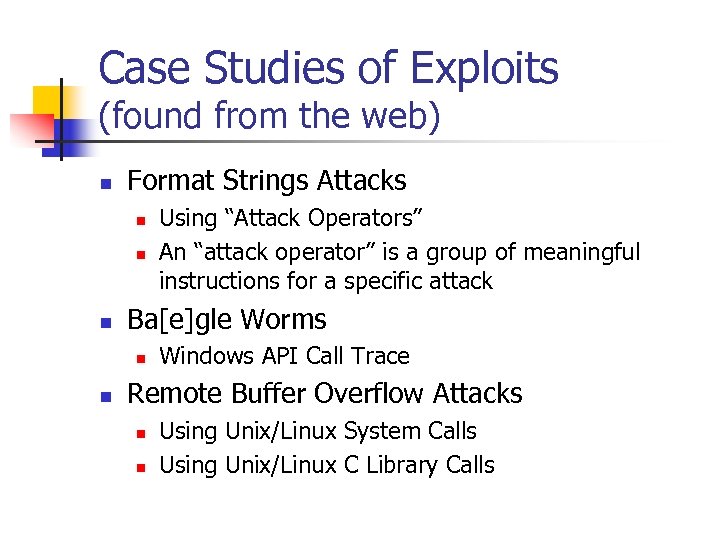

Case Studies of Exploits (found from the web)

- Format String Attacks
- Ba[e]gle Worms
  - Using “Attack Operators”
  - An “attack operator” is a group of meaningful instructions for a specific attack
  - Windows API Call Trace
- Remote Buffer Overflow Attacks
  - Using Unix/Linux System Calls
  - Using Unix/Linux C Library Calls

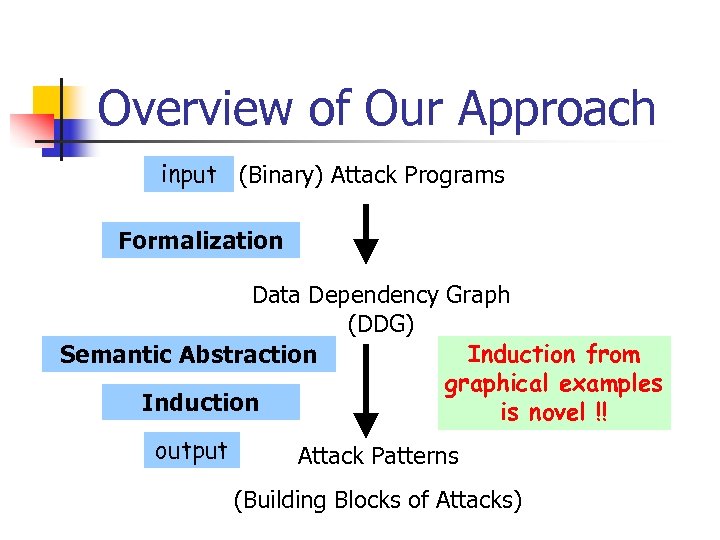

Overview of Our Approach

- Input: (binary) attack programs
- Formalization: Data Dependency Graph (DDG)
- Semantic abstraction
- Induction from graphical examples (induction is novel!)
- Output: attack patterns (building blocks of attacks)

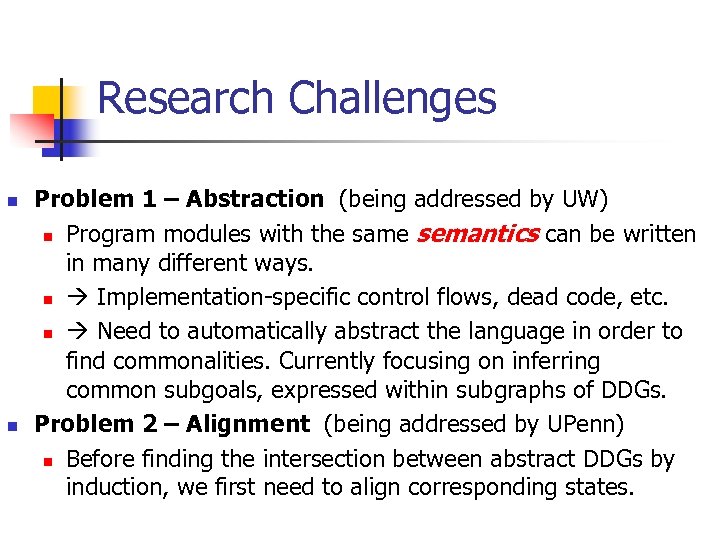

Research Challenges

- Problem 1: Abstraction (being addressed by UW)
  - Program modules with the same semantics can be written in many different ways.
  - Implementation-specific control flows, dead code, etc.
  - Need to automatically abstract the language in order to find commonalities. Currently focusing on inferring common subgoals, expressed within subgraphs of DDGs.
- Problem 2: Alignment (being addressed by UPenn)
  - Before finding the intersection between abstract DDGs by induction, we first need to align corresponding states.

Attack Programs’ Goals

- There can be as many types of attacks as there are program bugs, hence as many ways to write attack programs, but… the goal of most security attacks is to gain unauthorized access to a computer system by taking control of a vulnerable privileged program.
- There can be different attack steps according to particular attack classes. But one final step of an attack is common: the transfer of control to malevolent code…

Control Transfer

Diagram labels: Attack program, malicious inputs, Target program

- How does an attack program change a program’s control flow?
- Initially, an attacker has no control over the target program. But the attacker can control input(s) to the target program.
- A vulnerability in the program allows the malicious inputs to cause unexpected changes in memory locations that are not supposed to be affected by the inputs. Once the inputs are injected, the unexpected values can propagate into other locations, generating more unexpected values.

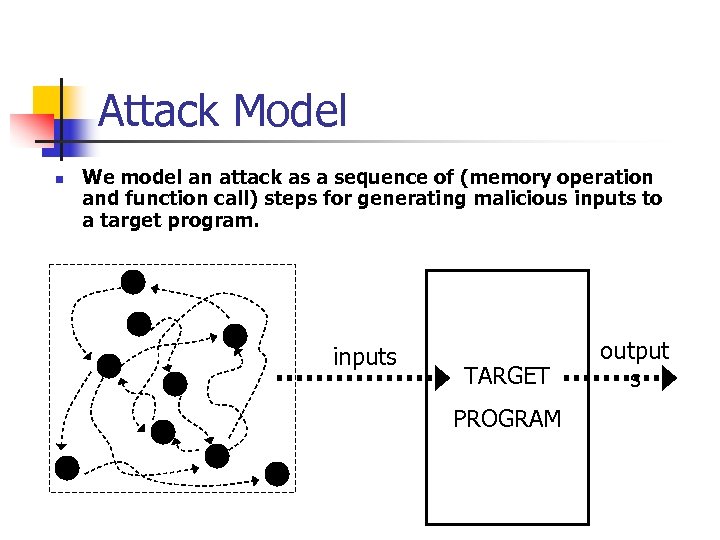

Attack Model

- We model an attack as a sequence of (memory operation and function call) steps for generating malicious inputs to a target program.

Diagram labels: inputs, TARGET PROGRAM, outputs

Output Backtrace

- To extract only the relevant memory operations and function calls, we perform an automated static analysis of each exploit by beginning with the input(s) to the target program and then backtracing (following causality chains) through the control and data flow of the exploit.
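
Backtracing of this kind amounts to a backward reachability search over a dependency graph. The sketch below assumes the exploit has already been turned into a mapping from each node to the nodes it depends on; the graph contents, node names, and function name are hypothetical, and the project's backtracer works on binaries, which this toy example does not attempt.

```python
def backtrace(depends_on, sink):
    """Return every node that the sink transitively depends on, including the sink."""
    relevant = set()
    stack = [sink]
    while stack:
        node = stack.pop()
        if node in relevant:
            continue
        relevant.add(node)
        # Follow the causality chain one step backward.
        stack.extend(depends_on.get(node, ()))
    return relevant


# Hypothetical exploit: the payload sent to the target is built from two buffers,
# while the logging call has no influence on it.
DEPENDS_ON = {
    "send_to_target": ["payload"],
    "payload": ["shellcode", "return_address"],
    "return_address": ["stack_guess"],
    "log_message": ["timestamp"],
}
relevant_steps = backtrace(DEPENDS_ON, "send_to_target")
```

Steps outside the returned set, such as the logging call here, can be discarded before abstraction and induction.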

Research Already Accomplished

- Study of attack patterns
- Automatic DDG Generator
- Automatic Output Backtracer

(It involved a considerable amount of work in going from binary exploits to this point. Portions of the process were analogous to those in the development of Tim Teitelbaum’s Synthesizer-Generator.)

Research To Be Done

- Abstraction algorithm for DDGs
- Efficient induction algorithm over graphical examples
- Analysis of the time complexity of the induction algorithm
- Final evaluation of the approach

(Callout on slide: Currently in progress)
