ba64483ba5b9852002e2db4febb85864.ppt

- Количество слайдов: 94

Artificial Intelligence Genetic Algorithms

Artificial Intelligence Genetic Algorithms

What is Learning The word "learning" has many different meanings. It is used, at least, to describe ©memorizing something ©learning facts through observation and exploration ©development of motor and/or cognitive skills through practice ©organization of new knowledge into general, effective representations

What is Learning The word "learning" has many different meanings. It is used, at least, to describe ©memorizing something ©learning facts through observation and exploration ©development of motor and/or cognitive skills through practice ©organization of new knowledge into general, effective representations

Learning ©Study of processes that lead to selfimprovement of machine performance. ©It implies the ability to use knowledge to create new knowledge or integrating new facts into an existing knowledge structure ©Learning typically requires repetition and practice to reduce differences between observed and actual performance 3

Learning ©Study of processes that lead to selfimprovement of machine performance. ©It implies the ability to use knowledge to create new knowledge or integrating new facts into an existing knowledge structure ©Learning typically requires repetition and practice to reduce differences between observed and actual performance 3

What is Learning? Herbert Simon: “Learning is any process by which a system improves performance from experience. ” 4

What is Learning? Herbert Simon: “Learning is any process by which a system improves performance from experience. ” 4

Learning Definition: A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience. 5

Learning Definition: A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience. 5

Learning & Adaptation • ”Modification of a behavioral tendency by expertise. ” (Webster 1984) • ”A learning machine, broadly defined as any device whose actions are influenced by past experiences. ” (Nilsson 1965) • ”Any change in a system that allows it to perform better the second time on repetition of the same task or on another task drawn from the same population. ” (Simon 1983) 6

Learning & Adaptation • ”Modification of a behavioral tendency by expertise. ” (Webster 1984) • ”A learning machine, broadly defined as any device whose actions are influenced by past experiences. ” (Nilsson 1965) • ”Any change in a system that allows it to perform better the second time on repetition of the same task or on another task drawn from the same population. ” (Simon 1983) 6

Negative Features of Human Learning ©Its slow (5 -6 years for motor skills 12 -20 years for abstract reasoning) ©Inefficient ©Expensive ©There is no copy process ©Learning strategy is often a function of knowledge available to learner 7

Negative Features of Human Learning ©Its slow (5 -6 years for motor skills 12 -20 years for abstract reasoning) ©Inefficient ©Expensive ©There is no copy process ©Learning strategy is often a function of knowledge available to learner 7

Applications of ML ©Learning to recognize spoken words ©Learning to drive an autonomous vehicle ©Learning to classify objects ©Learning to play world-class backgammon ©Designing the morphology and control structure of electromechanical artefacts 8

Applications of ML ©Learning to recognize spoken words ©Learning to drive an autonomous vehicle ©Learning to classify objects ©Learning to play world-class backgammon ©Designing the morphology and control structure of electromechanical artefacts 8

Motivating Problems © Handwritten Character Recognition

Motivating Problems © Handwritten Character Recognition

Motivating Problems © Fingerprint Recognition (e. g. , border control ( 10

Motivating Problems © Fingerprint Recognition (e. g. , border control ( 10

Motivating Problems © Face Recognition (security access to buildings etc( 11

Motivating Problems © Face Recognition (security access to buildings etc( 11

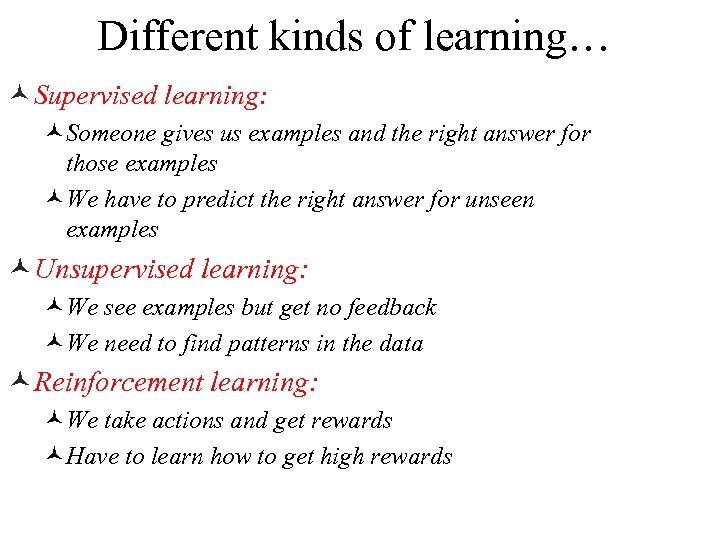

Different kinds of learning… © Supervised learning: ©Someone gives us examples and the right answer for those examples ©We have to predict the right answer for unseen examples ©Unsupervised learning: ©We see examples but get no feedback ©We need to find patterns in the data ©Reinforcement learning: ©We take actions and get rewards ©Have to learn how to get high rewards

Different kinds of learning… © Supervised learning: ©Someone gives us examples and the right answer for those examples ©We have to predict the right answer for unseen examples ©Unsupervised learning: ©We see examples but get no feedback ©We need to find patterns in the data ©Reinforcement learning: ©We take actions and get rewards ©Have to learn how to get high rewards

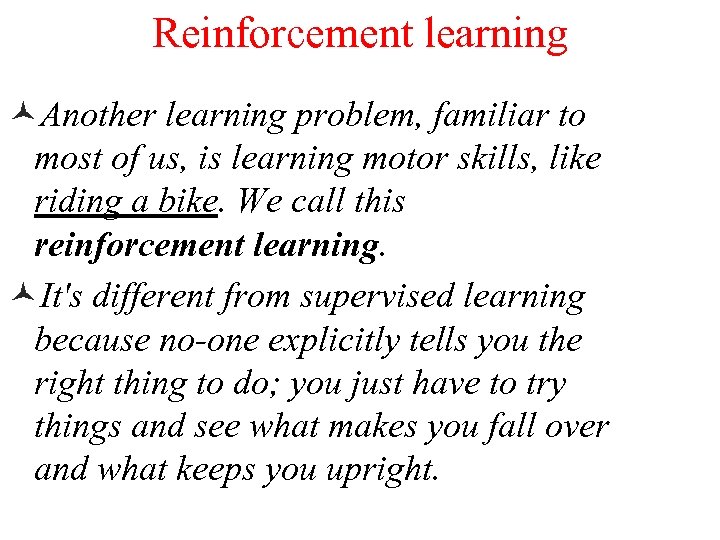

Reinforcement learning ©Another learning problem, familiar to most of us, is learning motor skills, like riding a bike. We call this reinforcement learning. ©It's different from supervised learning because no-one explicitly tells you the right thing to do; you just have to try things and see what makes you fall over and what keeps you upright.

Reinforcement learning ©Another learning problem, familiar to most of us, is learning motor skills, like riding a bike. We call this reinforcement learning. ©It's different from supervised learning because no-one explicitly tells you the right thing to do; you just have to try things and see what makes you fall over and what keeps you upright.

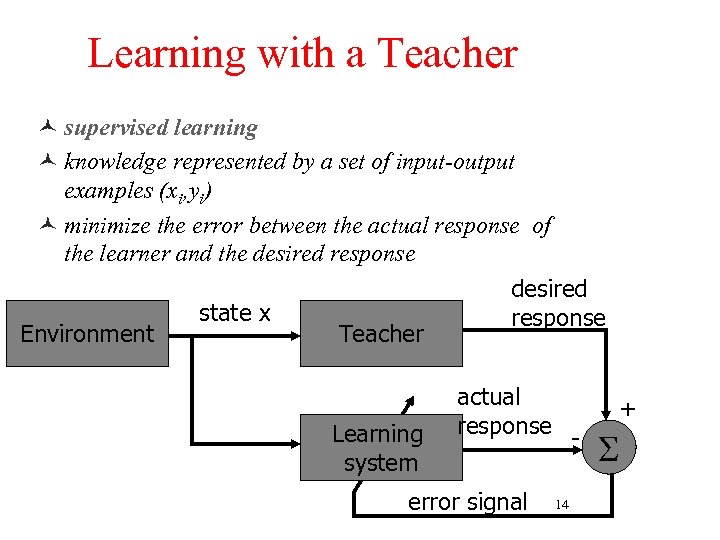

Learning with a Teacher © supervised learning © knowledge represented by a set of input-output examples (xi, yi) © minimize the error between the actual response of the learner and the desired response desired state x response Environment Teacher Learning system actual response error signal + 14 S

Learning with a Teacher © supervised learning © knowledge represented by a set of input-output examples (xi, yi) © minimize the error between the actual response of the learner and the desired response desired state x response Environment Teacher Learning system actual response error signal + 14 S

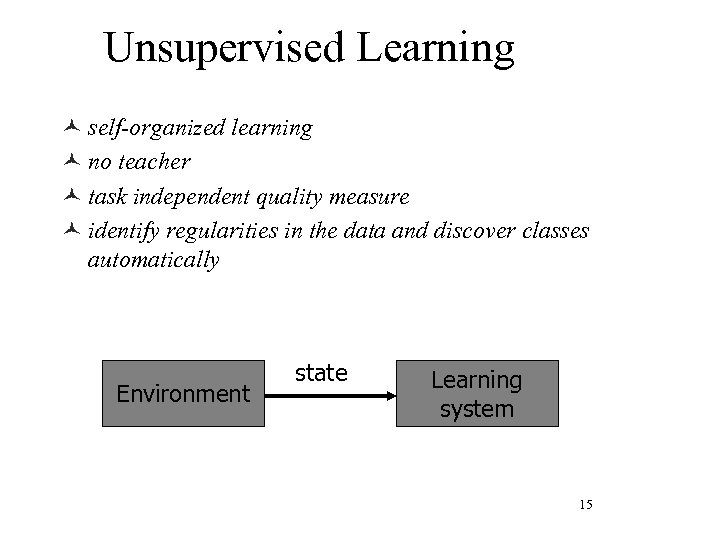

Unsupervised Learning © self-organized learning © no teacher © task independent quality measure © identify regularities in the data and discover classes automatically Environment state Learning system 15

Unsupervised Learning © self-organized learning © no teacher © task independent quality measure © identify regularities in the data and discover classes automatically Environment state Learning system 15

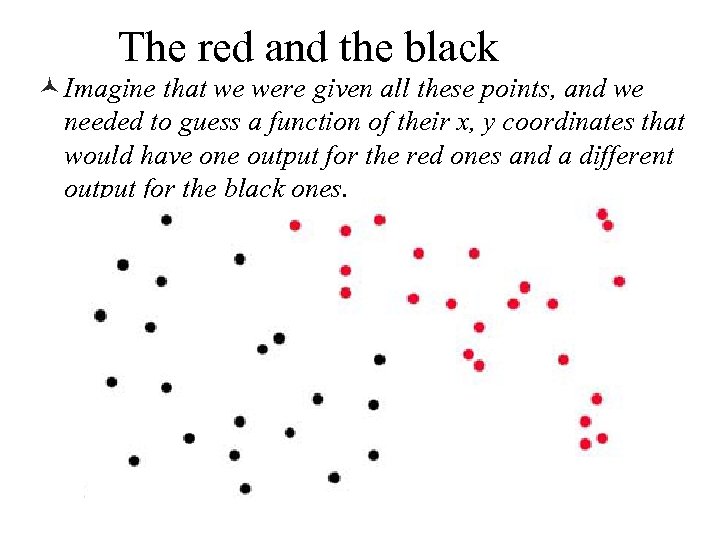

The red and the black © Imagine that we were given all these points, and we needed to guess a function of their x, y coordinates that would have one output for the red ones and a different output for the black ones.

The red and the black © Imagine that we were given all these points, and we needed to guess a function of their x, y coordinates that would have one output for the red ones and a different output for the black ones.

What’s the right hypothesis? ©In this case, it seems like we could do pretty well by defining a line that separates the two classes.

What’s the right hypothesis? ©In this case, it seems like we could do pretty well by defining a line that separates the two classes.

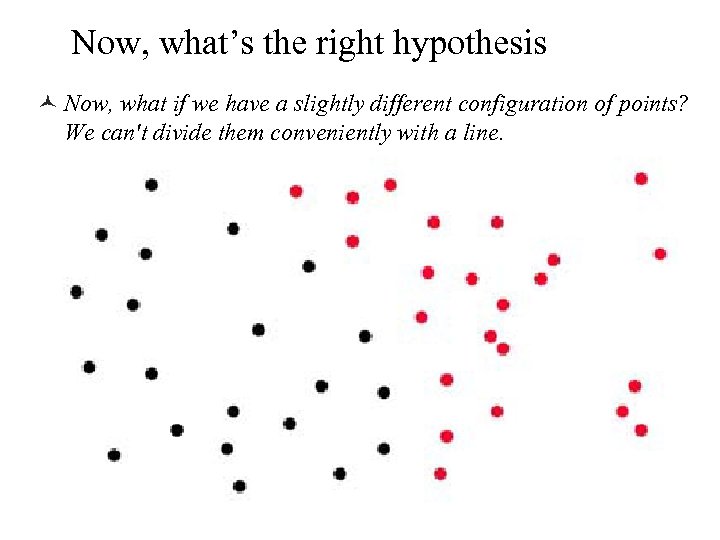

Now, what’s the right hypothesis © Now, what if we have a slightly different configuration of points? We can't divide them conveniently with a line.

Now, what’s the right hypothesis © Now, what if we have a slightly different configuration of points? We can't divide them conveniently with a line.

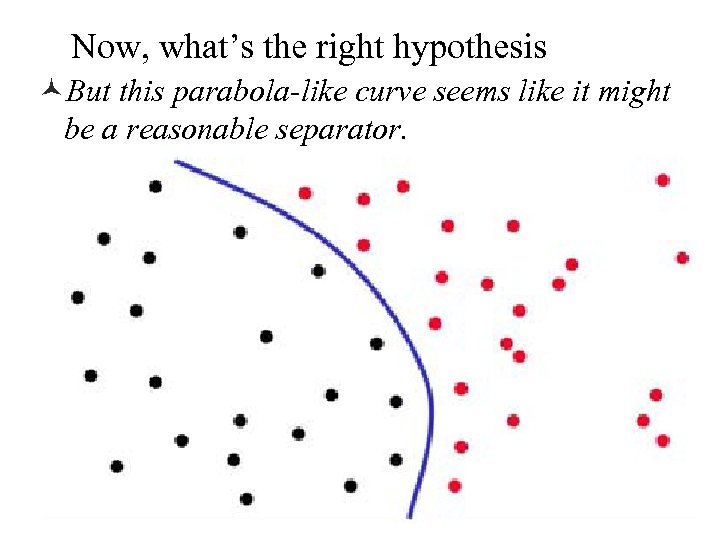

Now, what’s the right hypothesis ©But this parabola-like curve seems like it might be a reasonable separator.

Now, what’s the right hypothesis ©But this parabola-like curve seems like it might be a reasonable separator.

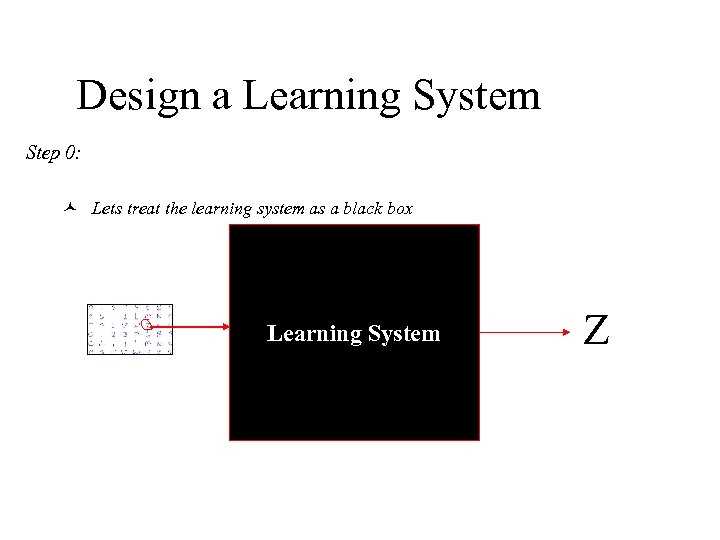

Design a Learning System Step 0: © Lets treat the learning system as a black box Learning System Z

Design a Learning System Step 0: © Lets treat the learning system as a black box Learning System Z

Design a Learning System Step 1: Collect Training Examples (Experience). © Without examples, our system will not learn (so-called learning from examples) 2 3 6 7 8 9

Design a Learning System Step 1: Collect Training Examples (Experience). © Without examples, our system will not learn (so-called learning from examples) 2 3 6 7 8 9

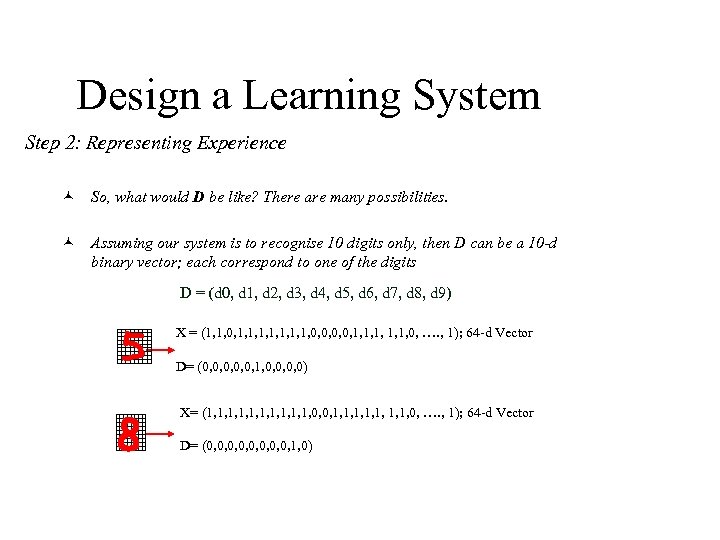

Design a Learning System Step 2: Representing Experience © So, what would D be like? There are many possibilities. © Assuming our system is to recognise 10 digits only, then D can be a 10 -d binary vector; each correspond to one of the digits D = (d 0, d 1, d 2, d 3, d 4, d 5, d 6, d 7, d 8, d 9) X = (1, 1, 0, 1, 1, 0, 0, 1, 1, 1, 0, …. , 1); 64 -d Vector D= (0, 0, 0, 1, 0, 0) X= (1, 1, 1, 0, 0, 1, 1, 0, …. , 1); 64 -d Vector D= (0, 0, 1, 0)

Design a Learning System Step 2: Representing Experience © So, what would D be like? There are many possibilities. © Assuming our system is to recognise 10 digits only, then D can be a 10 -d binary vector; each correspond to one of the digits D = (d 0, d 1, d 2, d 3, d 4, d 5, d 6, d 7, d 8, d 9) X = (1, 1, 0, 1, 1, 0, 0, 1, 1, 1, 0, …. , 1); 64 -d Vector D= (0, 0, 0, 1, 0, 0) X= (1, 1, 1, 0, 0, 1, 1, 0, …. , 1); 64 -d Vector D= (0, 0, 1, 0)

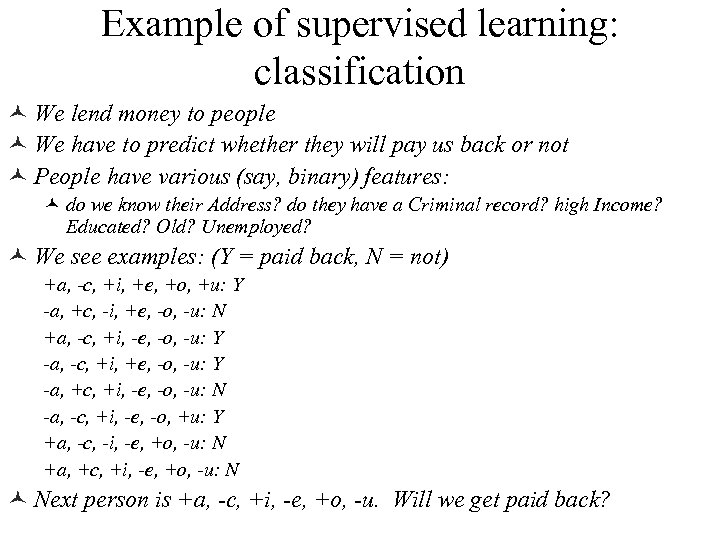

Example of supervised learning: classification © We lend money to people © We have to predict whether they will pay us back or not © People have various (say, binary) features: © do we know their Address? do they have a Criminal record? high Income? Educated? Old? Unemployed? © We see examples: (Y = paid back, N = not) +a, -c, +i, +e, +o, +u: Y -a, +c, -i, +e, -o, -u: N +a, -c, +i, -e, -o, -u: Y -a, -c, +i, +e, -o, -u: Y -a, +c, +i, -e, -o, -u: N -a, -c, +i, -e, -o, +u: Y +a, -c, -i, -e, +o, -u: N +a, +c, +i, -e, +o, -u: N © Next person is +a, -c, +i, -e, +o, -u. Will we get paid back?

Example of supervised learning: classification © We lend money to people © We have to predict whether they will pay us back or not © People have various (say, binary) features: © do we know their Address? do they have a Criminal record? high Income? Educated? Old? Unemployed? © We see examples: (Y = paid back, N = not) +a, -c, +i, +e, +o, +u: Y -a, +c, -i, +e, -o, -u: N +a, -c, +i, -e, -o, -u: Y -a, -c, +i, +e, -o, -u: Y -a, +c, +i, -e, -o, -u: N -a, -c, +i, -e, -o, +u: Y +a, -c, -i, -e, +o, -u: N +a, +c, +i, -e, +o, -u: N © Next person is +a, -c, +i, -e, +o, -u. Will we get paid back?

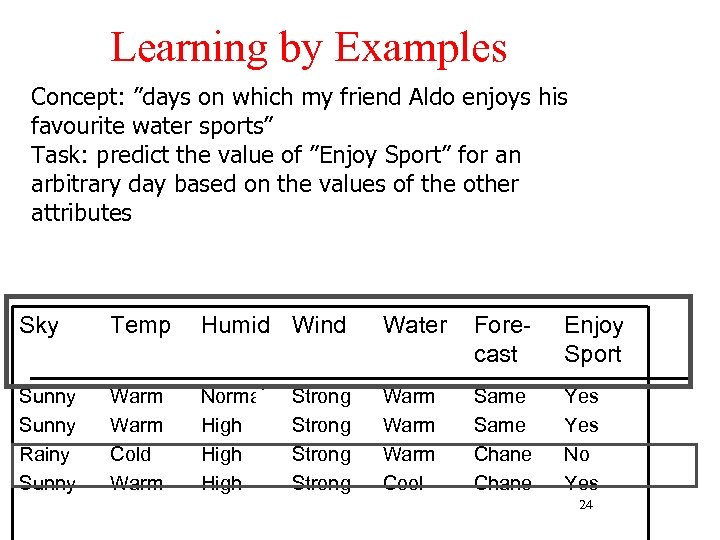

Learning by Examples Concept: ”days on which my friend Aldo enjoys his favourite water sports” Task: predict the value of ”Enjoy Sport” for an arbitrary day based on the values of the other attributes Sky Temp Humid Wind Water Forecast Enjoy Sport Sunny Rainy Sunny Warm Cold Warm Normal High Warm Cool Same Chane Yes No Yes Strong 24

Learning by Examples Concept: ”days on which my friend Aldo enjoys his favourite water sports” Task: predict the value of ”Enjoy Sport” for an arbitrary day based on the values of the other attributes Sky Temp Humid Wind Water Forecast Enjoy Sport Sunny Rainy Sunny Warm Cold Warm Normal High Warm Cool Same Chane Yes No Yes Strong 24

Decision trees high Income? yes no Criminal record? yes NO NO no YES

Decision trees high Income? yes no Criminal record? yes NO NO no YES

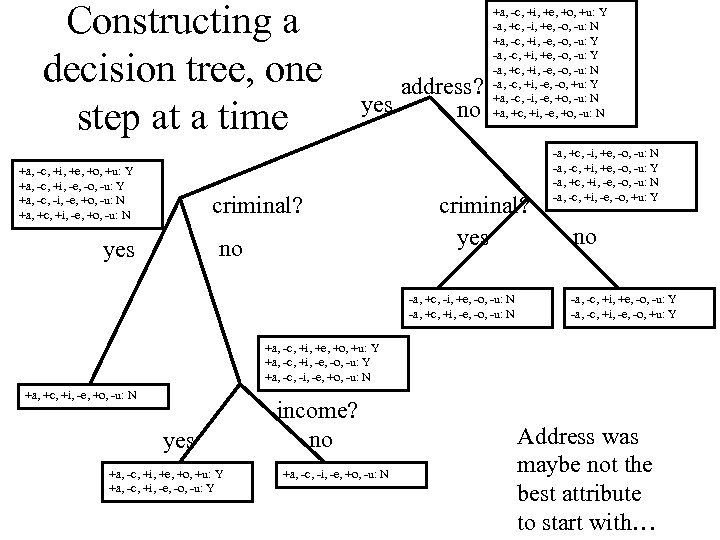

Constructing a decision tree, one step at a time +a, -c, +i, +e, +o, +u: Y +a, -c, +i, -e, -o, -u: Y +a, -c, -i, -e, +o, -u: N +a, +c, +i, -e, +o, -u: N address? yes no criminal? no yes +a, -c, +i, +e, +o, +u: Y -a, +c, -i, +e, -o, -u: N +a, -c, +i, -e, -o, -u: Y -a, -c, +i, +e, -o, -u: Y -a, +c, +i, -e, -o, -u: N -a, -c, +i, -e, -o, +u: Y +a, -c, -i, -e, +o, -u: N +a, +c, +i, -e, +o, -u: N criminal? yes -a, +c, -i, +e, -o, -u: N -a, +c, +i, -e, -o, -u: N -a, +c, -i, +e, -o, -u: N -a, -c, +i, +e, -o, -u: Y -a, +c, +i, -e, -o, -u: N -a, -c, +i, -e, -o, +u: Y no -a, -c, +i, +e, -o, -u: Y -a, -c, +i, -e, -o, +u: Y +a, -c, +i, +e, +o, +u: Y +a, -c, +i, -e, -o, -u: Y +a, -c, -i, -e, +o, -u: N +a, +c, +i, -e, +o, -u: N yes +a, -c, +i, +e, +o, +u: Y +a, -c, +i, -e, -o, -u: Y income? no +a, -c, -i, -e, +o, -u: N Address was maybe not the best attribute to start with…

Constructing a decision tree, one step at a time +a, -c, +i, +e, +o, +u: Y +a, -c, +i, -e, -o, -u: Y +a, -c, -i, -e, +o, -u: N +a, +c, +i, -e, +o, -u: N address? yes no criminal? no yes +a, -c, +i, +e, +o, +u: Y -a, +c, -i, +e, -o, -u: N +a, -c, +i, -e, -o, -u: Y -a, -c, +i, +e, -o, -u: Y -a, +c, +i, -e, -o, -u: N -a, -c, +i, -e, -o, +u: Y +a, -c, -i, -e, +o, -u: N +a, +c, +i, -e, +o, -u: N criminal? yes -a, +c, -i, +e, -o, -u: N -a, +c, +i, -e, -o, -u: N -a, +c, -i, +e, -o, -u: N -a, -c, +i, +e, -o, -u: Y -a, +c, +i, -e, -o, -u: N -a, -c, +i, -e, -o, +u: Y no -a, -c, +i, +e, -o, -u: Y -a, -c, +i, -e, -o, +u: Y +a, -c, +i, +e, +o, +u: Y +a, -c, +i, -e, -o, -u: Y +a, -c, -i, -e, +o, -u: N +a, +c, +i, -e, +o, -u: N yes +a, -c, +i, +e, +o, +u: Y +a, -c, +i, -e, -o, -u: Y income? no +a, -c, -i, -e, +o, -u: N Address was maybe not the best attribute to start with…

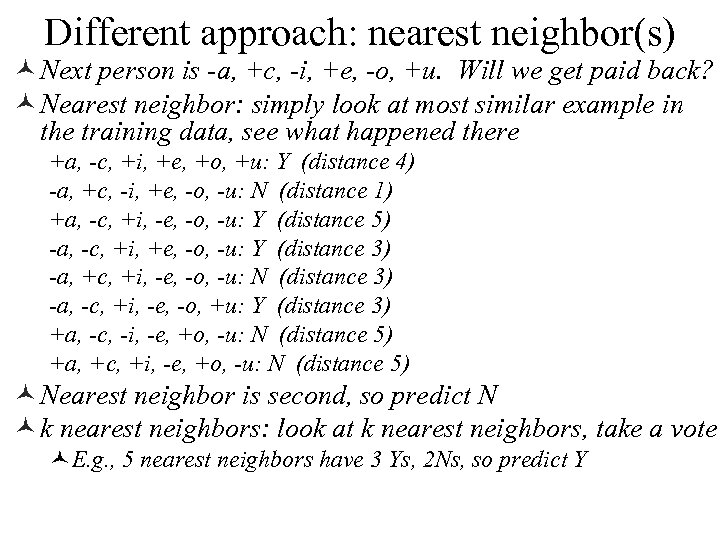

Different approach: nearest neighbor(s) © Next person is -a, +c, -i, +e, -o, +u. Will we get paid back? © Nearest neighbor: simply look at most similar example in the training data, see what happened there +a, -c, +i, +e, +o, +u: Y (distance 4) -a, +c, -i, +e, -o, -u: N (distance 1) +a, -c, +i, -e, -o, -u: Y (distance 5) -a, -c, +i, +e, -o, -u: Y (distance 3) -a, +c, +i, -e, -o, -u: N (distance 3) -a, -c, +i, -e, -o, +u: Y (distance 3) +a, -c, -i, -e, +o, -u: N (distance 5) +a, +c, +i, -e, +o, -u: N (distance 5) © Nearest neighbor is second, so predict N © k nearest neighbors: look at k nearest neighbors, take a vote ©E. g. , 5 nearest neighbors have 3 Ys, 2 Ns, so predict Y

Different approach: nearest neighbor(s) © Next person is -a, +c, -i, +e, -o, +u. Will we get paid back? © Nearest neighbor: simply look at most similar example in the training data, see what happened there +a, -c, +i, +e, +o, +u: Y (distance 4) -a, +c, -i, +e, -o, -u: N (distance 1) +a, -c, +i, -e, -o, -u: Y (distance 5) -a, -c, +i, +e, -o, -u: Y (distance 3) -a, +c, +i, -e, -o, -u: N (distance 3) -a, -c, +i, -e, -o, +u: Y (distance 3) +a, -c, -i, -e, +o, -u: N (distance 5) +a, +c, +i, -e, +o, -u: N (distance 5) © Nearest neighbor is second, so predict N © k nearest neighbors: look at k nearest neighbors, take a vote ©E. g. , 5 nearest neighbors have 3 Ys, 2 Ns, so predict Y

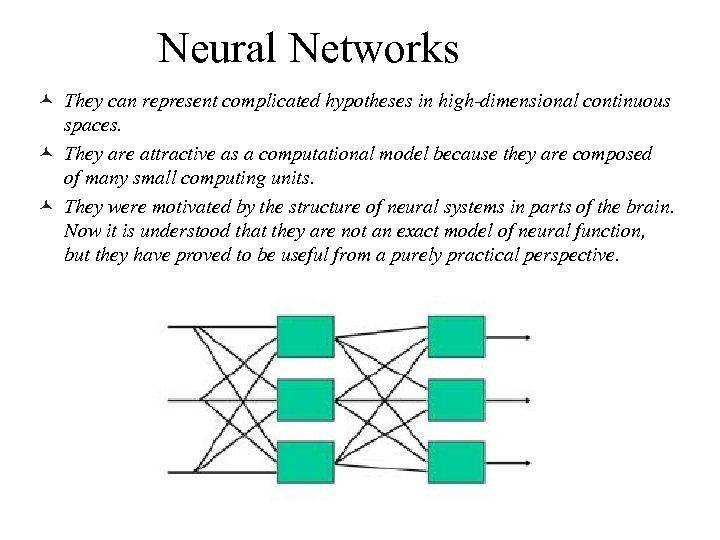

Neural Networks © They can represent complicated hypotheses in high-dimensional continuous spaces. © They are attractive as a computational model because they are composed of many small computing units. © They were motivated by the structure of neural systems in parts of the brain. Now it is understood that they are not an exact model of neural function, but they have proved to be useful from a purely practical perspective.

Neural Networks © They can represent complicated hypotheses in high-dimensional continuous spaces. © They are attractive as a computational model because they are composed of many small computing units. © They were motivated by the structure of neural systems in parts of the brain. Now it is understood that they are not an exact model of neural function, but they have proved to be useful from a purely practical perspective.

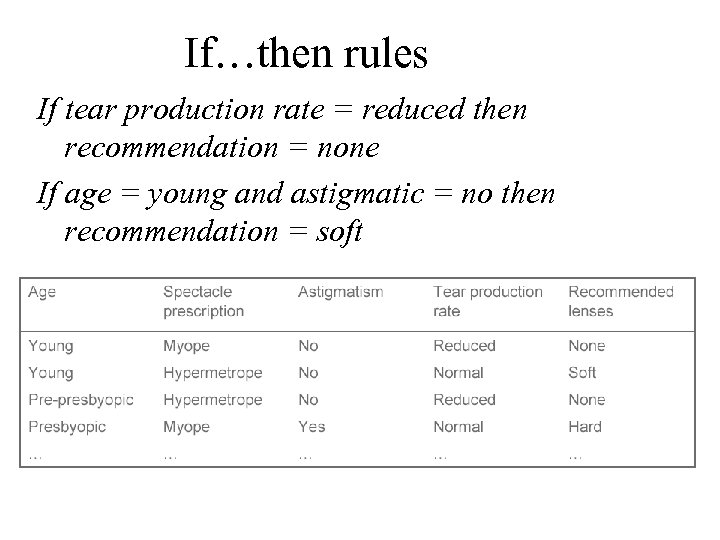

If…then rules If tear production rate = reduced then recommendation = none If age = young and astigmatic = no then recommendation = soft

If…then rules If tear production rate = reduced then recommendation = none If age = young and astigmatic = no then recommendation = soft

Approaches to Machine Learning ©Numerical approaches ©Build numeric model with parameters based on successes ©Structural approaches ©Concerned with the process of defining relationships by creating links between concepts 30

Approaches to Machine Learning ©Numerical approaches ©Build numeric model with parameters based on successes ©Structural approaches ©Concerned with the process of defining relationships by creating links between concepts 30

Learning methods ©Decision rules: ©If income < $30. 000 then reject ©Bayesian network: ©P(good | income, credit history, …. ) ©Neural Network: ©Nearest Neighbor: ©Take the same decision as for the customer in the data base that is most similar to the applicant 31

Learning methods ©Decision rules: ©If income < $30. 000 then reject ©Bayesian network: ©P(good | income, credit history, …. ) ©Neural Network: ©Nearest Neighbor: ©Take the same decision as for the customer in the data base that is most similar to the applicant 31

Classification © Assign object/event to one of a given finite set of categories. © Medical diagnosis © Credit card applications or transactions © Fraud detection in e-commerce © Worm detection in network packets © Spam filtering in email © Recommended articles in a newspaper © Recommended books, movies, music, or jokes © Financial investments © DNA sequences © Spoken words © Handwritten letters © Astronomical images 32

Classification © Assign object/event to one of a given finite set of categories. © Medical diagnosis © Credit card applications or transactions © Fraud detection in e-commerce © Worm detection in network packets © Spam filtering in email © Recommended articles in a newspaper © Recommended books, movies, music, or jokes © Financial investments © DNA sequences © Spoken words © Handwritten letters © Astronomical images 32

Problem Solving / Planning / Control © Performing actions in an environment in order to achieve a goal. ©Solving calculus problems ©Playing checkers, chess, or backgammon ©Balancing a pole ©Driving a car or a jeep ©Flying a plane, helicopter, or rocket ©Controlling an elevator ©Controlling a character in a video game ©Controlling a mobile robot 33

Problem Solving / Planning / Control © Performing actions in an environment in order to achieve a goal. ©Solving calculus problems ©Playing checkers, chess, or backgammon ©Balancing a pole ©Driving a car or a jeep ©Flying a plane, helicopter, or rocket ©Controlling an elevator ©Controlling a character in a video game ©Controlling a mobile robot 33

Another Example: Handwriting Recognition l Positive: – This is a letter S: © Background concepts: ©Pixel information © Categorisations: l Negative: – This is a letter Z: ©(Matrix, Letter) pairs ©Both positive & negative © Task ©Correctly categorise ©An unseen example ©Into 1 of 26 categories

Another Example: Handwriting Recognition l Positive: – This is a letter S: © Background concepts: ©Pixel information © Categorisations: l Negative: – This is a letter Z: ©(Matrix, Letter) pairs ©Both positive & negative © Task ©Correctly categorise ©An unseen example ©Into 1 of 26 categories

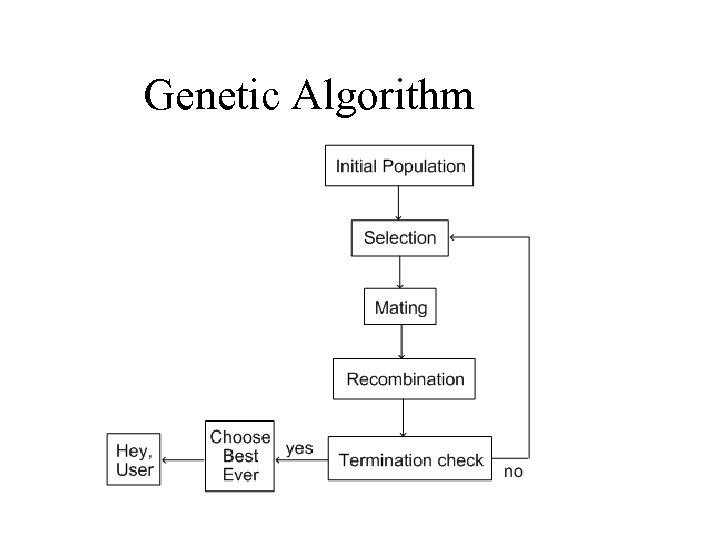

Genetic Algorithm ©Genetic Algorithms have been applied successfully to a variety of AI applications ©For example, they have been used to learn collections of rules for robot control. ©Genetic Algorithms and genetic programming are called Evolutionary Computation

Genetic Algorithm ©Genetic Algorithms have been applied successfully to a variety of AI applications ©For example, they have been used to learn collections of rules for robot control. ©Genetic Algorithms and genetic programming are called Evolutionary Computation

Theory of Evolution ©Every organism has unique attributes that can be transmitted to its offspring ©Selective breeding can be used to manage changes from one generation to the next ©Nature applies certain pressures that cause individuals to evolve over time

Theory of Evolution ©Every organism has unique attributes that can be transmitted to its offspring ©Selective breeding can be used to manage changes from one generation to the next ©Nature applies certain pressures that cause individuals to evolve over time

Genetic Algorithms (GAs) and Genetic Programming (GP) ©Genetic Algorithms ©Optimising parameters for problem solving ©Represent the parameters in the solution(s) ©As a “bit” string normally, but often something else ©Evolve answers in this representation ©Genetic Programming ©Representation of solutions is richer in general ©Solutions can be interpreted as programs ©Evolutionary process is very similar

Genetic Algorithms (GAs) and Genetic Programming (GP) ©Genetic Algorithms ©Optimising parameters for problem solving ©Represent the parameters in the solution(s) ©As a “bit” string normally, but often something else ©Evolve answers in this representation ©Genetic Programming ©Representation of solutions is richer in general ©Solutions can be interpreted as programs ©Evolutionary process is very similar

GA ©Genetic algorithms provide an AI method by an analogy of biological evolution ©It constructs a population of evolving solutions to solve the problem

GA ©Genetic algorithms provide an AI method by an analogy of biological evolution ©It constructs a population of evolving solutions to solve the problem

Genetic Algorithms © What are they? ©Evolutionary algorithms that make use of operations like mutation, recombination, and selection © Uses? ©Difficult search problems ©Optimization problems ©Machine learning ©Adaptive rule-bases

Genetic Algorithms © What are they? ©Evolutionary algorithms that make use of operations like mutation, recombination, and selection © Uses? ©Difficult search problems ©Optimization problems ©Machine learning ©Adaptive rule-bases

Classical GAs © Representation of parameters is a bit string ©Solutions to a problem represented in binary © 10101001001110101 © Start with a population (fairly large set) ©Of possible solutions known as individuals © Combine possible solutions by swapping material ©Choose the “best” solutions to swap material between and kill off the worse solutions ©This generates a new set of possible solutions © Requires a notion of “fitness” of the individual ©Base on an evaluation function with respect to the problem

Classical GAs © Representation of parameters is a bit string ©Solutions to a problem represented in binary © 10101001001110101 © Start with a population (fairly large set) ©Of possible solutions known as individuals © Combine possible solutions by swapping material ©Choose the “best” solutions to swap material between and kill off the worse solutions ©This generates a new set of possible solutions © Requires a notion of “fitness” of the individual ©Base on an evaluation function with respect to the problem

Genetic Algorithm

Genetic Algorithm

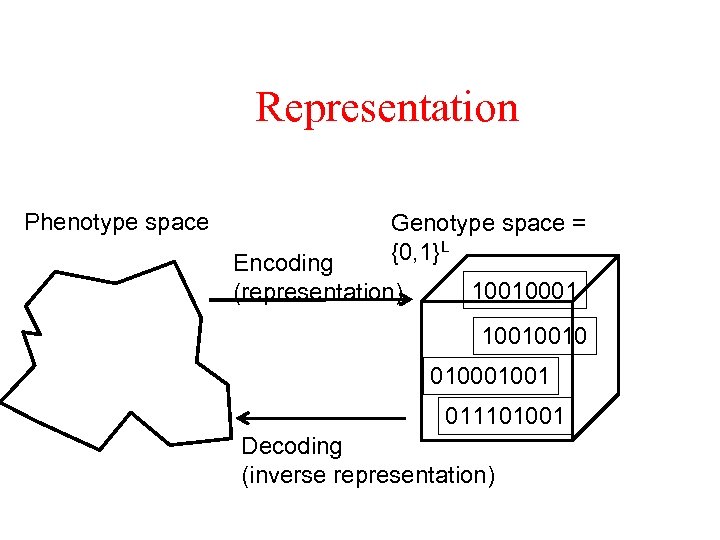

Representation Phenotype space Genotype space = {0, 1}L Encoding (representation) 10010001 10010010 01001 011101001 Decoding (inverse representation)

Representation Phenotype space Genotype space = {0, 1}L Encoding (representation) 10010001 10010010 01001 011101001 Decoding (inverse representation)

GA Representation ©Genetic algorithms are represented as gene ©Each population consists of a whole set of genes ©Using biological reproduction, new population is created from old one.

GA Representation ©Genetic algorithms are represented as gene ©Each population consists of a whole set of genes ©Using biological reproduction, new population is created from old one.

The Initial Population ©Represent solutions to problems ©As a bit string of length L ©Choose an initial population size ©Generate length L strings of 1 s & 0 s randomly ©Strings are sometimes called chromosomes ©Letters in the string are called “genes” ©We call the bit-string “individuals”

The Initial Population ©Represent solutions to problems ©As a bit string of length L ©Choose an initial population size ©Generate length L strings of 1 s & 0 s randomly ©Strings are sometimes called chromosomes ©Letters in the string are called “genes” ©We call the bit-string “individuals”

Initialization ©Initial population must be a representative sample of the search space ©Random initialization can be a good idea (if the sample is large enough)

Initialization ©Initial population must be a representative sample of the search space ©Random initialization can be a good idea (if the sample is large enough)

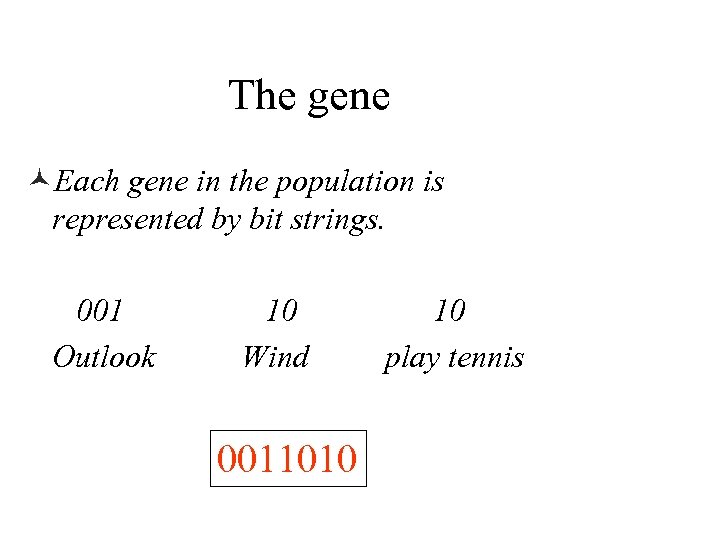

The gene ©Each gene in the population is represented by bit strings. 001 Outlook 10 Wind 0011010 10 play tennis

The gene ©Each gene in the population is represented by bit strings. 001 Outlook 10 Wind 0011010 10 play tennis

Gene Example ©The idea is to use a bit string to describe the value of attribute ©The attribute Outlook has 3 values (sunny, overcast, raining) ©So we use 3 bit length to represent attribute outlook © 010 represent the outlook = overcast

Gene Example ©The idea is to use a bit string to describe the value of attribute ©The attribute Outlook has 3 values (sunny, overcast, raining) ©So we use 3 bit length to represent attribute outlook © 010 represent the outlook = overcast

GA ©The fitness function evaluates each solution and decide it will be in next generation of solutions

GA ©The fitness function evaluates each solution and decide it will be in next generation of solutions

Selection ©Want to to give preference to “better” individuals to add to mating pool ©If entire population ends up being selected it may be desirable to conduct a tournament to order individuals in population ©Would like to keep the best in the mating pool and drop the worst

Selection ©Want to to give preference to “better” individuals to add to mating pool ©If entire population ends up being selected it may be desirable to conduct a tournament to order individuals in population ©Would like to keep the best in the mating pool and drop the worst

Selection methods Common selection methods used in GAs are ©Fitness Proportionate Selection ©Rank Selection ©Tournament Selection

Selection methods Common selection methods used in GAs are ©Fitness Proportionate Selection ©Rank Selection ©Tournament Selection

Rank Selection ©All individuals are sorted according to their fitness. ©Each individual is then assigned a probability of being selected from some prior probability density.

Rank Selection ©All individuals are sorted according to their fitness. ©Each individual is then assigned a probability of being selected from some prior probability density.

Selection © Main idea: better individuals get higher chance ©Chances proportional to fitness ©Implementation: roulette wheel technique ©Assign to each individual a part of the roulette wheel © Spin the wheel n times to select n individuals 1/6 = 17% A 3/6 = 50% B C 2/6 = 33% fitness(A) = 3 fitness(B) = 1 fitness(C) = 2

Selection © Main idea: better individuals get higher chance ©Chances proportional to fitness ©Implementation: roulette wheel technique ©Assign to each individual a part of the roulette wheel © Spin the wheel n times to select n individuals 1/6 = 17% A 3/6 = 50% B C 2/6 = 33% fitness(A) = 3 fitness(B) = 1 fitness(C) = 2

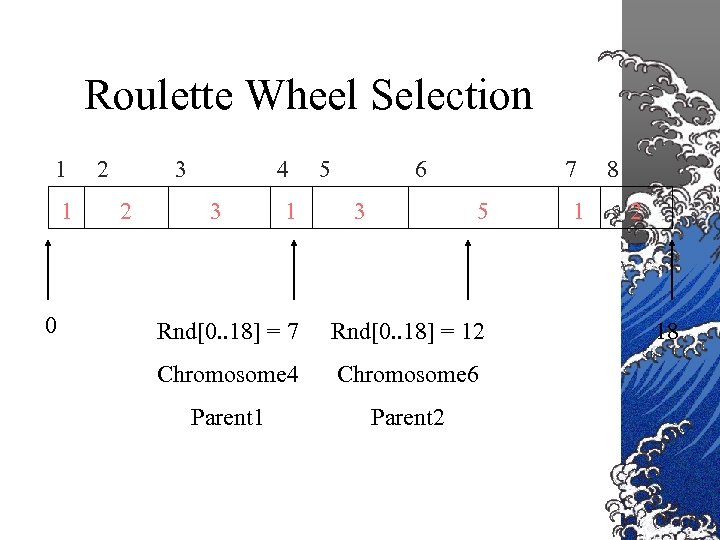

Roulette Wheel Selection 1 1 0 2 3 2 4 3 1 5 6 3 7 5 Rnd[0. . 18] = 7 Rnd[0. . 18] = 12 Chromosome 4 Chromosome 6 Parent 1 Parent 2 1 8 2 18

Roulette Wheel Selection 1 1 0 2 3 2 4 3 1 5 6 3 7 5 Rnd[0. . 18] = 7 Rnd[0. . 18] = 12 Chromosome 4 Chromosome 6 Parent 1 Parent 2 1 8 2 18

Tournament Selection ©Select a group of N (N>1) members. ©Select the fittest member of this group and discard the rest.

Tournament Selection ©Select a group of N (N>1) members. ©Select the fittest member of this group and discard the rest.

New Population ©To build new population from old one we use genetic operators to evolve the population of solutions ©Genetic operators are ©Crossover operator ©Mutation operator ©Production operator

New Population ©To build new population from old one we use genetic operators to evolve the population of solutions ©Genetic operators are ©Crossover operator ©Mutation operator ©Production operator

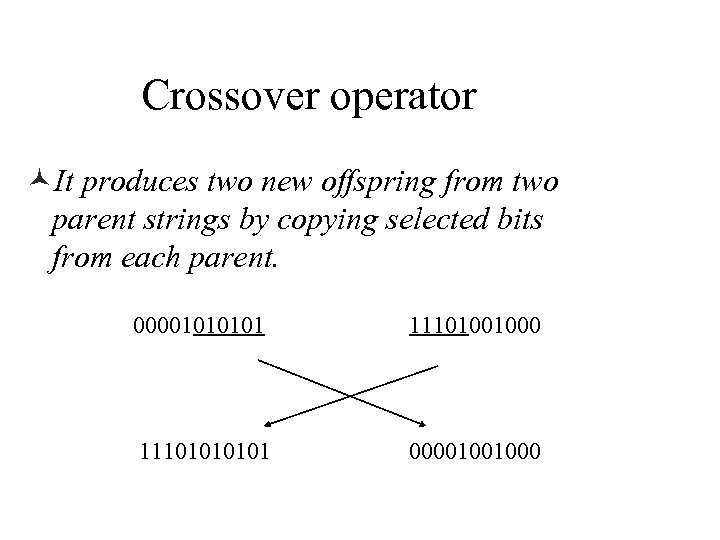

Crossover operator ©It produces two new offspring from two parent strings by copying selected bits from each parent. 0000101 11101001000 1110101 00001001000

Crossover operator ©It produces two new offspring from two parent strings by copying selected bits from each parent. 0000101 11101001000 1110101 00001001000

Crossover ©In sexual reproduction the genetic codes of both parents are combined to create offspring ©Would like to keep 60/40 split between parent contributions © 95/5 splits negate the benefits of crossover (too much like asexual reproduction)

Crossover ©In sexual reproduction the genetic codes of both parents are combined to create offspring ©Would like to keep 60/40 split between parent contributions © 95/5 splits negate the benefits of crossover (too much like asexual reproduction)

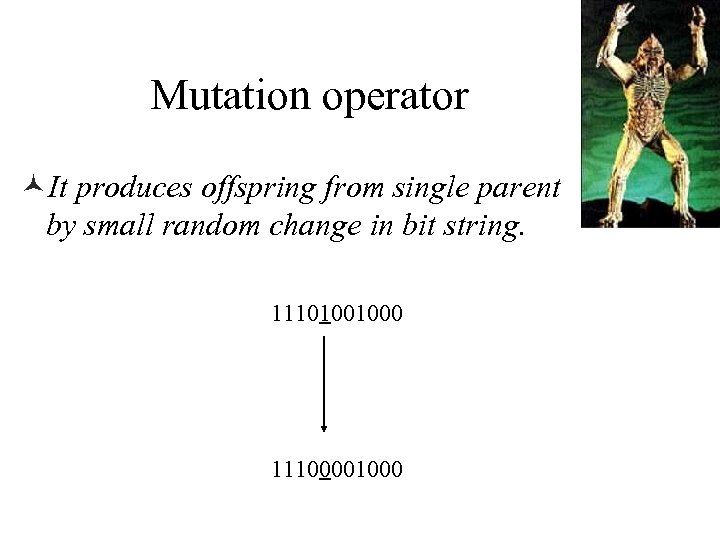

Mutation operator ©It produces offspring from single parent by small random change in bit string. 11101001000 11100001000

Mutation operator ©It produces offspring from single parent by small random change in bit string. 11101001000 11100001000

Mutation © Mutation is important for maintaining diversity in the genetic code © In humans, mutation was responsible for the evolution of intelleigence © Example: The occasional (low probably) alteration of a bit position in a string

Mutation © Mutation is important for maintaining diversity in the genetic code © In humans, mutation was responsible for the evolution of intelleigence © Example: The occasional (low probably) alteration of a bit position in a string

Replacement ©Determine when to insert new offspring into the population and which individuals to drop out based on fitness ©Steady state evolution calls for the same number of individuals in the population, so each new offspring processed one at a time so fit individuals can remain a long time

Replacement ©Determine when to insert new offspring into the population and which individuals to drop out based on fitness ©Steady state evolution calls for the same number of individuals in the population, so each new offspring processed one at a time so fit individuals can remain a long time

The Termination Check © Termination may involve testing whether an individual solves the problem to a good enough standard © Not necessarily having found a definitive answer © Alternatively, termination may occur after a fixed time © Or after a fixed number of generations © Note that the best individual in a population © So, your GA should: © Record the best from each generation © Output the best from all populations as the answer

The Termination Check © Termination may involve testing whether an individual solves the problem to a good enough standard © Not necessarily having found a definitive answer © Alternatively, termination may occur after a fixed time © Or after a fixed number of generations © Note that the best individual in a population © So, your GA should: © Record the best from each generation © Output the best from all populations as the answer

Why use genetic algorithms? © They can solve hard problems © Easy to interface genetic algorithms to existing simulations and models © GA’s are extensible © GA’s are easy to hybridize © GA’s work by sampling, so populations can be sized to detect differences with specified error rates © Use little problem specific code

Why use genetic algorithms? © They can solve hard problems © Easy to interface genetic algorithms to existing simulations and models © GA’s are extensible © GA’s are easy to hybridize © GA’s work by sampling, so populations can be sized to detect differences with specified error rates © Use little problem specific code

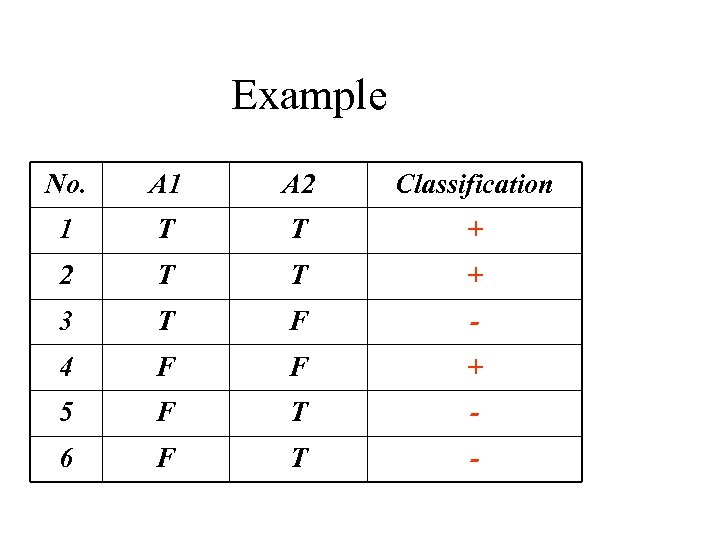

Example No. A 1 A 2 Classification 1 T T + 2 T T + 3 T F - 4 F F + 5 F T - 6 F T -

Example No. A 1 A 2 Classification 1 T T + 2 T T + 3 T F - 4 F F + 5 F T - 6 F T -

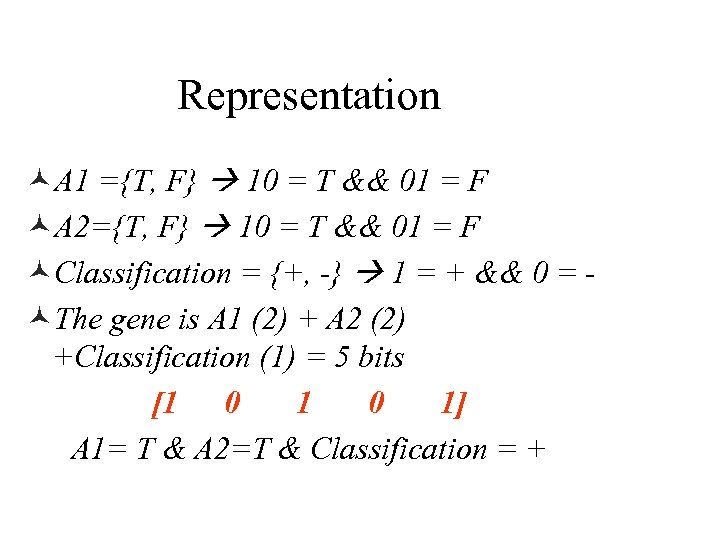

Representation ©A 1 ={T, F} 10 = T && 01 = F ©A 2={T, F} 10 = T && 01 = F ©Classification = {+, -} 1 = + && 0 = ©The gene is A 1 (2) + A 2 (2) +Classification (1) = 5 bits [1 0 1] A 1= T & A 2=T & Classification = +

Representation ©A 1 ={T, F} 10 = T && 01 = F ©A 2={T, F} 10 = T && 01 = F ©Classification = {+, -} 1 = + && 0 = ©The gene is A 1 (2) + A 2 (2) +Classification (1) = 5 bits [1 0 1] A 1= T & A 2=T & Classification = +

![Initial Population © Let we construct 10 genes randomly as [11101] fitness = 4 Initial Population © Let we construct 10 genes randomly as [11101] fitness = 4](https://present5.com/presentation/ba64483ba5b9852002e2db4febb85864/image-65.jpg) Initial Population © Let we construct 10 genes randomly as [11101] fitness = 4 cases { 2 true and 2 false}=0. 5 [10001] fitness = 0. 66 [01011] fitness = 0. 0 [01011] [01111] [11111] [01000] [00001] [11110] [01100]

Initial Population © Let we construct 10 genes randomly as [11101] fitness = 4 cases { 2 true and 2 false}=0. 5 [10001] fitness = 0. 66 [01011] fitness = 0. 0 [01011] [01111] [11111] [01000] [00001] [11110] [01100]

![Crossover operation [11101] X [01100] [11100] + [01101] [01011] X [01010] [10001] [11001] + Crossover operation [11101] X [01100] [11100] + [01101] [01011] X [01010] [10001] [11001] +](https://present5.com/presentation/ba64483ba5b9852002e2db4febb85864/image-66.jpg) Crossover operation [11101] X [01100] [11100] + [01101] [01011] X [01010] [10001] [11001] +

Crossover operation [11101] X [01100] [11100] + [01101] [01011] X [01010] [10001] [11001] +

![Mutation Operation ©[01011] [01010] ©[11111] [11011] Mutation Operation ©[01011] [01010] ©[11111] [11011]](https://present5.com/presentation/ba64483ba5b9852002e2db4febb85864/image-67.jpg) Mutation Operation ©[01011] [01010] ©[11111] [11011]

Mutation Operation ©[01011] [01010] ©[11111] [11011]

![New Population [11100] [01010] [11001] [01111] [11011] [01000] [00001] [11110] [01101] New Population [11100] [01010] [11001] [01111] [11011] [01000] [00001] [11110] [01101]](https://present5.com/presentation/ba64483ba5b9852002e2db4febb85864/image-68.jpg) New Population [11100] [01010] [11001] [01111] [11011] [01000] [00001] [11110] [01101]

New Population [11100] [01010] [11001] [01111] [11011] [01000] [00001] [11110] [01101]

GA Application ©Searching maximum of function ©Search for value of x to y=f(x) be maximum. ©X play the role of genes, binary code of x value in 8 bits gene (chromosome) ©Y the role of fitness

GA Application ©Searching maximum of function ©Search for value of x to y=f(x) be maximum. ©X play the role of genes, binary code of x value in 8 bits gene (chromosome) ©Y the role of fitness

GA Application ©Given the digits 0 through 9 and operators +, -, *, and /. ©Find sequence that represent given target number. ©E. g. Given number 23, the sequence +6+5*4/2+1 ©If number is 75. 5, then 5/2+9*7 -5

GA Application ©Given the digits 0 through 9 and operators +, -, *, and /. ©Find sequence that represent given target number. ©E. g. Given number 23, the sequence +6+5*4/2+1 ©If number is 75. 5, then 5/2+9*7 -5

GA Application ©Four bits are required to represent char © 0: 0000, ……. 9: 1001, +: 1010, -: 1011, *: 1100, /: 1101 ©Gene: 0110101001011100010011010010 10100001 is 6+5*4/2+1 = 23

GA Application ©Four bits are required to represent char © 0: 0000, ……. 9: 1001, +: 1010, -: 1011, *: 1100, /: 1101 ©Gene: 0110101001011100010011010010 10100001 is 6+5*4/2+1 = 23

TSP Application © Use a genetic algorithm to solve the traveling salesman problem we could begin by creating a population of candidate solutions © We need to define mutation, crossover, and selection methods to aid in evolving a solution from this population

TSP Application © Use a genetic algorithm to solve the traveling salesman problem we could begin by creating a population of candidate solutions © We need to define mutation, crossover, and selection methods to aid in evolving a solution from this population

Related Technologies ©Genetic Programming ©Existing programs are combined to breed new programs ©Artificial Life ©Using cellular automata to simulate population growth

Related Technologies ©Genetic Programming ©Existing programs are combined to breed new programs ©Artificial Life ©Using cellular automata to simulate population growth

Automatic Programming ©Getting software to write software ©Brilliant idea, but turned out to be very difficult ©Intuitively, should be easier than other tasks ©The automatic programming community ©Didn’t deliver on early promises ©So people began to avoid this area ©And “Automated Programming” became words of warning

Automatic Programming ©Getting software to write software ©Brilliant idea, but turned out to be very difficult ©Intuitively, should be easier than other tasks ©The automatic programming community ©Didn’t deliver on early promises ©So people began to avoid this area ©And “Automated Programming” became words of warning

Questions to be Answered © How is the programming task specified? © How are the ingredients chosen/specified/ © How do we represent programs? © Which enables reproduction, evaluation, etc. © How are individuals chosen for reproduction? © How are offspring produced? © How are termination conditions specified? © We will start by looking at the representation of programs, as this is required to answer many questions

Questions to be Answered © How is the programming task specified? © How are the ingredients chosen/specified/ © How do we represent programs? © Which enables reproduction, evaluation, etc. © How are individuals chosen for reproduction? © How are offspring produced? © How are termination conditions specified? © We will start by looking at the representation of programs, as this is required to answer many questions

Genetic Programming ©It same as GA but the gene is tree ©Each tree = computer program ©It contain a population of computer programs to solve the problem specifically

Genetic Programming ©It same as GA but the gene is tree ©Each tree = computer program ©It contain a population of computer programs to solve the problem specifically

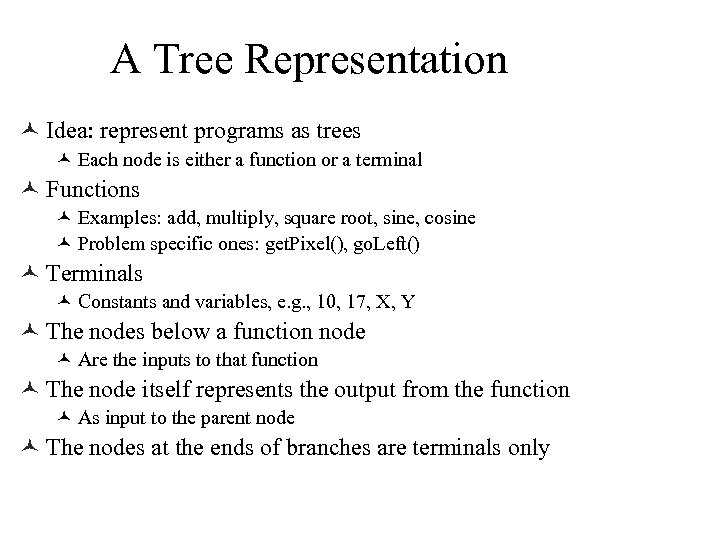

A Tree Representation © Idea: represent programs as trees © Each node is either a function or a terminal © Functions © Examples: add, multiply, square root, sine, cosine © Problem specific ones: get. Pixel(), go. Left() © Terminals © Constants and variables, e. g. , 10, 17, X, Y © The nodes below a function node © Are the inputs to that function © The node itself represents the output from the function © As input to the parent node © The nodes at the ends of branches are terminals only

A Tree Representation © Idea: represent programs as trees © Each node is either a function or a terminal © Functions © Examples: add, multiply, square root, sine, cosine © Problem specific ones: get. Pixel(), go. Left() © Terminals © Constants and variables, e. g. , 10, 17, X, Y © The nodes below a function node © Are the inputs to that function © The node itself represents the output from the function © As input to the parent node © The nodes at the ends of branches are terminals only

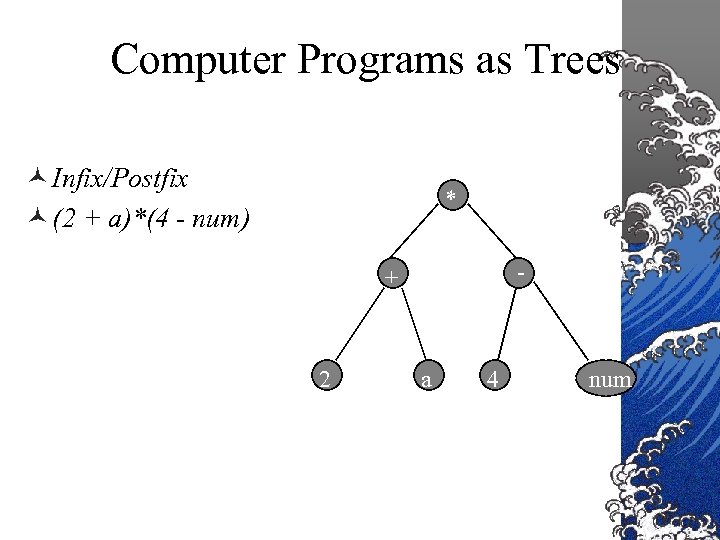

Computer Programs as Trees © Infix/Postfix © (2 + a)*(4 - num) * - + 2 a 4 num

Computer Programs as Trees © Infix/Postfix © (2 + a)*(4 - num) * - + 2 a 4 num

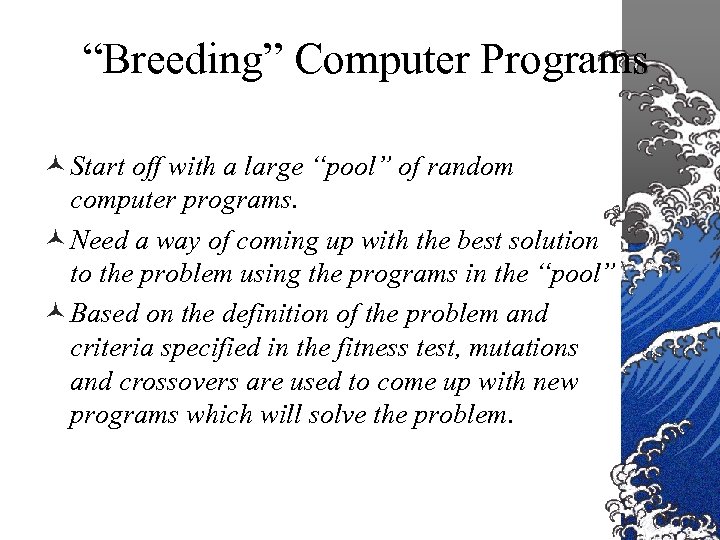

“Breeding” Computer Programs © Start off with a large “pool” of random computer programs. © Need a way of coming up with the best solution to the problem using the programs in the “pool” © Based on the definition of the problem and criteria specified in the fitness test, mutations and crossovers are used to come up with new programs which will solve the problem.

“Breeding” Computer Programs © Start off with a large “pool” of random computer programs. © Need a way of coming up with the best solution to the problem using the programs in the “pool” © Based on the definition of the problem and criteria specified in the fitness test, mutations and crossovers are used to come up with new programs which will solve the problem.

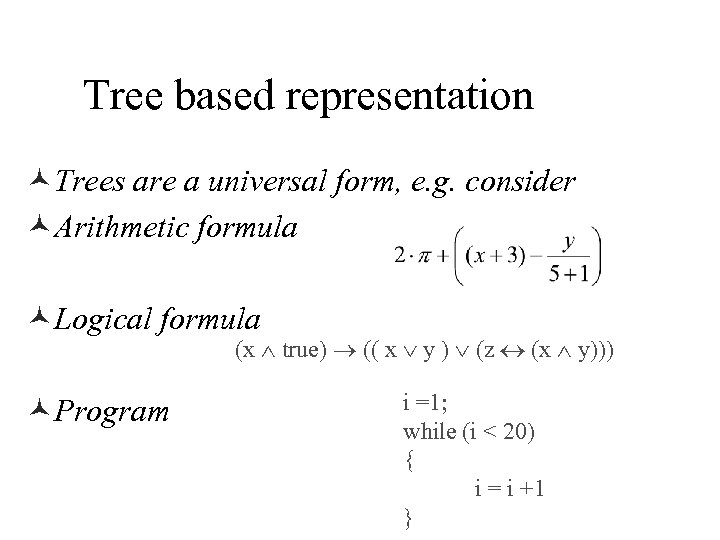

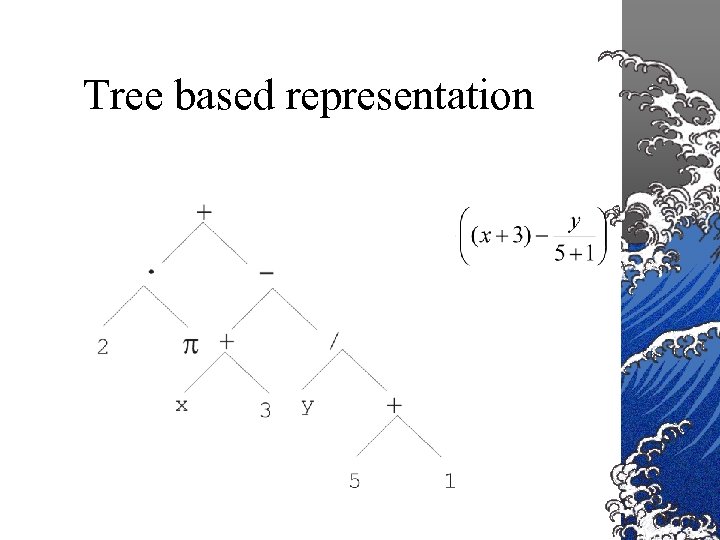

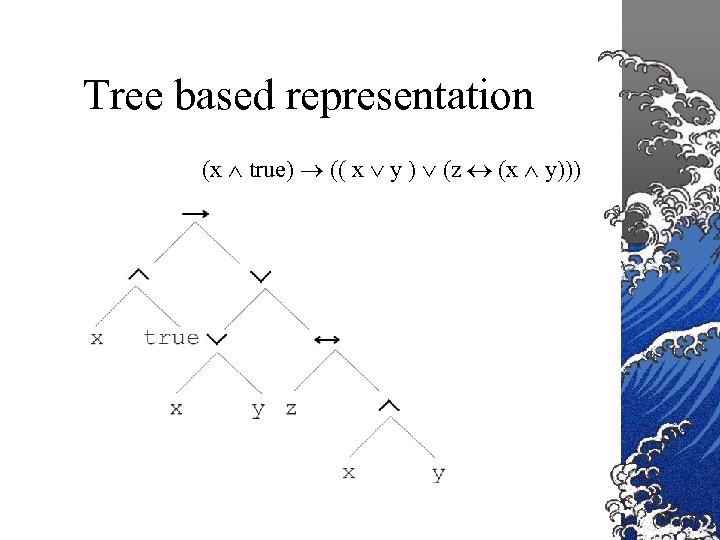

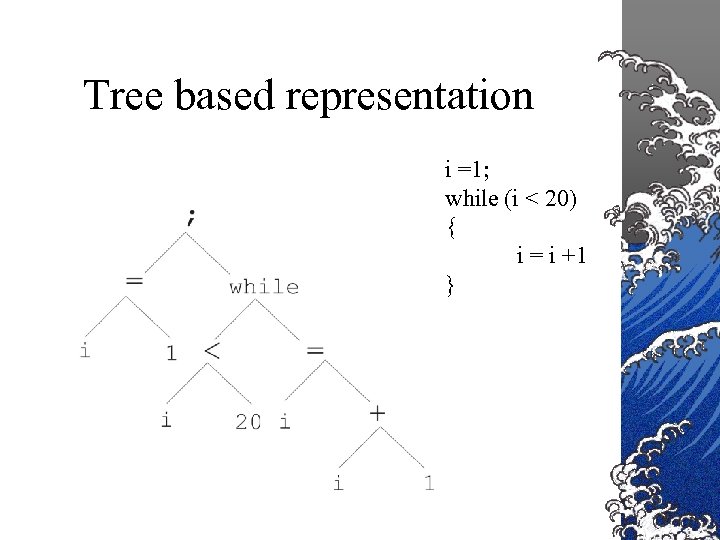

Tree based representation ©Trees are a universal form, e. g. consider ©Arithmetic formula ©Logical formula (x true) (( x y ) (z (x y))) ©Program i =1; while (i < 20) { i = i +1 }

Tree based representation ©Trees are a universal form, e. g. consider ©Arithmetic formula ©Logical formula (x true) (( x y ) (z (x y))) ©Program i =1; while (i < 20) { i = i +1 }

Tree based representation

Tree based representation

Tree based representation (x true) (( x y ) (z (x y)))

Tree based representation (x true) (( x y ) (z (x y)))

Tree based representation i =1; while (i < 20) { i = i +1 }

Tree based representation i =1; while (i < 20) { i = i +1 }

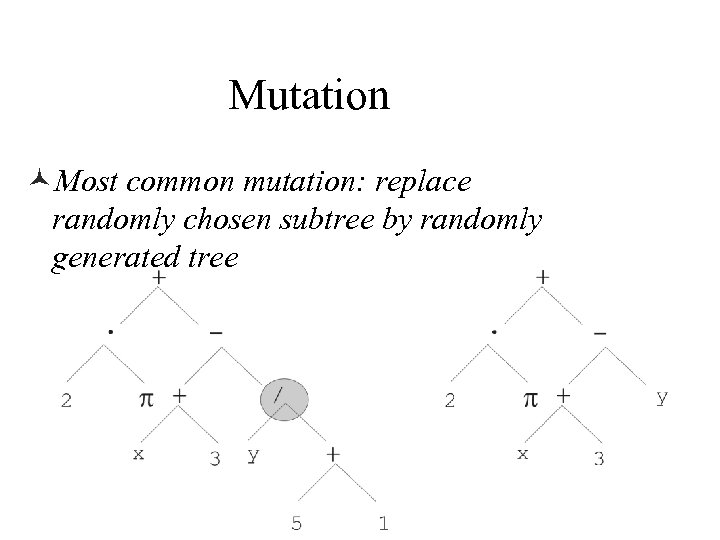

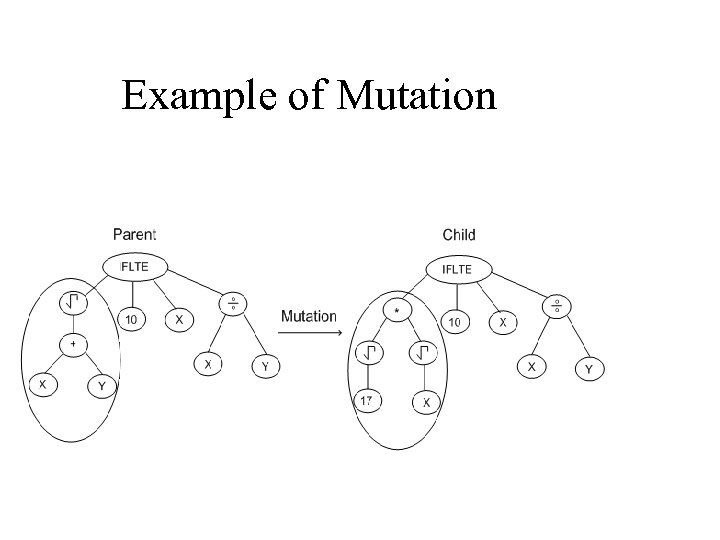

Mutation ©Most common mutation: replace randomly chosen subtree by randomly generated tree

Mutation ©Most common mutation: replace randomly chosen subtree by randomly generated tree

Example of Mutation

Example of Mutation

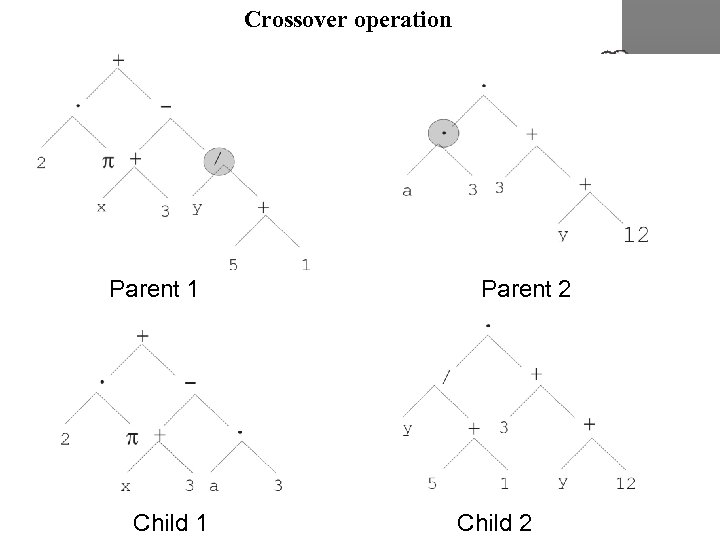

Crossover operation Parent 1 Child 1 Parent 2 Child 2

Crossover operation Parent 1 Child 1 Parent 2 Child 2

Application Domain #1 Evolving Electronic Circuits © John Koza ©Stanford Professor and CEO of “Genetic Programming Inc. ” ©Guru of Genetic Programming, very successful ©Made GP more mainstream by his success © Particularly fruitful area: ©Producing designs for circuit diagrams ©E. g. , antennas, amplifiers, etc. ©Functions mimic how transistors, resistors, etc. work

Application Domain #1 Evolving Electronic Circuits © John Koza ©Stanford Professor and CEO of “Genetic Programming Inc. ” ©Guru of Genetic Programming, very successful ©Made GP more mainstream by his success © Particularly fruitful area: ©Producing designs for circuit diagrams ©E. g. , antennas, amplifiers, etc. ©Functions mimic how transistors, resistors, etc. work

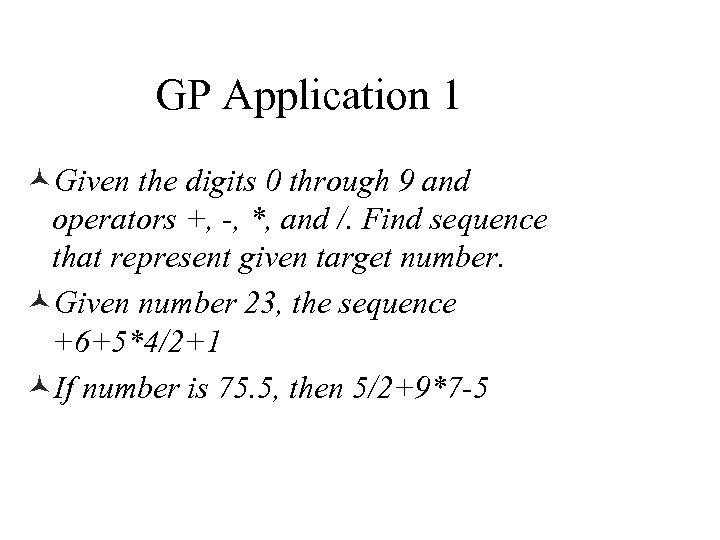

GP Application 1 ©Given the digits 0 through 9 and operators +, -, *, and /. Find sequence that represent given target number. ©Given number 23, the sequence +6+5*4/2+1 ©If number is 75. 5, then 5/2+9*7 -5

GP Application 1 ©Given the digits 0 through 9 and operators +, -, *, and /. Find sequence that represent given target number. ©Given number 23, the sequence +6+5*4/2+1 ©If number is 75. 5, then 5/2+9*7 -5

Generate Random Programs ©Generate well-formed formulas using prefix notation from variable A and a set of function symbols, for example { +, , *, %, sqrt } ©Examples: ©( + A ( * ( sqrt A ) ) ©(* A (- (* A A) (sqrt A) ) )

Generate Random Programs ©Generate well-formed formulas using prefix notation from variable A and a set of function symbols, for example { +, , *, %, sqrt } ©Examples: ©( + A ( * ( sqrt A ) ) ©(* A (- (* A A) (sqrt A) ) )

Examples of Genetic Programs © Symbolic Regression - the process of discovering both the functional form of a target function and all of its necessary coefficients, or at least an approximation to these. © Analog circuit design - Embryo circuit Initial circuit which is modified to create a new circuit according to functionality criteria.

Examples of Genetic Programs © Symbolic Regression - the process of discovering both the functional form of a target function and all of its necessary coefficients, or at least an approximation to these. © Analog circuit design - Embryo circuit Initial circuit which is modified to create a new circuit according to functionality criteria.

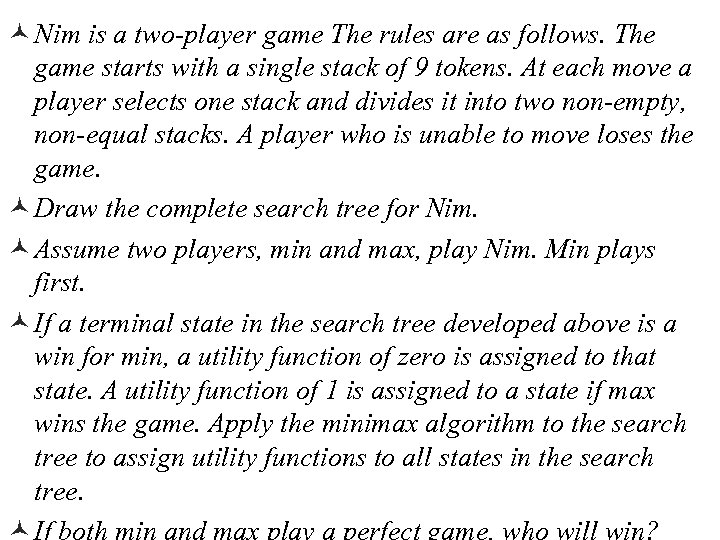

© Nim is a two-player game The rules are as follows. The game starts with a single stack of 9 tokens. At each move a player selects one stack and divides it into two non-empty, non-equal stacks. A player who is unable to move loses the game. © Draw the complete search tree for Nim. © Assume two players, min and max, play Nim. Min plays first. © If a terminal state in the search tree developed above is a win for min, a utility function of zero is assigned to that state. A utility function of 1 is assigned to a state if max wins the game. Apply the minimax algorithm to the search tree to assign utility functions to all states in the search tree. © If both min and max play a perfect game, who will win?

© Nim is a two-player game The rules are as follows. The game starts with a single stack of 9 tokens. At each move a player selects one stack and divides it into two non-empty, non-equal stacks. A player who is unable to move loses the game. © Draw the complete search tree for Nim. © Assume two players, min and max, play Nim. Min plays first. © If a terminal state in the search tree developed above is a win for min, a utility function of zero is assigned to that state. A utility function of 1 is assigned to a state if max wins the game. Apply the minimax algorithm to the search tree to assign utility functions to all states in the search tree. © If both min and max play a perfect game, who will win?

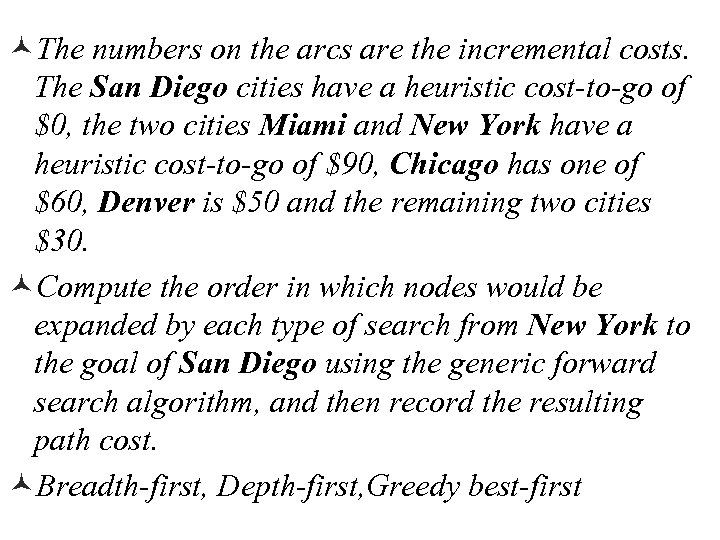

©The numbers on the arcs are the incremental costs. The San Diego cities have a heuristic cost-to-go of $0, the two cities Miami and New York have a heuristic cost-to-go of $90, Chicago has one of $60, Denver is $50 and the remaining two cities $30. ©Compute the order in which nodes would be expanded by each type of search from New York to the goal of San Diego using the generic forward search algorithm, and then record the resulting path cost. ©Breadth-first, Depth-first, Greedy best-first

©The numbers on the arcs are the incremental costs. The San Diego cities have a heuristic cost-to-go of $0, the two cities Miami and New York have a heuristic cost-to-go of $90, Chicago has one of $60, Denver is $50 and the remaining two cities $30. ©Compute the order in which nodes would be expanded by each type of search from New York to the goal of San Diego using the generic forward search algorithm, and then record the resulting path cost. ©Breadth-first, Depth-first, Greedy best-first