
- Number of slides: 74

Artificial Intelligence and Applications
School of Computer Science and Engineering
Bai Xiao


• Xiao Bai (Bai is the family name)
• Professor, Ph.D. Supervisor
• B.S., Computer Science, Beihang University (BUAA)
• Ph.D., Computer Science, University of York, U.K.
• Research interests: Computer Vision, Pattern Recognition, Image Processing, Remote Sensing Image Analysis, Machine Learning


Email: buaa_ai_2016@163.com
FTP: ftp://219.224.169.249/ (username: student, password: student)


• Artificial Intelligence is a broad area focused on how to give machines intelligence. It is also an active, modern research area.
• We will mainly study machine learning, especially learning algorithms and their applications: machine learning, pattern recognition, vision-based learning, and the corresponding applications.


• This is a research-based course, not an exam-based one.
• Assessment: assignments (30%), attendance (30%), final report (40%).
• Assignments: reading research documents (i.e., research papers and books), presentations, coding assignments, applications, etc.


• Books:
– R. Duda, P. Hart & D. Stork, Pattern Classification (2nd ed.), Wiley (required)
– T. Mitchell, Machine Learning, McGraw-Hill (recommended)
• Papers: canonical papers and recent papers in this area.
• Reports: talks by experts or researchers about their recent research progress.


Introduction to Machine Learning
Bai Xiao


A Few Quotes
• “A breakthrough in machine learning would be worth ten Microsofts” (Bill Gates, Chairman, Microsoft)
• “Machine learning is the next Internet” (Tony Tether, Director, DARPA)
• “Machine learning is the hot new thing” (John Hennessy, President, Stanford)
• “Web rankings today are mostly a matter of machine learning” (Prabhakar Raghavan, Dir. Research, Yahoo)
• “Machine learning is going to result in a real revolution” (Greg Papadopoulos, CTO, Sun)
• “Machine learning is today’s discontinuity” (Jerry Yang, CEO, Yahoo)


What is “Machine Learning”?
• Machine learning is a subfield of computer science that evolved from the study of pattern recognition and computational learning theory in artificial intelligence.
• Machine learning explores the study and construction of algorithms that can learn from and make predictions on data.
• Such algorithms operate by building a model from example inputs in order to make data-driven predictions or decisions, rather than following strictly static program instructions.


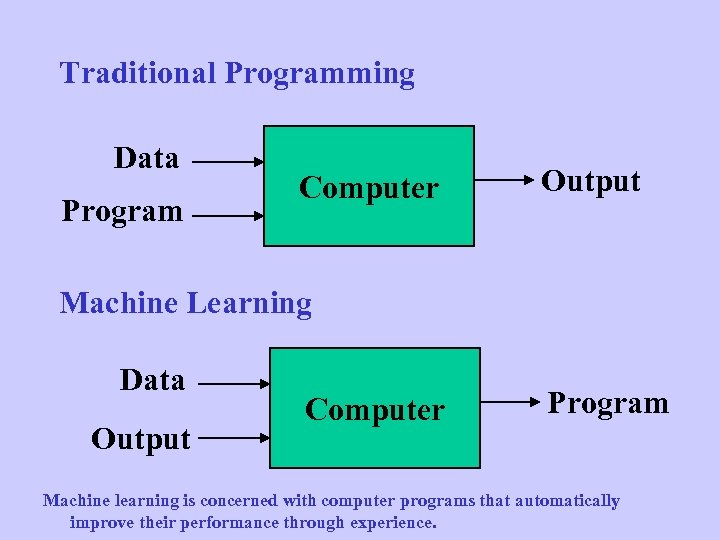

Traditional Programming: Data + Program → Computer → Output
Machine Learning: Data + Output → Computer → Program
Machine learning is concerned with computer programs that automatically improve their performance through experience.
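As a minimal sketch of this contrast (the toy data, the threshold rule, and all function names are hypothetical, chosen only for illustration), `handwritten_rule` is a rule a programmer writes by hand, while `learn_threshold` produces a comparable rule from example inputs together with their desired outputs:

```python
def handwritten_rule(length_cm: float) -> str:
    """Traditional programming: a person chooses the cutoff (10 cm) by hand."""
    return "big" if length_cm >= 10.0 else "small"


def learn_threshold(xs: list[float], ys: list[str]) -> float:
    """Machine learning (toy version): pick the cutoff that makes the fewest
    errors on the training pairs for the rule 'big if x >= cutoff'."""
    points = sorted(xs)
    # Candidate cutoffs: below all points, midpoints between neighbours, above all points.
    candidates = [points[0] - 1.0]
    candidates += [(a + b) / 2 for a, b in zip(points, points[1:])]
    candidates.append(points[-1] + 1.0)

    def errors(cutoff: float) -> int:
        return sum(("big" if x >= cutoff else "small") != y for x, y in zip(xs, ys))

    return min(candidates, key=errors)


# Hypothetical training data: inputs together with the desired outputs.
lengths = [3.0, 4.5, 6.0, 12.0, 15.0, 18.0]
labels = ["small", "small", "small", "big", "big", "big"]
cutoff = learn_threshold(lengths, labels)


def learned_rule(length_cm: float) -> str:
    """The 'program' produced from data + output."""
    return "big" if length_cm >= cutoff else "small"


print(cutoff, learned_rule(14.0), handwritten_rule(14.0))  # 9.0 big big
```

The learned cutoff (9.0) differs from the hand-chosen one (10.0), but both rules separate these training examples correctly.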


Why Machine Learning?
• Develop systems that can automatically adapt and customize themselves to individual users
– Personalized news or mail filters
• Discover new knowledge from large databases
– Market basket analysis
• Mimic humans and take over certain monotonous tasks that still require some intelligence
– Like recognizing handwritten characters
• Develop systems that are too difficult or expensive to construct manually because they require specific, detailed skills or knowledge tuned to a specific task
• More reasons for you to discover


Why Now?
• A flood of available data of all kinds (especially since the advent of the Internet)
• Increasing computational power
• Steady progress in algorithms and theory developed by researchers
• Increasing support from industry


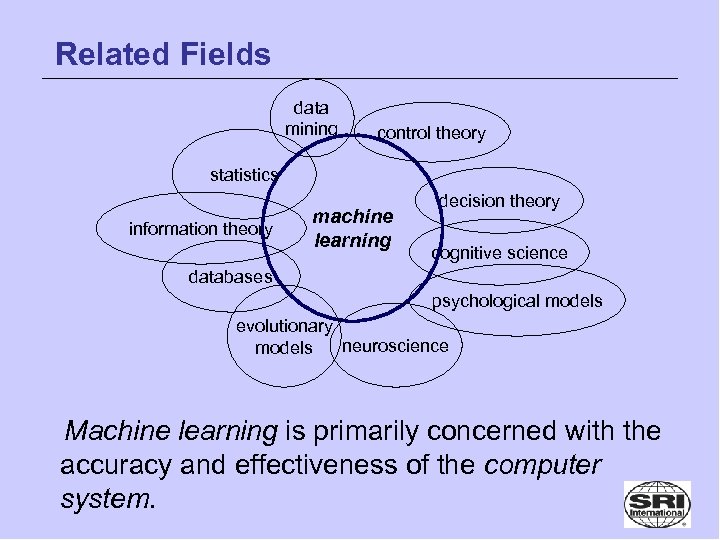

Related Fields
Machine learning draws on and overlaps with data mining, control theory, statistics, information theory, decision theory, cognitive science, databases, psychological models, evolutionary models, and neuroscience.
Machine learning is primarily concerned with the accuracy and effectiveness of the computer system.


Terminology
• Features
– The distinct traits that can be used to describe each item in a quantitative manner
• Samples
– A sample is an item to process (e.g., classify). It can be a document, a picture, a sound, a video, a row in a database or CSV file, or whatever you can describe with a fixed set of quantitative traits.
• Feature vector
– An n-dimensional vector of numerical features that represents some object
• Feature extraction
– Preparation of the feature vector
– Transforms the data from a high-dimensional space to a space of fewer dimensions
• Training set
– A set of data used to discover potentially predictive relationships
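A small sketch of these terms, assuming hypothetical fruit samples with one categorical trait (color) and two numeric traits: each sample becomes a fixed-length feature vector, with the color encoded as one indicator per possible value. That encoding is a common choice, not one prescribed by the slides, and the names are illustrative.

```python
# Fixed, ordered feature layout shared by every sample (hypothetical).
COLORS = ["red", "green"]


def to_feature_vector(sample: dict) -> list[float]:
    """Describe a sample by n = 4 numbers: one indicator per color,
    then its diameter and weight."""
    color_part = [1.0 if sample["color"] == c else 0.0 for c in COLORS]
    return color_part + [float(sample["diameter_cm"]), float(sample["weight_g"])]


# Hypothetical samples, e.g. rows read from a CSV file.
samples = [
    {"color": "red", "diameter_cm": 7.5, "weight_g": 150},
    {"color": "green", "diameter_cm": 1.5, "weight_g": 5},
]
vectors = [to_feature_vector(s) for s in samples]
print(vectors)  # [[1.0, 0.0, 7.5, 150.0], [0.0, 1.0, 1.5, 5.0]]
```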


Sample Applications
• Web search
• Computational biology
• Finance
• E-commerce
• Space exploration
• Robotics
• Information extraction
• Social networks
• Debugging
• [Your favorite area]


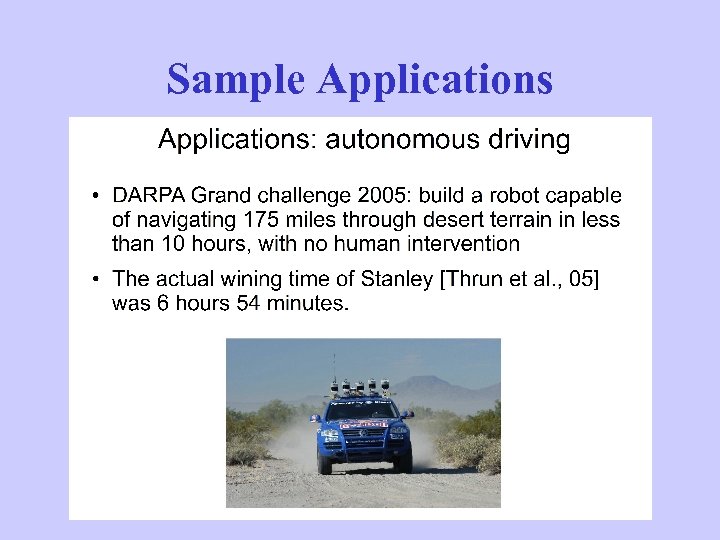

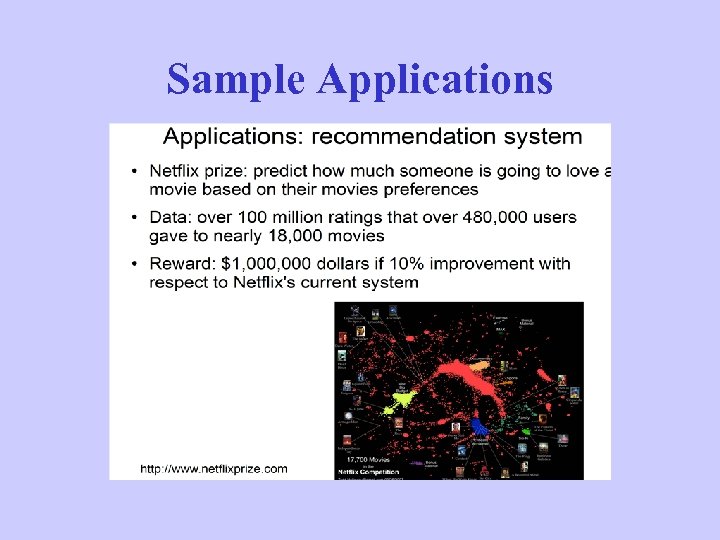

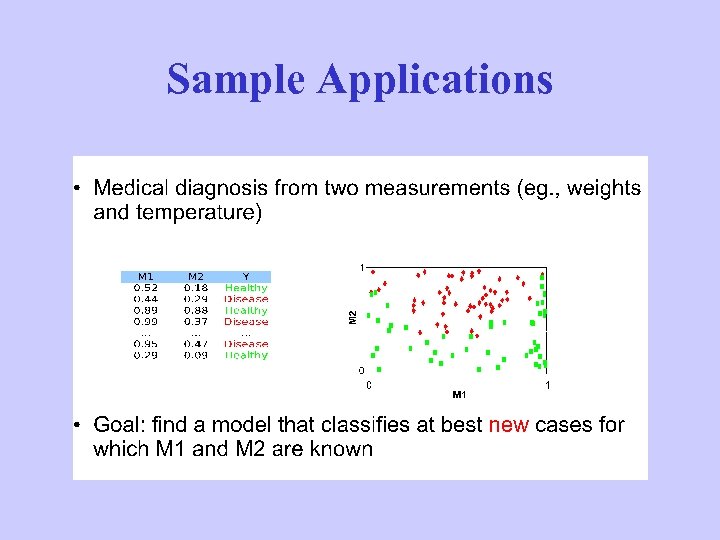

Sample Applications


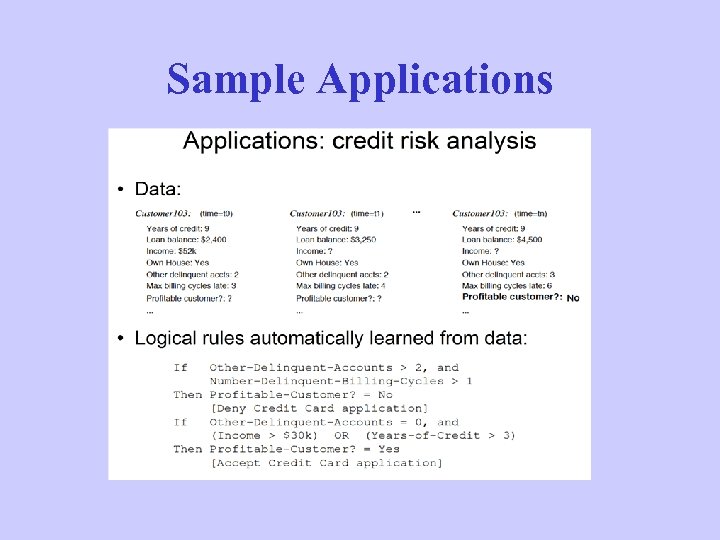

Sample Applications


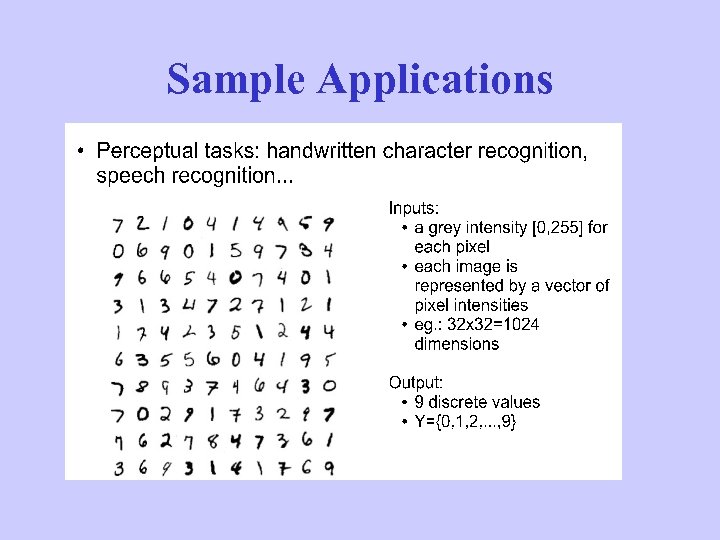

Sample Applications


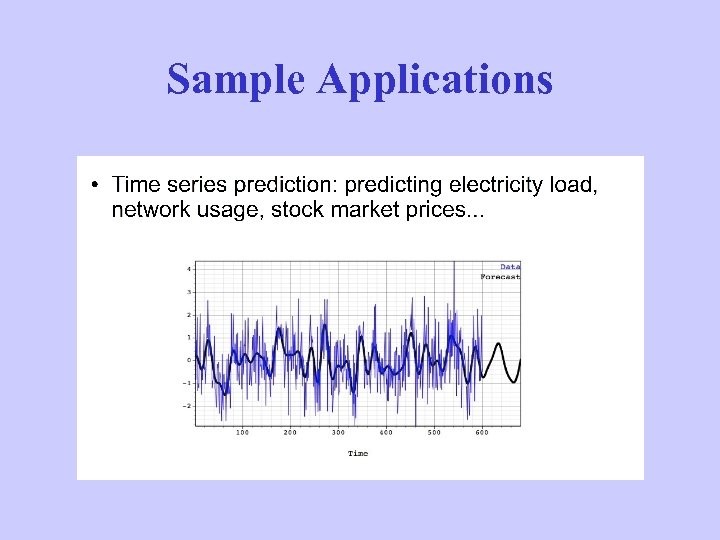

Sample Applications


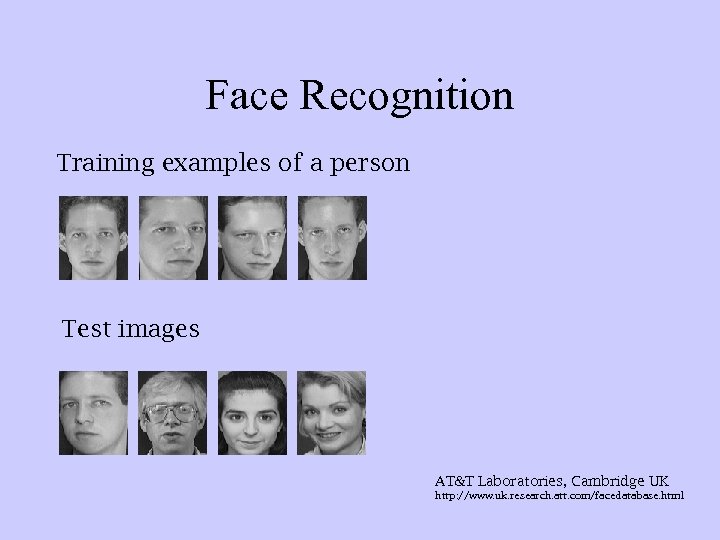

Face Recognition
Training examples of a person; test images
AT&T Laboratories, Cambridge UK: http://www.uk.research.att.com/facedatabase.html


• Machine Learning Algorithms: Decision Tree, Concept Learning, Neural Network, Support Vector Machine, Clustering, Bayesian Learning, Reinforcement Learning, Dictionary-based Learning, Semi-supervised Learning
• Applications: Image Classification, Image Recognition, Image Retrieval
• Interesting Topics: some related ongoing research topics


Types of Learning
• Supervised (inductive) learning
– Training data includes desired outputs
• Unsupervised learning
– Training data does not include desired outputs
• Semi-supervised learning
– Training data includes a few desired outputs
• Reinforcement learning
– Rewards from a sequence of actions


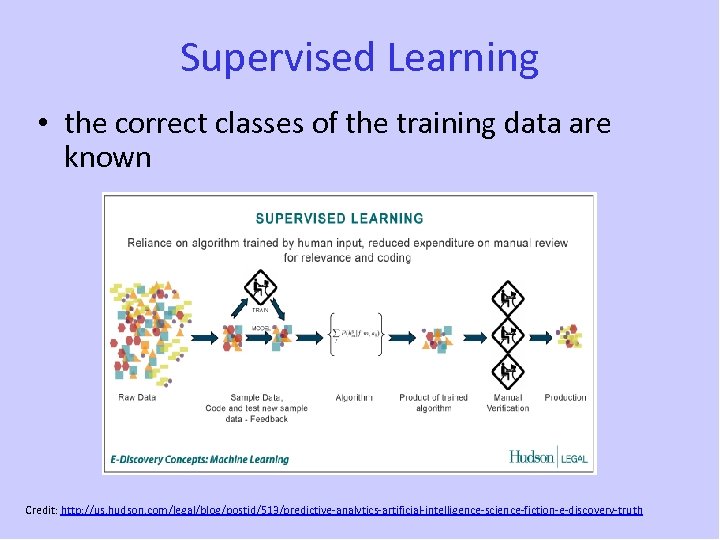

Supervised Learning
• The correct classes of the training data are known.
Credit: http://us.hudson.com/legal/blog/postid/513/predictive-analytics-artificial-intelligence-science-fiction-e-discovery-truth


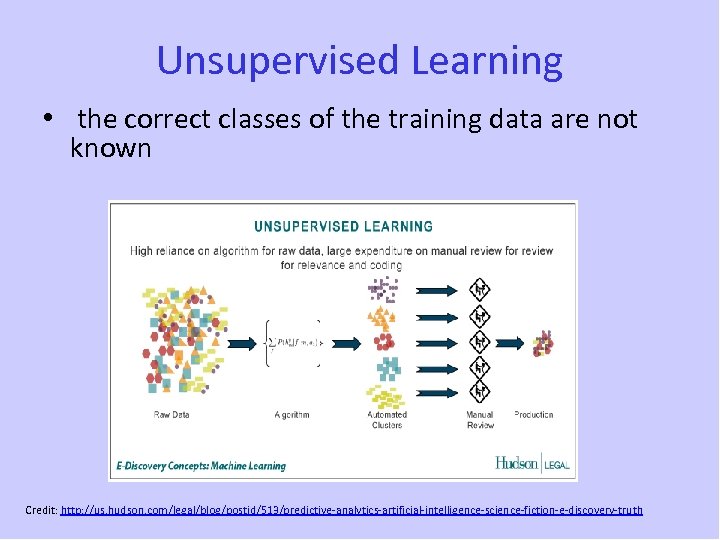

Unsupervised Learning
• The correct classes of the training data are not known.
Credit: http://us.hudson.com/legal/blog/postid/513/predictive-analytics-artificial-intelligence-science-fiction-e-discovery-truth


Supervised vs Unsupervised
• In supervised learning, we are given a data set and already know what the correct output should look like, on the assumption that there is a relationship between the input and the output.
• Unsupervised learning, on the other hand, allows us to approach problems with little or no idea of what our results should look like. We can derive structure from data where we don't necessarily know the effect of the variables.


• Suppose you have a basket filled with fresh fruit, and your task is to put fruits of the same type together.
• Suppose the fruits are apples, bananas, cherries, and grapes.
• From your previous work you already know the shape of each fruit, so it is easy to put fruits of the same type together.
• Here your previous work plays the role of the training data.
• You have already learned from the training data because it contains a response variable that tells you, for example, that a fruit with such-and-such features is a grape, and likewise for every other fruit.
• This type of learning is called supervised learning.
• This type of problem is a classification problem.
• Because you have already learned, you can do the job confidently.
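The supervised story can be sketched in a few lines, assuming each fruit is described only by (color, size), using the values from the unsupervised example on the next slide; the training list and the function names are illustrative. "Training" here just memorizes the most common label seen for each feature combination, the simplest possible classifier rather than any specific algorithm from the course.

```python
from collections import Counter, defaultdict

# Hypothetical labeled training data: (color, size) features plus the known fruit name.
training_data = [
    (("red", "big"), "apple"),
    (("red", "small"), "cherry"),
    (("green", "big"), "banana"),
    (("green", "small"), "grape"),
    (("red", "big"), "apple"),
]


def train(data):
    """Remember the most common label seen for each feature combination."""
    seen = defaultdict(Counter)
    for features, label in data:
        seen[features][label] += 1
    return {features: counts.most_common(1)[0][0] for features, counts in seen.items()}


def classify(model, features):
    """Predict the label of a new fruit; None if the combination was never seen."""
    return model.get(features)


model = train(training_data)
print(classify(model, ("red", "small")))  # cherry
```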


• Suppose again you have a basket filled with fresh fruit, and your task is to put fruits of the same type together.
• This time you know nothing about these fruits; you are seeing them for the first time. How will you group them?
• First, pick a fruit and choose one physical characteristic of it, say its color.
• Then arrange the fruits by color. The groups will look something like this:
– RED COLOR GROUP: apples & cherries
– GREEN COLOR GROUP: bananas & grapes
• Now take another physical characteristic, say size. The groups now look something like this:
– RED COLOR AND BIG SIZE: apples
– RED COLOR AND SMALL SIZE: cherries
– GREEN COLOR AND BIG SIZE: bananas
– GREEN COLOR AND SMALL SIZE: grapes
• Job done, happy ending.
• Here you did not learn anything beforehand: there is no training data and no response variable.
• This type of learning is known as unsupervised learning.
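The grouping procedure above can be sketched as follows, assuming each unlabeled fruit is recorded only by its color and size (the observations are hypothetical). This simply groups items with identical trait values, exactly as the slide describes; it is not a general clustering algorithm such as k-means.

```python
from collections import defaultdict

# Hypothetical unlabeled observations: physical traits only, no fruit names.
fruits = [
    {"color": "red", "size": "big"},
    {"color": "green", "size": "small"},
    {"color": "red", "size": "small"},
    {"color": "green", "size": "big"},
    {"color": "red", "size": "big"},
    {"color": "green", "size": "small"},
]


def group_by(items, keys):
    """Group items that share the same values for the chosen traits."""
    groups = defaultdict(list)
    for item in items:
        groups[tuple(item[k] for k in keys)].append(item)
    return dict(groups)


by_color = group_by(fruits, ["color"])                     # first pass: color only
by_color_and_size = group_by(fruits, ["color", "size"])    # second pass: color + size
for key, members in by_color_and_size.items():
    print(key, len(members))
```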


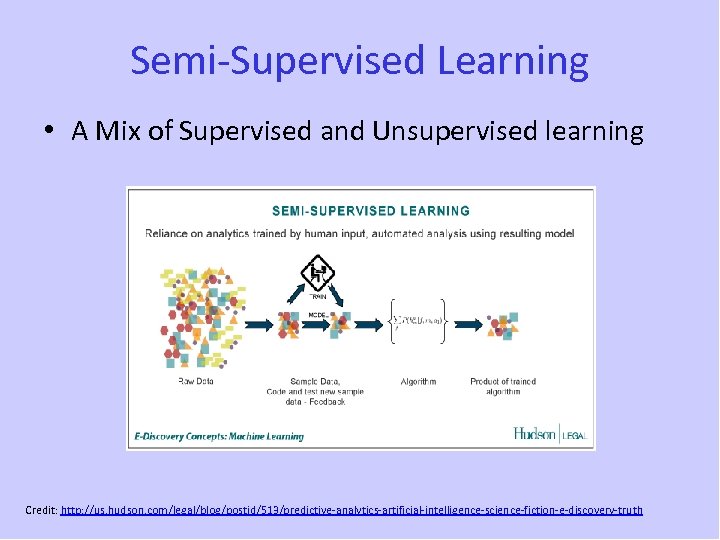

Semi-Supervised Learning
• A mix of supervised and unsupervised learning
Credit: http://us.hudson.com/legal/blog/postid/513/predictive-analytics-artificial-intelligence-science-fiction-e-discovery-truth


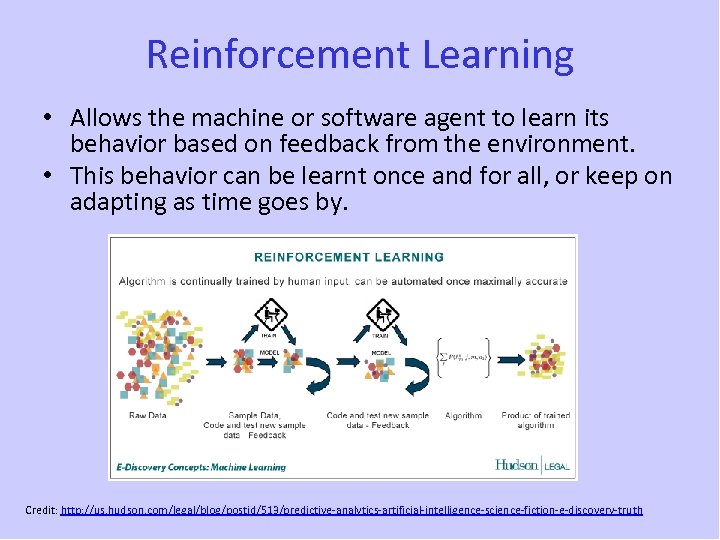

Reinforcement Learning
• Allows the machine or software agent to learn its behavior based on feedback from the environment.
• This behavior can be learned once and for all, or keep adapting as time goes by.
Credit: http://us.hudson.com/legal/blog/postid/513/predictive-analytics-artificial-intelligence-science-fiction-e-discovery-truth


What We’ll Cover
• Supervised learning
– Decision tree induction
– Concept learning
– Instance-based learning
– Bayesian learning
– Neural networks
– Support vector machines
– Learning theory
– Nearest neighbor algorithm
• Unsupervised learning
– Clustering
– K-means
– Dimensionality reduction


Machine Learning: Decision Tree
Bai Xiao


• Examples of decision trees
• Problem definition
• ID3 algorithm


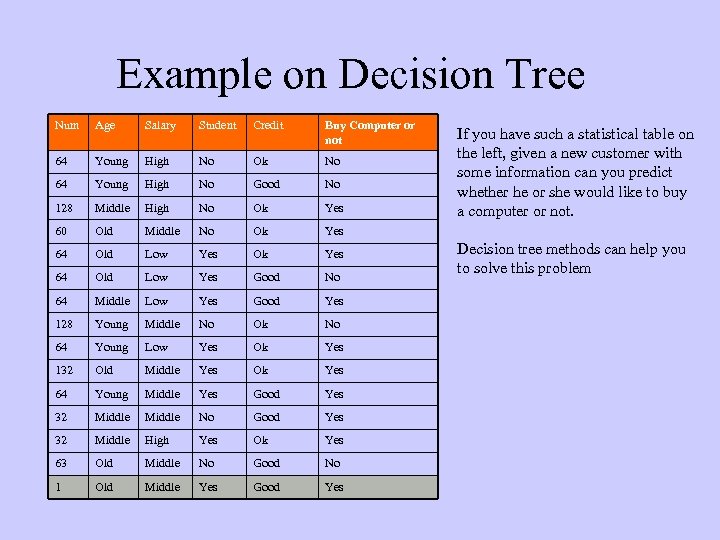

Example of a Decision Tree

| Num (count) | Age | Salary | Student | Credit | Buy computer? |
|---|---|---|---|---|---|
| 64 | Young | High | No | Ok | No |
| 64 | Young | High | No | Good | No |
| 128 | Middle | High | No | Ok | Yes |
| 60 | Old | Middle | No | Ok | Yes |
| 64 | Old | Low | Yes | Good | No |
| 64 | Middle | Low | Yes | Good | Yes |
| 128 | Young | Middle | No | Ok | No |
| 64 | Young | Low | Yes | Ok | Yes |
| 132 | Old | Middle | Yes | Ok | Yes |
| 64 | Young | Middle | Yes | Good | Yes |
| 32 | Middle |  | No | Good | Yes |
| 32 | Middle | High | Yes | Ok | Yes |
| 63 | Old | Middle | No | Good | No |
| 1 | Old | Middle | Yes | Good | Yes |

Given a statistical table like the one above and some information about a new customer, can you predict whether he or she will buy a computer? Decision tree methods can help you solve this problem.


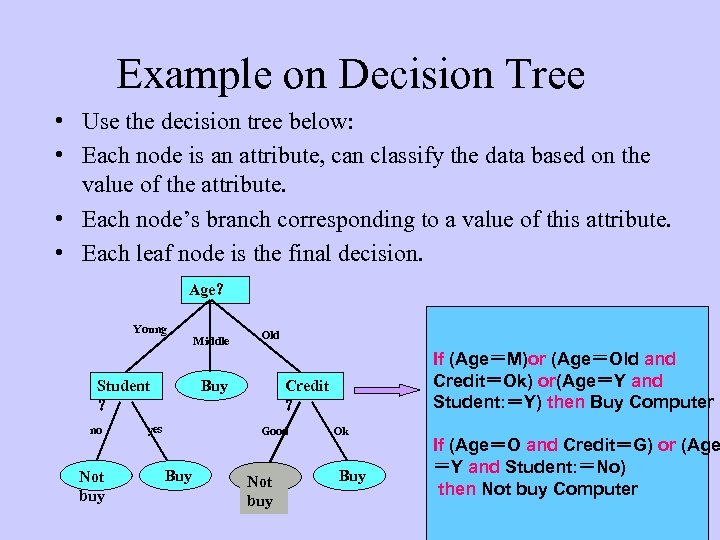

Example of a Decision Tree
• Use the decision tree below:
• Each internal node tests an attribute and splits the data according to that attribute's value.
• Each branch of a node corresponds to one value of that attribute.
• Each leaf node is the final decision.
Age?
– Young → Student?
  – no → Not buy
  – yes → Buy
– Middle → Buy
– Old → Credit?
  – Good → Not buy
  – Ok → Buy
If (Age = Middle) or (Age = Old and Credit = Ok) or (Age = Young and Student = Yes), then Buy computer.
If (Age = Old and Credit = Good) or (Age = Young and Student = No), then Not buy computer.
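To check the tree against the table, here is a minimal sketch that encodes the tree as nested tests, confirms that it agrees with the "Buy" rule on every attribute combination, and counts how many of the tabulated customers it classifies correctly. It assumes that the first column (Num) is the number of customers with each attribute combination; Salary is left out because the tree never tests it (and one Salary cell is missing from the table). Function names are illustrative.

```python
from itertools import product

# (count, age, student, credit, buys) for each row of the table.
TABLE = [
    (64, "Young", "No", "Ok", "No"),
    (64, "Young", "No", "Good", "No"),
    (128, "Middle", "No", "Ok", "Yes"),
    (60, "Old", "No", "Ok", "Yes"),
    (64, "Old", "Yes", "Good", "No"),
    (64, "Middle", "Yes", "Good", "Yes"),
    (128, "Young", "No", "Ok", "No"),
    (64, "Young", "Yes", "Ok", "Yes"),
    (132, "Old", "Yes", "Ok", "Yes"),
    (64, "Young", "Yes", "Good", "Yes"),
    (32, "Middle", "No", "Good", "Yes"),
    (32, "Middle", "Yes", "Ok", "Yes"),
    (63, "Old", "No", "Good", "No"),
    (1, "Old", "Yes", "Good", "Yes"),
]


def tree_predict(age: str, student: str, credit: str) -> str:
    """The tree: test Age first, then Student (Young) or Credit (Old)."""
    if age == "Middle":
        return "Yes"
    if age == "Young":
        return "Yes" if student == "Yes" else "No"
    return "Yes" if credit == "Ok" else "No"  # age == "Old"


def rule_predict(age: str, student: str, credit: str) -> str:
    """The 'Buy' rule read off the tree's Buy leaves."""
    buy = ((age == "Middle") or (age == "Old" and credit == "Ok")
           or (age == "Young" and student == "Yes"))
    return "Yes" if buy else "No"


# The tree and the rule agree on every possible attribute combination.
combos = product(["Young", "Middle", "Old"], ["Yes", "No"], ["Ok", "Good"])
assert all(tree_predict(*c) == rule_predict(*c) for c in combos)

total = sum(n for n, *_ in TABLE)
correct = sum(n for n, age, student, credit, label in TABLE
              if tree_predict(age, student, credit) == label)
print(f"{correct} of {total} customers classified correctly")  # 959 of 960
```

The only misclassified row is the single Old, Good-credit student who did buy (count 1).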


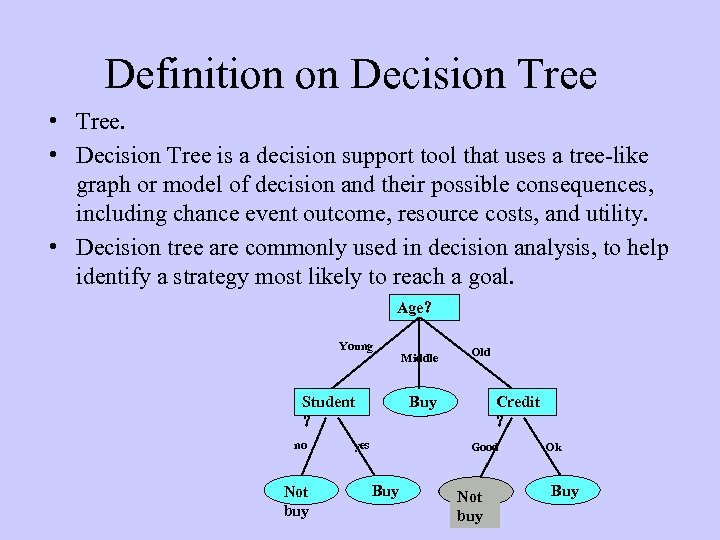

Definition of a Decision Tree
• A decision tree is a decision-support tool that uses a tree-like graph or model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility.
• Decision trees are commonly used in decision analysis to help identify the strategy most likely to reach a goal.
(Figure: the buy-computer tree, with Age? at the root, a Student? test on the Young branch, Buy on the Middle branch, and a Credit? test on the Old branch.)


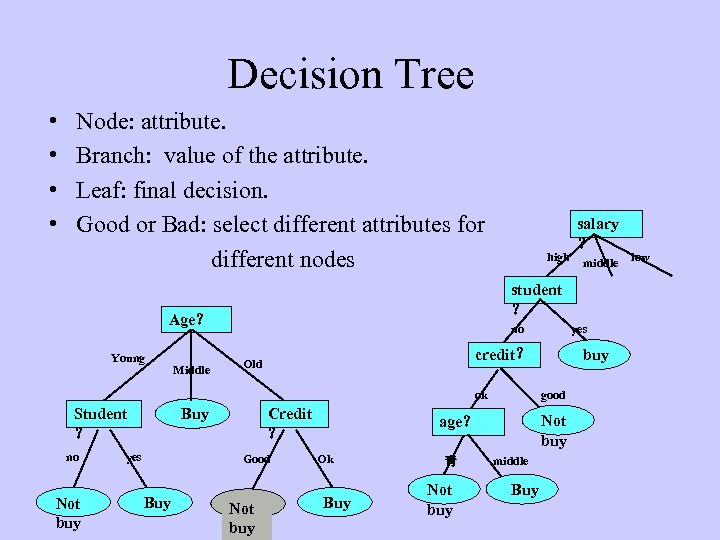

Decision Tree
• Node: an attribute.
• Branch: a value of the attribute.
• Leaf: the final decision.
• Good or bad: choosing different attributes for different nodes yields different trees, some better than others.
(Figure: two different trees for the same buy-computer data, built by choosing different attributes at the nodes; the tests involved are Age?, Student?, Credit?, and Salary?.)


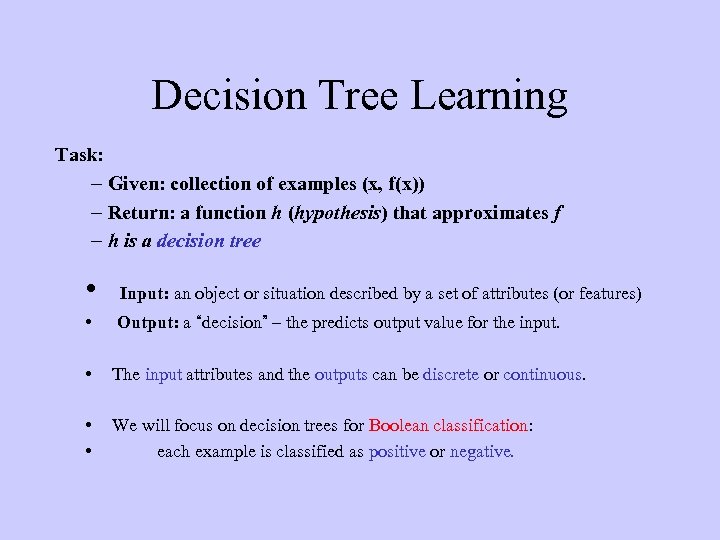

Decision Tree Learning
Task:
– Given: a collection of examples (x, f(x))
– Return: a function h (hypothesis) that approximates f
– h is a decision tree
• Input: an object or situation described by a set of attributes (or features)
• Output: a “decision”, i.e., the predicted output value for the input
• The input attributes and the outputs can be discrete or continuous.
• We will focus on decision trees for Boolean classification: each example is classified as positive or negative.


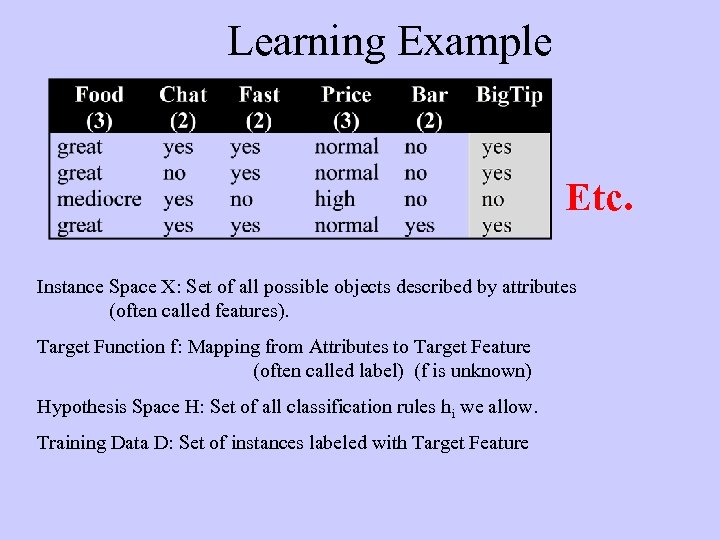

Learning Example
• Instance space X: the set of all possible objects described by attributes (often called features).
• Target function f: a mapping from the attributes to the target feature (often called the label); f is unknown.
• Hypothesis space H: the set of all classification rules h_i we allow.
• Training data D: a set of instances labeled with the target feature.


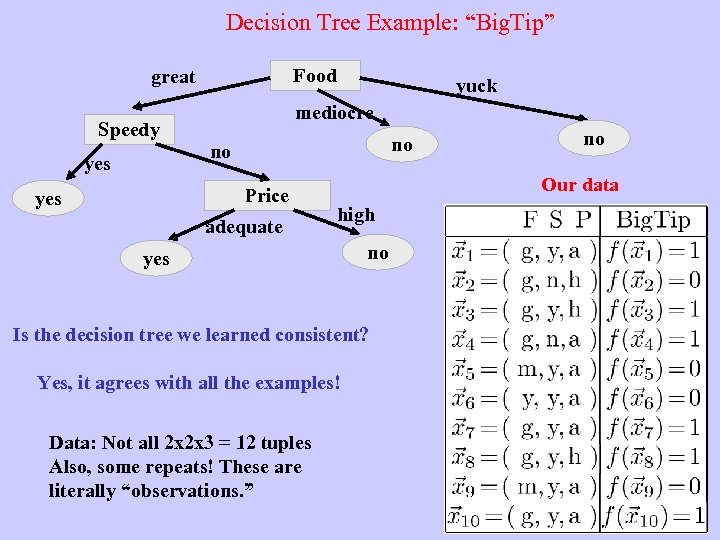

Decision Tree Example: “BigTip”
Food?
– great → Speedy?
  – yes → yes
  – no → Price?
    – adequate → yes
    – high → no
– mediocre → no
– yuck → no
Is the decision tree we learned from our data consistent? Yes, it agrees with all the examples!
Data: not all 2 × 2 × 3 = 12 tuples appear, and some appear more than once. These are literally “observations.”
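Under the reading of the tree given above (Food, then Speedy, then Price), a short sketch makes the vocabulary of the previous slide concrete: the instance space X is every combination of attribute values, and the tree is one hypothesis h that assigns a label to every instance, including combinations that were never observed. The observed data D is not reproduced in the text, so it is not used here; names are illustrative.

```python
from itertools import product

# Attribute values as they appear in the BigTip tree.
FOOD = ["great", "mediocre", "yuck"]
SPEEDY = ["yes", "no"]
PRICE = ["adequate", "high"]

# Instance space X: every possible combination of attribute values (3 x 2 x 2).
X = list(product(FOOD, SPEEDY, PRICE))


def big_tip(food: str, speedy: str, price: str) -> str:
    """Hypothesis h: the decision tree, read top-down."""
    if food != "great":
        return "no"
    if speedy == "yes":
        return "yes"
    return "yes" if price == "adequate" else "no"


predictions = {x: big_tip(*x) for x in X}
print(len(X), sum(p == "yes" for p in predictions.values()))  # 12 3
```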


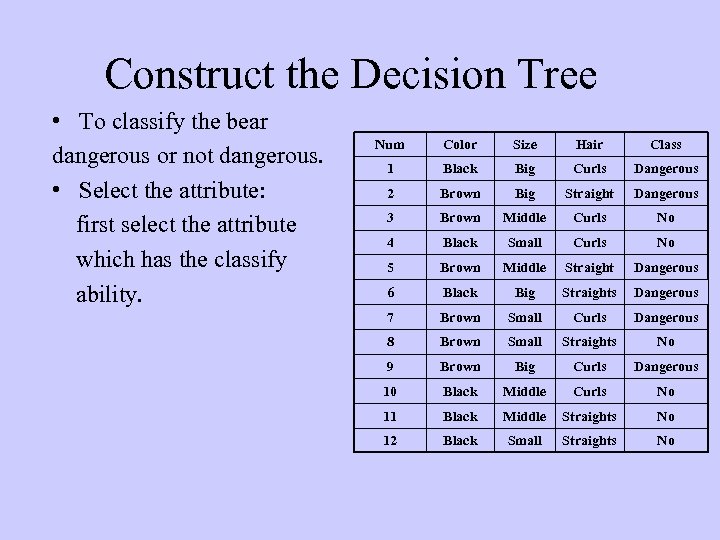

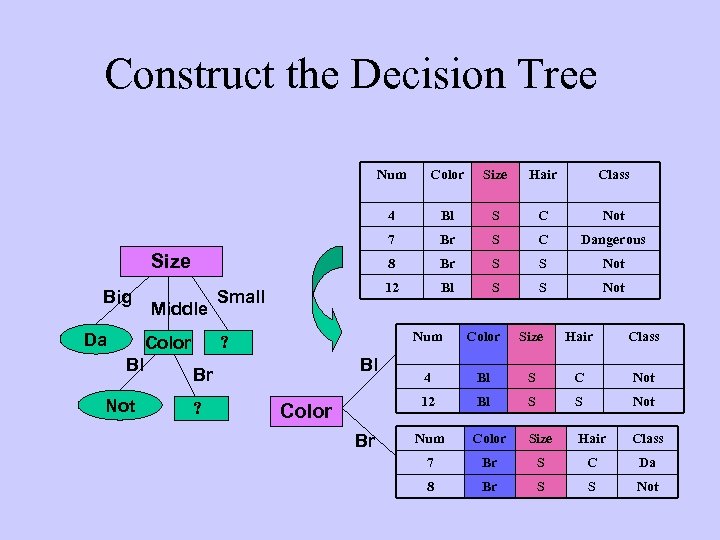

Construct the Decision Tree
• Task: classify a bear as dangerous or not dangerous.
• Select an attribute: first select the attribute with the strongest ability to separate the classes.

| Num | Color | Size | Hair | Class |
|---|---|---|---|---|
| 1 | Black | Big | Curls | Dangerous |
| 2 | Brown | Big | Straight | Dangerous |
| 3 | Brown | Middle | Curls | No |
| 4 | Black | Small | Curls | No |
| 5 | Brown | Middle | Straight | Dangerous |
| 6 | Black | Big | Straight | Dangerous |
| 7 | Brown | Small | Curls | Dangerous |
| 8 | Brown | Small | Straight | No |
| 9 | Brown | Big | Curls | Dangerous |
| 10 | Black | Middle | Curls | No |
| 11 | Black | Middle | Straight | No |
| 12 | Black | Small | Straight | No |


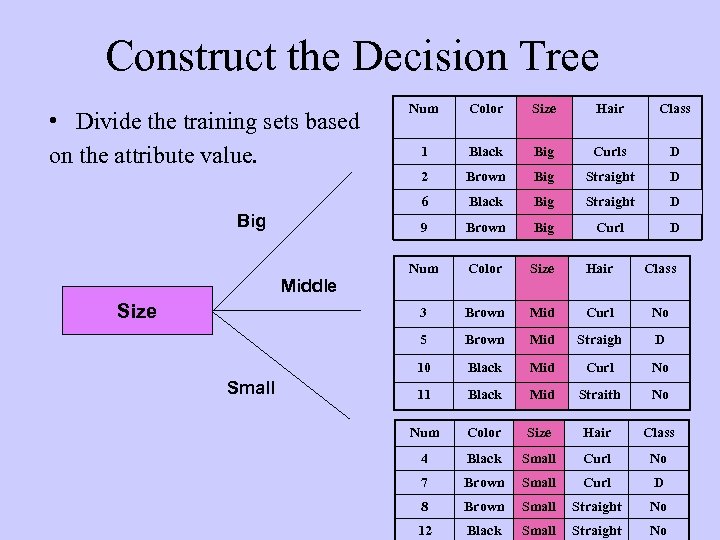

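Construct the Decision Tree
• Divide the training set based on the values of the attribute Size.

Size = Big:

| Num | Color | Size | Hair | Class |
|---|---|---|---|---|
| 1 | Black | Big | Curls | Dangerous |
| 2 | Brown | Big | Straight | Dangerous |
| 6 | Black | Big | Straight | Dangerous |
| 9 | Brown | Big | Curls | Dangerous |

Size = Middle:

| Num | Color | Size | Hair | Class |
|---|---|---|---|---|
| 3 | Brown | Middle | Curls | No |
| 5 | Brown | Middle | Straight | Dangerous |
| 10 | Black | Middle | Curls | No |
| 11 | Black | Middle | Straight | No |

Size = Small:

| Num | Color | Size | Hair | Class |
|---|---|---|---|---|
| 4 | Black | Small | Curls | No |
| 7 | Brown | Small | Curls | Dangerous |
| 8 | Brown | Small | Straight | No |
| 12 | Black | Small | Straight | No |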
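The split step can be sketched as a function that groups the rows of the bear table by the value of one attribute; the tuple layout (num, color, size, hair, class) and the names are our own choices.

```python
from collections import defaultdict

# (num, color, size, hair, class) rows of the bear table.
BEARS = [
    (1, "Black", "Big", "Curls", "Dangerous"),
    (2, "Brown", "Big", "Straight", "Dangerous"),
    (3, "Brown", "Middle", "Curls", "No"),
    (4, "Black", "Small", "Curls", "No"),
    (5, "Brown", "Middle", "Straight", "Dangerous"),
    (6, "Black", "Big", "Straight", "Dangerous"),
    (7, "Brown", "Small", "Curls", "Dangerous"),
    (8, "Brown", "Small", "Straight", "No"),
    (9, "Brown", "Big", "Curls", "Dangerous"),
    (10, "Black", "Middle", "Curls", "No"),
    (11, "Black", "Middle", "Straight", "No"),
    (12, "Black", "Small", "Straight", "No"),
]
SIZE = 2  # column index of the Size attribute


def partition(rows, column):
    """Split rows into one subset per value of the given attribute column."""
    subsets = defaultdict(list)
    for row in rows:
        subsets[row[column]].append(row)
    return dict(subsets)


parts = partition(BEARS, SIZE)
for value, subset in parts.items():
    print(value, [row[0] for row in subset], sorted({row[-1] for row in subset}))
```

Only the Big subset is pure (all Dangerous), so the Middle and Small subsets need further splitting.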


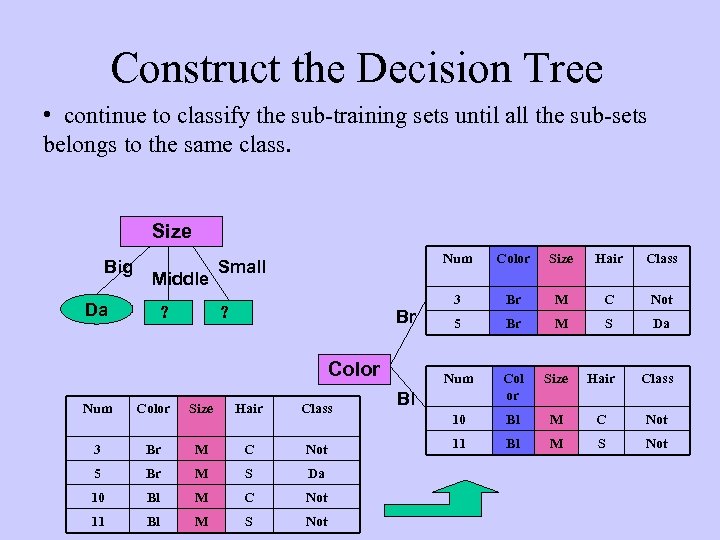

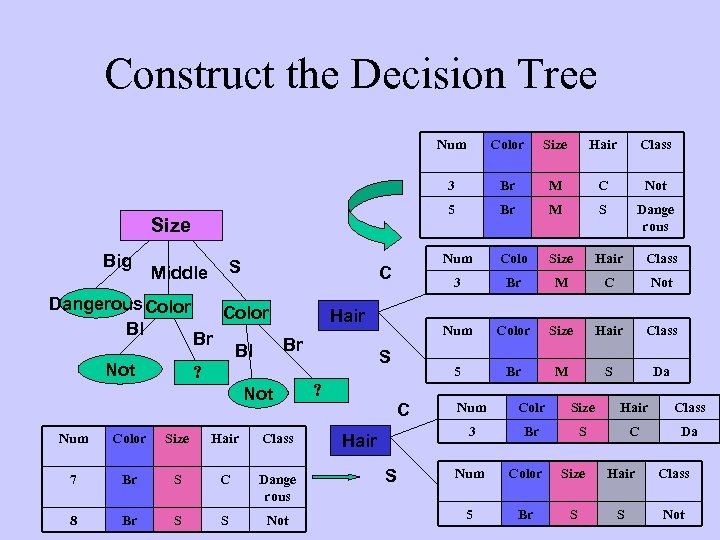

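Construct the Decision Tree
• Continue splitting the sub-training sets until all the examples in each subset belong to the same class.
Current tree: Size? Big → Dangerous; Middle → ?; Small → ?
Splitting the Middle subset on Color:

Color = Brown (still mixed):

| Num | Color | Size | Hair | Class |
|---|---|---|---|---|
| 3 | Brown | Middle | Curls | No |
| 5 | Brown | Middle | Straight | Dangerous |

Color = Black (all No):

| Num | Color | Size | Hair | Class |
|---|---|---|---|---|
| 10 | Black | Middle | Curls | No |
| 11 | Black | Middle | Straight | No |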


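Construct the Decision Tree
Splitting the Small subset on Color:

Color = Black (all No):

| Num | Color | Size | Hair | Class |
|---|---|---|---|---|
| 4 | Black | Small | Curls | No |
| 12 | Black | Small | Straight | No |

Color = Brown (still mixed):

| Num | Color | Size | Hair | Class |
|---|---|---|---|---|
| 7 | Brown | Small | Curls | Dangerous |
| 8 | Brown | Small | Straight | No |

Current tree: Size? Big → Dangerous; Middle → Color? (Black → No; Brown → ?); Small → Color? (Black → No; Brown → ?)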


Construct the Decision Tree
• Split the two remaining mixed subsets on Hair:
– Size = Middle, Color = Brown: #3 (Curls) → No; #5 (Straight) → Dangerous
– Size = Small, Color = Brown: #7 (Curls) → Dangerous; #8 (Straight) → No
• Every subset now belongs to a single class, so every branch ends in a leaf.


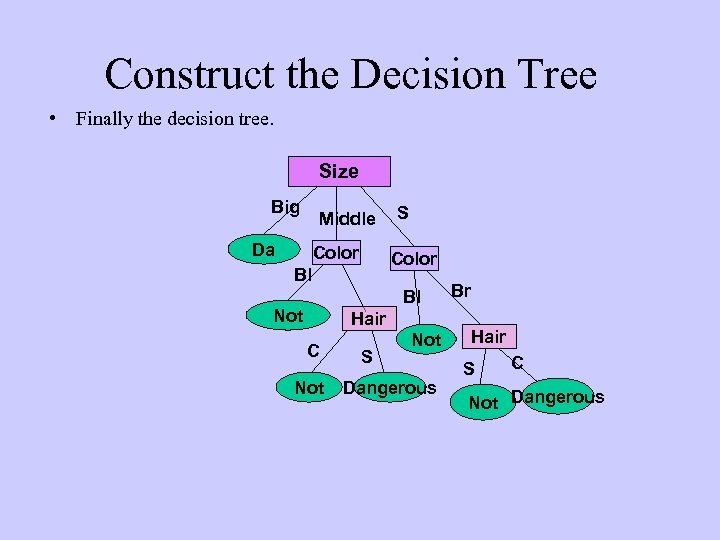

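Construct the Decision Tree
• Finally, the decision tree:
Size?
– Big → Dangerous
– Middle → Color?
  – Black → Not dangerous
  – Brown → Hair?
    – Straight → Dangerous
    – Curls → Not dangerous
– Small → Color?
  – Black → Not dangerous
  – Brown → Hair?
    – Curls → Dangerous
    – Straight → Not dangerous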
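As a quick consistency check (a sketch; the function name is ours), the final tree can be written as nested tests and run over all 12 bears in the table:

```python
# (num, color, size, hair, class) rows of the bear table.
BEARS = [
    (1, "Black", "Big", "Curls", "Dangerous"),
    (2, "Brown", "Big", "Straight", "Dangerous"),
    (3, "Brown", "Middle", "Curls", "No"),
    (4, "Black", "Small", "Curls", "No"),
    (5, "Brown", "Middle", "Straight", "Dangerous"),
    (6, "Black", "Big", "Straight", "Dangerous"),
    (7, "Brown", "Small", "Curls", "Dangerous"),
    (8, "Brown", "Small", "Straight", "No"),
    (9, "Brown", "Big", "Curls", "Dangerous"),
    (10, "Black", "Middle", "Curls", "No"),
    (11, "Black", "Middle", "Straight", "No"),
    (12, "Black", "Small", "Straight", "No"),
]


def is_dangerous(color: str, size: str, hair: str) -> bool:
    """The final tree: Size first, then Color, then Hair for brown bears."""
    if size == "Big":
        return True
    if color == "Black":
        return False
    if size == "Middle":
        return hair == "Straight"  # brown, middle-sized
    return hair == "Curls"         # brown, small


# Numbers of any bears the tree gets wrong.
mistakes = [num for num, color, size, hair, cls in BEARS
            if is_dangerous(color, size, hair) != (cls == "Dangerous")]
print(mistakes)  # []
```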


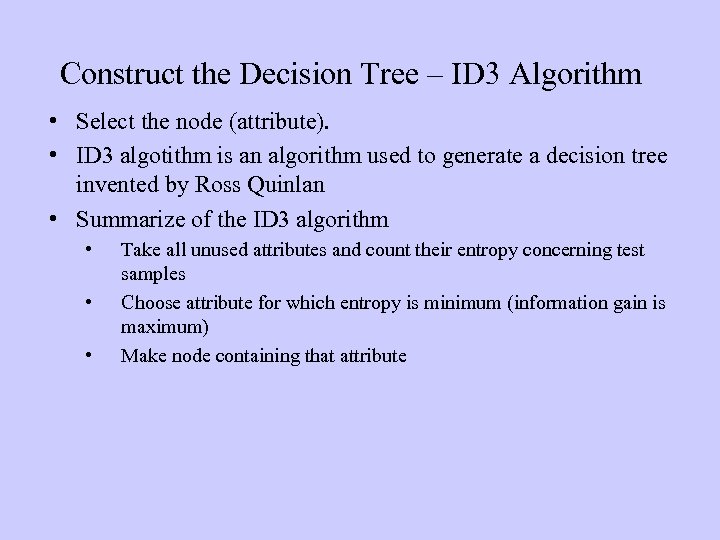

Construct the Decision Tree – ID3 Algorithm
• Selecting the node (attribute).
• ID3 is an algorithm, invented by Ross Quinlan, for generating a decision tree.
• Summary of the ID3 algorithm:
– Take all unused attributes and compute the entropy of the split each one induces on the current samples
– Choose the attribute for which this entropy is minimum (equivalently, the information gain is maximum)
– Make a node containing that attribute


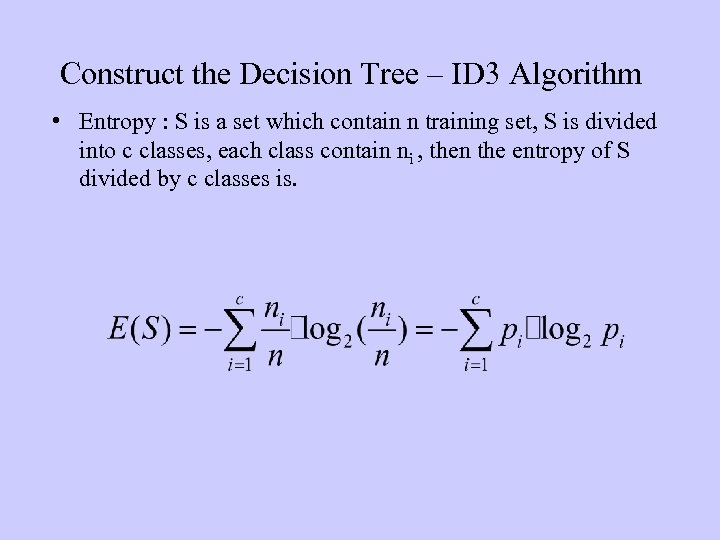

Construct the Decision Tree – ID3 Algorithm
• Entropy: let S be a set of n training examples divided into c classes, where class i contains n_i examples. The entropy of S with respect to these c classes is

$$E(S) = -\sum_{i=1}^{c} \frac{n_i}{n}\log_2\frac{n_i}{n}$$
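The entropy definition translates directly into code. This is a minimal sketch that takes the class sizes n_1, ..., n_c as a list (the function name is ours); empty classes are skipped, following the usual convention 0 · log2 0 = 0.

```python
import math


def entropy(class_counts: list[int]) -> float:
    """Entropy in bits of a set whose classes have the given sizes n_i."""
    n = sum(class_counts)
    return sum(-(c / n) * math.log2(c / n) for c in class_counts if c > 0)


print(entropy([6, 6]))  # 1.0: two equally large classes
print(entropy([4, 0]))  # 0.0: a pure set
```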


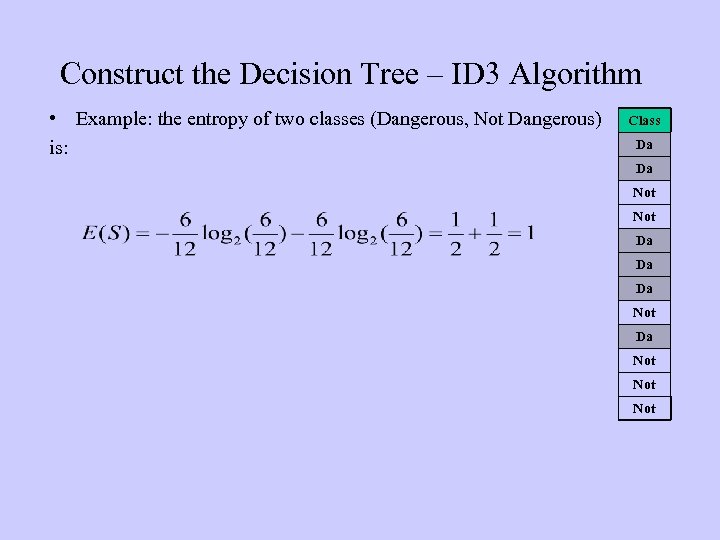

Construct the Decision Tree – ID3 Algorithm
• Example: the entropy of the following set with two classes (Dangerous = Da, Not dangerous = Not):
Class: Da, Da, Not, Da, Da, Da, Not, Not
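Counting the class column above gives 5 Dangerous and 3 Not-dangerous samples (n = 8). With the entropy definition E(S) = -Σ (n_i/n) log2(n_i/n), the value works out as follows (our own arithmetic, rounded):

$$
E(S) = -\frac{5}{8}\log_2\frac{5}{8} - \frac{3}{8}\log_2\frac{3}{8}
\approx 0.625 \times 0.6781 + 0.375 \times 1.4150
\approx 0.4238 + 0.5306 \approx 0.954
$$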


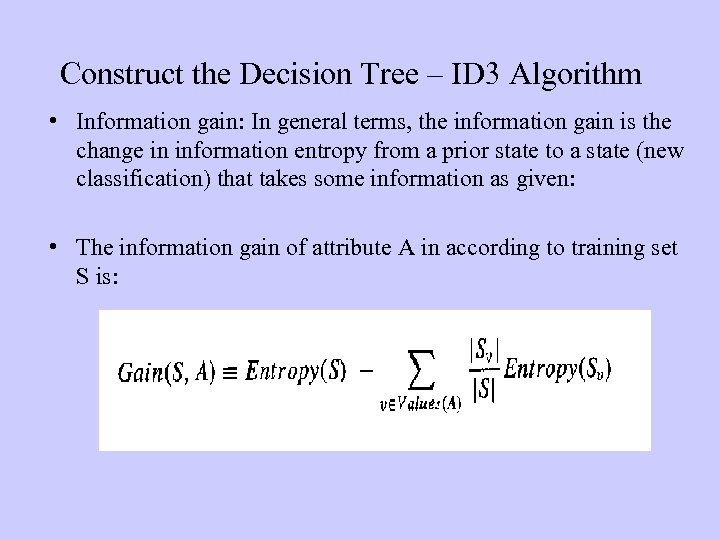

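Construct the Decision Tree – ID3 Algorithm
• Information gain: in general terms, the information gain is the change in information entropy from a prior state to a state (a new classification) that takes some information as given.
• The information gain of attribute A with respect to the training set S is

$$\text{Gain}(S, A) = E(S) - \sum_{v \in \text{Values}(A)} \frac{|S_v|}{|S|}\, E(S_v)$$

where E(·) denotes entropy and S_v is the subset of S for which attribute A has value v.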
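A sketch of the gain formula, applied to the bear table; the data layout and names are our own, and entropy is computed here from the list of class labels in a subset.

```python
import math
from collections import Counter, defaultdict

# (color, size, hair, class) rows of the bear table.
BEARS = [
    ("Black", "Big", "Curls", "Dangerous"),
    ("Brown", "Big", "Straight", "Dangerous"),
    ("Brown", "Middle", "Curls", "No"),
    ("Black", "Small", "Curls", "No"),
    ("Brown", "Middle", "Straight", "Dangerous"),
    ("Black", "Big", "Straight", "Dangerous"),
    ("Brown", "Small", "Curls", "Dangerous"),
    ("Brown", "Small", "Straight", "No"),
    ("Brown", "Big", "Curls", "Dangerous"),
    ("Black", "Middle", "Curls", "No"),
    ("Black", "Middle", "Straight", "No"),
    ("Black", "Small", "Straight", "No"),
]
ATTRIBUTES = {"Color": 0, "Size": 1, "Hair": 2}  # attribute name -> column


def entropy(labels):
    """Entropy (bits) of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, column):
    """Gain(S, A) = E(S) - sum over values v of |S_v|/|S| * E(S_v)."""
    subsets = defaultdict(list)
    for row in rows:
        subsets[row[column]].append(row[-1])  # class labels of S_v
    remainder = sum(len(s) / len(rows) * entropy(s) for s in subsets.values())
    return entropy([row[-1] for row in rows]) - remainder


gains = {name: information_gain(BEARS, col) for name, col in ATTRIBUTES.items()}
for name, g in gains.items():
    print(f"{name}: {g:.3f}")  # prints Color: 0.082, Size: 0.459, Hair: 0.000 (one per line)
```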


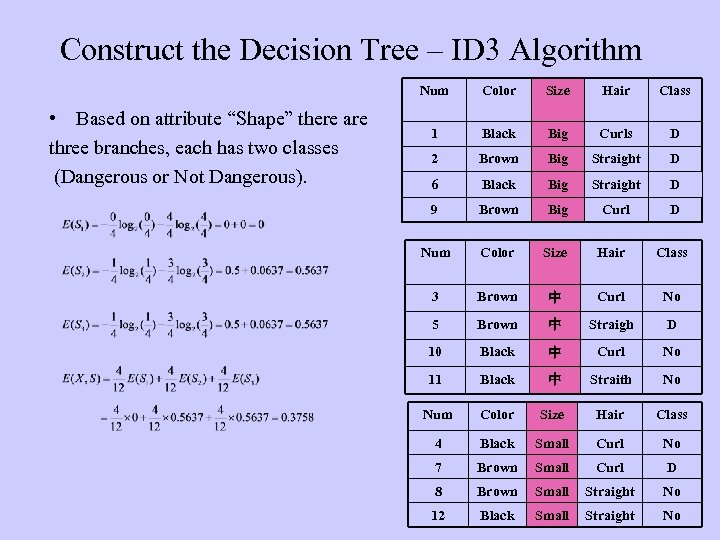

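Construct the Decision Tree – ID3 Algorithm
• Based on the attribute “Size” there are three branches; each branch holds the samples with that size, and each sample belongs to one of two classes (Dangerous or Not dangerous).

Size = Big:

| Num | Color | Size | Hair | Class |
|---|---|---|---|---|
| 1 | Black | Big | Curls | Dangerous |
| 2 | Brown | Big | Straight | Dangerous |
| 6 | Black | Big | Straight | Dangerous |
| 9 | Brown | Big | Curls | Dangerous |

Size = Middle:

| Num | Color | Size | Hair | Class |
|---|---|---|---|---|
| 3 | Brown | Middle | Curls | No |
| 5 | Brown | Middle | Straight | Dangerous |
| 10 | Black | Middle | Curls | No |
| 11 | Black | Middle | Straight | No |

Size = Small:

| Num | Color | Size | Hair | Class |
|---|---|---|---|---|
| 4 | Black | Small | Curls | No |
| 7 | Brown | Small | Curls | Dangerous |
| 8 | Brown | Small | Straight | No |
| 12 | Black | Small | Straight | No |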


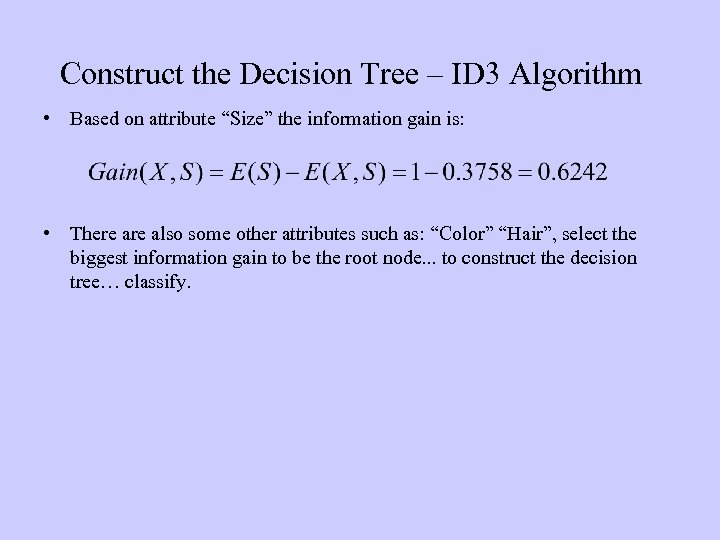
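The three Size branches in the tables above contain Big: 4 D, 0 No; Medium: 1 D, 3 No; Small: 1 D, 3 No. Their entropies work out as follows, using base-2 logarithms and the convention 0 · log2 0 = 0:

$$\mathrm{Entropy}(S_{\text{Big}}) = -\tfrac{4}{4}\log_2\tfrac{4}{4} = 0$$

$$\mathrm{Entropy}(S_{\text{Medium}}) = \mathrm{Entropy}(S_{\text{Small}}) = -\tfrac{1}{4}\log_2\tfrac{1}{4} - \tfrac{3}{4}\log_2\tfrac{3}{4} \approx 0.5 + 0.311 = 0.811$$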

Construct the Decision Tree – ID3 Algorithm
• Based on the attribute “Size”, the information gain is:
• There are also other attributes, “Color” and “Hair”. Compute the information gain of each one and select the attribute with the largest gain as the root node. Repeat this on each branch to construct the decision tree, then use the tree to classify.

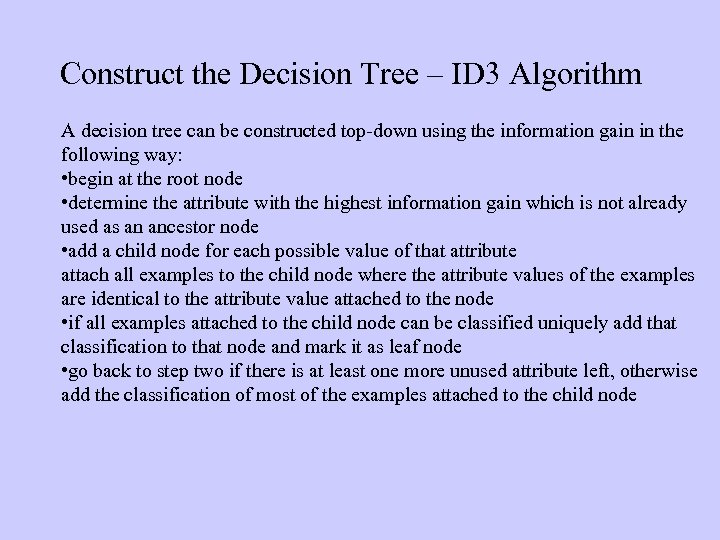
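The root-node choice can be checked numerically. The sketch below encodes the 12 examples from the tables above, reading 中 as “Medium”. It computes Gain(S, A) for Color, Size and Hair and picks the maximum. The entropy and gain helpers are redefined so the block runs on its own. The tuple layout and helper names are illustrative assumptions, not from the slides.

```python
import math
from collections import Counter

# The 12 training examples from the tables above: (Num, Color, Size, Hair, Class)
ROWS = [
    (1, "Black", "Big", "Curl", "D"),
    (2, "Brown", "Big", "Straight", "D"),
    (3, "Brown", "Medium", "Curl", "No"),
    (4, "Black", "Small", "Curl", "No"),
    (5, "Brown", "Medium", "Straight", "D"),
    (6, "Black", "Big", "Straight", "D"),
    (7, "Brown", "Small", "Curl", "D"),
    (8, "Brown", "Small", "Straight", "No"),
    (9, "Brown", "Big", "Curl", "D"),
    (10, "Black", "Medium", "Curl", "No"),
    (11, "Black", "Medium", "Straight", "No"),
    (12, "Black", "Small", "Straight", "No"),
]
COLUMNS = {"Color": 1, "Size": 2, "Hair": 3}  # attribute name -> tuple index
CLASS = 4


def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def gain(rows, column):
    """Information gain of splitting `rows` on the attribute at `column`."""
    remainder = 0.0
    for value in {r[column] for r in rows}:
        subset = [r[CLASS] for r in rows if r[column] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy([r[CLASS] for r in rows]) - remainder


gains = {name: gain(ROWS, col) for name, col in COLUMNS.items()}
for name, g in gains.items():
    print(f"{name}: {g:.3f}")
print("root:", max(gains, key=gains.get))
```

This prints Color: 0.082, Size: 0.459, Hair: 0.000 and then root: Size. Hair gains nothing because both of its values hold three D and three No examples.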

Construct the Decision Tree – ID3 Algorithm
A decision tree can be constructed top-down using the information gain in the following way:
• Step 1: begin at the root node.
• Step 2: determine the attribute with the highest information gain that is not already used by an ancestor node.
• Step 3: add a child node for each possible value of that attribute. Attach to each child node all examples whose value for that attribute equals the value attached to the node.
• Step 4: if all examples attached to a child node belong to the same class, label that node with the class and mark it as a leaf node.
• Step 5: otherwise, if at least one unused attribute is left, go back to step 2 for that child. If none is left, label the child with the class of the majority of its examples.

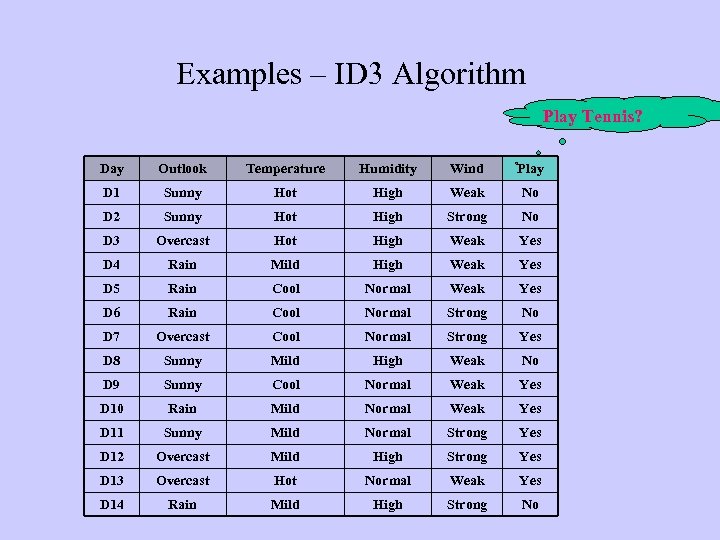
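The top-down procedure above can be sketched as a short recursive function. This is a minimal illustration, not the course's reference code, and it rests on three assumptions:
- examples are dicts;
- the tree is returned as nested dicts of the form {attribute: {value: subtree}};
- child nodes are created only for attribute values that actually occur among the examples at that node, so no child is ever empty.

```python
import math
from collections import Counter


def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(examples, attribute, target):
    remainder = 0.0
    for value in {ex[attribute] for ex in examples}:
        subset = [ex[target] for ex in examples if ex[attribute] == value]
        remainder += len(subset) / len(examples) * entropy(subset)
    return entropy([ex[target] for ex in examples]) - remainder


def id3(examples, attributes, target):
    labels = [ex[target] for ex in examples]
    # All examples share one class: a leaf labelled with that class.
    if len(set(labels)) == 1:
        return labels[0]
    # No unused attribute left: a leaf labelled with the majority class.
    if not attributes:
        return Counter(labels).most_common(1)[0][0]
    # Pick the unused attribute with the highest information gain.
    best = max(attributes, key=lambda a: information_gain(examples, a, target))
    remaining = [a for a in attributes if a != best]
    # One child per observed value of `best`, built recursively.
    return {
        best: {
            value: id3([ex for ex in examples if ex[best] == value], remaining, target)
            for value in sorted({ex[best] for ex in examples})
        }
    }


# Usage on the 12-example "Dangerous" table from this section.
rows = [
    ("Black", "Big", "Curl", "D"), ("Brown", "Big", "Straight", "D"),
    ("Brown", "Medium", "Curl", "No"), ("Black", "Small", "Curl", "No"),
    ("Brown", "Medium", "Straight", "D"), ("Black", "Big", "Straight", "D"),
    ("Brown", "Small", "Curl", "D"), ("Brown", "Small", "Straight", "No"),
    ("Brown", "Big", "Curl", "D"), ("Black", "Medium", "Curl", "No"),
    ("Black", "Medium", "Straight", "No"), ("Black", "Small", "Straight", "No"),
]
examples = [dict(zip(("Color", "Size", "Hair", "Class"), r)) for r in rows]
tree = id3(examples, ["Color", "Size", "Hair"], "Class")
print(tree)
```

On this data the root is Size, and the Big branch becomes a pure D leaf. In the Medium and Small branches, Color and Hair tie on gain, and `max` keeps the first attribute in the list. The slides do not specify a tie-breaking rule, so that choice is an assumption of this sketch.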

Examples – ID3 Algorithm
Play Tennis?

| Day | Outlook | Temperature | Humidity | Wind | Play |
|---|---|---|---|---|---|
| D1 | Sunny | Hot | High | Weak | No |
| D2 | Sunny | Hot | High | Strong | No |
| D3 | Overcast | Hot | High | Weak | Yes |
| D4 | Rain | Mild | High | Weak | Yes |
| D5 | Rain | Cool | Normal | Weak | Yes |
| D6 | Rain | Cool | Normal | Strong | No |
| D7 | Overcast | Cool | Normal | Strong | Yes |
| D8 | Sunny | Mild | High | Weak | No |
| D9 | Sunny | Cool | Normal | Weak | Yes |
| D10 | Rain | Mild | Normal | Weak | Yes |
| D11 | Sunny | Mild | Normal | Strong | Yes |
| D12 | Overcast | Mild | High | Strong | Yes |
| D13 | Overcast | Hot | Normal | Weak | Yes |
| D14 | Rain | Mild | High | Strong | No |

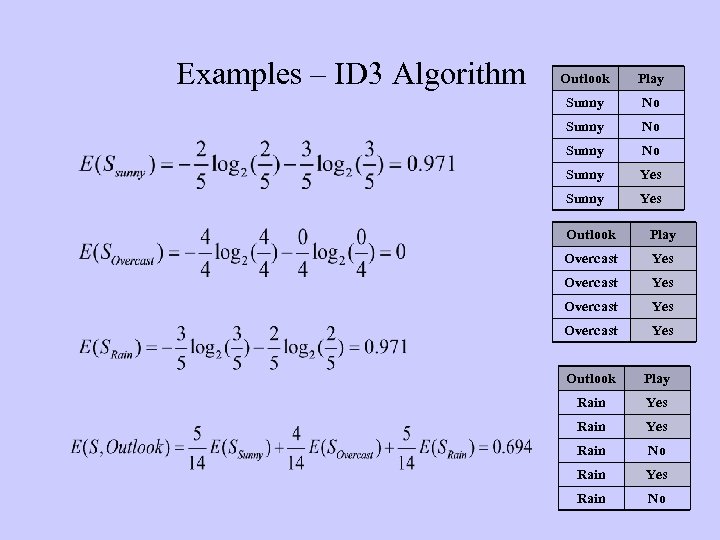

Examples – ID3 Algorithm
• The sample S contains 9 “Yes” and 5 “No” examples, so its entropy is: $\mathrm{Entropy}(S) = -\frac{9}{14}\log_2\frac{9}{14} - \frac{5}{14}\log_2\frac{5}{14} \approx 0.940$
• Attribute “Outlook”: compute its information gain. “Outlook” has three values, “Sunny”, “Overcast” and “Rain”, so it splits S into three subsets.

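Examples – ID3 Algorithm
• Splitting S on “Outlook” gives three subsets (Play values taken from the table):
– Sunny: No, No, No, Yes, Yes (2 Yes, 3 No)
– Overcast: Yes, Yes, Yes, Yes (4 Yes, 0 No)
– Rain: Yes, Yes, No, Yes, No (3 Yes, 2 No)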

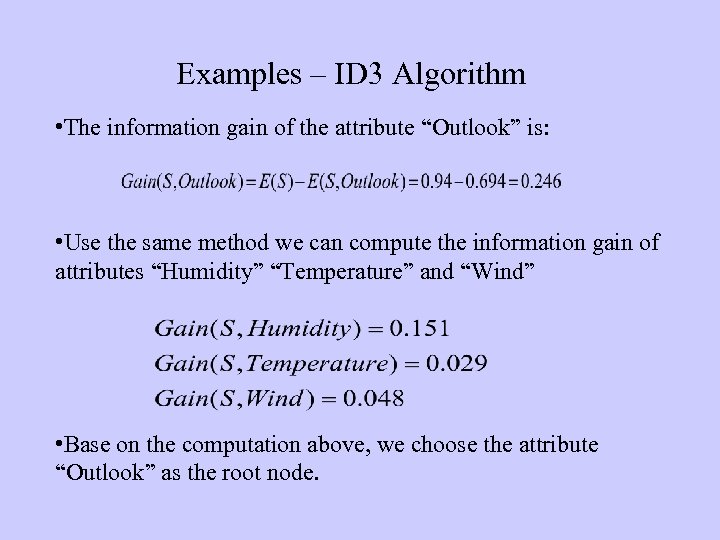
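With these counts, the entropies of the three Outlook subsets (base 2) are:

$$\mathrm{Entropy}(S_{\text{Sunny}}) = -\tfrac{2}{5}\log_2\tfrac{2}{5} - \tfrac{3}{5}\log_2\tfrac{3}{5} \approx 0.971$$

$$\mathrm{Entropy}(S_{\text{Overcast}}) = -\tfrac{4}{4}\log_2\tfrac{4}{4} = 0$$

$$\mathrm{Entropy}(S_{\text{Rain}}) = -\tfrac{3}{5}\log_2\tfrac{3}{5} - \tfrac{2}{5}\log_2\tfrac{2}{5} \approx 0.971$$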

Examples – ID3 Algorithm
• The information gain of the attribute “Outlook” is:
• Using the same method, we can compute the information gain of the attributes “Humidity”, “Temperature” and “Wind”.
• Based on the computation above, we choose the attribute “Outlook” as the root node, because it has the highest information gain of the four attributes.

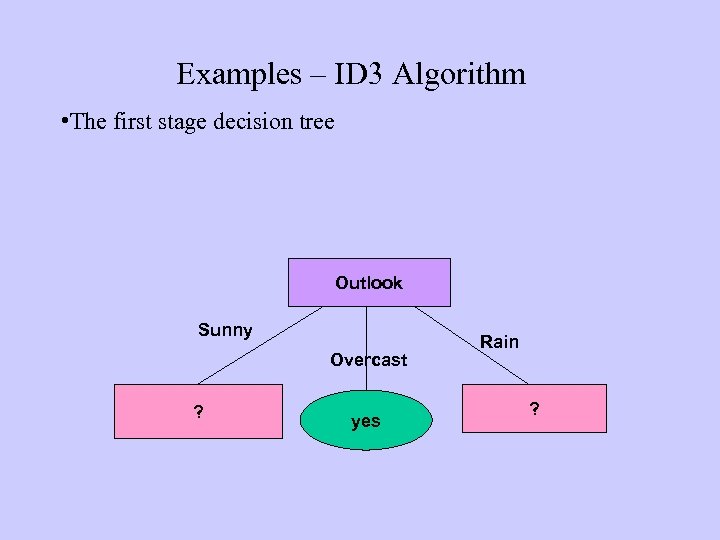
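The comparison of the four attributes can be reproduced with a small script. This minimal sketch encodes the 14 Play Tennis rows from the table as whitespace-separated text, with the Day column omitted. The helper names are illustrative, not from the slides.

```python
import math
from collections import Counter

# Play Tennis rows D1..D14: Outlook, Temperature, Humidity, Wind, Play
DATA = """\
Sunny Hot High Weak No
Sunny Hot High Strong No
Overcast Hot High Weak Yes
Rain Mild High Weak Yes
Rain Cool Normal Weak Yes
Rain Cool Normal Strong No
Overcast Cool Normal Strong Yes
Sunny Mild High Weak No
Sunny Cool Normal Weak Yes
Rain Mild Normal Weak Yes
Sunny Mild Normal Strong Yes
Overcast Mild High Strong Yes
Overcast Hot Normal Weak Yes
Rain Mild High Strong No"""
FIELDS = ("Outlook", "Temperature", "Humidity", "Wind", "Play")
EXAMPLES = [dict(zip(FIELDS, line.split())) for line in DATA.splitlines()]


def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(examples, attribute, target="Play"):
    remainder = 0.0
    for value in {ex[attribute] for ex in examples}:
        subset = [ex[target] for ex in examples if ex[attribute] == value]
        remainder += len(subset) / len(examples) * entropy(subset)
    return entropy([ex[target] for ex in examples]) - remainder


gains = {attr: information_gain(EXAMPLES, attr) for attr in FIELDS[:-1]}
print(f"Entropy(S) = {entropy([ex['Play'] for ex in EXAMPLES]):.3f}")
for attr, g in gains.items():
    print(f"Gain(S, {attr}) = {g:.3f}")
```

This prints Entropy(S) = 0.940, followed by gains of 0.247 (Outlook), 0.029 (Temperature), 0.152 (Humidity) and 0.048 (Wind). Outlook is clearly the largest.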

Examples – ID3 Algorithm
• The first-stage decision tree:
– Outlook = Sunny → ?
– Outlook = Overcast → Yes
– Outlook = Rain → ?

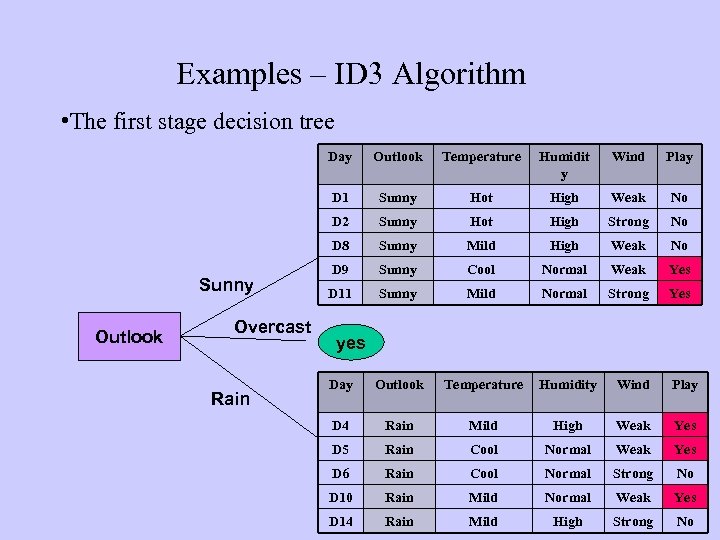

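Examples – ID3 Algorithm
• The first-stage decision tree: the Overcast branch is already a leaf (Yes). The Sunny and Rain branches still hold these examples:

| Day | Outlook | Temperature | Humidity | Wind | Play |
|---|---|---|---|---|---|
| D1 | Sunny | Hot | High | Weak | No |
| D2 | Sunny | Hot | High | Strong | No |
| D8 | Sunny | Mild | High | Weak | No |
| D9 | Sunny | Cool | Normal | Weak | Yes |
| D11 | Sunny | Mild | Normal | Strong | Yes |

| Day | Outlook | Temperature | Humidity | Wind | Play |
|---|---|---|---|---|---|
| D4 | Rain | Mild | High | Weak | Yes |
| D5 | Rain | Cool | Normal | Weak | Yes |
| D6 | Rain | Cool | Normal | Strong | No |
| D10 | Rain | Mild | Normal | Weak | Yes |
| D14 | Rain | Mild | High | Strong | No |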

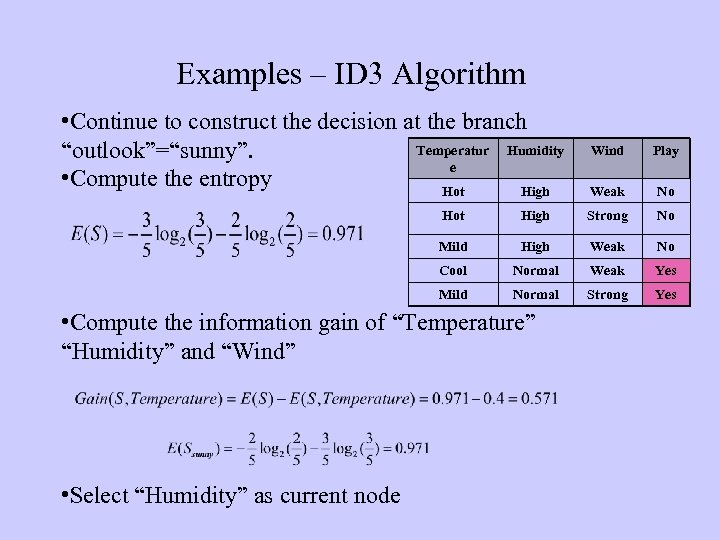

Examples – ID3 Algorithm
• Continue to construct the decision tree at the branch “Outlook” = “Sunny”:

| Temperature | Humidity | Wind | Play |
|---|---|---|---|
| Hot | High | Weak | No |
| Hot | High | Strong | No |
| Mild | High | Weak | No |
| Cool | Normal | Weak | Yes |
| Mild | Normal | Strong | Yes |

• Compute the entropy of this subset.
• Compute the information gain of “Temperature”, “Humidity” and “Wind”.
• Select “Humidity” as the current node.

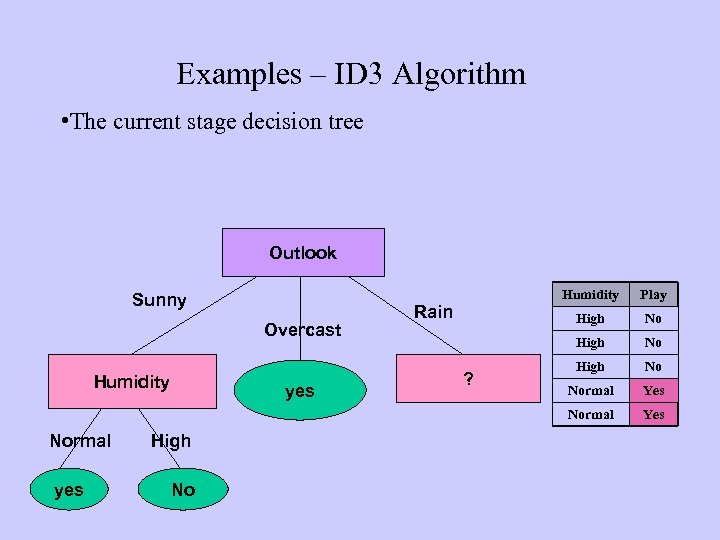
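The choice of “Humidity” for the Sunny branch can be checked the same way. This minimal sketch encodes the five Sunny rows from the table above. The names `SUNNY`, `ATTRIBUTES` and `gain` are illustrative.

```python
import math
from collections import Counter

# "Outlook" = "Sunny" subset (D1, D2, D8, D9, D11): Temperature, Humidity, Wind, Play
SUNNY = [
    ("Hot", "High", "Weak", "No"),
    ("Hot", "High", "Strong", "No"),
    ("Mild", "High", "Weak", "No"),
    ("Cool", "Normal", "Weak", "Yes"),
    ("Mild", "Normal", "Strong", "Yes"),
]
ATTRIBUTES = {"Temperature": 0, "Humidity": 1, "Wind": 2}  # name -> tuple index
PLAY = 3


def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def gain(rows, col):
    remainder = 0.0
    for value in {r[col] for r in rows}:
        subset = [r[PLAY] for r in rows if r[col] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy([r[PLAY] for r in rows]) - remainder


gains = {name: gain(SUNNY, col) for name, col in ATTRIBUTES.items()}
print(f"Entropy(S_sunny) = {entropy([r[PLAY] for r in SUNNY]):.3f}")
for name, g in gains.items():
    print(f"Gain(S_sunny, {name}) = {g:.3f}")
```

The subset entropy is 0.971, and the gains are 0.571 (Temperature), 0.971 (Humidity) and 0.020 (Wind). Humidity splits the Sunny examples into two pure groups, so its gain equals the whole subset entropy.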

Examples – ID3 Algorithm
• The current-stage decision tree:
– Outlook = Sunny → Humidity: High → No; Normal → Yes
– Outlook = Overcast → Yes
– Outlook = Rain → ?
• In the Sunny branch, Humidity separates the classes completely: High → No, No, No; Normal → Yes, Yes.

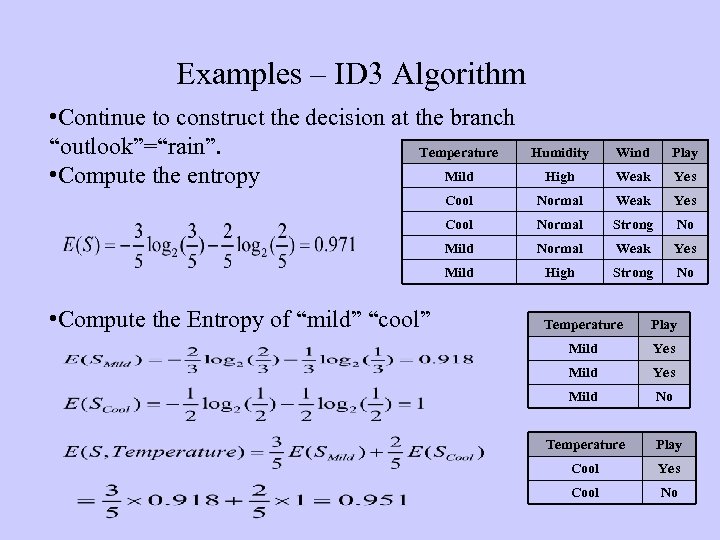

Examples – ID3 Algorithm
• Continue to construct the decision tree at the branch “Outlook” = “Rain”:

| Temperature | Humidity | Wind | Play |
|---|---|---|---|
| Mild | High | Weak | Yes |
| Cool | Normal | Weak | Yes |
| Cool | Normal | Strong | No |
| Mild | Normal | Weak | Yes |
| Mild | High | Strong | No |

• Compute the entropy of this subset.
• Compute the entropy of the “Mild” and “Cool” values of “Temperature”: Mild → Yes, Yes, No; Cool → Yes, No.

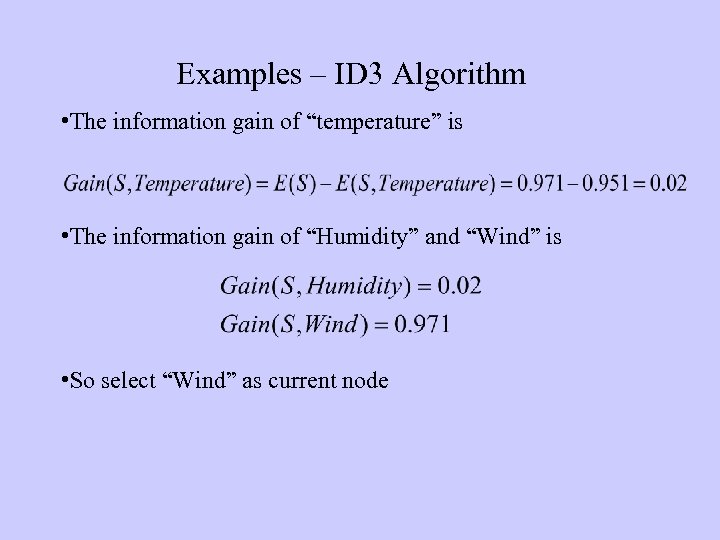
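The Rain subset has 3 Yes and 2 No. Within it, Temperature = Mild covers D4 and D10 (Yes) and D14 (No), and Temperature = Cool covers D5 (Yes) and D6 (No). The entropies, and the resulting gain for Temperature, are:

$$\mathrm{Entropy}(S_{\text{Rain}}) = -\tfrac{3}{5}\log_2\tfrac{3}{5} - \tfrac{2}{5}\log_2\tfrac{2}{5} \approx 0.971$$

$$\mathrm{Entropy}(S_{\text{Rain,Mild}}) = -\tfrac{2}{3}\log_2\tfrac{2}{3} - \tfrac{1}{3}\log_2\tfrac{1}{3} \approx 0.918$$

$$\mathrm{Entropy}(S_{\text{Rain,Cool}}) = -\tfrac{1}{2}\log_2\tfrac{1}{2} - \tfrac{1}{2}\log_2\tfrac{1}{2} = 1$$

$$\mathrm{Gain}(S_{\text{Rain}}, \text{Temperature}) \approx 0.971 - \tfrac{3}{5}(0.918) - \tfrac{2}{5}(1) \approx 0.020$$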

Examples – ID3 Algorithm
• The information gain of “Temperature” is:
• The information gains of “Humidity” and “Wind” are:
• So select “Wind” as the current node, since it has the highest gain.

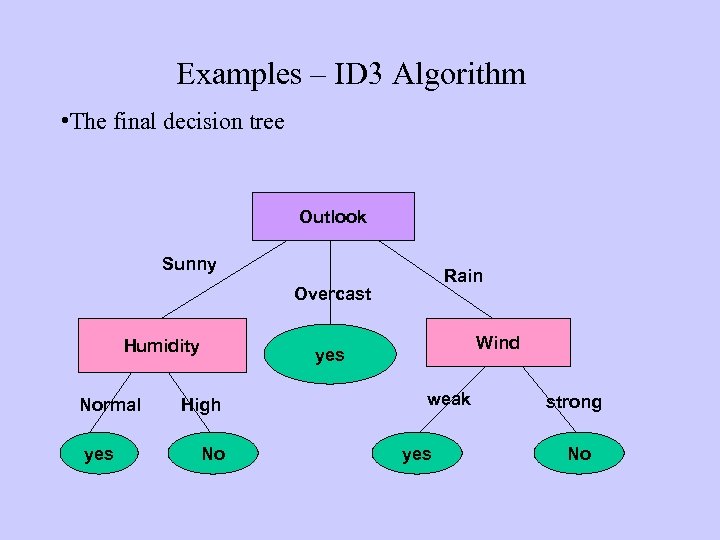
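The three gains for the Rain branch can be reproduced with this minimal sketch. It encodes the five Rain rows (D4, D5, D6, D10, D14) from the Play Tennis table. The names are illustrative.

```python
import math
from collections import Counter

# "Outlook" = "Rain" subset (D4, D5, D6, D10, D14): Temperature, Humidity, Wind, Play
RAIN = [
    ("Mild", "High", "Weak", "Yes"),
    ("Cool", "Normal", "Weak", "Yes"),
    ("Cool", "Normal", "Strong", "No"),
    ("Mild", "Normal", "Weak", "Yes"),
    ("Mild", "High", "Strong", "No"),
]
ATTRIBUTES = {"Temperature": 0, "Humidity": 1, "Wind": 2}  # name -> tuple index
PLAY = 3


def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def gain(rows, col):
    remainder = 0.0
    for value in {r[col] for r in rows}:
        subset = [r[PLAY] for r in rows if r[col] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy([r[PLAY] for r in rows]) - remainder


gains = {name: gain(RAIN, col) for name, col in ATTRIBUTES.items()}
for name, g in gains.items():
    print(f"Gain(S_rain, {name}) = {g:.3f}")
print("next node:", max(gains, key=gains.get))  # Wind
```

Temperature and Humidity both gain only about 0.020. Wind gains 0.971 because Weak days are all Yes and Strong days are all No.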

Examples – ID3 Algorithm
• The final decision tree:
– Outlook = Sunny → Humidity: High → No; Normal → Yes
– Outlook = Overcast → Yes
– Outlook = Rain → Wind: Weak → Yes; Strong → No
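Once built, the tree classifies a new day by following one branch per tested attribute. This minimal sketch stores the final tree as nested dicts, where a plain string is a leaf label. That encoding and the function name `classify` are illustrative choices, not from the slides.

```python
# Final Play Tennis tree as nested dicts {attribute: {value: subtree}};
# a plain string is a leaf label.
FINAL_TREE = {
    "Outlook": {
        "Sunny": {"Humidity": {"High": "No", "Normal": "Yes"}},
        "Overcast": "Yes",
        "Rain": {"Wind": {"Weak": "Yes", "Strong": "No"}},
    }
}


def classify(tree, example):
    """Follow the branch matching each tested attribute until a leaf is reached."""
    while isinstance(tree, dict):
        attribute, branches = next(iter(tree.items()))
        tree = branches[example[attribute]]  # raises KeyError for an unseen value
    return tree


day = {"Outlook": "Rain", "Temperature": "Cool", "Humidity": "High", "Wind": "Strong"}
print(classify(FINAL_TREE, day))  # No
```

Two things follow from this encoding. First, the final tree never tests Temperature. Second, an attribute value that never appeared in training (for example a third kind of wind) has no branch to follow, so the lookup fails.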

Problems with ID3
• ID3 is not optimal
– It uses the expected entropy reduction, not the actual reduction
• It must use discrete (or discretized) attributes
– What if the wind is just fine (neither strong nor weak)?
– We could break the attributes down into finer-grained values…
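One common way to meet the discrete-attribute requirement is to bin a numeric measurement into a few labelled ranges before building the tree. Adding a middle bin is one way to represent wind that is “just fine”. This is a minimal sketch. The wind-speed unit, the cut points 10 and 30, and the label “Moderate” are hypothetical values chosen for illustration.

```python
import bisect


def discretize(value, cut_points, labels):
    """Map a number to labels[i], where i is the count of cut points <= value.

    `cut_points` must be sorted and len(labels) must equal len(cut_points) + 1.
    """
    return labels[bisect.bisect_right(cut_points, value)]


# Hypothetical wind-speed bins (km/h): [0, 10) Weak, [10, 30) Moderate, [30, inf) Strong
WIND_CUTS = [10.0, 30.0]
WIND_LABELS = ["Weak", "Moderate", "Strong"]

for speed in (4.0, 10.0, 18.5, 42.0):
    print(speed, discretize(speed, WIND_CUTS, WIND_LABELS))
```

The cut points are a design choice made outside the tree. ID3 as described in these slides does not choose them, which is part of why continuous attributes are listed as a problem.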

Problems with Decision Trees
• While decision trees classify quickly, building a tree may take longer than training some other types of classifier
• Decision trees suffer from errors propagating through the tree
– This becomes a very serious problem as the number of classes increases

Error Propagation
• Since decision trees work by a series of local decisions, what happens when one of these local decisions is wrong?
– Every decision from that point on may be wrong
– We may never return to the correct path of the tree

ID3 in Gaming
• Black & White, developed by Lionhead Studios and released in 2001, used ID3
• It was used to predict a player’s reaction to a certain creature’s action
• In this model, a greater feedback value means the creature should attack

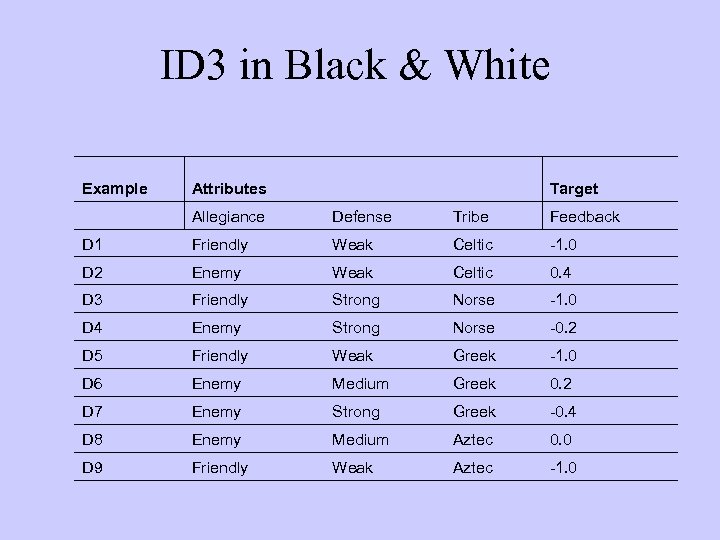

ID3 in Black & White Example
Attributes: Allegiance, Defense, Tribe; target: Feedback

| Example | Allegiance | Defense | Tribe | Feedback |
|---|---|---|---|---|
| D1 | Friendly | Weak | Celtic | -1.0 |
| D2 | Enemy | Weak | Celtic | 0.4 |
| D3 | Friendly | Strong | Norse | -1.0 |
| D4 | Enemy | Strong | Norse | -0.2 |
| D5 | Friendly | Weak | Greek | -1.0 |
| D6 | Enemy | Medium | Greek | 0.2 |
| D7 | Enemy | Strong | Greek | -0.4 |
| D8 | Enemy | Medium | Aztec | 0.0 |
| D9 | Friendly | Weak | Aztec | -1.0 |

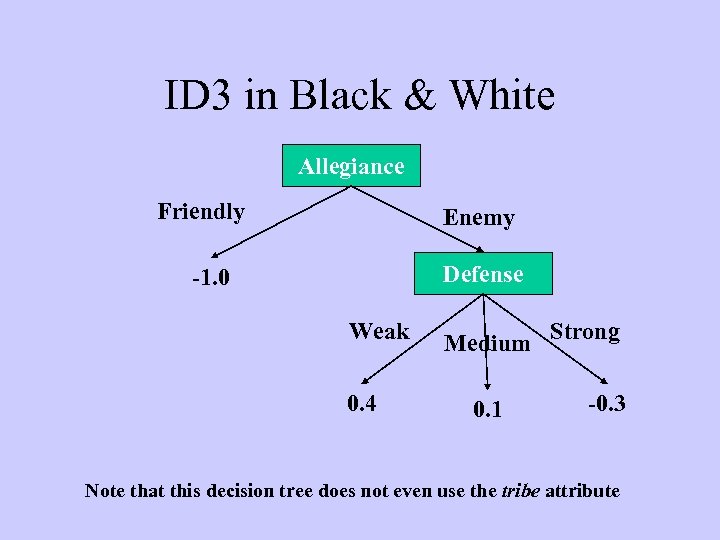

ID3 in Black & White
• Allegiance = Friendly → -1.0
• Allegiance = Enemy → Defense:
– Weak → 0.4
– Medium → 0.1
– Strong → -0.3
Note that this decision tree does not even use the Tribe attribute.
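The leaf values in this tree appear to be the mean Feedback of the training examples that reach each leaf. For instance, Enemy/Medium covers D6 = 0.2 and D8 = 0.0, giving 0.1. The sketch below takes the tree structure as given rather than re-deriving it, and recomputes those means from the table. The averaging rule is an inference from the numbers, not something the slides state.

```python
from collections import defaultdict
from statistics import mean

# (Example, Allegiance, Defense, Tribe, Feedback) from the Black & White table
EXAMPLES = [
    ("D1", "Friendly", "Weak", "Celtic", -1.0),
    ("D2", "Enemy", "Weak", "Celtic", 0.4),
    ("D3", "Friendly", "Strong", "Norse", -1.0),
    ("D4", "Enemy", "Strong", "Norse", -0.2),
    ("D5", "Friendly", "Weak", "Greek", -1.0),
    ("D6", "Enemy", "Medium", "Greek", 0.2),
    ("D7", "Enemy", "Strong", "Greek", -0.4),
    ("D8", "Enemy", "Medium", "Aztec", 0.0),
    ("D9", "Friendly", "Weak", "Aztec", -1.0),
]


def leaf(allegiance, defense):
    """Leaf reached in the tree: Friendly is a leaf; Enemy is split on Defense."""
    return "Friendly" if allegiance == "Friendly" else f"Enemy/{defense}"


feedback_by_leaf = defaultdict(list)
for _, allegiance, defense, _tribe, feedback in EXAMPLES:  # Tribe is never used
    feedback_by_leaf[leaf(allegiance, defense)].append(feedback)

leaf_values = {name: round(mean(vals), 2) for name, vals in feedback_by_leaf.items()}
print(leaf_values)
```

The recomputed means match the leaf values on the slide: -1.0, 0.4, 0.1 and -0.3.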

ID3 in Black & White
• Now suppose we don’t want the entire decision tree, but only the conditions that lead to the 2 highest feedback values
• We can create a Boolean expression, such as ((Allegiance = Enemy) ∧ (Defense = Weak)) ∨ ((Allegiance = Enemy) ∧ (Defense = Medium))
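Written as code, the Boolean expression is a simple predicate. In this minimal sketch the function name `should_attack` is hypothetical. The check against the nine training examples shows which ones the rule selects.

```python
def should_attack(allegiance: str, defense: str) -> bool:
    """((Allegiance = Enemy) AND (Defense = Weak)) OR ((Allegiance = Enemy) AND (Defense = Medium))."""
    return (allegiance == "Enemy" and defense == "Weak") or (
        allegiance == "Enemy" and defense == "Medium"
    )


# Examples D1..D9 as (Allegiance, Defense); Tribe and Feedback omitted
EXAMPLES = {
    "D1": ("Friendly", "Weak"), "D2": ("Enemy", "Weak"), "D3": ("Friendly", "Strong"),
    "D4": ("Enemy", "Strong"), "D5": ("Friendly", "Weak"), "D6": ("Enemy", "Medium"),
    "D7": ("Enemy", "Strong"), "D8": ("Enemy", "Medium"), "D9": ("Friendly", "Weak"),
}
selected = [name for name, (a, d) in EXAMPLES.items() if should_attack(a, d)]
print(selected)  # ['D2', 'D6', 'D8']
```

The expression can also be factored as (Allegiance = Enemy) ∧ ((Defense = Weak) ∨ (Defense = Medium)). Both forms select the same examples.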

Summary
• Decision trees can be used to help predict the future
• The trees are easy to understand
• Decision trees work more efficiently with discrete attributes
• The trees may suffer from error propagation

Reading Assignment
• Find some research papers about decision tree applications on Google Scholar.
• http://en.wikipedia.org/wiki/Decision_tree_learning
