- Number of slides: 37

Architecture of Parallel Computers
CSC / ECE 506
OpenFabrics Alliance
Lecture 18
7/17/2006
Dr Steve Hunter

Outline
• InfiniBand and Ethernet Review
• DDP and RDMA
• OpenFabrics Alliance
  – IP over InfiniBand (IPoIB)
  – Sockets Direct Protocol (SDP)
  – Network File System (NFS)
  – SCSI RDMA Protocol (SRP)
  – iSCSI Extensions for RDMA (iSER)
  – Reliable Datagram Sockets (RDS)

InfiniBand Goals - Review
• Interconnect for server I/O and efficient interprocess communications
• Standard across the industry – backed by all the major players
  » 200+ companies
• With an architecture able to match future systems:
  – Low overhead
  – Scalable bandwidth, up and down
  – Scalable fanout, few to thousands
  – Low cost, excellent price/performance
  – Robust reliability, availability, and serviceability
  – Leverages Internet Protocol suite and paradigms

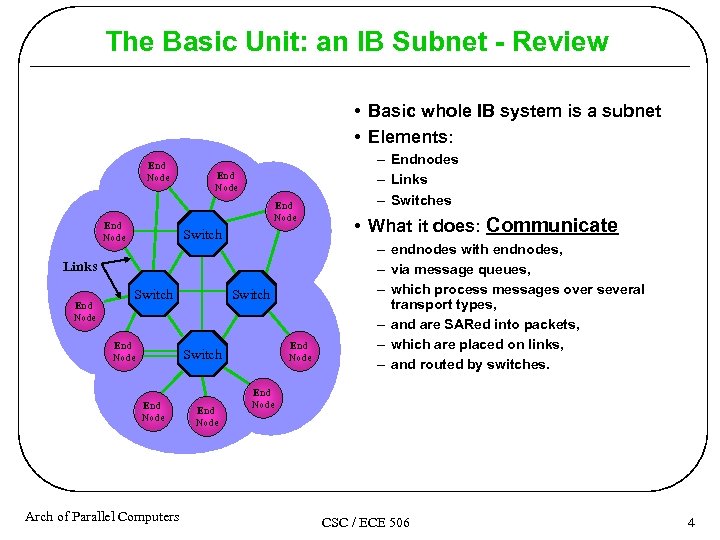

The Basic Unit: an IB Subnet - Review
• Basic whole IB system is a subnet
• Elements:
  – Endnodes
  – Links
  – Switches
• What it does: Communicate
  – endnodes with endnodes,
  – via message queues,
  – which process messages over several transport types,
  – and are SARed (segmented and reassembled) into packets,
  – which are placed on links,
  – and routed by switches.
[Figure: end nodes connected by links through switches]
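
To make "SARed into packets" concrete, here is a minimal Python sketch of segmentation and reassembly, assuming a fixed path MTU and a simple per-packet sequence number. The `Packet`, `segment`, and `reassemble` names are hypothetical. Real HCAs do this in hardware, and details such as packet headers and 24-bit PSN wraparound are ignored here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Packet:
    psn: int        # packet sequence number (24-bit wraparound ignored here)
    first: bool     # first packet of the message
    last: bool      # last packet of the message
    payload: bytes


def segment(message: bytes, mtu: int, start_psn: int = 0) -> List[Packet]:
    """Split one message into packets of at most `mtu` payload bytes."""
    if mtu <= 0:
        raise ValueError("mtu must be positive")
    chunks = [message[i:i + mtu] for i in range(0, len(message), mtu)] or [b""]
    return [
        Packet(psn=start_psn + i, first=(i == 0), last=(i == len(chunks) - 1), payload=chunk)
        for i, chunk in enumerate(chunks)
    ]


def reassemble(packets: List[Packet]) -> bytes:
    """Rebuild the message, rejecting gaps or a missing first/last packet."""
    ordered = sorted(packets, key=lambda p: p.psn)
    if not ordered or not ordered[0].first or not ordered[-1].last:
        raise ValueError("incomplete message")
    for prev, cur in zip(ordered, ordered[1:]):
        if cur.psn != prev.psn + 1:
            raise ValueError(f"missing packet after PSN {prev.psn}")
    return b"".join(p.payload for p in ordered)


if __name__ == "__main__":
    msg = bytes(range(256)) * 20                      # 5120-byte message
    pkts = segment(msg, mtu=2048)
    print([len(p.payload) for p in pkts])             # [2048, 2048, 1024]
    assert reassemble(pkts) == msg
```

The sort in `reassemble` is only a convenience for the sketch; a reliable connected transport already delivers packets in PSN order and requests retransmission on a gap.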

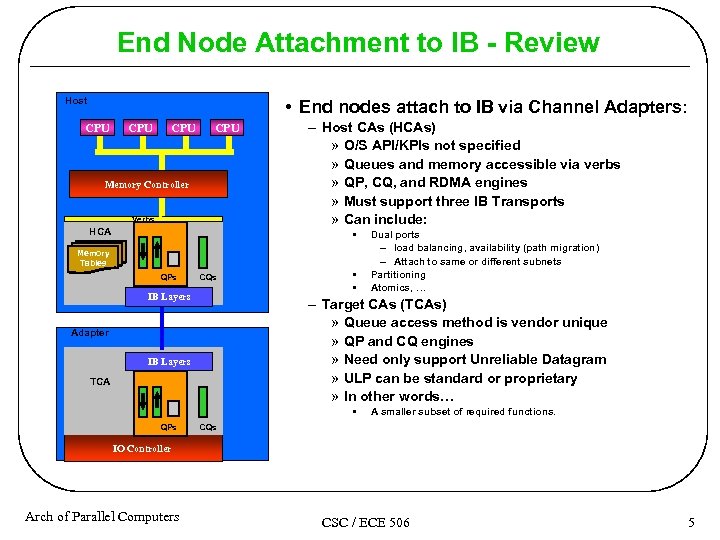

End Node Attachment to IB - Review
• End nodes attach to IB via Channel Adapters:
  – Host CAs (HCAs)
    » O/S API/KPIs not specified
    » Queues and memory accessible via verbs
    » QP, CQ, and RDMA engines
    » Must support three IB Transports
    » Can include:
      • Dual ports
        – Load balancing, availability (path migration)
        – Attach to same or different subnets
      • Partitioning
      • Atomics, …
  – Target CAs (TCAs)
    » Queue access method is vendor unique
    » QP and CQ engines
    » Need only support Unreliable Datagram
    » ULP can be standard or proprietary
    » In other words, a smaller subset of required functions
[Figure: host (CPUs, memory controller, verbs, HCA with memory tables, QPs, CQs, IB layers) and I/O adapter (I/O controller, TCA with QPs, CQs, IB layers)]

InfiniBand Summary
• InfiniBand architecture is a very high performance, low latency interconnect technology based on an industry-standard approach to Remote Direct Memory Access (RDMA)
  – An InfiniBand fabric is built from hardware and software that are configured, monitored and operated to deliver a variety of services to users and applications
• Characteristics of the technology that differentiate it from comparable interconnects such as traditional Ethernet include:
  – End-to-end reliable delivery
  – Scalable bandwidths from 10 to 60 Gbps available today, moving to 120 Gbps in the near future
  – Scalability without performance degradation
  – Low latency between devices
  – Greatly reduced server CPU utilization for protocol processing
  – Efficient I/O channel architecture for network and storage virtualization
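
The bandwidth figures above are consistent with multiplying a link width by a per-lane signaling rate. The sketch below assumes the InfiniBand per-lane rates of 2.5 Gbps (SDR), 5 Gbps (DDR), and 10 Gbps (QDR), link widths of 1x, 4x, and 12x, and 8b/10b line encoding; the function names are illustrative only.

```python
# Assumed InfiniBand per-lane signaling rates, in Gbps.
LANE_GBPS = {"SDR": 2.5, "DDR": 5.0, "QDR": 10.0}
LINK_WIDTHS = (1, 4, 12)  # 1x, 4x and 12x links


def signaling_rate(width: int, speed: str) -> float:
    """Raw link rate: number of lanes times the per-lane signaling rate."""
    if width not in LINK_WIDTHS:
        raise ValueError(f"unsupported link width: {width}x")
    return width * LANE_GBPS[speed]


def data_rate(width: int, speed: str) -> float:
    """Usable rate after 8b/10b encoding (8 data bits per 10 line bits)."""
    return signaling_rate(width, speed) * 8 / 10


if __name__ == "__main__":
    for width, speed in [(4, "SDR"), (12, "DDR"), (12, "QDR")]:
        print(f"{width}x {speed}: {signaling_rate(width, speed):g} Gbps signaling, "
              f"{data_rate(width, speed):g} Gbps data")
```

Under these assumptions, 10 Gbps is a 4x SDR link, 60 Gbps a 12x DDR link, and 120 Gbps a 12x QDR link, with usable data rates of 8, 48, and 96 Gbps respectively.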

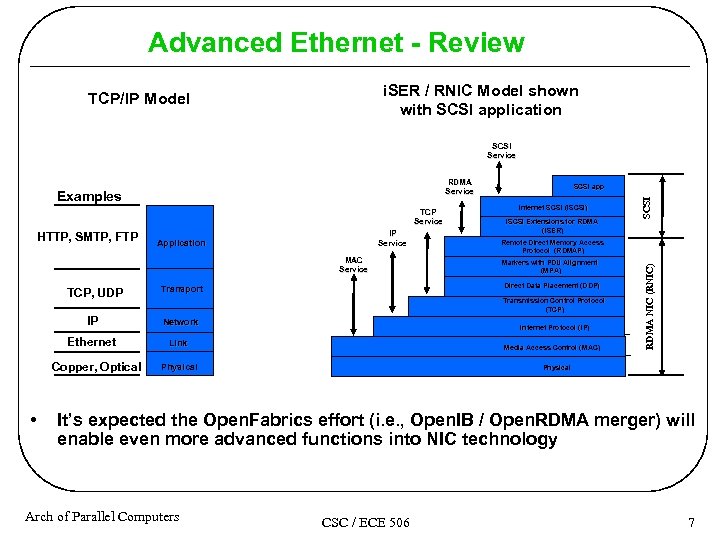

Advanced Ethernet - Review
• TCP/IP model, with examples:
  – Application: HTTP, SMTP, FTP
  – Transport: TCP, UDP
  – Network: IP
  – Link: Ethernet
  – Physical: Copper, Optical
• iSER / RNIC model, shown with a SCSI application (top to bottom):
  – SCSI application
  – Internet SCSI (iSCSI)
  – iSCSI Extensions for RDMA (iSER)
  – Remote Direct Memory Access Protocol (RDMAP)
  – Direct Data Placement (DDP)
  – Markers with PDU Alignment (MPA)
  – Transmission Control Protocol (TCP)
  – Internet Protocol (IP)
  – Media Access Control (MAC)
  – Physical
  – Service interfaces between the layers: SCSI, RDMA, TCP, IP, and MAC services
  – The RDMA NIC (RNIC) implements the RDMA (RDMAP, DDP, MPA), TCP, IP, and MAC layers in hardware
• It’s expected the OpenFabrics effort (i.e., OpenIB / OpenRDMA merger) will enable even more advanced functions in NIC technology

Advanced Ethernet Summary
• The iWARP technology, implemented as an RDMA Network Interface Card (RNIC), achieves zero-copy, RDMA, and protocol offload over existing TCP/IP networks
  – It was demonstrated that a 10 GbE-based RNIC can reduce the CPU processing overhead from 80-90% to less than 10% compared to its host-stack equivalent
  – Additionally, its achievable end-to-end latency is now 5 microseconds or less
• iWARP, together with the emerging low-latency (low hundreds of nanoseconds) 10 GbE switches, can also provide a powerful infrastructure for clustered computing, server-to-server processing, visualization and file systems
  – The advantages of iWARP technology include its ability to leverage the widely deployed TCP/IP infrastructure, its broad knowledge base, and its mature management and monitoring capabilities
  – In addition, an iWARP infrastructure is routable, eliminating the need for gateways to connect to the LAN or WAN Internet

DDP and RDMA
• IETF RFC 4296: http://rfc.net/rfc4296.html
• The central idea of general-purpose DDP is that a data sender will supplement the data it sends with placement information that allows the receiver's network interface to place the data directly at its final destination without any copying.
  – DDP can be used to steer received data to its final destination, without requiring layer-specific behavior for each different layer.
  – Data sent with such DDP information is said to be 'tagged'.
• The central components of the DDP architecture are the "buffer", which is an object with beginning and ending addresses, and a method (set()), which sets the value of an octet at an address.
  – In many cases, a buffer corresponds directly to a portion of host user memory. However, DDP does not depend on this; a buffer could be a disk file, or anything else that can be viewed as an addressable collection of octets.
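
A minimal Python sketch of the buffer abstraction and tagged placement described above. It assumes each tagged segment carries a buffer identifier (`tag`, standing in for a steering tag) and an absolute target address; the class and function names are hypothetical and are not an API defined by RFC 4296. Because every segment says where it belongs, segments can be placed in any arrival order.

```python
from typing import Dict


class Buffer:
    """An addressable range of octets [begin, end) with a set() method.

    The backing store here is a bytearray, but it could equally be host memory,
    a disk file, or anything else addressable by octet.
    """

    def __init__(self, begin: int, end: int) -> None:
        if end < begin:
            raise ValueError("end must not precede begin")
        self.begin, self.end = begin, end
        self._octets = bytearray(end - begin)

    def set(self, address: int, value: int) -> None:
        """Set the octet at `address` (an absolute address within the buffer)."""
        if not self.begin <= address < self.end:
            raise IndexError(f"address {address:#x} outside buffer")
        self._octets[address - self.begin] = value

    def contents(self) -> bytes:
        return bytes(self._octets)


def place_tagged(buffers: Dict[int, Buffer], tag: int, address: int, data: bytes) -> None:
    """Receiver side of a tagged segment: write each octet at its final address.

    No intermediate reassembly buffer is used, so segments may arrive in any order.
    """
    target = buffers[tag]
    for i, octet in enumerate(data):
        target.set(address + i, octet)


if __name__ == "__main__":
    buffers = {7: Buffer(0x1000, 0x1010)}            # hypothetical tag 7 -> 16-octet buffer
    place_tagged(buffers, 7, 0x1008, b"world")       # second segment arrives first
    place_tagged(buffers, 7, 0x1003, b"hello")
    print(buffers[7].contents())
```

In a hardware implementation the NIC performs the equivalent of `place_tagged` with DMA writes, so the host CPU never touches the payload.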

DDP and RDMA
• Remote Direct Memory Access (RDMA) extends the capabilities of DDP with two primary functions.
  – First, it adds the ability to read from buffers registered to a socket (RDMA Read).
    » This allows a client protocol to perform arbitrary, bidirectional data movement without involving the remote client.
    » When RDMA is implemented in hardware, arbitrary data movement can be performed without involving the remote host CPU at all.
  – Second, it specifies a transport-independent untagged message service (Send) with characteristics that are both very efficient to implement in hardware and convenient for client protocols.
• The RDMA architecture is patterned after the traditional model for device programming, where the client requests an operation using Send-like actions (programmed I/O), the server performs the necessary data transfers for the operation (DMA reads and writes), and notifies the client of completion.
  – The programmed I/O+DMA model efficiently supports a high degree of concurrency and flexibility for both the client and server, even when operations have a wide range of intrinsic latencies.
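
The programmed I/O+DMA pattern can be sketched as a toy, in-process Python model: the client issues a request with an untagged Send, the server moves the data with an RDMA Write into the client's registered buffer, and a second Send reports completion. `Node`, `send`, `rdma_write`, `rdma_read`, and `serve_one` are hypothetical names, and the request fields (`tag`, `offset`, `length`) are assumptions; this is not the verbs API.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    name: str
    regions: Dict[int, bytearray] = field(default_factory=dict)  # registered buffers, keyed by tag
    inbox: List[dict] = field(default_factory=list)              # received untagged Send messages


def send(dst: Node, message: dict) -> None:
    """Untagged Send: the message is queued for the receiving application to consume."""
    dst.inbox.append(message)


def rdma_write(dst: Node, tag: int, offset: int, data: bytes) -> None:
    """Tagged RDMA Write: data lands directly in dst's registered buffer; dst's code does not run."""
    region = dst.regions[tag]
    if offset < 0 or offset + len(data) > len(region):
        raise ValueError("write outside registered region")
    region[offset:offset + len(data)] = data


def rdma_read(src: Node, tag: int, offset: int, length: int) -> bytes:
    """Tagged RDMA Read: data is fetched from src's registered buffer; src's code does not run."""
    region = src.regions[tag]
    if offset < 0 or offset + length > len(region):
        raise ValueError("read outside registered region")
    return bytes(region[offset:offset + length])


def serve_one(server: Node, client: Node, storage: bytes) -> None:
    """Server loop body for the programmed I/O + DMA model."""
    request = server.inbox.pop(0)                        # 1. request arrived via Send
    start = request["offset"]
    data = storage[start:start + request["length"]]
    rdma_write(client, request["tag"], 0, data)          # 2. server performs the data transfer
    send(client, {"op": "done", "id": request["id"], "length": len(data)})  # 3. completion


if __name__ == "__main__":
    client, server = Node("client"), Node("server")
    client.regions[1] = bytearray(8)                     # client registers an 8-byte buffer as tag 1
    send(server, {"op": "read", "id": 42, "tag": 1, "offset": 2, "length": 5})
    serve_one(server, client, storage=b"0123456789")
    print(bytes(client.regions[1]), client.inbox)
```

Only the two Sends require the client's code to run; the bulk data movement in step 2 is placed without it, which is what lets many such operations be outstanding concurrently.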

OpenFabrics Alliance
• The OpenFabrics Alliance is an international organization composed of industry, academic and research groups that have developed a unified core of open source software stacks (OpenSTAC) leveraging RDMA architectures for both the Linux and Windows operating systems over both InfiniBand and Ethernet.
  – RDMA is a communications technique allowing data to be transmitted from the memory of one computer to the memory of another computer without passing through either device’s CPU, without needing extensive buffering, and without calling into an operating system kernel.
• The core OpenSTAC software supports all the well-known standard upper layer protocols such as MPI, IP, SDP, NFS, SRP, iSER, and RDS on top of Ethernet and InfiniBand (IB) infrastructures.
  – The OpenFabrics software and supporting services better enable low-latency InfiniBand and 10 GbE to deliver clustered computing, server-to-server processing, visualization and file system access.

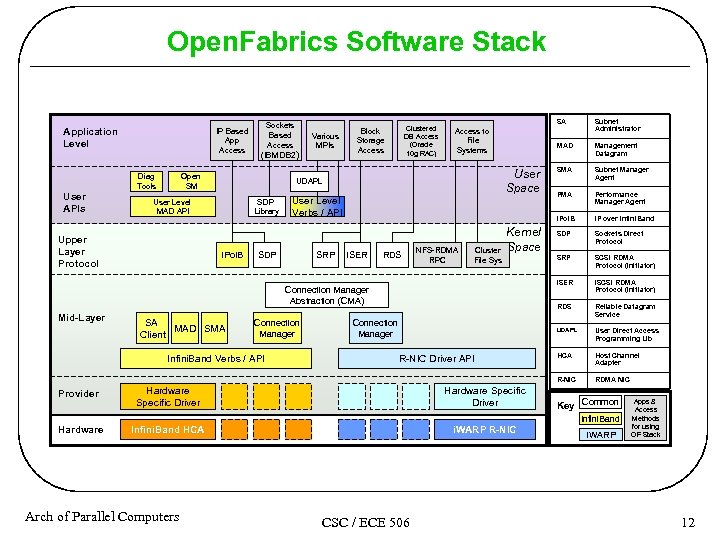

OpenFabrics Software Stack
[Figure: Apps & Access Methods for using OF Stack]
• Application level: Diag Tools, Open SM, IP Based App Access, Sockets Based Access (IBM DB2), Various MPIs, Clustered DB Access (Oracle 10g RAC), Access to File Systems, Block Storage Access
• User APIs (user space): User Level MAD API, UDAPL, SDP Library, User Level Verbs / API
• Upper Layer Protocol (kernel space): IPoIB, SDP, SRP, iSER, RDS, NFS-RDMA RPC, Cluster File Sys
• Mid-Layer: SA Client, MAD, SMA, Connection Manager, Connection Manager Abstraction (CMA)
• Kernel verbs: InfiniBand Verbs / API, R-NIC Driver API
• Provider: Hardware Specific Driver
• Hardware: InfiniBand HCA, iWARP R-NIC
• Key:
  – SA: Subnet Administrator
  – MAD: Management Datagram
  – SMA: Subnet Manager Agent
  – PMA: Performance Manager Agent
  – IPoIB: IP over InfiniBand
  – SDP: Sockets Direct Protocol
  – SRP: SCSI RDMA Protocol (Initiator)
  – iSER: iSCSI RDMA Protocol (Initiator)
  – RDS: Reliable Datagram Service
  – UDAPL: User Direct Access Programming Lib
  – HCA: Host Channel Adapter
  – R-NIC: RDMA NIC
  – Color legend: Common, InfiniBand, iWARP

IP over IB (IPoIB)
• IETF standard for mapping Internet protocols to InfiniBand
  – IETF IPoIB Working Group
• Covers
  – Fabric initialization
  – Multicast/Broadcast
  – Address resolution (IPv4/IPv6)
  – IP datagram encapsulation (IPv4/IPv6)
  – MIBs
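
As an illustration of the encapsulation item: RFC 4391, the IPoIB specification, prefixes every IP or ARP datagram with a 4-octet header made of a 16-bit EtherType followed by 16 reserved bits. The Python sketch below builds and strips that header and derives the IP MTU for datagram mode. The function names are hypothetical, and the 2048-byte IB MTU in the usage is an assumed example.

```python
import struct
from typing import Tuple

ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_ARP = 0x0806
ETHERTYPE_IPV6 = 0x86DD
IPOIB_HEADER_LEN = 4  # 16-bit type + 16 reserved bits


def encapsulate(ethertype: int, datagram: bytes) -> bytes:
    """Prefix a datagram with the IPoIB header (network byte order, reserved = 0)."""
    return struct.pack("!HH", ethertype, 0) + datagram


def decapsulate(frame: bytes) -> Tuple[int, bytes]:
    """Return (EtherType, datagram) from an IPoIB payload."""
    if len(frame) < IPOIB_HEADER_LEN:
        raise ValueError("frame shorter than IPoIB header")
    ethertype, _reserved = struct.unpack("!HH", frame[:IPOIB_HEADER_LEN])
    return ethertype, frame[IPOIB_HEADER_LEN:]


def ipoib_mtu(ib_mtu: int) -> int:
    """IP MTU in datagram mode: the IB payload MTU minus the encapsulation header."""
    return ib_mtu - IPOIB_HEADER_LEN


if __name__ == "__main__":
    frame = encapsulate(ETHERTYPE_IPV4, b"\x45\x00")   # start of an IPv4 header
    print(frame.hex(), decapsulate(frame), ipoib_mtu(2048))
```

With a 2048-byte IB MTU, the header leaves 2044 bytes for the IP datagram.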

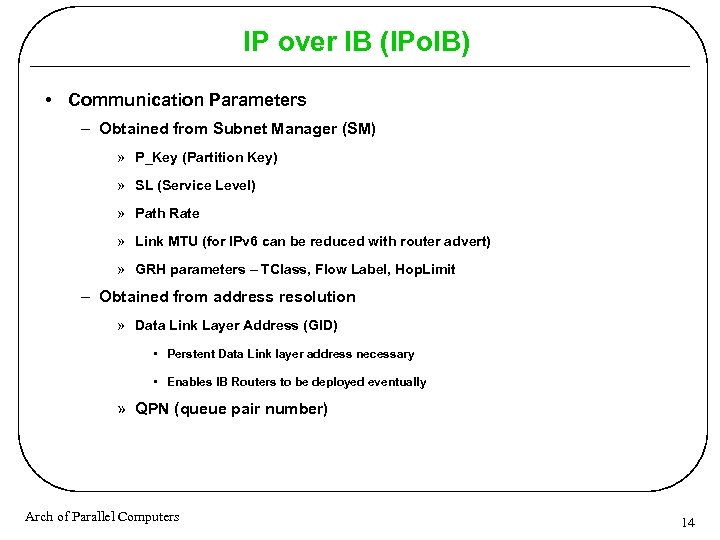

IP over IB (IPoIB)
• Communication Parameters
  – Obtained from Subnet Manager (SM)
    » P_Key (Partition Key)
    » SL (Service Level)
    » Path Rate
    » Link MTU (for IPv6 can be reduced with router advertisement)
    » GRH parameters – TClass, Flow Label, HopLimit
  – Obtained from address resolution
    » Data Link Layer Address (GID)
      • Persistent data link layer address necessary
      • Enables IB routers to be deployed eventually
    » QPN (queue pair number)
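
The P_Key obtained from the SM is a 16-bit value: the low 15 bits name the partition and the high bit marks full (1) versus limited (0) membership. Two ports can communicate only if their 15-bit keys match and at least one side is a full member. A minimal Python sketch of that check; the function names are hypothetical.

```python
FULL_MEMBER_BIT = 0x8000   # high bit: 1 = full member, 0 = limited member
PARTITION_MASK = 0x7FFF    # low 15 bits: partition key


def is_full_member(pkey: int) -> bool:
    return bool(pkey & FULL_MEMBER_BIT)


def pkeys_match(a: int, b: int) -> bool:
    """True if ports holding P_Keys a and b may communicate."""
    same_partition = (a & PARTITION_MASK) == (b & PARTITION_MASK)
    return same_partition and (is_full_member(a) or is_full_member(b))


if __name__ == "__main__":
    print(pkeys_match(0xFFFF, 0x7FFF))   # default partition: full member with limited member
    print(pkeys_match(0x7FFF, 0x7FFF))   # two limited members cannot talk
```

Limited membership lets, for example, many clients share a partition with a server while remaining unable to reach each other.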

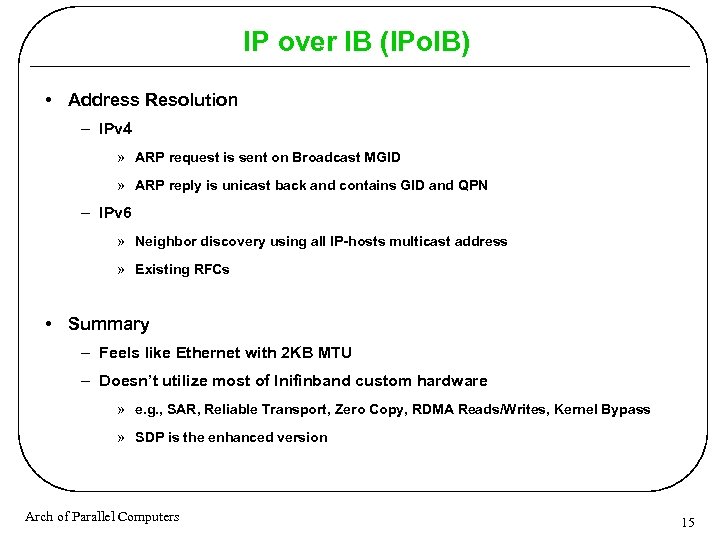

IP over IB (IPoIB)
• Address Resolution
  – IPv4
    » ARP request is sent on broadcast MGID
    » ARP reply is unicast back and contains GID and QPN
  – IPv6
    » Neighbor discovery using all IP-hosts multicast address
    » Existing RFCs
• Summary
  – Feels like Ethernet with 2 KB MTU
  – Doesn’t utilize most of InfiniBand’s custom hardware
    » e.g., SAR, Reliable Transport, Zero Copy, RDMA Reads/Writes, Kernel Bypass
    » SDP is the enhanced version
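
The GID and QPN returned in the ARP reply together form the 20-octet IPoIB link-layer address defined in RFC 4391: one reserved octet, a 24-bit QPN, and the 128-bit GID. A Python sketch that packs and parses it; the helper names and the example GID and QPN are hypothetical.

```python
import ipaddress
from typing import Tuple

IPOIB_HW_ADDR_LEN = 20


def make_hw_addr(qpn: int, gid: bytes, reserved: int = 0) -> bytes:
    """Pack reserved octet, 24-bit QPN and 16-octet GID into a 20-octet address."""
    if not 0 <= qpn < (1 << 24):
        raise ValueError("QPN must fit in 24 bits")
    if len(gid) != 16:
        raise ValueError("GID must be 16 octets")
    if not 0 <= reserved <= 0xFF:
        raise ValueError("reserved field is one octet")
    return bytes([reserved]) + qpn.to_bytes(3, "big") + gid


def parse_hw_addr(addr: bytes) -> Tuple[int, int, str]:
    """Return (reserved octet, QPN, GID in IPv6-style text)."""
    if len(addr) != IPOIB_HW_ADDR_LEN:
        raise ValueError("IPoIB hardware address must be 20 octets")
    qpn = int.from_bytes(addr[1:4], "big")
    gid = str(ipaddress.IPv6Address(addr[4:]))
    return addr[0], qpn, gid


if __name__ == "__main__":
    gid = ipaddress.IPv6Address("fe80::2:c903:0:1234").packed   # hypothetical port GID
    addr = make_hw_addr(0x48, gid)                               # hypothetical QPN
    print(addr.hex(), parse_hw_addr(addr))
```

Because the address includes the GID rather than a switch-assigned LID, it stays valid across subnet reconfiguration, which is what the "persistent data link layer address" requirement asks for.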

Sockets Direct Protocol (SDP)
• Based on Microsoft’s Winsock Direct Protocol
• SDP Feature Summary
  – Maps sockets SOCK_STREAM to RDMA semantics
  – Optimizations for transaction-oriented protocols
  – Optimizations for mixing of small and large messages
• Uses advanced InfiniBand features
  – Reliable Connected (RC) service
  – Uses RDMA Writes, Reads, and Sends
  – Supports Automatic Path Migration

SDP Terminology
• Data Source – Side of connection which is sourcing the ULP data to be transferred
• Data Sink – Side of connection which is receiving (sinking) the ULP data
• Data Transfer Mechanism – To move ULP data from Data Source to Data Sink (e.g., Bcopy, Receiver Initiated Zcopy, Read Zcopy)
• Flow Control Mode – State that the half connection is currently in (Combined, Pipelined, Buffered)
• Bcopy Threshold – If message length is under threshold, use Bcopy mechanism. Threshold is locally defined.

SDP Modes
• Flow Control Modes restrict data transfer mechanisms
• Buffered Mode – Used when receiver wishes to force all transfers to use the Bcopy mechanism
• Combined Mode – Used when receiver is not pre-posting buffers and uses peek/select interface (Bcopy or Read Zcopy, only one outstanding)
• Pipelined Mode – Highly optimized transfer mode: multiple write or read buffers outstanding, can use all data transfer mechanisms (Bcopy, Read Zcopy, Receiver Initiated Write Zcopy)
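
The mode rules above can be sketched as a selection function. This is an illustrative Python model, not logic taken from the SDP specification: it assumes that messages below the locally defined Bcopy threshold always use Bcopy, and that Receiver Initiated Write Zcopy is chosen only in Pipelined mode when the sink has advertised a buffer (the hypothetical `sink_buffer_advertised` flag); otherwise a long message falls back to Read Zcopy. The 8192-byte threshold in the usage is an arbitrary example.

```python
from enum import Enum


class Mode(Enum):
    BUFFERED = "Buffered"
    COMBINED = "Combined"
    PIPELINED = "Pipelined"


class Mechanism(Enum):
    BCOPY = "Bcopy"
    READ_ZCOPY = "Read Zcopy"
    WRITE_ZCOPY = "Receiver Initiated Write Zcopy"


def choose_mechanism(mode: Mode, length: int, bcopy_threshold: int,
                     sink_buffer_advertised: bool = False) -> Mechanism:
    """Pick a data transfer mechanism allowed by the current flow control mode."""
    # Buffered mode forces Bcopy; short messages also use Bcopy (local threshold policy).
    if mode is Mode.BUFFERED or length < bcopy_threshold:
        return Mechanism.BCOPY
    # Only Pipelined mode permits writing straight into a buffer the sink advertised.
    if mode is Mode.PIPELINED and sink_buffer_advertised:
        return Mechanism.WRITE_ZCOPY
    # Otherwise the sink pulls the source's buffer with an RDMA Read.
    return Mechanism.READ_ZCOPY


if __name__ == "__main__":
    for mode in Mode:
        print(mode.value, choose_mechanism(mode, 1 << 20, 8192, sink_buffer_advertised=True).value)
```

Keeping Bcopy for short messages reflects the trade-off on the next slide: for small transfers, copying through the private buffer pool is cheaper than setting up a zero-copy RDMA.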

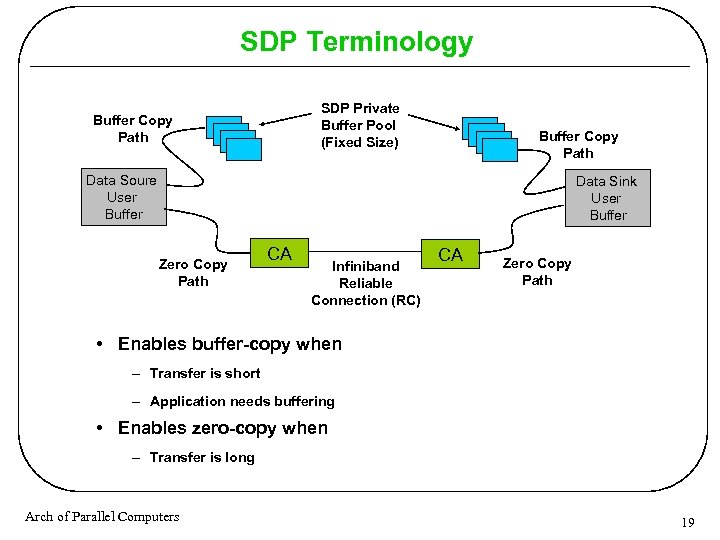

SDP Terminology
[Figure: Data Source and Data Sink user buffers, each behind a channel adapter (CA) on an InfiniBand Reliable Connection (RC); the buffer copy path runs through a fixed-size SDP private buffer pool, and the zero copy path goes directly between the user buffers]
• Enables buffer-copy when
  – Transfer is short
  – Application needs buffering
• Enables zero-copy when
  – Transfer is long

Network File System (NFS)
• Network File System (NFS) is a protocol originally developed by Sun Microsystems in 1984 and defined in RFCs 1094, 1813, and 3530 (which obsoletes 3010), as a distributed file system which allows a computer to access files over a network as easily as if they were on its local disks.
  – NFS is one of many protocols built on the Open Network Computing Remote Procedure Call system (ONC RPC)
• Version 2 of the protocol
  – originally operated entirely over UDP and was meant to keep the protocol stateless, with locking (for example) implemented outside of the core protocol
• Version 3 added:
  – support for 64-bit file sizes and offsets, to handle files larger than 4 GB;
  – support for asynchronous writes on the server, to improve write performance;
  – additional file attributes in many replies, to avoid the need to refetch them;
  – a READDIRPLUS operation, to get file handles and attributes along with file names when scanning a directory;
  – assorted other improvements.
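
The 4 GB limit follows from Version 2's 32-bit unsigned file sizes and offsets (as in RFC 1094); moving to 64-bit fields in Version 3 raises the addressable range as follows:

```latex
\begin{aligned}
2^{32}\ \text{bytes} &= 4\,294\,967\,296\ \text{bytes} = 4\ \text{GiB} && \text{(32-bit sizes and offsets, Version 2)}\\
2^{64}\ \text{bytes} &= 18\,446\,744\,073\,709\,551\,616\ \text{bytes} = 16\ \text{EiB} && \text{(64-bit sizes and offsets, Version 3)}
\end{aligned}
```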

Network File System (NFS)
• Version 4 (RFC 3530)
  – Influenced by AFS and CIFS, includes performance improvements, mandates strong security, and introduces a stateful protocol. Version 4 was the first version developed with the Internet Engineering Task Force (IETF) after Sun Microsystems handed over the development of the NFS protocols.
• Various side-band protocols have been added to NFS, including:
  – The byte-range advisory Network Lock Manager (NLM) protocol, which was added to support System V UNIX file locking APIs.
  – The remote quota reporting (RQUOTAD) protocol, which allows NFS users to view their data storage quotas on NFS servers.
• WebNFS is an extension to Version 2 and Version 3 which allows NFS to be more easily integrated into Web browsers and to enable operation through firewalls.

SCSI RDMA Protocol (SRP)
• SRP defines a SCSI protocol mapping onto the InfiniBand Architecture and/or functionally similar cluster protocols
• RDMA Consortium voted to create iSER instead of porting SRP to IP
  – SRP doesn’t have a wide following
  – SRP doesn’t have a discovery or management protocol
  – Version 2 of SRP hasn’t been updated for 1.5 years

iSCSI Extensions for RDMA (iSER)
• iSER combines SRP and iSCSI with new RDMA capabilities
• iSER is maintained as part of iSCSI in IETF
  – Recently extended to IB by IBM, Voltaire, HP, EMC, and others
• Benefits of adding iSER to IB
  – (Almost) the same storage protocol across all RDMA networks
    » Easier to train staff
    » Bridging products more straightforward
    » Motivates the storage community toward an iSCSI/iSER mentality and may help with acceptance on IP
  – Desire for a common discovery and management protocol across iSCSI, iSER/iWARP, and IP
    » i.e., the same management and discovery process and software to handle IP networks and IB networks

iSCSI Extensions for RDMA (iSER)
• iSCSI’s main performance deficiencies stem from TCP/IP
  – TCP is a complex protocol requiring significant processing
  – It is stream based, making it hard to separate data and headers
  – It requires copies that increase latency and CPU overhead
  – Its checksums are insufficient, requiring additional CRCs in the ULP
• iSER eliminates the bottlenecks through:
  – Zero copy using RDMA
  – CRC calculated by hardware
  – Working with message boundaries instead of streams
  – Transport protocol implemented in hardware (minimal CPU cycles per I/O)
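
For context on the CRC point: iSCSI (RFC 3720) protects headers and data with optional CRC32C digests, which a software initiator computes on the host CPU. The table-driven Python sketch below uses the reflected Castagnoli polynomial 0x82F63B78 and shows the kind of per-byte work that moving CRC calculation into hardware takes off the host; the function names are illustrative.

```python
from typing import List

CASTAGNOLI_REFLECTED = 0x82F63B78


def _make_table(poly: int = CASTAGNOLI_REFLECTED) -> List[int]:
    """Precompute the CRC of every possible byte value (reflected, LSB-first)."""
    table = []
    for byte in range(256):
        crc = byte
        for _ in range(8):
            crc = (crc >> 1) ^ poly if crc & 1 else crc >> 1
        table.append(crc)
    return table


_TABLE = _make_table()


def crc32c(data: bytes, crc: int = 0) -> int:
    """CRC32C of `data`; pass a previous result as `crc` to continue across buffers."""
    crc ^= 0xFFFFFFFF
    for b in data:
        crc = (crc >> 8) ^ _TABLE[(crc ^ b) & 0xFF]
    return crc ^ 0xFFFFFFFF


if __name__ == "__main__":
    print(hex(crc32c(b"123456789")))
```

The standard check value is `crc32c(b"123456789") == 0xE3069283`. Even with a lookup table, the loop touches every payload byte, which is why offloading it matters at 10 Gbps rates.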

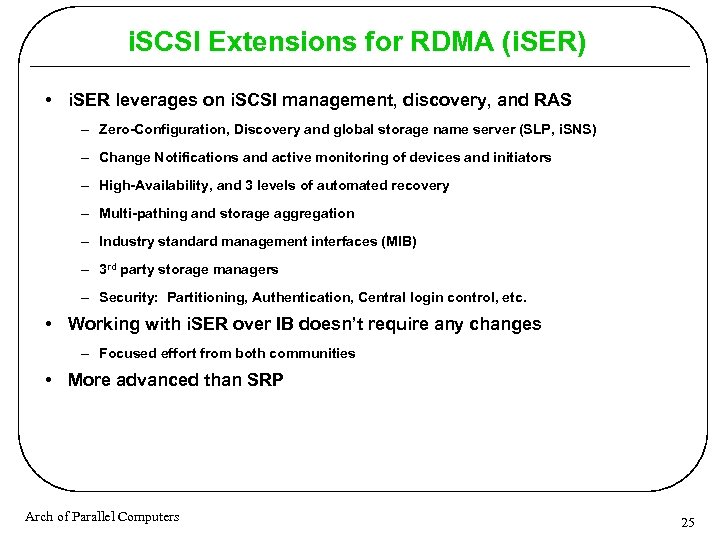

iSCSI Extensions for RDMA (iSER)
• iSER leverages iSCSI management, discovery, and RAS
  – Zero-configuration, discovery and global storage name server (SLP, iSNS)
  – Change notifications and active monitoring of devices and initiators
  – High availability, and 3 levels of automated recovery
  – Multi-pathing and storage aggregation
  – Industry-standard management interfaces (MIB)
  – 3rd-party storage managers
  – Security: partitioning, authentication, central login control, etc.
• Working with iSER over IB doesn’t require any changes
  – Focused effort from both communities
• More advanced than SRP

iSCSI Extensions for RDMA (iSER)
• iSCSI specification:
– http://www.ietf.org/rfc3720.txt
• iSER and DA Introduction:
– http://www.rdmaconsortium.org/home/iSER_DA_intro.pdf
• iSER specification:
– http://www.ietf.org/internet-drafts/draft-ietf-ips-iser-05.txt
• iSER over IB Overview:
– http://www.haifa.il.ibm.com/satran/ips/iSER-in-an-IB-network-V9.pdf


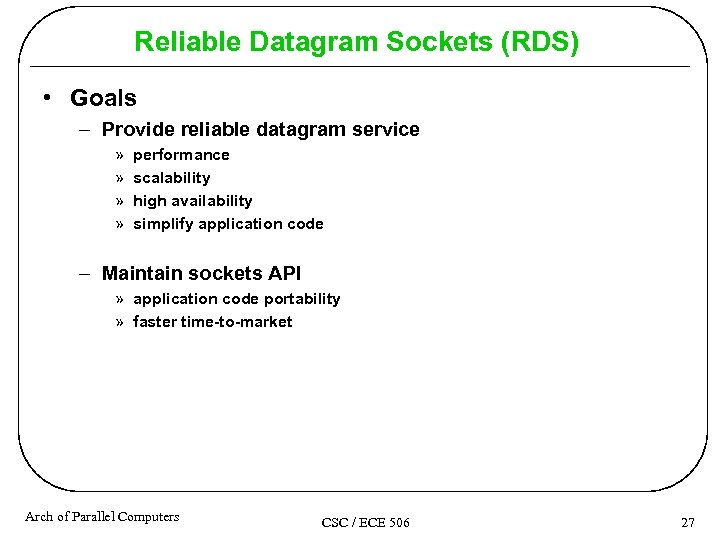

Reliable Datagram Sockets (RDS)
• Goals
– Provide reliable datagram service
» performance
» scalability
» high availability
» simplify application code
– Maintain sockets API
» application code portability
» faster time-to-market


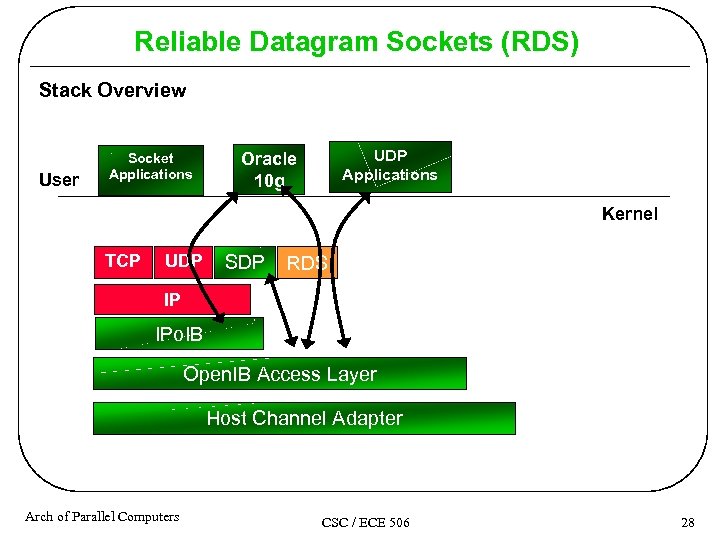

Reliable Datagram Sockets (RDS) Stack Overview (layers, top to bottom)
• User: Socket Applications, UDP Applications, Oracle 10g
• Kernel: TCP, UDP, SDP, RDS
– IP
– IPoIB
– OpenIB Access Layer
• Host Channel Adapter


Reliable Datagram Sockets (RDS)
• Application connectionless
– RDS maintains node-to-node connections
– IP addressing
– Uses the CMA (RDMA connection manager)
– On-demand connection setup
» connect on first sendmsg() or data recv
» disconnect on error or on a policy such as inactivity
– Connection setup/teardown is transparent to applications

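The on-demand policy above can be modeled in a few lines. This is a hypothetical Python sketch, not RDS kernel code: the class `OnDemandConnections`, its `idle_timeout` parameter, and the explicit `now` timestamps are assumptions made so the behavior is deterministic. The application only names a destination IP; a node connection is created on the first send or receive, and it is dropped on error or after a period of inactivity.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeConnection:
    peer_ip: str
    last_used: float


class OnDemandConnections:
    """Hypothetical model of RDS node-to-node connection management.

    Time is passed in explicitly (seconds) so the model is deterministic.
    """

    def __init__(self, idle_timeout: float) -> None:
        self.idle_timeout = idle_timeout
        self.conns: dict[str, NodeConnection] = {}
        self.setups = 0  # number of connection setups performed so far

    def _touch(self, peer_ip: str, now: float) -> NodeConnection:
        conn = self.conns.get(peer_ip)
        if conn is None:
            # First traffic to/from this node: set up a connection (RDS uses the CMA).
            conn = NodeConnection(peer_ip, now)
            self.conns[peer_ip] = conn
            self.setups += 1
        conn.last_used = now
        return conn

    def sendmsg(self, peer_ip: str, now: float) -> None:
        self._touch(peer_ip, now)  # connect on first sendmsg()

    def on_recv(self, peer_ip: str, now: float) -> None:
        self._touch(peer_ip, now)  # or on first data received from the peer

    def on_error(self, peer_ip: str) -> None:
        self.conns.pop(peer_ip, None)  # teardown; the next send reconnects

    def reap_idle(self, now: float) -> None:
        # Inactivity policy: drop connections unused for longer than idle_timeout.
        idle = [ip for ip, c in self.conns.items()
                if now - c.last_used > self.idle_timeout]
        for ip in idle:
            del self.conns[ip]
```

Because every entry point goes through `_touch`, the application never calls connect or close; it sees the same datagram-style interface whether or not a connection currently exists.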

Reliable Datagram Sockets (RDS)
• Data and Control Channel
– Uses RC (Reliable Connection) QPs for node-level connections
– Data and control QPs per session
– Selectable MTU
– b-copy (buffer-copy) send/recv
– H/W flow control

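As a rough illustration of how a selectable MTU interacts with b-copy send/recv, the hypothetical Python sketch below copies a datagram into MTU-sized buffers and copies it back out on the receive side. The functions `bcopy_fragments` and `bcopy_reassemble` are invented names; each `bytearray` merely stands in for a pre-registered buffer on the session's data QP. The assumption that copying is used to avoid registering the application's buffer for each message is an interpretation, not something the slide states.

```python
from __future__ import annotations

# Hypothetical model of b-copy sends; not RDS source code.
IB_MTUS = (256, 512, 1024, 2048, 4096)  # InfiniBand path MTU values in bytes


def bcopy_fragments(message: bytes, mtu: int) -> list[bytearray]:
    """Copy a datagram into MTU-sized send buffers.

    Each bytearray stands in for one pre-registered buffer posted on the
    data QP (assumption: copying avoids per-message memory registration).
    """
    if mtu not in IB_MTUS:
        raise ValueError(f"unsupported MTU {mtu}")
    return [bytearray(message[i:i + mtu]) for i in range(0, len(message), mtu)]


def bcopy_reassemble(fragments: list[bytearray]) -> bytes:
    """Receiver side: copy each received buffer out into one message."""
    return b"".join(bytes(f) for f in fragments)
```

A 5000-byte datagram becomes three buffers (2048, 2048, 904 bytes) at a 2048-byte MTU but only two at 4096, which is the trade-off a selectable MTU exposes: fewer, larger work requests versus smaller buffers.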

The End


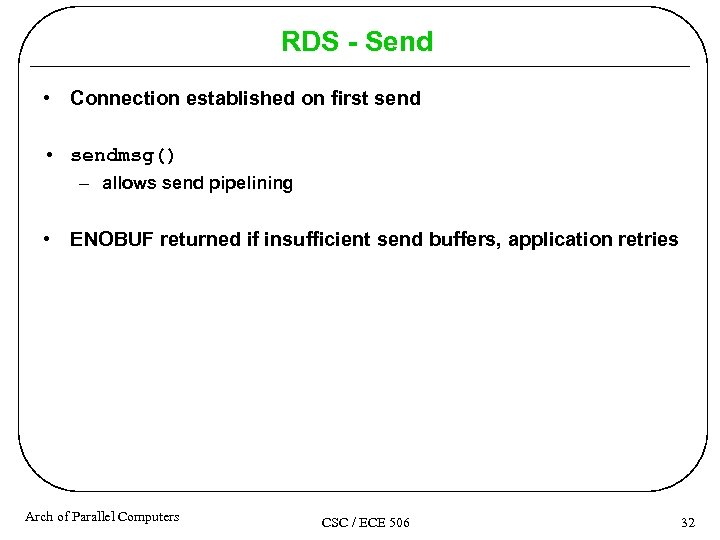

RDS - Send
• Connection established on first send
• sendmsg() allows send pipelining
• ENOBUFS is returned if there are insufficient send buffers; the application retries

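The "application retries" step can be sketched as a small wrapper. In this hypothetical Python sketch, `send` is any callable standing in for `sock.sendmsg` on an RDS socket; Python reports the kernel's ENOBUFS as an `OSError` whose `errno` is `errno.ENOBUFS`. The retry count, the exponential backoff, and the `FlakySender` stub are illustrative choices, not RDS behavior.

```python
import errno
import time
from typing import Callable


def send_with_retry(send: Callable[[bytes], int], payload: bytes,
                    max_tries: int = 5, backoff_s: float = 0.001) -> int:
    """Retry a send that fails with ENOBUFS (no free send buffers).

    Any other error, or ENOBUFS on the last attempt, is re-raised.
    """
    if max_tries < 1:
        raise ValueError("max_tries must be >= 1")
    attempt = 0
    while True:
        try:
            return send(payload)
        except OSError as exc:
            attempt += 1
            if exc.errno != errno.ENOBUFS or attempt >= max_tries:
                raise
            # Back off so in-flight sends can complete and free buffers.
            time.sleep(backoff_s * (2 ** (attempt - 1)))


class FlakySender:
    """Hypothetical stub: fails with ENOBUFS a fixed number of times."""

    def __init__(self, failures: int) -> None:
        self.failures = failures
        self.calls = 0

    def __call__(self, payload: bytes) -> int:
        self.calls += 1
        if self.calls <= self.failures:
            raise OSError(errno.ENOBUFS, "No buffer space available")
        return len(payload)
```

With `FlakySender(2)`, `send_with_retry` makes three calls and returns the payload length; a sender that keeps failing past `max_tries` surfaces the original ENOBUFS error to the caller.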

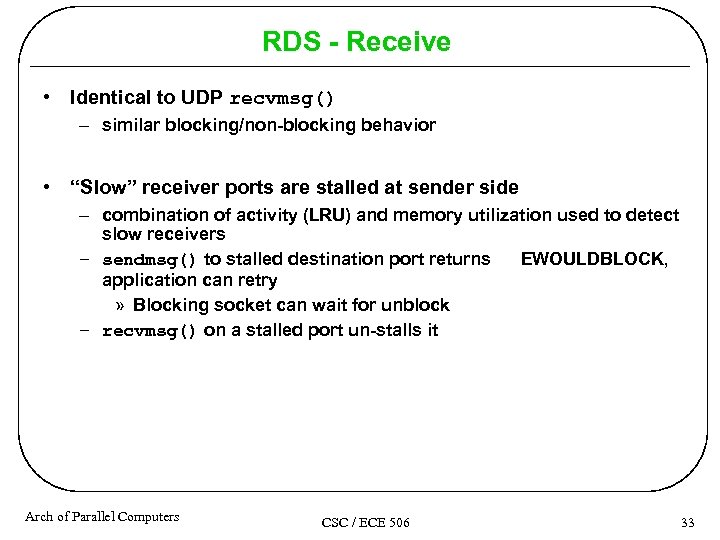

RDS - Receive
• Identical to UDP recvmsg()
– similar blocking/non-blocking behavior
• “Slow” receiver ports are stalled at the sender side
– a combination of activity (LRU) and memory utilization is used to detect slow receivers
– sendmsg() to a stalled destination port returns EWOULDBLOCK; the application can retry
» a blocking socket can wait for the port to unblock
– recvmsg() on a stalled port un-stalls it

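The stall/un-stall protocol can be pictured with a toy model. This hypothetical Python sketch simplifies detection to queue depth only (standing in for memory utilization) and omits the LRU activity signal; `StalledPortModel` and `queue_limit` are invented names. A stalled destination makes `sendmsg()` raise `BlockingIOError` with `errno.EWOULDBLOCK`, which is how Python surfaces that error, and a `recvmsg()` on the port clears the stall.

```python
from __future__ import annotations

import errno


class StalledPortModel:
    """Hypothetical model of RDS slow-receiver handling for one peer.

    Queue depth stands in for memory utilization; the LRU activity
    signal is omitted for brevity.
    """

    def __init__(self, queue_limit: int) -> None:
        self.queue_limit = queue_limit
        self.queues: dict[int, list[bytes]] = {}
        self.stalled: set[int] = set()

    def sendmsg(self, port: int, payload: bytes) -> int:
        if port in self.stalled:
            # Non-blocking caller sees EWOULDBLOCK and may retry later.
            raise BlockingIOError(errno.EWOULDBLOCK, "destination port stalled")
        queue = self.queues.setdefault(port, [])
        queue.append(payload)
        if len(queue) >= self.queue_limit:
            self.stalled.add(port)  # receiver looks slow: stall further sends
        return len(payload)

    def recvmsg(self, port: int) -> bytes | None:
        queue = self.queues.get(port)
        if not queue:
            return None  # nothing queued in this model
        self.stalled.discard(port)  # recvmsg() on a stalled port un-stalls it
        return queue.pop(0)
```

A blocking socket would wait instead of raising; in this model that corresponds to retrying `sendmsg()` after the receiver has called `recvmsg()`.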

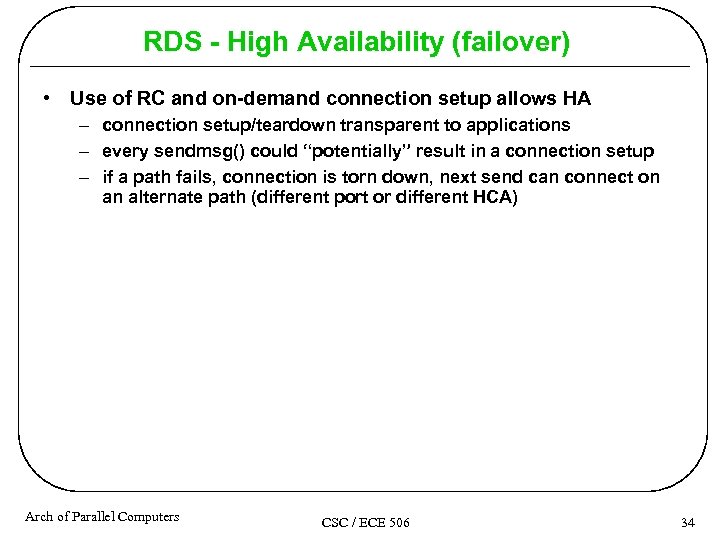

RDS - High Availability (failover)
• Use of RC and on-demand connection setup allows HA
– connection setup/teardown is transparent to applications
– every sendmsg() could “potentially” result in a connection setup
– if a path fails, the connection is torn down, and the next send can connect on an alternate path (different port or different HCA)

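The failover behavior follows directly from on-demand setup, as this hypothetical Python sketch shows. `FailoverConnection`, its list of `(hca, port)` paths, and the first-live-path selection rule are assumptions for illustration; the point is that a failure only clears the current connection, and the next `sendmsg()` transparently sets one up on a surviving port or HCA.

```python
from __future__ import annotations


class FailoverConnection:
    """Hypothetical sketch of RDS-style failover via on-demand reconnection.

    `paths` lists (hca, port) pairs that can reach the peer, in preference
    order. The payload is not transmitted in this model; sendmsg() just
    returns the path it would use.
    """

    def __init__(self, paths: list[tuple[str, int]]) -> None:
        self.paths = paths
        self.down: set[tuple[str, int]] = set()
        self.current: tuple[str, int] | None = None

    def path_failed(self, path: tuple[str, int]) -> None:
        self.down.add(path)
        if self.current == path:
            self.current = None  # teardown; invisible to the application

    def sendmsg(self, payload: bytes) -> tuple[str, int]:
        if self.current is None:
            # Any send may trigger connection setup on a surviving path.
            live = [p for p in self.paths if p not in self.down]
            if not live:
                raise ConnectionError("no path to peer")
            self.current = live[0]
        return self.current
```

Failing `("hca0", 1)` moves traffic to the second port on the same HCA; failing that port as well moves it to a different HCA, without the application ever reconnecting explicitly.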

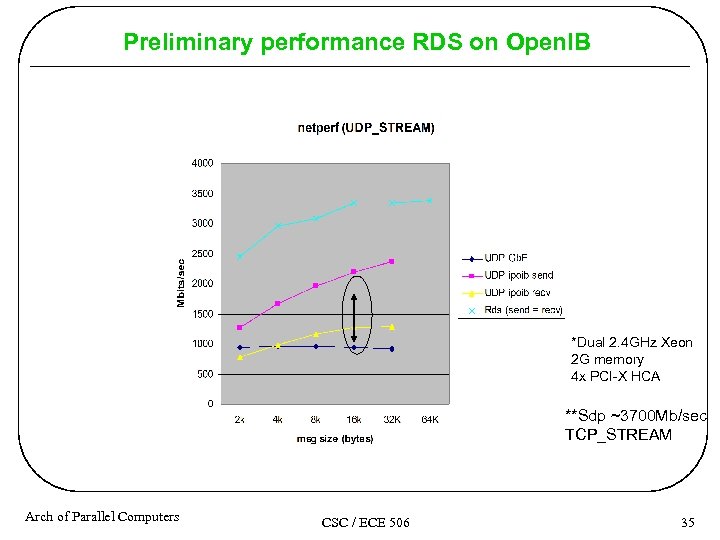

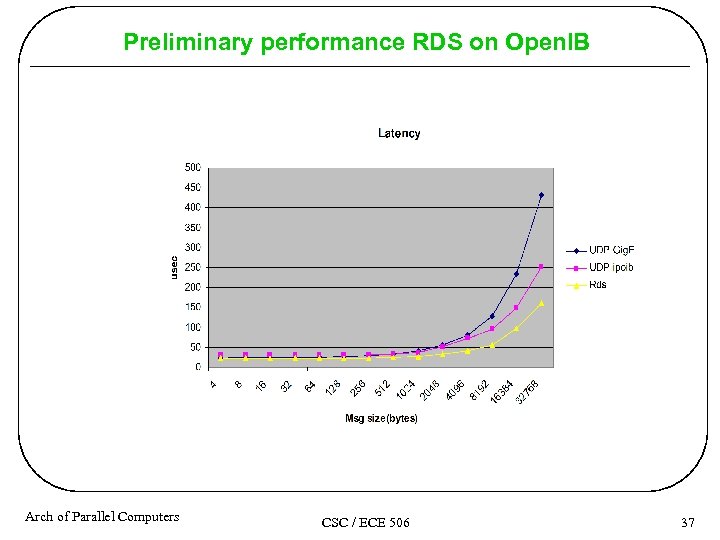

Preliminary performance: RDS on OpenIB
* Dual 2.4 GHz Xeon, 2 GB memory, 4x PCI-X HCA
** SDP: ~3700 Mb/sec (TCP_STREAM)


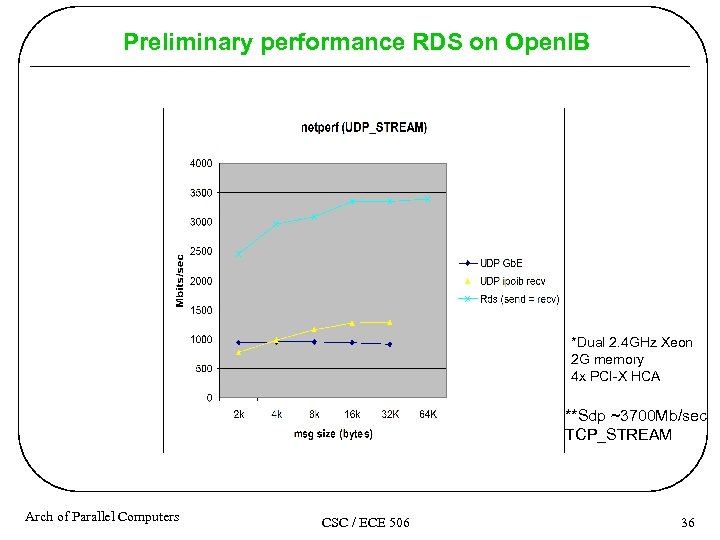

Preliminary performance: RDS on OpenIB
* Dual 2.4 GHz Xeon, 2 GB memory, 4x PCI-X HCA
** SDP: ~3700 Mb/sec (TCP_STREAM)


Preliminary performance: RDS on OpenIB
