- Number of slides: 49

Approaches to Detection of Source Code Plagiarism amongst Students
Mike Joy
23 November 2010

Overview of Talk
1) Process for detecting plagiarism
2) Technologies
3) Establishing the facts

Part 1 – Process
Four Stages
• Collection
• Detection
• Confirmation
• Investigation
From Culwin and Lancaster (2002)

Stage 1: Collection
• Get all documents together online
  – so they can be processed
  – formats?
  – security?
• BOSS (Warwick)
• Coursemaster (Nottingham)
• Managed Learning Environment

Stage 2: Detection
• Compare with other submissions
• Compare with external documents
  – essay-based assignments?
• We’ll come back to this later
  – Technology

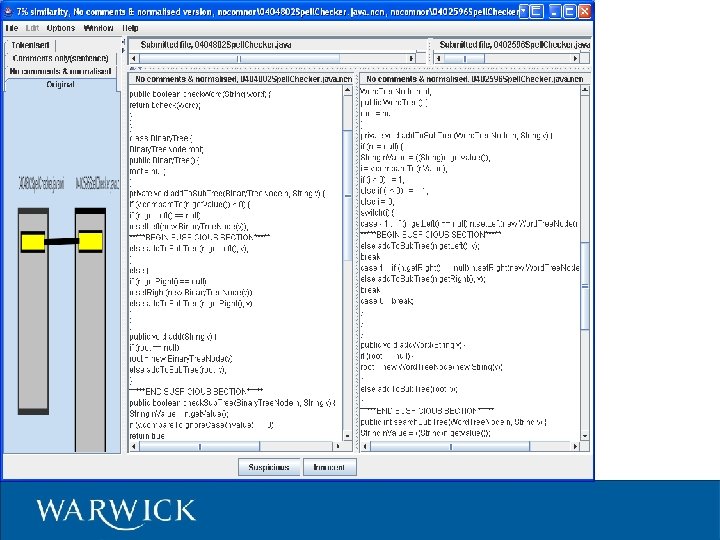

Stage 3: Confirmation
• Software tool says “A and B similar”
  – Are they?
• Never rely on a computer program!
  – Requires expert human judgement
  – Evidence must be compelling
  – Might go to court

Stage 4: Investigation
• A from B, or B from A, or joint work?
• If A from B, did B know?
  – open networked file / printer output
• Did the culprit/s understand?
• Was code written externally?
• University processes must be followed
• Establishing the facts

Why is this Interesting?
• How do you compare two programs?
  – This is an algorithm question
  – Stages 2 and 3: detection and confirmation
• How do you use the results (of a comparison) to educate students?
  – This is a pedagogic question
  – Stage 4, and before stage 1!

Digression: Essays
• Plagiarism in essays is easier to detect
• Lots of “tricks” a lecturer can use!
  – Google search on phrases
  – Abnormal style
  – ... etc.
• Software tools
  – Let's have a look...

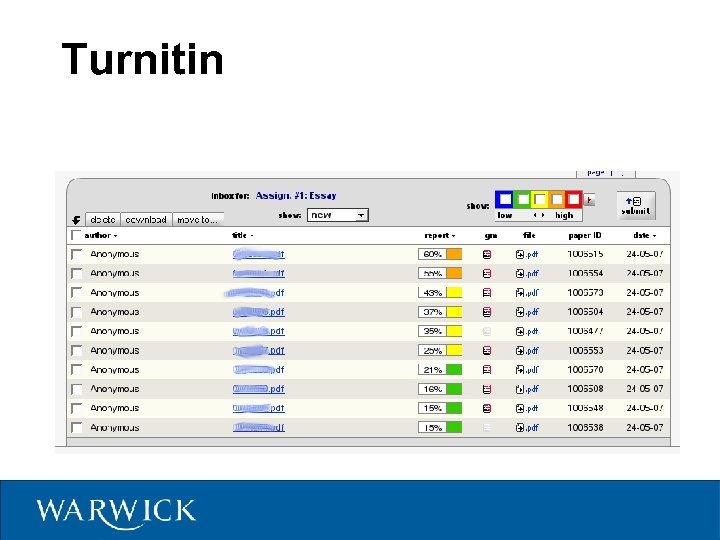

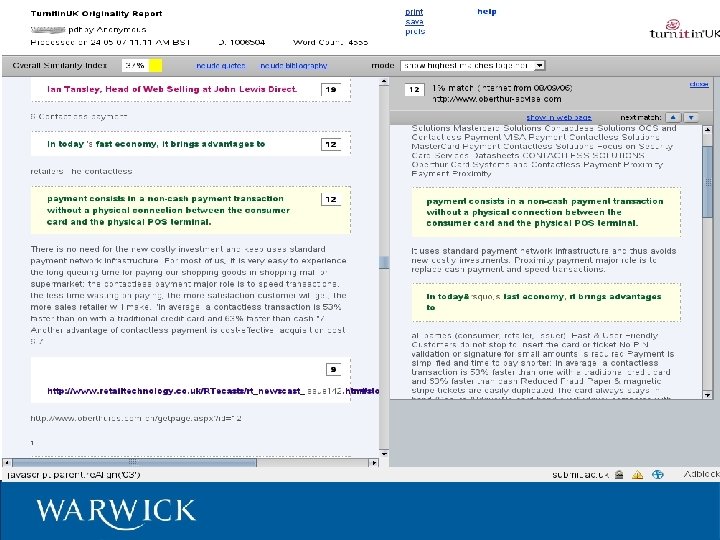

Turnitin

Part 2 – Technologies
• Collection
• Detection
• Confirmation
• Investigation

Why not use Turnitin?
• It won’t work!
  – String matching algorithm inappropriate
  – Database does not contain code
• Commercial involvement
  – e.g. Black Duck Software

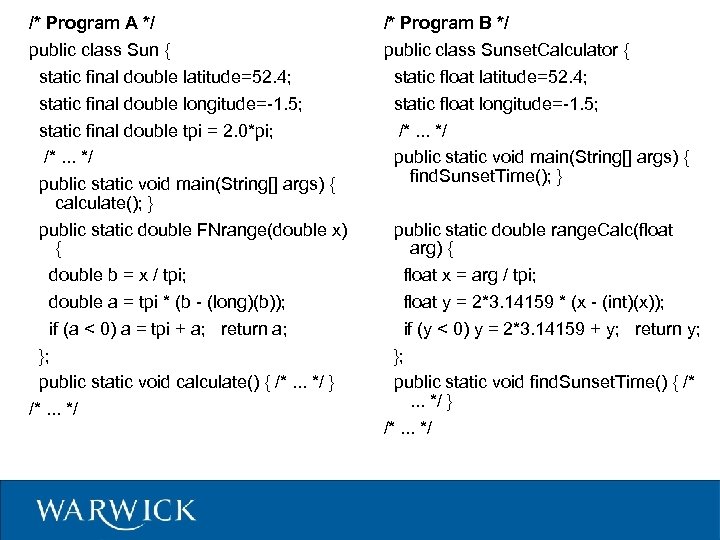

/* Program A */
public class Sun {
    static final double latitude=52.4;
    static final double longitude=-1.5;
    static final double tpi = 2.0*pi;
    /*...*/
    public static void main(String[] args) {
        calculate();
    }
    public static double FNrange(double x) {
        double b = x / tpi;
        double a = tpi * (b - (long)(b));
        if (a < 0) a = tpi + a;
        return a;
    };
    public static void calculate() { /*...*/ }
    /*...*/
/* Program B */
public class SunsetCalculator {
    static float latitude=52.4;
    static float longitude=-1.5;
    /*...*/
    public static void main(String[] args) {
        findSunsetTime();
    }
    public static double rangeCalc(float arg) {
        float x = arg / tpi;
        float y = 2*3.14159 * (x - (int)(x));
        if (y < 0) y = 2*3.14159 + y;
        return y;
    };
    public static void findSunsetTime() { /*...*/ }
    /*...*/

Is This Plagiarism?
• Is Program B derived from Program A in a manner which is “plagiarism”?
• Maybe
  – Structure is similar – cosmetic changes
  – But the algorithm is public domain
  – Maybe 6 derived from 5, maybe the other way round

History (1)
Attribute counting systems (Halstead, 1972; Ottenstein, 1976):
• Numbers of unique operators
• Numbers of unique operands
• Total numbers of operator occurrences
• Total numbers of operand occurrences
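The four attribute counts can be illustrated with a minimal Java sketch. It is not the method of any tool named in this talk: the class `AttributeCounter`, its small keyword list, and the rule "identifiers and numbers are operands, everything else is an operator" are simplifying assumptions made for illustration.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: Halstead-style attribute counts for a code fragment. */
final class AttributeCounter {
    // Assumption: a tiny keyword set counted as operators; a real tool covers the whole language.
    private static final Set<String> KEYWORDS =
            Set.of("if", "else", "for", "while", "return", "int", "double", "float", "long");

    // Words, numbers, two-character operators, then any other single non-space symbol.
    private static final Pattern TOKEN = Pattern.compile(
            "[A-Za-z_]\\w*|\\d+(?:\\.\\d+)?|[-+*/<>=!]=|\\+\\+|--|&&|\\|\\||\\S");

    /** Returns {unique operators, unique operands, total operators, total operands}. */
    static int[] count(String source) {
        Set<String> operators = new HashSet<>();
        Set<String> operands = new HashSet<>();
        int totalOperators = 0;
        int totalOperands = 0;
        Matcher m = TOKEN.matcher(source);
        while (m.find()) {
            String t = m.group();
            char first = t.charAt(0);
            boolean word = Character.isLetter(first) || first == '_';
            boolean number = Character.isDigit(first);
            if (number || (word && !KEYWORDS.contains(t))) {
                operands.add(t);        // identifiers and numeric literals
                totalOperands++;
            } else {
                operators.add(t);       // keywords and symbols
                totalOperators++;
            }
        }
        return new int[] {operators.size(), operands.size(), totalOperators, totalOperands};
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(count("total = total + 1;")));
        System.out.println(Arrays.toString(count("x = x + 2;")));
    }
}
```

Both calls in `main` give the same four counts, because renaming identifiers and changing constants does not alter them. The same property makes the counts a coarse signal: unrelated programs can also share a profile.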

History (2)
Structure-based systems:
  – Each program is converted into token strings (or something similar)
  – Token streams are compared for determining similar source-code fragments
  – Tools: YAP3, JPlag, Plague, MOSS, and Sherlock

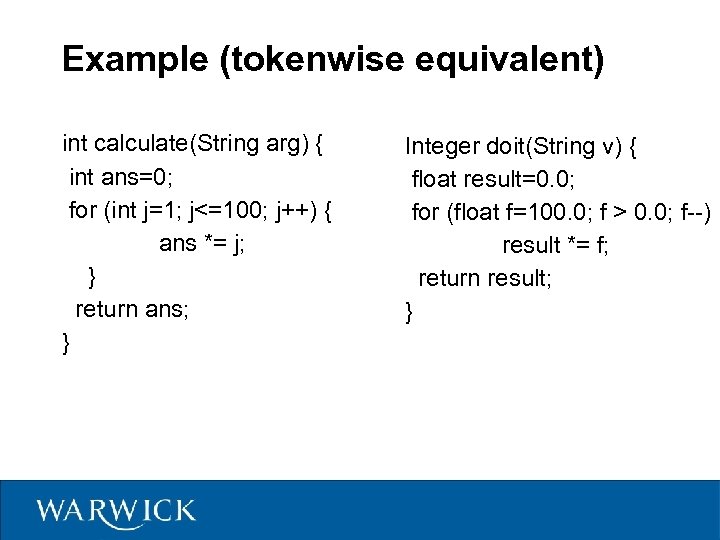

Example (tokenwise equivalent)
int calculate(String arg) {
    int ans=0;
    for (int j=1; j<=100; j++) {
        ans *= j;
    }
    return ans;
}
Integer doit(String v) {
    float result=0.0;
    for (float f=100.0; f > 0.0; f--)
        result *= f;
    return result;
}

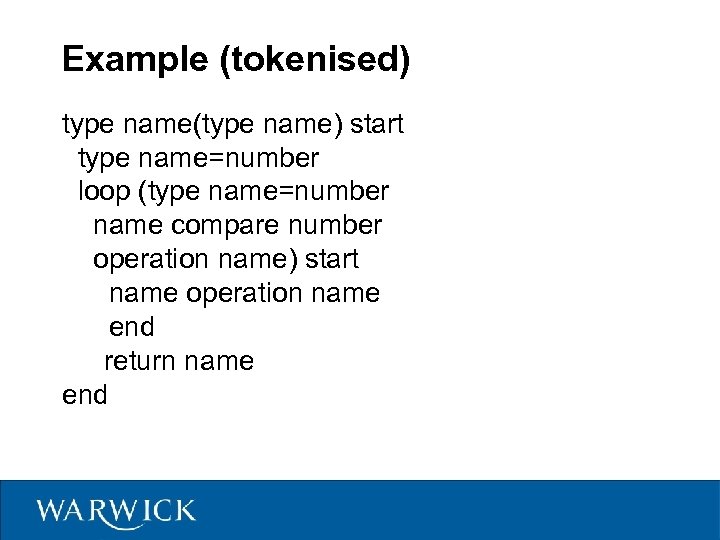

Example (tokenised)
type name(type name) start
    type name=number
    loop (type name=number name compare number operation name) start
        name operation name
    end
    return name
end
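A rough Java sketch of this kind of tokenisation follows. The class `TokenNormalizer`, its type list, and its token classes are assumptions made for illustration and are not taken from any of the tools discussed. It is simpler than the slide's scheme in two ways: it emits `name operation` for `j++` instead of reordering, and it does not insert `start`/`end` around a loop body written without braces.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: maps source text to a stream of coarse token classes. */
final class TokenNormalizer {
    // Assumption: only these words count as types in this sketch.
    private static final Set<String> TYPES =
            Set.of("int", "float", "double", "long", "String", "Integer");
    private static final Set<String> LOOPS = Set.of("for", "while");
    private static final Set<String> COMPARISONS = Set.of("<", ">", "<=", ">=", "==", "!=");

    private static final Pattern TOKEN = Pattern.compile(
            "[A-Za-z_]\\w*|\\d+(?:\\.\\d+)?|\\+\\+|--|[-+*/]=|[<>=!]=|\\S");

    static String tokenise(String source) {
        List<String> out = new ArrayList<>();
        Matcher m = TOKEN.matcher(source);
        while (m.find()) {
            String t = m.group();
            char first = t.charAt(0);
            if (t.equals(";")) {
                continue;                          // separators are dropped
            } else if (TYPES.contains(t)) {
                out.add("type");
            } else if (LOOPS.contains(t)) {
                out.add("loop");
            } else if (t.equals("return")) {
                out.add("return");
            } else if (Character.isLetter(first) || first == '_') {
                out.add("name");                   // any other identifier
            } else if (Character.isDigit(first)) {
                out.add("number");
            } else if (t.equals("{")) {
                out.add("start");
            } else if (t.equals("}")) {
                out.add("end");
            } else if (COMPARISONS.contains(t)) {
                out.add("compare");
            } else if (t.equals("=") || t.equals("(") || t.equals(")")) {
                out.add(t);                        // kept literally
            } else {
                out.add("operation");              // ++, --, *=, +, ...
            }
        }
        return String.join(" ", out);
    }

    public static void main(String[] args) {
        System.out.println(tokenise("for (int j=1; j<=100; j++) { ans *= j; }"));
        System.out.println(tokenise("for (float f=100.0; f > 0.0; f--) { result *= f; }"));
    }
}
```

The two loops in `main` produce identical token strings even though every identifier, type, constant and operator differs.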

Detectors
• MOSS (Alex Aiken: Berkeley/Stanford, USA, 1994)
• JPlag (Guido Malpohl: Karlsruhe, Germany)
  – Java only
  – Programs must compile?
• Sherlock (Warwick, UK) (Joy and Luck, 1999)

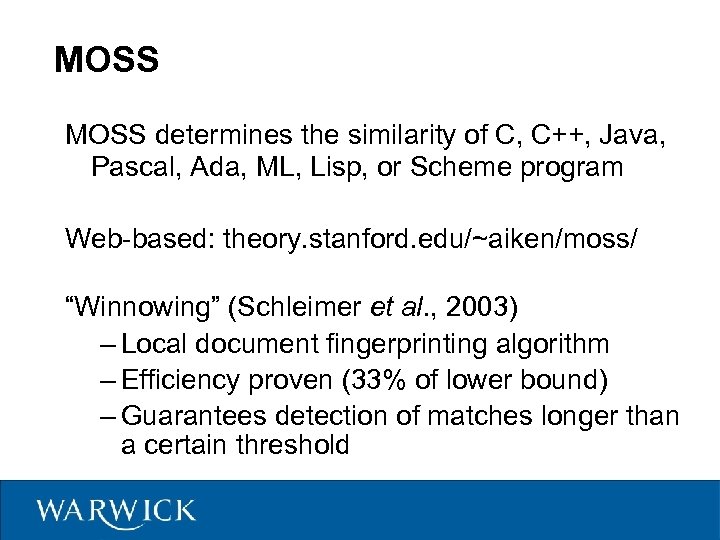

MOSS
• Determines the similarity of C, C++, Java, Pascal, Ada, ML, Lisp, or Scheme programs
• Web-based: theory.stanford.edu/~aiken/moss/
• “Winnowing” (Schleimer et al., 2003)
  – Local document fingerprinting algorithm
  – Efficiency proven (within 33% of lower bound)
  – Guarantees detection of matches longer than a certain threshold
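The winnowing idea can be sketched in Java as follows: hash every k-gram, slide a window of w hashes, and keep the minimum of each window, taking the rightmost on ties. This is a minimal illustration and not MOSS itself. The class `Winnowing`, the use of `String.hashCode` in place of a rolling hash, and the parameter values are assumptions.

```java
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.Set;

/** Hypothetical helper: winnowing-style fingerprint selection. */
final class Winnowing {
    /** Hash of every k-gram; String.hashCode stands in for a rolling hash (assumption). */
    static int[] kgramHashes(String text, int k) {
        int n = Math.max(0, text.length() - k + 1);
        int[] hashes = new int[n];
        for (int i = 0; i < n; i++) {
            hashes[i] = text.substring(i, i + k).hashCode();
        }
        return hashes;
    }

    /** Positions of the hashes selected as fingerprints, in order of first selection. */
    static Set<Integer> fingerprintPositions(int[] hashes, int w) {
        Set<Integer> picked = new LinkedHashSet<>();
        for (int start = 0; start + w <= hashes.length; start++) {
            int min = start;
            for (int i = start + 1; i < start + w; i++) {
                if (hashes[i] <= hashes[min]) {
                    min = i;            // "<=" keeps the rightmost minimum on ties
                }
            }
            picked.add(min);            // the set ignores a position chosen by an earlier window
        }
        return picked;
    }

    /** Selected hash values for a text, ignoring positions. */
    static Set<Integer> fingerprints(String text, int k, int w) {
        int[] hashes = kgramHashes(text, k);
        Set<Integer> result = new HashSet<>();
        for (int p : fingerprintPositions(hashes, w)) {
            result.add(hashes[p]);
        }
        return result;
    }

    public static void main(String[] args) {
        int[] hashes = {77, 74, 42, 17, 98, 50, 17, 98, 8, 88, 67, 39, 77, 74, 42, 17, 98};
        System.out.println(fingerprintPositions(hashes, 4));
    }
}
```

With k-gram length k and window size w, any shared substring of at least w + k - 1 characters contains one complete window of identical hashes in both documents, so both select the same minimum from it. That is the "certain threshold" in the guarantee above.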

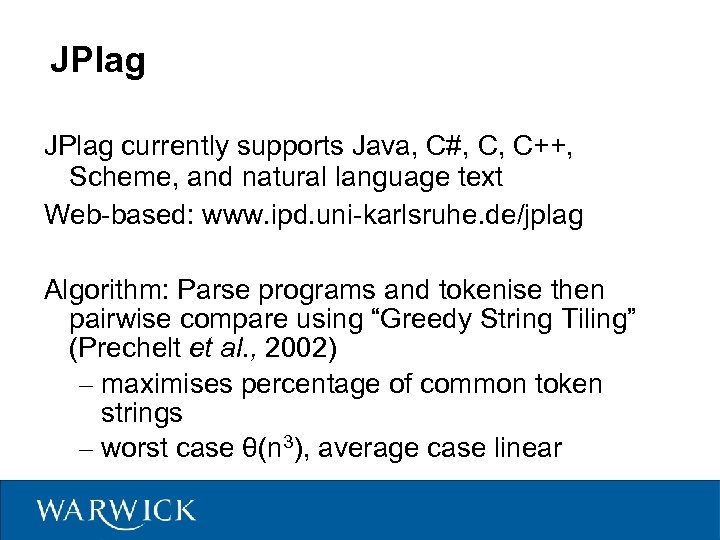

JPlag
• Currently supports Java, C#, C, C++, Scheme, and natural language text
• Web-based: www.ipd.uni-karlsruhe.de/jplag
• Algorithm: parse programs and tokenise, then pairwise compare using “Greedy String Tiling” (Prechelt et al., 2002)
  – maximises percentage of common token strings
  – worst case Θ(n³), average case linear
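A naive Java sketch of Greedy String Tiling is given below. It repeatedly finds the longest matches between unmarked tokens and marks them as tiles until no match of at least `minMatch` tokens remains. This is not JPlag's implementation, which is optimised; the class `GreedyStringTiling`, the `minMatch` parameter name, and the similarity normalisation (twice the covered tokens divided by the total token count) are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: naive Greedy String Tiling over two token arrays. */
final class GreedyStringTiling {
    /** Number of tokens covered by tiles of length >= minMatch (minMatch >= 1). */
    static int coveredTokens(String[] a, String[] b, int minMatch) {
        boolean[] markedA = new boolean[a.length];
        boolean[] markedB = new boolean[b.length];
        int covered = 0;
        int maxMatch;
        do {
            maxMatch = minMatch;
            List<int[]> matches = new ArrayList<>();   // {startA, startB, length}
            for (int i = 0; i < a.length; i++) {
                for (int j = 0; j < b.length; j++) {
                    int len = 0;
                    while (i + len < a.length && j + len < b.length
                            && a[i + len].equals(b[j + len])
                            && !markedA[i + len] && !markedB[j + len]) {
                        len++;
                    }
                    if (len > maxMatch) {              // longer match found: drop shorter ones
                        matches.clear();
                        maxMatch = len;
                    }
                    if (len == maxMatch) {
                        matches.add(new int[] {i, j, len});
                    }
                }
            }
            for (int[] m : matches) {
                boolean free = true;                   // skip matches occluded in this round
                for (int k = 0; k < m[2]; k++) {
                    if (markedA[m[0] + k] || markedB[m[1] + k]) {
                        free = false;
                        break;
                    }
                }
                if (!free) {
                    continue;
                }
                for (int k = 0; k < m[2]; k++) {       // mark the tile in both sequences
                    markedA[m[0] + k] = true;
                    markedB[m[1] + k] = true;
                }
                covered += m[2];
            }
        } while (maxMatch > minMatch);
        return covered;
    }

    /** Assumed normalisation: share of all tokens that lie inside tiles. */
    static double similarity(String[] a, String[] b, int minMatch) {
        if (a.length + b.length == 0) {
            return 0.0;
        }
        return 2.0 * coveredTokens(a, b, minMatch) / (a.length + b.length);
    }

    public static void main(String[] args) {
        String[] a = {"A", "B", "C", "D", "E"};
        String[] b = {"X", "C", "D", "E", "Y", "A", "B"};
        System.out.println(similarity(a, b, 2));
    }
}
```

In `main`, the tiles `C D E` and `A B` are both found even though their order differs between the sequences, which is why tiling copes with reordered code blocks.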

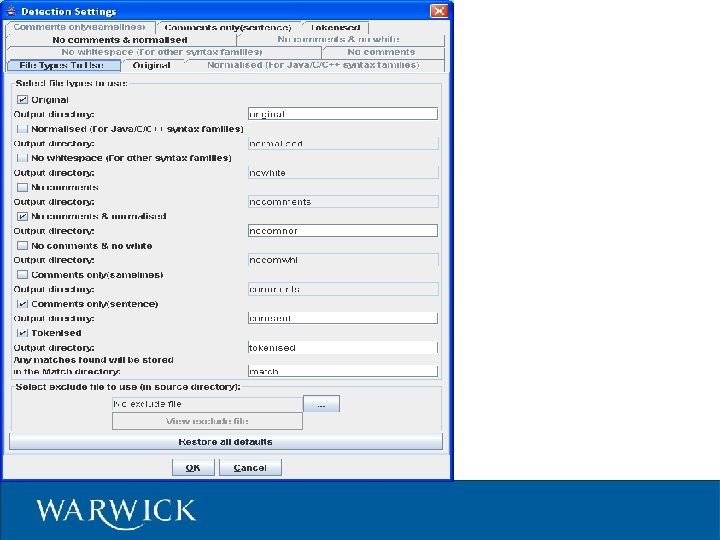

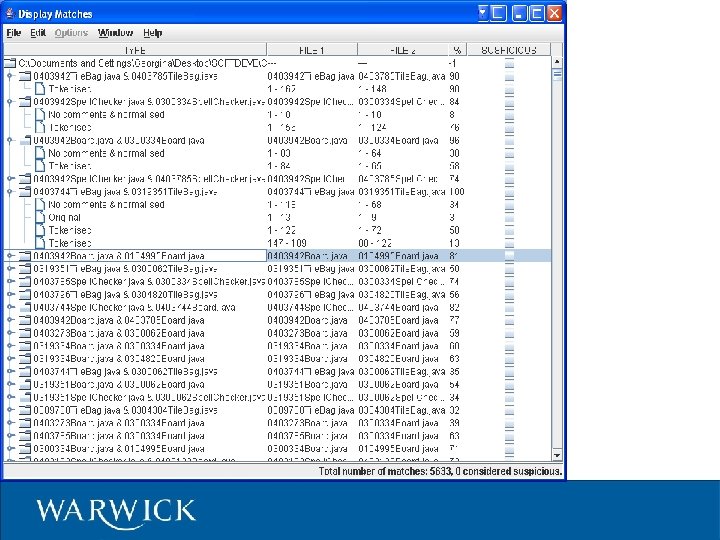

Sherlock
• Developed at the University of Warwick Department of Computer Science
• Open-source application coded in Java
• Sherlock detects plagiarism on source-code and natural language assignments
• BOSS home page: www.boss.org.uk
• Preprocesses code (not a full parse!) then simple string comparison
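The general shape of "preprocess, then compare strings" might look like the Java sketch below. It is not Sherlock's code or algorithm: the class `SimpleLineCompare`, the regex-based comment stripping (which ignores comment markers inside string literals), and the "longest run of common lines" measure are all assumptions chosen to keep the example short.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: normalise source lines, then compare them as plain strings. */
final class SimpleLineCompare {
    /** Strips comments and all whitespace; returns the non-empty normalised lines. */
    static List<String> normalise(String source) {
        String noComments = source
                .replaceAll("(?s)/\\*.*?\\*/", " ")    // block comments
                .replaceAll("//[^\\n]*", " ");         // line comments
        List<String> lines = new ArrayList<>();
        for (String line : noComments.split("\\R")) {
            String squeezed = line.replaceAll("\\s+", "");
            if (!squeezed.isEmpty()) {
                lines.add(squeezed);
            }
        }
        return lines;
    }

    /** Length of the longest run of consecutive normalised lines common to both sources. */
    static int longestCommonRun(String a, String b) {
        List<String> x = normalise(a);
        List<String> y = normalise(b);
        int[][] run = new int[x.size() + 1][y.size() + 1];
        int best = 0;
        for (int i = 1; i <= x.size(); i++) {
            for (int j = 1; j <= y.size(); j++) {
                if (x.get(i - 1).equals(y.get(j - 1))) {
                    run[i][j] = run[i - 1][j - 1] + 1;   // extend the run ending here
                    best = Math.max(best, run[i][j]);
                }
            }
        }
        return best;
    }

    public static void main(String[] args) {
        String a = "int x = 1; // counter\nx++;\nreturn x;";
        String b = "/* copied */\nint x=1;\n  x++ ;\nprint(x);";
        System.out.println(longestCommonRun(a, b));
    }
}
```

No parser is involved, so a sketch like this still runs on code that does not compile, at the cost of being blind to renamed identifiers.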

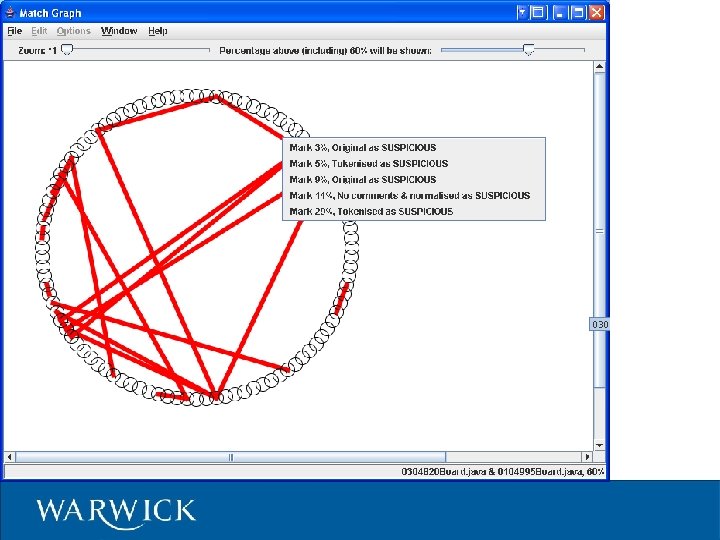

CodeMatch
• Commercial product
  – www.safe-corp.biz
  – exact algorithm not published
  – patent pending?
• Free academic use for small data sets

CodeMatch – Algorithm
1) Remove comments, whitespace and lines containing only keywords/syntax; compare sequences of instructions
2) Extract comments, and compare
3) Extract identifiers, and count similar; x, xx12345 are “similar”
4) Combine (1), (2) and (3) to give correlation score

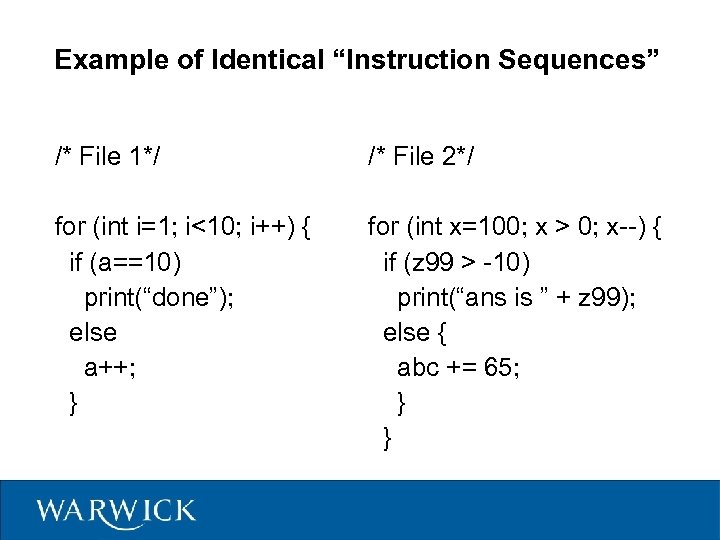

Example of Identical “Instruction Sequences”
/* File 1 */
for (int i=1; i<10; i++) {
    if (a==10) print("done");
    else a++;
}
/* File 2 */
for (int x=100; x > 0; x--) {
    if (z99 > -10) print("ans is " + z99);
    else { abc += 65; }
}

Latent Semantic Analysis
• Documents as “bags of words”
• Known technique in IR
• Handles synonymy and polysemy
• Maths is nasty
• Results reported in (Cosma and Joy, 2010)
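For orientation, the core of the "nasty" maths in the standard formulation of LSA is a truncated singular value decomposition of the term-by-document matrix. The symbols below (m terms, n documents, k retained dimensions, unit vector e_j) are notation introduced here, and the summary is the textbook one and not necessarily the exact variant used in (Cosma and Joy, 2010).

```latex
\begin{align*}
A &\in \mathbb{R}^{m \times n}, \qquad a_{ij} = \text{weight of term } i \text{ in document } j \\
A &= U \Sigma V^{\mathsf{T}} && \text{(singular value decomposition)} \\
A_k &= U_k \Sigma_k V_k^{\mathsf{T}}, \qquad k \ll \min(m, n) && \text{(keep the $k$ largest singular values)} \\
\hat{d}_j &= \Sigma_k V_k^{\mathsf{T}} e_j && \text{(document $j$ in the reduced space)} \\
\operatorname{sim}(d_i, d_j) &= \frac{\hat{d}_i \cdot \hat{d}_j}{\lVert \hat{d}_i \rVert \, \lVert \hat{d}_j \rVert} && \text{(cosine similarity)}
\end{align*}
```

Truncation to k dimensions is what lets documents that use different but co-occurring terms end up close together, which is the sense in which the technique handles synonymy.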

Heuristics
• Comments
  – Spelling mistakes
  – Unusual English (Thai, German, …)
• Use of Search Engines
• Unusual style
• Code errors

Technical Issues
• Data protection (e.g. MOSS is in USA)
• Accuracy
• Faulty code may not be accepted
• Results returned by different tools are similar (but not identical)
• User interface
• Availability of sets of test data

Part 3 – Establishing the Facts
• Collection
• Detection
• Confirmation
• Investigation

What is “Similarity”?
• What do we actually mean by “similar”?
• This is where the problems start …
• Evidence … ?

(1) Staff Survey
We carried out a survey in order to:
  – gather the perceptions of academics on what constitutes source-code plagiarism (Cosma and Joy, 2006), and
  – create a structured description of what constitutes source-code plagiarism from a UK academic perspective (Cosma and Joy, 2008)

Data Source
• On-line questionnaire distributed to 120 academics
  – Questions were in the form of small scenarios
  – Mostly multiple-choice responses
  – Comments box below each question
  – Anonymous – option for providing details
• Received 59 responses, from more than 34 different institutions
• Responses were analysed and collated to create a universally acceptable source-code plagiarism description.

Results
• Plagiaristic activities:
  – Source-code reuse and self-plagiarism
  – Use of (O-O) templates
  – Converting source to another language
  – Inappropriate collusion/collaboration
  – Using code-generator software
  – Obtaining source-code written by other authors
  – False and “pretend” references
• Copying with adaptation: minimal, moderate, extreme
  – How to decide?

(2) Student Survey
We carried out a survey (Joy et al., 2010) in order to:
  – gather the perceptions of students on what (source code) plagiarism means
  – identify types of plagiarism which are poorly understood
  – identify categories of student who perceive the issue differently to others

Data Source
• Online questionnaire answered by 770 students from computing departments across the UK
• Anonymised, but brief demographic information included
• Used 15 “scenarios”, each of which may describe a plagiaristic activity

Results (1)
No significant difference in perspectives in terms of
  – university
  – degree programme
  – level of study (BS, MS, PhD)

Results (2)
Issues which students misunderstood:
  – open source code
  – translating between languages
  – re-use of code from previous assignments
  – placing references within technical documentation

Summary... Big Issues
• Making policy clear to students
• Identifying external contributors
  – web sites with code to download
  – enthusiasts' forums, Wikis, etc.
• Cheat sites
  – “Rent-A-Coder” (etc.)

References (1)
F. Culwin and T. Lancaster, “Plagiarism, prevention, deterrence and detection”, [online] available from: www.heacademy.ac.uk/assets/York/documents/resources/resourcedatabase/id426_plagiarism_prevention_deterrence_detection.pdf (2002)
G. Cosma and M. S. Joy, “An Approach to Source-Code Plagiarism Detection and Investigation using Latent Semantic Analysis”, IEEE Transactions on Computers, to appear (2010)
G. Cosma and M. S. Joy, “Towards a Definition on Source-Code Plagiarism”, IEEE Transactions on Education 51(2) pp. 195-200 (2008)

References (2)
G. Cosma and M. S. Joy, “Source-code Plagiarism: a UK Academic Perspective”, Proceedings of the 7th Annual Conference of the HEA Network for Information and Computer Sciences (2006)
M. Halstead, “Natural Laws Controlling Algorithm Structure”, ACM SIGPLAN Notices 7(2) pp. 19-26 (1972)
M. S. Joy, G. Cosma, J. Y-K. Yau and J. E. Sinclair, “Source Code Plagiarism – a Student Perspective”, IEEE Transactions on Education (to appear) (2010)
M. S. Joy and M. Luck, “Plagiarism in Programming Assignments”, IEEE Transactions on Education 42(2), pp. 129-133 (1999)

References (3)
K. Ottenstein, “An Algorithmic Approach to the Detection and Prevention of Plagiarism”, ACM SIGCSE Bulletin 8(4) pp. 30-41 (1976)
L. Prechelt, G. Malpohl and M. Philippsen, “Finding Plagiarisms among a Set of Programs with JPlag”, Journal of Universal Computer Science 8(11) pp. 1016-1038 (2002)
S. Schleimer, D. S. Wilkerson and A. Aiken, “Winnowing: Local Algorithms for Document Fingerprinting”, Proceedings of the ACM SIGMOD International Conference on Management of Data, pp. 76-85 (2003)
