- Number of slides: 27

Applications of Automated Model-Based Testing with TorX. Ed Brinksma, Course 2004

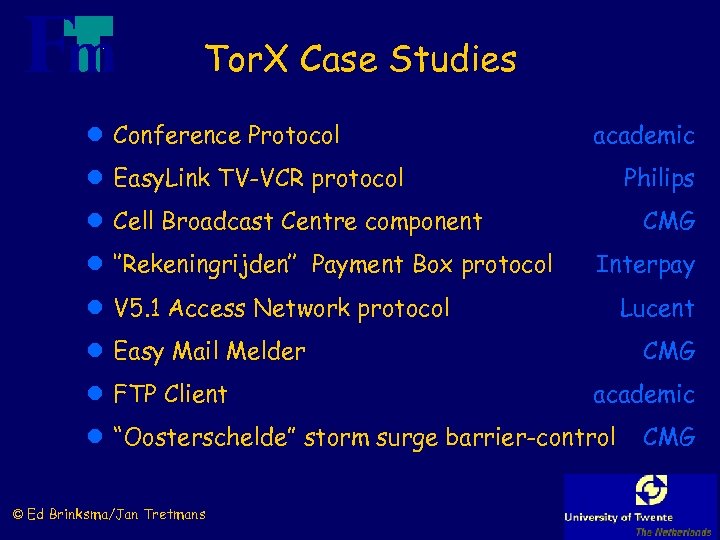

TorX Case Studies

- Conference Protocol (academic)
- EasyLink TV-VCR protocol (Philips)
- Cell Broadcast Centre component (CMG)
- “Rekeningrijden” Payment Box protocol (Interpay)
- V5.1 Access Network protocol (Lucent)
- Easy Mail Melder (CMG)
- FTP Client (academic)
- “Oosterschelde” storm surge barrier-control (CMG)

© Ed Brinksma/Jan Tretmans

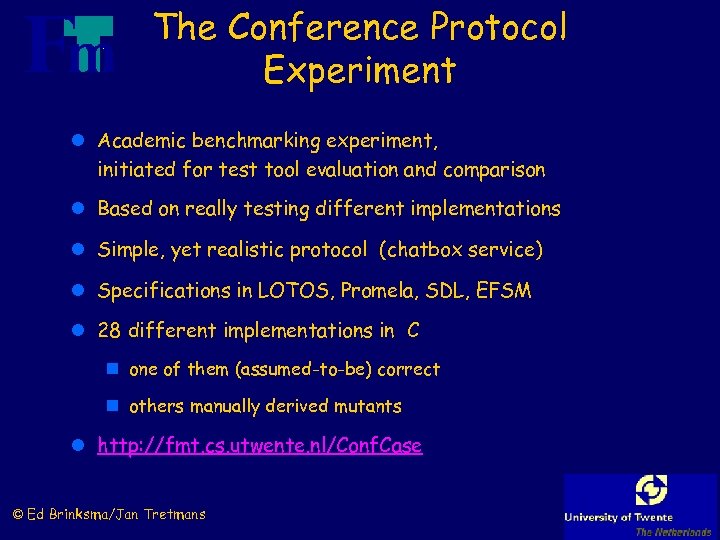

The Conference Protocol Experiment

- Academic benchmarking experiment, initiated for test tool evaluation and comparison
- Based on actually testing different implementations
- Simple, yet realistic protocol (chatbox service)
- Specifications in LOTOS, Promela, SDL, EFSM
- 28 different implementations in C
  - one of them (assumed to be) correct
  - the others manually derived mutants
- http://fmt.cs.utwente.nl/ConfCase

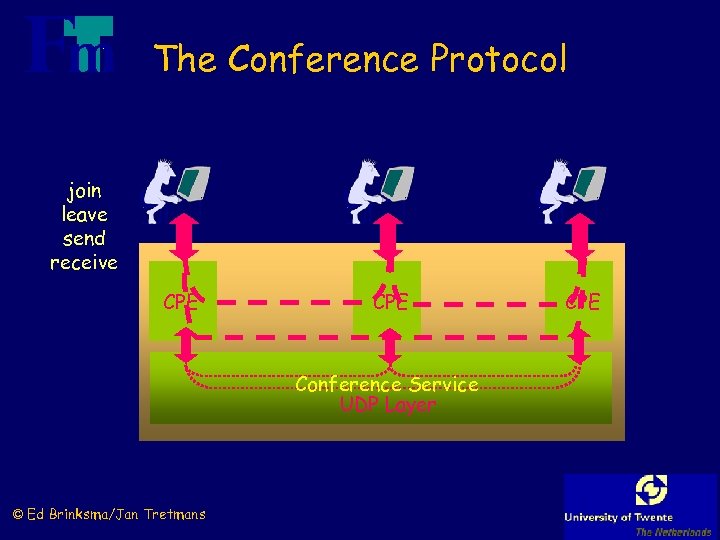

The Conference Protocol

[Figure: the Conference Service, accessed through join, leave, send and receive, is realized by CPEs on top of a UDP layer.]

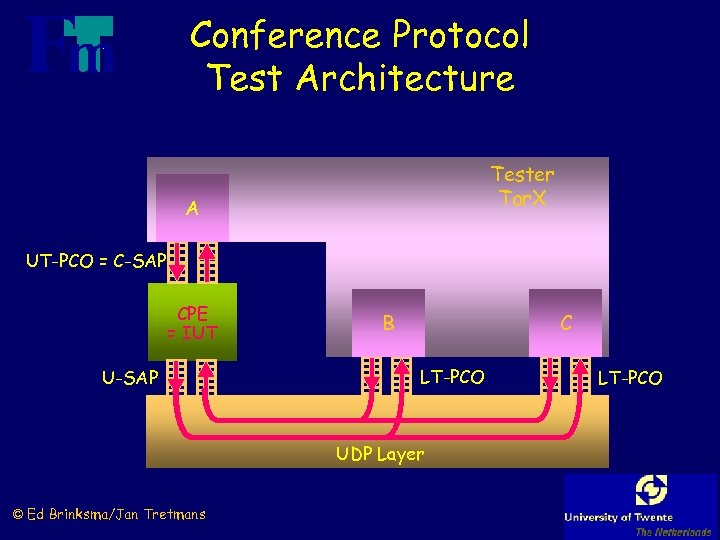

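Conference Protocol Test Architecture

[Figure: the tester (TorX) is connected to the CPE (= IUT) from above through the UT-PCO (= C-SAP) and from below through LT-PCOs at the U-SAP of the UDP layer; the labels A, B and C also appear in the diagram.]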

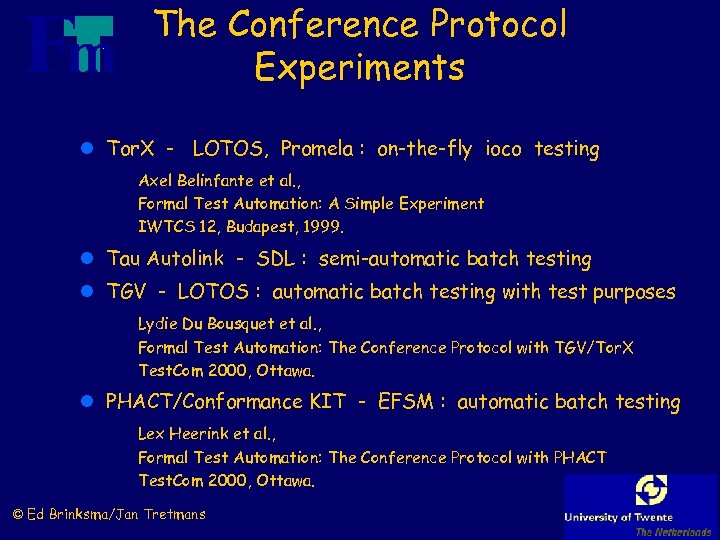

The Conference Protocol Experiments

- TorX - LOTOS, Promela: on-the-fly ioco testing. Axel Belinfante et al., Formal Test Automation: A Simple Experiment, IWTCS 12, Budapest, 1999.
- Tau Autolink - SDL: semi-automatic batch testing
- TGV - LOTOS: automatic batch testing with test purposes. Lydie Du Bousquet et al., Formal Test Automation: The Conference Protocol with TGV/TorX, TestCom 2000, Ottawa.
- PHACT/Conformance KIT - EFSM: automatic batch testing. Lex Heerink et al., Formal Test Automation: The Conference Protocol with PHACT, TestCom 2000, Ottawa.

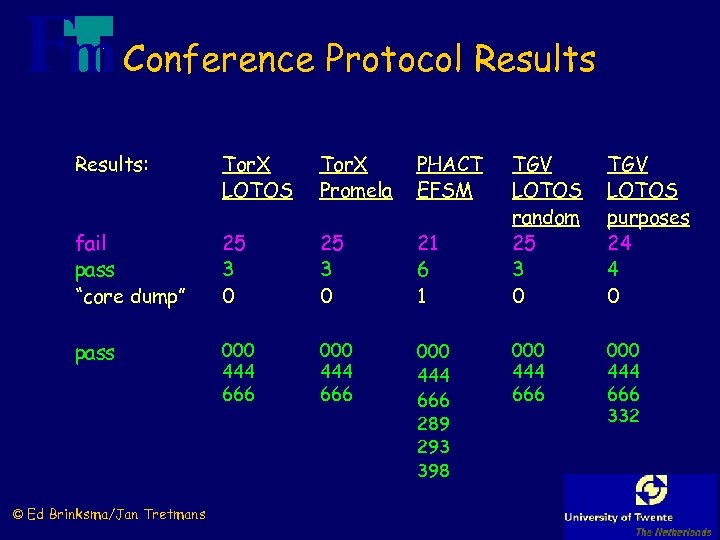

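Conference Protocol Results

| Tool and specification | fail | pass | “core dump” | Implementations that pass |
|---|---|---|---|---|
| TorX, LOTOS and Promela | 25 | 3 | 0 | 000, 444, 666 |
| PHACT, EFSM | 21 | 6 | 1 | 000, 444, 666, 289, 293, 398 |
| TGV, LOTOS, random test purposes | 25 | 3 | 0 | 000, 444, 666 |
| TGV, LOTOS, manual test purposes | 24 | 4 | 0 | 000, 444, 666, 332 |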

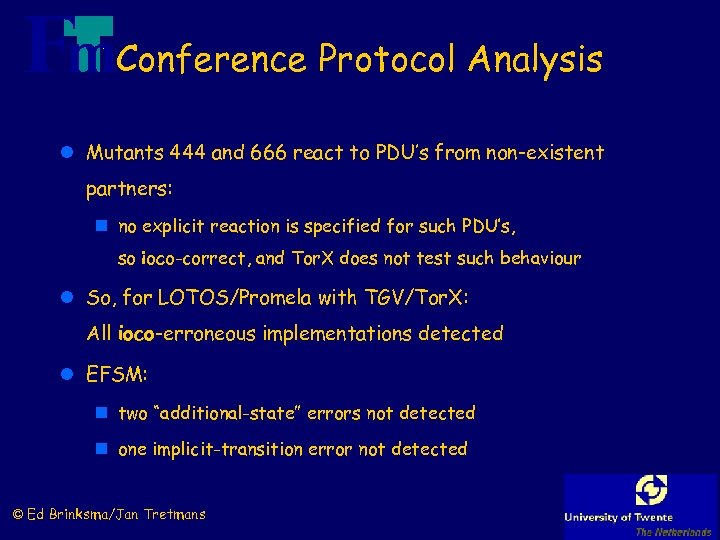

Conference Protocol Analysis

- Mutants 444 and 666 react to PDUs from non-existent partners:
  - no explicit reaction is specified for such PDUs, so these mutants are ioco-correct, and TorX does not test such behaviour
- So, for LOTOS/Promela with TGV/TorX: all ioco-erroneous implementations were detected
- EFSM:
  - two “additional-state” errors not detected
  - one implicit-transition error not detected
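The first point can be illustrated with a minimal sketch. It assumes a tiny deterministic specification written as a Python dict; the states, labels and helper names are hypothetical and are not taken from TorX or from the conference protocol specifications. Under ioco, outputs are only checked after traces of the specification, so an input the specification does not mention leads outside the specification and constrains nothing.

```python
# Hypothetical toy specification: state -> {label: next_state}.
# Labels starting with "?" are inputs, labels starting with "!" are outputs.
SPEC = {
    "idle": {"?join": "joined"},
    "joined": {"?send": "sending", "?leave": "idle"},
    "sending": {"!data_pdu": "joined"},
}


def after(spec, start, trace):
    """Return the spec state reached by trace, or None if it is not a spec trace."""
    state = start
    for label in trace:
        state = spec.get(state, {}).get(label)
        if state is None:
            return None
    return state


def outputs_allowed(spec, start, trace):
    """Outputs the spec allows after trace; None means the trace is unspecified."""
    state = after(spec, start, trace)
    if state is None:
        return None
    outs = {label for label in spec.get(state, {}) if label.startswith("!")}
    # A state without outputs is quiescent: only silence is allowed there.
    return outs or {"quiescence"}


def verdict(spec, start, trace, observed):
    """Judge one observed output after trace, in the spirit of ioco."""
    allowed = outputs_allowed(spec, start, trace)
    if allowed is None:
        return "not tested"  # unspecified behaviour is not constrained
    return "pass" if observed in allowed else "fail"
```

A reaction to a PDU from an unknown partner corresponds to the "not tested" case: whatever the implementation answers, no verdict is produced.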

Conference Protocol Analysis

- TorX statistics
  - all errors found after 2 - 498 test events
  - maximum length of tests: > 500,000 test events
- EFSM statistics
  - 82 test cases with “partitioned tour method” (= UIO)
  - length per test case: < 16 test events
- TGV with manual test purposes
  - ~ 20 test cases of various lengths
- TGV with random test purposes
  - ~ 200 test cases of 200 test events
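The TorX event counts come from on-the-fly testing, where test derivation and test execution are interleaved. The sketch below shows the idea under strong simplifications: the specification, the `ToyImplementation` class and the 50/50 choice between stimulating and observing are all assumptions made for illustration, not TorX's actual algorithm or interface.

```python
import random

# Hypothetical toy specification: "?" marks inputs, "!" marks outputs.
SPEC = {
    "idle": {"?join": "joined"},
    "joined": {"?send": "sending", "?leave": "idle"},
    "sending": {"!data_pdu": "joined"},
}


class ToyImplementation:
    """Hypothetical implementation under test; faulty=True makes it a mutant."""

    def __init__(self, faulty=False):
        self.state = "idle"
        self.faulty = faulty

    def stimulate(self, label):
        self.state = SPEC[self.state][label]

    def observe(self):
        outs = [l for l in SPEC[self.state] if l.startswith("!")]
        if not outs:
            return "quiescence"
        self.state = SPEC[self.state][outs[0]]
        return "!wrong_pdu" if self.faulty else outs[0]


def run_test(impl, max_events, seed=0):
    """Random on-the-fly test run; returns (verdict, number of test events)."""
    rng = random.Random(seed)
    state, events = "idle", 0
    while events < max_events:
        inputs = [l for l in SPEC[state] if l.startswith("?")]
        outputs = [l for l in SPEC[state] if l.startswith("!")]
        events += 1
        if inputs and rng.random() < 0.5:
            label = rng.choice(inputs)      # stimulate with a specified input
            impl.stimulate(label)
            state = SPEC[state][label]
        else:
            observed = impl.observe()       # observe and check against the spec
            expected = set(outputs) or {"quiescence"}
            if observed not in expected:
                return "fail", events
            if observed != "quiescence":
                state = SPEC[state][observed]
    return "pass", events
```

Because each step is chosen while the test runs, a test can be as long as desired, and the number of events until the first failure depends on the random choices.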

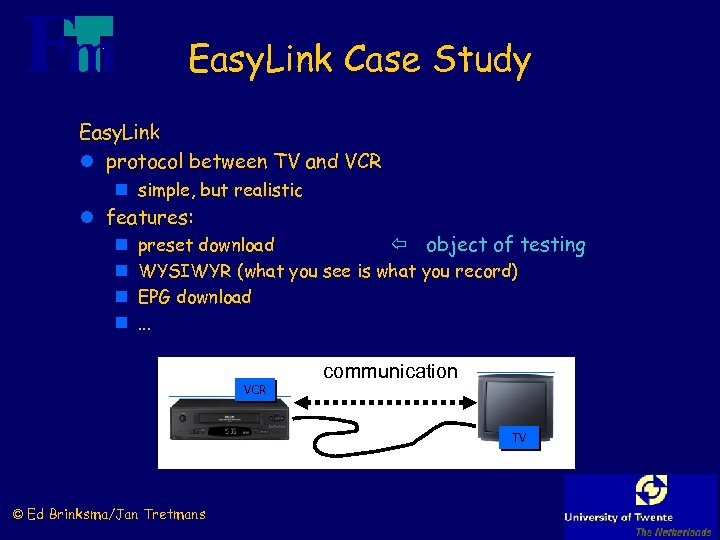

EasyLink Case Study

EasyLink:

- protocol between TV and VCR
  - simple, but realistic
- features:
  - preset download
  - WYSIWYR (what you see is what you record)
  - EPG download
  - ...

[Figure: communication between VCR and TV, with a label marking the object of testing.]

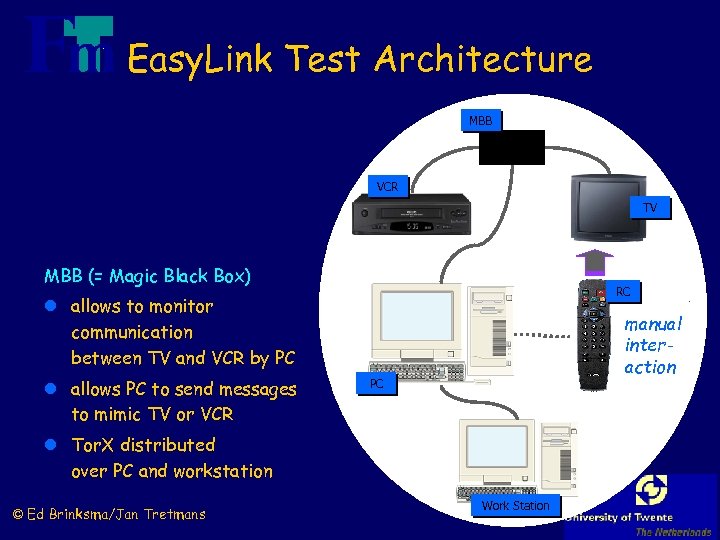

EasyLink Test Architecture

[Figure: VCR and TV connected through the MBB, with an RC, a PC, a workstation and manual interaction.]

- MBB (= Magic Black Box)
  - allows the PC to monitor communication between TV and VCR
  - allows the PC to send messages to mimic TV or VCR
- TorX is distributed over PC and workstation

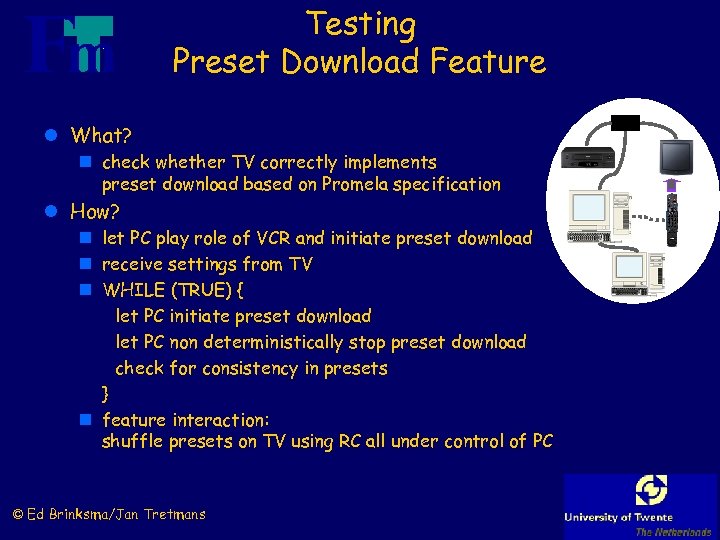

Testing Preset Download Feature

- What?
  - check whether the TV correctly implements preset download, based on a Promela specification
- How?
  - let the PC play the role of the VCR and initiate preset download
  - receive settings from the TV
  - WHILE (TRUE) { let PC initiate preset download; let PC non-deterministically stop preset download; check for consistency in presets }
  - feature interaction: shuffle presets on the TV using the RC, all under control of the PC
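The WHILE loop above can be sketched as follows. Everything here is an assumption for illustration: `SimulatedTV` stands in for the real TV behind the MBB, the loop is bounded instead of infinite, and "consistency" is read as "an aborted download is a prefix of the full preset list", which the slides do not spell out.

```python
import random


class SimulatedTV:
    """Hypothetical stand-in for the TV reached through the MBB."""

    def __init__(self, presets):
        self.presets = list(presets)

    def download(self, stop_after=None):
        """Return the presets sent before the VCR side stops the download."""
        if stop_after is None:
            return list(self.presets)
        return self.presets[:stop_after]


def preset_download_test(tv, rounds, seed=0):
    """PC plays the VCR: start a download, stop it at a random point, check."""
    rng = random.Random(seed)
    reference = tv.download()               # first, receive the full settings
    for _ in range(rounds):                 # bounded stand-in for WHILE (TRUE)
        stop_after = rng.randint(0, len(reference))
        received = tv.download(stop_after)
        # Assumed consistency rule: a partial download is a prefix of the reference.
        if received != reference[:stop_after]:
            return "fail"
    return "pass"
```

For example, `preset_download_test(SimulatedTV(["NED1", "NED2", "NED3"]), rounds=20)` exercises twenty aborted downloads against the reference list; the channel names are made up.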

EasyLink Experiences

- test environment influences what can be tested
  - testing power is limited by the functionality of the MBB
- initially, the state of the TV is unknown
  - tester must be prepared for all possible states
- some “hacks” needed in specification and tool architecture in order to decrease the state space

Results:

- automatic specification-based testing is feasible
- tool architecture is also suitable to cope with user interaction
- some (non-fatal) nonconformances detected

CMG - CBC Component Test

- Test of one component of the Cell Broadcast Centre
- LOTOS (process algebra) specification of 28 pp.
- Using the existing test execution environment
- Based on automatic generation of an “adapter” from IDL
- Comparison (simple):
  - code coverage: existing test 82 %, TorX 83 %
  - detected mutants (out of 10): existing test 5, TorX 7
- Conclusion:
  - TorX is at least as good as conventional testing (with potential to do better)
  - LOTOS is not nice (= terrible) for specifying such systems

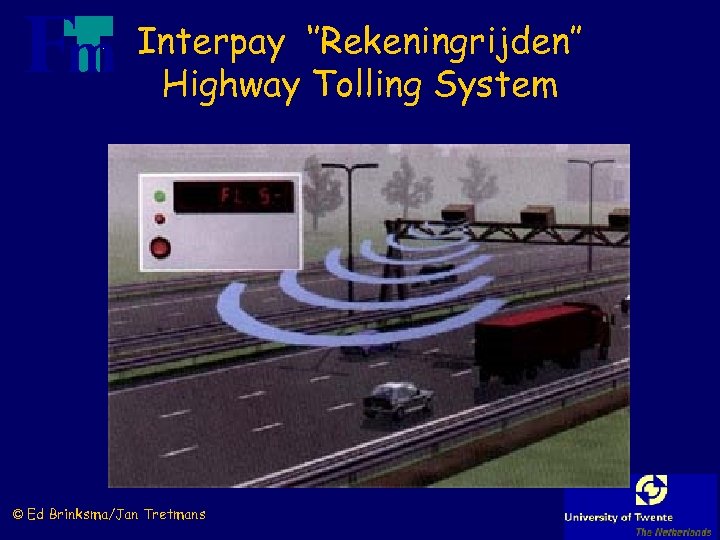

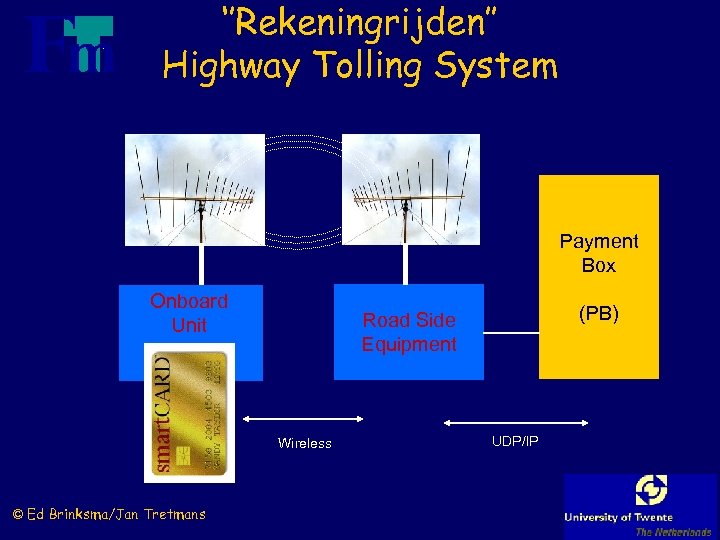

Interpay “Rekeningrijden” Highway Tolling System

“Rekeningrijden” Characteristics:

- Simple protocol
- Parallelism:
  - many cars at the same time
- Encryption
- Real-time issues
- System passed traditional testing phase

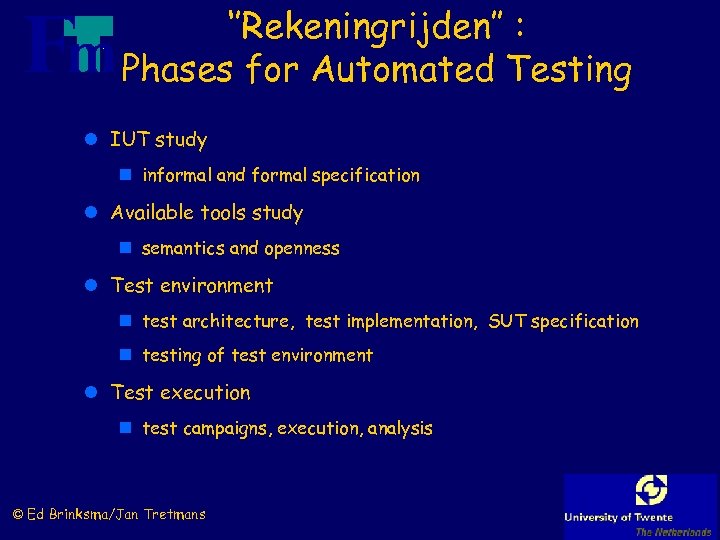

“Rekeningrijden”: Phases for Automated Testing

- IUT study
  - informal and formal specification
- Available tools study
  - semantics and openness
- Test environment
  - test architecture, test implementation, SUT specification
  - testing of test environment
- Test execution
  - test campaigns, execution, analysis

“Rekeningrijden” Highway Tolling System

[Figure: Onboard Unit, wireless link, Road Side Equipment, UDP/IP, Payment Box (PB).]

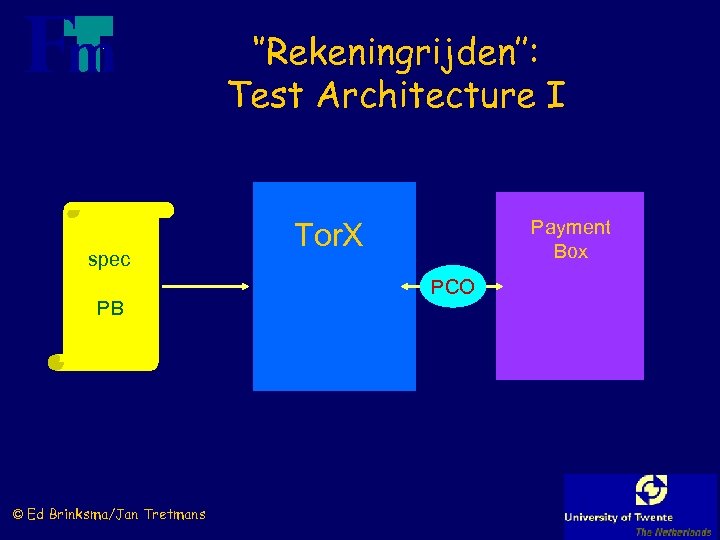

“Rekeningrijden”: Test Architecture I

[Figure: TorX, using the PB spec, connected to the Payment Box through a PCO.]

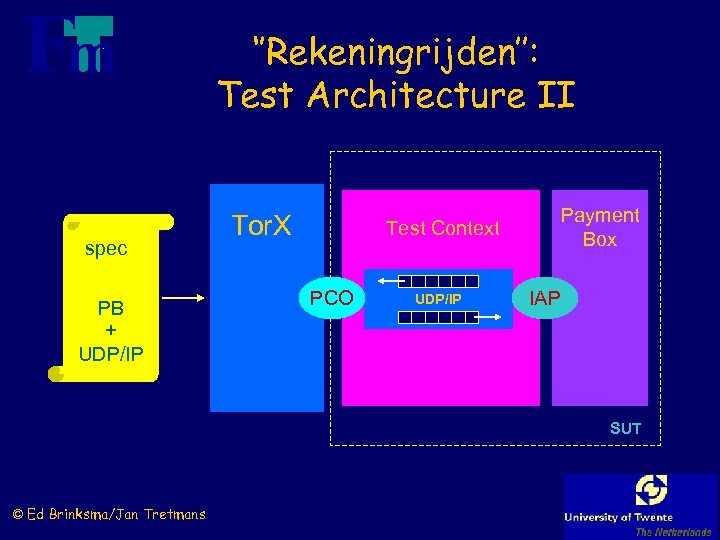

“Rekeningrijden”: Test Architecture II

[Figure: TorX, using the spec of PB + UDP/IP, connected through a PCO and a test context (UDP/IP) to the Payment Box; the labels IAP and SUT also appear.]

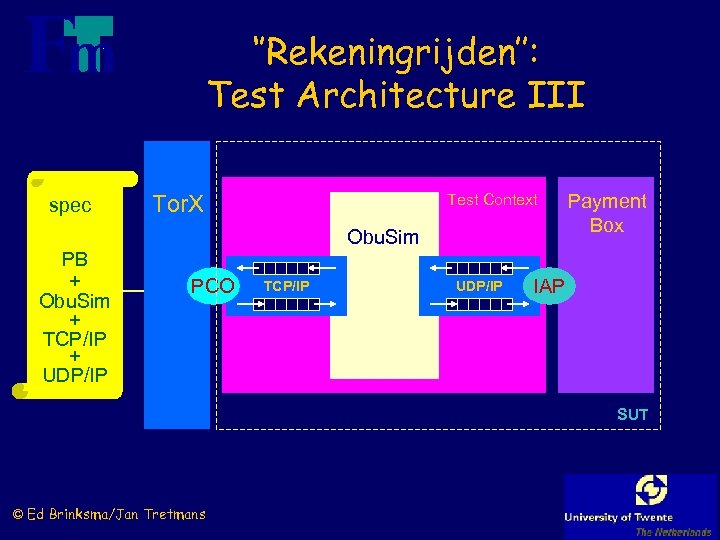

“Rekeningrijden”: Test Architecture III

[Figure: TorX, using the spec of PB + ObuSim + TCP/IP + UDP/IP, connected through a PCO and a test context (TCP/IP, ObuSim, UDP/IP) to the Payment Box; the labels IAP and SUT also appear.]

“Rekeningrijden”: Test Campaigns

- Introduction and use of Test Campaigns:
  - Management of test tool configurations
  - Management of IUT configurations
  - Steering of test derivation
  - Scheduling of test runs
  - Archiving of results
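One way to picture these bookkeeping tasks is a small data structure that crosses tool configurations with IUT configurations and records every verdict. This is only a sketch under assumptions: the `Campaign` class, its field names and the use of random seeds to steer test derivation are hypothetical and do not describe the tooling used in the project.

```python
import json
from dataclasses import dataclass, field
from itertools import product


@dataclass
class Campaign:
    """Hypothetical test campaign: configurations in, archived verdicts out."""

    tool_configs: list
    iut_configs: list
    seeds: list                      # assumed way to steer test derivation
    results: list = field(default_factory=list)

    def schedule(self):
        """Every combination of tool configuration, IUT configuration and seed."""
        return list(product(self.tool_configs, self.iut_configs, self.seeds))

    def run(self, execute):
        """Run each scheduled test with the caller-supplied execute function."""
        for tool, iut, seed in self.schedule():
            verdict = execute(tool, iut, seed)
            self.results.append(
                {"tool": tool, "iut": iut, "seed": seed, "verdict": verdict}
            )

    def archive(self):
        """Serialize all results so that a campaign can be inspected later."""
        return json.dumps(self.results, indent=2)
```

Keeping the schedule explicit makes it easy to rerun exactly one failing combination.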

“Rekeningrijden”: Issues

- Parallelism:
  - very easy
- Encryption:
  - not all events can be synthesized, which leads to reduced testing power
- Real-time:
  - How to cope with real-time constraints?
  - Efficient computation for on-the-fly testing?
  - Lack of theory: quiescence vs. time-out

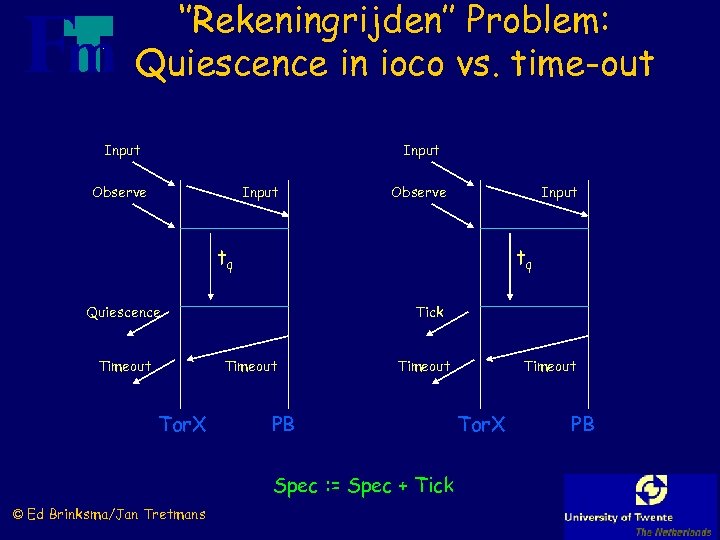

“Rekeningrijden” Problem: Quiescence in ioco vs. time-out

[Figure: message sequence charts between TorX and PB with the labels Input, Observe, Quiescence, Timeout, Tick and the interval tq.]

Spec := Spec + Tick
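In practice a tester can only approximate quiescence by waiting for a bounded time. A minimal sketch of that approximation, assuming outputs of the system under test arrive on a `queue.Queue` (the queue, the `observe` function and the `"delta"` marker are illustrative choices, not part of TorX):

```python
import queue

QUIESCENCE = "delta"  # illustrative name for the quiescence observation


def observe(outputs, timeout_s):
    """Return the next output, or QUIESCENCE if none arrives within timeout_s."""
    try:
        return outputs.get(timeout=timeout_s)
    except queue.Empty:
        return QUIESCENCE
```

The choice of `timeout_s` is where the real-time problem shows up: if it is too short, a slow but correct output is misread as quiescence; if it is too long, the tester itself may violate timing constraints of the system.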

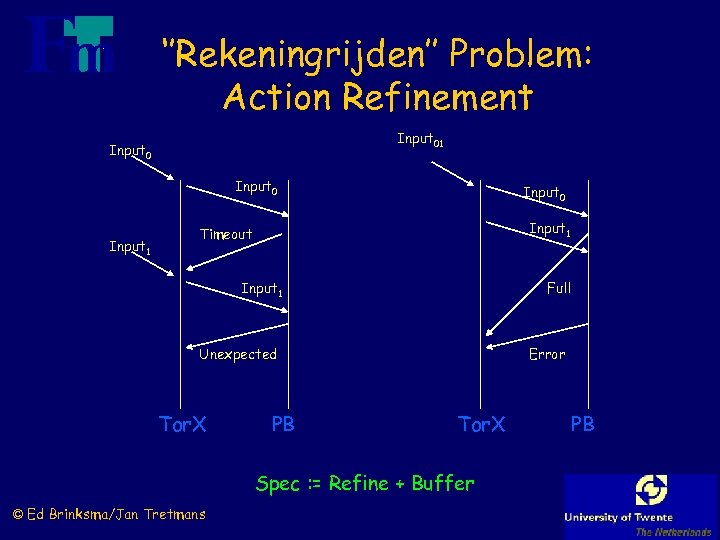

“Rekeningrijden” Problem: Action Refinement

[Figure: message sequence charts between TorX and PB with the labels Input 01, Input 0, Input 1, Timeout, Full, Unexpected and Error.]

Spec := Refine + Buffer

“Rekeningrijden”: Issues

- Modelling language: LOTOS, Promela
- Spec for testing, spec for validation
- Development of the specification is an iterative process
- Development of the test environment is laborious
- Parameters are fixed in the model
- Preprocessing: M4/CPP
- Promela problem: guarded inputs
- Test Campaigns for bookkeeping and control of experiments
- Probabilities incorporated

“Rekeningrijden”: Results

- Test results:
  - 1 error during validation (design error)
  - 1 error during testing (coding error)
- Automated testing:
  - beneficial: high volume and reliability
  - many and long tests executed (> 50,000 test events)
  - very flexible: adaptation and many configurations
- Step ahead in formal testing of realistic systems
