- Number of slides: 55

Antonio Gulli

AGENDA

- Overview of spidering technology: "hey, how can I get that page?"
- Overview of a Web graph: "tell me something about the arena"
- Google overview

Reference: Soumen Chakrabarti, Mining the Web: Discovering Knowledge from Hypertext Data (Chapter 2), Morgan-Kaufmann Publishers, 352 pages, cloth/hard-bound, ISBN 1-55860-754-4

Spidering

- 24 hours a day, 7 days a week, "walking" over a graph and getting data
- What about the graph?
  - Recall the sample of 150 sites (last lesson)
  - Directed graph G = (N, E)
  - N changes (insert, delete): ~ 4-6 * 10^9 nodes
  - E changes (insert, delete): ~ 10 links per node
- Size: 10 * 4 * 10^9 = 4 * 10^10 nonzero entries in the adjacency matrix
- EX: suppose a 64-bit hash for each URL; how much space is needed to store the adjacency matrix?
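One possible way into the exercise, as a rough sketch rather than the intended answer: a dense bit matrix over 4 * 10^9 nodes would need (4 * 10^9)^2 = 1.6 * 10^19 bits, about 2 * 10^18 bytes, so only the nonzero entries are worth storing. The function below assumes adjacency lists in which every node ID and every link target is a 64-bit URL hash; the function name and the choice to ignore pointer and container overhead are assumptions made for illustration.

```python
def adjacency_list_bytes(num_nodes, avg_out_degree, hash_bits=64):
    """Bytes needed to store each node ID plus each link target as a fixed-size hash."""
    bytes_per_id = hash_bits // 8
    node_bytes = num_nodes * bytes_per_id                    # one ID per node
    edge_bytes = num_nodes * avg_out_degree * bytes_per_id   # one ID per link target
    return node_bytes + edge_bytes


total = adjacency_list_bytes(4 * 10**9, 10)
print(f"{total / 10**9:.0f} GB")  # 4e9 nodes, 10 links per node, 8 bytes per hash
```

Under these assumptions the link targets alone take 320 GB, which is why compressed graph representations matter at this scale.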

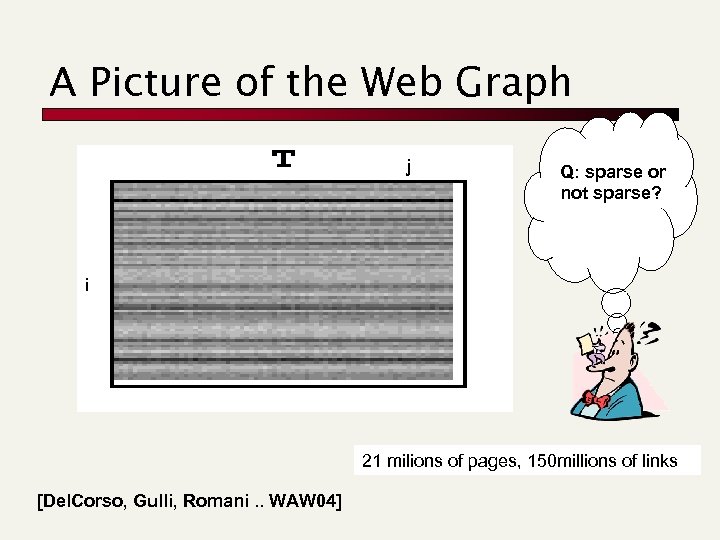

A Picture of the Web Graph

[Figure: adjacency matrix plot with axes i and j; 21 million pages, 150 million links]

Q: sparse or not sparse?

[Del Corso, Gulli, Romani, WAW 04]

A Picture of the Web Graph [Broder, WWW9]

![A Picture of the Web Graph](https://present5.com/presentation/c8d911793e5411b94e4bba0a95b13ccd/image-5.jpg)

A Picture of the Web Graph

[Figure: Web graph plot with regions labeled Berkeley and Stanford]

Q: what kind of sorting is this?

[Haveliwala, WWW12]

A Picture of the Web Graph [Raghavan, WWW9]

![A Picture of the Web Graph](https://present5.com/presentation/c8d911793e5411b94e4bba0a95b13ccd/image-7.jpg)

READ IT!

The Web's Characteristics

- Size
  - Over a billion pages available
  - 5-10 KB per page => tens of terabytes
  - Size doubles every 2 years
- Change
  - 23% of pages change daily
  - Half-life of about 10 days
- Bowtie structure

Search Engine Structure

[Figure: block diagram. Components: Crawlers, Crawl Control, Page Repository, Indexer, Collection Analysis, Indexes (Text, Structure, Utility), Query Engine, Ranking, Queries, Results]

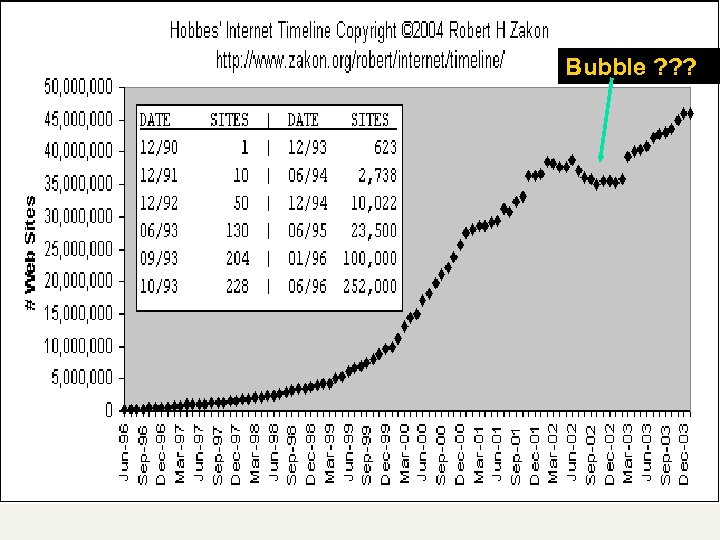

Bubble ???

Crawler “cycle of life” Link Extractor: while(

Architecture of Incremental Crawler [Gulli, 98]

[Figure: architecture diagram. Components: Internet, DNS Resolvers, DNS Cache, Parallel Downloaders, Already Seen Pages, Parsers, Parallel Crawler Managers, Priority Queue, robots.txt Cache, Parallel Link Extractors, Distributed Page Repository, Indexer, Page Analysis; grouped into SPIDERS and INDEXERS. Legend: data structures, software modules]

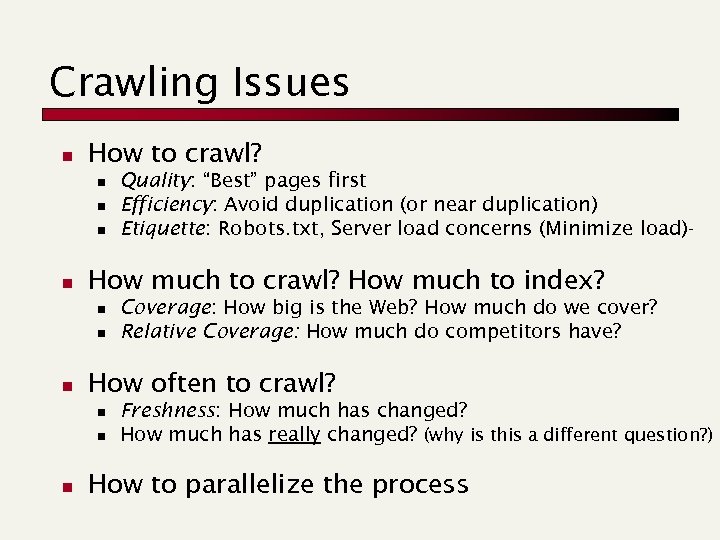

Crawling Issues

- How to crawl?
  - Quality: "best" pages first
  - Efficiency: avoid duplication (or near-duplication)
  - Etiquette: robots.txt, server load concerns (minimize load)
- How much to crawl? How much to index?
  - Coverage: How big is the Web? How much do we cover?
  - Relative coverage: How much do competitors have?
- How often to crawl?
  - Freshness: How much has changed? How much has really changed? (Why is this a different question?)
- How to parallelize the process

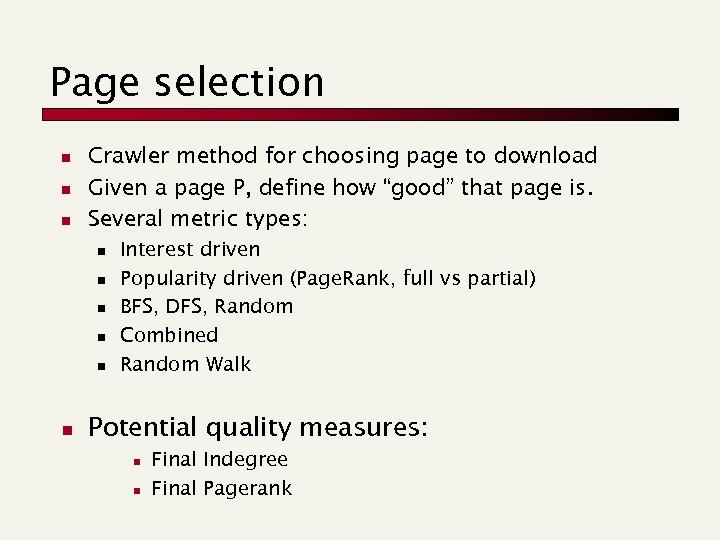

Page selection

- Crawler method for choosing which page to download
- Given a page P, define how "good" that page is
- Several metric types:
  - Interest driven
  - Popularity driven (PageRank, full vs partial)
  - BFS, DFS, Random
  - Combined
  - Random Walk
- Potential quality measures:
  - Final indegree
  - Final PageRank

BFS

- "…breadth-first search order discovers the highest quality pages during the early stages of the crawl" [Najork 01]
- 328 million URLs in the testbed
- Q: how is this related to SCCs, power laws, and the domain hierarchy in a Web graph?
- More on this when we cover PageRank

Stanford WebBase (179 K, 1998) [Cho 98]

![Stanford WebBase (179 K, 1998)](https://present5.com/presentation/c8d911793e5411b94e4bba0a95b13ccd/image-16.jpg)

[Figure: two plots. Percentage overlap with best x% by indegree vs. x% crawled by O(u); percentage overlap with best x% by PageRank vs. x% crawled by O(u)]

BFS & Spam (Worst case scenario)

- Start page, normal average outdegree = 10
- BFS depth = 2: 100 URLs on the queue, including a spam page
- Assume the spammer is able to generate dynamic pages with 1000 outlinks
- BFS depth = 3: 2000 URLs on the queue, 50% belong to the spammer
- BFS depth = 4: 1.01 million URLs on the queue, 99% belong to the spammer
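The queue sizes in this scenario can be reproduced with a few lines of arithmetic. This is a minimal sketch, assuming that every queued URL is expanded at each level, that normal pages contribute 10 new normal URLs each, and that every spammer page contributes 1000 new spammer URLs; the function name and the no-overlap assumption are illustrative only.

```python
def frontier_by_depth(start_normal=99, start_spam=1,
                      normal_outdegree=10, spam_outdegree=1000, levels=2):
    """Return (depth, total_urls, spam_fraction) rows, starting from the depth-2 queue."""
    normal, spam = start_normal, start_spam
    rows = []
    for depth in range(2, 2 + levels + 1):
        total = normal + spam
        rows.append((depth, total, spam / total))
        # Expand the whole level: each normal page adds 10 links,
        # each spammer page adds 1000 spammer-controlled links.
        normal, spam = normal * normal_outdegree, spam * spam_outdegree
    return rows


for depth, total, spam_share in frontier_by_depth():
    print(depth, total, f"{spam_share:.0%}")
```

The exact totals (1990 and 1,009,900) are what the figures of 2000 and 1.01 million round off.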

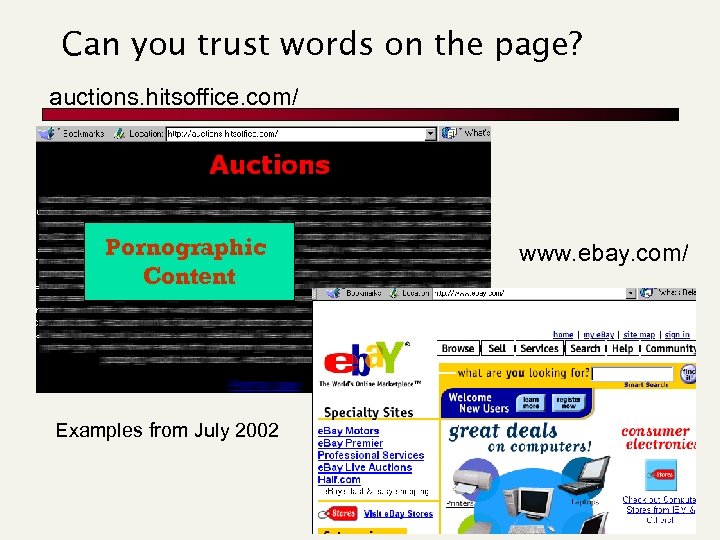

Can you trust words on the page?

[Figure: two screenshots, examples from July 2002: auctions.hitsoffice.com/ (pornographic content) and www.ebay.com/]

A few spam technologies

- Cloaking
  - Serve fake content to the search engine robot
  - DNS cloaking: switch IP address, impersonate
- Doorway pages
  - Pages optimized for a single keyword that redirect to the real target page
- Keyword spam
  - Misleading meta-keywords, excessive repetition of a term, fake "anchor text"
  - Hidden text with colors, CSS tricks, etc.
- Link spamming
  - Mutual admiration societies, hidden links, awards
  - Domain flooding: numerous domains that point or redirect to a target page
- Robots
  - Fake click stream
  - Fake query stream
  - Millions of submissions via Add-Url

[Figure: cloaking decision. "Is this a search engine spider?" Y: SPAM, N: real doc]

Meta-Keywords = "… London hotels, hotel, holiday inn, hilton, discount, booking, reservation, sex, mp3, britney spears, viagra, …"

Parallel Crawlers

- The Web is too big to be crawled by a single crawler, so the work should be divided
- Independent assignment
  - Each crawler starts with its own set of URLs
  - Follows links without consulting other crawlers
  - Reduces communication overhead
  - Some overlap is unavoidable

Parallel Crawlers

- Dynamic assignment
  - A central coordinator divides the Web into partitions
  - Crawlers crawl their assigned partition
  - Links to other URLs are given to the central coordinator
- Static assignment
  - The Web is partitioned and divided among the crawlers
  - Each crawler only crawls its part of the Web
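Static assignment needs a rule that every crawler can evaluate on its own. A common choice, sketched here as an assumption rather than something the slides prescribe, is to hash the host name of each URL so that all pages of one site land on the same crawler. The function name `assign_crawler` and the use of SHA-1 are illustrative.

```python
import hashlib
from urllib.parse import urlsplit


def assign_crawler(url, num_crawlers):
    """Map a URL to a crawler index in [0, num_crawlers) by hashing its host name."""
    host = urlsplit(url).hostname or ""
    digest = hashlib.sha1(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_crawlers


# Two pages on the same host always go to the same crawler.
print(assign_crawler("http://example.com/a.html", 4))
print(assign_crawler("http://example.com/docs/b.html", 4))
```

With a plain modulo, changing `num_crawlers` reassigns most hosts, which is the problem consistent hashing is meant to address.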

URL-Seen Problem

- Need to check whether a file has been parsed or downloaded before
  - after 20 million pages, we have "seen" over 100 million URLs
  - each URL is 50 to 75 bytes on average
- Options: compress URLs in main memory, or use disk
  - Bloom filter (Archive) [we will discuss this later]
  - disk access with caching (Mercator, AltaVista)
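At 50 to 75 bytes each, 100 million raw URLs take roughly 5 to 7.5 GB, which motivates the compact options above. As a minimal sketch of the Bloom filter option (not the Archive crawler's actual implementation), the class below keeps a bit array and sets a few hashed positions per URL. The class name, the sizing parameters, and the double-hashing scheme derived from SHA-256 are all assumptions for illustration.

```python
import hashlib


class BloomFilter:
    """Set membership with possible false positives but no false negatives."""

    def __init__(self, num_bits, num_hashes):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, item):
        # Derive k bit positions from two 64-bit halves of one digest (double hashing).
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        return [(h1 + i * h2) % self.num_bits for i in range(self.num_hashes)]

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item):
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(item))


seen = BloomFilter(num_bits=10_000, num_hashes=5)
seen.add("http://example.com/")
print("http://example.com/" in seen)
```

A "yes" answer may occasionally be wrong, so a crawler using this structure accepts skipping a small fraction of unseen URLs in exchange for the memory savings.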

Virtual Documents

- P = "whitehouse.org", not yet reached
- {P1, …, Pr} reached; {P1, …, Pr} point to P
- Insert the anchors' context into the index
  - "…George Bush, President of U.S. lives at WhiteHouse"

[Figure: Web page and virtual document, with terms: White House, bush, Washington]

Focused Crawling

- Focused crawler: selectively seeks out pages that are relevant to a pre-defined set of topics
  - Topics are specified using exemplary documents (not keywords)
  - Crawl the most relevant links
  - Ignore irrelevant parts
  - Leads to significant savings in hardware and network resources

Focused Crawling

![Focused Crawling](https://present5.com/presentation/c8d911793e5411b94e4bba0a95b13ccd/image-25.jpg)

- Pr[document is relevant | term t is present]
- Pr[document is irrelevant | term t is present]
- Pr[term t is present | document is relevant]
- Pr[term t is present | document is irrelevant]
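These four quantities are tied together by Bayes' rule. As a worked equation, write R for "the document is relevant" and t for "term t is present"; the prior Pr[R] is an extra quantity that the list above does not name and is introduced here as an assumption.

```latex
\Pr[R \mid t]
  = \frac{\Pr[t \mid R]\,\Pr[R]}
         {\Pr[t \mid R]\,\Pr[R] + \Pr[t \mid \lnot R]\,\bigl(1 - \Pr[R]\bigr)},
\qquad
\Pr[\lnot R \mid t] = 1 - \Pr[R \mid t].
```

The term likelihoods on the right can be estimated from the exemplary documents, and the result on the left is what a classifier would use to score a fetched page.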

An example of a crawler: Polybot

- Crawl of 120 million pages over 19 days
  - 161 million HTTP requests
  - 16 million robots.txt requests
  - 138 million successful non-robots requests
  - 17 million HTTP errors (401, 403, 404, etc.)
  - 121 million pages retrieved
- Slow during the day, fast at night
- Peak of about 300 pages/s over T3
- Many downtimes due to attacks, crashes, revisions
- http://cis.poly.edu/polybot/ [Suel 02]

Examples: Open Source

- Nutch, also used by Overture
  - http://www.nutch.org
- Heritrix, used by Archive.org
  - http://archive-crawler.sourceforge.net/index.html

Where are we?

- Spidering technologies
- Web graph (a glimpse)
- Now, some fun math on two crawling issues
  - 1) Hashing for robust load balancing
  - 2) Mirror detection

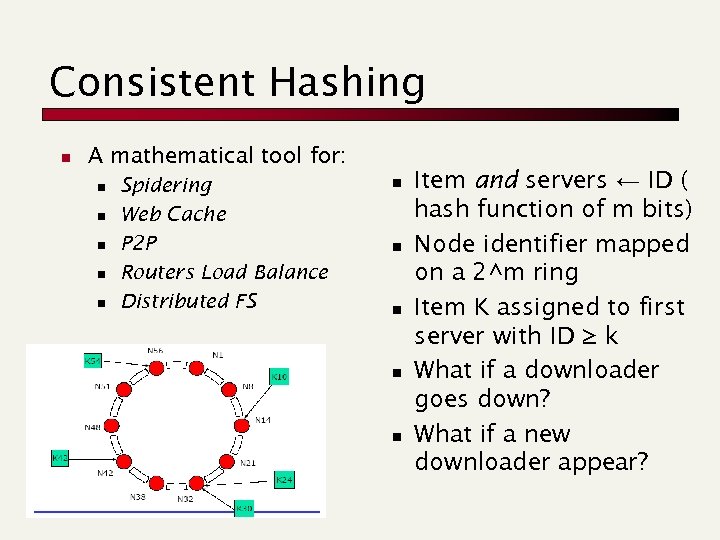

Consistent Hashing

- A mathematical tool for:
  - Spidering
  - Web caches
  - P2P
  - Router load balancing
  - Distributed file systems
- Items and servers ← ID (hash function of m bits)
- Node identifiers are mapped onto a 2^m ring
- Item k is assigned to the first server with ID ≥ k
- What if a downloader goes down?
- What if a new downloader appears?
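The ring rule above can be sketched in a few lines. This is an illustrative sketch, not a production design: it assumes SHA-1 truncated to m bits as the hash function, one ring position per server (no virtual nodes), and the hypothetical class name `ConsistentHashRing`.

```python
import bisect
import hashlib


class ConsistentHashRing:
    def __init__(self, m_bits=32):
        self.size = 2 ** m_bits
        self.ids = []        # sorted server IDs on the ring
        self.servers = {}    # server ID -> server name

    def _hash(self, key):
        digest = hashlib.sha1(key.encode("utf-8")).digest()
        return int.from_bytes(digest, "big") % self.size

    def add_server(self, name):
        server_id = self._hash(name)
        if server_id not in self.servers:
            bisect.insort(self.ids, server_id)
        self.servers[server_id] = name

    def remove_server(self, name):
        server_id = self._hash(name)
        if self.servers.get(server_id) == name:
            self.ids.remove(server_id)
            del self.servers[server_id]

    def lookup(self, item):
        """Return the first server with ID >= the item's ID, wrapping around the ring."""
        if not self.ids:
            raise LookupError("no servers on the ring")
        k = self._hash(item)
        index = bisect.bisect_left(self.ids, k) % len(self.ids)
        return self.servers[self.ids[index]]


ring = ConsistentHashRing()
for name in ("downloader-a", "downloader-b", "downloader-c"):
    ring.add_server(name)
print(ring.lookup("http://example.com/index.html"))
```

This answers the two questions on the slide: when a downloader leaves, only the items it owned move (to its successor on the ring), and when a new downloader joins, it only takes items from its successor.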

Duplicate/Near-Duplicate Detection

- Duplication: exact match with fingerprints
- Near-duplication: approximate match
  - Overview
    - Compute syntactic similarity with an edit-distance measure
    - Use a similarity threshold to detect near-duplicates
      - E.g., similarity > 80% => documents are "near duplicates"
      - Not transitive, though sometimes used transitively

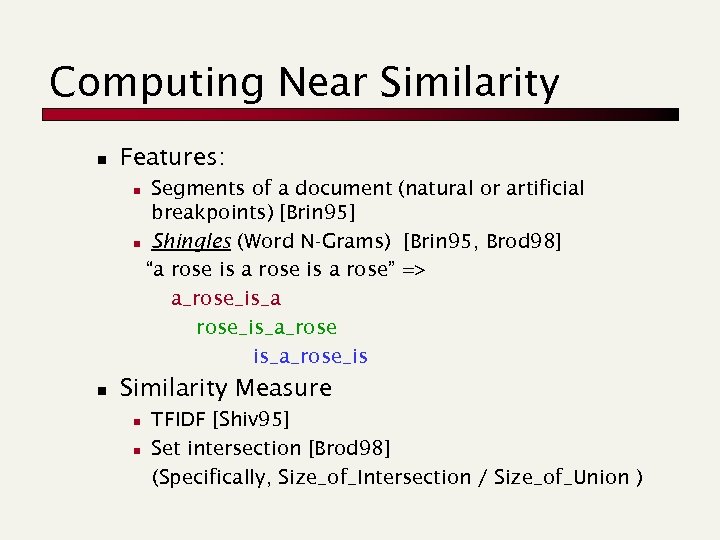

Computing Near Similarity

- Features:
  - Segments of a document (natural or artificial breakpoints) [Brin 95]
  - Shingles (word N-grams) [Brin 95, Brod 98]
    - "a rose is a rose is a rose" => a_rose_is_a, rose_is_a_rose, is_a_rose_is
- Similarity measure
  - TFIDF [Shiv 95]
  - Set intersection [Brod 98] (specifically, Size_of_Intersection / Size_of_Union)
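As a small sketch of the shingle features and the set-based measure, the functions below build word 4-grams and compute Size_of_Intersection / Size_of_Union. The function names, the lowercasing, and whitespace tokenization are assumptions chosen for illustration.

```python
def shingles(text, n=4):
    """Return the set of word n-grams of `text`, joined with underscores."""
    words = text.lower().split()
    return {"_".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def resemblance(a, b):
    """Size of intersection divided by size of union of two shingle sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


doc1 = shingles("a rose is a rose is a rose")
doc2 = shingles("a rose is a rose is a flower")
print(sorted(doc1))
print(resemblance(doc1, doc2))
```

Because shingles are collected in a set, the repeated 4-grams of the first sentence are counted once, leaving three distinct shingles.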

Shingles + Set Intersection

- Computing the exact set intersection of shingles between all pairs of documents is expensive and infeasible
- Approximate using a cleverly chosen subset of shingles from each document (a sketch)

Shingles + Set Intersection

- Estimate size_of_intersection / size_of_union based on a short sketch ([Brod 97, Brod 98])
  - Create a "sketch vector" (e.g., of size 200) for each document
  - Documents which share more than t (say 80%) corresponding vector elements are similar
- For doc D, sketch[i] is computed as follows:
  - Let f map all shingles in the universe to 0..2^m (e.g., f = fingerprinting)
  - Let p_i be a specific random permutation on 0..2^m
  - Pick sketch[i] := MIN p_i(f(s)) over all shingles s in D
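The sketch construction above can be illustrated as follows. This is a hedged sketch under stated assumptions: true random permutations of a 2^m universe are impractical to store, so the code substitutes random linear maps x -> (a*x + b) mod a prime, which is a common approximation and not what [Brod 97] specifies; SHA-1 stands in for the fingerprint function f, and all names are hypothetical.

```python
import hashlib
import random

PRIME = (1 << 61) - 1  # modulus for the stand-in "permutations"


def fingerprint(shingle):
    """f: map a shingle to a 64-bit integer."""
    return int.from_bytes(hashlib.sha1(shingle.encode("utf-8")).digest()[:8], "big")


def make_permutations(count, seed=0):
    """Return `count` pairs (a, b), each describing the map x -> (a*x + b) mod PRIME."""
    rng = random.Random(seed)
    return [(rng.randrange(1, PRIME), rng.randrange(PRIME)) for _ in range(count)]


def sketch(shingle_set, permutations):
    """sketch[i] = min over shingles s of p_i(f(s)); the set must be non-empty."""
    prints = [fingerprint(s) for s in shingle_set]
    return [min((a * x + b) % PRIME for x in prints) for a, b in permutations]


def estimated_resemblance(sketch_a, sketch_b):
    """Fraction of positions at which two sketches agree."""
    matches = sum(1 for x, y in zip(sketch_a, sketch_b) if x == y)
    return matches / len(sketch_a)


perms = make_permutations(200)
doc1 = {"a_rose_is_a", "rose_is_a_rose", "is_a_rose_is"}
doc2 = {"a_rose_is_a", "rose_is_a_rose", "is_a_rose_is", "rose_is_a_flower"}
print(estimated_resemblance(sketch(doc1, perms), sketch(doc2, perms)))
```

The printed value is an estimate of size_of_intersection / size_of_union (0.75 for these two sets) and fluctuates with the choice of permutations; more sketch positions give a tighter estimate.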

Computing Sketch[i] for Doc1

![Computing Sketch[i] for Doc1](https://present5.com/presentation/c8d911793e5411b94e4bba0a95b13ccd/image-34.jpg)

- Start with 64-bit shingles (number line from 0 to 2^64)
- Permute on the number line with p_i
- Pick the min value

Test if Doc1.Sketch[i] = Doc2.Sketch[i]

![Test if Doc1.Sketch[i] = Doc2.Sketch[i]](https://present5.com/presentation/c8d911793e5411b94e4bba0a95b13ccd/image-35.jpg)

- Document 1 yields min value A, Document 2 yields min value B (number lines from 0 to 2^64)
- Are these equal?
- Test for 200 random permutations: p1, p2, …, p200

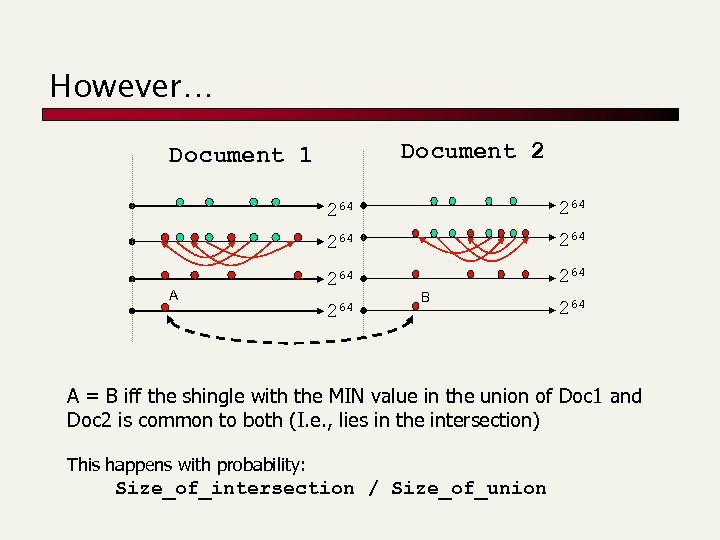

However…

[Figure: min values A (Document 1) and B (Document 2) on number lines from 0 to 2^64]

- A = B iff the shingle with the MIN value in the union of Doc1 and Doc2 is common to both (i.e., lies in the intersection)
- This happens with probability Size_of_intersection / Size_of_union

Mirror Detection

- Mirroring is the systematic replication of web pages across hosts
  - Single largest cause of duplication on the web
- Host1/a and Host2/b are mirrors iff, for all (or most) paths p, whenever http://Host1/a/p exists, http://Host2/b/p exists as well with identical (or near-identical) content, and vice versa

Mirror Detection example

- http://www.elsevier.com/ and http://www.elsevier.nl/
- Structural Classification of Proteins
  - http://scop.mrc-lmb.cam.ac.uk/scop
  - http://scop.berkeley.edu/
  - http://scop.wehi.edu.au/scop
  - http://pdb.weizmann.ac.il/scop
  - http://scop.protres.ru/

Motivation

- Why detect mirrors?
  - Smart crawling
    - Fetch from the fastest or freshest server
    - Avoid duplication
  - Better connectivity analysis
    - Combine inlinks
    - Avoid double counting outlinks
  - Redundancy in result listings
    - "If that fails you can try:

Bottom Up Mirror Detection [Cho 00]

![Bottom Up Mirror Detection](https://present5.com/presentation/c8d911793e5411b94e4bba0a95b13ccd/image-40.jpg)

- Maintain clusters of subgraphs
- Initialize clusters of trivial subgraphs
  - Group near-duplicate single documents into a cluster
- Subsequent passes
  - Merge clusters of the same cardinality and corresponding linkage
  - Avoid decreasing cluster cardinality
- To detect mirrors we need:
  - Adequate path overlap
  - Contents of corresponding pages within a small time range

Can we use URLs to find mirrors?

[Figure: directory trees of www.synthesis.org and synthesis.stanford.edu, with nodes labeled a, b, c, d]

www.synthesis.org

- www.synthesis.org/Docs/ProjAbs/synsys/synalysis.html
- www.synthesis.org/Docs/ProjAbs/synsys/visual-semi-quant.html
- www.synthesis.org/Docs/annual.report96.final.html
- www.synthesis.org/Docs/cicee-berlin-paper.html
- www.synthesis.org/Docs/myr5
- www.synthesis.org/Docs/myr5/cicee/bridge-gap.html
- www.synthesis.org/Docs/myr5/cs/cs-meta.html
- www.synthesis.org/Docs/myr5/mech-intro-mechatron.html
- www.synthesis.org/Docs/myr5/mech-take-home.html
- www.synthesis.org/Docs/myr5/synsys/experiential-learning.html
- www.synthesis.org/Docs/myr5/synsys/mm-mech-dissec.html
- www.synthesis.org/Docs/yr5ar
- www.synthesis.org/Docs/yr5ar/assess
- www.synthesis.org/Docs/yr5ar/cicee
- www.synthesis.org/Docs/yr5ar/cicee/bridge-gap.html
- www.synthesis.org/Docs/yr5ar/cicee/comp-integ-analysis.html

synthesis.stanford.edu

- synthesis.stanford.edu/Docs/ProjAbs/deliv/high-tech-…
- synthesis.stanford.edu/Docs/ProjAbs/mech-enhanced…
- synthesis.stanford.edu/Docs/ProjAbs/mech-intro-…
- synthesis.stanford.edu/Docs/ProjAbs/mech-mm-case-…
- synthesis.stanford.edu/Docs/ProjAbs/synsys/quant-dev-new-…
- synthesis.stanford.edu/Docs/annual.report96.final.html
- synthesis.stanford.edu/Docs/annual.report96.final_fn.html
- synthesis.stanford.edu/Docs/myr5/assessment
- synthesis.stanford.edu/Docs/myr5/assessment-…
- synthesis.stanford.edu/Docs/myr5/assessment/mm-forum-kiosk-…
- synthesis.stanford.edu/Docs/myr5/assessment/neato-ucb.html
- synthesis.stanford.edu/Docs/myr5/assessment/not-available.html
- synthesis.stanford.edu/Docs/myr5/cicee
- synthesis.stanford.edu/Docs/myr5/cicee/bridge-gap.html
- synthesis.stanford.edu/Docs/myr5/cicee-main.html
- synthesis.stanford.edu/Docs/myr5/cicee/comp-integ-analysis.html

Where are we?

- Spidering
- Web graph
- Some nice mathematical tools
  - Many other fun algorithms for crawling issues…
- Now, a glimpse of Google (thanks to Junghoo Cho, "3rd founder"…)

Google: Scale

- Number of pages indexed: 3B in November 2002
- Index refresh interval: once per month, ~ 1200 pages/sec
- Number of queries per day: 200M in April 2003, ~ 2000 queries/sec
- Runs on commodity Intel-Linux boxes

[Cho, 02]
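The per-second rates follow from simple division. A minimal sketch, assuming a 30-day month and a uniform load over the day (both simplifications introduced here, not stated on the slide):

```python
SECONDS_PER_DAY = 24 * 60 * 60


def per_second(count, days):
    """Average rate per second for `count` events spread evenly over `days` days."""
    return count / (days * SECONDS_PER_DAY)


crawl_rate = per_second(3 * 10**9, 30)    # 3B pages refreshed once per 30-day month
query_rate = per_second(200 * 10**6, 1)   # 200M queries per day
print(round(crawl_rate), round(query_rate))
```

Both values are in line with the rounded figures of about 1200 pages/sec and about 2000 queries/sec; real query traffic has peaks above the daily average.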

Google: Other Statistics

- Average page size: 10 KB
- Average query size: 40 B
- Average result size: 5 KB
- Average number of links per page: 10
- Total raw HTML data size: 3G x 10 KB = 30 TB!
- Inverted index roughly the same size as the raw corpus: 30 TB for the index itself
- With appropriate compression, 3:1
  - 20 TB of data residing on disk (and in memory!!!)

Google: Data Size and Crawling

- Efficient crawl is very important
  - 1 page/sec → 1200 machines just for crawling
  - Parallelization through thread/event queue necessary
  - Complex crawling algorithm -- No, No!
- Well-optimized crawler
  - ~ 100 pages/sec (10 ms/page)
  - ~ 12 machines for crawling
- Bandwidth consumption
  - 1200 x 10 KB x 8 bit ~ 100 Mbps
  - One dedicated OC3 line (155 Mbps) for crawling ~ $400,000 per year
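The machine counts and the bandwidth figure can be checked with a short calculation. This sketch assumes 1 KB = 1000 bytes and ignores protocol overhead; the function names are illustrative.

```python
def bandwidth_mbps(items_per_sec, kilobytes_per_item):
    """Sustained bandwidth in megabits per second, with 1 KB = 1000 bytes."""
    return items_per_sec * kilobytes_per_item * 1000 * 8 / 10**6


def machines_needed(target_rate, rate_per_machine):
    """Smallest number of machines whose combined rate reaches the target."""
    return -(-target_rate // rate_per_machine)  # ceiling division on integers


print(machines_needed(1200, 1))    # naive crawler, 1 page/sec per machine
print(machines_needed(1200, 100))  # optimized crawler, 100 pages/sec per machine
print(bandwidth_mbps(1200, 10))    # crawl traffic in Mbps
```

The exact bandwidth is 96 Mbps, which the slide rounds to 100 Mbps and which fits within one 155 Mbps OC3 line.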

Google: Data Size, Query Processing

- Index size: 10 TB → 100 disks
- Typically fewer than 5 disks per machine
- Potentially a 20-machine cluster to answer a query
  - If one machine goes down, the cluster goes down
- Two-tier index structure can be helpful
  - Tier 1: popular (high PageRank) page index
  - Tier 2: less popular page index
  - Most queries can be answered by the tier-1 cluster (with fewer machines)

Google: Implication of Query Load

- 2000 queries/sec
- Rule of thumb: 1 query/sec per CPU
  - Depends on number of disks, memory size, etc.
  - ~ 2000 machines just to answer queries
- 5 KB per answer page
  - 2000 x 5 KB x 8 bit ~ 80 Mbps
  - Half of a dedicated OC3 line (155 Mbps) ~ $300,000

Google: Hardware

- 50,000-machine Intel-Linux cluster
- Assuming 99.9% uptime (8 hours of downtime per year)
  - 50 machines are always down
  - Nightmare for system administrators
- Assuming 3-year hardware replacement
  - Set up, replace and dump 50 machines every day
  - Heterogeneity is unavoidable
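Both "50 machines" figures come from back-of-the-envelope arithmetic, sketched below under the assumptions that failures are independent and that replacements are spread evenly over 365-day years. The function names are illustrative.

```python
def machines_down(cluster_size, uptime):
    """Expected number of machines that are down at any given moment."""
    return cluster_size * (1 - uptime)


def replacements_per_day(cluster_size, lifetime_years):
    """Machines to replace each day if the whole cluster turns over every lifetime."""
    return cluster_size / (lifetime_years * 365)


print(round(machines_down(50_000, 0.999)))        # expected machines down
print(round(replacements_per_day(50_000, 3), 1))  # replacements per day
```

The replacement rate works out to about 46 machines per day, which the slide rounds to 50.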

Shingles computation (for Web Clustering)

- s1 = a_rose_is_a_rose_in_the = w1 w2 w3 w4 w5 w6 w7
- s2 = rose_is_a_rose_in_the_garden = w1 w2 w3 w4 w5 w6 w7
- 0/1 representation (using ASCII codes)
  - Assumption: a word is ~ 8 bytes → length(s_i) = 7 * 8 bytes = 448 bits
  - This represents S, a polynomial with coefficients in 0/1 (Z_2)
- Rabin fingerprint
  - Map 448 bits into a K = 40 bit space, with low collision probability
  - Generate an irreducible polynomial P of degree K-1 (see Galois field Z_2)
  - F(S) = S mod P

Shingles computation (an example)

- No explicit random permutation
  - Better to work by forcing length(s_i) = K * z, z in N (for instance 480 bits)
  - Induce a "random permutation" by shifting by 480 - 448 bits (32 positions)
- Simple example (just 4 words, of 1 char each)
  - S1 = a_b_c_d, S2 = b_c_d_e (a is 97 in ASCII)
  - S1^{0/1} = 01100001 01100010 01100011 01100100 = 32 bits
  - S2^{0/1} = 01100010 01100011 01100100 01100101 = 32 bits
- Assume K = 5 bits, so each string is padded to a multiple of 5 bits
  - S1^{0/1} = 01100001 01100010 01100011 01100100 000 = 35 bits
  - S2^{0/1} = 01100010 01100011 01100100 01100101 000 = 35 bits

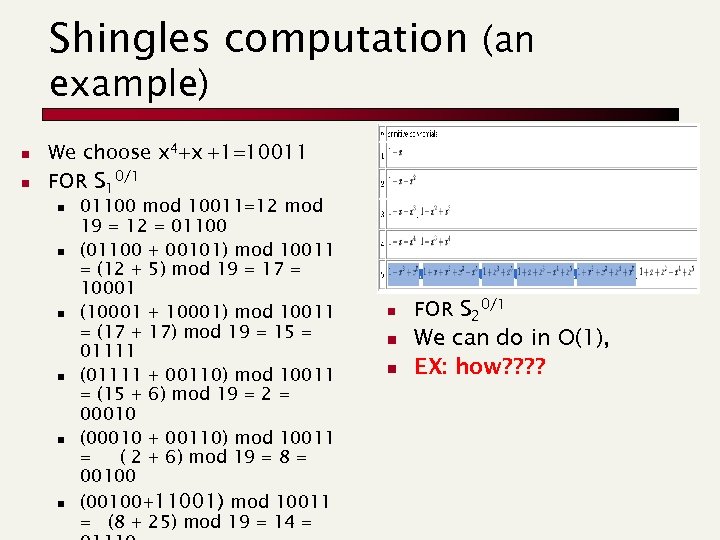

Shingles computation (an example)

- We choose x^4 + x + 1 = 10011
- For S1^{0/1}, taken in 5-bit chunks:
  - 01100 mod 10011 = 12 mod 19 = 12 = 01100
  - (01100 + 00101) mod 10011 = (12 + 5) mod 19 = 17 = 10001
  - (10001 + 10001) mod 10011 = (17 + 17) mod 19 = 15 = 01111
  - (01111 + 00110) mod 10011 = (15 + 6) mod 19 = 2 = 00010
  - (00010 + 00110) mod 10011 = (2 + 6) mod 19 = 8 = 01000
  - (01000 + 11001) mod 10011 = (8 + 25) mod 19 = 14 = 01110
- For S2^{0/1}: we can do it in O(1). EX: how?

Shingle Computation (using XOR and other operations)

- Since coefficients are 0 or 1, any such polynomial can be represented as a bit string
- Addition becomes XOR of these bit strings
- Multiplication is shift & XOR
- Modulo reduction is done by repeatedly substituting the highest power with the remainder of the irreducible polynomial (also shift & XOR)
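These rules translate directly into integer bit operations. The following is a minimal sketch, with polynomials over Z_2 stored as Python integers (bit i is the coefficient of x^i); the function names are assumptions. Note that in this arithmetic 10011 stands for the polynomial x^4 + x + 1, not for the integer 19.

```python
def gf2_mod(value, poly):
    """Remainder of `value` divided by `poly`, both read as polynomials over Z_2."""
    poly_degree = poly.bit_length() - 1
    while value.bit_length() - 1 >= poly_degree:
        shift = value.bit_length() - 1 - poly_degree
        value ^= poly << shift   # subtracting the aligned divisor is an XOR
    return value


def gf2_mulmod(a, b, poly):
    """Product of a and b modulo poly: shift-and-XOR multiplication, then reduction."""
    product = 0
    while b:
        if b & 1:
            product ^= a
        a <<= 1
        b >>= 1
    return gf2_mod(product, poly)


P = 0b10011                                 # x^4 + x + 1
print(bin(0b0110 ^ 0b0101))                 # addition is XOR
print(bin(gf2_mod(0b10000, P)))             # x^4 reduces to x + 1
print(bin(gf2_mulmod(0b10, 0b1000, P)))     # x * x^3 = x^4, reduced the same way
```

A fingerprint F(S) = S mod P is then `gf2_mod(S, P)` with S the bit string of the shingle read as one integer.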

Shingles computation (final step)

- Given the set of fingerprints
- Take 1 out of m fingerprints (instead of the minimum)
- This is the set of fingerprints for a given document D
- Repeat the generation for each document D1, …, Dn
- We obtain the set of tuples T = {

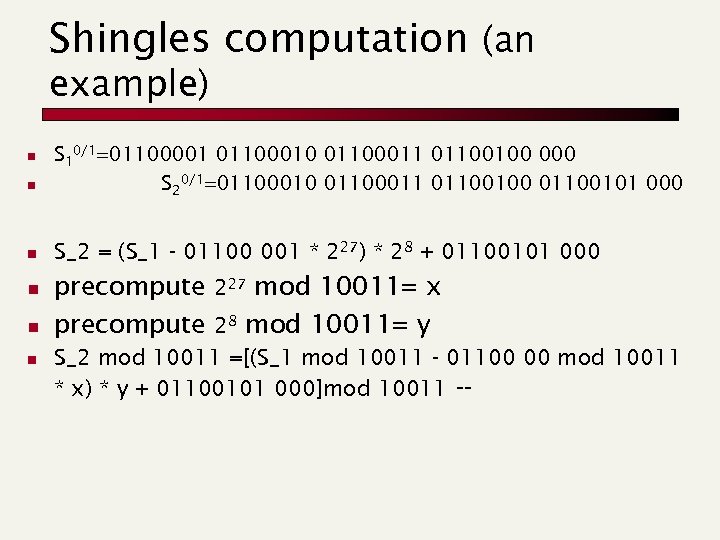

Shingles computation (an example)

- S1^{0/1} = 01100001 01100010 01100011 01100100 000
- S2^{0/1} = 01100010 01100011 01100100 01100101 000
- S_2 = (S_1 - 01100001 * 2^27) * 2^8 + 01100101 000
  - precompute 2^27 mod 10011 = x
  - precompute 2^8 mod 10011 = y
- S_2 mod 10011 = [(S_1 mod 10011 - 01100001 mod 10011 * x) * y + 01100101 000] mod 10011
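The O(1) update can be sketched with ordinary integers. This is a simplified illustration, not the slide's exact setting: it uses integer arithmetic modulo 19 (as in the chunk-by-chunk example), a 4-byte window without the three padding bits, so the outgoing byte has weight 2^24 rather than 2^27. The function names are hypothetical.

```python
def window_value(data):
    """Interpret a byte string as one big-endian integer."""
    return int.from_bytes(data, "big")


def roll(prev_mod, old_byte, new_byte, window_len, modulus):
    """Update (window mod modulus) when the window slides forward by one byte."""
    x = pow(2, 8 * (window_len - 1), modulus)  # weight of the outgoing byte
    y = pow(2, 8, modulus)                     # shift the window by one byte
    return ((prev_mod - old_byte * x) * y + new_byte) % modulus


text = b"abcde"
s1 = window_value(text[0:4]) % 19              # fingerprint of "abcd"
s2 = roll(s1, text[0], text[4], 4, 19)         # fingerprint of "bcde" from s1
print(s2 == window_value(text[1:5]) % 19)
```

In a real implementation x and y would be computed once, so each new shingle costs a constant number of operations regardless of its length.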