
- Number of slides: 84

Annotation: A Tutorial
Eduard Hovy
Information Sciences Institute, University of Southern California
4676 Admiralty Way, Marina del Rey, CA 90292, USA
hovy@isi.edu
http://www.isi.edu/~hovy


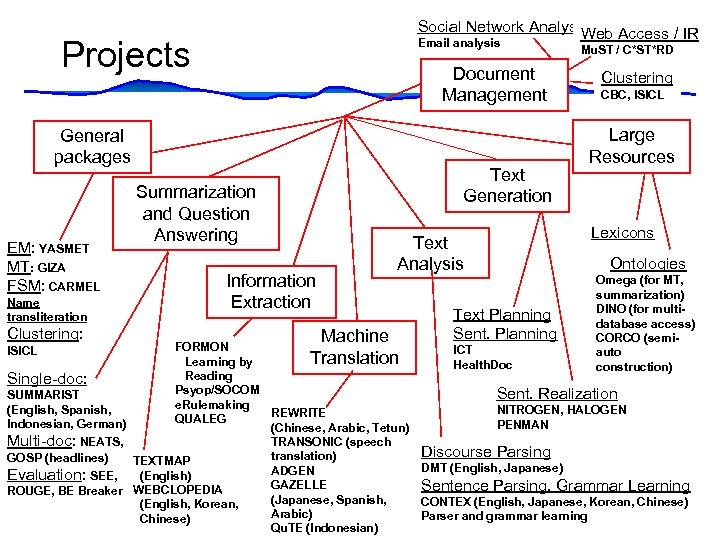

[Slide: map of natural language projects and tools. Areas named on the slide: Machine Translation, Summarization and Question Answering, Text Generation (text planning, sentence planning, sentence realization), Information Extraction, Text Analysis (sentence parsing, grammar learning, discourse parsing), Clustering, Large Resources (lexicons, ontologies), Learning by Reading, Social Network Analysis, Web Access / IR, Email analysis, Document Management, Name transliteration, Evaluation.]
[General packages: YASMET (EM), GIZA (MT), CARMEL (FSM).]
[Other systems named: MuST / C*ST*RD, CBC, ISICL, Omega (for MT, summarization), DINO (for multidatabase access), CORCO (semiauto construction), FORMON, ICT, HealthDoc, Psyop/SOCOM, SUMMARIST, eRulemaking, NITROGEN, HALOGEN, REWRITE, QUALEG, PENMAN, TRANSONIC (speech translation), NEATS, GOSP (headlines), TEXTMAP, DMT, ADGEN, SEE, GAZELLE, ROUGE, BE Breaker, WEBCLOPEDIA, CONTEX, QuTE (Indonesian).]


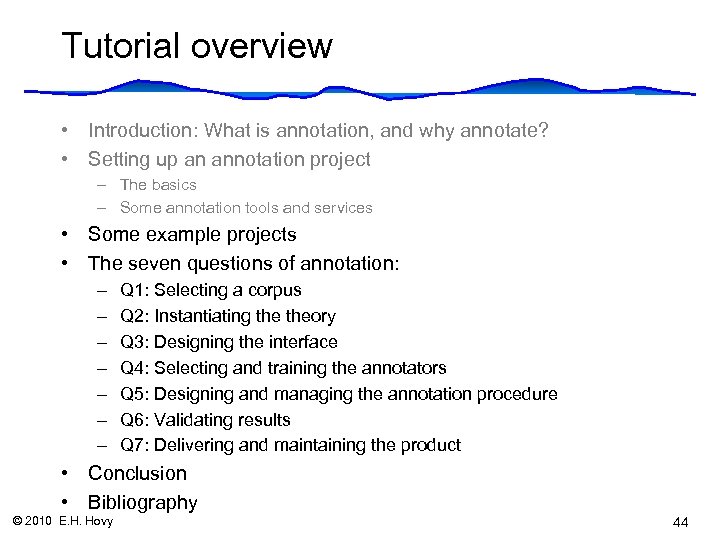

Tutorial overview
• Introduction: What is annotation, and why annotate?
• Setting up an annotation project:
  – The basics
  – Some annotation tools and services
• Some example projects
• The seven questions of annotation:
  – Q1: Selecting a corpus
  – Q2: Instantiating theory
  – Q3: Designing the interface
  – Q4: Selecting and training the annotators
  – Q5: Designing and managing the annotation procedure
  – Q6: Validating results
  – Q7: Delivering and maintaining the product
• Conclusion
• Bibliography

© 2010 E. H. Hovy


INTRODUCTION: WHAT IS ANNOTATION, AND WHY ANNOTATE?


Definition of Annotation
• Definition: Annotation (‘tagging’) is the process of adding new information into raw data by humans (annotators). Usually, the information is added by many small individual decisions, in many places throughout the data. The addition process usually requires some sort of mental decision that depends both on the raw data and on some theory or knowledge that the annotator has internalized earlier.
• Typical annotation steps:
  – Decide which fragment of the data to annotate
  – Add to that fragment a specific bit of information, usually chosen from a fixed set of options


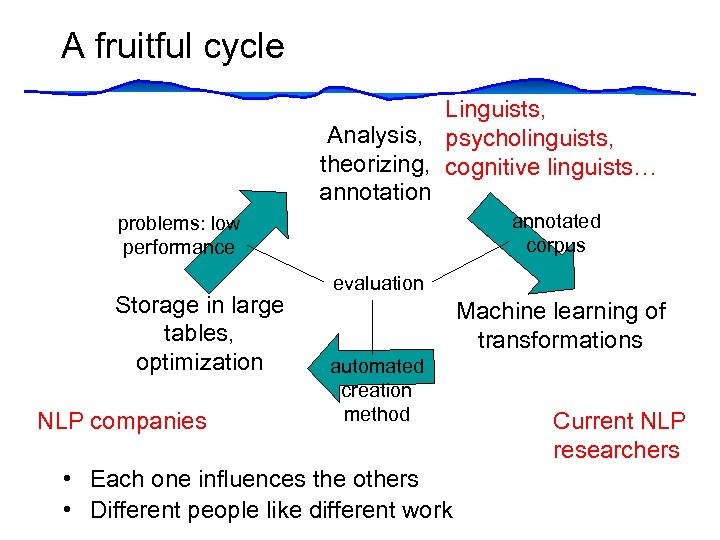

A fruitful cycle
[Diagram: a cycle of communities and the work each contributes]
  – Linguists, psycholinguists, cognitive linguists…: analysis, theorizing, annotation, yielding an annotated corpus
  – Current NLP researchers: machine learning of transformations, yielding an automated creation method
  – NLP companies: storage in large tables, optimization
  – Evaluation, revealing problems: low performance
• Each one influences the others
• Different people like different work


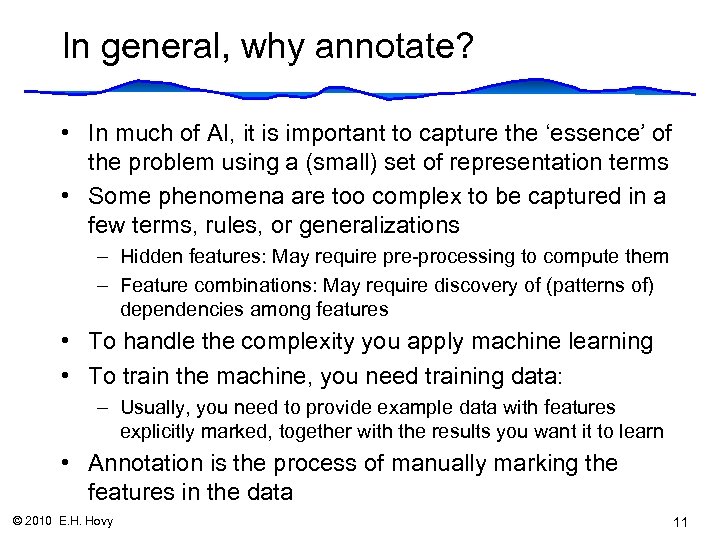

In general, why annotate?
• In much of AI, it is important to capture the ‘essence’ of the problem using a (small) set of representation terms
• Some phenomena are too complex to be captured in a few terms, rules, or generalizations
  – Hidden features: May require pre-processing to compute them
  – Feature combinations: May require discovery of (patterns of) dependencies among features
• To handle the complexity you apply machine learning
• To train the machine, you need training data:
  – Usually, you need to provide example data with features explicitly marked, together with the results you want it to learn
• Annotation is the process of manually marking the features in the data


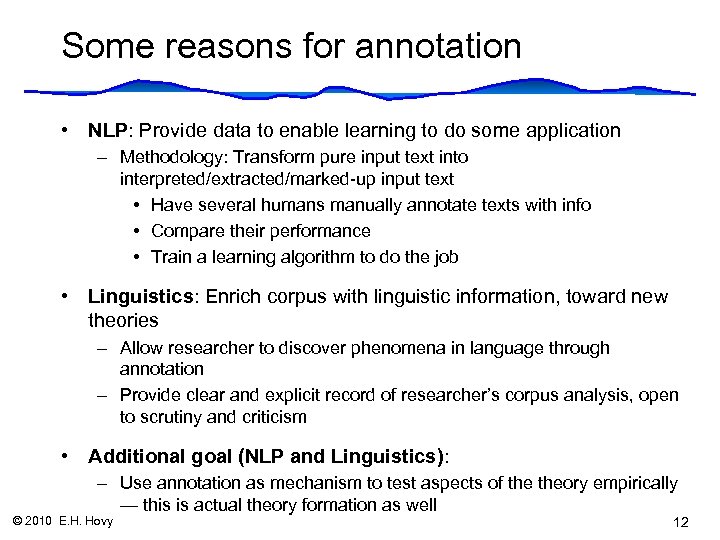

Some reasons for annotation
• NLP: Provide data to enable learning to do some application
  – Methodology: Transform pure input text into interpreted/extracted/marked-up input text
    • Have several humans manually annotate texts with info
    • Compare their performance
    • Train a learning algorithm to do the job
• Linguistics: Enrich corpus with linguistic information, toward new theories
  – Allow researcher to discover phenomena in language through annotation
  – Provide clear and explicit record of researcher’s corpus analysis, open to scrutiny and criticism
• Additional goal (NLP and Linguistics):
  – Use annotation as mechanism to test aspects of theory empirically; this is actual theory formation as well


Some NL phenomena to annotate
• Somewhat easier
  – Bracketing (scope) of predications
  – Word sense selection (incl. copula)
  – NP structure: genitives, modifiers…
  – Concepts: ontology definition
  – Concept structure (incl. frames and thematic roles)
  – Coreference (entities and events)
  – Pronoun classification (ref, bound, event, generic, other)
  – Identification of events
  – Temporal relations (incl. discourse and aspect)
  – Manner relations
  – Spatial relations
  – Direct quotation and reported speech
• More difficult
  – Quantifier phrases and numerical expressions
  – Comparatives
  – Coordination
  – Information structure (theme/rheme)
  – Focus
  – Discourse structure
  – Other adverbials (epistemic modals, evidentials)
  – Identification of propositions (modality)
  – Opinions and subjectivity
  – Pragmatics/speech acts
  – Polarity/negation
  – Presuppositions
  – Metaphors


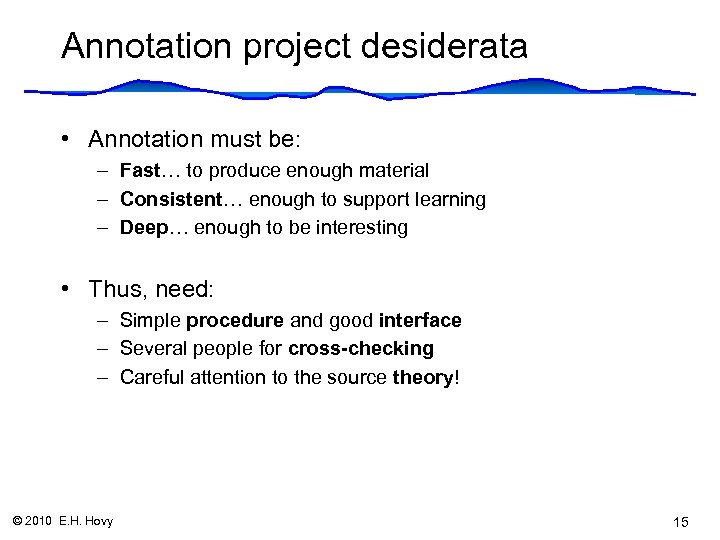

Annotation project desiderata
• Annotation must be:
  – Fast… to produce enough material
  – Consistent… enough to support learning
  – Deep… enough to be interesting
• Thus, need:
  – Simple procedure and good interface
  – Several people for cross-checking
  – Careful attention to the source theory!


SETTING UP AN ANNOTATION PROJECT: THE BASICS


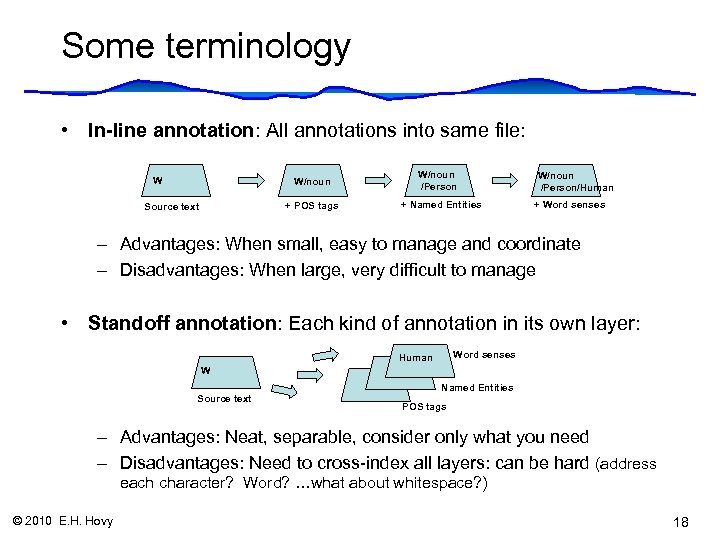

Some terminology
• In-line annotation: All annotations go into the same file:
  – Source text: W
  – + POS tags: W/noun
  – + Named Entities: W/noun/Person
  – + Word senses: W/noun/Person/Human
  – Advantages: When small, easy to manage and coordinate
  – Disadvantages: When large, very difficult to manage
• Standoff annotation: Each kind of annotation in its own layer:
  – Layers: Source text (W), POS tags, Named Entities, Word senses (Human)
  – Advantages: Neat, separable, consider only what you need
  – Disadvantages: Need to cross-index all layers: can be hard (address each character? Word? …what about whitespace?)
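
The difference between the two storage styles can be sketched in a few lines of Python. This is a minimal illustration, not a format used by any particular tool: the `Span` class, the layer names, the labels, and the sample sentence are all assumptions made for the example. Each standoff layer stores only character offsets into the untouched source text, and an in-line rendering is generated from one layer on demand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Span:
    """One standoff annotation: character offsets into the source plus a label."""
    start: int  # inclusive character offset
    end: int    # exclusive character offset
    label: str


# Hypothetical source text; it is never modified by annotation.
SOURCE = "Mrs. Hills said many countries have made progress."

# Each kind of annotation lives in its own layer (hypothetical labels).
LAYERS = {
    "pos": [Span(0, 4, "NNP"), Span(5, 10, "NNP"), Span(11, 15, "VBD")],
    "named_entities": [Span(0, 10, "Person")],
}


def covered_text(source: str, span: Span) -> str:
    """Return the piece of source text that a span points at."""
    return source[span.start:span.end]


def to_inline(source: str, spans: list) -> str:
    """Render one layer of non-overlapping spans as in-line text/label markup."""
    pieces, position = [], 0
    for span in sorted(spans, key=lambda s: s.start):
        pieces.append(source[position:span.start])               # untouched text
        pieces.append(f"{source[span.start:span.end]}/{span.label}")
        position = span.end
    pieces.append(source[position:])
    return "".join(pieces)


inline_pos = to_inline(SOURCE, LAYERS["pos"])
entity_text = covered_text(SOURCE, LAYERS["named_entities"][0])
```

Character offsets are used here because they sidestep the tokenization and whitespace questions raised on the slide; indexing by word would require every layer to agree on one tokenizer.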


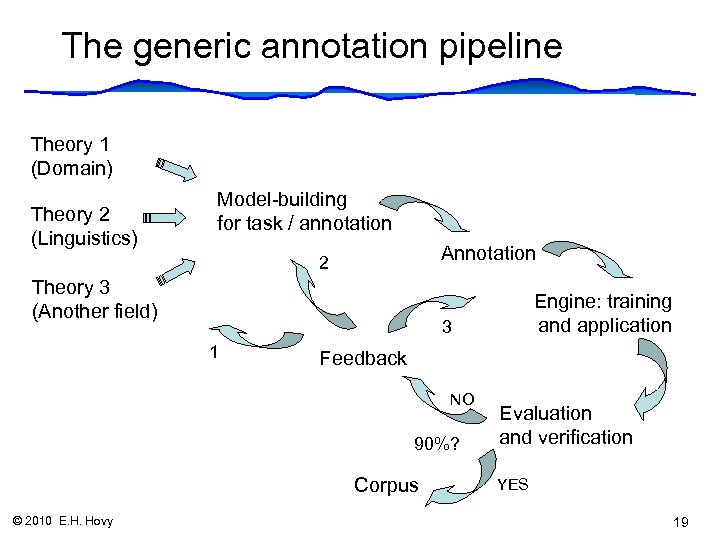

The generic annotation pipeline
[Diagram: Theory 1 (Domain), Theory 2 (Linguistics), and Theory 3 (Another field) feed model-building for the task / annotation. The corpus is annotated, an engine is trained and applied, and the result goes to evaluation and verification. A “90%?” test follows: YES exits the loop; NO sends feedback to earlier steps, along paths labeled 1, 2, and 3.]


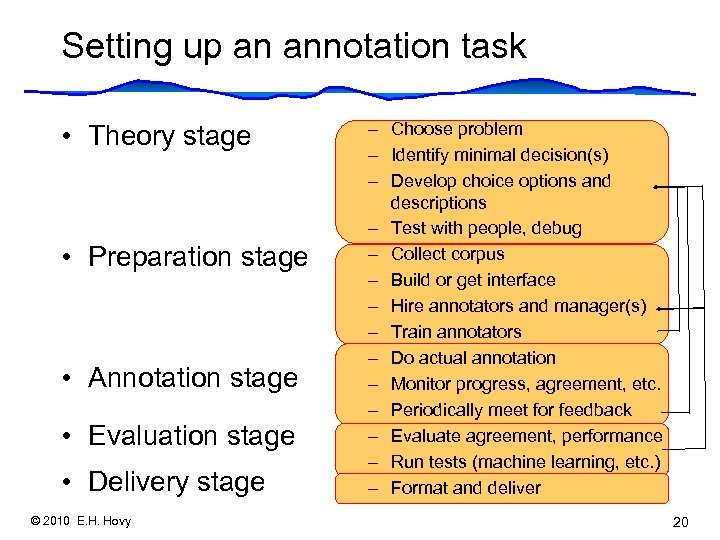

Setting up an annotation task
• Theory stage
  – Choose problem
  – Identify minimal decision(s)
  – Develop choice options and descriptions
  – Test with people, debug
• Preparation stage
  – Collect corpus
  – Build or get interface
  – Hire annotators and manager(s)
  – Train annotators
• Annotation stage
  – Do actual annotation
  – Monitor progress, agreement, etc.
  – Periodically meet for feedback
• Evaluation stage
  – Evaluate agreement, performance
  – Run tests (machine learning, etc.)
• Delivery stage
  – Format and deliver


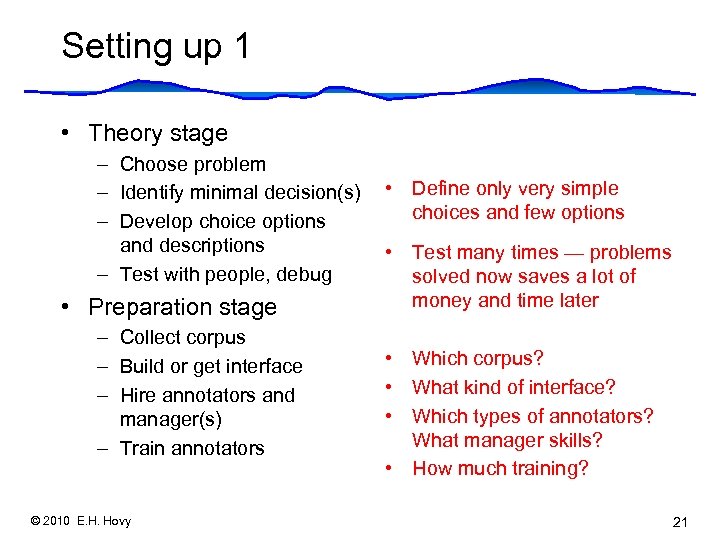

Setting up 1
• Theory stage
  – Choose problem
  – Identify minimal decision(s)
    • Define only very simple choices and few options
  – Develop choice options and descriptions
  – Test with people, debug
    • Test many times: problems solved now save a lot of money and time later
• Preparation stage
  – Collect corpus
    • Which corpus?
  – Build or get interface
    • What kind of interface?
  – Hire annotators and manager(s)
    • Which types of annotators? What manager skills?
  – Train annotators
    • How much training?


Setting up 2
• Annotation stage
  – Do actual annotation
  – Monitor progress, agreement, etc.
  – Periodically meet for feedback
  – Questions: What procedure? What to monitor?
• Evaluation stage
  – Evaluate agreement, performance
  – Run tests (machine learning, etc.)
  – Questions: How to evaluate? What to test? When is it ‘good enough’?
• Delivery stage
  – Format and deliver
  – Question: What format?


SETTING UP: ANNOTATION TOOLS AND SERVICES


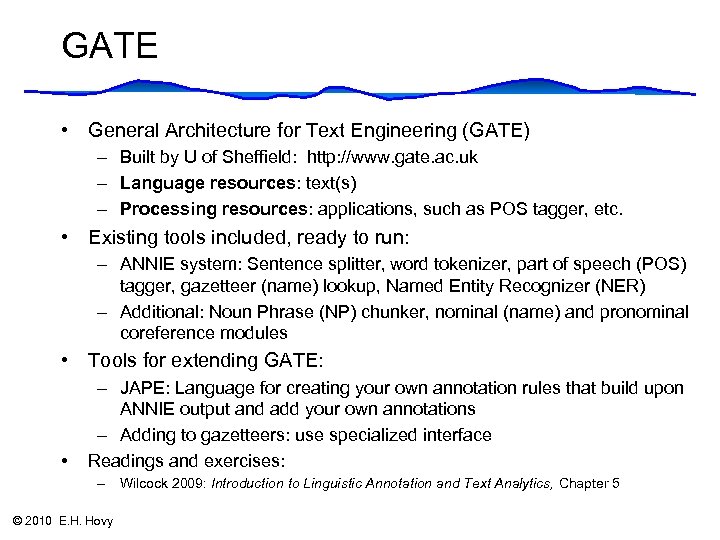

GATE
• General Architecture for Text Engineering (GATE)
  – Built by U of Sheffield: http://www.gate.ac.uk
  – Language resources: text(s)
  – Processing resources: applications, such as POS tagger, etc.
• Existing tools included, ready to run:
  – ANNIE system: Sentence splitter, word tokenizer, part of speech (POS) tagger, gazetteer (name) lookup, Named Entity Recognizer (NER)
  – Additional: Noun Phrase (NP) chunker, nominal (name) and pronominal coreference modules
• Tools for extending GATE:
  – JAPE: Language for creating your own annotation rules that build upon ANNIE output and add your own annotations
  – Adding to gazetteers: use specialized interface
• Readings and exercises:
  – Wilcock 2009: Introduction to Linguistic Annotation and Text Analytics, Chapter 5


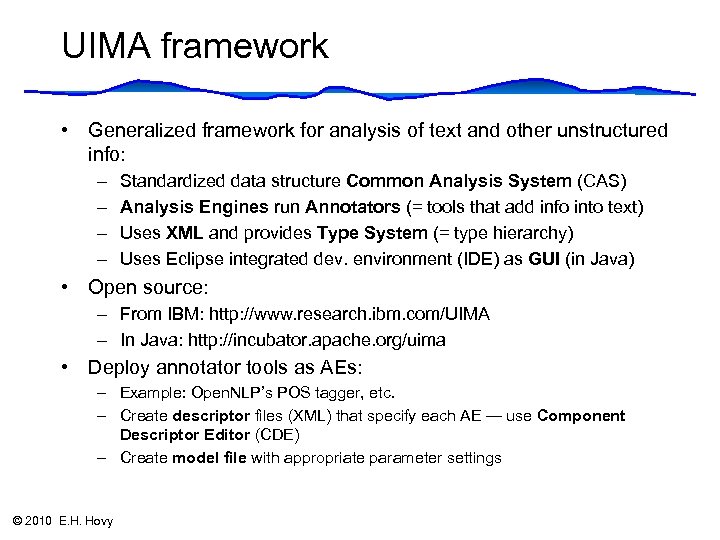

UIMA framework
• Generalized framework for analysis of text and other unstructured info:
  – Standardized data structure: Common Analysis System (CAS)
  – Analysis Engines run Annotators (= tools that add info into text)
  – Uses XML and provides Type System (= type hierarchy)
  – Uses Eclipse integrated dev. environment (IDE) as GUI (in Java)
• Open source:
  – From IBM: http://www.research.ibm.com/UIMA
  – In Java: http://incubator.apache.org/uima
• Deploy annotator tools as AEs:
  – Example: OpenNLP’s POS tagger, etc.
  – Create descriptor files (XML) that specify each AE, using the Component Descriptor Editor (CDE)
  – Create model file with appropriate parameter settings


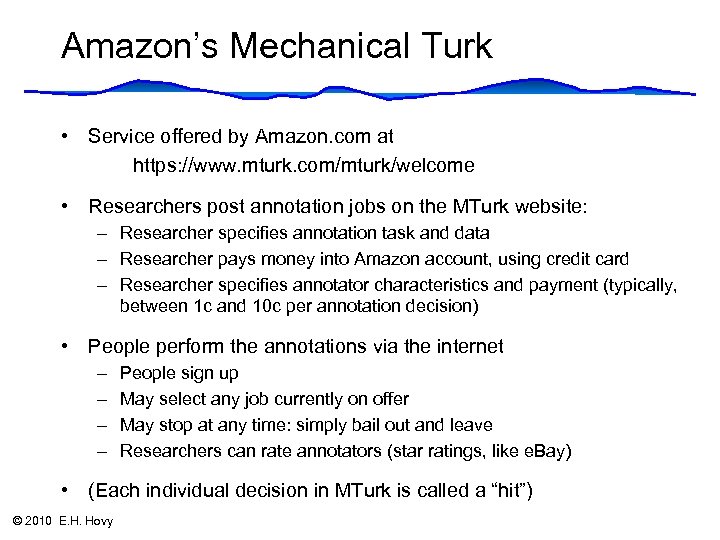

Amazon’s Mechanical Turk
• Service offered by Amazon.com at https://www.mturk.com/mturk/welcome
• Researchers post annotation jobs on the MTurk website:
  – Researcher specifies annotation task and data
  – Researcher pays money into Amazon account, using credit card
  – Researcher specifies annotator characteristics and payment (typically, between 1¢ and 10¢ per annotation decision)
• People perform the annotations via the internet
  – People sign up
  – May select any job currently on offer
  – May stop at any time: simply bail out and leave
  – Researchers can rate annotators (star ratings, like eBay)
• (Each individual decision in MTurk is called a “hit”)
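
The per-decision price range on this slide makes a rough labor budget easy to estimate. The sketch below is only back-of-the-envelope arithmetic: the corpus size (10,000 items) and the redundancy (3 annotators per item) are made-up assumptions, and any service fees charged on top of worker payments are left out.

```python
def estimate_cost_cents(num_items: int, annotators_per_item: int,
                        cents_per_decision: int) -> int:
    """Labor cost in cents when every item is judged by several annotators."""
    return num_items * annotators_per_item * cents_per_decision


# Hypothetical project: 10,000 items, each judged by 3 different annotators.
low_cents = estimate_cost_cents(10_000, 3, 1)    # at 1 cent per decision
high_cents = estimate_cost_cents(10_000, 3, 10)  # at 10 cents per decision

print(f"${low_cents / 100:.2f} to ${high_cents / 100:.2f}")  # $300.00 to $3000.00
```

Working in whole cents keeps the arithmetic exact; the conversion to dollars happens only when printing.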


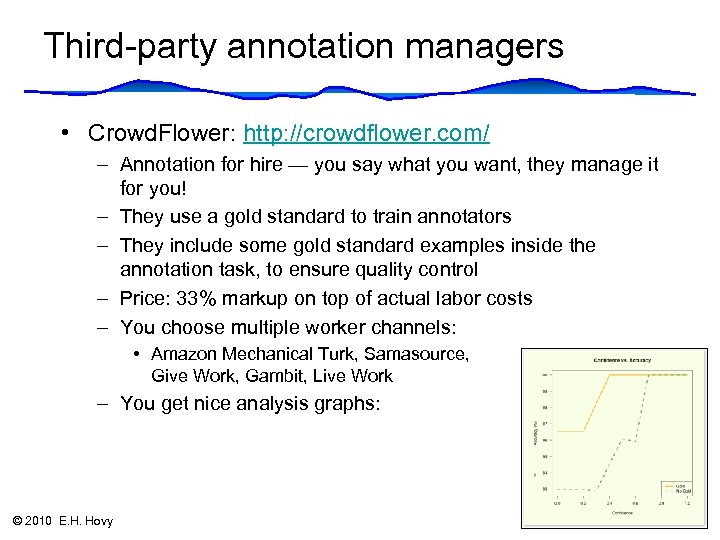

Third-party annotation managers
• CrowdFlower: http://crowdflower.com/
  – Annotation for hire: you say what you want, they manage it for you!
  – They use a gold standard to train annotators
  – They include some gold standard examples inside the annotation task, to ensure quality control
  – Price: 33% markup on top of actual labor costs
  – You choose multiple worker channels:
    • Amazon Mechanical Turk, Samasource, Give Work, Gambit, Live Work
  – You get nice analysis graphs:
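
Embedding gold-standard items in the task allows a simple form of quality control: score each worker on the gold items only, and keep the judgments of workers who score well. The sketch below illustrates that idea under stated assumptions; it is not CrowdFlower's actual mechanism, and the function names, the 0.8 threshold, and the toy data are all hypothetical.

```python
from collections import defaultdict


def worker_gold_accuracy(judgments, gold):
    """Accuracy of each worker on the embedded gold items.

    judgments: iterable of (worker_id, item_id, label) tuples
    gold:      dict mapping the gold item_ids to their correct label
    """
    correct = defaultdict(int)
    seen = defaultdict(int)
    for worker, item, label in judgments:
        if item in gold:  # non-gold items cannot be scored
            seen[worker] += 1
            correct[worker] += int(label == gold[item])
    return {worker: correct[worker] / seen[worker] for worker in seen}


def trusted_workers(judgments, gold, min_accuracy=0.8):
    """Workers whose gold accuracy meets the (assumed) threshold."""
    accuracy = worker_gold_accuracy(judgments, gold)
    return {worker for worker, acc in accuracy.items() if acc >= min_accuracy}


# Toy data: two gold items (g1, g2) mixed in with one ordinary item (x1).
GOLD = {"g1": "pos", "g2": "neg"}
JUDGMENTS = [
    ("w1", "g1", "pos"), ("w1", "g2", "neg"), ("w1", "x1", "pos"),
    ("w2", "g1", "neg"), ("w2", "g2", "neg"), ("w2", "x1", "neg"),
]

accuracy = worker_gold_accuracy(JUDGMENTS, GOLD)
trusted = trusted_workers(JUDGMENTS, GOLD)
```

In this toy run, worker `w1` answers both gold items correctly and is kept, while `w2` gets one of two right and falls below the threshold. Workers who never saw a gold item receive no score and are therefore not trusted.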


SOME EXAMPLE PROJECTS


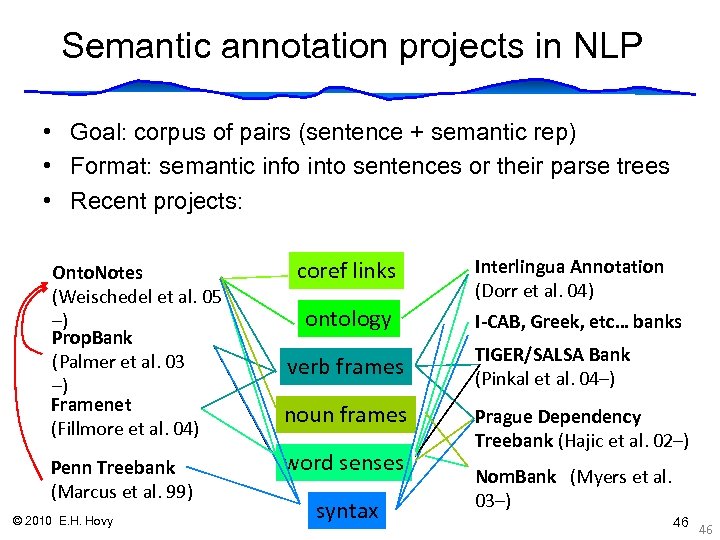

Semantic annotation projects in NLP
• Goal: corpus of pairs (sentence + semantic rep)
• Format: semantic info into sentences or their parse trees
• Recent projects:
  – OntoNotes (Weischedel et al. 05–)
  – PropBank (Palmer et al. 03–)
  – FrameNet (Fillmore et al. 04)
  – Penn Treebank (Marcus et al. 99)
  – Interlingua Annotation (Dorr et al. 04)
  – I-CAB, Greek, etc… banks
  – TIGER/SALSA Bank (Pinkal et al. 04–)
  – Prague Dependency Treebank (Hajic et al. 02–)
  – NomBank (Meyers et al. 03–)
[Diagram labels for the kinds of information covered: syntax, word senses, verb frames, noun frames, coref links, ontology]


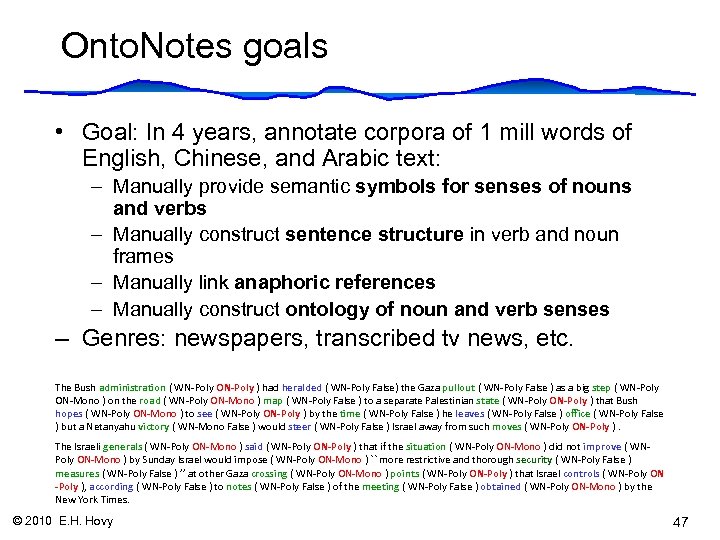

OntoNotes goals
• Goal: In 4 years, annotate corpora of 1 million words of English, Chinese, and Arabic text:
  – Manually provide semantic symbols for senses of nouns and verbs
  – Manually construct sentence structure in verb and noun frames
  – Manually link anaphoric references
  – Manually construct ontology of noun and verb senses
  – Genres: newspapers, transcribed TV news, etc.

Example text with sense tags:

The Bush administration ( WN-Poly ON-Poly ) had heralded ( WN-Poly False ) the Gaza pullout ( WN-Poly False ) as a big step ( WN-Poly ON-Mono ) on the road ( WN-Poly ON-Mono ) map ( WN-Poly False ) to a separate Palestinian state ( WN-Poly ON-Poly ) that Bush hopes ( WN-Poly ON-Mono ) to see ( WN-Poly ON-Poly ) by the time ( WN-Poly False ) he leaves ( WN-Poly False ) office ( WN-Poly False ) but a Netanyahu victory ( WN-Mono False ) would steer ( WN-Poly False ) Israel away from such moves ( WN-Poly ON-Poly ). The Israeli generals ( WN-Poly ON-Mono ) said ( WN-Poly ON-Poly ) that if the situation ( WN-Poly ON-Mono ) did not improve ( WN-Poly ON-Mono ) by Sunday Israel would impose ( WN-Poly ON-Mono ) `` more restrictive and thorough security ( WN-Poly False ) measures ( WN-Poly False ) '' at other Gaza crossing ( WN-Poly ON-Mono ) points ( WN-Poly ON-Poly ) that Israel controls ( WN-Poly ON-Poly ), according ( WN-Poly False ) to notes ( WN-Poly False ) of the meeting ( WN-Poly False ) obtained ( WN-Poly ON-Mono ) by the New York Times.


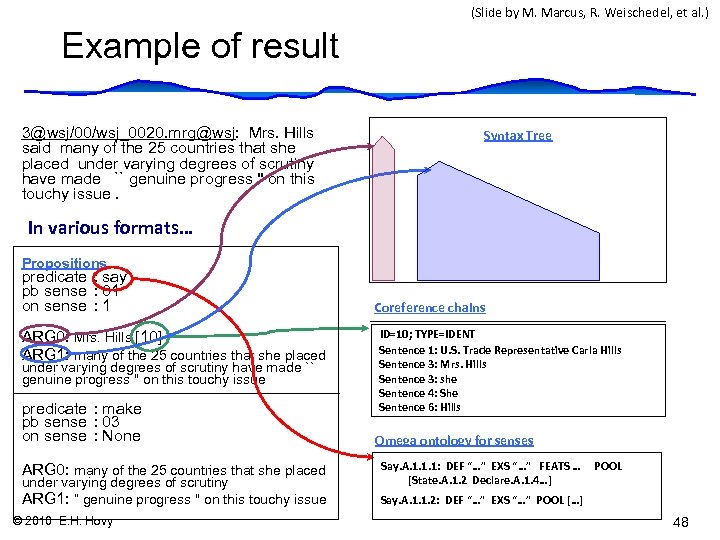

(Slide by M. Marcus, R. Weischedel, et al.)
Example of result
3@wsj/00/wsj_0020.mrg@wsj: Mrs. Hills said many of the 25 countries that she placed under varying degrees of scrutiny have made `` genuine progress '' on this touchy issue.
• Syntax Tree: In various formats…
• Propositions
  – predicate: say; pb sense: 01; on sense: 1
    • ARG0: Mrs. Hills [10]
    • ARG1: many of the 25 countries that she placed under varying degrees of scrutiny have made `` genuine progress '' on this touchy issue
  – predicate: make; pb sense: 03; on sense: None
    • ARG0: many of the 25 countries that she placed under varying degrees of scrutiny
    • ARG1: `` genuine progress '' on this touchy issue
• Coreference chains: ID=10; TYPE=IDENT
  – Sentence 1: U.S. Trade Representative Carla Hills
  – Sentence 3: Mrs. Hills
  – Sentence 3: she
  – Sentence 4: She
  – Sentence 6: Hills
• Omega ontology for senses
  – Say.A.1.1.1: DEF “…” EXS “…” FEATS … [State.A.1.2 Declare.A.1.4…] POOL
  – Say.A.1.1.2: DEF “…” EXS “…” POOL […]
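
To make the layered result concrete, the propositions and the coreference chain shown on this slide can be held in small Python records. This is an illustrative sketch only: the class and field names are hypothetical and do not reproduce the file format of the OntoNotes release. The values are copied from the slide.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Proposition:
    predicate: str
    pb_sense: str             # PropBank sense, e.g. "01"
    on_sense: Optional[str]   # OntoNotes sense; None when not assigned
    args: dict = field(default_factory=dict)


@dataclass
class CorefChain:
    chain_id: int
    chain_type: str
    mentions: list  # (sentence number, mention text) pairs


COUNTRIES = ("many of the 25 countries that she placed under "
             "varying degrees of scrutiny")
PROGRESS = "`` genuine progress '' on this touchy issue"

propositions = [
    Proposition("say", "01", "1", {
        "ARG0": "Mrs. Hills",
        "ARG1": f"{COUNTRIES} have made {PROGRESS}",
    }),
    Proposition("make", "03", None, {"ARG0": COUNTRIES, "ARG1": PROGRESS}),
]

chain = CorefChain(10, "IDENT", [
    (1, "U.S. Trade Representative Carla Hills"),
    (3, "Mrs. Hills"),
    (3, "she"),
    (4, "She"),
    (6, "Hills"),
])


def mentions_in_sentence(coref: CorefChain, sentence: int) -> list:
    """All mentions of the chain's entity that occur in one sentence."""
    return [text for number, text in coref.mentions if number == sentence]


sentence_3_mentions = mentions_in_sentence(chain, 3)
```

Keeping propositions and coreference chains in separate records mirrors the layered design of the example: each layer can be loaded or ignored independently.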


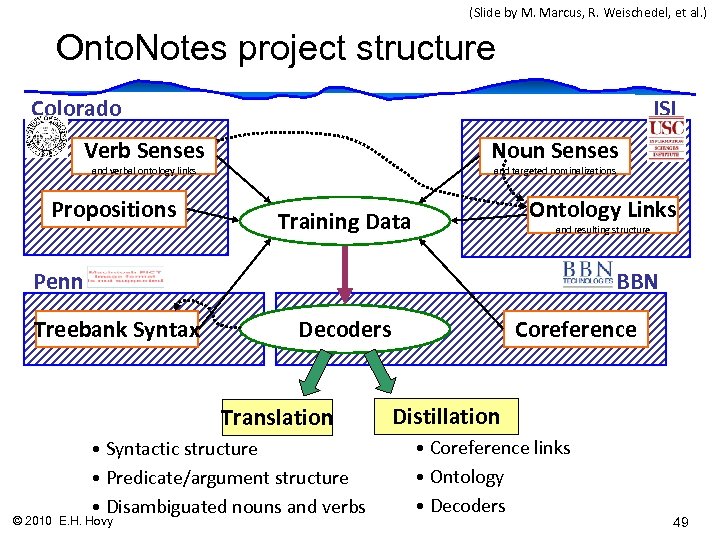

(Slide by M. Marcus, R. Weischedel, et al.)
OntoNotes project structure
[Diagram: division of work among the sites Colorado, ISI, Penn, and BBN. Component labels on the slide: Treebank Syntax; Verb Senses and verbal ontology links; Noun Senses and targeted nominalizations; Propositions; Ontology Links; Coreference; Training Data; Decoders; Translation; Distillation.]
• Resulting structure:
  – Syntactic structure
  – Predicate/argument structure
  – Disambiguated nouns and verbs
  – Coreference links
  – Ontology
  – Decoders


THE SEVEN QUESTIONS OF ANNOTATION


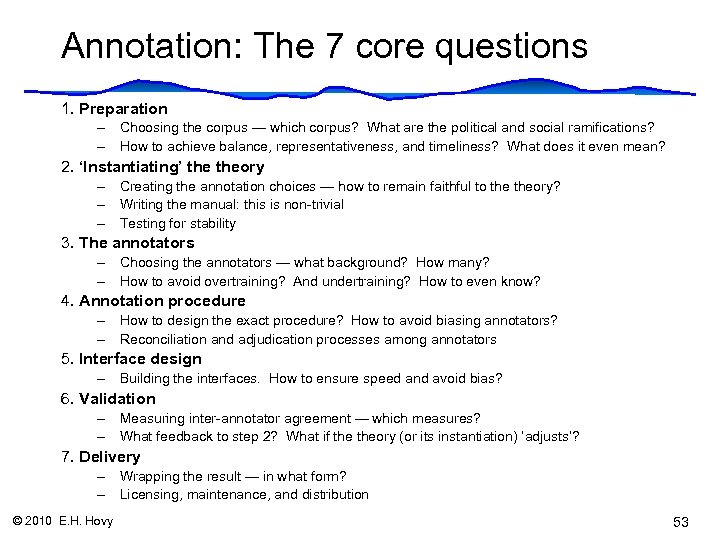

Annotation: The 7 core questions
1. Preparation
  – Choosing the corpus: which corpus? What are the political and social ramifications?
  – How to achieve balance, representativeness, and timeliness? What does it even mean?
2. ‘Instantiating’ theory
  – Creating the annotation choices: how to remain faithful to theory?
  – Writing the manual: this is non-trivial
  – Testing for stability
3. The annotators
  – Choosing the annotators: what background? How many?
  – How to avoid overtraining? And undertraining? How to even know?
4. Annotation procedure
  – How to design the exact procedure? How to avoid biasing annotators?
  – Reconciliation and adjudication processes among annotators
5. Interface design
  – Building the interfaces. How to ensure speed and avoid bias?
6. Validation
  – Measuring inter-annotator agreement: which measures?
  – What feedback to step 2? What if theory (or its instantiation) ‘adjusts’?
7. Delivery
  – Wrapping the result: in what form?
  – Licensing, maintenance, and distribution
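
For the validation question, one widely used agreement measure for two annotators is Cohen's kappa, which corrects raw percent agreement for the agreement expected by chance. The sketch below is offered as one possible measure, not as the measure this tutorial prescribes; the function name and the toy labels are assumptions.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label by both.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label proportions.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:  # both annotators used one identical label throughout
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Toy example: ten tokens tagged noun (N) or verb (V) by two annotators.
annotator_a = ["N", "N", "N", "N", "N", "N", "V", "V", "V", "V"]
annotator_b = ["N", "N", "N", "N", "N", "V", "V", "V", "V", "N"]

kappa = cohen_kappa(annotator_a, annotator_b)
```

Here the annotators agree on 8 of 10 items (0.80), chance agreement is 0.52, and kappa comes out near 0.58, noticeably lower than the raw agreement figure.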


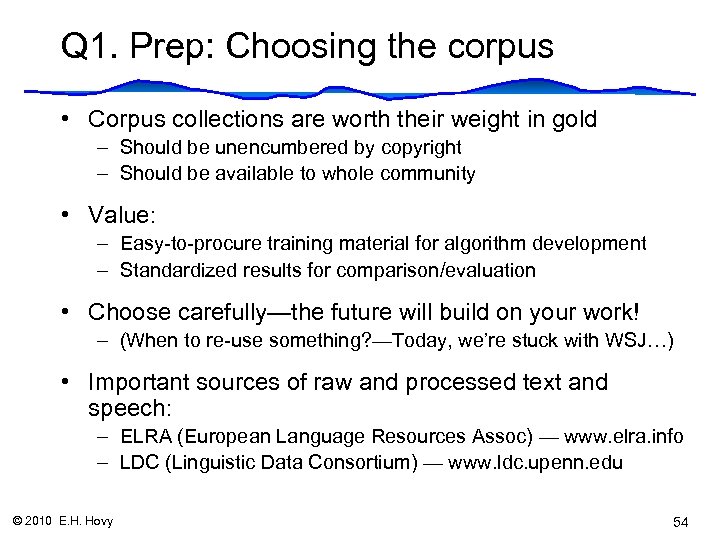

Q1. Prep: Choosing the corpus
• Corpus collections are worth their weight in gold
  – Should be unencumbered by copyright
  – Should be available to the whole community
• Value:
  – Easy-to-procure training material for algorithm development
  – Standardized results for comparison/evaluation
• Choose carefully: the future will build on your work!
  – (When to re-use something? Today, we’re stuck with WSJ…)
• Important sources of raw and processed text and speech:
  – ELRA (European Language Resources Association): www.elra.info
  – LDC (Linguistic Data Consortium): www.ldc.upenn.edu


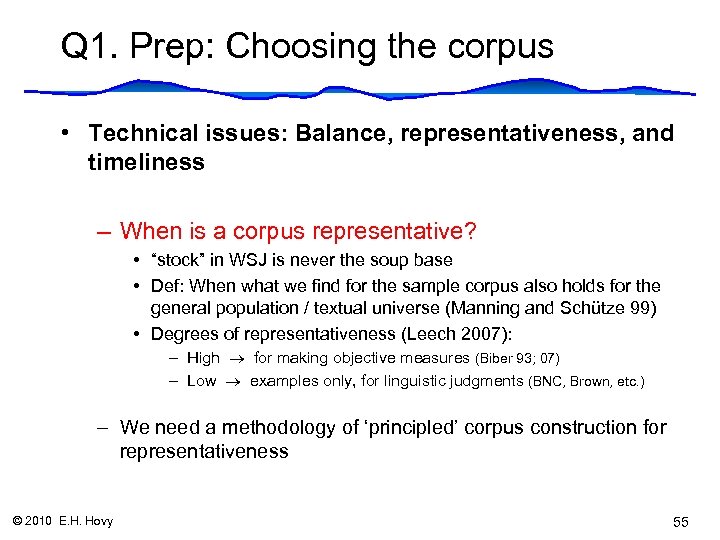

Q1. Prep: Choosing the corpus
• Technical issues: balance, representativeness, and timeliness
  – When is a corpus representative?
    • “stock” in WSJ is never the soup base
    • Def: when what we find for the sample corpus also holds for the general population / textual universe (Manning and Schütze 99)
    • Degrees of representativeness (Leech 2007):
      – High, for making objective measures (Biber 93; 07)
      – Low (examples only), for linguistic judgments (BNC, Brown, etc.)
  – We need a methodology of ‘principled’ corpus construction for representativeness


Q1: Choosing the corpus
• How to balance genre, era, domain, etc.?
  – Decision depends on (expected) usage of corpus (Kilgarriff and Grefenstette, CL 2003)
  – Does balance equal proportionality? But proportionality of what?
    • Variation of genre (= news, blogs, literature, etc.)
    • Variation of register (= formal, informal, etc.) (Biber 93; 07)
    • Not production, but reception (= number of hearers/readers) (Czech National Corpus)
    • Variation of era (= historical, modern, etc.)
• Social, political, funding issues
  – How do you ensure agreement / complementarity with others? Should you bother?
  – How do you choose which phenomena to annotate? Need high payoff…
  – How do you convince funders to invest in the effort?


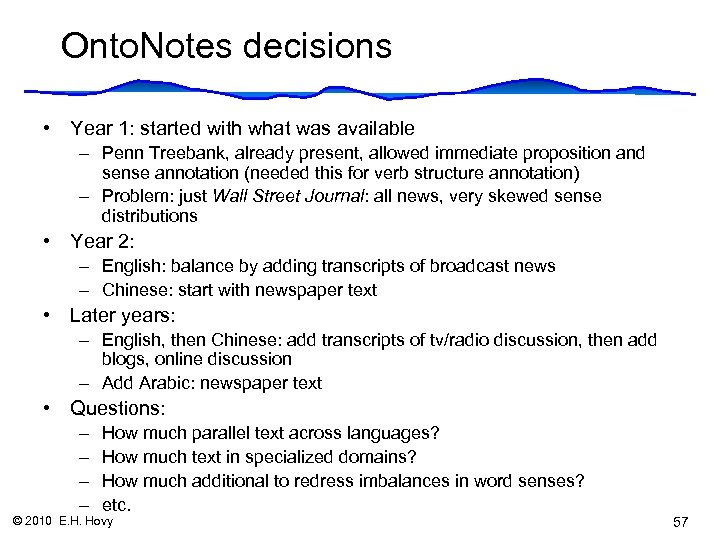

OntoNotes decisions
• Year 1: started with what was available
  – Penn Treebank, already present, allowed immediate proposition and sense annotation (needed this for verb structure annotation)
  – Problem: just Wall Street Journal: all news, very skewed sense distributions
• Year 2:
  – English: balance by adding transcripts of broadcast news
  – Chinese: start with newspaper text
• Later years:
  – English, then Chinese: add transcripts of TV/radio discussion, then add blogs, online discussion
  – Add Arabic: newspaper text
• Questions:
  – How much parallel text across languages?
  – How much text in specialized domains?
  – How much additional text to redress imbalances in word senses?
  – etc.


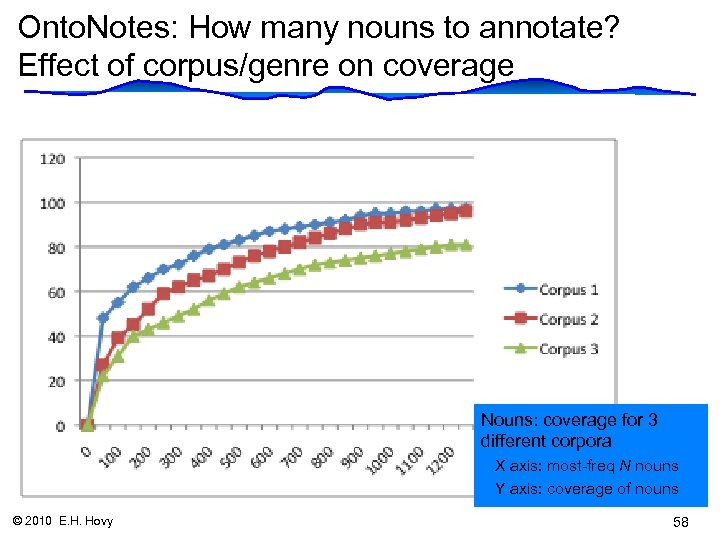

OntoNotes: How many nouns to annotate? Effect of corpus/genre on coverage
[Figure: noun coverage for 3 different corpora. X axis: most-frequent N nouns; Y axis: coverage of nouns]
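A coverage curve of the kind described on this slide can be approximated with a few lines of code. The sketch below is only an illustration: the function name `coverage_curve` and the toy noun list are hypothetical, and it assumes the nouns have already been extracted as a flat list of tokens.

```python
from collections import Counter


def coverage_curve(noun_tokens, cutoffs):
    """Map each cutoff N to the fraction of noun tokens covered by
    the N most frequent noun types."""
    counts = Counter(noun_tokens)
    total = sum(counts.values())
    # Type frequencies, most frequent first.
    freqs = sorted(counts.values(), reverse=True)
    curve = {}
    for n in cutoffs:
        curve[n] = sum(freqs[:n]) / total if total else 0.0
    return curve


# Hypothetical toy data, not taken from OntoNotes.
toy_nouns = ["stock"] * 5 + ["market"] * 3 + ["soup"] + ["bank"]
print(coverage_curve(toy_nouns, [1, 2, 4]))
```

Running the same function over several corpora and plotting the results would show how quickly coverage saturates in each genre.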


Q2: ‘Instantiating’ theory
• Most complex question: What phenomena to annotate, with which options?
• Issues:
  – Task/theory provides annotation categories/choices
  – Problem: Tradeoff between desired detail (sophistication) of categories and practical attainability of trustworthy annotation results
  – General solution: simplify categories to ensure dependable results
  – Problem: What’s the right level of ‘granularity’?
• Consider the main goal: Is this for a practical task (like IE), theory building (linguistics), or both?


Q2: ‘Instantiating’ theory
• How ‘deeply’ to instantiate theory?
  – Design the representation scheme / formalism very carefully: simple and transparent
  – Depends on theory, but also (yes? how much?) on corpus and annotators
  – Do tests first, to determine what is annotatable in practice
• Experts must create:
  – Annotation categories
  – Annotator instructions: the (coding) manual, which is very important
  – Who should build the manual: experts/theoreticians? Or exactly NOT theoreticians?
• Both must be tested! Don’t ‘freeze’ the manual too soon
  – Experts annotate a sample set; measure agreements
  – Annotators keep annotating a sample set until stability is achieved


Q2: Instantiating theory
• Issues:
  – Before building theory, you don’t know how many categories (types) really appear in the data
  – When annotating, you don’t know how easy it will be for the annotators to identify all the categories your theory specifies
• Likely problems:
  – Categories not exhaustive over phenomena in the data
  – Categories difficult to define / unclear (due to intrinsic ambiguity, or because you rely too much on background knowledge?)
• What you can do:
  – Work in close cycle with annotators, see week by week what they do
  – Hold weekly discussions with all the annotators
  – Create and constantly update the Annotator Handbook (manual)
    • (Penn Treebank Codebook: 300 pages!)
  – Modify your categories as needed. Is the problem with the annotators or theory? Make sure the annotators are not inadequate…
  – Measure the annotators’ agreement as you develop the manual


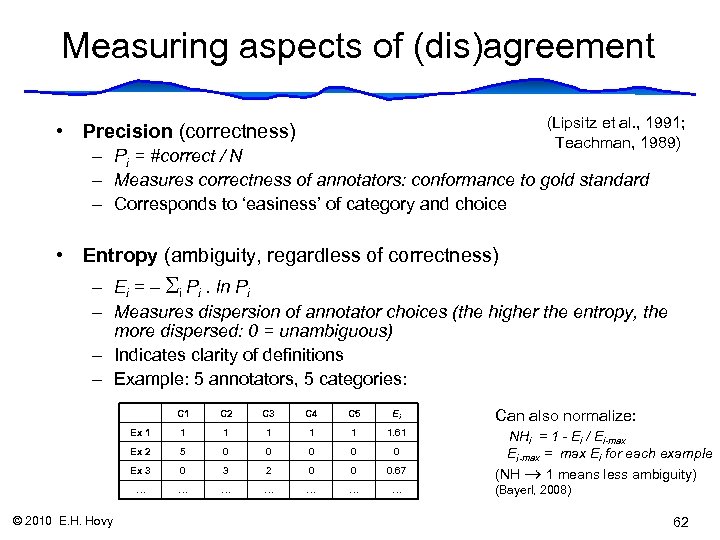

Measuring aspects of (dis)agreement (Lipsitz et al., 1991; Teachman, 1989)
• Precision (correctness)
  – $P_i = \#\text{correct} / N$
  – Measures correctness of annotators: conformance to gold standard
  – Corresponds to ‘easiness’ of category and choice
• Entropy (ambiguity, regardless of correctness)
  – $E_i = -\sum_{c} P_{ic} \ln P_{ic}$, where $P_{ic}$ is the fraction of annotators choosing category $c$ for example $i$
  – Measures dispersion of annotator choices (the higher the entropy, the more dispersed: 0 = unambiguous)
  – Indicates clarity of definitions
  – Example: 5 annotators, 5 categories:

| | C1 | C2 | C3 | C4 | C5 | $E_i$ |
|---|---|---|---|---|---|---|
| Ex1 | 1 | 1 | 1 | 1 | 1 | 1.61 |
| Ex2 | 5 | 0 | 0 | 0 | 0 | 0 |
| Ex3 | 0 | 3 | 2 | 0 | 0 | 0.67 |
| … | … | … | … | … | … | … |

• Can also normalize: $NH_i = 1 - E_i / E_{i,\max}$, where $E_{i,\max}$ is the maximum $E_i$ for each example ($NH_i$ near 1 means less ambiguity) (Bayerl, 2008)
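The entropy values in the example can be checked with a short script. This is a minimal sketch, assuming each example is given as a list of per-category annotator counts; the function names are hypothetical, and taking the maximum entropy to be the log of the number of categories is an assumption about the normalization, not something the slide states.

```python
import math


def annotation_entropy(category_counts):
    """Entropy (natural log) of the annotators' choices for one example."""
    total = sum(category_counts)
    entropy = 0.0
    for count in category_counts:
        if count > 0:  # empty categories contribute nothing
            p = count / total
            entropy -= p * math.log(p)
    return entropy


def normalized_clarity(category_counts):
    """1 - E / E_max, with E_max assumed to be ln(number of categories)."""
    e_max = math.log(len(category_counts))
    if e_max == 0:
        return 1.0
    return 1.0 - annotation_entropy(category_counts) / e_max


examples = {"Ex1": [1, 1, 1, 1, 1], "Ex2": [5, 0, 0, 0, 0], "Ex3": [0, 3, 2, 0, 0]}
for name, counts in examples.items():
    print(name, round(annotation_entropy(counts), 2))
```

Examples where all annotators pick the same category get entropy 0, and examples where every annotator picks a different category get the maximum.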


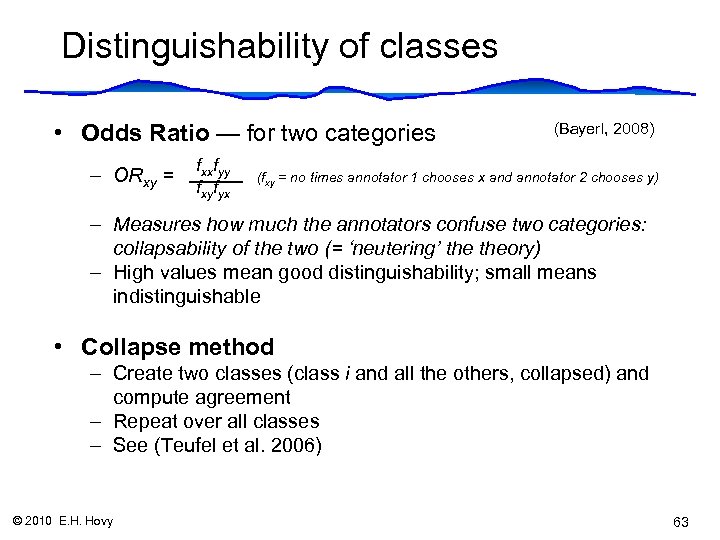

Distinguishability of classes
• Odds Ratio, for two categories
  – $OR_{xy} = \frac{f_{xx} f_{yy}}{f_{xy} f_{yx}}$ (Bayerl, 2008), where $f_{xy}$ = number of times annotator 1 chooses x and annotator 2 chooses y
  – Measures how much the annotators confuse two categories: collapsibility of the two (= ‘neutering’ theory)
  – High values mean good distinguishability; small values mean indistinguishable
• Collapse method
  – Create two classes (class i and all the others, collapsed) and compute agreement
  – Repeat over all classes
  – See (Teufel et al. 2006)
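Both measures can be computed from a two-annotator confusion table. The sketch below makes several assumptions that are not on the slide: the table is a nested dict, a small smoothing constant is added to each cell so that empty cells do not cause division by zero, and the collapse method uses simple (observed) agreement. All names and counts are hypothetical.

```python
def odds_ratio(confusion, x, y, smoothing=0.5):
    """Odds ratio for categories x and y.

    confusion[a][b] = number of items annotator 1 labeled a and
    annotator 2 labeled b. `smoothing` is added to each of the four
    cells (an assumption of this sketch) to avoid dividing by zero.
    """
    f_xx = confusion[x][x] + smoothing
    f_yy = confusion[y][y] + smoothing
    f_xy = confusion[x][y] + smoothing
    f_yx = confusion[y][x] + smoothing
    return (f_xx * f_yy) / (f_xy * f_yx)


def collapsed_agreement(confusion, target):
    """Simple agreement after collapsing labels to `target` vs. the rest."""
    labels = list(confusion)
    total = sum(confusion[a][b] for a in labels for b in labels)
    agree = sum(confusion[a][b] for a in labels for b in labels
                if (a == target) == (b == target))
    return agree / total


# Hypothetical counts for three categories.
confusion = {
    "A": {"A": 40, "B": 2, "C": 1},
    "B": {"A": 3, "B": 20, "C": 12},
    "C": {"A": 1, "B": 10, "C": 11},
}
print(odds_ratio(confusion, "A", "B"), odds_ratio(confusion, "B", "C"))
print({label: collapsed_agreement(confusion, label) for label in confusion})
```

In this toy table, A is well separated from B, while B and C are often confused, so the B/C odds ratio is much smaller and B and C would be the candidates for collapsing.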


Q2: Theory and model
• First, you obtain theory and annotate
• But sometimes theory is controversial, or you simply cannot obtain stability (using the previous measures)
• All is not lost! You can ‘neuter’ theory and still be able to annotate, using a more neutral set of classes/types
  – Ex 1: from Case Roles (Agent, Patient, Instrument) to PropBank’s roles (arg0, arg1, argM): user chooses desired role labels and maps PropBank roles to them
  – Ex 2: from detailed sense differences to cruder / less detailed ones
• When to neuter? You must decide acceptability levels for the measures
• How much to neuter? Do you aim to achieve high agreement levels? Or balanced class representativeness for all categories?
• What does this say about theory, however?


OntoNotes acceptability threshold: Ensuring trustworthiness/stability
• Problematic issues for OntoNotes:
  1. What senses are there? Are the senses stable/good/clear?
  2. Is the sense annotation trustworthy?
  3. What things should corefer?
  4. Is the coref annotation trustworthy?
• Approach: “the 90% solution”:
  – Sense granularity and stability: Test with annotators to ensure agreement at 90%+ on real text
  – If not, then redefine and re-do until 90% agreement reached
  – Coref stability: only annotate the types of aspects/phenomena for which 90%+ agreement can be achieved


Q3: The interface
• How to design adequate interfaces?
  – Maximize speed!
    • Create very simple tasks, but how simple? Boredom factor, but simple task means less to annotate before you have enough
    • Don’t use the mouse
    • Customize the interface for each annotation project?
  – Don’t bias annotators (avoid priming!)
    • Beware of order of choice options
    • Beware of presentation of choices
    • Is it ok to present together a whole series of choices with expected identical annotation (annotate en bloc)?
  – Check agreements and hard cases in-line?
    • Do you show the annotator how ‘well’ he/she is doing? Why not?
• Experts: Psych experimenters; Gallup Poll question creators
• Experts: interface design specialists


Q3: Types of annotation interfaces
• Select: choose one of N fixed categories
  – Avoid more than 10 or so choices (7±2 rule)
  – Avoid menus because of mousework
  – If possible, randomize choice sequence across sessions
• Delimit: delimit a region inside a larger context
  – Often, problems with exact start/end of region (e.g., exact NP), but preprocessing and pre-delimiting chunks introduces bias
  – Evaluation of partial overlaps is harder
• Delimit and select: combine the above
  – Evaluation is harder: need two semi-independent scores
• Enter: instead of select, enter own commentary
  – Evaluation is very hard


Q4: Annotators
• How to choose annotators?
  – Annotator backgrounds: should they be experts, or precisely not?
  – Biases, preferences, etc.
  – Experts: Psych experimenters
• Who should train the annotators? Who is the most impartial?
  – Domain expert/theorist?
  – Interface builder?
  – Builder of learning system?
• When to train?
  – Need training session(s) before starting
  – Extremely helpful to continue weekly general discussions:
    • Identify and address hard problems
    • Expand the annotation Handbook
  – BUT need to go back (re-annotate) to ensure that there’s no ‘annotation drift’


How much to train annotators?
• Undertrain: Instructions are too vague or insufficient. Result: annotators create their own ‘patterns of thought’ and diverge from the gold standard, each in their own particular way (Bayerl 2006)
  – How to determine? Use Odds Ratio to measure pairwise distinguishability of categories
  – Then collapse indistinguishable categories, recompute scores, and (?) reformulate theory. Is this ok?
  – Basic choice: EITHER ‘fit’ the annotation to the annotators (is this ok?) OR train annotators more (is this ok?)
• Overtrain: Instructions are so exhaustive that there is no room for thought or interpretation (annotators follow a ‘table lookup’ procedure)
  – How to determine: is task simply easy, or are annotators overtrained?
  – What’s really wrong with overtraining? No predictive power…


Agreement analysis in OntoNotes
• Sometimes, one annotator is bad
• Sometimes, the defs are bad
• Sometimes, the choice is just hard
Annotators vs. Adjudicator


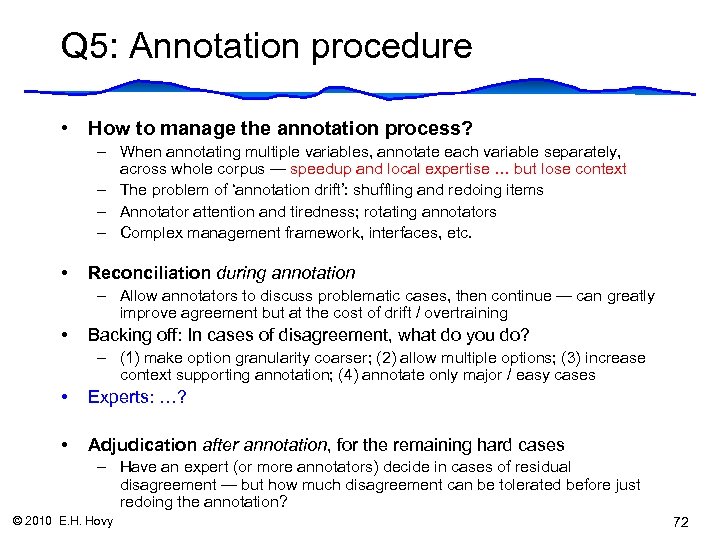

Q5: Annotation procedure
• How to manage the annotation process?
  – When annotating multiple variables, annotate each variable separately, across whole corpus: speedup and local expertise … but lose context
  – The problem of ‘annotation drift’: shuffling and redoing items
  – Annotator attention and tiredness; rotating annotators
  – Complex management framework, interfaces, etc.
• Reconciliation during annotation
  – Allow annotators to discuss problematic cases, then continue: can greatly improve agreement but at the cost of drift / overtraining
• Backing off: In cases of disagreement, what do you do?
  – (1) make option granularity coarser; (2) allow multiple options; (3) increase context supporting annotation; (4) annotate only major / easy cases
• Experts: …?
• Adjudication after annotation, for the remaining hard cases
  – Have an expert (or more annotators) decide in cases of residual disagreement, but how much disagreement can be tolerated before just redoing the annotation?


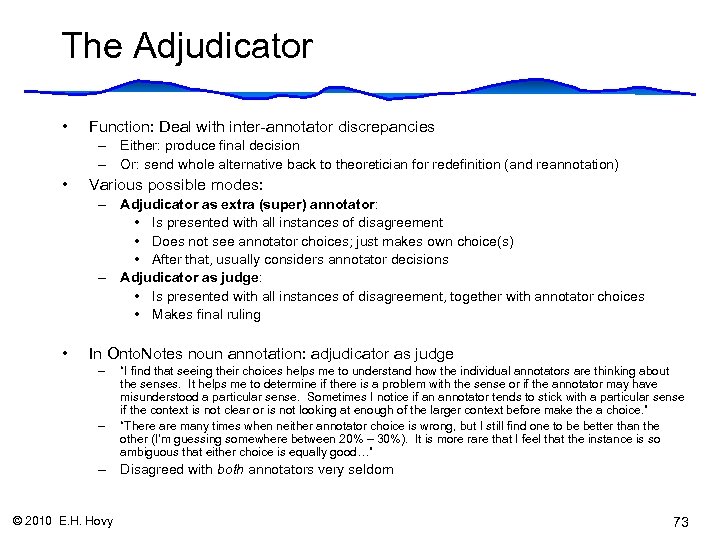

The Adjudicator
• Function: Deal with inter-annotator discrepancies
  – Either: produce final decision
  – Or: send whole alternative back to theoretician for redefinition (and reannotation)
• Various possible modes:
  – Adjudicator as extra (super) annotator:
    • Is presented with all instances of disagreement
    • Does not see annotator choices; just makes own choice(s)
    • After that, usually considers annotator decisions
  – Adjudicator as judge:
    • Is presented with all instances of disagreement, together with annotator choices
    • Makes final ruling
• In OntoNotes noun annotation: adjudicator as judge
  – “I find that seeing their choices helps me to understand how the individual annotators are thinking about the senses. It helps me to determine if there is a problem with the sense or if the annotator may have misunderstood a particular sense. Sometimes I notice if an annotator tends to stick with a particular sense if the context is not clear or is not looking at enough of the larger context before making a choice.”
  – “There are many times when neither annotator choice is wrong, but I still find one to be better than the other (I'm guessing somewhere between 20%–30%). It is more rare that I feel that the instance is so ambiguous that either choice is equally good…”
  – Disagreed with both annotators very seldom


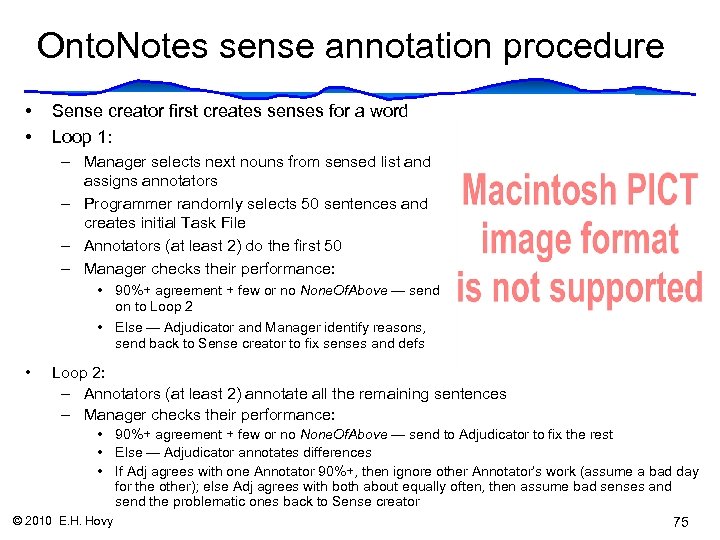

OntoNotes sense annotation procedure
• Sense creator first creates senses for a word
• Loop 1:
  – Manager selects next nouns from sensed list and assigns annotators
  – Programmer randomly selects 50 sentences and creates initial Task File
  – Annotators (at least 2) do the first 50
  – Manager checks their performance:
    • 90%+ agreement + few or no NoneOfAbove: send on to Loop 2
    • Else: Adjudicator and Manager identify reasons, send back to Sense creator to fix senses and defs
• Loop 2:
  – Annotators (at least 2) annotate all the remaining sentences
  – Manager checks their performance:
    • 90%+ agreement + few or no NoneOfAbove: send to Adjudicator to fix the rest
    • Else: Adjudicator annotates differences
    • If Adj agrees with one Annotator 90%+, then ignore other Annotator’s work (assume a bad day for the other); else if Adj agrees with both about equally often, then assume bad senses and send the problematic ones back to Sense creator
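The routing decisions in the two loops can be written down as a small decision function. This is only an illustrative sketch, not the OntoNotes software: the function names and return labels are hypothetical, the 5% cutoff for "few or no NoneOfAbove" is an assumed value (the slide gives none), and any case where the adjudicator does not clearly side with one annotator is simply routed back to the sense creator.

```python
def route_after_loop1(agreement, none_of_above_rate,
                      min_agreement=0.90, max_none_rate=0.05):
    """Decide what happens to a word after the first 50 sentences."""
    # max_none_rate is a hypothetical stand-in for "few or no NoneOfAbove".
    if agreement >= min_agreement and none_of_above_rate <= max_none_rate:
        return "send_to_loop2"
    return "back_to_sense_creator"


def route_after_loop2(agreement, none_of_above_rate, adj_agrees_with,
                      min_agreement=0.90, max_none_rate=0.05):
    """Decide what happens after all remaining sentences are annotated.

    adj_agrees_with maps each annotator to the fraction of disputed items
    on which the adjudicator sided with that annotator.
    """
    if agreement >= min_agreement and none_of_above_rate <= max_none_rate:
        return "adjudicator_fixes_rest"
    trusted = [name for name, rate in adj_agrees_with.items()
               if rate >= min_agreement]
    if len(trusted) == 1:
        # Assume the other annotator had a bad day; keep only the trusted one.
        return "keep_only_" + trusted[0]
    # Simplification: every other outcome is treated as a sense problem.
    return "back_to_sense_creator"


print(route_after_loop1(0.93, 0.0))
print(route_after_loop2(0.80, 0.0, {"ann1": 0.95, "ann2": 0.05}))
```

Keeping the thresholds as parameters makes it easy to see how a stricter or looser acceptability level would change the amount of work sent back for resensing.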


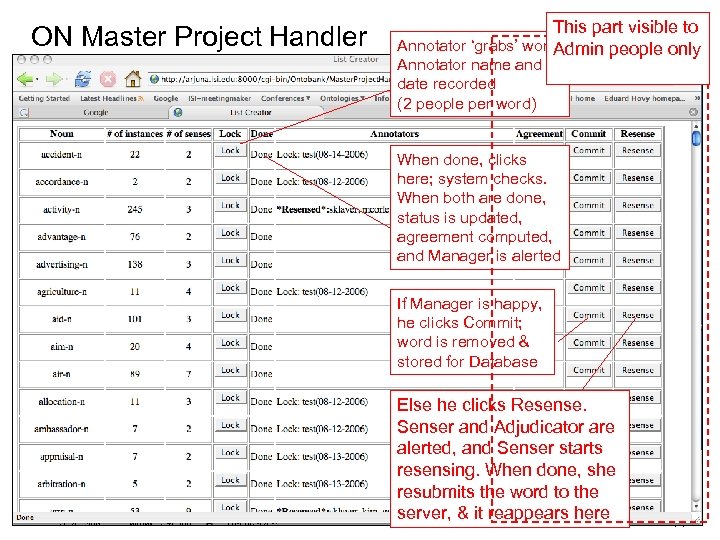

ON Master Project Handler
• This part visible to Admin people only
• Annotator ‘grabs’ word: Annotator name and date recorded (2 people per word)
• When done, clicks here; system checks. When both are done, status is updated, agreement computed, and Manager is alerted
• If Manager is happy, he clicks Commit; word is removed & stored for Database
• Else he clicks Resense. Senser and Adjudicator are alerted, and Senser starts resensing. When done, she resubmits the word to the server, & it reappears here


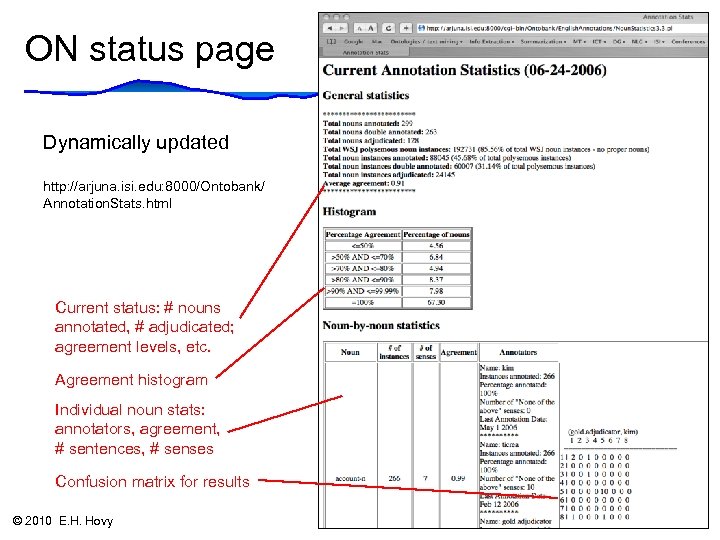

ON status page
• Dynamically updated: http://arjuna.isi.edu:8000/Ontobank/AnnotationStats.html
• Current status: # nouns annotated, # adjudicated; agreement levels, etc.
• Agreement histogram
• Individual noun stats: annotators, agreement, # sentences, # senses
• Confusion matrix for results


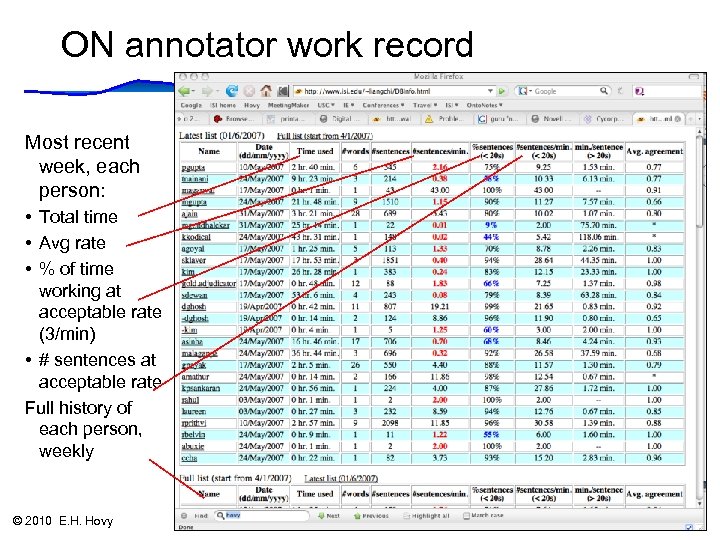

ON annotator work record
• Most recent week, each person:
  – Total time
  – Avg rate
  – % of time working at acceptable rate (3/min)
  – # sentences at acceptable rate
• Full history of each person, weekly


Q6: Evaluation. What to measure?
• Fundamental assumption: The work is trustworthy when independent annotators agree
• But what to measure for ‘agreement’?
  – Q6.1 Measuring individual agreements: pairwise agreements and averages
  – Q6.2 Measuring overall group behavior: group averages and trends
  – Q6.3 Measuring characteristics of corpus: skewedness, internal homogeneity, etc.


Three common evaluation metrics
• Simple agreement
  – Good when there’s a serious imbalance in annotation values (kappa is low, but you still need some indication of agreement)
• Cohen’s kappa
  – Removes chance agreement
  – Works only pairwise: two annotators at a time
  – Doesn’t handle multiple correct answers
  – Cohen, J. 1960. A coefficient of agreement for nominal scales. Educational and Psychological Measurement 20(1), pp. 37–46.
• Fleiss’s kappa
  – Extends Cohen’s kappa: multiple annotators together
  – Equations on Wikipedia: http://en.wikipedia.org/wiki/Fleiss%27_kappa
  – Still doesn’t handle multiple correct answers
  – Fleiss, J. L. 1971. Measuring nominal scale agreement among many raters. Psychological Bulletin 76(5), pp. 378–382.
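For more than two annotators, Fleiss's kappa can be computed directly from a table of per-item category counts. The sketch below follows the standard published formula; the function name and the toy table are hypothetical, and it assumes every item was rated by the same number of annotators (at least two).

```python
def fleiss_kappa(ratings):
    """Fleiss's kappa for a table where ratings[i][j] is the number of
    annotators who assigned item i to category j."""
    n_items = len(ratings)
    n_raters = sum(ratings[0])  # assumed identical for every item
    n_cats = len(ratings[0])

    # Per-item agreement: fraction of annotator pairs that agree.
    p_items = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in ratings
    ]
    p_bar = sum(p_items) / n_items

    # Chance agreement from the overall category proportions.
    p_cats = [sum(row[j] for row in ratings) / (n_items * n_raters)
              for j in range(n_cats)]
    p_e = sum(p * p for p in p_cats)

    if p_e == 1.0:  # only one category ever used; kappa is undefined
        return 1.0
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical table: 4 items, 3 annotators, 2 categories.
toy_ratings = [[3, 0], [2, 1], [0, 3], [1, 2]]
print(round(fleiss_kappa(toy_ratings), 3))
```

Returning 1.0 in the degenerate single-category case is a design choice of this sketch; some implementations report the value as undefined instead.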


6.1: Measuring individual agreements
• Evaluating individual pieces of information:
  – What to evaluate:
    • Individual agreement scores between creators
    • Overall agreement averages and trends
  – What measure(s) to use:
    • Simple agreement is biased by chance agreement; however, this may be fine if all you care about is a system that mirrors human behavior
    • Kappa is better for testing inter-annotator agreement, but it is not sufficient: it cannot handle multiple correct choices, and it works only pairwise
    • Krippendorff’s alpha, Kappa variations…; see (Krippendorff 07; Bortz 05 in German)
  – Tolerances:
    • When is the agreement no longer good enough? Why the 90% rule? (Marcus’s rule: if humans get N%, systems will achieve (N−10)%)
  – The problem of asymmetrical/unbalanced corpora
    • When you get high agreement but low Kappa, does it matter? An unbalanced corpus (almost all decisions have one value) makes choice easy but Kappa low. This is often fine if that’s what your task requires
• Experts: Psych experimenters and Corpus Analysis statisticians


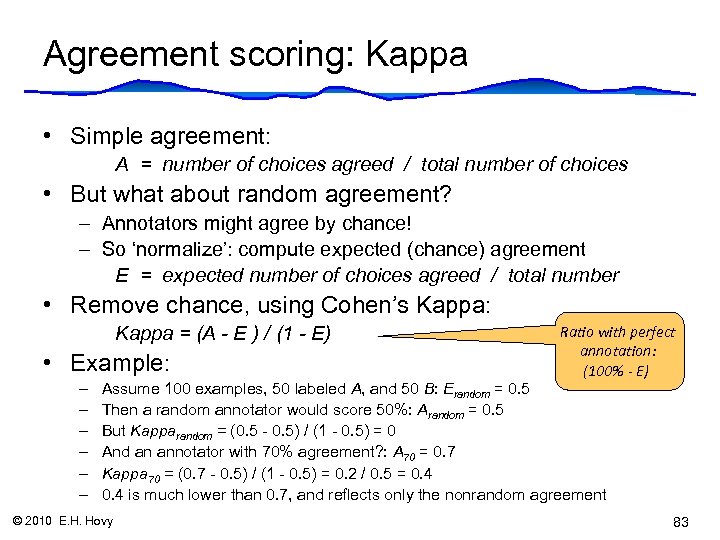
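
The "individual agreement scores" and "overall agreement averages" named above can be sketched as follows, using simple (uncorrected) agreement. The annotator names and labels are made up for the illustration, and the helper names are hypothetical.

```python
from itertools import combinations


def pairwise_agreement(labels):
    """labels: dict mapping annotator name -> list of labels, same item order."""
    scores = {}
    for a, b in combinations(sorted(labels), 2):
        matches = sum(x == y for x, y in zip(labels[a], labels[b]))
        scores[(a, b)] = matches / len(labels[a])
    return scores


def annotator_averages(scores):
    """Average each annotator's agreement with every other annotator."""
    per_annotator = {}
    for (a, b), score in scores.items():
        per_annotator.setdefault(a, []).append(score)
        per_annotator.setdefault(b, []).append(score)
    return {name: sum(vals) / len(vals) for name, vals in per_annotator.items()}


labels = {
    "a": ["x", "x", "y", "y"],
    "b": ["x", "x", "y", "x"],
    "c": ["x", "y", "y", "y"],
}
scores = pairwise_agreement(labels)      # one score per annotator pair
averages = annotator_averages(scores)    # one average per annotator
overall = sum(scores.values()) / len(scores)
print(scores, averages, overall)
```

An annotator whose average sits well below the others is a candidate for retraining or for a closer look at the guidelines.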

Agreement scoring: Kappa
• Simple agreement: $A = \text{number of choices agreed} / \text{total number of choices}$
• But what about random agreement?
  – Annotators might agree by chance!
  – So ‘normalize’: compute expected (chance) agreement $E = \text{expected number of choices agreed} / \text{total number}$
• Remove chance, using Cohen’s Kappa: $\text{Kappa} = (A - E) / (1 - E)$
  – Ratio with perfect annotation: $(100\% - E)$
• Example:
  – Assume 100 examples, 50 labeled A and 50 B: $E_{\text{random}} = 0.5$
  – Then a random annotator would score 50%: $A_{\text{random}} = 0.5$
  – But $\text{Kappa}_{\text{random}} = (0.5 - 0.5) / (1 - 0.5) = 0$
  – And an annotator with 70% agreement? $A_{70} = 0.7$
  – $\text{Kappa}_{70} = (0.7 - 0.5) / (1 - 0.5) = 0.2 / 0.5 = 0.4$ is much lower than 0.7, and reflects only the nonrandom agreement


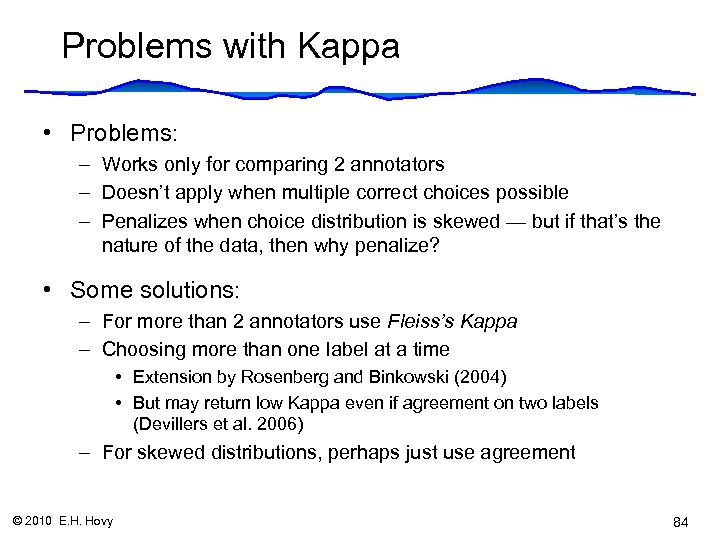
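
The Kappa computation above can be checked with a few lines of code. This is a minimal sketch: `kappa_from_rates` applies the formula to given values of A and E, and `cohen_kappa` estimates E from the two annotators' own label frequencies. Both function names and the synthetic label lists are assumptions for the illustration.

```python
from collections import Counter


def kappa_from_rates(A, E):
    """Kappa = (A - E) / (1 - E). Undefined when E == 1."""
    return (A - E) / (1 - E)


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items in the same order."""
    n = len(labels_a)
    A = sum(x == y for x, y in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability that both pick the same label independently
    E = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    return kappa_from_rates(A, E)


# The worked example: chance level 0.5, agreement 0.5 and 0.7
print(round(kappa_from_rates(0.5, 0.5), 2))  # 0.0
print(round(kappa_from_rates(0.7, 0.5), 2))  # 0.4

# The same 70% case built from synthetic label lists (50 A, 50 B each)
first = ["A"] * 50 + ["B"] * 50
second = ["A"] * 35 + ["B"] * 15 + ["B"] * 35 + ["A"] * 15
print(round(cohen_kappa(first, second), 2))  # 0.4
```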

Problems with Kappa
• Problems:
  – Works only for comparing 2 annotators
  – Doesn’t apply when multiple correct choices possible
  – Penalizes when choice distribution is skewed; but if that’s the nature of the data, then why penalize?
• Some solutions:
  – For more than 2 annotators use Fleiss’s Kappa
  – Choosing more than one label at a time
    • Extension by Rosenberg and Binkowski (2004)
    • But may return low Kappa even if agreement on two labels (Devillers et al. 2006)
  – For skewed distributions, perhaps just use agreement


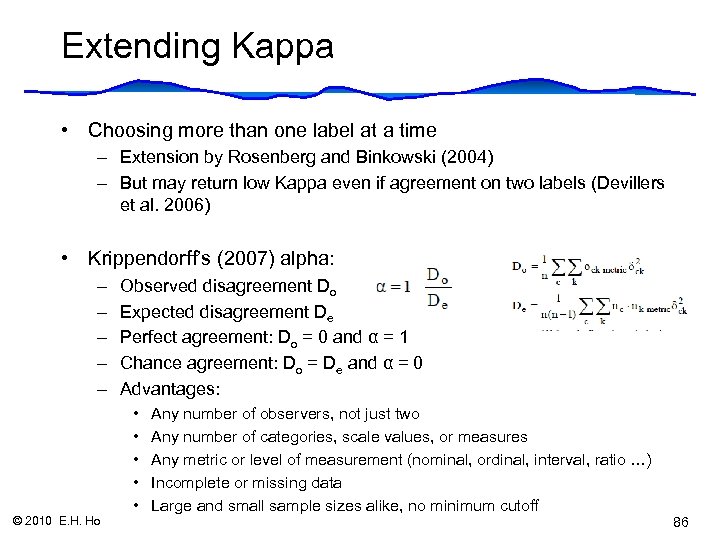
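
The skew penalty is easy to reproduce with a 2×2 yes/no table. In the sketch below, both scenarios have 90% simple agreement, but in the skewed one nearly every decision is "yes". The counts are invented for the illustration and the function name is hypothetical.

```python
def agreement_and_kappa(both_yes, both_no, a_only, b_only):
    """Simple agreement and Cohen's kappa from a 2x2 yes/no contingency table.

    a_only: annotator A said yes, B said no; b_only: the reverse.
    """
    n = both_yes + both_no + a_only + b_only
    A = (both_yes + both_no) / n
    a_yes = (both_yes + a_only) / n
    b_yes = (both_yes + b_only) / n
    E = a_yes * b_yes + (1 - a_yes) * (1 - b_yes)
    return A, (A - E) / (1 - E)


balanced = agreement_and_kappa(both_yes=45, both_no=45, a_only=5, b_only=5)
skewed = agreement_and_kappa(both_yes=90, both_no=0, a_only=5, b_only=5)
print(balanced)  # agreement 0.9, kappa close to 0.8
print(skewed)    # agreement 0.9, kappa slightly below 0
```

In the skewed case the chance agreement is already 0.905, so 90% observed agreement leaves nothing above chance. Whether that matters depends on the task, as the slide says.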

Extending Kappa
• Choosing more than one label at a time
  – Extension by Rosenberg and Binkowski (2004)
  – But may return low Kappa even if agreement on two labels (Devillers et al. 2006)
• Krippendorff’s (2007) alpha:
  – Observed disagreement $D_o$
  – Expected disagreement $D_e$
  – Perfect agreement: $D_o = 0$ and $\alpha = 1$
  – Chance agreement: $D_o = D_e$ and $\alpha = 0$
  – Advantages:
    • Any number of observers, not just two
    • Any number of categories, scale values, or measures
    • Any metric or level of measurement (nominal, ordinal, interval, ratio …)
    • Incomplete or missing data
    • Large and small sample sizes alike, no minimum cutoff


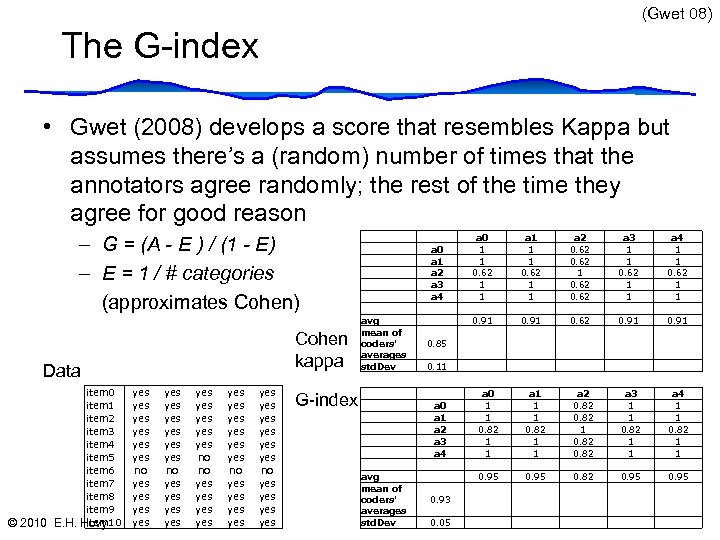
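
Both boundary cases above follow from the standard definition $\alpha = 1 - D_o / D_e$. A minimal sketch for the nominal case only (labels either match or differ) is given below, using the usual coincidence-matrix computation. The input layout, with `None` marking a missing label, and the toy data are assumptions for the example.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal labels.

    units: list of lists; each inner list holds the labels that the
    observers gave to one item, with None for a missing label.
    Undefined (division by zero) if only one category ever occurs.
    """
    coincidences = Counter()
    for values in units:
        values = [v for v in values if v is not None]
        m = len(values)
        if m < 2:
            continue  # an item with fewer than two labels cannot be paired
        # Each ordered pair of labels from different observers counts 1/(m-1)
        for c, k in permutations(values, 2):
            coincidences[(c, k)] += 1.0 / (m - 1)

    totals = Counter()
    for (c, _k), weight in coincidences.items():
        totals[c] += weight
    n = sum(totals.values())

    # These are n * D_o and n * D_e, so their ratio equals D_o / D_e
    observed = sum(w for (c, k), w in coincidences.items() if c != k)
    expected = sum(
        totals[c] * totals[k] for c in totals for k in totals if c != k
    ) / (n - 1)
    return 1.0 - observed / expected


# Two observers, ten items, binary labels
obs1 = [0, 1, 0, 0, 0, 0, 0, 0, 1, 0]
obs2 = [1, 1, 1, 0, 0, 1, 0, 0, 0, 0]
print(round(krippendorff_alpha_nominal(list(zip(obs1, obs2))), 3))  # 0.095
```

The two observers agree on 6 of 10 items, yet alpha is only about 0.095, because the label 0 dominates and most of that agreement is expected by chance.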

The G-index (Gwet 08)
• Gwet (2008) develops a score that resembles Kappa but assumes there’s a (random) number of times that the annotators agree randomly; the rest of the time they agree for good reason
  – $G = (A - E) / (1 - E)$
  – $E = 1 / \text{number of categories}$ (approximates Cohen)

[Table: yes/no labels from annotators a0–a4 for items 0–10]

Cohen kappa, pairwise:

|    | a0   | a1   | a2   | a3   | a4   | avg  |
|----|------|------|------|------|------|------|
| a0 | 1    | 1    | 0.62 | 1    | 1    | 0.91 |
| a1 | 1    | 1    | 0.62 | 1    | 1    | 0.91 |
| a2 | 0.62 | 0.62 | 1    | 0.62 | 0.62 | 0.62 |
| a3 | 1    | 1    | 0.62 | 1    | 1    | 0.91 |
| a4 | 1    | 1    | 0.62 | 1    | 1    | 0.91 |

Mean of coders' averages: 0.85; std. dev.: 0.11

G-index, pairwise:

|    | a0   | a1   | a2   | a3   | a4   | avg  |
|----|------|------|------|------|------|------|
| a0 | 1    | 1    | 0.82 | 1    | 1    | 0.95 |
| a1 | 1    | 1    | 0.82 | 1    | 1    | 0.95 |
| a2 | 0.82 | 0.82 | 1    | 0.82 | 0.82 | 0.82 |
| a3 | 1    | 1    | 0.82 | 1    | 1    | 0.95 |
| a4 | 1    | 1    | 0.82 | 1    | 1    | 0.95 |

Mean of coders' averages: 0.93; std. dev.: 0.05
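
To make the difference between the two scores concrete, here is a small sketch that computes both for one pair of annotators. The label lists are constructed for this illustration (11 yes/no items, one disagreement) and are not the slide's data; they were chosen because they give scores that round to the 0.62 and 0.82 appearing in the slide.

```python
from collections import Counter


def simple_agreement(a, b):
    return sum(x == y for x, y in zip(a, b)) / len(a)


def g_index(a, b, num_categories):
    """G = (A - E) / (1 - E) with E fixed at 1 / number of categories."""
    A = simple_agreement(a, b)
    E = 1.0 / num_categories
    return (A - E) / (1 - E)


def cohen_kappa(a, b):
    """Same formula, but E is estimated from the annotators' label frequencies."""
    A = simple_agreement(a, b)
    freq_a, freq_b = Counter(a), Counter(b)
    E = sum(freq_a[c] * freq_b[c] for c in freq_a) / (len(a) ** 2)
    return (A - E) / (1 - E)


# Hypothetical labels: the two annotators differ on exactly one item
majority = ["yes"] * 9 + ["no"] * 2
outlier = ["yes"] * 10 + ["no"] * 1

print(round(g_index(majority, outlier, 2), 2))   # 0.82
print(round(cohen_kappa(majority, outlier), 2))  # 0.62
```

With mostly "yes" labels, Cohen's chance term is large (92/121) and pulls kappa down, while the G-index's fixed chance term of 0.5 does not react to the skew.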


Measuring group behavior 2 (Bayerl 08)
• Study pairwise (dis)agreement over time
  – Count number of disagreements between each pair of annotators at selected times in project
  – Example (Bayerl 2008), scores normalized:
    • Trend: higher agreement in Phase 2
    • Puzzling: some people ‘diverge’
  – Implication: Agreement between people at one time is not necessarily a guarantee for agreement at another


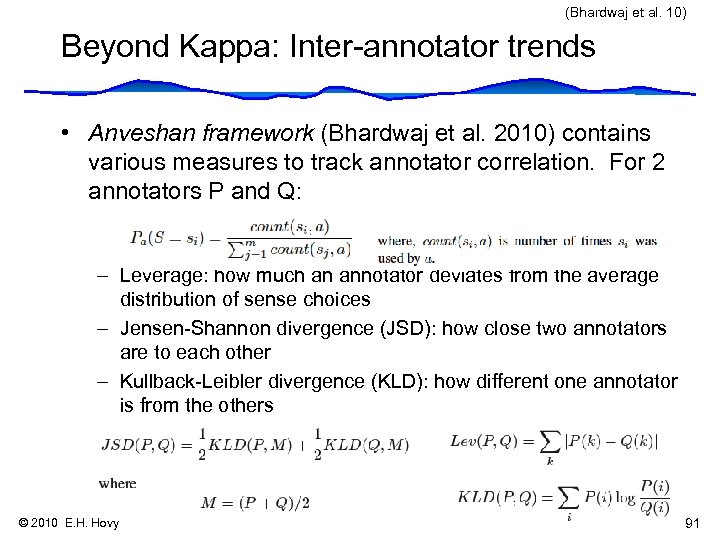

Beyond Kappa: Inter-annotator trends (Bhardwaj et al. 10)
• Anveshan framework (Bhardwaj et al. 2010) contains various measures to track annotator correlation. For 2 annotators P and Q:
  – Leverage: how much an annotator deviates from the average distribution of sense choices
  – Jensen-Shannon divergence (JSD): how close two annotators are to each other
  – Kullback-Leibler divergence (KLD): how different one annotator is from the others
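
The two divergences can be sketched with their textbook definitions, applied to each annotator's distribution over sense labels. This is not the Anveshan implementation: the log base, the absence of smoothing, the helper names, and the toy sense labels are all assumptions. Leverage is left out because the slide text does not give its formula.

```python
import math
from collections import Counter


def distribution(labels, categories):
    """Relative frequency of each category in one annotator's labels."""
    counts = Counter(labels)
    return [counts[c] / len(labels) for c in categories]


def kl_divergence(p, q):
    """KLD(P || Q) in bits. Raises ZeroDivisionError if q is 0 where p is not."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """JSD(P, Q) in bits: symmetric, always defined, between 0 and 1."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


senses = ["s1", "s2", "s3"]
P = distribution(["s1", "s1", "s2", "s3"], senses)   # annotator P
Q = distribution(["s1", "s2", "s2", "s3"], senses)   # annotator Q
print(js_divergence(P, Q))  # closeness of P and Q
print(kl_divergence(P, Q))  # how P differs from Q (here Q stands in for "the others")
```

To compare one annotator against the rest, Q can be replaced by the pooled distribution of all other annotators' labels.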


Trends in annotation correctness
• If you have a Gold Standard, you can check how annotators perform over time (‘annotator drift’)
• Example (Bayerl 2008)
  – 10 annotators
  – Averaged precision (correctness) scores over groups of 20 examples annotated
    a) some people go up and down
    b) some people slump but then perk up
    c) some people get steadily better
    d) some just get tired…
  – Suggestion: Don’t let annotators work for too long at a time
• Why the drift?
  – Annotators develop own models
  – Annotators develop ‘cues’ and use them as short cuts (may be wrong)
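
A drift check of this kind can be sketched as correctness against the gold standard, averaged over consecutive groups of 20 examples. The function name and the synthetic labels are assumptions for the illustration; the group size of 20 is the one mentioned on the slide.

```python
def windowed_accuracy(annotations, gold, window=20):
    """Share of correct labels in each consecutive group of `window` examples.

    annotations and gold are parallel lists in the order the work was done.
    The last group may be shorter than `window`.
    """
    scores = []
    for start in range(0, len(gold), window):
        pairs = list(zip(annotations[start:start + window], gold[start:start + window]))
        correct = sum(a == g for a, g in pairs)
        scores.append(correct / len(pairs))
    return scores


# Synthetic annotator who is perfect at first and then slips
gold = ["x"] * 40
annotated = ["x"] * 20 + ["x"] * 10 + ["y"] * 10
print(windowed_accuracy(annotated, gold))  # [1.0, 0.5]
```

Plotting these per-group scores for each annotator gives curves that can be compared with patterns a) to d) above.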


6.2: Measuring group behavior 1
Compare behavior/statistics of annotators as a group
• Distribution of choices, and change of choice distribution over time (‘agreement drift’)
  – Example (Bayerl 2008)
    • 10 annotators, 20 categories
    • Annotator S04 uses only 3 categories for over 50% of the examples, and ignores 30% of categories: not good
    [Figure: category choice frequency per annotator]
  – Check for systematic under- or over-use of categories (for this, compare against Gold Standard, or against majority annotations)
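
The checks described here (how concentrated an annotator's choices are, which categories are never used, and over- or under-use relative to a reference) can be sketched as below. All function names and the toy data are hypothetical; the reference can be a gold standard or the majority annotations.

```python
from collections import Counter


def category_usage(labels, categories):
    """Share of each category in the labels, plus the categories never used."""
    counts = Counter(labels)
    shares = {c: counts[c] / len(labels) for c in categories}
    unused = [c for c in categories if counts[c] == 0]
    return shares, unused


def top_k_share(labels, k=3):
    """Share of all decisions that fall into the annotator's k favorite categories."""
    counts = Counter(labels)
    return sum(n for _, n in counts.most_common(k)) / len(labels)


def usage_gap(labels, reference, categories):
    """Positive values mean over-use relative to the reference, negative under-use."""
    mine, _ = category_usage(labels, categories)
    ref, _ = category_usage(reference, categories)
    return {c: mine[c] - ref[c] for c in categories}


categories = ["a", "b", "c", "d", "e"]
annotator = ["a"] * 5 + ["b"] * 3 + ["c"] * 2
reference = ["a", "b", "c", "d", "e"] * 2

shares, unused = category_usage(annotator, categories)
print(top_k_share(annotator, k=2), unused)
print(usage_gap(annotator, reference, categories))
```

Running the same functions on successive time slices of an annotator's work shows whether the distribution of choices is drifting.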


Measuring group behavior 2
• Study pairwise (dis)agreement over time
  – Count number of disagreements between each pair of annotators at selected times in project
  – Example (Bayerl 2008), scores normalized:
    • Trend: higher agreement in Phase 2
    • Puzzling: some people ‘diverge’
  – Implication: Agreement between people at one time is not necessarily a guarantee for agreement at another
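
Counting disagreements per pair at selected times, and spotting pairs that diverge, can be sketched as follows. The phase names, annotator names, and labels are invented, and normalizing by the number of items is one possible reading of "scores normalized".

```python
from itertools import combinations


def disagreement_by_phase(phases):
    """phases: dict phase name -> dict annotator -> labels (same items per phase).

    Returns, per phase, the disagreement rate for each annotator pair.
    """
    result = {}
    for phase, labels in phases.items():
        rates = {}
        for a, b in combinations(sorted(labels), 2):
            differing = sum(x != y for x, y in zip(labels[a], labels[b]))
            rates[(a, b)] = differing / len(labels[a])
        result[phase] = rates
    return result


def diverging_pairs(result, earlier, later):
    """Pairs whose disagreement rate is higher in the later phase."""
    return [
        pair for pair, rate in result[earlier].items()
        if result[later].get(pair, 0.0) > rate
    ]


phases = {
    "phase1": {"a": [1, 1, 2, 2], "b": [1, 2, 2, 1], "c": [1, 1, 2, 2]},
    "phase2": {"a": [1, 1, 2, 2], "b": [1, 1, 2, 2], "c": [2, 1, 2, 2]},
}
rates = disagreement_by_phase(phases)
print(rates)
print(diverging_pairs(rates, "phase1", "phase2"))  # [('a', 'c')]
```

In this toy data, annotators a and b converge in the second phase while a and c drift apart, which mirrors the puzzling divergence noted on the slide.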


6.3: Measuring characteristics of corpus
1. Is the corpus consistent (enough)?
  – Many corpora are compilations of smaller elements
  – Different subcorpus characteristics may produce imbalances in important respects regarding theory
  – How to determine this? What to do to fix it?
2. Is the annotated result enough? What does ‘enough’ mean?
  – (Sufficiency: when the machine learning system shows no increase in accuracy despite more training data)
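
The sufficiency criterion can be turned into a simple stopping check on a learning curve. The sketch below is one possible operationalization, not a rule from the tutorial: the thresholds `min_gain` and `patience` and the accuracy values are arbitrary assumptions.

```python
def is_sufficient(accuracies, min_gain=0.005, patience=2):
    """Decide whether more annotated data still helps.

    accuracies: system accuracy after each increment of training data,
    in order. Returns True when each of the last `patience` increments
    improved accuracy by less than `min_gain`.
    """
    if len(accuracies) <= patience:
        return False  # not enough points to judge
    recent = accuracies[-(patience + 1):]
    gains = [later - earlier for earlier, later in zip(recent, recent[1:])]
    return all(gain < min_gain for gain in gains)


print(is_sufficient([0.60, 0.70, 0.75]))                 # False: still climbing
print(is_sufficient([0.60, 0.70, 0.75, 0.752, 0.753]))   # True: curve has flattened
```

A flat curve shows only that this particular learner has stopped improving; a different system or feature set may still benefit from more data.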


Q7: Delivery
• It’s not just about annotation… How do you make sure others use the corpus?
• Technical issues:
  – Licensing
  – Distribution
  – Support/maintenance (over years?)
  – Incorporating new annotations/updates: layering
• Experts: Data managers
