
- Number of slides: 16

ANL HEP Scientific and Divisional Computing News

Tom LeCompte, High Energy Physics Division, Argonne National Laboratory


Headlines

- New developments in HPC
- Divisional Computing & Reorganization
- Some Future Opportunities and Directions

What can be done at Argonne because we are at Argonne?


High Performance Computing

- ANL-HEP received two ASCR Leadership Computing Challenge (ALCC) awards for 2014: “Cosmic Frontier Computational End Station” (Habib et al.) and “Simulation of Large Hadron Collider Events Using Leadership Computing” (LeCompte et al.)
- The second one is a new direction for accelerator-based particle physics
- We have received 50 million CPU-hours at the Argonne Leadership Computing Facility and 2 million CPU-hours at NERSC (Berkeley) to provide simulated events to ATLAS, in order to:
  - Extend the science
  - Expand the computing capacity by going beyond the Grid
  - Investigate computer architectures that are closer to where industry is moving
- 50M hours is ~4-5% of ATLAS Grid use. This was chosen to be large enough to make a difference, but small enough to avoid unnecessary risk.

The C stands for “Challenge”.

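The allocation figures on this slide can be checked with a few lines of arithmetic. This is a minimal sketch. It assumes the "~1 billion hours/year" ATLAS Grid figure quoted on the "How Are We Doing?" slide, and the variable names are illustrative only.

```python
# Allocation figures quoted on the slide, in CPU-hours.
ALCF_HOURS = 50_000_000   # Argonne Leadership Computing Facility
NERSC_HOURS = 2_000_000   # NERSC (Berkeley)

# Approximate annual ATLAS Grid use ("~1 billion hours/year"). This is an
# assumption taken from another slide, not a precise accounting figure.
ATLAS_GRID_HOURS_PER_YEAR = 1_000_000_000


def share_of_grid(hours: int, grid_hours: int = ATLAS_GRID_HOURS_PER_YEAR) -> float:
    """Return an allocation as a percentage of annual ATLAS Grid use."""
    return 100.0 * hours / grid_hours


total_award = ALCF_HOURS + NERSC_HOURS

print(f"Total award: {total_award / 1e6:.0f}M CPU-hours")
print(f"ALCF alone:  {share_of_grid(ALCF_HOURS):.1f}% of Grid use")
print(f"Both sites:  {share_of_grid(total_award):.1f}% of Grid use")
```

Taking the Grid figure at face value gives the top of the quoted ~4-5% range. A somewhat larger Grid total (about 1.25 billion hours) would put the ALCF share near 4%. The 52M total is the figure quoted in the Summary.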

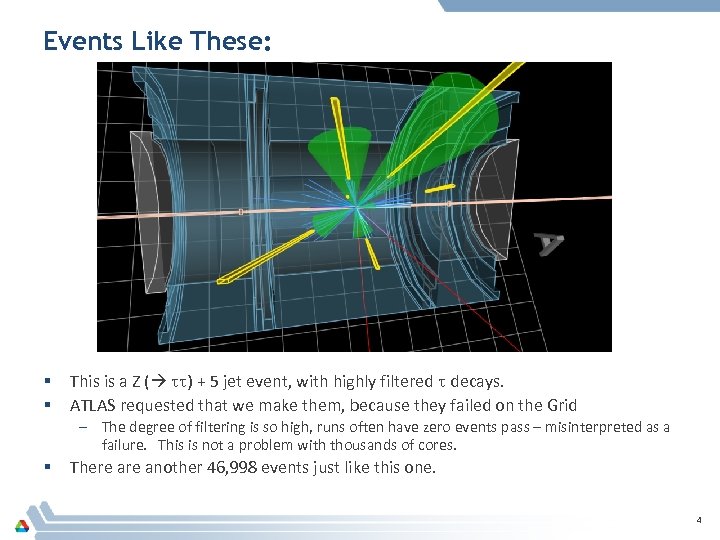

Events Like These:

- This is a Z(→ττ) + 5-jet event, with highly filtered τ decays.
- ATLAS requested that we make them because they failed on the Grid
  - The degree of filtering is so high that runs often have zero events pass, which is misinterpreted as a failure. This is not a problem with thousands of cores.
- There are another 46,998 events just like this one.

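The Grid failure described above is a small-numbers effect. This minimal sketch assumes that each generated event passes the filter independently with a fixed efficiency. Under that assumption, the chance that a job returns nothing is (1 - ε)^N. A pooled HPC run is treated as one job whose output combines thousands of cores. The efficiency and job sizes below are hypothetical and are not the settings used for this sample.

```python
import math


def prob_zero_pass(efficiency: float, n_generated: int) -> float:
    """Probability that none of n_generated independent events passes a filter.

    Binomial model: each event passes with probability `efficiency`, so
    P(zero pass) = (1 - efficiency) ** n_generated. log1p keeps the result
    accurate when the efficiency is tiny.
    """
    if efficiency >= 1.0:
        return 0.0 if n_generated > 0 else 1.0
    return math.exp(n_generated * math.log1p(-efficiency))


# Hypothetical values for illustration only, not the actual ATLAS filter settings.
EFFICIENCY = 1e-5        # assumed fraction of generated events passing the filter
EVENTS_PER_JOB = 50_000  # assumed events generated by one Grid job
N_JOBS = 1_000           # assumed number of jobs (or cores) pooled into one HPC run

p_single = prob_zero_pass(EFFICIENCY, EVENTS_PER_JOB)
p_pooled = prob_zero_pass(EFFICIENCY, EVENTS_PER_JOB * N_JOBS)

print(f"Expected passing events per job: {EFFICIENCY * EVENTS_PER_JOB:.1f}")
print(f"P(single job yields zero events): {p_single:.2f}")
print(f"P(pooled run yields zero events): {p_pooled:.1e}")
```

With an expected 0.5 passing events per job, most individual jobs come back empty. When the same work is pooled, an empty result becomes essentially impossible.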

ALCF Partners

- Hal Finkel, Catalyst (formerly HEP)
- Tom Uram, Software Development Specialist
- Venkat Vishwanath, Computer Scientist

These are among ALCF’s very best people, which shows they are serious about applying high performance computing to HEP. Doug Benjamin (Duke) has also been very helpful, particularly where this work touches the rest of ATLAS.


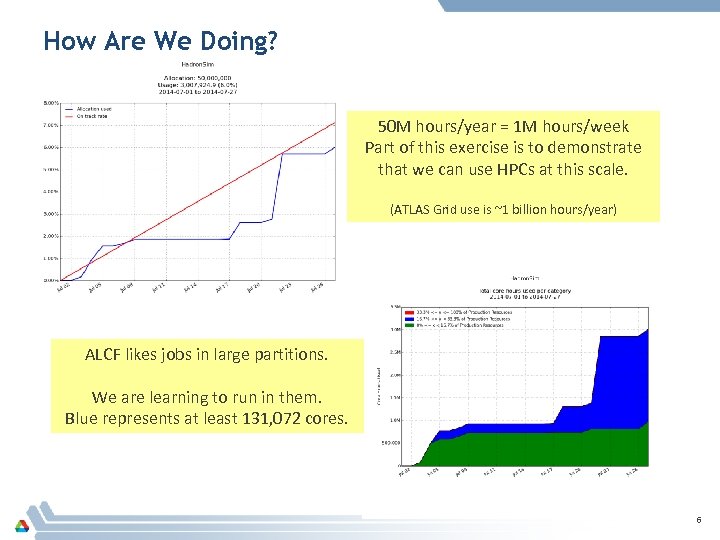

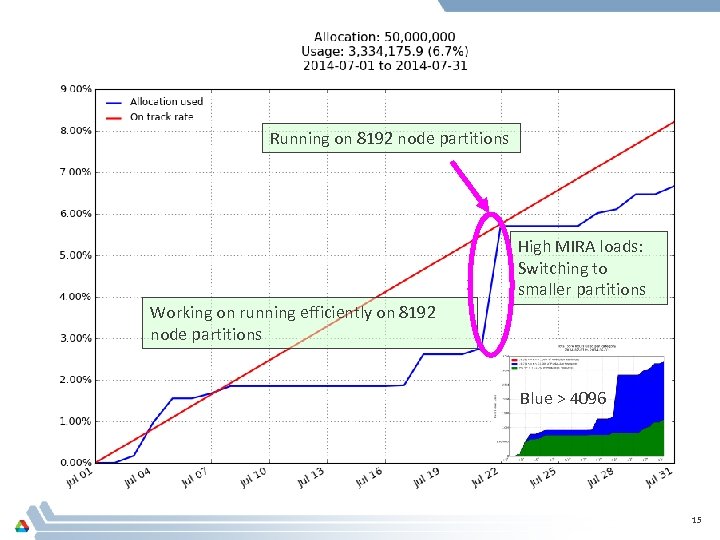

How Are We Doing?

- 50M hours/year ≈ 1M hours/week
- Part of this exercise is to demonstrate that we can use HPCs at this scale (ATLAS Grid use is ~1 billion hours/year).
- ALCF likes jobs in large partitions, and we are learning to run in them.

Blue represents at least 131,072 cores.

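The pace and partition figures on this slide relate to each other as shown below. This is a minimal sketch with two assumptions: the allocation is counted in core-hours, and "cores" in the plot legend means Blue Gene/Q compute cores, of which Mira has 16 per node.

```python
# Figures quoted on the slide.
ANNUAL_TARGET_HOURS = 50_000_000  # 50M hours/year
WEEKS_PER_YEAR = 52
BLUE_THRESHOLD_CORES = 131_072    # "Blue represents at least 131,072 cores"

# Blue Gene/Q (Mira) nodes have 16 compute cores each.
CORES_PER_NODE = 16


def weekly_rate(annual_hours: float, weeks: int = WEEKS_PER_YEAR) -> float:
    """Average hours that must be consumed per week to meet an annual target."""
    return annual_hours / weeks


def wallclock_hours(core_hours: float, cores: int) -> float:
    """Wall-clock time needed to consume core_hours on a job using `cores` cores."""
    return core_hours / cores


rate = weekly_rate(ANNUAL_TARGET_HOURS)
blue_nodes = BLUE_THRESHOLD_CORES // CORES_PER_NODE

print(f"Required pace: {rate:,.0f} hours/week")
print(f"Blue threshold: {blue_nodes} nodes")
print(f"One week's quota on that partition: {wallclock_hours(rate, BLUE_THRESHOLD_CORES):.1f} h")
```

Under these assumptions, the blue threshold corresponds to an 8192-node partition. A full week's quota amounts to roughly seven wall-clock hours on a partition of that size.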

What Are We Doing?

- Z(→ττ) + 5 jets and Z(→ττ) + 4 jets (both 8 TeV Alpgen+Pythia)
  - 98K events: would have required 3,500 24-hour Grid jobs; saved 14K CPU-hours compared to the Grid by avoiding duplicate steps in the workflow
  - 48K events: would have required 12,250 24-hour Grid jobs; saved 50K CPU-hours compared to the Grid by avoiding duplicate steps in the workflow
- Z(→ℓℓ) + 4/5/6 jets (13 TeV Alpgen+Pythia)
  - 6.5M/5.1M/0.9M events on their way to ATLAS (Mira is done and they are in post-processing now)
  - An early start for the ATLAS simulation campaign beginning in September
  - These events are also being provided to CMS
- W + heavy flavor (13 TeV Alpgen+Pythia)
- Sherpa W+N jets
  - 11K events, part of ATLAS’ Sherpa 2.1 validation
  - Corresponds to a total of 3,000 CPU-hours

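The "would have required" and "saved" figures for the two Z(→ττ) samples can be put on a common footing. This minimal sketch assumes that each quoted 24-hour Grid job holds one core for its full 24 hours. The slide does not say this. The samples are labelled only by event count.

```python
GRID_JOB_WALLTIME_H = 24  # each quoted Grid job is a 24-hour job


def grid_cpu_hours(n_jobs: int, walltime_h: int = GRID_JOB_WALLTIME_H) -> int:
    """CPU-hours for n_jobs, assuming each job holds one core for its full walltime."""
    return n_jobs * walltime_h


def saved_fraction(saved_cpu_hours: float, n_jobs: int) -> float:
    """Quoted savings as a fraction of the equivalent Grid CPU-hours."""
    return saved_cpu_hours / grid_cpu_hours(n_jobs)


# (sample label, Grid jobs that would have been required, CPU-hours saved), as quoted.
samples = [
    ("98K events", 3_500, 14_000),
    ("48K events", 12_250, 50_000),
]

for label, jobs, saved in samples:
    print(f"{label}: {grid_cpu_hours(jobs):,} Grid CPU-hours, "
          f"{100 * saved_fraction(saved, jobs):.0f}% saved")
```

Under this assumption, both samples come out at about 17% of the equivalent Grid CPU-hours saved.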

Issues We Are Addressing

- Alpgen runs beautifully at 512 nodes and 64 hardware threads per node.
  - 512 nodes is the minimum Mira partition
  - It took us several months to get to this point
- We have been pushing towards larger and larger partitions
  - We can run on 8192 nodes (32 threads per node), but we are now starting to see where the boundaries and limitations are.
- We want a balance between producing useful events (favoring small partitions) and learning to use these machines effectively at scale (favoring large ones)
- Sherpa 2.1 is running on mid-sized machines (hundreds of processes, not thousands)
  - ATLAS has not yet validated 2.1 (we’re a part of that validation)
  - We need to scale up to thousands of processes.
- Geant4.10 runs just as well as Alpgen
  - But running Geant4.10 inside the ATLAS framework complicates matters

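The two Alpgen configurations quoted above differ in total concurrency and in the memory available to each thread. This minimal sketch uses Blue Gene/Q node specifications: 16 compute cores, 4 hardware threads per core, and 16 GB per node. It assumes memory is shared evenly among the threads on a node. The slide does not say why 32 threads per node were used at 8192 nodes, so the memory figure only shows what each choice implies.

```python
# Blue Gene/Q node facts: 16 compute cores, 4 hardware threads per core, 16 GB memory.
CORES_PER_NODE = 16
HW_THREADS_PER_CORE = 4
NODE_MEMORY_MB = 16 * 1024

MIN_PARTITION_NODES = 512  # minimum Mira partition, as stated on the slide


def total_threads(nodes: int, threads_per_node: int) -> int:
    """Number of concurrent hardware threads in use across a partition."""
    if nodes < MIN_PARTITION_NODES:
        raise ValueError(f"Mira partitions start at {MIN_PARTITION_NODES} nodes")
    if threads_per_node > CORES_PER_NODE * HW_THREADS_PER_CORE:
        raise ValueError("more threads than hardware threads on a node")
    return nodes * threads_per_node


def memory_per_thread_mb(threads_per_node: int) -> float:
    """Node memory divided evenly among the threads running on it."""
    return NODE_MEMORY_MB / threads_per_node


# The two configurations quoted on the slide: (nodes, threads per node).
for nodes, tpn in [(512, 64), (8192, 32)]:
    print(f"{nodes:>5} nodes x {tpn} threads = {total_threads(nodes, tpn):>7,} threads, "
          f"{memory_per_thread_mb(tpn):.0f} MB/thread")
```

Going from 512 to 8192 nodes multiplies the node count by 16. Halving the threads per node means the total thread count grows by 8×, while each thread has twice the memory.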

Forum on Computational Excellence: Response to P5 Recommendation #29

- HEP computational needs are continually growing
- For 30 years, our solution was ever-larger farms of commodity PCs
  - PCs are not the commodities they once were
  - The industry is trending towards more and smaller cores with less memory per core
  - HPCs are ahead of the curve on these trends
- The HEP community will need to face these challenges together
  - This is more difficult to do when much of the software R&D is isolated within the LHC Operations program
- The Forum is intended to reduce this isolation and frontier stove-piping.


Divisional Computing Reorganization

- The Division has reorganized its computing
- Three activities are now in one place:
  - David Malon’s ATLAS Computing Group
  - The HPC activity you just heard about
  - Divisional computing, e.g. neutrino, theory and ATLAS clusters
- This allows us to leverage Laboratory resources for Divisional needs
  - e.g. a “cloudy” system like Magellan could support multiple experiments and build expertise in mid-scale supercomputing, without having to buy more processors.
- It also allows us to better align the divisional expertise with the national program


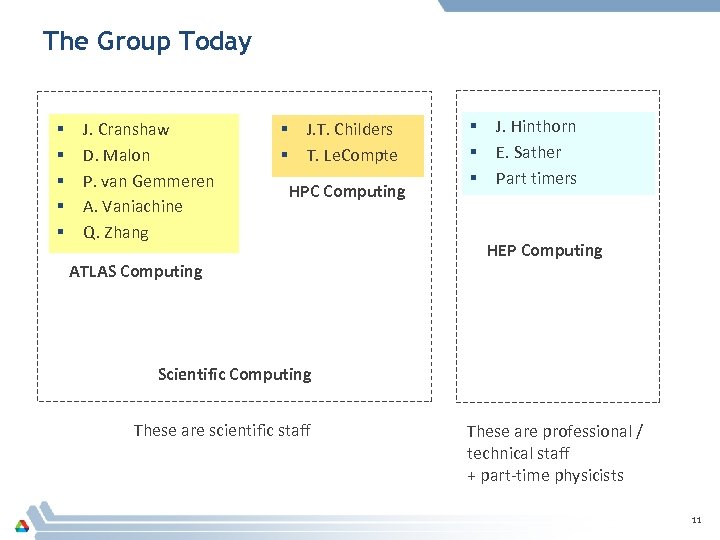

The Group Today

- Scientific Computing (these are scientific staff)
  - ATLAS Computing: J. Cranshaw, D. Malon, P. van Gemmeren, A. Vaniachine, Q. Zhang
  - HPC Computing: J. T. Childers, T. LeCompte
- HEP Computing (these are professional/technical staff + part-time physicists)
  - J. Hinthorn, E. Sather, part-timers


Fermilab g-2

- Interested in simulating a full run of the experiment
  - Comparable to an ATLAS simulation campaign
  - 1000× as many events, each requiring 1/1000 the computation
    - Big, but not unprecedented
  - Geant4.10 is well suited to Mira
    - (Not an accident: we’ve been working with Dennis Wright and Andrea Dotti (SLAC) on this)
- Wouldn’t have to do this all at once
  - A one-tenth-scale run fits nicely as a 2015 ALCC candidate
- We don’t anticipate leading this effort, but we believe we can play a role: we understand the issues in getting HEP software running at these scales.


Mu2e & LBNF

- Mu2e
  - We have expressed interest in working on the Mu2e DAQ
  - This is a system built entirely out of off-the-shelf hardware, running a customized version of Fermilab’s artdaq
  - This bridges two areas of current expertise: Trigger/DAQ hardware (we built the ROI Builder for ATLAS) and offline core software
  - We see the potential of bringing a turn-key DAQ solution to other areas of Argonne, e.g. Photon Sciences
- LBNF
  - We were negotiating with LBNE to work on offline software before the P5 report
    - A very-near-term role in database and data access infrastructure for the 35-ton prototype
    - Mid- and long-term roles in offline software, leveraging our data, metadata, and I/O infrastructure expertise from ATLAS
  - We are still interested as LBNE transitions to LBNF
    - We continue to participate in the LBNE software and computing meetings


Summary

- Building on the foundation of ATLAS data management and computational cosmology…
- We have started a small but well-leveraged HPC effort for ATLAS
  - We are on track to deliver 52M hours of computation to ATLAS (our ALCC award)
  - We have two event generators working; simulation is harder
- We would like to take advantage of emerging opportunities in the evolving national program


Running on 8192-node partitions

- High Mira loads: switching to smaller partitions
- Working on running efficiently on 8192-node partitions

Blue > 4096


A Brief Update on ATLAS Core Software

- Argonne continues to hold lead responsibility within the collaboration for I/O, persistence, and metadata infrastructure supporting a globally distributed, 100+ petabyte data store
  - And for production workflow development and operation
- An intensive development effort is now underway to support the requirements of ATLAS Run 2 computing
  - New and radically different event data model
  - New computing model and derivation framework for analysis data products
- Argonne was recently asked to lead the ATLAS ROOT 6 migration as well
- And to organize and coordinate a distributed I/O performance working group
  - Integrating core software, distributed computing, operations, and site experts to improve analysis performance in as-deployed distributed environments
