
- Number of slides: 25

An open security defense architecture for open collaborative cyber infrastructures

Xinming (Simon) Ou, Kansas State University

The Great Plains Network Annual Meeting 2009, Kansas City, Missouri

GPN 2009, May 29, Kansas City, Missouri

Challenges to securing cyber infrastructures

- Cyber warfare is asymmetric
  - An attack only needs to break a few points
  - Defense has to be comprehensive
- Attackers have the upper hand in automation
  - Many automated exploit tools
  - Not so many good defense tools
- Openness of academic cyber infrastructures
  - Unrealistic to impose draconian control on access

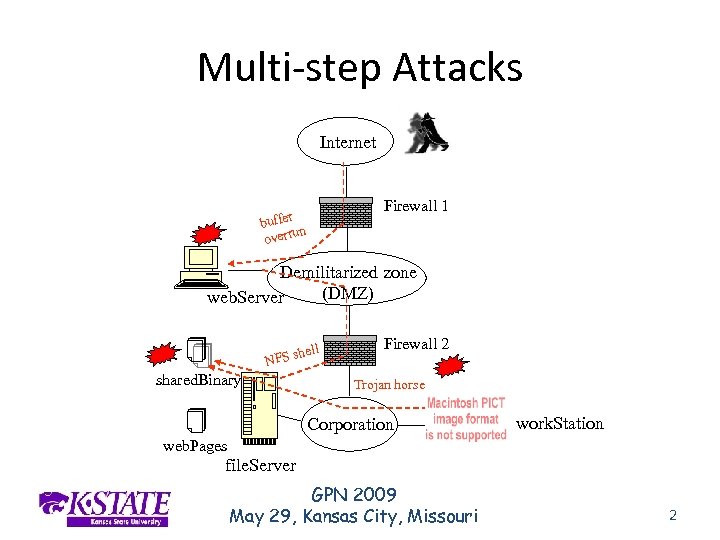

Multi-step Attacks

[Figure: network diagram of a multi-step attack. Labels: Internet; Firewall 1; Demilitarized zone (DMZ); webServer; Firewall 2; Corporation; workStation; webPages; fileServer; buffer overrun; NFS shell; sharedBinary; Trojan horse.]

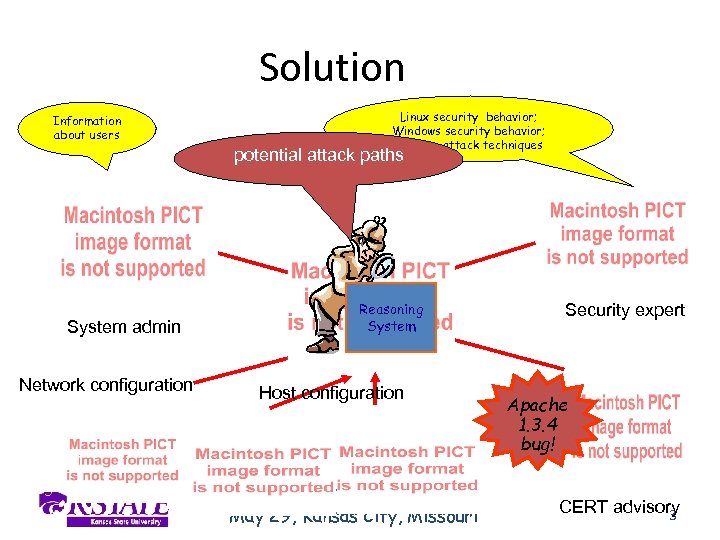

Solution

[Figure: Reasoning System diagram. Labels: System admin; Information about users; Network configuration; Host configuration; Security expert; Linux security behavior; Windows security behavior; Common attack techniques; CERT advisory; "Apache 1.3.4 bug!"; potential attack paths.]

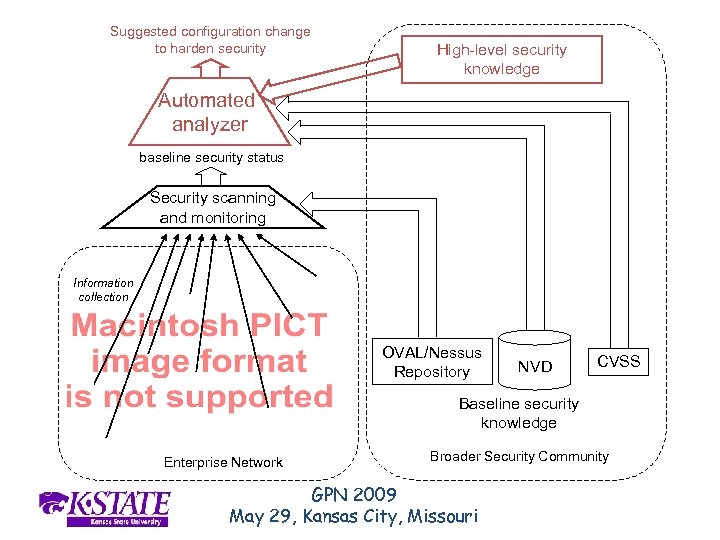

[Figure: architecture diagram. Labels: Enterprise Network; Security scanning and monitoring; Information collection; baseline security status; Automated analyzer; Suggested configuration change to harden security; High-level security knowledge; Baseline security knowledge; OVAL/Nessus; Repository; NVD; CVSS; Broader Security Community.]

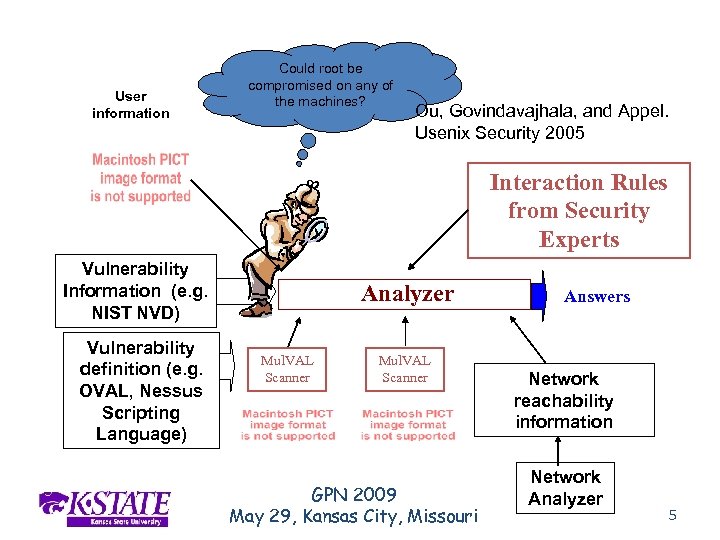

MulVAL

Ou, Govindavajhala, and Appel. Usenix Security 2005

[Figure: MulVAL architecture. Labels: User information; Interaction Rules from Security Experts; Vulnerability Information (e.g. NIST NVD); Vulnerability definition (e.g. OVAL, Nessus Scripting Language); MulVAL Scanner; Network reachability information; Network Analyzer; Analyzer; query "Could root be compromised on any of the machines?"; Answers.]

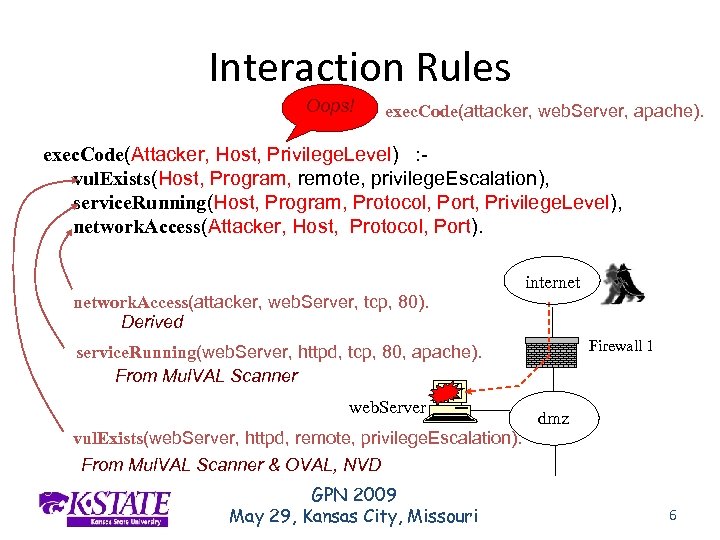

Interaction Rules

execCode(Attacker, Host, PrivilegeLevel) :-
    vulExists(Host, Program, remote, privilegeEscalation),
    serviceRunning(Host, Program, Protocol, Port, PrivilegeLevel),
    networkAccess(Attacker, Host, Protocol, Port).

Facts for the example network (internet, Firewall 1, webServer in the dmz):

- networkAccess(attacker, webServer, tcp, 80). (Derived)
- serviceRunning(webServer, httpd, tcp, 80, apache). (From MulVAL Scanner)
- vulExists(webServer, httpd, remote, privilegeEscalation). (From MulVAL Scanner & OVAL, NVD)

Conclusion: execCode(attacker, webServer, apache). Oops!
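To make the derivation on this slide concrete, here is a minimal Python sketch that applies the execCode rule once to the three facts shown. It is only an illustration of how the rule body joins the facts; MulVAL itself evaluates such rules as Datalog, and the tuple encoding and the function name `derive_exec_code` are assumptions made for this example.

```python
# Facts as tuples: (predicate, arg1, arg2, ...). Values mirror the slide.
facts = {
    ("vulExists", "webServer", "httpd", "remote", "privilegeEscalation"),
    ("serviceRunning", "webServer", "httpd", "tcp", 80, "apache"),
    ("networkAccess", "attacker", "webServer", "tcp", 80),
}


def derive_exec_code(facts):
    """Apply the execCode rule once by joining its three body predicates."""
    vulns = [f for f in facts if f[0] == "vulExists"]
    services = [f for f in facts if f[0] == "serviceRunning"]
    accesses = [f for f in facts if f[0] == "networkAccess"]

    derived = set()
    for _, v_host, v_prog, v_range, v_effect in vulns:
        # The rule only fires for remotely exploitable privilege escalation.
        if (v_range, v_effect) != ("remote", "privilegeEscalation"):
            continue
        for _, s_host, s_prog, proto, port, priv in services:
            # Host and Program must unify between vulExists and serviceRunning.
            if (s_host, s_prog) != (v_host, v_prog):
                continue
            for _, attacker, a_host, a_proto, a_port in accesses:
                # Host, Protocol, and Port must unify with networkAccess.
                if (a_host, a_proto, a_port) == (s_host, proto, port):
                    derived.add(("execCode", attacker, a_host, priv))
    return derived


print(derive_exec_code(facts))
# prints {('execCode', 'attacker', 'webServer', 'apache')}
```

Removing any one of the three facts makes the result empty, which is the sense in which a suggested configuration change can block an attack step.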

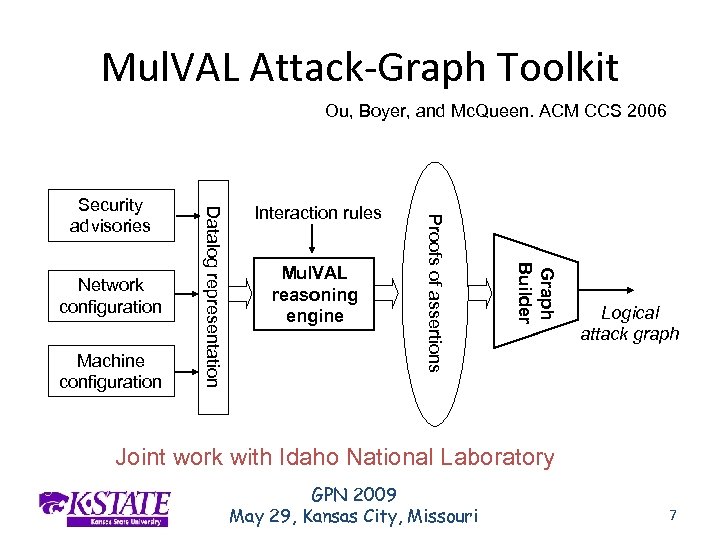

MulVAL Attack-Graph Toolkit

Ou, Boyer, and McQueen. ACM CCS 2006. Joint work with Idaho National Laboratory.

[Figure: toolkit pipeline. Labels: Security advisories; Machine configuration; Network configuration; Datalog representation; Interaction rules; MulVAL reasoning engine; Proofs of assertions; Graph Builder; Logical attack graph.]

Test on a Real Network

- Used MulVAL to check the configuration of four Linux servers
  - Reported a potential two-stage attack path due to multiple vulnerabilities on a server.
- Three local kernel vulnerabilities
- One buffer overflow bug in libpng
- Local users are trusted
- Web browser links libpng

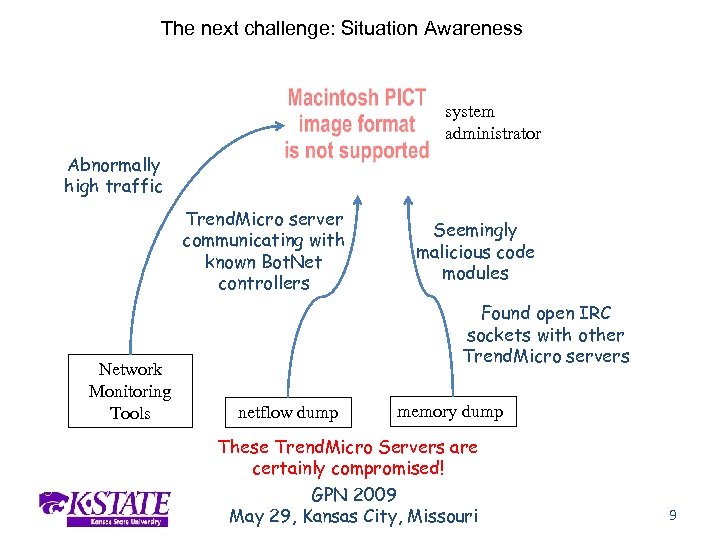

The next challenge: Situation Awareness

[Figure: a system administrator working with Network Monitoring Tools, a netflow dump, and a memory dump. Observations: abnormally high traffic; TrendMicro server communicating with known BotNet controllers; seemingly malicious code modules; found open IRC sockets with other TrendMicro servers. Conclusion: "These TrendMicro Servers are certainly compromised!"]

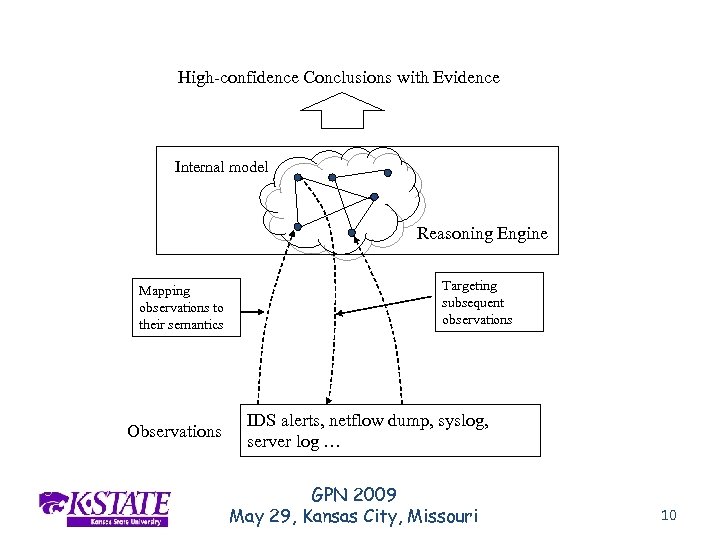

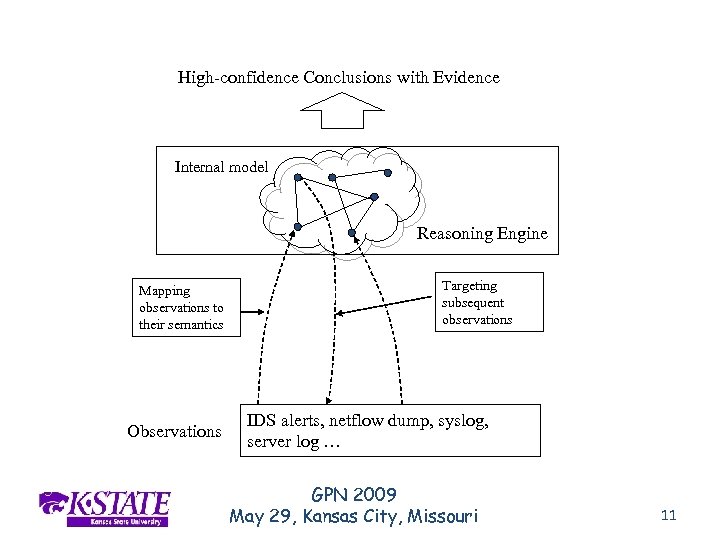

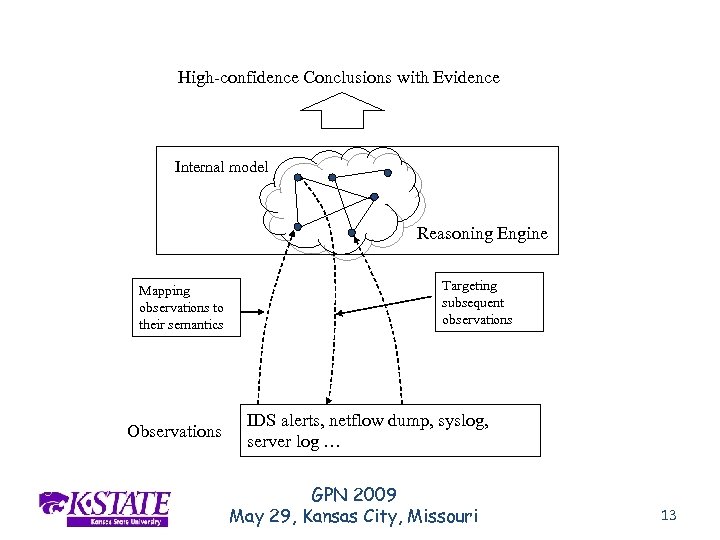

High-confidence Conclusions with Evidence

[Figure: reasoning pipeline. Labels: Observations (IDS alerts, netflow dump, syslog, server log …); Mapping observations to their semantics; Internal model; Reasoning Engine; Targeting subsequent observations; High-confidence Conclusions with Evidence.]


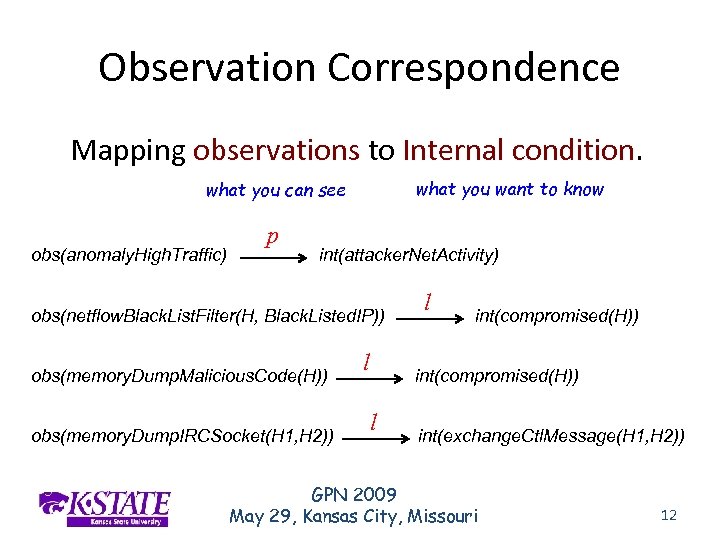

Observation Correspondence

Mapping observations (what you can see) to internal conditions (what you want to know). Each mapping carries a mode label:

- obs(anomalyHighTraffic) →(p) int(attackerNetActivity)
- obs(netflowBlackListFilter(H, BlackListedIP)) →(l) int(compromised(H))
- obs(memoryDumpMaliciousCode(H)) →(l) int(compromised(H))
- obs(memoryDumpIRCSocket(H1, H2)) →(l) int(exchangeCtlMessage(H1, H2))
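The correspondence on this slide can be sketched as a lookup table in Python. This is an illustrative encoding, not the system's own representation: the table layout, the `interpret` helper, and the convention of keeping only the leading arguments of an observation are assumptions. Reading the labels p and l as "possible" and "likely" is also an assumption, based on the "likely true" and "certainly true" wording used elsewhere in the deck.

```python
# observation name -> (internal condition name, number of leading
# observation arguments carried over, mode label from the slide)
OBS_MAP = {
    "anomalyHighTraffic": ("attackerNetActivity", 0, "p"),
    "netflowBlackListFilter": ("compromised", 1, "l"),
    "memoryDumpMaliciousCode": ("compromised", 1, "l"),
    "memoryDumpIRCSocket": ("exchangeCtlMessage", 2, "l"),
}


def interpret(obs_name, args):
    """Map one observation to an (internal condition, arguments, mode) triple."""
    internal, arity, mode = OBS_MAP[obs_name]
    return (internal, tuple(args[:arity]), mode)


# Hypothetical host addresses, used only for illustration.
print(interpret("netflowBlackListFilter", ["10.0.0.5", "203.0.113.9"]))
print(interpret("memoryDumpIRCSocket", ["10.0.0.5", "10.0.0.6"]))
```

Note that two different observations map to the same internal condition, compromised(H), which is what later allows independent pieces of evidence to reinforce each other.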


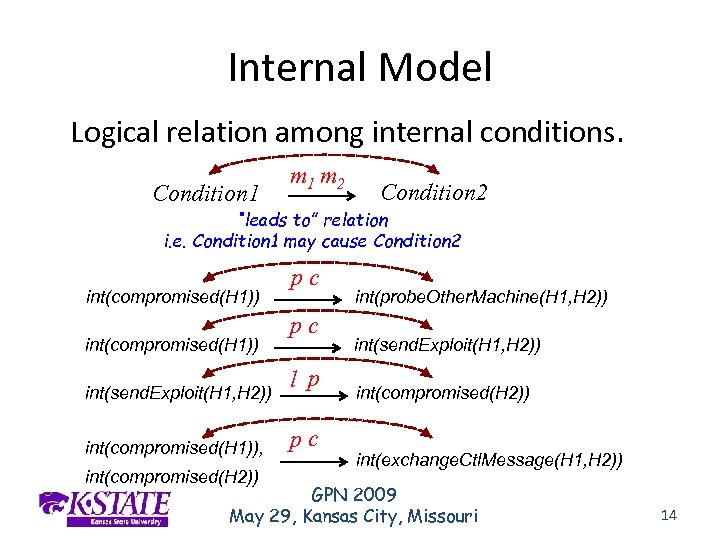

Internal Model

Logical relation among internal conditions. Condition 1 → Condition 2 is a "leads to" relation labeled with a mode, i.e. Condition 1 may cause Condition 2.

- int(compromised(H1)) →(pc) int(probeOtherMachine(H1, H2))
- int(compromised(H1)) →(pc) int(sendExploit(H1, H2))
- int(sendExploit(H1, H2)) →(lp) int(compromised(H2))
- int(compromised(H1)), int(compromised(H2)) →(pc) int(exchangeCtlMessage(H1, H2))
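A rough Python sketch of how two of these relations might be applied follows. It rests on two assumptions that the slide does not state: that a two-letter label such as pc or lp gives a forward mode followed by a backward mode, and that a derived condition takes the weaker of the premise's mode and the rule's mode. Both were chosen because they reproduce the modes shown in the trace on the "Some Results" slide; the rule names intRule_1 and intRule_3 are taken from that trace, and the function names are hypothetical.

```python
# Ordering of modes from weakest to strongest (assumed reading:
# p = possible, l = likely, c = certain).
ORDER = {"p": 0, "l": 1, "c": 2}


def weaker(mode_a, mode_b):
    """Return whichever of the two modes is weaker."""
    return mode_a if ORDER[mode_a] <= ORDER[mode_b] else mode_b


def int_rule_1_backward(probe_fact):
    """compromised(H1) ->(pc) probeOtherMachine(H1, H2), read backward.

    A probe from H1 suggests H1 is compromised; backward mode assumed 'c'.
    """
    _, (h1, _h2), mode = probe_fact
    return ("compromised", (h1,), weaker(mode, "c"))


def int_rule_3_forward(exploit_fact):
    """sendExploit(H1, H2) ->(lp) compromised(H2), read forward.

    An exploit sent to H2 suggests H2 is compromised; forward mode assumed 'l'.
    """
    _, (_h1, h2), mode = exploit_fact
    return ("compromised", (h2,), weaker(mode, "l"))


probe = ("probeOtherMachine", ("192.168.10.90", "192.168.70.49"), "l")
exploit = ("sendExploit", ("128.111.49.46", "192.168.10.90"), "c")
print(int_rule_1_backward(probe))
print(int_rule_3_forward(exploit))
```

Under these assumptions both rules yield compromised('192.168.10.90') with mode l, from two different observations.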

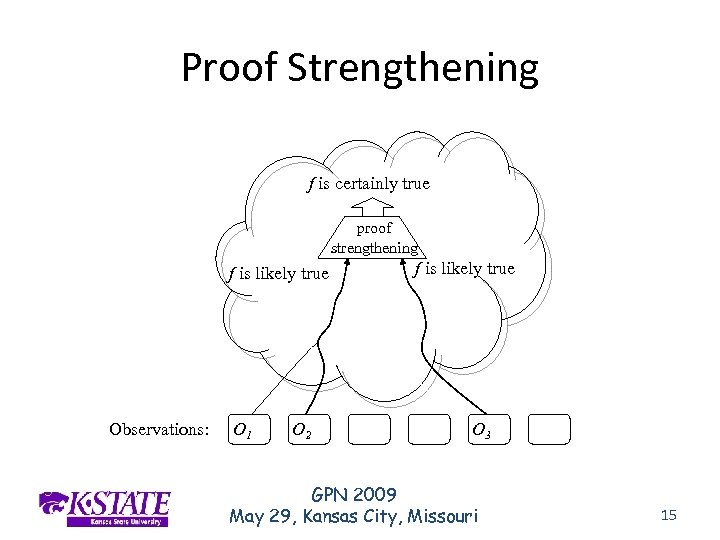

Proof Strengthening

[Figure: observations O1 and O2 support one proof that "f is likely true"; observation O3 supports a second proof that "f is likely true"; proof strengthening combines the two into "f is certainly true".]
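The idea of proof strengthening can be sketched in Python as follows. This is a minimal illustration under stated assumptions: a proof is represented as a (fact, mode, observations) triple, two proofs count as independent when their observation sets are disjoint, and only the likely-plus-likely case shown on the slide is handled. The function name `strengthen` and the string used for the fact are hypothetical.

```python
def strengthen(proof_a, proof_b):
    """Combine two independent 'likely' proofs of the same fact into 'certain'.

    Each proof is (fact, mode, frozenset_of_observations). Returns the
    strengthened proof, or None if the proofs cannot be combined.
    """
    fact_a, mode_a, obs_a = proof_a
    fact_b, mode_b, obs_b = proof_b
    if fact_a != fact_b:
        return None  # the proofs are about different facts
    if obs_a & obs_b:
        return None  # shared evidence, so not treated as independent
    if mode_a == "l" and mode_b == "l":
        return (fact_a, "c", obs_a | obs_b)
    return None  # other mode combinations are left out of this sketch


proof_1 = ("f", "l", frozenset({"O1", "O2"}))
proof_2 = ("f", "l", frozenset({"O3"}))
print(strengthen(proof_1, proof_2))
```

Requiring disjoint evidence keeps a single noisy observation from being counted twice and promoted to a certain conclusion.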

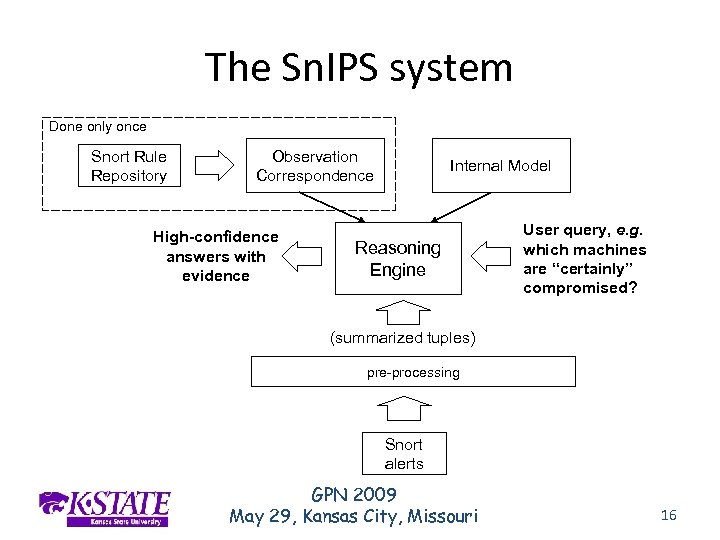

The SnIPS system

[Figure: SnIPS architecture. Labels: Snort Rule Repository; Done only once; Observation Correspondence; Internal Model; Snort alerts; pre-processing; (summarized tuples); Reasoning Engine; User query, e.g. which machines are "certainly" compromised?; High-confidence answers with evidence.]

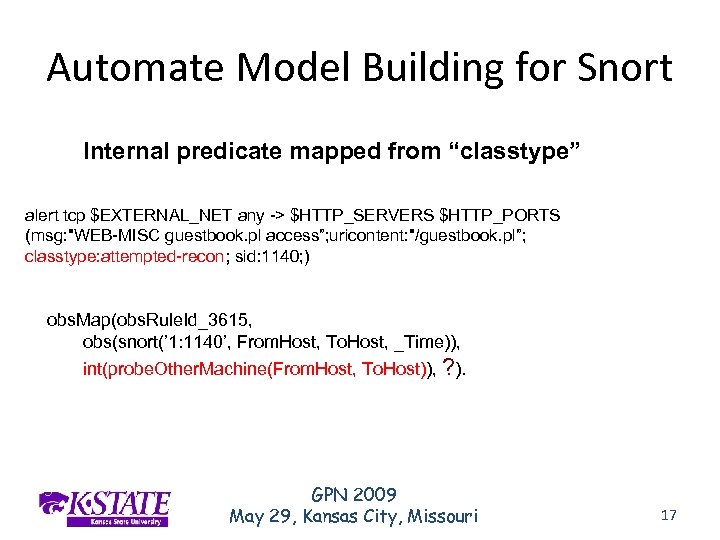

Automate Model Building for Snort

Internal predicate mapped from "classtype":

alert tcp $EXTERNAL_NET any -> $HTTP_SERVERS $HTTP_PORTS (msg:"WEB-MISC guestbook.pl access"; uricontent:"/guestbook.pl"; classtype:attempted-recon; sid:1140;)

obsMap(obsRuleId_3615, obs(snort('1:1140', FromHost, ToHost, _Time)), int(probeOtherMachine(FromHost, ToHost)), ?).
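The classtype-based mapping can be sketched in Python as below. This is an assumption-laden illustration, not the SnIPS implementation: the only classtype entry is the one shown on the slide, the generator id 1 is hard-coded to match the '1:1140' identifier, the rule id is passed in by the caller, and the mode is left as "?" exactly as the slide leaves it. The function name `obs_map_from_rule` is hypothetical.

```python
import re

# Only the mapping shown on the slide; any further entries would be guesses.
CLASSTYPE_TO_INTERNAL = {
    "attempted-recon": "probeOtherMachine(FromHost, ToHost)",
}


def obs_map_from_rule(rule_text, rule_id):
    """Build an obsMap clause (as text) from a Snort rule's sid and classtype."""
    sid = re.search(r"\bsid\s*:\s*(\d+)\s*;", rule_text)
    classtype = re.search(r"\bclasstype\s*:\s*([\w-]+)\s*;", rule_text)
    if sid is None or classtype is None:
        return None  # the rule lacks one of the two options we rely on
    internal = CLASSTYPE_TO_INTERNAL.get(classtype.group(1))
    if internal is None:
        return None  # classtype not covered by this sketch
    return (
        f"obsMap({rule_id}, "
        f"obs(snort('1:{sid.group(1)}', FromHost, ToHost, _Time)), "
        f"int({internal}), ?)."
    )


rule = (
    'alert tcp $EXTERNAL_NET any -> $HTTP_SERVERS $HTTP_PORTS '
    '(msg:"WEB-MISC guestbook.pl access"; uricontent:"/guestbook.pl"; '
    'classtype:attempted-recon; sid:1140;)'
)
print(obs_map_from_rule(rule, "obsRuleId_3615"))
```

A rule whose classtype is not in the table returns None here, which corresponds to rules that would need manual attention.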

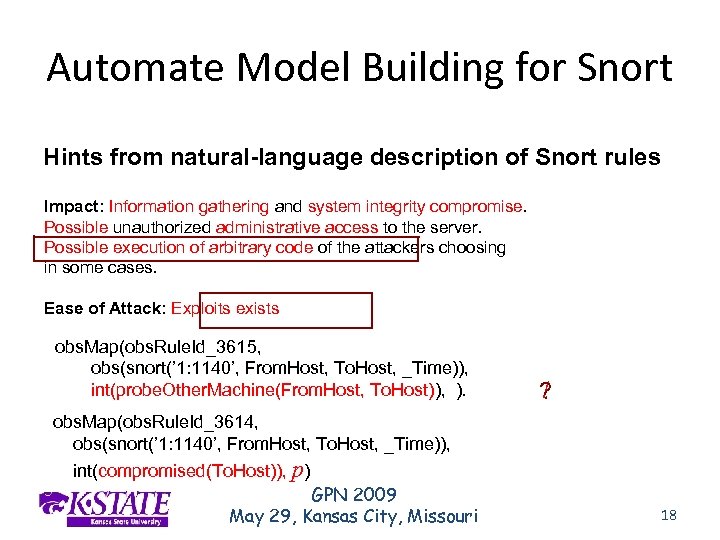

Automate Model Building for Snort

Hints from the natural-language description of Snort rules:

- Impact: Information gathering and system integrity compromise. Possible unauthorized administrative access to the server. Possible execution of arbitrary code of the attacker's choosing in some cases.
- Ease of Attack: Exploits exist

The description supplies the mode that the classtype alone left open (shown as "?"), here l, and suggests a second mapping:

obsMap(obsRuleId_3615, obs(snort('1:1140', FromHost, ToHost, _Time)), int(probeOtherMachine(FromHost, ToHost)), l).

obsMap(obsRuleId_3614, obs(snort('1:1140', FromHost, ToHost, _Time)), int(compromised(ToHost)), p).

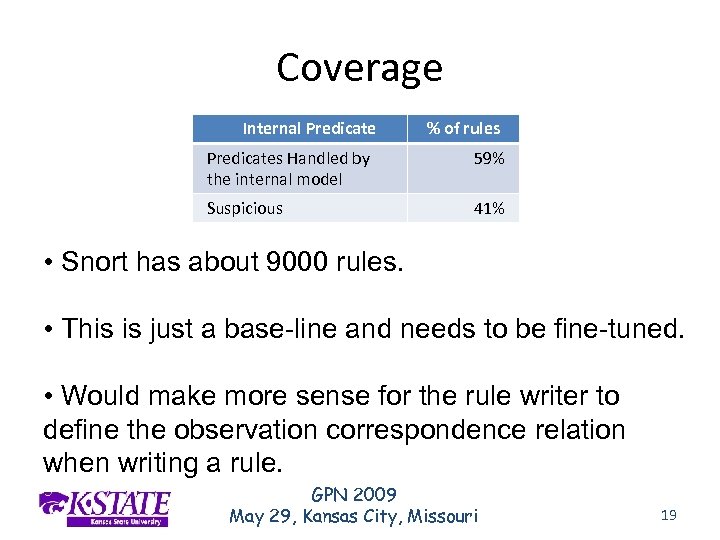

Coverage

| Internal predicate | % of rules |
| --- | --- |
| Predicates handled by the internal model | 59% |
| Suspicious | 41% |

- Snort has about 9000 rules.
- This is just a baseline and needs to be fine-tuned.
- It would make more sense for the rule writer to define the observation correspondence relation when writing a rule.

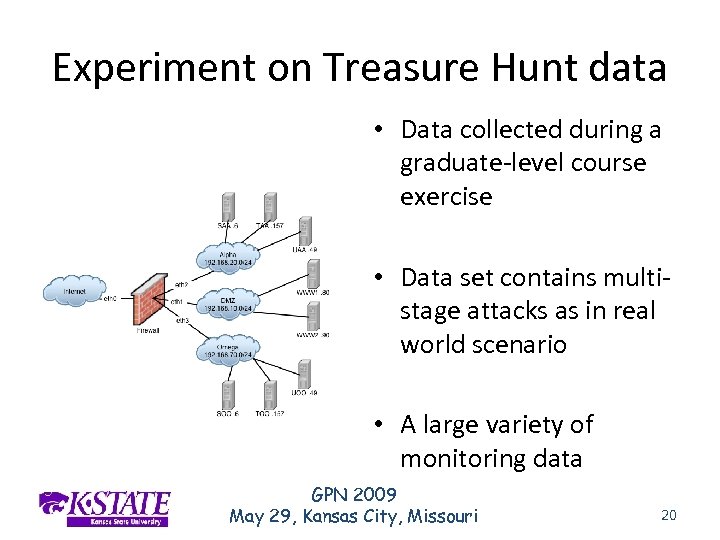

Experiment on Treasure Hunt data

- Data collected during a graduate-level course exercise
- Data set contains multistage attacks, as in a real-world scenario
- A large variety of monitoring data

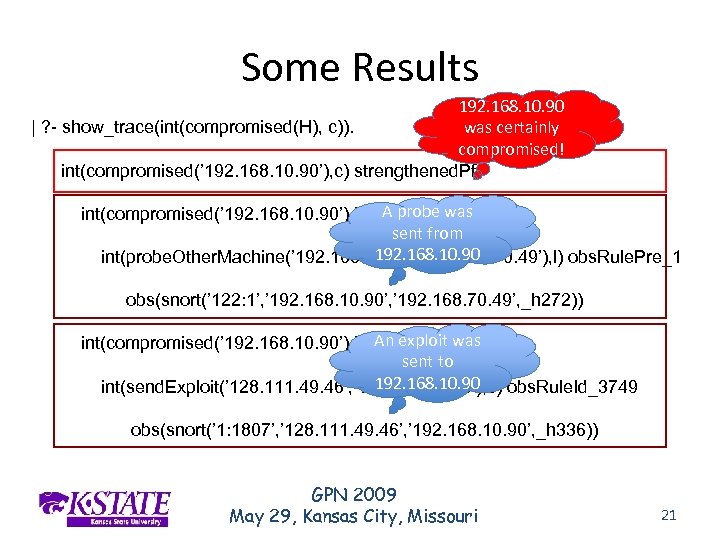

Some Results

| ?- show_trace(int(compromised(H), c)).

int(compromised('192.168.10.90'), c)  strengthenedPf
  int(compromised('192.168.10.90'), l)  intRule_1
    int(probeOtherMachine('192.168.10.90', '192.168.70.49'), l)  obsRulePre_1
      obs(snort('122:1', '192.168.10.90', '192.168.70.49', _h272))
  int(compromised('192.168.10.90'), l)  intRule_3
    int(sendExploit('128.111.49.46', '192.168.10.90'), c)  obsRuleId_3749
      obs(snort('1:1807', '128.111.49.46', '192.168.10.90', _h336))

Annotations on the trace:

- 192.168.10.90 was certainly compromised!
- A probe was sent from 192.168.10.90
- An exploit was sent to 192.168.10.90

Summary

- Open knowledge sharing and automated knowledge reuse are key to effective cyber defense
- Advantages of logic-based techniques
  - Publishing and incorporation of knowledge/information through well-understood logical semantics
  - Efficient and sound analysis by leveraging the reasoning power of well-developed logic-deduction systems

Who We Are

Argus: Cyber Security Research Group at Kansas State University

http://people.cis.ksu.edu/~xou/argus/

Contact me: Simon Ou, xou@ksu.edu

Thank You! Questions?
