- Number of slides: 59

An Introduction to ESnet and its Services
William E. Johnston, ESnet Manager and Senior Scientist
Michael S. Collins, Stan Kluz, Joseph Burrescia, and James V. Gagliardi, ESnet Leads
and the ESnet Team

Outline
• Foreword
• ESnet science drivers
• 30 second tutorial on networking
• ESnet physical and logical infrastructure
• Not just one network
• Services for science collaboration
• ESnet is fairly unique
• ESnet is complex in several dimensions
• Operating critical science mission infrastructure
• Asset management
• Future directions
• Conclusions

ESnet
• An infrastructure that is critical to DOE’s science mission and that serves all of DOE
• Focused on the Office of Science Labs
• You can’t go out and buy this: ESnet integrates commercial products and in-house software into a complex management system for operating the net
• You can’t go out and take a class in how to run this sort of network: it is specialized and is learned from experience
• Extremely reliable in several dimensions
• Complex and specialized, both in the network engineering and the network management
• ESnet has functioned flawlessly during the current turmoil

Stakeholders
• DOE MICS Office, ESnet program
• ESnet Steering Committee (ESSC)
  o represents the Science Offices (strategic needs)
• ESnet Coordinating Committee (ESCC)
  o site representatives (operational issues)
• Users
  o Mostly DOE Office of Science
  o NNSA / Defense Programs
  o DOE collaborators
  o A few others (e.g. the NSF LIGO site)

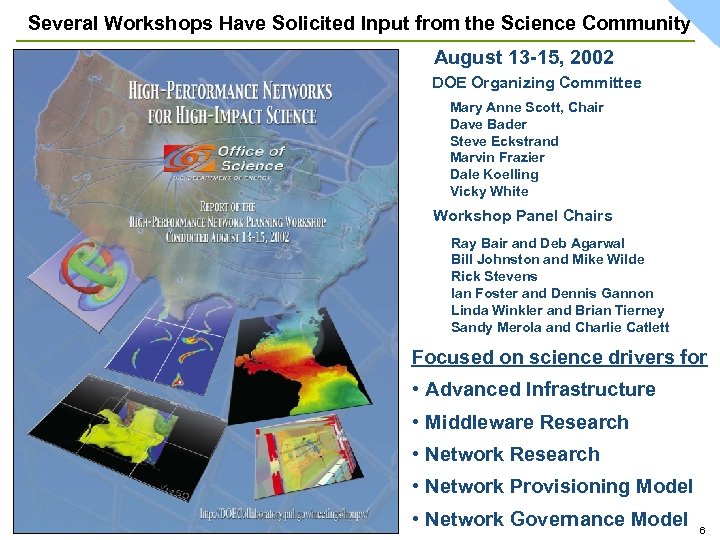

Several Workshops Have Solicited Input from the Science Community
August 13-15, 2002
DOE Organizing Committee: Mary Anne Scott (Chair), Dave Bader, Steve Eckstrand, Marvin Frazier, Dale Koelling, Vicky White
Workshop Panel Chairs: Ray Bair and Deb Agarwal; Bill Johnston and Mike Wilde; Rick Stevens; Ian Foster and Dennis Gannon; Linda Winkler and Brian Tierney; Sandy Merola and Charlie Catlett
Focused on science drivers for
• Advanced Infrastructure
• Middleware Research
• Network Provisioning Model
• Network Governance Model

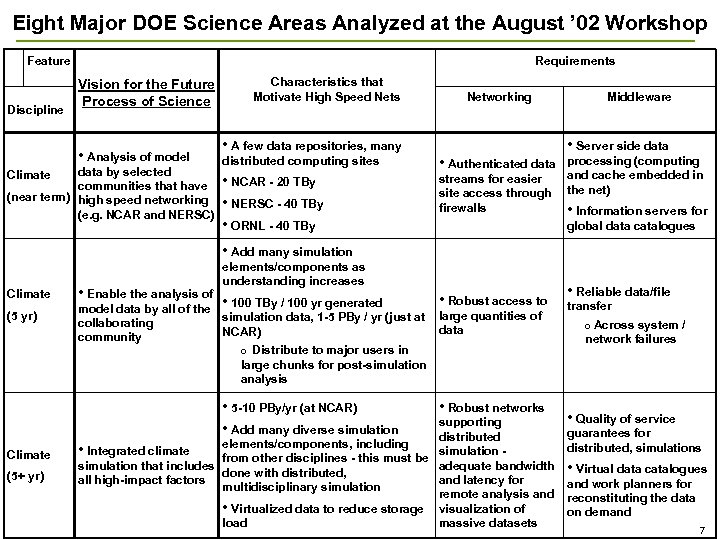

Eight Major DOE Science Areas Analyzed at the August ’02 Workshop
Table columns: Feature / Discipline; Vision for the Future Process of Science; Characteristics that Motivate High Speed Nets; Requirements (Networking, Middleware)

Climate (near term)
• Vision: Analysis of model data by selected communities that have high speed networking (e.g. NCAR and NERSC)
• Characteristics:
  o A few data repositories, many distributed computing sites
  o NCAR - 20 TBy
  o NERSC - 40 TBy
  o ORNL - 40 TBy
• Networking: Authenticated data streams for easier site access through firewalls
• Middleware:
  o Server side data processing (computing and cache embedded in the net)
  o Information servers for global data catalogues

Climate (5 yr)
• Vision: Enable the analysis of model data by all of the collaborating community
• Characteristics:
  o Add many simulation elements/components as understanding increases
  o 100 TBy / 100 yr generated simulation data, 1-5 PBy / yr (just at NCAR)
  o Distribute to major users in large chunks for post-simulation analysis
• Networking: Robust access to large quantities of data
• Middleware: Reliable data/file transfer
  o Across system / network failures

Climate (5+ yr)
• Vision: Integrated climate simulation that includes all high-impact factors
• Characteristics:
  o 5-10 PBy/yr (at NCAR)
  o Add many diverse simulation elements/components, including from other disciplines - this must be done with distributed, multidisciplinary simulation
  o Virtualized data to reduce storage load
• Networking:
  o Robust networks supporting distributed simulation - adequate bandwidth and latency for remote analysis and visualization of massive datasets
  o Quality of service guarantees for distributed simulations
• Middleware: Virtual data catalogues and work planners for reconstituting the data on demand

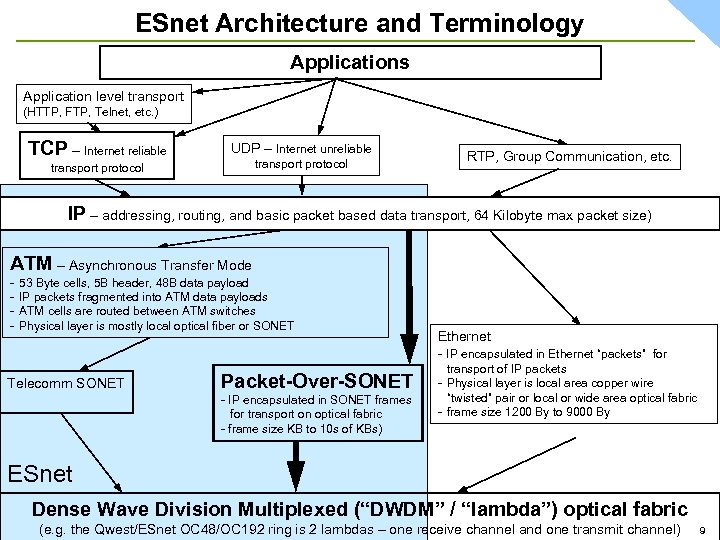

ESnet Architecture and Terminology
• Applications
• Application level transport (HTTP, FTP, Telnet, etc.)
• TCP - Internet reliable transport protocol
• UDP - Internet unreliable transport protocol
• RTP, Group Communication, etc.
• IP - addressing, routing, and basic packet based data transport (64 Kilobyte max packet size)
• ATM - Asynchronous Transfer Mode - 53 Byte cells, 5 B header, 48 B data payload
  o IP packets fragmented into ATM data payloads
  o ATM cells are routed between ATM switches
  o Physical layer is mostly local optical fiber or SONET
• Packet-Over-SONET - IP encapsulated in SONET frames for transport on optical fabric (frame size KB to 10s of KBs)
• Ethernet - IP encapsulated in Ethernet “packets” for transport of IP packets
  o Physical layer is local area copper wire “twisted” pair or local or wide area optical fabric
  o Frame size 1200 By to 9000 By
• Telecomm SONET
• ESnet Dense Wave Division Multiplexed (“DWDM” / “lambda”) optical fabric (e.g. the Qwest/ESnet OC48/OC192 ring is 2 lambdas: one receive channel and one transmit channel)
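As a rough illustration of the ATM cell figures above, the following sketch counts how many 53-byte cells are needed to carry one IP packet when each cell holds 48 bytes of payload. It is a simplification and not ESnet tooling: the function name `atm_cells_for_packet` is hypothetical, and adaptation-layer trailers and padding rules are deliberately ignored.

```python
import math

CELL_BYTES = 53      # total ATM cell size, as stated on the slide
HEADER_BYTES = 5     # per-cell header
PAYLOAD_BYTES = 48   # per-cell data payload


def atm_cells_for_packet(packet_bytes: int) -> tuple[int, int]:
    """Return (cell count, total bytes on the wire) for one IP packet.

    Assumption: only the 48-byte payload slots are counted; any
    adaptation-layer trailer or padding is left out of this sketch.
    """
    if packet_bytes <= 0:
        raise ValueError("packet_bytes must be positive")
    cells = math.ceil(packet_bytes / PAYLOAD_BYTES)
    return cells, cells * CELL_BYTES


if __name__ == "__main__":
    cells, wire_bytes = atm_cells_for_packet(1500)
    overhead = 1 - 1500 / wire_bytes
    print(f"{cells} cells, {wire_bytes} bytes on the wire, {overhead:.1%} overhead")
```

Under these assumptions the fixed 5-byte header alone costs 5/53, a little over 9% of every cell, before any partially filled final cell is counted.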

Terminology
• Network bandwidth is typically given in bits/second
  o E.g. 1 Gigabit/sec (“G/s”) is 1000 Megabits/sec
• Data transport rates are typically given in Bytes/month
  o E.g. 1 Terabyte/month is 1,000,000 Megabytes/month
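Because bandwidth is quoted in bits/second and transport volume in Bytes/month, it can help to convert between the two. The sketch below assumes decimal prefixes, a 30-day month, and a link that is fully used for the whole month; the function names are illustrative only.

```python
SECONDS_PER_DAY = 86_400
BITS_PER_BYTE = 8


def bytes_per_month(bits_per_second: float, days: int = 30) -> float:
    """Bytes moved in one month by a link running flat out at the given rate.

    Assumptions: a 30-day month by default and 100% utilization.
    """
    return bits_per_second / BITS_PER_BYTE * SECONDS_PER_DAY * days


def terabytes_per_month(bits_per_second: float, days: int = 30) -> float:
    """Same quantity in decimal Terabytes (1 TB = 10**12 bytes)."""
    return bytes_per_month(bits_per_second, days) / 1e12


if __name__ == "__main__":
    # A fully loaded 1 Gigabit/sec link over a 30-day month.
    print(terabytes_per_month(1e9))
```

Under these assumptions a saturated 1 Gigabit/sec link corresponds to about 324 Terabytes/month.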

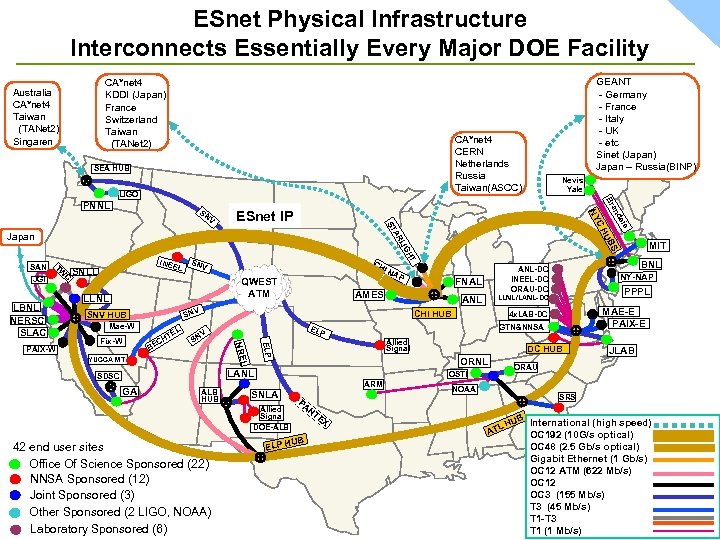

ESnet Physical Infrastructure Interconnects Essentially Every Major DOE Facility
[Network map of ESnet hubs, end user sites, and international connections]
• 42 end user sites: Office of Science sponsored (22), NNSA sponsored (12), joint sponsored (3), other sponsored (2: LIGO, NOAA), laboratory sponsored (6)
• International connections: CA*net 4, KDDI (Japan), France, Switzerland, Taiwan (TANet2), Australia, Singaren, GEANT (Germany, France, Italy, UK, etc.), Sinet (Japan), Japan - Russia (BINP), CERN, Netherlands, Russia, Taiwan (ASCC)
• Link types: International (high speed), OC192 (10 Gb/s optical), OC48 (2.5 Gb/s optical), Gigabit Ethernet (1 Gb/s), OC12 ATM (622 Mb/s), OC12, OC3 (155 Mb/s), T3 (45 Mb/s), T1-T3, T1 (1 Mb/s)

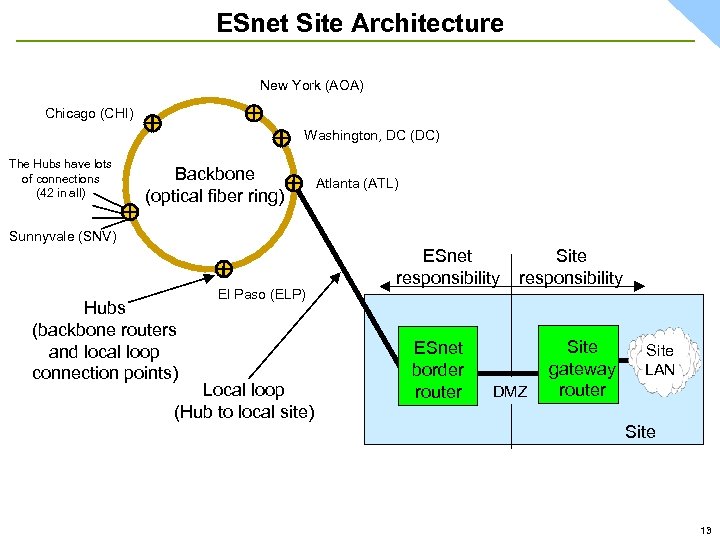

ESnet Site Architecture
• Backbone (optical fiber ring)
• Hubs (backbone routers and local loop connection points): New York (AOA), Chicago (CHI), Washington, DC (DC), Atlanta (ATL), Sunnyvale (SNV), El Paso (ELP)
  o The Hubs have lots of connections (42 in all)
• Local loop (Hub to local site)
• From hub to site: ESnet border router, DMZ, site gateway router, site LAN
• The diagram marks which part is ESnet responsibility and which is site responsibility

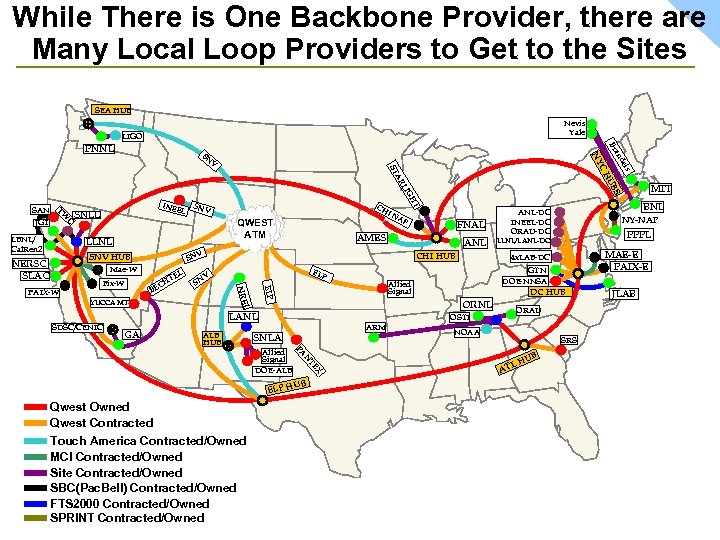

While There is One Backbone Provider, there are Many Local Loop Providers to Get to the Sites
[Network map of hubs and sites, with each local loop marked by provider]
• Qwest Owned
• Qwest Contracted
• Touch America Contracted/Owned
• MCI Contracted/Owned
• Site Contracted/Owned
• SBC (PacBell) Contracted/Owned
• FTS 2000 Contracted/Owned
• SPRINT Contracted/Owned

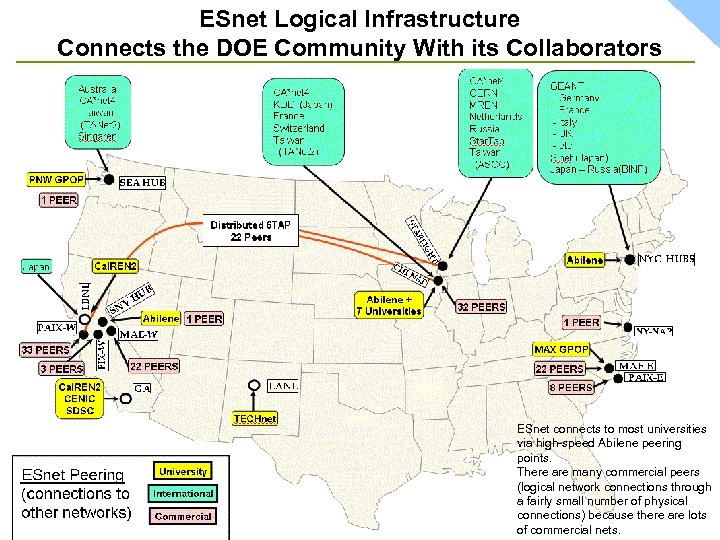

ESnet Logical Infrastructure Connects the DOE Community With its Collaborators
• ESnet connects to most universities via high-speed Abilene peering points.
• There are many commercial peers (logical network connections through a fairly small number of physical connections) because there are lots of commercial nets.

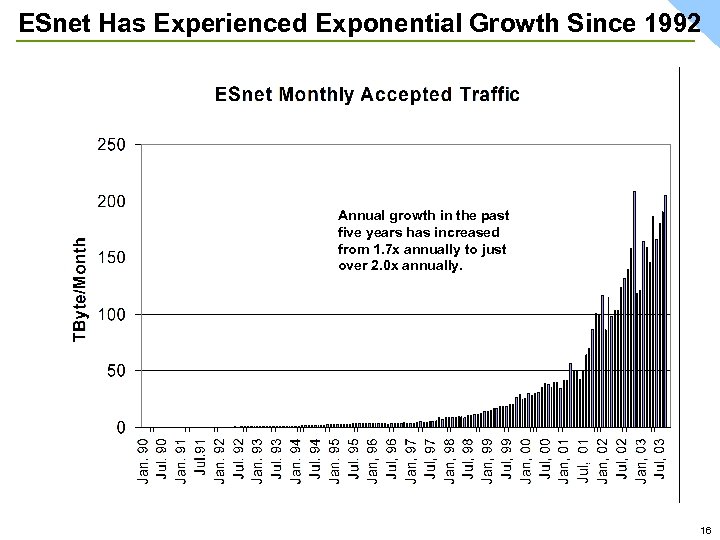

ESnet Has Experienced Exponential Growth Since 1992
Annual growth in the past five years has increased from 1.7x annually to just over 2.0x annually.
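To get a feel for what these annual factors imply, the sketch below compounds a constant yearly growth factor and derives the corresponding doubling time. It assumes the factor stays constant over the period, which the slide does not claim; the function names are illustrative.

```python
import math


def growth_over(years: float, annual_factor: float) -> float:
    """Total traffic multiplier after `years` at a constant annual factor."""
    return annual_factor ** years


def doubling_time_years(annual_factor: float) -> float:
    """Years needed for traffic to double at a constant annual factor."""
    if annual_factor <= 1:
        raise ValueError("annual_factor must be greater than 1")
    return math.log(2) / math.log(annual_factor)


if __name__ == "__main__":
    for factor in (1.7, 2.0):
        print(factor, growth_over(5, factor), doubling_time_years(factor))
```

At 2.0x per year, traffic grows 32-fold in five years; at 1.7x per year it grows roughly 14-fold over the same period.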

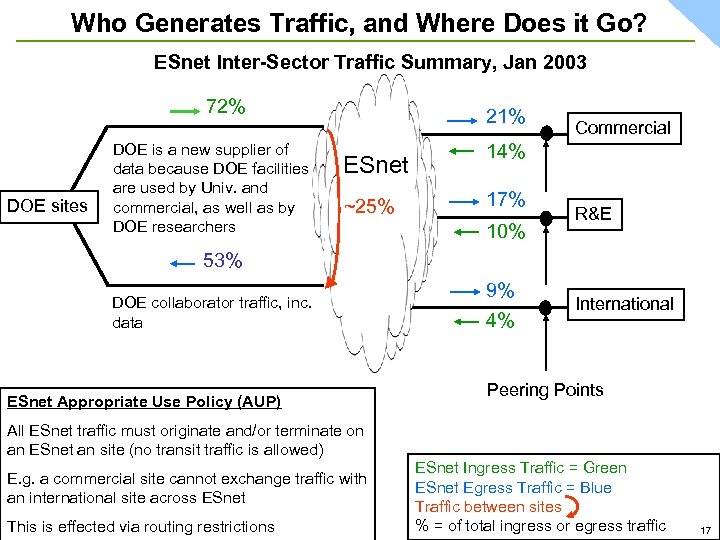

Who Generates Traffic, and Where Does it Go?
[Figure: ESnet Inter-Sector Traffic Summary, Jan 2003. Traffic between ESnet, DOE sites, commercial networks, R&E networks, international networks, and peering points. ESnet ingress traffic is green and egress traffic is blue; each % is of total ingress or egress traffic. Percentages shown on the diagram: 72%, 21%, ~25%, 14%, 17%, 10%, 53%, 9%, 4%.]
• DOE is a net supplier of data because DOE facilities are used by university and commercial researchers, as well as by DOE researchers
• DOE collaborator traffic, inc. data
• ESnet Appropriate Use Policy (AUP)
  o All ESnet traffic must originate and/or terminate on an ESnet site (no transit traffic is allowed)
  o E.g. a commercial site cannot exchange traffic with an international site across ESnet
  o This is effected via routing restrictions
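The no-transit rule of the AUP can be stated as a simple predicate: a flow is acceptable only if at least one endpoint is an ESnet site. The sketch below models that rule only; it is not how the restriction is enforced (the slide says enforcement is done through routing restrictions). The site set is a small illustrative subset, and the non-ESnet endpoint names are made up.

```python
# Illustrative subset of ESnet sites; the real list is much longer.
ESNET_SITES = frozenset({"LBNL", "FNAL", "ORNL"})


def is_permitted(src: str, dst: str, esnet_sites: frozenset = ESNET_SITES) -> bool:
    """True if the flow originates and/or terminates on an ESnet site.

    Flows where neither endpoint is an ESnet site would be transit
    traffic, which the AUP does not allow.
    """
    return src in esnet_sites or dst in esnet_sites


if __name__ == "__main__":
    # Hypothetical endpoint names, used only for illustration.
    print(is_permitted("LBNL", "commercial-net"))
    print(is_permitted("commercial-net", "international-net"))
```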

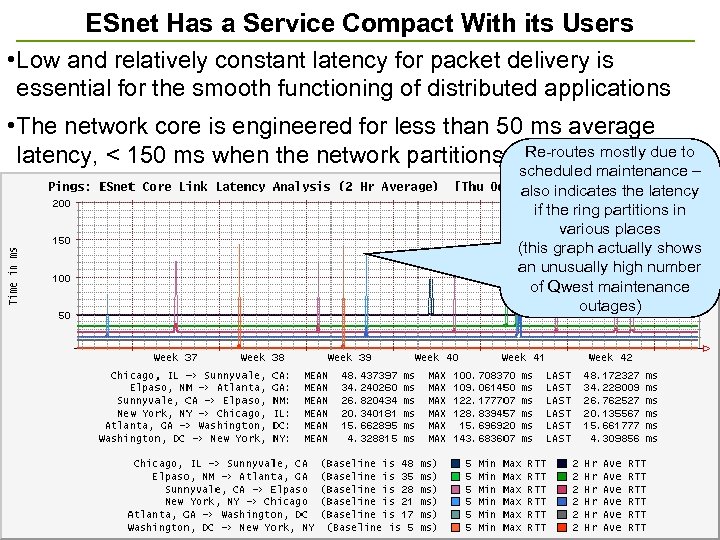

ESnet Has a Service Compact With its Users
• Low and relatively constant latency for packet delivery is essential for the smooth functioning of distributed applications
• The network core is engineered for less than 50 ms average latency, and less than 150 ms when the network partitions
[Graph: re-routes are mostly due to scheduled maintenance; the graph also indicates the latency if the ring partitions in various places (it actually shows an unusually high number of Qwest maintenance outages)]

ESnet is Not Just One Network
• Part of the complexity of ESnet is that it is actually four networks
  1) The IP Internet (IPv4) network that most people see (as described above)
  2) SecureNet, which serves the NNSA / Defense Programs Labs (encrypted, encapsulated ATM)
  3) IPv6 network backbone (next generation Internet protocol network)
  4) IP Multicast
• Each of these uses a different routing and/or addressing mechanism

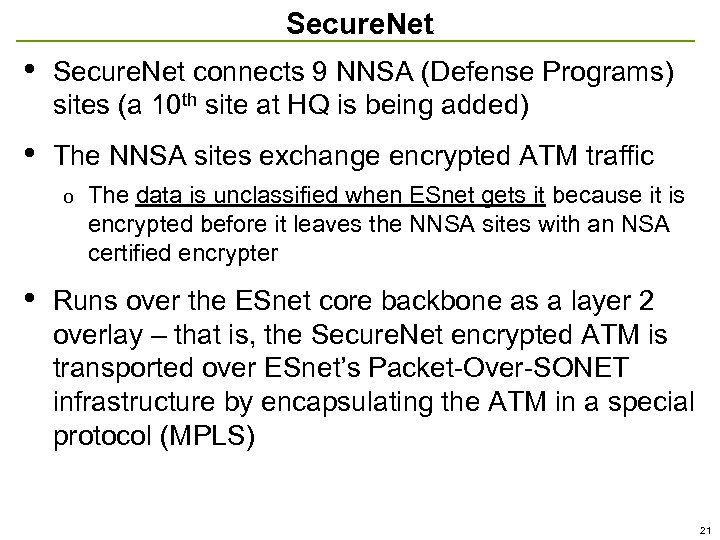

SecureNet
• SecureNet connects 9 NNSA (Defense Programs) sites (a 10th site at HQ is being added)
• The NNSA sites exchange encrypted ATM traffic
  o The data is unclassified when ESnet gets it because it is encrypted before it leaves the NNSA sites with an NSA certified encrypter
• Runs over the ESnet core backbone as a layer 2 overlay: the SecureNet encrypted ATM is transported over ESnet’s Packet-Over-SONET infrastructure by encapsulating the ATM in a special protocol (MPLS)

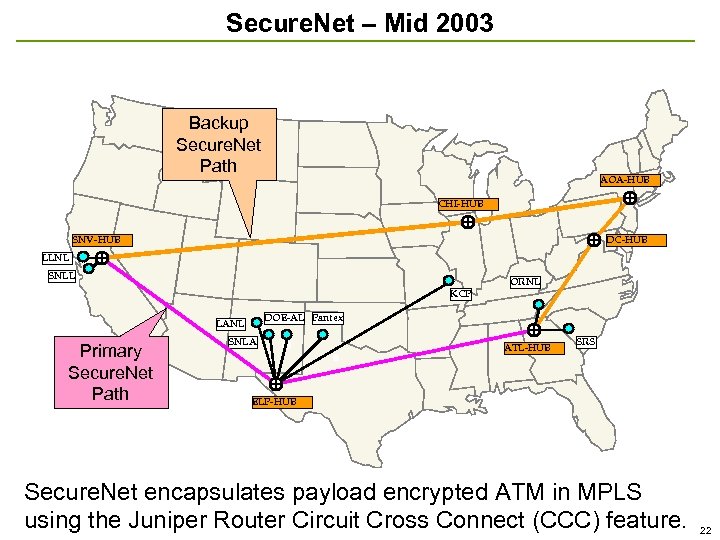

SecureNet: Mid 2003
[Diagram: primary and backup SecureNet paths]
• Hubs: AOA-HUB, CHI-HUB, SNV-HUB, DC-HUB, ATL-HUB, ELP-HUB
• Sites: LLNL, SNLL, ORNL, KCP, DOE-AL, Pantex, LANL, SNLA, SRS
• SecureNet encapsulates payload encrypted ATM in MPLS using the Juniper Router Circuit Cross Connect (CCC) feature.

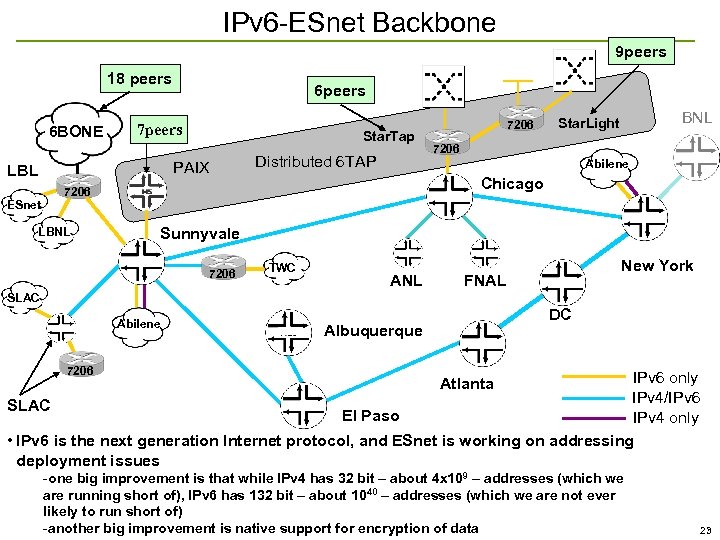

IPv6-ESnet Backbone
[Diagram: IPv6 backbone topology. Labels include 6BONE, StarTap, StarLight, PAIX, Abilene, ESnet Distributed 6TAP, 7206 routers, peer groups of 6, 7, 9, and 18 peers, and the locations LBNL, BNL, ANL, FNAL, SLAC, TWC, Chicago, Sunnyvale, New York, DC, Albuquerque, El Paso, and Atlanta. Legend: IPv6 only, IPv4/IPv6, IPv4 only.]
• IPv6 is the next generation Internet protocol, and ESnet is working on addressing deployment issues
  o One big improvement is that while IPv4 has 32 bit addresses (about 4 x 10^9, which we are running short of), IPv6 has 128 bit addresses (about 3.4 x 10^38, which we are not ever likely to run short of)
  o Another big improvement is native support for encryption of data
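The address-space sizes follow directly from the address widths (32 bits for IPv4, 128 bits for IPv6). The short sketch below checks them with Python's standard `ipaddress` module; the helper name `address_space` is illustrative.

```python
import ipaddress


def address_space(all_addresses_prefix: str) -> int:
    """Number of addresses covered by a prefix such as '0.0.0.0/0' or '::/0'."""
    return ipaddress.ip_network(all_addresses_prefix).num_addresses


if __name__ == "__main__":
    ipv4_total = address_space("0.0.0.0/0")   # every IPv4 address
    ipv6_total = address_space("::/0")        # every IPv6 address
    print(f"IPv4: {ipv4_total:.2e} addresses")
    print(f"IPv6: {ipv6_total:.2e} addresses")
    print(f"IPv6 is larger by a factor of 2**{(ipv6_total // ipv4_total).bit_length() - 1}")
```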

Services for Science Collaboration
• Seamless voice, video, and data teleconferencing is important for geographically dispersed collaborators
  o ESnet currently provides voice conferencing, videoconferencing (H.320/ISDN scheduled, H.323/IP ad-hoc), and data collaboration services to more than a thousand DOE researchers worldwide
  o Heavily used services, averaging around
    - 4600 port hours per month for H.320 videoconferences
    - 2000 port hours per month for audio conferences
    - 1100 port hours per month for H.323
    - approximately 200 port hours per month for data conferencing

Voice, Video, and Data Collaboration
• Web-based registration and scheduling for all of these services
  o authorizes users efficiently
  o lets them schedule meetings
• Such an automated approach is essential for a scalable service: ESnet staff could never handle all of the reservations manually

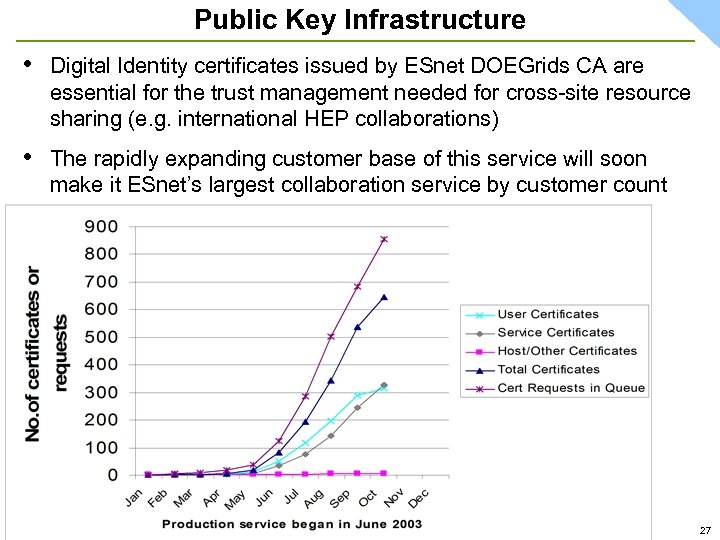

Public Key Infrastructure
• Digital identity certificates issued by the ESnet DOEGrids CA are essential for the trust management needed for cross-site resource sharing (e.g. international HEP collaborations)
• The rapidly expanding customer base of this service will soon make it ESnet’s largest collaboration service by customer count

Services for Science Collaboration
• Public Key Infrastructure to support cross-site, cross-organization, and international trust relationships that permit sharing computing and data resources
  o Digital identity certificates for people, hosts and services: an essential core service for Grid middleware
    - provides formal and verified trust management, an essential service for widely distributed heterogeneous collaboration, e.g. in the International High Energy Physics community
  o Policy Management Authority: negotiates and manages the formal trust instrument (Certificate Policy - CP)
  o Certificate Authority (CA) validates users against the CP and issues digital identity certs.
  o Certificate Revocation Lists are provided
  o This service was the basis of the first routine sharing of HEP computing resources between US and Europe
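How a relying site might use these pieces together can be sketched as a three-part check: the certificate comes from a trusted CA, it is within its validity period, and it does not appear on that CA's revocation list. This is a toy model under stated assumptions, not the DOEGrids implementation: the `Certificate` class and `is_acceptable` function are hypothetical, and cryptographic signature verification is omitted entirely.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Certificate:
    """Simplified identity certificate (no keys or signatures in this sketch)."""
    subject: str
    issuer: str
    serial: int
    not_before: datetime
    not_after: datetime


def is_acceptable(cert, trusted_issuers, revoked, now):
    """Decide whether a relying site should accept `cert`.

    trusted_issuers: set of CA names the site trusts.
    revoked: dict mapping CA name -> set of revoked serial numbers (a toy CRL).
    Assumption: signature checking is out of scope for this sketch.
    """
    if cert.issuer not in trusted_issuers:
        return False  # issued by a CA outside the trust agreement
    if not (cert.not_before <= now <= cert.not_after):
        return False  # not yet valid, or expired
    return cert.serial not in revoked.get(cert.issuer, set())


if __name__ == "__main__":
    cert = Certificate("host.example.org", "Example CA", 42,
                       datetime(2003, 1, 1), datetime(2004, 1, 1))
    print(is_acceptable(cert, {"Example CA"}, {}, datetime(2003, 6, 1)))
```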

ESnet is Different from a Commercial ISP or University Network
• A fairly small number of very high bandwidth sites (commercial ISPs have thousands of low b/w sites)
• Runs SecureNet as an overlay network
• Provides direct support of DOE science through various collaboration services
• ESnet “owns” all network trouble tickets (even from end users) until they are resolved
  o one stop shopping for user network problems

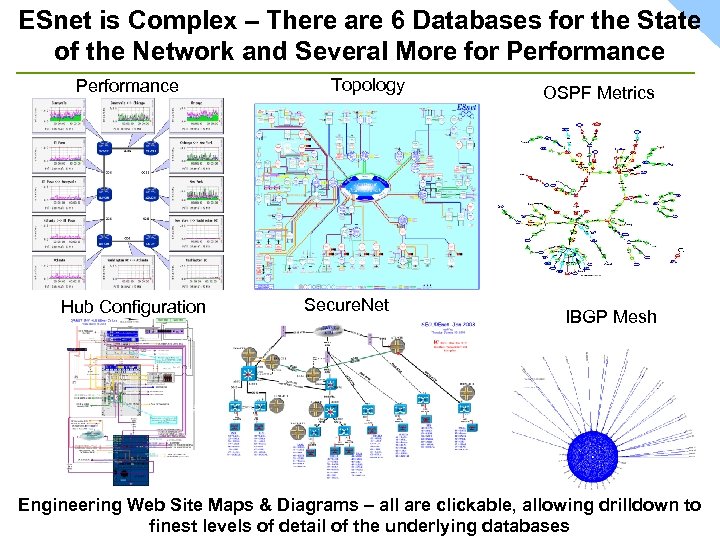

ESnet is Complex: There are 6 Databases for the State of the Network and Several More for Performance
[Screenshot labels: Hub Configuration, Topology, SecureNet, OSPF Metrics, IBGP Mesh]
• Engineering Web Site Maps & Diagrams: all are clickable, allowing drilldown to the finest levels of detail of the underlying databases

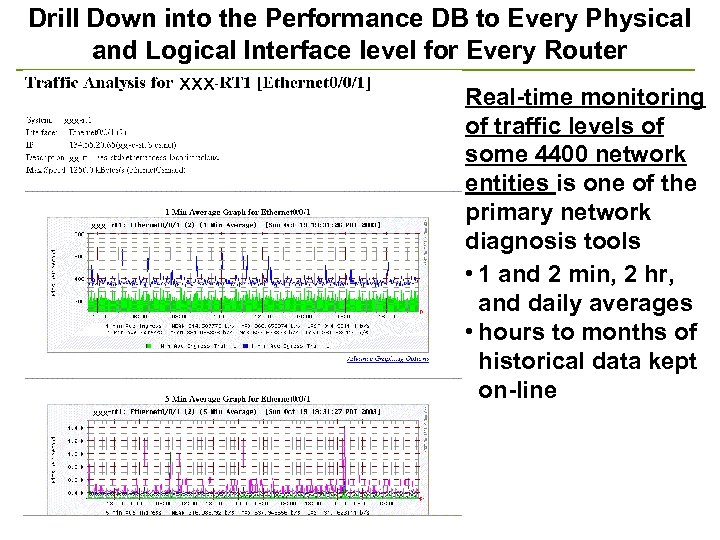

Drill Down into the Performance DB to Every Physical and Logical Interface Level for Every Router

Real-time monitoring of traffic levels of some 4400 network entities is one of the primary network diagnosis tools
• 1 and 2 min, 2 hr, and daily averages
• hours to months of historical data kept on-line
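The multi-resolution averages listed on this slide can be illustrated with a small sketch. It assumes traffic is sampled at a fixed interval and that coarser averages are computed over non-overlapping windows; the function name, the 60-second sampling interval, and the sample values are hypothetical and are not taken from ESnet's tools.

```python
def window_averages(samples, sample_interval_s, window_s):
    """Average fixed-interval samples into non-overlapping windows.

    An incomplete trailing window is dropped (an assumption of this sketch).
    """
    if window_s % sample_interval_s != 0:
        raise ValueError("window must be a multiple of the sample interval")
    n = window_s // sample_interval_s
    return [sum(samples[i:i + n]) / n
            for i in range(0, len(samples) - n + 1, n)]


# Hypothetical 1-minute traffic samples (Mb/s) for one interface.
one_minute = [100.0, 300.0, 200.0, 400.0]

# 2-minute averages derived from the 1-minute samples.
two_minute = window_averages(one_minute, sample_interval_s=60, window_s=120)
print(two_minute)  # [200.0, 300.0]
```

The same function would produce 2-hour or daily averages by passing `window_s=7200` or `window_s=86400`.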

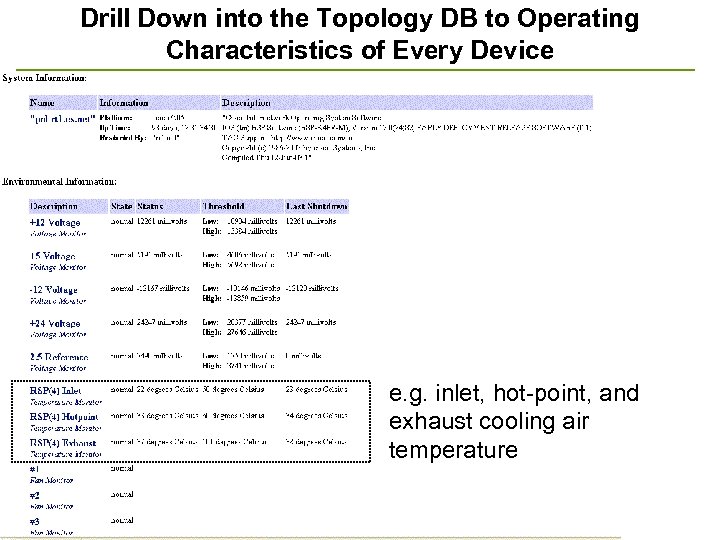

Drill Down into the Topology DB to Operating Characteristics of Every Device

e.g. inlet, hot-point, and exhaust cooling air temperature

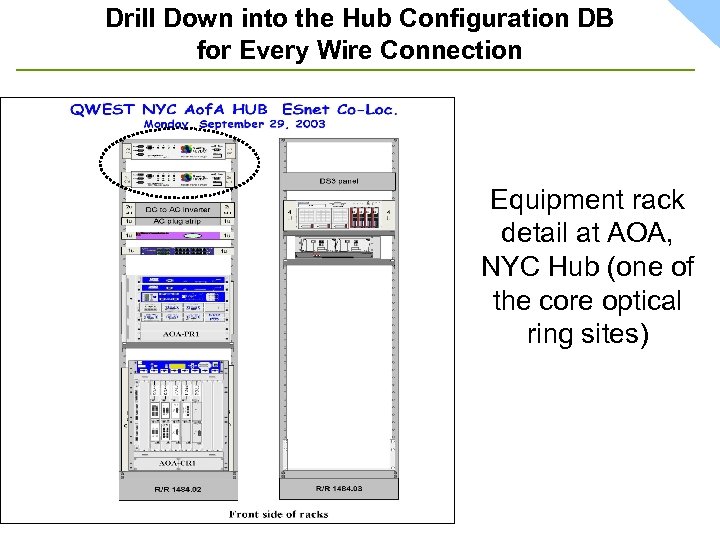

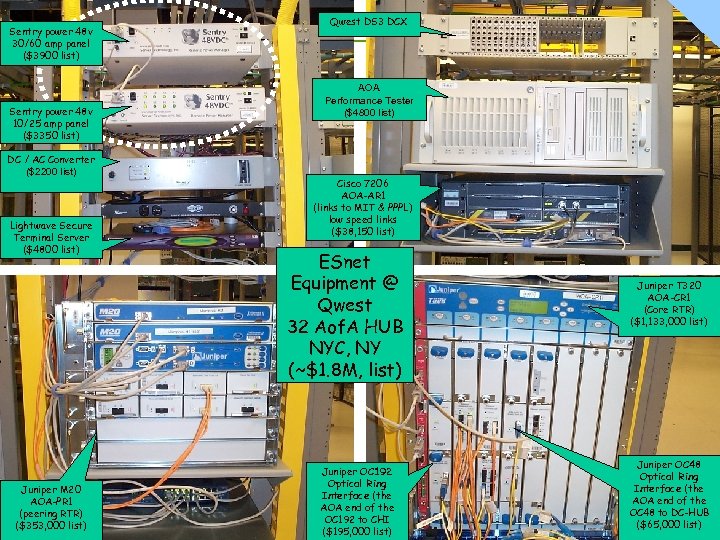

Drill Down into the Hub Configuration DB for Every Wire Connection

Equipment rack detail at AOA, NYC Hub (one of the core optical ring sites)

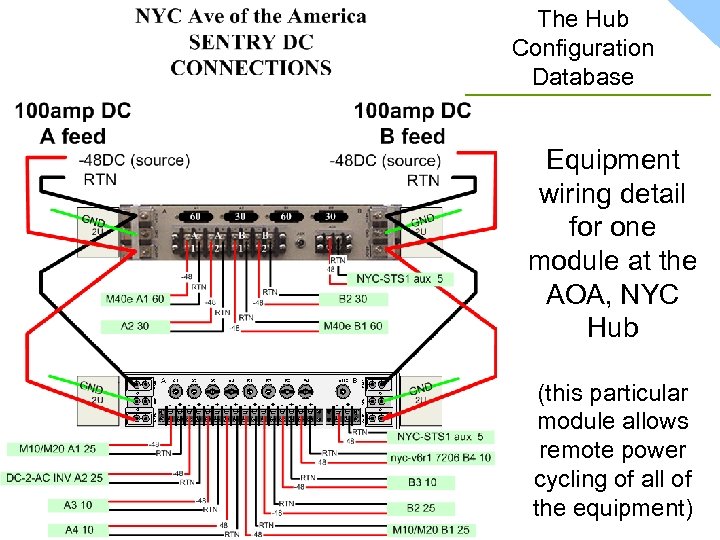

The Hub Configuration Database

Equipment wiring detail for one module at the AOA, NYC Hub (this particular module allows remote power cycling of all of the equipment)

ESnet Equipment @ Qwest 32 AofA HUB NYC, NY (~$1.8M, list)

• Sentry power 48 V 30/60 amp panel ($3900 list)
• Sentry power 48 V 10/25 amp panel ($3350 list)
• DC / AC Converter ($2200 list)
• Lightwave Secure Terminal Server ($4800 list)
• Juniper M20 AOA-PR1 (peering RTR) ($353,000 list)
• Qwest DS3 DCX
• AOA Performance Tester ($4800 list)
• Cisco 7206 AOA-AR1 (links to MIT & PPPL), low speed links ($38,150 list)
• Juniper OC192 Optical Ring Interface (the AOA end of the OC192 to CHI) ($195,000 list)
• Juniper T320 AOA-CR1 (Core RTR) ($1,133,000 list)
• Juniper OC48 Optical Ring Interface (the AOA end of the OC48 to DC-HUB) ($65,000 list)
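As a quick arithmetic check, the individual list prices on this slide can be summed and compared with the quoted hub total of roughly $1.8M. The dictionary keys are shortened labels chosen for this sketch; only the prices come from the slide.

```python
# List prices (USD) as given on the slide; key names are shortened labels.
AOA_LIST_PRICES_USD = {
    "Sentry 48 V 30/60 amp panel": 3_900,
    "Sentry 48 V 10/25 amp panel": 3_350,
    "DC/AC converter": 2_200,
    "Lightwave secure terminal server": 4_800,
    "Juniper M20 AOA-PR1": 353_000,
    "AOA performance tester": 4_800,
    "Cisco 7206 AOA-AR1": 38_150,
    "Juniper OC192 ring interface": 195_000,
    "Juniper T320 AOA-CR1": 1_133_000,
    "Juniper OC48 ring interface": 65_000,
}

total = sum(AOA_LIST_PRICES_USD.values())
print(f"${total:,}")  # $1,803,200
```

The sum rounds to $1.8M, consistent with the total shown for the hub.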

Operating Science Mission Critical Infrastructure

• ESnet is a visible and critical piece of DOE science infrastructure
  o if ESnet fails, tens of thousands of DOE and university users know it within minutes if not seconds
• Requires high reliability and high operational security in the systems that are integral to the operation and management of the network
  o Secure and redundant mail and Web systems are central to the operation and security of ESnet
    - trouble tickets are by email
    - engineering communication by email
    - engineering database interface is via Web
  o Secure network access to Hub equipment
  o Backup secure telephony access to Hub equipment
  o 24x7 help desk (joint with NERSC)
  o 24x7 on-call network engineer

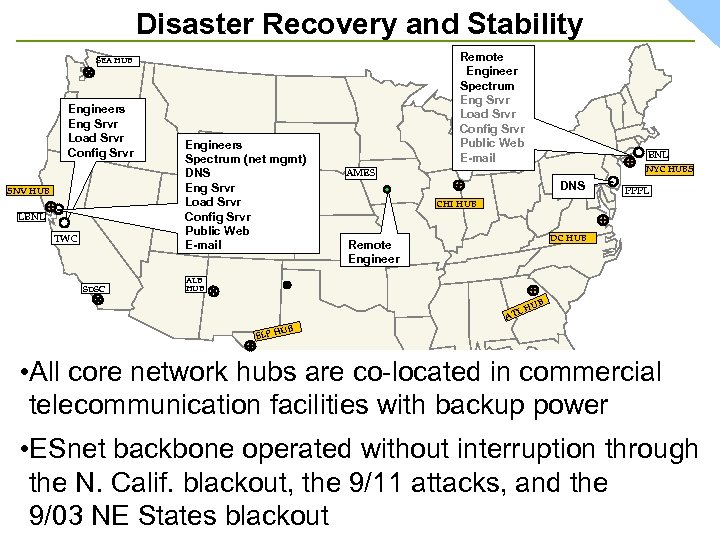

Disaster Recovery and Stability

• The network operational services must be kept available even if, e.g., the West coast is disabled by a massive earthquake, etc.
  o Network engineers in four locations across the country
  o Full and partial engineering databases and network operational service replicas in three locations
  o Telephone modem backup access to all hub equipment

Disaster Recovery and Stability

[Diagram: map of the hubs (SEA, SNV, CHI, NYC, DC, ALB, ATL, ELP) and sites (LBNL, TWC, SDSC, BNL, AMES, PPPL), showing where engineers and remote engineers are located and where the Spectrum (net mgmt), DNS, Eng Srvr, Load Srvr, Config Srvr, Public Web, and E-mail services are replicated]

• All core network hubs are co-located in commercial telecommunication facilities with backup power
• ESnet backbone operated without interruption through the N. Calif. blackout, the 9/11 attacks, and the 9/03 NE States blackout

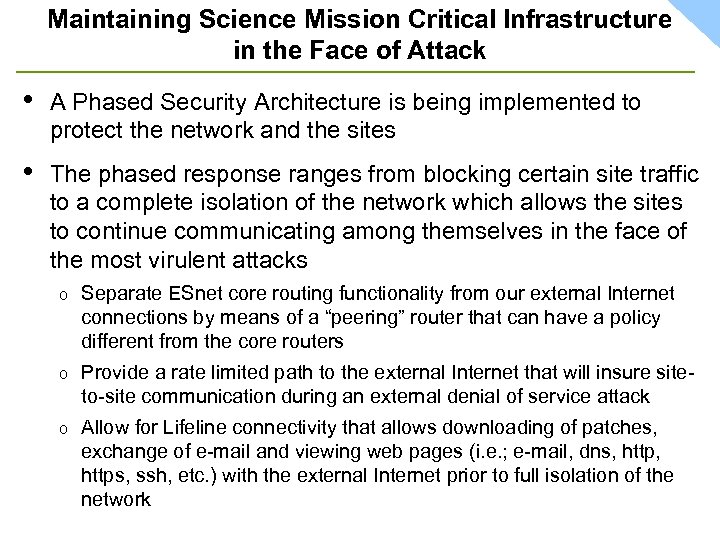

Maintaining Science Mission Critical Infrastructure in the Face of Attack

• A Phased Security Architecture is being implemented to protect the network and the sites
• The phased response ranges from blocking certain site traffic to a complete isolation of the network, which allows the sites to continue communicating among themselves in the face of the most virulent attacks
  o Separate ESnet core routing functionality from our external Internet connections by means of a “peering” router that can have a policy different from the core routers
  o Provide a rate-limited path to the external Internet that will ensure site-to-site communication during an external denial of service attack
  o Allow for Lifeline connectivity that allows downloading of patches, exchange of e-mail, and viewing web pages (i.e., e-mail, DNS, HTTPS, SSH, etc.) with the external Internet prior to full isolation of the network
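The phased response described above can be sketched as a small policy function. This is only an illustration under stated assumptions: the phase names, their ordering, the service labels, and the `decide` function are hypothetical and do not describe ESnet's actual router policy. The one property taken from the slide is that site-to-site traffic keeps flowing in every phase, while external traffic is progressively restricted.

```python
from enum import IntEnum


class Phase(IntEnum):
    """Hypothetical escalation levels, ordered from least to most restrictive."""
    NORMAL = 0
    BLOCK_SITE_TRAFFIC = 1  # filter traffic of specific (e.g. infected) sites
    RATE_LIMITED = 2        # external Internet path is rate limited
    LIFELINE = 3            # only lifeline services reach the external Internet
    ISOLATED = 4            # no external traffic; sites still reach each other


# Lifeline services named on the slide (e-mail, DNS, HTTPS, SSH).
LIFELINE_SERVICES = {"smtp", "dns", "https", "ssh"}


def decide(phase, external, service, site=None, blocked_sites=()):
    """Return 'permit', 'rate-limit', or 'deny' for one flow."""
    if phase >= Phase.BLOCK_SITE_TRAFFIC and site in blocked_sites:
        return "deny"
    if not external:
        return "permit"  # site-to-site traffic is kept up in every phase
    if phase == Phase.ISOLATED:
        return "deny"
    if phase == Phase.LIFELINE:
        return "permit" if service in LIFELINE_SERVICES else "deny"
    if phase == Phase.RATE_LIMITED:
        return "rate-limit"
    return "permit"


print(decide(Phase.LIFELINE, external=True, service="ssh"))   # permit
print(decide(Phase.ISOLATED, external=False, service="ftp"))  # permit
print(decide(Phase.ISOLATED, external=True, service="ssh"))   # deny
```

Keeping the external policy in one place mirrors the slide's point that a separate peering router can carry a policy different from the core routers.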

ESnet WAN Security and Cybersecurity

• ESnet security for its own network equipment is provided by
  o secure access to devices
  o patching router operating systems
  o confidentiality of configuration data, etc.

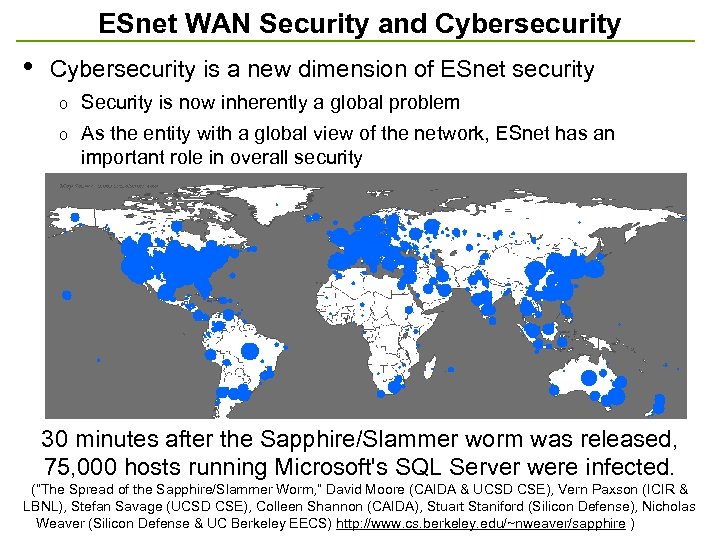

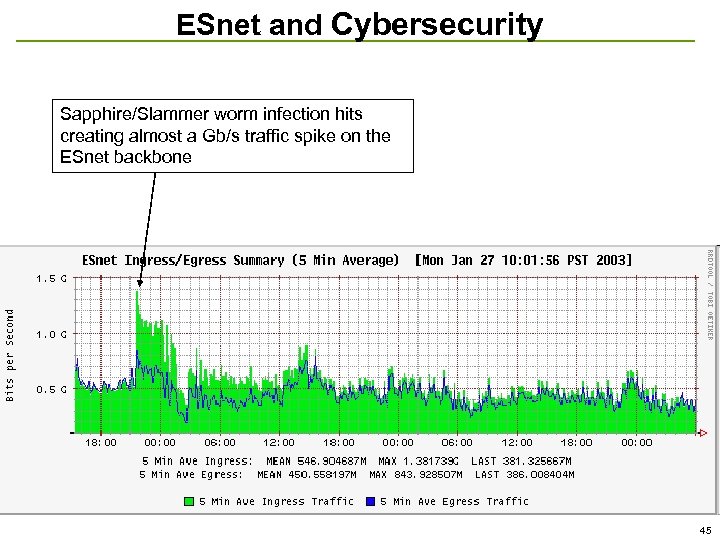

ESnet WAN Security and Cybersecurity

• Cybersecurity is a new dimension of ESnet security
  o Security is now inherently a global problem
  o As the entity with a global view of the network, ESnet has an important role in overall security

30 minutes after the Sapphire/Slammer worm was released, 75,000 hosts running Microsoft's SQL Server were infected. (“The Spread of the Sapphire/Slammer Worm,” David Moore (CAIDA & UCSD CSE), Vern Paxson (ICIR & LBNL), Stefan Savage (UCSD CSE), Colleen Shannon (CAIDA), Stuart Staniford (Silicon Defense), Nicholas Weaver (Silicon Defense & UC Berkeley EECS), http://www.cs.berkeley.edu/~nweaver/sapphire)

ESnet and Cybersecurity

Sapphire/Slammer worm infection hits, creating almost a Gb/s traffic spike on the ESnet backbone

ESnet and Cybersecurity

• ESnet protects itself and other sites – infected ESnet sites can be blocked, partially or completely
• ESnet can also come to the aid of an ESnet site with temporary filters on incoming traffic, etc., if necessary
  o This is one of the very few areas where ESnet might participate directly in site security
  o Request must come from Site Coordinator
  o Not a substitute for good site security

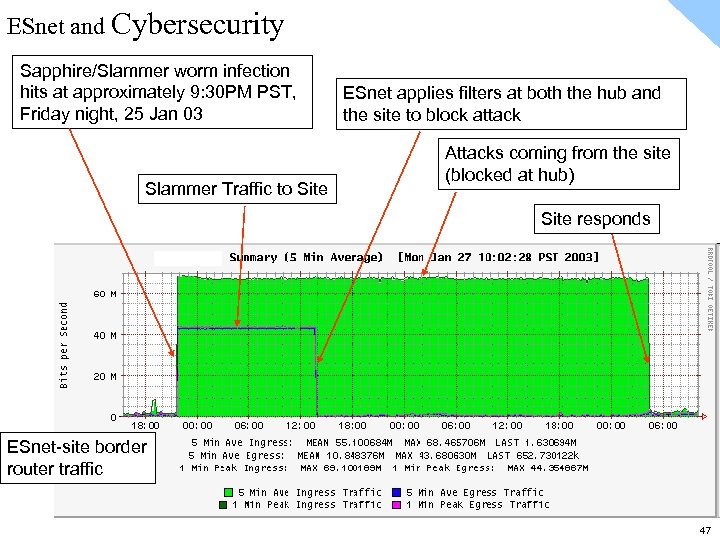

ESnet and Cybersecurity

Figure annotations (ESnet-site border router traffic):
• Sapphire/Slammer worm infection hits at approximately 9:30 PM PST, Friday night, 25 Jan 03
• Slammer traffic to site
• ESnet applies filters at both the hub and the site to block attack
• Attacks coming from the site (blocked at hub)
• Site responds
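A temporary filter of the kind mentioned in the figure can be sketched as a simple match rule. The slides do not say what the actual filters looked like, so this is an assumption-laden illustration: it relies only on the well-known fact that Slammer spread over UDP port 1434 (the SQL Server Resolution Service), and the `Packet` type, site names, and `is_blocked` function are hypothetical.

```python
from dataclasses import dataclass

# Slammer propagated via UDP port 1434 (SQL Server Resolution Service).
SLAMMER_UDP_PORT = 1434


@dataclass(frozen=True)
class Packet:
    protocol: str   # "udp" or "tcp"
    dst_port: int
    src_site: str   # hypothetical site label, or "external"
    dst_site: str


def is_blocked(packet, filtered_sites):
    """Drop UDP/1434 traffic to or from any site with a temporary filter."""
    if packet.protocol != "udp" or packet.dst_port != SLAMMER_UDP_PORT:
        return False
    return (packet.src_site in filtered_sites
            or packet.dst_site in filtered_sites)


filtered = {"site-a"}  # hypothetical site under attack
inbound = Packet("udp", 1434, "external", "site-a")
outbound = Packet("udp", 1434, "site-a", "external")
web = Packet("tcp", 443, "external", "site-a")

print(is_blocked(inbound, filtered))   # True
print(is_blocked(outbound, filtered))  # True
print(is_blocked(web, filtered))       # False
```

Matching on both source and destination site reflects the two directions shown in the figure: attack traffic arriving at the site and attacks leaving the site that are blocked at the hub.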

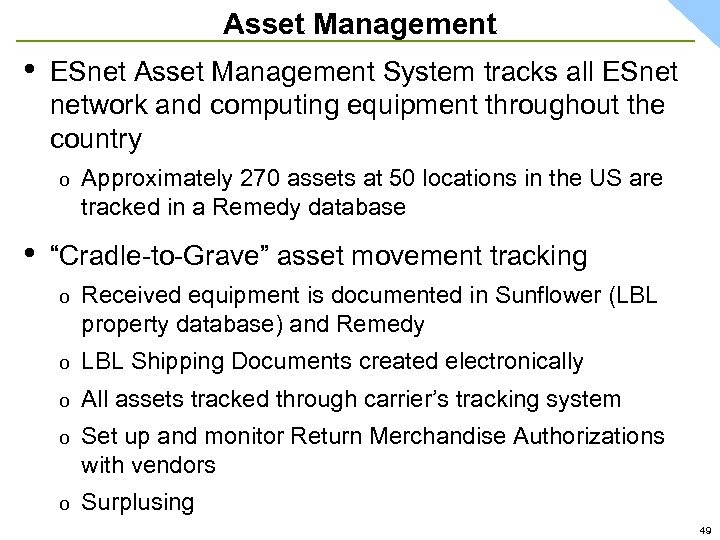

Asset Management

• ESnet Asset Management System tracks all ESnet network and computing equipment throughout the country
  o Approximately 270 assets at 50 locations in the US are tracked in a Remedy database
• “Cradle-to-Grave” asset movement tracking
  o Received equipment is documented in Sunflower (LBL property database) and Remedy
  o LBL Shipping Documents created electronically
  o All assets tracked through carrier’s tracking system
  o Set up and monitor Return Merchandise Authorizations with vendors
  o Surplusing

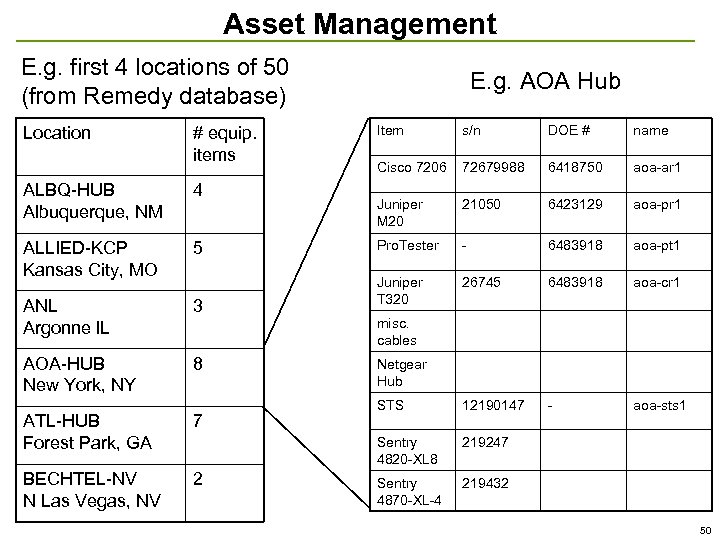

Asset Management

E.g. the first few locations of 50 (from Remedy database)

| Location | City | # equip. items |
|---|---|---|
| ALBQ-HUB | Albuquerque, NM | 4 |
| ALLIED-KCP | Kansas City, MO | 5 |
| ANL | Argonne, IL | 3 |
| AOA-HUB | New York, NY | 8 |
| ATL-HUB | Forest Park, GA | 7 |
| BECHTEL-NV | N Las Vegas, NV | 2 |

E.g. AOA Hub

| Item | s/n | DOE # | name |
|---|---|---|---|
| Cisco 7206 | 72679988 | 6418750 | aoa-ar1 |
| Juniper M20 | 21050 | 6423129 | aoa-pr1 |
| ProTester | - | 6483918 | aoa-pt1 |
| Juniper T320 | 26745 | 6483918 | aoa-cr1 |
| STS | 12190147 | - | aoa-sts1 |
| Sentry 4820-XL8 | 219247 | | |
| Sentry 4870-XL-4 | 219432 | | |
| misc. cables | | | |
| Netgear Hub | | | |
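The per-location item counts in the first table can be derived from individual asset records. The sketch below assumes a minimal record layout; the `Asset` class and `items_per_location` function are hypothetical and do not reflect the Remedy schema. The AOA-HUB rows use names from the slide, while the ANL row is a made-up placeholder added only to show grouping.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    location: str
    item: str
    name: str = ""


assets = [
    # Rows taken from the AOA Hub example on the slide.
    Asset("AOA-HUB", "Cisco 7206", "aoa-ar1"),
    Asset("AOA-HUB", "Juniper M20", "aoa-pr1"),
    Asset("AOA-HUB", "ProTester", "aoa-pt1"),
    Asset("AOA-HUB", "Juniper T320", "aoa-cr1"),
    Asset("AOA-HUB", "STS", "aoa-sts1"),
    # Hypothetical placeholder row, not from the slide.
    Asset("ANL", "example router", "example-rtr1"),
]


def items_per_location(records):
    """Count equipment items at each location."""
    return Counter(record.location for record in records)


counts = items_per_location(assets)
print(counts["AOA-HUB"], counts["ANL"])  # 5 1
```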

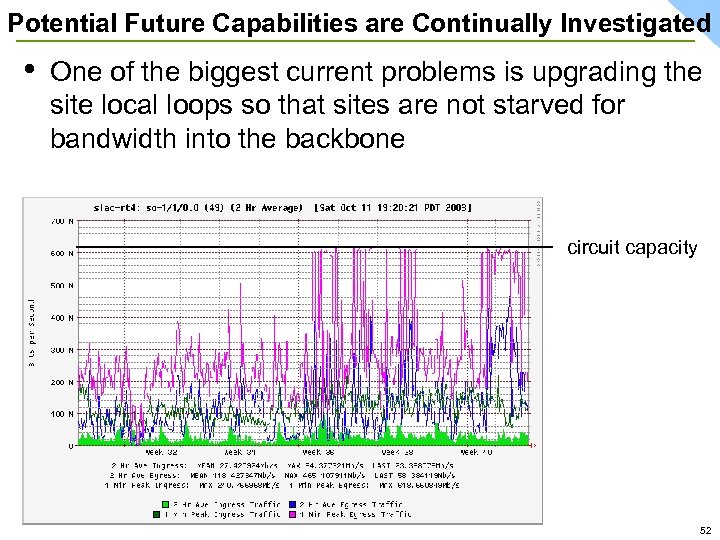

Potential Future Capabilities are Continually Investigated

• One of the biggest current problems is upgrading the site local loops so that sites are not starved for bandwidth into the backbone

Figure label: circuit capacity

Potential Future Capabilities are Continually Investigated

• Optical Metropolitan Area Networks (MANs) are being investigated in the SF Bay Area and Chicago areas as an alternative to expensive local carriers for site connections
  o An optical fiber ring is purchased or leased from a fiber provider that can reach major sites (e.g. SLAC, LLNL, SNLL, LBNL, and NERSC in the SF Bay Area; FNAL, ANL, and Starlight in the Chicago area)
  o A single connection is made from the ESnet core ring to the local ring, which avoids local telecom carriers
  o Probably only feasible in major metropolitan areas

Conclusions

• ESnet is an infrastructure that is critical to DOE’s science mission and that serves all of DOE
• Focused on the Office of Science Labs
• You can’t go out and buy this – ESnet integrates commercial products and in-house software into a complex management system for operating the net
• You can’t go out and take a class in how to run this sort of network – it is specialized and learned from experience
• Extremely reliable in several dimensions
• Complex and specialized – both in the network engineering and the network management

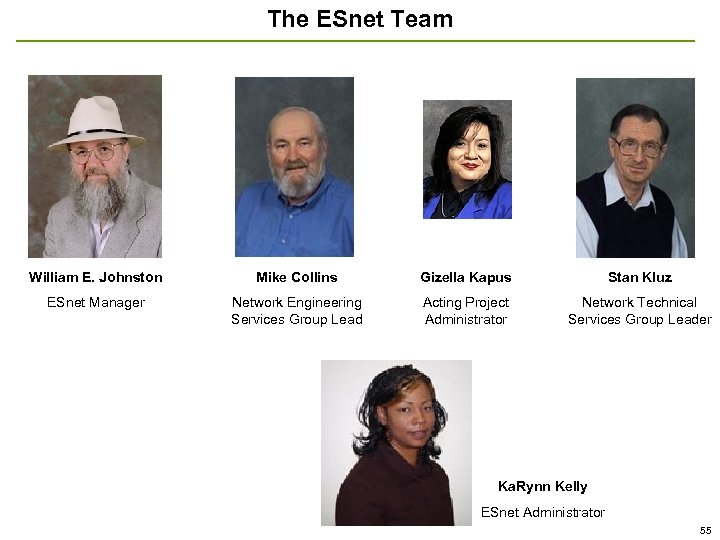

The ESnet Team

• William E. Johnston: ESnet Manager
• Mike Collins: Network Engineering Services Group Lead
• Gizella Kapus: Acting Project Administrator
• Stan Kluz: Network Technical Services Group Leader
• KaRynn Kelly: ESnet Administrator

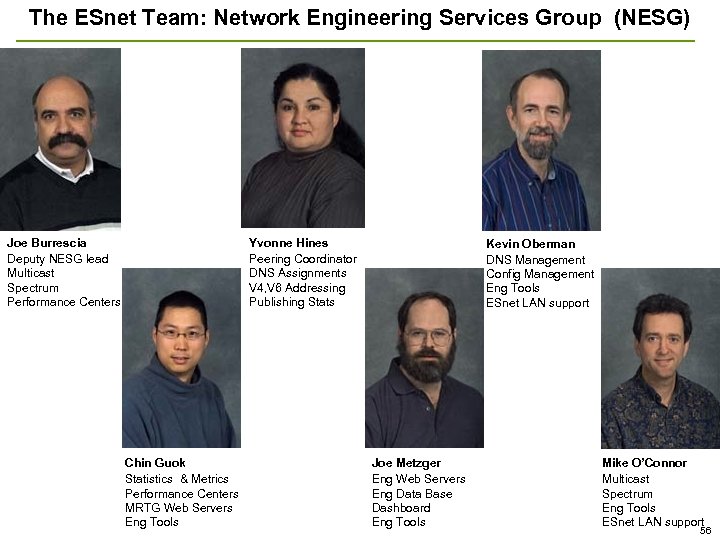

The ESnet Team: Network Engineering Services Group (NESG)

• Joe Burrescia: Deputy NESG lead; Multicast; Spectrum; Performance Centers
• Yvonne Hines: Peering Coordinator; DNS Assignments; V4, V6 Addressing; Publishing Stats
• Chin Guok: Statistics & Metrics; Performance Centers; MRTG; Web Servers; Eng Tools
• Kevin Oberman: DNS Management; Config Management; Eng Tools; ESnet LAN support
• Joe Metzger: Eng Web Servers; Eng Data Base; Dashboard; Eng Tools
• Mike O’Connor: Multicast; Spectrum; Eng Tools; ESnet LAN support

The ESnet Team: Network Technical Services Group (NTSG)

• Jim Gagliardi: Network Support Team lead & Network On call
• John Paul Jones: Network On call & ESnet LAN
• Clint Wadsworth: Network On call & collaboration
• Chris Cavallo: Network On call & Assets mgt
• Dan Peterson: Network On call, MSWin & security
• Mark Redman: Network On call & Config. Lab
• Scott Mason: Assets mgt, Windows

The ESnet Team: Unix, Database, and Collaboration Support

• John Webster: UNIX (team leader)
• Don Varner: UNIX, AFS, security
• Ken Pon: UNIX, security
• Roberto Morelli: Systems Design
• Marcy Kamps: Web, Oracle, Remedy
• Mike Pihlman: Collaboration

The ESnet Team: PKI Project

• Tony Genovese: Project Lead
• Mike Helm: Security Architect
• Dhivakaran Muruganantham (Dhiva): Software Engineer