- Number of slides: 82

An Experimenter’s Guide to OpenFlow

GENI Engineering Workshop, June 2010

Rob Sherwood (with help from many others)

Talk Overview

- What is OpenFlow
- How OpenFlow Works
- OpenFlow for GENI Experimenters
- Deployments
- Next Session: OpenFlow “Office Hours”
  - Overview of available software, hardware
  - Getting started with NOX

What is OpenFlow?

Short Story: OpenFlow is an API

- Control how packets are forwarded
- Implementable on COTS hardware
- Make deployed networks programmable, not just configurable
- Makes innovation easier
- Goal (experimenter’s perspective):
  - No more special purpose test-beds
  - Validate your experiments on deployed hardware with real traffic at full line speed

How Does OpenFlow Work?

Ethernet Switch

Control Path (Software), Data Path (Hardware)

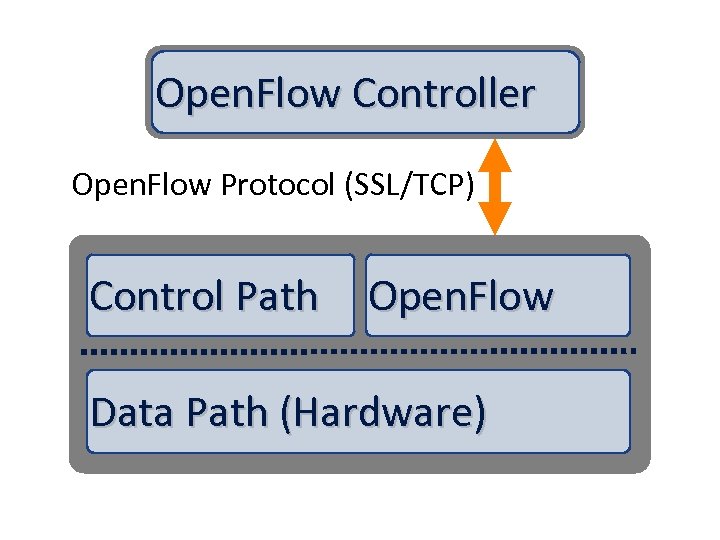

OpenFlow Controller, OpenFlow Protocol (SSL/TCP), Control Path: OpenFlow, Data Path (Hardware)

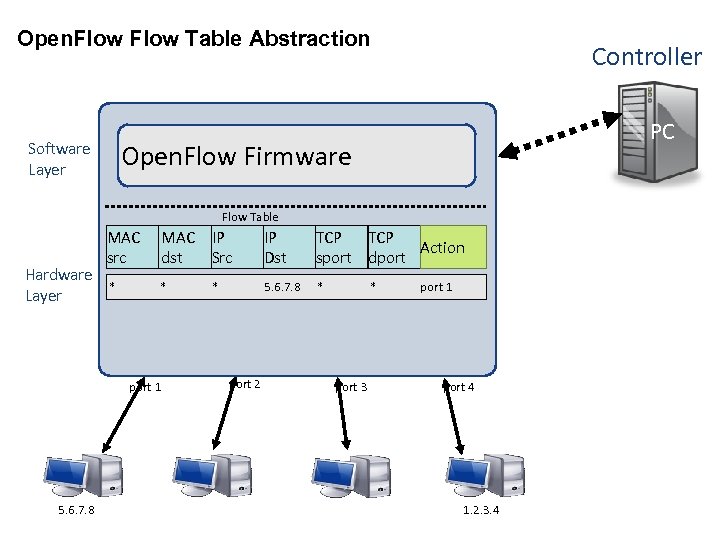

OpenFlow Table Abstraction

- Controller PC
- OpenFlow Firmware (Software Layer)
- Flow Table (Hardware Layer)

| MAC src | MAC dst | IP Src | IP Dst | TCP sport | TCP dport | Action |
|---|---|---|---|---|---|---|
| * | * | * | 5.6.7.8 | * | * | port 1 |

Diagram labels: switch ports 1 to 4; host addresses 5.6.7.8 and 1.2.3.4.

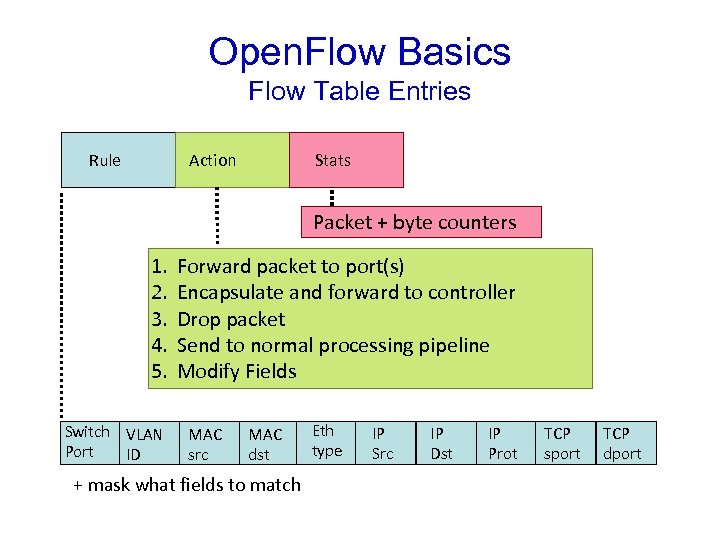

OpenFlow Basics: Flow Table Entries

Each entry consists of a Rule, an Action, and Stats.

- Rule (+ mask what fields to match): Switch Port, VLAN ID, MAC src, MAC dst, Eth type, IP Src, IP Dst, IP Prot, TCP sport, TCP dport
- Action:
  1. Forward packet to port(s)
  2. Encapsulate and forward to controller
  3. Drop packet
  4. Send to normal processing pipeline
  5. Modify fields
- Stats: packet + byte counters
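The rule/action/stats structure can be sketched in a few lines of Python. This is a minimal illustration, not the OpenFlow wire format: the field names, the `priority` tie-break, the action strings, and the MAC address are assumptions made for the example, and a table miss is modeled as handing the packet to the controller.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FlowEntry:
    match: Dict[str, object]   # field -> required value; absent fields are wildcards
    action: str                # e.g. "output:6", "controller", "drop", "normal"
    priority: int = 0          # assumption: higher value wins when rules overlap
    packets: int = 0           # stats: packet counter
    byte_count: int = 0        # stats: byte counter

    def matches(self, headers: Dict[str, object]) -> bool:
        return all(headers.get(f) == v for f, v in self.match.items())


class FlowTable:
    def __init__(self) -> None:
        self.entries: List[FlowEntry] = []

    def add(self, entry: FlowEntry) -> None:
        self.entries.append(entry)
        # Stable sort: highest priority first, insertion order breaks ties.
        self.entries.sort(key=lambda e: -e.priority)

    def process(self, headers: Dict[str, object], length: int) -> str:
        for entry in self.entries:
            if entry.matches(headers):
                entry.packets += 1
                entry.byte_count += length
                return entry.action
        return "controller"    # table miss: hand the packet to the controller


table = FlowTable()
switching = FlowEntry({"mac_dst": "00:1f:00:00:00:01"}, "output:6")  # made-up MAC
firewall = FlowEntry({"tcp_dport": 22}, "drop", priority=10)
table.add(switching)
table.add(firewall)

web = {"mac_dst": "00:1f:00:00:00:01", "tcp_dport": 80}
ssh = {"mac_dst": "00:1f:00:00:00:01", "tcp_dport": 22}
print(table.process(web, 1500))   # output:6
print(table.process(ssh, 64))     # drop
```

The firewall rule is given a higher priority so that it shadows the switching rule for port 22 traffic; without some ordering, overlapping wildcard rules would be ambiguous.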

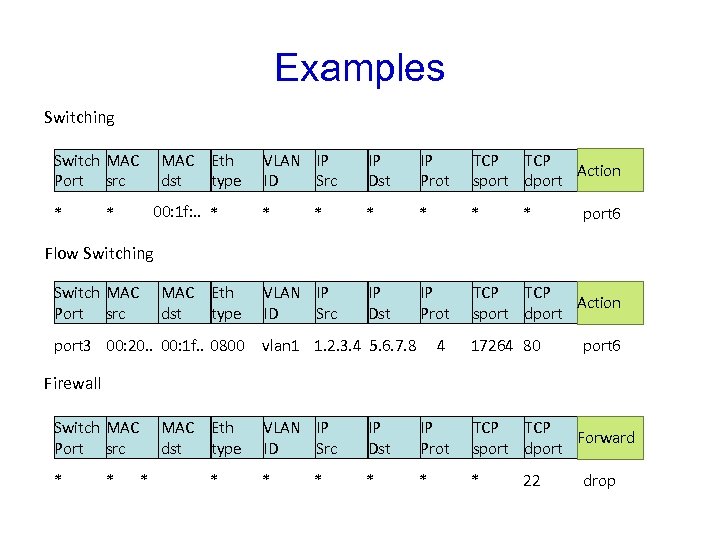

Examples

| Example | Switch Port | MAC src | MAC dst | Eth type | VLAN ID | IP Src | IP Dst | IP Prot | TCP sport | TCP dport | Action |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Switching | * | * | 00:1f:.. | * | * | * | * | * | * | * | port6 |
| Flow Switching | port3 | 00:20.. | 00:1f.. | 0800 | vlan1 | 1.2.3.4 | 5.6.7.8 | 4 | 17264 | 80 | port6 |
| Firewall | * | * | * | * | * | * | * | * | * | 22 | drop |

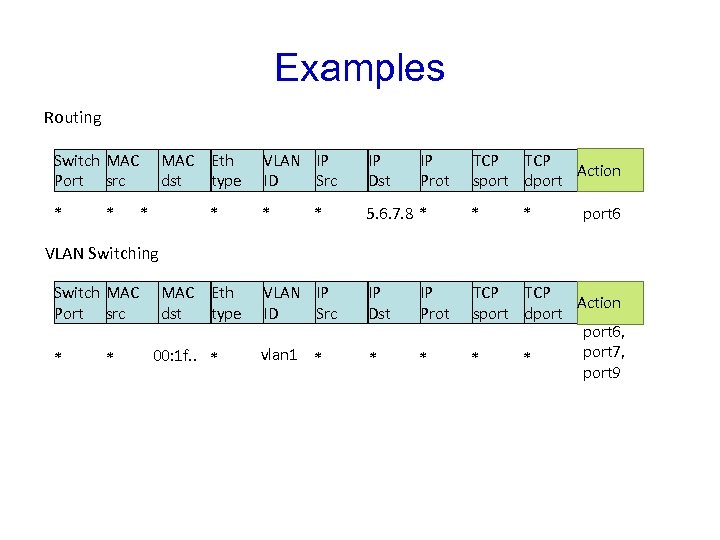

Examples

| Example | Switch Port | MAC src | MAC dst | Eth type | VLAN ID | IP Src | IP Dst | IP Prot | TCP sport | TCP dport | Action |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Routing | * | * | * | * | * | * | 5.6.7.8 | * | * | * | port6 |
| VLAN Switching | * | * | 00:1f.. | * | vlan1 | * | * | * | * | * | port6, port7, port9 |

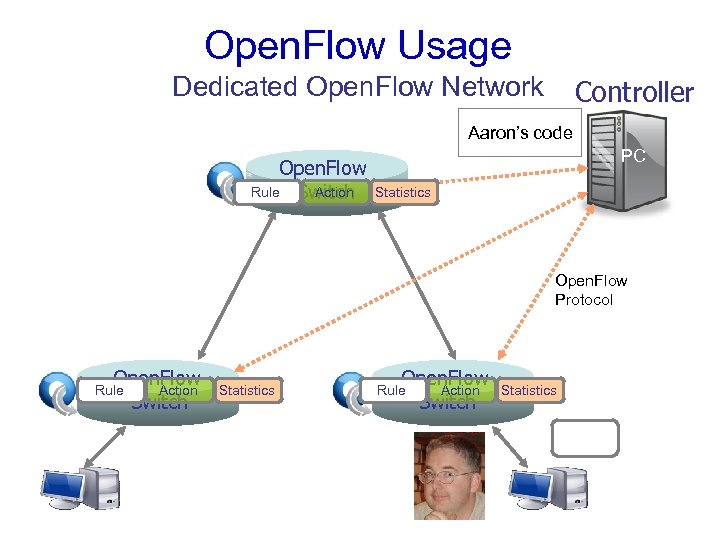

OpenFlow Usage: Dedicated OpenFlow Network

- Controller PC (Aaron’s code)
- OpenFlow Protocol
- OpenFlow Switches, each with Rule, Action, Statistics
- OpenFlowSwitch.org

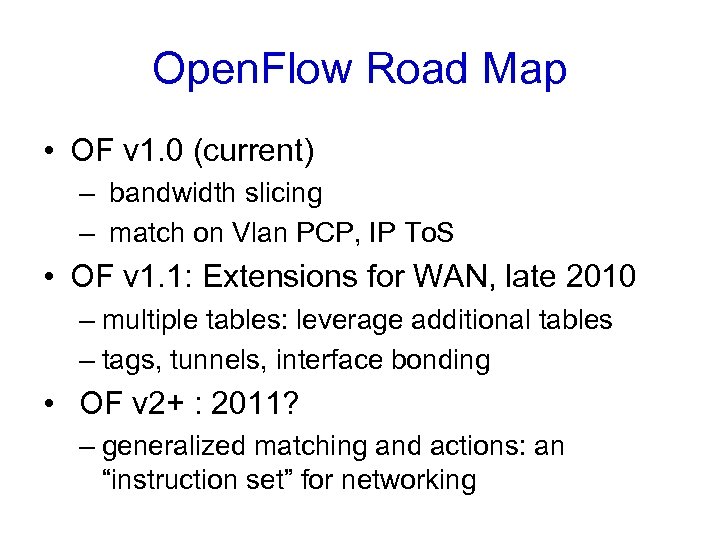

OpenFlow Road Map

- OF v1.0 (current)
  - bandwidth slicing
  - match on VLAN PCP, IP ToS
- OF v1.1: Extensions for WAN, late 2010
  - multiple tables: leverage additional tables
  - tags, tunnels, interface bonding
- OF v2+: 2011?
  - generalized matching and actions: an “instruction set” for networking

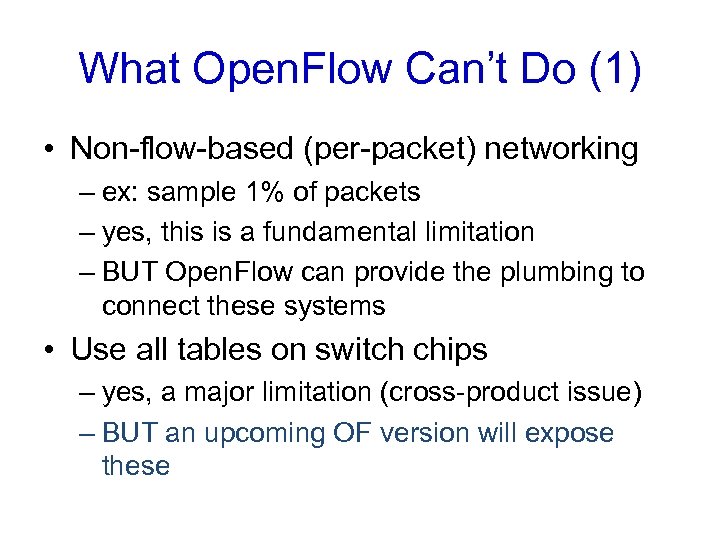

What OpenFlow Can’t Do (1)

- Non-flow-based (per-packet) networking
  - ex: sample 1% of packets
  - yes, this is a fundamental limitation
  - BUT OpenFlow can provide the plumbing to connect these systems
- Use all tables on switch chips
  - yes, a major limitation (cross-product issue)
  - BUT an upcoming OF version will expose these

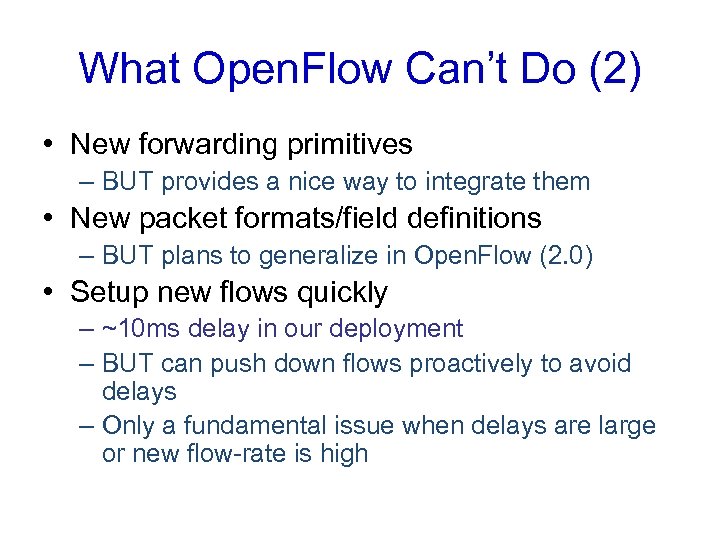

What OpenFlow Can’t Do (2)

- New forwarding primitives
  - BUT provides a nice way to integrate them
- New packet formats/field definitions
  - BUT plans to generalize in OpenFlow (2.0)
- Set up new flows quickly
  - ~10 ms delay in our deployment
  - BUT can push down flows proactively to avoid delays
  - Only a fundamental issue when delays are large or new flow-rate is high

OpenFlow for Experimenters

- Experiment Setup
- Design considerations
- OpenFlow GENI architecture
- Limitations

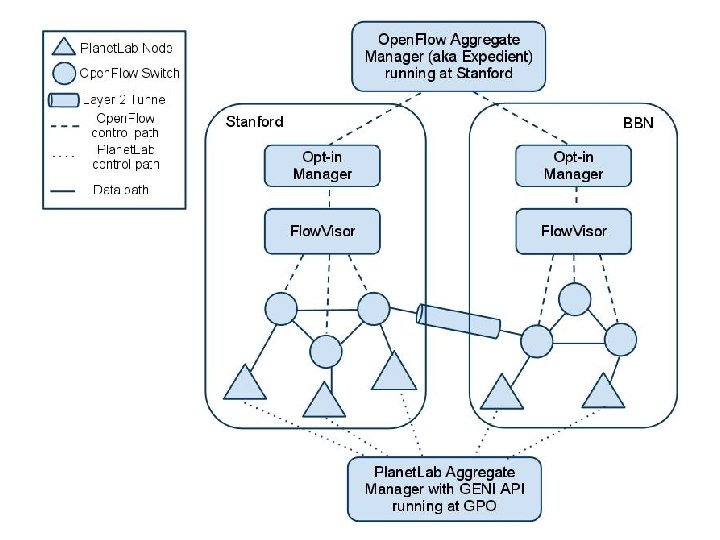

Why Use OpenFlow in GENI?

- Fine-grained flow-level forwarding control
  - e.g., between PL, ProtoGENI nodes
  - Not restricted to IP routes or spanning tree
- Control real user traffic with Opt-In
  - Deploy network services to actual people
- Realistic validations
  - by definition: runs on real production network
  - performance, fan out, topologies

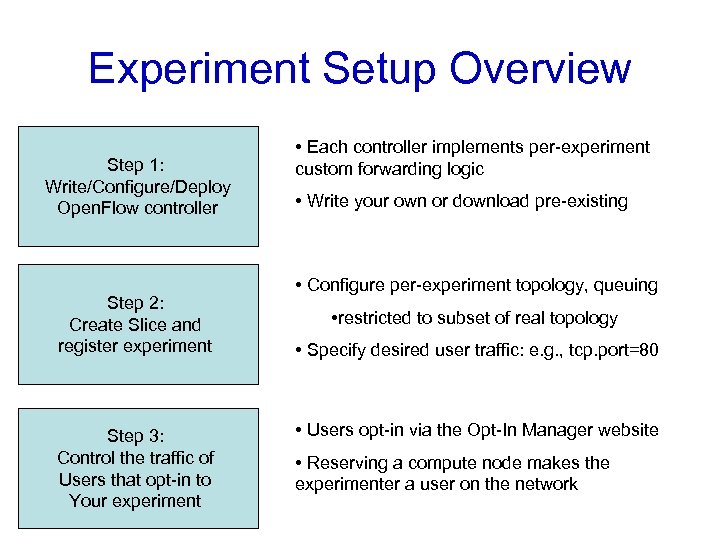

Experiment Setup Overview

- Step 1: Write/Configure/Deploy OpenFlow controller
  - Each controller implements per-experiment custom forwarding logic
  - Write your own or download pre-existing
- Step 2: Create Slice and register experiment
  - Configure per-experiment topology, queuing
    - restricted to subset of real topology
  - Specify desired user traffic: e.g., tcp.port=80
- Step 3: Control the traffic of users that opt in to your experiment
  - Users opt in via the Opt-In Manager website
  - Reserving a compute node makes the experimenter a user on the network

Experiment Design Decisions

- Forwarding logic (of course)
- Centralized vs. distributed control
- Fine vs. coarse grained rules
- Reactive vs. proactive rule creation
- Likely more: open research area

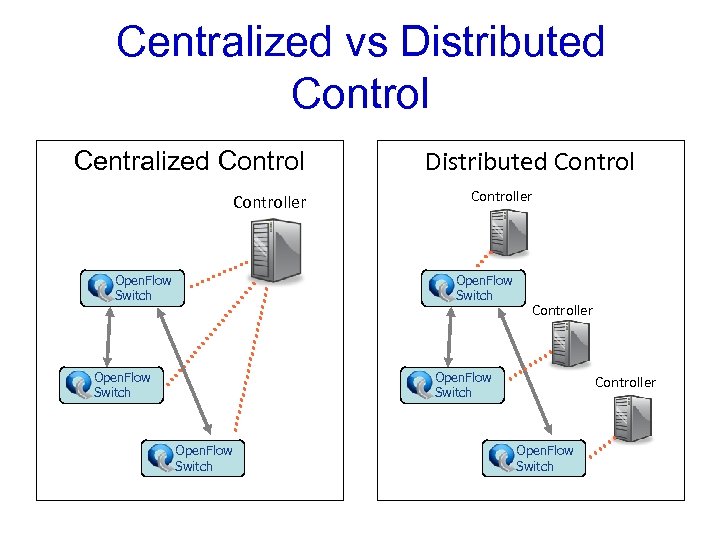

Centralized vs Distributed Control

- Centralized: one Controller for all OpenFlow Switches
- Distributed: multiple Controllers across the OpenFlow Switches

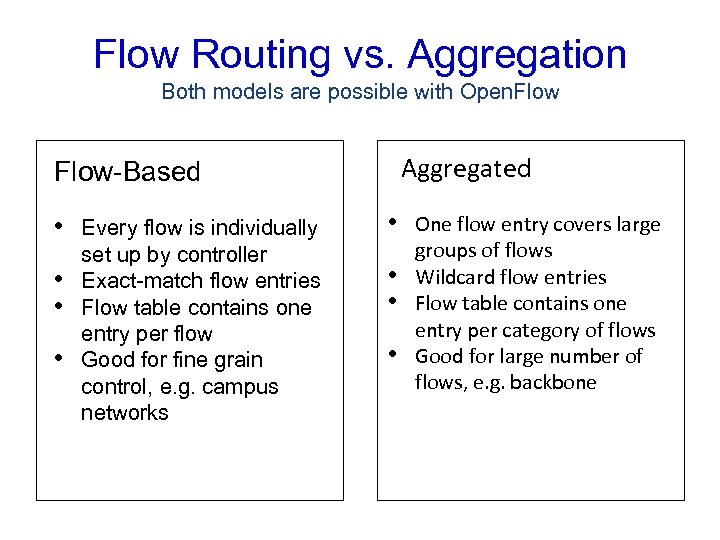

Flow Routing vs. Aggregation

Both models are possible with OpenFlow.

Flow-Based

- Every flow is individually set up by controller
- Exact-match flow entries
- Flow table contains one entry per flow
- Good for fine grain control, e.g. campus networks

Aggregated

- One flow entry covers large groups of flows
- Wildcard flow entries
- Flow table contains one entry per category of flows
- Good for large number of flows, e.g. backbone
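To make the table-size trade-off concrete, the sketch below builds both kinds of table for the same traffic. It is only an illustration: the flows, the `/24` aggregation prefix, and the single `output:1` action are all made up, and aggregating on destination prefix is just one possible choice of "category".

```python
import ipaddress
from typing import Dict, List, Tuple

# (ip_src, ip_dst, ip_proto, tcp_sport, tcp_dport); all values are made up.
Flow = Tuple[str, str, int, int, int]

flows: List[Flow] = [
    ("10.0.0.1", "192.168.1.10", 6, 40001, 80),
    ("10.0.0.2", "192.168.1.10", 6, 40002, 80),
    ("10.0.0.1", "192.168.1.77", 6, 40003, 22),
    ("10.0.0.3", "192.168.2.5", 6, 40004, 443),
]


def flow_based(flows: List[Flow]) -> Dict[Flow, str]:
    """Exact match: one table entry per flow."""
    return {flow: "output:1" for flow in flows}


def aggregated(flows: List[Flow], prefix_len: int = 24) -> Dict[ipaddress.IPv4Network, str]:
    """Wildcard match: one entry per destination prefix."""
    table = {}
    for flow in flows:
        net = ipaddress.ip_network(f"{flow[1]}/{prefix_len}", strict=False)
        table[net] = "output:1"
    return table


exact_table = flow_based(flows)
wildcard_table = aggregated(flows)
print(len(exact_table), len(wildcard_table))   # 4 2
```

The exact-match table grows with the number of flows, while the wildcard table grows only with the number of destination prefixes, which is why the aggregated model suits backbone-scale flow counts.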

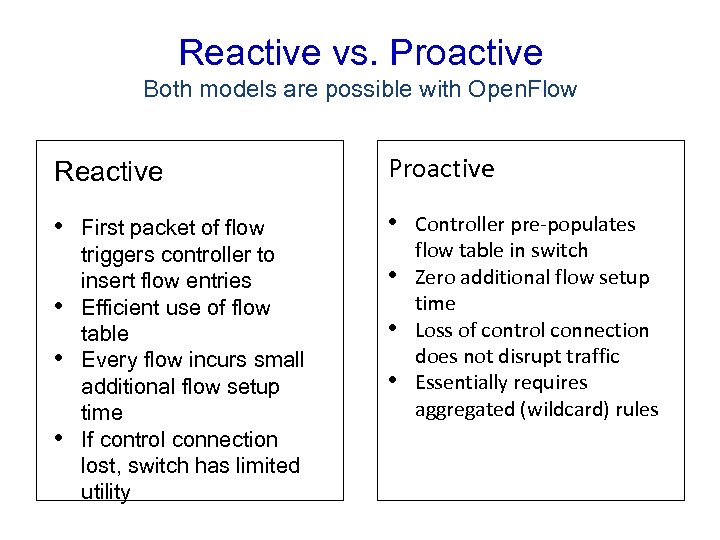

Reactive vs. Proactive

Both models are possible with OpenFlow.

Reactive

- First packet of flow triggers controller to insert flow entries
- Efficient use of flow table
- Every flow incurs small additional flow setup time
- If control connection lost, switch has limited utility

Proactive

- Controller pre-populates flow table in switch
- Zero additional flow setup time
- Loss of control connection does not disrupt traffic
- Essentially requires aggregated (wildcard) rules
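The difference shows up clearly in a toy model. The sketch below is an assumption-heavy simplification: rules are keyed by destination address only, the "controller" is a plain lookup function, and a lost control connection is modeled by setting it to `None`.

```python
from typing import Callable, Dict, Optional

Routes = Dict[str, int]   # destination address -> output port (hypothetical policy)


class Switch:
    def __init__(self, controller: Optional[Callable[[str], int]]) -> None:
        self.controller = controller        # None models a lost control connection
        self.flow_table: Dict[str, int] = {}
        self.packet_ins = 0                 # packets that had to wait for the controller

    def install(self, dst: str, port: int) -> None:
        self.flow_table[dst] = port

    def forward(self, dst: str) -> Optional[int]:
        if dst not in self.flow_table:
            if self.controller is None:
                return None                 # table miss and nobody to ask
            self.packet_ins += 1            # reactive path: first packet goes to controller
            self.install(dst, self.controller(dst))
        return self.flow_table[dst]


routes: Routes = {"5.6.7.8": 1, "1.2.3.4": 4}

# Reactive: the table fills in as traffic arrives.
reactive = Switch(controller=routes.__getitem__)
reactive.forward("5.6.7.8")
reactive.forward("5.6.7.8")

# Proactive: the controller pushes every rule before any traffic arrives.
proactive = Switch(controller=routes.__getitem__)
for dst, port in routes.items():
    proactive.install(dst, port)
proactive.forward("5.6.7.8")

# Now the control connection is lost on both switches.
reactive.controller = None
proactive.controller = None
print(reactive.forward("1.2.3.4"), proactive.forward("1.2.3.4"))   # None 4
```

Only the first packet of a flow pays the setup cost in the reactive case, but a flow that has never been seen cannot be forwarded once the controller is unreachable.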

Examples of OpenFlow in Action

- VM migration across subnets
- energy-efficient data center network
- WAN aggregation
- network slicing
- default-off network
- scalable Ethernet
- scalable data center network
- load balancing
- formal model solver verification
- distributing FPGA processing

Summary of demos in next session

Opt-In Manager

- User-facing website + list of experiments
- Users log in and opt in to experiments
  - Use local existing auth, e.g., LDAP
  - Can opt in to multiple experiments
    - subsets of traffic: Rob & port 80 == Rob’s port 80
  - Use priorities to manage conflicts
- Only after opt-in does experimenter control any traffic
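The "Rob & port 80 == Rob’s port 80" rule is an intersection of two match patterns, with priorities deciding between experiments whose traffic overlaps. The following sketch shows one way this could work; it is not the Opt-In Manager’s implementation, and the address, experiment names, and priorities are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Match = Dict[str, object]


def intersect(a: Match, b: Match) -> Optional[Match]:
    """Combine two match patterns; None if they disagree on a field."""
    merged = dict(a)
    for field, value in b.items():
        if field in merged and merged[field] != value:
            return None
        merged[field] = value
    return merged


rob: Match = {"ip_src": "10.0.0.7"}   # hypothetical address for user Rob

# (experiment name, requested traffic, priority); all hypothetical.
opted_in: List[Tuple[str, Match, int]] = [
    ("all-tcp", {"ip_proto": 6}, 5),
    ("web-cache", {"ip_proto": 6, "tcp_dport": 80}, 10),
]


def build_rules(user: Match, experiments: List[Tuple[str, Match, int]]):
    rules = []
    for name, wanted, priority in experiments:
        scope = intersect(user, wanted)   # the user's traffic AND the experiment's request
        if scope is not None:
            rules.append((priority, name, scope))
    return sorted(rules, key=lambda r: -r[0])   # highest priority first


def owner(packet: Match, rules) -> Optional[str]:
    for _, name, scope in rules:
        if all(packet.get(f) == v for f, v in scope.items()):
            return name
    return None   # not opted in: no experimenter controls this traffic


rules = build_rules(rob, opted_in)
print(owner({"ip_src": "10.0.0.7", "ip_proto": 6, "tcp_dport": 80}, rules))   # web-cache
```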

Deployments

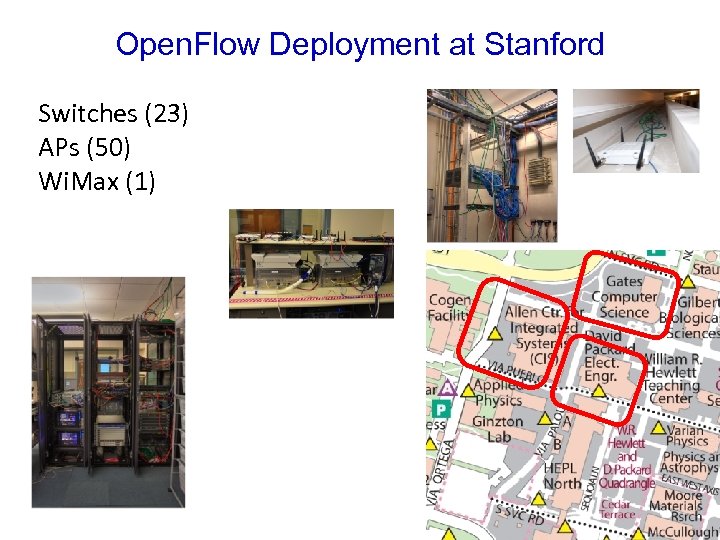

OpenFlow Deployment at Stanford: Switches (23), APs (50), WiMax (1)

Live Stanford Deployment Statistics

- http://yuba.stanford.edu/ofhallway/wide-right.html
- http://yuba.stanford.edu/ofhallway/wide-left.html

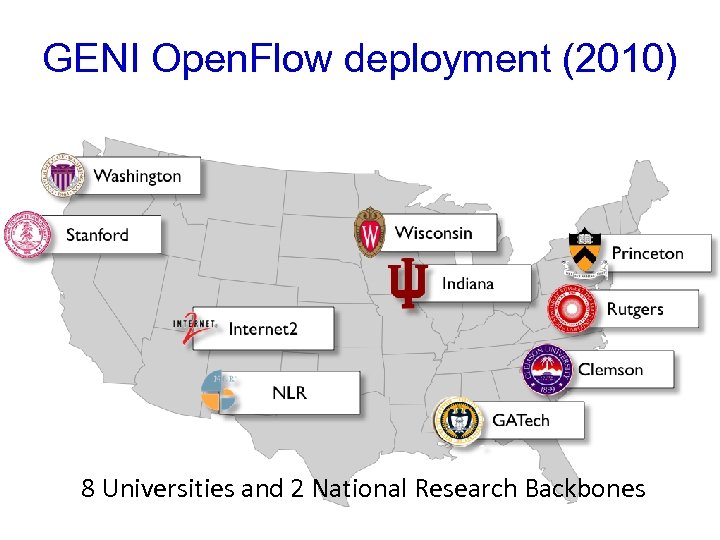

GENI OpenFlow deployment (2010): 8 Universities and 2 National Research Backbones

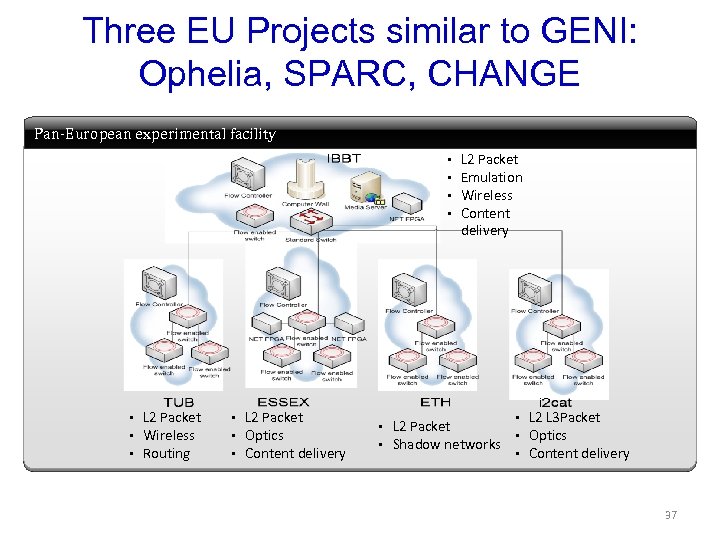

Three EU Projects similar to GENI: Ophelia, SPARC, CHANGE

Pan-European experimental facility. Capabilities listed per site:

- L2 Packet, Wireless, Routing
- L2 Packet, Optics, Content delivery
- L2 Packet, Emulation, Wireless, Content delivery
- L2 Packet, Shadow networks
- L2 L3 Packet, Optics, Content delivery

Other OpenFlow deployments

- Japan: 3-4 universities interconnected by JGN2plus
- Interest in Korea, China, Canada, …

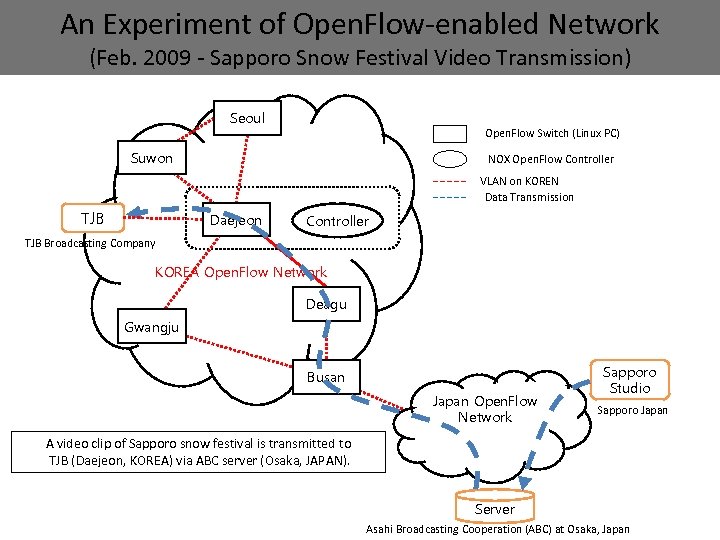

An Experiment of OpenFlow-enabled Network (Feb. 2009, Sapporo Snow Festival Video Transmission)

A video clip of the Sapporo Snow Festival is transmitted to TJB (Daejeon, Korea) via the ABC server (Osaka, Japan).

- Korea OpenFlow Network: sites at Seoul, Suwon, Daejeon, Daegu, Gwangju, Busan; OpenFlow Switch (Linux PC); NOX OpenFlow Controller; VLAN on KOREN; TJB Broadcasting Company (Daejeon)
- Japan OpenFlow Network: Sapporo Studio (Sapporo); server at Asahi Broadcasting Corporation (ABC), Osaka

Highlights of Deployments

- Stanford deployment
  - McKeown group for a year: production and experiments
  - To scale later this year to entire building (~500 users)
- Nation-wide trials and deployments
  - 7 other universities and BBN deploying now
  - GEC9 in Nov. 2010 will showcase nation-wide OF
  - Internet2 and NLR to deploy before GEC9
- Global trials
  - Over 60 organizations experimenting

2010 likely to be a big year for OpenFlow

Slide Credits

- Guido Appenzeller
- Nick McKeown
- Guru Parulkar
- Brandon Heller
- Lots of others
  - (this slide was also stolen)

Conclusion

- OpenFlow is an API for controlling packet forwarding
- OpenFlow+GENI allows more realistic evaluation of network experiments
- Glossed over many technical details
  - What does the API look like?
- Stay for the next session

An Experimenter’s Guide to OpenFlow: Office Hours

GENI Engineering Workshop, June 2010

Rob Sherwood (with help from many others)

Office Hours Overview

- Controllers
- Tools
- Slicing
- OpenFlow switches
- Demo survey
- Ask questions!

Controllers

Controller is King

- Principal job of experimenter: customize a controller for your OpenFlow experiment
- Many ways to do this:
  - Download, configure existing controller
    - e.g., if you just need shortest path
  - Read raw OpenFlow spec: write your own
    - handle ~20 OpenFlow messages
  - Recommended: extend existing controller
    - Write a module for NOX
    - www.noxrepo.org

Starting with NOX

- Grab and build
  - `git clone git://noxrepo.org/nox`
  - `git checkout -b openflow-1.0 origin/openflow-1.0`
  - `sh boot.sh; ./configure; make`
- Build NOX first: non-trivial dependencies
- API is documented inline
  - `cd doc/doxygen; make html`
  - Still very UTSL (“use the source, Luke”)

Writing a NOX Module

- Modules live in `./src/nox/{core,net,web}apps/*`
- Modules are event based
  - Register listeners using APIs
  - C++ and Python bindings
  - Dynamic dependencies
    - e.g., many modules (transitively) use `discovery.py`
- Currently have to update build manually
  - Automated with `./src/scripts/nox-new-c-app.py`
- Most up-to-date docs are at noxrepo.org

Useful NOX Events

- Datapath_{join,leave}
  - New switch and switch leaving
- Packet_in/Flow_in
  - New datagram, stream; respectively
  - Cue to insert a new rule/flow_mod
- Flow_removed
  - Expired rule (includes stats)
- Shutdown
  - Tear down module; clean up state
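The event-driven style can be illustrated without NOX itself. The sketch below is not the NOX API: `EventBus`, `LearningModule`, the handler names, and the event payloads are all hypothetical stand-ins that only mirror the event names listed above, and the "flow_mod" is just a tuple appended to a list.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple


class EventBus:
    """Stand-in for a controller's event system (not the NOX API)."""

    def __init__(self) -> None:
        self.handlers: Dict[str, List[Callable[..., None]]] = defaultdict(list)

    def register(self, event: str, handler: Callable[..., None]) -> None:
        self.handlers[event].append(handler)

    def post(self, event: str, **info) -> None:
        for handler in self.handlers[event]:
            handler(**info)


class LearningModule:
    def __init__(self, bus: EventBus) -> None:
        self.ports: Dict[int, Dict[str, int]] = {}        # dpid -> {mac: port}
        self.flow_mods: List[Tuple[int, str, int]] = []   # rules we would install
        bus.register("datapath_join", self.on_join)
        bus.register("datapath_leave", self.on_leave)
        bus.register("packet_in", self.on_packet_in)
        bus.register("flow_removed", self.on_flow_removed)

    def on_join(self, dpid: int) -> None:
        self.ports[dpid] = {}                  # new switch: start with empty state

    def on_leave(self, dpid: int) -> None:
        self.ports.pop(dpid, None)             # switch left: drop its state

    def on_packet_in(self, dpid: int, in_port: int, src: str, dst: str) -> None:
        table = self.ports.setdefault(dpid, {})
        table[src] = in_port                   # learn where src lives
        if dst in table:                       # known destination: cue to add a rule
            self.flow_mods.append((dpid, dst, table[dst]))

    def on_flow_removed(self, dpid: int, dst: str) -> None:
        self.flow_mods = [m for m in self.flow_mods if m[:2] != (dpid, dst)]


bus = EventBus()
module = LearningModule(bus)
bus.post("datapath_join", dpid=1)
bus.post("packet_in", dpid=1, in_port=1, src="aa", dst="bb")   # bb not learned yet
bus.post("packet_in", dpid=1, in_port=2, src="bb", dst="aa")   # aa is on port 1
print(module.flow_mods)   # [(1, 'aa', 1)]
```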

Tools

- OpenFlow Wireshark plugin
- MiniNet
- oftrace
- many more…

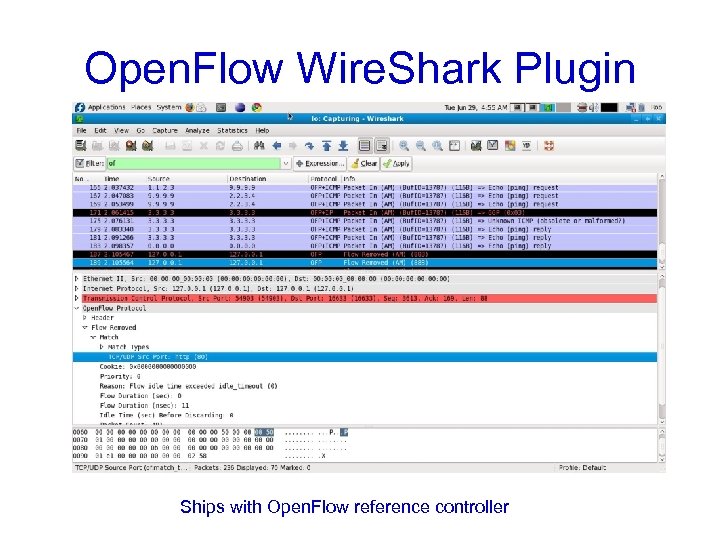

OpenFlow Wireshark Plugin: ships with the OpenFlow reference controller

MiniNet

- Machine-local virtual network
  - great dev/testing tool
- Uses Linux virtual network features
  - Cheaper than VMs
- Arbitrary topologies, nodes
- Scriptable
  - Plans to move FV testing to MiniNet
- http://www.openflow.org/foswiki/bin/view/OpenFlow/Mininet

OFtrace

- API for analyzing OF control traffic
- Calculate:
  - OF message distribution
  - Flow setup time
  - % of dropped LLDP messages
  - … extensible
- http://www.openflow.org/wk/index.php/Liboftrace
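Two of these metrics are easy to picture on a toy trace. The sketch below does not use the liboftrace API; the `Msg` record, the sample trace, and the choice to pair each `flow_mod` with its `packet_in` by `buffer_id` are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, List, NamedTuple


class Msg(NamedTuple):
    time: float      # capture timestamp in seconds
    kind: str        # e.g. "packet_in", "flow_mod"
    buffer_id: int   # ties a flow_mod to the packet_in it answers


# Made-up control channel trace.
trace: List[Msg] = [
    Msg(0.000, "packet_in", 1),
    Msg(0.008, "flow_mod", 1),
    Msg(0.500, "packet_in", 2),
    Msg(0.512, "flow_mod", 2),
    Msg(0.900, "echo_request", -1),
]


def message_distribution(trace: List[Msg]) -> Counter:
    """Count how many messages of each type appear in the trace."""
    return Counter(m.kind for m in trace)


def flow_setup_times(trace: List[Msg]) -> List[float]:
    """Time from each packet_in to the flow_mod that references its buffer."""
    pending: Dict[int, float] = {}
    times: List[float] = []
    for m in trace:
        if m.kind == "packet_in":
            pending[m.buffer_id] = m.time
        elif m.kind == "flow_mod" and m.buffer_id in pending:
            times.append(m.time - pending.pop(m.buffer_id))
    return times


distribution = message_distribution(trace)
setup_times = flow_setup_times(trace)
print(distribution["packet_in"], len(setup_times))   # 2 2
```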

Slicing OpenFlow

- VLAN vs. FlowVisor slicing
- Use cases

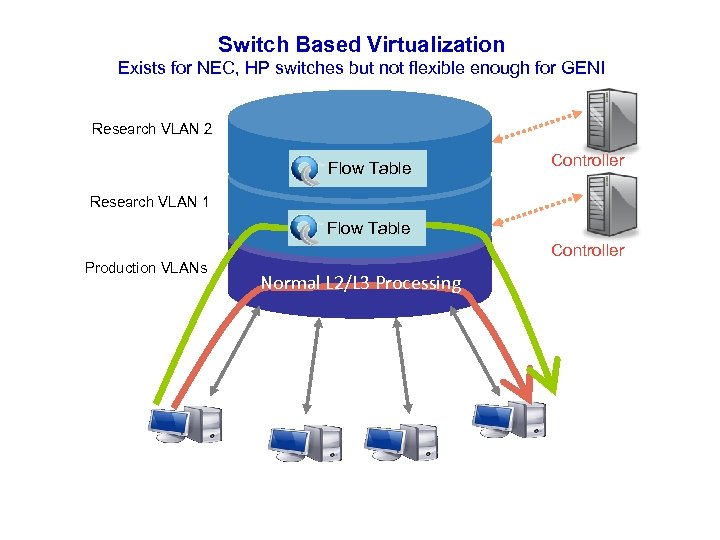

Switch Based Virtualization

Exists for NEC, HP switches but not flexible enough for GENI

- Research VLAN 2: Flow Table, Controller
- Research VLAN 1: Flow Table, Controller
- Production VLANs: Normal L2/L3 Processing

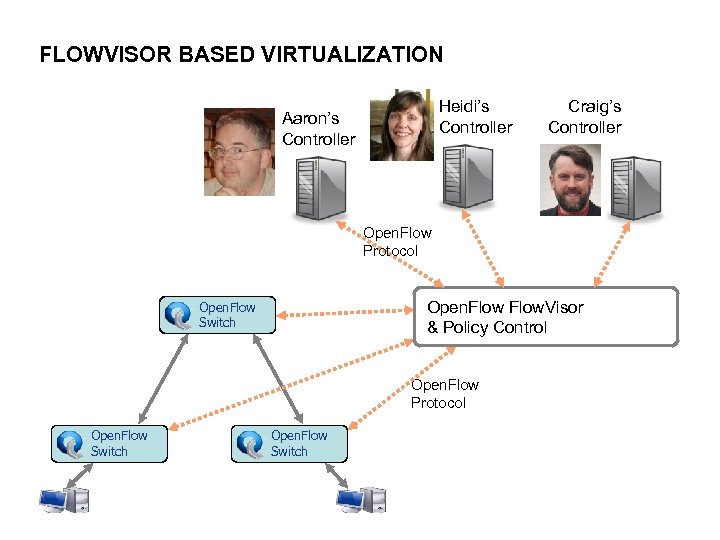

FlowVisor Based Virtualization

- Heidi’s Controller, Aaron’s Controller, Craig’s Controller
- OpenFlow Protocol
- FlowVisor & Policy Control
- OpenFlow Protocol
- OpenFlow Switches

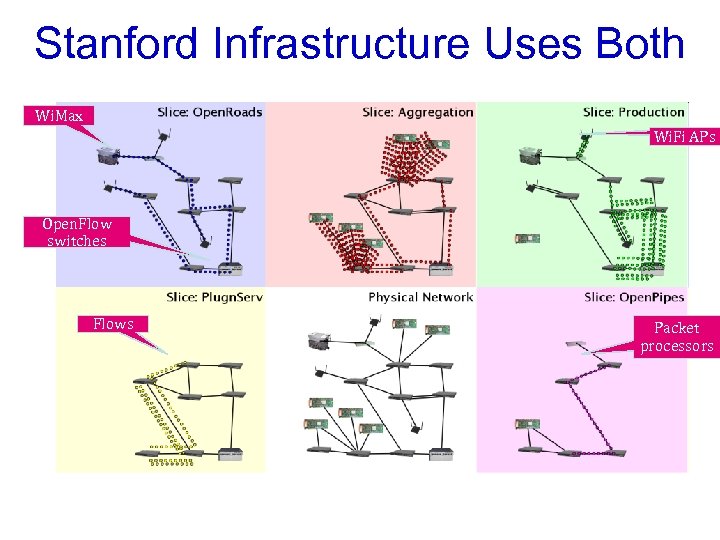

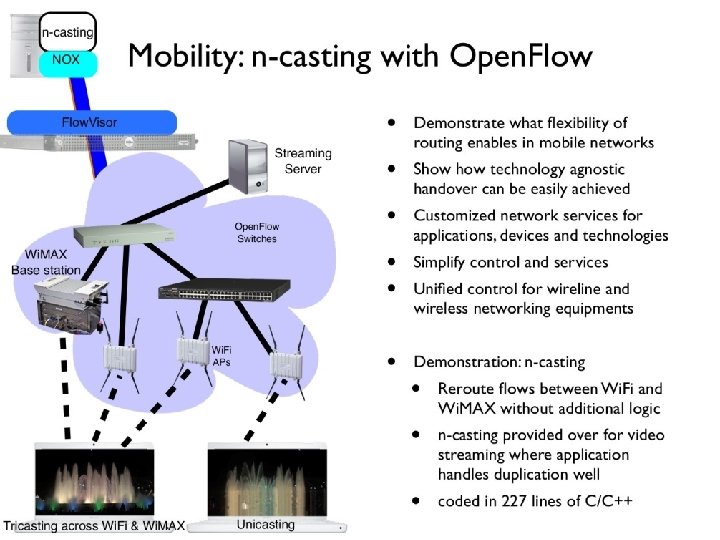

Stanford Infrastructure Uses Both

- WiMax
- WiFi APs
- OpenFlow switches
- Flows
- Packet processors

The individual controllers and the FlowVisor are applications on commodity PCs (not shown).

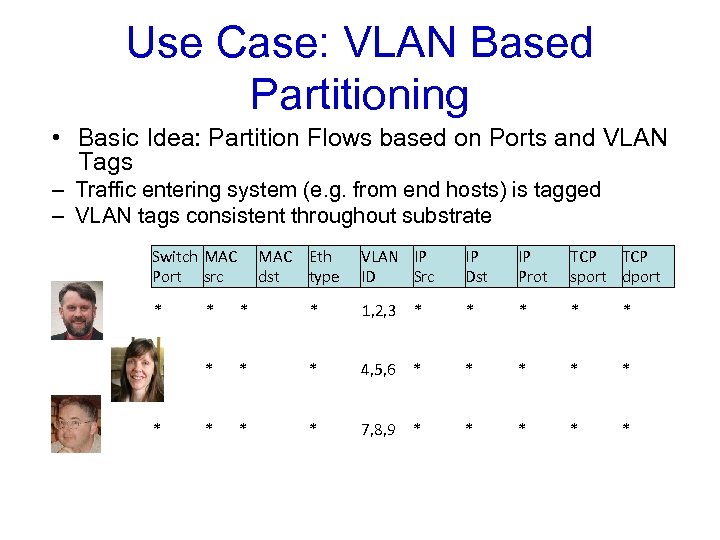

Use Case: VLAN Based Partitioning

- Basic idea: partition flows based on ports and VLAN tags
  - Traffic entering system (e.g. from end hosts) is tagged
  - VLAN tags consistent throughout substrate

| Switch Port | MAC src | MAC dst | Eth type | VLAN ID | IP Src | IP Dst | IP Prot | TCP sport | TCP dport |
|---|---|---|---|---|---|---|---|---|---|
| * | * | * | * | 1, 2, 3 | * | * | * | * | * |
| * | * | * | * | 4, 5, 6 | * | * | * | * | * |
| * | * | * | * | 7, 8, 9 | * | * | * | * | * |
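A VLAN-based partition amounts to a lookup from VLAN ID to slice, plus a check that no VLAN is claimed twice. A minimal sketch, in which the slice names are hypothetical and only the VLAN groups come from the table above:

```python
from typing import Dict, FrozenSet, Optional

# Slice names are hypothetical; the VLAN groups are the ones in the table.
SLICES: Dict[str, FrozenSet[int]] = {
    "slice_a": frozenset({1, 2, 3}),
    "slice_b": frozenset({4, 5, 6}),
    "slice_c": frozenset({7, 8, 9}),
}


def check_disjoint(slices: Dict[str, FrozenSet[int]]) -> bool:
    """A partition is only valid if no VLAN ID belongs to two slices."""
    seen = set()
    for vlans in slices.values():
        if seen & vlans:
            return False
        seen |= vlans
    return True


def slice_for(vlan_id: int, slices: Dict[str, FrozenSet[int]] = SLICES) -> Optional[str]:
    for name, vlans in slices.items():
        if vlan_id in vlans:
            return name
    return None   # unknown VLAN: belongs to no research slice


print(check_disjoint(SLICES), slice_for(5))   # True slice_b
```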

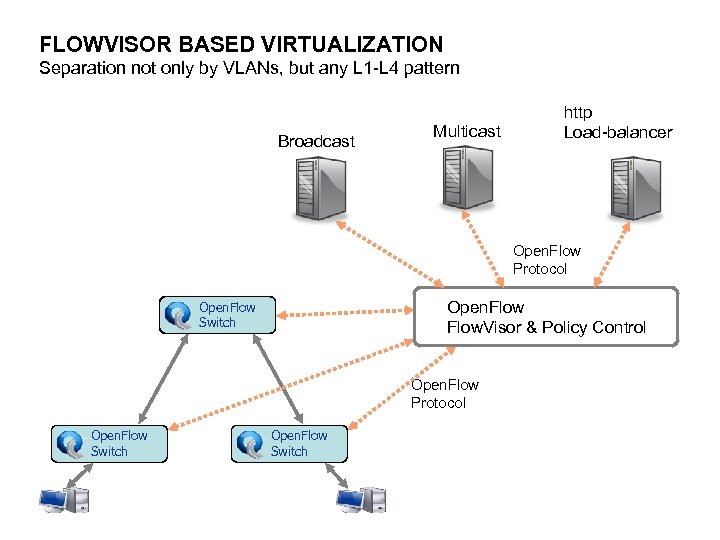

FlowVisor Based Virtualization

Separation not only by VLANs, but any L1-L4 pattern

- Slice controllers: Broadcast, Multicast, http, Load-balancer
- OpenFlow Protocol
- FlowVisor & Policy Control
- OpenFlow Protocol
- OpenFlow Switches

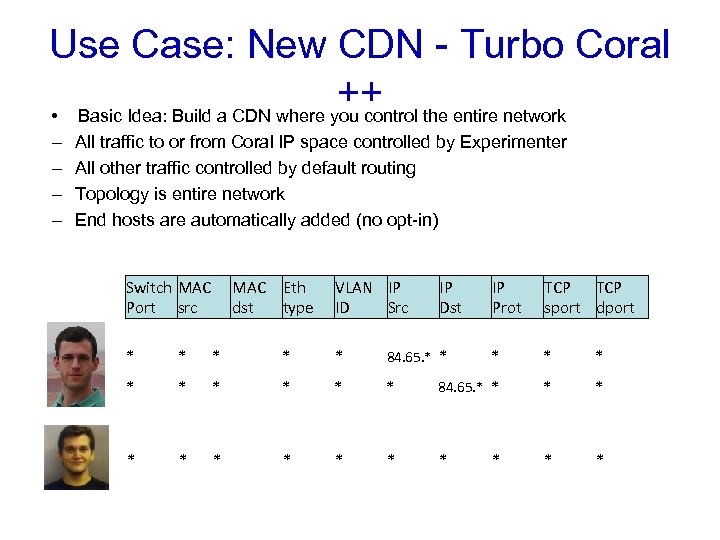

Use Case: New CDN - Turbo Coral++

- Basic idea: build a CDN where you control the entire network
  - All traffic to or from Coral IP space controlled by experimenter
  - All other traffic controlled by default routing
  - Topology is entire network
  - End hosts are automatically added (no opt-in)

Flow space (Switch Port, MAC src, MAC dst, Eth type, VLAN ID, IP Src, IP Dst, IP Prot, TCP sport, TCP dport): IP address 84.65.*, every other field wildcarded (*).

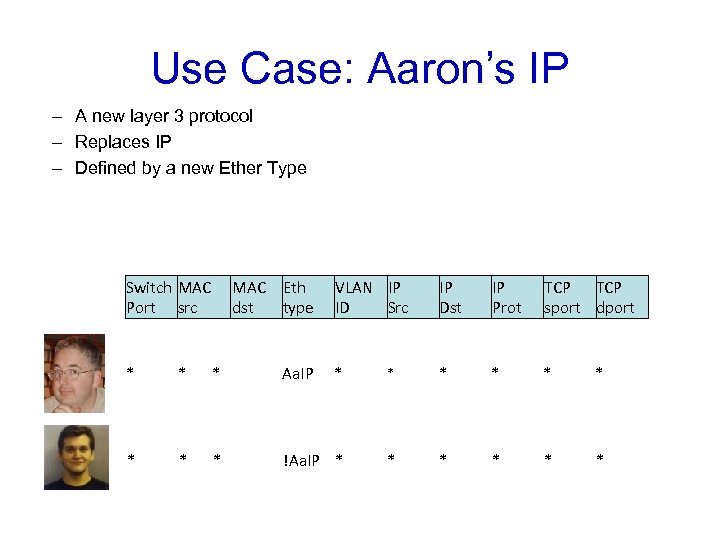

Use Case: Aaron’s IP

- A new layer 3 protocol
- Replaces IP
- Defined by a new Ether Type

| Switch Port | MAC src | MAC dst | Eth type | VLAN ID | IP Src | IP Dst | IP Prot | TCP sport | TCP dport |
|---|---|---|---|---|---|---|---|---|---|
| * | * | * | AaIP | * | * | * | * | * | * |
| * | * | * | !AaIP | * | * | * | * | * | * |
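Slicing on Ether Type only needs the 14-byte Ethernet header. In the sketch below the AaIP Ether Type value is an assumption (0x88B5, one of the IEEE local experimental values, is used as a placeholder), the MAC addresses are made up, and frames are assumed to carry no VLAN tag.

```python
import struct

AAIP_ETHERTYPE = 0x88B5   # assumption: experimental EtherType standing in for AaIP


def ethertype(frame: bytes) -> int:
    """Return the EtherType of an untagged Ethernet II frame."""
    _dst, _src, eth_type = struct.unpack("!6s6sH", frame[:14])
    return eth_type


def slice_for(frame: bytes) -> str:
    """AaIP frames go to Aaron's slice; everything else (!AaIP) stays on the default path."""
    return "aaron" if ethertype(frame) == AAIP_ETHERTYPE else "default"


def make_frame(eth_type: int, payload: bytes = b"") -> bytes:
    dst = bytes.fromhex("001f00000001")   # made-up addresses
    src = bytes.fromhex("002000000002")
    return dst + src + struct.pack("!H", eth_type) + payload


print(slice_for(make_frame(AAIP_ETHERTYPE)), slice_for(make_frame(0x0800)))   # aaron default
```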

Switches

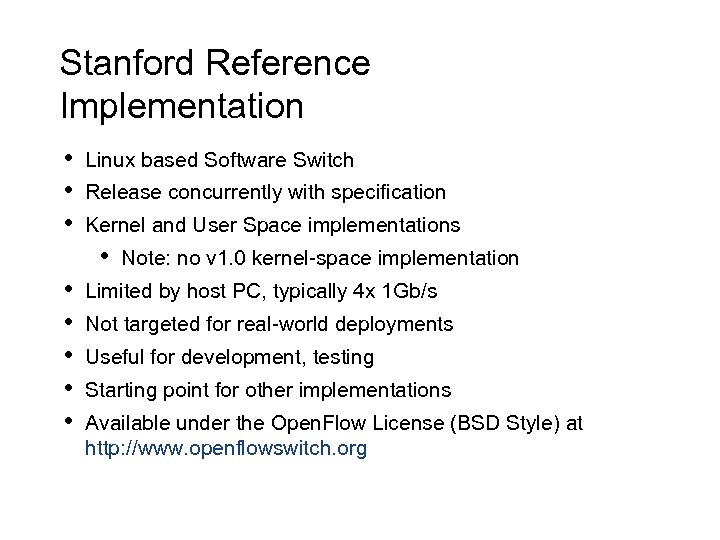

Stanford Reference Implementation

- Linux-based software switch
- Released concurrently with specification
- Kernel and user space implementations
- Note: no v1.0 kernel-space implementation
- Limited by host PC, typically 4x 1 Gb/s
- Not targeted for real-world deployments
- Useful for development, testing
- Starting point for other implementations
- Available under the OpenFlow License (BSD style) at http://www.openflowswitch.org

Wireless Access Points

- Two flavors:
  - OpenWRT based (Busybox Linux)
    - v0.8.9 only
  - Vanilla software (full Linux)
    - Only runs on PC Engines hardware
    - Debian disk image
    - Available from Stanford
- Both implementations are software only.

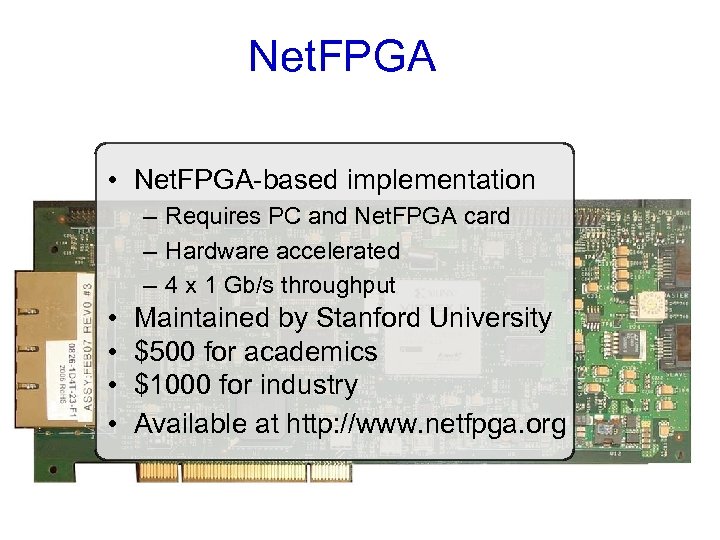

NetFPGA

- NetFPGA-based implementation
  - Requires PC and NetFPGA card
  - Hardware accelerated
  - 4x 1 Gb/s throughput
- Maintained by Stanford University
- $500 for academics
- $1000 for industry
- Available at http://www.netfpga.org

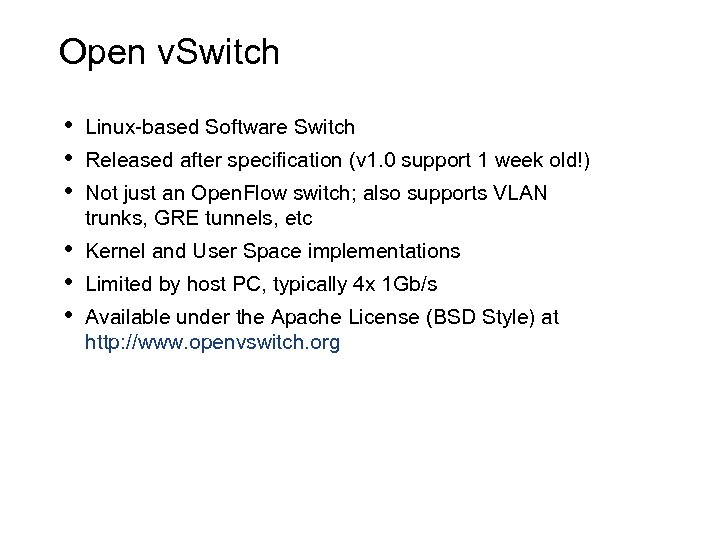

Open vSwitch

- Linux-based software switch
- Kernel and user space implementations
- Released after specification (v1.0 support 1 week old!)
- Not just an OpenFlow switch; also supports VLAN trunks, GRE tunnels, etc.
- Limited by host PC, typically 4x 1 Gb/s
- Available under the Apache License (BSD style) at http://www.openvswitch.org

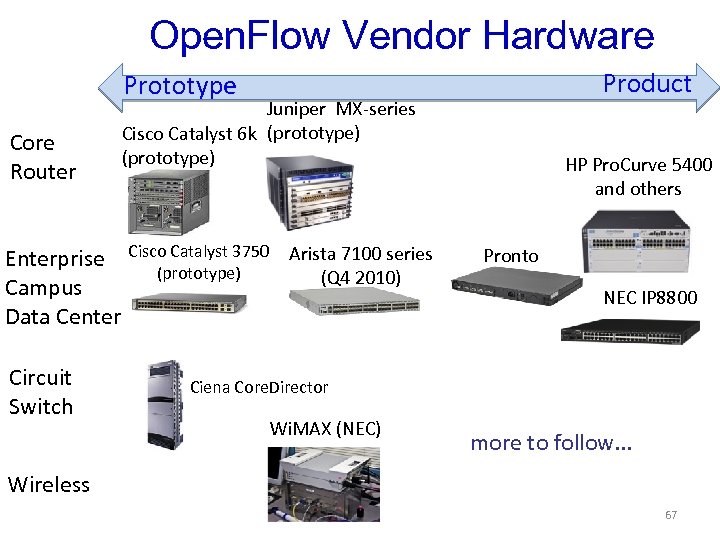

OpenFlow Vendor Hardware (Product / Prototype)

- Categories: Core Router, Enterprise Campus, Data Center, Circuit Switch, Wireless
- Hardware shown: Juniper MX-series, Cisco Catalyst 6k (prototype), Cisco Catalyst 3750 (prototype), Arista 7100 series (Q4 2010), HP ProCurve 5400 and others, Pronto, NEC IP8800, Ciena CoreDirector, WiMAX (NEC)
- more to follow...

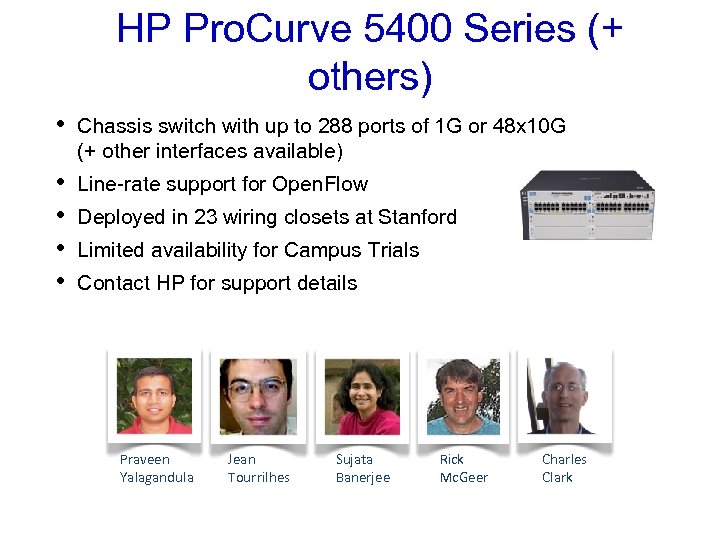

HP ProCurve 5400 Series (+ others)

- Chassis switch with up to 288 ports of 1G or 48x 10G (+ other interfaces available)
- Line-rate support for OpenFlow
- Deployed in 23 wiring closets at Stanford
- Limited availability for Campus Trials
- Contact HP for support details

Praveen Yalagandula, Jean Tourrilhes, Sujata Banerjee, Rick McGeer, Charles Clark

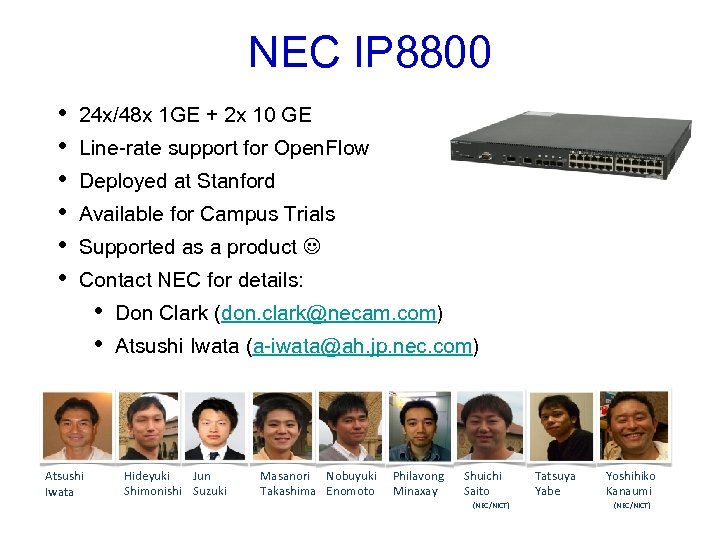

NEC IP8800

- 24x/48x 1GE + 2x 10GE
- Line-rate support for OpenFlow
- Deployed at Stanford
- Available for Campus Trials
- Supported as a product
- Contact NEC for details:
  - Don Clark (don.clark@necam.com)
  - Atsushi Iwata (a-iwata@ah.jp.nec.com)

Atsushi Iwata, Hideyuki Shimonishi, Jun Suzuki, Masanori Takashima, Nobuyuki Enomoto, Philavong Minaxay, Shuichi Saito (NEC/NICT), Tatsuya Yabe, Yoshihiko Kanaumi (NEC/NICT)

Pronto Switch

- Broadcom based 48x 1 Gb/s + 4x 10 Gb/s
- Bare switch: you add the software
- Supports Stanford Indigo and Toroki releases
- See openflowswitch.org blog post for more details

Stanford Indigo Firmware for Pronto

- Source available under OpenFlow License to parties that have NDA with BRCM in place
- Targeted for research use and as a baseline for vendor implementations (but not direct deployment)
- No standard Ethernet switching: OpenFlow only!
- Hardware accelerated
- Supports v1.0
- Contact Dan Talayco (dtalayco@stanford.edu)

Toroki Firmware for Pronto

- Fastpath-based OpenFlow implementation
- Full L2/L3 management capabilities on switch
- Hardware accelerated
- Availability TBD

Ciena Core. Director • • • Circuit switch with experimental Open. Flow support Prototype only Demonstrated at Super Computing 2009

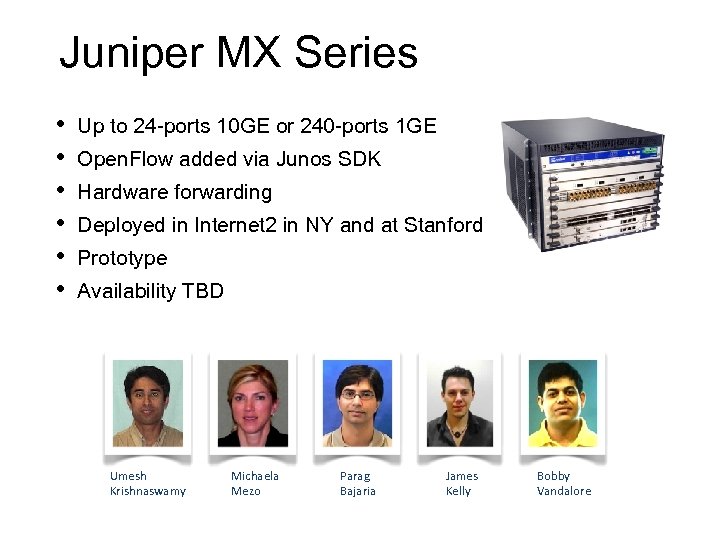

Juniper MX Series
• Up to 24 ports 10GE or 240 ports 1GE
• OpenFlow added via Junos SDK
• Hardware forwarding
• Deployed in Internet2 in NY and at Stanford
• Prototype
• Availability TBD
Umesh Krishnaswamy, Michaela Mezo, Parag Bajaria, James Kelly, Bobby Vandalore

Cisco 6500 Series
• Various configurations available
• Software forwarding only
• Limited deployment as part of demos
• Availability TBD
• Work on other Cisco models in progress
Pere Monclus, Sailesh Kumar, Flavio Bonomi

Stanford Reference Controller
• Comes with reference distribution
• Monolithic C code – not designed for extensibility
• Ethernet flow switch or hub
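
The difference between the "hub" and "Ethernet flow switch" modes can be sketched in a few lines of Python. This is an illustrative model only, not the reference controller's C code: the class names, the `packet_in` method, and the `FLOOD` constant are hypothetical, and a real controller would also install flow entries on the switch rather than just return a port.

```python
FLOOD = "flood"  # hypothetical marker meaning "send out of every other port"


class HubController:
    """Hub behaviour: every packet is flooded, nothing is learned."""

    def packet_in(self, in_port, src_mac, dst_mac):
        return FLOOD


class LearningSwitchController:
    """Ethernet switch behaviour: remember which port each MAC was seen on."""

    def __init__(self):
        self.mac_to_port = {}

    def packet_in(self, in_port, src_mac, dst_mac):
        # Learn (or refresh) the port where the sender is attached.
        self.mac_to_port[src_mac] = in_port
        # Forward to the learned port if the destination is known, else flood.
        return self.mac_to_port.get(dst_mac, FLOOD)
```

For example, a learning switch floods the first packet from `aa` to `bb`, but once `bb` has replied from port 2, later packets to `bb` go straight to port 2.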

NOX Controller
• Available at http://NOXrepo.org
• Open Source (GPL)
• Modular design, programmable in C++ or Python
• High-performance (usually switches are the limit)
• Deployed as main controller at Stanford
Martin Casado, Scott Shenker, Teemu Koponen, Natasha Gude, Justin Pettit

Simple Network Access Control (SNAC)
• Available at http://NOXrepo.org
• Policy + Nice GUI
• Branched from NOX long ago
• Available as a binary
• Part of Stanford deployment

Demo Previews
• FlowVisor
• Plug-n-Serve
• Aggregation
• OpenPipes
• OpenFlow Wireless
• MobileVMs
• ElasticTree

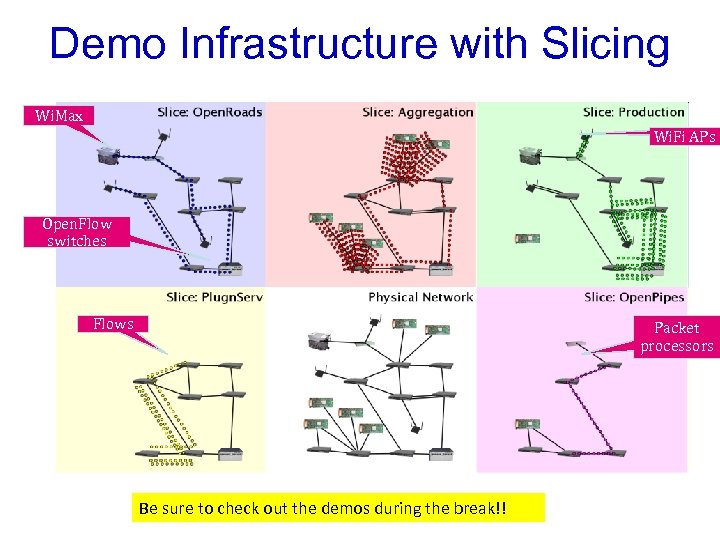

Demo Infrastructure with Slicing
[Diagram labels: WiMax, WiFi APs, OpenFlow switches, Flows, Packet processors]
– The individual controllers and the FlowVisor are applications on commodity PCs (not shown)
Be sure to check out the demos during the break!!

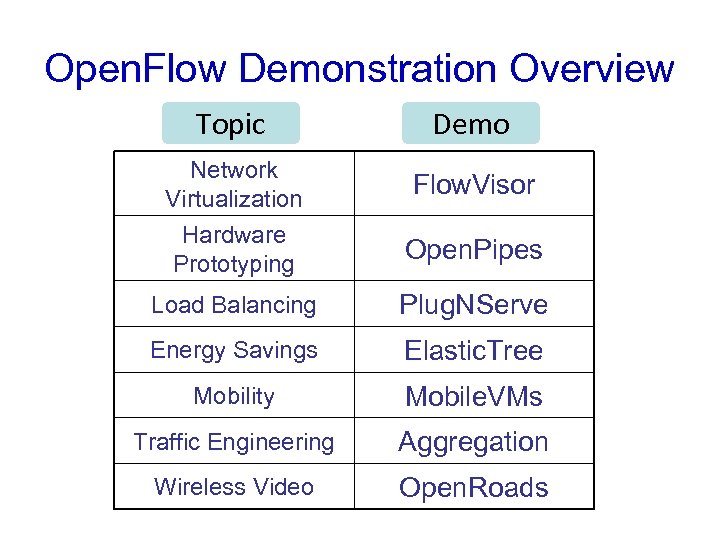

OpenFlow Demonstration Overview

| Topic | Demo |
| --- | --- |
| Network Virtualization | FlowVisor |
| Hardware Prototyping | OpenPipes |
| Load Balancing | PlugNServe |
| Energy Savings | ElasticTree |
| Mobility | MobileVMs |
| Traffic Engineering | Aggregation |
| Wireless Video | OpenRoads |

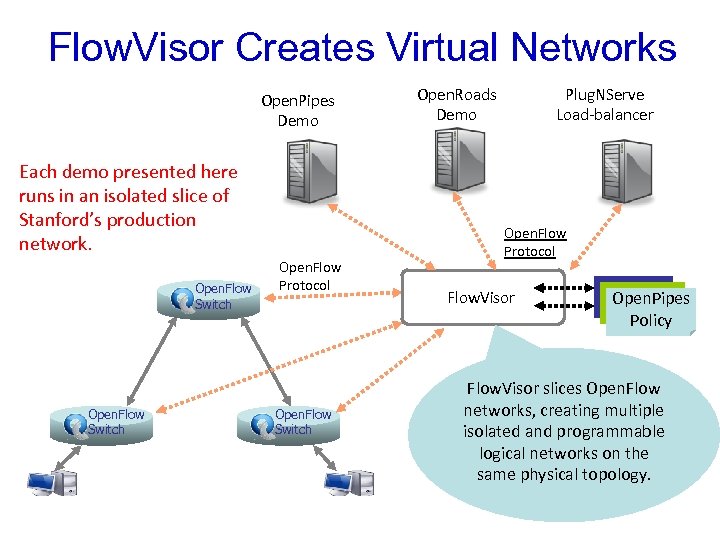

FlowVisor Creates Virtual Networks
Each demo presented here runs in an isolated slice of Stanford’s production network.
FlowVisor slices OpenFlow networks, creating multiple isolated and programmable logical networks on the same physical topology.
[Diagram labels: OpenPipes Demo, OpenRoads Demo, PlugNServe Load-balancer, OpenPipes Policy, FlowVisor, OpenFlow Protocol, OpenFlow Switch]
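
To make the idea of slicing concrete, here is a minimal sketch of how a slicing layer might decide which experiment's controller owns a given packet. It is a toy model under stated assumptions, not FlowVisor's actual policy language or code: the `Slice` class, the `flowspace` dictionaries, the field names, and the controller addresses are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Slice:
    name: str
    controller: str   # hypothetical address of this slice's controller
    flowspace: dict   # header field -> value that a packet must carry


def matches(flowspace, packet):
    """True if the packet carries every field value the flowspace requires."""
    return all(packet.get(field) == value for field, value in flowspace.items())


def slice_for(packet, slices, default=None):
    """Return the first slice whose flowspace contains the packet."""
    for candidate in slices:
        if matches(candidate.flowspace, packet):
            return candidate
    return default
```

With one slice owning `{"tcp_dst": 80}` and another owning `{"vlan": 20}`, web traffic is handed to the first slice's controller and VLAN 20 traffic to the second, while packets outside every flowspace fall through to `default`. Each controller sees and programs only its own part of the shared switches.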

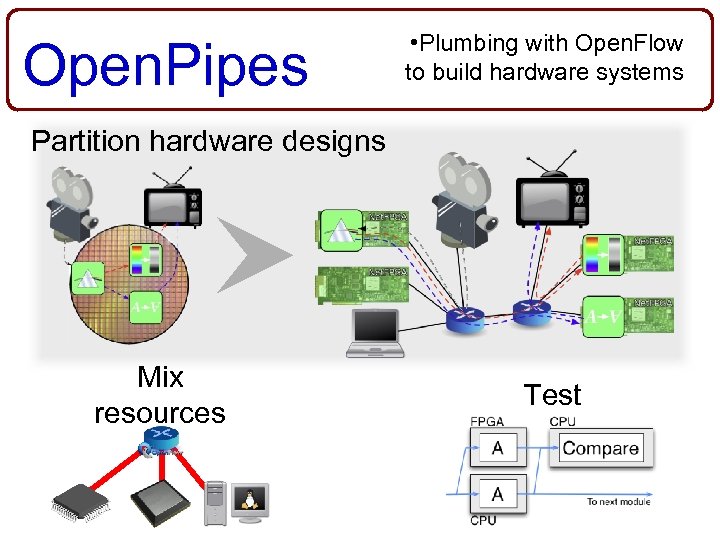

OpenPipes
• Plumbing with OpenFlow to build hardware systems
  – Partition hardware designs
  – Mix resources
  – Test

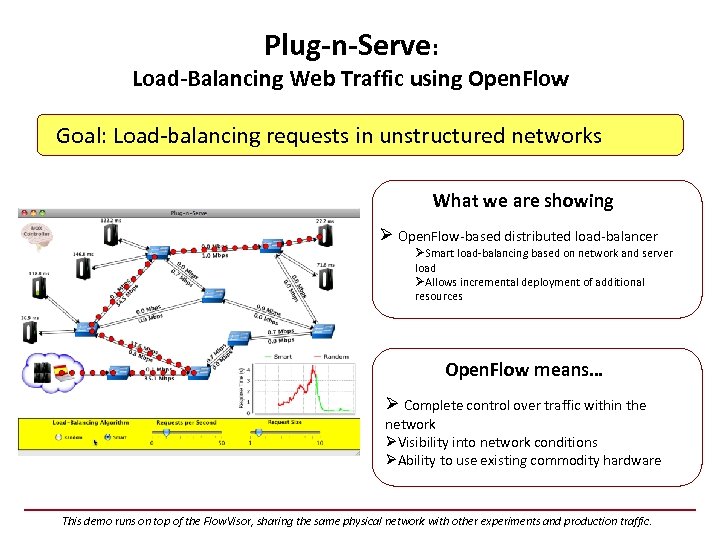

Plug-n-Serve: Load-Balancing Web Traffic using OpenFlow
Goal: Load-balancing requests in unstructured networks
What we are showing
• OpenFlow-based distributed load-balancer
• Smart load-balancing based on network and server load
• Allows incremental deployment of additional resources
OpenFlow means…
• Complete control over traffic within the network
• Visibility into network conditions
• Ability to use existing commodity hardware
This demo runs on top of the FlowVisor, sharing the same physical network with other experiments and production traffic.
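
"Load-balancing based on network and server load" can be illustrated with a small selection function. The slide does not give the demo's actual algorithm, so this is only a sketch under assumptions: the weighted-sum cost, the `weight` parameter, and the function name `pick_server` are hypothetical.

```python
def pick_server(server_load, path_load, weight=0.5):
    """Pick the server with the lowest combined server and network load.

    server_load: {server: utilization between 0 and 1}
    path_load:   {server: utilization of the busiest link on the path to it}
    weight:      share of the cost attributed to server load (assumed knob)
    """
    if not server_load:
        raise ValueError("no servers available")

    def cost(server):
        return (weight * server_load[server]
                + (1 - weight) * path_load.get(server, 0.0))

    # Sorting first makes tie-breaking deterministic (alphabetical order).
    return min(sorted(server_load), key=cost)
```

A controller could call a function like this for each new request and then install flow entries along the chosen path. Adding a server is just one more dictionary entry, which mirrors the incremental-deployment point above.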

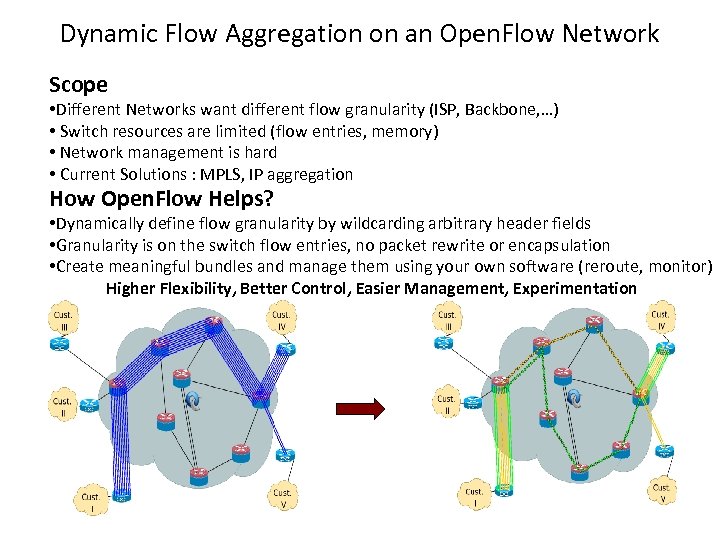

Dynamic Flow Aggregation on an OpenFlow Network
Scope
• Different networks want different flow granularity (ISP, backbone, …)
• Switch resources are limited (flow entries, memory)
• Network management is hard
• Current solutions: MPLS, IP aggregation
How does OpenFlow help?
• Dynamically define flow granularity by wildcarding arbitrary header fields
• Granularity is on the switch flow entries, no packet rewrite or encapsulation
• Create meaningful bundles and manage them using your own software (reroute, monitor)
Higher Flexibility, Better Control, Easier Management, Experimentation
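
The effect of wildcarding header fields can be sketched as follows. This is a simplified model rather than the demo's code: the field list, the `"*"` wildcard marker, and the function names are assumptions chosen for illustration, and real OpenFlow matches carry more fields.

```python
FIELDS = ("in_port", "eth_src", "eth_dst", "ip_src", "ip_dst", "tcp_src", "tcp_dst")
WILDCARD = "*"


def aggregate(flows, keep):
    """Collapse exact-match flows into entries that match only on `keep`.

    Every field outside `keep` is wildcarded, so flows that differ only in
    those fields end up sharing a single flow-table entry (a "bundle").
    """
    bundles = {}
    for flow in flows:
        entry = tuple(flow[f] if f in keep else WILDCARD for f in FIELDS)
        bundles.setdefault(entry, []).append(flow)
    return bundles


def entry_matches(entry, packet):
    """True if the packet matches the (possibly wildcarded) entry."""
    return all(v == WILDCARD or packet[f] == v for f, v in zip(FIELDS, entry))
```

Keeping only `ip_dst` turns many per-connection flows toward the same host into one entry, which saves flow-table space. The packets themselves are untouched: only the match in the switch changes, with no rewrite or encapsulation.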

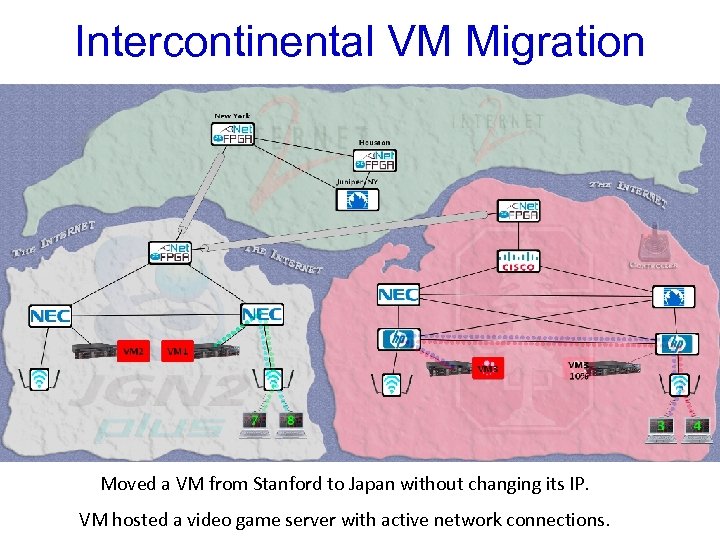

Intercontinental VM Migration
Moved a VM from Stanford to Japan without changing its IP. The VM hosted a video game server with active network connections.

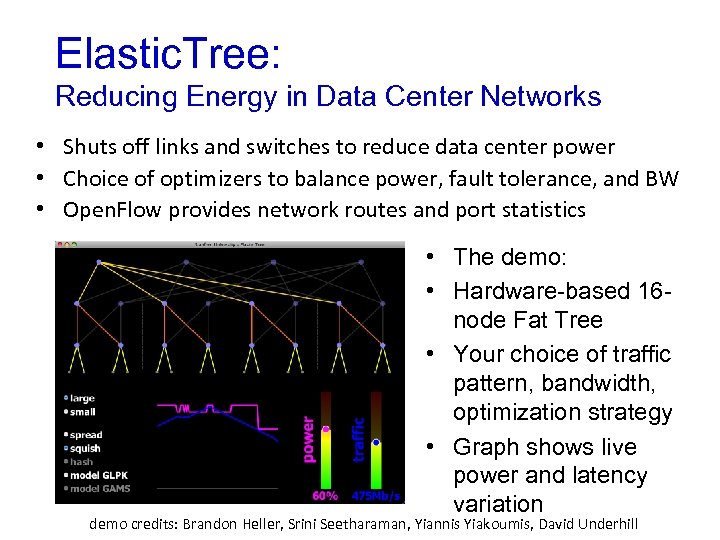

ElasticTree: Reducing Energy in Data Center Networks
• Shuts off links and switches to reduce data center power
• Choice of optimizers to balance power, fault tolerance, and bandwidth
• OpenFlow provides network routes and port statistics
• The demo:
  – Hardware-based 16-node Fat Tree
  – Your choice of traffic pattern, bandwidth, optimization strategy
  – Graph shows live power and latency variation
Demo credits: Brandon Heller, Srini Seetharaman, Yiannis Yiakoumis, David Underhill
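
The trade-off between power and fault tolerance can be shown with a deliberately tiny model: a bundle of identical parallel links between two switches. This is not one of ElasticTree's optimizers; the function names, the `spare_links` knob, and the rule of always keeping one link up are assumptions made for illustration.

```python
import math


def links_needed(demand_gbps, link_capacity_gbps, spare_links=0):
    """Links required to carry the demand, plus spares for fault tolerance."""
    if link_capacity_gbps <= 0:
        raise ValueError("link capacity must be positive")
    return math.ceil(demand_gbps / link_capacity_gbps) + spare_links


def plan(total_links, demand_gbps, link_capacity_gbps, spare_links=0):
    """Return (links_on, links_off) for a bundle of identical parallel links.

    At least one link stays on so the switches remain connected, and the
    plan never asks for more links than physically exist.
    """
    wanted = links_needed(demand_gbps, link_capacity_gbps, spare_links)
    links_on = min(total_links, max(1, wanted))
    return links_on, total_links - links_on
```

With four 1 Gb/s links and 1.5 Gb/s of demand, two links stay on and two can be powered down. Asking for one spare link keeps a third link up, trading some power savings for resilience. In the demo, the traffic figures that drive such a decision come from the port statistics OpenFlow exposes.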
