
- Number of slides: 2

An Application of Reinforcement Learning to Autonomous Helicopter Flight
Pieter Abbeel, Adam Coates, Morgan Quigley and Andrew Y. Ng
Stanford

Overview
- Challenges in reinforcement learning for complex physical systems such as helicopters:
  - Data collection: aggressive exploration is dangerous.
  - It is difficult to specify the proper reward function for a given task.
- We present apprenticeship learning algorithms, which use an expert demonstration and which:
  - do not require explicit exploration;
  - do not require an explicit reward function specification.
- Experimental results:
  - Demonstrate the effectiveness of the algorithms on a highly challenging control problem.
  - Significantly extend the state of the art in autonomous helicopter flight. In particular, first completion of autonomous stationary forward flips, stationary sideways rolls, nose-in funnels and tail-in funnels.

Reinforcement Learning and Apprenticeship Learning
[Diagram: Reward Function $R$ and Dynamics Model $P_{sa}$ feed into Reinforcement Learning, which outputs the control policy $\pi$. Annotation on the reward function: complex tasks, hard to specify the reward function. Annotation on the dynamics model: data collection, aggressive exploration is dangerous.]

Apprenticeship Learning for the Dynamics Model
- Key question: How to fly the helicopter for data collection? How to ensure that the entire flight envelope is covered by the data collection process?
- State of the art: the $E^3$ algorithm, Kearns and Singh (2002), and its variants/extensions (Kearns and Koller, 1999; Kakade, Kearns and Langford, 2003; Brafman and Tennenholtz, 2002).
  [Diagram: Have good model of dynamics? NO: "Explore". YES: "Exploit".]
- Can we avoid explicit exploration?
  [Diagram: expert human pilot flight $(a_1, s_1, a_2, s_2, a_3, s_3, \dots)$ is used to learn $P_{sa}$; the Dynamics Model $P_{sa}$ and the Reward Function $R$ feed into Reinforcement Learning, which outputs the control policy $\pi$; autonomous flight under $\pi$ yields $(a_1, s_1, a_2, s_2, a_3, s_3, \dots)$, from which $P_{sa}$ is learned again.]
- Theorem. Assuming we have a polynomial number of teacher demonstrations, the apprenticeship learning algorithm will return a policy that performs as well as the teacher within a polynomial number of iterations. [See Abbeel & Ng, 2005 for more details.]
- Take-away message: In the apprenticeship learning setting, i.e., when we have an expert demonstration, we do not need explicit exploration to perform as well as the expert.

Apprenticeship Learning for the Reward Function
- The reward function can be very difficult to specify. E.g., for our helicopter control problem we have:
  $R(s) = c_1 (\text{position error})^2 + c_2 (\text{orientation error})^2 + c_3 (\text{velocity error})^2 + c_4 (\text{angular rate error})^2 + \dots + c_{25} (\text{inputs})^2$.
- Can we avoid the need to specify the reward function?
- Our approach [Abbeel & Ng, 2004] is based on inverse reinforcement learning [Ng & Russell, 2000].
  - Returns a policy with performance as good as the expert's, as measured according to the expert's unknown reward function, in a polynomial number of iterations.
- Inverse RL algorithm: for $t = 1, 2, \dots$
  - Inverse RL step: Estimate the expert's reward function $R(s) = w^T \phi(s)$ such that under $R(s)$ the expert performs better than all previously found policies $\{\pi_i\}$.
  - RL step: Compute the optimal policy $\pi_t$ for the estimated reward $w$.
- Related work:
  - Imitation learning: learn to predict the expert's actions as a function of states. Usually lacks strong performance guarantees. [E.g., Pomerleau, 1989; Sammut et al., 1992; Kuniyoshi et al., 1994; Demiris & Hayes, 1994; Amit & Mataric, 2002; Atkeson & Schaal, 1997; …]
  - Max margin planning, Ratliff et al., 2006.
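
The alternation between the inverse RL step and the RL step can be sketched on a toy problem. The example below is only an illustration under stated assumptions, not the authors' implementation: the five-state line world, the one-hot features `phi`, the discount `GAMMA`, the horizon and all function names are hypothetical, and a simple projection-style update is swapped in for the reward estimate, in place of a search for weights under which the expert beats every earlier policy by a margin.

```python
import math

# Hypothetical toy problem: 5 states on a line, actions move left (-1) or right (+1).
N_STATES, ACTIONS, GAMMA, HORIZON = 5, (-1, +1), 0.9, 50

def step(s, a):
    """Deterministic toy dynamics, clipped at both ends of the line."""
    return min(max(s + a, 0), N_STATES - 1)

def phi(s):
    """One-hot state features, so R(s) = w . phi(s) picks out w[s]."""
    return [1.0 if i == s else 0.0 for i in range(N_STATES)]

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def sub(x, y):
    return [a - b for a, b in zip(x, y)]

def feature_expectations(policy, start=0):
    """Discounted feature counts mu(pi) = sum_t gamma^t phi(s_t)."""
    mu, s = [0.0] * N_STATES, start
    for t in range(HORIZON):
        mu = [m + GAMMA ** t * f for m, f in zip(mu, phi(s))]
        s = step(s, policy[s])
    return mu

def optimal_policy(w, sweeps=200):
    """RL step: value iteration for the reward R(s) = w . phi(s)."""
    v = [0.0] * N_STATES
    for _ in range(sweeps):
        v = [dot(w, phi(s)) + GAMMA * max(v[step(s, a)] for a in ACTIONS)
             for s in range(N_STATES)]
    return [max(ACTIONS, key=lambda a: v[step(s, a)]) for s in range(N_STATES)]

def apprenticeship_learning(mu_expert, eps=1e-6, max_iters=20):
    policy = [-1] * N_STATES                 # arbitrary initial policy
    mu_bar = feature_expectations(policy)    # running estimate to be matched to the expert
    for _ in range(max_iters):
        w = sub(mu_expert, mu_bar)           # inverse RL step (projection-style update)
        if math.sqrt(dot(w, w)) <= eps:      # expert's feature expectations are matched
            break
        policy = optimal_policy(w)           # RL step for the estimated reward
        d = sub(feature_expectations(policy), mu_bar)
        dd = dot(d, d)
        if dd == 0.0:                        # no progress possible, stop
            break
        alpha = dot(d, w) / dd               # project mu_expert onto the line mu_bar + alpha * d
        mu_bar = [m + alpha * x for m, x in zip(mu_bar, d)]
    return policy

# The "expert" always moves right; only its feature expectations are shown to the learner.
expert_policy = [+1] * N_STATES
mu_expert = feature_expectations(expert_policy)
learned_policy = apprenticeship_learning(mu_expert)
```

The learner never sees a reward function, only the expert's discounted feature counts. In general the procedure produces a set of policies from which one is selected or mixed; returning the last policy is a simplification that suffices for this toy case.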


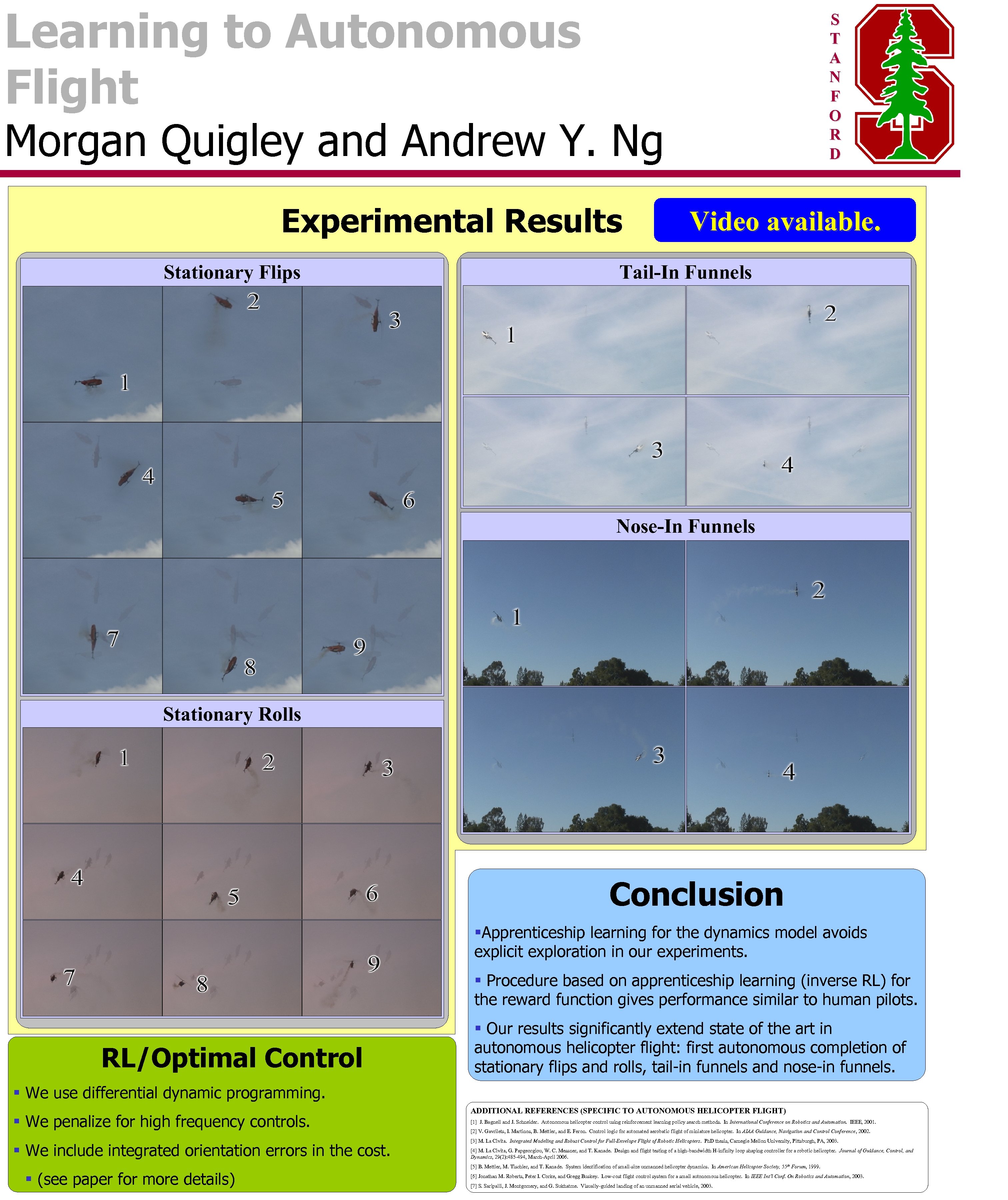

An Application of Reinforcement Learning to Autonomous Helicopter Flight (continued)
Pieter Abbeel, Adam Coates, Morgan Quigley and Andrew Y. Ng
Stanford

Experimental Results
- Stationary Flips
- Stationary Rolls
- Tail-In Funnels
- Nose-In Funnels
- Video available.

RL/Optimal Control
- We use differential dynamic programming.
- We penalize high-frequency controls.
- We include integrated orientation errors in the cost.
- (See paper for more details.)

Conclusion
- Apprenticeship learning for the dynamics model avoids explicit exploration in our experiments.
- The procedure based on apprenticeship learning (inverse RL) for the reward function gives performance similar to human pilots.
- Our results significantly extend the state of the art in autonomous helicopter flight: first autonomous completion of stationary flips and rolls, tail-in funnels and nose-in funnels.

Additional References (Specific to Autonomous Helicopter Flight)
[1] J. Bagnell and J. Schneider. Autonomous helicopter control using reinforcement learning policy search methods. In International Conference on Robotics and Automation. IEEE, 2001.
[2] V. Gavrilets, I. Martinos, B. Mettler, and E. Feron. Control logic for automated aerobatic flight of miniature helicopter. In AIAA Guidance, Navigation and Control Conference, 2002.
[3] M. La Civita. Integrated Modeling and Robust Control for Full-Envelope Flight of Robotic Helicopters. PhD thesis, Carnegie Mellon University, Pittsburgh, PA, 2003.
[4] M. La Civita, G. Papageorgiou, W. C. Messner, and T. Kanade. Design and flight testing of a high-bandwidth H-infinity loop shaping controller for a robotic helicopter. Journal of Guidance, Control, and Dynamics, 29(2):485-494, March-April 2006.
[5] B. Mettler, M. Tischler, and T. Kanade. System identification of small-size unmanned helicopter dynamics. In American Helicopter Society, 55th Forum, 1999.
[6] Jonathan M. Roberts, Peter I. Corke, and Gregg Buskey. Low-cost flight control system for a small autonomous helicopter. In IEEE Int'l Conf. on Robotics and Automation, 2003.
[7] S. Saripalli, J. Montgomery, and G. Sukhatme. Visually-guided landing of an unmanned aerial vehicle, 2003.
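
The two cost-shaping ideas listed under RL/Optimal Control (penalizing high-frequency controls and including integrated orientation errors) can be illustrated with a small one-dimensional cost. This is a minimal sketch under assumptions, not the poster's actual cost function and not differential dynamic programming itself: the function name, the weights `c_err`, `c_int`, `c_du`, the time step `dt`, and the choice of a squared control-change penalty are all hypothetical.

```python
def trajectory_cost(orient_err, controls, dt=0.1, c_err=1.0, c_int=0.5, c_du=2.0):
    """Quadratic cost over a trajectory (all weights are illustrative assumptions).

    orient_err: orientation error at each time step
    controls:   control input at each time step
    """
    cost, integrated, prev_u = 0.0, 0.0, controls[0]
    for e, u in zip(orient_err, controls):
        integrated += e * dt                  # running integral of the orientation error
        cost += c_err * e ** 2                # instantaneous tracking error
        cost += c_int * integrated ** 2       # penalizes a persistent (biased) error
        cost += c_du * (u - prev_u) ** 2      # penalizes rapid changes in the control
        prev_u = u
    return cost

# Same (zero) tracking error, but chattering controls are charged for every switch.
smooth = trajectory_cost([0.0, 0.0, 0.0], [1.0, 1.0, 1.0])
chatter = trajectory_cost([0.0, 0.0, 0.0], [1.0, -1.0, 1.0])

# A small constant error costs more once its integral is included.
biased = trajectory_cost([0.1] * 10, [0.0] * 10)
biased_no_integral = trajectory_cost([0.1] * 10, [0.0] * 10, c_int=0.0)
```

The control-change term discourages rapidly oscillating inputs even when tracking is perfect, and the integral term keeps growing while a constant offset persists, so an optimizer is pushed to remove steady-state errors.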
