- Number of slides: 27

Alpha (elephant) flow characterization
M. Veeraraghavan, University of Virginia (UVA), mvee@virginia.edu
Chris Tracy, ESnet, ctracy@es.net
Feb. 24, 2014
Thanks to the US DOE ASCR for grants DE-SC0002350 and DE-SC0007341 (UVA), and for DE-AC02-05CH11231 (ESnet)
Thanks to Brian Tierney, Chin Guok, Eric Pouyoul
UVA Students: Zhenzhen Yan, Tian Jin, Zhengyang Liu, Hanke (Casey) Meng, Ranjana Addanki, Haoyu Chen, and Sam Elliott

Outline
• HNTES (Hybrid Network Traffic Engineering System) relation to AFCS
• Alpha Flow Characterization System (AFCS)
– Algorithm
– Dataset analysis: May-Nov. 2011 (ESnet4)
– Applications
– Current analysis and GUI: 2013 (ESnet5)
• Feedback: of interest to sites?

HNTES vs. AFCS
• Goal of HNTES:
– identify IP addresses of completed flows and then set firewall filters in ingress routers for future alpha-flow redirection to circuits
– Requires analysis of only single NetFlow records
• Goal of AFCS: characterize the size, rate and duration of alpha flows
– Requires concatenation of multiple NetFlow records to characterize individual flows
– Not aggregation as done by commercial tools

AFCS
• AFCS work is newer: current focus
• Easier to operationalize than HNTES
– HNTES requires an additional step to redirect alpha flows to the AF class through firewall filter config.
– Needs new work for ALUs; previous QoS experiments were on Junipers
• Goal: Characterize alpha flows
– Determine size (bytes), duration, rate

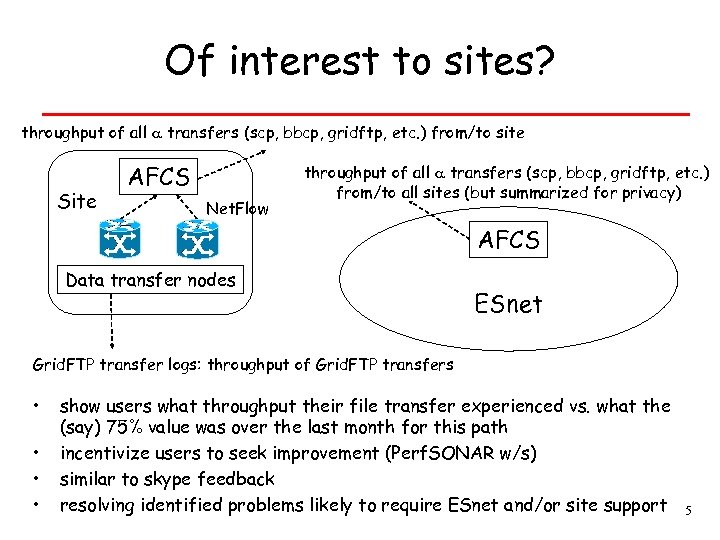

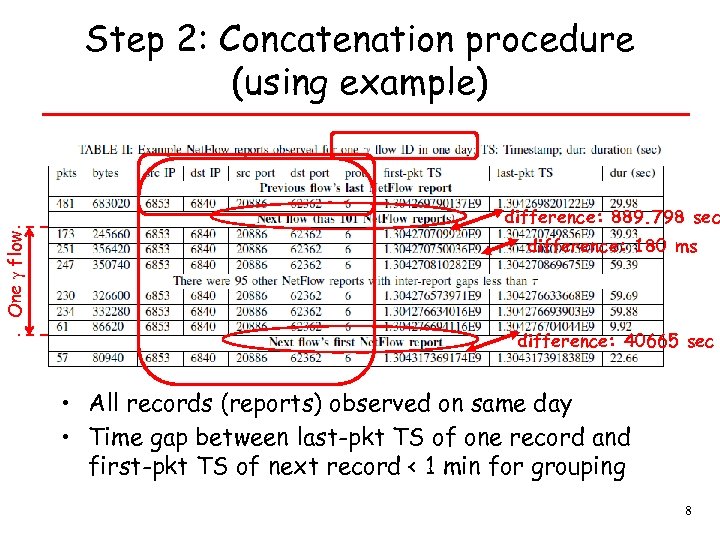

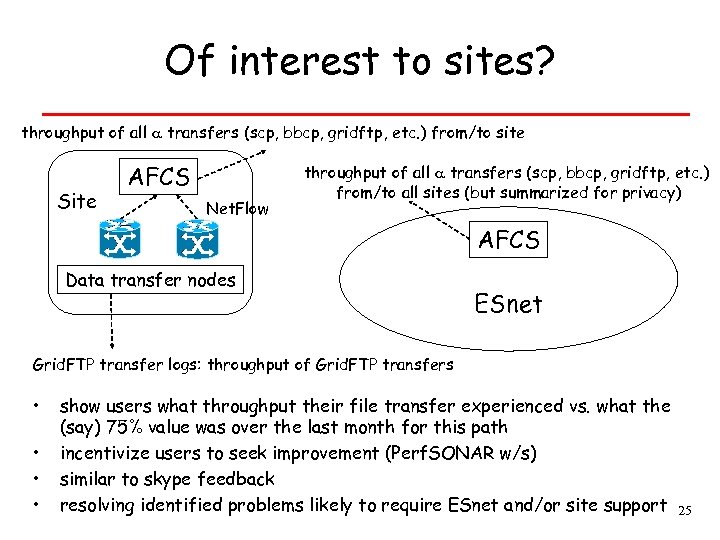

Of interest to sites?
[Diagram labels: Site, AFCS, NetFlow, AFCS, Data transfer nodes, ESnet]
– throughput of all transfers (scp, bbcp, gridftp, etc.) from/to site
– throughput of all transfers (scp, bbcp, gridftp, etc.) from/to all sites (but summarized for privacy)
– GridFTP transfer logs: throughput of GridFTP transfers
• show users what throughput their file transfer experienced vs. what the (say) 75th-percentile value was over the last month for this path
• incentivize users to seek improvement (perfSONAR w/s), similar to Skype feedback
• resolving identified problems likely to require ESnet and/or site support

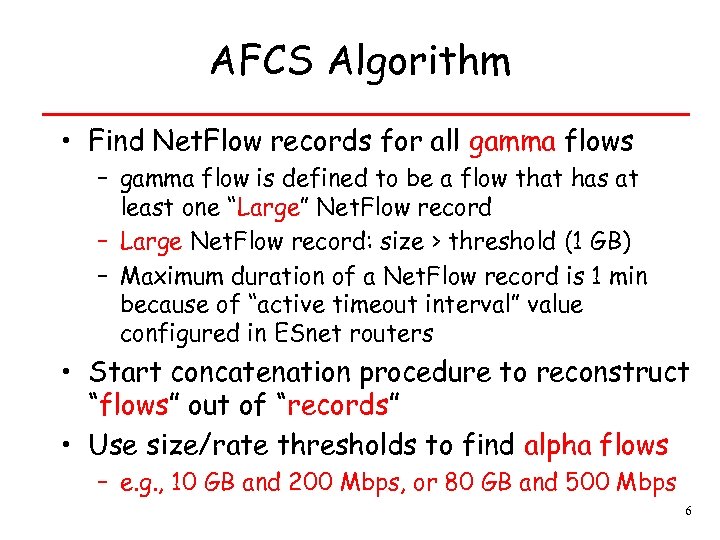

AFCS Algorithm
• Find NetFlow records for all gamma flows
– a gamma flow is defined to be a flow that has at least one “Large” NetFlow record
– Large NetFlow record: size > threshold (1 GB)
– Maximum duration of a NetFlow record is 1 min because of the “active timeout interval” value configured in ESnet routers
• Start concatenation procedure to reconstruct “flows” out of “records”
• Use size/rate thresholds to find alpha flows
– e.g., 10 GB and 200 Mbps, or 80 GB and 500 Mbps

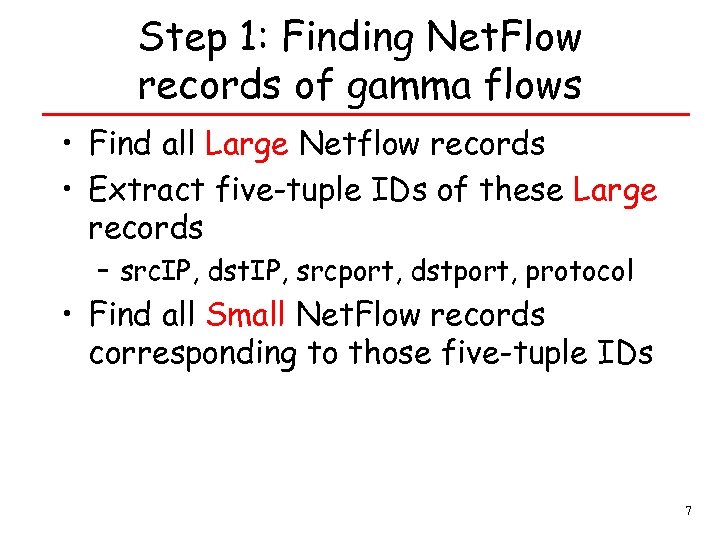

Step 1: Finding NetFlow records of gamma flows
• Find all Large NetFlow records
• Extract five-tuple IDs of these Large records
– srcIP, dstIP, srcport, dstport, protocol
• Find all Small NetFlow records corresponding to those five-tuple IDs
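Step 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Record` layout and the function names are hypothetical (not the AFCS code), and `size_bytes` is assumed to be a record's byte count already scaled for packet sampling.

```python
from collections import defaultdict, namedtuple

# Hypothetical record layout (not the AFCS schema). size_bytes is assumed to be
# the record's byte count already scaled for packet sampling.
Record = namedtuple(
    "Record",
    "src_ip dst_ip src_port dst_port protocol first_ts last_ts size_bytes",
)

LARGE_THRESHOLD_BYTES = 1_000_000_000  # "Large" record: size > 1 GB (from the slide)


def five_tuple(record):
    """Flow ID: (src IP, dst IP, src port, dst port, protocol)."""
    return record[:5]


def gamma_flow_records(records, threshold=LARGE_THRESHOLD_BYTES):
    """Map each five-tuple that has at least one Large record to all its records."""
    large_ids = {five_tuple(r) for r in records if r.size_bytes > threshold}
    selected = defaultdict(list)
    for r in records:
        if five_tuple(r) in large_ids:
            selected[five_tuple(r)].append(r)  # Large and Small records alike
    return dict(selected)
```

Collecting the Large five-tuples into a set first keeps the second pass over the records to one membership test per record.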

Step 2: Concatenation procedure (using example)
[Example figure: differences between consecutive records of 889.798 sec, 180 ms, and 40665 sec; label "One flow"]
• All records (reports) observed on same day
• Time gap between last-pkt TS of one record and first-pkt TS of next record < 1 min for grouping
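The grouping rule can be sketched as follows. This is a minimal illustration, not the AFCS implementation: records are assumed to be `(first_ts, last_ts, size)` tuples for a single five-tuple on a single day, and the function name is hypothetical. In the example data, the gaps reuse the three differences quoted on the slide, but the timestamps and the order of the gaps are made up.

```python
MAX_GAP_S = 60.0  # records closer than 1 min are grouped into one flow (from the slide)


def concatenate(records, max_gap_s=MAX_GAP_S):
    """Group (first_ts, last_ts, size) records of one five-tuple into flows.

    A record joins the current flow when its first-packet timestamp is less
    than max_gap_s after the last-packet timestamp of the preceding record.
    """
    flows = []
    for rec in sorted(records, key=lambda r: r[0]):
        if flows and rec[0] - flows[-1][-1][1] < max_gap_s:
            flows[-1].append(rec)
        else:
            flows.append([rec])
    return flows


# Illustrative records: gaps of 889.798 s, 0.180 s, and 40665 s between them.
example = [
    (0.0, 50.0, 1),
    (939.798, 990.0, 1),
    (990.18, 1040.0, 1),
    (41705.0, 41750.0, 1),
]
groups = concatenate(example)  # only the two records 180 ms apart are merged
```

Comparing against the preceding record's last-packet timestamp is the simplest reading of the rule; overlapping records produce a negative gap and are therefore merged as well.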

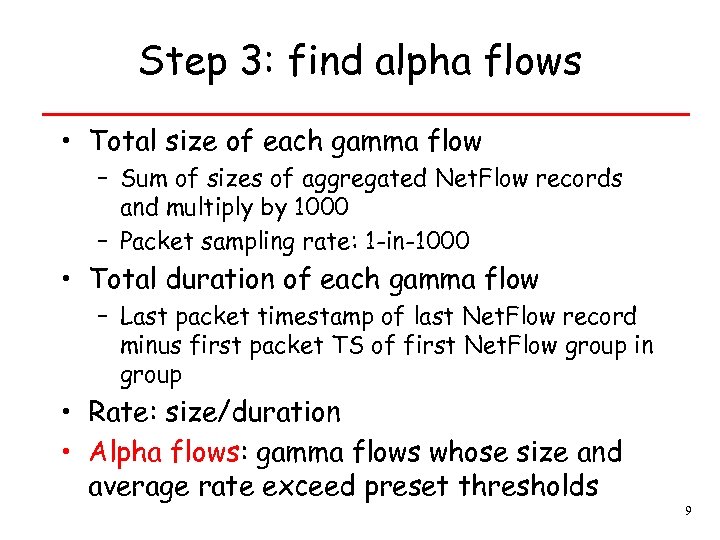

Step 3: Find alpha flows
• Total size of each gamma flow
– Sum of the sizes of the grouped NetFlow records, multiplied by 1000
– Packet sampling rate: 1-in-1000
• Total duration of each gamma flow
– Last-packet timestamp of the last NetFlow record minus first-packet timestamp of the first NetFlow record in the group
• Rate: size/duration
• Alpha flows: gamma flows whose size and average rate exceed preset thresholds
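A worked sketch of the size, duration, and rate computation, assuming each record is a `(first_ts, last_ts, sampled_bytes)` tuple with timestamps in seconds and the group is sorted by time. The function names and the default thresholds (10 GB and 200 Mbps, one of the example pairs given for the algorithm) are chosen for illustration only.

```python
SAMPLING_FACTOR = 1000  # 1-in-1000 packet sampling (from the slide)


def characterize(flow):
    """Return (size_bytes, duration_s, rate_bps) for one group of records.

    flow: time-sorted list of (first_ts, last_ts, sampled_bytes) tuples.
    """
    size_bytes = SAMPLING_FACTOR * sum(rec[2] for rec in flow)
    duration_s = flow[-1][1] - flow[0][0]
    if duration_s <= 0:
        raise ValueError("flow duration must be positive")
    return size_bytes, duration_s, 8 * size_bytes / duration_s


def is_alpha(flow, min_bytes=10e9, min_bps=200e6):
    """True when both size and average rate exceed the thresholds."""
    size_bytes, _, rate_bps = characterize(flow)
    return size_bytes > min_bytes and rate_bps > min_bps


# 15 MB of sampled bytes over 120 s: about 15 GB at 1 Gbps after scaling.
fast = [(0.0, 60.0, 8_000_000), (60.1, 120.0, 7_000_000)]
# 12 MB of sampled bytes over 600 s: about 12 GB at 160 Mbps after scaling.
slow = [(0.0, 60.0, 6_000_000), (60.1, 600.0, 6_000_000)]
```

With the defaults, `fast` qualifies as an alpha flow and `slow` does not; lowering `min_bps` to 100 Mbps admits both.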

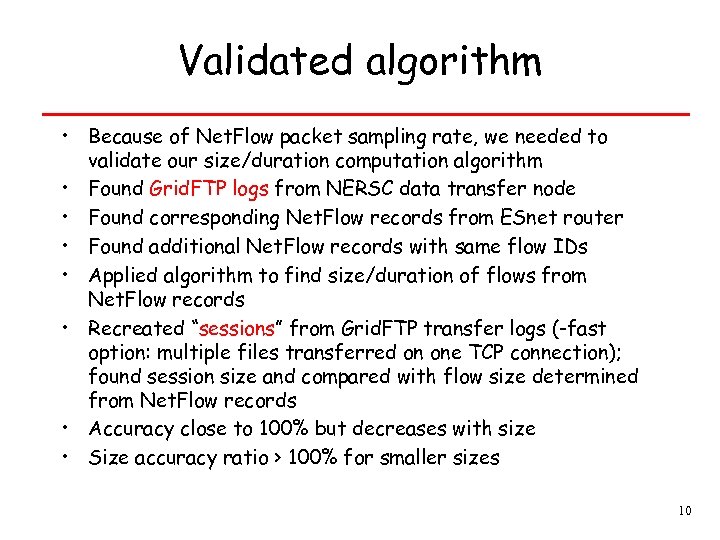

Validated algorithm
• Because of the NetFlow packet sampling rate, we needed to validate our size/duration computation algorithm
• Found GridFTP logs from NERSC data transfer node
• Found corresponding NetFlow records from ESnet router
• Found additional NetFlow records with same flow IDs
• Applied algorithm to find size/duration of flows from NetFlow records
• Recreated “sessions” from GridFTP transfer logs (-fast option: multiple files transferred on one TCP connection); found session size and compared with flow size determined from NetFlow records
• Accuracy close to 100% but decreases with size
• Size accuracy ratio > 100% for smaller sizes

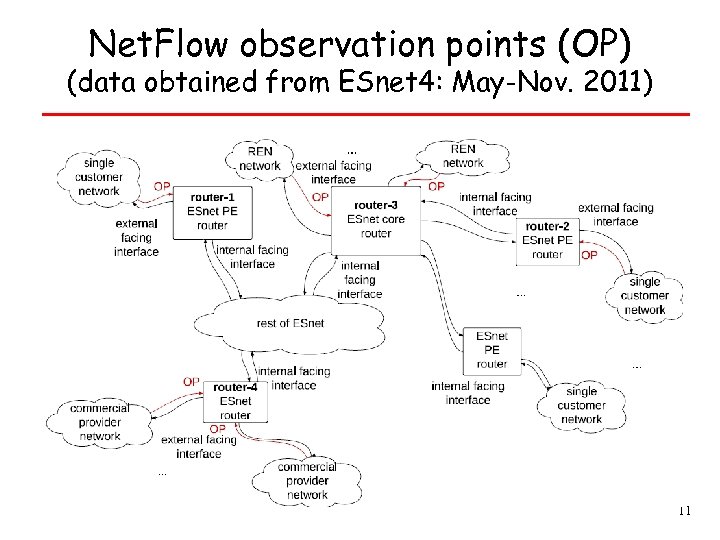

NetFlow observation points (OP) (data obtained from ESnet4: May-Nov. 2011)

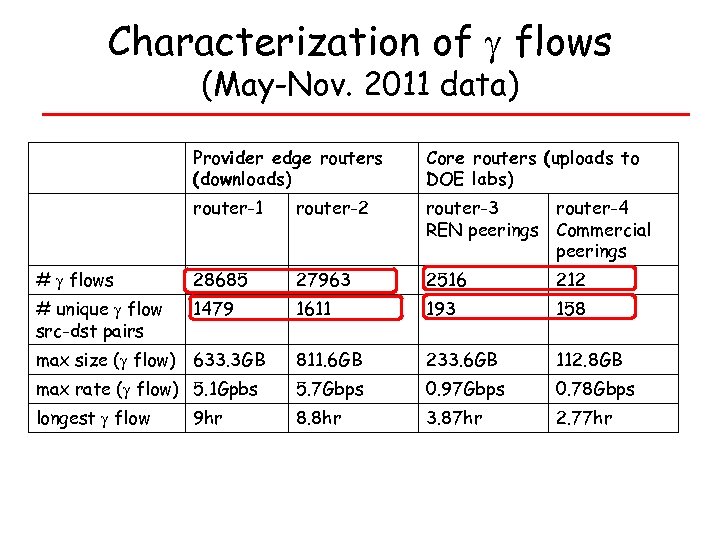

Characterization of flows (May-Nov. 2011 data)
Provider edge routers (downloads): router-1, router-2. Core routers (uploads to DOE labs): router-3 (REN peerings), router-4 (commercial peerings).

| | router-1 | router-2 | router-3 | router-4 |
|---|---|---|---|---|
| # flows | 28685 | 27963 | 2516 | 212 |
| # unique flow src-dst pairs | 1479 | 1611 | 193 | 158 |
| max size (gamma flow) | 633.3 GB | 811.6 GB | 233.6 GB | 112.8 GB |
| max rate (gamma flow) | 5.1 Gbps | 5.7 Gbps | 0.97 Gbps | 0.78 Gbps |
| longest flow | 9 hr | 8.8 hr | 3.87 hr | 2.77 hr |

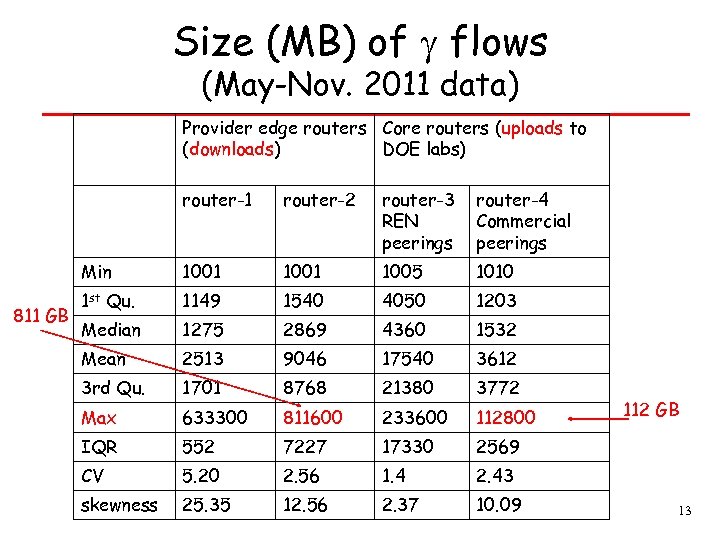

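Size (MB) of flows (May-Nov. 2011 data)
Provider edge routers (downloads): router-1, router-2. Core routers (uploads to DOE labs): router-3 (REN peerings), router-4 (commercial peerings).

| | router-1 | router-2 | router-3 | router-4 |
|---|---|---|---|---|
| 1st Qu. | 1149 | 1540 | 4050 | 1203 |
| Median | 1275 | 2869 | 4360 | 1532 |
| Mean | 2513 | 9046 | 17540 | 3612 |
| 3rd Qu. | 1701 | 8768 | 21380 | 3772 |
| Max | 633300 | 811600 | 233600 | 112800 |
| IQR | 552 | 7227 | 17330 | 2569 |
| CV | 5.20 | 2.56 | 1.4 | 2.43 |
| skewness | 25.35 | 12.56 | 2.37 | 10.09 |

Min: 1001, 1005, 1010 (only three of the four values survive; column assignment unknown)
Slide annotations: 811 GB (max, router-2); 112 GB (max, router-4)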
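The summary rows (quartiles, IQR, CV, skewness) can be reproduced with Python's standard library. The sketch below rests on assumptions the slides do not state: R-style (type 7) quartiles, which the "1st Qu." and "3rd Qu." labels suggest; CV as sample standard deviation over mean; and a moment-based skewness coefficient. The function name is hypothetical.

```python
import statistics


def summarize(values):
    """Summary statistics in the style of the table rows.

    Needs at least two values that are not all equal, and a non-zero mean.
    """
    # method="inclusive" matches the default quantile definition in R (type 7).
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    mean = statistics.fmean(values)
    n = len(values)
    # Second and third central moments for the skewness coefficient.
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return {
        "min": min(values),
        "q1": q1,
        "median": median,
        "mean": mean,
        "q3": q3,
        "max": max(values),
        "iqr": q3 - q1,
        "cv": statistics.stdev(values) / mean,
        "skewness": m3 / m2 ** 1.5,
    }


# One very large value pulls the mean far above the median (right skew).
stats = summarize([1, 2, 3, 4, 100])
```

As a consistency check on the table itself, each IQR entry equals the difference of the 3rd and 1st quartiles in its column up to rounding (for router-1, 1701 - 1149 = 552).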

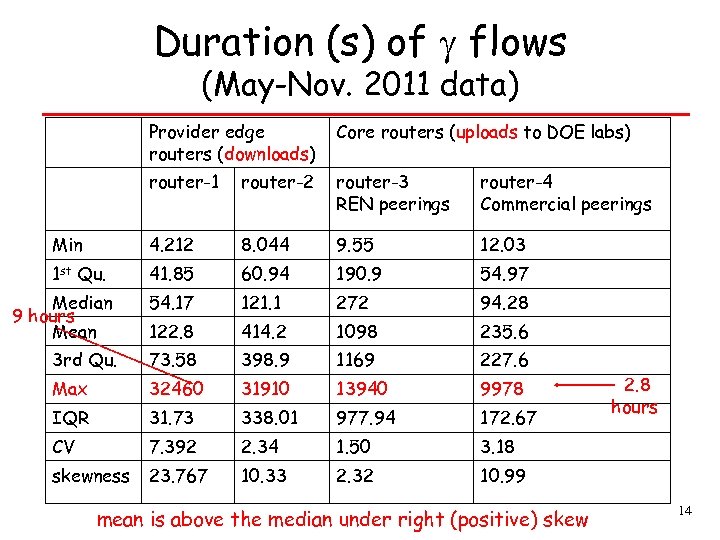

Duration (s) of flows (May-Nov. 2011 data)
Provider edge routers (downloads): router-1, router-2. Core routers (uploads to DOE labs): router-3 (REN peerings), router-4 (commercial peerings).

| | router-1 | router-2 | router-3 | router-4 |
|---|---|---|---|---|
| Min | 4.212 | 8.044 | 9.55 | 12.03 |
| 1st Qu. | 41.85 | 60.94 | 190.9 | 54.97 |
| Median | 54.17 | 121.1 | 272 | 94.28 |
| Mean | 122.8 | 414.2 | 1098 | 235.6 |
| 3rd Qu. | 73.58 | 398.9 | 1169 | 227.6 |
| Max | 32460 | 31910 | 13940 | 9978 |
| IQR | 31.73 | 338.01 | 977.94 | 172.67 |
| CV | 7.392 | 2.34 | 1.50 | 3.18 |
| skewness | 23.767 | 10.33 | 2.32 | 10.99 |

The mean is above the median under right (positive) skew.
Slide annotations: 9 hours (max, router-1); 2.8 hours (max, router-4)

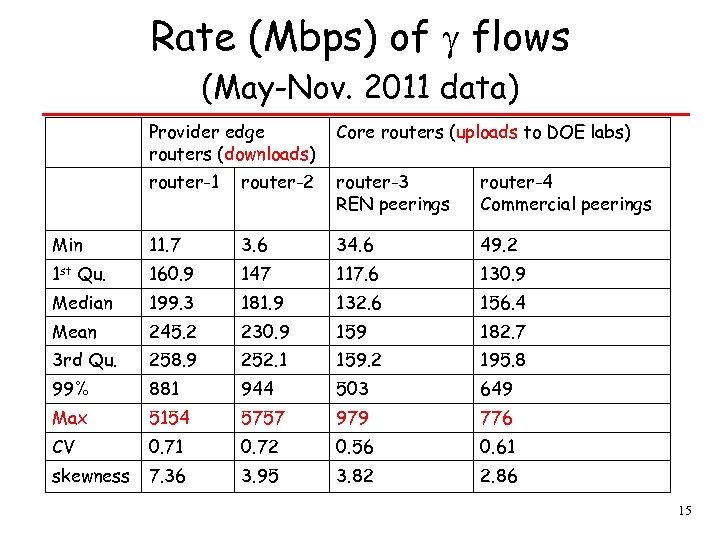

Rate (Mbps) of flows (May-Nov. 2011 data)
Provider edge routers (downloads): router-1, router-2. Core routers (uploads to DOE labs): router-3 (REN peerings), router-4 (commercial peerings).

| | router-1 | router-2 | router-3 | router-4 |
|---|---|---|---|---|
| Min | 11.7 | 3.6 | 34.6 | 49.2 |
| 1st Qu. | 160.9 | 147 | 117.6 | 130.9 |
| Median | 199.3 | 181.9 | 132.6 | 156.4 |
| Mean | 245.2 | 230.9 | 159 | 182.7 |
| 3rd Qu. | 258.9 | 252.1 | 159.2 | 195.8 |
| 99% | 881 | 944 | 503 | 649 |
| Max | 5154 | 5757 | 979 | 776 |
| CV | 0.71 | 0.72 | 0.56 | 0.61 |
| skewness | 7.36 | 3.95 | 3.82 | 2.86 |

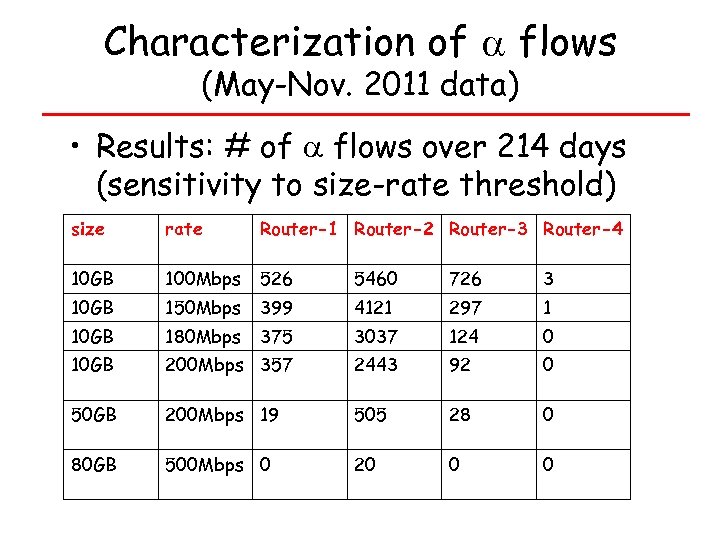

Characterization of flows (May-Nov. 2011 data)
• Results: # of flows over 214 days (sensitivity to size-rate threshold)

| size | rate | Router-1 | Router-2 | Router-3 | Router-4 |
|---|---|---|---|---|---|
| 10 GB | 100 Mbps | 526 | 5460 | 726 | 3 |
| 10 GB | 150 Mbps | 399 | 4121 | 297 | 1 |
| 10 GB | 180 Mbps | 375 | 3037 | 124 | 0 |
| 10 GB | 200 Mbps | 357 | 2443 | 92 | 0 |
| 50 GB | 200 Mbps | 19 | 505 | 28 | 0 |
| 80 GB | 500 Mbps | 0 | 20 | 0 | 0 |
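A threshold sweep of this kind is a simple counting exercise once each reconstructed flow has a size and an average rate. The sketch below assumes flows are given as `(size_gb, rate_mbps)` pairs and uses the six threshold pairs from the table; the function name and the sample flows are hypothetical and are not taken from the dataset.

```python
# The (size in GB, rate in Mbps) threshold pairs listed in the table.
SLIDE_THRESHOLDS = [(10, 100), (10, 150), (10, 180), (10, 200), (50, 200), (80, 500)]


def count_alpha_flows(flows, thresholds=SLIDE_THRESHOLDS):
    """Count flows whose size and rate both exceed each threshold pair.

    flows: iterable of (size_gb, rate_mbps) pairs.
    """
    flows = list(flows)  # allow several passes over a one-shot iterable
    return {
        (min_gb, min_mbps): sum(
            1 for gb, mbps in flows if gb > min_gb and mbps > min_mbps
        )
        for min_gb, min_mbps in thresholds
    }


# Made-up flows, purely to exercise the function.
sample = [(12, 120), (12, 190), (60, 250), (90, 600), (5, 900)]
counts = count_alpha_flows(sample)
```

Because both conditions must hold, tightening either threshold can only keep the count the same or lower it, which matches the non-increasing columns in the table.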

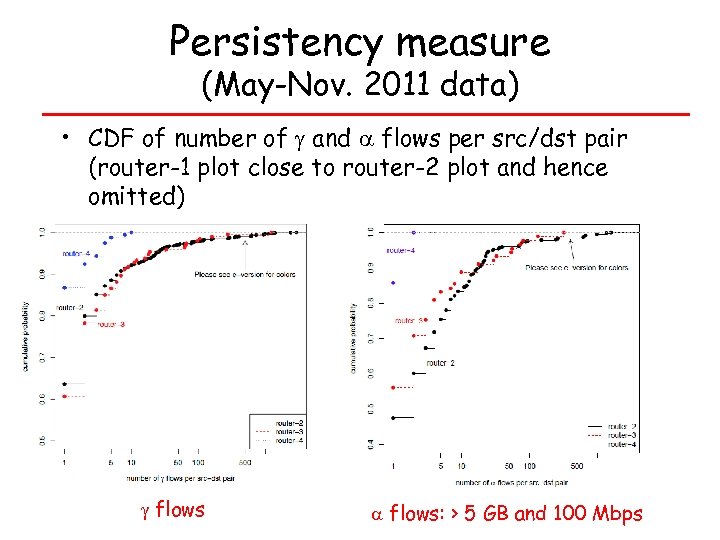

Persistency measure (May-Nov. 2011 data)
• CDF of number of gamma and alpha flows per src/dst pair (router-1 plot close to router-2 plot and hence omitted)
Alpha flows: > 5 GB and 100 Mbps

Discussion
• Largest-sized flow rate: 301 Mbps, fastest-flow size: 7.14 GB, and longest-flow size: 370 GB
• At the low end, one 1.9 GB flow lasted 4181 sec
• High skewness in size for downloads
• Larger-sized flows for downloads than uploads, and more frequent
• Max number of gamma and alpha flows per src-dst pair were 2913 and 1596 (for router-2)
• The amount of data analyzed is a small subset of our total dataset, both in time and in number of routers. Concatenating flows is a somewhat intensive task, so we tried to choose routers that would be representative.

Three applications of AFCS
• Diagnose performance problems
• Identify suboptimal paths
• Traffic engineering (HNTES)

Diagnose performance problems
• Find src-dst pairs that are experiencing high variance in throughput to initiate diagnostics and improve user experience
– In the 2913-flow set between the same src-dst pair, 75% of the flows experienced less than 161.2 Mbps while the highest rate experienced was 1.1 Gbps (size: 3.5 GB).
– In the 1596-flow set, 75% of the flows experienced less than 167 Mbps, while the highest rate experienced was 536 Mbps (size: 11 GB).
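The per-pair comparison of the 75th-percentile rate against the highest rate can be sketched as below. Everything here is an assumption made for illustration: flows are `(src, dst, rate_mbps)` tuples, the function name is hypothetical, and the quartile definition (Python's "inclusive" method) may differ from the one used to produce the numbers above.

```python
from collections import defaultdict
import statistics


def pair_rate_summary(flows):
    """Per src-dst pair: flow count, 75th-percentile rate, and highest rate.

    flows: iterable of (src, dst, rate_mbps) tuples.
    """
    by_pair = defaultdict(list)
    for src, dst, rate in flows:
        by_pair[(src, dst)].append(rate)

    summary = {}
    for pair, rates in by_pair.items():
        if len(rates) < 2:
            continue  # statistics.quantiles needs at least two data points
        p75 = statistics.quantiles(rates, n=4, method="inclusive")[2]
        summary[pair] = {"count": len(rates), "p75": p75, "max": max(rates)}
    return summary


# Made-up rates: most flows are slow, one is much faster.
sample = [
    ("site-a", "site-b", 100),
    ("site-a", "site-b", 120),
    ("site-a", "site-b", 140),
    ("site-a", "site-b", 160),
    ("site-a", "site-b", 1000),
    ("site-c", "site-b", 300),
]
result = pair_rate_summary(sample)
```

A pair whose highest rate is several times its 75th-percentile rate, like the made-up `site-a` to `site-b` pair here, is the kind of candidate for diagnostics that the bullets describe.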

Other applications
• Identify suboptimal paths
– science flows should typically enter ESnet via REN peerings, but some of the observed alpha flows at router-4 could have occurred because of BGP misconfigurations
– correlate AFCS findings with BGP data
• HNTES: traffic engineering alpha flows

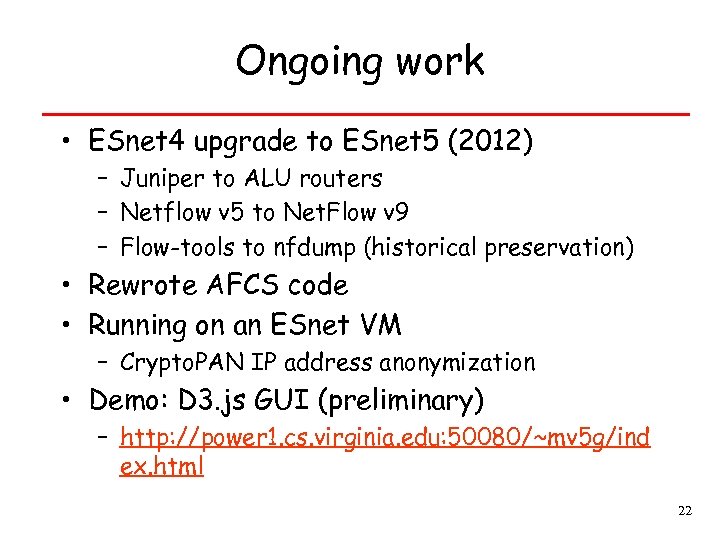

Ongoing work
• ESnet4 upgrade to ESnet5 (2012)
– Juniper to ALU routers
– NetFlow v5 to NetFlow v9
– Flow-tools to nfdump (historical preservation)
• Rewrote AFCS code
• Running on an ESnet VM
– CryptoPAN IP address anonymization
• Demo: D3.js GUI (preliminary)
– http://power1.cs.virginia.edu:50080/~mv5g/index.html

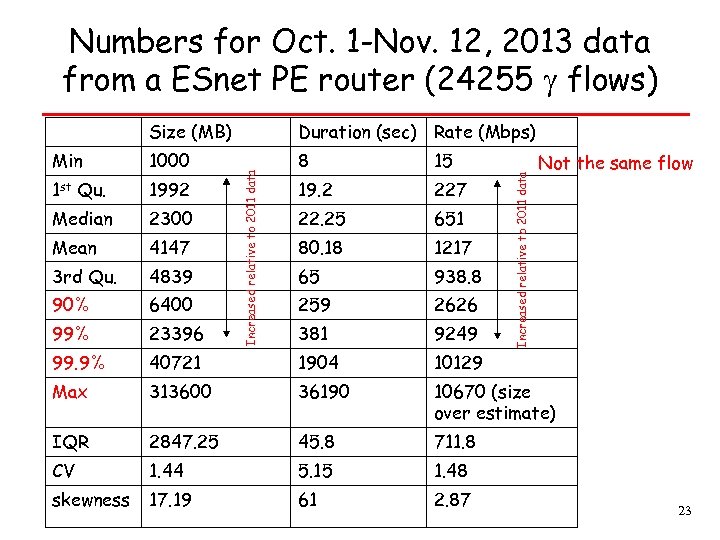

Numbers for Oct. 1-Nov. 12, 2013 data from an ESnet PE router (24255 flows)

| | Size (MB) | Duration (sec) | Rate (Mbps) |
|---|---|---|---|
| Min | 1000 | 8 | 15 |
| 1st Qu. | 1992 | 19.2 | 227 |
| Median | 2300 | 22.25 | 651 |
| Mean | 4147 | 80.18 | 1217 |
| 3rd Qu. | 4839 | 65 | 938.8 |
| 90% | 6400 | 259 | 2626 |
| 99% | 23396 | 381 | 9249 |
| 99.9% | 40721 | 1904 | 10129 |
| Max | 313600 | 36190 | 10670 (size over estimate) |
| IQR | 2847.25 | 45.8 | 711.8 |
| CV | 1.44 | 5.15 | 1.48 |
| skewness | 17.19 | 61 | 2.87 |

Slide annotations: "Increased relative to 2011 data" (shown twice); "Not the same flow"

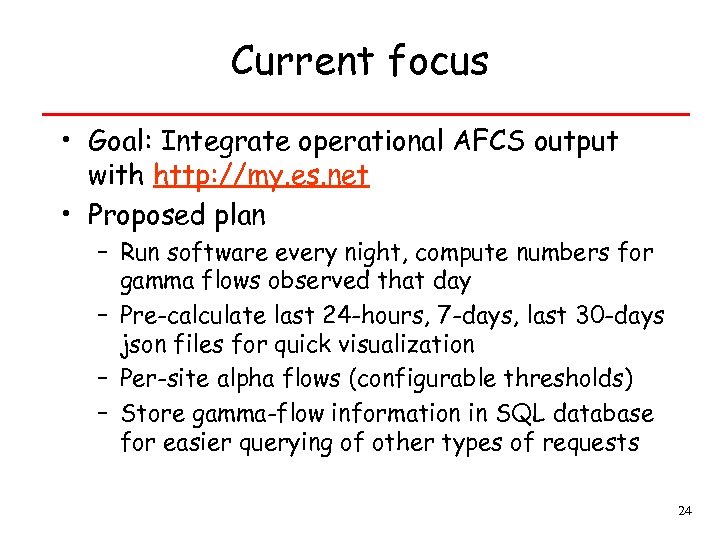

Current focus
• Goal: Integrate operational AFCS output with http://my.es.net
• Proposed plan
– Run software every night, compute numbers for gamma flows observed that day
– Pre-calculate last-24-hours, last-7-days, and last-30-days JSON files for quick visualization
– Per-site alpha flows (configurable thresholds)
– Store gamma-flow information in SQL database for easier querying of other types of requests
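The pre-calculation step in the plan could look roughly like the following. This is a sketch under assumptions, not the planned implementation: the flow dictionaries, the window names, and the summary fields are all hypothetical, and the JSON is returned as strings rather than written to files.

```python
import json
from datetime import datetime, timedelta, timezone

# Hypothetical window names; the spans come from the plan (24 hours, 7 days, 30 days).
WINDOWS = {
    "last_24h": timedelta(hours=24),
    "last_7d": timedelta(days=7),
    "last_30d": timedelta(days=30),
}


def window_summaries(flows, now):
    """Return {window_name: JSON string} summarizing flows that ended in each window.

    flows: list of dicts with 'end' (timezone-aware datetime), 'size_bytes',
    and 'rate_bps' keys (an assumed layout).
    """
    summaries = {}
    for name, span in WINDOWS.items():
        recent = [f for f in flows if now - span <= f["end"] <= now]
        summaries[name] = json.dumps({
            "count": len(recent),
            "max_size_bytes": max((f["size_bytes"] for f in recent), default=0),
            "max_rate_bps": max((f["rate_bps"] for f in recent), default=0),
        })
    return summaries


now = datetime(2013, 11, 12, tzinfo=timezone.utc)
flows = [
    {"end": now - timedelta(hours=1), "size_bytes": 2_000_000_000, "rate_bps": 3e8},
    {"end": now - timedelta(days=3), "size_bytes": 9_000_000_000, "rate_bps": 5e8},
    {"end": now - timedelta(days=20), "size_bytes": 4_000_000_000, "rate_bps": 9e8},
    {"end": now - timedelta(days=40), "size_bytes": 7_000_000_000, "rate_bps": 1e8},
]
summaries = window_summaries(flows, now)
```

Producing the three summaries in one nightly pass means the GUI only has to fetch a small static file per window instead of querying the flow store on each page load.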

Of interest to sites?
[Diagram labels: Site, AFCS, NetFlow, AFCS, Data transfer nodes, ESnet]
– throughput of all transfers (scp, bbcp, gridftp, etc.) from/to site
– throughput of all transfers (scp, bbcp, gridftp, etc.) from/to all sites (but summarized for privacy)
– GridFTP transfer logs: throughput of GridFTP transfers
• show users what throughput their file transfer experienced vs. what the (say) 75th-percentile value was over the last month for this path
• incentivize users to seek improvement (perfSONAR w/s), similar to Skype feedback
• resolving identified problems likely to require ESnet and/or site support

Feedback?
• What kinds of questions about flows would you like the GUI to answer?
– Largest or highest-rate flows?
– IP addresses of specific flows?
• Is deploying an AFCS at your site of interest?
• Or is an ESnet AFCS sufficient?

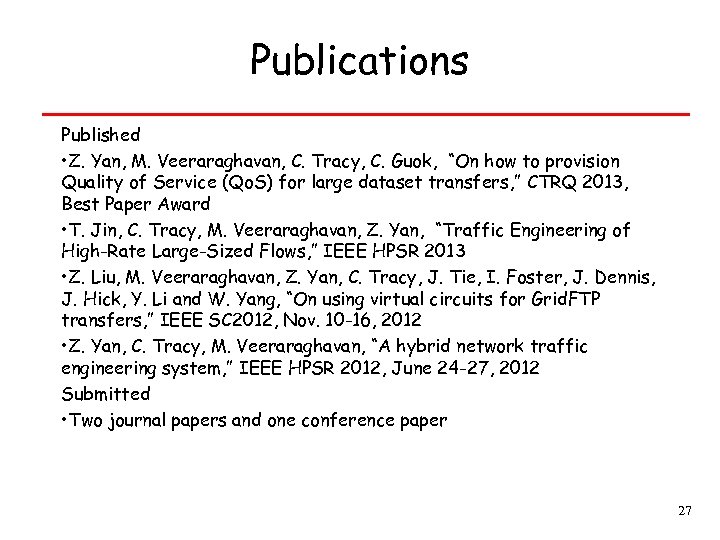

Publications
Published
• Z. Yan, M. Veeraraghavan, C. Tracy, C. Guok, “On how to provision Quality of Service (QoS) for large dataset transfers,” CTRQ 2013, Best Paper Award
• T. Jin, C. Tracy, M. Veeraraghavan, Z. Yan, “Traffic Engineering of High-Rate Large-Sized Flows,” IEEE HPSR 2013
• Z. Liu, M. Veeraraghavan, Z. Yan, C. Tracy, J. Tie, I. Foster, J. Dennis, J. Hick, Y. Li and W. Yang, “On using virtual circuits for GridFTP transfers,” IEEE SC 2012, Nov. 10-16, 2012
• Z. Yan, C. Tracy, M. Veeraraghavan, “A hybrid network traffic engineering system,” IEEE HPSR 2012, June 24-27, 2012
Submitted
• Two journal papers and one conference paper