- Number of slides: 10

ALMA Archive Operations Impact on the ARC Facilities

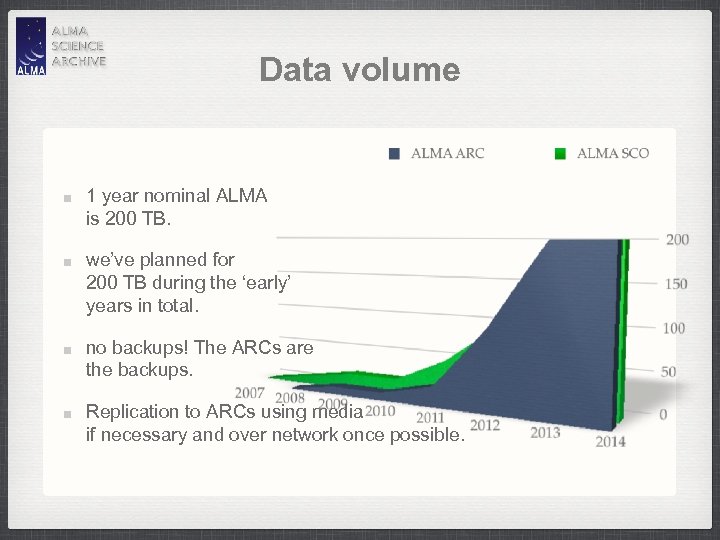

Data volume

- One year of nominal ALMA operations produces 200 TB.
- We have planned for 200 TB in total during the ‘early’ years.
- No backups! The ARCs are the backups.
- Replication to the ARCs uses physical media if necessary, and the network once possible.

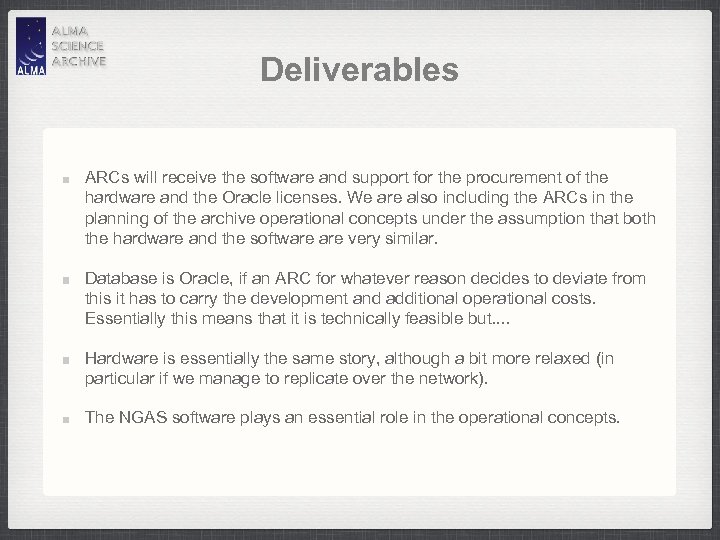

Deliverables

- ARCs will receive the software, and support for the procurement of the hardware and the Oracle licenses.
- We are also including the ARCs in the planning of the archive operational concepts, under the assumption that both the hardware and the software are very similar.
- The database is Oracle. If an ARC for whatever reason decides to deviate from this, it has to carry the development and additional operational costs. Essentially this means that it is technically feasible, but ...
- Hardware is essentially the same story, although a bit more relaxed (in particular if we manage to replicate over the network).
- The NGAS software plays an essential role in the operational concepts.

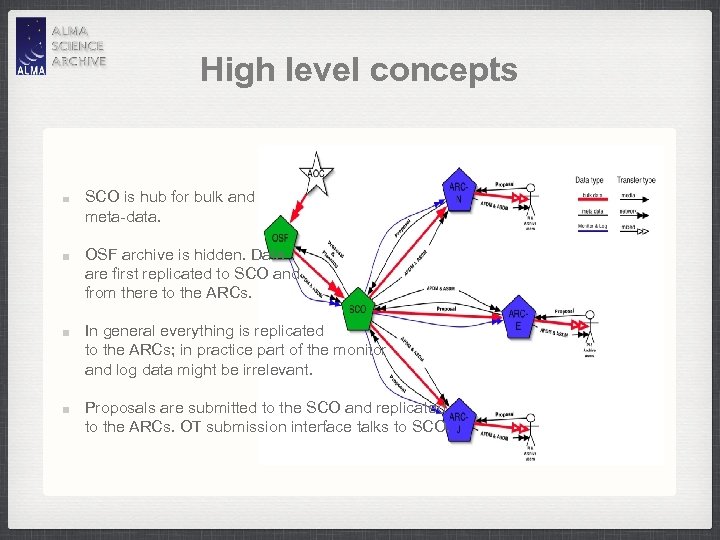

High-level concepts

- The SCO is the hub for bulk data and meta-data.
- The OSF archive is hidden.
- Data are first replicated to the SCO and from there to the ARCs.
- In general everything is replicated to the ARCs; in practice, part of the monitor and log data might be irrelevant.
- Proposals are submitted to the SCO and replicated to the ARCs.
- The OT submission interface talks to the SCO.
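
The hub layout described here can be pictured as a small directed graph. The following is only an illustrative sketch: the site names OSF, SCO and ARC come from the slide, but the ARC labels, the dictionary and the helper function are hypothetical and not part of any ALMA software.

```python
# Hypothetical replication graph. "ARC-1".."ARC-3" are placeholder labels,
# not names taken from the slides.
REPLICATION_TARGETS = {
    "OSF": ["SCO"],                      # the hidden OSF archive only feeds the SCO
    "SCO": ["ARC-1", "ARC-2", "ARC-3"],  # the SCO fans out to the ARCs
}


def replication_path(source, destination, targets=REPLICATION_TARGETS):
    """Return the chain of sites data passes through, or None if unreachable."""
    if source == destination:
        return [source]
    for next_site in targets.get(source, []):
        rest = replication_path(next_site, destination, targets)
        if rest is not None:
            return [source] + rest
    return None


print(replication_path("OSF", "ARC-2"))  # ['OSF', 'SCO', 'ARC-2']
```

In this picture no ARC ever pulls from the OSF directly, which is what "hidden" is taken to mean here.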

Nominal operations

- Database replication to the various sites will be done using Oracle Streams replication technology. This means that meta-data will be available at the ARCs within seconds.
- Bulk data replication will be done using the NGAS mirroring service (network) or the NGAS cloning service (hard disks).
- The NGAS archives at the ARCs are virtually independent of the SCO NGAS, i.e. they don’t share a DB or any other resources, but they ‘know’ of each other.
- Network transfer: during periods of average data rate, the bulk data should arrive at the ARCs within a few minutes as well (network bandwidth limitation).
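
To get a feeling for the "average data rate" the network would have to sustain, the 200 TB/year figure can be converted into a bit rate. This is a back-of-the-envelope sketch only: it assumes decimal terabytes and a perfectly even data flow over the year, neither of which is stated in the slides, and the function name is made up for illustration.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def average_rate_mbit_per_s(volume_tb_per_year):
    """Average sustained rate in Mbit/s for a given yearly volume in TB."""
    bytes_per_year = volume_tb_per_year * 1e12  # assumption: 1 TB = 10**12 bytes
    return bytes_per_year * 8 / SECONDS_PER_YEAR / 1e6


rate = average_rate_mbit_per_s(200)
print(f"{rate:.1f} Mbit/s per receiving site")
```

Under these assumptions the result is roughly 51 Mbit/s sustained towards each ARC; peak rates and protocol overhead would come on top of that.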

Nominal operations (continued)

- Media transfer: assuming that we send media twice a week and that they are delivered within one week, the maximum delay would be 1.5 weeks after the observation. This has to be defined and implemented.
- Important data could still be replicated through the network.
- Access to data is always transparently possible, i.e. a user accessing an ARC can request data even if they have not yet been replicated.
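
The 1.5-week figure follows from adding the longest wait for the next shipment to the delivery time. A minimal sketch of that arithmetic, assuming evenly spaced shipments (the function name is hypothetical):

```python
def max_media_delay_weeks(shipments_per_week, delivery_weeks):
    """Worst case: data is taken just after a shipment leaves, then must be delivered."""
    wait_for_next_shipment = 1 / shipments_per_week  # assumes evenly spaced shipments
    return wait_for_next_shipment + delivery_weeks


print(max_media_delay_weeks(shipments_per_week=2, delivery_weeks=1))  # 1.5
```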

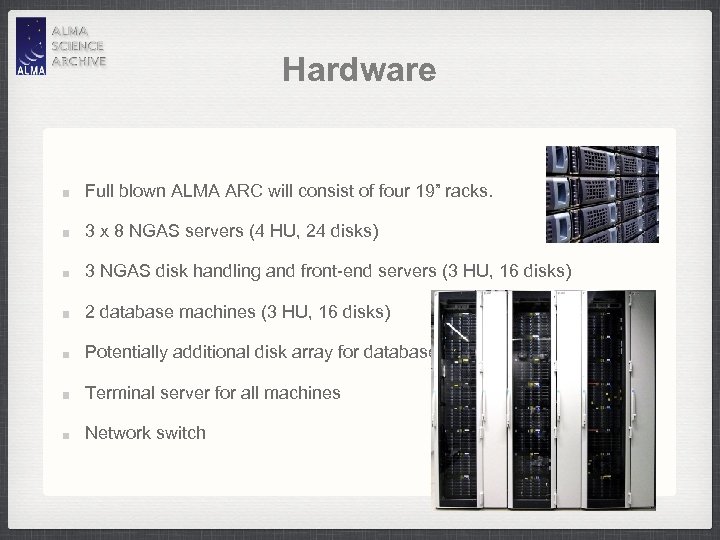

Hardware

A full-blown ALMA ARC will consist of four 19” racks:

- 3 x 8 NGAS servers (4 HU, 24 disks)
- 3 NGAS disk handling and front-end servers (3 HU, 16 disks)
- 2 database machines (3 HU, 16 disks)
- Potentially an additional disk array for the database
- Terminal server for all machines
- Network switch

Hardware (continued)

- This equipment requires sufficient cooling and good racks.
- One of the 4 HU NGAS servers weighs more than 100 kg, i.e. one of the three NGAS server racks will be approximately 1 ton.
- Since one rack with current disk capacity holds about 1 year of ALMA data, it should be possible to keep the total space required by the full ALMA archive stable by replacing disks with new double-capacity disks after 2-3 years (no increase of data rate).
- This not only saves space, but also power and maintenance.
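
The rack figures can be cross-checked with a few lines of arithmetic. This sketch assumes 8 NGAS servers per rack (reading "3 x 8" as three racks of eight), 1 TB disks as the "current" capacity, and 100 kg per server as a lower bound; all names are made up for illustration.

```python
SERVERS_PER_RACK = 8      # assumption: "3 x 8 NGAS servers" means 8 per rack
DISKS_PER_SERVER = 24
SERVER_WEIGHT_KG = 100    # lower bound quoted for a 4 HU NGAS server
YEARLY_VOLUME_TB = 200    # nominal ALMA data volume per year


def rack_capacity_tb(disk_tb):
    """Raw capacity of one NGAS rack for a given disk size in TB."""
    return SERVERS_PER_RACK * DISKS_PER_SERVER * disk_tb


def rack_server_weight_kg():
    """Weight of the servers alone, excluding the rack itself."""
    return SERVERS_PER_RACK * SERVER_WEIGHT_KG


for disk_tb in (1, 2):    # current disks, then double-capacity disks
    capacity = rack_capacity_tb(disk_tb)
    print(f"{disk_tb} TB disks: {capacity} TB per rack, "
          f"about {capacity / YEARLY_VOLUME_TB:.2f} years of data")
print(f"servers per rack weigh at least {rack_server_weight_kg()} kg")
```

With 1 TB disks a rack holds 192 TB, close to one year of data; doubling the disk size doubles the years per rack without adding racks, which is the space-saving argument made above.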

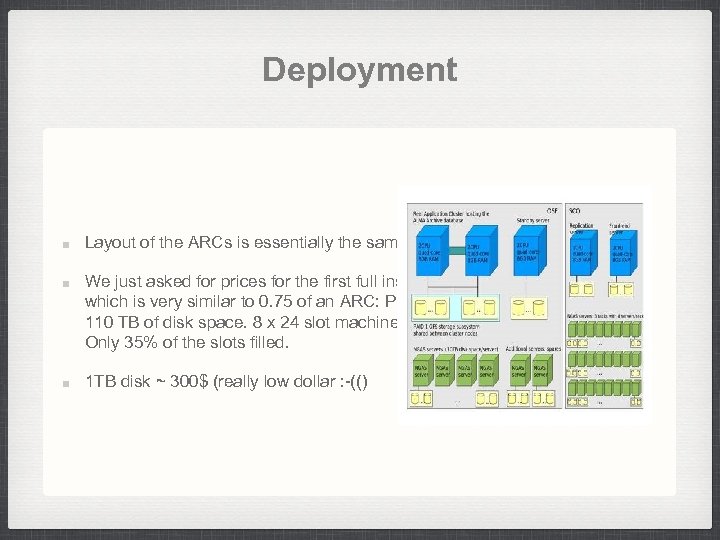

Deployment

- The layout of the ARCs is essentially the same as for the SCO.
- We just asked for prices for the first full installation of hardware at the OSF, which is very similar to 0.75 of an ARC.
- The price is about 135,000 $ including 110 TB of disk space: 8 x 24-slot machines plus 14 x 16-slot machines, with only 35% of the slots filled.
- 1 TB disk ~ 300 $ (really low dollar :-( )
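
The slot counts and the implied price per slot can be worked out from the quoted numbers. This is a sketch under one loud assumption: that the 110 TB correspond to 110 disks of 1 TB at 300 $ each, which the slide does not state explicitly. Variable names are illustrative only.

```python
machines = [(8, 24), (14, 16)]   # (number of machines, disk slots per machine)
total_price_usd = 135_000
disk_space_tb = 110
disk_price_usd = 300             # per 1 TB disk

total_slots = sum(count * slots for count, slots in machines)
filled_slots = 0.35 * total_slots

# Assumption: 110 TB is bought as 110 x 1 TB disks; the rest pays for the machines.
machine_cost_usd = total_price_usd - disk_space_tb * disk_price_usd
cost_per_slot_usd = machine_cost_usd / total_slots

print(f"total slots: {total_slots}")
print(f"35% filled: about {filled_slots:.0f} slots")
print(f"implied machine cost per slot: about {cost_per_slot_usd:.0f} $")
```

Under that assumption the machines come out at roughly 245 $ per slot. Note that 35% of the slots is more than 110, so not every filled slot need hold a 1 TB data disk; the slide does not explain the difference.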

Prices

- Totals per TB: 300 $/disk + 240 $/slot in computer = 540 $/TB, including auxiliary machines (disk handling, DB, front-end).
- No infrastructure included (racks, cooling, network, UPS, ...).
- By the time we have to procure the hardware for the ARCs, this should have gone down to about 270 $/TB.
- We have to buy about 1 year's worth of capacity (200 TB) initially, and by that time it should fit in half the number of machines/slots.
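
As a rough illustration of what these per-TB prices mean for the initial ARC purchase, the numbers can be combined as follows. The halving to 270 $/TB is the slide's own projection; the resulting total is simply 200 TB times that price and excludes infrastructure. Function and variable names are hypothetical.

```python
def cost_per_tb(disk_price_usd, slot_price_usd, disk_tb=1.0):
    """Hardware cost per TB: one disk plus its share of the machine, per disk size."""
    return (disk_price_usd + slot_price_usd) / disk_tb


current_usd_per_tb = cost_per_tb(300, 240)       # 540 $/TB today
projected_usd_per_tb = current_usd_per_tb / 2    # slide's projection: about 270 $/TB
initial_capacity_tb = 200                        # about one year of ALMA data

initial_purchase_usd = initial_capacity_tb * projected_usd_per_tb
print(f"current: {current_usd_per_tb:.0f} $/TB")
print(f"projected: {projected_usd_per_tb:.0f} $/TB")
print(f"initial purchase per ARC: about {initial_purchase_usd:,.0f} $")
```

This gives about 54,000 $ per ARC for the first year of capacity, before racks, cooling, network and UPS.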
