cf29be3f409c4284f65d60938b306e09.ppt

- Number of slides: 35

Algorithms for Network Security
George Varghese, UCSD

Network Security Background
- Current approach: when a new attack appears, analysts work for hours (learning) to obtain a signature. Following this, IDS devices screen traffic for the signature (detection).
- Problem 1: Slow learning by humans does not scale as attacks get faster. Example: Slammer reached critical mass in 10 minutes.
- Problem 2: Detection of signatures at high speeds (10 Gbps or higher) is hard.
- This talk: will describe two proposals to rethink the learning and detection problems that use interesting algorithms.

Dealing with Slow Learning by Humans by Automating Signature Extraction (OSDI 2004, joint with S. Singh, C. Estan, and S. Savage)

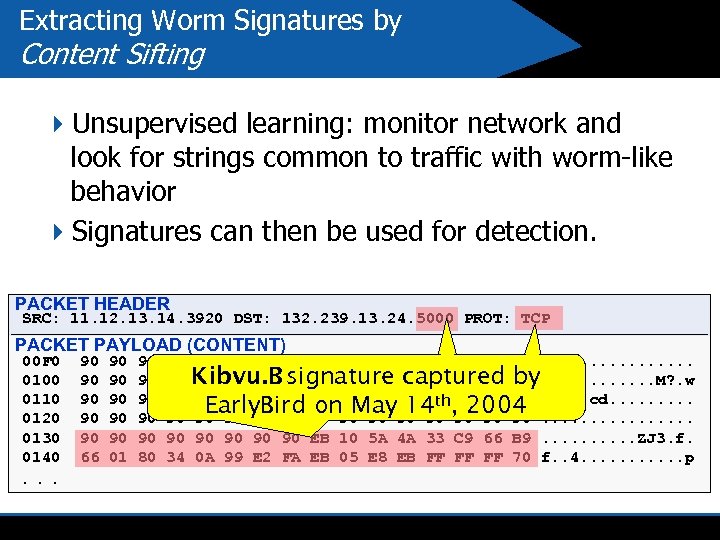

Extracting Worm Signatures by Content Sifting
- Unsupervised learning: monitor the network and look for strings common to traffic with worm-like behavior
- Signatures can then be used for detection.
- Example packet header: SRC: 11.12.13.14.3920, DST: 132.239.13.24.5000, PROT: TCP
- [Figure: hex dump of the packet payload (content), offsets 00F0 to 0140, showing long runs of 90 bytes followed by bytes such as EB 10 5A 4A 33 C9 66 B9; annotated "Kibvu.B signature captured by EarlyBird on May 14th, 2004"]

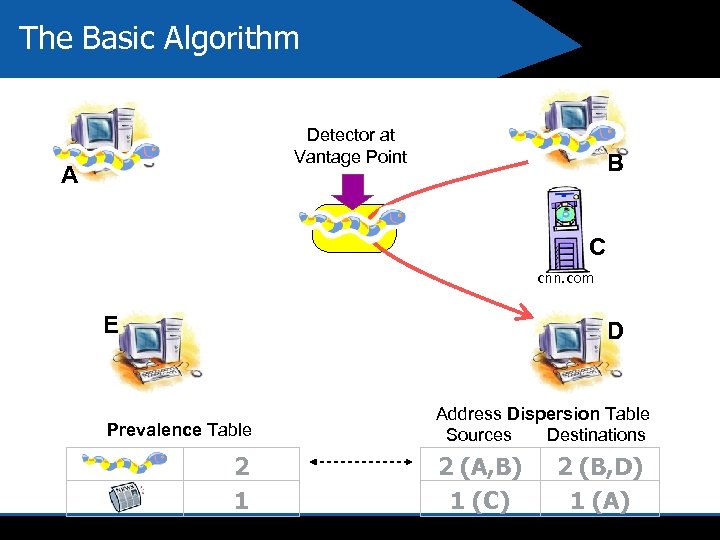

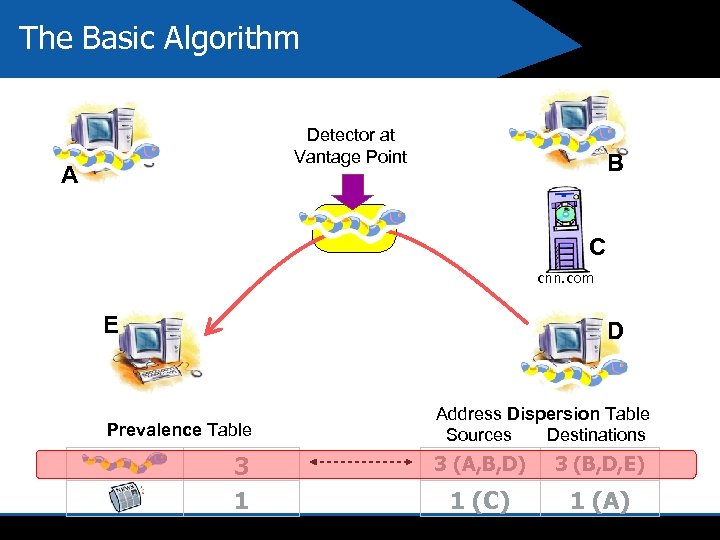

Assumed Characteristics of Worm Behavior We Used for Learning
- Content prevalence: the payload of a worm is seen frequently
- Address dispersion: the payload of a worm is seen traversing between many distinct hosts

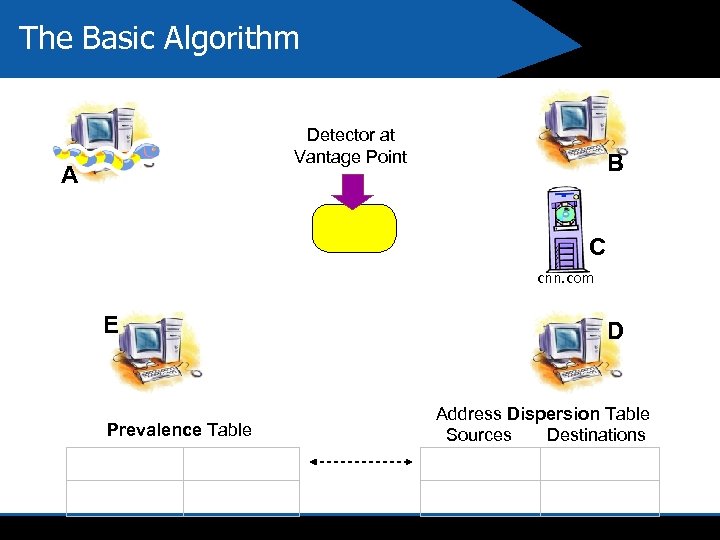

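The Basic Algorithm
[Diagram: a detector at a vantage point observes traffic among hosts A, B, C, D, E and cnn.com. It maintains a Prevalence Table and an Address Dispersion Table (Sources, Destinations); no entries are shown yet.]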

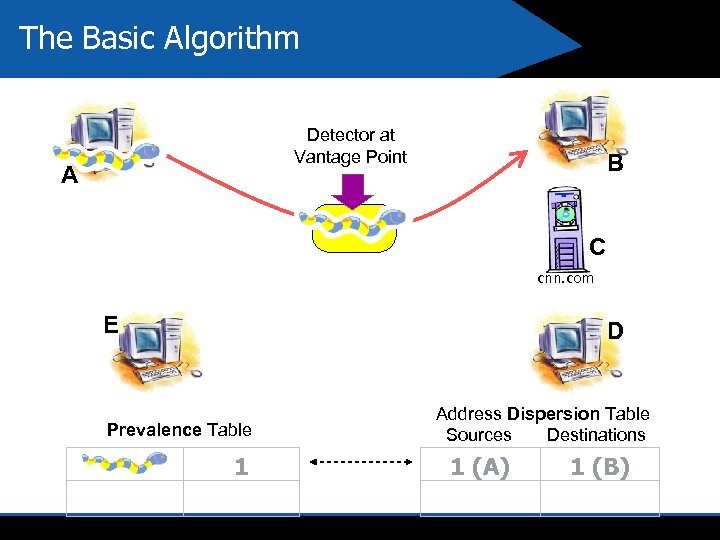

The Basic Algorithm
[Diagram: detector at a vantage point; hosts A, B, C, D, E and cnn.com. Table contents:]
- Content 1: prevalence 1; sources 1 (A); destinations 1 (B)

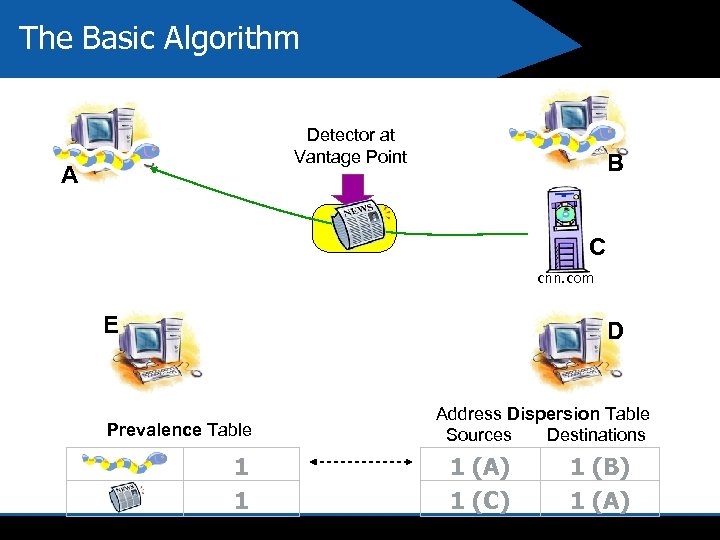

The Basic Algorithm
[Diagram: detector at a vantage point; hosts A, B, C, D, E and cnn.com. Table contents:]
- Content 1: prevalence 1; sources 1 (A); destinations 1 (B)
- Content 2: prevalence 1; sources 1 (C); destinations 1 (A)

The Basic Algorithm
[Diagram: detector at a vantage point; hosts A, B, C, D, E and cnn.com. Table contents:]
- Content 1: prevalence 2; sources 2 (A, B); destinations 2 (B, D)
- Content 2: prevalence 1; sources 1 (C); destinations 1 (A)

The Basic Algorithm
[Diagram: detector at a vantage point; hosts A, B, C, D, E and cnn.com. Table contents:]
- Content 1: prevalence 3; sources 3 (A, B, D); destinations 3 (B, D, E)
- Content 2: prevalence 1; sources 1 (C); destinations 1 (A)
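The table updates shown in these frames can be sketched in a few lines of Python. This is a minimal illustration, not the EarlyBird implementation: the class name, the method names, and both thresholds are hypothetical, and whole payloads are used as table keys for simplicity.

```python
from collections import defaultdict


class BasicContentSifter:
    """Naive content sifting: one table entry per distinct payload."""

    def __init__(self, prevalence_threshold=3, dispersion_threshold=3):
        # Both thresholds are illustrative values, not taken from the talk.
        self.prevalence_threshold = prevalence_threshold
        self.dispersion_threshold = dispersion_threshold
        self.prevalence = defaultdict(int)     # payload -> times seen
        self.sources = defaultdict(set)        # payload -> distinct senders
        self.destinations = defaultdict(set)   # payload -> distinct receivers

    def observe(self, src, dst, payload):
        """Record one packet; return True if the payload now looks worm-like."""
        self.prevalence[payload] += 1
        self.sources[payload].add(src)
        self.destinations[payload].add(dst)
        return (self.prevalence[payload] >= self.prevalence_threshold
                and len(self.sources[payload]) >= self.dispersion_threshold
                and len(self.destinations[payload]) >= self.dispersion_threshold)
```

Feeding it the packets A to B, C to A, B to D, and D to E (the second with different content) reproduces the final frame: the repeated content reaches prevalence 3 with sources {A, B, D} and destinations {B, D, E}.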

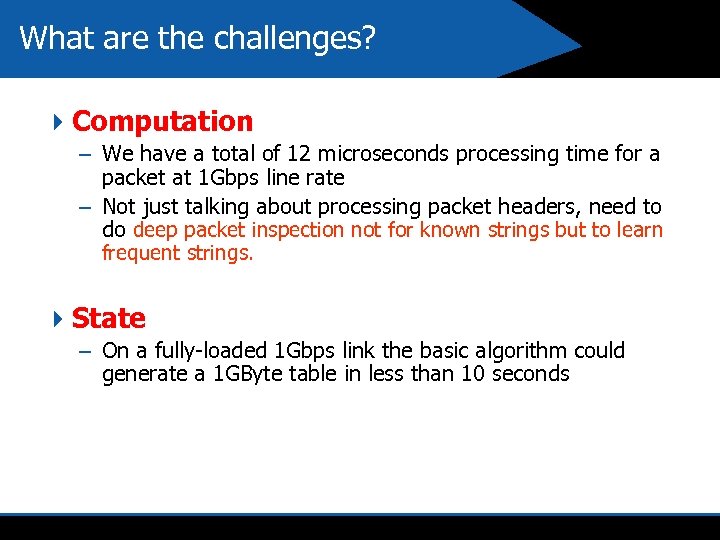

What are the challenges?
- Computation
  - We have a total of 12 microseconds of processing time for a packet at 1 Gbps line rate
  - Not just talking about processing packet headers: need to do deep packet inspection, not for known strings but to learn frequent strings.
- State
  - On a fully loaded 1 Gbps link, the basic algorithm could generate a 1 GByte table in less than 10 seconds
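The two numbers on this slide can be checked with simple arithmetic. The sketch below assumes a 1500-byte packet (a common Ethernet payload size, not stated on the slide) and assumes that table state grows at least as fast as the traffic itself; the function names are made up for illustration.

```python
def per_packet_budget_us(packet_bytes, link_bps):
    """Time one packet occupies the link, in microseconds."""
    return packet_bytes * 8 / link_bps * 1e6


def seconds_to_carry(num_bytes, link_bps):
    """Seconds of line-rate traffic needed to carry num_bytes."""
    return num_bytes * 8 / link_bps


# Assumed 1500-byte packets on a 1 Gbps link.
budget = per_packet_budget_us(1500, 1e9)
# 1 GByte of traffic on a 1 Gbps link.
fill_time = seconds_to_carry(10**9, 1e9)
```

Under these assumptions the budget comes out at 12 microseconds per packet, and 1 GByte of traffic passes in 8 seconds, consistent with the "less than 10 seconds" estimate.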

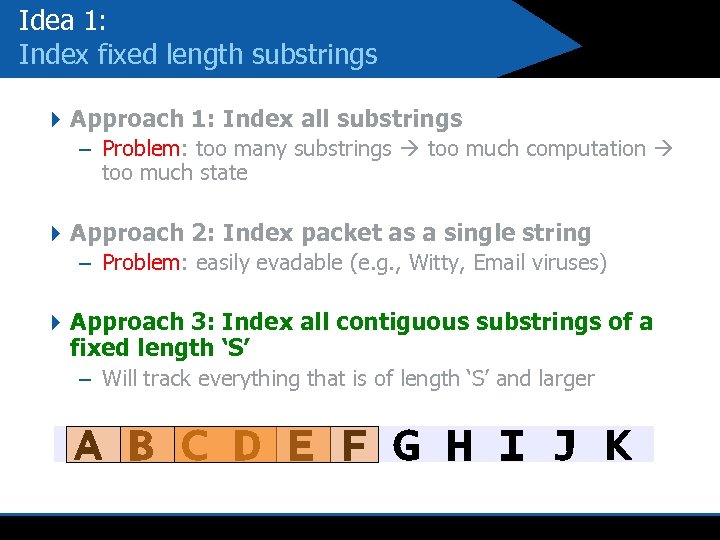

Idea 1: Index fixed-length substrings
- Approach 1: Index all substrings
  - Problem: too many substrings, too much computation, too much state
- Approach 2: Index packet as a single string
  - Problem: easily evadable (e.g., Witty, email viruses)
- Approach 3: Index all contiguous substrings of a fixed length ‘S’
  - Will track everything that is of length ‘S’ and larger
- [Figure: a window of length S sliding over the packet content A B C D E F G H I J K]
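Approach 3 amounts to sliding a window of length S over the payload. A minimal sketch follows; the function name is hypothetical and the window length in the usage note is chosen only to keep the example small.

```python
def fixed_length_substrings(payload: bytes, s: int):
    """Yield (offset, substring) for every contiguous window of length s."""
    # A payload shorter than s yields nothing, because the range is empty.
    for offset in range(len(payload) - s + 1):
        yield offset, payload[offset:offset + s]
```

With `payload = b"ABCDEFGHIJK"` and `s = 4` this yields 8 windows, from `b"ABCD"` at offset 0 to `b"HIJK"` at offset 7. Because every window is indexed, an invariant of length S or more produces the same windows no matter where it sits in the packet.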

Idea 2: Incremental Hash Functions
- Use hashing to reduce state.
  - 40-byte strings are reduced to an 8-byte hash
- Use an incremental hash function to reduce computation.
  - Rabin fingerprint: an efficient incremental hash
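The benefit of an incremental hash is that moving the window by one byte costs constant work rather than a rehash of all S bytes. The sketch below uses a Rabin-Karp style polynomial hash modulo a prime as a stand-in; it is not a true Rabin fingerprint (which works with polynomials over GF(2)), and `MOD`, `BASE`, and the function names are illustrative choices. It assumes `s >= 1`.

```python
MOD = (1 << 61) - 1   # a Mersenne prime; the result fits in 8 bytes
BASE = 257            # illustrative base, larger than any byte value


def direct_hash(window: bytes) -> int:
    """Hash a window from scratch in O(len(window))."""
    h = 0
    for b in window:
        h = (h * BASE + b) % MOD
    return h


def rolling_hashes(payload: bytes, s: int):
    """Yield (offset, hash) for each length-s window, O(1) work per step."""
    if len(payload) < s:
        return
    top = pow(BASE, s - 1, MOD)   # weight of the byte that leaves the window
    h = direct_hash(payload[:s])
    yield 0, h
    for i in range(s, len(payload)):
        h = (h - payload[i - s] * top) % MOD   # drop the oldest byte
        h = (h * BASE + payload[i]) % MOD      # append the newest byte
        yield i - s + 1, h
```

Each rolled value equals the hash computed from scratch for the same window, which is the property that lets the sifter fingerprint every offset at line rate.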

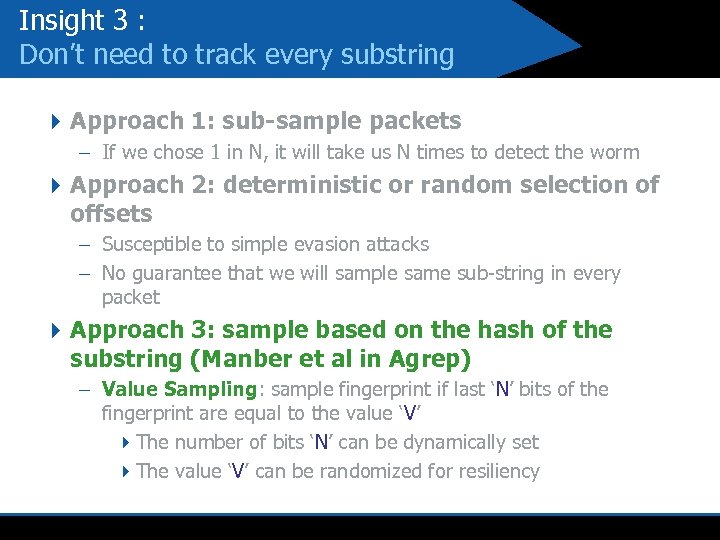

Insight 3: Don’t need to track every substring
- Approach 1: sub-sample packets
  - If we choose 1 in N, it will take us N times as long to detect the worm
- Approach 2: deterministic or random selection of offsets
  - Susceptible to simple evasion attacks
  - No guarantee that we will sample the same substring in every packet
- Approach 3: sample based on the hash of the substring (Manber et al. in Agrep)
  - Value sampling: sample a fingerprint if the last ‘N’ bits of the fingerprint are equal to the value ‘V’
  - The number of bits ‘N’ can be dynamically set
  - The value ‘V’ can be randomized for resiliency
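Value sampling decides from the fingerprint alone, so the same substring is either always tracked or never tracked, wherever it appears. A minimal sketch, assuming a truncated SHA-256 as a stand-in fingerprint (the talk uses Rabin fingerprints); all function names are hypothetical.

```python
import hashlib


def fingerprint(window: bytes) -> int:
    """64-bit stand-in fingerprint (truncated SHA-256, not a Rabin fingerprint)."""
    return int.from_bytes(hashlib.sha256(window).digest()[:8], "big")


def is_sampled(fp: int, n_bits: int = 6, value: int = 0) -> bool:
    """Value sampling: keep fp only if its last n_bits equal value."""
    return (fp & ((1 << n_bits) - 1)) == value


def sampled_windows(payload: bytes, s: int, n_bits: int = 6, value: int = 0):
    """Return the set of length-s windows of payload that pass value sampling."""
    return {payload[i:i + s]
            for i in range(len(payload) - s + 1)
            if is_sampled(fingerprint(payload[i:i + s]), n_bits, value)}
```

Any window sampled inside an invariant is also sampled in every packet that carries the invariant, regardless of offset, which is what offset-based sampling (Approach 2) cannot guarantee. Raising `n_bits` lowers the sampling rate, and changing `value` changes which substrings are tracked.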

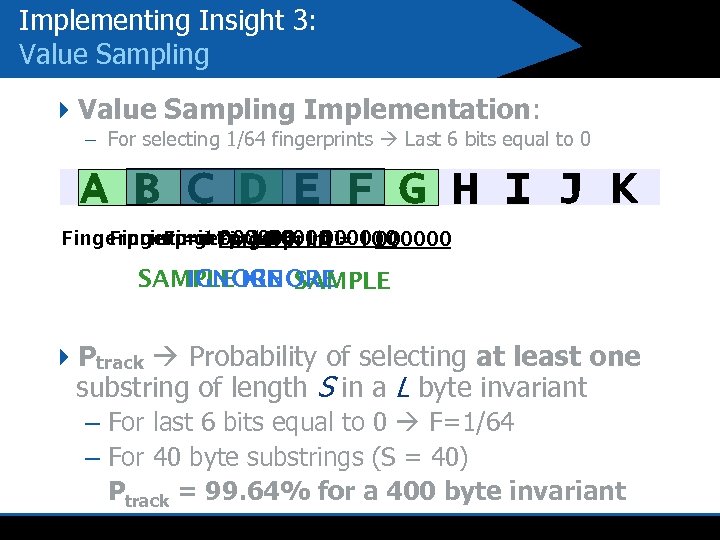

Implementing Insight 3: Value Sampling
- Value sampling implementation:
  - For selecting 1/64 of the fingerprints: last 6 bits equal to 0
  - [Figure: windows sliding over the content A B C D E F G H I J K, each with its fingerprint; a window is marked SAMPLE when the last 6 bits of its fingerprint are 000000 and IGNORE otherwise]
- Ptrack: probability of selecting at least one substring of length S in an L-byte invariant
  - For last 6 bits equal to 0, F = 1/64
  - For 40-byte substrings (S = 40), Ptrack = 99.64% for a 400-byte invariant
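The Ptrack figure can be reproduced under a simple model. The sketch assumes an L-byte invariant contains L − S + 1 windows and that each is sampled independently with probability F; this independence is an idealization not stated on the slide. The exponential form is the usual approximation of the exact expression, and the function names are made up.

```python
import math


def p_track_exact(invariant_len: int, s: int, f: float) -> float:
    """P(at least one of the L - S + 1 windows is sampled), independent model."""
    windows = invariant_len - s + 1
    return 1 - (1 - f) ** windows


def p_track_approx(invariant_len: int, s: int, f: float) -> float:
    """Exponential approximation: 1 - exp(-F * (L - S + 1))."""
    windows = invariant_len - s + 1
    return 1 - math.exp(-f * windows)
```

For L = 400, S = 40, and F = 1/64, the exponential form gives about 99.64%, matching the slide, and the exact form under the same model is slightly higher.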

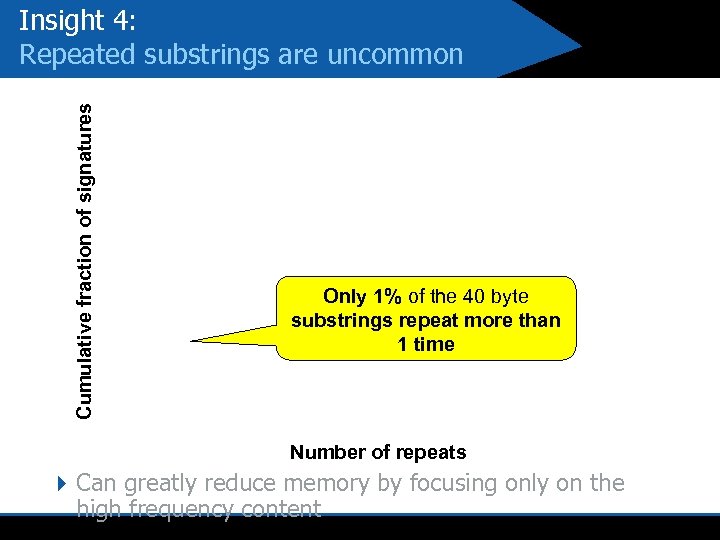

Insight 4: Repeated substrings are uncommon
- Only 1% of the 40-byte substrings repeat more than 1 time
- Can greatly reduce memory by focusing only on the high-frequency content
- [Plot: cumulative fraction of signatures vs. number of repeats]

Implementing Insight 4: Use an approximate high-pass filter
- Multistage filters use randomized techniques to implement a high-pass filter using low memory and few false positives [Estan, Varghese 02]. Similar to an approach by Motwani et al.
  - Use the content hash as a flow identifier
- Three orders of magnitude improvement over the naïve approach (1 entry/string)

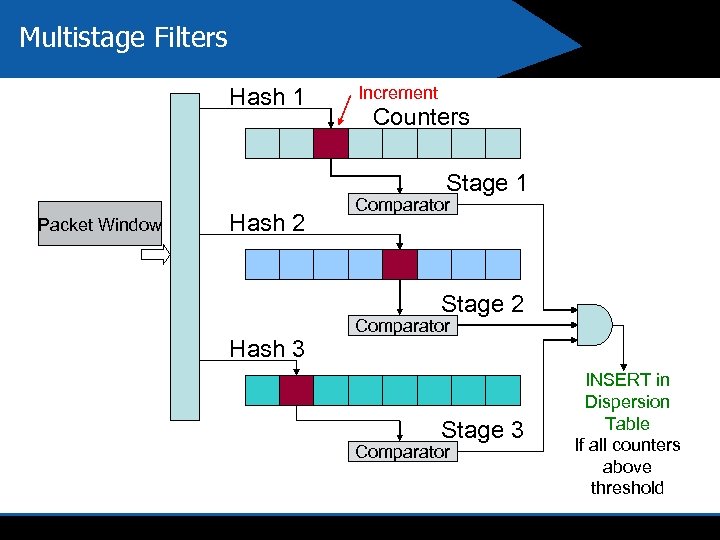

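Multistage Filters
[Diagram: each packet window is hashed by Hash 1, Hash 2, and Hash 3 into Stage 1, Stage 2, and Stage 3, incrementing one counter per stage. A comparator on each stage checks its counter against the threshold. If all counters are above the threshold, INSERT in the Dispersion Table.]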
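The diagram can be sketched as a small class. This is an illustrative simplification rather than the filter of [Estan, Varghese 02]: the stage count, the counter array size, the threshold, and the salted SHA-256 used as the per-stage hash are all assumptions.

```python
import hashlib


class MultistageFilter:
    """Approximate high-pass filter: several counter arrays, one hash each."""

    def __init__(self, stages=3, counters_per_stage=1024, threshold=3):
        self.threshold = threshold
        self.size = counters_per_stage
        self.stages = [[0] * counters_per_stage for _ in range(stages)]

    def _index(self, stage: int, key: bytes) -> int:
        # A different salt per stage gives independent-looking hash functions.
        digest = hashlib.sha256(bytes([stage]) + key).digest()
        return int.from_bytes(digest[:8], "big") % self.size

    def add(self, key: bytes) -> bool:
        """Count one occurrence; True once every stage's counter exceeds the threshold."""
        passed = True
        for stage, counters in enumerate(self.stages):
            i = self._index(stage, key)
            counters[i] += 1
            if counters[i] <= self.threshold:
                passed = False
        return passed
```

A key seen more than `threshold` times always passes, so there are no false negatives. A rare key passes only if it collides with heavy keys in every stage at once, which is why a few small counter arrays can replace one entry per string.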

Insight 5: Prevalent substrings with high dispersion are rare
- Naïve approach would maintain a list of sources (or destinations)
- We only care if dispersion is high
  - Approximate counting suffices
- Scalable bitmap counters
  - Sample a larger virtual bitmap; scale and adjust for error
  - Order of magnitude less memory than the naïve approach, with acceptable error (<30%)

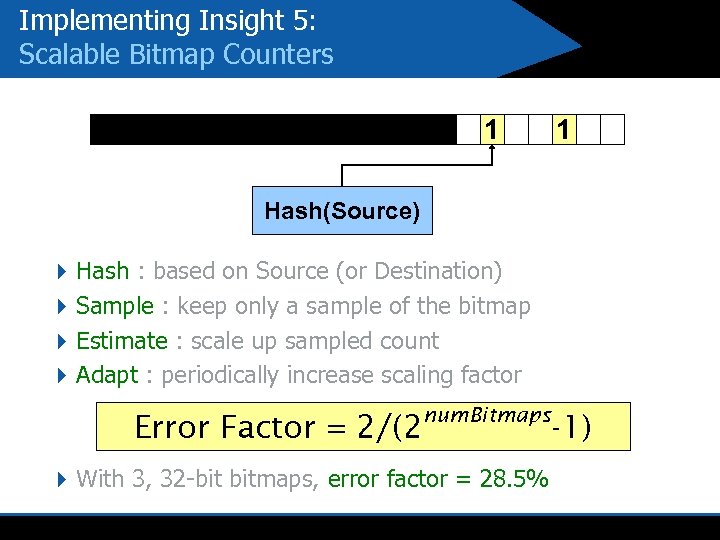

Implementing Insight 5: Scalable Bitmap Counters
- Hash: based on source (or destination)
- Sample: keep only a sample of the bitmap
- Estimate: scale up the sampled count
- Adapt: periodically increase the scaling factor
- Error factor: $\text{Error factor} = \dfrac{2}{2^{\text{numBitmaps}} - 1}$
- With 3 32-bit bitmaps, error factor = 28.5%
- [Figure: Hash(Source) selects which bit of the bitmap to set to 1]
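The hash, sample, and estimate steps can be illustrated with a single sampled bitmap. This is a simplified sketch, not the multi-bitmap adaptive scheme from the talk: it omits the "adapt" step, the sizes are arbitrary, SHA-256 stands in for the hash, and the estimator is the standard linear-counting formula applied to the stored slice of a larger virtual bitmap. The `error_factor` helper only restates the formula from the slide.

```python
import hashlib
import math


class SampledBitmapCounter:
    """Estimate distinct addresses while storing only part of a virtual bitmap."""

    def __init__(self, physical_bits=256, virtual_bits=4096):
        self.b = physical_bits    # bits actually stored
        self.v = virtual_bits     # size of the virtual bitmap being sampled
        self.bits = 0             # stored bitmap, packed into one integer

    def add(self, address: str) -> None:
        digest = hashlib.sha256(address.encode()).digest()
        pos = int.from_bytes(digest[:8], "big") % self.v
        if pos < self.b:          # positions outside the sample are dropped
            self.bits |= 1 << pos

    def estimate(self) -> float:
        zeros = self.b - bin(self.bits).count("1")
        zeros = max(zeros, 1)     # keep the estimate finite when saturated
        return self.v * math.log(self.b / zeros)


def error_factor(num_bitmaps: int) -> float:
    """Error factor as given on the slide: 2 / (2**numBitmaps - 1)."""
    return 2 / (2 ** num_bitmaps - 1)
```

Re-adding an address never changes the estimate, so the counter measures distinct sources rather than packets. `error_factor(3)` evaluates to 2/7, about 28.6%, in line with the slide's figure for three bitmaps.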

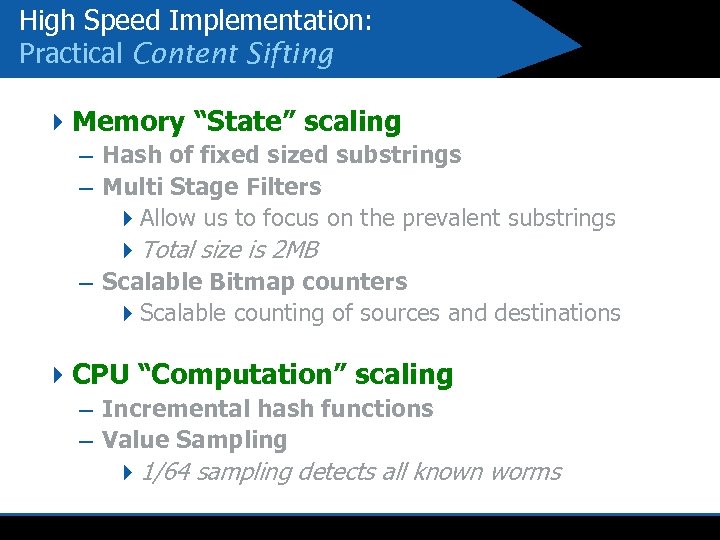

High-Speed Implementation: Practical Content Sifting
- Memory (“state”) scaling
  - Hash of fixed-size substrings
  - Multistage filters
    - Allow us to focus on the prevalent substrings
    - Total size is 2 MB
  - Scalable bitmap counters
    - Scalable counting of sources and destinations
- CPU (“computation”) scaling
  - Incremental hash functions
  - Value sampling
    - 1/64 sampling detects all known worms

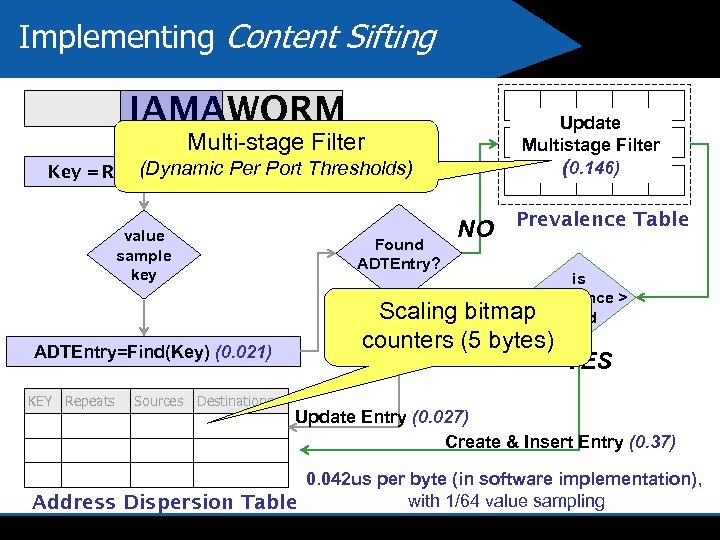

Implementing Content Sifting
[Flow diagram for the example content IAMAWORM; per-step costs in parentheses]
- Key = RabinHash(“IAMA”) (0.349, 0.037), then value-sample the key
- ADTEntry = Find(Key) in the Address Dispersion Table (0.021); each entry holds KEY, Repeats, Sources, Destinations, with scaling bitmap counters (5 bytes)
- Found ADTEntry? YES: Update Entry (0.027)
- Found ADTEntry? NO: Update Multistage Filter (0.146), which serves as the Prevalence Table and uses dynamic per-port thresholds
- Is prevalence > threshold? If so, Create & Insert Entry (0.37) in the Address Dispersion Table
- Overall: 0.042 us per byte (in software implementation), with 1/64 value sampling

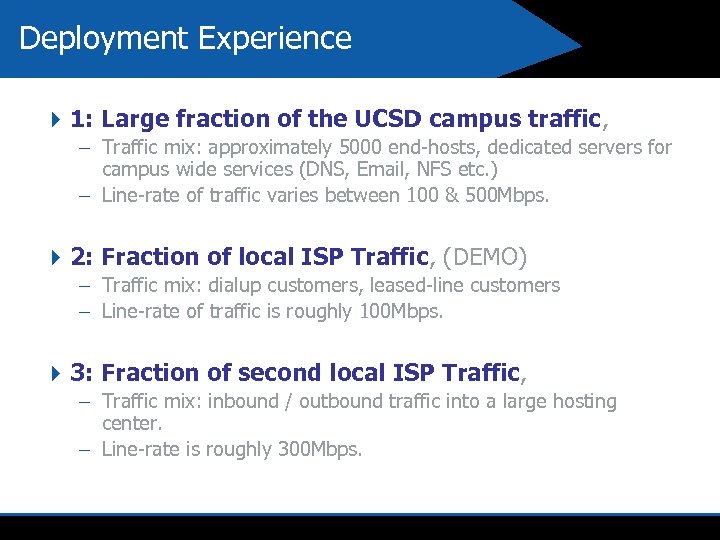

Deployment Experience
- 1: Large fraction of the UCSD campus traffic
  - Traffic mix: approximately 5000 end hosts, dedicated servers for campus-wide services (DNS, email, NFS, etc.)
  - Line rate of traffic varies between 100 and 500 Mbps.
- 2: Fraction of local ISP traffic (DEMO)
  - Traffic mix: dialup customers, leased-line customers
  - Line rate of traffic is roughly 100 Mbps.
- 3: Fraction of second local ISP traffic
  - Traffic mix: inbound/outbound traffic into a large hosting center.
  - Line rate is roughly 300 Mbps.

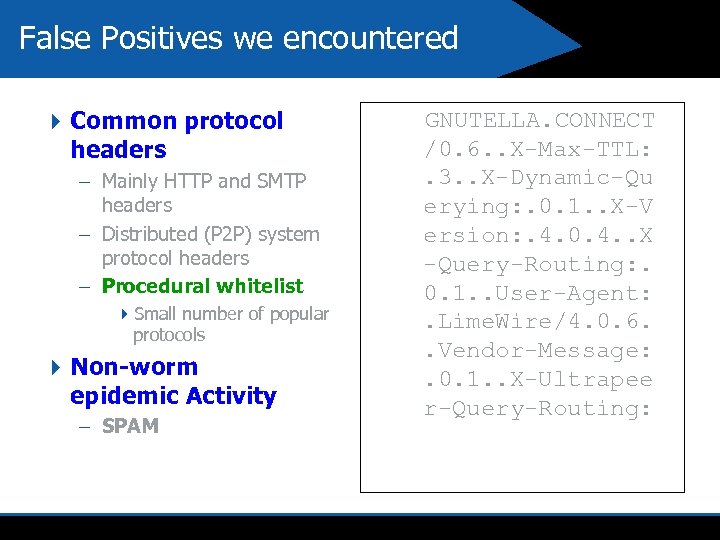

False Positives We Encountered
- Common protocol headers
  - Mainly HTTP and SMTP headers
  - Distributed (P2P) system protocol headers
  - Procedural whitelist
    - Small number of popular protocols
- Non-worm epidemic activity
  - SPAM
- Example content: GNUTELLA.CONNECT/0.6..X-Max-TTL:.3..X-Dynamic-Querying:.0.1..X-Version:.4.0.4..X-Query-Routing:.0.1..User-Agent:.LimeWire/4.0.6..Vendor-Message:.0.1..X-Ultrapeer-Query-Routing:

Other Experience:
- Lesson 1: From experience, static whitelisting is still not sufficient for HTTP and P2P. We needed other, more dynamic whitelisting techniques.
- Lesson 2: Signature selection is key. From worms like Blaster, we get several options. A major delay today in signature release is “vetting” signatures.
- Lesson 3: Works better for vulnerability-based mass attacks; does not work for directed attacks or attacks based on social engineering, where the repetition rate is low.
- Lesson 4: Major IDS vendors have moved to vulnerability signatures. Automated approaches to this (CMU) are very useful, but automated exploit signature detection may also be useful as an additional piece of defense in depth for truly zero-day attacks.

Related Work and Issues
- 3 roughly concurrent pieces of work: Autograph (CMU), Honeycomb (Cambridge), and EarlyBird (us). EarlyBird is only
- Further work at CMU extending Autograph to polymorphic worms (can do with EarlyBird in real time as well). Automating vulnerability signatures
- Issues: encryption, P2P false positives like BitTorrent, etc.

Part 2: Detection of Signatures with Minimal Reassembly (to appear in SIGCOMM 06, joint with F. Bonomi and A. Fingerhut of Cisco Systems)

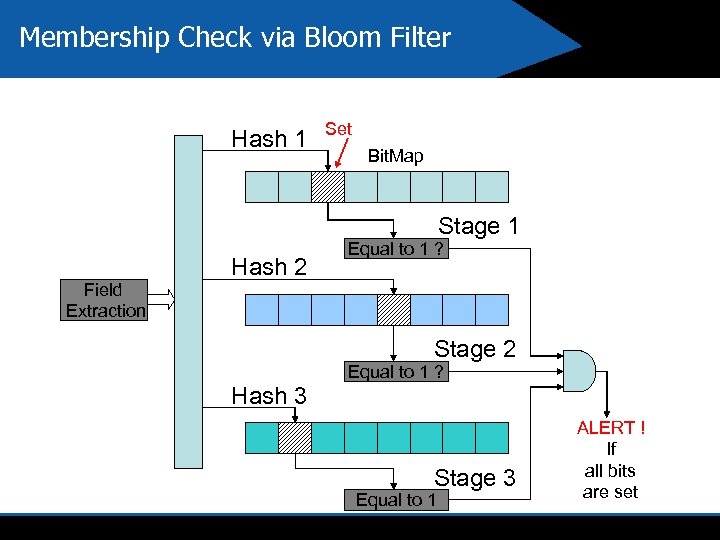

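Membership Check via Bloom Filter
[Diagram: field extraction feeds Hash 1, Hash 2, and Hash 3, which index the bitmaps of Stage 1, Stage 2, and Stage 3. Setting up the filter sets the corresponding bit in each stage; a lookup checks whether each bit is equal to 1. ALERT if all bits are set.]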
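A minimal Bloom filter matching the diagram might look as follows. The bit-array size, the number of hashes, and the salted SHA-256 construction are illustrative assumptions; unlike the per-stage bitmaps in the diagram, this sketch shares one bit array among all hashes, which is a common variant.

```python
import hashlib


class BloomFilter:
    """Set-membership filter: no false negatives, occasional false positives."""

    def __init__(self, num_bits=1 << 16, num_hashes=3):
        self.m = num_bits
        self.k = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, item: bytes):
        # One salted hash per "stage".
        for i in range(self.k):
            digest = hashlib.sha256(bytes([i]) + item).digest()
            yield int.from_bytes(digest[:8], "big") % self.m

    def add(self, item: bytes) -> None:
        for p in self._positions(item):
            self.bits[p // 8] |= 1 << (p % 8)

    def might_contain(self, item: bytes) -> bool:
        """True if every probed bit is set (the ALERT condition)."""
        return all(self.bits[p // 8] & (1 << (p % 8))
                   for p in self._positions(item))
```

An item that was added always tests positive. An item that was never added usually tests negative, so most traffic can be dismissed after a few bit probes, and only the rare positives need a more expensive exact check.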

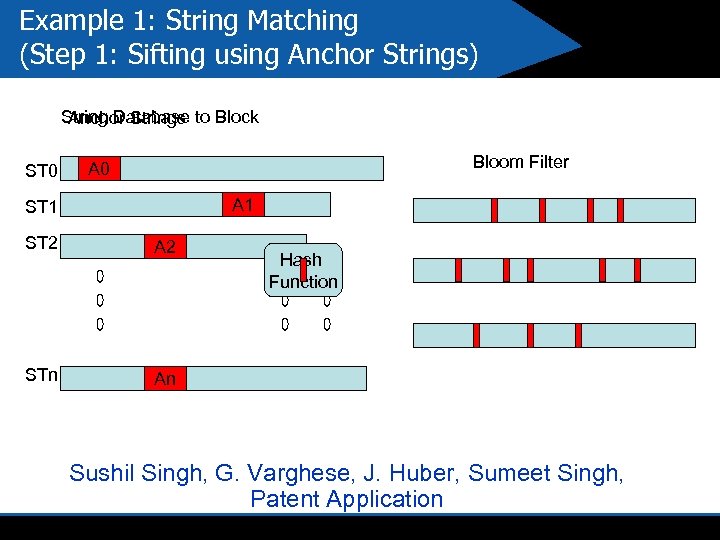

Example 1: String Matching (Step 1: Sifting using Anchor Strings)
[Diagram: each string in the string database to block (ST0, ST2, ..., STn) has an anchor string (A0, A1, A2, ..., An); a hash function maps the anchor strings into a Bloom filter.]
Sushil Singh, G. Varghese, J. Huber, Sumeet Singh, Patent Application

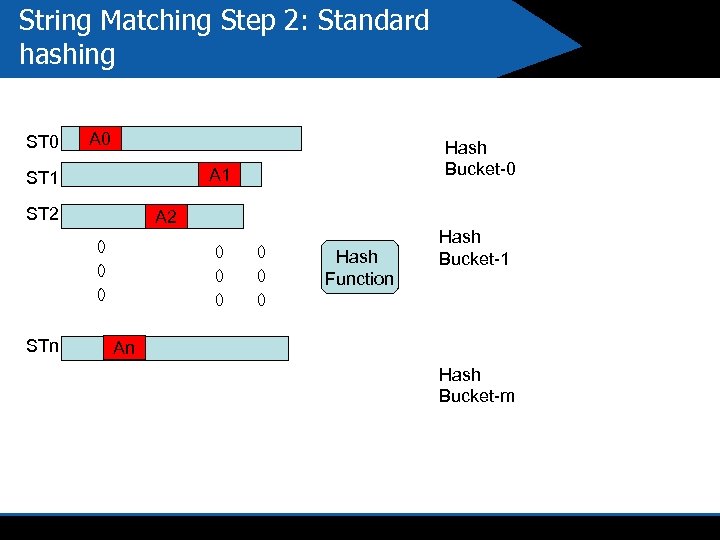

String Matching Step 2: Standard hashing
[Diagram: a hash function maps the anchor strings (A0, A1, A2, ..., An) and their strings (ST0, ST2, ..., STn) into Hash Bucket-0, Hash Bucket-1, ..., Hash Bucket-m.]
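Steps 1 and 2 can be read as a cheap anchor pre-filter followed by a hash lookup and a full comparison. The sketch below is one interpretation of the diagrams, not the patented design: it assumes the anchor is the first `ANCHOR_LEN` bytes of each string, assumes every string is at least that long, and uses a Python set as a stand-in for the Bloom filter and a dict for the hash buckets.

```python
ANCHOR_LEN = 4  # hypothetical anchor length


def build_index(strings):
    """Return (anchor filter, anchor -> candidate strings)."""
    anchors = set()   # stand-in for the Bloom filter of step 1
    buckets = {}      # stand-in for the hash buckets of step 2
    for s in strings:
        anchor = s[:ANCHOR_LEN]
        anchors.add(anchor)
        buckets.setdefault(anchor, []).append(s)
    return anchors, buckets


def find_matches(payload: bytes, anchors, buckets):
    """Return (offset, string) for every database string found in payload."""
    hits = []
    for i in range(len(payload) - ANCHOR_LEN + 1):
        window = payload[i:i + ANCHOR_LEN]
        if window not in anchors:      # step 1: most windows stop here
            continue
        for s in buckets[window]:      # step 2: verify the full string
            if payload.startswith(s, i):
                hits.append((i, s))
    return hits
```

For the database `[b"ATTACK", b"ATTAIN", b"WORMBODY"]` and the payload `b"xxATTACKyyWORMBODY"`, the scan reports `b"ATTACK"` at offset 2 and `b"WORMBODY"` at offset 10. `b"ATTAIN"` shares an anchor with `b"ATTACK"` but fails the full comparison.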

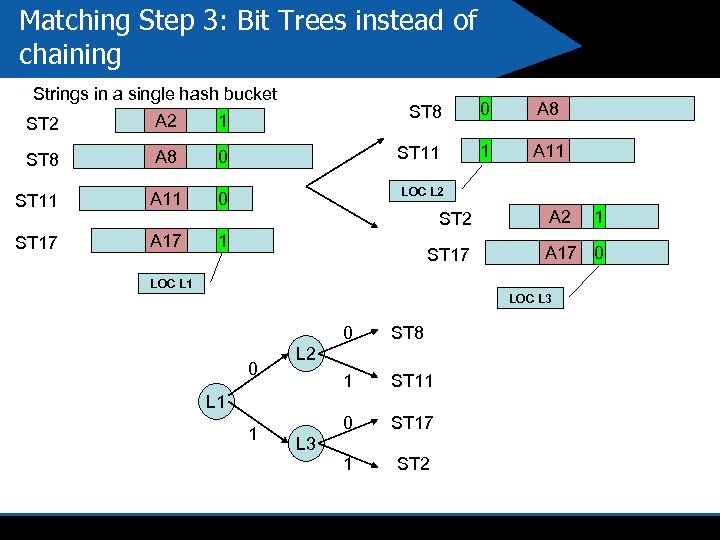

Matching Step 3: Bit Trees instead of chaining
[Diagram: the strings in a single hash bucket (ST2/A2, ST8/A8, ST11/A11, ST17/A17) are arranged in a binary tree instead of a chain. Internal nodes test bit locations L1, L2, and L3 and branch on 0 or 1; the leaves are ST8, ST11, ST17, and ST2.]
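One way to read the bit-tree diagram: within a bucket, pick bit locations that tell the stored keys apart, walk the tree by testing those bits of the candidate, and finish with a single full comparison at the leaf. The sketch below follows that reading and is an interpretation, not the design from the talk. It assumes a non-empty list of distinct, equal-length keys, and the function names are made up.

```python
def bit_at(data: bytes, pos: int) -> int:
    """Return bit number pos of data, counting from the most significant bit."""
    byte = pos // 8
    if byte >= len(data):
        return 0
    return (data[byte] >> (7 - pos % 8)) & 1


def build_bit_tree(keys):
    """Return a leaf key, or a node (bit_pos, zero_subtree, one_subtree)."""
    if len(keys) == 1:
        return keys[0]
    for pos in range(8 * len(keys[0])):
        zeros = [k for k in keys if bit_at(k, pos) == 0]
        ones = [k for k in keys if bit_at(k, pos) == 1]
        if zeros and ones:             # first bit location that splits the keys
            return (pos, build_bit_tree(zeros), build_bit_tree(ones))
    raise ValueError("keys must be distinct and of equal length")


def lookup(tree, candidate: bytes):
    """Walk the tree on candidate's bits, then do one full comparison."""
    node = tree
    while isinstance(node, tuple):
        pos, zero_side, one_side = node
        node = one_side if bit_at(candidate, pos) else zero_side
    return node if node == candidate else None
```

Compared with chaining, a lookup tests a handful of bits and performs exactly one string comparison, however many strings share the bucket.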

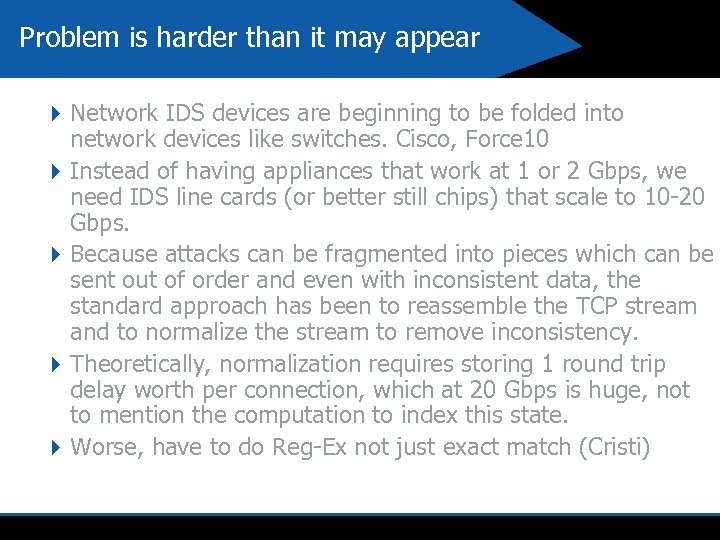

Problem is harder than it may appear
- Network IDS devices are beginning to be folded into network devices like switches (Cisco, Force10).
- Instead of having appliances that work at 1 or 2 Gbps, we need IDS line cards (or, better still, chips) that scale to 10-20 Gbps.
- Because attacks can be fragmented into pieces that can be sent out of order and even with inconsistent data, the standard approach has been to reassemble the TCP stream and to normalize the stream to remove inconsistency.
- Theoretically, normalization requires storing one round-trip delay's worth of data per connection, which at 20 Gbps is huge, not to mention the computation to index this state.
- Worse, we have to do regular-expression matching, not just exact match (Cristi)

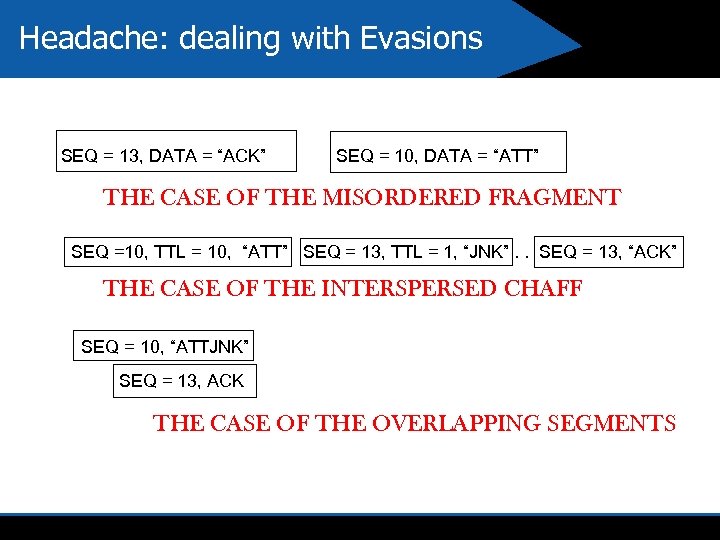

Headache: dealing with Evasions
- The case of the misordered fragment: SEQ = 13, DATA = “ACK”; then SEQ = 10, DATA = “ATT”
- The case of the interspersed chaff: SEQ = 10, TTL = 10, “ATT”; SEQ = 13, TTL = 1, “JNK”; SEQ = 13, “ACK”
- The case of the overlapping segments: SEQ = 10, “ATTJNK”; SEQ = 13, “ACK”
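A toy example shows why per-packet matching fails on these cases. The sketch models segments as (sequence number, payload) pairs and uses a deliberately naive reassembler in which the first copy of each byte wins. That overlap policy is an assumption, gaps in the sequence space are ignored for brevity, and TTL handling is left out. None of this is the scheme from the talk.

```python
def naive_scan(segments, signature: bytes) -> bool:
    """Check each segment payload in isolation, in arrival order."""
    return any(signature in data for _seq, data in segments)


def reassemble(segments) -> bytes:
    """Place payload bytes by sequence number; the first copy of a byte wins."""
    stream = {}
    for seq, data in segments:
        for i, byte in enumerate(data):
            stream.setdefault(seq + i, byte)
    # Simplification: bytes are concatenated in order, gaps are not detected.
    return bytes(stream[k] for k in sorted(stream))


def reassembled_scan(segments, signature: bytes) -> bool:
    return signature in reassemble(segments)


misordered = [(13, b"ACK"), (10, b"ATT")]
overlapping = [(10, b"ATTJNK"), (13, b"ACK")]
```

For `misordered`, the naive scan misses `b"ATTACK"` while the reassembled scan finds it. For `overlapping`, this first-wins reassembler sees `ATTJNK` and misses the attack, whereas an end host that keeps the later copy would receive `ATTACK`. Hence the need to normalize inconsistent data, not just reorder it.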

Conclusions
- Surprising what one can do with network algorithms. At first glance, learning seems much harder than lookups or QoS.
- Underlying principle in both algorithms is “sifting”: reducing traffic to be examined to a manageable amount and then doing more cumbersome checks.
- Lots of caveats in practice: moving target
