57570c93cf11e667ddaaafb581240f00.ppt

- Number of slides: 46

Algorithmic Issues in Strategic Distributed Systems

Suggested readings

- Algorithmic Game Theory, edited by Noam Nisan, Tim Roughgarden, Éva Tardos, and Vijay V. Vazirani, Cambridge University Press.
- Algorithmic Mechanism Design for Network Optimization Problems, Luciano Gualà, Ph.D. Thesis, Università degli Studi dell’Aquila, 2007.
- Web pages by Éva Tardos, Christos Papadimitriou, and Tim Roughgarden; follow the links therein.

Two Research Traditions

- Theory of Algorithms: computational issues
  - What can be feasibly computed?
  - How long does it take to compute a solution?
  - What is the quality of a computed solution?
  - Centralized or distributed computational models
- Game Theory: interaction between self-interested individuals
  - What is the outcome of the interaction?
  - Which social goals are compatible with selfishness?

Different Assumptions

- Theory of Algorithms (in distributed systems):
  - Processors are obedient, faulty, or adversarial
  - Large systems, limited computational resources
- Game Theory:
  - Players are strategic (selfish)
  - Small systems, unlimited computational resources

The Internet World

- Agents are often autonomous (users)
  - Users have their own individual goals
  - Users own network components
- Internet scale
  - Massive systems
  - Limited communication/computational resources

Both strategic and computational issues!

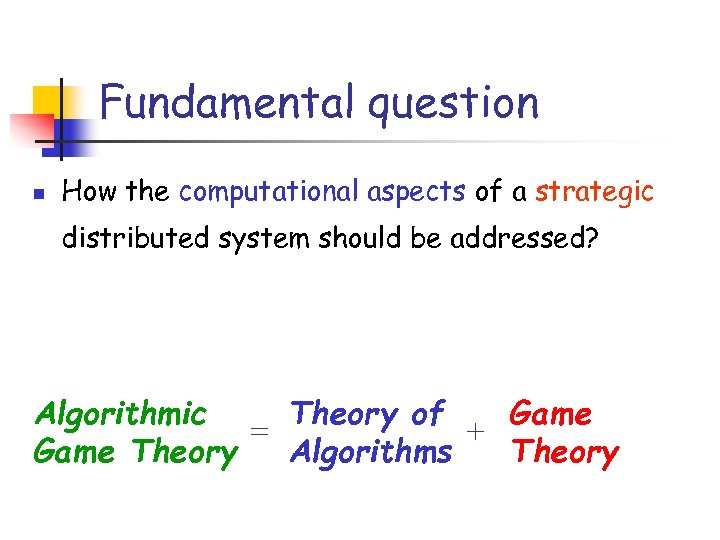

Fundamental question

How should the computational aspects of a strategic distributed system be addressed?

Theory of Algorithms + Game Theory = Algorithmic Game Theory

Basics of Game Theory

- A game consists of:
  - A set of players (or agents)
  - A set of rules of encounter: who should act when, and what the possible actions (strategies) are
  - A specification of payoffs for each outcome (combination of strategies)
- Game Theory attempts to predict the final outcome (or solution) of the game by taking into account the individual behavior of the players

(Some) Types of games

- Cooperative/Non-cooperative
- Symmetric/Asymmetric (for 2-player games)
- Zero sum/Non-zero sum
- Simultaneous/Sequential
- Perfect information/Imperfect information
- One-shot/Repeated

Solution concept

How do we establish that an outcome is a solution? Among the possible outcomes of a game, those enjoying the following properties play a fundamental role:

- Pareto efficiency: no player's payoff can be increased without decreasing some other player's payoff;
- Equilibrium: a strategy combination in which players are not willing to change their state. This is much more informal: when does a player not want to change his state? In the Homo Economicus model, when he has selected a strategy that maximizes his individual payoff, knowing that the other players are doing the same.

Roadmap

- We focus on equilibria: in particular, Nash Equilibria (NE) and Dominant Strategy Equilibria (DSE)
- Computational Aspects of Nash Equilibria
  - Does a NE always exist?
  - Can a NE be feasibly computed, when it exists?
  - What about the “quality” of a NE?
  - Case study: Selfish Routing in Internet, Network Connection Games
- (Algorithmic) Mechanism Design
  - Which social goals can be (efficiently) implemented in a strategic distributed system?
  - Strategy-proof mechanisms in DSE: VCG-mechanisms
  - Case study: Mechanism design for some basic network design problems (shortest paths and minimum spanning tree)

FIRST PART: (Nash) Equilibria

Games in Normal-Form

We will be interested in simultaneous, perfect-information, non-cooperative games. These games are usually represented explicitly by listing all possible strategies and the corresponding payoffs of all players (the so-called normal form); more formally, we have:

- A set of $N$ rational players
- For each player $i$, a strategy set $S_i$
- A payoff matrix: for each strategy combination $(s_1, s_2, \ldots, s_N)$, where $s_i \in S_i$, a corresponding payoff vector $(p_1, p_2, \ldots, p_N)$

The payoff matrix has size $|S_1| \times |S_2| \times \cdots \times |S_N|$.

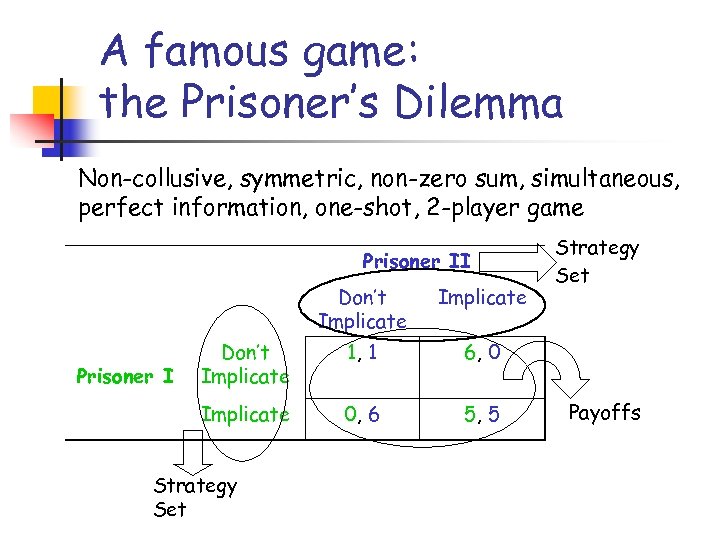

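A famous game: the Prisoner’s Dilemma

Non-collusive, symmetric, non-zero sum, simultaneous, perfect information, one-shot, 2-player game.

| Prisoner I \ Prisoner II | Don’t Implicate | Implicate |
|---|---|---|
| Don’t Implicate | 1, 1 | 6, 0 |
| Implicate | 0, 6 | 5, 5 |

Rows and columns list the strategy sets of Prisoner I and Prisoner II; each entry gives the payoffs (Prisoner I, Prisoner II), here the years spent in jail.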

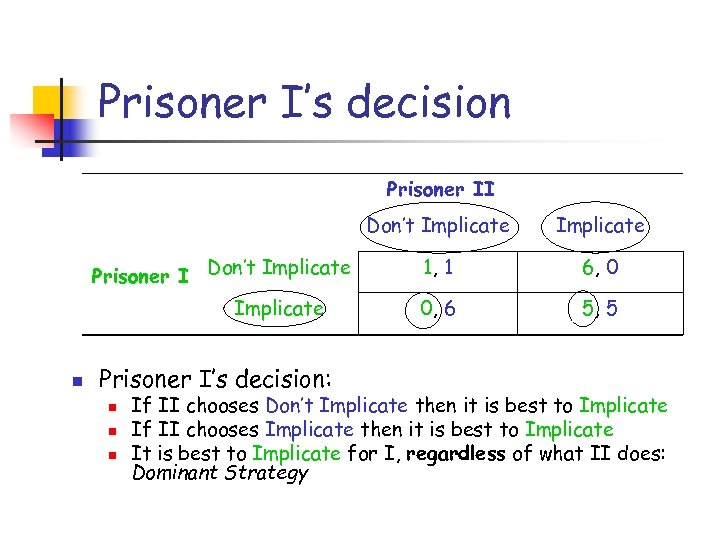

Prisoner I’s decision

- If II chooses Don’t Implicate, then it is best to Implicate (0 years in jail instead of 1)
- If II chooses Implicate, then it is best to Implicate (5 years in jail instead of 6)
- It is best to Implicate for I, regardless of what II does: Dominant Strategy

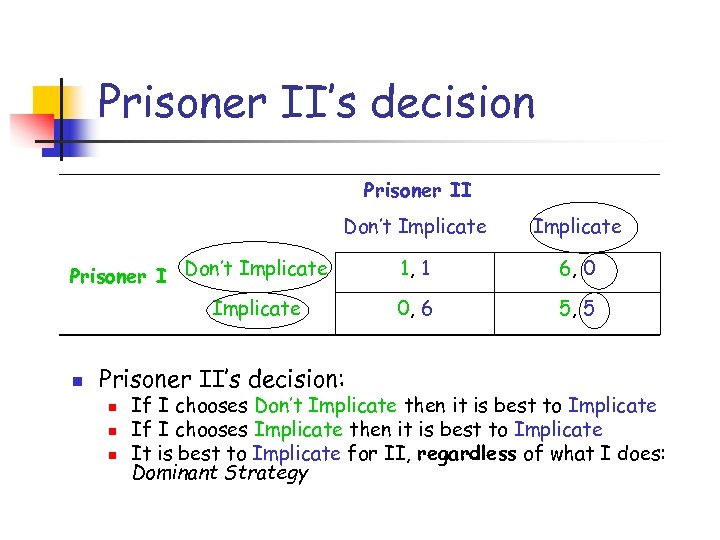

Prisoner II’s decision

- If I chooses Don’t Implicate, then it is best to Implicate (0 years in jail instead of 1)
- If I chooses Implicate, then it is best to Implicate (5 years in jail instead of 6)
- It is best to Implicate for II, regardless of what I does: Dominant Strategy

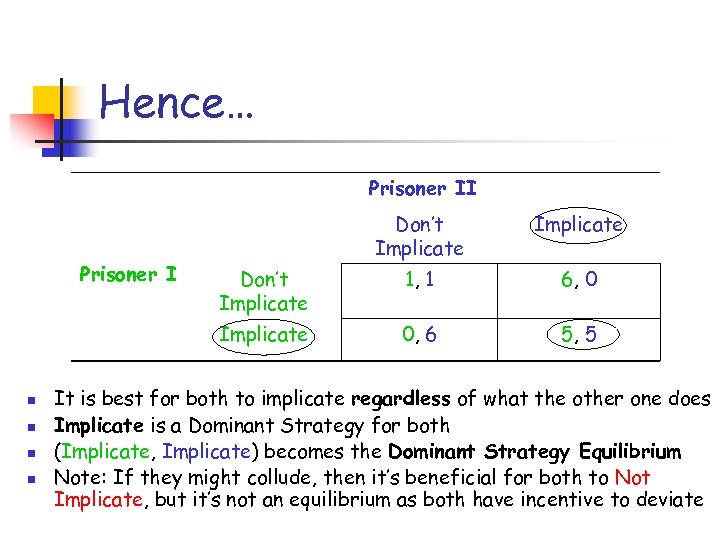

Hence…

- It is best for both to implicate, regardless of what the other one does
- Implicate is a Dominant Strategy for both
- (Implicate, Implicate) becomes the Dominant Strategy Equilibrium
- Note: if they could collude, then it would be beneficial for both to Not Implicate, but that is not an equilibrium, as both have an incentive to deviate

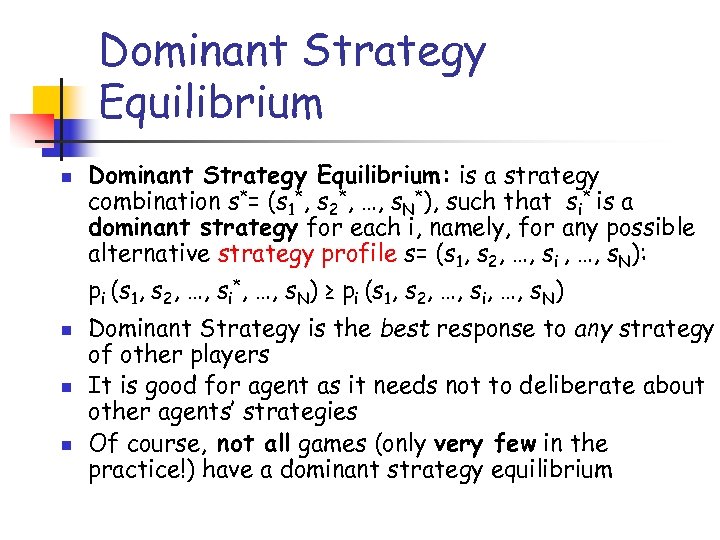

Dominant Strategy Equilibrium

- Dominant Strategy Equilibrium: a strategy combination $s^* = (s_1^*, s_2^*, \ldots, s_N^*)$ such that $s_i^*$ is a dominant strategy for each $i$, namely, for any possible alternative strategy profile $s = (s_1, s_2, \ldots, s_i, \ldots, s_N)$:

  $p_i(s_1, s_2, \ldots, s_i^*, \ldots, s_N) \geq p_i(s_1, s_2, \ldots, s_i, \ldots, s_N)$

- A Dominant Strategy is a best response to any strategy of the other players
- This is convenient for an agent, since it need not deliberate about the other agents’ strategies
- Of course, not all games (only very few in practice!) have a dominant strategy equilibrium
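The definition above can be checked mechanically on the Prisoner’s Dilemma. The following is a minimal sketch, not part of the original slides: the helper names (`with_strategy`, `dominant_strategies`) are hypothetical, and the payoffs are assumed to be the negated years in jail, so that a higher payoff is better.

```python
from itertools import product

# Prisoner's Dilemma from the slides; entries are years in jail, so each
# player's payoff is taken to be the NEGATED number of years (assumption).
STRATEGIES = ["Don't Implicate", "Implicate"]
YEARS = {
    ("Don't Implicate", "Don't Implicate"): (1, 1),
    ("Don't Implicate", "Implicate"): (6, 0),
    ("Implicate", "Don't Implicate"): (0, 6),
    ("Implicate", "Implicate"): (5, 5),
}
PAYOFF = {profile: tuple(-y for y in years) for profile, years in YEARS.items()}


def with_strategy(profile, player, strategy):
    """Return a copy of `profile` in which `player` plays `strategy`."""
    changed = list(profile)
    changed[player] = strategy
    return tuple(changed)


def dominant_strategies(player, strategy_sets, payoff):
    """Strategies of `player` that are at least as good as any alternative,
    whatever the other players do."""
    result = []
    for candidate in strategy_sets[player]:
        if all(
            payoff[with_strategy(profile, player, candidate)][player]
            >= payoff[profile][player]
            for profile in product(*strategy_sets)
        ):
            result.append(candidate)
    return result


strategy_sets = [STRATEGIES, STRATEGIES]
print([dominant_strategies(i, strategy_sets, PAYOFF) for i in range(2)])
# [['Implicate'], ['Implicate']]
```

Both players have Implicate as their only dominant strategy, so (Implicate, Implicate) is the DSE, as stated on the earlier slides.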

![A more relaxed solution concept: Nash Equilibrium [1951]](https://present5.com/presentation/57570c93cf11e667ddaaafb581240f00/image-18.jpg)

A more relaxed solution concept: Nash Equilibrium [1951]

- Nash Equilibrium: a strategy combination $s^* = (s_1^*, s_2^*, \ldots, s_N^*)$ such that for each $i$, $s_i^*$ is a best response to $(s_1^*, \ldots, s_{i-1}^*, s_{i+1}^*, \ldots, s_N^*)$, namely, for any possible alternative strategy $s_i$ of player $i$:

  $p_i(s^*) \geq p_i(s_1^*, s_2^*, \ldots, s_i, \ldots, s_N^*)$

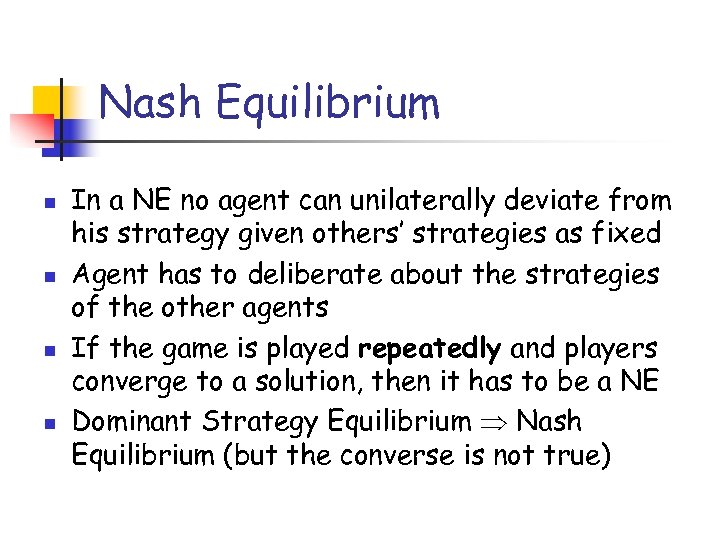

Nash Equilibrium

- In a NE no agent can improve his payoff by unilaterally deviating from his strategy, given the others’ strategies as fixed
- An agent has to deliberate about the strategies of the other agents
- If the game is played repeatedly and players converge to a solution, then it has to be a NE
- Dominant Strategy Equilibrium ⇒ Nash Equilibrium (but the converse is not true)

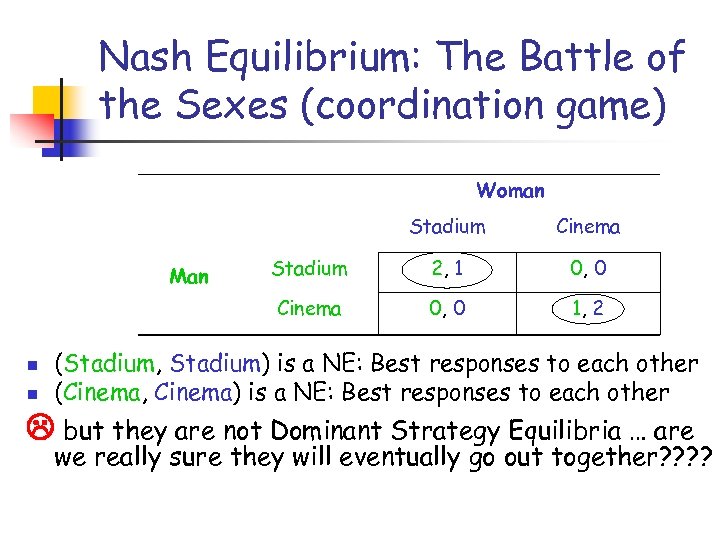

Nash Equilibrium: The Battle of the Sexes (coordination game)

| Man \ Woman | Stadium | Cinema |
|---|---|---|
| Stadium | 2, 1 | 0, 0 |
| Cinema | 0, 0 | 1, 2 |

- (Stadium, Stadium) is a NE: best responses to each other
- (Cinema, Cinema) is a NE: best responses to each other
- But they are not Dominant Strategy Equilibria … are we really sure they will eventually go out together?

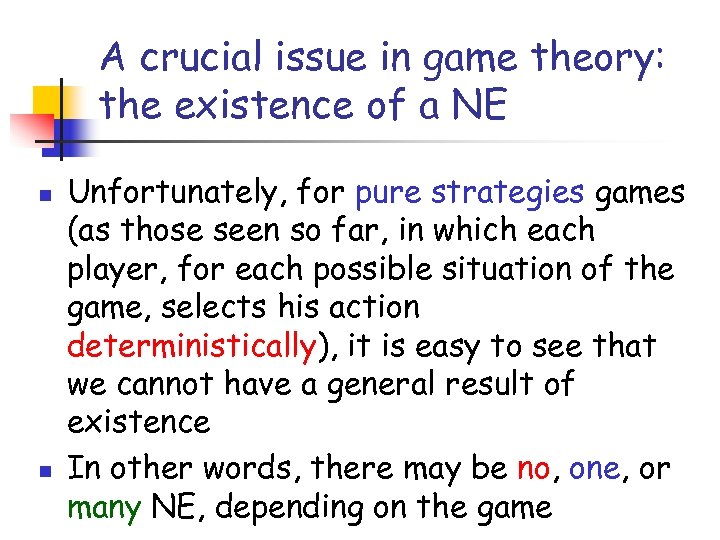

A crucial issue in game theory: the existence of a NE

- Unfortunately, for pure-strategy games (such as those seen so far, in which each player, for each possible situation of the game, selects his action deterministically), it is easy to see that we cannot have a general existence result
- In other words, there may be no, one, or many NE, depending on the game

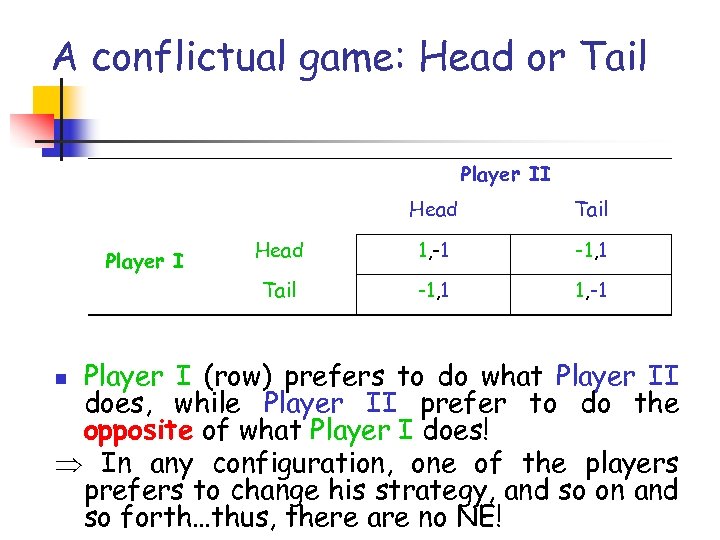

A conflictual game: Head or Tail

| Player I \ Player II | Head | Tail |
|---|---|---|
| Head | 1, -1 | -1, 1 |
| Tail | -1, 1 | 1, -1 |

Player I (row) prefers to do what Player II does, while Player II prefers to do the opposite of what Player I does!

- In any configuration, one of the players prefers to change his strategy, and so on and so forth… thus, there are no NE!

On the existence of a NE

- However, when a player can select his strategy randomly, by using a probability distribution over his set of possible pure strategies (mixed strategy), the following general result holds:
- Theorem (Nash, 1951): Any game with a finite set of players and a finite set of strategies has a NE of mixed strategies (i.e., there exists a profile of probability distributions for the players such that the expected payoff of each player cannot be improved by unilaterally changing the selected probability distribution).
- Head or Tail game: if each player sets p(Head) = p(Tail) = 1/2, then the expected payoff of each player is 0, and this is a NE, since no player can improve on this by unilaterally choosing a different randomization!
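The Head or Tail claim can be verified numerically. The sketch below is illustrative only (the function names are hypothetical); it uses exact fractions and checks the equilibrium condition against pure deviations, which suffices because a player's expected payoff is linear in his own mixed strategy.

```python
from fractions import Fraction

# Head or Tail payoffs; rows and columns are ordered (Head, Tail).
A = [[1, -1], [-1, 1]]   # Player I's payoffs
B = [[-1, 1], [1, -1]]   # Player II's payoffs


def expected_payoffs(x, y):
    """Expected payoffs when I mixes with x and II mixes with y."""
    u1 = sum(x[i] * y[j] * A[i][j] for i in range(2) for j in range(2))
    u2 = sum(x[i] * y[j] * B[i][j] for i in range(2) for j in range(2))
    return u1, u2


def is_mixed_nash(x, y):
    """Check the NE condition against every pure deviation of each player.

    Pure deviations are enough: expected payoff is linear in a player's own
    mixed strategy, so some pure strategy is always among the best responses.
    """
    u1, u2 = expected_payoffs(x, y)
    pure = [[1, 0], [0, 1]]
    return all(expected_payoffs(d, y)[0] <= u1 for d in pure) and all(
        expected_payoffs(x, d)[1] <= u2 for d in pure
    )


half = [Fraction(1, 2), Fraction(1, 2)]
print(expected_payoffs(half, half) == (0, 0))  # True
print(is_mixed_nash(half, half))               # True
print(is_mixed_nash([1, 0], half))             # False: II would switch to Tail
```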

Some big computational issues concerned with NE

1. Finding a NE in mixed/pure (if any) strategies
2. Establishing the quality of a NE, as compared to a cooperative system, namely a system in which agents can collaborate (recall the Prisoner’s Dilemma)
3. In a repeated game, establishing whether and in how many steps the system will eventually converge to a NE (recall the Battle of the Sexes)
4. Verifying a NE, approximating a NE, NE in resource-constrained (e.g., time, space, message size) settings, breaking a NE by colluding, etc. (interested in a Ph.D.?)

Finding a NE in mixed strategies

- How do we select the correct probability distribution? It looks like a problem in the continuous…
- …but it’s not, actually! It can be shown that such a distribution can be found by selecting for each player a best possible subset of pure strategies (the so-called best support), over which the probability distribution can actually be found by solving a system of algebraic equations!
- In practice, the problem can be solved by a simplex-like technique called the Lemke–Howson algorithm, which however is exponential in the worst case
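To make the "system of algebraic equations" concrete, here is a minimal sketch for 2×2 games that tries only the full support (both players mix over both pure strategies) and solves the two indifference equations in closed form. It is not the Lemke–Howson algorithm, and the function name is hypothetical.

```python
from fractions import Fraction


def full_support_equilibrium(A, B):
    """Mixed NE of a 2x2 game in which both players use both pure strategies.

    A[i][j], B[i][j]: payoffs of the row and column player.
    Returns (p, q) = probabilities of the first row and the first column,
    or None if no equilibrium with full support exists.
    """
    # p makes the column player indifferent between the two columns:
    #   p*B[0][0] + (1-p)*B[1][0] = p*B[0][1] + (1-p)*B[1][1]
    den_p = B[0][0] - B[1][0] - B[0][1] + B[1][1]
    # q makes the row player indifferent between the two rows:
    #   q*A[0][0] + (1-q)*A[0][1] = q*A[1][0] + (1-q)*A[1][1]
    den_q = A[0][0] - A[0][1] - A[1][0] + A[1][1]
    if den_p == 0 or den_q == 0:
        return None
    p = Fraction(B[1][1] - B[1][0], den_p)
    q = Fraction(A[1][1] - A[0][1], den_q)
    return (p, q) if 0 < p < 1 and 0 < q < 1 else None


# Battle of the Sexes: rows/columns ordered (Stadium, Cinema).
bos = full_support_equilibrium([[2, 0], [0, 1]], [[1, 0], [0, 2]])
# Head or Tail: rows/columns ordered (Head, Tail).
hot = full_support_equilibrium([[1, -1], [-1, 1]], [[-1, 1], [1, -1]])
print(bos)  # (Fraction(2, 3), Fraction(1, 3))
print(hot)  # (Fraction(1, 2), Fraction(1, 2))
```

For general games one would have to enumerate all pairs of supports, which is the source of the exponential worst case.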

Is finding a NE NP-hard?

- In pure strategies, yes, for many games of interest
- What about mixed strategies? W.l.o.g., we restrict ourselves to 2-player games: then, we wonder whether 2-NASH is NP-hard.
- Reminder: a problem P is NP-hard if one can Turing-reduce in polynomial time any NP-complete problem P’ to it (this means that P’ can be solved in polynomial time by an oracle machine with an oracle for P)
- But 2-NASH can be solved in polynomial time by a NDTM (by enumerating all the supports); moreover, every instance of 2-NASH is a “yes”-instance (since every game has a NE), and so we could certify in polynomial time on a NDTM both “yes”- and “no”-instances of any NP-complete problem ⇒ if 2-NASH is NP-hard then NP = coNP (hard to believe!)

The complexity class PPAD

- Definition (Papadimitriou, 1994): PPAD (Polynomial Parity Argument – Directed case) is a subclass of TFNP, where existence of a solution is guaranteed by a parity argument. Roughly speaking, PPAD contains all problems whose solution space can be set up as the (non-empty) set of all sinks in a suitable directed graph (generated by the input instance), which however has a number of vertices exponential in the size of the input.
- Breakthrough: 2-NASH is PPAD-complete!!! (Chen & Deng, FOCS’06)
- Remark: It could very well be that PPAD = P ≠ NP… but several PPAD-complete problems have resisted poly-time attacks for decades (e.g., finding Brouwer fixed points)

Finding a NE in pure strategies

By definition, it is easy to see that an entry $(p_1, \ldots, p_N)$ of the payoff matrix is a NE if and only if, for each $i = 1, \ldots, N$, $p_i$ is the maximum $i$-th element of the row $(p_1, \ldots, p_{i-1}, \{p(s) : s \in S_i\}, p_{i+1}, \ldots, p_N)$.

- Notice that, with $N$ players, an explicit (i.e., in normal-form) representation of the payoff functions is exponential in $N$ ⇒ brute-force (i.e., enumerative) search for pure NE is then exponential in the number of players (even if it is still polynomial in the input size, but the normal-form representation need not be a minimal-space representation of the input!)
- Alternative cheaper methods are sought: for many games of interest, a NE can be found in poly-time w.r.t. the number of players (e.g., using the powerful potential method)
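The brute-force search described above can be sketched as follows. This is an illustrative enumeration over a normal-form payoff table (the function name and data layout are assumptions, not taken from the slides), applied to the Battle of the Sexes and Head or Tail games seen earlier.

```python
from itertools import product


def pure_nash_equilibria(strategy_sets, payoff):
    """Enumerate all pure-strategy NE of a normal-form game by brute force.

    strategy_sets: one list of strategies per player.
    payoff: dict mapping each strategy profile (tuple) to a payoff tuple.
    """
    equilibria = []
    for profile in product(*strategy_sets):
        stable = True
        for i, options in enumerate(strategy_sets):
            # Best payoff player i can obtain by deviating unilaterally.
            best = max(
                payoff[profile[:i] + (s,) + profile[i + 1:]][i] for s in options
            )
            if payoff[profile][i] < best:
                stable = False
                break
        if stable:
            equilibria.append(profile)
    return equilibria


# Battle of the Sexes (Man picks the row, Woman the column).
BOS = {
    ("Stadium", "Stadium"): (2, 1),
    ("Stadium", "Cinema"): (0, 0),
    ("Cinema", "Stadium"): (0, 0),
    ("Cinema", "Cinema"): (1, 2),
}
# Head or Tail (Player I picks the row, Player II the column).
HEAD_OR_TAIL = {
    ("Head", "Head"): (1, -1),
    ("Head", "Tail"): (-1, 1),
    ("Tail", "Head"): (-1, 1),
    ("Tail", "Tail"): (1, -1),
}

bos_ne = pure_nash_equilibria([["Stadium", "Cinema"]] * 2, BOS)
hot_ne = pure_nash_equilibria([["Head", "Tail"]] * 2, HEAD_OR_TAIL)
print(bos_ne)  # [('Stadium', 'Stadium'), ('Cinema', 'Cinema')]
print(hot_ne)  # []
```

The loop visits every cell of the payoff matrix, so its running time is polynomial in the size of the table but exponential in the number of players.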

On the quality of a NE

- How inefficient is a NE in comparison to an idealized situation in which the players would strive to collaborate selflessly, with the common goal of maximizing the social welfare?
- Recall: in the Prisoner’s Dilemma (PD) game, the DSE (and hence NE) means a total of 10 years in jail for the players. However, if they did not implicate each other, then they would stay a total of only 2 years in jail!

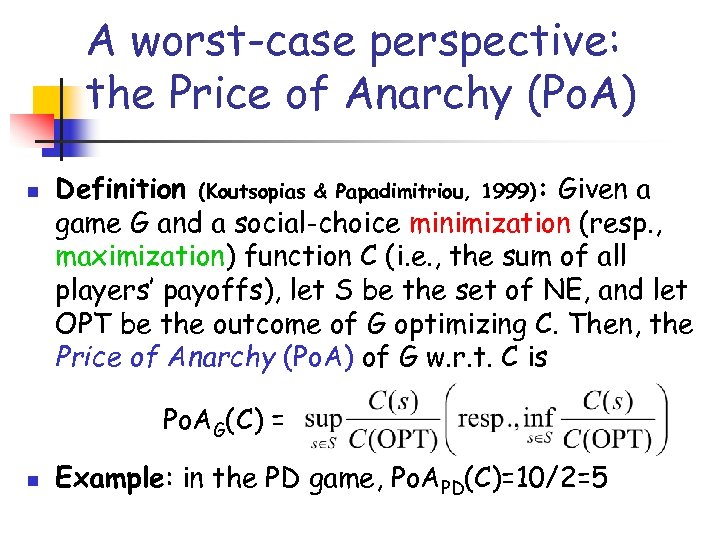

A worst-case perspective: the Price of Anarchy (PoA)

- Definition (Koutsoupias & Papadimitriou, 1999): Given a game $G$ and a social-choice minimization (resp., maximization) function $C$ (e.g., the sum of all players’ payoffs), let $S$ be the set of NE, and let OPT be the outcome of $G$ optimizing $C$. Then, the Price of Anarchy (PoA) of $G$ w.r.t. $C$ is

  $\mathrm{PoA}_G(C) = \sup_{s \in S} \frac{C(s)}{C(\mathrm{OPT})}$ (resp., $\inf_{s \in S} \frac{C(s)}{C(\mathrm{OPT})}$)

- Example: in the PD game, $\mathrm{PoA}_{PD}(C) = 10/2 = 5$
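The PD example can be reproduced with a few lines of code. This is a sketch for the minimization case only, with the social cost taken to be the total number of years in jail; the function name is hypothetical and the set of equilibria is passed in rather than computed.

```python
from fractions import Fraction

# Years in jail (Prisoner I, Prisoner II) for each strategy profile.
YEARS = {
    ("Don't Implicate", "Don't Implicate"): (1, 1),
    ("Don't Implicate", "Implicate"): (6, 0),
    ("Implicate", "Don't Implicate"): (0, 6),
    ("Implicate", "Implicate"): (5, 5),
}


def price_of_anarchy(costs, equilibria):
    """Worst equilibrium social cost divided by the optimal social cost."""
    social_cost = {profile: sum(c) for profile, c in costs.items()}
    optimum = min(social_cost.values())
    worst_equilibrium = max(social_cost[e] for e in equilibria)
    return Fraction(worst_equilibrium, optimum)


poa = price_of_anarchy(YEARS, [("Implicate", "Implicate")])
print(poa)  # 5
```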

A case study for the existence and quality of a NE: selfish routing on Internet

- Internet components are made up of heterogeneous nodes and links, and the network architecture is open-based and dynamic
- Internet users behave selfishly: they generate traffic, and their only goal is to download/upload data as fast as possible!
- But the more a link is used, the slower it becomes, and there is no central authority “optimizing” the data flow…
- So, why does Internet eventually work in such a jungle???

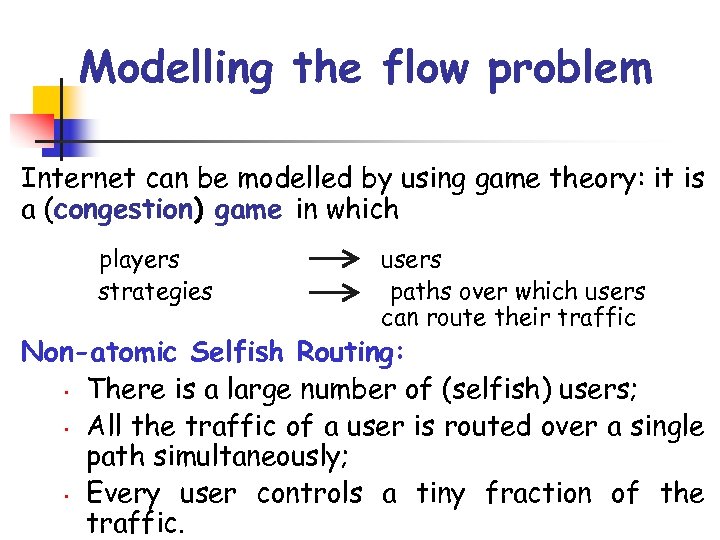

Modelling the flow problem

Internet can be modelled by using game theory: it is a (congestion) game in which

- players ↔ users
- strategies ↔ paths over which users can route their traffic

Non-atomic Selfish Routing:

- There is a large number of (selfish) users;
- All the traffic of a user is routed over a single path simultaneously;
- Every user controls a tiny fraction of the traffic.

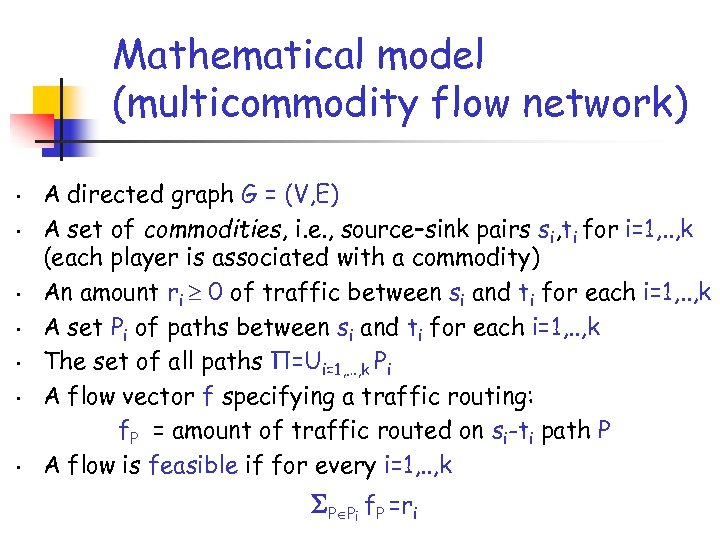

Mathematical model (multicommodity flow network)

- A directed graph $G = (V, E)$
- A set of commodities, i.e., source–sink pairs $s_i, t_i$ for $i = 1, \ldots, k$ (each player is associated with a commodity)
- An amount $r_i \geq 0$ of traffic between $s_i$ and $t_i$ for each $i = 1, \ldots, k$
- A set $P_i$ of paths between $s_i$ and $t_i$ for each $i = 1, \ldots, k$
- The set of all paths $\Pi = \bigcup_{i=1,\ldots,k} P_i$
- A flow vector $f$ specifying a traffic routing: $f_P$ = amount of traffic routed on $s_i$-$t_i$ path $P$
- A flow is feasible if for every $i = 1, \ldots, k$: $\sum_{P \in P_i} f_P = r_i$

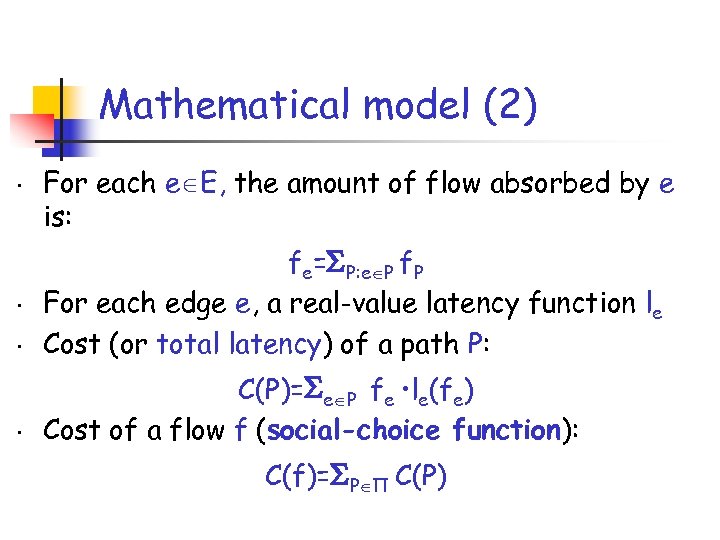

Mathematical model (2)

- For each $e \in E$, the amount of flow absorbed by $e$ is: $f_e = \sum_{P : e \in P} f_P$
- For each edge $e$, a real-valued latency function $l_e$
- Cost (or total latency) of a path $P$: $C(P) = \sum_{e \in P} f_e \cdot l_e(f_e)$
- Cost of a flow $f$ (social-choice function): $C(f) = \sum_{P \in \Pi} C(P)$

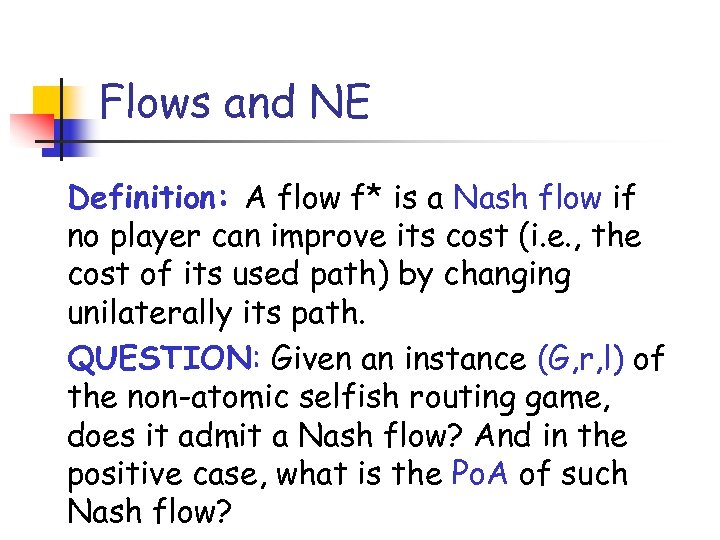

Flows and NE

Definition: A flow f* is a Nash flow if no player can improve its cost (i.e., the cost of its used path) by unilaterally changing its path.

QUESTION: Given an instance (G, r, l) of the non-atomic selfish routing game, does it admit a Nash flow? And in the positive case, what is the PoA of such a Nash flow?

![Example: Pigou’s game [1920]](https://present5.com/presentation/57570c93cf11e667ddaaafb581240f00/image-36.jpg)

Example: Pigou’s game [1920]

[Figure: two parallel edges from s to t, total amount of flow 1. On the upper edge the latency depends on the congestion, $l(x) = x$, where $x$ is the fraction of flow using the edge; on the lower edge the latency is fixed, $l(x) = 1$.]

- What is the NE of this game? Trivial: all the flow tends to travel on the upper edge ⇒ the cost of the flow is $1 \cdot 1 + 0 \cdot 1 = 1$
- What is the PoA of this NE? The optimal solution is the minimum of $C(x) = x \cdot x + (1 - x) \cdot 1$ ⇒ $C'(x) = 2x - 1$ ⇒ $\mathrm{OPT} = 1/2$ ⇒ $C(\mathrm{OPT}) = 1/2 \cdot 1/2 + (1 - 1/2) \cdot 1 = 0.75$ ⇒ $\mathrm{PoA}(C) = 1/0.75 = 4/3$

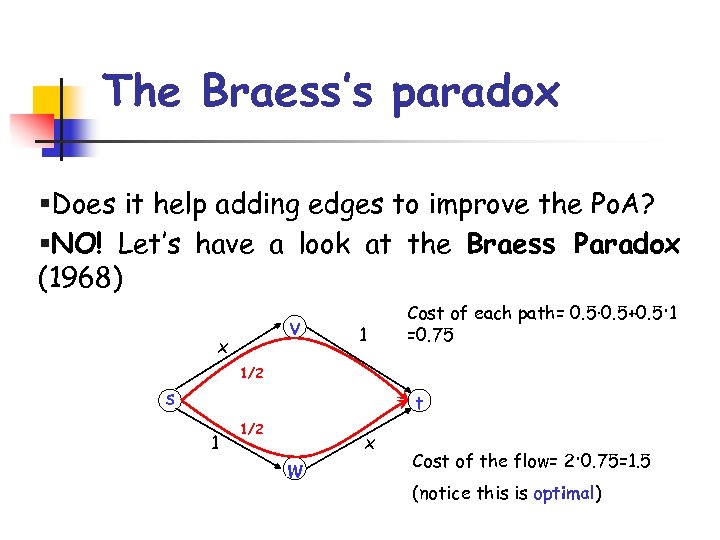

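The Braess’s paradox

- Does adding edges help to improve the PoA?
- NO! Let’s have a look at the Braess Paradox (1968)

[Figure: network with nodes s, v, w, t; edges s→v and w→t have latency x, edges v→t and s→w have latency 1; a flow of 1/2 is routed on each of the paths s→v→t and s→w→t]

Cost of each path = 0.5·0.5 + 0.5·1 = 0.75

Cost of the flow = 2·0.75 = 1.5 (notice this is optimal)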

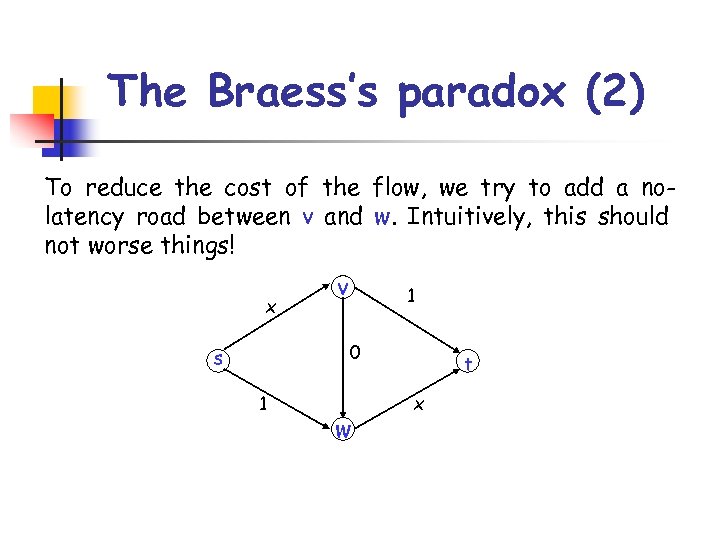

The Braess’s paradox (2)

To reduce the cost of the flow, we try to add a no-latency road between v and w. Intuitively, this should not make things worse!

[Figure: the same network with an additional edge v→w of latency 0]

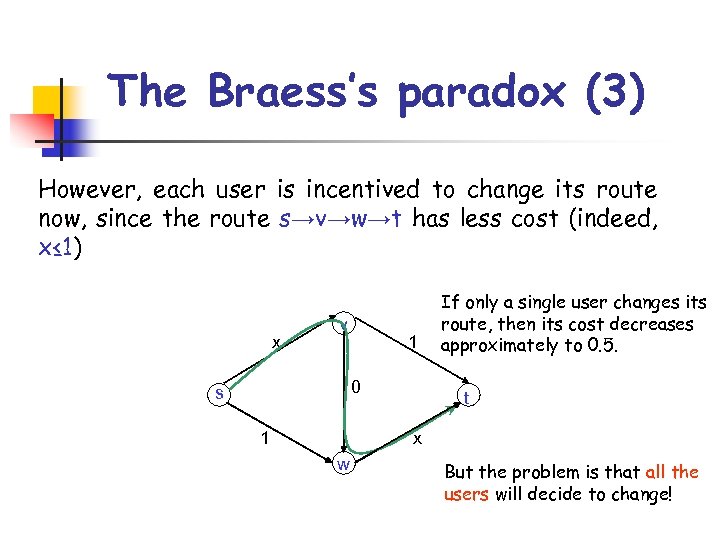

The Braess’s paradox (3)

However, each user now has an incentive to change its route, since the route s→v→w→t has a lower cost (indeed, x ≤ 1).

[Figure: the network with the additional edge v→w of latency 0]

If only a single user changes its route, then its cost decreases to approximately 0.5.

But the problem is that all the users will decide to change!

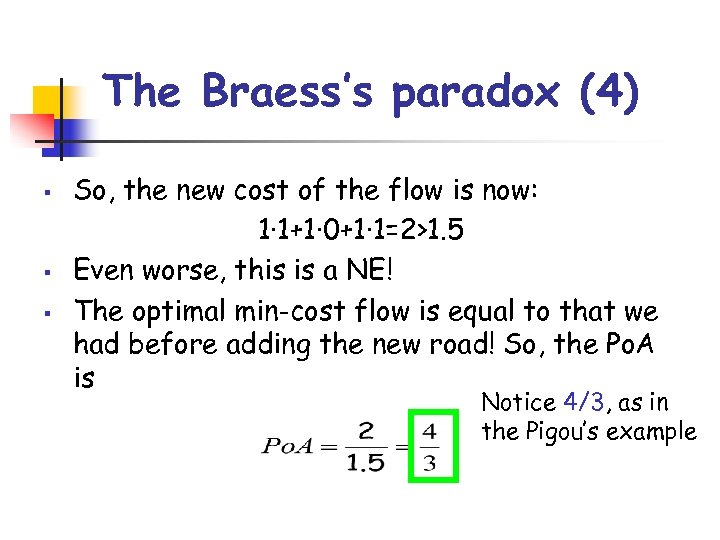

The Braess’s paradox (4)

- So, the new cost of the flow is now: 1·1 + 1·0 + 1·1 = 2 > 1.5
- Even worse, this is a NE!
- The optimal min-cost flow is equal to the one we had before adding the new road! So, the PoA is 2/1.5 = 4/3
- Notice: 4/3, as in Pigou’s example
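The numbers of the Braess example can be recomputed with a short script. This is a sketch under stated assumptions: the flow cost is computed edge by edge as the sum of f_e · l_e(f_e), which agrees with the slides' figures here, and the equilibrium check uses the standard per-user latency of a path (the sum of l_e(f_e) along it), which is an assumption of this sketch rather than the slides' C(P). All names are hypothetical.

```python
from fractions import Fraction

# Edge latency functions of the Braess network after adding the v->w road.
LATENCY = {
    ("s", "v"): lambda x: x,
    ("v", "t"): lambda x: 1,
    ("s", "w"): lambda x: 1,
    ("w", "t"): lambda x: x,
    ("v", "w"): lambda x: 0,
}


def edge_flows(path_flows):
    """Aggregate the flow on each edge from the flow on each path."""
    flows = {e: Fraction(0) for e in LATENCY}
    for path, amount in path_flows.items():
        for e in zip(path, path[1:]):
            flows[e] += amount
    return flows


def flow_cost(path_flows):
    """Total latency of a flow: sum over edges of f_e * l_e(f_e)."""
    return sum(f * LATENCY[e](f) for e, f in edge_flows(path_flows).items())


def path_latency(path, path_flows):
    """Latency experienced by one (infinitesimal) user routed along `path`."""
    flows = edge_flows(path_flows)
    return sum(LATENCY[e](flows[e]) for e in zip(path, path[1:]))


UP, DOWN, ZIGZAG = ("s", "v", "t"), ("s", "w", "t"), ("s", "v", "w", "t")
split = {UP: Fraction(1, 2), DOWN: Fraction(1, 2)}   # flow before the new road
all_zigzag = {ZIGZAG: Fraction(1)}                   # flow after everyone switches

print(flow_cost(split))                          # 3/2
print(flow_cost(all_zigzag))                     # 2
print(flow_cost(all_zigzag) / flow_cost(split))  # 4/3
# With everyone on s->v->w->t, all three paths have the same latency, so no
# user can gain by switching: the flow is at equilibrium.
print([path_latency(p, all_zigzag) for p in (UP, DOWN, ZIGZAG)])
```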

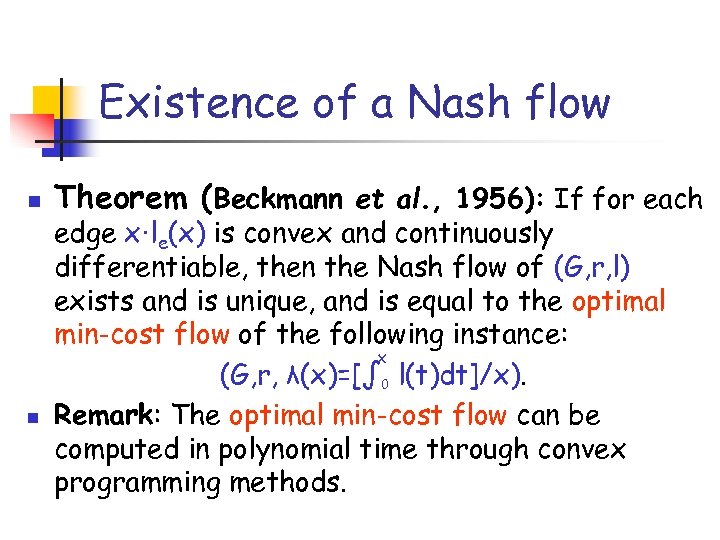

Existence of a Nash flow

- Theorem (Beckmann et al., 1956): If for each edge $x \cdot l_e(x)$ is convex and continuously differentiable, then the Nash flow of $(G, r, l)$ exists and is unique, and is equal to the optimal min-cost flow of the following instance: $\left(G, r, \lambda(x) = \frac{1}{x} \int_0^x l(t)\,dt\right)$.
- Remark: The optimal min-cost flow can be computed in polynomial time through convex programming methods.
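As a sanity check, the theorem can be applied by hand to Pigou's example, assuming latency $l(x) = x$ on the upper edge and $l(x) = 1$ on the lower edge, with $x$ the fraction of flow on the upper edge. The following worked derivation is a sketch added for illustration:

```latex
% Modified latencies \lambda(x) = \frac{1}{x}\int_0^x l(t)\,dt
\lambda_{\mathrm{upper}}(x) = \frac{1}{x}\int_0^x t\,dt = \frac{x}{2},
\qquad
\lambda_{\mathrm{lower}}(x) = \frac{1}{x}\int_0^x 1\,dt = 1.

% Cost of the flow in the modified instance
\Phi(x) = x \cdot \frac{x}{2} + (1 - x)\cdot 1 = \frac{x^2}{2} + 1 - x,
\qquad
\Phi'(x) = x - 1 \le 0 \quad \text{for } x \in [0, 1].

% \Phi is non-increasing on [0,1], so its minimum is attained at x = 1
\arg\min_{x \in [0,1]} \Phi(x) = 1.
```

The minimizer routes all the flow on the upper edge, which is exactly the Nash flow of Pigou's game.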

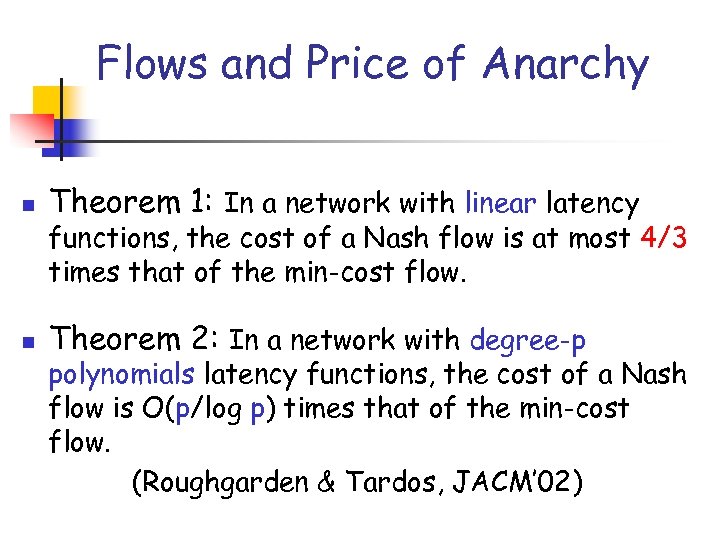

Flows and Price of Anarchy

- Theorem 1: In a network with linear latency functions, the cost of a Nash flow is at most 4/3 times that of the min-cost flow.
- Theorem 2: In a network with degree-p polynomial latency functions, the cost of a Nash flow is O(p/log p) times that of the min-cost flow.

(Roughgarden & Tardos, JACM’02)

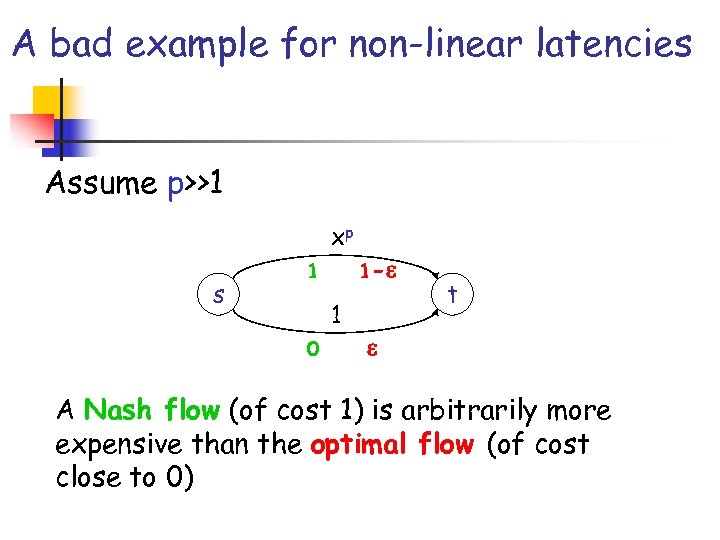

A bad example for non-linear latencies

Assume $p \gg 1$.

[Figure: two parallel edges from s to t, one with latency $x^p$ and one with constant latency 1]

A Nash flow (of cost 1) is arbitrarily more expensive than the optimal flow (of cost close to 0)
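The growth of the gap can be illustrated numerically. The sketch below assumes a Pigou-like network (one edge with latency x^p, one with constant latency 1, total flow 1), which is an interpretation of the figure; the function names are hypothetical. The optimum minimizes C(x) = x^(p+1) + (1 − x), and setting C'(x) = (p+1)·x^p − 1 = 0 gives x = (p+1)^(−1/p).

```python
def pigou_optimum(p):
    """Optimal fraction on the x^p edge and the resulting flow cost."""
    x = (p + 1) ** (-1.0 / p)        # root of C'(x) = (p + 1) * x**p - 1
    cost = x ** (p + 1) + (1 - x)    # C(x) = x * x**p + (1 - x) * 1
    return x, cost


def pigou_poa(p):
    """Nash flow cost (1: everyone uses the x^p edge) over the optimal cost."""
    return 1.0 / pigou_optimum(p)[1]


for p in (1, 10, 100, 1000):
    x, cost = pigou_optimum(p)
    print(p, round(x, 4), round(cost, 4), round(pigou_poa(p), 2))
```

For p = 1 the ratio is 4/3, matching Pigou's linear example, and it keeps growing as p increases.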

Convergence towards a NE (in pure strategies games)

- OK, we know that selfish routing is not so bad at its NE, but are we really sure this point of equilibrium will eventually be reached?
- Convergence Time: number of moves made by the players to reach a NE from an arbitrary initial state
- Question: Is the convergence time (polynomially) bounded in the number of players?

The potential function method

- (Rough) Definition: A potential function for a game (if any) is a real-valued function, defined on the set of possible outcomes of the game, such that the equilibria of the game are precisely the local optima of the potential function.
- Theorem: In any finite game admitting a potential function, best response dynamics always converge to a NE of pure strategies.
- But how many steps are needed to reach a NE? It depends on the combinatorial structure of the players' strategy space…
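Best response dynamics can be illustrated on a toy game that is not taken from the slides: an atomic load-balancing game in which each player picks one of several identical links and pays the number of players on its link. For this game the Rosenthal potential, the sum over links of 1 + 2 + … + load, strictly decreases with every improving move. All names below are hypothetical.

```python
def loads(assignment, n_links):
    """Number of players on each link."""
    counts = [0] * n_links
    for link in assignment:
        counts[link] += 1
    return counts


def potential(assignment, n_links):
    """Rosenthal potential for latency l(x) = x: sum_e (1 + 2 + ... + load_e)."""
    return sum(c * (c + 1) // 2 for c in loads(assignment, n_links))


def best_response_dynamics(assignment, n_links):
    """Let players move to a best response, one at a time, until nobody can improve.

    Returns the final assignment and the potential after each move.
    """
    assignment = list(assignment)
    trace = [potential(assignment, n_links)]
    improved = True
    while improved:
        improved = False
        for player, current in enumerate(assignment):
            counts = loads(assignment, n_links)

            def cost(link):
                # Load the player would experience on `link` after moving there.
                return counts[link] + (0 if link == current else 1)

            best = min(range(n_links), key=cost)
            if cost(best) < cost(current):
                assignment[player] = best
                trace.append(potential(assignment, n_links))
                improved = True
    return assignment, trace


final, trace = best_response_dynamics([0] * 6, n_links=3)
print(loads(final, 3))  # [2, 2, 2]
print(trace)            # strictly decreasing, starting at 21 and ending at 9
```

Since the potential is a non-negative integer that drops at every move, the dynamics must stop, and they can only stop at a profile where no player can improve, i.e. a pure NE.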

Convergence towards the Nash flow

- Positive result: The non-atomic selfish routing game is a potential game (and moreover, for many instances, the convergence time is polynomial).
- Negative result: However, there exist instances of the non-atomic selfish routing game for which the convergence time is exponential (under some mild assumptions).
