- Number of slides: 27

Advanced TuTalk Dialogue Agents

Pamela Jordan, University of Pittsburgh, Learning Research and Development Center

Agenda
- Introducing the TuTalk dialogue system server
  - System architecture (briefly)
  - Web interface to server for
    - Fielding experiments
    - Analyzing experiments
    - Uploading sc and xml directly to the dialogue system server
- Introduce additional authoring features
  - Enabling and controlling automated feedback
  - Optional steps (sc only)
  - Looping (sc only)

Architecture of TuTalk Dialogue System
- Hub & spokes architecture
- Main modules:
  - Coordinator
  - Language recognition
  - Language generation
  - Dialogue manager
  - Dialogue History database
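
The hub & spokes idea can be illustrated with a minimal sketch, which is not TuTalk's actual code. The `Coordinator` class, its `register` and `send` methods, and the message dictionaries are all hypothetical names chosen for this example; the only point taken from the slide is that modules talk through a central coordinator, so a spoke can be swapped without touching the others.

```python
from typing import Callable, Dict

Handler = Callable[[dict], dict]


class Coordinator:
    """Hypothetical hub: modules (spokes) register here and are reached only via the hub."""

    def __init__(self) -> None:
        self._modules: Dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        # Registering under an existing name replaces that spoke.
        self._modules[name] = handler

    def send(self, name: str, message: dict) -> dict:
        # Route the message to the named spoke and return its reply.
        return self._modules[name](message)


def simple_recognizer(message: dict) -> dict:
    # Placeholder spoke: exact match against the expected concepts.
    text = message["text"]
    return {"concept": text if text in message["expected"] else None}


hub = Coordinator()
hub.register("recognition", simple_recognizer)
result = hub.send("recognition", {"text": "yes", "expected": ["yes", "no"]})
```

Because callers only know the name `"recognition"`, registering a different handler under that name is enough to replace the module.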

Language recognition
- Refers to labelled sets of alternative phrasings called concepts
- Inputs from dialogue manager: normalized sentence, set of expected response concepts
- Computes minimum edit distance: the number of adds and deletes of words needed to match input language to a concept
- Returns the expected response concept with the smallest minimum edit distance that falls within a threshold
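
The matching step described above can be sketched roughly as follows. This is an illustration under assumptions, not the recognition module's real code: the function names, the dictionary layout for concepts, and the treatment of the threshold as an inclusive absolute word count are all hypothetical. The distance counts only word insertions and deletions, as the slide states.

```python
from typing import Dict, List, Optional


def add_delete_distance(a: List[str], b: List[str]) -> int:
    """Minimum number of word insertions and deletions that turn a into b."""
    # dist[i][j] is the distance between a[:i] and b[:j].
    dist = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i in range(len(a) + 1):
        dist[i][0] = i  # delete all i words
    for j in range(len(b) + 1):
        dist[0][j] = j  # insert all j words
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                dist[i][j] = dist[i - 1][j - 1]
            else:
                dist[i][j] = 1 + min(dist[i - 1][j], dist[i][j - 1])
    return dist[len(a)][len(b)]


def recognize(sentence: str, concepts: Dict[str, List[str]],
              threshold: int) -> Optional[str]:
    """Return the label of the closest expected concept, or None if too far."""
    words = sentence.split()
    best_label, best_dist = None, None
    for label, phrasings in concepts.items():
        for phrasing in phrasings:
            d = add_delete_distance(words, phrasing.split())
            if best_dist is None or d < best_dist:
                best_label, best_dist = label, d
    # Assumption: the threshold is an inclusive upper bound on the distance.
    if best_dist is not None and best_dist <= threshold:
        return best_label
    return None


expected = {
    "agree": ["yes let us share", "sure"],
    "skip": ["no appetizer for me"],
}
```

With these hypothetical concepts, `recognize("yes let us share one", expected, 2)` selects `agree`, while an unrelated sentence with a tight threshold yields `None`.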

Language generation
- Refers to labelled sets of alternative phrasings called concepts
- Input from dialogue manager: a concept
- If > 1 alternative phrasing, removes the one last used according to dialogue history
- Randomly selects from remaining alternative phrasings
- Requests output of selected phrasing to student
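
The selection rule above is small enough to sketch directly. The function name `choose_phrasing` and its parameters are hypothetical; passing in a `random.Random` instance is a choice made here only so the example is reproducible.

```python
import random
from typing import List, Optional


def choose_phrasing(phrasings: List[str], last_used: Optional[str],
                    rng: random.Random) -> str:
    """Pick a phrasing for a concept, avoiding the one used last time."""
    candidates = list(phrasings)
    # Only drop the last-used phrasing when an alternative exists.
    if len(candidates) > 1 and last_used in candidates:
        candidates.remove(last_used)
    return rng.choice(candidates)


rng = random.Random(0)
choice = choose_phrasing(["Good!", "Right!", "Okay."], "Good!", rng)
```

When a concept has a single phrasing, that phrasing is returned even if it was the last one used.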

Integrating TuTalk
- Can integrate (embed/wrap) other modules
  - ProPL
  - Cordillera
- Hub & spokes architecture
  - Replaceable modules, e.g., NLU

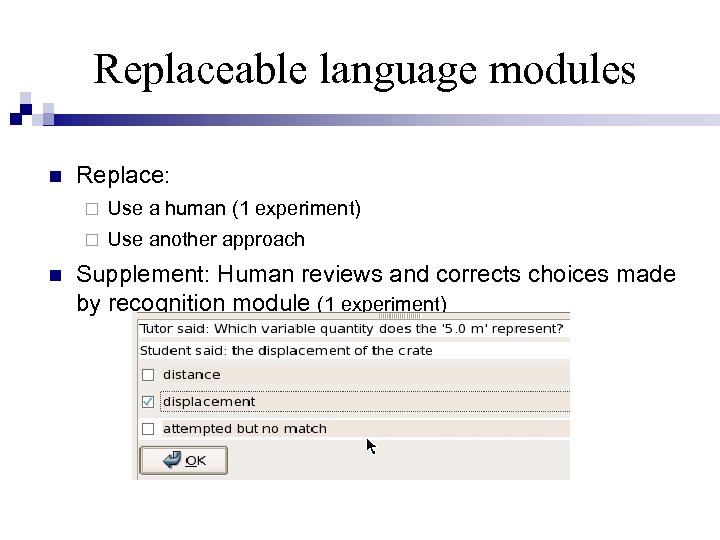

Replaceable language modules
- Replace:
  - Use a human (1 experiment)
  - Use another approach
- Supplement:
  - Human reviews and corrects choices made by recognition module (1 experiment)

Experiment management/analysis
- Experiment management tools: http://pyrenees.lrdc.pitt.edu/~tutalk/cgibin/admin.cgi
- One scenario/script = one dialogue agent = one condition, but can organize in other ways
  - One agent per unit
  - One agent that tells all knowledge components vs. one that elicits vs. one that uses a strategy to decide which to do
- Condition management
  - Start on server and leave it running
  - Designate who is allowed in the condition
- SQL database of information collected during interaction
  - Can download or query
  - Working on producing DataShop format
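
To give a feel for querying the interaction data, here is a sketch that uses Python's built-in `sqlite3` with an in-memory database. Everything about the schema is an assumption made for illustration: the slides do not show the real table layout or database engine, so the `turns` table, its columns, and the `transcript` helper are hypothetical.

```python
import sqlite3

# Hypothetical schema; the real TuTalk database layout is not shown in the slides.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE turns ("
    "student TEXT, condition_name TEXT, turn_no INTEGER, "
    "speaker TEXT, utterance TEXT)"
)
conn.executemany(
    "INSERT INTO turns VALUES (?, ?, ?, ?, ?)",
    [
        ("s1", "elicit", 2, "S", "The runner's"),
        ("s1", "elicit", 1, "T", "What horizontal forces act on it?"),
        ("s2", "tell", 1, "T", "The runner pushes the ball."),
    ],
)


def transcript(db: sqlite3.Connection, student: str) -> list:
    """Return one student's turns in order as 'speaker: utterance' lines."""
    rows = db.execute(
        "SELECT speaker, utterance FROM turns "
        "WHERE student = ? ORDER BY turn_no",
        (student,),
    )
    return [f"{speaker}: {utterance}" for speaker, utterance in rows]


s1_lines = transcript(conn, "s1")
```

The same kind of query, grouped by condition instead of student, would support comparing conditions in an experiment.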

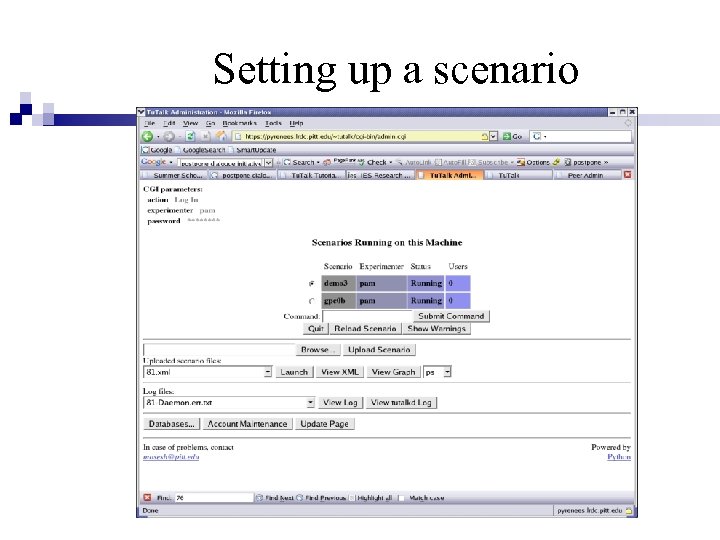

Setting up a scenario

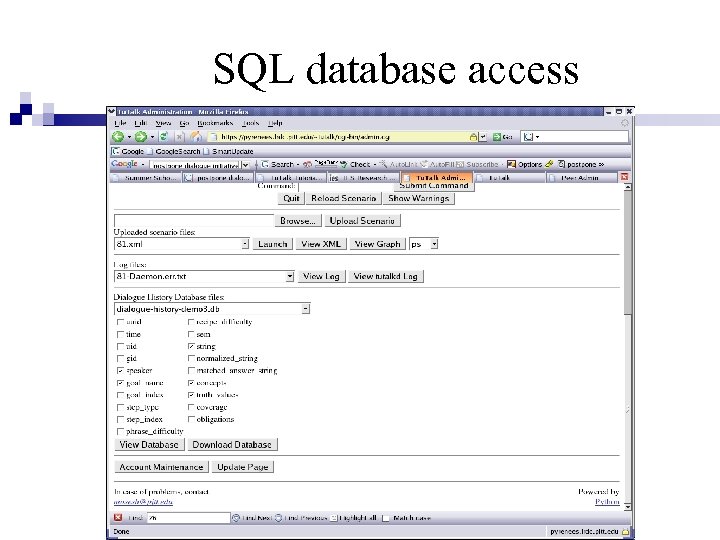

SQL database access

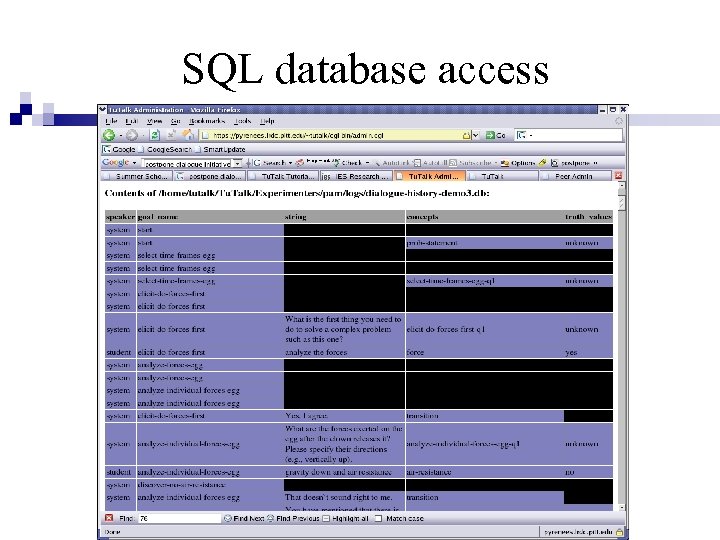

SQL database access

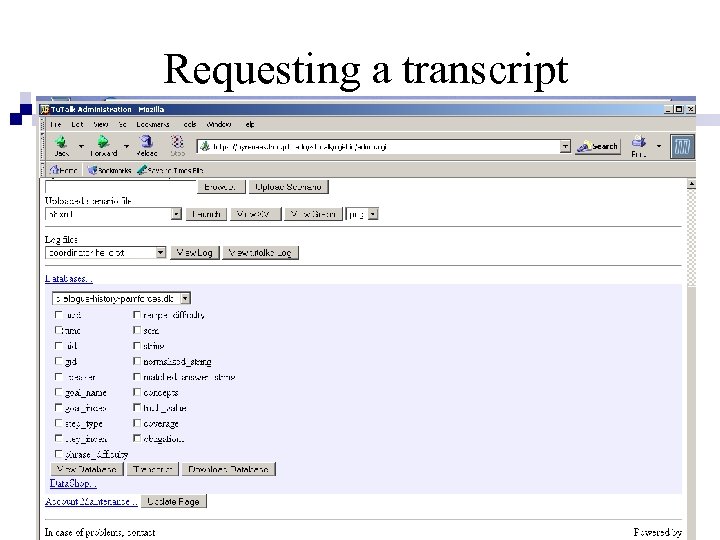

Requesting a transcript

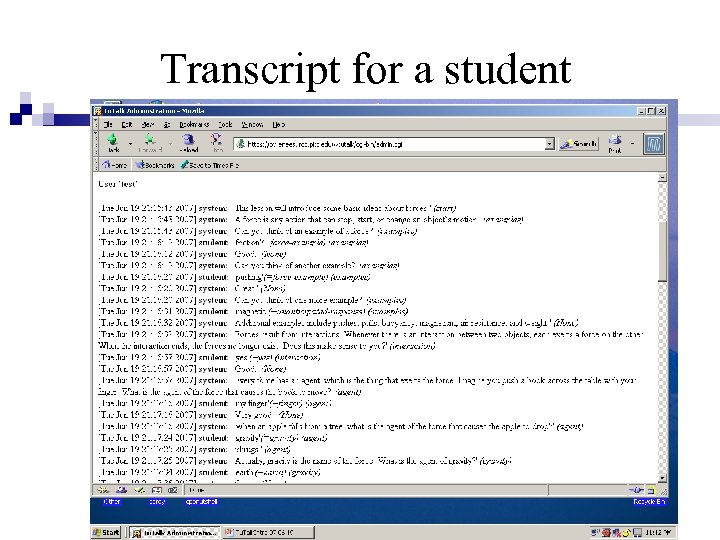

Transcript for a student

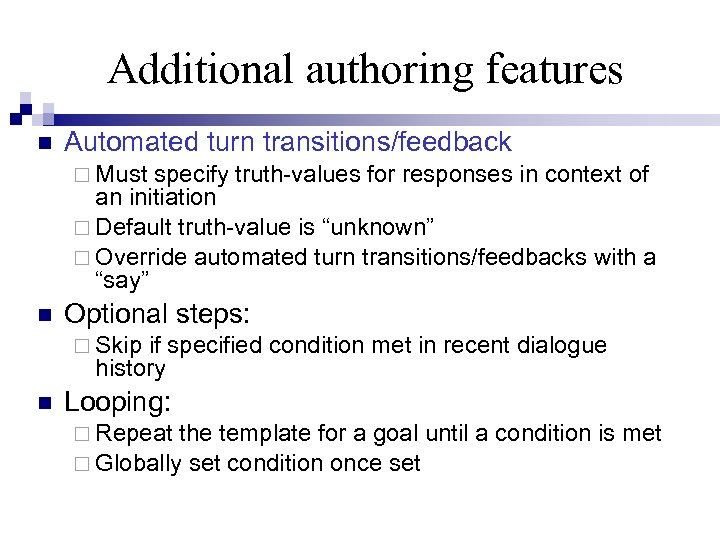

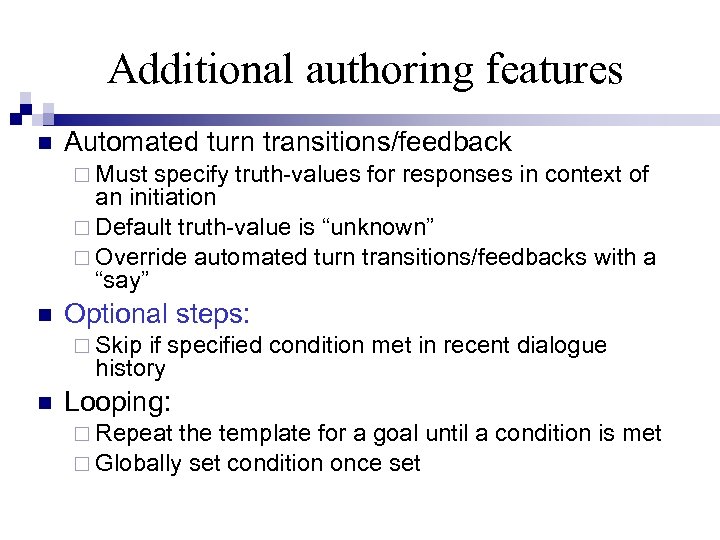

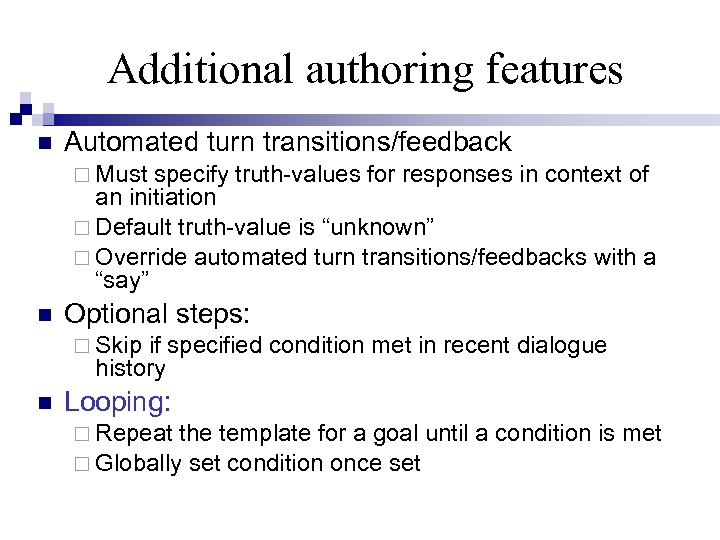

Additional authoring features
- Automated turn transitions/feedback
  - Must specify truth-values for responses in context of an initiation
  - Default truth-value is “unknown”
  - Override automated turn transitions/feedback with a “say”
- Optional steps: skip if a specified condition is met in recent dialogue history
- Looping: repeat the template for a goal until a condition is met
  - Once set, the condition is set globally

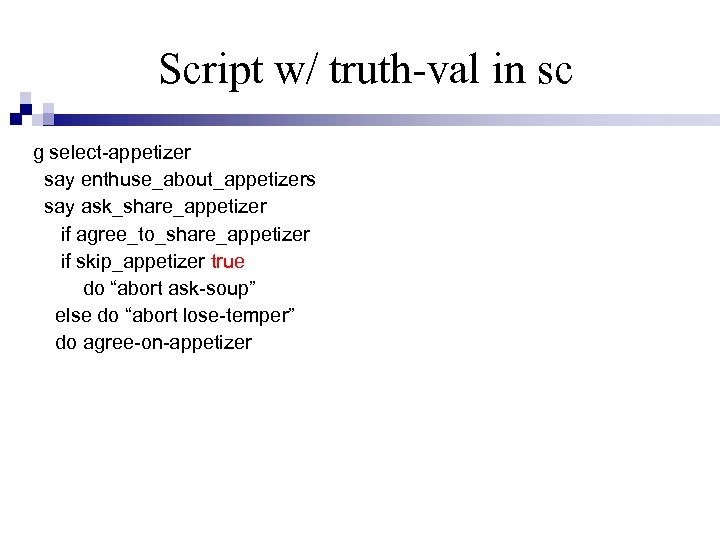

Script with truth values in sc

    g select-appetizer
      say enthuse_about_appetizers
      say ask_share_appetizer
        if agree_to_share_appetizer
        if skip_appetizer true
          do "abort ask-soup"
        else
          do "abort lose-temper"
      do agree-on-appetizer

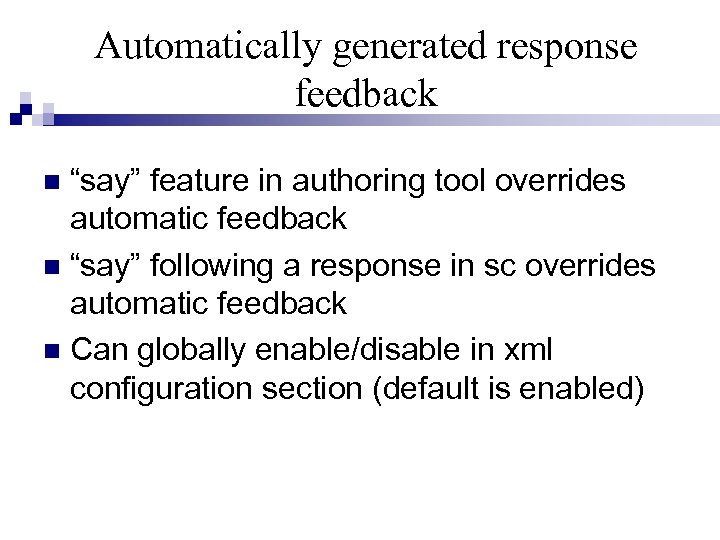

Automatically generated response feedback
- “say” feature in authoring tool overrides automatic feedback
- “say” following a response in sc overrides automatic feedback
- Can globally enable/disable in xml configuration section (default is enabled)
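
The precedence among these rules can be sketched as a single function. This is an illustration only: the function name, its parameters, and the feedback phrasings in `AUTO_FEEDBACK` are hypothetical, and the real system presumably draws its feedback wording from its own concepts.

```python
from typing import Optional

# Hypothetical feedback phrasings keyed by truth-value.
AUTO_FEEDBACK = {"true": "Right!", "false": "Not quite.", "unknown": "Okay."}


def response_feedback(truth_value: str = "unknown",
                      say_override: Optional[str] = None,
                      auto_enabled: bool = True) -> Optional[str]:
    """Decide what feedback follows a student response."""
    # An authored "say" always wins over automatic feedback.
    if say_override is not None:
        return say_override
    # Automatic feedback can be switched off globally (default is enabled).
    if not auto_enabled:
        return None
    # Unrecognized truth-values are treated like the default, "unknown".
    return AUTO_FEEDBACK.get(truth_value, AUTO_FEEDBACK["unknown"])


feedback = response_feedback(
    "true", say_override="Okay, in that case I won't get an appetizer.")
```

Here the authored line is returned even though the response is marked true, mirroring the override behaviour listed above.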

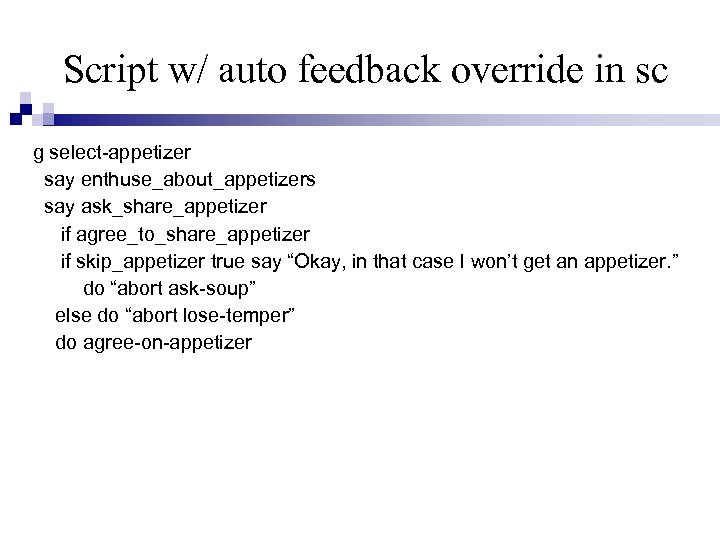

Script with auto feedback override in sc

    g select-appetizer
      say enthuse_about_appetizers
      say ask_share_appetizer
        if agree_to_share_appetizer
        if skip_appetizer true
          say "Okay, in that case I won't get an appetizer."
          do "abort ask-soup"
        else
          do "abort lose-temper"
      do agree-on-appetizer

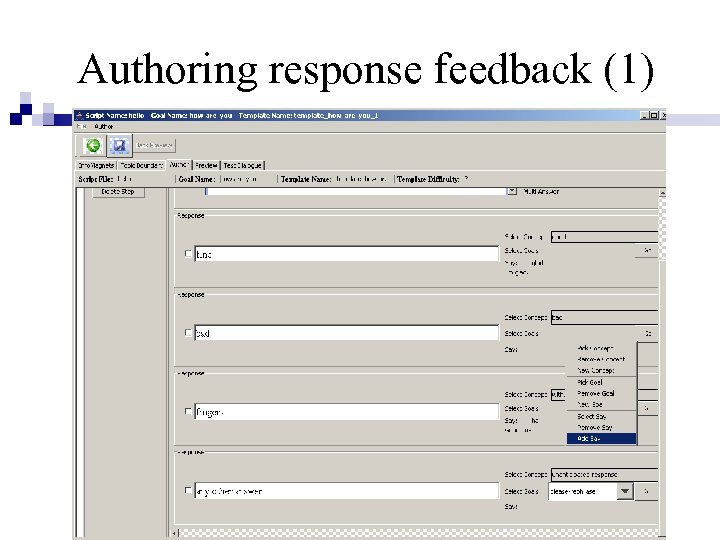

Authoring response feedback (1)

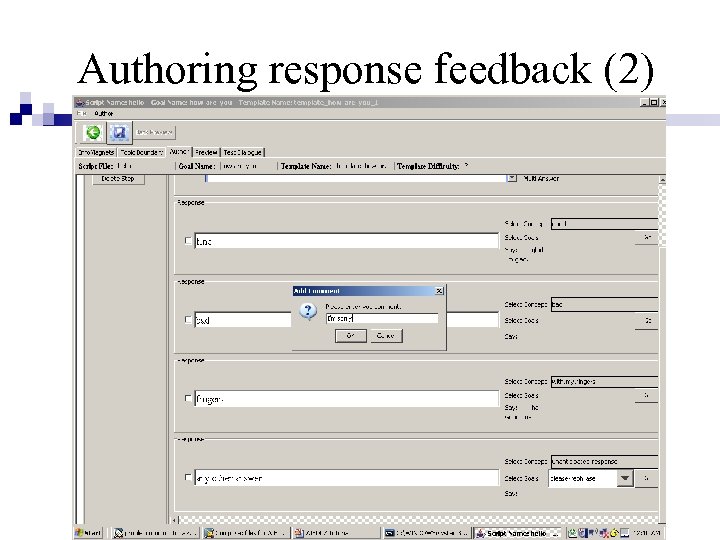

Authoring response feedback (2)

Additional authoring features
- Automated turn transitions/feedback
  - Must specify truth-values for responses in context of an initiation
  - Default truth-value is “unknown”
  - Override automated turn transitions/feedback with a “say”
- Optional steps: skip if a specified condition is met in recent dialogue history
- Looping: repeat the template for a goal until a condition is met
  - Once set, the condition is set globally

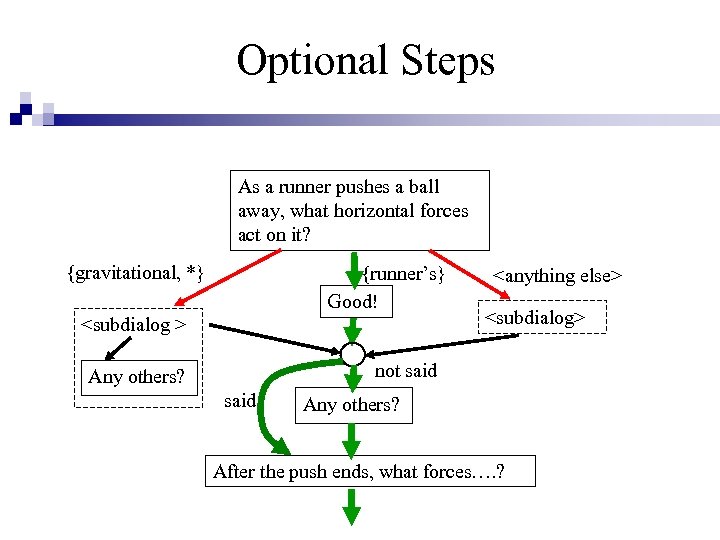

Optional Steps
- As a runner pushes a ball away, what horizontal forces act on it?
- {gravitational, *}
- {runner’s}
- Good!

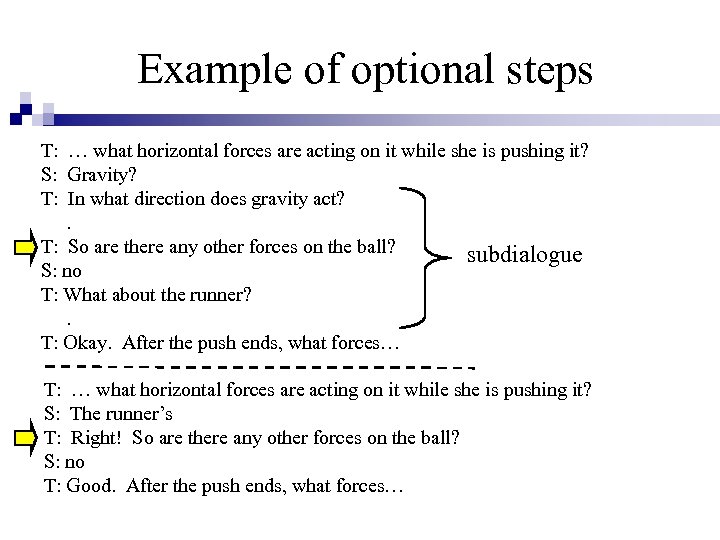

Example of optional steps

First dialogue:
- T: … what horizontal forces are acting on it while she is pushing it?
- S: Gravity?
- T: In what direction does gravity act?
- … (subdialogue)
- T: So are there any other forces on the ball?
- S: no
- T: What about the runner?
- …
- T: Okay. After the push ends, what forces…

Second dialogue:
- T: … what horizontal forces are acting on it while she is pushing it?
- S: The runner’s
- T: Right! So are there any other forces on the ball?
- S: no
- T: Good. After the push ends, what forces…
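
The skipping behaviour in this example can be sketched as a filter over the steps of a template. The data layout is an assumption for illustration: each step is paired with a hypothetical semantic label (here `runner-force`) that, if already present in recent dialogue history, makes the step unnecessary.

```python
from typing import List, Optional, Set, Tuple

# (tutor turn, semantic label that makes the step optional, or None if required)
Step = Tuple[str, Optional[str]]


def steps_to_run(steps: List[Step], recent_sems: Set[str]) -> List[str]:
    """Keep required steps and optional steps whose label was not yet covered."""
    return [text for text, opt_sem in steps
            if opt_sem is None or opt_sem not in recent_sems]


steps = [
    ("So are there any other forces on the ball?", None),
    ("What about the runner?", "runner-force"),  # optional step
    ("After the push ends, what forces...", None),
]

# The student already mentioned the runner's force, so the step is skipped.
after_runner_answer = steps_to_run(steps, {"runner-force"})
# The student answered "Gravity?", so the runner was never covered.
after_gravity_answer = steps_to_run(steps, set())
```

The two calls correspond to the two dialogues: the question about the runner appears only when the student has not already mentioned the runner.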

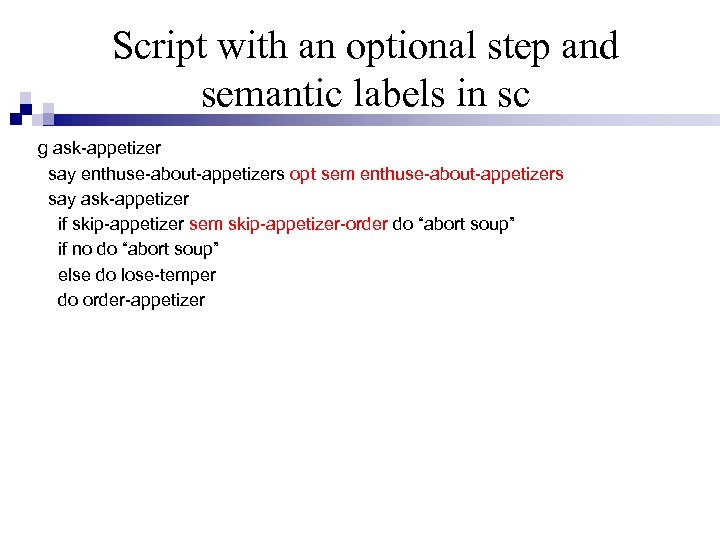

Script with an optional step and semantic labels in sc

    g ask-appetizer
      say enthuse-about-appetizers opt sem enthuse-about-appetizers
      say ask-appetizer
        if skip-appetizer sem skip-appetizer-order
          do "abort soup"
        if no
          do "abort soup"
        else
          do lose-temper
      do order-appetizer

Additional authoring features
- Automated turn transitions/feedback
  - Must specify truth-values for responses in context of an initiation
  - Default truth-value is “unknown”
  - Override automated turn transitions/feedback with a “say”
- Optional steps: skip if a specified condition is met in recent dialogue history
- Looping: repeat the template for a goal until a condition is met
  - Once set, the condition is set globally

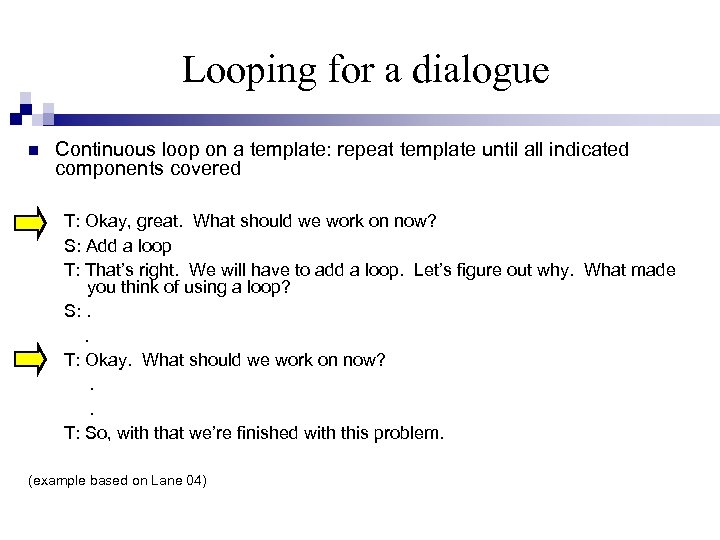

Looping for a dialogue
- Continuous loop on a template: repeat template until all indicated components covered

Example dialogue:
- T: Okay, great. What should we work on now?
- S: Add a loop
- T: That’s right. We will have to add a loop. Let’s figure out why. What made you think of using a loop?
- S: …
- T: Okay. What should we work on now?
- …
- T: So, with that we’re finished with this problem.

(example based on Lane 04)
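
A rough sketch of this looping behaviour follows. It is not how the dialogue manager is implemented; `run_loop`, the `pick_next` callback standing in for the student, and the component names are hypothetical. The loop condition is simply "all indicated components covered".

```python
from typing import Callable, List


def run_loop(components: List[str],
             pick_next: Callable[[List[str]], str]) -> List[str]:
    """Repeat the template until every component has been covered."""
    covered: List[str] = []
    transcript: List[str] = []
    while set(covered) != set(components):  # the loop's exit condition
        remaining = [c for c in components if c not in covered]
        transcript.append("T: What should we work on now?")
        choice = pick_next(remaining)  # stands in for the student's answer
        transcript.append(f"S: {choice}")
        if choice in remaining:
            covered.append(choice)
    transcript.append("T: So, with that we're finished with this problem.")
    return transcript


demo = run_loop(["add a loop", "add a counter"],
                lambda remaining: remaining[0])
```

Note that in this sketch a `pick_next` that never names a remaining component would loop forever; a real system would need some fallback for that case.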

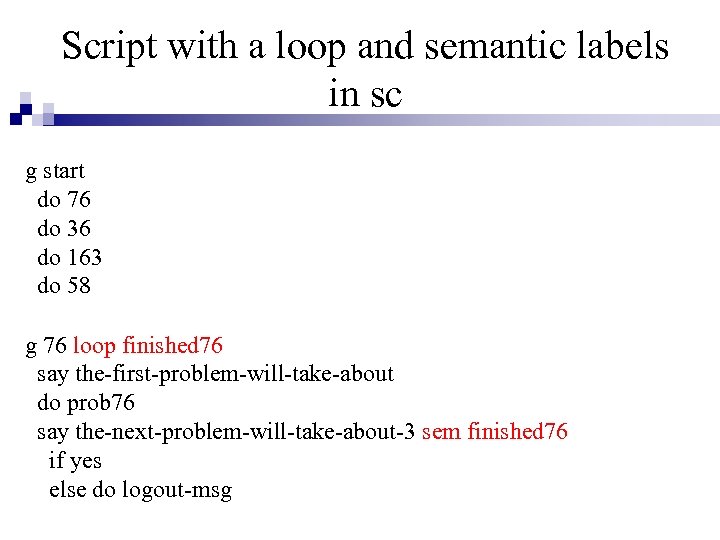

Script with a loop and semantic labels in sc

    g start
      do 76
      do 36
      do 163
      do 58
    g 76 loop finished 76
      say the-first-problem-will-take-about
      do prob 76
      say the-next-problem-will-take-about-3 sem finished 76
        if yes
        else
          do logout-msg

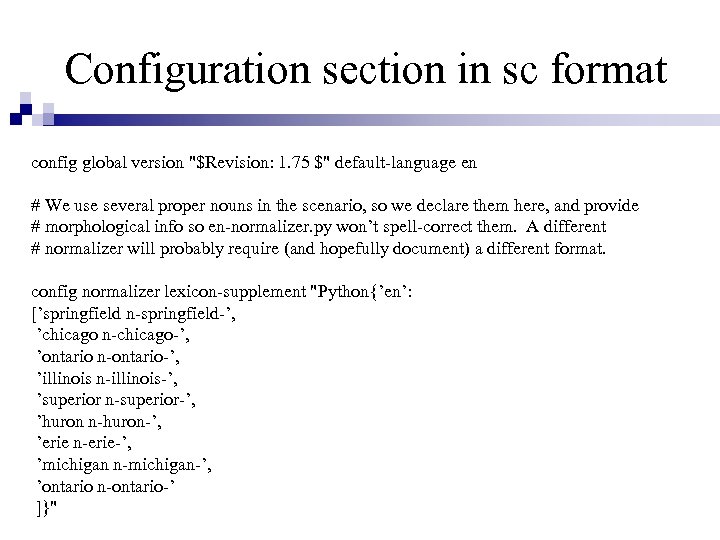

Configuration section in sc format

    config global
      version "$Revision: 1.75 $"
      default-language en

    # We use several proper nouns in the scenario, so we declare them here, and provide
    # morphological info so en-normalizer.py won't spell-correct them. A different
    # normalizer will probably require (and hopefully document) a different format.
    config normalizer
      lexicon-supplement "Python{'en': ['springfield n-springfield-', 'chicago n-chicago-', 'ontario n-ontario-', 'illinois n-illinois-', 'superior n-superior-', 'huron n-huron-', 'erie n-erie-', 'michigan n-michigan-', 'ontario n-ontario-' ]}"
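
The `lexicon-supplement` value looks like a Python dictionary literal with a `Python` prefix. How `en-normalizer.py` actually consumes it is not shown in the slides, so the following is only a guess at one way such a value could be read: the `protected_words` helper and the idea of extracting the first token of each entry as the word to protect from spell-correction are assumptions.

```python
import ast

# Shortened copy of the configuration value, for illustration.
raw = "Python{'en': ['springfield n-springfield-', 'chicago n-chicago-']}"


def protected_words(value: str, language: str) -> set:
    """Return the surface words listed for a language in the supplement."""
    prefix = "Python"
    if not value.startswith(prefix):
        raise ValueError("expected a value starting with 'Python'")
    # literal_eval parses the dictionary literal without executing code.
    table = ast.literal_eval(value[len(prefix):])
    # Assumption: the first token of each entry is the word itself,
    # and the second token carries the morphological info.
    return {entry.split()[0] for entry in table.get(language, [])}


english_words = protected_words(raw, "en")
```

A normalizer could then leave any word in `english_words` untouched during spell-correction.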