- Number of slides: 52

Advanced Artificial Intelligence
Lecture 1: Introduction to AI
Ruiyun Yu, Associate Professor
Software College, Northeastern University
Email: yury@mail.neu.edu.cn

Outline
• Course overview
• AI Introduction (Chapter 1)
  – What is AI?
  – Brief history of AI
  – What's the state of AI now?
• What's an agent? (Chapter 2)
  – Agents and rational agents
  – Properties of environments
  – Types of agents
• Two great persons

Textbook & Prerequisites
• Textbook
  – Artificial Intelligence: A Modern Approach (2nd or 3rd edition)
• Prerequisites
  – Algorithmic analysis (big-O notation, NP-completeness)
  – Basic probability theory

Your daily life: the AI vision
Could an intelligent agent living on your home computer manage your email, coordinate your work and social activities, help plan your vacations, and even watch your house while you are away on vacation?

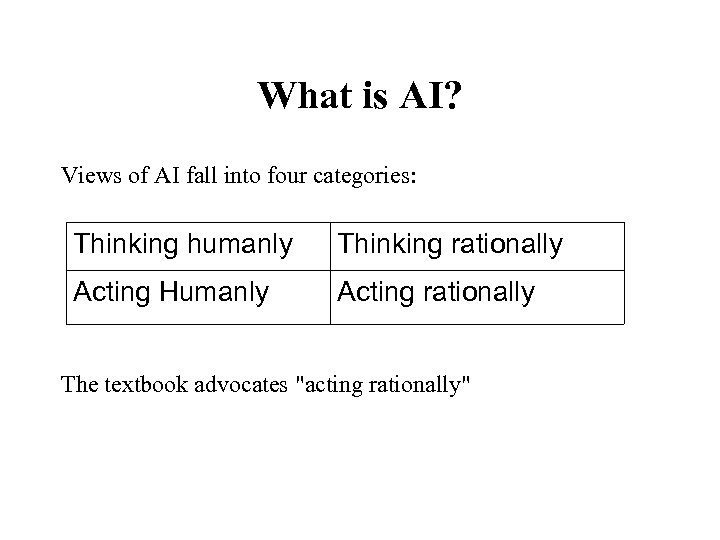

What is AI?
Views of AI fall into four categories:
• Thinking humanly
• Thinking rationally
• Acting humanly
• Acting rationally
The textbook advocates "acting rationally".

Acting humanly: Turing Test
• Turing (1950), "Computing Machinery and Intelligence": the question "Can machines think?" is recast as "Can machines behave intelligently?"
• Predicted that by 2000, a machine might have a 30% chance of fooling a lay person for 5 minutes
• Anticipated all major arguments against AI in the following 50 years
• Suggested major components of AI: knowledge, reasoning, language understanding, learning
• Capabilities a machine would need to pass the test:
  – Natural language processing
  – Knowledge representation
  – Automated reasoning
  – Machine learning
  – Computer vision
  – Robotics

Thinking humanly: cognitive modeling
• 1960s "cognitive revolution": information-processing psychology
• Requires scientific theories of the internal activities of the brain
• How to validate? Requires either
  – Predicting and testing the behavior of human subjects (top-down), or
  – Direct identification from neurological data (bottom-up)
• Both approaches (roughly, Cognitive Science and Cognitive Neuroscience) are now distinct from AI

Thinking rationally: "laws of thought"
• Aristotle: what are correct arguments/thought processes?
  – Syllogisms: patterns for argument structures, e.g., "Socrates is a man; all men are mortal; therefore, Socrates is mortal."
  – Logicians in the 19th century developed a precise notation for statements about all kinds of objects in the world
• Problems:
  – It's not easy to take informal knowledge and state it in the formal terms required by logical notation
  – There is a big difference between solving a problem "in principle" and solving it "in practice"
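The syllogism above can be applied mechanically. The following is a minimal forward-chaining sketch that assumes a toy representation chosen for this example. A fact such as `("man", "Socrates")` stands for man(Socrates). A rule such as `("man", "mortal")` stands for "for all x, man(x) implies mortal(x)". The names `forward_chain`, `Fact` and `Rule` are hypothetical. Real logical systems, such as first-order logic with resolution, are far more general.

```python
# Minimal forward chaining over unary facts (toy representation, not full first-order logic).
# A fact ("man", "Socrates") means man(Socrates).
# A rule ("man", "mortal") means: for all x, man(x) implies mortal(x).

Fact = tuple[str, str]
Rule = tuple[str, str]


def forward_chain(facts: set[Fact], rules: list[Rule]) -> set[Fact]:
    """Apply every rule repeatedly until no new fact can be derived (a fixed point)."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, individual in list(derived):
                new_fact = (conclusion, individual)
                if predicate == premise and new_fact not in derived:
                    derived.add(new_fact)
                    changed = True
    return derived


facts = {("man", "Socrates")}   # Socrates is a man
rules = [("man", "mortal")]     # all men are mortal
print(("mortal", "Socrates") in forward_chain(facts, rules))  # True: Socrates is mortal
```

Even this toy version illustrates the "in principle" vs "in practice" problem. The loop rescans every fact for every rule until nothing changes, which becomes expensive as the knowledge base grows.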

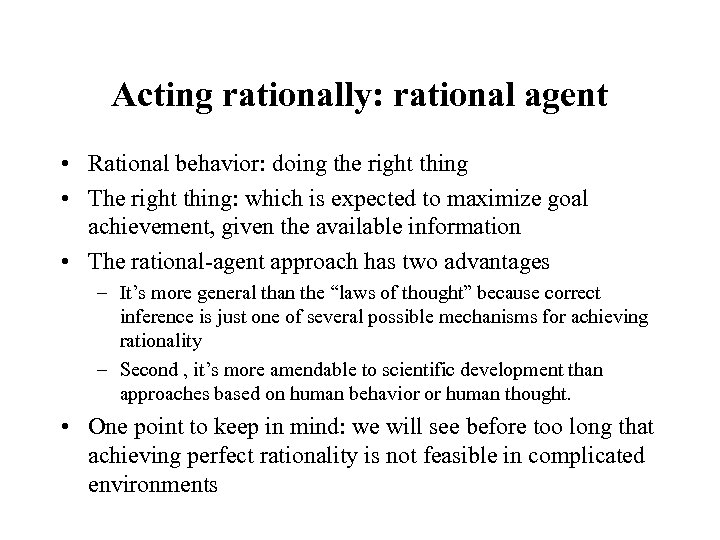

Acting rationally: rational agent
• Rational behavior: doing the right thing
• The right thing: the action that is expected to maximize goal achievement, given the available information
• The rational-agent approach has two advantages:
  – First, it is more general than the "laws of thought" approach, because correct inference is just one of several possible mechanisms for achieving rationality
  – Second, it is more amenable to scientific development than approaches based on human behavior or human thought
• One point to keep in mind: we will see before long that achieving perfect rationality is not feasible in complicated environments

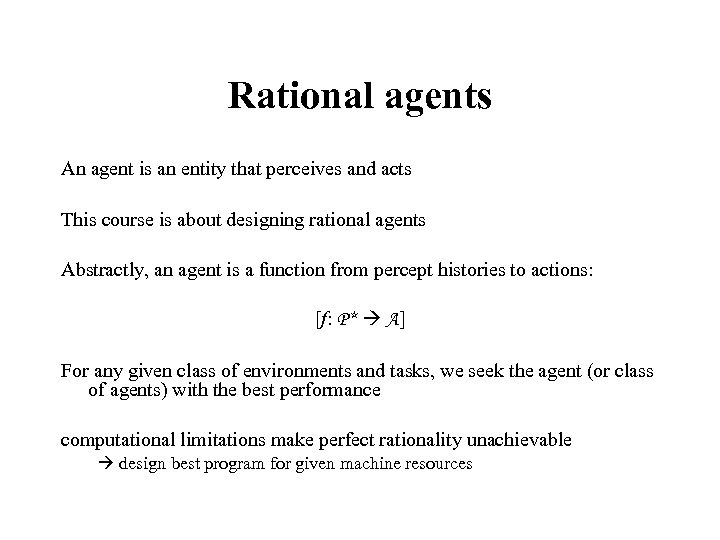

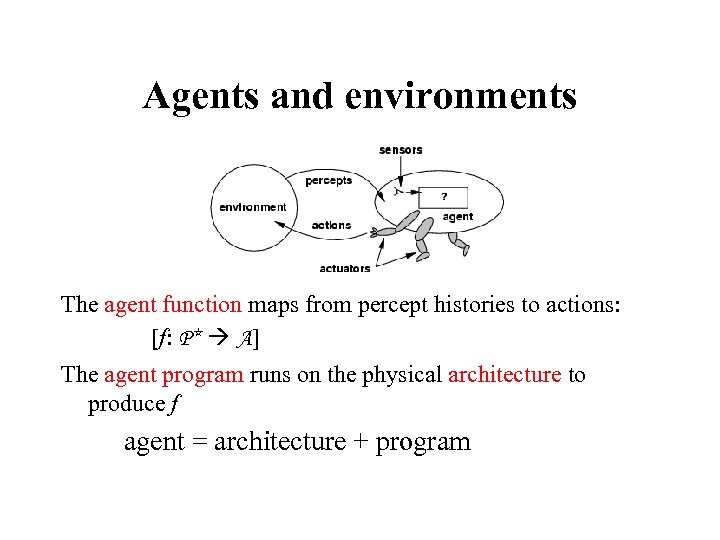

Rational agents
• An agent is an entity that perceives and acts
• This course is about designing rational agents
• Abstractly, an agent is a function from percept histories to actions: f: P* → A
• For any given class of environments and tasks, we seek the agent (or class of agents) with the best performance
• Caveat: computational limitations make perfect rationality unachievable, so the goal is to design the best program for the given machine resources

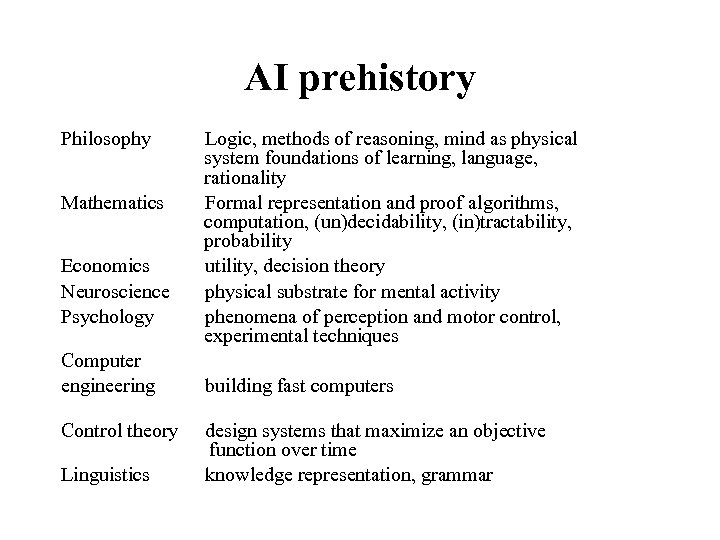

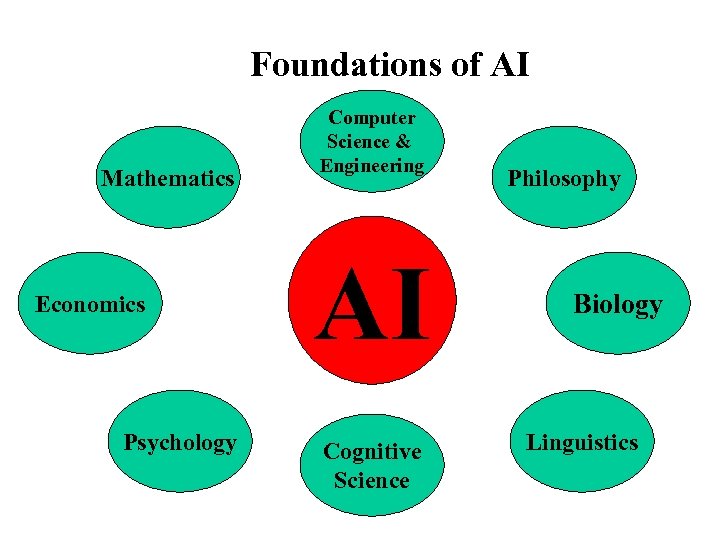

AI prehistory
• Philosophy: logic, methods of reasoning, mind as physical system, foundations of learning, language, rationality
• Mathematics: formal representation and proof, algorithms, computation, (un)decidability, (in)tractability, probability
• Economics: utility, decision theory
• Neuroscience: physical substrate for mental activity
• Psychology: phenomena of perception and motor control, experimental techniques
• Computer engineering: building fast computers
• Control theory: design systems that maximize an objective function over time
• Linguistics: knowledge representation, grammar

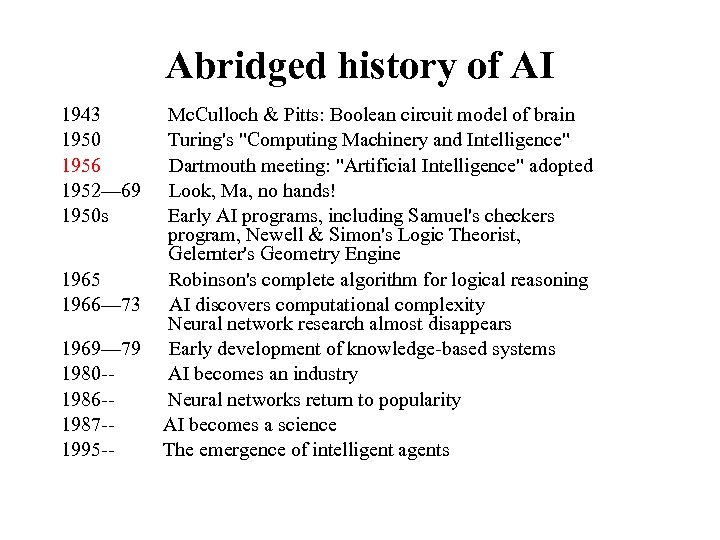

Abridged history of AI
• 1943: McCulloch & Pitts: Boolean circuit model of brain
• 1950: Turing's "Computing Machinery and Intelligence"
• 1956: Dartmouth meeting: "Artificial Intelligence" adopted
• 1952–69: Look, Ma, no hands!
• 1950s: Early AI programs, including Samuel's checkers program, Newell & Simon's Logic Theorist, Gelernter's Geometry Engine
• 1965: Robinson's complete algorithm for logical reasoning
• 1966–73: AI discovers computational complexity; neural network research almost disappears
• 1969–79: Early development of knowledge-based systems
• 1980–: AI becomes an industry
• 1986–: Neural networks return to popularity
• 1987–: AI becomes a science
• 1995–: The emergence of intelligent agents

State of the art
• Deep Blue defeated the reigning world chess champion Garry Kasparov in 1997
• Proved a mathematical conjecture (the Robbins conjecture) that had been unsolved for decades
• No Hands Across America: driving autonomously 98% of the time from Pittsburgh to San Diego
• During the 1991 Gulf War, US forces deployed an AI logistics planning and scheduling program that involved up to 50,000 vehicles, cargo, and people
• NASA's on-board autonomous planning program controlled the scheduling of operations for a spacecraft
• Proverb solves crossword puzzles better than most humans
• 1998: Founding of Google
• 2000: Interactive robot pets
• 2004: Commercial recommender systems (TiVo, amazon.com)
• 2011: Apple's Siri released

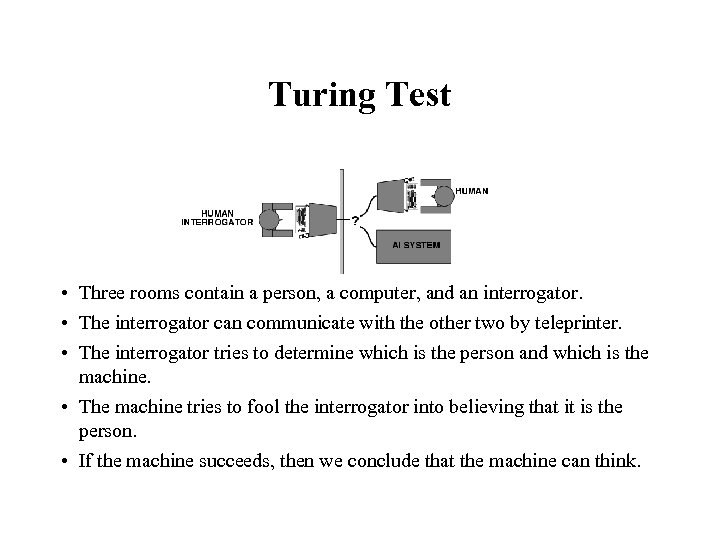

Turing Test
• Three rooms contain a person, a computer, and an interrogator.
• The interrogator can communicate with the other two by teleprinter.
• The interrogator tries to determine which is the person and which is the machine.
• The machine tries to fool the interrogator into believing that it is the person.
• If the machine succeeds, then we conclude that the machine can think.

The Loebner Contest
• A modern version of the Turing Test, held annually
• Hugh Loebner was once director of UMBC's Academic Computing Services (née UCS)
• http://www.loebner.net/Prizef/loebner-prize.html
• Scoring
  – Each year an Annual Prize and Bronze Medal are awarded to the most human-like computer. For the 2013 Loebner Prize, the Annual First Prize is US$4,000 + Annual Bronze Medal; Second Prize: US$1,000; Third Prize: US$750; Fourth Prize: US$250
  – The Silver Medal Prize of US$25,000 + Silver Medal will be awarded if any program fools two or more judges when compared to two or more humans
  – During the Multimodal stage, if any entry fools half the judges compared to half of the humans, the program's creator(s) will receive the Grand Prize of US$100,000 + an 18-karat Gold Medal

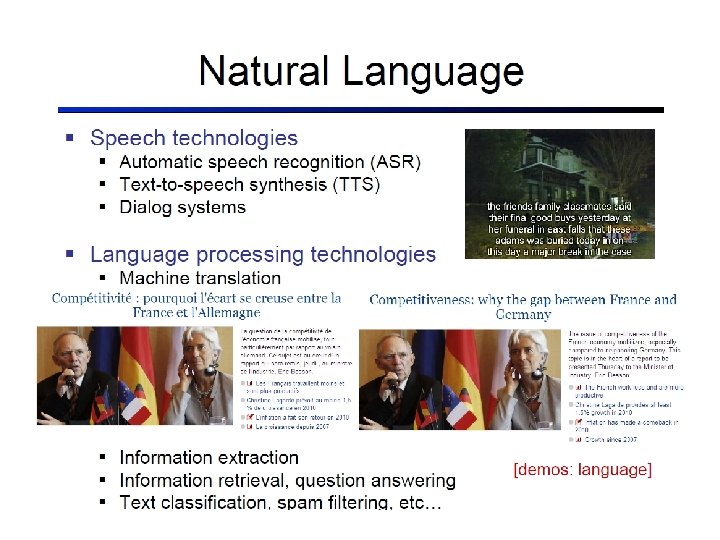

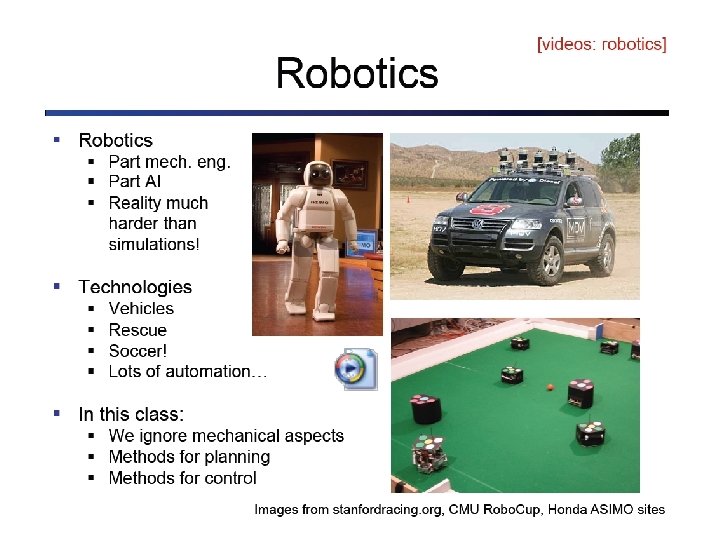

What Can AI Systems Do?
Here are some example applications:
• Computer vision: face recognition from a large set
• Robotics: autonomous (mostly) automobile
• Natural language processing: simple machine translation
• Expert systems: medical diagnosis in a narrow domain
• Spoken language systems: ~1000-word continuous speech
• Planning and scheduling: Hubble Telescope experiments
• Learning: text categorization into ~1000 topics
• User modeling: Bayesian reasoning in Windows help (the infamous paper clip…)
• Games: Grand Master level in chess (world champion), perfect play in checkers, professional-level Go players

Foundations of AI
AI draws on: Mathematics, Economics, Psychology, Computer Science & Engineering, Cognitive Science, Philosophy, Biology, Linguistics

What Do AI People Do?
• Represent knowledge
• Reason about knowledge
• Behave intelligently in complex environments
• Develop interesting and useful applications
• Interact with people, agents, and the environment

Intelligent Agents

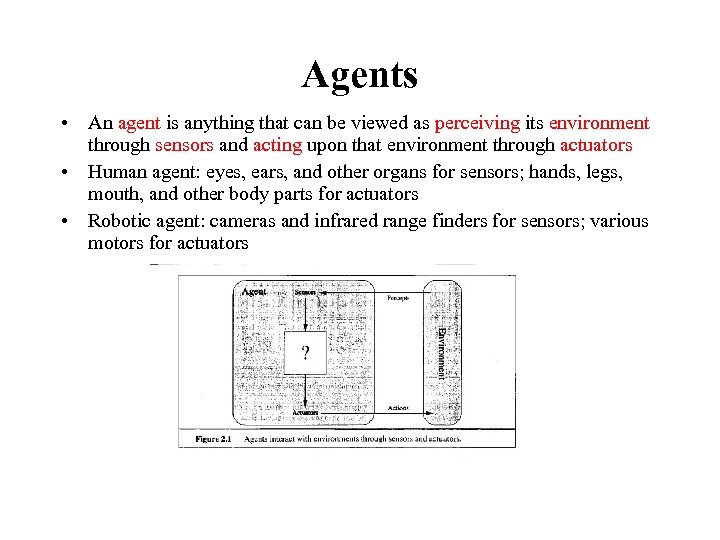

Agents
• An agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators
• Human agent: eyes, ears, and other organs for sensors; hands, legs, mouth, and other body parts for actuators
• Robotic agent: cameras and infrared range finders for sensors; various motors for actuators

Rational agents
• An agent should strive to "do the right thing", based on what it can perceive and the actions it can perform. The right action is the one that will cause the agent to be most successful
• Performance measure: an objective criterion for the success of an agent's behavior
• E.g., the performance measure of a vacuum-cleaner agent could be the amount of dirt cleaned up, the time taken, the electricity consumed, the noise generated, etc.
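The vacuum-cleaner criteria listed above must be combined into a single score before one agent's behavior can be ranked against another's. The sketch below shows one way to do that. The weighted sum and every weight in it are hypothetical choices, not a standard measure. The names `EpisodeStats` and `performance` are likewise illustrative.

```python
from dataclasses import dataclass


@dataclass
class EpisodeStats:
    """What happened during one run of a vacuum-cleaner agent (units are arbitrary)."""
    dirt_cleaned: int    # number of dirty squares cleaned
    time_steps: int      # time taken
    electricity: float   # electricity consumed
    noise: float         # noise generated


def performance(stats: EpisodeStats,
                w_dirt: float = 10.0, w_time: float = 1.0,
                w_electricity: float = 0.5, w_noise: float = 0.1) -> float:
    """Hypothetical weighted score: reward dirt cleaned, penalize time, electricity, and noise."""
    return (w_dirt * stats.dirt_cleaned
            - w_time * stats.time_steps
            - w_electricity * stats.electricity
            - w_noise * stats.noise)


print(performance(EpisodeStats(dirt_cleaned=2, time_steps=4, electricity=3.0, noise=5.0)))  # 14.0
```

Changing the weights changes which behavior counts as "most successful". This is why the performance measure has to be fixed by the designer before rationality can be judged.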

Rational agents
• Rational agent: for each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has.

Rational agents
• Rationality is distinct from omniscience (all-knowing with infinite knowledge)
• Agents can perform actions in order to modify future percepts so as to obtain useful information (information gathering, exploration)
• An agent is autonomous if its behavior is determined by its own experience (with the ability to learn and adapt)

Specifying the environment
• PEAS: Performance measure, Environment, Actuators, Sensors
• Consider, e.g., the task of designing an automated taxi driver:
  – Performance measure: Safe, fast, legal, comfortable trip, maximize profits
  – Environment: Roads, other traffic, pedestrians, customers
  – Actuators: Steering wheel, accelerator, brake, signal, horn
  – Sensors: Cameras, sonar, speedometer, GPS, odometer, engine sensors, keyboard
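A PEAS description is essentially a record with four fields. The sketch below encodes the taxi example as one such record. The `PEAS` class and its `describe` method are hypothetical names for illustration. The same structure could hold the medical-diagnosis, part-picking-robot and English-tutor descriptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PEAS:
    """A task-environment description: Performance measure, Environment, Actuators, Sensors."""
    agent: str
    performance: tuple[str, ...]
    environment: tuple[str, ...]
    actuators: tuple[str, ...]
    sensors: tuple[str, ...]

    def describe(self) -> str:
        """Render the description one PEAS component per line."""
        return "\n".join([
            f"Agent: {self.agent}",
            f"  Performance measure: {', '.join(self.performance)}",
            f"  Environment: {', '.join(self.environment)}",
            f"  Actuators: {', '.join(self.actuators)}",
            f"  Sensors: {', '.join(self.sensors)}",
        ])


taxi = PEAS(
    agent="Automated taxi driver",
    performance=("safe", "fast", "legal", "comfortable trip", "maximize profits"),
    environment=("roads", "other traffic", "pedestrians", "customers"),
    actuators=("steering wheel", "accelerator", "brake", "signal", "horn"),
    sensors=("cameras", "sonar", "speedometer", "GPS", "odometer",
             "engine sensors", "keyboard"),
)
print(taxi.describe())
```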

PEAS
• Agent: Medical diagnosis system
  – Performance measure: Healthy patient, minimize costs and lawsuits
  – Environment: Patient, hospital, staff
  – Actuators: Screen display (questions, tests, diagnoses, treatments, referrals)
  – Sensors: Keyboard (entry of symptoms, findings, patient's answers)

PEAS
• Agent: Part-picking robot
  – Performance measure: Percentage of parts in correct bins
  – Environment: Conveyor belt with parts, bins
  – Actuators: Jointed arm and hand
  – Sensors: Camera, joint angle sensors

PEAS
• Agent: Interactive English tutor
  – Performance measure: Maximize student's score on test
  – Environment: Set of students
  – Actuators: Screen display (exercises, suggestions, corrections)
  – Sensors: Keyboard

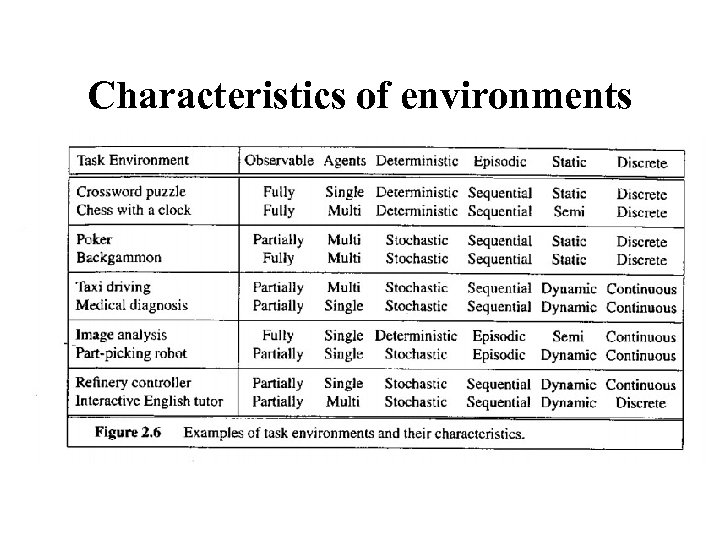

Properties of Environments
Fully observable/Partially observable. If an agent's sensors give it access to the complete state of the environment needed to choose an action, the environment is fully observable. Such environments are convenient, since the agent is freed from the task of keeping track of changes in the environment.
Deterministic/Stochastic. An environment is deterministic if its next state is completely determined by its current state and the action of the agent; in a stochastic environment, an action can have multiple, unpredictable outcomes.
In a fully observable, deterministic environment, the agent need not deal with uncertainty.

Properties of Environments II
Episodic/Sequential. In an episodic environment, subsequent episodes do not depend on what actions occurred in previous episodes, so such environments do not require the agent to plan ahead. In a sequential environment, the agent engages in a series of connected episodes, so current decisions can affect later ones.
Static/Dynamic. A static environment does not change while the agent is thinking: the passage of time as the agent deliberates is irrelevant, and the agent doesn't need to observe the world during deliberation.

Properties of Environments III
Discrete/Continuous. If the number of distinct percepts and actions is limited, the environment is discrete; otherwise it is continuous.
Single agent/Multi-agent. If the environment contains other intelligent agents, the agent needs to be concerned about strategic, game-theoretic aspects of the environment (for either cooperative or competitive agents).
Most engineering environments don't have multi-agent properties, whereas most social and economic systems get their complexity from the interactions of (more or less) rational agents.
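Each of the six dichotomies above can be recorded as a boolean flag. The crossword-puzzle and taxi-driving classifications below follow the textbook's standard examples. The `hard_dimensions` count is a hypothetical convenience, not a standard metric. It simply counts how many dimensions fall on the harder side.

```python
from dataclasses import astuple, dataclass


@dataclass(frozen=True)
class TaskEnvironment:
    """One flag per dimension; True is the 'easier' side of each dichotomy."""
    fully_observable: bool
    deterministic: bool
    episodic: bool
    static: bool
    discrete: bool
    single_agent: bool

    def hard_dimensions(self) -> int:
        """Hypothetical difficulty score: how many dimensions fall on the harder side."""
        return sum(not flag for flag in astuple(self))


crossword = TaskEnvironment(fully_observable=True, deterministic=True, episodic=False,
                            static=True, discrete=True, single_agent=True)
taxi_driving = TaskEnvironment(fully_observable=False, deterministic=False, episodic=False,
                               static=False, discrete=False, single_agent=False)
print(crossword.hard_dimensions(), taxi_driving.hard_dimensions())  # 1 6
```

Taxi driving lands on the hard side of every dimension. That matches the observation that the most challenging environments are partially observable, stochastic, sequential, dynamic, continuous and multi-agent.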

Characteristics of environments

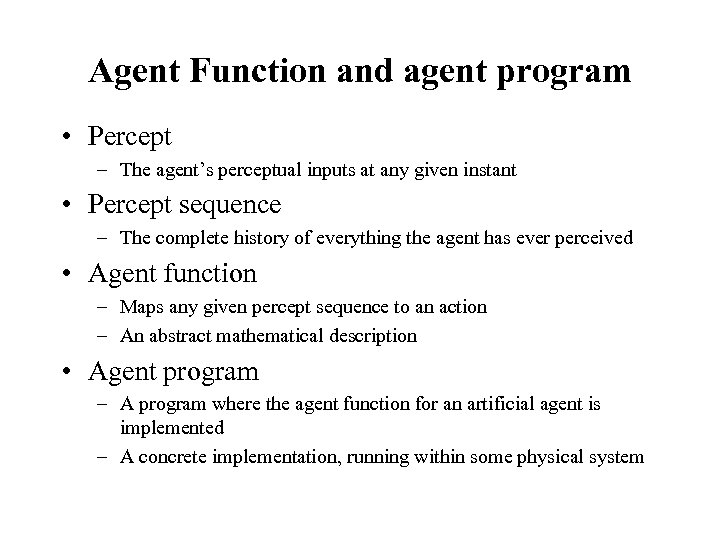

Agent function and agent program
• Percept
  – The agent's perceptual inputs at any given instant
• Percept sequence
  – The complete history of everything the agent has ever perceived
• Agent function
  – Maps any given percept sequence to an action
  – An abstract mathematical description
• Agent program
  – The program that implements the agent function for an artificial agent
  – A concrete implementation, running within some physical system

Agents and environments
• The agent function maps from percept histories to actions: f: P* → A
• The agent program runs on the physical architecture to produce f
• agent = architecture + program
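The most literal agent program for f: P* → A stores the whole percept sequence and looks the action up in a table. In the sketch below, the Python interpreter plays the role of the architecture. The class name `TableDrivenAgent`, the partial `vacuum_table` and the "NoOp" default for missing entries are assumptions for illustration. The table uses the two-square vacuum world, with locations A and B.

```python
Percept = tuple[str, str]  # (location, status), e.g. ("A", "Dirty")


class TableDrivenAgent:
    """Agent program that realizes f: P* -> A by looking up the entire percept sequence."""

    def __init__(self, table: dict[tuple[Percept, ...], str]) -> None:
        self.table = table
        self.percepts: list[Percept] = []   # the percept sequence seen so far

    def __call__(self, percept: Percept) -> str:
        self.percepts.append(percept)
        # Missing entries default to "NoOp" (an assumption of this sketch).
        return self.table.get(tuple(self.percepts), "NoOp")


# A tiny, partial table for the two-square vacuum world (hypothetical entries).
vacuum_table = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("B", "Clean"),): "Left",
    (("B", "Dirty"),): "Suck",
    (("A", "Clean"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("A", "Dirty")): "Suck",
}

agent = TableDrivenAgent(vacuum_table)
print(agent(("A", "Clean")), agent(("A", "Dirty")))  # Right Suck
```

The table needs one entry for every possible percept sequence. With |P| possible percepts and a lifetime of T steps, that is |P| + |P|² + … + |P|^T entries. This is why practical agent programs compute f rather than tabulate it.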

![Vacuum-cleaner world](https://present5.com/presentation/d8f0feebdfce91f4b3c852537fbe7544/image-36.jpg)

Vacuum-cleaner world
• Percepts: location and contents, e.g., [A, Dirty]
• Actions: Left, Right, Suck, NoOp
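A minimal reflex agent for this two-square world needs only the current percept [location, status]. The sketch below follows the usual textbook formulation: suck if the square is dirty, otherwise move to the other square. The `run` helper is a hypothetical simulator added so that the agent can be exercised. It assumes squares named "A" and "B" and that dirt never reappears.

```python
def reflex_vacuum_agent(percept: tuple[str, str]) -> str:
    """Choose an action from the current percept [location, status] only."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    if location == "A":
        return "Right"
    if location == "B":
        return "Left"
    return "NoOp"


def run(world: dict[str, str], location: str, steps: int) -> list[str]:
    """Hypothetical simulator for the two-square world; mutates `world` as dirt is sucked up."""
    actions = []
    for _ in range(steps):
        action = reflex_vacuum_agent((location, world[location]))
        actions.append(action)
        if action == "Suck":
            world[location] = "Clean"
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
    return actions


print(run({"A": "Dirty", "B": "Dirty"}, "A", 5))  # ['Suck', 'Right', 'Suck', 'Left', 'Right']
```

Once both squares are clean, this agent keeps moving back and forth forever. It has no memory, so it cannot tell that there is nothing left to do.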

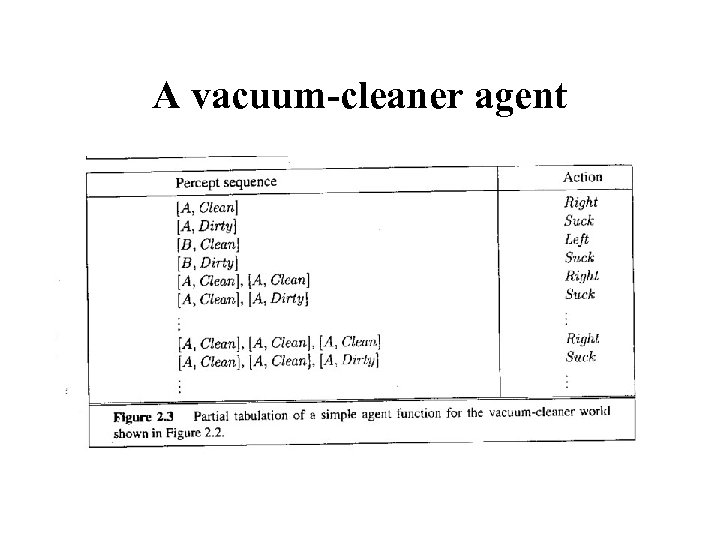

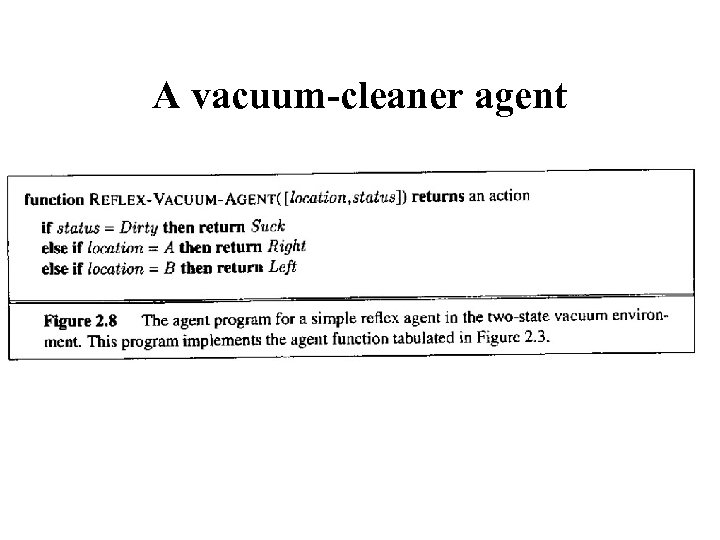

A vacuum-cleaner agent

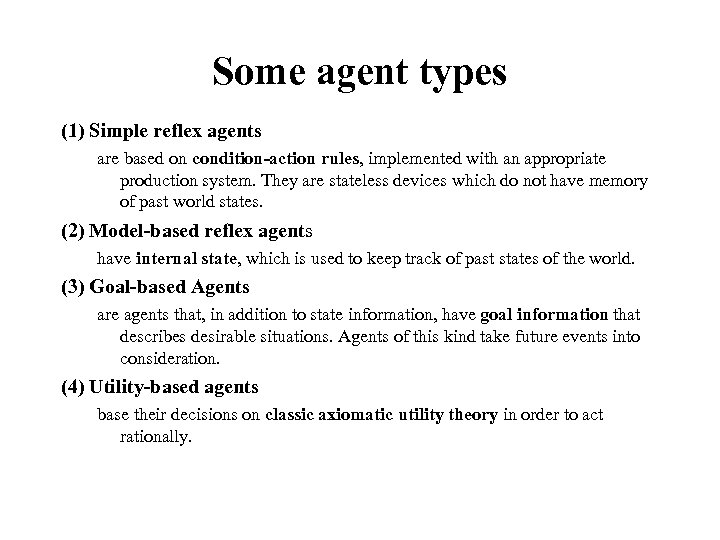

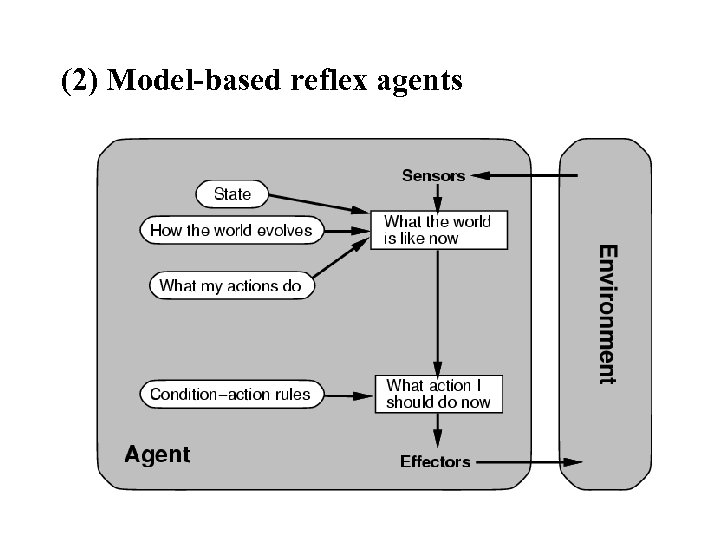

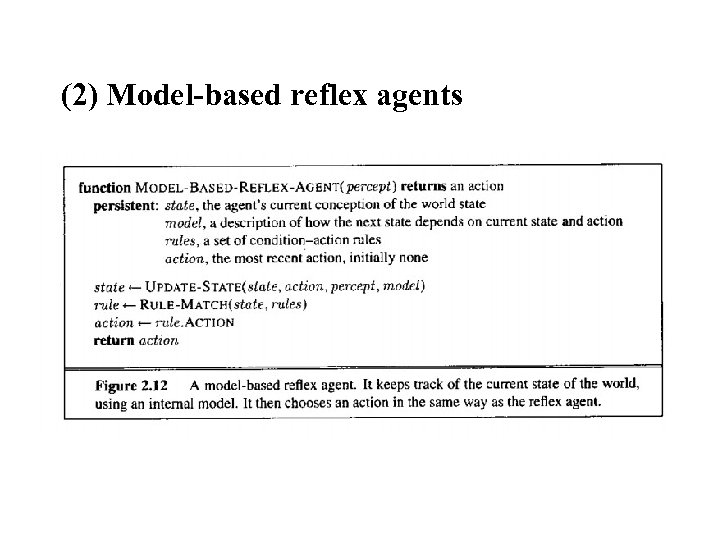

Some agent types
(1) Simple reflex agents are based on condition-action rules, implemented with an appropriate production system. They are stateless devices that have no memory of past world states.
(2) Model-based reflex agents have internal state, which is used to keep track of past states of the world.
(3) Goal-based agents are agents that, in addition to state information, have goal information that describes desirable situations. Agents of this kind take future events into consideration.
(4) Utility-based agents base their decisions on classic axiomatic utility theory in order to act rationally.

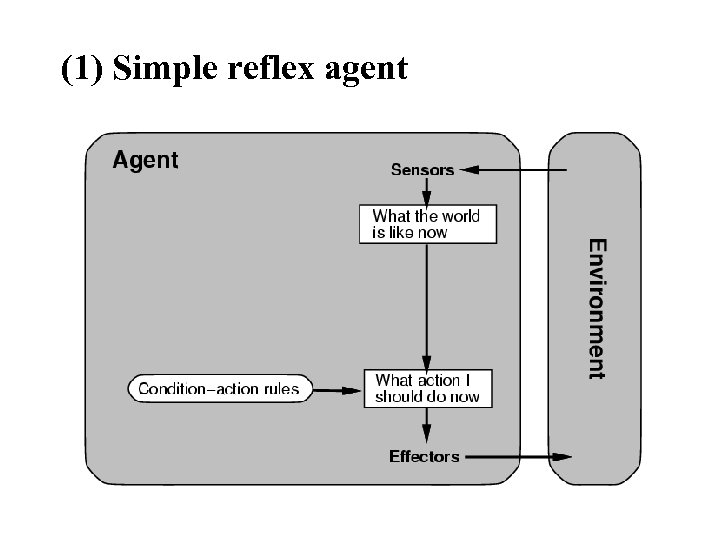

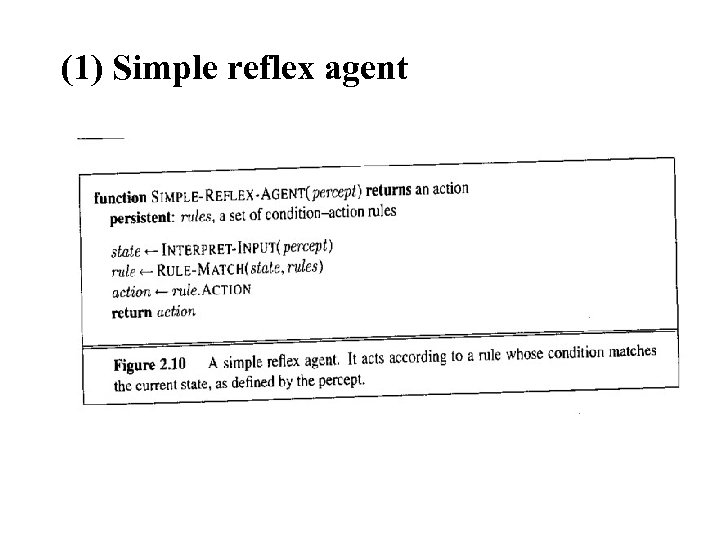

(1) Simple reflex agent

(1) Simple reflex agents
• Use rule-based reasoning to map from percepts to an action; each rule handles a collection of perceived states
• Problems:
  – The rule set is usually still too big to generate and to store
  – No knowledge of non-perceptual parts of the state
  – Not adaptive to changes in the environment; the collection of rules must be updated if changes occur
  – Cannot make actions conditional on previous states
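The condition-action idea can be written as a tiny production system. First, the percept is turned into an abstract state description. Next, the first rule whose condition matches is found. Finally, that rule's action is returned. The structure loosely follows the textbook's simple-reflex-agent pseudocode, but the names `Rule`, `interpret_input`, `rule_match` and `VACUUM_RULES` are hypothetical. The choice of first-match-wins as the conflict-resolution strategy is also an assumption of this sketch.

```python
from typing import Callable, NamedTuple, Optional

State = dict[str, object]


class Rule(NamedTuple):
    """A condition-action rule: if condition(state) holds, do action."""
    condition: Callable[[State], bool]
    action: str


def interpret_input(percept: tuple[str, str]) -> State:
    """Turn a raw percept into an abstract description of the current state."""
    location, status = percept
    return {"location": location, "dirty": status == "Dirty"}


def rule_match(state: State, rules: list[Rule]) -> Optional[Rule]:
    """Return the first rule whose condition matches (rule order acts as priority)."""
    for rule in rules:
        if rule.condition(state):
            return rule
    return None


def simple_reflex_agent(percept: tuple[str, str], rules: list[Rule]) -> str:
    state = interpret_input(percept)   # uses the current percept only: no memory
    rule = rule_match(state, rules)
    return rule.action if rule is not None else "NoOp"


VACUUM_RULES = [
    Rule(lambda s: s["dirty"], "Suck"),
    Rule(lambda s: s["location"] == "A", "Right"),
    Rule(lambda s: s["location"] == "B", "Left"),
]
print(simple_reflex_agent(("B", "Dirty"), VACUUM_RULES))  # Suck
```

Each rule covers a whole collection of perceived states. For example, the first rule fires in every dirty square. The agent never consults anything except the current percept, which is exactly the last problem listed above.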

(2) Model-based reflex agents

(2) Model-based reflex agents
• Encode "internal state" of the world to remember the past as contained in earlier percepts.
• Needed because sensors do not usually give the entire state of the world at each input, so perception of the environment is captured over time. "State" is used to encode different "world states" that generate the same immediate percept.
• Requires the ability to represent change in the world; one possibility is to represent just the latest state.
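In the two-square vacuum world, internal state can be as simple as the latest known status of each square, which is the "represent just the latest state" option above. The sketch below assumes a static world in which dirt never reappears. It also assumes that Suck always cleans the current square. With that state, the agent can stop with NoOp once it believes both squares are clean. The class `ModelBasedVacuumAgent` and the `run` simulator are hypothetical.

```python
from typing import Optional


class ModelBasedVacuumAgent:
    """Reflex agent with internal state: remembers the last known status of each square."""

    def __init__(self) -> None:
        self.model: dict[str, Optional[str]] = {"A": None, "B": None}  # None = never seen

    def __call__(self, percept: tuple[str, str]) -> str:
        location, status = percept
        self.model[location] = status            # update internal state from the percept
        if status == "Dirty":
            self.model[location] = "Clean"       # model of the action: Suck cleans the square
            return "Suck"
        if all(s == "Clean" for s in self.model.values()):
            return "NoOp"                         # the state says there is nothing left to do
        return "Right" if location == "A" else "Left"


def run(agent, world: dict[str, str], location: str, steps: int) -> list[str]:
    """Hypothetical two-square simulator; mutates `world` as dirt is sucked up."""
    actions = []
    for _ in range(steps):
        action = agent((location, world[location]))
        actions.append(action)
        if action == "Suck":
            world[location] = "Clean"
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
    return actions


print(run(ModelBasedVacuumAgent(), {"A": "Dirty", "B": "Dirty"}, "A", 5))
# ['Suck', 'Right', 'Suck', 'NoOp', 'NoOp']
```

A clean square B produces the same immediate percept whether or not A has already been cleaned. Only the remembered state lets the agent tell those two world states apart.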

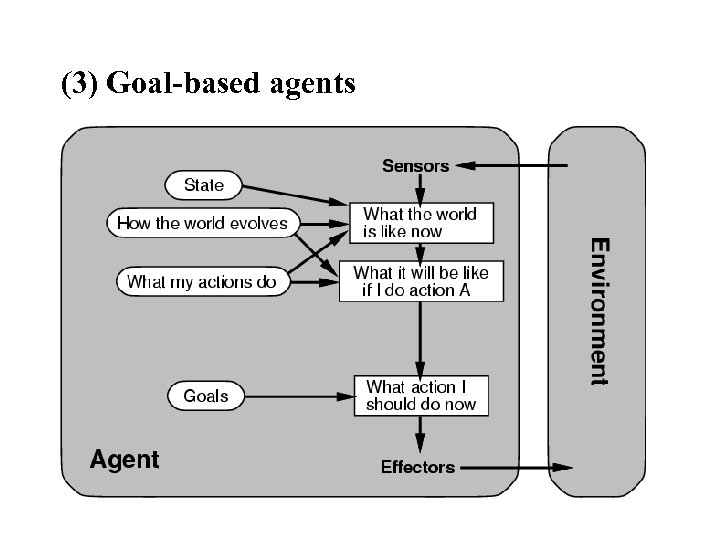

(3) Goal-based agents

(3) Goal-based agents
• Choose actions so as to achieve a (given or computed) goal
• A goal is a description of a desirable situation
• Keeping track of the current state is often not enough; goals are needed to decide which situations are good
• May have to consider long sequences of possible actions before deciding whether the goal is achieved; this involves consideration of the future: "What will happen if I do...?"
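"What will happen if I do...?" becomes concrete once the agent has a transition model it can simulate. The sketch below searches over action sequences in the two-square vacuum world until it reaches the goal of no dirty squares. It uses breadth-first search, which is just one possible search strategy. The state encoding and the names `result`, `goal_test` and `plan` are assumptions for this example.

```python
from collections import deque
from typing import Optional

# Hypothetical state for the two-square world: (agent location, frozenset of dirty squares).
State = tuple[str, frozenset]
ACTIONS = ("Suck", "Right", "Left")


def result(state: State, action: str) -> State:
    """Deterministic transition model: what will happen if I do `action`?"""
    location, dirty = state
    if action == "Suck":
        return (location, dirty - {location})
    if action == "Right":
        return ("B", dirty)
    if action == "Left":
        return ("A", dirty)
    return state


def goal_test(state: State) -> bool:
    """Goal: no dirty squares remain."""
    return not state[1]


def plan(start: State) -> Optional[list[str]]:
    """Breadth-first search for a shortest action sequence that reaches the goal."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if goal_test(state):
            return actions
        for action in ACTIONS:
            successor = result(state, action)
            if successor not in visited:
                visited.add(successor)
                frontier.append((successor, actions + [action]))
    return None


print(plan(("A", frozenset({"A", "B"}))))  # ['Suck', 'Right', 'Suck']
```

Unlike a reflex agent, this agent commits to a whole sequence only after it has simulated that the sequence ends in a goal state.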

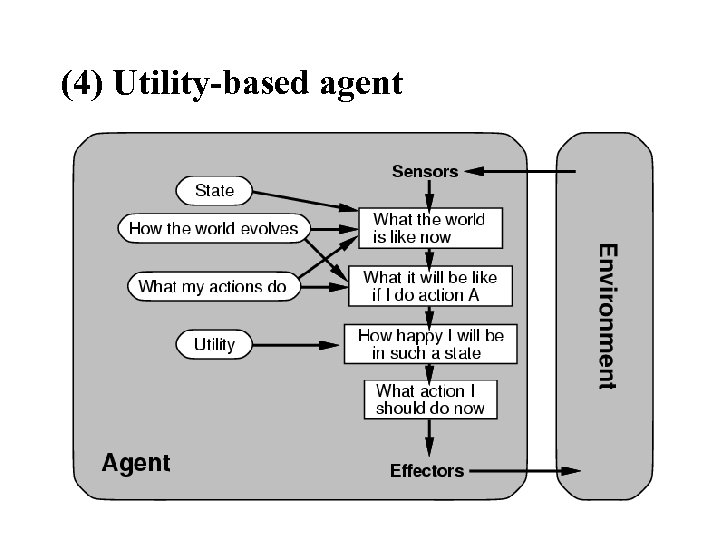

(4) Utility-based agent

(4) Utility-based agents
• When there are multiple possible alternatives, how do we decide which one is best?
• A goal specifies only a crude distinction between a happy and an unhappy state, but we often need a more general performance measure that describes "degree of happiness."
• Utility function U: State → ℝ indicates a measure of success or happiness at a given state.
• Allows decisions that trade off conflicting goals, and that weigh the likelihood of success against the importance of a goal (if achievement is uncertain).
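When outcomes are uncertain, a utility-based agent can rank each action by its expected utility. This is the sum, over possible results, of P(result) × U(result). In the sketch below, the route-choice scenario, the probabilities and the utility values are all hypothetical. So are the names `expected_utility` and `choose_action`.

```python
from typing import Callable

# Hypothetical outcome model: action -> list of (probability, resulting state).
Outcomes = dict[str, list[tuple[float, str]]]


def expected_utility(action: str, model: Outcomes, utility: Callable[[str], float]) -> float:
    """Probability-weighted average of U over the possible results of `action`."""
    return sum(p * utility(state) for p, state in model[action])


def choose_action(model: Outcomes, utility: Callable[[str], float]) -> str:
    """Pick the action with the highest expected utility."""
    return max(model, key=lambda action: expected_utility(action, model, utility))


route_model: Outcomes = {
    "highway":   [(0.8, "arrive_early"), (0.2, "stuck_in_jam")],
    "side_road": [(1.0, "arrive_on_time")],
}
U = {"arrive_early": 10.0, "arrive_on_time": 7.0, "stuck_in_jam": 0.0}

print(choose_action(route_model, lambda s: U[s]))  # highway (EU 8.0 vs 7.0)
```

A binary goal such as "reach the destination" cannot separate these two routes, but U can. If being stuck is made costly, for example U("stuck_in_jam") = -10, the highway's expected utility drops to about 6 and the safer side road wins. This is the likelihood-versus-importance trade-off described above.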

Summary of agents
• An agent perceives and acts in an environment, has an architecture, and is implemented by an agent program.
• An ideal agent always chooses the action that maximizes its expected performance, given its percept sequence so far.
• An autonomous agent uses its own experience rather than built-in knowledge of the environment provided by the designer.
• An agent program maps from percept to action and updates its internal state.
  – Reflex agents respond immediately to percepts.
  – Goal-based agents act in order to achieve their goal(s).
  – Utility-based agents maximize their own utility function.
• Representing knowledge is important for successful agent design.
• The most challenging environments are partially observable, stochastic, sequential, dynamic, and continuous, and contain multiple intelligent agents.

Summary of agent • An agent perceives and acts in an environment, has an architecture, and is implemented by an agent program. • An ideal agent always chooses the action which maximizes its expected performance, given its percept sequence so far. • An autonomous agent uses its own experience rather than builtin knowledge of the environment by the designer. • An agent program maps from percept to action and updates its internal state. Reflex agents respond immediately to percepts. Goal-based agents act in order to achieve their goal(s). Utility-based agents maximize their own utility function. • Representing knowledge is important for successful agent design. • The most challenging environments are partially observable, stochastic, sequential, dynamic, and continuous, and contain multiple intelligent agents.

Two great persons in AI - 1 • Alan Mathison Turing (23 June 1912 – 7 June 1954) • English mathematician, logician, cryptanalyst, and computer scientist. • Provided a formalization of the concepts of "algorithm" and "computation" with the Turing machine, which played a significant role in the creation of the modern computer. • Turing is widely considered to be the father of computer science and artificial intelligence. • During the Second World War, Turing worked for the Government Code and Cypher School (GC&CS) at Bletchley Park, Britain's codebreaking centre. For a time he was head of Hut 8, the section responsible for German naval cryptanalysis, and he devised a number of techniques for breaking German ciphers. • After the war he worked at the National Physical Laboratory, where he created one of the first designs for a stored-program computer, the ACE (Automatic Computing Engine). In 1948 Turing joined Max Newman's Computing Machine Laboratory at the University of Manchester. • In 1950, his paper "Computing Machinery and Intelligence" introduced the Turing test and anticipated ideas such as machine learning. • He died in 1954, just over two weeks before his 42nd birthday, from cyanide poisoning; an inquest ruled his death a suicide. • The A. M. Turing Award, named after him and established by the ACM in 1966, is often regarded as the Nobel Prize of computing.

Two great persons in AI - 1 • Alan Mathison Turing (23 June, 1912 – 7 June, 1954) • English mathematician, logician, cryptanalyst, and computer scientist. • Providing a formalization of the concepts of "algorithm" and "computation" with the Turing machine, which played a significant role in the creation of the modern computer • Turing is widely considered to be the father of computer science and artificial intelligence • During the Second World War, Turing worked for the Government Code and Cypher School (GCCS) at Bletchley Park, Britain's codebreaking centre. For a time he was head of Hut 8, the section responsible for German naval cryptanalysis. He devised a number of techniques for breaking German ciphers • After the war he worked at the National Physical Laboratory, where he created one of the first designs for a stored-program computer, the ACE. In 1948 Turing joined Max Newman's Computing Laboratory at Manchester University • In 1950, his paper Computing Machinery and Intelligence introduced Turing test, machine learning, etc. • He died in 1954, just over two weeks before his 42 nd birthday, from cyanide poisoning, and an inquest determined he was suicide • A. M. Turing Award is name after him, which was established by ACM in 1966, and considered as the Nobel Prize of Computing

Two great persons in AI - 2 • John McCarthy (September 4, 1927 – October 24, 2011) • American computer scientist and cognitive scientist. • In 1956, he organized the first conference on AI, the Dartmouth workshop at Dartmouth College. Its ten attendees included Marvin Minsky, Claude Shannon, Nathaniel Rochester, and others. The term "artificial intelligence" (AI), coined by McCarthy, was adopted as the name of this new research area. • He developed the Lisp programming language family. Originally specified in 1958, Lisp is the second-oldest high-level programming language in widespread use today; only Fortran is older (by one year). • McCarthy received many honors, including the Turing Award for his contributions to AI, the United States National Medal of Science, and the Kyoto Prize.

Two great persons in AI - 2 • John Mc. Carthy (September 4, 1927 – October 24, 2011) • American computer scientist and cognitive scientist. • During 1956, he organized the first international conference in the Dartmouth University. Ten attendees include Marvin Minsky, Claude Shannon, Nathaniel Rochester, et al. The term "artificial intelligence" (AI) invented by John Mc. Carthy was taken for naming this new research area. • He developed the Lisp programming language family. Originally specified in 1958, Lisp is the second-oldest high-level programming language in widespread use today; only Fortran is older (by one year). • Mc. Carthy received many honors, including the Turing Award for his contributions to the topic of AI, the United States National Medal of Science, and the Kyoto Prize.