4cb7b13d5ea56789036dd1e316f70d63.ppt

- Number of slides: 40

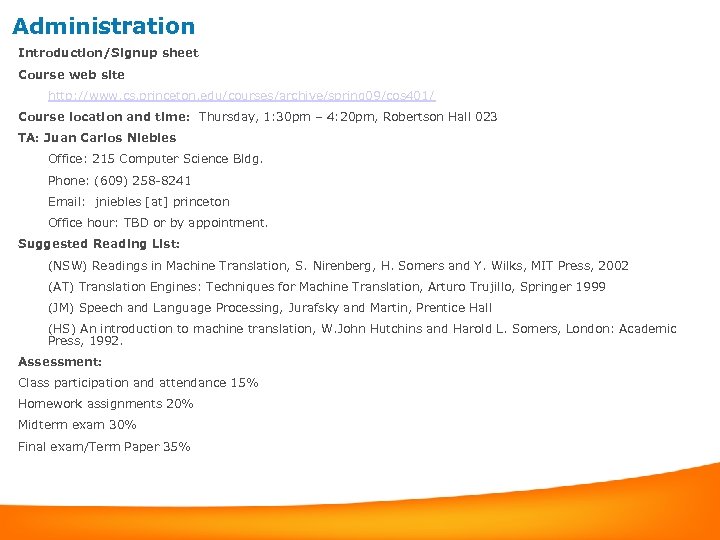

Administration

- Introduction/Signup sheet
- Course web site: http://www.cs.princeton.edu/courses/archive/spring09/cos401/
- Course location and time: Thursday, 1:30 pm to 4:20 pm, Robertson Hall 023
- TA: Juan Carlos Niebles
  - Office: 215 Computer Science Bldg.
  - Phone: (609) 258-8241
  - Email: jniebles [at] princeton
  - Office hour: TBD or by appointment
- Suggested Reading List:
  - (NSW) Readings in Machine Translation, S. Nirenberg, H. Somers and Y. Wilks, MIT Press, 2002
  - (AT) Translation Engines: Techniques for Machine Translation, Arturo Trujillo, Springer, 1999
  - (JM) Speech and Language Processing, Jurafsky and Martin, Prentice Hall
  - (HS) An Introduction to Machine Translation, W. John Hutchins and Harold L. Somers, London: Academic Press, 1992
- Assessment:
  - Class participation and attendance: 15%
  - Homework assignments: 20%
  - Midterm exam: 30%
  - Final exam/Term Paper: 35%

Machine Translation

Srinivas Bangalore, AT&T Research, Florham Park, NJ 07932

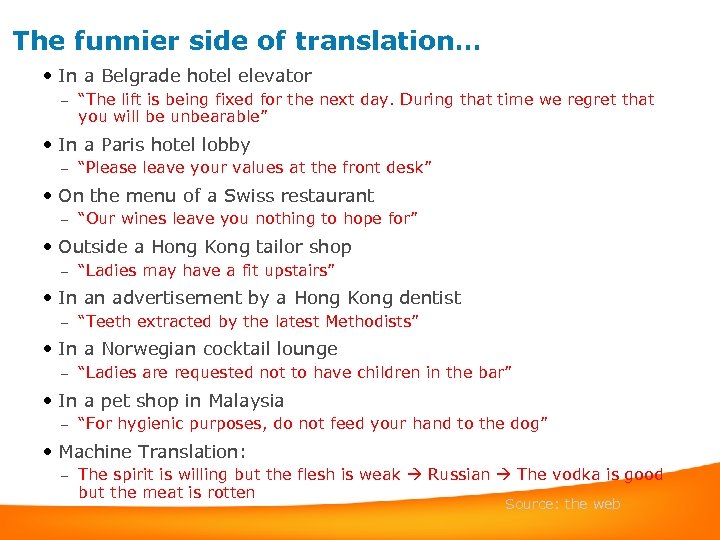

The funnier side of translation…

- In a Belgrade hotel elevator: "The lift is being fixed for the next day. During that time we regret that you will be unbearable"
- In a Paris hotel lobby: "Please leave your values at the front desk"
- On the menu of a Swiss restaurant: "Our wines leave you nothing to hope for"
- Outside a Hong Kong tailor shop: "Ladies may have a fit upstairs"
- In an advertisement by a Hong Kong dentist: "Teeth extracted by the latest Methodists"
- In a Norwegian cocktail lounge: "Ladies are requested not to have children in the bar"
- In a pet shop in Malaysia: "For hygienic purposes, do not feed your hand to the dog"
- Machine Translation: "The spirit is willing but the flesh is weak" → Russian → "The vodka is good but the meat is rotten"

Source: the web

Outline

- History of Machine Translation
- Machine Translation Paradigms
- Machine Translation Evaluation
- Applications of Machine Translation

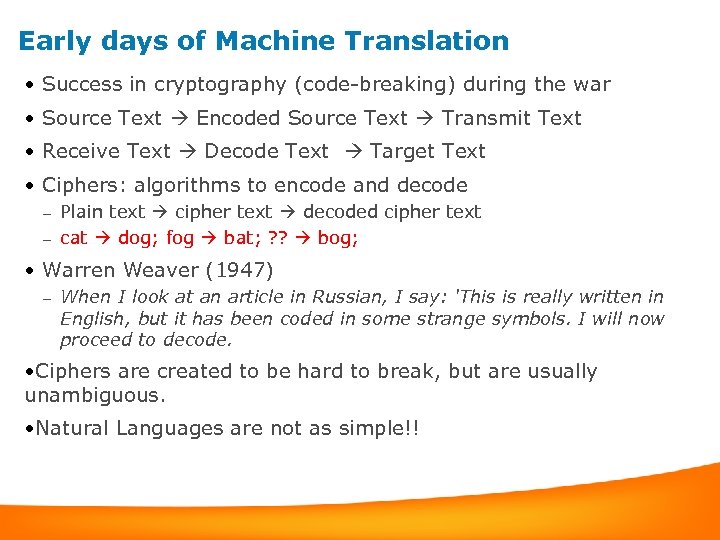

Early days of Machine Translation

- Success in cryptography (code-breaking) during the war
  - Source text → encoded source text → transmit text
  - Receive text → decode text → target text
- Ciphers: algorithms to encode and decode
  - Plain text → cipher text: cat → dog; fog → bat
  - Decoded cipher text: ?? ← bog
- Warren Weaver (1947): "When I look at an article in Russian, I say: 'This is really written in English, but it has been coded in some strange symbols. I will now proceed to decode.'"
- Ciphers are created to be hard to break, but are usually unambiguous.
- Natural languages are not as simple!
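Because a cipher like this is unambiguous, the missing word can be recovered mechanically. The sketch below assumes a simple one-to-one letter-substitution cipher (the slide does not say which cipher is meant): it learns cipher-to-plain letters from the two known pairs and applies them to the unknown word. The function names are illustrative.

```python
def learn_substitution(pairs):
    """Build a cipher-letter -> plain-letter table from (plain, cipher) word pairs."""
    decode = {}
    for plain, cipher in pairs:
        for p, c in zip(plain, cipher):
            # Assumed substitution cipher: each cipher letter maps to exactly one plain letter.
            if decode.setdefault(c, p) != p:
                raise ValueError(f"inconsistent mapping for {c!r}")
    return decode


def decode_word(cipher_word, table):
    """Decode letter by letter; letters never seen in the pairs come out as '?'."""
    return "".join(table.get(c, "?") for c in cipher_word)


table = learn_substitution([("cat", "dog"), ("fog", "bat")])
print(decode_word("bog", table))  # fat
```

Every letter of "bog" is already fixed by the known pairs, so decoding is a table lookup; natural languages offer no such one-to-one table.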

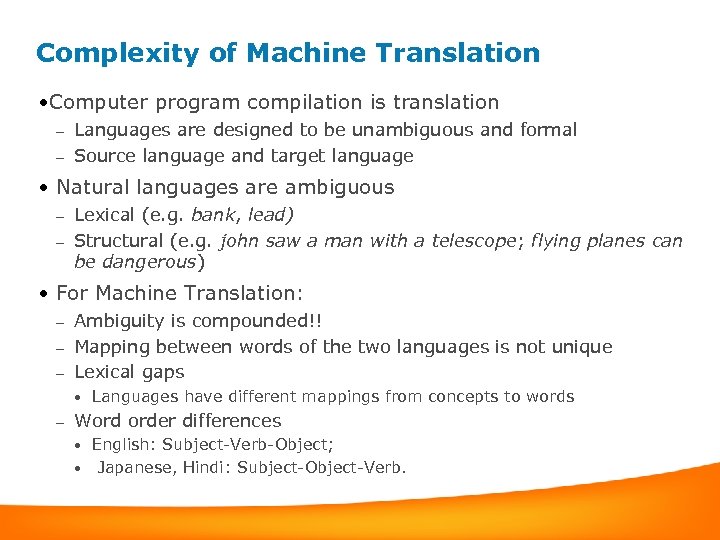

Complexity of Machine Translation

- Compiling a computer program is translation
  - Source language and target language
  - Languages are designed to be unambiguous and formal
- Natural languages are ambiguous
  - Lexical (e.g. bank, lead)
  - Structural (e.g. "John saw a man with a telescope"; "flying planes can be dangerous")
- For Machine Translation, ambiguity is compounded!
  - Mapping between words of the two languages is not unique
  - Lexical gaps: languages have different mappings from concepts to words
  - Word order differences
    - English: Subject-Verb-Object
    - Japanese, Hindi: Subject-Object-Verb

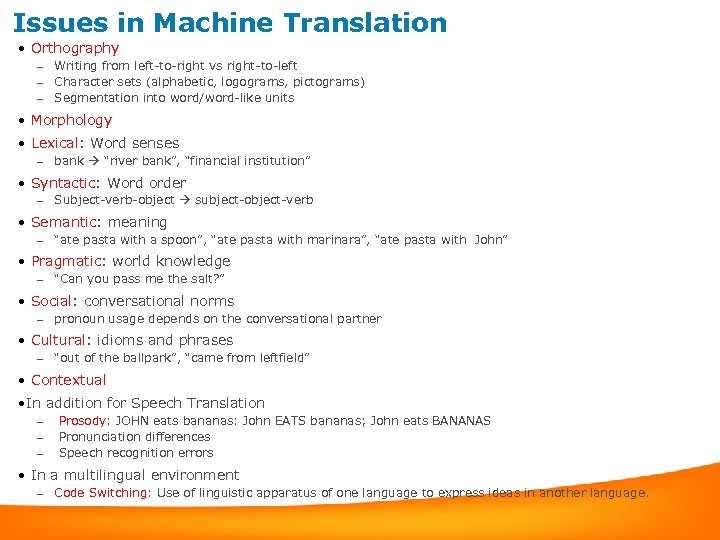

Issues in Machine Translation

- Orthography
  - Writing from left-to-right vs. right-to-left
  - Character sets (alphabetic, logograms, pictograms)
  - Segmentation into word/word-like units
- Morphology
- Lexical: word senses
  - bank: "river bank", "financial institution"
- Syntactic: word order
  - Subject-verb-object vs. subject-object-verb
- Semantic: meaning
  - "ate pasta with a spoon", "ate pasta with marinara", "ate pasta with John"
- Pragmatic: world knowledge
  - "Can you pass me the salt?"
- Social: conversational norms
  - Pronoun usage depends on the conversational partner
- Cultural: idioms and phrases
  - "out of the ballpark", "came from left field"
- Contextual
- In addition, for speech translation
  - Prosody: JOHN eats bananas; John EATS bananas; John eats BANANAS
  - Pronunciation differences
  - Speech recognition errors
- In a multilingual environment
  - Code switching: use of the linguistic apparatus of one language to express ideas in another language

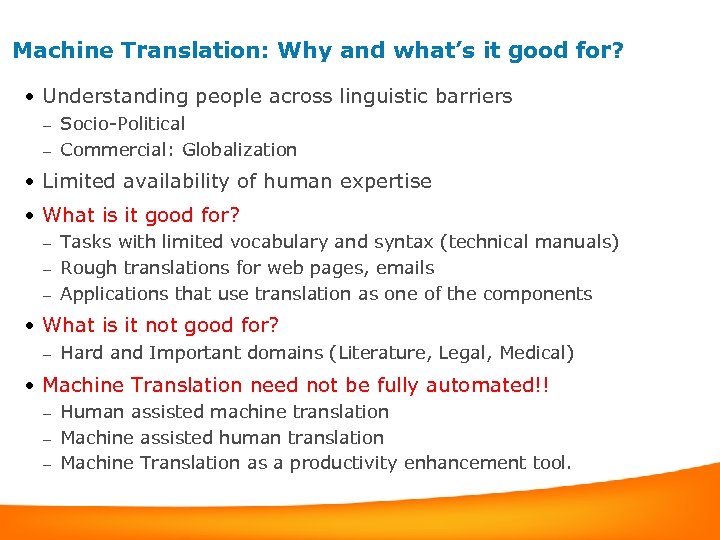

Machine Translation: Why and what's it good for?

- Understanding people across linguistic barriers
  - Socio-political
  - Commercial: globalization
- Limited availability of human expertise
- What is it good for?
  - Tasks with limited vocabulary and syntax (technical manuals)
  - Rough translations of web pages and emails
  - Applications that use translation as one of their components
- What is it not good for?
  - Hard and important domains (literature, legal, medical)
- Machine Translation need not be fully automated!
  - Human-assisted machine translation
  - Machine-assisted human translation
  - Machine Translation as a productivity enhancement tool

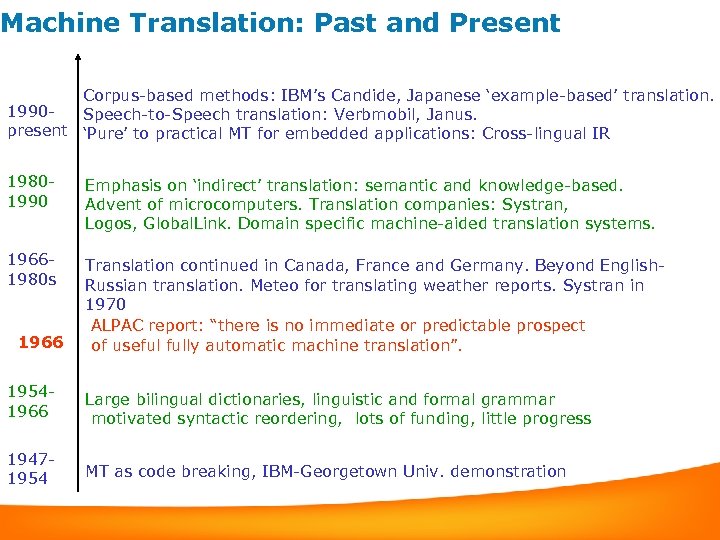

Machine Translation: Past and Present

| Period | Developments |
|---|---|
| 1947–1954 | MT as code breaking; IBM-Georgetown Univ. demonstration |
| 1954–1966 | Large bilingual dictionaries, linguistic and formal-grammar-motivated syntactic reordering; lots of funding, little progress |
| 1966 | ALPAC report: "there is no immediate or predictable prospect of useful fully automatic machine translation" |
| 1966–1980s | Translation continued in Canada, France and Germany; beyond English; Russian translation; Meteo for translating weather reports; Systran in 1970 |
| 1980–1990 | Emphasis on 'indirect' translation: semantic and knowledge-based; advent of microcomputers; translation companies: Systran, Logos, Globalink; domain-specific machine-aided translation systems |
| 1990–present | Corpus-based methods: IBM's Candide, Japanese 'example-based' translation; speech-to-speech translation: Verbmobil, Janus; from 'pure' to practical MT for embedded applications: cross-lingual IR |

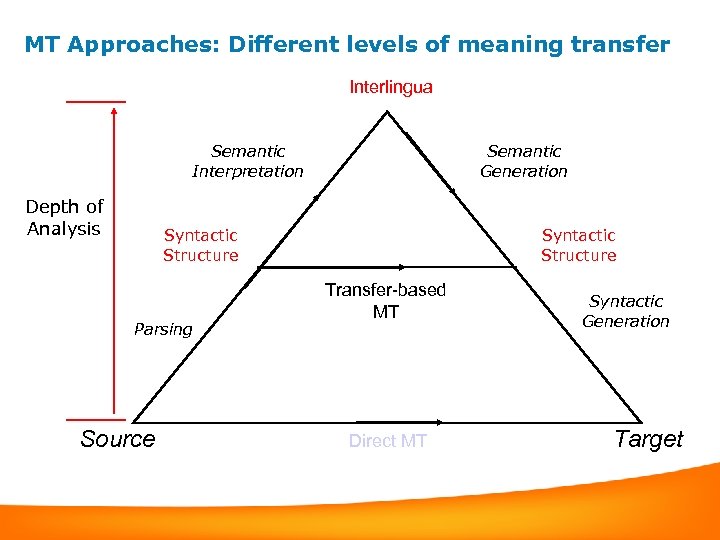

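MT Approaches: Different levels of meaning transfer

- Direct MT: source → target
- Transfer-based MT: source → (parsing) → source syntactic structure → (transfer) → target syntactic structure → (syntactic generation) → target
- Interlingua: source → (semantic interpretation) → interlingua → (semantic generation) → target
- Depth of analysis increases from direct MT through transfer to interlingua.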

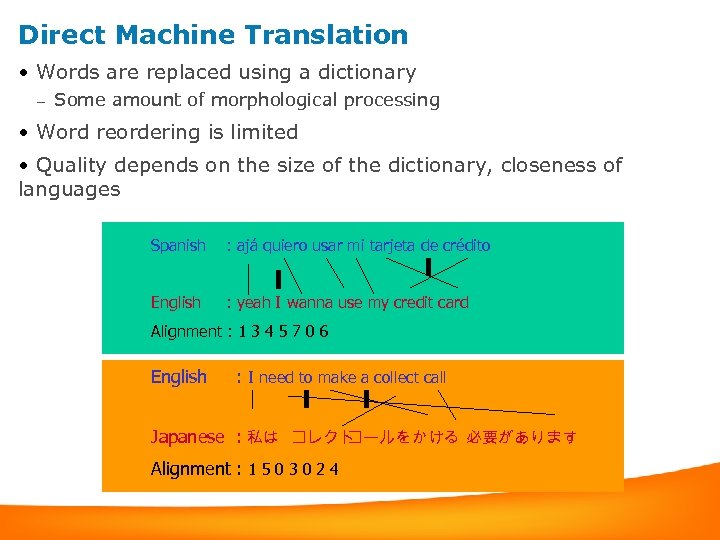

Direct Machine Translation

- Words are replaced using a dictionary
  - Some amount of morphological processing
- Word reordering is limited
- Quality depends on the size of the dictionary and the closeness of the languages

Each alignment line gives, for each word of the first sentence, the position of its counterpart in the second sentence (0 = no counterpart).

- Spanish: ajá quiero usar mi tarjeta de crédito
- English: yeah I wanna use my credit card
- Alignment: 1 3 4 5 7 0 6

- English: I need to make a collect call
- Japanese: 私は コレクト コールを かける 必要があります
- Alignment: 1 5 0 4 0 2 3
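Word-for-word dictionary replacement can be sketched in a few lines. The toy dictionary is hypothetical and folds the morphology of "quiero" (first person, "I want") into a single entry. No reordering is attempted.

```python
# Hypothetical toy Spanish->English dictionary; a real direct-MT system would
# use a large lexicon plus morphological rules.
ES_EN = {
    "ajá": "yeah",
    "quiero": "I want",
    "usar": "to use",
    "mi": "my",
    "tarjeta": "card",
    "de": "of",
    "crédito": "credit",
}


def direct_translate(sentence, lexicon):
    """Replace each source word by its dictionary entry, keeping source order."""
    out = []
    for word in sentence.split():
        # Unknown words are passed through unchanged.
        out.append(lexicon.get(word, word))
    return " ".join(out)


print(direct_translate("ajá quiero usar mi tarjeta de crédito", ES_EN))
# yeah I want to use my card of credit
```

Because source order is kept, "tarjeta de crédito" comes out as "card of credit" instead of "credit card". That is exactly the local reordering recorded in the Spanish alignment (tarjeta → 7, crédito → 6).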

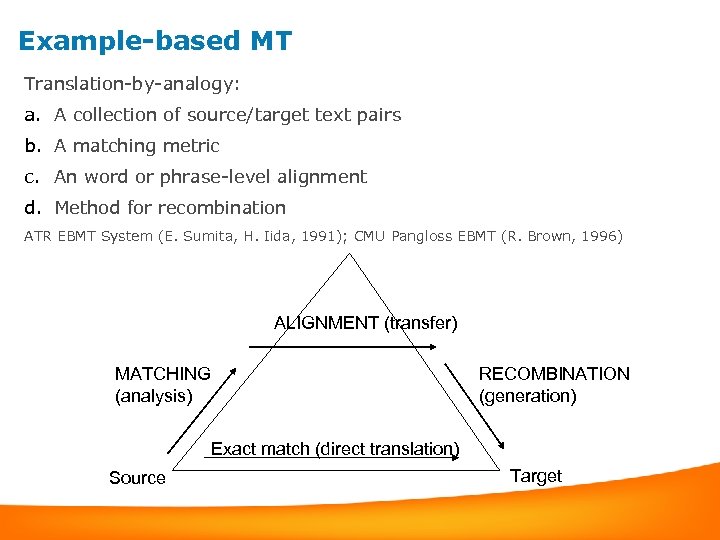

Example-based MT

Translation by analogy requires:
1. A collection of source/target text pairs
2. A matching metric
3. A word- or phrase-level alignment
4. A method for recombination

Systems: ATR EBMT System (E. Sumita, H. Iida, 1991); CMU Pangloss EBMT (R. Brown, 1996)

(Figure: Source → matching (analysis) → alignment (transfer) → recombination (generation) → Target; an exact match amounts to direct translation.)

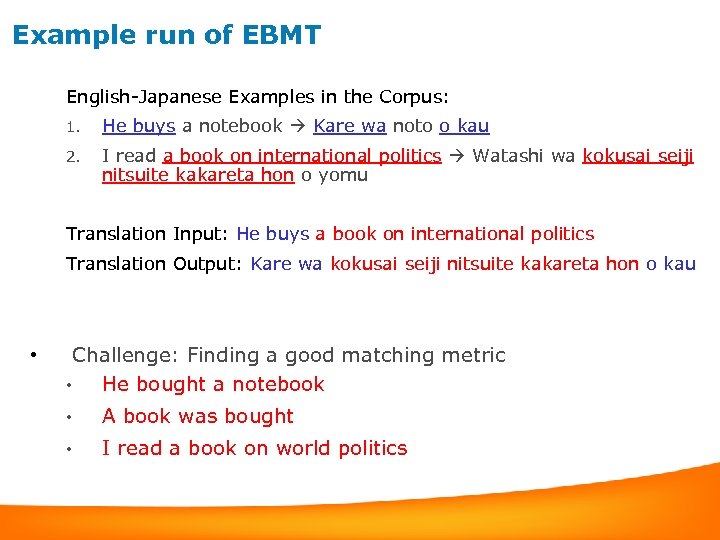

Example run of EBMT (English-Japanese)

Examples in the corpus:
1. He buys a notebook → Kare wa noto o kau
2. I read a book on international politics → Watashi wa kokusai seiji nitsuite kakareta hon o yomu

- Translation input: He buys a book on international politics
- Translation output: Kare wa kokusai seiji nitsuite kakareta hon o kau

Challenge: finding a good matching metric, e.g. for:
- He bought a notebook
- A book was bought
- I read a book on world politics
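One possible matching metric is word-level edit distance between the input and each stored source sentence. This is a minimal sketch under that assumption (the slide leaves the metric open), using the two corpus examples above. It covers only the matching step, not alignment or recombination.

```python
def edit_distance(a, b):
    """Word-level Levenshtein distance between token lists a and b."""
    prev = list(range(len(b) + 1))
    for i, wa in enumerate(a, start=1):
        curr = [i]
        for j, wb in enumerate(b, start=1):
            cost = 0 if wa == wb else 1
            curr.append(min(prev[j] + 1,          # delete wa
                            curr[j - 1] + 1,      # insert wb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def best_example(source, examples):
    """Return (distance, source, target) for the closest stored example."""
    tokens = source.lower().split()
    scored = [(edit_distance(tokens, src.lower().split()), src, tgt)
              for src, tgt in examples]
    return min(scored)


EXAMPLES = [
    ("He buys a notebook", "Kare wa noto o kau"),
    ("I read a book on international politics",
     "Watashi wa kokusai seiji nitsuite kakareta hon o yomu"),
]

dist, src, tgt = best_example("He buys a book on international politics", EXAMPLES)
print(dist, src)  # 2 I read a book on international politics
```

The closest example (distance 2) supplies "kokusai seiji nitsuite kakareta hon o", but the verb "kau" must still come from example 1, which is why recombination is needed. The metric also shows the challenge listed above: "He bought a notebook" is one substitution away from "He buys a notebook", exactly as far as any unrelated verb would be.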

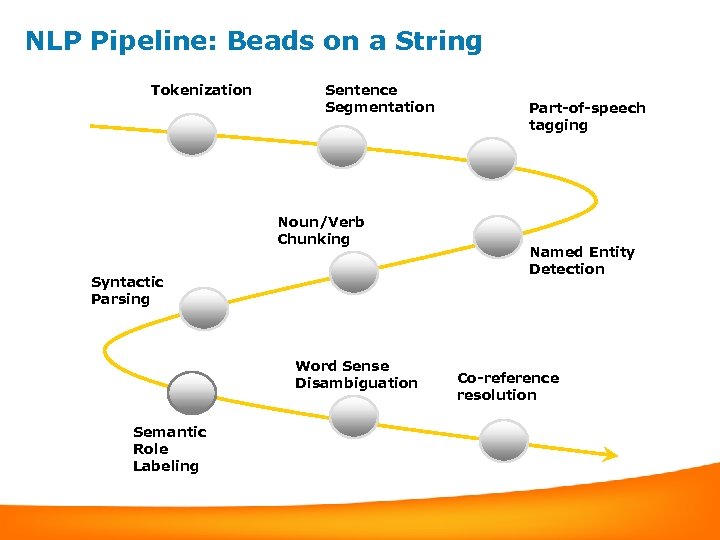

NLP Pipeline: Beads on a String

Tokenization → Sentence Segmentation → Part-of-speech tagging → Named Entity Detection → Noun/Verb Chunking → Syntactic Parsing → Semantic Role Labeling → Word Sense Disambiguation → Co-reference resolution

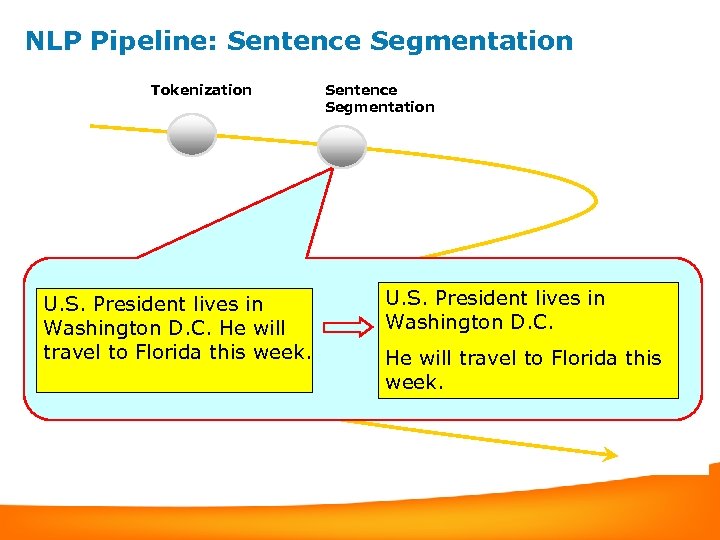

NLP Pipeline: Sentence Segmentation

Input: U.S. President lives in Washington D.C. He will travel to Florida this week.

Output:
1. U.S. President lives in Washington D.C.
2. He will travel to Florida this week.
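The period after "D.C." both closes an abbreviation and ends a sentence, while the one after "U.S." does not. Here is a minimal rule-based sketch, assuming a hypothetical abbreviation list and a hypothetical list of typical sentence openers. Real segmenters learn such cues from data.

```python
# Hypothetical cue lists for illustration only.
ABBREVIATIONS = {"U.S.", "D.C.", "Mr.", "Dr."}
SENTENCE_STARTERS = {"He", "She", "It", "They", "The", "This"}


def split_sentences(text):
    """Split after period-final tokens, treating abbreviations cautiously."""
    sentences, current = [], []
    tokens = text.split()
    for i, tok in enumerate(tokens):
        current.append(tok)
        nxt = tokens[i + 1] if i + 1 < len(tokens) else None
        if not tok.endswith(".") or nxt is None:
            continue
        if tok in ABBREVIATIONS:
            # An abbreviation's period ends a sentence only if the next word
            # looks like a typical sentence opener.
            boundary = nxt in SENTENCE_STARTERS
        else:
            boundary = nxt[0].isupper()
        if boundary:
            sentences.append(" ".join(current))
            current = []
    if current:
        sentences.append(" ".join(current))
    return sentences


text = "U.S. President lives in Washington D.C. He will travel to Florida this week."
for s in split_sentences(text):
    print(s)
```

Splitting at every period followed by a space would also break after "U.S.", and treating every abbreviation as a non-boundary would merge the two sentences.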

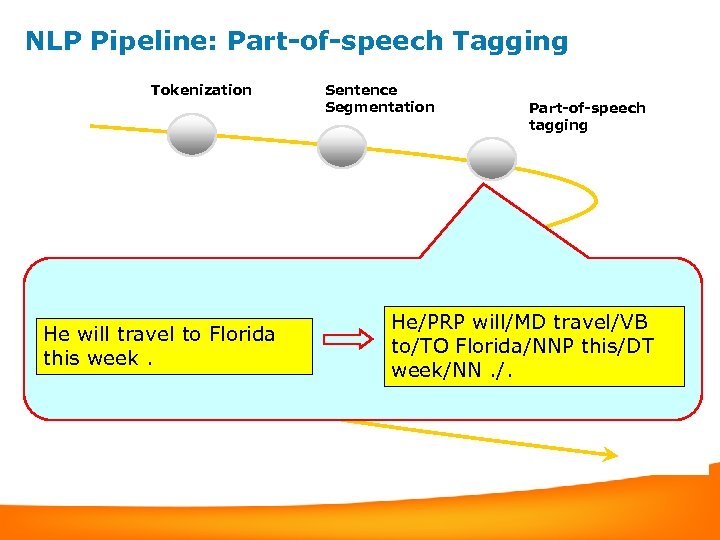

NLP Pipeline: Part-of-speech Tagging

He will travel to Florida this week.

He/PRP will/MD travel/VB to/TO Florida/NNP this/DT week/NN ./.
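A most-frequent-tag lookup is the simplest baseline tagger. In this sketch the lexicon is a hypothetical stand-in for counts from a tagged corpus, and unknown words fall back to NNP if capitalized and NN otherwise. It reproduces the tagging above but ignores context.

```python
# Hypothetical most-frequent-tag lexicon (Penn Treebank tags).
LEXICON = {
    "he": "PRP", "will": "MD", "travel": "VB", "to": "TO",
    "this": "DT", "week": "NN", ".": ".",
}


def tag(tokens):
    """Tag each token by lexicon lookup, with a capitalization fallback."""
    tagged = []
    for tok in tokens:
        t = LEXICON.get(tok.lower())
        if t is None:
            # Unknown word: capitalized -> proper noun, otherwise common noun.
            t = "NNP" if tok[:1].isupper() else "NN"
        tagged.append(f"{tok}/{t}")
    return " ".join(tagged)


print(tag("He will travel to Florida this week .".split()))
# He/PRP will/MD travel/VB to/TO Florida/NNP this/DT week/NN ./.
```

Words such as "will" (modal or noun) and "travel" (verb or noun) show why real taggers also look at the surrounding words and tags.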

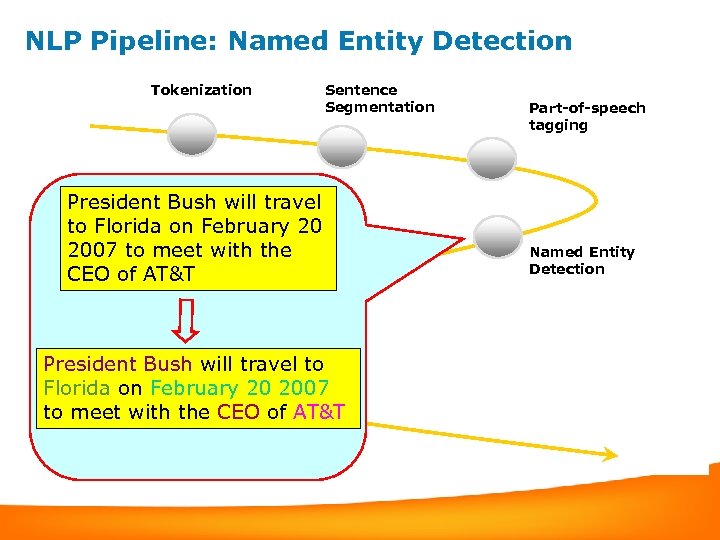

NLP Pipeline: Named Entity Detection

President Bush will travel to Florida on February 20 2007 to meet with the CEO of AT&T

Entities: President Bush (person), Florida (place), February 20 2007 (date), CEO (job), AT&T (organization)
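For this one sentence, a gazetteer plus a date pattern is enough to find the entities and replace them with the type placeholders used on the parsing slide. The gazetteer entries and the date regular expression are assumptions for illustration, not a general recognizer.

```python
import re

# Hypothetical gazetteer; real systems combine such lists with trained models.
GAZETTEER = {
    "President Bush": "$PERSON",
    "Florida": "$PLACE",
    "CEO": "$JOB",
    "AT&T": "$ORG",
}
MONTHS = (r"(?:January|February|March|April|May|June|July|August|"
          r"September|October|November|December)")
DATE_RE = re.compile(MONTHS + r" \d{1,2},? \d{4}")


def mark_entities(sentence):
    """Replace recognized entity mentions by their type placeholders."""
    out = DATE_RE.sub("$DATE", sentence)
    # Longest names first, so multi-word entries win over any shorter overlap.
    for name in sorted(GAZETTEER, key=len, reverse=True):
        out = re.sub(r"\b" + re.escape(name) + r"\b", GAZETTEER[name], out)
    return out


s = "President Bush will travel to Florida on February 20 2007 to meet with the CEO of AT&T"
print(mark_entities(s))
# $PERSON will travel to $PLACE on $DATE to meet with the $JOB of $ORG
```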

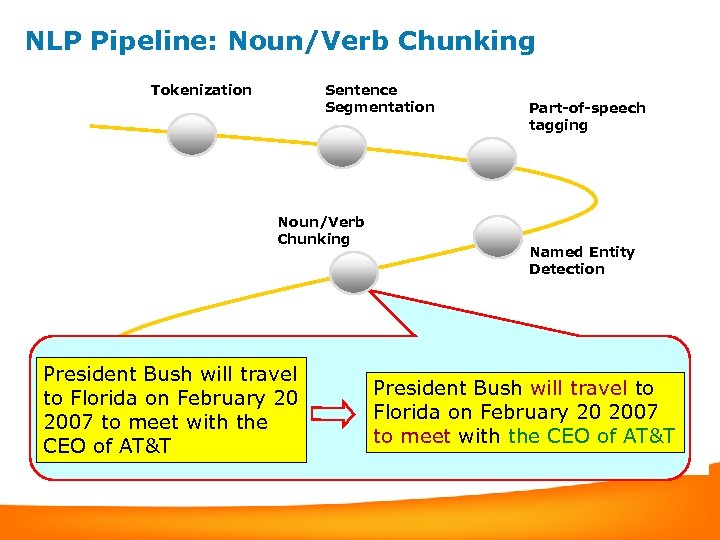

NLP Pipeline: Noun/Verb Chunking

President Bush will travel to Florida on February 20 2007 to meet with the CEO of AT&T
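Chunking groups tagged words into flat, non-overlapping noun and verb groups. This sketch assumes hand-written Penn Treebank tags for the example sentence and a simple grouping rule: consecutive determiner/adjective/noun/number tags form an NP, and consecutive modal/verb tags form a VP.

```python
# Tags a POS tagger might assign (Penn Treebank tagset), written out by hand here.
TAGGED = [
    ("President", "NNP"), ("Bush", "NNP"), ("will", "MD"), ("travel", "VB"),
    ("to", "TO"), ("Florida", "NNP"), ("on", "IN"), ("February", "NNP"),
    ("20", "CD"), ("2007", "CD"), ("to", "TO"), ("meet", "VB"),
    ("with", "IN"), ("the", "DT"), ("CEO", "NNP"), ("of", "IN"), ("AT&T", "NNP"),
]
NP_TAGS = {"DT", "JJ", "NN", "NNS", "NNP", "CD"}
VP_TAGS = {"MD", "VB", "VBD", "VBZ", "VBP", "VBG", "VBN"}


def chunk(tagged):
    """Bracket runs of NP-type or VP-type tags; other words stay unchunked."""
    out, label, words = [], None, []
    for word, tag in tagged:
        new = "NP" if tag in NP_TAGS else "VP" if tag in VP_TAGS else None
        if new != label or new is None:
            if label is not None:  # close the open chunk
                out.append(f"[{label} {' '.join(words)}]")
            label, words = new, []
            if new is None:  # word outside any chunk
                out.append(word)
                continue
        words.append(word)
    if label is not None:
        out.append(f"[{label} {' '.join(words)}]")
    return " ".join(out)


print(chunk(TAGGED))
# [NP President Bush] [VP will travel] to [NP Florida] on [NP February 20 2007] to [VP meet] with [NP the CEO] of [NP AT&T]
```

The rule merges any adjacent noun-type words, so two back-to-back NPs (as in "gave the man a book") would wrongly become one chunk; practical chunkers use trained classifiers or richer patterns.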

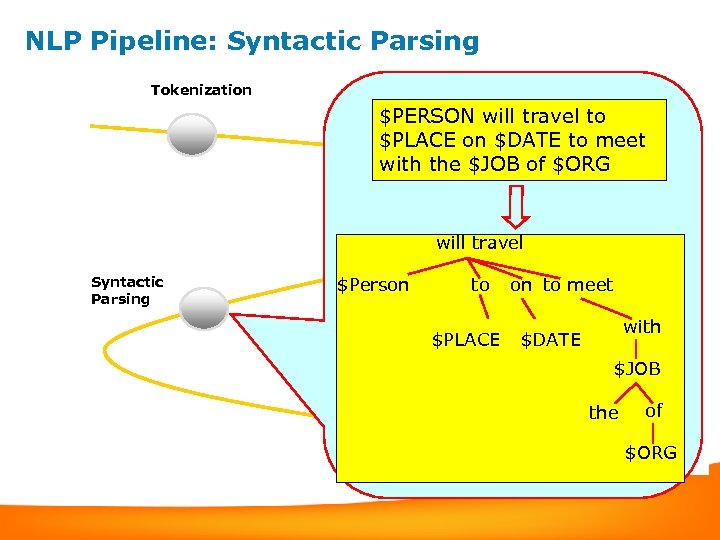

NLP Pipeline: Syntactic Parsing

$PERSON will travel to $PLACE on $DATE to meet with the $JOB of $ORG

(Figure: syntactic parse tree of this sentence.)

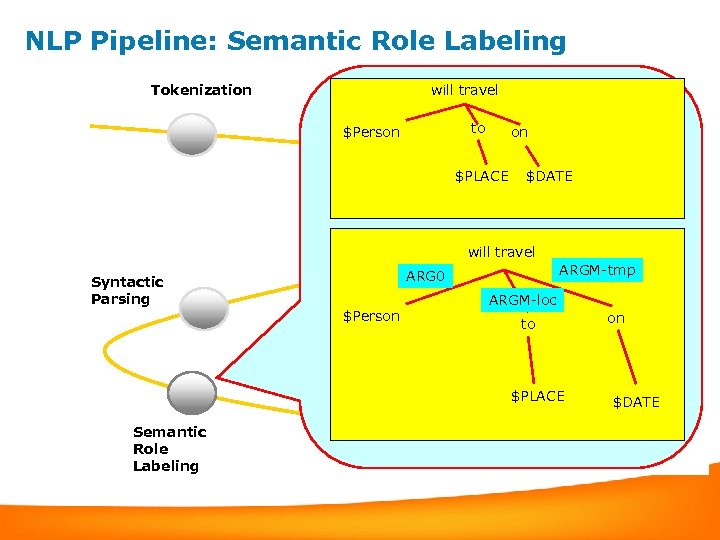

NLP Pipeline: Semantic Role Labeling

$PERSON will travel to $PLACE on $DATE

- Predicate: will travel
- ARG0: $PERSON
- ARGM-LOC: to $PLACE
- ARGM-TMP: on $DATE
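For this clause, the roles can be read off with two hand-written rules: the words before the predicate are ARG0, and a prepositional phrase after it gets a role chosen by its preposition. Both the rules and the preposition-to-role table are hypothetical simplifications.

```python
# Hypothetical preposition-to-role table for the predicate "travel".
PREP_ROLES = {"to": "ARGM-LOC", "on": "ARGM-TMP"}


def label_roles(tokens, predicate):
    """ARG0 = words before the predicate; 'prep + object' after it gets a role."""
    start = tokens.index(predicate[0])
    roles = {"V": " ".join(predicate)}
    if start > 0:
        roles["ARG0"] = " ".join(tokens[:start])
    i = start + len(predicate)
    while i < len(tokens):
        role = PREP_ROLES.get(tokens[i])
        if role and i + 1 < len(tokens):
            # Preposition plus its single-token object forms the argument.
            roles[role] = f"{tokens[i]} {tokens[i + 1]}"
            i += 2
        else:
            i += 1
    return roles


print(label_roles("$PERSON will travel to $PLACE on $DATE".split(), ["will", "travel"]))
```

Applied to the full sentence, the same table would also label "to meet" as ARGM-LOC (overwriting "to $PLACE"), which is one reason SRL systems work from the syntactic parse rather than from prepositions alone.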

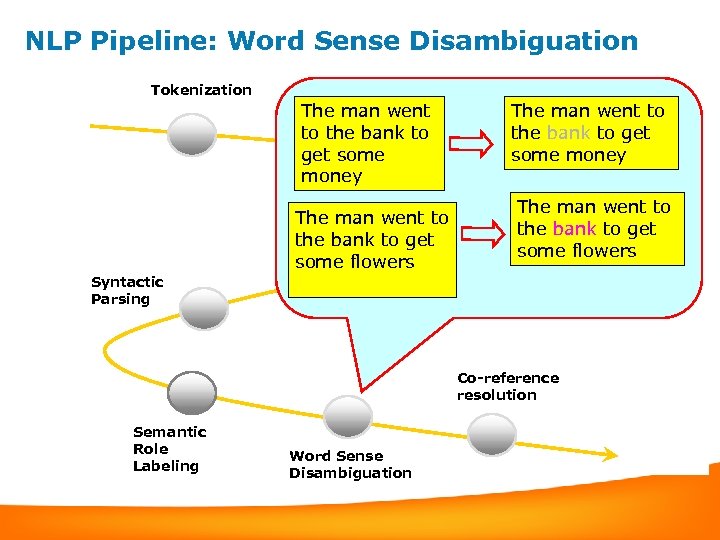

NLP Pipeline: Word Sense Disambiguation

- The man went to the bank to get some money (bank: financial institution)
- The man went to the bank to get some flowers (bank: river bank)
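The simplified Lesk algorithm picks the sense whose dictionary gloss shares the most content words with the sentence. The two glosses and the stopword list here are hypothetical; a real system would take glosses from a resource such as WordNet.

```python
# Hypothetical glosses for two senses of "bank".
SENSES = {
    "financial institution": "an institution where people deposit and withdraw money",
    "river bank": "sloping land beside a river where flowers and grass grow",
}
STOPWORDS = {"a", "an", "and", "the", "to", "where", "some", "of"}


def content_words(text):
    return {w for w in text.lower().split() if w not in STOPWORDS}


def simplified_lesk(context, senses):
    """Return the sense whose gloss overlaps most with the context (ties: first sense)."""
    ctx = content_words(context)
    return max(senses, key=lambda s: len(ctx & content_words(senses[s])))


for sentence in ("The man went to the bank to get some money",
                 "The man went to the bank to get some flowers"):
    print(sentence, "->", simplified_lesk(sentence, SENSES))
```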

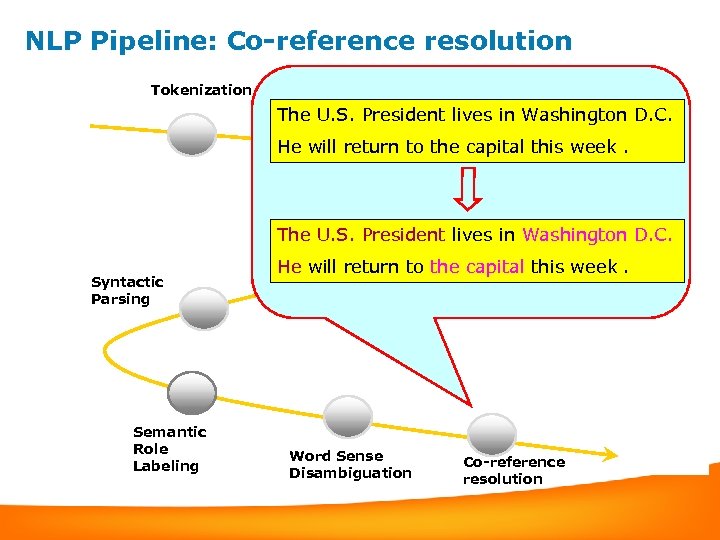

NLP Pipeline: Co-reference resolution

The U.S. President lives in Washington D.C. He will return to the capital this week.

- He → The U.S. President
- the capital → Washington D.C.

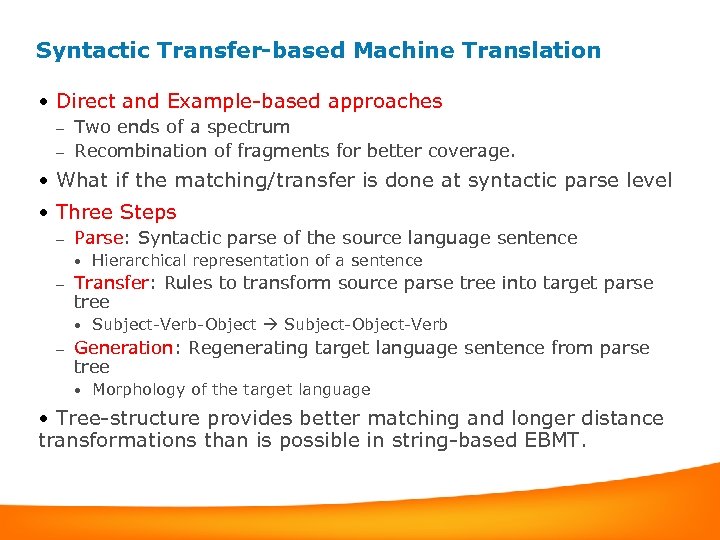

Syntactic Transfer-based Machine Translation

- Direct and example-based approaches
  - Two ends of a spectrum
  - Recombination of fragments for better coverage
- What if the matching/transfer is done at the syntactic parse level?
- Three steps
  - Parse: syntactic parse of the source language sentence
    - Hierarchical representation of a sentence
  - Transfer: rules to transform the source parse tree into the target parse tree
    - Subject-Verb-Object → Subject-Object-Verb
  - Generation: regenerating the target language sentence from the parse tree
    - Morphology of the target language
- The tree structure allows better matching and longer-distance transformations than string-based EBMT.
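The three steps can be sketched on the collect-call example, assuming the source parse is already given. The tree shape, the single head-final transfer rule (in a VP that starts with a verb or "to", move that head to the end), and the lexicon are all hypothetical. Japanese particles are folded into lexicon entries as a shortcut for target-side morphology.

```python
# A parse tree is a tuple (label, child, ...); words are plain strings.
TREE = ("S",
        ("NP", "I"),
        ("VP", ("V", "need"),
               ("VP", ("TO", "to"),
                      ("VP", ("V", "make"),
                             ("NP", ("DT", "a"), ("NN", "collect"), ("NN", "call"))))))

# Hypothetical English->Japanese lexicon; "" marks a word with no counterpart.
LEXICON = {"I": "私は", "need": "必要があります", "to": "", "make": "かける",
           "a": "", "collect": "コレクト", "call": "コールを"}


def transfer(tree):
    """Head-final rule: in a VP starting with V or TO, move that head to the end."""
    if isinstance(tree, str):
        return tree
    label, *children = tree
    children = [transfer(c) for c in children]
    if (label == "VP" and children and not isinstance(children[0], str)
            and children[0][0] in ("V", "TO")):
        children = children[1:] + children[:1]
    return (label, *children)


def leaves(tree):
    """Words of a tree in left-to-right order."""
    if isinstance(tree, str):
        return [tree]
    return [w for child in tree[1:] for w in leaves(child)]


def generate(tree, lexicon):
    """Lexical transfer of the leaves; words mapped to "" are dropped."""
    words = [lexicon.get(w, w) for w in leaves(tree)]
    return " ".join(w for w in words if w)


print(generate(transfer(TREE), LEXICON))
# 私は コレクト コールを かける 必要があります
```

One rule applied at every VP level yields the full verb-final order, and each application only swaps a parent's children, which matches the parent-child-restricted reordering of this approach.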

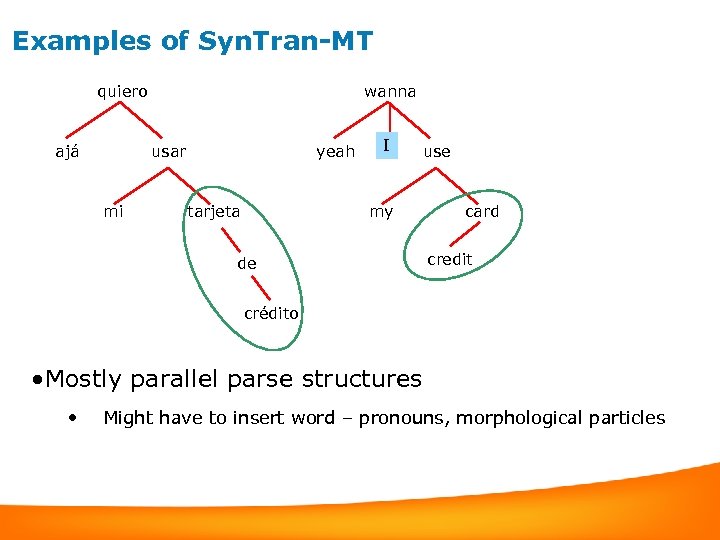

Examples of Syntactic Transfer MT

(Figure: parallel parse trees for "ajá quiero usar mi tarjeta de crédito" and "yeah I wanna use my credit card".)

- Mostly parallel parse structures
- Might have to insert words: pronouns, morphological particles

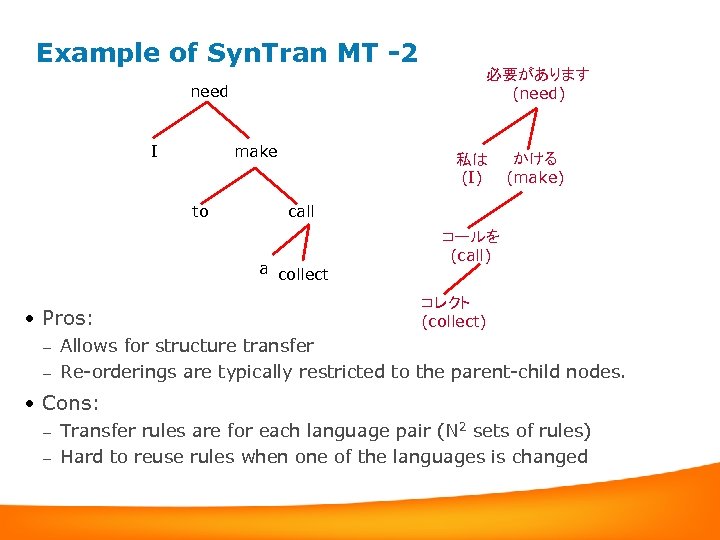

Example of Syntactic Transfer MT (2)

(Figure: parse trees for "I need to make a collect call" and 私は (I) コレクト (collect) コールを (call) かける (make) 必要があります (need).)

- Pros:
  - Allows for structure transfer
  - Re-orderings are typically restricted to parent-child nodes
- Cons:
  - Transfer rules are needed for each language pair (N² sets of rules)
  - Hard to reuse rules when one of the languages is changed

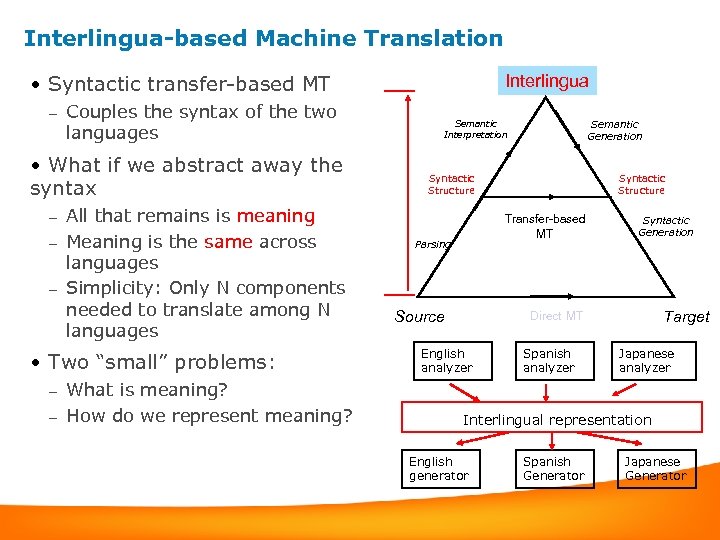

Interlingua-based Machine Translation

- Syntactic transfer-based MT couples the syntax of the two languages
- What if we abstract away the syntax?
  - All that remains is meaning
  - Meaning is the same across languages
  - Simplicity: only N components (an analyzer and a generator per language) are needed to translate among N languages
- Two "small" problems:
  - What is meaning?
  - How do we represent meaning?

(Figure: the MT pyramid with the interlingua at the apex; English, Spanish and Japanese analyzers map into a shared interlingual representation, and English, Spanish and Japanese generators map out of it.)
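The component counts behind this comparison are simple arithmetic. The sketch assumes one transfer rule set per ordered language pair, and one analyzer plus one generator per language for the interlingua approach.

```python
def transfer_rule_sets(n):
    """Ordered language pairs: each source needs rules for every other target."""
    return n * (n - 1)


def interlingua_modules(n):
    """One analyzer and one generator per language."""
    return 2 * n


for n in (3, 10, 20):
    print(n, transfer_rule_sets(n), interlingua_modules(n))
# 3 6 6
# 10 90 20
# 20 380 40
```

With the three languages in the figure the two approaches tie at six components; beyond that, transfer grows quadratically and interlingua linearly.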

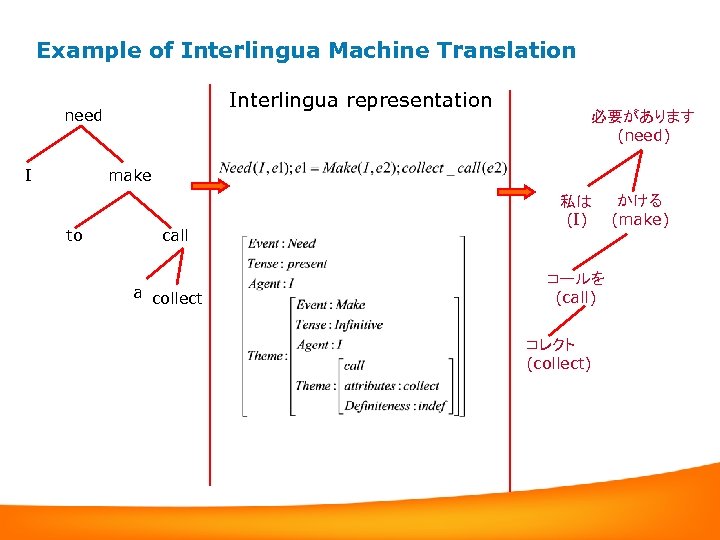

Example of Interlingua Machine Translation

(Figure: an interlingua representation connecting "I need to make a collect call" with 私は (I) コレクト (collect) コールを (call) かける (make) 必要があります (need).)

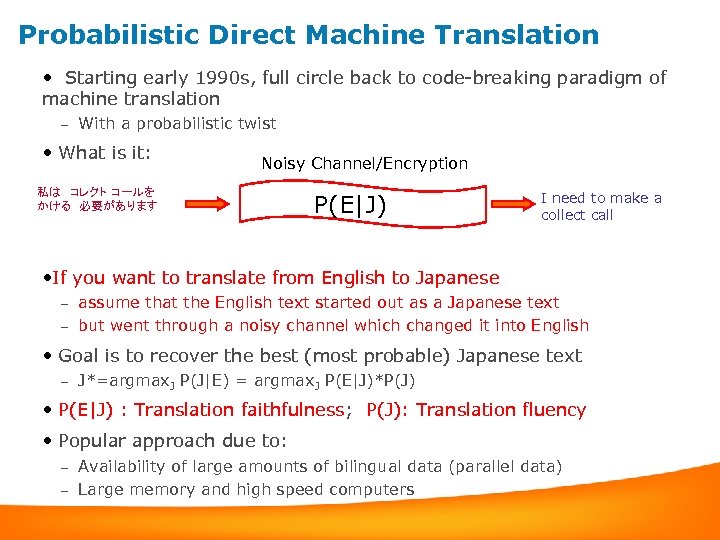

Probabilistic Direct Machine Translation

- Starting in the early 1990s, a full circle back to the code-breaking paradigm of machine translation
  - With a probabilistic twist
- What is it? Noisy channel/encryption:
  - 私は コレクト コールを かける 必要があります → P(E|J) → I need to make a collect call
- If you want to translate from English to Japanese, assume that the English text started out as Japanese text
  - but went through a noisy channel that changed it into English
- The goal is to recover the best (most probable) Japanese text:

$$J^* = \arg\max_J P(J \mid E) = \arg\max_J P(E \mid J)\,P(J)$$

- P(E|J): translation faithfulness; P(J): translation fluency
- Popular approach due to:
  - Availability of large amounts of bilingual (parallel) data
  - Large-memory, high-speed computers
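The decision rule can be illustrated by scoring a handful of candidate Japanese sentences for one English input. All probabilities below are invented for illustration. A real system would take P(J) from a Japanese language model and P(E|J) from a translation model trained on parallel data, and would search a vast candidate space instead of a fixed list.

```python
E = "I need to make a collect call"

# Hypothetical (P(J) fluency, P(E|J) faithfulness) scores for candidates J of E.
CANDIDATES = {
    "私は コレクト コールを かける 必要があります": (4e-3, 0.30),
    "私は かける コレクト コールを 必要があります": (1e-4, 0.30),  # scrambled word order
    "私は 電話する 必要があります": (6e-3, 0.05),  # fluent, but drops "collect"
}


def noisy_channel_decode(candidates):
    """Return the J maximizing P(E|J) * P(J)."""
    return max(candidates, key=lambda j: candidates[j][1] * candidates[j][0])


print(E, "->", noisy_channel_decode(CANDIDATES))
```

The scrambled candidate is as faithful as the winner but loses on fluency, P(J); the shorter paraphrase is fluent but loses on faithfulness, P(E|J).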

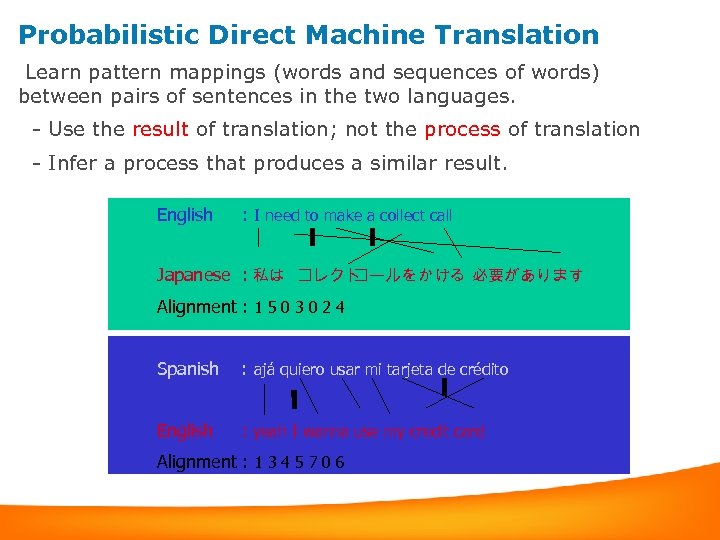

Probabilistic Direct Machine Translation

Learn pattern mappings (words and sequences of words) between pairs of sentences in the two languages.
- Use the result of translation, not the process of translation.
- Infer a process that produces a similar result.

- English: I need to make a collect call
- Japanese: 私は コレクト コールを かける 必要があります
- Alignment: 1 5 0 4 0 2 3

- Spanish: ajá quiero usar mi tarjeta de crédito
- English: yeah I wanna use my credit card
- Alignment: 1 3 4 5 7 0 6
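An alignment line can be read as one 1-based target position per source word, with 0 for "no counterpart" (our reading of the notation, which matches the Spanish-English pair). The sketch turns such a line into word pairs, the raw material a statistical system would count over many sentence pairs.

```python
def aligned_pairs(source, target, alignment):
    """Pair each source word with its aligned target word (None for position 0)."""
    src, tgt = source.split(), target.split()
    if len(alignment) != len(src):
        raise ValueError("need one alignment index per source word")
    return [(s, tgt[a - 1] if a else None) for s, a in zip(src, alignment)]


def unaligned_targets(target, alignment):
    """Target words that no source word points to."""
    used = set(alignment)
    return [w for i, w in enumerate(target.split(), start=1) if i not in used]


SPANISH = "ajá quiero usar mi tarjeta de crédito"
ENGLISH = "yeah I wanna use my credit card"
ALIGNMENT = [1, 3, 4, 5, 7, 0, 6]

for pair in aligned_pairs(SPANISH, ENGLISH, ALIGNMENT):
    print(pair)
print(unaligned_targets(ENGLISH, ALIGNMENT))  # ['I']
```

The unaligned English "I" is the pronoun that Spanish expresses only through the verb ending of "quiero".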

Applications of Machine Translation

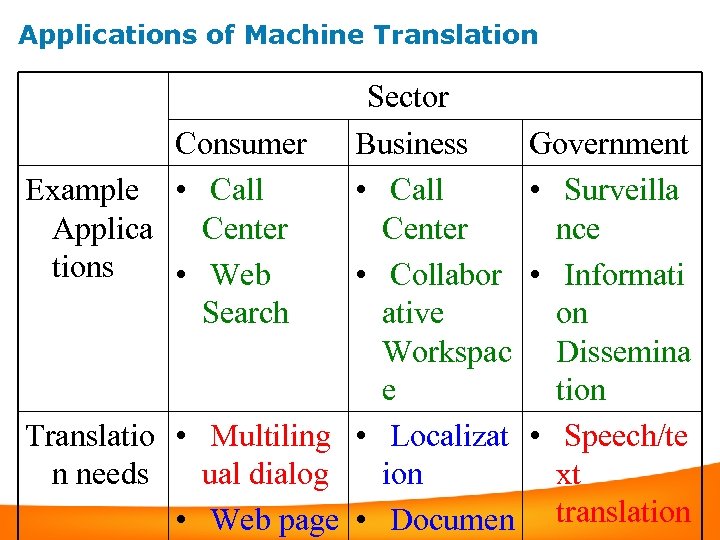

Applications of Machine Translation

- Sectors: consumer, business, government
- Example applications: call center, web search, collaborative workspace, surveillance, information dissemination
- Translation needs: multilingual dialog, localization, web page, document, speech/text translation

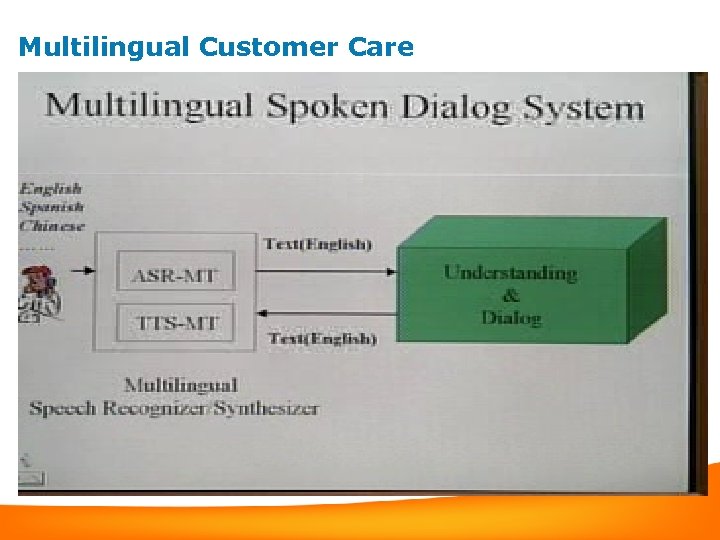

Multilingual Customer Care

Making Travel Arrangements using Multilingual Chat

Large Vocabulary Speech Recognition and Translation


Evaluation of Machine Translation

Machine Translation Evaluation

- What is a good translation?
  - A meaning-preserving and (socially, culturally, conversationally) context-appropriate rendering of the source language sentence
- Bilingual human annotators
  - Mark the output of a translation system on a 5-point scale
  - Expensive!
  - Too coarse to provide a feedback signal for improving the translation system
- Objective metrics: approximations to the real thing!
  - Lexical Accuracy (LA): bag of words
  - Translation Accuracy (TA): based on string alignment
- Application-driven evaluation
  - "How May I Help You?": spoken dialog for call routing
  - Classification based on salient phrase detection
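The slide does not give formulas, so this sketch assumes a common string-based definition: translation accuracy as 1 - (I + D + S)/R, where I, D and S are the word insertions, deletions and substitutions in a minimal alignment against a reference of R words, and lexical accuracy as the same score computed on bags of words, so word order is ignored.

```python
from collections import Counter


def word_edit_distance(hyp, ref):
    """Minimum word insertions, deletions and substitutions turning hyp into ref."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i]
        for j, r in enumerate(ref, start=1):
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + (h != r)))
        prev = curr
    return prev[-1]


def translation_accuracy(hyp, ref):
    """Assumed TA: 1 - (I + D + S) / R over word strings."""
    h, r = hyp.split(), ref.split()
    return 1 - word_edit_distance(h, r) / len(r)


def lexical_accuracy(hyp, ref):
    """Assumed LA: the same score on bags of words (order ignored)."""
    h, r = Counter(hyp.split()), Counter(ref.split())
    matched = sum((h & r).values())
    # Minimal bag edits: substitute unmatched words, insert/delete the length gap.
    errors = max(sum(h.values()), sum(r.values())) - matched
    return 1 - errors / sum(r.values())


ref = "I need to make a collect call"
hyp = "I need to make a call collect"
print(round(lexical_accuracy(hyp, ref), 3), round(translation_accuracy(hyp, ref), 3))
# 1.0 0.714
```

Swapping "collect call" to "call collect" costs nothing in the bag-of-words score but two substitutions in the string score. Both scores can become negative when the output is much longer than the reference.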

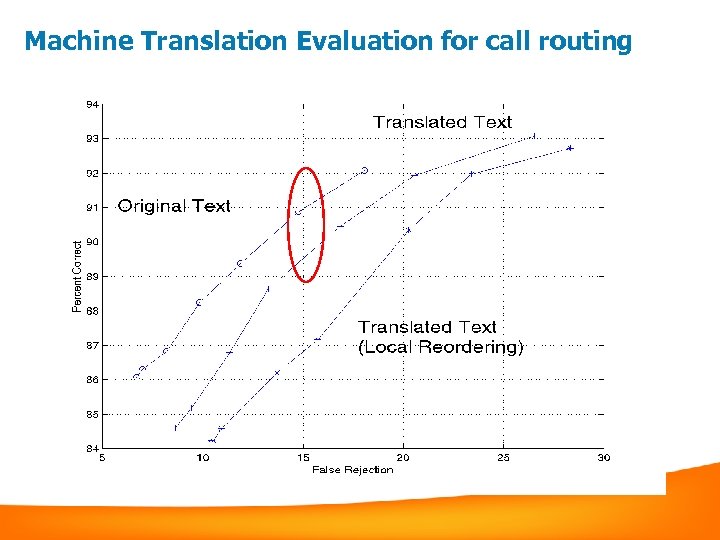

Machine Translation Evaluation for call routing

Summary

- Fully automatic machine translation in its full complexity is a very hard task
- Pragmatic approaches to machine translation have been successful
  - Limited domain/vocabulary
  - Human-assisted machine translation
  - Machine-assisted human translation
- A range of applications for "rough" machine translation
- Machine translation will improve as we better understand how people communicate.

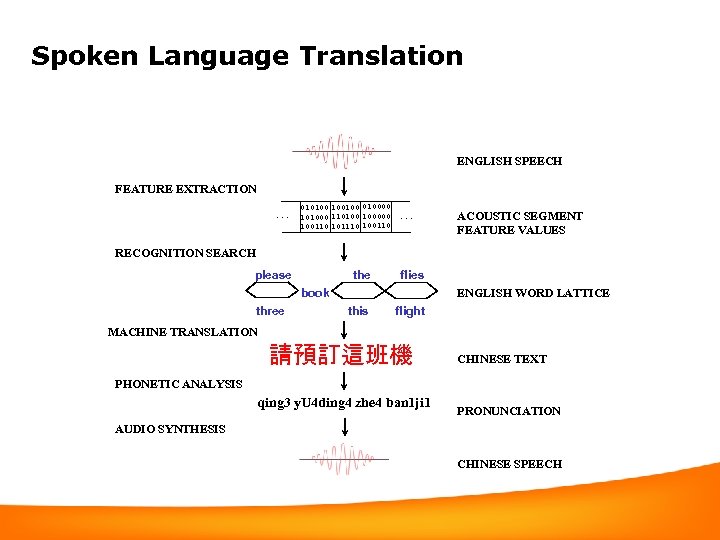

Spoken Language Translation

1. English speech → feature extraction → acoustic segment feature values
2. Recognition search → English word lattice (competing hypotheses: please, the, flies, book, three, this, flight; best path: "please book this flight")
3. Machine translation → Chinese text: 請預訂這班機
4. Phonetic analysis → pronunciation: qing3 yu4 ding4 zhe4 ban1 ji1
5. Audio synthesis → Chinese speech
