f0c9d8ab356d7e5dc17b04cf440b4c60.ppt

- Number of slides: 24

Adding High Availability to Condor Central Manager
Gabi Kliot
Technion – Israel Institute of Technology
©Gabriel Kliot, Technion. Condor week – March 2005

Outline
- Current Condor pool
- Motivation for Highly Available Central Manager
- The solution - HA Daemon
- Performance impacts
- Testing
- Future Work

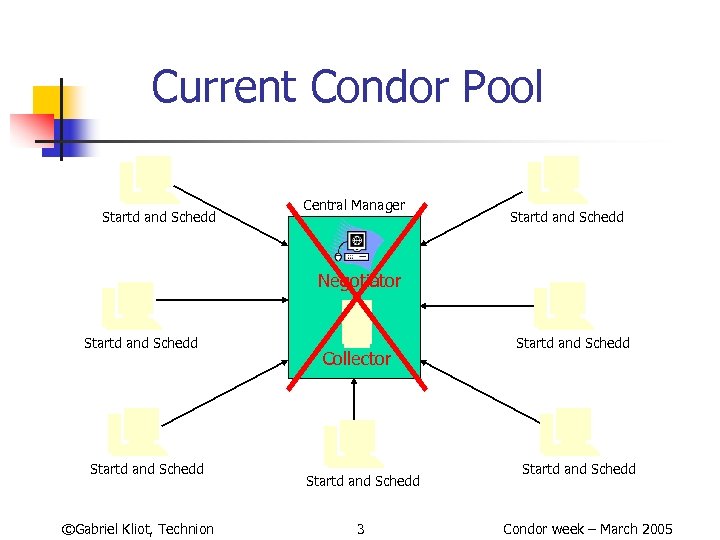

Current Condor Pool
Diagram labels: Central Manager (Negotiator, Collector); Startd and Schedd (×5).

Why Highly Available Central Manager
- Central manager is a single point of failure
  - Negotiator's failure: no additional matches will be possible
  - Collector's failure: negotiator is out of job, tools querying collector won't work, etc.
- Our goal
  - Allow continuous pool functioning in case of failure

Highly Available Condor Pool
Diagram labels: Highly Available Central Manager; Active Central Manager; Idle Central Manager (×2); Startd and Schedd (×5).

Highly Available Central Manager
- Our solution - Highly Available Central Manager
  - Automatic failure detection
  - Transparent failover to backup matchmaker (no global configuration change for the pool entities)
  - "Split brain" reconciliation after network partitions
  - State replication between active and backups
  - No changes to Negotiator/Collector code

Highly Available Central Manager: How it works
- Collector's HA is provided by redundancy
- Negotiator's HA is provided by HA daemons

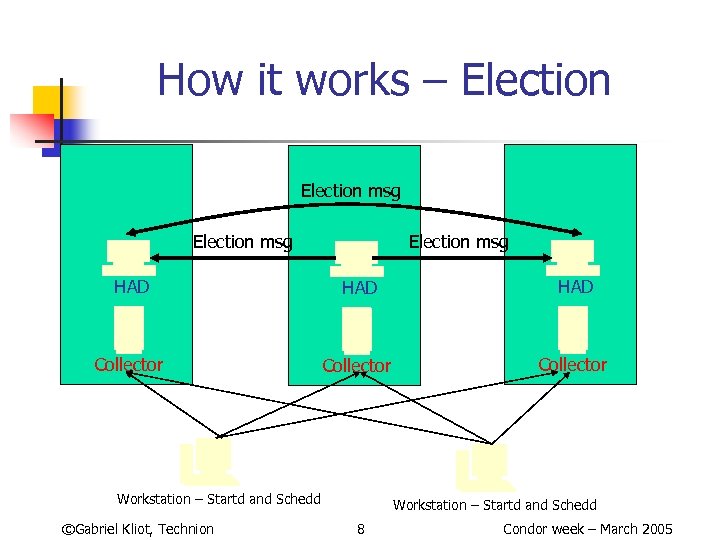

How it works – Election
Diagram labels: Election msg; HAD (×2); Collector; Workstation – Startd and Schedd (×2).

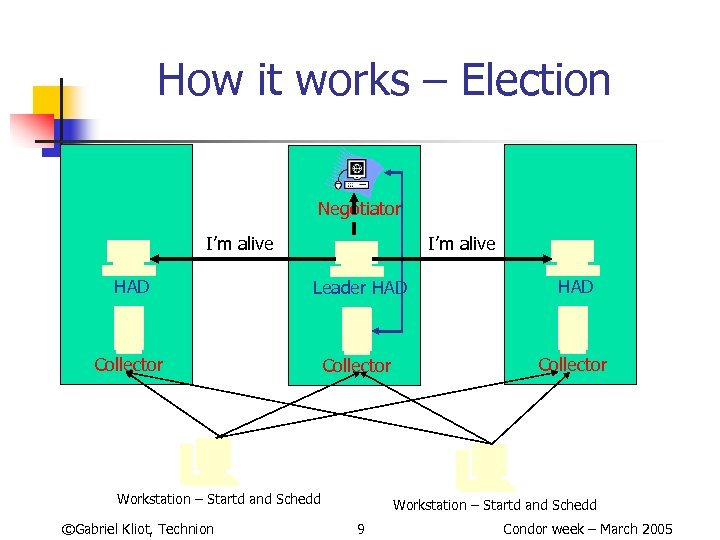

How it works – Election
Diagram labels: Negotiator; I'm alive; HAD; Leader; HAD; Collector; Workstation – Startd and Schedd (×2).

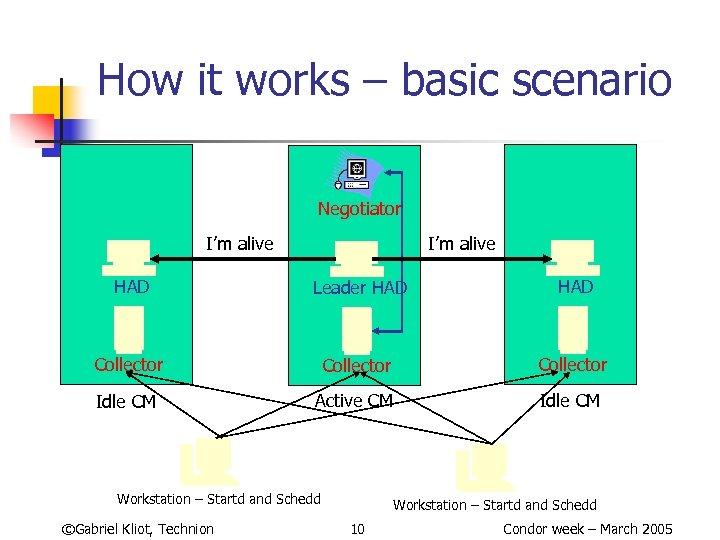

How it works – basic scenario
Diagram labels: Negotiator; I'm alive; HAD; Leader; HAD; Collector; Idle CM; Active CM; Idle CM; Workstation – Startd and Schedd (×2).

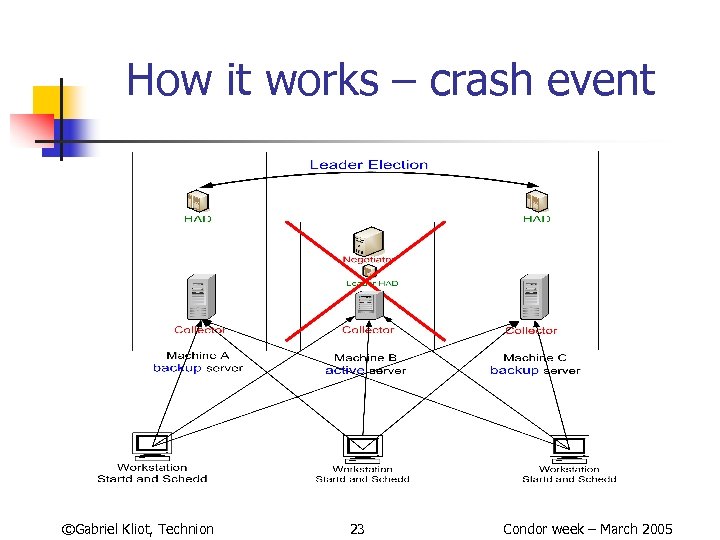

How it works – crash event
Diagram labels: Negotiator; I'm alive; HAD; Leader; HAD; Collector; Idle CM; Active CM; Idle CM; Workstation – Startd and Schedd (×2).

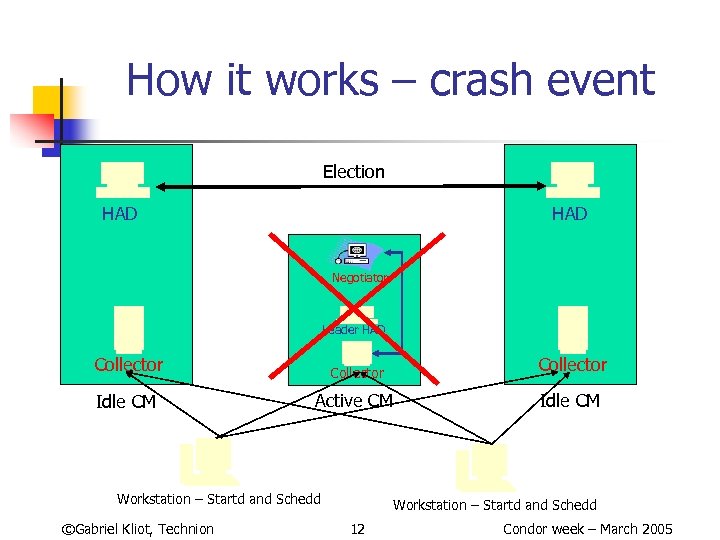

How it works – crash event
Diagram labels: Election; HAD; Negotiator; Leader; HAD; Collector; Idle CM; Active CM; Idle CM; Workstation – Startd and Schedd (×2).

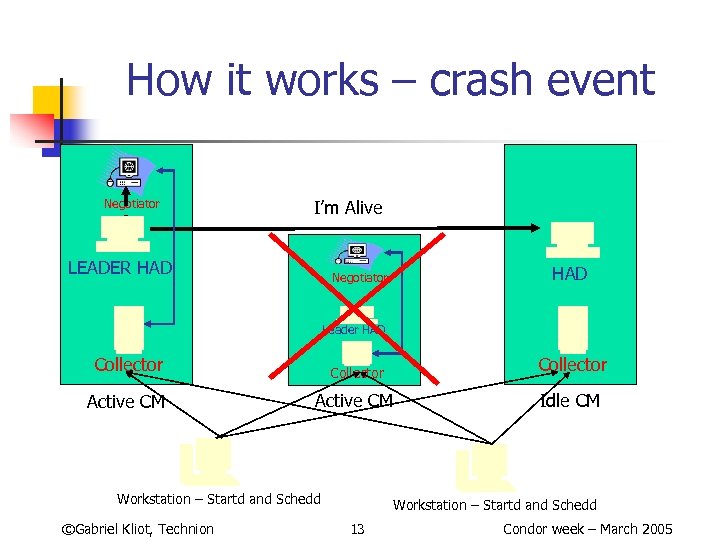

How it works – crash event
Diagram labels: Negotiator; I'm Alive; LEADER; HAD; Negotiator; HAD; Leader; HAD; Collector; Active CM; Idle CM; Workstation – Startd and Schedd (×2).
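The election and crash-event slides above can be read as a simple loop: the HA daemons (HADs) elect a leader, the leader runs the Negotiator and announces "I'm alive", and when that announcement stops, the remaining HADs hold a new election. The following is a minimal sketch of that reading, not the actual HAD implementation. The `Had` class, the function names, and the "lowest host id wins" election rule are all assumptions made for illustration; the slides do not say how the winner is chosen.

```python
from dataclasses import dataclass


@dataclass
class Had:
    """One hypothetical HA daemon running on a central-manager host."""
    host_id: int
    alive: bool = True
    is_leader: bool = False
    negotiator_running: bool = False


def elect_leader(hads):
    """Elect a leader among live daemons and start its Negotiator.

    Assumed rule (not from the slides): the lowest host_id wins.
    """
    for h in hads:
        # Clear old roles; a crashed host's Negotiator is gone anyway.
        h.is_leader = False
        h.negotiator_running = False
    live = [h for h in hads if h.alive]
    if not live:
        return None
    leader = min(live, key=lambda h: h.host_id)
    leader.is_leader = True
    leader.negotiator_running = True
    return leader


def heartbeat_round(hads):
    """One cycle: keep the leader if it still says "I'm alive",
    otherwise hold a new election among the surviving daemons."""
    leader = next((h for h in hads if h.is_leader and h.alive), None)
    if leader is None:
        leader = elect_leader(hads)
    return leader


if __name__ == "__main__":
    pool = [Had(1), Had(2), Had(3)]
    print(heartbeat_round(pool).host_id)  # initial election
    pool[0].alive = False                 # active CM crashes
    print(heartbeat_round(pool).host_id)  # failover to a backup
```

In this sketch exactly one Negotiator runs at a time, which mirrors the single "Active CM" shown in the diagrams; real deployments also have to handle network partitions, which the sketch ignores.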

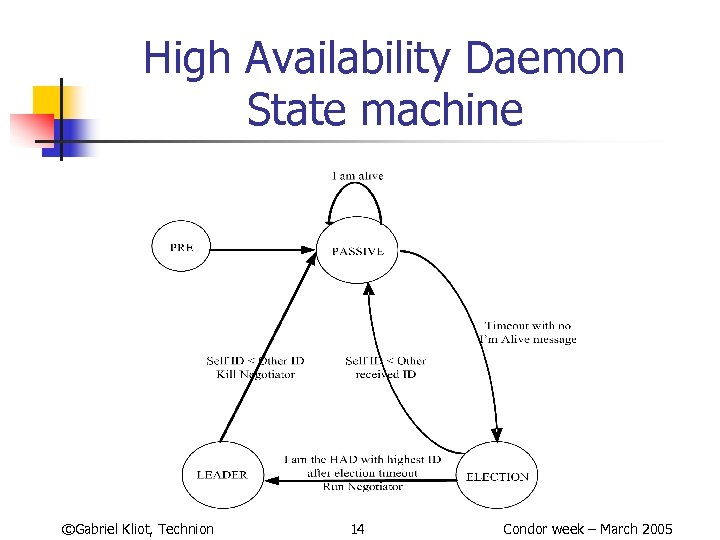

High Availability Daemon State machine

Performance impact
- Stabilization time: the time it takes for HA daemons to detect failure and fix it
  - Depends on number of CMs and network performance
  - HAD_CONNECT_TIMEOUT: time it takes to establish TCP connection (depends on network type, presence of encryption, etc.)
- Assuming it takes up to 2 seconds to establish TCP connection and 2 CMs are used, new Negotiator is up and running after 48 seconds
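The slide gives one data point (2 CMs, a 2-second connect timeout, 48 seconds) but not the formula behind it. A small sketch of one relation that reproduces it is shown below: stabilization time as a constant factor times the number of CMs times the connect timeout. The factor of 12 and the function name are assumptions chosen so that the slide's example comes out; the slide itself does not state them.

```python
def stabilization_time(num_central_managers, connect_timeout_s, factor=12):
    """Estimate seconds until a new Negotiator is running after a failure.

    Assumption (not stated on the slide): the time scales linearly with
    the number of central managers and with HAD_CONNECT_TIMEOUT, with a
    constant factor of 12 that matches the slide's 48-second example.
    """
    if num_central_managers < 1:
        raise ValueError("need at least one central manager")
    if connect_timeout_s <= 0:
        raise ValueError("connect timeout must be positive")
    return factor * num_central_managers * connect_timeout_s


if __name__ == "__main__":
    print(stabilization_time(2, 2))  # prints 48, the slide's example
```

Under this assumed relation, adding a third CM with the same timeout would raise the estimate to 72 seconds, which illustrates the slide's point that stabilization time depends on the number of CMs.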

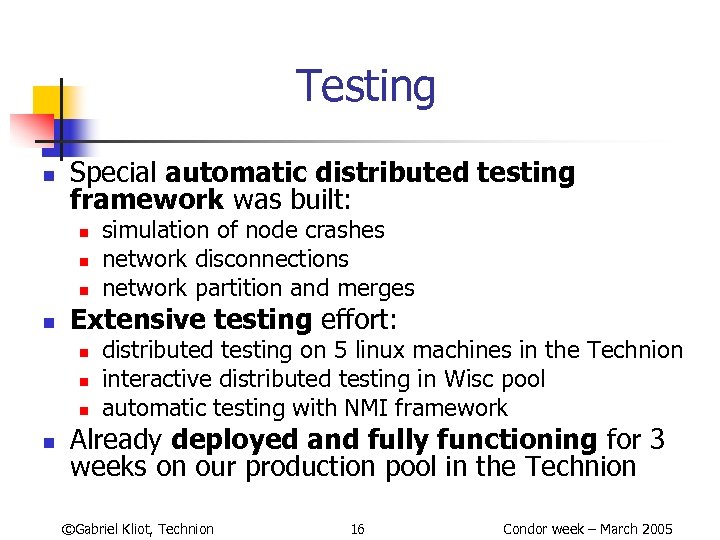

Testing
- Special automatic distributed testing framework was built:
  - simulation of node crashes
  - network disconnections
  - network partition and merges
- Extensive testing effort:
  - distributed testing on 5 Linux machines in the Technion
  - interactive distributed testing in Wisc pool
  - automatic testing with NMI framework
- Already deployed and fully functioning for 3 weeks on our production pool in the Technion

Future development
- HAD publishing in Collectors
  - condor_status -had
- Accounting file replication
  - current solution is provided for NFS
- Software High Availability

Collaboration with Condor team
- Compliance with high Condor coding standards
- Peer-reviewed code
- Integration with NMI framework
- Automation of testing
- Open-minded attitude of Condor team to numerous requests and questions
- Unique experience of working with a large peer-managed group of talented programmers

Collaboration with Condor team
This work was a collaborative effort of:
- Distributed Systems Laboratory in Technion
  - Prof Assaf Schuster, Mark Silberstein, Gabi Kliot, Svetlana Kantorovitch, Dedi Carmeli, Artiom Sharov
- Condor team
  - Prof Miron Livny, Nick, Todd, Derek, Erik, Carey, Peter, Becky, Parag, Zack, Dan

You should definitely try it!
- Part of the official 6.7.6 development release
- Full support by the Technion team
- More information:
  - http://dsl.cs.technion.ac.il/projects/gozal/project_pages/ha/ha.html
  - more details + configuration in my tutorial tomorrow
- Contact:
  - gabik@cs.technion.ac.il
  - condor-users@cs.wisc.edu

In case of time

How it works – basic scenario

How it works – crash event

Usability and administration
- Configuration sanity check Perl script
- Disable HAD Perl script
- Detailed manual section
- Full support by Technion team
