72727da0e3952782626b05a4a62538c3.ppt

- Number of slides: 57

Adaptive Information Integration. Subbarao Kambhampati, http://rakaposhi.eas.asu.edu/i3. Thanks to Zaiqing Nie, Ullas Nambiar & Thomas Hernandez. Talk at USC/Information Sciences Institute; November 5th, 2004.

Yochan Research Group

- Plan-Yochan: Automated Planning
  - Temporal planning
    - Multi-objective optimization
    - Partial satisfaction planning
  - Conditional/Conformant/Stochastic planning
    - Heuristics using labeled planning graphs
  - OR approaches to planning
  - Applications to
    - Autonomic computing, Web service composition, Workflows
- Db-Yochan: Information Integration
  - Adaptive Information Integration
    - Learning source profiles
    - Learning user interests
  - Applications to
    - Bio-informatics
    - Anthropological sources
  - Service and Sensor Integration

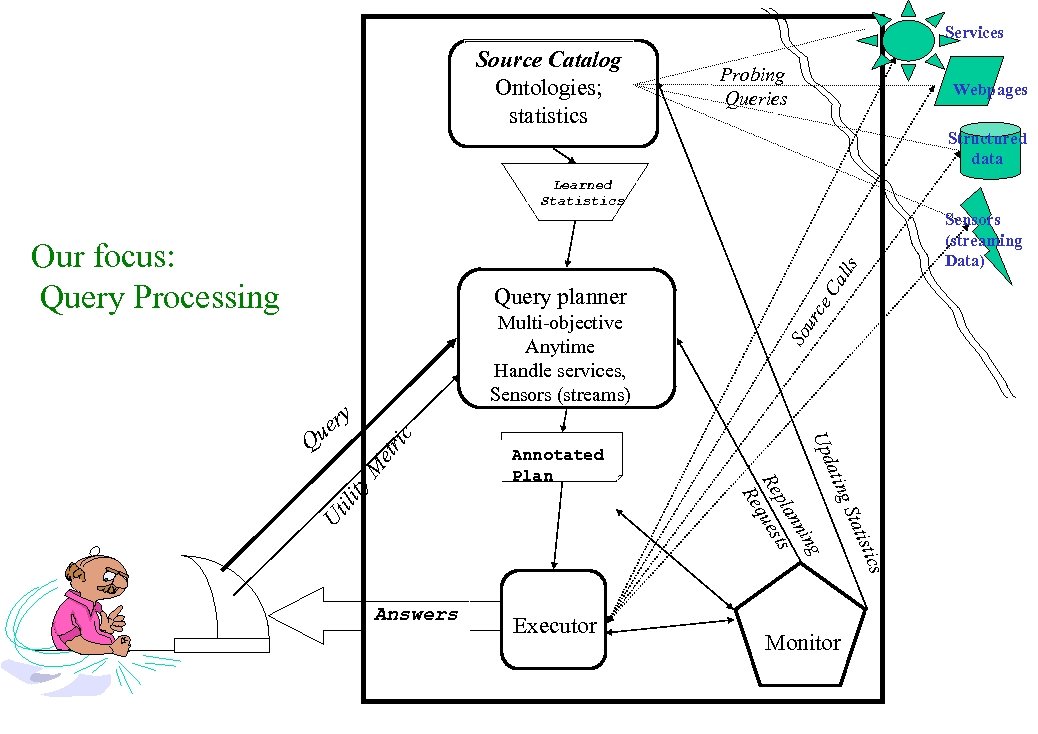

[Figure: system architecture. Data sources: services, webpages, structured data, sensors (streaming data). Components: Source Catalog (ontologies; statistics), Monitor, Executor, and a Query Planner that is multi-objective and anytime and handles services and sensors (streams). Arrow labels: probing queries, learned statistics, updating statistics, utility metric, query, annotated plan, replanning requests, source calls, answers. Our focus: query processing.]

Adaptive Information Integration

- Query processing in information integration needs to be adaptive to:
  - Source characteristics
    - How is the data spread among the sources?
  - User needs
    - Multi-objective queries (trade off coverage for cost)
    - Imprecise queries
- To be adaptive, we need profiles (meta-data) about sources as well as users
  - Challenge: profiles are not going to be provided
    - Autonomous sources may not export meta-data about data spread!
    - Lay users may not be able to articulate the source of their imprecision!

Need approaches that gather (learn) the meta-data they need.

Three contributions to Adaptive Information Integration

- BibFinder/StatMiner
  - Learns and uses source coverage and overlap statistics to support multi-objective query processing
  - [VLDB 2003; ICDE 2004; TKDE 2005]
- COSCO
  - Adapts the Coverage/Overlap statistics to text collection selection
- Imprecise query answering
  - Supports imprecise queries by automatically learning approximate structural relations among data tuples
  - [WebDB 2004; WWW 2004]

Although we focus on avoiding retrieval of duplicates, Coverage/Overlap statistics can also be used to look for duplicates.

Adaptive Integration of Heterogeneous PowerPoint Slides

- Different template “schemas”
- Different font styles
- Naïve “concatenation” approaches don’t work!

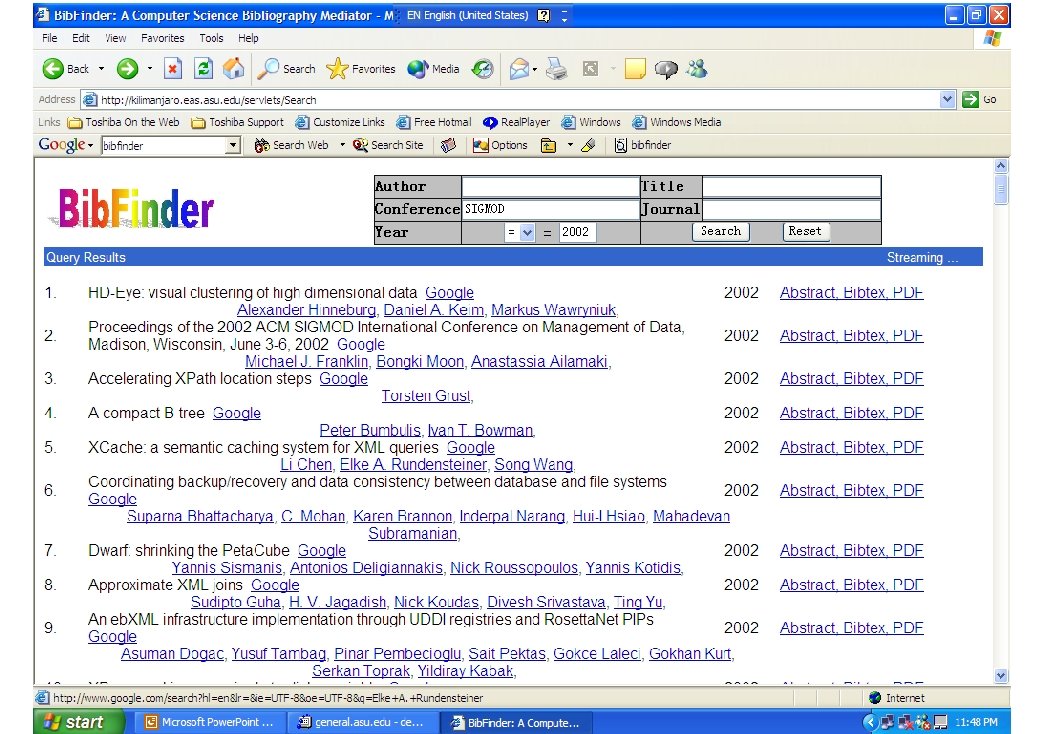

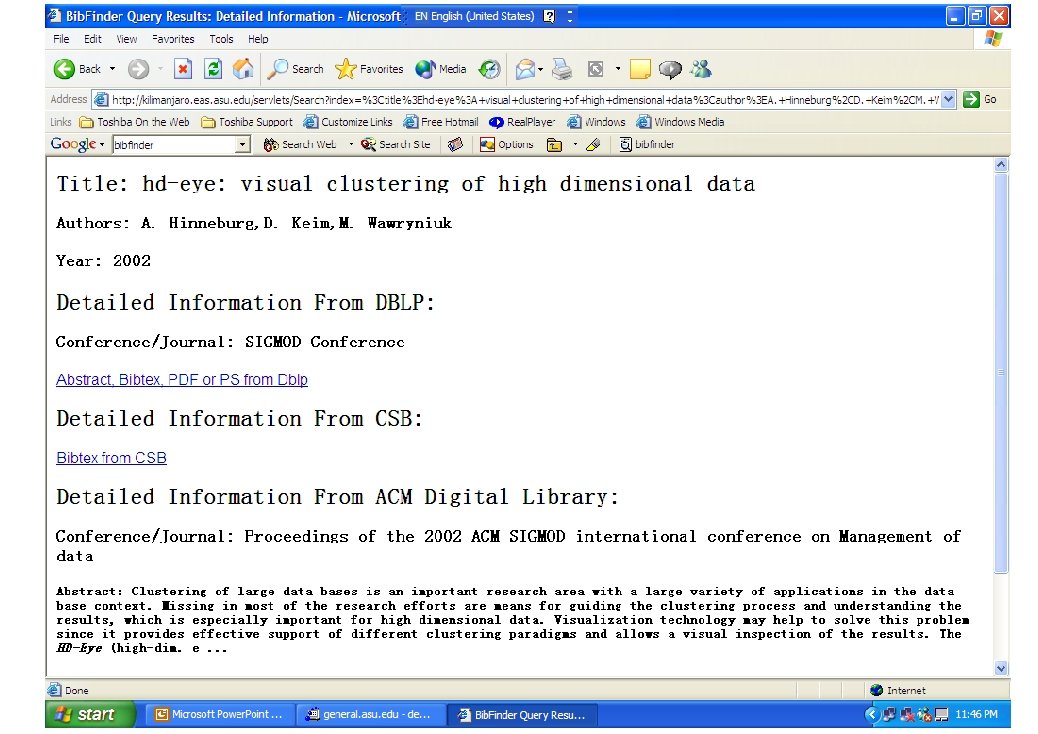

Part I: BibFinder

- BibFinder: a popular CS bibliographic mediator
  - Integrating 8 online sources: DBLP, ACM DL, ACM Guide, IEEE Xplore, ScienceDirect, Network Bibliography, CSB, CiteSeer
  - More than 58000 real user queries collected
- Mediated schema relation in BibFinder: paper(title, author, conference/journal, year); primary key: title+author+year
- Focus on selection queries: Q(title, author, year) :- paper(title, author, conference/journal, year), conference=SIGMOD

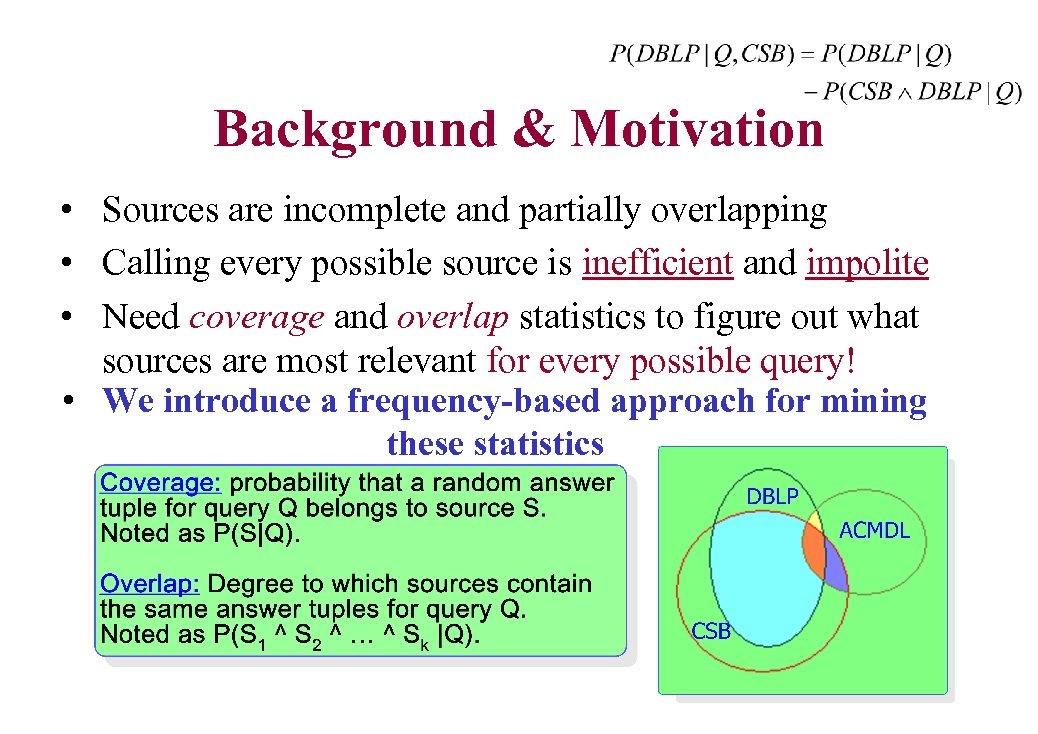

Background & Motivation • Sources are incomplete and partially overlapping • Calling every possible source is inefficient and impolite • Need coverage and overlap statistics to figure out what sources are most relevant for every possible query! • We introduce a frequency-based approach for mining these statistics

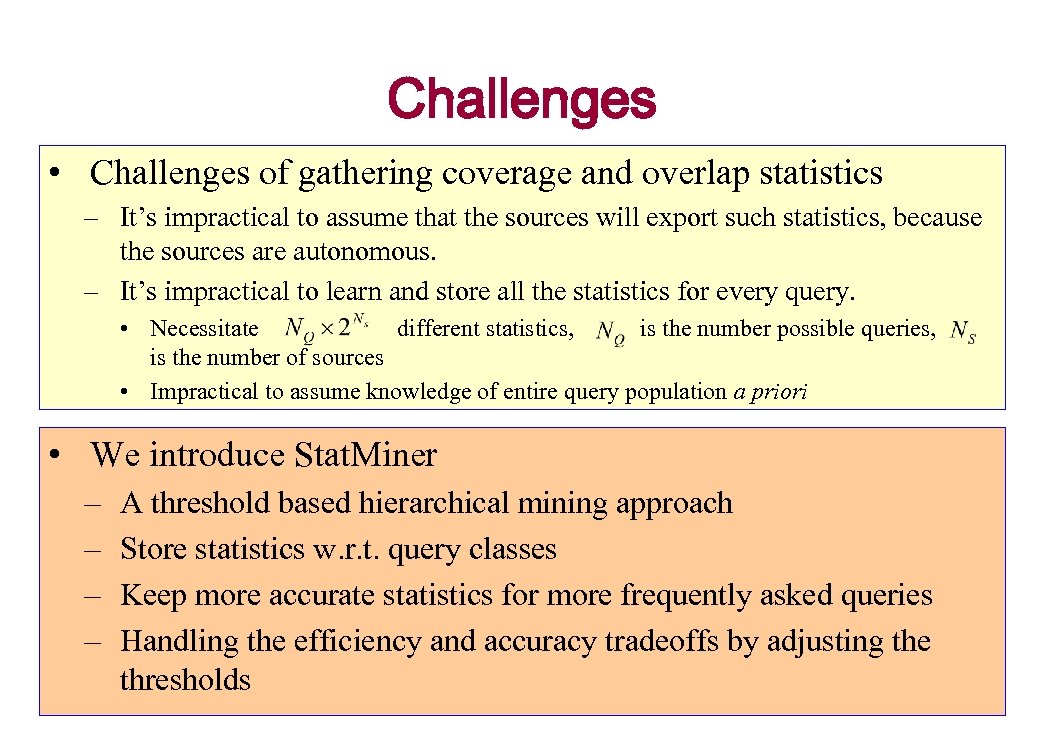

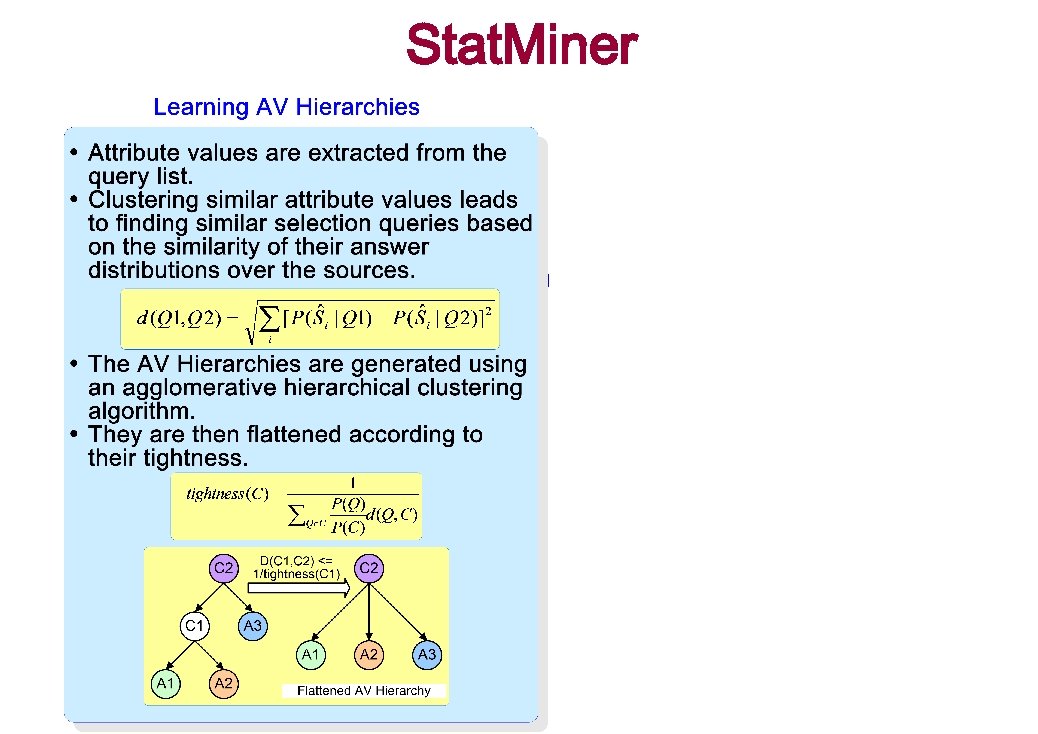

Challenges

- Challenges of gathering coverage and overlap statistics
  - It’s impractical to assume that the sources will export such statistics, because the sources are autonomous.
  - It’s impractical to learn and store all the statistics for every query.
    - The number of statistics needed grows with both the number of possible queries and the number of sources
    - Impractical to assume knowledge of the entire query population a priori
- We introduce StatMiner
  - A threshold-based hierarchical mining approach
  - Store statistics w.r.t. query classes
  - Keep more accurate statistics for more frequently asked queries
  - Handle the efficiency and accuracy tradeoffs by adjusting the thresholds

BibFinder/StatMiner

Query List & Raw Statistics

Given the query list, we can compute the raw statistics for each query: P(S1..Sk | q)
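As a rough illustration of what raw statistics for one query could look like, the sketch below estimates P(S1..Sk | q) as the fraction of all distinct answers to q that every source in the set returned. The function name `raw_statistics`, the input layout, and this particular reading of the probability are assumptions made for the example, not details taken from the slides.

```python
from itertools import combinations


def raw_statistics(results_by_source):
    """Estimate P(source set | q) for one query q.

    results_by_source maps a source name to the set of answer tuples that
    source returned for q (hypothetical input layout). The estimate for a
    source set is the share of all distinct answers found in every source
    of that set, so singletons give coverage and larger sets give overlap.
    """
    all_answers = set().union(*results_by_source.values())
    stats = {}
    if not all_answers:
        return stats
    names = sorted(results_by_source)
    for k in range(1, len(names) + 1):
        for subset in combinations(names, k):
            common = set.intersection(*(results_by_source[s] for s in subset))
            stats[subset] = len(common) / len(all_answers)
    return stats


if __name__ == "__main__":
    answers = {"DBLP": {1, 2, 3}, "CSB": {3, 4}}
    for source_set, p in raw_statistics(answers).items():
        print(source_set, p)
```

Enumerating every source subset is exponential in the number of sources, which is one way to see why storing all statistics for every query is called impractical in the Challenges slide.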

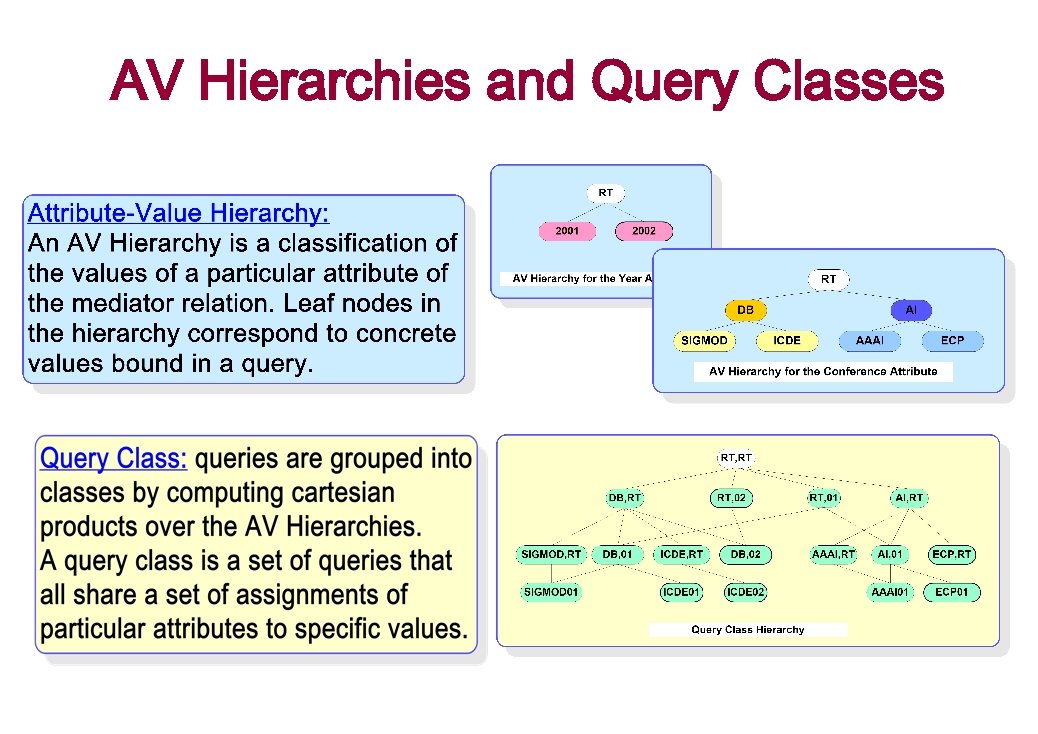

AV Hierarchies and Query Classes

StatMiner Raw Stats

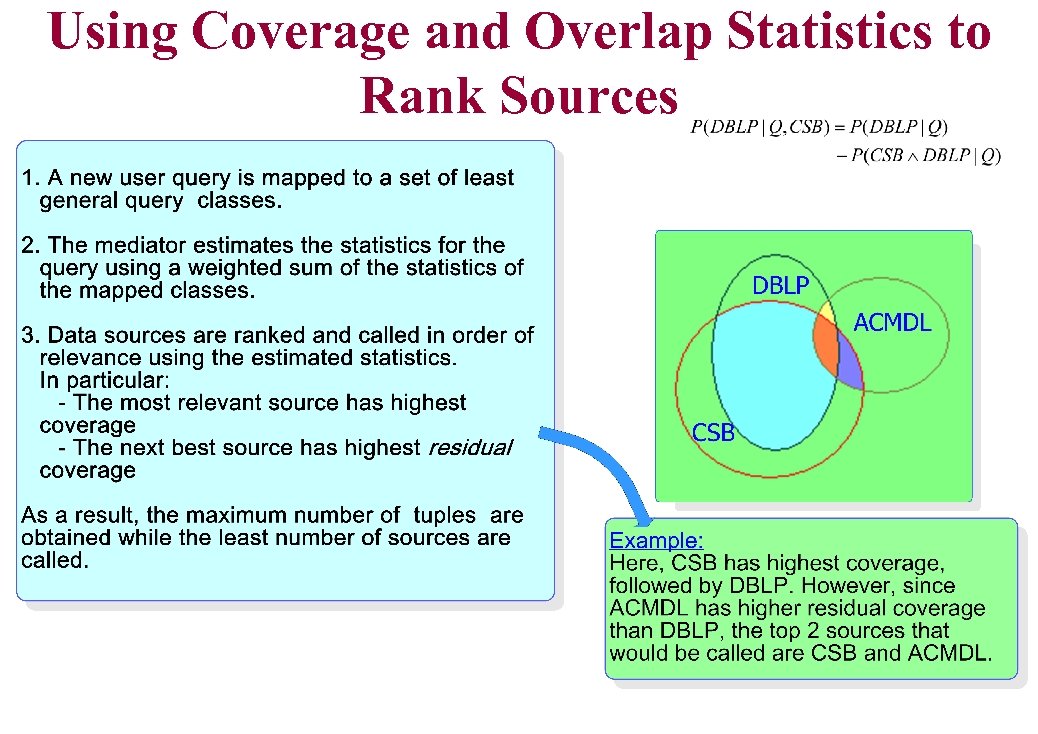

Using Coverage and Overlap Statistics to Rank Sources

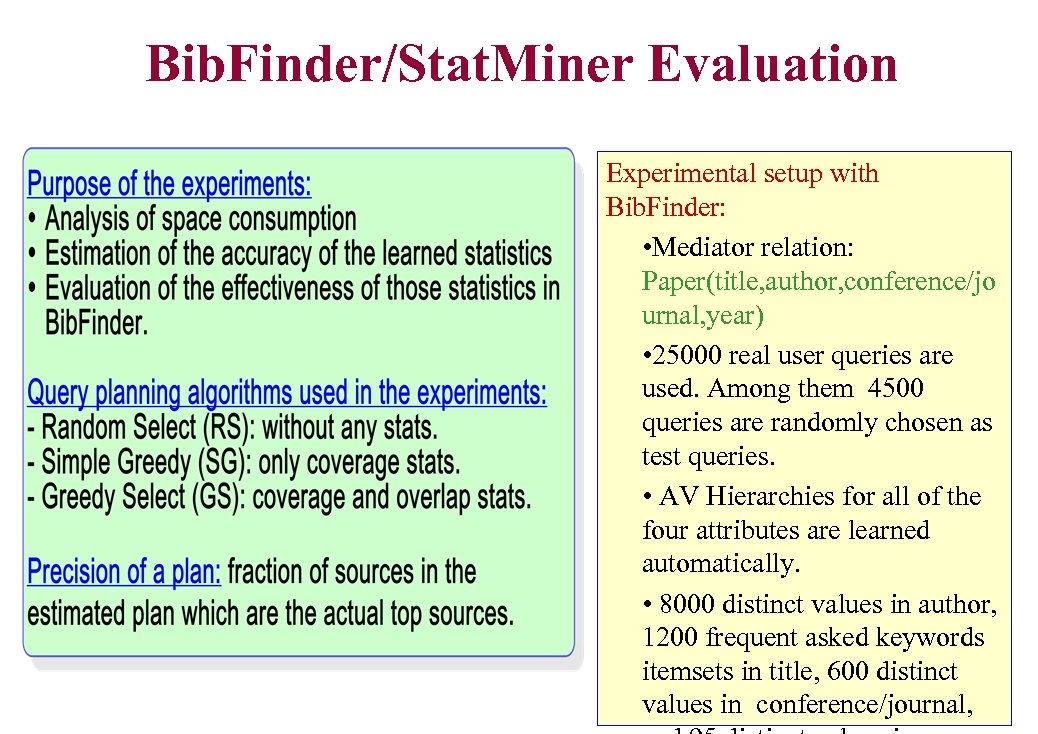

BibFinder/StatMiner Evaluation

Experimental setup with BibFinder:

- Mediator relation: Paper(title, author, conference/journal, year)
- 25000 real user queries are used. Among them, 4500 queries are randomly chosen as test queries.
- AV hierarchies for all four attributes are learned automatically.
- 8000 distinct values in author, 1200 frequently asked keyword itemsets in title, 600 distinct values in conference/journal,

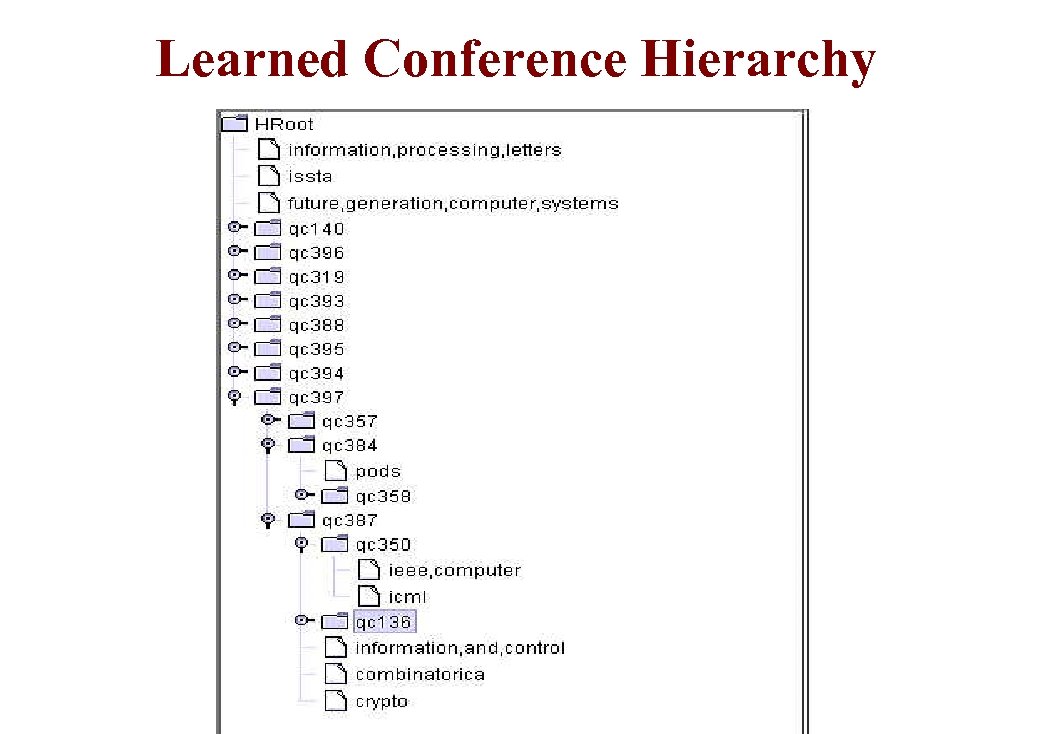

Learned Conference Hierarchy

Plan Precision: fraction of true top-K sources called

- Here we observe the average precision of the top-2 source plans.
- The plans using our learned statistics have high precision compared to random selection, and precision decreases very slowly as we change the minfreq and minoverlap thresholds.

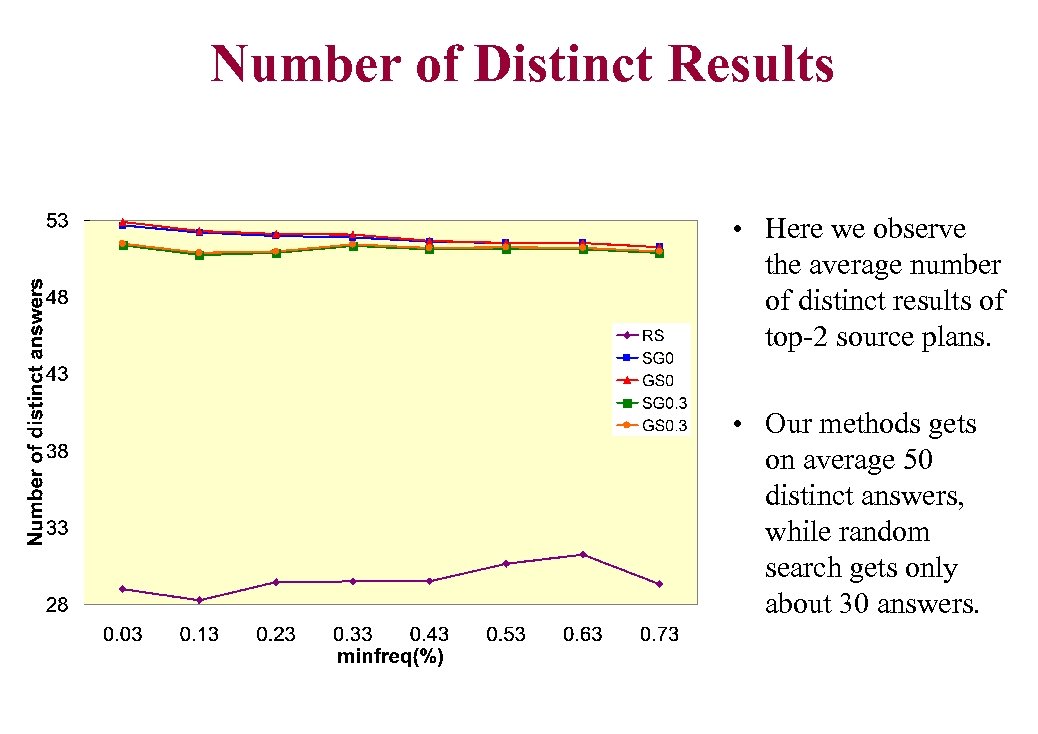

Number of Distinct Results

- Here we observe the average number of distinct results of top-2 source plans.
- Our method gets on average 50 distinct answers, while random search gets only about 30 answers.

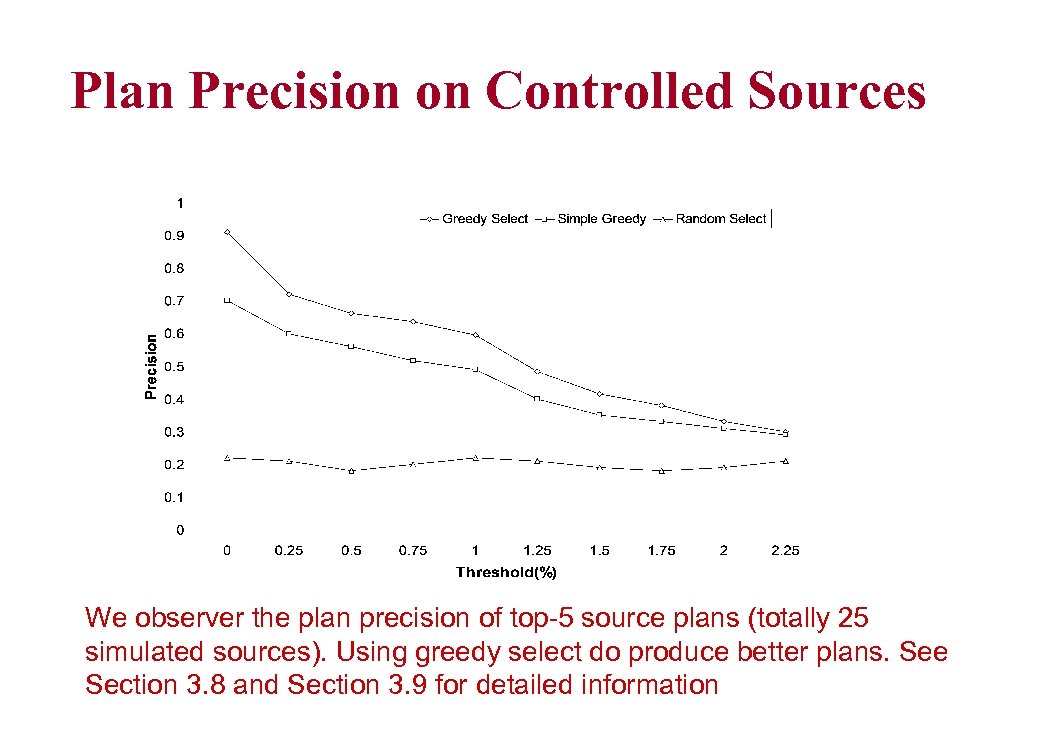

Plan Precision on Controlled Sources

We observe the plan precision of top-5 source plans (25 simulated sources in total). Greedy selection does produce better plans. See Section 3.8 and Section 3.9 for detailed information.

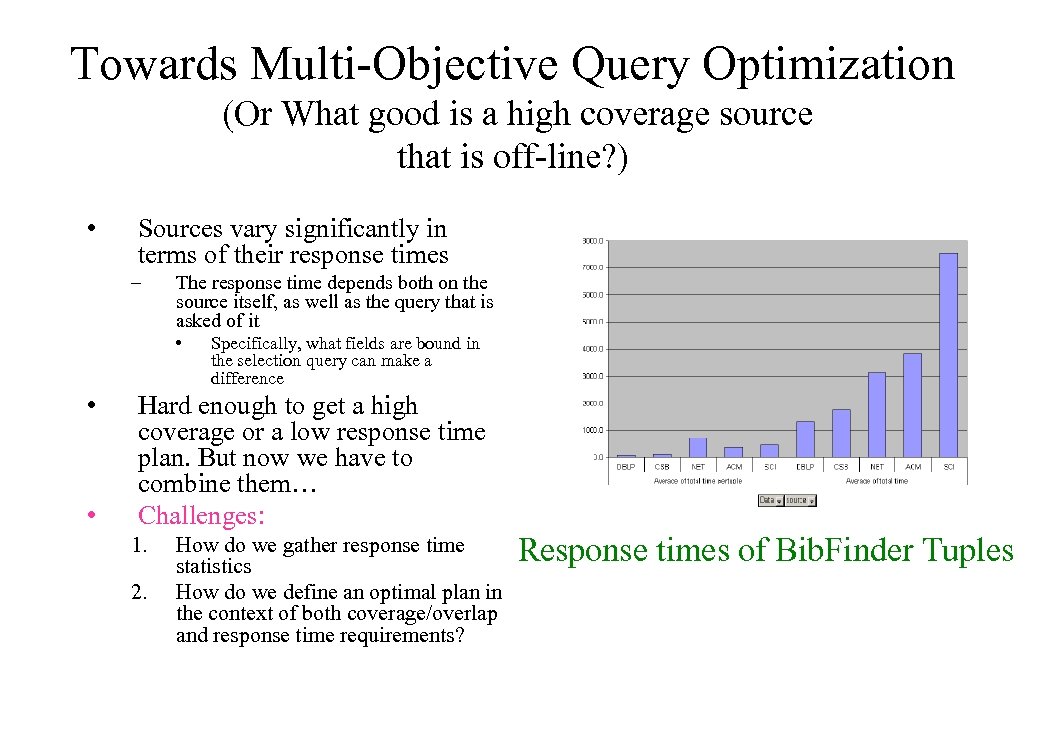

Towards Multi-Objective Query Optimization (Or: what good is a high-coverage source that is off-line?)

- Sources vary significantly in terms of their response times
  - The response time depends both on the source itself and on the query that is asked of it
    - Specifically, which fields are bound in the selection query can make a difference
- Hard enough to get a high coverage or a low response time plan. But now we have to combine them…
- Challenges:
  1. How do we gather response time statistics?
  2. How do we define an optimal plan in the context of both coverage/overlap and response time requirements?

[Figure: response times of BibFinder tuples]

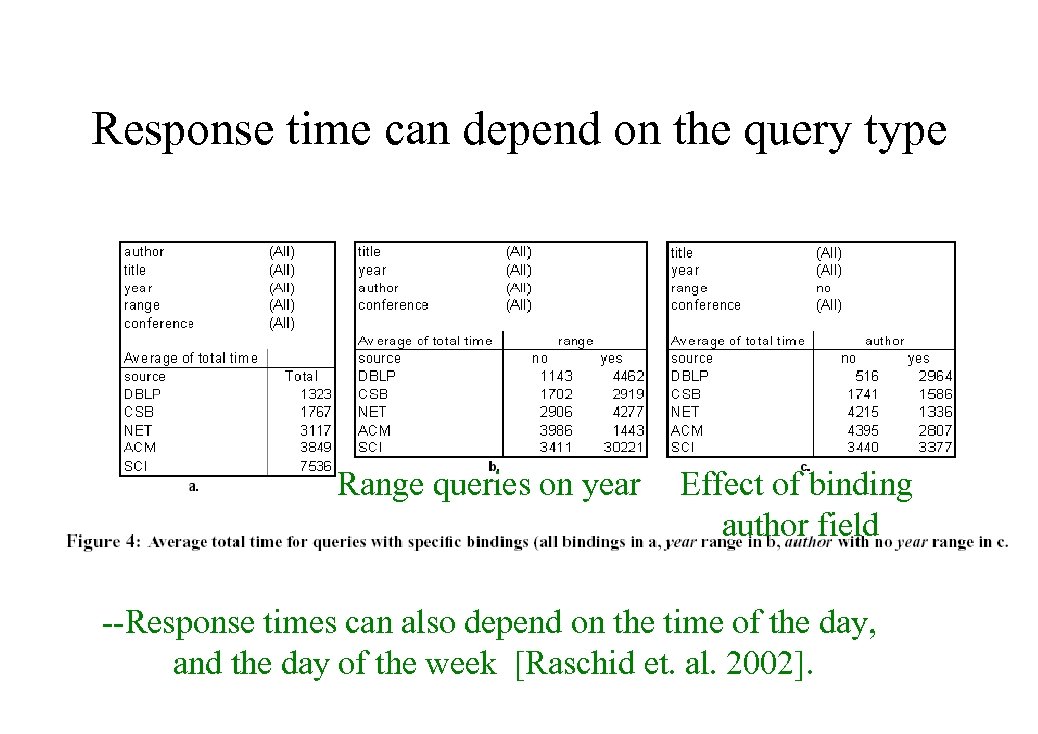

Response time can depend on the query type

[Figure panels: range queries on year; effect of binding the author field]

Response times can also depend on the time of the day and the day of the week [Raschid et al. 2002].

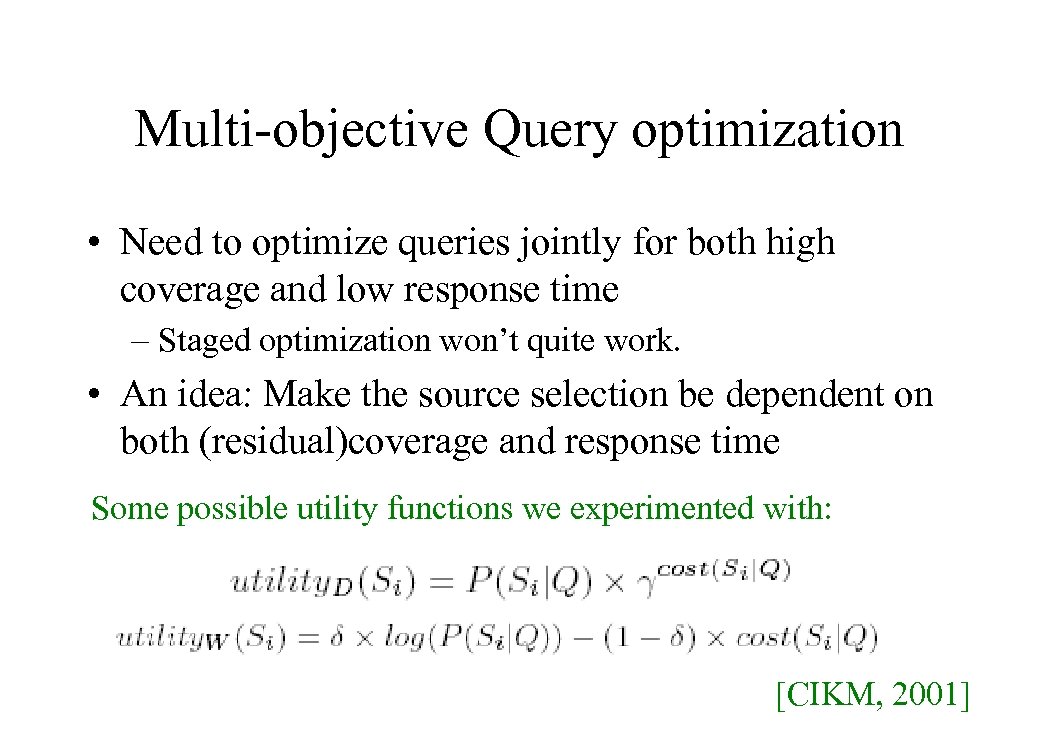

Multi-objective Query Optimization

- Need to optimize queries jointly for both high coverage and low response time
  - Staged optimization won’t quite work.
- An idea: make the source selection dependent on both (residual) coverage and response time

Some possible utility functions we experimented with: [CIKM, 2001]
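The utility functions themselves did not survive in this transcript, so the sketch below only illustrates the general idea of scoring a source on residual coverage and response time together. Both functional forms (a discount on coverage and a weighted difference), the parameter names `gamma` and `weight`, and the candidate record layout are assumptions for illustration, not the functions from the talk.

```python
def discounted_utility(residual_coverage, response_time, gamma=0.9):
    # Assumed form: coverage is discounted for each unit of response time.
    return residual_coverage * gamma ** response_time


def weighted_utility(residual_coverage, response_time, weight=0.5):
    # Assumed form: reward coverage, penalize response time. The two
    # quantities should be scaled to comparable ranges before use.
    return weight * residual_coverage - (1 - weight) * response_time


def pick_next_source(candidates, utility):
    """Return the candidate with the highest utility.

    candidates is a list of dicts with the hypothetical keys
    'name', 'residual_coverage' and 'response_time'.
    """
    return max(
        candidates,
        key=lambda c: utility(c["residual_coverage"], c["response_time"]),
    )


if __name__ == "__main__":
    sources = [
        {"name": "A", "residual_coverage": 0.6, "response_time": 10},
        {"name": "B", "residual_coverage": 0.5, "response_time": 1},
    ]
    print(pick_next_source(sources, discounted_utility)["name"])
```

With a discount of 1.0 the choice reduces to pure coverage, while smaller values favor faster sources. A single joint score of this kind avoids optimizing coverage and response time in separate stages.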

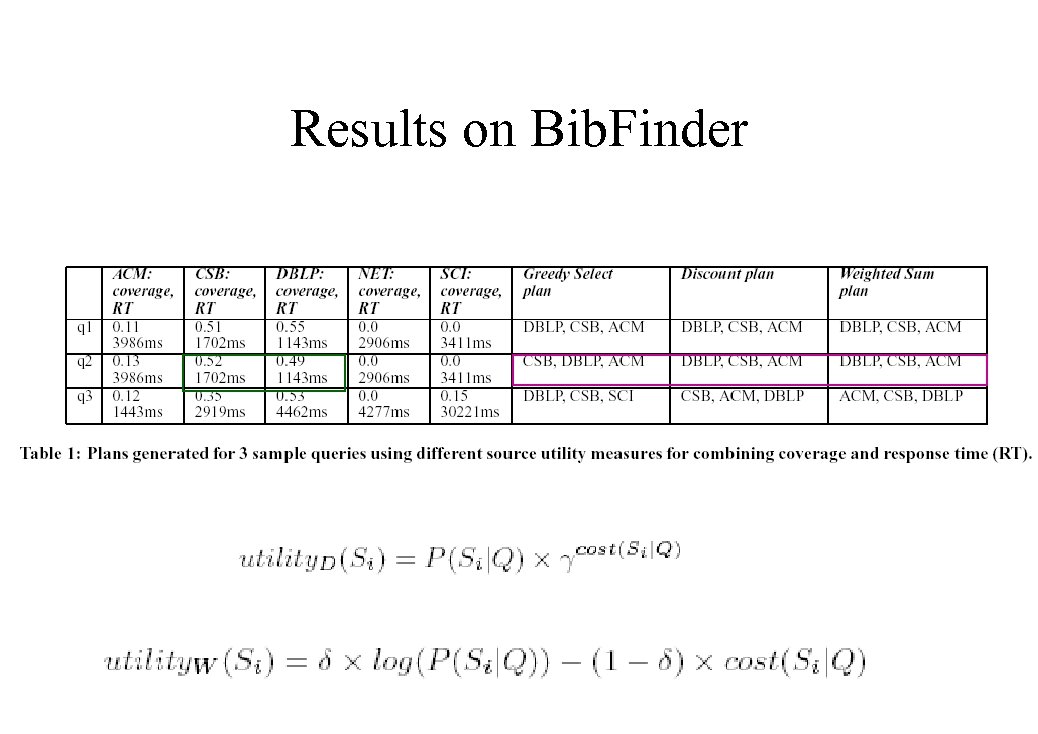

Results on BibFinder

Part II: Text Collection Selection with COSCO

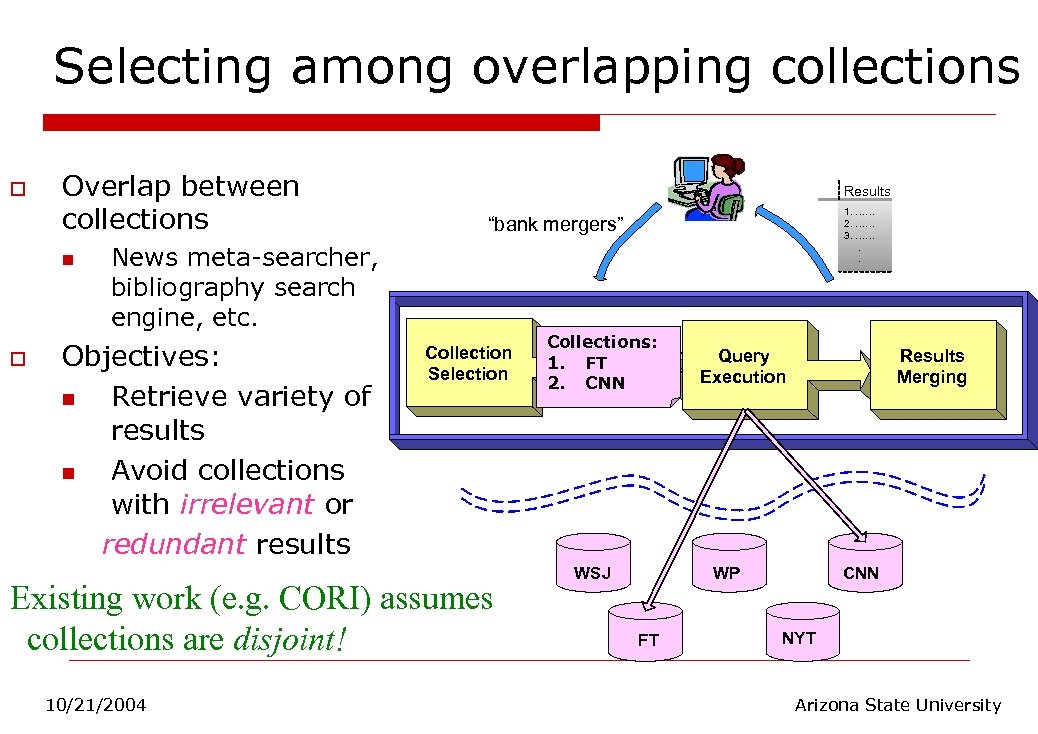

Selecting among overlapping collections

- Overlap between collections
  - News meta-searcher, bibliography search engine, etc.
- Objectives:
  - Retrieve variety of results
  - Avoid collections with irrelevant or redundant results
- Existing work (e.g. CORI) assumes collections are disjoint!

[Figure: the query “bank mergers” passes through Collection Selection (choosing among the collections FT, CNN, WSJ, WP, NYT), Query Execution, and Results Merging to produce a ranked result list.]

10/21/2004, Arizona State University

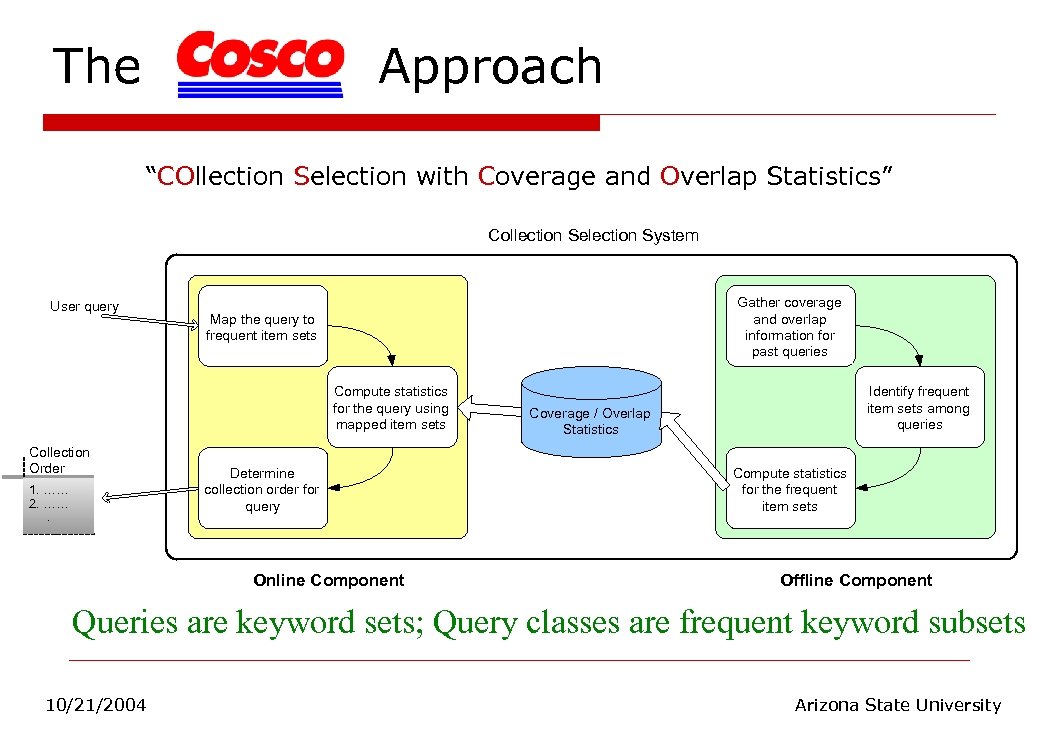

The Approach: “COllection Selection with Coverage and Overlap Statistics”

- Offline component
  - Gather coverage and overlap information for past queries
  - Identify frequent item sets among queries
  - Compute statistics for the frequent item sets (stored as coverage/overlap statistics)
- Online component
  - Map the user query to frequent item sets
  - Compute statistics for the query using mapped item sets
  - Determine collection order for the query

Queries are keyword sets; query classes are frequent keyword subsets.

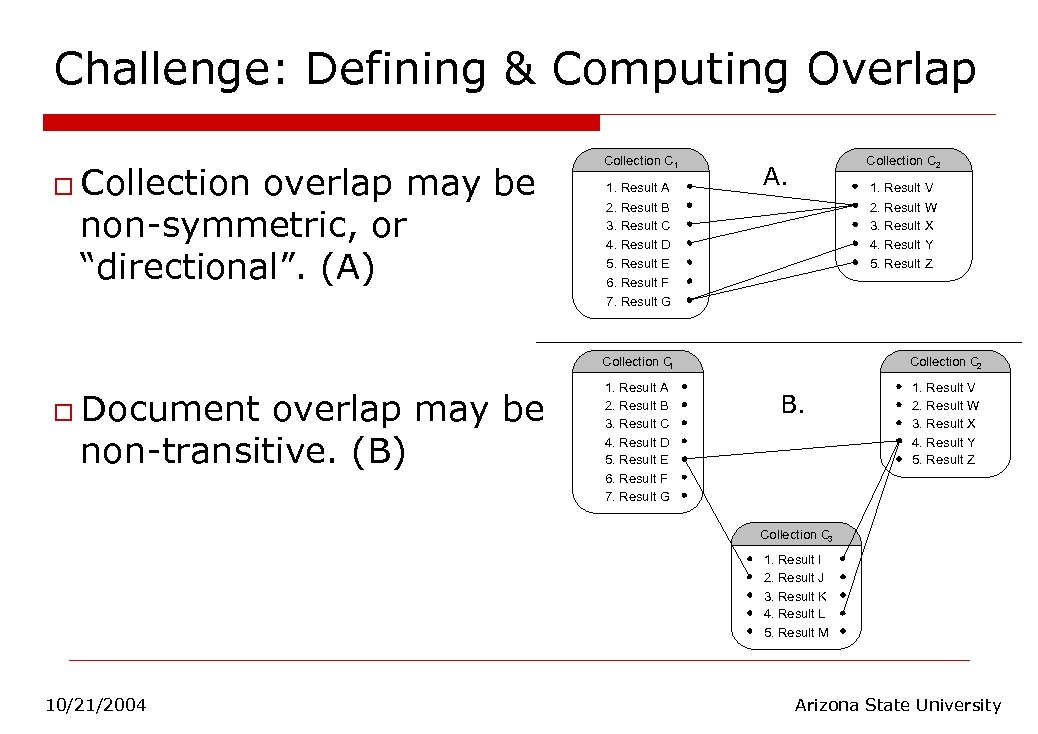

Challenge: Defining & Computing Overlap

- Collection overlap may be non-symmetric, or “directional”. (A)
- Document overlap may be non-transitive. (B)

[Figure: (A) ranked result lists of collections C1 (Results A to G) and C2 (Results V to Z); (B) ranked result lists of collections C1 (Results A to G), C2 (Results V to Z), and C3 (Results I to M).]

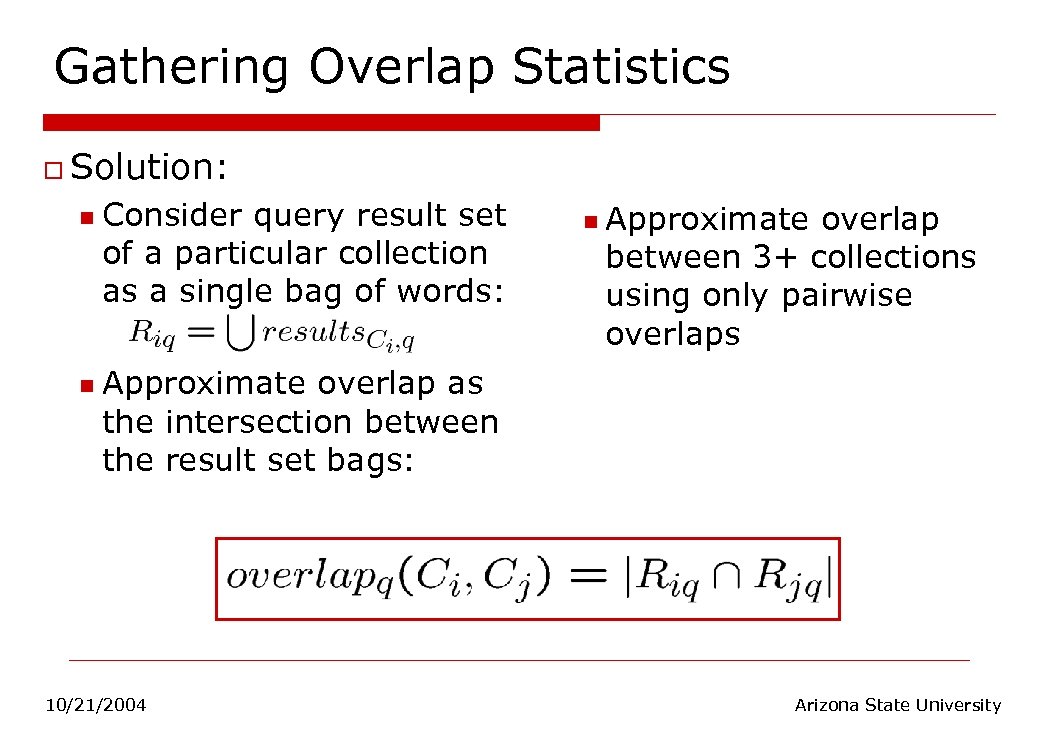

Gathering Overlap Statistics

- Solution:
  - Consider the query result set of a particular collection as a single bag of words
  - Approximate overlap as the intersection between the result set bags
  - Approximate overlap between 3+ collections using only pairwise overlaps
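A minimal sketch of the bag-of-words idea: each collection's results for a query are pooled into one word bag, and the overlap of two collections is the size of the bag intersection. Lowercasing, whitespace tokenization, and the helper names `result_bag` and `bag_overlap` are assumptions made for this example.

```python
from collections import Counter


def result_bag(documents):
    """Pool all result documents of one collection into a single word bag."""
    bag = Counter()
    for doc in documents:
        # Assumed tokenization: lowercase, split on whitespace.
        bag.update(doc.lower().split())
    return bag


def bag_overlap(bag_a, bag_b):
    """Size of the bag intersection (per-word minimum of the two counts)."""
    return sum((bag_a & bag_b).values())


if __name__ == "__main__":
    ft = result_bag(["bank mergers news", "bank rates"])
    cnn = result_bag(["bank mergers today"])
    print(bag_overlap(ft, cnn))
```

Working on pooled bags sidesteps document-level matching, where overlap may be non-transitive.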

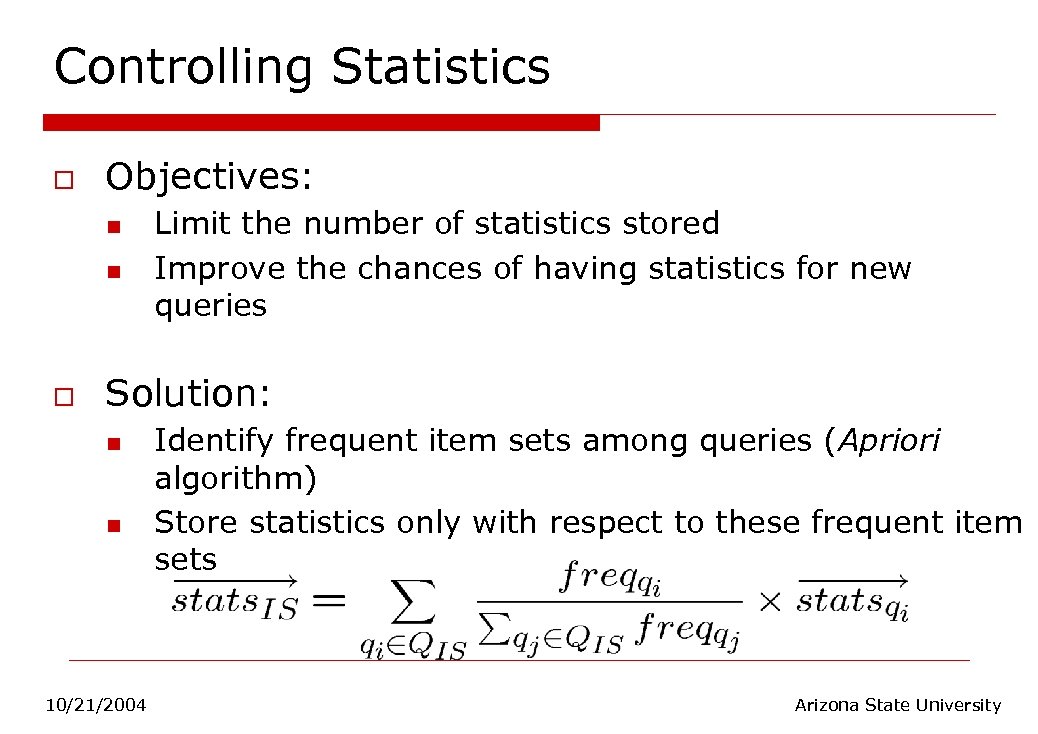

Controlling Statistics

- Objectives:
  - Limit the number of statistics stored
  - Improve the chances of having statistics for new queries
- Solution:
  - Identify frequent item sets among queries (Apriori algorithm)
  - Store statistics only with respect to these frequent item sets
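The frequent item set step can be sketched with a small level-wise Apriori pass over keyword queries. This is a generic textbook version written for illustration; the function name `frequent_item_sets` and the absolute `min_support` count are assumptions, and the system's actual implementation may differ.

```python
from itertools import combinations


def frequent_item_sets(queries, min_support):
    """Return {keyword set: count} for sets contained in >= min_support queries."""
    queries = [frozenset(q) for q in queries]
    candidates = {frozenset([kw]) for q in queries for kw in q}
    frequent = {}
    size = 1
    while candidates:
        # Count how many queries contain each candidate set.
        counts = {c: sum(1 for q in queries if c <= q) for c in candidates}
        level = {c: n for c, n in counts.items() if n >= min_support}
        frequent.update(level)
        size += 1
        # Join step: merge frequent sets into candidates one keyword larger.
        joined = {a | b for a in level for b in level if len(a | b) == size}
        # Prune step: keep a candidate only if all its subsets one keyword
        # smaller were themselves frequent.
        candidates = {
            c for c in joined
            if all(frozenset(s) in level for s in combinations(c, size - 1))
        }
    return frequent


if __name__ == "__main__":
    log = [
        ["neural", "network"],
        ["neural", "network", "learning"],
        ["network", "routing"],
        ["neural", "learning"],
    ]
    for item_set, count in frequent_item_sets(log, min_support=2).items():
        print(sorted(item_set), count)
```

Raising `min_support` shrinks the set of stored statistics, while lowering it makes it more likely that a new query maps to some stored item set.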

The Online Component

- Purpose: determine collection order for user query
  1. Map query to stored item sets
  2. Compute statistics for query
  3. Determine collection order

[Figure: the Collection Selection System diagram with the online component (map the query to frequent item sets, compute statistics for the query using mapped item sets, determine collection order for query) shown next to the offline component.]
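One way the final ordering step could work is a greedy pass that repeatedly picks the collection with the largest residual coverage, where residual coverage subtracts the pairwise overlap with collections already chosen. The slides do not spell out the ordering rule, so this greedy rule, the symmetric overlap table, and the function name `order_collections` are assumptions for illustration.

```python
def order_collections(coverage, pairwise_overlap):
    """Greedily order collections by estimated residual coverage.

    coverage maps a collection name to its estimated coverage for the query.
    pairwise_overlap maps frozenset({a, b}) to an estimated overlap; missing
    pairs count as 0. Treating overlap as symmetric is a simplification.
    """
    remaining = set(coverage)
    order = []
    while remaining:
        def residual(name):
            # Subtract overlap with every collection already in the plan.
            seen = sum(
                pairwise_overlap.get(frozenset((name, chosen)), 0.0)
                for chosen in order
            )
            return max(coverage[name] - seen, 0.0)

        # sorted() makes tie-breaking deterministic (alphabetical).
        best = max(sorted(remaining), key=residual)
        order.append(best)
        remaining.remove(best)
    return order


if __name__ == "__main__":
    cov = {"FT": 0.5, "CNN": 0.45, "WSJ": 0.3}
    ovl = {frozenset(("FT", "CNN")): 0.4, frozenset(("FT", "WSJ")): 0.05}
    print(order_collections(cov, ovl))
```

In this example CNN has the second-highest coverage but is called last, because most of what it returns has already been retrieved from FT.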

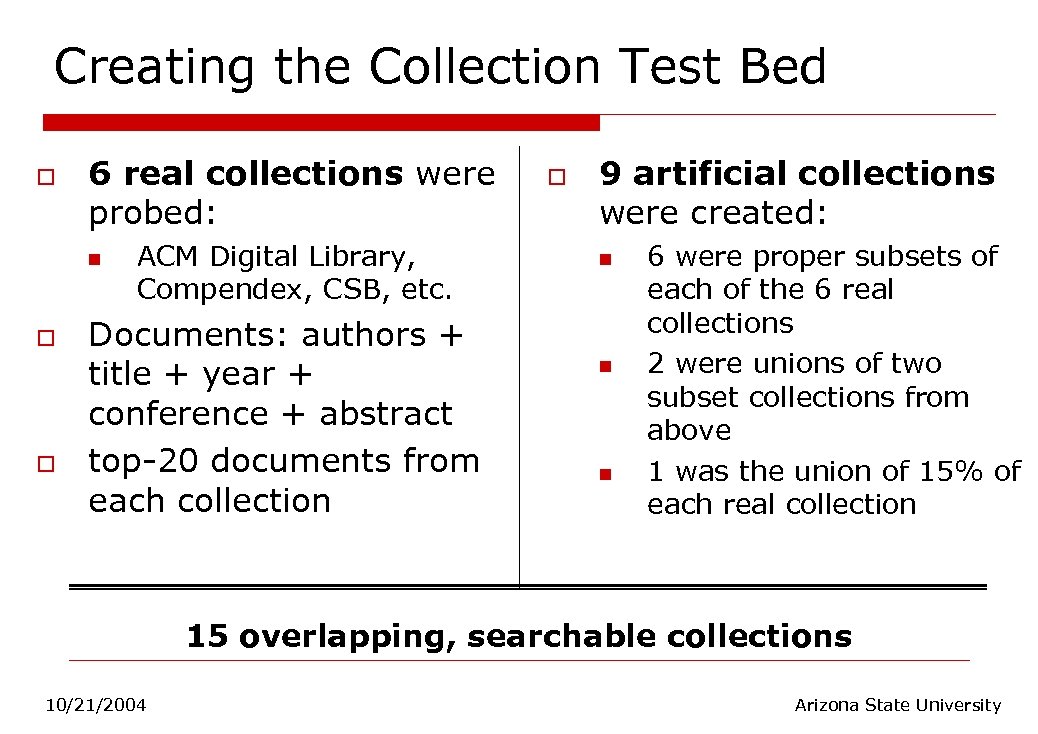

Creating the Collection Test Bed

- 6 real collections were probed:
  - ACM Digital Library, Compendex, CSB, etc.
  - Documents: authors + title + year + conference + abstract
  - Top-20 documents from each collection
- 9 artificial collections were created:
  - 6 were proper subsets of each of the 6 real collections
  - 2 were unions of two subset collections from above
  - 1 was the union of 15% of each real collection
- Result: 15 overlapping, searchable collections

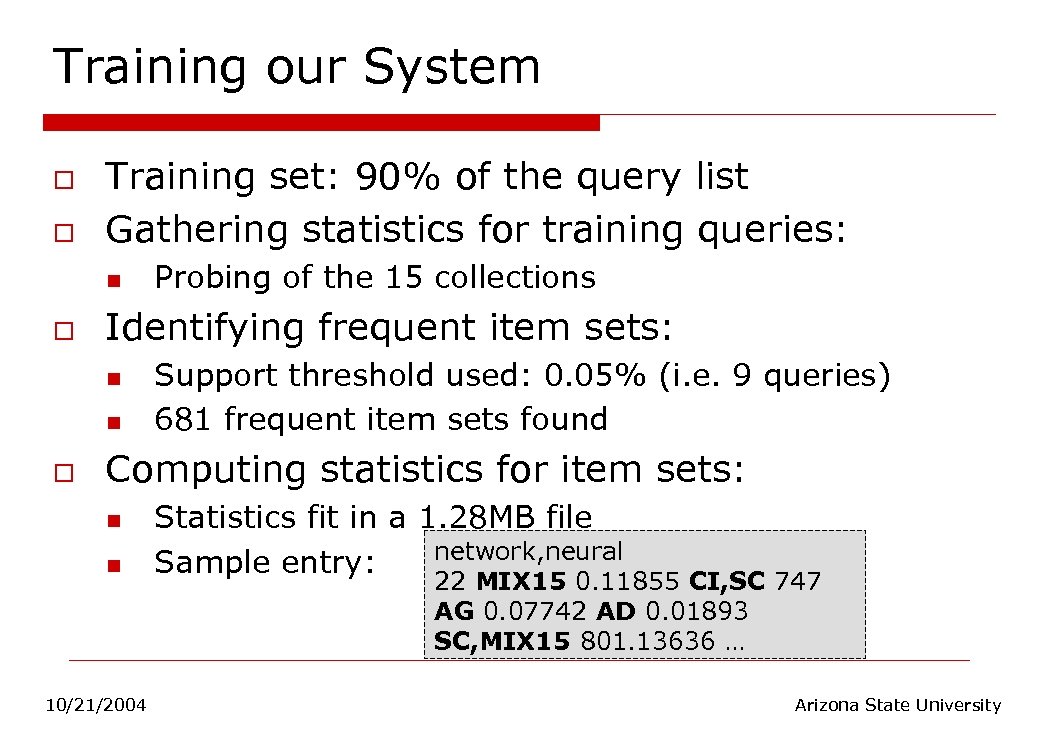

Training our System

- Training set: 90% of the query list
- Gathering statistics for training queries:
  - Probing of the 15 collections
- Identifying frequent item sets:
  - Support threshold used: 0.05% (i.e. 9 queries)
  - 681 frequent item sets found
- Computing statistics for item sets:
  - Statistics fit in a 1.28 MB file
  - Sample entry: network, neural 22 MIX15 0.11855 CI,SC 747 AG 0.07742 AD 0.01893 SC,MIX15 801.13636 …

Performance Evaluation

- Measuring number of new and duplicate results:
  - Duplicate result: has cosine similarity > 0.95 with at least one retrieved result
  - New result: has no duplicate
- Oracular approach:
  - Knows which collection has most new results
  - Retrieves large portion of new results early
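The duplicate test can be sketched as follows: a result counts as a duplicate when its cosine similarity with some earlier retrieved result exceeds 0.95. Raw term-frequency vectors and whitespace tokenization are assumptions made for this example; the slides do not say which document representation was used.

```python
import math
from collections import Counter


def cosine_similarity(text_a, text_b):
    """Cosine similarity of raw term-frequency vectors (assumed representation)."""
    a = Counter(text_a.lower().split())
    b = Counter(text_b.lower().split())
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def split_new_and_duplicate(results, threshold=0.95):
    """Walk results in retrieval order and label each as new or duplicate."""
    new, duplicates = [], []
    for result in results:
        retrieved_so_far = new + duplicates
        if any(cosine_similarity(result, r) > threshold for r in retrieved_so_far):
            duplicates.append(result)
        else:
            new.append(result)
    return new, duplicates


if __name__ == "__main__":
    stream = [
        "adaptive information integration",
        "Adaptive Information Integration",
        "query planning",
    ]
    print(split_new_and_duplicate(stream))
```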

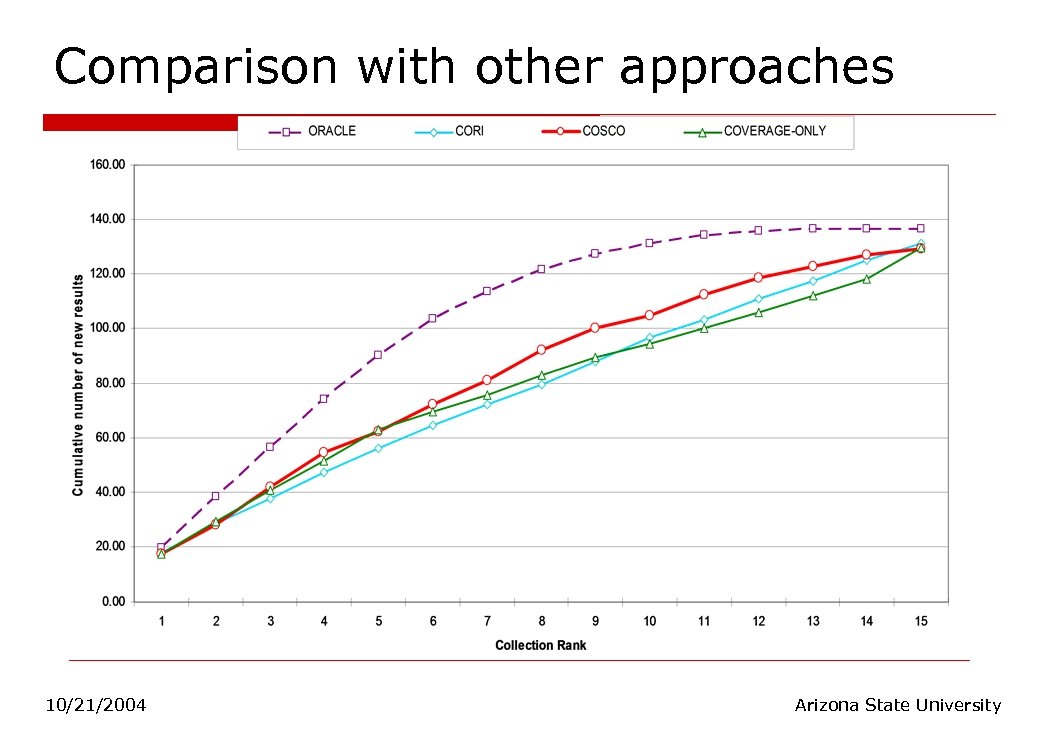

Comparison with other approaches

Comparison of COSCO against CORI

[Figure: two charts, one ranking collections using CORI and one ranking collections using Coverage and Overlap (COSCO). X axis: collection rank (1 to 15). Left axis: number of results, duplicates, and new results. Right axis: cumulative number of new results.]

- CORI: constant rate of change, as many new results as duplicates, more total results retrieved early
- COSCO: globally descending trend of new results, sharp difference between number of new results and duplicates, fewer total results first

Summary of Experimental Results

- COSCO…
  - displays Oracular-like behavior.
  - consistently outperforms CORI.
  - retrieves up to 30% more results than CORI when test queries reflect training queries.
  - can map at least 50% of queries to some item sets, even in worst-case training queries.
  - is a step towards Oracular-like performance, but there is still some room for improvement.


Part III: Answering Imprecise Queries [WebDB 2004; WWW 2004]

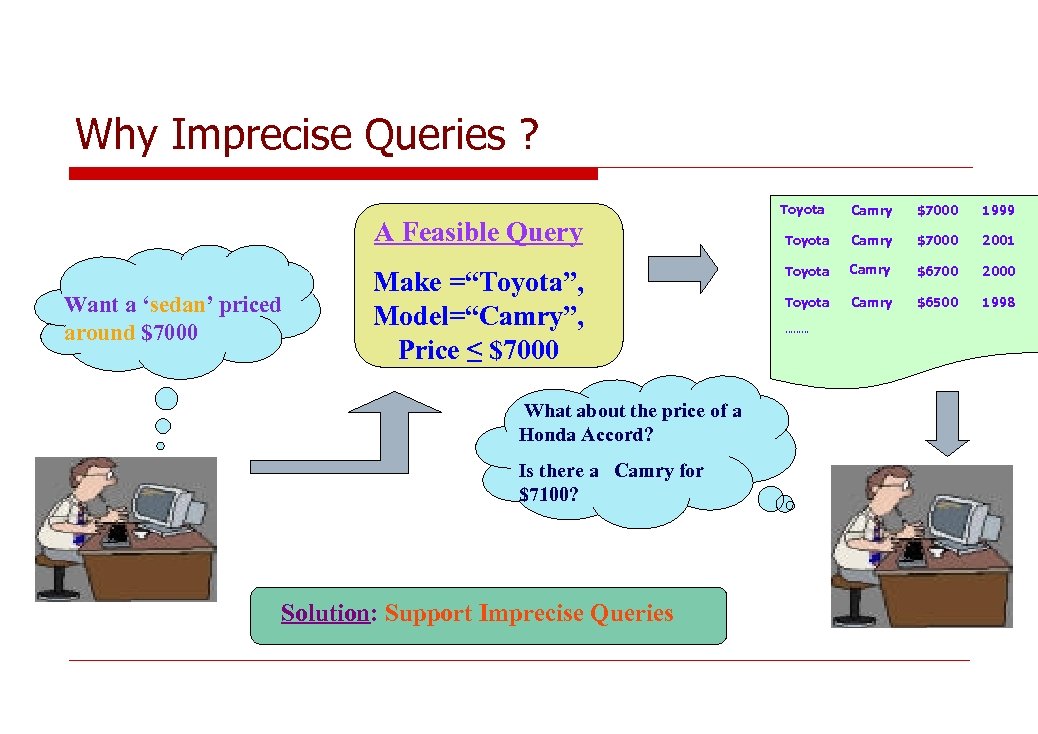

Why Imprecise Queries?

- Want a ‘sedan’ priced around $7000
- A feasible query: Make = “Toyota”, Model = “Camry”, Price ≤ $7000
- Results: Toyota Camry $7000 1999; Toyota Camry $7000 2001; Toyota Camry $6700 2000; Toyota Camry $6500 1998; …
- What about the price of a Honda Accord? Is there a Camry for $7100?
- Solution: support imprecise queries

Dichotomy in Query Processing

| Databases | IR Systems |
|---|---|
| User knows what she wants | User has an idea of what she wants |
| User query completely expresses the need | User query captures the need to some degree |
| Answers exactly matching query constraints | Answers ranked by degree of relevance |

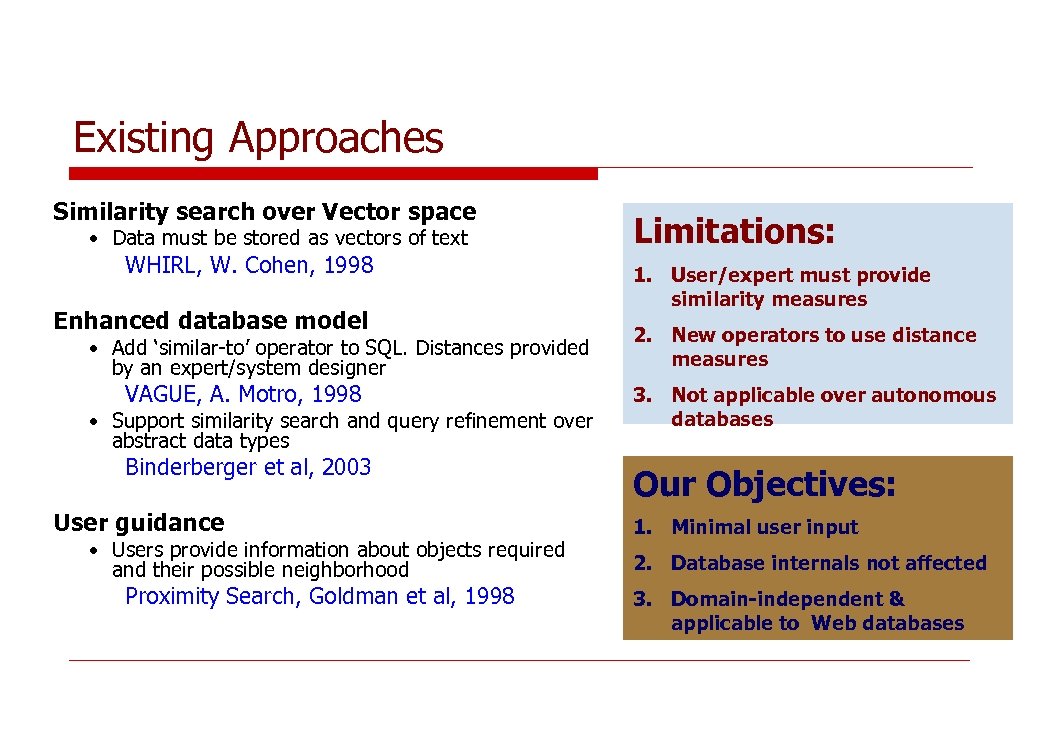

Existing Approaches

- Similarity search over vector space
  - Data must be stored as vectors of text (WHIRL, W. Cohen, 1998)
- Enhanced database model
  - Add ‘similar-to’ operator to SQL. Distances provided by an expert/system designer (VAGUE, A. Motro, 1988)
  - Support similarity search and query refinement over abstract data types (Binderberger et al, 2003)
- User guidance
  - Users provide information about objects required and their possible neighborhood (Proximity Search, Goldman et al, 1998)

Limitations:

1. User/expert must provide similarity measures
2. New operators to use distance measures
3. Not applicable over autonomous databases

Our Objectives:

1. Minimal user input
2. Database internals not affected
3. Domain-independent & applicable to Web databases

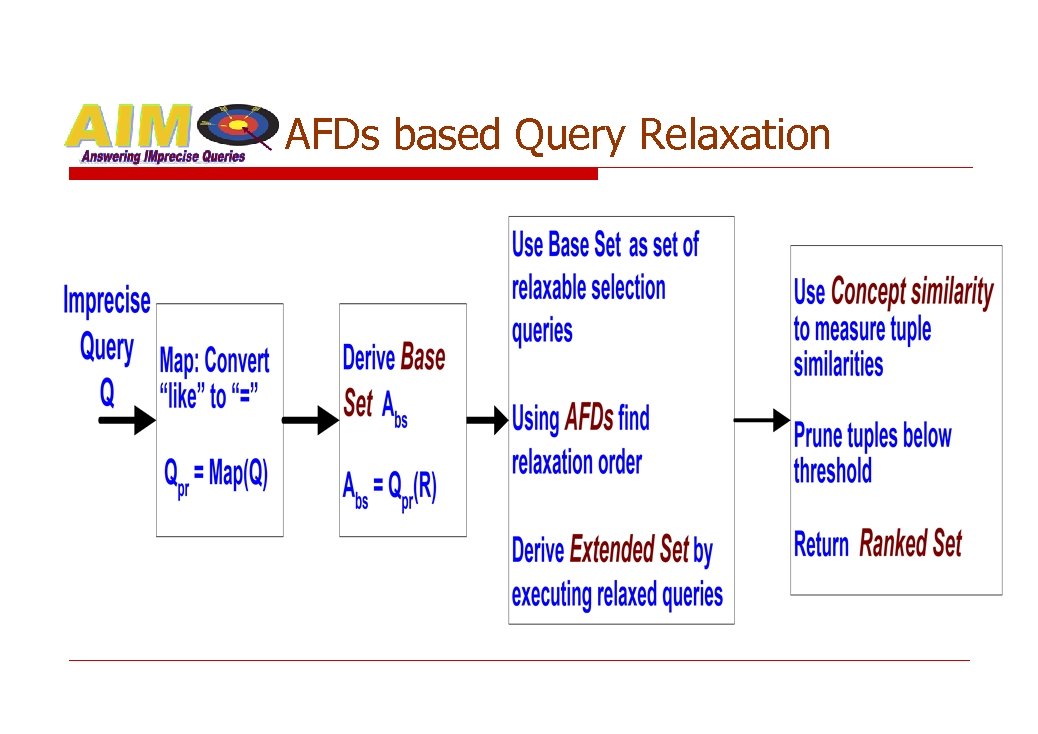

AFDs based Query Relaxation

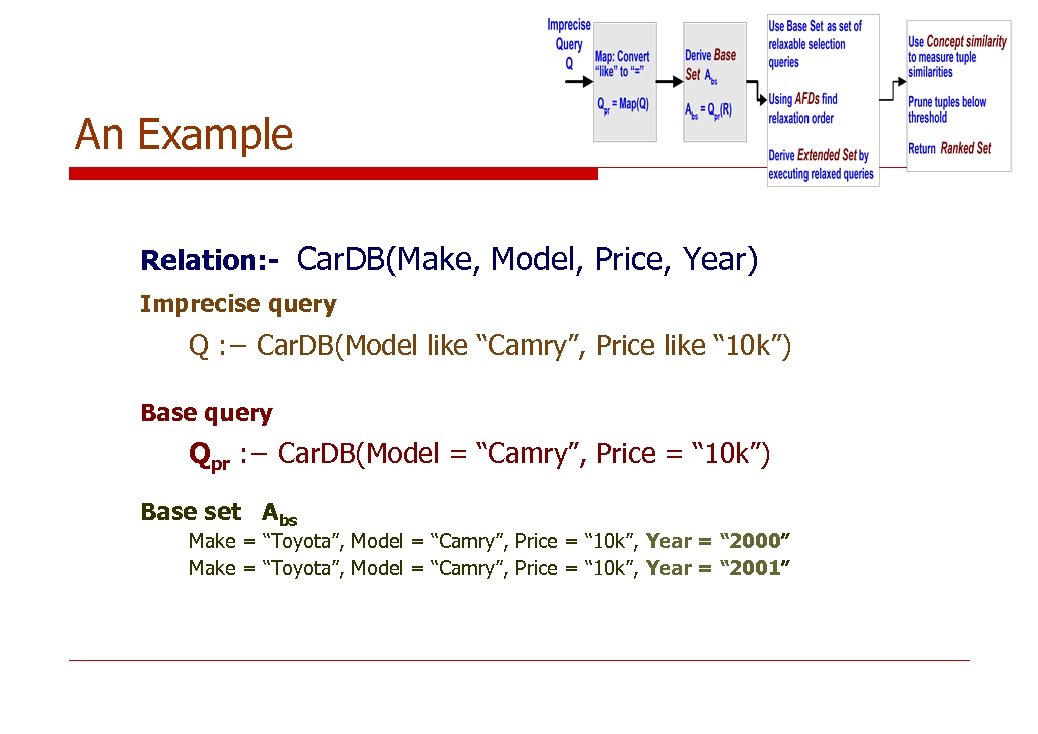

An Example

- Relation: CarDB(Make, Model, Price, Year)
- Imprecise query Q :− CarDB(Model like “Camry”, Price like “10k”)
- Base query Qpr :− CarDB(Model = “Camry”, Price = “10k”)
- Base set Abs:
  - Make = “Toyota”, Model = “Camry”, Price = “10k”, Year = “2000”
  - Make = “Toyota”, Model = “Camry”, Price = “10k”, Year = “2001”

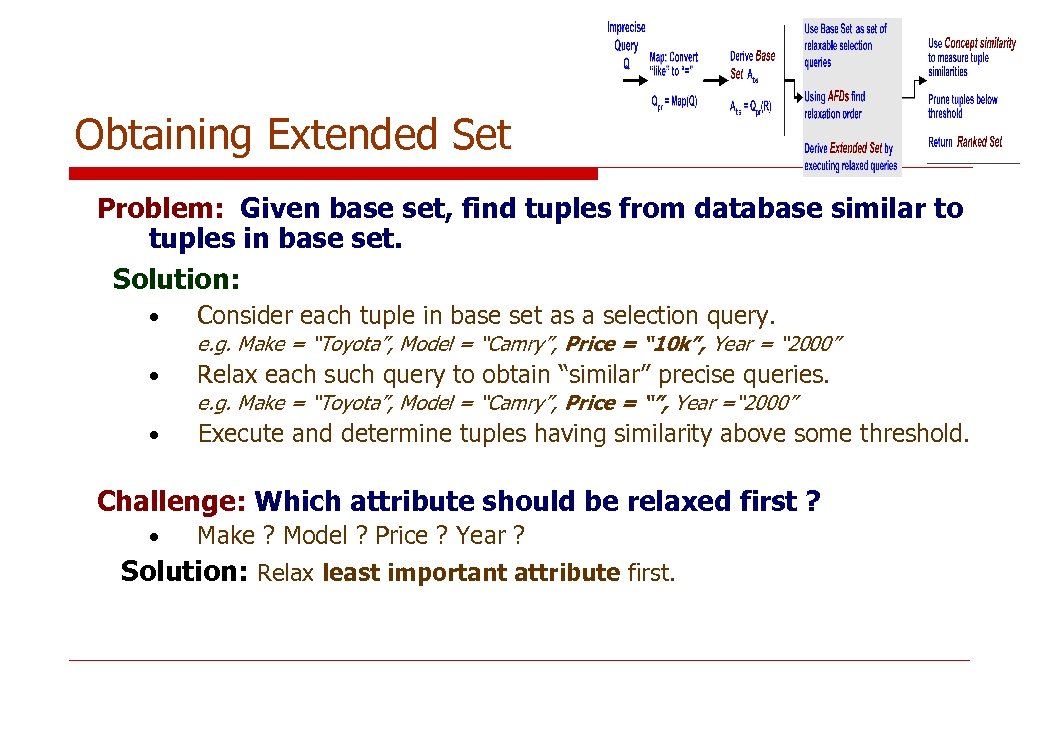

Obtaining Extended Set

Problem: Given base set, find tuples from database similar to tuples in base set.

Solution:

- Consider each tuple in base set as a selection query, e.g. Make = “Toyota”, Model = “Camry”, Price = “10k”, Year = “2000”
- Relax each such query to obtain “similar” precise queries, e.g. Make = “Toyota”, Model = “Camry”, Price = “”, Year = “2000”
- Execute and determine tuples having similarity above some threshold.

Challenge: Which attribute should be relaxed first? Make? Model? Price? Year?

Solution: Relax least important attribute first.

Least Important Attribute

Definition: An attribute whose binding value, when changed, has minimal effect on values binding other attributes.

- Does not decide values of other attributes
- Value may depend on other attributes

E.g. changing/relaxing Price will usually not affect other attributes, but changing Model usually affects Price.

Dependence between attributes is useful to decide relative importance:

- Approximate Functional Dependencies & Approximate Keys
  - Approximate in the sense that they are obeyed by a large percentage (but not all) of tuples in the database
- Can use TANE, an algorithm by Huhtala et al [1999]
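To make "obeyed by a large percentage (but not all) of tuples" concrete, the sketch below computes the fraction of rows that would have to be removed for a dependency X → A to hold exactly, an error measure commonly used for approximate functional dependencies. It is a brute-force illustration, not TANE; the function name `afd_error` and the dict-per-row layout are assumptions.

```python
from collections import Counter, defaultdict


def afd_error(rows, lhs, rhs):
    """Fraction of rows violating lhs -> rhs in the best case.

    rows is a list of dicts (hypothetical layout), lhs a list of attribute
    names, rhs a single attribute name. For each distinct lhs value we keep
    the rows carrying its most common rhs value and count the rest as
    violations. A small error means lhs approximately determines rhs.
    """
    if not rows:
        return 0.0
    groups = defaultdict(Counter)
    for row in rows:
        groups[tuple(row[a] for a in lhs)][row[rhs]] += 1
    kept = sum(max(counter.values()) for counter in groups.values())
    return 1 - kept / len(rows)


if __name__ == "__main__":
    cars = [
        {"Make": "Toyota", "Model": "Camry"},
        {"Make": "Toyota", "Model": "Camry"},
        {"Make": "Honda", "Model": "Accord"},
        {"Make": "Toyota", "Model": "Accord"},  # noisy row
    ]
    print(afd_error(cars, ["Model"], "Make"))
```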

Attribute Ordering

Given a relation R:

- Determine the AFDs and approximate keys
- Pick the key with highest support, say Kbest
- Partition attributes of R into
  - key attributes, i.e. belonging to Kbest
  - non-key attributes, i.e. not belonging to Kbest
- Sort the subsets using influence weights (defined over Ai ∈ A’ ⊆ R, j ≠ i & j = 1 to |Attributes(R)|)
- Attribute relaxation order is all non-keys first, then keys
- Multi-attribute relaxation: independence assumption

Example: CarDB(Make, Model, Year, Price)

- Key attributes: Make, Year
- Non-key: Model, Price
- Order: Price, Model, Year, Make
- 1-attribute: {Price, Model, Year, Make}
- 2-attribute: {(Price, Model), (Price, Year), (Price, Make), …}
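Given a relaxation order such as Price, Model, Year, Make, the 1-attribute and 2-attribute relaxations listed in the example can be enumerated mechanically. The helper names `relaxation_sequence` and `relax`, and the choice to model relaxation as dropping the attribute's constraint, are assumptions for this sketch.

```python
from itertools import combinations


def relaxation_sequence(attribute_order, max_relaxed):
    """Yield attribute groups to relax, single attributes first.

    attribute_order lists attributes from least to most important, so
    combinations() emits groups containing less important attributes first.
    """
    for k in range(1, max_relaxed + 1):
        for combo in combinations(attribute_order, k):
            yield combo


def relax(tuple_query, relaxed_attributes):
    """Drop the constraints on the relaxed attributes (assumed semantics)."""
    return {a: v for a, v in tuple_query.items() if a not in relaxed_attributes}


if __name__ == "__main__":
    order = ["Price", "Model", "Year", "Make"]
    base = {"Make": "Toyota", "Model": "Camry", "Price": "10k", "Year": "2000"}
    for group in relaxation_sequence(order, max_relaxed=2):
        print(group, relax(base, group))
```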

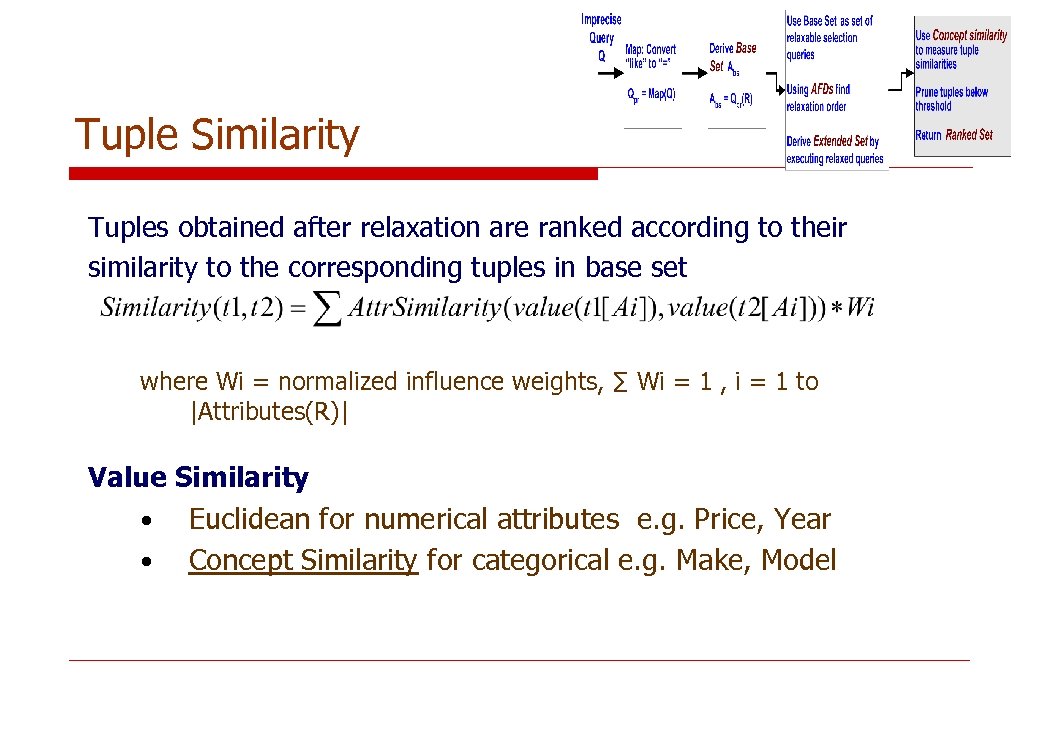

Tuple Similarity

Tuples obtained after relaxation are ranked according to their similarity to the corresponding tuples in the base set. The similarity is a weighted sum of attribute similarities, where Wi = normalized influence weights, ∑ Wi = 1, i = 1 to |Attributes(R)|.

Value similarity:

- Euclidean for numerical attributes, e.g. Price, Year
- Concept similarity for categorical attributes, e.g. Make, Model
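A small sketch of a weighted-sum tuple similarity. The numeric similarity used here (one minus the relative absolute difference) is an assumed stand-in for the distance-based measure named on the slide, and the lookup table for categorical values is a hypothetical placeholder for learned concept similarities.

```python
def numeric_similarity(a, b):
    # Assumed form: 1 minus the relative absolute difference, floored at 0.
    if a == b:
        return 1.0
    return max(0.0, 1.0 - abs(a - b) / max(abs(a), abs(b)))


def tuple_similarity(t1, t2, weights, categorical_similarity):
    """Weighted sum of per-attribute similarities.

    weights maps attribute name to its normalized weight (summing to 1).
    categorical_similarity maps frozenset({v1, v2}) to a value in [0, 1]
    (hypothetical table); unknown pairs count as 0, equal values as 1.
    """
    total = 0.0
    for attr, weight in weights.items():
        x, y = t1[attr], t2[attr]
        if isinstance(x, (int, float)) and isinstance(y, (int, float)):
            total += weight * numeric_similarity(x, y)
        elif x == y:
            total += weight
        else:
            total += weight * categorical_similarity.get(frozenset((x, y)), 0.0)
    return total


if __name__ == "__main__":
    w = {"Make": 0.2, "Model": 0.3, "Price": 0.5}
    sims = {
        frozenset(("Toyota", "Honda")): 0.25,
        frozenset(("Camry", "Accord")): 0.5,
    }
    camry = {"Make": "Toyota", "Model": "Camry", "Price": 10000}
    accord = {"Make": "Honda", "Model": "Accord", "Price": 9000}
    print(tuple_similarity(camry, accord, w, sims))
```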

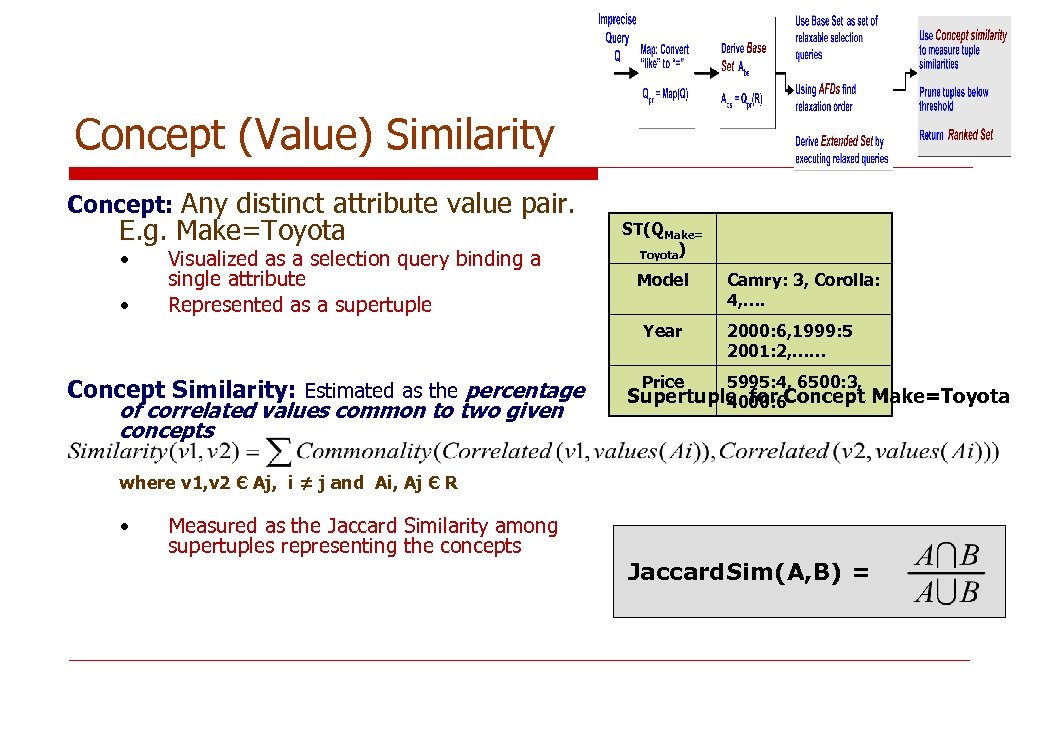

Concept (Value) Similarity

Concept: any distinct attribute-value pair, e.g. Make=Toyota

- Visualized as a selection query binding a single attribute
- Represented as a supertuple

Supertuple for concept Make=Toyota, ST(QMake=Toyota):

| Attribute | Values with counts |
|---|---|
| Model | Camry: 3, Corolla: 4, … |
| Year | 2000: 6, 1999: 5, 2001: 2, … |
| Price | 5995: 4, 6500: 3, 4000: 6 |

Concept similarity: estimated as the percentage of correlated values common to two given concepts, where v1, v2 ∈ Aj, i ≠ j and Ai, Aj ∈ R

- Measured as the Jaccard similarity among supertuples representing the concepts: JaccardSim(A, B) = |A ∩ B| / |A ∪ B|
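The supertuple comparison can be sketched by treating each attribute of a supertuple as a bag of values with counts and taking a Jaccard ratio per attribute. Using bag (multiset) Jaccard and combining attributes with a weighted sum are assumptions for this illustration, as are the function names and the sample counts for Honda.

```python
from collections import Counter


def bag_jaccard(bag_a, bag_b):
    """Jaccard similarity of two bags: |A ∩ B| / |A ∪ B| over counts."""
    union = sum((bag_a | bag_b).values())
    return sum((bag_a & bag_b).values()) / union if union else 0.0


def concept_similarity(supertuple_a, supertuple_b, weights):
    """Weighted sum of per-attribute bag Jaccard similarities (assumed form).

    Each supertuple maps an attribute name to a Counter of value counts.
    """
    return sum(
        weight * bag_jaccard(
            supertuple_a.get(attr, Counter()), supertuple_b.get(attr, Counter())
        )
        for attr, weight in weights.items()
    )


if __name__ == "__main__":
    toyota = {
        "Model": Counter({"Camry": 3, "Corolla": 4}),
        "Year": Counter({"2000": 6, "1999": 5}),
    }
    honda = {  # illustrative counts, not taken from the slides
        "Model": Counter({"Accord": 5, "Civic": 2}),
        "Year": Counter({"2000": 3, "1999": 5}),
    }
    print(concept_similarity(toyota, honda, {"Model": 0.5, "Year": 0.5}))
```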

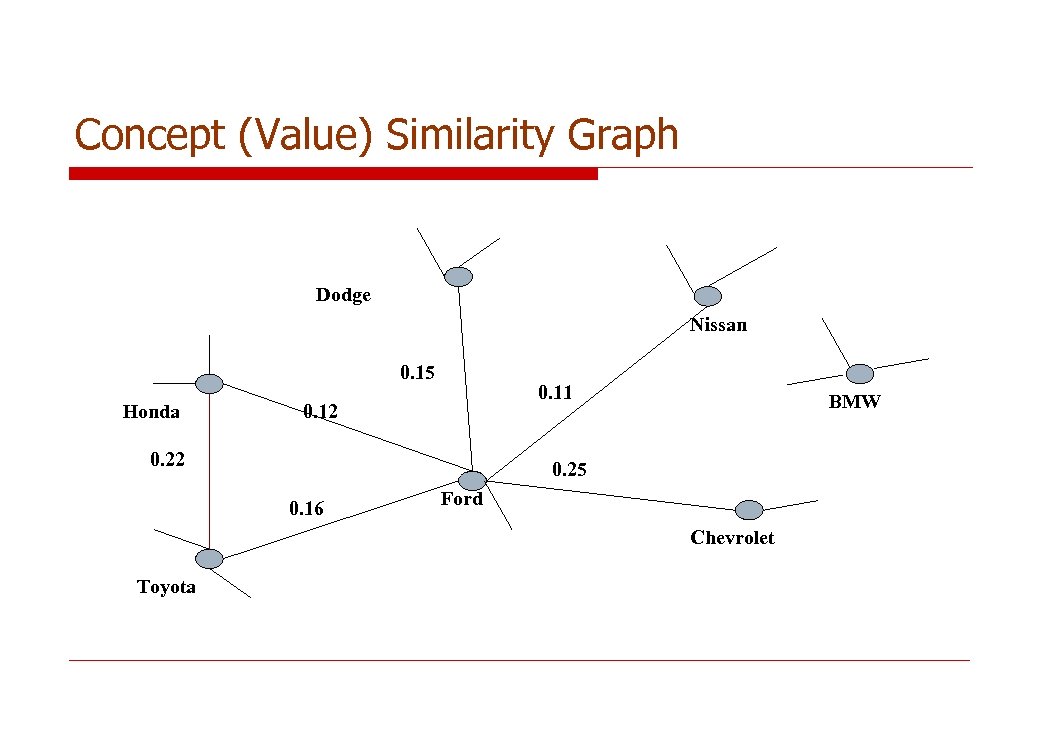

Concept (Value) Similarity Graph

[Figure: similarity graph over the makes Dodge, Nissan, Honda, BMW, Ford, Chevrolet, and Toyota, with edge weights 0.15, 0.11, 0.12, 0.22, 0.25, and 0.16.]

Empirical Evaluation

Goal

- Evaluate the effectiveness of the query relaxation and concept learning

Setup

- A database of used cars: CarDB(Make, Model, Year, Price, Mileage, Location, Color)
  - Populated using 30k tuples from Yahoo Autos
- Concept similarity estimated for Make, Model, Location, Color
- Two query relaxation algorithms
  - RandomRelax: randomly picks attribute to relax
  - GuidedRelax: uses relaxation order determined using approximate keys and AFDs

Evaluating the effectiveness of relaxation

Test scenario

- 10 randomly selected base queries from CarDB
- 20 tuples showing similarity > ε, with 0.5 < ε < 1
- Weighted summation of attribute similarities
  - Euclidean distance used for Year, Price, Mileage
  - Concept similarity used for Make, Model, Location, Color
- Limit of 64 relaxed queries per base query
  - 128 max possible (7 attributes)
- Efficiency measured using metric

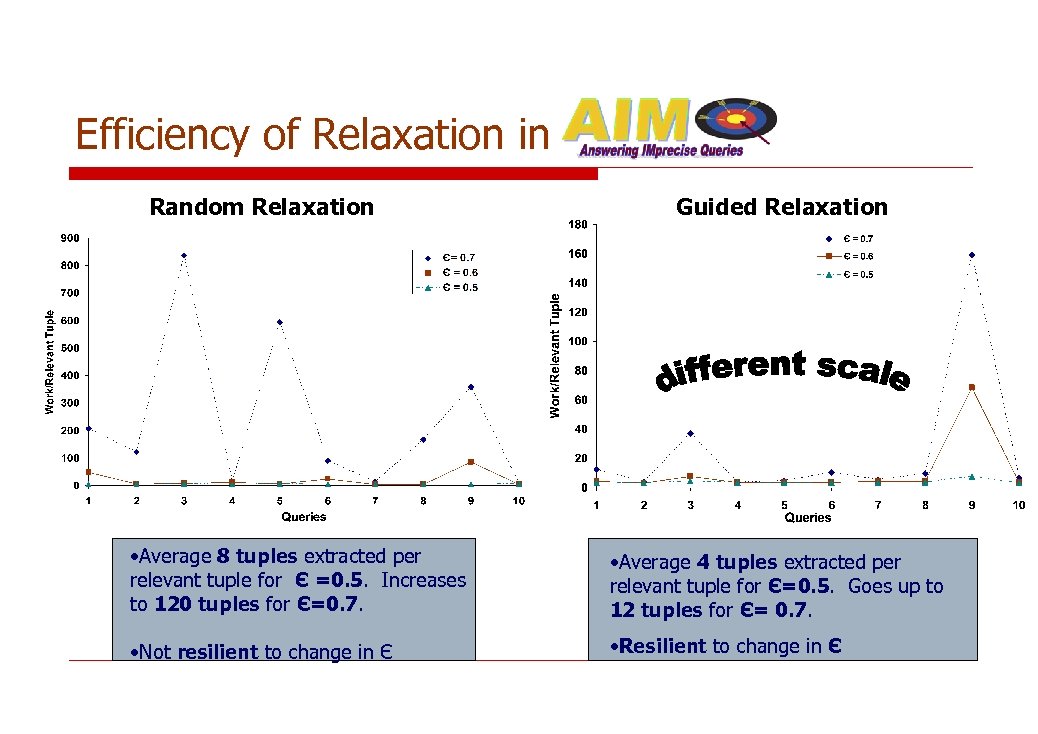

Efficiency of Relaxation

Random Relaxation

- Average 8 tuples extracted per relevant tuple for ε = 0.5. Increases to 120 tuples for ε = 0.7.
- Not resilient to change in ε

Guided Relaxation

- Average 4 tuples extracted per relevant tuple for ε = 0.5. Goes up to 12 tuples for ε = 0.7.
- Resilient to change in ε

Summary

An approach for answering imprecise queries over Web databases

- Mine and use AFDs to determine attribute importance
- Domain-independent concept similarity estimation technique
- Tuple similarity score as a weighted sum of attribute similarity scores

Empirical evaluation shows

- Reasonable concept similarity models estimated
- Set of similar precise queries efficiently identified

Adaptive Information Integration

- Query processing in information integration needs to be adaptive to:
  - Source characteristics
    - How is the data spread among the sources?
  - User needs
    - Multi-objective queries (trade off coverage for cost)
    - Imprecise queries
- To be adaptive, we need profiles (meta-data) about sources as well as users
  - Challenge: profiles are not going to be provided
    - Autonomous sources may not export meta-data about data spread!
    - Lay users may not be able to articulate the source of their imprecision!

Need approaches that gather (learn) the meta-data they need.

Three contributions to Adaptive Information Integration

- BibFinder
  - Learns and uses source coverage and overlap statistics to support multi-objective query processing
  - [VLDB 2003; ICDE 2004; TKDE 2005]
- COSCO
  - Adapts the Coverage/Overlap techniques to text collection selection
- Imprecise query answering
  - Supports imprecise queries by automatically learning approximate structural relations among data tuples
  - [WebDB 2004; WWW 2004]

Although we focus on avoiding retrieval of duplicates, Coverage/Overlap statistics can also be used to look for duplicates.

Current Directions

- Focusing on retrieving redundant records/documents to improve information quality
  - E.g. multiple viewpoints on the same story, additional details (e.g. a BibTeX entry) on a bibliography record
  - Our coverage/overlap statistics can be used for this purpose too!
- Learning and exploiting other types of source statistics
  - “Density”: the percentage of null values in a record
  - “Recency”/“Freshness”: how recent the results from a source are likely to be
- These statistics may also vary based on the query type
  - E.g. DBLP is more up-to-date for database papers than AI papers
  - Such statistics can be used to increase the quality of answers returned by the mediator in accessing top-K sources.
