8034ffc35ab65e595d6d69431c718c0a.ppt

- Number of slides: 36

Accessibility Motivations for an English-to-ASL Machine Translation System
Matt Huenerfauth
Closing Plenary, The 6th International ACM SIGACCESS Conference on Computers and Accessibility
October 20, 2004, Atlanta, GA, USA
Computer and Information Science, University of Pennsylvania
Research Advisors: Mitch Marcus & Martha Palmer

A two-part story…
• Development of English-to-ASL machine translation (MT) software for accessibility applications has been slow…
  – Misconceptions: the deaf experience, ASL linguistics, and ASL's relationship to English.
  – Challenges: some ASL phenomena are very difficult (but important) to translate.

Misconceptions about Deaf Literacy and ASL MT
How have they affected research?

Misconception: All deaf people are literate in written English.
• Only half of deaf high school graduates (age 18+) can read English at a fourth-grade (age 10) level, despite fluency in ASL.
• Many accessibility tools for deaf users overlook the fact that English is a second language for these students, one whose structure differs from ASL's.
• Applications for a machine translation system:
  – TV captioning, teletype telephones.
  – Computer user-interfaces in ASL.
  – Educational tools using ASL animation.
  – Access to information/media.
Audiology Online

Building tools to address deaf literacy…
What's our input? English text.
What's our output? ASL has no written form.
Imagine a 3D virtual reality human being…
One that can perform sign language…
But this character needs a set of instructions telling it how to move!
Our job: English → these instructions.
Vcom3D

Building tools to address deaf literacy…
We can use an off-the-shelf animated character.
Photos: Seamless Solutions, Inc.; Simon the Signer (Bangham et al. 2000); Vcom3D Corporation

Misconception: ASL is just manually performed English.
• Signed English vs. American Sign Language.
• Some ASL sentences have a structure that is similar to that of written languages.
• Other sentences use the space around the signer to describe the 3D layout of a real-world scene.
  – The hands indicate the movement and location of entities in the scene (using special handshapes).
  – These are called "Classifier Predicates."

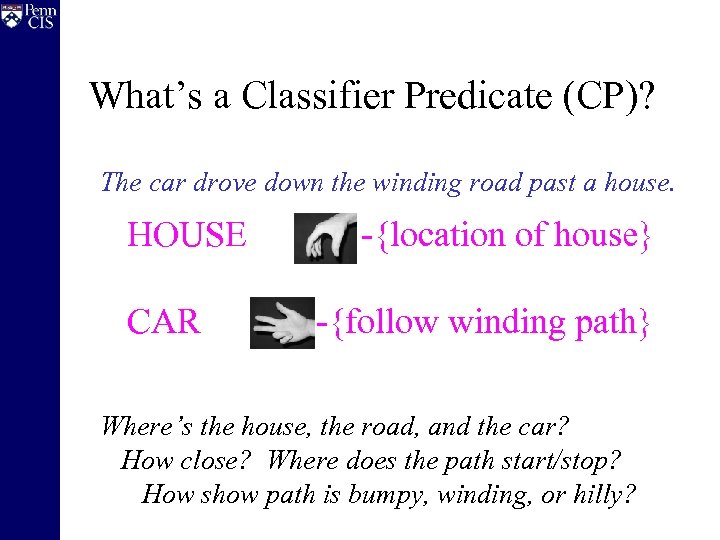

What's a Classifier Predicate (CP)?
The car drove down the winding road past a house.
HOUSE  -{location of house}
CAR  -{follow winding path}
Where are the house, the road, and the car? How close are they? Where does the path start and stop? How do we show that the path is bumpy, winding, or hilly?

Misconception: Traditional MT software is well-suited to ASL.
• Classifier predicates are hard to produce.
  – 3D paths for the hands, layout of the scene.
  – Grammar rules & dictionaries? Not enough.
• There is no written form of ASL.
  – Very few English-ASL parallel corpora exist.
  – So corpus-based machine learning approaches can't be used.
• Previous systems are only partial solutions.
  – Some produce only Signed English, not ASL.
  – None can produce classifier predicates.

Misconception: It's OK to ignore visual/spatial ASL phenomena.
But classifier predicates are important!
  – CPs are needed to convey many concepts.
  – Signers use CPs frequently.*
  – English sentences that produce CPs are the ones that signers often have trouble reading.
  – CPs are needed for some important applications:
    • User-interfaces with ASL animation
    • Literacy educational software
* Morford and McFarland. 2003. "Sign Frequency Characteristics of ASL." Sign Language Studies 3(2).

ASL MT Challenges: Producing Classifier Predicates
And some novel solutions…

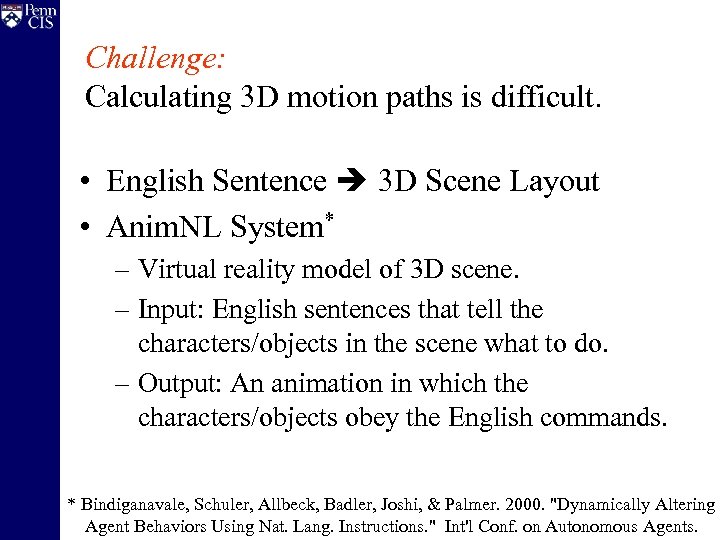

Challenge: Calculating 3D motion paths is difficult.
• Several approaches to generating the 3D motion path of the hands were examined.*
  – For linguistic and engineering reasons, several simplistic approaches were rejected:
    • Pre-storing all possible motion paths.
    • Constructing motion paths with rules.
  – To produce CPs, the system needs to model the 3D layout of the objects under discussion.
* Huenerfauth, M. 2004. "Spatial Representation of Classifier Predicates for MT into ASL." Workshop on Representation and Processing of Signed Languages, LREC-2004.

Challenge: Calculating 3D motion paths is difficult.
• English Sentence → 3D Scene Layout
• AnimNL System*
  – Virtual reality model of a 3D scene.
  – Input: English sentences that tell the characters/objects in the scene what to do.
  – Output: An animation in which the characters/objects obey the English commands.
* Bindiganavale, Schuler, Allbeck, Badler, Joshi, & Palmer. 2000. "Dynamically Altering Agent Behaviors Using Natural Language Instructions." Int'l Conf. on Autonomous Agents.

AnimNL Software
"The car drove down the winding road past the house."

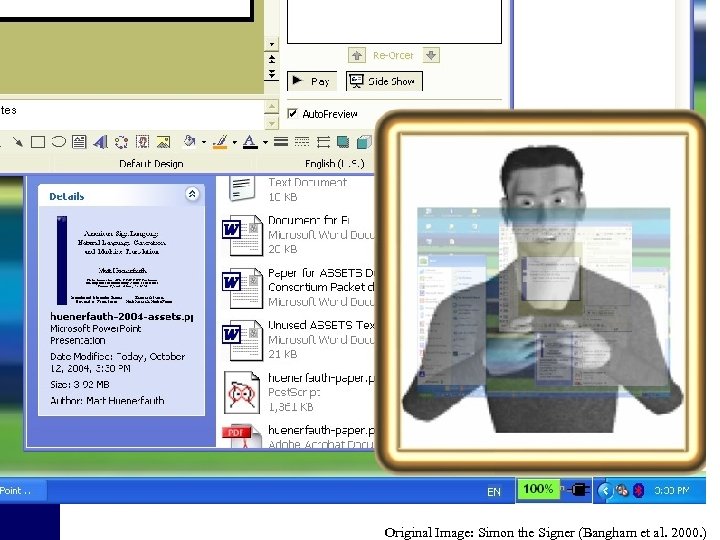

Calculating 3D Paths for Hands
"The car drove down the winding road past the house."
Original Image: Simon the Signer (Bangham et al. 2000)

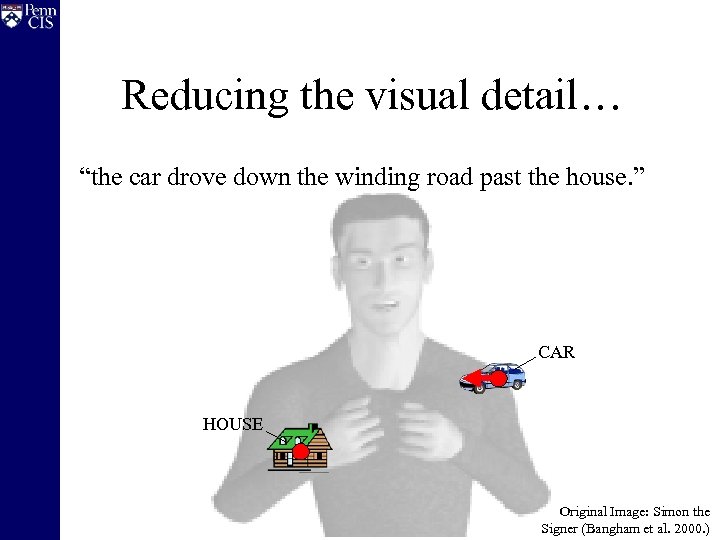

Challenge: Minor 3D visual details are error-prone.
• Difficult to get correct.
• Not important for classifier predicates.
• Solution: Reduce detailed objects to 3D points.
  – Record the 3D orientation of the object (sometimes).
  – Record which handshape should be used.
    • E.g., bulky objects get one handshape.
    • E.g., motorized vehicles get a different one.
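The reduction step on this slide can be sketched as follows. This is a minimal illustration under stated assumptions, not the system's actual data structures: the class names, the centroid rule for choosing an object's single point, and the handshape labels are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical category-to-handshape table; the labels are placeholders,
# not the system's real handshape inventory.
HANDSHAPE_BY_CATEGORY = {
    "bulky": "BULKY_OBJECT_HANDSHAPE",
    "motorized_vehicle": "VEHICLE_HANDSHAPE",
}

@dataclass
class SceneObject:
    """A detailed object as a graphics system might describe it."""
    name: str
    category: str
    vertices: List[Vec3]  # many 3D points describing the object's geometry

@dataclass
class Placeholder:
    """The reduced form: one point, an optional orientation, a handshape."""
    name: str
    position: Vec3
    orientation: Optional[Vec3]  # only recorded "sometimes"
    handshape: str

def to_placeholder(obj: SceneObject,
                   orientation: Optional[Vec3] = None) -> Placeholder:
    # Assumption: collapse the geometry to its centroid and discard the rest.
    n = len(obj.vertices)
    cx = sum(v[0] for v in obj.vertices) / n
    cy = sum(v[1] for v in obj.vertices) / n
    cz = sum(v[2] for v in obj.vertices) / n
    # Raises KeyError for a category the hypothetical table does not cover.
    handshape = HANDSHAPE_BY_CATEGORY[obj.category]
    return Placeholder(obj.name, (cx, cy, cz), orientation, handshape)
```

For example, a square "bulky" house with corners at (0,0,0) and (2,2,0) reduces to a single point at (1.0, 1.0, 0.0) carrying the bulky-object handshape; everything else about its appearance is dropped, which matches the slide's point that such detail does not matter for CPs.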

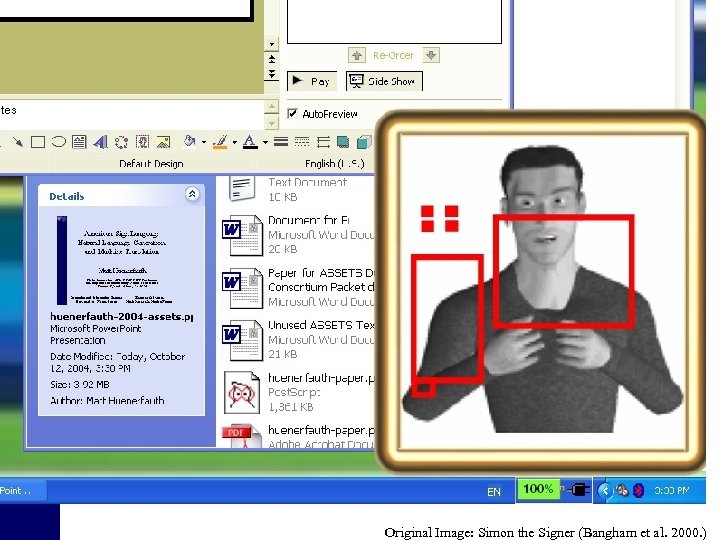

Reducing the visual detail…
"The car drove down the winding road past the house."
CAR  HOUSE
Original Image: Simon the Signer (Bangham et al. 2000)

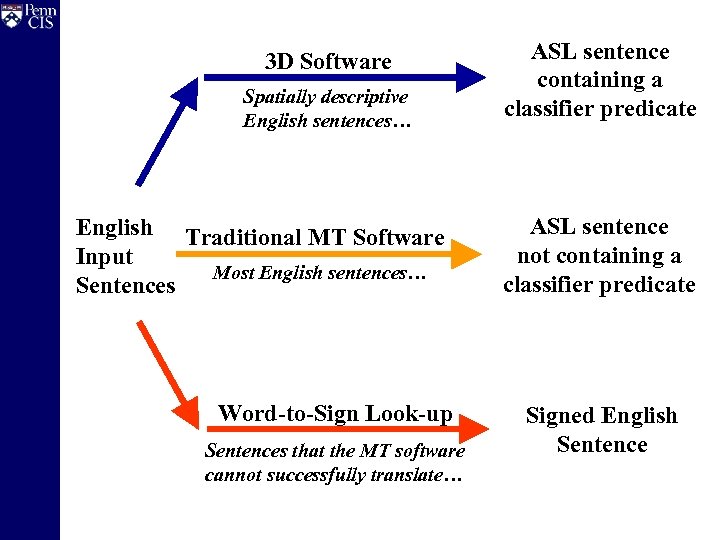

Challenge: 3D processing is resource-intensive.
• Computation time: 3D processing of the scene.
• Development effort: extending AnimNL.
• 3D processing is only needed to produce CPs.
  – English sentences that don't discuss 3D scenes or spatial relationships are easier to translate.
  – For these, traditional MT technology can be used instead.
• Solution: Multi-Path System*
* Huenerfauth, M. 2004. "A Multi-Path Architecture for English-to-ASL MT." HLT-NAACL Student Workshop.

English Input Sentences:
• Spatially descriptive English sentences… → 3D Software → ASL sentence containing a classifier predicate
• Most English sentences… → Traditional MT Software → ASL sentence not containing a classifier predicate
• Sentences that the MT software cannot successfully translate… → Word-to-Sign Look-up → Signed English sentence
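The routing in this diagram can be sketched as a simple dispatcher. All three translators and the `is_spatial` test are hypothetical stand-ins passed in as functions. In particular, letting a spatial sentence fall back to the traditional path when the 3D software fails is an assumption; the diagram does not say what happens in that case.

```python
from typing import Callable, Optional, Tuple

# A translator returns output text, or None if it cannot handle the sentence.
# Every function passed to route() is a hypothetical stand-in.
Translator = Callable[[str], Optional[str]]

def route(sentence: str,
          is_spatial: Callable[[str], bool],
          cp_generator: Translator,
          transfer_mt: Translator,
          word_to_sign: Callable[[str], str]) -> Tuple[str, str]:
    """Return (path_name, output) for one English sentence."""
    # Path 1: spatially descriptive sentences go to the 3D software,
    # producing an ASL sentence with a classifier predicate.
    if is_spatial(sentence):
        out = cp_generator(sentence)
        if out is not None:
            return ("3d", out)
        # Assumption: on failure, fall through to the traditional path.
    # Path 2: most sentences go through traditional MT (no CP).
    out = transfer_mt(sentence)
    if out is not None:
        return ("traditional", out)
    # Path 3: last resort, word-to-sign look-up yields Signed English.
    return ("signed_english", word_to_sign(sentence))
```

The ordering encodes the design choice on the slides: the expensive 3D path is attempted only for sentences flagged as spatial, and the word-to-sign look-up guarantees that every sentence gets some output.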

Challenge: ASL phonological models are ill-suited to CPs.
• Linguists have proposed symbolic representations of ASL output ("phonological models").
  – Streams of raw animation coordinates are unwieldy.
  – We need something more abstract and parameterized.
  – Handshape, orientation, location, motion.
• But current representations are ill-suited to CPs.
  – Too much handshape information, too little orientation information.
  – Hard to specify 3D motion paths.
• Solution: A new linguistic model designed for CPs.*
* Huenerfauth, M. 2004. "Spatial and Planning Models of ASL CPs for MT." TMI-2004.
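To illustrate why a parameterized representation is more manageable than a raw coordinate stream, here is a hypothetical sketch: a hand is described by a handshape, an orientation, and a few location keypoints, and is only expanded into per-frame coordinates at the end. The field names and the piecewise linear interpolation are illustrative assumptions, not the model proposed in the TMI-2004 paper.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class HandSpec:
    """Hypothetical compact description of one hand, using the four
    parameters named on the slide."""
    handshape: str
    orientation: Vec3       # e.g. palm-normal direction
    keypoints: List[Vec3]   # location over time as a few control points

def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linear interpolation between two 3D points, t in [0, 1]."""
    return (a[0] + (b[0] - a[0]) * t,
            a[1] + (b[1] - a[1]) * t,
            a[2] + (b[2] - a[2]) * t)

def to_frames(spec: HandSpec, frames_per_segment: int) -> List[Vec3]:
    """Expand the keypoints into a dense stream of per-frame hand
    locations by piecewise linear interpolation."""
    pts = spec.keypoints
    out: List[Vec3] = []
    for a, b in zip(pts, pts[1:]):
        for k in range(frames_per_segment):
            out.append(lerp(a, b, k / frames_per_segment))
    out.append(pts[-1])  # include the final keypoint exactly once
    return out
```

Three keypoints with ten frames per segment expand to 21 coordinates; the symbolic form stays small and editable, while the frame stream is generated only when the animation is rendered.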

Challenge: Some motion paths are linguistically determined.
• Some CP motion paths are determined linguistically, not visually.
  – E.g., a leisurely walking upright figure.
  – Motion of the 3D character ≠ motion of the hand.
• Solution: Store CPs as a set of templates.
  – A template represents the prototypical form of a CP.
  – 3D coordinate details are filled in at run-time.
  – Some 3D paths are taken from the virtual reality scene.
  – Some 3D information is hard-coded inside the template.
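A template store of this kind might look like the following sketch, assuming a template either copies its hand path from the virtual reality scene or carries a hard-coded path that is positioned at run-time. The schema, the `anchor` parameter (the entity's location in the scene), and the template names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class CPTemplate:
    """Hypothetical prototypical form of one classifier predicate."""
    name: str
    handshape: str
    # True: the hand path is copied from the virtual reality scene.
    # False: the template's own hard-coded path is used instead.
    path_from_scene: bool
    fixed_path: List[Vec3] = field(default_factory=list)

def instantiate(t: CPTemplate, scene_paths: Dict[str, List[Vec3]],
                entity: str, anchor: Vec3) -> dict:
    """Fill in 3D coordinate details for one template at run-time."""
    if t.path_from_scene:
        # Visually determined: follow the entity's path in the 3D scene.
        path = list(scene_paths[entity])
    else:
        # Linguistically determined: a hard-coded shape, translated so it
        # starts at the entity's location (the anchor).
        path = [(anchor[0] + p[0], anchor[1] + p[1], anchor[2] + p[2])
                for p in t.fixed_path]
    return {"template": t.name, "handshape": t.handshape, "path": path}
```

In this sketch a vehicle template would take the car's winding route from the scene, while a template for a leisurely walking figure keeps its own characteristic hand motion and borrows only the figure's position, reflecting the slide's point that the character's motion and the hand's motion can differ.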

Challenge: English-to-ASL is not always a 1-to-1 mapping.
• Sometimes there is no one-to-one mapping from English sentences to classifier predicates.
• Solution: Use the same formalism to represent the structure inside and in between CPs.
  – Makes it easy to store one-to-one, many-to-one, one-to-many, and many-to-many mappings.
  – Allows one CP to affect the production of another.
  – Allows CPs to work together to convey information.
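One way to see why a shared representation makes mixed mappings easy is a rule table whose left and right sides are both sequences of arbitrary length. The sketch is hypothetical: the clause labels and CP names are invented, and greedy longest-match is simply one possible way to apply such rules, not the system's documented method. It also does not model one CP affecting another.

```python
from typing import Dict, List, Tuple

# Hypothetical rule table: a tuple of English clause labels maps to a tuple
# of CP template names. Either side may have any length, so one-to-one,
# many-to-one, one-to-many and many-to-many rules share one representation.
Rules = Dict[Tuple[str, ...], Tuple[str, ...]]

def translate(clauses: List[str], rules: Rules) -> List[str]:
    """Apply rules left to right, preferring the longest matching rule."""
    longest = max(len(k) for k in rules)
    out: List[str] = []
    i = 0
    while i < len(clauses):
        # Try the longest possible left-hand side first.
        for n in range(min(longest, len(clauses) - i), 0, -1):
            key = tuple(clauses[i:i + n])
            if key in rules:
                out.extend(rules[key])
                i += n
                break
        else:
            raise ValueError(f"no rule covers clause {clauses[i]!r}")
    return out
```

With a rule mapping the two clauses "vehicle_moves" and "passes_landmark" to a single path CP, two English clauses collapse into one CP, while a single "cars_collide" clause can expand into several coordinated CPs.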

Implications of this Design
Advantages of a 3D Model

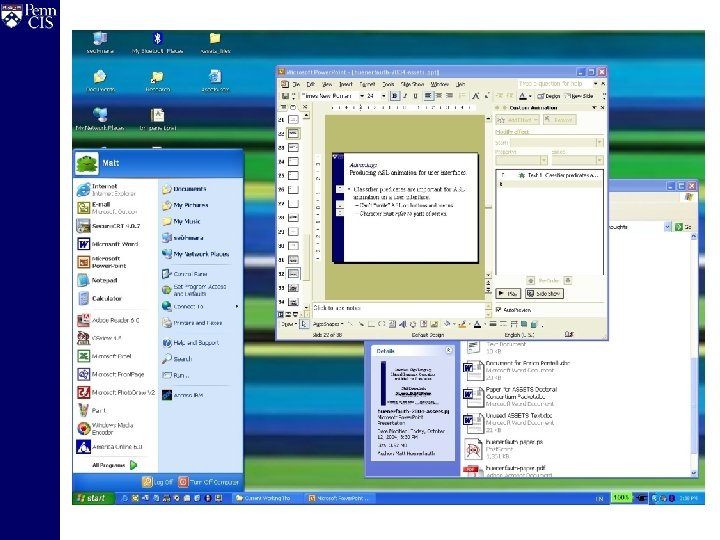

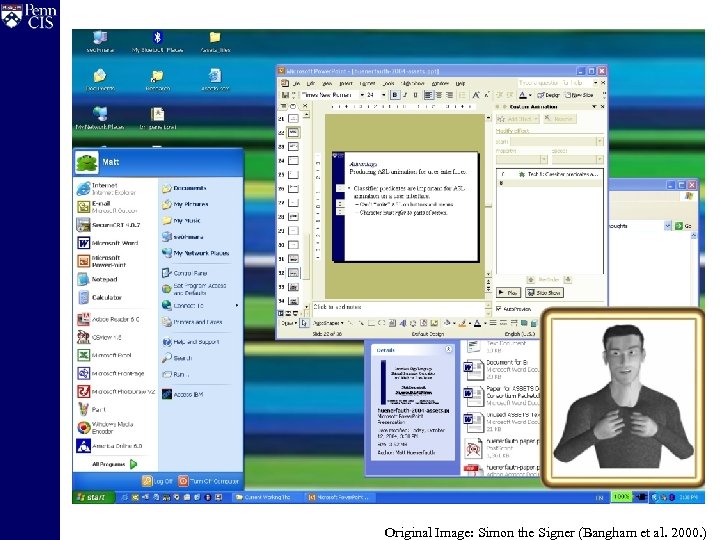

Advantage: Producing ASL animation for user-interfaces.
• CPs are important for ASL on a user-interface.
  – You can't "write" ASL on buttons and menus.
  – The character must refer to parts of the screen.
  – ASL uses CPs to do this.
• "Grab" GUI screen coordinates to lay out our placeholders (the little red dots).
  – AnimNL software isn't needed.
  – Placeholders can be updated dynamically.
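Laying out placeholders from GUI geometry could be as simple as a linear map from screen pixels onto a rectangle in the signer's space. The sketch assumes a flat, vertical signing plane at a fixed depth and uses each widget's centre; the plane dimensions and all names are hypothetical. Re-running the layout whenever widgets move is what would make the placeholders update dynamically.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class SigningPlane:
    """Hypothetical rectangle in front of the signer, in metres."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    depth: float  # fixed distance in front of the signer

def screen_to_space(px: float, py: float, screen_w: int, screen_h: int,
                    plane: SigningPlane) -> Vec3:
    u = px / screen_w          # 0 at the left edge, 1 at the right edge
    v = 1.0 - py / screen_h    # screen y grows downward; flip so up is up
    x = plane.x_min + u * (plane.x_max - plane.x_min)
    y = plane.y_min + v * (plane.y_max - plane.y_min)
    return (x, y, plane.depth)

def layout_placeholders(widgets: Dict[str, Tuple[int, int, int, int]],
                        screen_w: int, screen_h: int,
                        plane: SigningPlane) -> Dict[str, Vec3]:
    """widgets maps a name to (left, top, width, height) in pixels; each
    widget's centre becomes one placeholder in signing space."""
    return {name: screen_to_space(left + w / 2, top + h / 2,
                                  screen_w, screen_h, plane)
            for name, (left, top, w, h) in widgets.items()}
```

For an 800×600 screen and a plane spanning x from -0.5 to 0.5 and y from 1.0 to 2.0, a button centred at pixel (400, 300) maps to (0.0, 1.5, depth), the middle of the plane.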

Original Image: Simon the Signer (Bangham et al. 2000. )

Original Image: Simon the Signer (Bangham et al. 2000. )

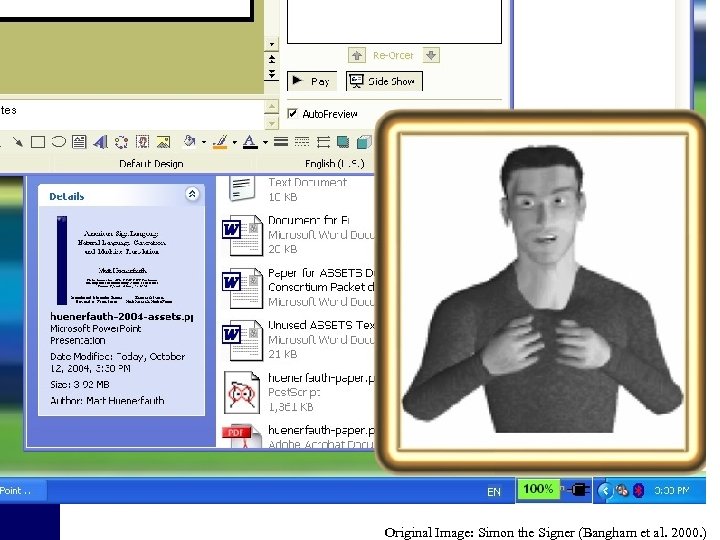

Original Image: Simon the Signer (Bangham et al. 2000. )

Original Image: Simon the Signer (Bangham et al. 2000. )

Original Image: Simon the Signer (Bangham et al. 2000. )

Advantage: Creating CPs for other national sign languages.
• Other national sign languages have their own signs and a structure distinct from ASL's.
• However, nearly all have a system of classifier predicates that is similar to ASL's.
  – The specific handshapes used might differ, as might some other motion details.
  – Future potential: this 3D approach to CP production should be easy to adapt to these other sign languages.

Advantage: Producing non-CP ASL linguistic phenomena.
• There are other linguistic phenomena in ASL (aside from classifier predicates) that could benefit from the way this system keeps track of the space around the signer.
  – Pronouns
  – Verb Agreement
  – Narrative Role-Shifting
  – Contrastive Discourse Structure
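As a hypothetical sketch of how tracked space could serve pronouns and verb agreement: in ASL, a referent can be associated with a location (locus) around the signer, pronouns can point to that locus, and agreeing verbs can move from one referent's locus to another's. The class and method names are illustrative only, and role-shifting and contrastive structure are not modelled.

```python
from typing import Dict, Iterable, Tuple

Vec3 = Tuple[float, float, float]

class SigningSpace:
    """Hypothetical discourse model: each referent is bound to a locus
    (a point in the space around the signer) when first mentioned."""

    def __init__(self, free_loci: Iterable[Vec3]):
        self._free = list(free_loci)
        self.loci: Dict[str, Vec3] = {}

    def locus_for(self, referent: str) -> Vec3:
        # Reuse an existing binding; otherwise take the next free locus.
        # Raises IndexError if the supply of loci runs out.
        if referent not in self.loci:
            self.loci[referent] = self._free.pop(0)
        return self.loci[referent]

    def pronoun(self, referent: str) -> Tuple[str, Vec3]:
        # A pronoun is rendered as a point toward the referent's locus.
        return ("POINT", self.locus_for(referent))

    def agreeing_verb(self, verb: str, subj: str,
                      obj: str) -> Tuple[str, Vec3, Vec3]:
        # An agreeing verb moves from the subject's locus to the object's.
        return (verb, self.locus_for(subj), self.locus_for(obj))
```

The same bookkeeping that places the house and the car for a CP would, under this sketch, let later sentences refer back to them consistently.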

Summary

Summary
• Misconceptions, challenges, implications…
• This is the first MT approach proposed for producing ASL classifier predicates.
  – A new 3D modeling approach to handle CPs.
  – Embedded in a multi-path English-to-ASL system.
  – Amenable to incorporation in a user-interface.
• Currently in an early implementation phase.
One more interesting future possibility of this design…

Potential Future Extension: Sign language virtual reality for the deaf-blind
• Tactile Sign Language
  – Feel the signer's hands move through 3D space.
• This system is unique in its use of virtual reality.
  – Graphics software arranges the 3D objects.
  – The signing character is also a detailed 3D object.
• Future Potential:
  – ASL → Tactile Sign Language
  – A deaf-blind user could experience the motion of the signer's hands using a tactile feedback glove.

Questions?
