a00bcfb7ef956d830547e46cce97d31a.ppt

- Number of slides: 16

Accelerating Benchmark Testing Through Automation
Ilana Golan, Technical Director, QualiSystems
August 9, 2011
Santa Clara, CA August 2010

Agenda
- Typical SSD testing challenges
- The solution: "End to End Automation Framework"
- Deployment architecture
- Case study results and ROI
- Summary

Typical SSD Testing Challenges
- Variety of vendors
- Multiple models
- Many benchmark applications
- Multiple operating systems
- Distributed test teams and stations
- Exhausting data collection
- Time-consuming analysis

Testing performance of drives using HD Tune
- Prepare the environment (a tedious process). Time spent: 1 h
  - Download new firmware
  - Install the operating system
  - Prepare the device for testing
- Activate the benchmark application. Time spent: 0.5 h
  - Run HD Tune
  - Click Start
  - Wait until the application finishes

Testing performance of drives using HD Tune
- The results must be collected MANUALLY, a time-consuming process that requires advance preparation:
  - Copy to the clipboard
  - Paste into Excel, Word, or another tool
  - Keep a constant format so the results can be merged into one report
- Prepare the environment all over again for the next test
- Repeat the whole process n times (multiple OSs, various benchmark applications)
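
The "constant format" requirement above can be sketched in a few lines of Python. This is only an illustration of the idea, not how HD Tune or TestShell store results: the column names, station names, and metric values are hypothetical.

```python
import csv
import io

# Hypothetical column set: every run is stored with the same fields,
# so results from different stations and OSs can be merged directly.
FIELDS = ["station", "os", "benchmark", "metric", "value"]

def normalize(station, os_name, benchmark, raw):
    """Turn one run's raw metrics (a dict) into rows with constant columns."""
    return [
        {"station": station, "os": os_name, "benchmark": benchmark,
         "metric": metric, "value": float(value)}
        for metric, value in sorted(raw.items())
    ]

def merge_report(runs):
    """Merge the rows of many runs into a single CSV report string."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for rows in runs:
        writer.writerows(rows)
    return buf.getvalue()

# Made-up values, for illustration only.
runs = [
    normalize("netbook-01", "XP", "HD Tune", {"avg_read_MBps": "120.5"}),
    normalize("netbook-02", "Win 7", "HD Tune", {"avg_read_MBps": "118.0"}),
]
report = merge_report(runs)
print(report)
```

Once every run shares one schema, merging is a plain concatenation, which is the step the manual copy-and-paste process makes error-prone.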

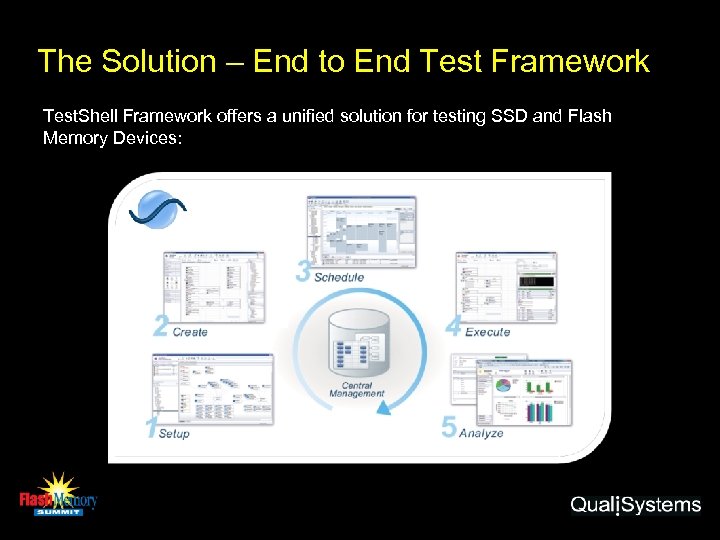

The Solution – End to End Test Framework
The TestShell framework offers a unified solution for testing SSD and flash memory devices:

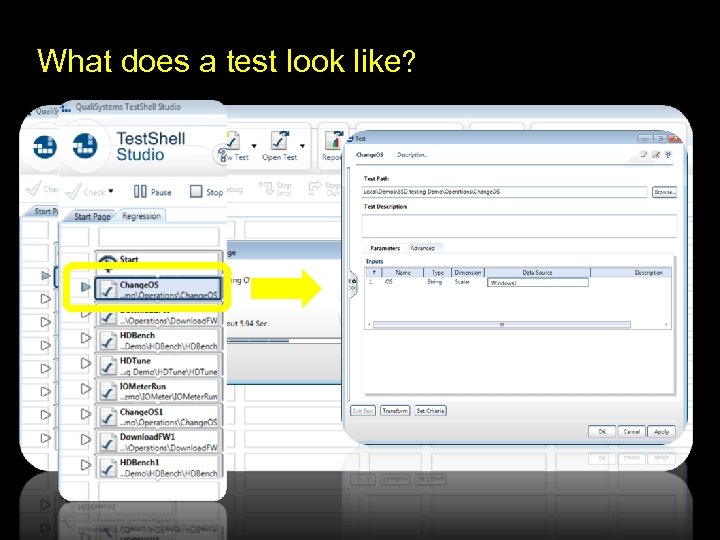

What does a test look like?

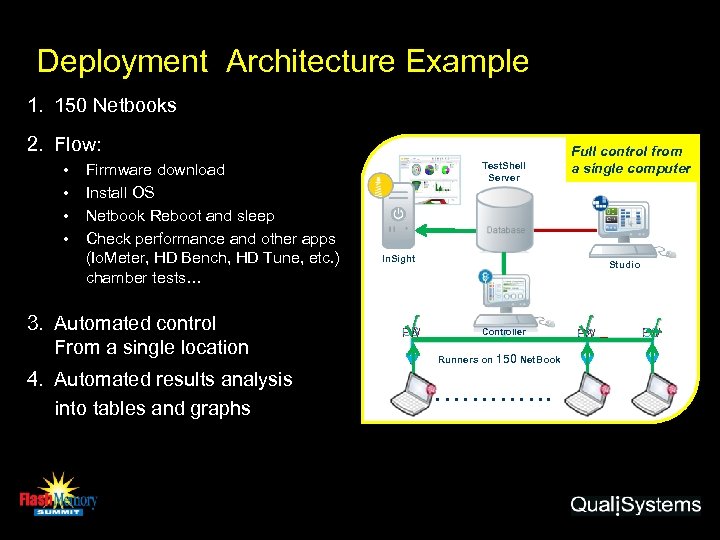

Deployment Architecture Example
1. 150 netbooks
2. Flow:
   - Firmware download
   - Install OS
   - Netbook reboot and sleep
   - Check performance and other apps (IOMeter, HDBench, HD Tune, etc.)
   - Chamber tests…
3. Automated control from a single location
4. Automated results analysis into tables and graphs

Diagram labels: TestShell Server (full control from a single computer), InSight, Studio, Controller, Runners on 150 netbooks (OS, FW).
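
As a rough illustration of the flow above, the Python sketch below runs the same ordered steps on 150 stations in parallel from one controlling process. It is not TestShell code: the step names mirror the slide, while `run_flow`, `run_all`, and the station names are hypothetical placeholders that only record what a real runner would do.

```python
from concurrent.futures import ThreadPoolExecutor

# Step names follow the flow on the slide; nothing is actually executed.
STEPS = ["firmware_download", "install_os", "reboot_and_sleep", "run_benchmarks"]

def run_flow(station):
    """Run every step in order on one station and return a log of the steps."""
    log = []
    for step in STEPS:
        # A real runner would contact the station here; this stub only records the step.
        log.append(step)
    return station, log

def run_all(stations, max_workers=16):
    """Dispatch the flow to all stations in parallel from a single location."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(run_flow, stations))

stations = [f"netbook-{i:03d}" for i in range(1, 151)]
results = run_all(stations)
print(len(results), results["netbook-001"])
```

A thread pool is assumed here because such steps mostly wait on remote machines; the number of workers is an arbitrary choice.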

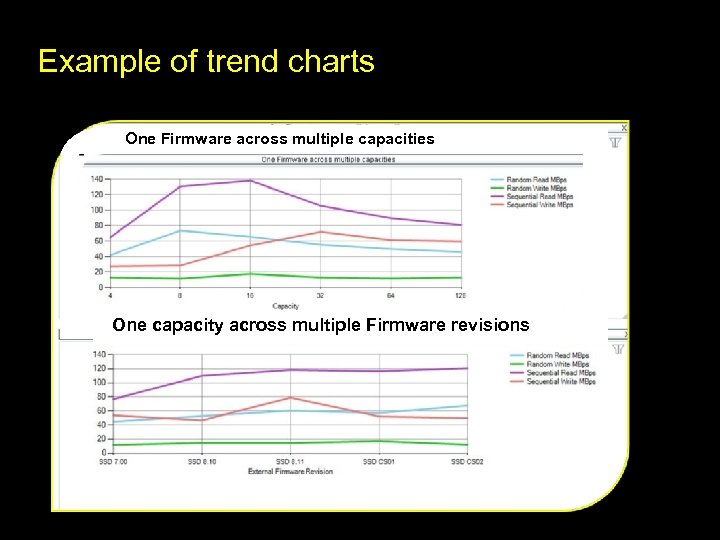

Example of trend charts
- One firmware across multiple capacities
- One capacity across multiple firmware revisions
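
The two chart types are two groupings of the same result records. The Python sketch below shows one way to build both series; the records are invented for illustration and are not measurements from the case study, and `series_by` is a hypothetical helper.

```python
from collections import defaultdict

# Illustrative records only: (firmware, capacity_gb, read_MBps). Not measured data.
records = [
    ("FW1", 32, 100.0), ("FW1", 64, 110.0),
    ("FW2", 32, 105.0), ("FW2", 64, 120.0),
]

def series_by(records, key_index, x_index):
    """Group records into {key: [(x, value), ...]}, each series sorted by x."""
    series = defaultdict(list)
    for rec in records:
        series[rec[key_index]].append((rec[x_index], rec[2]))
    return {key: sorted(points) for key, points in series.items()}

# One firmware across multiple capacities.
per_firmware = series_by(records, key_index=0, x_index=1)
# One capacity across multiple firmware revisions.
per_capacity = series_by(records, key_index=1, x_index=0)
print(per_firmware)
print(per_capacity)
```

Each resulting series can be handed to any plotting tool as one line on a trend chart.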

Case Study Results
- All major tests were created:
  - Performance: HDBench, CrystalMark, PCMark 05, IOMeter, etc.
  - Various OSs: DOS, XP, Win 7, Linux, etc.
  - Regression: sleeping, rebooting, chamber tests, etc.
  - Utilities: e-mail report, FW download notifications
- Tests are executed in parallel on 100-150 netbooks
- Tests are launched from one machine and executed on various clusters
- All data is automatically saved to a central DB
- Reports are reliable and come in multiple formats, including full data, executive summary, weekly, and monthly reports

ROI - Examples
- "It used to take me 3 hours to run a test on each netbook. Now I run it in a few minutes on 100+ netbooks in parallel."
  - Saves a week for each regression
- "It used to take me 60-90 minutes to analyze a run and create reports. Now the reports are created automatically for all runs, and a management report with trends is generated."
  - Real-time reports and trend analysis in zero time
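
To put the quoted figures in perspective, the short Python calculation below totals the manual effort they imply. Only the 3 hours per netbook and the 60-90 minutes per run come from the slide; the function names, the count of 100 netbooks (the slide says "100+"), and the 10 runs are assumptions for illustration.

```python
def manual_test_hours(netbooks, hours_per_netbook=3.0):
    """Total hours if each netbook is tested by hand, one after another."""
    return netbooks * hours_per_netbook

def manual_analysis_hours(runs, minutes_low=60, minutes_high=90):
    """Range of hours spent analyzing runs by hand, as (low, high)."""
    return runs * minutes_low / 60, runs * minutes_high / 60

print(manual_test_hours(100))       # 300.0 hours for 100 netbooks
print(manual_analysis_hours(10))    # (10.0, 15.0) hours for 10 hypothetical runs
```

Testing 100 netbooks by hand at 3 hours each adds up to 300 hours of sequential work, which is the effort the parallel, automated run replaces.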

Summary
- Typical SSD / flash memory testing consumes considerable time and effort
- An end-to-end automation framework brings a proven, significant improvement:
  - Code-free test creation
  - Emphasis on scalability, test modification, and reuse
  - OS installation, launching, and switching in a click
  - Easy integration and control of any benchmark application
  - Running scenarios 24/7 in parallel without manual intervention
  - Dynamic station allocation and scheduling
  - Data reporting and analysis in a click

Save time and effort!

Thank you
Want to learn more? Visit: www.qualisystems.com/ssd

Presenter Info
- Presenter Name: Ilana Golan
- Job Title: Technical Director
- Email: ilana.g@qualisystems.com
- Phone: 408-313-0487
- Biography: Ilana Golan, Technical Director at QualiSystems, leads the technical team in North America. Ilana's group regularly engages with customers to help them adopt automation in their projects. Before that, Ilana held several roles at Cadence Design Systems in the functional verification space, from field engineer to product marketing and solution architecture. Earlier, Ilana was a software developer at Intel. She holds a BSc in computer science and engineering from the Technion, Israel's foremost technology institute.
- Ilana began her career as an F-16 flight simulator instructor in the Israeli Air Force.

Topic Category: Testing/Performance/Benchmarking

Presentation Abstract: Benchmark testing for performance and regression assurance is an increasing challenge for Solid State Drive (SSD) testing teams. Considerable effort, time, and human resources were invested in performing tests prior to product release. Distributed test stations, a wide array of benchmark applications, the frequent need to manually install, launch, and replace operating systems, and exhausting test data aggregation and analysis processes led SSD customers to seek a solution. By automating their SSD testing process with a unified test automation framework, the customers achieved significant reductions in testing time and effort. The framework also allowed non-programmers to join the automation effort, made test scenarios easy to modify and reuse, handled different OSs automatically, and let tests run overnight and on weekends, among other achievements.

Presentation outline:
- Describing an example of a customer's testing lab and process
- Benchmark testing challenges
- The solution: "an end to end automation framework"
- Conclusions and results

Solution Highlights
- Unified code-free test creation
- Easy test modification and reuse
- OS installation, launching, and switching in a click
- Control over multiple distributed test stations
- Control over benchmark applications via API or GUI
- Automatic execution over nights and weekends
- Automatic collection of test results to a central server
- Test aggregation and analysis in minutes
- Online dashboards and reports
