2cb80f898bfa62aaba1cf346470537bd.ppt

- Number of slides: 28

A&T Advisory Board

EDC Storage Area Network (SAN)

April 19, 2004

Ken Gacke (gacke@usgs.gov), Brian Sauer (bsauer@usgs.gov), Doug Jaton (djaton@usgs.gov)

U.S. Department of the Interior, U.S. Geological Survey

Agenda

- Storage Architecture
- EDC SAN Architectures
  - Digital Reproduction SAN
  - Landsat SAN
  - LP DAAC SAN
- Reality Check

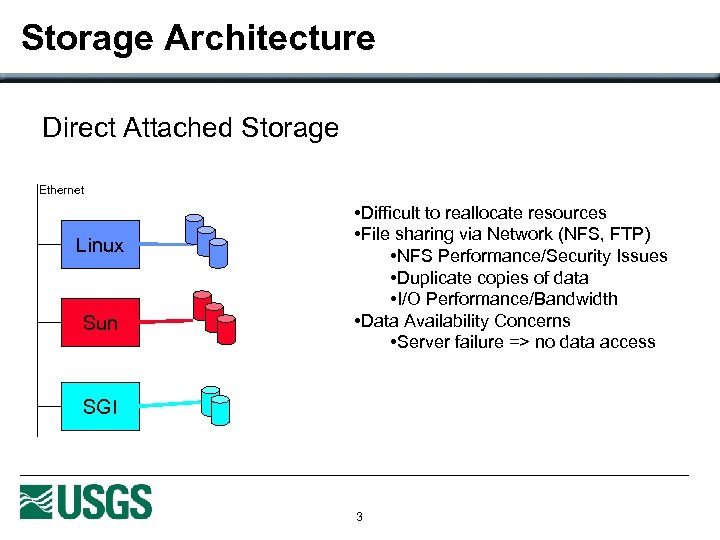

Storage Architecture: Direct Attached Storage

Diagram: Linux, Sun, and SGI servers, each with its own storage, connected by Ethernet.

- Difficult to reallocate resources
- File sharing via Network (NFS, FTP)
- NFS Performance/Security Issues
- Duplicate copies of data
- I/O Performance/Bandwidth
- Data Availability Concerns
- Server failure => no data access

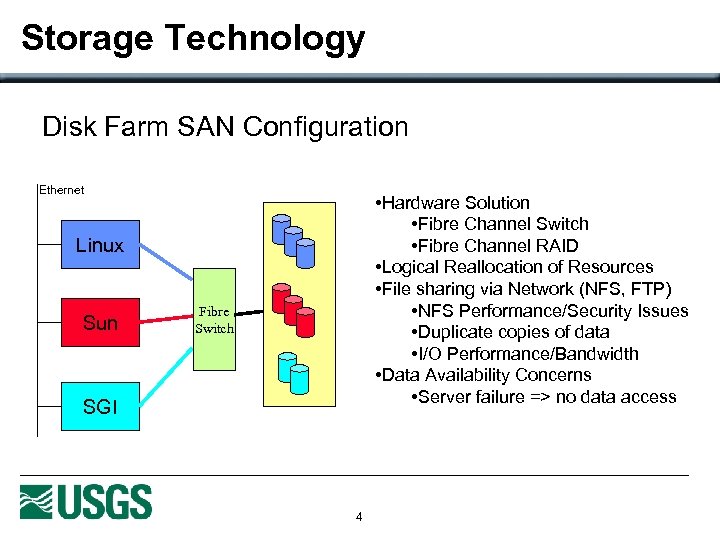

Storage Technology: Disk Farm SAN Configuration

Diagram: Linux, Sun, and SGI servers on Ethernet, connected to a Fibre Switch.

- Hardware Solution
- Fibre Channel Switch
- Fibre Channel RAID
- Logical Reallocation of Resources
- File sharing via Network (NFS, FTP)
- NFS Performance/Security Issues
- Duplicate copies of data
- I/O Performance/Bandwidth
- Data Availability Concerns
- Server failure => no data access

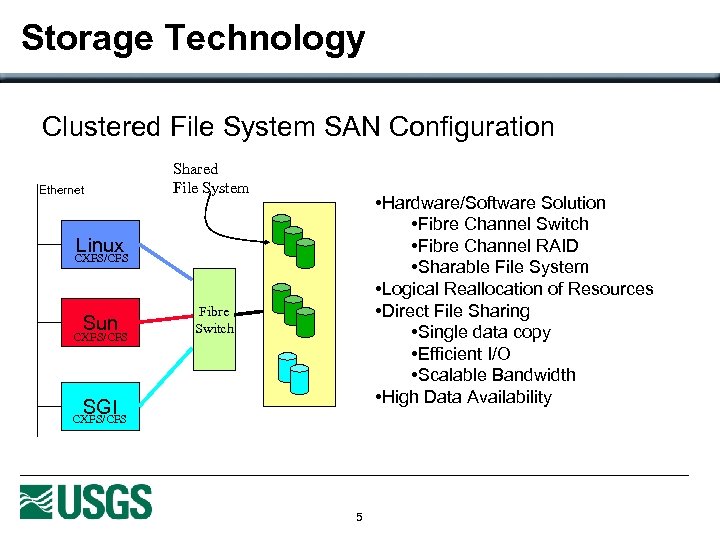

Storage Technology: Clustered File System SAN Configuration

Diagram: Linux, Sun, and SGI servers, each running CXFS/CFS, on Ethernet and a Fibre Switch, sharing one file system.

- Hardware/Software Solution
- Fibre Channel Switch
- Fibre Channel RAID
- Sharable File System
- Logical Reallocation of Resources
- Direct File Sharing
- Single data copy
- Efficient I/O
- Scalable Bandwidth
- High Data Availability

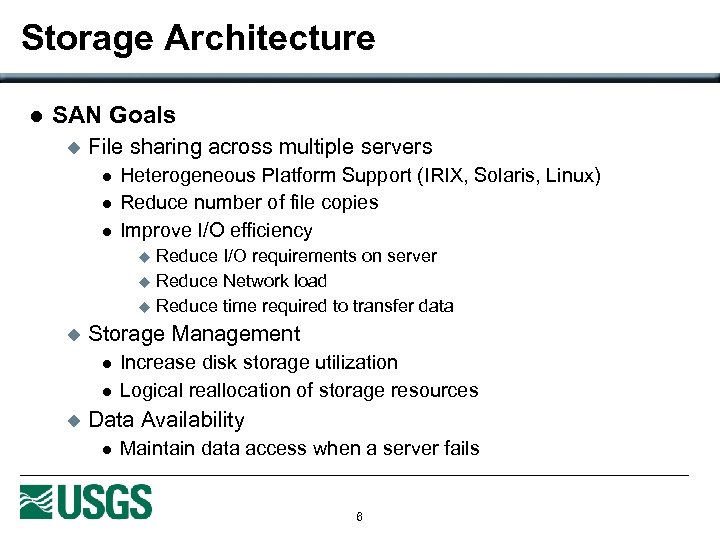

Storage Architecture

- SAN Goals
  - File sharing across multiple servers
    - Heterogeneous Platform Support (IRIX, Solaris, Linux)
    - Reduce number of file copies
    - Improve I/O efficiency
      - Reduce I/O requirements on server
      - Reduce Network load
      - Reduce time required to transfer data
  - Storage Management
    - Increase disk storage utilization
    - Logical reallocation of storage resources
  - Data Availability
    - Maintain data access when a server fails
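To make the "Increase disk storage utilization" goal concrete, here is a toy model in Python. It is a hypothetical illustration, not EDC data: the server names and daily demand figures are invented. With direct-attached storage each server has to be sized for its own peak, while a shared SAN pool only has to cover the peak of the combined demand.

```python
"""Toy comparison of dedicated (direct-attached) vs pooled (SAN) disk capacity.

All demand figures below are hypothetical, chosen only to illustrate the idea.
"""

# Hypothetical daily disk demand in GB for three servers over five days.
DEMAND_GB = {
    "linux": [300, 500, 200, 250, 400],
    "sun":   [450, 200, 300, 500, 250],
    "sgi":   [200, 250, 600, 200, 300],
}


def dedicated_capacity(demand: dict[str, list[int]]) -> int:
    """Each server owns its disks, so each must be sized for its own peak."""
    return sum(max(series) for series in demand.values())


def pooled_capacity(demand: dict[str, list[int]]) -> int:
    """A shared pool only has to cover the peak of the combined daily demand."""
    daily_totals = [sum(day) for day in zip(*demand.values())]
    return max(daily_totals)


if __name__ == "__main__":
    dedicated = dedicated_capacity(DEMAND_GB)
    pooled = pooled_capacity(DEMAND_GB)
    print(f"dedicated: {dedicated} GB, pooled: {pooled} GB")
```

Because the peak of a sum can never exceed the sum of the peaks, the pooled figure is always at most the dedicated one; how much it saves depends on how little the individual peaks overlap. With the invented numbers above it prints `dedicated: 1600 GB, pooled: 1100 GB`.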

Digital Reproduction CR1 SAN

April 19, 2004

Ken Gacke, SAIC Contractor (gacke@usgs.gov)

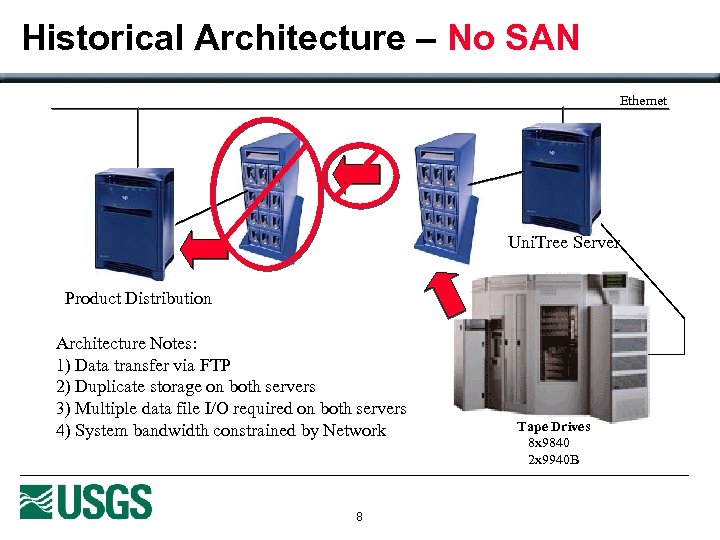

Historical Architecture – No SAN

Diagram: UniTree Server and Product Distribution on Ethernet; tape drives: 8 x 9840, 2 x 9940B.

Architecture Notes:
1) Data transfer via FTP
2) Duplicate storage on both servers
3) Multiple data file I/O required on both servers
4) System bandwidth constrained by Network

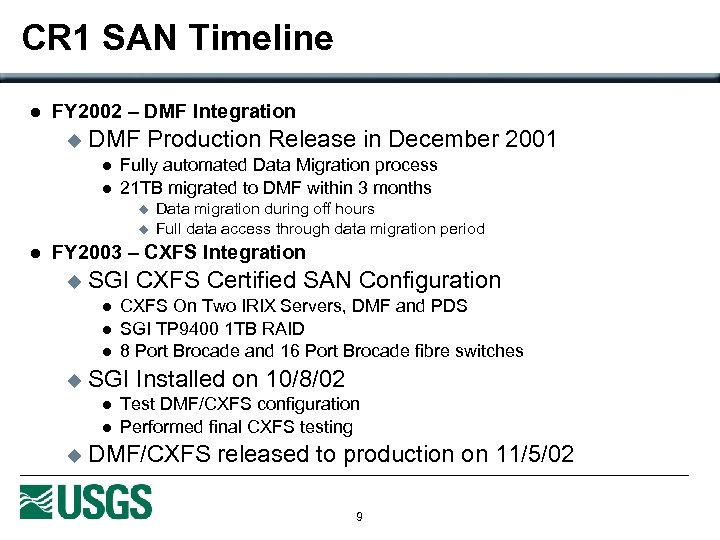

CR1 SAN Timeline

- FY 2002 – DMF Integration
  - DMF Production Release in December 2001
    - Fully automated Data Migration process
    - 21 TB migrated to DMF within 3 months
      - Data migration during off hours
      - Full data access through data migration period
- FY 2003 – CXFS Integration
  - SGI CXFS Certified SAN Configuration
    - CXFS on two IRIX servers, DMF and PDS
    - SGI TP9400 1 TB RAID
    - 8-port Brocade and 16-port Brocade fibre switches
  - SGI installed on 10/8/02
    - Test DMF/CXFS configuration
    - Performed final CXFS testing
  - DMF/CXFS released to production on 11/5/02
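The timeline says 21 TB were migrated to DMF within 3 months, during off hours. A quick sketch of the implied average rate follows; decimal units, a 90-day "3 months", and the 12-hour off-peak window are assumptions, since the slides do not give the actual migration window.

```python
"""Back-of-the-envelope rate for the FY 2002 DMF data migration.

Assumptions (not from the slides): decimal units (1 TB = 10**12 bytes,
1 MB = 10**6 bytes), a 90-day "3 months", and a hypothetical number of
off-peak hours per day during which migration ran.
"""

TB = 10**12
MB = 10**6
SECONDS_PER_HOUR = 3600


def required_rate_mb_s(volume_tb: float, days: float, hours_per_day: float = 24.0) -> float:
    """Average rate (MB/s) needed to move volume_tb in `days`,
    using only `hours_per_day` hours of each day."""
    seconds = days * hours_per_day * SECONDS_PER_HOUR
    return volume_tb * TB / seconds / MB


if __name__ == "__main__":
    # 21 TB over roughly 3 months, running around the clock.
    print(f"24 h/day: {required_rate_mb_s(21, 90):.1f} MB/s")
    # Same volume if migration were confined to a hypothetical 12 off-peak hours/day.
    print(f"12 h/day: {required_rate_mb_s(21, 90, hours_per_day=12):.1f} MB/s")
```

Around the clock this works out to about 2.7 MB/s; confining the work to 12 hours a day doubles it to about 5.4 MB/s. Only the order of magnitude is meaningful here.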

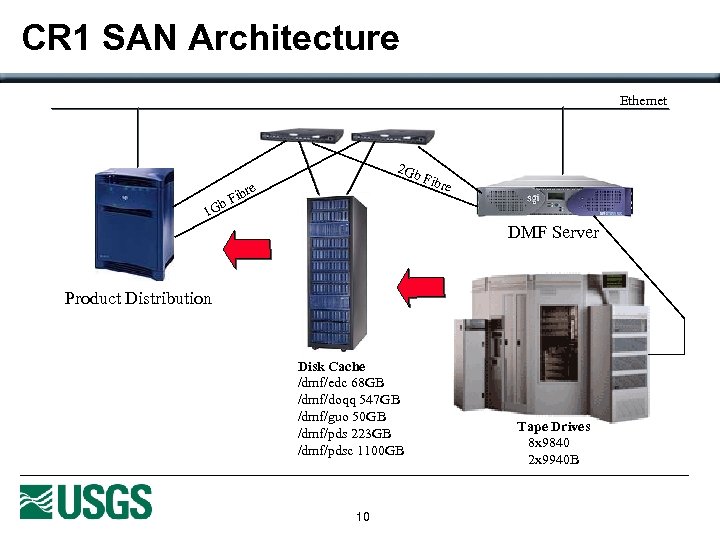

CR1 SAN Architecture

Diagram: DMF Server and Product Distribution on Ethernet, with 1 Gb and 2 Gb Fibre links; tape drives: 8 x 9840, 2 x 9940B.

Disk Cache:
- /dmf/edc: 68 GB
- /dmf/doqq: 547 GB
- /dmf/guo: 50 GB
- /dmf/pds: 223 GB
- /dmf/pdsc: 1100 GB
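As a consistency check, the five DMF cache file systems on this slide can be totaled. The sketch below does that in Python; the sizes are copied from the slide, and the per-file-system percentage breakdown is only an added presentation aid.

```python
"""Total the CR1 DMF disk-cache file systems listed on the architecture slide."""

# File system sizes in GB, as given on the slide.
DMF_CACHE_GB = {
    "/dmf/edc": 68,
    "/dmf/doqq": 547,
    "/dmf/guo": 50,
    "/dmf/pds": 223,
    "/dmf/pdsc": 1100,
}


def total_cache_gb(cache: dict[str, int]) -> int:
    """Sum the sizes of all cache file systems."""
    return sum(cache.values())


if __name__ == "__main__":
    total = total_cache_gb(DMF_CACHE_GB)
    # Show each file system's share of the cache, largest first.
    for path, size in sorted(DMF_CACHE_GB.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{path:<10} {size:>5} GB  {100 * size / total:5.1f}%")
    print(f"{'total':<10} {total:>5} GB")
```

The sizes add up to 1,988 GB, in line with the "2 TB Disk Cache" figure in the CR1 summary.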

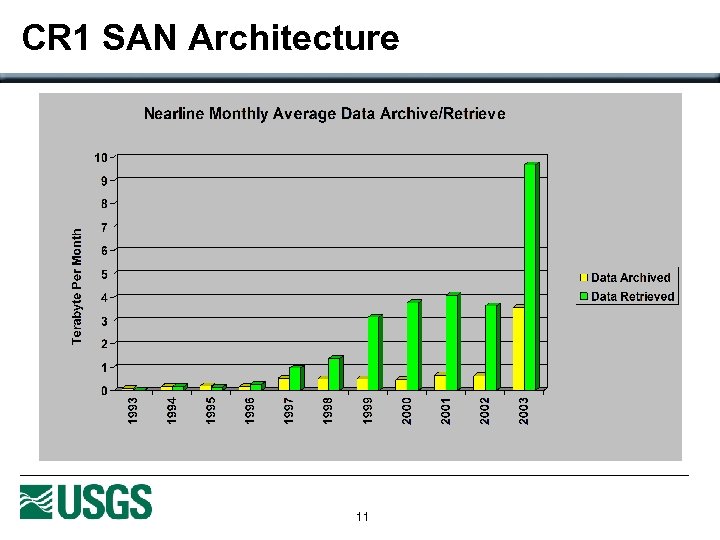

CR1 SAN Architecture

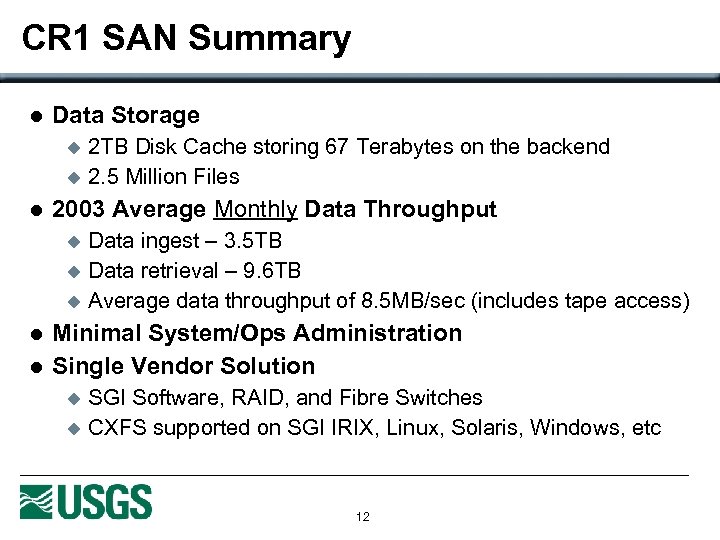

CR1 SAN Summary

- Data Storage
  - 2 TB Disk Cache storing 67 Terabytes on the backend
  - 2.5 Million Files
- 2003 Average Monthly Data Throughput
  - Data ingest – 3.5 TB
  - Data retrieval – 9.6 TB
  - Average data throughput of 8.5 MB/sec (includes tape access)
- Minimal System/Ops Administration
- Single Vendor Solution
  - SGI Software, RAID, and Fibre Switches
  - CXFS supported on SGI IRIX, Linux, Solaris, Windows, etc.
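The monthly volumes above can be turned into an average rate for comparison. The Python sketch below assumes decimal units and a 30-day month (neither is stated on the slide) and also computes how small the disk cache is relative to the back end.

```python
"""Back-of-the-envelope figures for the CR1 SAN summary.

Assumptions (not from the slides): decimal units (1 TB = 10**12 bytes,
1 MB = 10**6 bytes) and a 30-day month.
"""

TB = 10**12
MB = 10**6
SECONDS_PER_MONTH = 30 * 24 * 3600  # 30-day month


def monthly_tb_to_mb_s(volume_tb: float) -> float:
    """Average MB/s implied by moving volume_tb terabytes in one 30-day month."""
    return volume_tb * TB / SECONDS_PER_MONTH / MB


if __name__ == "__main__":
    ingest_tb, retrieval_tb = 3.5, 9.6  # 2003 monthly averages from the slide
    print(f"ingest + retrieval: {monthly_tb_to_mb_s(ingest_tb + retrieval_tb):.1f} MB/s")
    # 2 TB of disk cache in front of 67 TB on the back end.
    print(f"cache / back end: {2 / 67:.1%}")
```

This prints about 5.1 MB/s for ingest plus retrieval and a cache-to-back-end ratio of about 3.0%. The slide's 8.5 MB/s figure explicitly includes tape access, which these two monthly totals presumably do not capture, so the numbers are not expected to agree.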

Landsat SAN

April 19, 2004

Brian Sauer, SAIC Contractor (bsauer@usgs.gov)

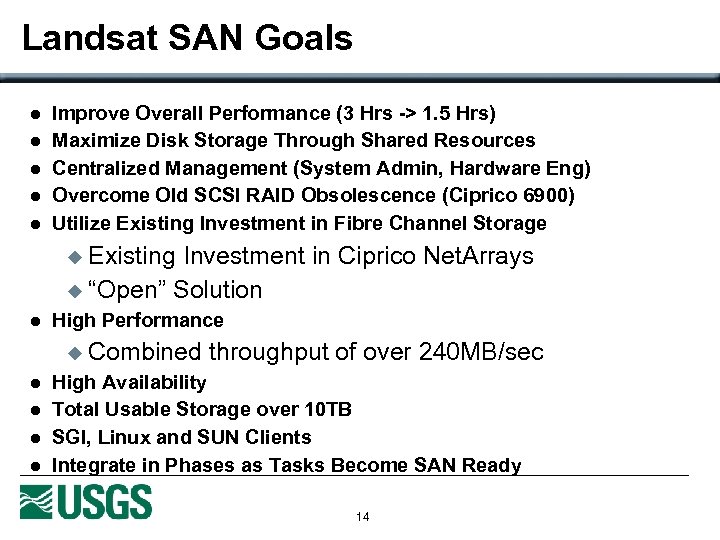

Landsat SAN Goals

- Improve Overall Performance (3 Hrs -> 1.5 Hrs)
- Maximize Disk Storage Through Shared Resources
- Centralized Management (System Admin, Hardware Eng)
- Overcome Old SCSI RAID Obsolescence (Ciprico 6900)
- Utilize Existing Investment in Fibre Channel Storage
  - Existing Investment in Ciprico NetArrays
  - "Open" Solution
- High Performance
  - Combined throughput of over 240 MB/sec
- High Availability
- Total Usable Storage over 10 TB
- SGI, Linux and SUN Clients
- Integrate in Phases as Tasks Become SAN Ready

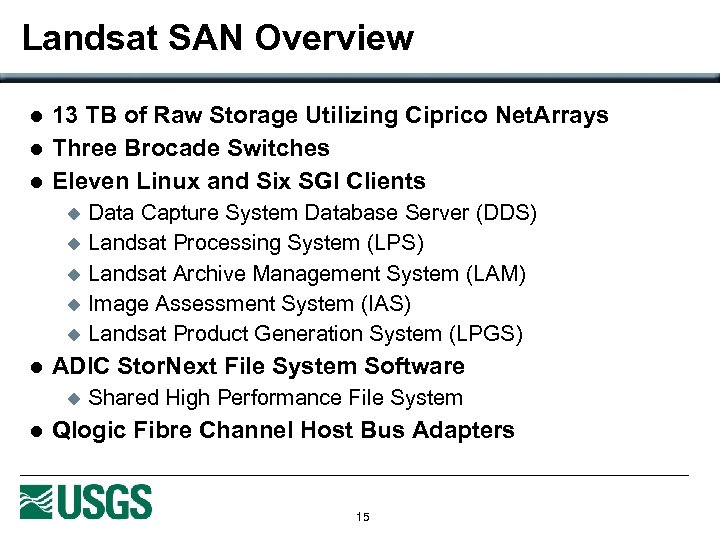

Landsat SAN Overview

- 13 TB of Raw Storage Utilizing Ciprico NetArrays
- Three Brocade Switches
- Eleven Linux and Six SGI Clients
  - Data Capture System Database Server (DDS)
  - Landsat Processing System (LPS)
  - Landsat Archive Management System (LAM)
  - Image Assessment System (IAS)
  - Landsat Product Generation System (LPGS)
- ADIC StorNext File System Software
  - Shared High Performance File System
- QLogic Fibre Channel Host Bus Adapters

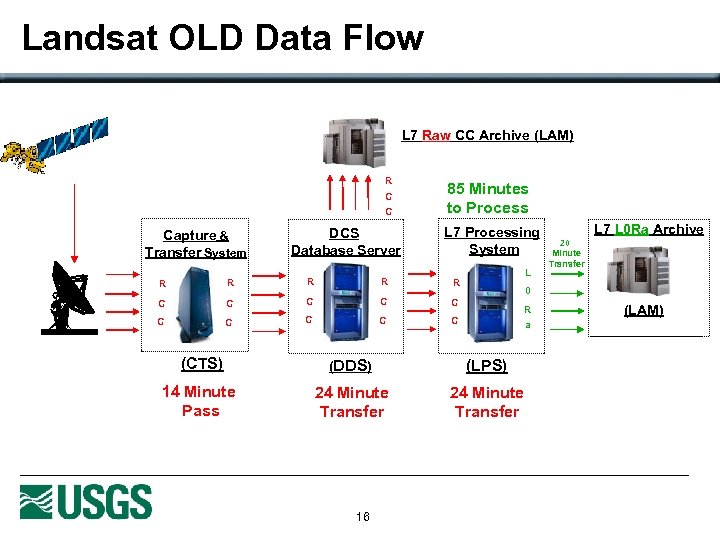

Landsat Old Data Flow

Diagram components: Capture & Transfer System (CTS), DCS Database Server (DDS), L7 Processing System (LPS), L7 Raw Archive (LAM), and L7 L0Ra Archive (LAM). Timings shown: 14-minute pass, 24-minute transfer, 85 minutes to process, 20-minute transfer.

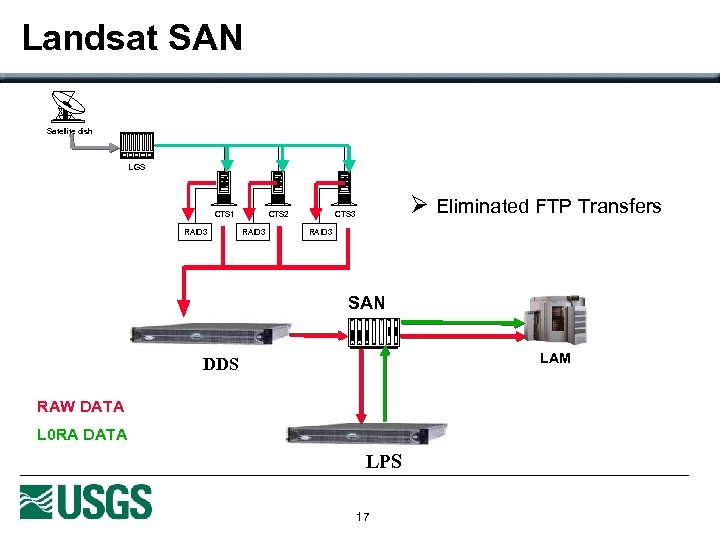

Landsat SAN

Diagram components: satellite dish, LGS, CTS 1, CTS 2, and CTS 3 (each labeled RAID 3), and the SAN shared by LAM, DDS, and LPS, holding raw data and L0Ra data.

- Eliminated FTP Transfers
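The old data flow slide lists four timed legs: a 14-minute pass, a 24-minute transfer, 85 minutes of processing, and a 20-minute transfer. The Python sketch below totals the end-to-end time before and after removing the FTP legs. It rests on assumptions the slides do not state: that the legs ran strictly one after another, and that the SAN removes only the two transfer legs while capture and processing times stay the same.

```python
"""End-to-end time for the Landsat 7 flow, before and after the SAN.

Assumption (not from the slides): the stages run strictly in sequence, and the
SAN removes the two FTP transfer legs but leaves capture and processing
times unchanged.
"""

from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    minutes: int
    is_ftp_transfer: bool = False


# Durations taken from the old data flow diagram.
OLD_FLOW = [
    Stage("14-minute pass", 14),
    Stage("24-minute transfer", 24, is_ftp_transfer=True),
    Stage("LPS processing", 85),
    Stage("20-minute transfer", 20, is_ftp_transfer=True),
]


def total_minutes(stages: list[Stage], skip_ftp: bool = False) -> int:
    """Sum stage durations, optionally dropping the FTP transfer legs."""
    return sum(s.minutes for s in stages if not (skip_ftp and s.is_ftp_transfer))


if __name__ == "__main__":
    before = total_minutes(OLD_FLOW)
    after = total_minutes(OLD_FLOW, skip_ftp=True)
    print(f"before SAN: {before} min, without FTP legs: {after} min")
```

Under this simple model the two transfers account for 44 of the 143 minutes, leaving 99 minutes.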

Landsat SAN Summary

- Advantages
  - Able to share data in a high performance environment to reduce the amount of storage necessary
  - Increase in overall performance of the Landsat Ground System
  - Open Solution
    - Able to utilize existing equipment
    - Currently testing with other vendors
  - Disk availability for projects during off-peak times, e.g., IAS
- Disadvantages / Challenges
  - Challenge to integrate an open solution
    - CIPRICO RAID controller failures
    - Not good for real-time I/O
  - Challenge to integrate into multiple tasks
    - Own agenda and schedule
    - Individual requirements
    - Difficult to guarantee I/O

LP DAAC SAN Forum

April 19, 2004

Douglas Jaton, SAIC Contractor (djaton@usgs.gov)

LP DAAC Data Pool – Phase I SAN Goals

Phase I – “Data Pool” Implementation in early FY 03

- Access/Distribution Method (ftp site):
  - Support increased electronic distribution
  - Reduce need to pull data from archive silos
  - Reduce need for order submissions (and media/shipping costs)
  - Give science and applications users timely, direct access to data, including machine access
  - Allow users to tailor their data views to more quickly locate the data they need by providing …

“The Data Pool SAN infrastructure effectively acts as a subset archive of the full ECS archive”

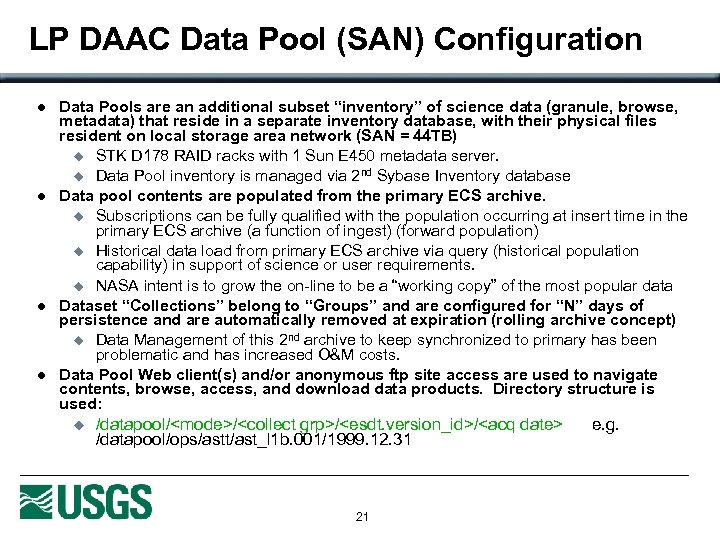

LP DAAC Data Pool (SAN) Configuration

- Data Pools are an additional subset “inventory” of science data (granule, browse, metadata) that reside in a separate inventory database, with their physical files resident on a local storage area network (SAN = 44 TB)
  - STK D178 RAID racks with 1 Sun E450 metadata server
  - Data Pool inventory is managed via a 2nd Sybase inventory database
- Data Pool contents are populated from the primary ECS archive
  - Subscriptions can be fully qualified, with population occurring at insert time in the primary ECS archive (a function of ingest) (forward population)
  - Historical data load from the primary ECS archive via query (historical population capability) in support of science or user requirements
  - NASA intent is to grow the online holdings into a “working copy” of the most popular data
- Dataset “Collections” belong to “Groups” and are configured for “N” days of persistence; they are automatically removed at expiration (rolling archive concept)
  - Data management of this 2nd archive to keep it synchronized with the primary has been problematic and has increased O&M costs
- Data Pool Web client(s) and/or anonymous ftp site access are used to navigate contents, browse, access, and download data products
- Directory structure used:
  - `/datapool/<mode>/<collect grp>/<esdt.version_id>/<acq date>`
  - e.g., `/datapool/ops/astt/ast_l1b.001/1999.12.31`
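The directory convention above is regular enough to generate and parse mechanically. The Python sketch below is hypothetical: the `PoolPath` fields and both function names are illustrative, and only the path layout and the ASTER L1B example come from the slide.

```python
"""Build and parse LP DAAC Data Pool paths of the form
/datapool/<mode>/<collect grp>/<esdt.version_id>/<acq date>.

The field names and the NamedTuple are illustrative; only the layout and the
example path come from the slide.
"""

from datetime import date
from typing import NamedTuple


class PoolPath(NamedTuple):
    mode: str              # e.g. "ops"
    collection_group: str  # e.g. "astt"
    esdt: str              # e.g. "ast_l1b"
    version_id: str        # e.g. "001"
    acq_date: date         # acquisition date


def build_path(p: PoolPath) -> str:
    """Format a PoolPath; acquisition dates use the YYYY.MM.DD form."""
    return "/".join([
        "", "datapool", p.mode, p.collection_group,
        f"{p.esdt}.{p.version_id}", p.acq_date.strftime("%Y.%m.%d"),
    ])


def parse_path(path: str) -> PoolPath:
    """Inverse of build_path; raises ValueError on a malformed path."""
    parts = path.strip("/").split("/")
    if len(parts) != 5 or parts[0] != "datapool":
        raise ValueError(f"not a Data Pool path: {path!r}")
    _, mode, group, esdt_version, acq = parts
    esdt, version_id = esdt_version.rsplit(".", 1)
    year, month, day = (int(x) for x in acq.split("."))
    return PoolPath(mode, group, esdt, version_id, date(year, month, day))


if __name__ == "__main__":
    example = "/datapool/ops/astt/ast_l1b.001/1999.12.31"
    parsed = parse_path(example)
    print(parsed)
    print(build_path(parsed) == example)  # round trip
```

Splitting the ESDT and version on the last dot keeps short names that contain underscores (such as `ast_l1b`) intact.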

LP DAAC Data Pool Contents & Access

Science Data:
- ASTER L1B Group (TERRA)
  - ASTER collection over U.S. States and Territories (no billing!)
- MODIS Group (TERRA & AQUA)
  - 8-day rolling archive of daily data for MODIS
  - 12 months of data for higher level products
    - Most 8-day, 16-day, and 96-day products

Access Methods:
- Anonymous FTP Site
- Web Client interface(s) to navigate & browse data holdings via Sybase inventory database
- Public Access: http://lpdaac.usgs.gov/datapool.asp
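The rolling-archive behaviour (collections kept for N days and then removed automatically, e.g. the 8-day window for daily MODIS data) can be sketched as a simple expiry check. This is an assumption-laden illustration: keying expiry on each granule's insert date, and the granule IDs used, are hypothetical, since the slides do not say how the Data Pool tracks age.

```python
"""Rolling-archive expiry check for Data Pool granules.

A sketch only: the granule IDs and keying expiry on insert date are
assumptions; the slides say only that collections are configured for
N days of persistence and removed automatically at expiration.
"""

from datetime import date, timedelta


def is_expired(inserted: date, persistence_days: int, today: date) -> bool:
    """True once a granule has been held for persistence_days full days."""
    return today >= inserted + timedelta(days=persistence_days)


def expired_granules(inserted_on: dict[str, date], persistence_days: int, today: date) -> list[str]:
    """Granule IDs due for removal, oldest first."""
    due = [g for g, d in inserted_on.items() if is_expired(d, persistence_days, today)]
    return sorted(due, key=lambda g: inserted_on[g])


if __name__ == "__main__":
    # Hypothetical daily granules inserted on consecutive days starting April 1, 2004.
    holdings = {f"granule-{i}": date(2004, 4, 1) + timedelta(days=i) for i in range(10)}
    # With an 8-day window, on April 11 the granules inserted April 1-3 have expired.
    print(expired_granules(holdings, persistence_days=8, today=date(2004, 4, 11)))
```

Run as a script, this prints `['granule-0', 'granule-1', 'granule-2']`.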

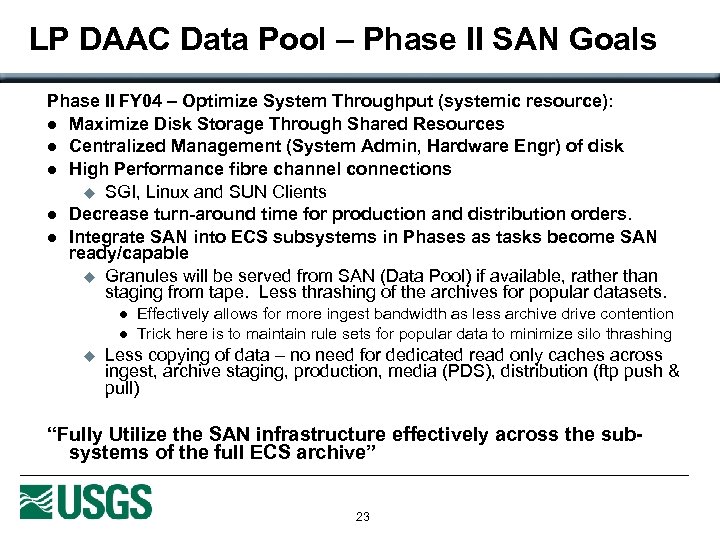

LP DAAC Data Pool – Phase II SAN Goals

Phase II FY 04 – Optimize System Throughput (systemic resource):
- Maximize Disk Storage Through Shared Resources
- Centralized Management (System Admin, Hardware Engr) of disk
- High Performance fibre channel connections
  - SGI, Linux and SUN Clients
- Decrease turnaround time for production and distribution orders
- Integrate SAN into ECS subsystems in phases as tasks become SAN ready/capable
  - Granules will be served from the SAN (Data Pool) if available, rather than staged from tape: less thrashing of the archives for popular datasets
    - Effectively allows for more ingest bandwidth, as there is less archive drive contention
    - The trick here is to maintain rule sets for popular data to minimize silo thrashing
  - Less copying of data – no need for dedicated read-only caches across ingest, archive staging, production, media (PDS), and distribution (ftp push & pull)

“Fully Utilize the SAN infrastructure effectively across the subsystems of the full ECS archive”
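The Phase II idea of serving granules from the SAN-resident Data Pool when available, and staging from tape otherwise, can be sketched as a lookup with a fallback. The class, its methods, and the "promote after N requests" rule are hypothetical stand-ins for the popular-data rule sets the slide mentions; they are not ECS interfaces.

```python
"""Serve a granule from the SAN-resident Data Pool when present, otherwise stage it from tape.

Hypothetical sketch: the class and method names, the in-memory set standing in
for the Data Pool inventory, and the popularity threshold are illustrative
assumptions, not ECS interfaces.
"""

from collections import Counter


class GranuleServer:
    def __init__(self, pool: set[str], promote_after: int = 3) -> None:
        self.pool = pool                    # granule IDs already on the SAN
        self.requests = Counter()           # request counts per granule
        self.promote_after = promote_after  # hypothetical "popular data" rule
        self.tape_stages = 0                # how often the silo had to be touched

    def fetch(self, granule_id: str) -> str:
        """Return where the granule was served from: 'pool' or 'tape'."""
        self.requests[granule_id] += 1
        if granule_id in self.pool:
            return "pool"
        self.tape_stages += 1
        # Rule set for popular data: keep frequently requested granules on disk
        # so later requests avoid staging from tape.
        if self.requests[granule_id] >= self.promote_after:
            self.pool.add(granule_id)
        return "tape"


if __name__ == "__main__":
    server = GranuleServer(pool={"A"}, promote_after=2)
    print([server.fetch(g) for g in ["A", "B", "B", "B"]], server.tape_stages)
```

With `promote_after=2`, requests for `A, B, B, B` against a pool holding only `A` are served from `pool, tape, tape, pool`: the second request for `B` triggers promotion, so only two requests touch tape.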

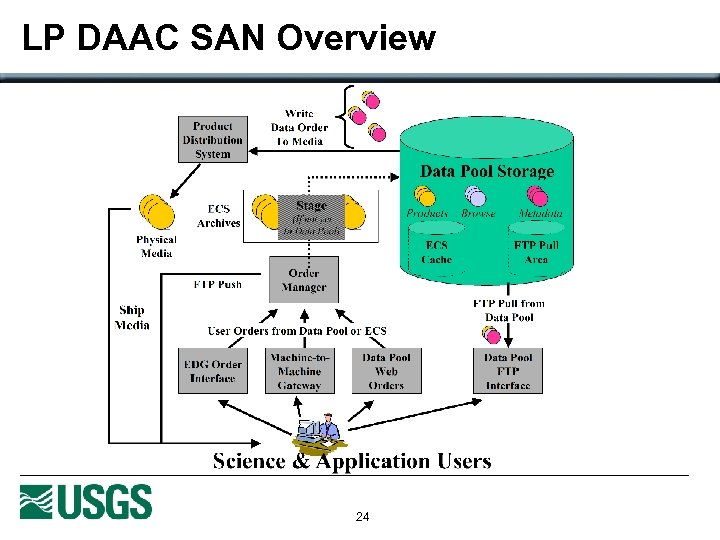

LP DAAC SAN Overview

SAN Reality Check

April 19, 2004

Brian Sauer, SAIC Contractor (bsauer@usgs.gov)

EDC SAN Experience

- Technology Infusion
  - TSSC understands this new technology.
  - Bring it in at the right level and at the right time to satisfy USGS programmatic requirements.
  - SAN technology is not a one-size-fits-all solution set.
  - Need to balance complexity vs. benefits.
- Project Requirements Differ
  - Size of SAN (Storage, Number of Clients, etc.)
  - Open System Versus Single Vendor
- Experiences Gained
  - Provides high performance shared storage access
  - Provides better manageability and utilization
  - Provides flexibility in reallocating resources
  - Requires trained Storage Engineers
  - Complex architecture, especially as the number of nodes increases

EDC SAN Reality Check

- SAN Issues
  - Vendors typically oversell SAN architecture
    - Infrastructure costs
      - Hardware – Switches, HBAs, Fibre Infrastructure
      - Software
      - Maintenance
        - Hardware/Software maintenance
        - Labor
        - Disk maintenance higher than tape
          - Power & cooling of disk vs. tape
  - Complex Architecture
    - Requires additional/stronger System Engineering
    - Requires highly skilled System Administration
  - Lifecycle is significantly shorter with disk vs. tape.

EDC SAN Reality Check

- SAN Issues
  - Difficult to share resources among projects in an enterprise environment
    - Ability to fund large shared infrastructure has historically been problematic for EDC
    - Ability to allocate and guarantee performance to projects (storage, bandwidth, security, peak vs. sustained)
    - Scheduling among multiple projects would be challenging
  - Not all projects require a SAN
    - SAN will not replace the Tape Archive(s) anytime soon
    - Direct attached storage may be sufficient for many projects
