dbd1ce09f8ca08a5d7d47ecbc0fd1e28.ppt

- Number of slides: 35

A speech about Boosting
Presenter: Roberto Valenti

The Paper*
*R. Schapire. The Boosting Approach to Machine Learning: An Overview, 2001

I want YOU… …TO UNDERSTAND

Overview
• Introduction
• Adaboost
  – How does it work?
  – Why does it work?
  – Demo
  – Extensions
  – Performance & Applications
• Summary & Conclusions
• Questions

Introduction to Boosting Let’s start

Introduction
• An example of Machine Learning: spam classifier
• Highly accurate rule: difficult to find
• Inaccurate rule: “BUY NOW”
• Introducing Boosting: “An effective method of producing an accurate prediction rule from inaccurate rules”

Introduction
• History of boosting:
  – 1989: Schapire
    • First provable polynomial-time boosting algorithm
  – 1990: Freund
    • Much more efficient, but with practical drawbacks
  – 1995: Freund & Schapire
    • Adaboost: focus of this presentation
  – …

Introduction
• The Boosting Approach
  – Lots of weak classifiers
  – One strong classifier
• Boosting key points:
  – Give importance to misclassified data
  – Find a way to combine weak classifiers into a general rule

Adaboost How does it work?

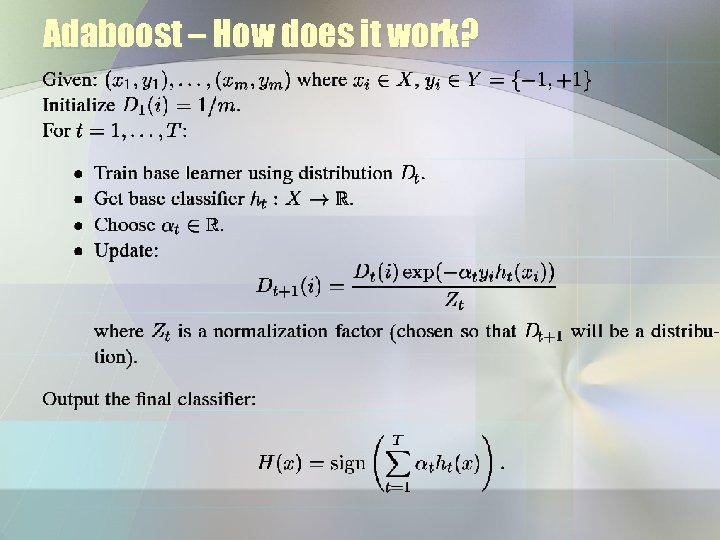

Adaboost – How does it work?

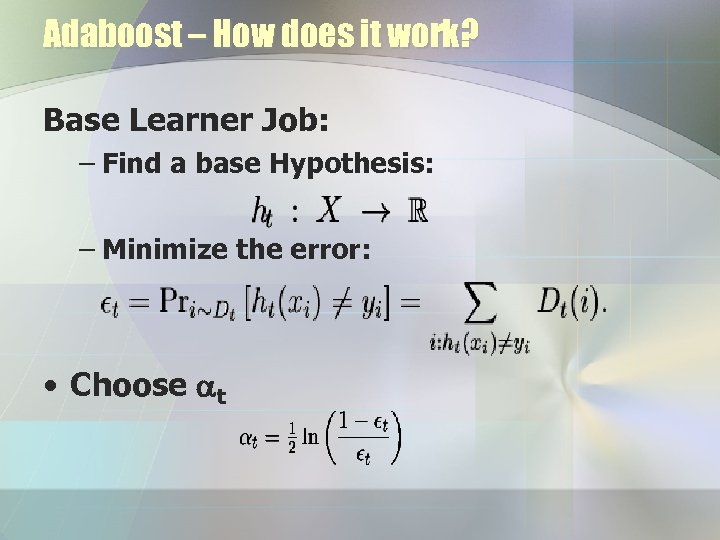

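Adaboost – How does it work?
Base Learner Job:
  – Find a base hypothesis: $h_t : X \to \{-1, +1\}$
  – Minimize the error: $\epsilon_t = \Pr_{i \sim D_t}[h_t(x_i) \neq y_i]$
    • Choose $\alpha_t = \frac{1}{2} \ln\left(\frac{1 - \epsilon_t}{\epsilon_t}\right)$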
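
The loop described on these slides can be sketched in a few lines of Python. This is a minimal illustration, not the presenter's demo code. It assumes labels in {-1, +1}, uses decision stumps as the base learner, and uses the standard weight α_t = ½ ln((1 − ε_t)/ε_t) from Schapire's overview. The helper names (`train_stump`, `stump_predict`, `adaboost`, `predict`) are made up for this example.

```python
import math

def train_stump(X, y, D):
    """Base learner (assumed here): the decision stump with the lowest D-weighted error.

    Returns (weighted_error, feature_index, threshold, sign).
    """
    best = None
    for j in range(len(X[0])):
        for thr in sorted({x[j] for x in X}):
            for sign in (1, -1):
                # The stump predicts `sign` when x[j] >= thr, and -sign otherwise.
                err = sum(d for x, yi, d in zip(X, y, D)
                          if (sign if x[j] >= thr else -sign) != yi)
                if best is None or err < best[0]:
                    best = (err, j, thr, sign)
    return best

def stump_predict(stump, x):
    _, j, thr, sign = stump
    return sign if x[j] >= thr else -sign

def adaboost(X, y, T):
    """Run T rounds of boosting; returns a list of (alpha_t, stump_t)."""
    m = len(X)
    D = [1.0 / m] * m                      # start from the uniform distribution
    ensemble = []
    for _ in range(T):
        stump = train_stump(X, y, D)
        eps = min(max(stump[0], 1e-10), 1 - 1e-10)   # clamp to avoid log(0)
        alpha = 0.5 * math.log((1 - eps) / eps)
        ensemble.append((alpha, stump))
        # Raise the weight of misclassified examples, lower the others, renormalize.
        D = [d * math.exp(-alpha * yi * stump_predict(stump, x))
             for x, yi, d in zip(X, y, D)]
        Z = sum(D)
        D = [d / Z for d in D]
    return ensemble

def predict(ensemble, x):
    """Final hypothesis H(x): sign of the alpha-weighted vote."""
    score = sum(alpha * stump_predict(s, x) for alpha, s in ensemble)
    return 1 if score >= 0 else -1
```

On the toy set `X = [[0], [1], [2], [3], [4], [5]]`, `y = [1, 1, -1, -1, 1, 1]`, no single stump is error-free, but `adaboost(X, y, 3)` classifies all six points correctly.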

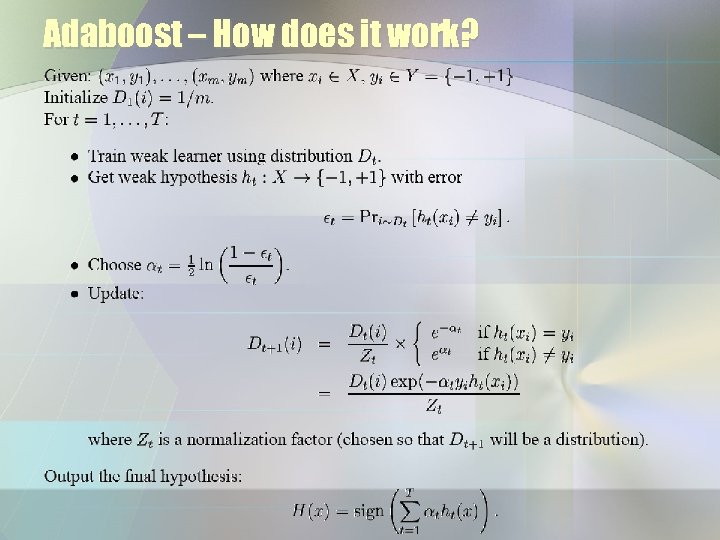

Adaboost – How does it work?

Adaboost Why does it work?

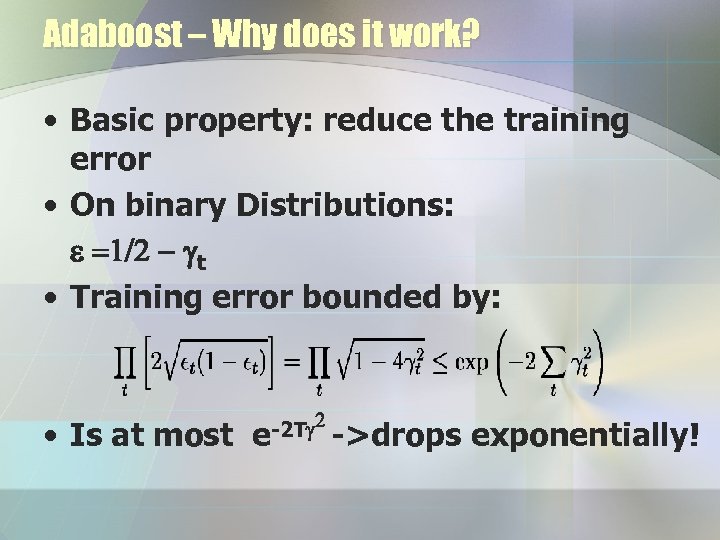

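Adaboost – Why does it work?
• Basic property: reduce the training error
• For binary problems, write the error as $\epsilon_t = 1/2 - \gamma_t$
• Training error bounded by: $\prod_t 2\sqrt{\epsilon_t (1 - \epsilon_t)} = \prod_t \sqrt{1 - 4\gamma_t^2} \le \exp\left(-2 \sum_t \gamma_t^2\right)$
• If $\gamma_t \ge \gamma$ for all $t$, this is at most $e^{-2T\gamma^2}$ -> drops exponentially!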
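
A quick numeric check can make the exponential drop concrete. The sketch below assumes the bound from Schapire's overview, where the training error is at most ∏_t √(1 − 4γ_t²), which in turn is at most exp(−2 Σ_t γ_t²). The function name `training_error_bounds` is hypothetical.

```python
import math

def training_error_bounds(gammas):
    """Return (product_bound, exponential_bound) for a list of edges gamma_t.

    product_bound     = prod_t sqrt(1 - 4 * gamma_t^2)
    exponential_bound = exp(-2 * sum_t gamma_t^2), which is never smaller.
    """
    product_bound = math.prod(math.sqrt(1 - 4 * g * g) for g in gammas)
    exponential_bound = math.exp(-2 * sum(g * g for g in gammas))
    return product_bound, exponential_bound

# Fifty rounds with an edge of 0.1 over random guessing in every round.
product_bound, exponential_bound = training_error_bounds([0.1] * 50)
print(product_bound, exponential_bound)
```

Doubling the number of rounds squares both bounds, which is the exponential decrease in T that the slide refers to.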

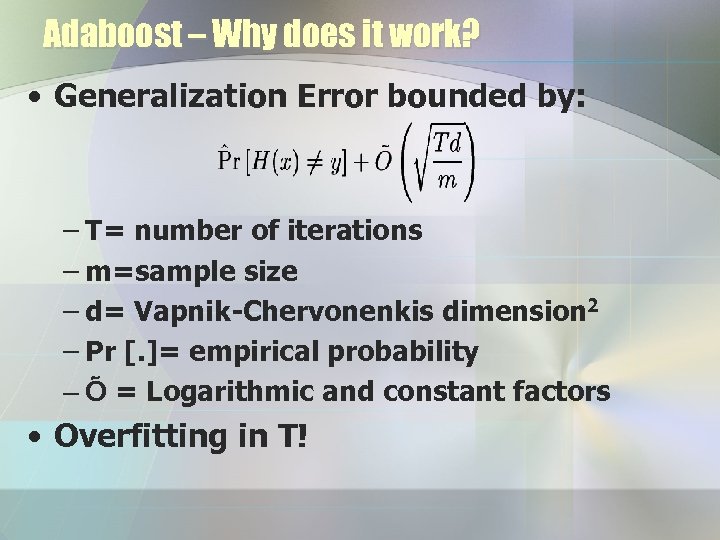

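Adaboost – Why does it work?
• Generalization error bounded by: $\hat{\Pr}[H(x) \neq y] + \tilde{O}\left(\sqrt{Td/m}\right)$
  – $T$ = number of iterations
  – $m$ = sample size
  – $d$ = Vapnik-Chervonenkis dimension of the base hypothesis space
  – $\hat{\Pr}[\cdot]$ = empirical probability
  – $\tilde{O}$ = hides logarithmic and constant factors
• The bound grows with T: overfitting in T!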

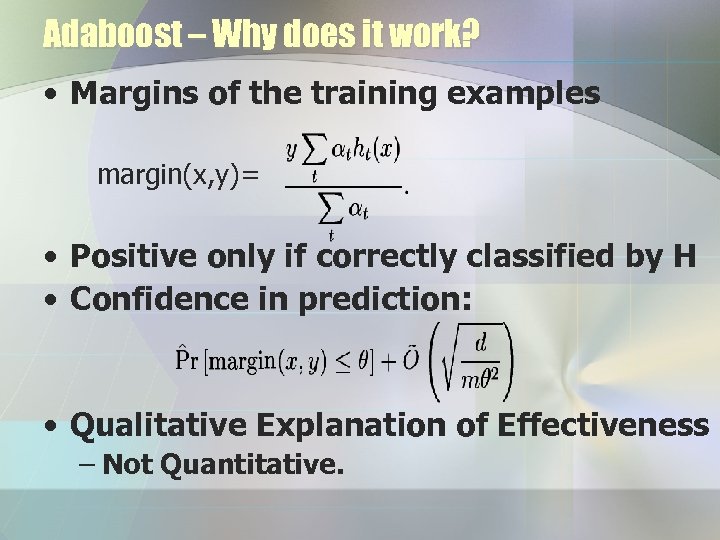

Adaboost – Why does it work?
• Margins of the training examples: $\mathrm{margin}(x, y) = \frac{y \sum_t \alpha_t h_t(x)}{\sum_t \alpha_t}$
• Positive only if correctly classified by H
• Confidence in prediction: the magnitude of the margin
• Qualitative explanation of effectiveness, not quantitative
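
As a small illustration of the margin, the sketch below uses the normalized definition from Schapire's overview, y Σ_t α_t h_t(x) / Σ_t α_t. It assumes non-negative weights α_t that are not all zero and base predictions in {-1, +1}. The function name `margin` and the sample numbers are made up.

```python
def margin(alphas, base_predictions, y):
    """Normalized margin of one example, a value in [-1, +1].

    alphas           -- weights alpha_t of the base classifiers
    base_predictions -- their outputs h_t(x) in {-1, +1} on this example
    y                -- the true label in {-1, +1}
    """
    vote = sum(a * h for a, h in zip(alphas, base_predictions))
    return y * vote / sum(alphas)

# Three base classifiers; the second one disagrees with the other two.
print(margin([0.5, 0.5, 1.0], [1, -1, 1], y=1))   # 0.5: correct, moderate confidence
print(margin([0.5, 0.5, 1.0], [1, -1, 1], y=-1))  # -0.5: misclassified
```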

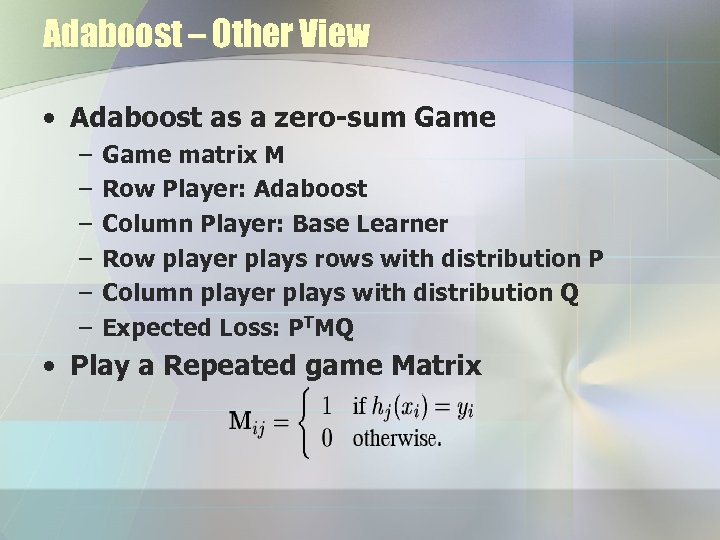

Adaboost – Other View
• Adaboost as a zero-sum game
  – Game matrix M
  – Row player: Adaboost
  – Column player: Base Learner
  – Row player plays rows with distribution P
  – Column player plays columns with distribution Q
  – Expected loss: $P^T M Q$
• Play a repeated matrix game
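
The expected loss PᵀMQ is a plain double sum, sketched below. The example matrix follows the convention in Schapire's overview, where rows are training examples, columns are base classifiers, and M[i][j] = 1 when classifier j is correct on example i. The numbers themselves are invented for illustration.

```python
def expected_loss(P, M, Q):
    """Expected loss P^T M Q when rows are drawn from P and columns from Q."""
    return sum(P[i] * M[i][j] * Q[j]
               for i in range(len(P))
               for j in range(len(Q)))

# Hypothetical game: 3 examples (rows) and 2 base classifiers (columns).
M = [[1, 0],
     [0, 1],
     [1, 1]]
P = [0.5, 0.5, 0.0]   # Adaboost's distribution over examples
Q = [1.0, 0.0]        # base learner always plays classifier 0
print(expected_loss(P, M, Q))  # 0.5
```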

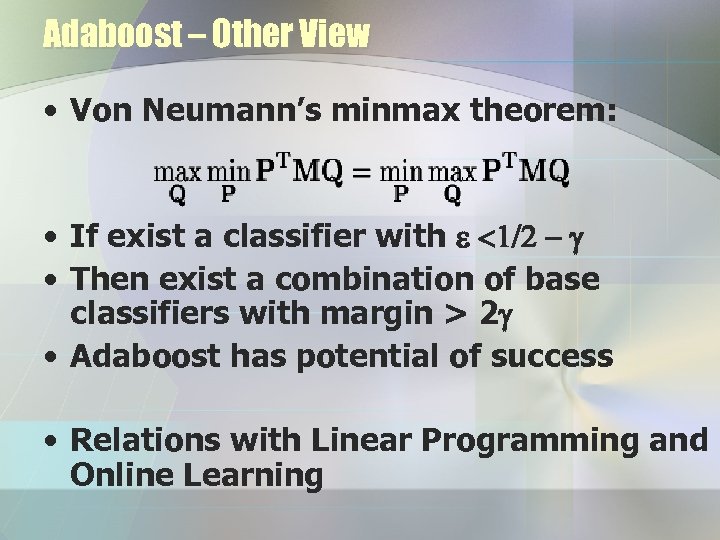

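Adaboost – Other View
• Von Neumann’s minmax theorem: $\min_P \max_Q P^T M Q = \max_Q \min_P P^T M Q$
• If, for any distribution over the examples, there exists a base classifier with $\epsilon < 1/2 - \gamma$
• Then there exists a combination of base classifiers with margin $> 2\gamma$
• Adaboost has the potential to succeed
• Relations with Linear Programming and Online Learning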

Adaboost Demo

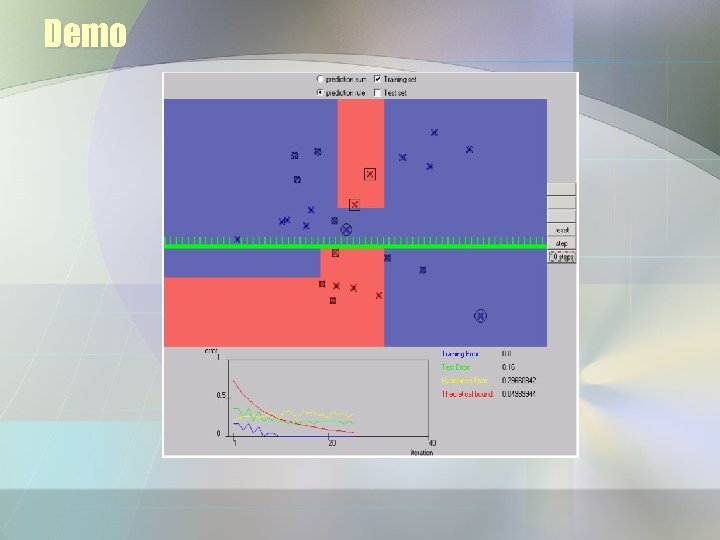

Demo

Adaboost Extensions

Adaboost - Extensions
• History of Boosting:
  – …
  – 1997: Freund & Schapire
    • Adaboost.M1
      – First multiclass generalization
      – Fails if the weak learner achieves less than 50% accuracy
    • Adaboost.M2
      – Creates a set of binary problems
      – For x, better $\ell_1$ or $\ell_2$?
  – 1999: Schapire & Singer
    • Adaboost.MH
      – For x, better $\ell_1$ or one of the others?
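
The "label ℓ or one of the others?" reduction behind Adaboost.MH can be pictured as a data transformation. The sketch below shows only that expansion step, not the full algorithm. It assumes each multiclass example (x, y) becomes one binary example per candidate label. The function name `expand_to_binary` and the sample data are hypothetical.

```python
def expand_to_binary(examples, labels):
    """Turn multiclass examples into binary ones, one per (example, label) pair.

    Each (x, y) yields ((x, l), +1) when l is the correct label and
    ((x, l), -1) for every other label l.
    """
    return [((x, l), 1 if y == l else -1)
            for x, y in examples
            for l in labels]

examples = [("cheap pills", "spam"), ("meeting at 10", "work")]
labels = ["spam", "work", "personal"]
for pair in expand_to_binary(examples, labels):
    print(pair)
```

A binary booster can then be run on the expanded set, at the cost of multiplying the number of training examples by the number of labels.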

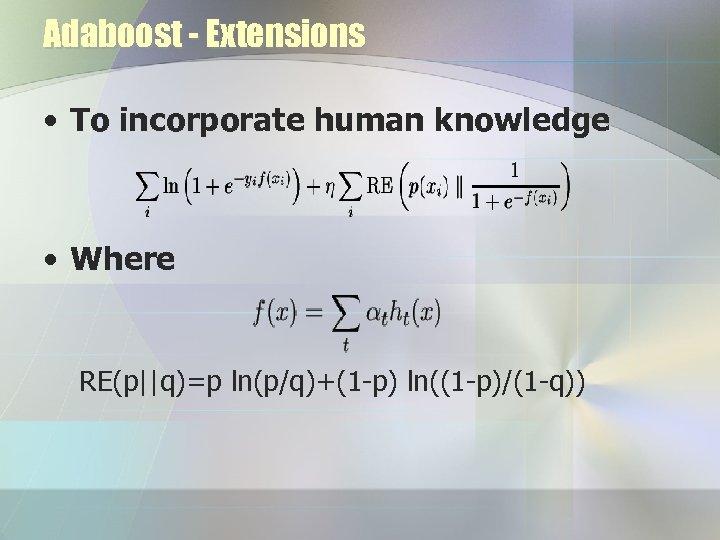

Adaboost - Extensions
  – 2001: Rochery, Schapire et al.
    • Incorporating human knowledge
    • Adaboost is data-driven
    • Human knowledge can compensate for a lack of data
    • Human expert:
      – Chooses a rule p mapping x to $p(x) \in [0, 1]$
      – Difficult!
      – Simple rules should work.

Adaboost - Extensions
• To incorporate human knowledge
• Where $\mathrm{RE}(p \,\|\, q) = p \ln(p/q) + (1 - p) \ln((1 - p)/(1 - q))$
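
The binary relative entropy RE(p || q) on this slide is easy to compute directly. The sketch below assumes 0 < q < 1 and takes 0 · ln 0 as 0, so that p = 0 and p = 1 are handled. The function name `relative_entropy` is an illustrative choice.

```python
import math

def relative_entropy(p, q):
    """RE(p || q) = p ln(p/q) + (1 - p) ln((1 - p)/(1 - q)), for 0 < q < 1."""
    total = 0.0
    if p > 0:
        total += p * math.log(p / q)
    if p < 1:
        total += (1 - p) * math.log((1 - p) / (1 - q))
    return total

print(relative_entropy(0.5, 0.5))  # 0.0: the model agrees with the expert's rule
print(relative_entropy(0.9, 0.5))  # positive: the model disagrees with the expert
```

The value is zero only when the model's probability q matches the expert's p, so it acts as a penalty for straying from the human-supplied rule.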

Adaboost Performance and Applications

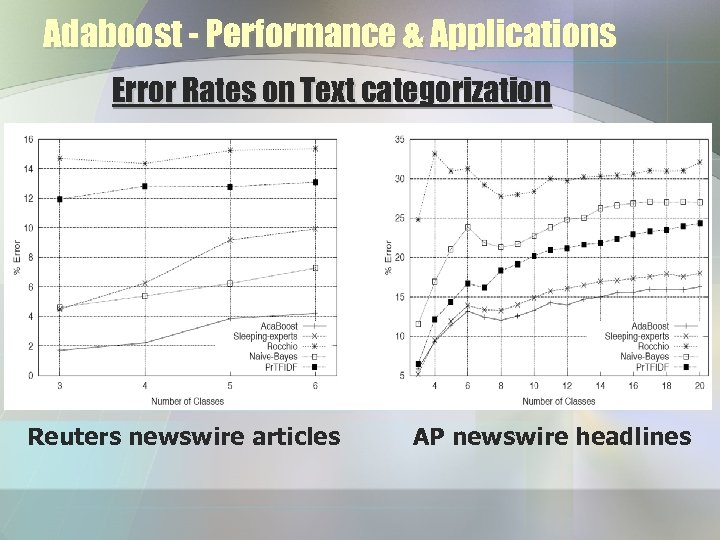

Adaboost - Performance & Applications
Error rates on text categorization. Panels: Reuters newswire articles; AP newswire headlines.

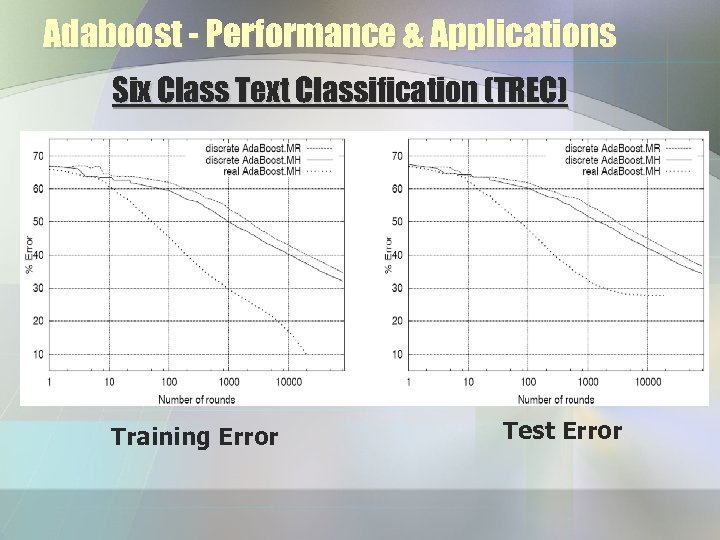

Adaboost - Performance & Applications
Six-class text classification (TREC). Panels: training error; test error.

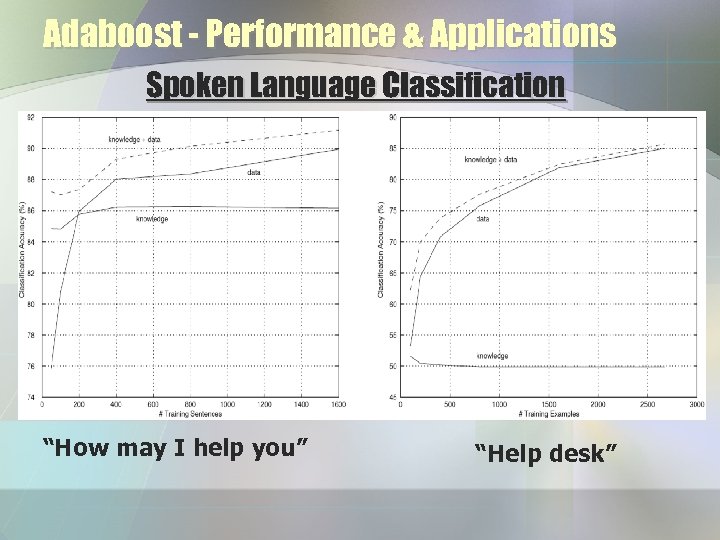

Adaboost - Performance & Applications
Spoken language classification. Panels: “How may I help you”; “Help desk”.

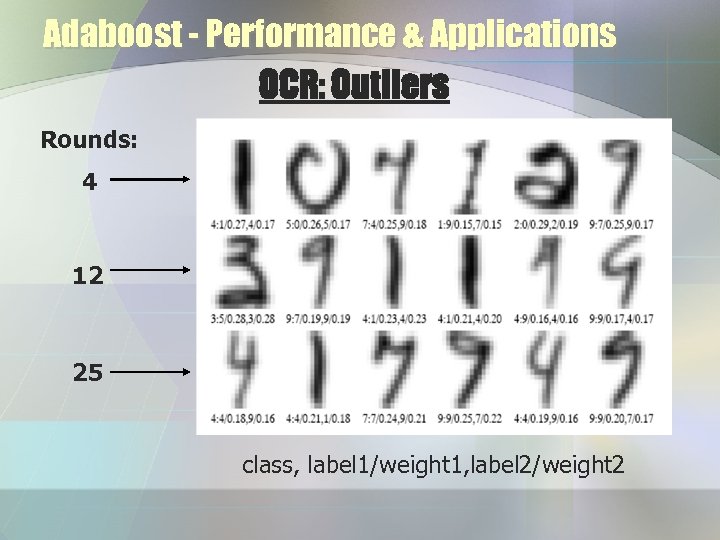

Adaboost - Performance & Applications
OCR: outliers after rounds 4, 12, and 25. Each example is annotated as: class, label 1/weight 1, label 2/weight 2.

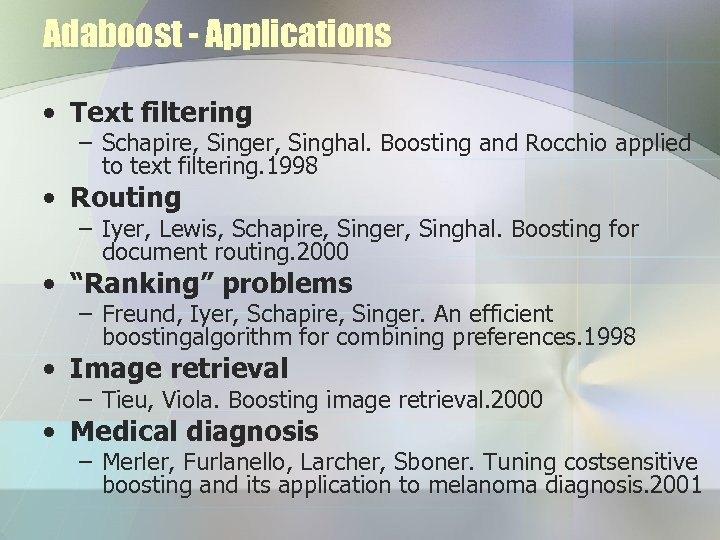

Adaboost - Applications
• Text filtering
  – Schapire, Singer, Singhal. Boosting and Rocchio applied to text filtering. 1998
• Routing
  – Iyer, Lewis, Schapire, Singer, Singhal. Boosting for document routing. 2000
• “Ranking” problems
  – Freund, Iyer, Schapire, Singer. An efficient boosting algorithm for combining preferences. 1998
• Image retrieval
  – Tieu, Viola. Boosting image retrieval. 2000
• Medical diagnosis
  – Merler, Furlanello, Larcher, Sboner. Tuning cost-sensitive boosting and its application to melanoma diagnosis. 2001

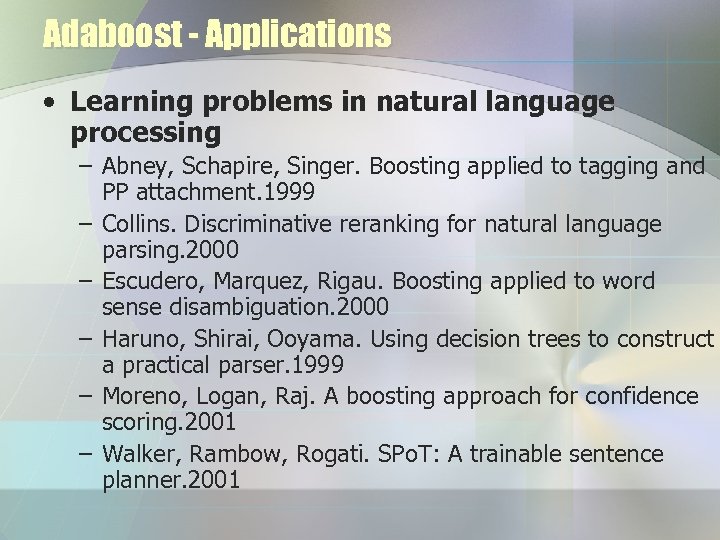

Adaboost - Applications
• Learning problems in natural language processing
  – Abney, Schapire, Singer. Boosting applied to tagging and PP attachment. 1999
  – Collins. Discriminative reranking for natural language parsing. 2000
  – Escudero, Marquez, Rigau. Boosting applied to word sense disambiguation. 2000
  – Haruno, Shirai, Ooyama. Using decision trees to construct a practical parser. 1999
  – Moreno, Logan, Raj. A boosting approach for confidence scoring. 2001
  – Walker, Rambow, Rogati. SPoT: A trainable sentence planner. 2001

Summary and Conclusions At last…

Summary
• Boosting takes a weak learner and converts it to a strong one
• Works by asymptotically minimizing the training error
• Effectively maximizes the margin of the combined hypothesis
• Adaboost is related to many other topics
• It works!

Conclusions
• Adaboost advantages:
  – Fast, simple and easy to program
  – No parameters to tune (except the number of rounds T)
• Performance dependency:
  – (Skurichina, 2001) Boosting is only useful for large sample sizes
  – Choice of weak classifier
  – Incorporation of classifier weights
  – Data distribution

Questions ? (don’t be mean)
