8abfc93bea92dff6ed1541c8692abb26.ppt

- Number of slides: 51

A Scalable Front-End Architecture for Fast Instruction Delivery

Paper by: Glenn Reinman, Todd Austin and Brad Calder

Presenter: Alexander Choong

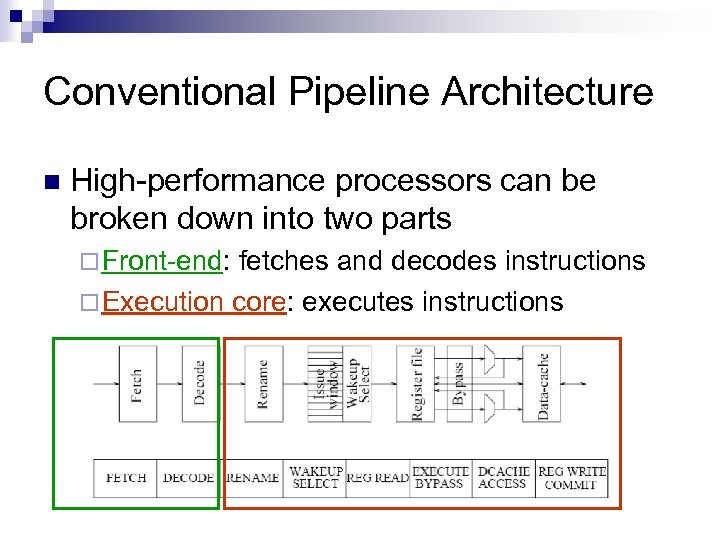

Conventional Pipeline Architecture
- High-performance processors can be broken down into two parts
  - Front-end: fetches and decodes instructions
  - Execution core: executes instructions

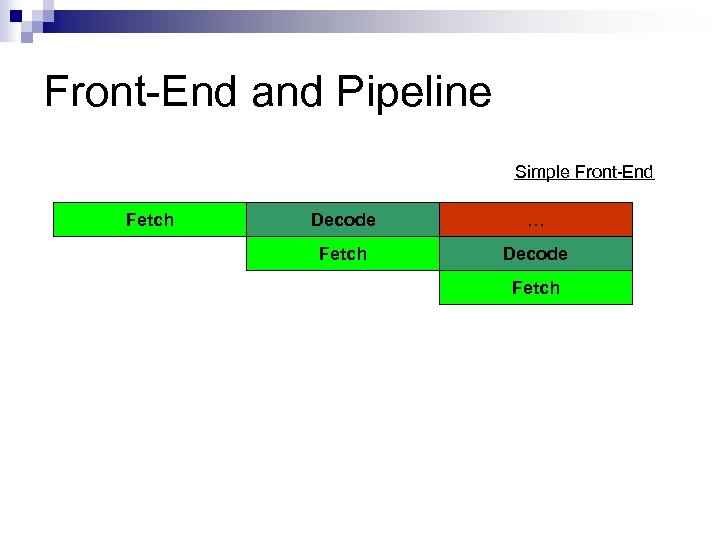

Front-End and Pipeline

[Diagram: Simple Front-End; pipeline stages labeled Fetch and Decode]

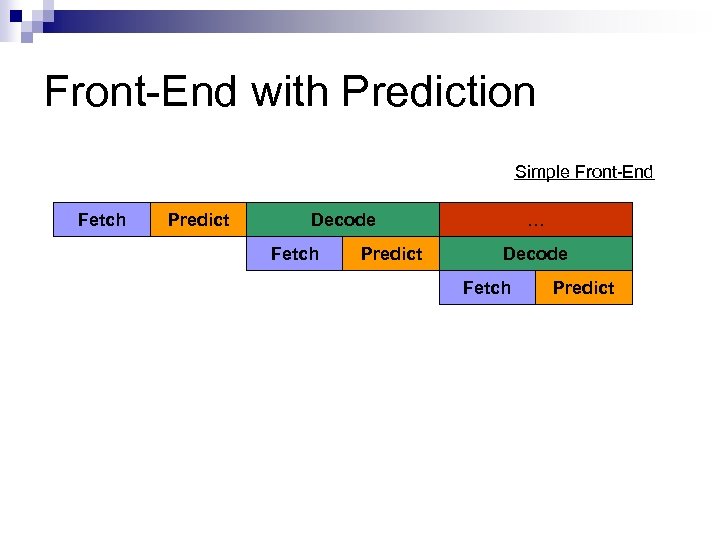

Front-End with Prediction

[Diagram: Simple Front-End; pipeline stages labeled Fetch, Predict and Decode]

Front-End Issues I
- Flynn’s bottleneck:
  - IPC is bounded by the number of instructions fetched per cycle
- Implies: as execution performance increases, the front-end must keep up to ensure overall performance

Front-End Issues II
- Two opposing forces
  - Designing a faster front-end
    - Increase I-cache size
  - Interconnect scaling problem
    - Wire performance does not scale with feature size
    - Decrease I-cache size

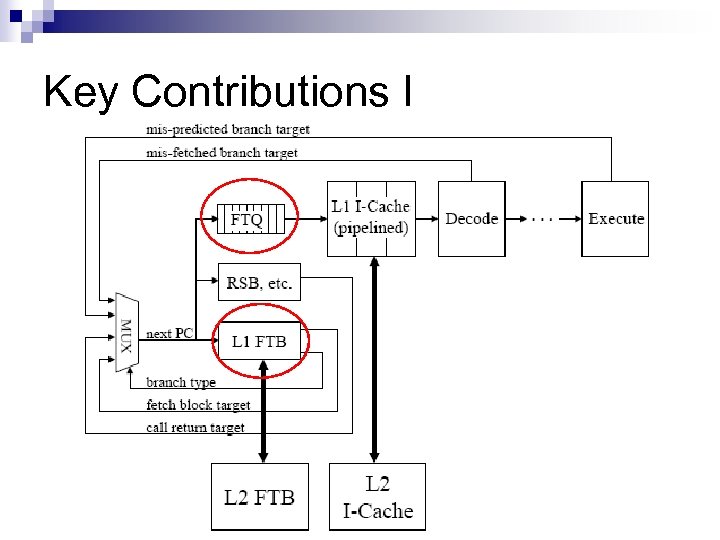

Key Contributions I

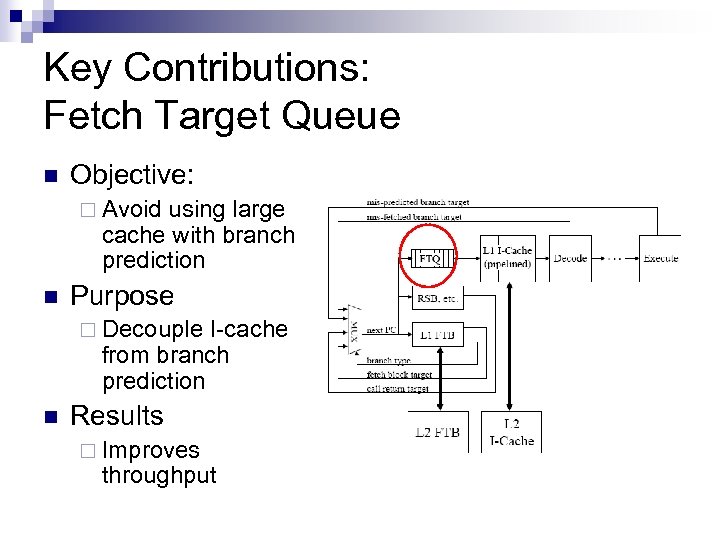

Key Contributions: Fetch Target Queue
- Objective
  - Avoid using a large cache with branch prediction
- Purpose
  - Decouple I-cache from branch prediction
- Results
  - Improves throughput

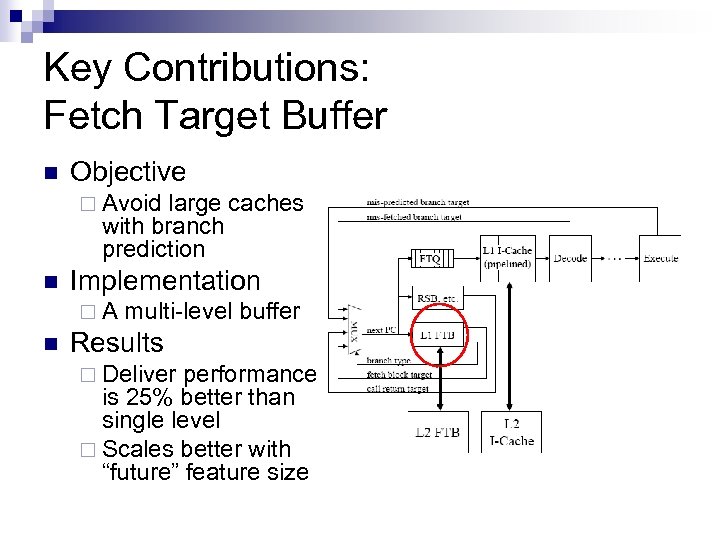

Key Contributions: Fetch Target Buffer
- Objective
  - Avoid large caches with branch prediction
- Implementation
  - A multi-level buffer
- Results
  - Delivers performance 25% better than single-level
  - Scales better with “future” feature sizes

Outline
- Scalable Front-End and Components
  - Fetch Target Queue
  - Fetch Target Buffer
- Experimental Methodology
- Results
- Analysis and Conclusion

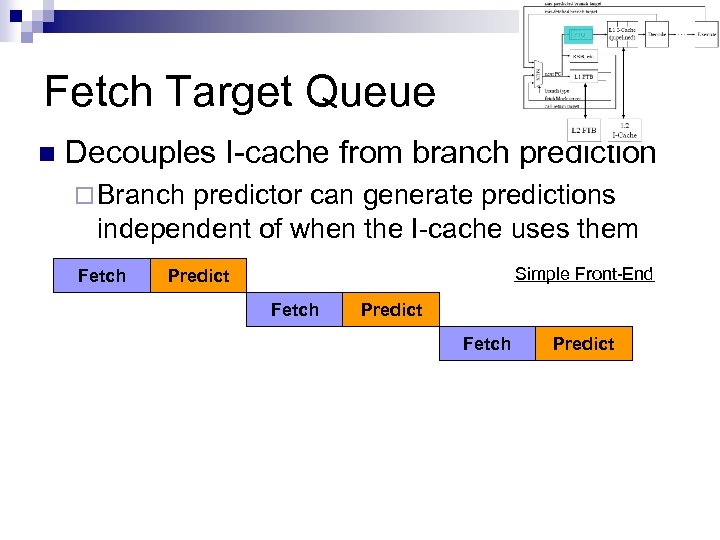

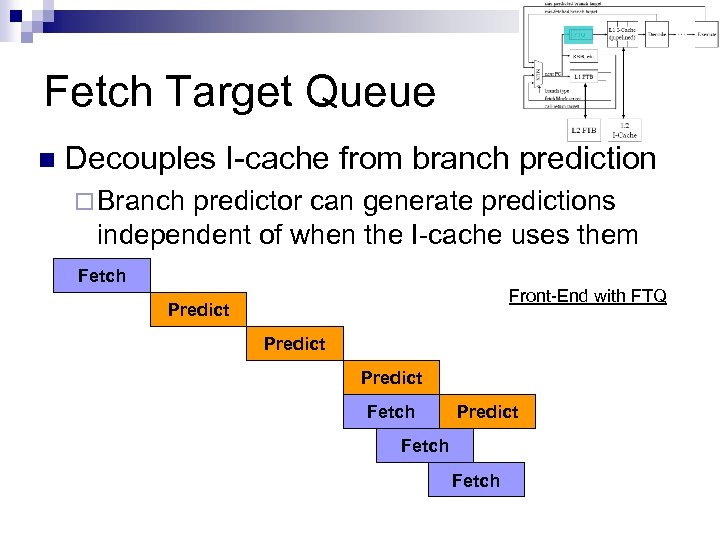

Fetch Target Queue
- Decouples I-cache from branch prediction
  - Branch predictor can generate predictions independently of when the I-cache uses them

[Diagram: Simple Front-End; stages labeled Fetch and Predict]

[Diagram: Front-End with FTQ; stages labeled Fetch and Predict]

Fetch Target Queue
- Fetch and predict can have different latencies
  - Allows the I-cache to be pipelined
- As long as they have the same throughput
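The decoupling described above can be sketched as a bounded FIFO between a predictor and an I-cache. This is a minimal illustration, not the paper's implementation: the class `FetchTargetQueue`, the function `simulate`, the default capacity of 4, and the one-prediction and one-fetch-per-cycle model are all assumptions made for the example.

```python
from collections import deque


class FetchTargetQueue:
    """Bounded FIFO between the branch predictor and the I-cache (illustrative)."""

    def __init__(self, capacity=4):  # capacity is an assumed value
        self.capacity = capacity
        self.entries = deque()

    def push(self, fetch_block_addr):
        """Predictor side: enqueue a predicted fetch block; refuse when full."""
        if len(self.entries) >= self.capacity:
            return False
        self.entries.append(fetch_block_addr)
        return True

    def pop(self):
        """I-cache side: dequeue the next block to fetch, or None when empty."""
        return self.entries.popleft() if self.entries else None


def simulate(predicted_blocks, icache_busy_cycles, capacity=4):
    """Step predictor and I-cache on one clock; return (fetch order, cycles)."""
    ftq = FetchTargetQueue(capacity)
    pending = deque(predicted_blocks)
    fetched = []
    cycle = 0
    while pending or ftq.entries:
        # Predictor: one prediction per cycle unless the FTQ is full.
        if pending and ftq.push(pending[0]):
            pending.popleft()
        # I-cache: one block per cycle unless it is busy (e.g. servicing a miss).
        if cycle not in icache_busy_cycles:
            block = ftq.pop()
            if block is not None:
                fetched.append(block)
        cycle += 1
    return fetched, cycle


if __name__ == "__main__":
    order, cycles = simulate([0x100, 0x140, 0x180], icache_busy_cycles={0, 1})
    print([hex(a) for a in order], cycles)
```

In this sketch the predictor keeps enqueuing blocks while the I-cache is busy in cycles 0 and 1, so predictions are already waiting when fetch resumes.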

Fetch Blocks
- FTQ stores fetch blocks
- Fetch block: sequence of instructions
  - Starting at a branch target
  - Ending at a strongly biased branch
- Instructions are fed directly into the pipeline

Outline
- Scalable Front-End and Components
  - Fetch Target Queue
  - Fetch Target Buffer
- Experimental Methodology
- Results
- Analysis and Conclusion

Fetch Target Buffer: Outline
- Review: Branch Target Buffer
- Fetch Blocks
- Functionality


Review: Branch Target Buffer I
- Previous work (Perleberg and Smith [2])
- Makes fetch independent of predict

[Diagram: Simple Front-End versus With Branch Target Buffer; stages labeled Fetch and Predict]

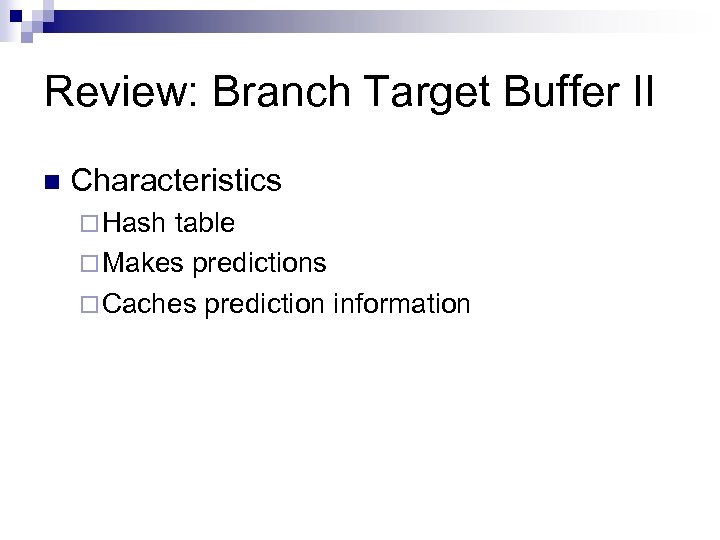

Review: Branch Target Buffer II
- Characteristics
  - Hash table
  - Makes predictions
  - Caches prediction information

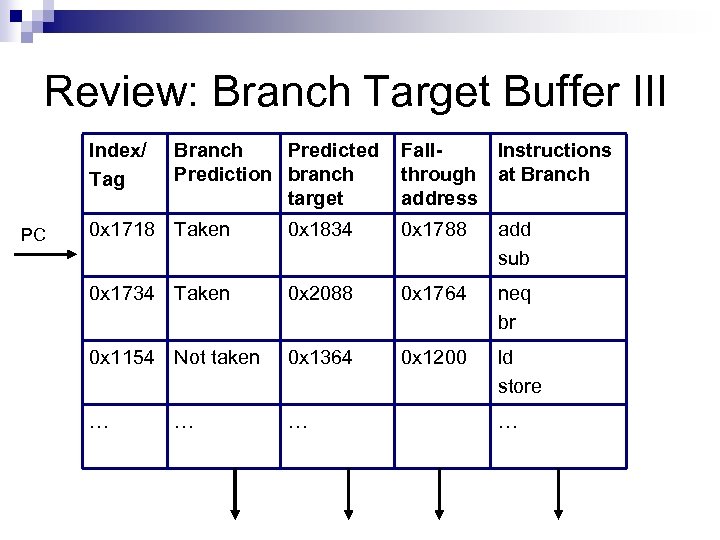

Review: Branch Target Buffer III

| Index/Tag (PC) | Branch prediction | Predicted branch target | Fall-through address | Instructions at branch |
|---|---|---|---|---|
| 0x1718 | Taken | 0x1834 | 0x1788 | add, sub |
| 0x1734 | Taken | 0x2088 | 0x1764 | neq, br |
| 0x1154 | Not taken | 0x1364 | 0x1200 | ld, store |
| … | … | | | |
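The table above can be read as a hash table keyed by PC. The following is a minimal sketch that uses the three rows from the slide; the names `BTBEntry`, `btb` and `next_fetch_pc`, and the choice to fetch sequentially (PC + 4) on a BTB miss, are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class BTBEntry:
    taken: bool        # branch prediction
    target: int        # predicted branch target
    fall_through: int  # fall-through address


# Rows copied from the slide's example table, indexed by PC.
btb = {
    0x1718: BTBEntry(taken=True, target=0x1834, fall_through=0x1788),
    0x1734: BTBEntry(taken=True, target=0x2088, fall_through=0x1764),
    0x1154: BTBEntry(taken=False, target=0x1364, fall_through=0x1200),
}


def next_fetch_pc(pc, default_step=4):
    """Return the next PC to fetch from, based on the cached prediction."""
    entry = btb.get(pc)
    if entry is None:
        # Assumption: with no cached information, keep fetching sequentially.
        return pc + default_step
    return entry.target if entry.taken else entry.fall_through


if __name__ == "__main__":
    for pc in (0x1718, 0x1154, 0x2000):
        print(hex(pc), "->", hex(next_fetch_pc(pc)))
```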

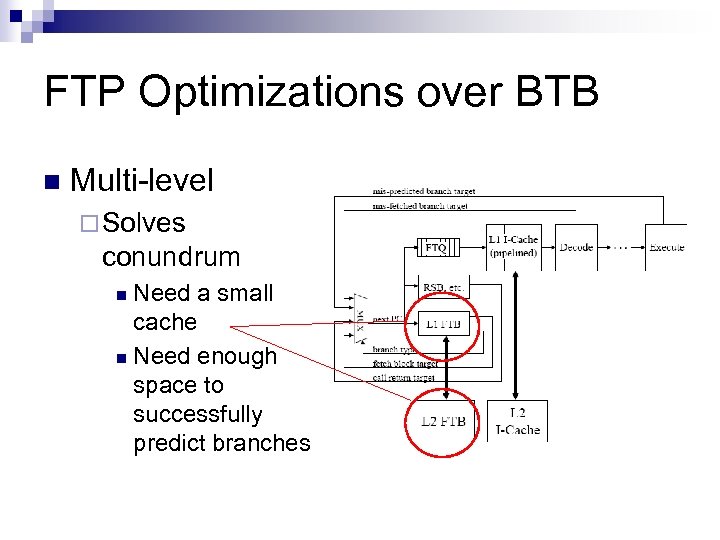

FTB Optimizations over BTB
- Multi-level
  - Solves conundrum
    - Need a small cache
    - Need enough space to successfully predict branches

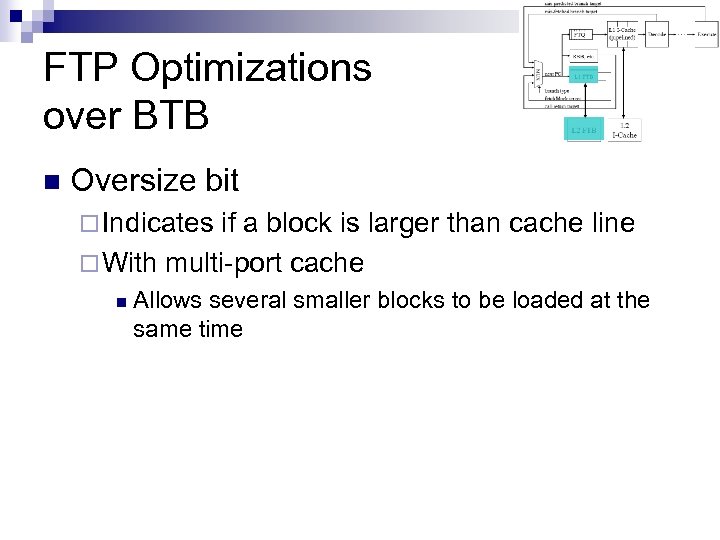

FTB Optimizations over BTB
- Oversize bit
  - Indicates if a block is larger than a cache line
  - With multi-port cache
    - Allows several smaller blocks to be loaded at the same time

FTB Optimizations over BTB
- Only stores partial fall-through address
  - Fall-through address is close to the current PC
  - Only need to store an offset
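Storing an offset instead of a full address can be sketched as follows. This is an illustrative encoding, not the paper's exact format: the 5-bit width is taken from the 4-5 bits reported on the results slide, while the 4-byte instruction size and the function names are assumptions.

```python
FALL_THROUGH_BITS = 5  # from the 4-5 bits reported in the results
INSTR_BYTES = 4        # assumption: fixed-width 4-byte instructions


def encode_fall_through(block_start_pc, fall_through_pc, bits=FALL_THROUGH_BITS):
    """Store the fall-through address as an instruction-count offset."""
    distance = (fall_through_pc - block_start_pc) // INSTR_BYTES
    if not 0 < distance < (1 << bits):
        raise ValueError("fall-through distance does not fit in the offset field")
    return distance


def decode_fall_through(block_start_pc, offset):
    """Rebuild the full fall-through address from the current PC and offset."""
    return block_start_pc + offset * INSTR_BYTES


if __name__ == "__main__":
    offset = encode_fall_through(0x1000, 0x1020)
    print(offset, hex(decode_fall_through(0x1000, offset)))
```

A block whose fall-through address lies too far from its start cannot be encoded in this sketch, which is why `encode_fall_through` raises an error in that case.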

FTB Optimizations over BTB
- Does not store every block:
  - Fall-through blocks
  - Blocks that are seldom taken

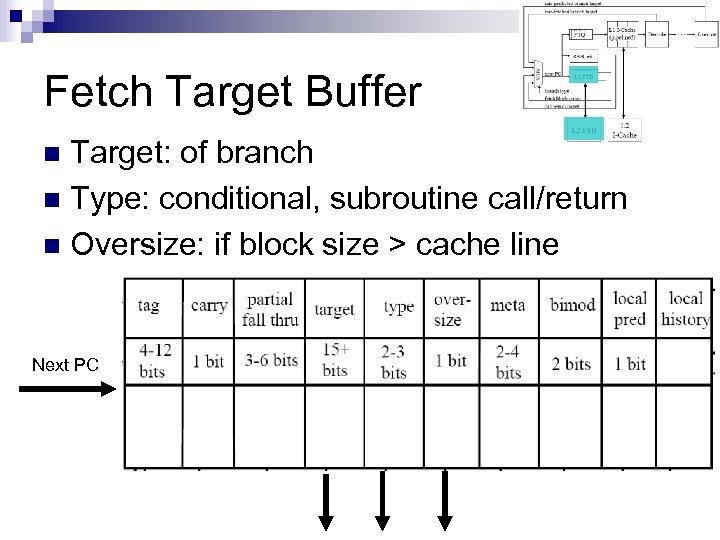

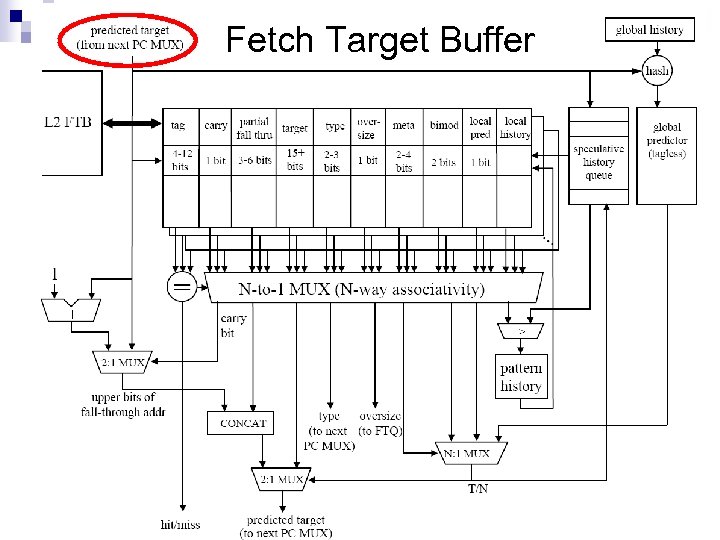

Fetch Target Buffer
- Target: of branch
- Type: conditional, subroutine call/return
- Oversize: if block size > cache line
- Next PC
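One way to picture these fields is as a small record plus a function that produces the next PC. This is only a sketch under stated assumptions: the field `block_instrs`, the 8-instruction cache line, the 4-byte instruction size and the function `next_pc` are hypothetical and not taken from the slides.

```python
from dataclasses import dataclass
from enum import Enum


class BranchType(Enum):
    CONDITIONAL = "conditional"
    CALL = "subroutine call"
    RETURN = "subroutine return"


CACHE_LINE_INSTRS = 8  # assumption: instructions per cache line


@dataclass
class FTBEntry:
    target: int              # target of the branch ending the fetch block
    branch_type: BranchType  # conditional, subroutine call or return
    block_instrs: int        # hypothetical field: fetch block length

    @property
    def oversize(self):
        """True when the fetch block is larger than one cache line."""
        return self.block_instrs > CACHE_LINE_INSTRS


def next_pc(block_start, entry, predicted_taken, instr_bytes=4):
    """Pick the next PC: fall-through for a not-taken conditional, else target."""
    if entry.branch_type is BranchType.CONDITIONAL and not predicted_taken:
        return block_start + entry.block_instrs * instr_bytes
    # Simplification: a real design would typically take return targets
    # from a return address stack rather than from the stored target.
    return entry.target


if __name__ == "__main__":
    entry = FTBEntry(target=0x2088, branch_type=BranchType.CONDITIONAL, block_instrs=12)
    print(entry.oversize, hex(next_pc(0x1734, entry, predicted_taken=False)))
```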

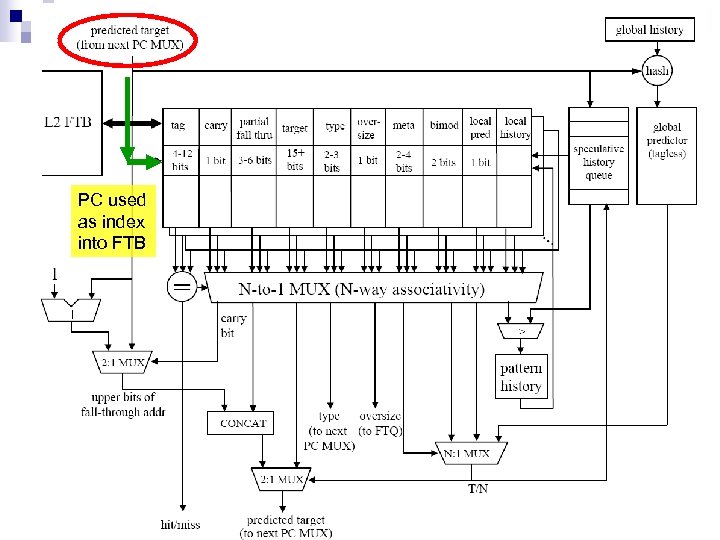

Fetch Target Buffer

PC used as index into FTB

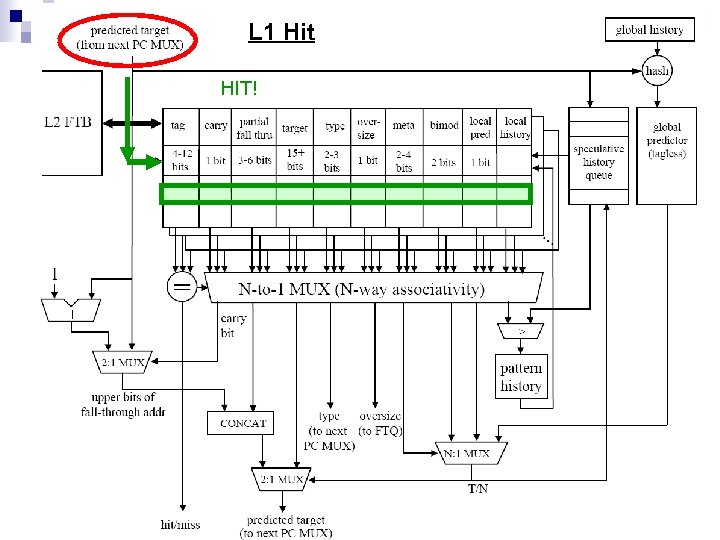

L1 Hit
- HIT!

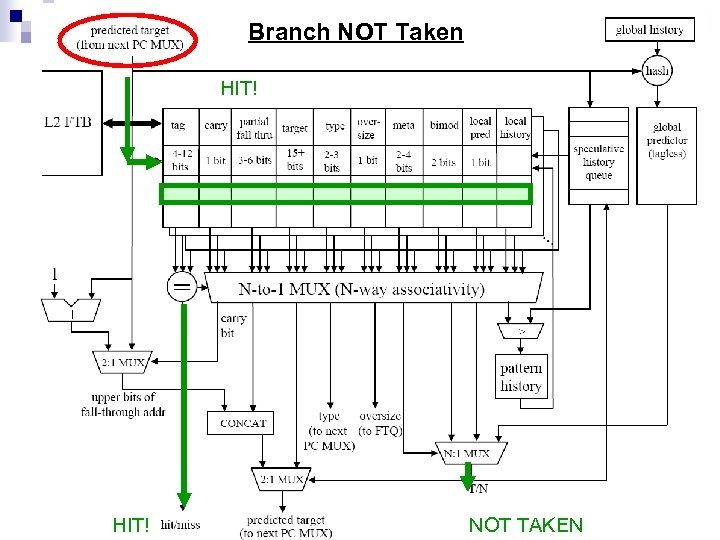

Branch NOT Taken
- HIT!
- NOT TAKEN

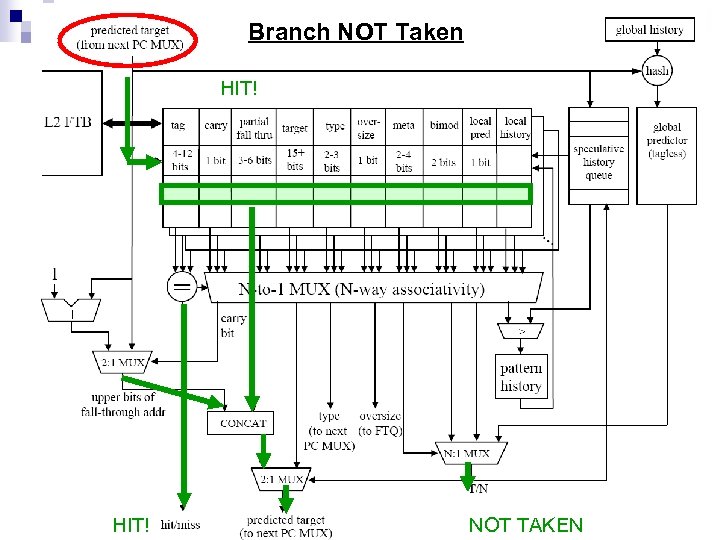

Branch NOT Taken
- HIT!
- NOT TAKEN

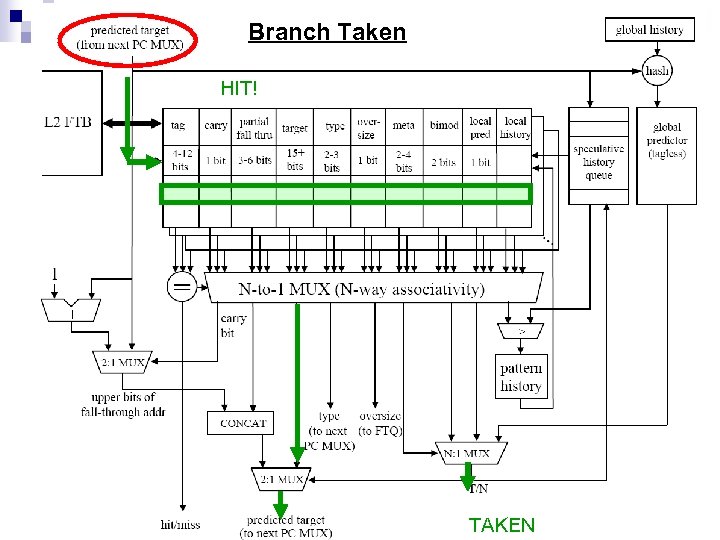

Branch Taken
- HIT!
- TAKEN

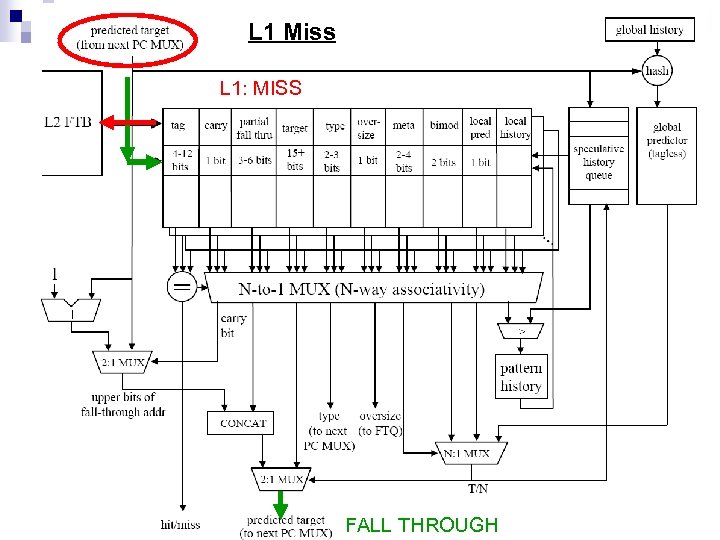

L1 Miss
- L1: MISS
- FALL THROUGH

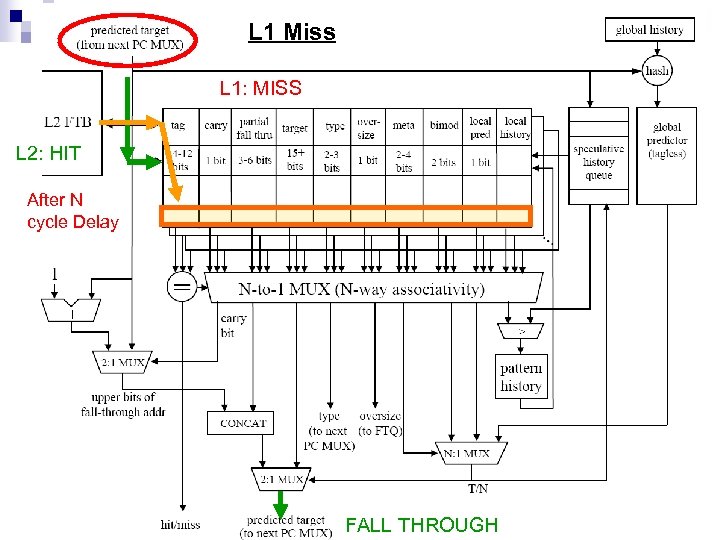

L1 Miss
- L1: MISS
- L2: HIT after N-cycle delay
- FALL THROUGH

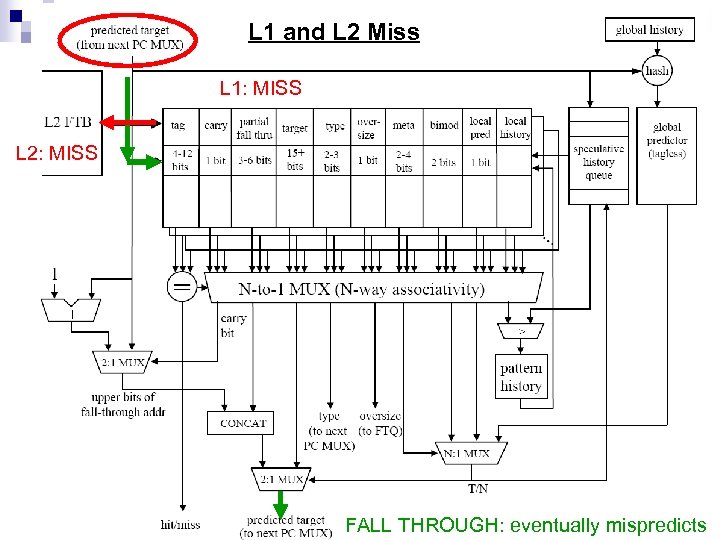

L1 and L2 Miss
- L1: MISS
- L2: MISS
- FALL THROUGH: eventually mispredicts
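The hit and miss cases walked through above can be summarized in a small lookup routine. This is a simplified sketch: the function `ftb_lookup`, the dictionary-based levels, the default 2-cycle L2 latency, the 32-byte fall-through step, and the promotion of L2 hits into L1 are all assumptions for illustration.

```python
def ftb_lookup(pc, l1, l2, l2_latency=2, block_bytes=32):
    """Look up a fetch block in a two-level FTB.

    l1 and l2 map a block's start PC to its predicted next PC.
    Returns (next_pc, delay_cycles, source).
    """
    if pc in l1:
        # L1 hit: the prediction is available immediately.
        return l1[pc], 0, "L1"
    if pc in l2:
        # L1 miss, L2 hit: the prediction arrives after an N-cycle delay.
        # Assumption: the entry is copied into L1 for later lookups.
        l1[pc] = l2[pc]
        return l2[pc], l2_latency, "L2"
    # Miss in both levels: fall through to the next sequential block.
    # If the block actually ends in a taken branch, this mispredicts.
    return pc + block_bytes, 0, "fall-through"


if __name__ == "__main__":
    l1 = {0x100: 0x200}
    l2 = {0x300: 0x400}
    for pc in (0x100, 0x300, 0x300, 0x500):
        next_pc, delay, source = ftb_lookup(pc, l1, l2)
        print(hex(pc), hex(next_pc), delay, source)
```

The sketch reports only the delay of an L2 hit; it does not model the fall-through fetches issued while the L2 lookup is in flight.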

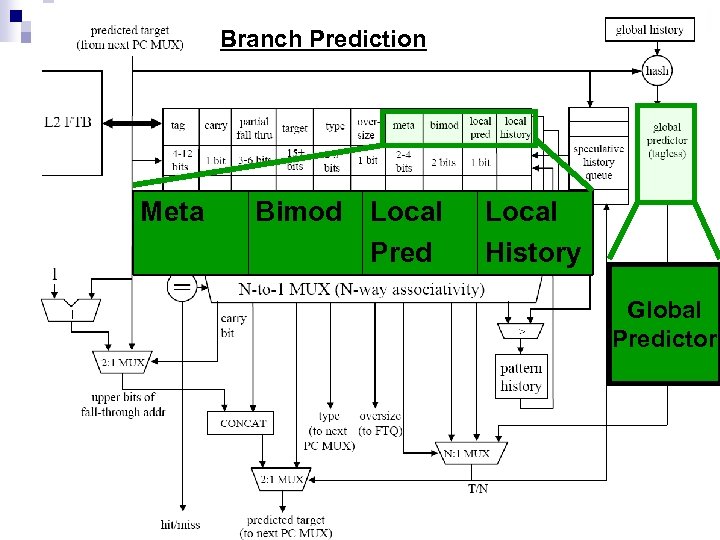

Hybrid branch prediction
- Meta-predictor selects between
  - Local history predictor
  - Global history predictor
  - Bimodal predictor

Branch Prediction

[Diagram: blocks labeled Meta, Bimod, Local Pred, Local History and Global Predictor]
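A hybrid predictor of this kind can be sketched with three small component predictors and a selector. This is a toy model, not the design evaluated in the paper: the 2-bit counters, the 4-bit histories, the dictionary-backed tables and the per-PC score-based selection in `HybridPredictor` are all assumptions.

```python
class SaturatingCounter:
    """n-bit saturating counter; the upper half of the range predicts taken."""

    def __init__(self, bits=2):
        self.max = (1 << bits) - 1
        self.value = (self.max + 1) // 2  # start weakly taken

    def predict(self):
        return self.value > self.max // 2

    def update(self, taken):
        if taken:
            self.value = min(self.max, self.value + 1)
        else:
            self.value = max(0, self.value - 1)


class Bimodal:
    """One counter per branch PC."""

    def __init__(self):
        self.table = {}

    def _counter(self, pc):
        return self.table.setdefault(pc, SaturatingCounter())

    def predict(self, pc):
        return self._counter(pc).predict()

    def update(self, pc, taken):
        self._counter(pc).update(taken)


class GlobalHistory:
    """Counters indexed by the outcomes of the most recent branches."""

    def __init__(self, history_bits=4):
        self.mask = (1 << history_bits) - 1
        self.history = 0
        self.table = {}

    def _counter(self):
        return self.table.setdefault(self.history, SaturatingCounter())

    def predict(self, pc):
        return self._counter().predict()

    def update(self, pc, taken):
        self._counter().update(taken)
        self.history = ((self.history << 1) | int(taken)) & self.mask


class LocalHistory:
    """Counters indexed by each branch's own recent outcomes."""

    def __init__(self, history_bits=4):
        self.mask = (1 << history_bits) - 1
        self.histories = {}
        self.table = {}

    def _counter(self, pc):
        key = (pc, self.histories.get(pc, 0))
        return self.table.setdefault(key, SaturatingCounter())

    def predict(self, pc):
        return self._counter(pc).predict()

    def update(self, pc, taken):
        self._counter(pc).update(taken)
        history = self.histories.get(pc, 0)
        self.histories[pc] = ((history << 1) | int(taken)) & self.mask


class HybridPredictor:
    """Meta-predictor: per PC, trust the component with the best recent score."""

    def __init__(self):
        self.components = [LocalHistory(), GlobalHistory(), Bimodal()]
        self.scores = {}  # pc -> one saturating score per component

    def predict(self, pc):
        scores = self.scores.setdefault(pc, [0, 0, 0])
        chosen = max(range(len(scores)), key=lambda i: scores[i])
        return self.components[chosen].predict(pc)

    def update(self, pc, taken):
        scores = self.scores.setdefault(pc, [0, 0, 0])
        for i, component in enumerate(self.components):
            # Score each component on its own prediction before training it.
            correct = component.predict(pc) == taken
            scores[i] = min(3, scores[i] + 1) if correct else max(0, scores[i] - 1)
            component.update(pc, taken)
```

On a branch that strictly alternates between taken and not taken, the history-based components in this sketch learn the pattern while the bimodal counter does not, so the selector settles on a history-based component.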

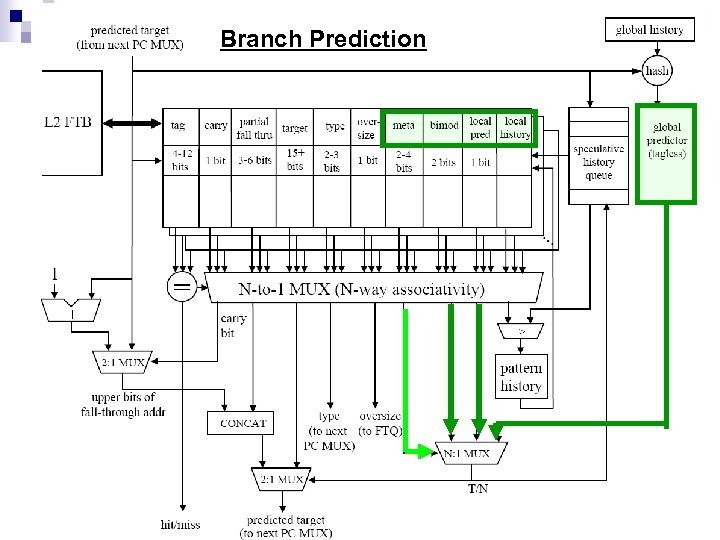

Branch Prediction

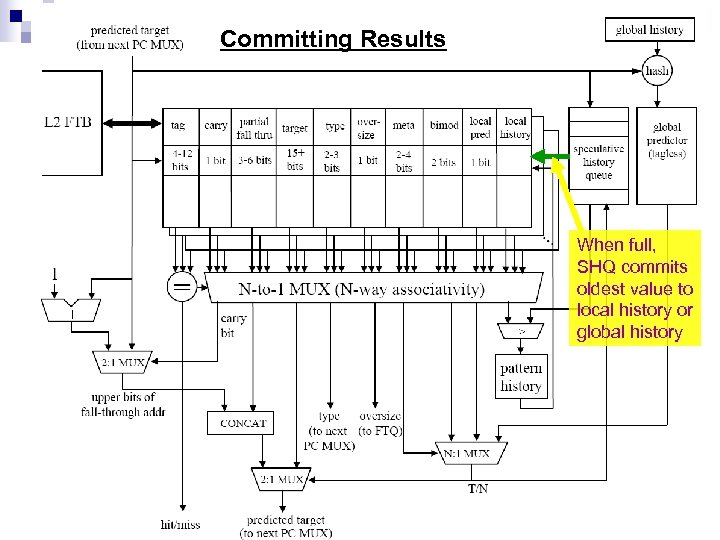

Committing Results
- When full, SHQ commits oldest value to local history or global history

Outline
- Scalable Front-End and Components
  - Fetch Target Queue
  - Fetch Target Buffer
- Methodology
- Results
- Analysis and Conclusion

Experimental Methodology I
- Baseline architecture
  - Processor
    - 8-instruction fetch with 16-instruction issue per cycle
    - 128-entry reorder buffer with 32-entry load/store buffer
    - 8-cycle minimum branch misprediction penalty
  - Cache
    - 64k 2-way instruction cache
    - 64k 4-way data cache (pipelined)

Experimental Methodology II
- Timing model
  - Cacti cache compiler
    - Models on-chip memory
    - Modified for 0.35 um, 0.188 um and 0.10 um processes
- Test set
  - 6 SPEC95 benchmarks
  - 2 C++ programs

Outline
- Scalable Front-End and Components
  - Fetch Target Queue
  - Fetch Target Buffer
- Experimental Methodology
- Results
- Analysis and Conclusion

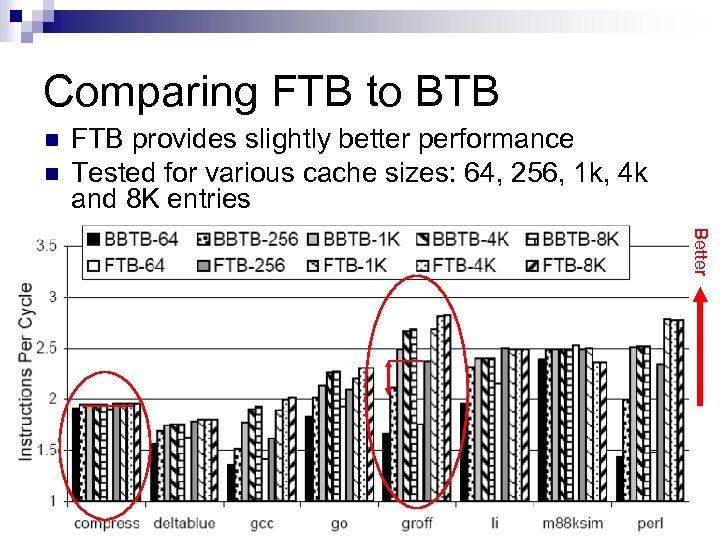

Comparing FTB to BTB
- FTB provides slightly better performance
- Tested for various cache sizes: 64, 256, 1k, 4k and 8k entries

Comparing Multi-level FTB to Single-Level FTB
- Two-level FTB performance
  - Smaller fetch size
    - Two-level average size: 6.6
    - Single-level average size: 7.5
  - Higher accuracy on average
    - Two-level: 83.3%
    - Single-level: 73.1%
  - Higher performance
    - 25% average speedup over single-level

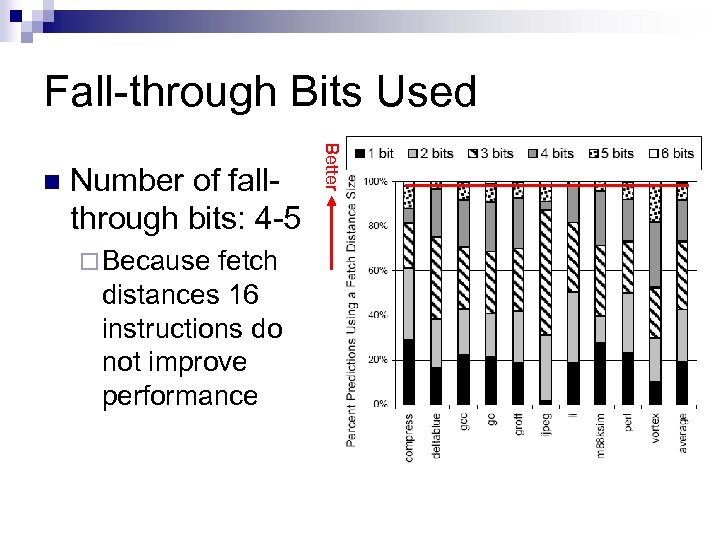

Fall-through Bits Used
- Number of fall-through bits: 4-5
  - Because fetch distances beyond 16 instructions do not improve performance

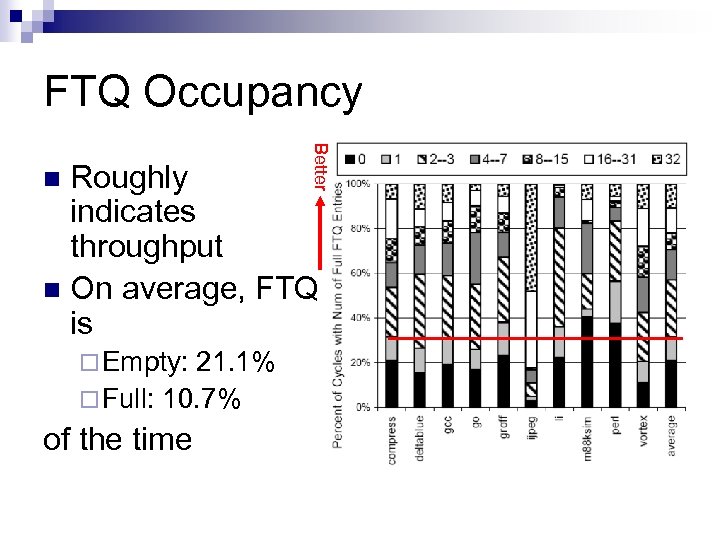

FTQ Occupancy
- Roughly indicates throughput
- On average, FTQ is
  - Empty: 21.1% of the time
  - Full: 10.7% of the time

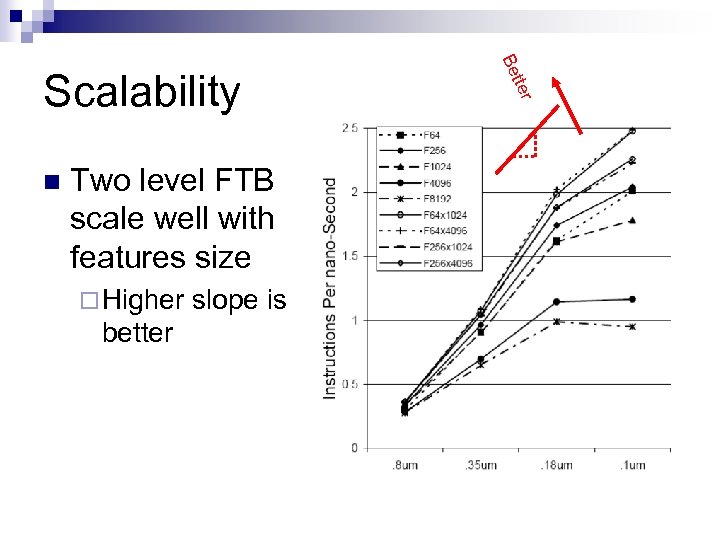

Scalability
- Two-level FTB scales well with feature size
  - Higher slope is better

Outline
- Scalable Front-End and Components
  - Fetch Target Queue
  - Fetch Target Buffer
- Experimental Methodology
- Results
- Analysis and Conclusion

Analysis
- 25% improvement in IPC over best-performing single-level designs
- System scales well with feature size
- On average, FTQ is empty 21.1% of the time
- FTB design requires at most 5 bits for fall-through address

Conclusion
- FTQ and FTB design
  - Decouples the I-cache from branch prediction
    - Produces higher throughput
  - Uses multi-level buffer
    - Produces better scalability


References
- [1] Glenn Reinman, Todd Austin, and Brad Calder. A Scalable Front-End Architecture for Fast Instruction Delivery. ACM/IEEE 26th Annual International Symposium on Computer Architecture. May 1999.
- [2] Chris Perleberg and Alan Smith. Branch Target Buffer: Design and Optimization. Technical Report. December 1989.

Thank you

Questions?
