- Number of slides: 27

A Knowledge-Driven Approach to Meaning Processing

Peter Clark, Phil Harrison, John Thompson
Boeing Mathematics and Computing Technology

Goal

- Answer questions about text, especially questions that go beyond explicitly stated facts

“China launched a meteorological satellite into orbit Wednesday, the first of five weather guardians to be sent into the skies before 2008.”

- Suggests:
  - there was a rocket launch
  - China owns the satellite
  - the satellite is for monitoring weather
  - the orbit is around the Earth
  - etc.

None of these are explicitly stated in the text.

Meaning Processing

Meaning processing: construction of a situation-specific representation of the scenario described by text.

- May include elements not explicit in the text
- Operational definition: the degree of “captured meaning” is the ability to answer questions about the scenario being described

[Diagram: Text → Representation (assembled from prior expectations) → Question-Answering (inference)]

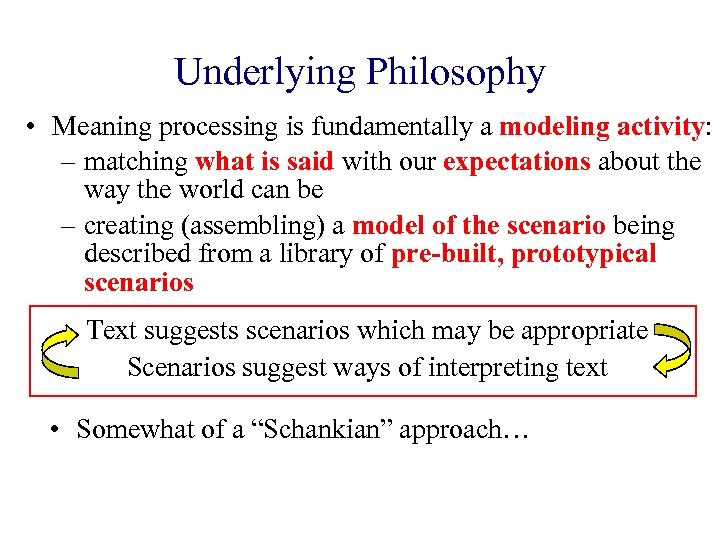

Underlying Philosophy

- Meaning processing is fundamentally a modeling activity:
  - matching what is said with our expectations about the way the world can be
  - creating (assembling) a model of the scenario being described from a library of pre-built, prototypical scenarios
- Text suggests scenarios which may be appropriate
- Scenarios suggest ways of interpreting text
- Somewhat of a “Schankian” approach…

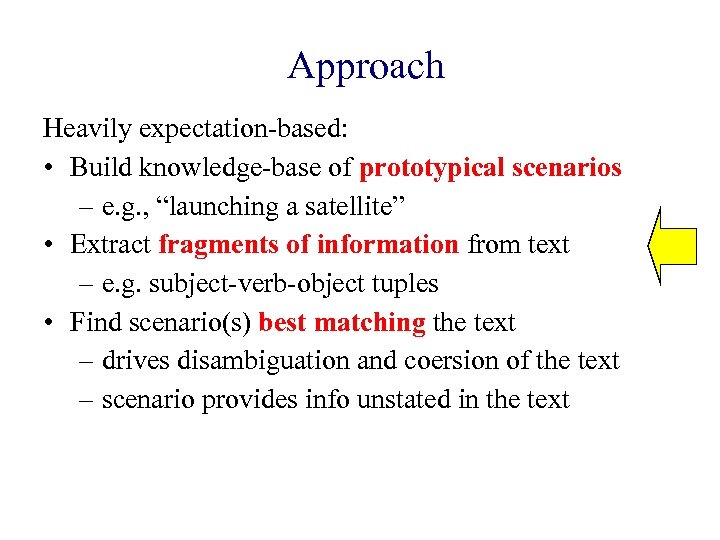

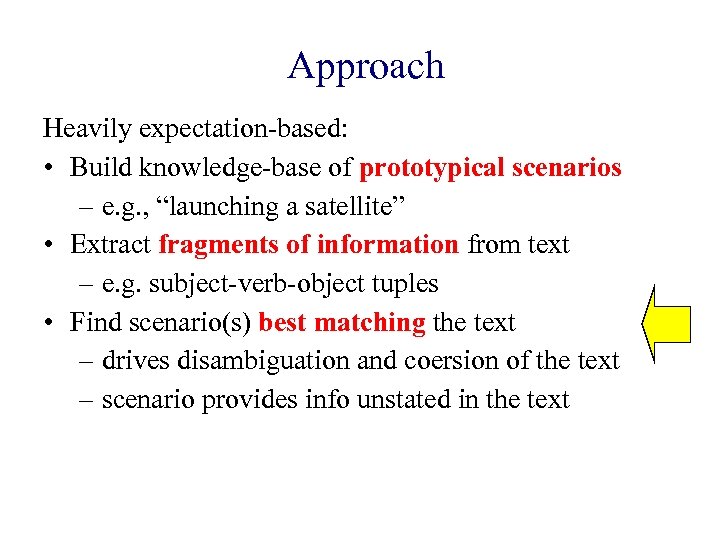

Approach

Heavily expectation-based:

- Build knowledge-base of prototypical scenarios
  - e.g., “launching a satellite”
- Extract fragments of information from text
  - e.g., subject-verb-object tuples
- Find scenario(s) best matching the text
  - drives disambiguation and coercion of the text
  - scenario provides info unstated in the text

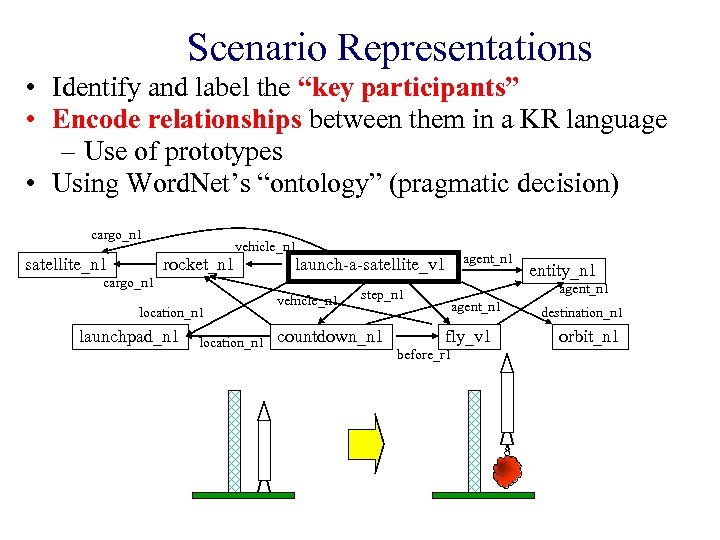

Scenario Representations

- Identify and label the “key participants”
- Encode relationships between them in a KR language
  - Use of prototypes
- Using WordNet’s “ontology” (pragmatic decision)

[Diagram: launch-a-satellite_v1 scenario graph with participants rocket_n1 (vehicle_n1), satellite_n1 (cargo_n1), launchpad_n1 (location_n1), entity_n1 (agent_n1), orbit_n1 (destination_n1), and steps (step_n1) countdown_n1 and fly_v1 linked by before_r1]

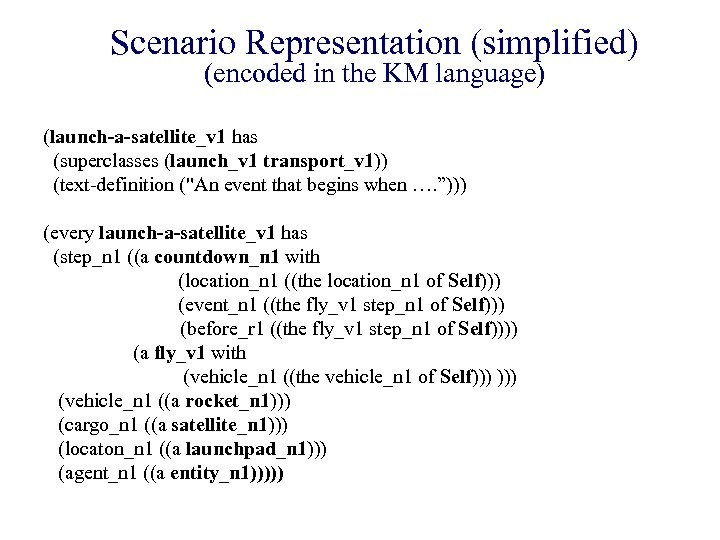

Scenario Representation (simplified)

(encoded in the KM language)

    (launch-a-satellite_v1 has
      (superclasses (launch_v1 transport_v1))
      (text-definition ("An event that begins when ….")))

    (every launch-a-satellite_v1 has
      (step_n1 ((a countdown_n1 with
                  (location_n1 ((the location_n1 of Self)))
                  (event_n1 ((the fly_v1 step_n1 of Self)))
                  (before_r1 ((the fly_v1 step_n1 of Self))))
                (a fly_v1 with
                  (vehicle_n1 ((the vehicle_n1 of Self))))))
      (vehicle_n1 ((a rocket_n1)))
      (cargo_n1 ((a satellite_n1)))
      (location_n1 ((a launchpad_n1)))
      (agent_n1 ((a entity_n1))))
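
The frame structure above can be mirrored in ordinary code to make the slot sharing visible. The following is a minimal Python sketch, not the KM implementation: the `Frame` class and the helpers `make_launch_a_satellite` and `fillers` are hypothetical names introduced only for illustration, and the slot layout is an assumption based on the simplified definition shown on this slide. It shows how "(the vehicle_n1 of Self)" amounts to the fly step reusing the scenario's own rocket rather than creating a new one.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    """One instance of a concept, with slot name -> list of fillers."""
    concept: str
    slots: dict = field(default_factory=dict)


def make_launch_a_satellite() -> Frame:
    """Build one instance of the (assumed) launch-a-satellite_v1 prototype."""
    rocket = Frame("rocket_n1")
    satellite = Frame("satellite_n1")
    launchpad = Frame("launchpad_n1")
    agent = Frame("entity_n1")

    # "(the vehicle_n1 of Self)": the fly step shares the scenario's rocket.
    fly = Frame("fly_v1", {"vehicle_n1": [rocket]})
    # "(the location_n1 of Self)" plus before_r1 pointing at the fly step.
    countdown = Frame("countdown_n1",
                      {"location_n1": [launchpad], "before_r1": [fly]})

    return Frame("launch-a-satellite_v1", {
        "vehicle_n1": [rocket],
        "cargo_n1": [satellite],
        "location_n1": [launchpad],
        "agent_n1": [agent],
        "step_n1": [countdown, fly],
    })


def fillers(frame: Frame, *path: str) -> list:
    """Follow a chain of slots, e.g. fillers(s, "step_n1", "vehicle_n1")."""
    current = [frame]
    for slot in path:
        current = [f for c in current for f in c.slots.get(slot, [])]
    return current


scenario = make_launch_a_satellite()
# The vehicle of the fly step is the very same object as the scenario's vehicle.
shared = fillers(scenario, "step_n1", "vehicle_n1")[0] is scenario.slots["vehicle_n1"][0]
```

A question such as "what vehicle flies?" then reduces to following a slot path, which is the kind of inference the scenario is meant to support for facts the text never states.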

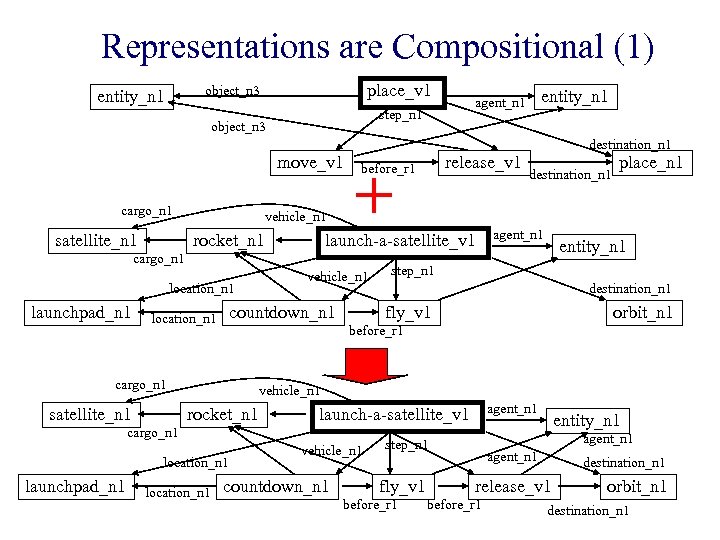

Representations are Compositional (1)

[Diagram: a place_v1 graph (object_n3, entity_n1, destination_n1, step_n1, move_v1) + a launch-a-satellite_v1 graph (rocket_n1 as vehicle_n1, satellite_n1 as cargo_n1, launchpad_n1 as location_n1, agent_n1, steps countdown_n1 and fly_v1) combined into one launch-a-satellite_v1 graph that also includes a release_v1 step, before_r1 orderings, and orbit_n1 as destination_n1]

Representations are Compositional (2)

- Create multiple representations for a single concept
  - each encoding a different aspect/viewpoint
- Representations can be combined as needed

[Diagram: two views of launch-a-satellite_v1, “Objects Involved” (rocket_n1 as vehicle_n1, satellite_n1 as cargo_n1, launchpad_n1 as location_n1) and “Temporal” (steps countdown_n1 and fly_v1 linked by before_r1), combined into a single graph with entity_n1 as agent_n1 and orbit_n1 as destination_n1]
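
One simple way to picture this combination is as a union of relation triples. The sketch below is a rough Python illustration under that assumption; the triples in `OBJECTS_VIEW` and `TEMPORAL_VIEW` and the helpers `combine` and `fillers_of` are hypothetical, and the slides do not say how the actual system merges representations.

```python
# Each view is a set of (head concept, relation, filler concept) triples.
# The contents are assumed for illustration, loosely following the slide.
OBJECTS_VIEW = {
    ("launch-a-satellite_v1", "vehicle_n1", "rocket_n1"),
    ("launch-a-satellite_v1", "cargo_n1", "satellite_n1"),
    ("launch-a-satellite_v1", "location_n1", "launchpad_n1"),
}

TEMPORAL_VIEW = {
    ("launch-a-satellite_v1", "step_n1", "countdown_n1"),
    ("launch-a-satellite_v1", "step_n1", "fly_v1"),
    ("countdown_n1", "before_r1", "fly_v1"),
}


def combine(*views):
    """Merge several aspect-specific views into one graph (set union)."""
    return set().union(*views)


def fillers_of(graph, head, relation):
    """Return the sorted fillers of one relation on one concept."""
    return sorted(f for h, r, f in graph if h == head and r == relation)


combined = combine(OBJECTS_VIEW, TEMPORAL_VIEW)
steps = fillers_of(combined, "launch-a-satellite_v1", "step_n1")
```

Because shared concepts such as launch-a-satellite_v1 carry the same name in both views, the union lines them up automatically; a real system would also need to decide when two differently named nodes denote the same participant.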

Scenario Representations

- Goal is to capture “intermediate level” scenes:
  - more specific than “Move” or “Launch”
  - more general than “The launching of meteorological satellites by China from Taiyuan.”

[Diagram: The Intermediate Level, from general to specific: Setting-In-Motion, Launching-A-Satellite, China-Launching-A-Weather-Satellite]

Approach

Heavily expectation-based:

- Build knowledge-base of prototypical scenarios
  - e.g., “launching a satellite”
- Extract fragments of information from text
  - e.g., subject-verb-object tuples
- Find scenario(s) best matching the text
  - drives disambiguation and coercion of the text
  - scenario provides info unstated in the text

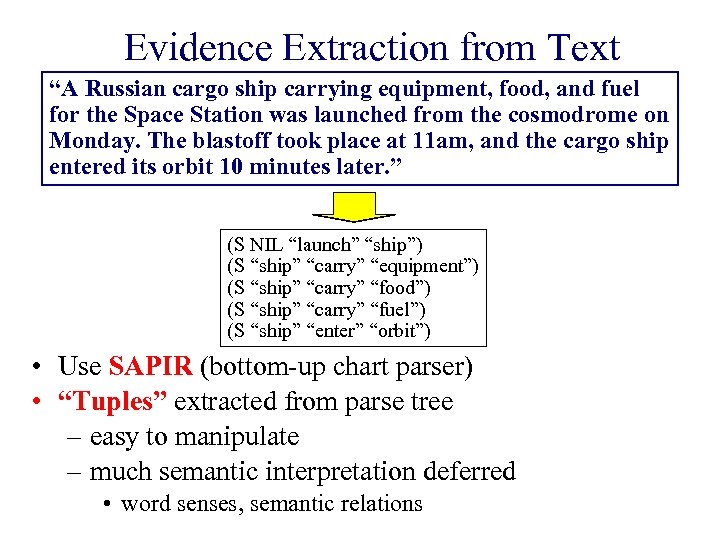

Evidence Extraction from Text

“A Russian cargo ship carrying equipment, food, and fuel for the Space Station was launched from the cosmodrome on Monday. The blastoff took place at 11 am, and the cargo ship entered its orbit 10 minutes later.”

    (S NIL "launch" "ship")
    (S "ship" "carry" "equipment")
    (S "ship" "carry" "food")
    (S "ship" "carry" "fuel")
    (S "ship" "enter" "orbit")

- Use SAPIR (bottom-up chart parser)
- “Tuples” extracted from parse tree
  - easy to manipulate
  - much semantic interpretation deferred (word senses, semantic relations)

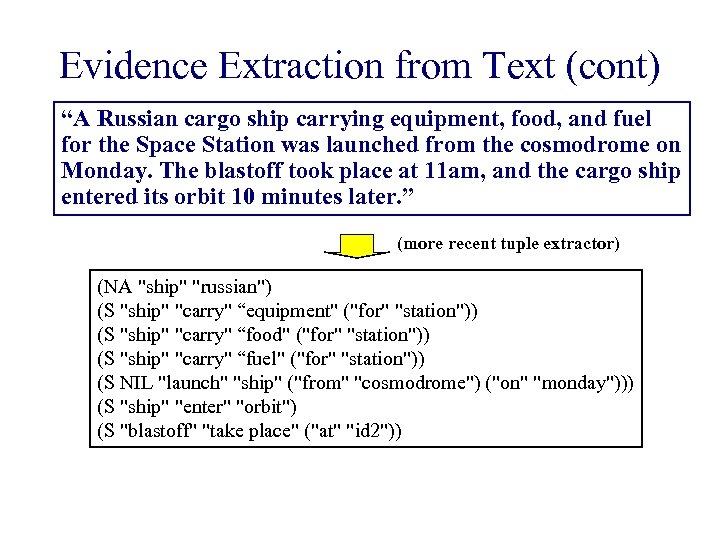

Evidence Extraction from Text (cont)

“A Russian cargo ship carrying equipment, food, and fuel for the Space Station was launched from the cosmodrome on Monday. The blastoff took place at 11 am, and the cargo ship entered its orbit 10 minutes later.”

(more recent tuple extractor)

    (NA "ship" "russian")
    (S "ship" "carry" "equipment" ("for" "station"))
    (S "ship" "carry" "food" ("for" "station"))
    (S "ship" "carry" "fuel" ("for" "station"))
    (S NIL "launch" "ship" ("from" "cosmodrome") ("on" "monday"))
    (S "ship" "enter" "orbit")
    (S "blastoff" "take place" ("at" "id 2"))
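
Tuples in this parenthesized form are easy to load into a program for later matching. The following Python sketch reads one tuple string into a small record. It is only an illustration: `parse_sexpr` and `to_record` are hypothetical helpers, the record layout is an assumption, and nothing here reflects how SAPIR or the authors' extractor is actually implemented.

```python
import re

# Tokens: parentheses, double-quoted strings, or bare atoms such as S and NIL.
TOKEN = re.compile(r'\(|\)|"[^"]*"|[^\s()"]+')


def parse_sexpr(text):
    """Parse one parenthesized tuple into nested Python lists."""
    tokens = TOKEN.findall(text)
    pos = 0

    def read():
        nonlocal pos
        tok = tokens[pos]
        pos += 1
        if tok == "(":
            items = []
            while tokens[pos] != ")":
                items.append(read())
            pos += 1  # skip the closing ")"
            return items
        if tok.startswith('"'):
            return tok[1:-1]  # strip the surrounding quotes
        return None if tok == "NIL" else tok

    return read()


def to_record(expr):
    """Split a parsed S tuple into subject, verb, object and PP modifiers."""
    kind, *rest = expr
    words = [x for x in rest if not isinstance(x, list)]
    preps = [tuple(x) for x in rest if isinstance(x, list)]
    subject, verb, obj = (words + [None] * 3)[:3]
    return {"kind": kind, "subject": subject, "verb": verb,
            "object": obj, "preps": preps}


record = to_record(parse_sexpr(
    '(S NIL "launch" "ship" ("from" "cosmodrome") ("on" "monday"))'))
```

Here the passive "was launched" shows up as a missing (NIL) subject, which the record keeps as `None` so that a later matching step can treat "ship" as the thing being launched.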

Approach

Heavily expectation-based:

- Build knowledge-base of prototypical scenarios
  - e.g., “launching a satellite”
- Extract fragments of information from text
  - e.g., subject-verb-object tuples
- Find scenario(s) best matching the text
  - drives disambiguation and coercion of the text
  - scenario provides info unstated in the text

Matching the Text with Scenarios

- A syntactic tuple matches a semantic assertion if:
  - its words have an interpretation matching the concepts
  - the syntactic relation can map to the semantic relation
- Simple scoring function f(# word matches, # tuple matches)

[Diagram: the tuple (S "china" "launch" "satellite") aligned with the launch-a-satellite_v1 scenario graph: “china”, the subject of “launch”, maps to agent_n1 (causal_agent_n1); “satellite”, the direct object of “launch”, maps to cargo_n1 (satellite_n1). Other nodes: rocket_n1 (vehicle_n1), launchpad_n1 (location_n1), orbit_n1 (destination_n1), steps countdown_n1 and fly_v1 linked by before_r1]
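
The two matching conditions and the scoring function can be sketched in a few lines of Python. Everything below is an assumption made for illustration: the toy `LEXICON`, the `RELATION_MAP` from syntactic to semantic relations, and especially the weighted sum in `score`, since the slide only says that f depends on the number of word matches and tuple matches.

```python
# Hypothetical toy lexicon: word -> concepts it may denote.
LEXICON = {
    "china": {"causal_agent_n1"},
    "launch": {"launch-a-satellite_v1", "launch-a-boat_v1"},
    "satellite": {"satellite_n1"},
}

# Semantic assertions of one scenario: (event, relation, filler).
SCENARIO = {
    ("launch-a-satellite_v1", "agent_n1", "causal_agent_n1"),
    ("launch-a-satellite_v1", "cargo_n1", "satellite_n1"),
    ("launch-a-satellite_v1", "vehicle_n1", "rocket_n1"),
}

# Assumed mapping from a syntactic relation to admissible semantic relations.
RELATION_MAP = {
    "subject": {"agent_n1", "vehicle_n1"},
    "object": {"cargo_n1", "vehicle_n1"},
}


def count_matches(subj, verb, obj, scenario=SCENARIO):
    """Return (word matches, tuple matches) for one subject-verb-object tuple."""
    concepts = {c for head, _, filler in scenario for c in (head, filler)}
    words = [w for w in (subj, verb, obj) if w is not None]
    # Condition 1: the word has an interpretation among the scenario's concepts.
    word_matches = sum(1 for w in words if LEXICON.get(w, set()) & concepts)
    # Condition 2: the syntactic relation maps onto an asserted semantic relation.
    tuple_matches = 0
    heads = LEXICON.get(verb, set())
    for syn_rel, arg in (("subject", subj), ("object", obj)):
        fillers = LEXICON.get(arg, set())
        if any((h, r, f) in scenario
               for h in heads for f in fillers for r in RELATION_MAP[syn_rel]):
            tuple_matches += 1
    return word_matches, tuple_matches


def score(tuples, w_word=1, w_tuple=2):
    """Assumed scoring function: a weighted sum of both match counts."""
    total = 0
    for subj, verb, obj in tuples:
        words, rels = count_matches(subj, verb, obj)
        total += w_word * words + w_tuple * rels
    return total


example = score([("china", "launch", "satellite")])
```

Note that “launch” is ambiguous in the toy lexicon; only the launch-a-satellite_v1 reading participates in a matched assertion, which is one way the chosen scenario can drive word sense disambiguation.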

Multiple abstractions may match…

[Diagram: abstraction hierarchy with nodes Moving, Tangible-Entity, Launching, Placing-In-Position, Launching-A-Boat, Artificial-Satellite, Launching-A-Satellite, Rocket-Vehicle, Orbit-Celestial, above the specific event “China’s Launch of the FY-1 D meteorological satellite”]

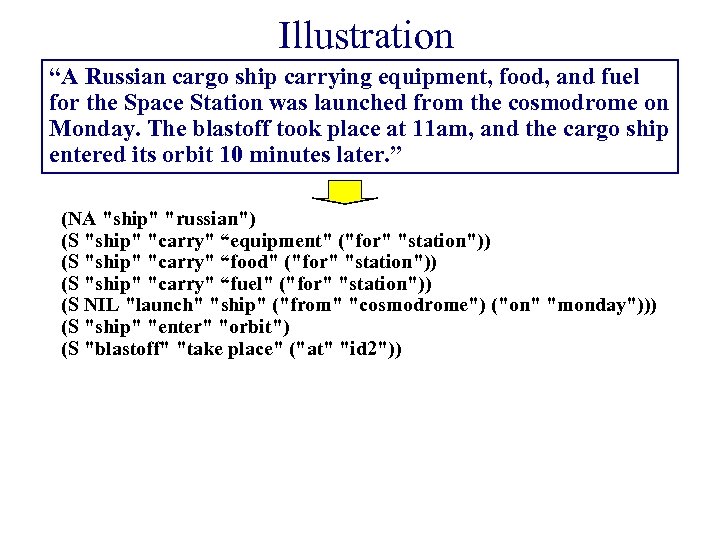

Illustration

“A Russian cargo ship carrying equipment, food, and fuel for the Space Station was launched from the cosmodrome on Monday. The blastoff took place at 11 am, and the cargo ship entered its orbit 10 minutes later.”

    (NA "ship" "russian")
    (S "ship" "carry" "equipment" ("for" "station"))
    (S "ship" "carry" "food" ("for" "station"))
    (S "ship" "carry" "fuel" ("for" "station"))
    (S NIL "launch" "ship" ("from" "cosmodrome") ("on" "monday"))
    (S "ship" "enter" "orbit")
    (S "blastoff" "take place" ("at" "id 2"))

Score: 24

[Diagram: best-matching scenario launch-a-rocket_v1, with rocket_n1 (vehicle_n1), launchpad_n1 (location_n1), entity_n1 (agent_n1), orbit_n1 (destination_n1), and steps countdown_n1, fly_v1 and release_v1 linked by before_r1]

Issues

Constraints to overcome:

1. Language: requires just the right text
2. Knowledge: requires just the right representation

“John entered the restaurant. John sat down. He ordered some food…”

[Diagram: scenario steps enter, sit, order]

But what if…

- “John placed his order…”
- “John picked up the menu…”

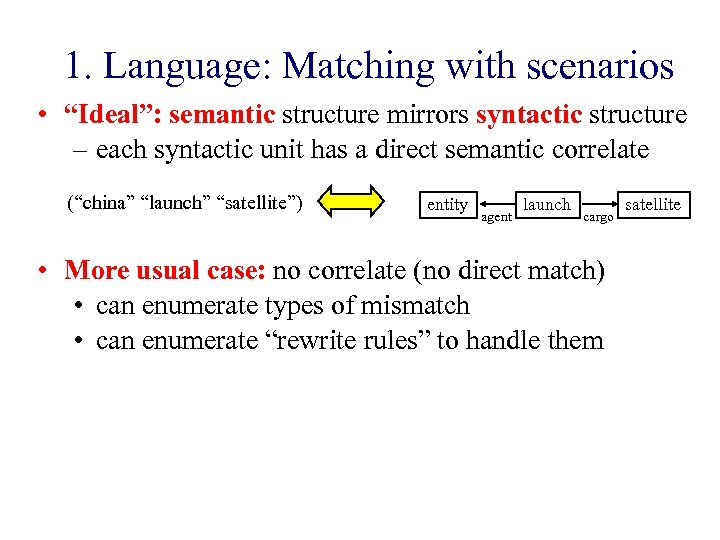

1. Language: Matching with scenarios

- “Ideal”: semantic structure mirrors syntactic structure
  - each syntactic unit has a direct semantic correlate
- More usual case: no correlate (no direct match)
  - can enumerate types of mismatch
  - can enumerate “rewrite rules” to handle them

[Diagram: (“china” “launch” “satellite”) aligned with the semantic structure: launch, with agent entity and cargo satellite]

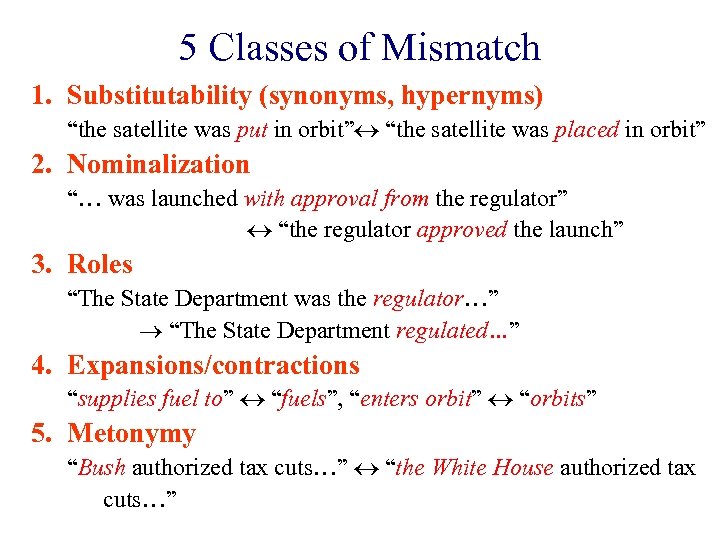

5 Classes of Mismatch

1. Substitutability (synonyms, hypernyms): “the satellite was put in orbit” vs. “the satellite was placed in orbit”
2. Nominalization: “… was launched with approval from the regulator” vs. “the regulator approved the launch”
3. Roles: “The State Department was the regulator…” vs. “The State Department regulated…”
4. Expansions/contractions: “supplies fuel to” vs. “fuels”; “enters orbit” vs. “orbits”
5. Metonymy: “Bush authorized tax cuts…” vs. “the White House authorized tax cuts…”
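
Some of these mismatch classes can be pictured as rewrite rules over subject-verb-object tuples. The Python sketch below is a rough illustration only: the rule tables and the `rewrites` function are hypothetical, the tables hold just the examples from this slide, and nominalization is left out because it needs more than a single tuple to handle.

```python
# Tiny, assumed rule tables built from the slide's own examples.
SYNONYMS = {"put": "place"}                    # 1. substitutability
ROLE_NOUNS = {"regulator": "regulate"}         # 3. roles
CONTRACTIONS = {("enter", "orbit"): "orbit"}   # 4. expansions/contractions
METONYMS = {"white house": "bush"}             # 5. metonymy


def rewrites(tup):
    """Return the tuple together with every one-step rewritten variant."""
    subj, verb, obj = tup
    variants = {tup}
    if verb in SYNONYMS:
        variants.add((subj, SYNONYMS[verb], obj))
    if verb == "be" and obj in ROLE_NOUNS:
        # "X was the regulator" -> "X regulated"
        variants.add((subj, ROLE_NOUNS[obj], None))
    if (verb, obj) in CONTRACTIONS:
        # "enters orbit" -> "orbits"
        variants.add((subj, CONTRACTIONS[(verb, obj)], None))
    if subj in METONYMS:
        variants.add((METONYMS[subj], verb, obj))
    return variants


variants = rewrites(("ship", "enter", "orbit"))
```

A matcher could then try each variant against the scenario and keep the best-scoring one, so that text phrased differently from the stored representation can still match.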

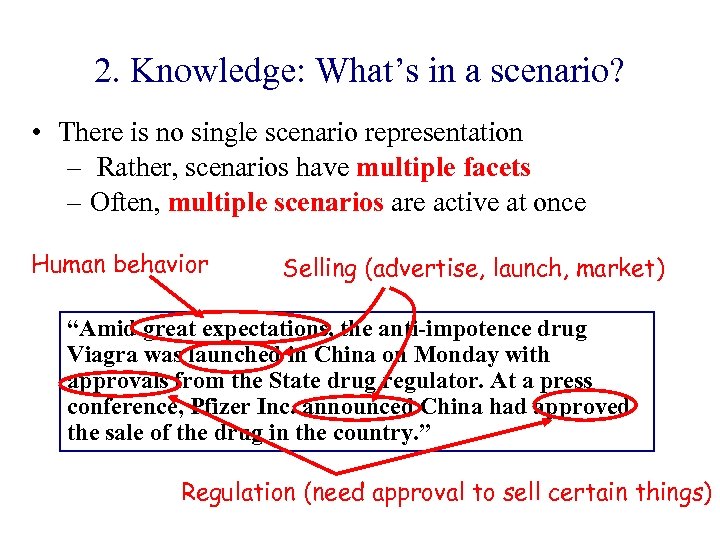

2. Knowledge: What’s in a scenario?

- There is no single scenario representation
  - Rather, scenarios have multiple facets
  - Often, multiple scenarios are active at once

“Amid great expectations, the anti-impotence drug Viagra was launched in China on Monday with approvals from the State drug regulator. At a press conference, Pfizer Inc. announced China had approved the sale of the drug in the country.”

[Diagram: scenarios labeled around this text: Human behavior; Selling (advertise, launch, market); Regulation (need approval to sell certain things)]

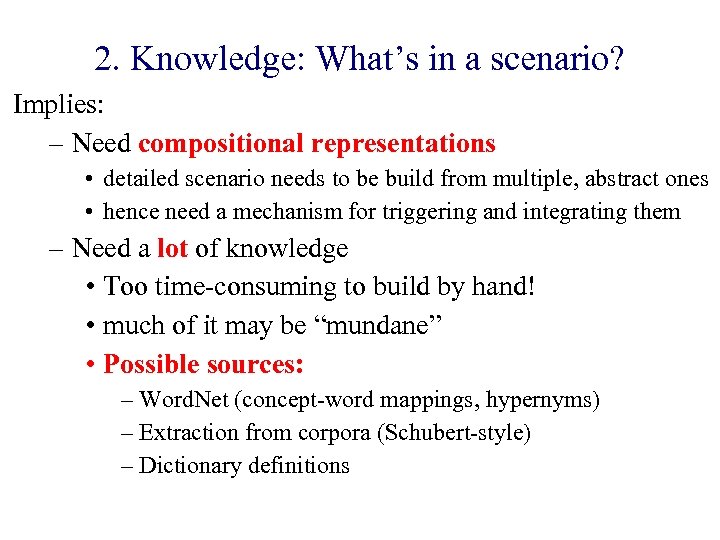

2. Knowledge: What’s in a scenario?

Implies:

- Need compositional representations
  - a detailed scenario needs to be built from multiple, abstract ones
  - hence need a mechanism for triggering and integrating them
- Need a lot of knowledge
  - Too time-consuming to build by hand!
  - much of it may be “mundane”
  - Possible sources:
    - WordNet (concept-word mappings, hypernyms)
    - Extraction from corpora (Schubert-style)
    - Dictionary definitions

2. Knowledge: How to build the KB?

[Diagram: launch-a-satellite_v1 scenario graph (rocket_n1 as vehicle_n1, satellite_n1 as cargo_n1, launchpad_n1 as location_n1, entity_n1 as agent_n1, orbit_n1 as destination_n1, steps countdown_n1 and fly_v1 linked by before_r1), with a “?” marked next to fly_v1]

Many of these facts are “mundane”:

- “satellites can be launched”
- “rockets can carry satellites”
- “countdowns can be at launchpads”
- “rockets can fly”

Questions:

- Can we extract these facts semi-automatically? Yes!
- Can we use them to rapidly assemble models? Perhaps
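
As a rough picture of how such “mundane” facts might be harvested, the sketch below turns subject-verb-object tuples into possibility statements and counts them. This is a simplified Python illustration under stated assumptions, not the Schubert-style method itself: the `mundane_facts` function and the fact format are hypothetical, and the input is the small tuple set from the earlier evidence-extraction slide rather than a real corpus.

```python
from collections import Counter


def mundane_facts(tuples):
    """Generalize (subject, verb, object) tuples into counted 'X can ...' facts."""
    facts = Counter()
    for subj, verb, obj in tuples:
        if subj is None and obj is not None:
            # Passive with no stated subject: "ship can be launch(ed)".
            facts[(obj, "can be", verb)] += 1
        elif subj is not None and obj is not None:
            facts[(subj, "can", verb, obj)] += 1
        elif subj is not None:
            facts[(subj, "can", verb)] += 1
    return facts


# Tuples from the cargo-ship example earlier in the deck.
TUPLES = [
    (None, "launch", "ship"),
    ("ship", "carry", "equipment"),
    ("ship", "carry", "food"),
    ("ship", "carry", "fuel"),
    ("ship", "enter", "orbit"),
]

facts = mundane_facts(TUPLES)
# Facts seen at least min_count times; with a large corpus this threshold
# would be raised to filter out parser noise.
min_count = 1
frequent = sorted(f for f, n in facts.items() if n >= min_count)
```

Over a large corpus the counts give a crude measure of how typical each fact is, which is one possible basis for deciding which facts belong in a scenario.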

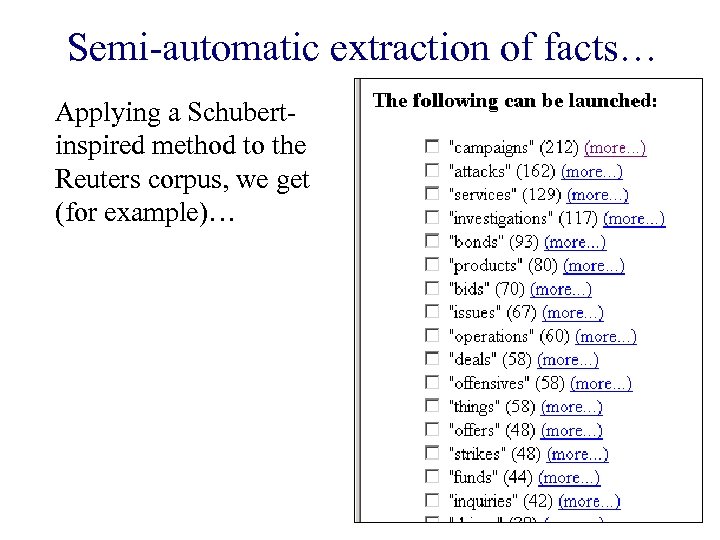

Semi-automatic extraction of facts…

Applying a Schubert-inspired method to the Reuters corpus, we get (for example)…

Semi-automatic extraction of facts…

Summary

- Text understanding = a modeling activity
  - Text suggests scenario models to use
  - Models suggest ways of interpreting text
- Approach:
  - library of pre-built scenario representations
  - NL-processed fragments of input text
  - Matching process to find best-matching scenario
- Issues (many!); in particular:
  - matching syntax and semantics
  - constructing the KB
