ce06e14ca6f9cf4544e6de3936f75fae.ppt

- Number of slides: 49

A Heartbeat Mechanism and its Application in Gigascope
Johnson, Muthukrishnan, Shkapenyuk, Spatscheck
Presented by: Joseph Frate and John Russo

Introduction
- Unblocking aggregation, join and union operators
  - Limit the scope of output tuples that an input tuple can affect
  - Two techniques
- Define queries over a window of the input stream
  - Applicable to continuous query systems for monitoring
- Timestamp mechanism
  - Applicable to data reduction applications

Gigascope
- A high-performance streaming database for network monitoring
- Some fields of the input stream behave as timestamps
- Locality of input tuple
  - Aggregation must have a timestamp as one of the group-by fields
  - Join query relates timestamp fields of both inputs
  - Merge operator
    - Union operator
    - Preserves the timestamp property of one of the fields

Timestamps and Gigascope
- Effective as long as progress is made in all input streams
- Join or merge operators can stall if one input stream stalls, causing system failure

Example
- Sites monitored by Gigascope have multiple gigabit connections to the Internet
- One or more is a backup link through which traffic can be diverted if the primary link fails
- All links are monitored simultaneously
- Since the primary link has gigabit traffic and the backup link has no traffic, the merge operator's buffer will quickly overflow
- Presence of tuples carries timestamps, absence does not

Focus of Paper
- Authors propose use of heartbeats or punctuation to unblock operators
  - Heartbeats originate at source query operators and propagate throughout the query
  - Timestamp punctuations are generated at source query nodes and inferred at every other operator
  - Punctuated heartbeats unblock operators that would otherwise be blocked
- Focus is on multi-stream operators (joins and merges)
- Significantly reduces memory load for join and merge operators

Related Work
- Heartbeat
  - Widely used for fault tolerance in distributed systems
  - Remote nodes send periodic heartbeats to let other nodes know that they are alive
  - Heartbeats are also used in distributed DSMS
- Stream punctuations
  - Embed special marks in the stream to indicate the end of a subset of data
- Previous work on heartbeat mechanisms for streaming data has focused on enforcing the ordering of tuple timestamps before query processing

Gigascope Architecture
- Stream-only database
- No continuous queries, thus no windows to unblock queries
- Streams are labeled with timestampness, such as monotone increasing
  - Used by the query planner to unblock operators

Gigascope Architecture (Continued)
- Aggregation query
  - One of the group-by attributes must have timestampness
  - When this attribute changes, all groups and aggregates are flushed to the operator's output
  - We can define this as an epoch. Flush occurs at the end of each epoch
- Example: here time is labeled as monotone increasing
  - select tb, srcIP, count(*) from TCP group by time/60 as tb, srcIP
- In this query, we count the packets from each source IP address during 60-second epochs
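
The epoch-based flush can be illustrated with a minimal Python sketch. This is not Gigascope code: the function name `aggregate_by_epoch`, the `(time, src_ip)` input format, and the final flush at the end of a finite input are all assumptions made for illustration.

```python
from collections import Counter

def aggregate_by_epoch(packets, epoch_len=60):
    """Yield (tb, src_ip, count) rows, flushing all groups when the epoch changes.

    `packets` is an iterable of (time, src_ip) pairs with non-decreasing time.
    """
    current_tb = None
    counts = Counter()
    for time, src_ip in packets:
        tb = time // epoch_len          # same role as "time/60 as tb"
        if current_tb is not None and tb != current_tb:
            # The temporal group-by attribute changed: flush every group.
            for ip, n in sorted(counts.items()):
                yield (current_tb, ip, n)
            counts.clear()
        current_tb = tb
        counts[src_ip] += 1
    # Assumption for the demo only: a finite input ends, so flush the last epoch.
    for ip, n in sorted(counts.items()):
        yield (current_tb, ip, n)

rows = list(aggregate_by_epoch([
    (0, "10.0.0.1"), (30, "10.0.0.2"), (59, "10.0.0.1"), (61, "10.0.0.1"),
]))
```

Note that nothing is emitted for epoch 0 until a packet with time 61 arrives. If the stream goes silent, the groups stay buffered, which is the blocking problem the heartbeats address.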

Gigascope Architecture (Continued)
- Merge Operator
  - Union of two streams A and B, which must have the same schema
  - Both streams must have a timestamp field, such as t, on which to merge
- If tuples on A have a larger value of t than those on B, tuples on A are buffered until B catches up

Gigascope Architecture (Continued)
- Join Query
  - Must contain a join predicate such as A.tr = B.ts or A.tr/3 = B.ts+2
    - Relates a timestamp field from A with one from B
  - Input streams are buffered so that the streams match up on the timestamp

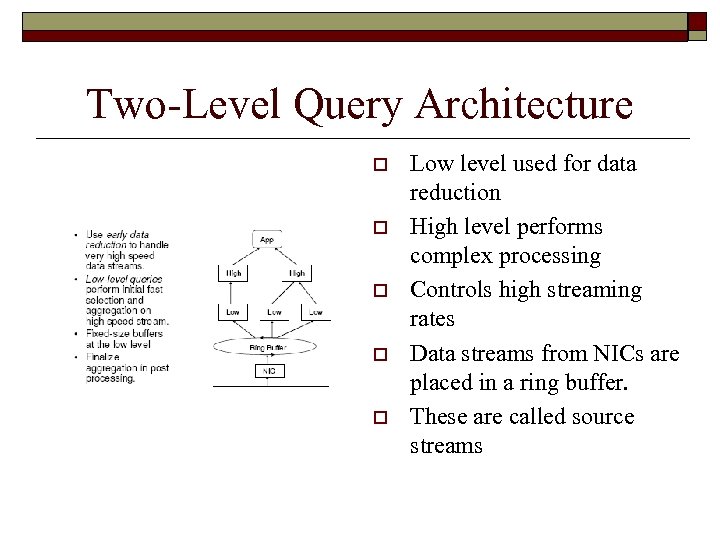

Two-Level Query Architecture
- Low level used for data reduction
- High level performs complex processing
- Controls high streaming rates
- Data streams from NICs are placed in a ring buffer. These are called source streams

Two-Level Query Architecture (continued)
- Since volumes are too large to provide a copy to each query, Gigascope creates a subquery
- For example:
  - Query Q is to be executed over source stream S
  - Gigascope creates a subquery q which directly accesses S
  - Q is transformed into Q0, which is executed over the stream output of q

Two-Level Query Architecture (continued)
- Low-level subqueries are called LFTAs
  - Fast, lightweight data reduction queries
- Objective is to quickly process the high-volume data stream in order to minimize buffer requirements
- Expensive processing is performed on the output of low-level queries
  - Smaller volume
  - Easily buffered

Two-Level Query Architecture (continued)
- Much of the subquery processing can be performed on the NIC itself
- Low-level aggregation uses a fixed-size hash table for maintaining groups in group by
- If a collision occurs in the hash table, the old group is ejected as a tuple and the new group replaces it in its slot
- Similar methodology to subaggregates and superaggregates in data cube computations
  - Higher-level queries complete the aggregation
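
A minimal Python sketch of this two-level aggregation follows. The class `DirectMappedAggregator` and the function `complete_aggregation` are hypothetical names, and integer keys are used only so that slot assignment is easy to follow. It shows the collision-eject behaviour, not Gigascope's actual implementation.

```python
from collections import Counter

class DirectMappedAggregator:
    """Low-level partial aggregation with a fixed number of slots (a sketch)."""

    def __init__(self, num_slots):
        self.slots = [None] * num_slots   # each slot holds [key, count] or None
        self.output = []                  # partial (key, count) tuples sent upward

    def add(self, key):
        i = hash(key) % len(self.slots)
        entry = self.slots[i]
        if entry is None:
            self.slots[i] = [key, 1]
        elif entry[0] == key:
            entry[1] += 1
        else:
            # Collision: eject the old group as a partial result,
            # and let the new group take over the slot.
            self.output.append(tuple(entry))
            self.slots[i] = [key, 1]

    def flush(self):
        """Emit every remaining group, e.g. at the end of an epoch."""
        for i, entry in enumerate(self.slots):
            if entry is not None:
                self.output.append(tuple(entry))
                self.slots[i] = None

def complete_aggregation(partials):
    """High-level step: combine partial counts that share a key."""
    totals = Counter()
    for key, n in partials:
        totals[key] += n
    return dict(totals)

low = DirectMappedAggregator(num_slots=2)
for key in [1, 3, 1, 1, 2]:   # keys 1 and 3 map to the same slot
    low.add(key)
low.flush()
totals = complete_aggregation(low.output)
```

Key 1 is emitted twice by the low level, once when ejected and once at the flush. The high-level step adds the two partial counts back together.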

Two-Level Query Architecture (continued)
- Traffic-shaping policies are implemented in order to spread out the processing load
  - Aggregation operator uses a slow flush to emit tuples when the aggregation epoch changes

Heartbeats to Unblock Streaming Operators
- Gigascope heartbeats are produced by low-level query operators
  - Propagated throughout the query DAG
  - Incur the same queuing delays as tuples
  - System performance monitoring
  - Aids in detecting failed nodes
- Stream punctuation mechanism is implemented
  - Injects special temporal update tuples into operator output streams
  - Informs the receiving operator of the end of a subset of data
- Authors first attempted on-demand generation of temporal update tuples
- Authors settled upon the approach of using heartbeats to carry temporal update tuples

Schema of Temporal Update Tuple
- Identical to a regular tuple
- All attributes marked as temporal are initialized with values that will not violate the ordering properties
  - e.g., timebucket is marked as temporal increasing
  - A temporal update tuple with timebucket = t is received by an operator
  - All future tuples will have timebucket >= t
- Non-temporal attributes are ignored by receiving operators
- Goal is to generate temporal attribute values aggressively and set them to the highest possible value

Heartbeat Generation at LFTA Level: Low-level Streaming Operators
- Read data directly from source data streams (packets sniffed from NICs)
- Use filtering, projection and aggregation to reduce the amount of data in a stream before passing it to higher-level nodes
- Two types of streaming operators: selection and aggregation
- Multi-query optimization through prefilters

Heartbeat Generation at LFTA Level: Low-level Streaming Operators
- Normal mode of operation is to block waiting for new tuples to be posted to the NIC's ring buffer
- Once a tuple is in the buffer, it is processed
- Wait is periodically interrupted to generate a punctuated heartbeat
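
The blocking wait with periodic interruption might look roughly like the following Python sketch. Several things here are assumptions: a `queue.Queue` stands in for the NIC ring buffer, `lfta_loop` is a hypothetical name, and the loop stops after `num_heartbeats` heartbeats only so that the example terminates.

```python
import queue
import time

def lfta_loop(ring_buffer, heartbeat_interval, num_heartbeats):
    """Process tuples as they arrive and emit a heartbeat every interval."""
    events = []
    deadline = time.monotonic() + heartbeat_interval
    sent = 0
    while sent < num_heartbeats:
        now = time.monotonic()
        if now >= deadline:
            # The wait was interrupted by the timer: generate a heartbeat.
            events.append(("heartbeat", None))
            sent += 1
            deadline += heartbeat_interval
            continue
        try:
            # Block for new data, but never past the next heartbeat deadline.
            tup = ring_buffer.get(timeout=deadline - now)
        except queue.Empty:
            continue
        events.append(("tuple", tup))
    return events

buf = queue.Queue()
buf.put({"time": 1})
buf.put({"time": 2})
events = lfta_loop(buf, heartbeat_interval=0.05, num_heartbeats=2)
```

Checking the deadline before each blocking read means heartbeats are still produced while the buffer is busy, not only when the link is idle.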

Heartbeats in select LFTAs
- Selection, projection and transformation are performed by LFTAs on packets arriving from the data stream source
- If the predicate is satisfied, an output tuple is generated according to the projection list
- A few additions
  - Modify accept_tuple to save all temporal attribute values referenced in the select clause
  - Whenever a request is received to generate a temporal update tuple, use the maximum of the saved value of the temporal attributes and a value saved by the prefilter to infer the value of the temporal update tuple
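
As a rough illustration of these two additions, here is a Python sketch of a selection operator. Only the name `accept_tuple` comes from the slide. The class `SelectLFTA`, the method `temporal_update`, the packet fields, and the choice to save the temporal value before the predicate is evaluated are assumptions.

```python
class SelectLFTA:
    """Selection operator that remembers the last temporal value it saw (a sketch)."""

    def __init__(self, predicate):
        self.predicate = predicate
        self.saved_time = None      # last value of the temporal attribute

    def accept_tuple(self, packet):
        # Assumption: the temporal value is saved even if the predicate fails,
        # since time is monotone increasing on the source stream.
        self.saved_time = packet["time"]
        if self.predicate(packet):
            return {"time": packet["time"], "srcIP": packet["srcIP"]}
        return None

    def temporal_update(self, prefilter_time):
        """Build a temporal update tuple from the saved and prefilter values."""
        candidates = [t for t in (self.saved_time, prefilter_time) if t is not None]
        if not candidates:
            return None             # nothing seen yet, no bound to report
        return {"time": max(candidates), "temporal_update": True}

lfta = SelectLFTA(lambda p: p["destPort"] == 80)
out1 = lfta.accept_tuple({"time": 5, "srcIP": "10.0.0.1", "destPort": 80})
out2 = lfta.accept_tuple({"time": 7, "srcIP": "10.0.0.2", "destPort": 22})
hb_a = lfta.temporal_update(prefilter_time=9)   # prefilter has seen later packets
hb_b = lfta.temporal_update(prefilter_time=6)   # operator's own value is newer
```

Taking the maximum matches the stated goal of setting temporal attributes to the highest value that is still safe.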

Heartbeats in Aggregation LFTAs
- Group-by and aggregation functionality is implemented using a direct-mapped hash table
- Collision results in the ejected tuple being sent to the output stream
  - High-level aggregation node completes aggregation of partial results produced by the LFTA
- Flushing occurs whenever an incoming tuple advances the epoch
- Slow flush is used to avoid overflow of buffers
  - Gradually emits tuples as new tuples arrive
- Last seen temporal values in the input stream are saved, similar to select
- This value is used to generate temporal update tuples
- Also maintains the value of the temporal attribute of the last tuple flushed

Heartbeats in Aggregation LFTAs
- Whenever a request for a heartbeat is received, the following rule is used to ensure that the heartbeat does not violate temporal attribute ordering properties:
  - If there are unflushed tuples: use the value of the last flushed tuple
  - Else: use the max of the saved value of the temporal attributes and the value saved by the prefilter
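
The rule can be written as a small Python function. The function and parameter names are hypothetical; the logic follows the two cases stated on the slide.

```python
def aggregation_heartbeat_value(has_unflushed, last_flushed, saved_input, prefilter_saved):
    """Pick a temporal value for a heartbeat from an aggregation LFTA (a sketch)."""
    if has_unflushed:
        # Groups from the old epoch are still waiting in the slow flush,
        # so the heartbeat must not promise anything newer than what was emitted.
        return last_flushed
    # Everything has been flushed: report the most recent value seen.
    return max(saved_input, prefilter_saved)

# During a slow flush of time bucket 12, with input already in bucket 13:
during_flush = aggregation_heartbeat_value(True, 12, 13, 13)
# After the flush completes:
after_flush = aggregation_heartbeat_value(False, 12, 13, 14)
```

The first case is the conservative one: a heartbeat carrying bucket 13 while bucket-12 groups are still pending would be followed by tuples with a smaller temporal value.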

Using System Time for Temporal Values
- When a link has no traffic for a long time, a heartbeat is generated using inference from system time
- Skew between the system clock and buffering has to be accounted for when setting up a Gigascope system
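
One way to account for skew is to subtract a configured bound from the system clock. The sketch below makes that assumption explicit. The function name, the `max_skew` parameter and the subtraction itself are illustrative choices, not the mechanism described by the authors.

```python
def heartbeat_from_system_time(system_time, max_skew, last_packet_time=None):
    """Infer a safe temporal value for an idle link from the system clock.

    Assumption: no packet can be stamped more than `max_skew` seconds
    behind the system clock by the time the operator sees it.
    """
    inferred = system_time - max_skew
    if last_packet_time is None:
        return inferred
    # Never go backwards relative to a timestamp already observed.
    return max(inferred, last_packet_time)

idle_value = heartbeat_from_system_time(system_time=1000, max_skew=2)
recent_value = heartbeat_from_system_time(system_time=1000, max_skew=2,
                                          last_packet_time=999)
```

A larger `max_skew` makes the heartbeat safer but less aggressive, so downstream operators unblock later.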

High Level Query Nodes (HFTA)
- Second level of query execution (two levels)
- LFTA for low-level filtering and sub-queries
- HFTA for complex query processing
  - Selection
  - Multiple types of aggregation
  - Stream merge
  - Inner and outer join
- Data from multiple streams (HFTAs, LFTAs)

HFTA Execution
- Normal mode of execution:
  - Block while waiting for new tuples to arrive
  - Determine which operator to apply to that stream
  - Process the incoming tuple for that operator
  - Route output (if the operator requires it)
- Also regularly receives temporal update tuples
  - Operators interpret temporal update tuples
  - Operators use these tuples to unblock themselves

HFTA: Heartbeats in selection
- Mostly identical to selection LFTAs
  - Unpack field values referenced in the query predicate
  - Check if the predicate is satisfied
  - If satisfied, generate the projection list
- Difference: can receive temporal update tuples (in addition to regular data tuples)
  - Temporal update tuple is received
  - Operator updates the saved values of all temporal attributes referenced in the query's select clause
  - Generates a new temporal update tuple
  - Rest of normal tuple processing is bypassed

HFTA: Heartbeats in aggregation and sampling
- Non-blocking operator
  - Aggregates data within a time window (epoch)
  - Epoch defined by values of temporal group-by attributes
- Required to keep all groups and corresponding aggregates until the end of the epoch
  - Then flushes to the output stream
  - “Slow flush” to avoid overflow
    - One output tuple for each input tuple
    - Change in epoch triggers an immediate flush

HFTA: Heartbeats in stream merge
- Union of two streams R and S in a way that preserves the ordering properties of the temporal attributes
- R and S must have the same schema
- Both must have a temporal field to merge on
- One stream is buffered until the other catches up (if their time fields differ)
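
How a temporal update tuple unblocks a merge can be sketched in Python as follows. The class `Merge`, its method names and the use of a heap are assumptions. The idea shown is only that buffered tuples may be released up to the smaller of the two streams' latest known temporal values.

```python
import heapq

class Merge:
    """Order-preserving union of streams R and S on a temporal field (a sketch)."""

    def __init__(self):
        self.latest = {"R": None, "S": None}   # last temporal value known per input
        self.pending = []                      # heap of (t, seq, payload)
        self.seq = 0                           # tie-breaker for equal timestamps
        self.output = []

    def on_tuple(self, stream, t, payload):
        self.latest[stream] = t
        heapq.heappush(self.pending, (t, self.seq, payload))
        self.seq += 1
        self._drain()

    def on_temporal_update(self, stream, t):
        # A heartbeat carries no data, but still advances the stream's clock.
        self.latest[stream] = t
        self._drain()

    def _drain(self):
        if None in self.latest.values():
            return                             # one input has never reported
        bound = min(self.latest.values())
        while self.pending and self.pending[0][0] <= bound:
            t, _, payload = heapq.heappop(self.pending)
            self.output.append((t, payload))

m = Merge()
for t in (1, 2, 3):
    m.on_tuple("R", t, f"r{t}")
buffered_before = len(m.pending)        # S is silent, so everything is held
m.on_temporal_update("S", 2)            # heartbeat: S will not produce t < 2
```

Without the heartbeat on S, the buffer for R keeps growing for as long as S has no traffic, which is the backup-link scenario from the earlier example slide.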

HFTA: Heartbeats in join
- Relate two data streams by timestamp
  - e.g., R.tr = 2 * S.ts
- INNER, LEFT, RIGHT, and FULL OUTER
- Minimum timestamps for R and S maintained
- Buffers input streams to ensure they match up by the timestamp predicate
- May include temporal attributes
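
The following Python sketch shows how per-stream minimum timestamps let a join discard buffered tuples. It is simplified in ways the slide is not: it handles only an inner join with the predicate R.tr = S.ts, and the class `TimestampJoin` and its methods are hypothetical.

```python
from collections import defaultdict

class TimestampJoin:
    """Inner join of R and S on equal timestamps, with purging (a sketch)."""

    def __init__(self):
        self.buffers = {"R": defaultdict(list), "S": defaultdict(list)}
        self.min_ts = {"R": None, "S": None}   # lower bound on future timestamps
        self.output = []

    @staticmethod
    def _other(stream):
        return "S" if stream == "R" else "R"

    def on_tuple(self, stream, ts, payload):
        other = self._other(stream)
        for match in self.buffers[other].get(ts, []):
            pair = (payload, match) if stream == "R" else (match, payload)
            self.output.append((ts, *pair))
        self.buffers[stream][ts].append(payload)
        self._advance(stream, ts)

    def on_temporal_update(self, stream, ts):
        self._advance(stream, ts)

    def _advance(self, stream, ts):
        # Future tuples on `stream` have timestamp >= ts, so tuples buffered
        # on the other side with a smaller timestamp can never match again.
        self.min_ts[stream] = ts
        other = self._other(stream)
        for old in [t for t in self.buffers[other] if t < ts]:
            del self.buffers[other][old]

    def buffered(self):
        return sum(len(v) for b in self.buffers.values() for v in b.values())

j = TimestampJoin()
j.on_tuple("R", 1, "r1")
j.on_tuple("R", 2, "r2")
j.on_tuple("S", 2, "s2")            # joins with r2 and purges r1
after_data = j.buffered()
j.on_temporal_update("S", 5)        # heartbeat on S purges the rest of R
after_heartbeat = j.buffered()
```

An outer join would emit unmatched tuples at the point where this sketch deletes them.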

Other heartbeat applications
- Initial goal of heartbeats was to collect statistics about nodes in a distributed setting
- Main focus of the paper is to use heartbeats to carry stream punctuation
- Found that there are other uses for heartbeats

Fault tolerance
- Heartbeat mechanism used to detect node failures in distributed systems
- Nodes must “ping” to indicate they are still alive
- Gigascope is slightly different:
  - Heartbeats are periodically generated by low-level queries
  - Propagated upward through the query execution DAG
- Constant flow of heartbeat tuples indicates whether a low-level sub-query is responding
  - No heartbeat over a specified amount of time: query has failed and recovery is initiated
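
Timeout-based failure detection can be sketched in a few lines of Python. The class `HeartbeatMonitor`, the query identifiers and the explicit `now` argument (used instead of a real clock to keep the example deterministic) are assumptions.

```python
class HeartbeatMonitor:
    """Flag sub-queries whose heartbeats have stopped arriving (a sketch)."""

    def __init__(self, timeout):
        self.timeout = timeout
        self.last_seen = {}          # query id -> time of last heartbeat

    def on_heartbeat(self, query_id, now):
        self.last_seen[query_id] = now

    def failed_queries(self, now):
        """Return the queries that have been silent for longer than the timeout."""
        return sorted(q for q, t in self.last_seen.items()
                      if now - t > self.timeout)

monitor = HeartbeatMonitor(timeout=3)
monitor.on_heartbeat("lfta_main", now=0)
monitor.on_heartbeat("lfta_control", now=0)
monitor.on_heartbeat("lfta_main", now=2)
failed = monitor.failed_queries(now=4)
```

Recovery itself is outside the scope of this sketch; it only identifies which sub-queries would trigger it.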

System performance analysis
- Heartbeat messages are subject to the same routing as other data in the network
- Heartbeats visit all nodes
- Statistics gathered on every node visit
- Full trace of all nodes
- Can be used to identify poorly performing nodes

Distributed query optimization
- Automated tools to utilize collected statistics
  - Re-optimize query execution plans (eliminate identified bottlenecks)
- Other statistics:
  - Predicate selectivities
  - Data arrival rates
  - Tuple processing costs

Performance evaluation
- Experiments conducted on a live network feed
- Queries monitor three network interfaces
  - main1 and main2: DAG4.3GE Gigabit Ethernet interfaces (see bulk of traffic)
  - control: 100 Mbit interface
- Approximately 100,000 packets per second
  - 400 Mbits/sec
- Dual-processor 2.8 GHz P4, 4 GB RAM, FreeBSD 4.10
- Focus is to unblock streaming operators
  - high-rate main links
  - low-rate backup links (control)

Evaluation: Unblocking stream merge using heartbeats
- Effect of heartbeats on memory usage of queries that use the stream merge operator
- Used the following query:
  SELECT tb, protocol, srcIP, destIP, srcPort, destPort, count(*)
  FROM DataProtocol
  GROUP BY time/10 as tb, protocol, srcIP, destIP, srcPort, destPort

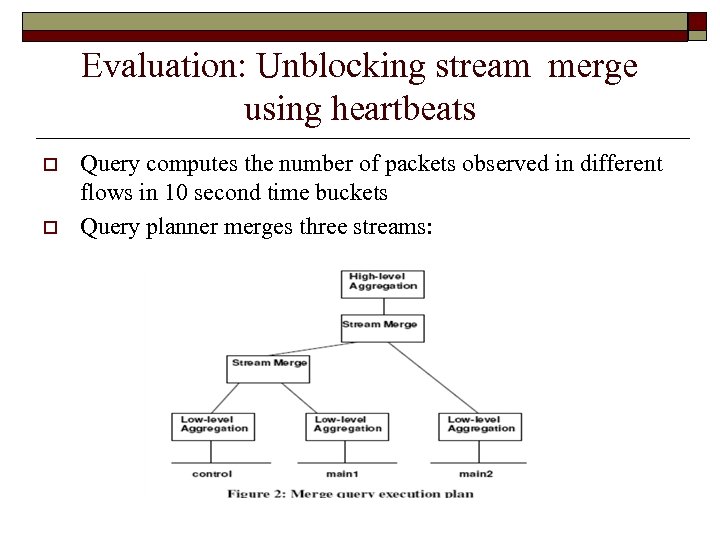

Evaluation: Unblocking stream merge using heartbeats
- Query computes the number of packets observed in different flows in 10-second time buckets
- Query planner merges three streams:

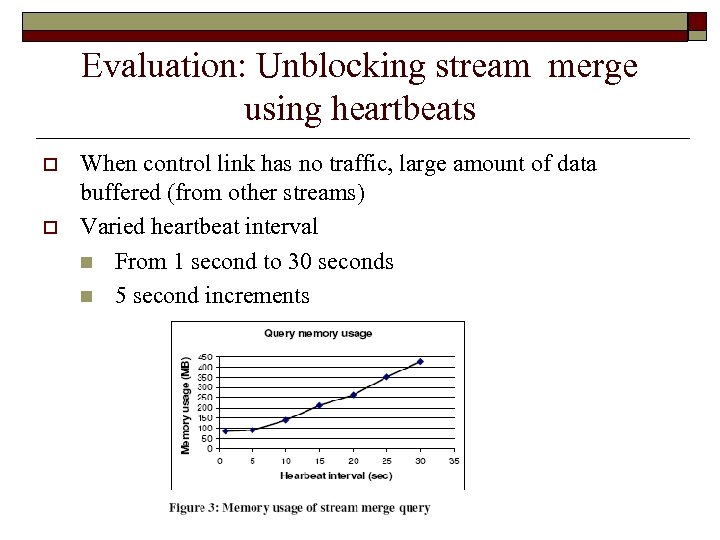

Evaluation: Unblocking stream merge using heartbeats
- When the control link has no traffic, a large amount of data is buffered (from other streams)
- Varied heartbeat interval
  - From 1 second to 30 seconds
  - 5-second increments

Evaluation: Unblocking stream merge using heartbeats
- Heartbeats successfully unblock the stream merge operators
  - Higher intervals: more state maintained
  - Also results in more data being flushed when an epoch advances (could cause query failure when the system lacks traffic shaping, such as slow flush)

Evaluation: Unblocking join operators using heartbeats
- How effectively do heartbeats…
  - Unblock join queries
  - Reduce overall query memory requirements

Evaluation: Unblocking join operators using heartbeats (continued)
- Query flow1:
  SELECT tb, protocol, srcIP, destIP, srcPort, destPort, count(*) as cnt
  FROM [main0_and_control].DataProtocol
  GROUP BY time/10 as tb, protocol, srcIP, destIP, srcPort, destPort;
- Query flow2:
  SELECT tb, protocol, srcIP, destIP, srcPort, destPort, count(*) as cnt
  FROM main1.DataProtocol
  GROUP BY time/10 as tb, protocol, srcIP, destIP, srcPort, destPort;

Evaluation: Unblocking join operators using heartbeats (continued)
- Query full_flow:
  SELECT flow1.tb, flow1.protocol, flow1.srcIP, flow1.destIP, flow1.srcPort, flow1.destPort, flow1.cnt, flow2.cnt
  OUTER_JOIN FROM flow1, flow2
  WHERE flow1.srcIP = flow2.srcIP and flow1.destIP = flow2.destIP
    and flow1.srcPort = flow2.srcPort and flow1.destPort = flow2.destPort
    and flow1.protocol = flow2.protocol and flow1.tb = flow2.tb

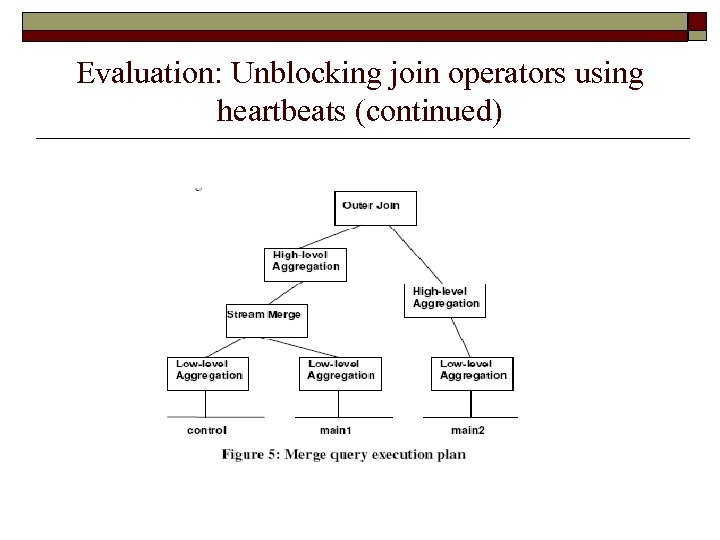

Evaluation: Unblocking join operators using heartbeats (continued)

Evaluation: Unblocking join operators using heartbeats (continued)
- Two sub-queries compute the flows aggregated in 10-second time buckets
  - main1 + control
  - main2
- Full query results combined using a full outer join for final output

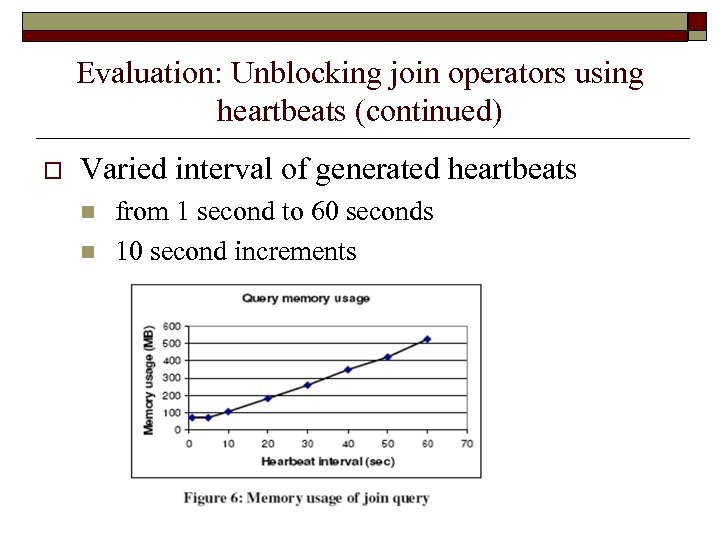

Evaluation: Unblocking join operators using heartbeats (continued)
- Varied interval of generated heartbeats
  - From 1 second to 60 seconds
  - 10-second increments

Evaluation: Unblocking join operators using heartbeats (continued)
- Memory usage grows linearly with the heartbeat interval, so shorter intervals use less memory
  - Avoids accumulating large state in blocking operators
  - Decreases burstiness of output (since less data is dumped at the end of the heartbeat interval when the interval is low)

Evaluation: CPU overhead of heartbeat generation
- Measure CPU overhead from heartbeats
- Measure average CPU load of a merge query:
  SELECT tb, protocol, srcIP, destIP, srcPort, destPort, count(*)
  FROM DataProtocol
  GROUP BY time/10 as tb, protocol, srcIP, destIP, srcPort, destPort
- Run on two high-rate interfaces
- Compared a 1-second heartbeat interval vs. an identical system with heartbeats disabled

Evaluation: CPU overhead of heartbeat generation (continued)
- Both monitored links have moderately high load, so merge operators are naturally unblocked
- Heartbeats disabled: 37.3% CPU load
- Heartbeats enabled: 37.5% CPU load
- Insignificant difference
  - Explained by variations in traffic load
- Conclusion: overhead of the heartbeat mechanism is immeasurably small

Conclusion
- Heartbeats regularly generated
  - Propagated to all nodes
  - Attach temporal update tuples to unblock operators
- Heartbeat mechanism can be used for other applications in a distributed setting
  - Detecting node failure
  - Performance monitoring
  - Query optimization
- Performance evaluation
  - Capable of working at multiple-gigabit line speeds
  - Significantly decreases query memory utilization
