711a6243141941ae45048033fb3fa1c1.ppt

- Number of slides: 53

A Future for Parallel Computer Architectures
Mark D. Hill
Computer Sciences Department, University of Wisconsin–Madison
Multifacet Project (www.cs.wisc.edu/multifacet)
August 2004
Full Disclosure: I consult for Sun & the US NSF
© 2004 Mark D. Hill, Wisconsin Multifacet Project


Summary
• Issues
  – Moore's Law, etc.
  – Instruction Level Parallelism for More Performance
  – But Memory Latency Is Longer (e.g., 200 FP multiplies)
• Must Exploit Memory Level Parallelism
  – At Thread: Runahead & Continual Flow Pipeline
  – At Processor: Simultaneous Multithreading
  – At Chip: Chip Multiprocessing


Outline
• Computer Architecture Drivers
  – Moore's Law, Microprocessors, & Caching
• Instruction Level Parallelism (ILP) Review
• Memory Level Parallelism (MLP)
• Improving MLP of a Thread
• Improving MLP of a Core or Chip
• CMP Systems


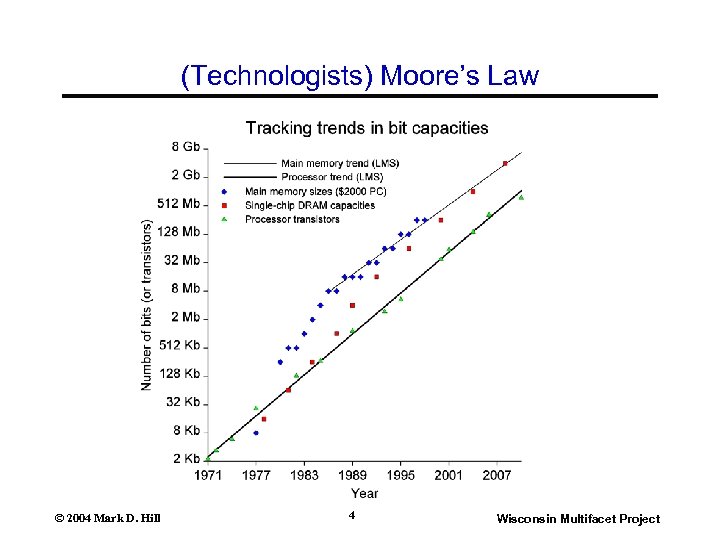

(Technologists) Moore's Law


What If Your Salary?
• Parameters
  – $16 base
  – 59% growth/year
  – 40 years
• Initially, $16: buy book
• 3rd year's $64: buy computer game
• 16th year's $27,000: buy car
• 22nd year's $430,000: buy house
• 40th year's > billion dollars: buy a lot
You have to find fundamental new ways to spend money!
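
The salary numbers above follow from plain compound growth. The sketch below assumes year 0 is the $16 starting point, because the slide does not say how years are counted. The names `BASE`, `GROWTH`, `salary`, and `DOUBLING_YEARS` are introduced here for illustration.

```python
import math

BASE = 16.0    # starting salary in dollars (from the slide)
GROWTH = 0.59  # 59% growth per year (from the slide)

def salary(year: int) -> float:
    """Salary after `year` years of compounding; year 0 is the $16 base."""
    return BASE * (1.0 + GROWTH) ** year

# Doubling time implied by 59%/year: solve (1 + GROWTH) ** t == 2 for t.
DOUBLING_YEARS = math.log(2) / math.log(1.0 + GROWTH)

if __name__ == "__main__":
    for year in (0, 3, 16, 22, 40):
        print(f"year {year:2d}: ${salary(year):,.0f}")
    print(f"doubling time: {DOUBLING_YEARS:.2f} years")
```

Under that assumption, the sketch reproduces the slide's figures: $64, about $27,000, about $430,000, and more than $1 billion. It also shows that 59% per year is the same as doubling roughly every 1.5 years (about 18 months).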


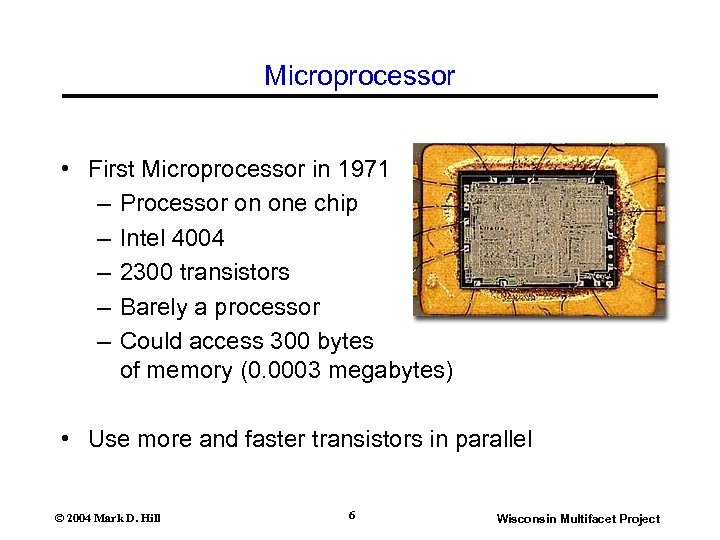

Microprocessor
• First Microprocessor in 1971
  – Processor on one chip
  – Intel 4004
  – 2300 transistors
  – Barely a processor
  – Could access 300 bytes of memory (0.0003 megabytes)
• Use more and faster transistors in parallel


Other "Moore's Laws"
• Other technologies improving rapidly
  – Magnetic disk capacity
  – DRAM capacity
  – Fiber-optic network bandwidth
• Other aspects improving slowly
  – Delay to memory
  – Delay to disk
  – Delay across networks
• Computer Implementor's Challenge
  – Design with dissimilarly expanding resources
  – To double computer performance every two years
  – A.k.a. (Popular) Moore's Law


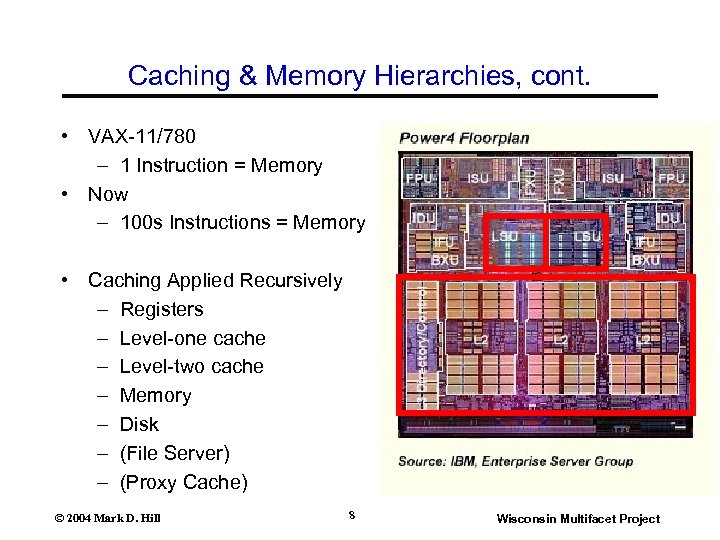

Caching & Memory Hierarchies, cont.
• VAX-11/780
  – 1 memory access ≈ 1 instruction time
• Now
  – 1 memory access ≈ 100s of instruction times
• Caching Applied Recursively
  – Registers
  – Level-one cache
  – Level-two cache
  – Memory
  – Disk
  – (File Server)
  – (Proxy Cache)
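
"Caching applied recursively" is commonly quantified with the average memory access time (AMAT) recurrence: the time at a level is its hit time plus its local miss rate times the time of the next level down. The sketch below shows that recurrence. The `Level` class and all latencies and miss rates are hypothetical, chosen only to show how a 300-cycle memory can still average a few cycles per access.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass
class Level:
    name: str
    hit_time: float   # cycles to access this level
    miss_rate: float  # local miss rate: fraction of accesses reaching this level that miss

def amat(levels: Sequence[Level], memory_latency: float) -> float:
    """Average access time: hit_time + miss_rate * (time of next level), applied recursively."""
    time = memory_latency              # main memory is the last level and always "hits"
    for level in reversed(levels):     # fold from the level nearest memory back to L1
        time = level.hit_time + level.miss_rate * time
    return time

if __name__ == "__main__":
    # Hypothetical hierarchy: L1 and L2 caches in front of a 300-cycle memory.
    hierarchy = [Level("L1", 2, 0.05), Level("L2", 12, 0.20)]
    print(f"AMAT = {amat(hierarchy, memory_latency=300):.1f} cycles")
```

With these made-up numbers the L2 level averages 12 + 0.2 × 300 = 72 cycles and the whole hierarchy averages 5.6 cycles. Every miss that does reach memory still costs the full 300 cycles.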


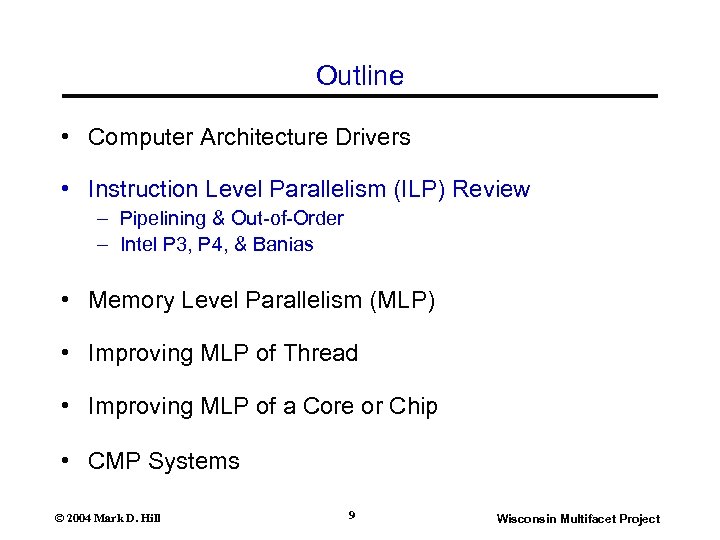

Outline
• Computer Architecture Drivers
• Instruction Level Parallelism (ILP) Review
  – Pipelining & Out-of-Order
  – Intel P3, P4, & Banias
• Memory Level Parallelism (MLP)
• Improving MLP of a Thread
• Improving MLP of a Core or Chip
• CMP Systems


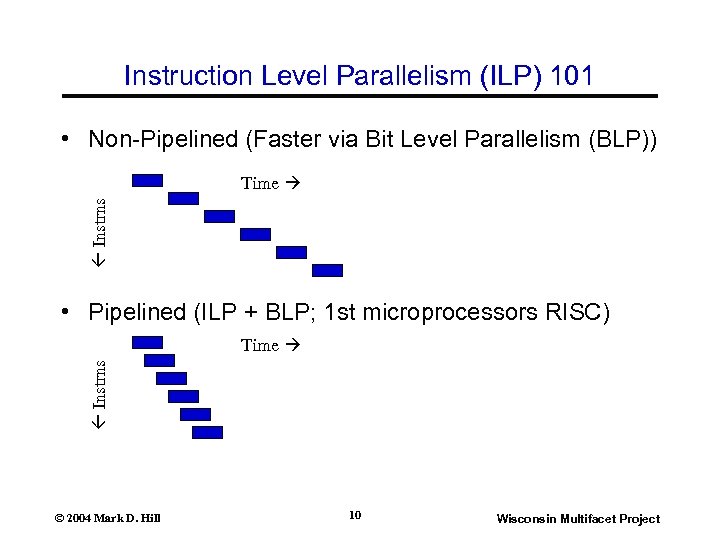

Instruction Level Parallelism (ILP) 101
• Non-Pipelined (faster via Bit Level Parallelism (BLP))
• Pipelined (ILP + BLP; first RISC microprocessors)


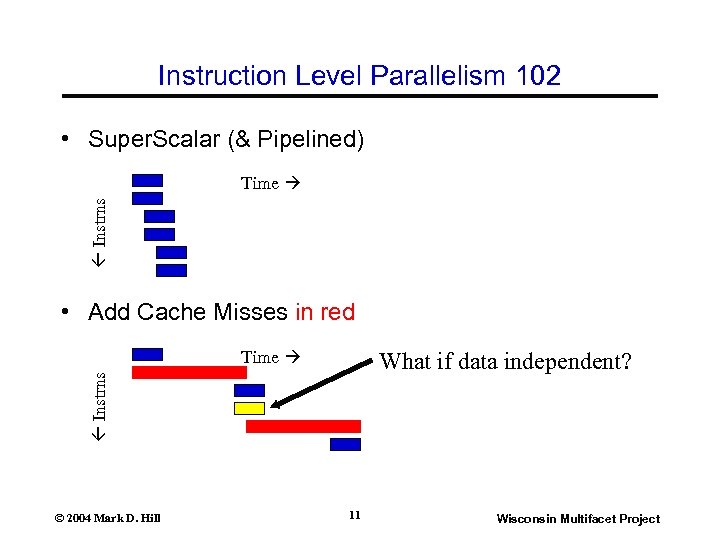

Instruction Level Parallelism 102
• SuperScalar (& Pipelined)
• Add Cache Misses (in red)
• What if data independent?
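
The ideal timelines in the ILP 101 and 102 figures can be counted directly. The sketch below assumes a textbook model: every stage takes one cycle, there are no hazards, and there are no cache misses. The function names and the 100-instruction, 5-stage, 4-wide parameters are hypothetical.

```python
import math

def non_pipelined_cycles(n: int, stages: int) -> int:
    """Each instruction uses the whole datapath for `stages` cycles; no overlap."""
    return n * stages

def pipelined_cycles(n: int, stages: int) -> int:
    """One instruction enters per cycle once the first has filled the pipeline."""
    return 0 if n == 0 else stages + (n - 1)

def superscalar_cycles(n: int, stages: int, width: int) -> int:
    """Up to `width` instructions enter per cycle (ideal: no hazards, no misses)."""
    return 0 if n == 0 else stages + math.ceil(n / width) - 1

if __name__ == "__main__":
    n, k, w = 100, 5, 4  # hypothetical: 100 instructions, 5 stages, 4-wide
    print(non_pipelined_cycles(n, k), pipelined_cycles(n, k), superscalar_cycles(n, k, w))
```

In this model the counts are 500, 104, and 29 cycles. A miss that stalls every later instruction simply adds its full latency on top. That is why the slide asks what happens if the later instructions are data independent.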


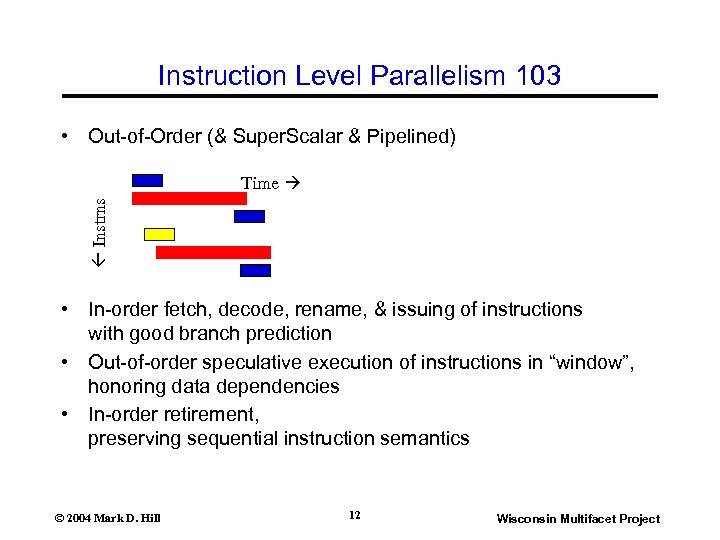

Instruction Level Parallelism 103
• Out-of-Order (& SuperScalar & Pipelined)
• In-order fetch, decode, rename, & issuing of instructions with good branch prediction
• Out-of-order speculative execution of instructions in "window", honoring data dependencies
• In-order retirement, preserving sequential instruction semantics


Out-of-Order Example: Intel x86 P6 Core
• "CISC" Twist to Out-of-Order
  – In-order front end cracks x86 instructions into micro-ops (like RISC instructions)
  – Out-of-order execution
  – In-order retirement of micro-ops in x86 instruction groups
• Used in Pentium Pro, II, & III
  – 3-way superscalar of micro-ops
  – 10-stage pipeline (counted as branch misprediction penalty)
  – Sophisticated branch prediction
  – Deep pipeline allowed scaling for many generations


![Pentium 4 Core](https://present5.com/presentation/711a6243141941ae45048033fb3fa1c1/image-14.jpg)
Pentium 4 Core [Hinton 2001]
• Follow basic approach of P6 core
• Trace cache stores dynamic micro-op sequences
• 20-stage pipeline (counted as branch misprediction penalty)
• 126 micro-ops in flight (up to 48 loads & 24 stores)
• Deep pipeline to allow scaling for many generations


Intel Kills Pentium 4 Roadmap
• Why? I can speculate
• Too Much Power?
  – More transistors
  – Higher-frequency transistors
  – Designed before power became a first-order design constraint
• Too Little Performance?
  – Time/Program = Instructions/Program * Cycles/Instruction * Time/Cycle
  – For x86 (same instruction count): Performance ∝ Instructions/Cycle * Frequency
  – Pentium 4 Instructions/Cycle loss vs. Frequency gains?
• Intel moving away from marketing with frequency!
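
The IPC-versus-frequency question can be made concrete with the equation above. The sketch compares two hypothetical designs running the same x86 program: a shorter pipeline with higher IPC and a deeper pipeline with a higher clock. The IPC and clock values and the function names are assumptions, not Pentium data.

```python
def exec_time_s(instructions: float, cpi: float, clock_hz: float) -> float:
    """Time/Program = Instructions/Program * Cycles/Instruction * Time/Cycle."""
    return instructions * cpi / clock_hz

def relative_perf(ipc: float, clock_hz: float) -> float:
    """Same x86 program => same instruction count, so performance tracks IPC * frequency."""
    return ipc * clock_hz

if __name__ == "__main__":
    n = 1e9  # hypothetical instruction count for one x86 program
    # Hypothetical (IPC, clock in Hz) pairs: the deeper pipeline trades IPC for frequency.
    designs = {"shorter pipeline": (1.0, 2.0e9), "deeper pipeline": (0.7, 3.0e9)}
    for name, (ipc, hz) in designs.items():
        t_ms = exec_time_s(n, 1.0 / ipc, hz) * 1e3
        print(f"{name}: {t_ms:.0f} ms, IPC*f = {relative_perf(ipc, hz):.2e}")
```

With these made-up numbers, a 50% higher clock buys only about 5% more performance. An IPC below about 0.67 on the deeper design would erase the gain entirely.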


![Pentium M / Banias](https://present5.com/presentation/711a6243141941ae45048033fb3fa1c1/image-16.jpg)
Pentium M / Banias [Gochman 2003]
• For laptops, but now more general
  – Key: A feature must add at least 1% performance for every 3% power it costs
  – Why: Increasing voltage for 1% perf. costs 3% power
• Techniques
  – Enhanced Intel SpeedStep™
  – Shorter pipeline (more like P6)
  – Better branch predictor (e.g., loops)
  – Special handling of memory stack
  – Fused micro-ops
  – Lower-power transistors (off critical path)
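
The 1%-for-3% rule can be derived from a common first-order power model. Dynamic power scales roughly as C·V²·f, and frequency scales roughly linearly with voltage, so power grows roughly as f³. Buying 1% more speed by raising voltage and frequency therefore costs about 1.01³ − 1 ≈ 3% more power. The sketch below encodes that model and the resulting feature filter. Both the cubic model and the function names are illustrative assumptions, not Intel's published method.

```python
def voltage_scaling_power_cost(perf_gain: float) -> float:
    """Fractional power increase needed to buy `perf_gain` by raising voltage and frequency.

    First-order assumption: dynamic power ~ C * V**2 * f and f ~ V, so power ~ f**3,
    and (1 + gain)**3 - 1 is about 3 * gain for small gains.
    """
    return (1.0 + perf_gain) ** 3 - 1.0

def feature_worthwhile(perf_gain: float, power_cost: float) -> bool:
    """Keep a feature only if it buys performance at least as cheaply as voltage scaling."""
    return power_cost <= voltage_scaling_power_cost(perf_gain)

if __name__ == "__main__":
    print(f"{voltage_scaling_power_cost(0.01):.4f}")  # about 0.03: ~3% power for 1% performance
    print(feature_worthwhile(0.01, 0.02), feature_worthwhile(0.01, 0.05))  # True False
```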


What about the Future for Intel & Others?
• Worry about power & energy (not this talk)
• Memory latency too great for out-of-order cores to tolerate (coming next)
Memory Level Parallelism for Thread, Processor, & Chip!


Outline
• Computer Architecture Drivers
• Instruction Level Parallelism (ILP) Review
• Memory Level Parallelism (MLP)
  – Cause & Effect
• Improving MLP of a Thread
• Improving MLP of a Core or Chip
• CMP Systems


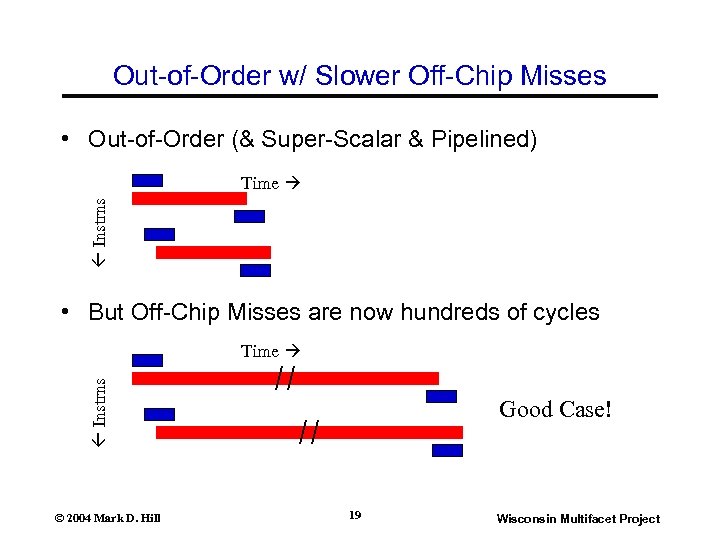

Out-of-Order w/ Slower Off-Chip Misses
• Out-of-Order (& SuperScalar & Pipelined)
• But Off-Chip Misses are now hundreds of cycles (Good Case!)


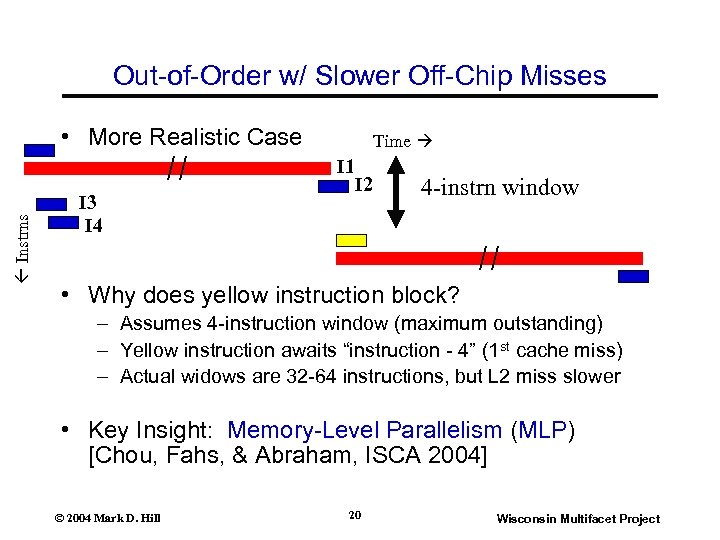

Out-of-Order w/ Slower Off-Chip Misses
• More Realistic Case (figure: 4-instruction window)
• Why does the yellow instruction block?
  – Assumes a 4-instruction window (maximum instructions outstanding)
  – Yellow instruction awaits "instruction - 4" (1st cache miss) to retire
  – Actual windows are 32-64 instructions, but L2 misses are slower
• Key Insight: Memory-Level Parallelism (MLP) [Chou, Fahs, & Abraham, ISCA 2004]
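
The window effect described above can be reproduced with a toy timing model. The model makes these simplifying assumptions:
- Instructions are independent.
- One instruction enters the window per cycle.
- Instruction i may enter only after instruction i − window has retired.
- Retirement is in order.
- Any latency above 1 marks an off-chip miss.

`simulate` and the 100-cycle miss latency are hypothetical. MLP is computed as off-chip accesses divided by memory phases, where a phase is a group of overlapping misses.

```python
from typing import List, Tuple

def simulate(latencies: List[int], window: int) -> Tuple[int, float]:
    """Toy model returning (total cycles, MLP) for independent instructions."""
    n = len(latencies)
    issue, retire = [0] * n, [0] * n
    for i, lat in enumerate(latencies):
        issue[i] = 0 if i == 0 else issue[i - 1] + 1
        if i >= window:
            issue[i] = max(issue[i], retire[i - window])  # wait for a free window slot
        done = issue[i] + lat
        retire[i] = done if i == 0 else max(done, retire[i - 1])  # in-order retirement
    # Group overlapping miss intervals into memory phases.
    misses = sorted((issue[i], issue[i] + lat) for i, lat in enumerate(latencies) if lat > 1)
    phases, phase_end = 0, -1
    for start, end in misses:
        if start >= phase_end:  # does not overlap the current phase: start a new one
            phases += 1
            phase_end = end
        else:
            phase_end = max(phase_end, end)
    mlp = len(misses) / phases if phases else 0.0
    return (retire[-1] if n else 0), mlp

if __name__ == "__main__":
    program = [100, 1, 1, 1, 100, 1, 1, 1]  # hypothetical: misses at positions 0 and 4
    print(simulate(program, window=4))  # second miss waits for the first to retire
    print(simulate(program, window=8))  # both misses overlap
```

With a 4-entry window the second miss is exactly "instruction − 4" behind the first, so it serializes: 200 cycles, MLP = 1. Widening the window to 8 overlaps the two misses: 104 cycles, MLP = 2.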


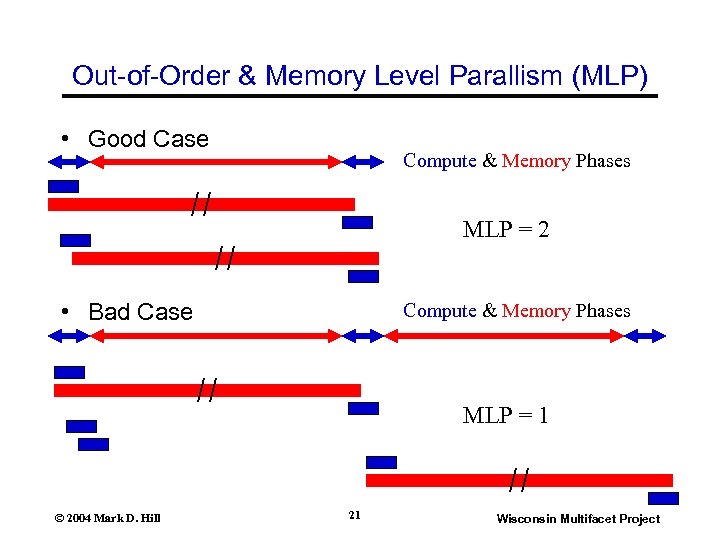

Out-of-Order & Memory Level Parallelism (MLP)
• Good Case: Compute & Memory Phases, MLP = 2
• Bad Case: Compute & Memory Phases, MLP = 1


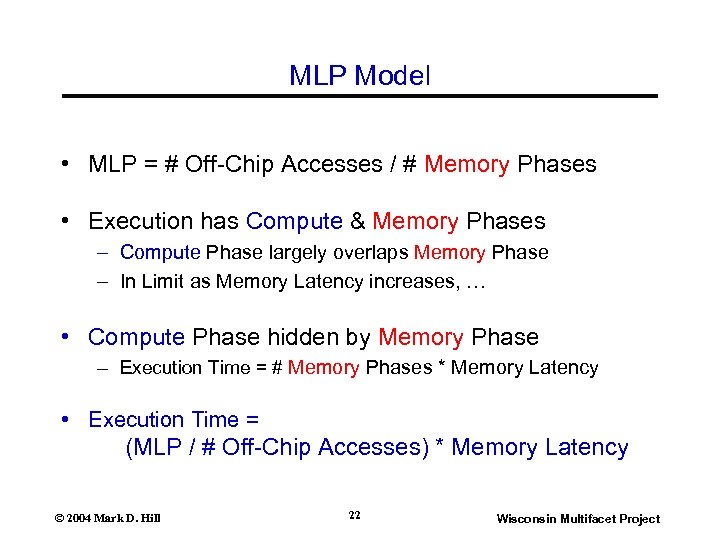

MLP Model
• MLP = # Off-Chip Accesses / # Memory Phases
• Execution has Compute & Memory Phases
  – Compute Phase largely overlaps Memory Phase
  – In the limit, as Memory Latency increases, …
• Compute Phase hidden by Memory Phase
  – Execution Time = # Memory Phases * Memory Latency
• Execution Time = (# Off-Chip Accesses / MLP) * Memory Latency
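
Spelled out, the model's two statements combine in one step. The symbols are introduced here for brevity: $N_{\mathrm{off}}$ is the number of off-chip accesses, $N_{\mathrm{phase}}$ the number of memory phases, and $L_{\mathrm{mem}}$ the memory latency. The approximation holds only in the memory-bound limit, where compute phases are hidden.

$$
\mathrm{MLP} = \frac{N_{\mathrm{off}}}{N_{\mathrm{phase}}}
\quad\Longrightarrow\quad
N_{\mathrm{phase}} = \frac{N_{\mathrm{off}}}{\mathrm{MLP}}
$$

$$
T_{\mathrm{exec}} \approx N_{\mathrm{phase}} \cdot L_{\mathrm{mem}}
= \frac{N_{\mathrm{off}}}{\mathrm{MLP}} \cdot L_{\mathrm{mem}}
$$

For example, with a hypothetical 1,000 off-chip accesses at 300 cycles each, MLP = 1 gives about 300,000 cycles and MLP = 2 gives about 150,000 cycles.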


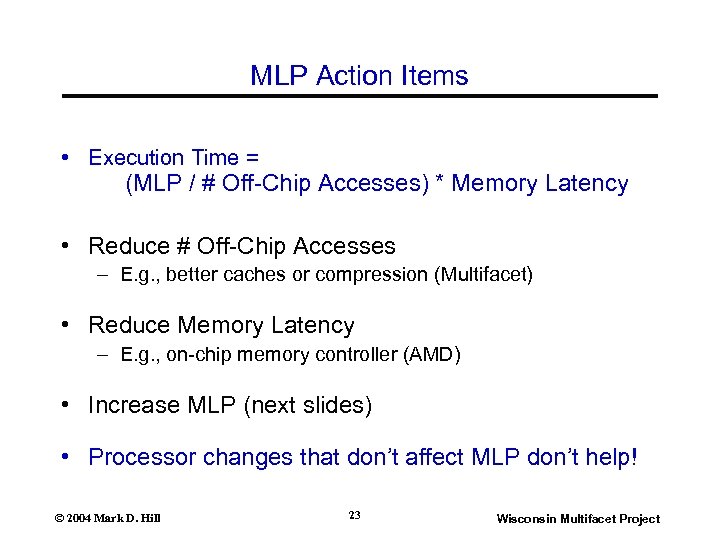

MLP Action Items
• Execution Time = (# Off-Chip Accesses / MLP) * Memory Latency
• Reduce # Off-Chip Accesses
  – E.g., better caches or compression (Multifacet)
• Reduce Memory Latency
  – E.g., on-chip memory controller (AMD)
• Increase MLP (next slides)
• Processor changes that don't affect MLP don't help (in this memory-bound limit)!
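
The three levers above can be compared side by side with the memory-bound MLP model. The sketch uses a hypothetical workload of 1,000 off-chip accesses at 300 cycles each with MLP = 1. The lever sizes are also arbitrary, chosen only for illustration.

```python
def exec_time_cycles(off_chip_accesses: int, mlp: float, memory_latency: float) -> float:
    """Memory-bound limit of the MLP model: (# off-chip accesses / MLP) * memory latency."""
    if mlp <= 0:
        raise ValueError("MLP must be positive")
    return off_chip_accesses / mlp * memory_latency

if __name__ == "__main__":
    base = dict(off_chip_accesses=1000, mlp=1.0, memory_latency=300)  # hypothetical workload
    levers = {
        "baseline": base,
        "halve off-chip accesses (better caches)": {**base, "off_chip_accesses": 500},
        "cut latency by 1/3 (on-chip controller)": {**base, "memory_latency": 200},
        "double MLP": {**base, "mlp": 2.0},
    }
    for name, params in levers.items():
        print(f"{name:42s} {exec_time_cycles(**params):>9,.0f} cycles")
```

Halving off-chip accesses and doubling MLP have the same effect in this model. A faster core with unchanged MLP does not appear in the formula at all.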


![What Limits MLP in Processor?](https://present5.com/presentation/711a6243141941ae45048033fb3fa1c1/image-24.jpg)
What Limits MLP in Processor? [Chou et al.]
• Issue window and reorder buffer size
• Instruction fetch off-chip accesses
• Unresolvable mispredicted branches
• Load and branch issue restrictions
• Serializing instructions


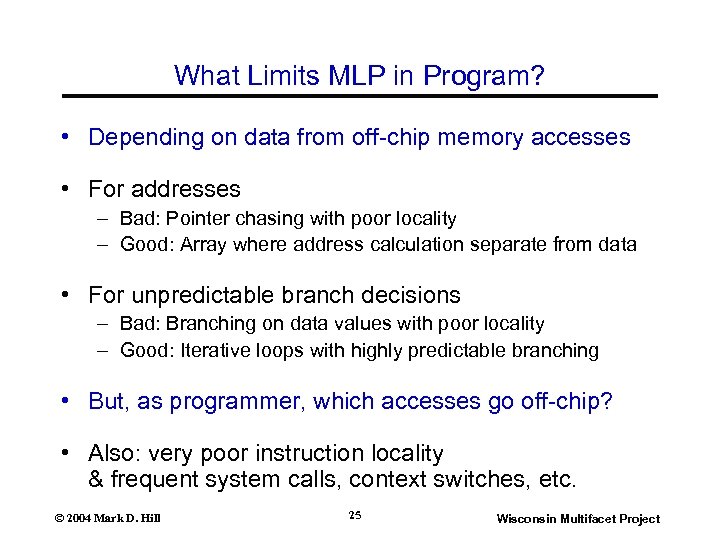

What Limits MLP in Program?
• Depending on data from off-chip memory accesses
• For addresses
  – Bad: Pointer chasing with poor locality
  – Good: Array where address calculation is separate from data
• For unpredictable branch decisions
  – Bad: Branching on data values with poor locality
  – Good: Iterative loops with highly predictable branching
• But, as programmer, which accesses go off-chip?
• Also: very poor instruction locality & frequent system calls, context switches, etc.
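
The pointer-chasing versus array contrast comes down to the longest chain of dependent misses. That chain length is a lower bound on the number of memory phases, even with an unbounded window. The sketch below assumes three things: every listed load misses off-chip, the window is unlimited, and `deps[i]` names the earlier loads whose data load i needs to form its address. `memory_phases` and the 8-load examples are hypothetical.

```python
from typing import Dict, List

def memory_phases(deps: Dict[int, List[int]], loads: List[int]) -> int:
    """Minimum memory phases = longest chain of dependent off-chip loads (`loads` in program order)."""
    depth: Dict[int, int] = {}
    for i in loads:
        depth[i] = 1 + max((depth[p] for p in deps.get(i, []) if p in depth), default=0)
    return max(depth.values(), default=0)

if __name__ == "__main__":
    n = 8
    # Pointer chasing (p = p->next): each address comes from the previous load's data.
    list_walk = {i: [i - 1] for i in range(1, n)}
    # Array walk (a[i]): each address is base + i * size, independent of loaded data.
    array_walk: Dict[int, List[int]] = {}
    for name, deps in (("pointer chasing", list_walk), ("array", array_walk)):
        phases = memory_phases(deps, list(range(n)))
        print(f"{name}: {phases} phases, MLP = {n / phases:g}")
```

Eight chained loads need eight phases (MLP = 1) however large the window is. Eight array loads can all overlap in one phase (MLP = 8) if the window and miss buffers allow it.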


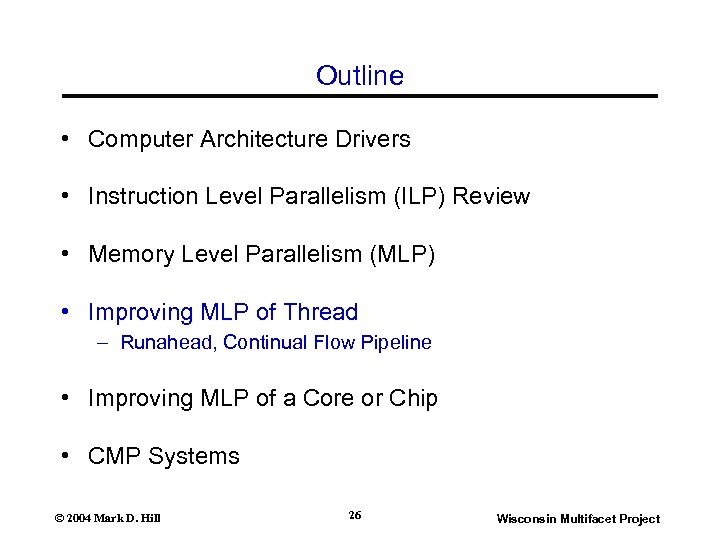

Outline
• Computer Architecture Drivers
• Instruction Level Parallelism (ILP) Review
• Memory Level Parallelism (MLP)
• Improving MLP of a Thread
  – Runahead, Continual Flow Pipeline
• Improving MLP of a Core or Chip
• CMP Systems


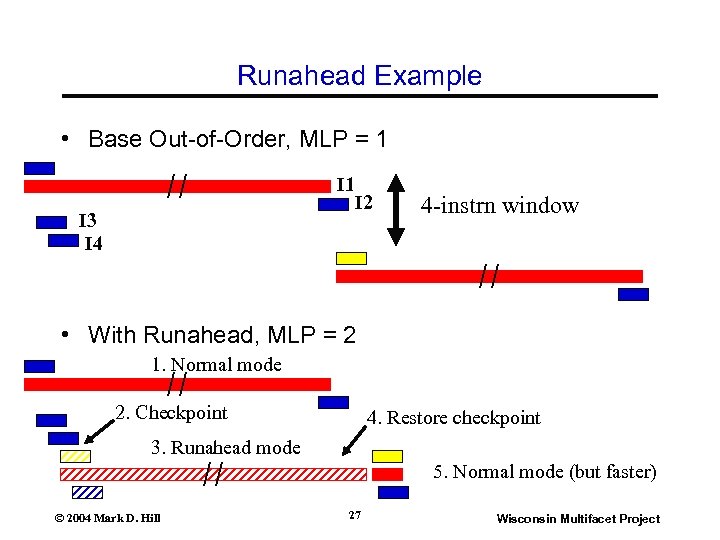

Runahead Example
• Base Out-of-Order, MLP = 1 (4-instruction window)
• With Runahead, MLP = 2
  1. Normal mode
  2. Checkpoint
  3. Runahead mode
  4. Restore checkpoint
  5. Normal mode (but faster)


![Runahead Execution](https://present5.com/presentation/711a6243141941ae45048033fb3fa1c1/image-28.jpg)
Runahead Execution [Dundas ICS 97, Mutlu HPCA 03]
1. Execute normally until instruction M's off-chip access blocks issue of more instructions
2. Checkpoint processor
3. Discard instruction M, set M's destination register to "poisoned", & speculatively run ahead
   – Instructions propagate "poisoned" from source to destination
   – Seek off-chip accesses to start prefetches & increase MLP
4. Restore checkpoint when off-chip access M returns
5. Resume normal execution (hopefully faster)
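
Step 3 is essentially a forward pass of poison propagation over the instructions that follow M. The sketch below shows that pass. Its main simplification is that every load in runahead mode misses off-chip, so a load's own destination is also poisoned because its data will not return in time. `Instr`, `runahead_prefetches`, and the register names are hypothetical. Real designs also handle stores, branches, and cache state, which this ignores.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple

@dataclass(frozen=True)
class Instr:
    name: str
    dest: Optional[str]          # destination register (None if none)
    srcs: Tuple[str, ...] = ()   # source registers (for a load: its address registers)
    is_load: bool = False        # assumption: every load in runahead mode misses off-chip

def runahead_prefetches(blocking_dest: str, trace: List[Instr]) -> List[str]:
    """Names of loads after blocking miss M that runahead mode can turn into prefetches.

    All results are thrown away at checkpoint restore; only the prefetches
    (the extra MLP) survive.
    """
    poisoned: Set[str] = {blocking_dest}   # M's destination register starts poisoned
    prefetched: List[str] = []
    for ins in trace:
        bad = any(r in poisoned for r in ins.srcs)
        if ins.is_load and not bad:
            prefetched.append(ins.name)    # address known: start the off-chip access now
        if ins.dest is not None:
            if bad or ins.is_load:         # poisoned input, or load data not back yet
                poisoned.add(ins.dest)
            else:
                poisoned.discard(ins.dest) # overwritten with a valid value
    return prefetched

if __name__ == "__main__":
    # M is "r1 = load [r0]"; the trace below follows it (hypothetical).
    trace = [
        Instr("A", "r2", ("r1",)),                # r2 = r1 + 8     (depends on M: poisoned)
        Instr("B", "r3", ("r2",), is_load=True),  # r3 = load [r2]  (poisoned address: skipped)
        Instr("C", "r4", ("r5",), is_load=True),  # r4 = load [r5]  (independent: prefetched)
        Instr("D", "r6", ("r4",)),                # r6 = r4 * 2     (C's data not back: poisoned)
    ]
    print(runahead_prefetches("r1", trace))  # ['C']
```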


![Continual Flow Pipeline](https://present5.com/presentation/711a6243141941ae45048033fb3fa1c1/image-29.jpg)
Continual Flow Pipeline [Srinivasan ASPLOS 04]
Simplified Example
• Have off-chip access M free many resources, but SAVE
  – Keep decoding instructions
  – SAVE instructions dependent on M
  – Execute instructions independent of M
  – When M completes, execute SAVED instructions
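
The SAVE decision above amounts to computing the forward slice of instructions dependent on M. The sketch below shows that partition. It assumes register-only dependences and ignores memory ordering, one of the issues the next slide raises. It also assumes an instruction that overwrites a register gives later readers a fresh, independent value. The tuple encoding and `continual_flow` are hypothetical, not the paper's hardware structures.

```python
from typing import List, Set, Tuple

# Hypothetical encoding: (name, destination register, source registers).
Instr = Tuple[str, str, Tuple[str, ...]]

def continual_flow(miss_dest: str, trace: List[Instr]) -> Tuple[List[str], List[str]]:
    """Split the instructions after miss M into (executed now, SAVED until M completes).

    An instruction is dependent if any source is M's destination or the destination
    of an already-SAVED instruction. Everything else executes immediately and frees
    its resources; SAVED instructions run in program order once M returns.
    """
    pending: Set[str] = {miss_dest}   # registers whose values wait on M
    executed: List[str] = []
    saved: List[str] = []
    for name, dest, srcs in trace:
        if any(r in pending for r in srcs):
            saved.append(name)
            pending.add(dest)
        else:
            executed.append(name)
            pending.discard(dest)     # overwritten with an independent value
    return executed, saved

if __name__ == "__main__":
    trace = [
        ("A", "r2", ("r1",)),        # depends on M (r1)
        ("B", "r3", ("r5",)),        # independent
        ("C", "r4", ("r2", "r3")),   # depends on A, hence on M
        ("D", "r6", ("r3",)),        # independent
    ]
    print(continual_flow("r1", trace))  # (['B', 'D'], ['A', 'C'])
```

Only the SAVED slice (A and C here) re-enters the pipeline when M returns. B and D have already finished and are not repeated.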


Implications of Runahead & Continual Flow
• Runahead
  – Discards dependent instructions
  – Speculatively executes independent instructions
  – When miss returns, re-executes dependent & independent instructions
• Continual Flow Pipeline
  – Saves dependent instructions
  – Executes independent instructions
  – When miss returns, executes only saved dependent instructions
• Assessment
  – Both allow MLP to break past window limits
  – Both limited by branch prediction accuracy on unresolved branches
  – Continual Flow Pipeline sounds even more appealing
  – But it may not be worthwhile (vs. Runahead) & raises memory-ordering issues


Outline
• Computer Architecture Drivers
• Instruction Level Parallelism (ILP) Review
• Memory Level Parallelism (MLP)
• Improving MLP of a Thread
• Improving MLP of a Core or Chip
  – Core: Simultaneous Multithreading
  – Chip: Chip Multiprocessing
• CMP Systems


Getting MLP from Thread Level Parallelism
• Runahead & Continual Flow seek MLP for Thread
• More MLP for Processor?
  – More parallel off-chip accesses for a processor?
  – Yes: Simultaneous Multithreading
• More MLP for Chip?
  – More parallel off-chip accesses for a chip?
  – Yes: Chip Multiprocessing
• Exploit workload Thread Level Parallelism (TLP)


![Simultaneous Multithreading](https://present5.com/presentation/711a6243141941ae45048033fb3fa1c1/image-33.jpg)
Simultaneous Multithreading [U Washington]
• Turn a physical processor into S logical processors
• Need S copies of architectural state, S = 2, 4, (8?)
  – PC, Registers, PSW, etc. (small!)
• Completely share
  – Caches, functional units, & datapaths
• Manage via threshold sharing, partition, etc.
  – Physical registers, issue queue, & reorder buffer
• Intel calls it Hyperthreading in Pentium 4
  – 1.4x performance for S = 2 with little area, but added complexity
  – But Pentium 4 is now dead & no Hyperthreading in Banias
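
The slide names threshold sharing as one way to manage structures such as the reorder buffer, without detailing a policy. The sketch below is one simple hypothetical policy. Any thread may take a free entry, but no thread may hold more than `threshold` entries. That way, a thread stalled on an off-chip miss cannot fill the whole buffer and starve the other logical processors. `SharedROB` and its parameters are illustrative, not Intel's Hyperthreading design.

```python
from collections import Counter

class SharedROB:
    """Toy reorder buffer shared by S hardware threads under a per-thread cap."""

    def __init__(self, size: int, threshold: int) -> None:
        self.size = size
        self.threshold = threshold
        self.held: Counter = Counter()  # entries currently held, per thread id

    def try_allocate(self, tid: int) -> bool:
        """Allocate one entry for thread `tid`; refuse if full or the thread is at its cap."""
        if sum(self.held.values()) >= self.size or self.held[tid] >= self.threshold:
            return False
        self.held[tid] += 1
        return True

    def retire(self, tid: int) -> None:
        """Free one entry held by thread `tid` (in-order retirement within the thread)."""
        if self.held[tid] == 0:
            raise ValueError(f"thread {tid} holds no entries")
        self.held[tid] -= 1

if __name__ == "__main__":
    rob = SharedROB(size=8, threshold=6)  # hypothetical sizes
    got0 = sum(rob.try_allocate(0) for _ in range(10))  # thread 0 stalls on a miss, keeps allocating
    got1 = sum(rob.try_allocate(1) for _ in range(10))  # thread 1 still finds room
    print(got0, got1)  # 6 2
```

Setting `threshold` equal to `size` gives full sharing. Setting it to `size // S` gives a static partition, the other option the slide mentions.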


Simultaneous Multithreading Assessment
• Programming
  – Supports finer-grained sharing than old-style MP
  – But gains less than S and S is small
• Have Multi-Threaded Workload
  – Hides off-chip latencies better than Runahead
  – E.g., 4 threads w/ MLP 1.5 each gives MLP = 6
• Have Single-Threaded Workload
  – Base SMT: no help
  – Many "Helper Thread" ideas
• Expect SMT in processors for servers
• Probably SMT even in processors for clients


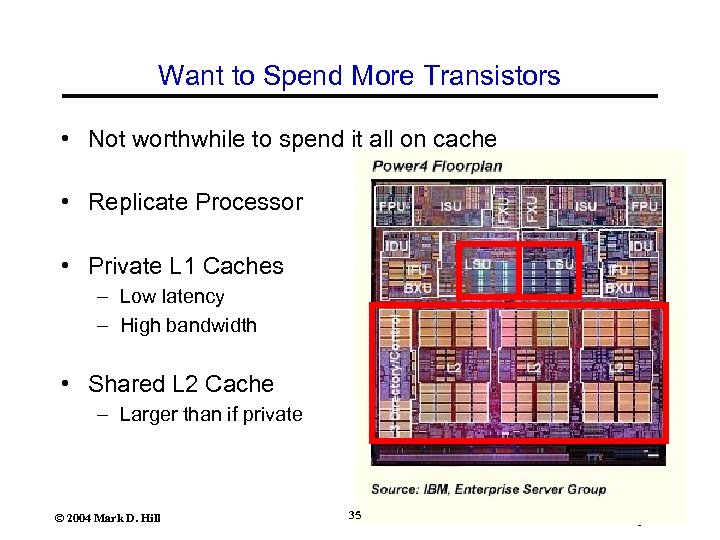

Want to Spend More Transistors
• Not worthwhile to spend it all on cache
• Replicate Processor
• Private L1 Caches
  – Low latency
  – High bandwidth
• Shared L2 Cache
  – Larger than if private


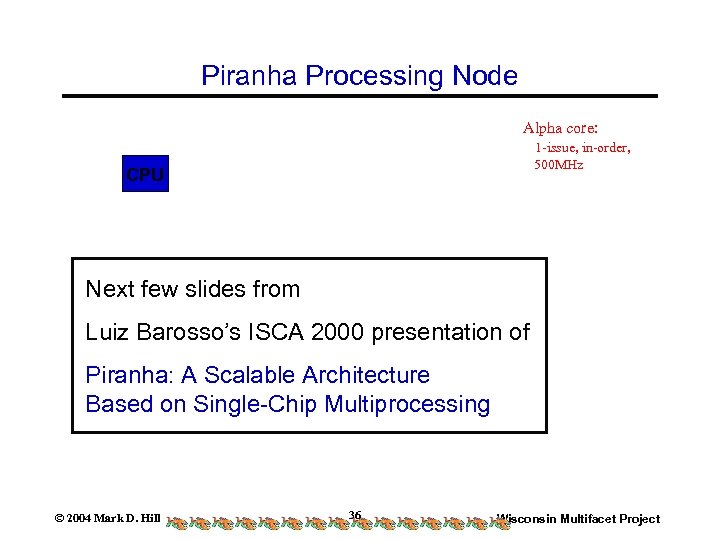

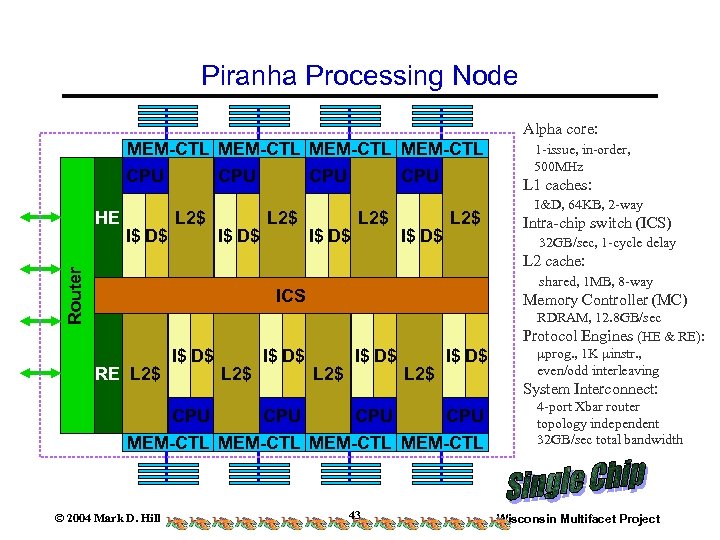

Piranha Processing Node
• Alpha core: 1-issue, in-order, 500 MHz
Next few slides from Luiz Barroso's ISCA 2000 presentation of "Piranha: A Scalable Architecture Based on Single-Chip Multiprocessing"


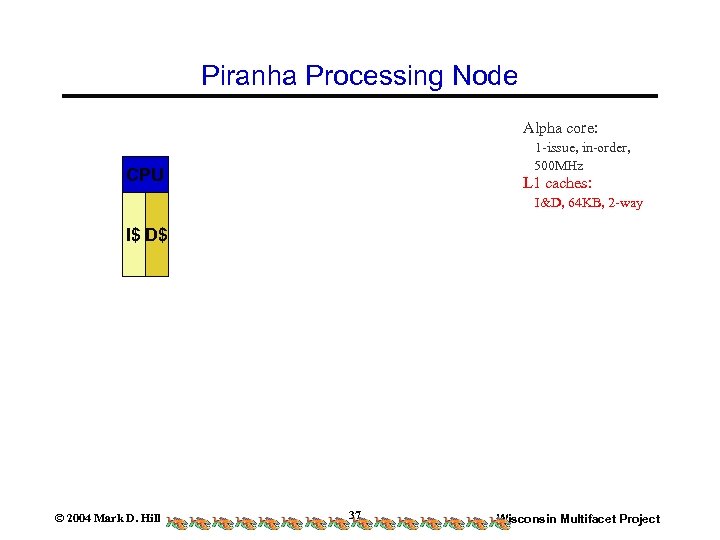

Piranha Processing Node
• Alpha core: 1-issue, in-order, 500 MHz
• L1 caches: I&D, 64 KB, 2-way


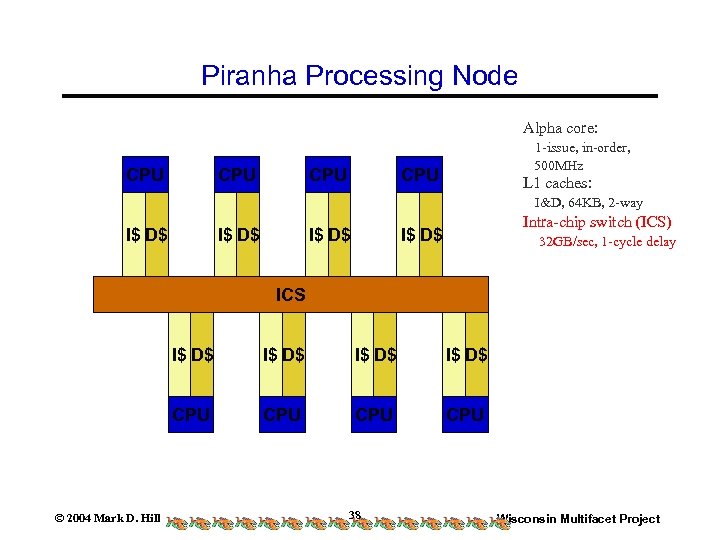

Piranha Processing Node
• Alpha core: 1-issue, in-order, 500 MHz
• L1 caches: I&D, 64 KB, 2-way
• Intra-chip switch (ICS): 32 GB/sec, 1-cycle delay


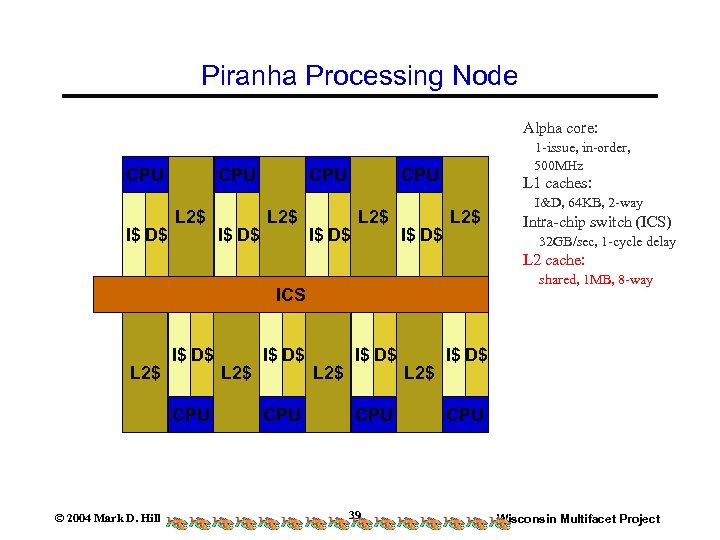

Piranha Processing Node
• Alpha core: 1-issue, in-order, 500 MHz
• L1 caches: I&D, 64 KB, 2-way
• Intra-chip switch (ICS): 32 GB/sec, 1-cycle delay
• L2 cache: shared, 1 MB, 8-way


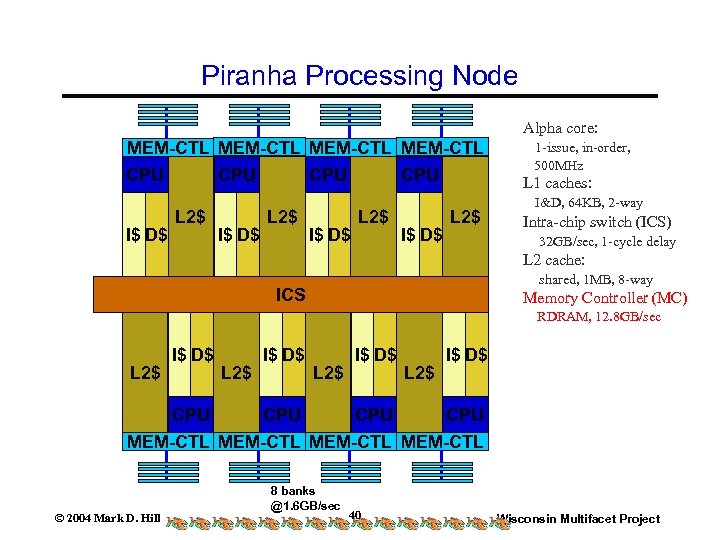

Piranha Processing Node
• Alpha core: 1-issue, in-order, 500 MHz
• L1 caches: I&D, 64 KB, 2-way
• Intra-chip switch (ICS): 32 GB/sec, 1-cycle delay
• L2 cache: shared, 1 MB, 8-way
• Memory Controller (MC): RDRAM, 12.8 GB/sec (8 banks @ 1.6 GB/sec)


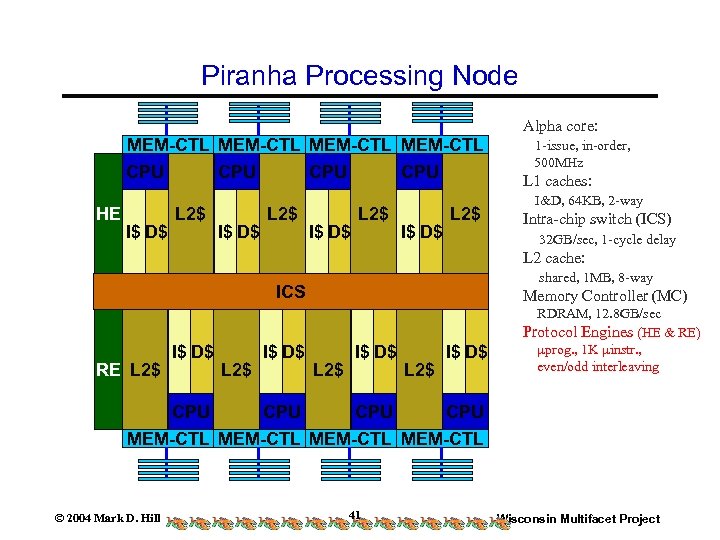

Piranha Processing Node
• Alpha core: 1-issue, in-order, 500 MHz
• L1 caches: I&D, 64 KB, 2-way
• Intra-chip switch (ICS): 32 GB/sec, 1-cycle delay
• L2 cache: shared, 1 MB, 8-way
• Memory Controller (MC): RDRAM, 12.8 GB/sec
• Protocol Engines (HE & RE): programmable, 1K instructions, even/odd interleaving


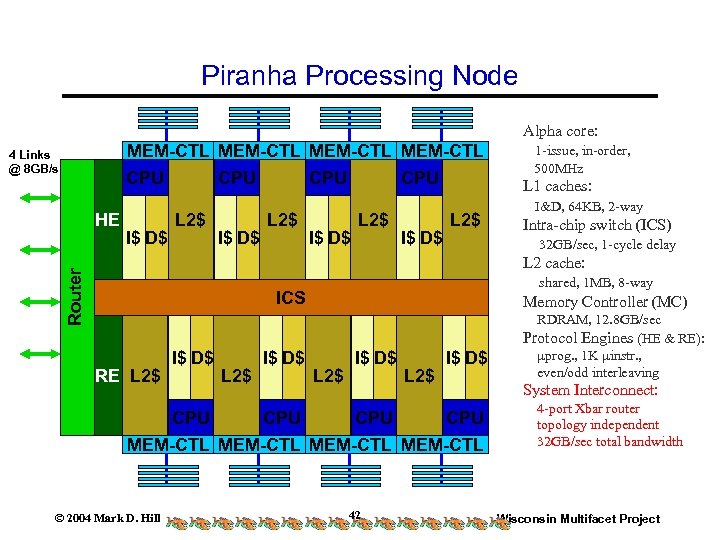

Piranha Processing Node
• Alpha core: 1-issue, in-order, 500 MHz
• L1 caches: I&D, 64 KB, 2-way
• Intra-chip switch (ICS): 32 GB/sec, 1-cycle delay
• L2 cache: shared, 1 MB, 8-way
• Memory Controller (MC): RDRAM, 12.8 GB/sec
• Protocol Engines (HE & RE): programmable, 1K instructions, even/odd interleaving
• System Interconnect: 4-port Xbar router, topology independent, 32 GB/sec total bandwidth (4 links @ 8 GB/s)


Piranha Processing Node
• Alpha core: 1-issue, in-order, 500 MHz
• L1 caches: I&D, 64 KB, 2-way
• Intra-chip switch (ICS): 32 GB/sec, 1-cycle delay
• L2 cache: shared, 1 MB, 8-way
• Memory Controller (MC): RDRAM, 12.8 GB/sec
• Protocol Engines (HE & RE): programmable, 1K instructions, even/odd interleaving
• System Interconnect: 4-port Xbar router, topology independent, 32 GB/sec total bandwidth


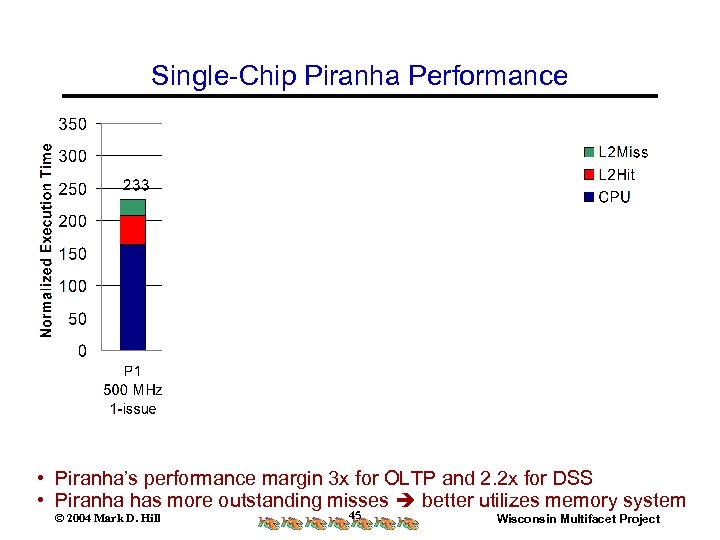

Single-Chip Piranha Performance • Piranha’s performance margin: 3x for OLTP and 2.2x for DSS • Piranha has more outstanding misses, so it better utilizes the memory system
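A rough way to see why more outstanding misses help: if each miss costs a fixed latency and up to m independent misses can overlap perfectly, the total stall time for N misses shrinks by roughly a factor of m. The sketch below is an idealized model that assumes equal latencies, perfect overlap, and no bandwidth or queuing limits; the function name `miss_service_time` and the 200-cycle latency are illustrative assumptions, not Piranha measurements.

```python
import math


def miss_service_time(num_misses: int, latency_cycles: int, max_outstanding: int) -> int:
    """Idealized cycles to service independent cache misses.

    Assumes every miss has the same latency and that up to
    `max_outstanding` misses overlap perfectly (no bandwidth limits,
    no queuing), so misses complete in batches of `max_outstanding`.
    """
    if max_outstanding < 1:
        raise ValueError("max_outstanding must be >= 1")
    batches = math.ceil(num_misses / max_outstanding)
    return batches * latency_cycles


# Hypothetical workload: 64 independent misses, 200-cycle memory latency.
for mlp in (1, 2, 8):
    print(mlp, miss_service_time(64, 200, mlp))
# prints "1 12800", then "2 6400", then "8 1600"
```

Real memory systems hit bandwidth and bank-conflict limits well before this ideal, so the model only bounds the benefit of extra outstanding misses.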

Chip Multiprocessing Assessment: Servers • Programming – Supports finer-grained sharing than old-style MP – But not as fine as SMT (yet) – Many cores can make the performance gain large • Can Yield MLP for the Chip! – Can do CMP of SMT processors – C cores of S-way SMT with T-way MLP per thread – Yields chip MLP of C*S*T (e.g., 8*2*2 = 32) • Most Servers Have Multi-Threaded Workloads • CMP Is a Server Inflection Point – Expect >10x performance for less cost, implying >>10x cost-performance
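The chip-MLP arithmetic on this slide fits in a one-line function. This sketch treats C*S*T as an upper bound, assuming every hardware thread sustains its full per-thread MLP at the same time; `chip_mlp` and its parameter names are hypothetical.

```python
def chip_mlp(cores: int, smt_ways: int, mlp_per_thread: int) -> int:
    """Peak outstanding misses for a CMP of SMT cores: C * S * T.

    Assumes every hardware thread can keep `mlp_per_thread` misses
    in flight simultaneously, so this is an upper bound.
    """
    for name, value in (("cores", cores), ("smt_ways", smt_ways),
                        ("mlp_per_thread", mlp_per_thread)):
        if value < 1:
            raise ValueError(f"{name} must be >= 1")
    return cores * smt_ways * mlp_per_thread


print(chip_mlp(8, 2, 2))  # 32, the slide's example
```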

Chip Multiprocessing Assessment: Clients • Most Clients (Today) Have Single-Threaded Workloads – Base CMP Is No Help – Use Thread Level Speculation? – Use Helper Threads? • CMPs for Clients? – Depends on Threads – CMP costs significant chip area (unlike SMT)

Outline • Computer Architecture Drivers • Instruction Level Parallelism (ILP) Review • Memory Level Parallelism (MLP) • Improving MLP of Thread • Improving MLP of a Core or Chip • CMP Systems – Small, Medium, but Not Large – Wisconsin Multifacet Token Coherence

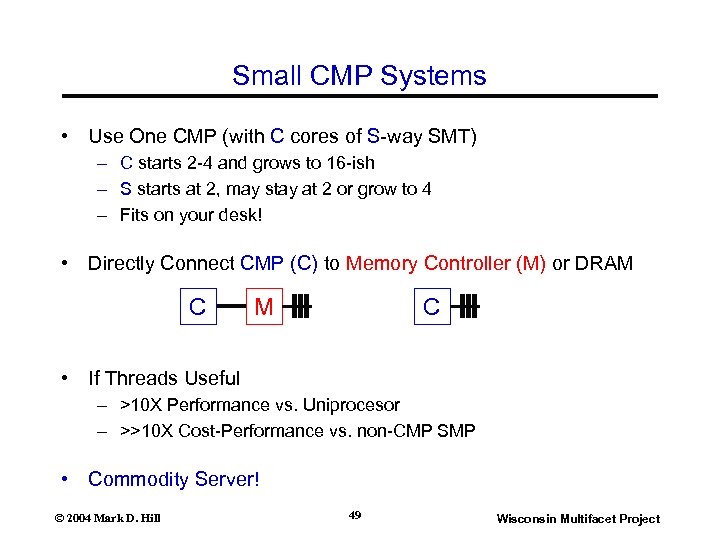

Small CMP Systems • Use One CMP (with C cores of S-way SMT) – C starts at 2-4 and grows to 16-ish – S starts at 2, may stay at 2 or grow to 4 – Fits on your desk! • Directly Connect CMP (C) to Memory Controller (M) or DRAM • If Threads Are Useful – >10X Performance vs. Uniprocessor – >>10X Cost-Performance vs. non-CMP SMP • Commodity Server!

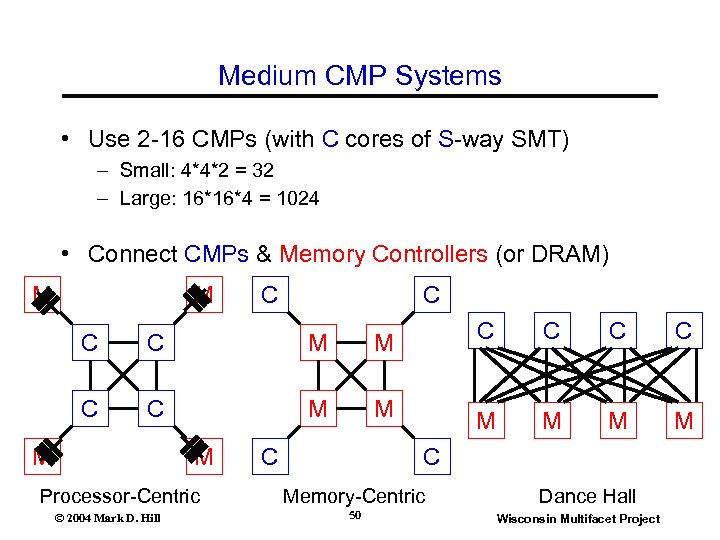

Medium CMP Systems • Use 2-16 CMPs (with C cores of S-way SMT) – Small: 4*4*2 = 32 – Large: 16*16*4 = 1024 • Connect CMPs & Memory Controllers (or DRAM) [Diagram: three ways to connect CMPs (C) and memory (M): Processor-Centric, Memory-Centric, Dance Hall]
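The configuration sizes on this slide multiply the number of CMPs by C cores per CMP and S-way SMT per core, giving the count of hardware thread contexts. A minimal sketch with a hypothetical `CmpSystem` type; a Small CMP System is the special case `chips=1`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CmpSystem:
    chips: int     # number of CMPs in the system
    cores: int     # C: cores per CMP
    smt_ways: int  # S: hardware threads per core

    def thread_contexts(self) -> int:
        """Total hardware thread contexts: chips * C * S."""
        return self.chips * self.cores * self.smt_ways


small_medium = CmpSystem(chips=4, cores=4, smt_ways=2)
large_medium = CmpSystem(chips=16, cores=16, smt_ways=4)
print(small_medium.thread_contexts())  # 32
print(large_medium.thread_contexts())  # 1024
```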

Large CMP Systems? • 1000s of CMPs? • Will not happen in the commercial market – Instead will network CMP systems into clusters – Enhances availability & reduces cost – Poor latency acceptable • Market for large scientific machines probably ~$0 Billion • Market for large government machines similar – Nevertheless, government can make this happen (like bombers) • The rest of us will use – A small- or medium-CMP system – A cluster of small- or medium-CMP systems

Wisconsin Multifacet (www.cs.wisc.edu/multifacet) • Designing Commercial Servers • Availability: SafetyNet Checkpointing [ISCA 2002] • Programmability: Flight Data Recorder [ISCA 2003] • Methods: Simulating a $2M Server on a $2K PC [Computer 2003] • Performance: Cache Compression [ISCA 2004] • Simplicity & Performance: Token Coherence (next)

Token Coherence [IEEE MICRO Top Picks 03] • Coherence Invariant (for any memory block at any time): – One writer or multiple readers • Implemented with distributed Finite State Machines • Indirectly enforced (bus order, acks, blocking, etc.) • Token Coherence Directly Enforces: – Each memory block has T tokens – Token count stored with data (even in messages) – Processor needs all T tokens to write – Processor needs at least one token to read • Last year: Glueless Multiprocessor – Speedup 17-54% vs. directory • This Year: Medium CMP Systems – Flat for correctness – Hierarchical for performance
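The token-counting rules on this slide can be sketched for a single block. This is a simplified illustration, not the published protocol: the class name `TokenBlock`, the holder names, and T = 4 are hypothetical; tokens move only through explicit `transfer` calls; and data movement, request/retry policy, and starvation avoidance are omitted.

```python
class TokenBlock:
    """Token counting for one memory block (simplified sketch).

    The block has T tokens split among holders. A holder may read if it
    has >= 1 token and may write only if it has all T. Tokens move
    between holders but are never created or destroyed.
    """

    def __init__(self, total_tokens: int, holders: list[str], home: str) -> None:
        if total_tokens < 1:
            raise ValueError("need at least one token")
        self.total = total_tokens
        self.tokens = {h: 0 for h in holders}
        self.tokens[home] = total_tokens  # memory starts with all tokens

    def can_read(self, holder: str) -> bool:
        return self.tokens[holder] >= 1

    def can_write(self, holder: str) -> bool:
        return self.tokens[holder] == self.total

    def transfer(self, src: str, dst: str, count: int) -> None:
        if count < 1 or self.tokens[src] < count:
            raise ValueError("src does not hold enough tokens")
        self.tokens[src] -= count
        self.tokens[dst] += count
        # Conservation: the total token count never changes.
        assert sum(self.tokens.values()) == self.total


blk = TokenBlock(4, ["mem", "p0", "p1"], home="mem")
blk.transfer("mem", "p0", 1)
blk.transfer("mem", "p1", 1)
print(blk.can_read("p0"), blk.can_read("p1"), blk.can_write("p0"))  # True True False
blk.transfer("p1", "p0", 1)
blk.transfer("mem", "p0", 2)
print(blk.can_write("p0"), blk.can_read("p1"))  # True False
```

Because tokens are conserved, a writer holding all T tokens leaves none for any other processor to read with, which is the one-writer-or-multiple-readers invariant enforced directly rather than through bus order or acknowledgments.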

Conclusions • Must Exploit Memory Level Parallelism! – At Thread: Runahead & Continual Flow Pipeline – At Processor: Simultaneous Multithreading – At Chip: Chip Multiprocessing • Talk to be filed: Google Mark Hill > Publications > 2004