692e62a3c8fdf23e8344e4fcfbe14c41.ppt

- Number of slides: 1

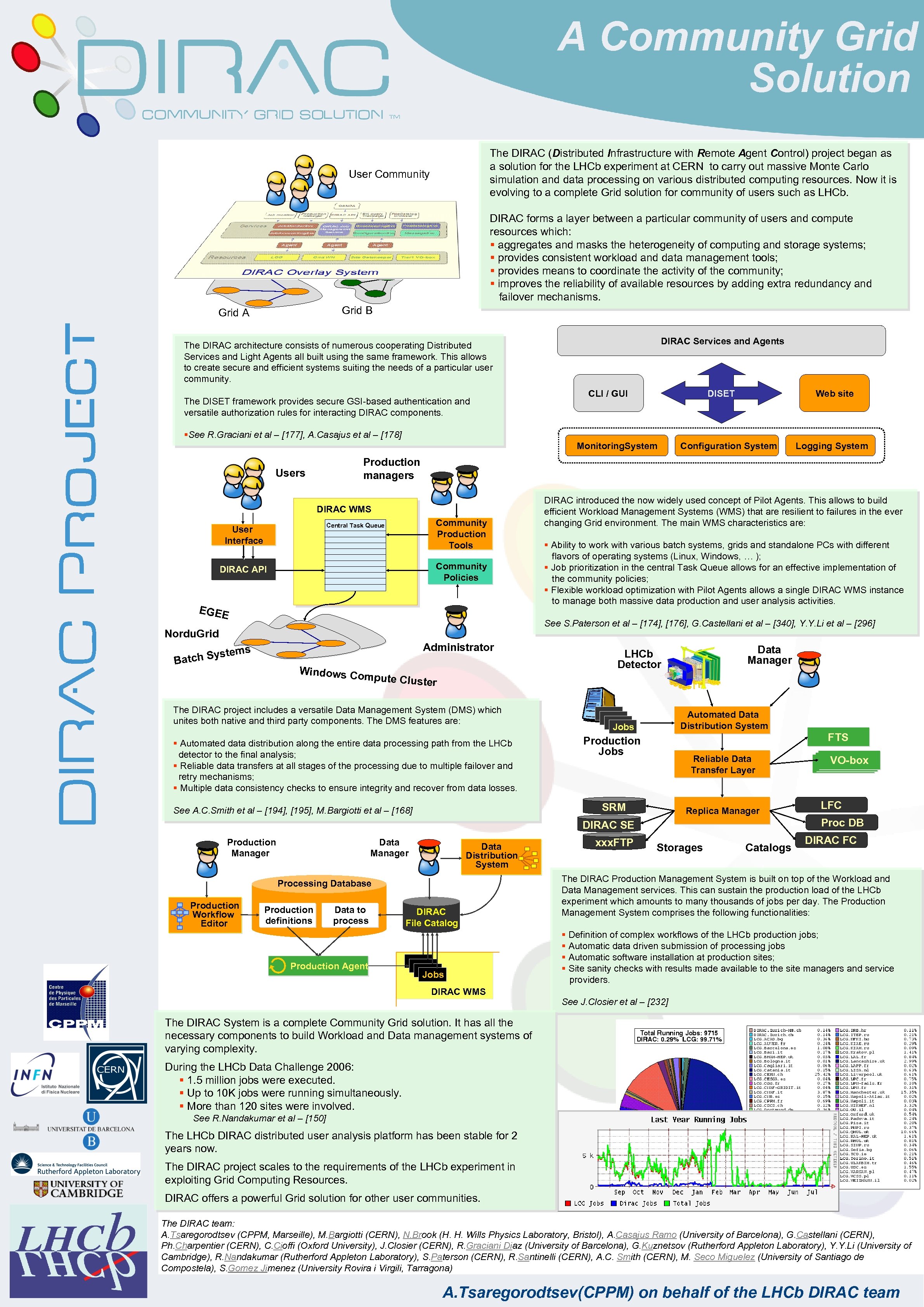

A Community Grid Solution

The DIRAC (Distributed Infrastructure with Remote Agent Control) project began as a solution for the LHCb experiment at CERN to carry out massive Monte Carlo simulation and data processing on various distributed computing resources. It is now evolving into a complete Grid solution for communities of users such as LHCb.

DIRAC forms a layer between a particular community of users and compute resources that:
- aggregates and masks the heterogeneity of computing and storage systems;
- provides consistent workload and data management tools;
- provides means to coordinate the activity of the community;
- improves the reliability of available resources by adding extra redundancy and failover mechanisms.

[Diagram labels: User Community, Grid A, Grid B, DIRAC Project]

DIRAC Services and Agents

The DIRAC architecture consists of numerous cooperating Distributed Services and Light Agents, all built using the same framework. This makes it possible to create secure and efficient systems suited to the needs of a particular user community. The DISET framework provides secure GSI-based authentication and versatile authorization rules for interacting DIRAC components.

See R. Graciani et al. [177], A. Casajus et al. [178]

[Diagram labels: DISET, CLI / GUI, Web site, Monitoring System, Configuration System, Production managers, Users, DIRAC WMS, Community Production Tools, Central Task Queue, User Interface, Community Policies, DIRAC API, EGEE]

DIRAC introduced the now widely used concept of Pilot Agents. This makes it possible to build efficient Workload Management Systems (WMS) that are resilient to failures in the ever-changing Grid environment.
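The Pilot Agent idea can be illustrated with a minimal sketch. It is not DIRAC code: the `TaskQueue` class, the `run_pilot` function, the job names and the `worker_ok` flag are all hypothetical, and the sketch assumes the usual pull model in which a pilot first checks the worker it landed on and only then asks a central queue for the highest-priority job it is able to run. A pilot that lands on a broken worker never takes a job, so the job stays queued for the next healthy pilot.

```python
import heapq
import itertools


class TaskQueue:
    """Hypothetical central queue: highest priority first, FIFO within a priority."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker that preserves submission order

    def submit(self, name, priority, platform):
        # heapq is a min-heap, so the priority is negated to pop the largest first.
        heapq.heappush(self._heap, (-priority, next(self._counter), name, platform))

    def match(self, platform):
        """Pop the best waiting job that can run on the given platform, or None."""
        skipped = []
        matched = None
        while self._heap:
            entry = heapq.heappop(self._heap)
            if entry[3] == platform:
                matched = entry[2]
                break
            skipped.append(entry)
        # Jobs that did not fit this pilot go back into the queue untouched.
        for entry in skipped:
            heapq.heappush(self._heap, entry)
        return matched


def run_pilot(queue, platform, worker_ok=True):
    """Hypothetical pilot: validate the worker first, then pull a job."""
    if not worker_ok:
        return None  # a failed environment check costs a pilot, not a user job
    return queue.match(platform)


queue = TaskQueue()
queue.submit("user-analysis", priority=5, platform="Linux")
queue.submit("mc-production", priority=1, platform="Linux")
queue.submit("win-analysis", priority=3, platform="Windows")

results = [
    run_pilot(queue, "Linux", worker_ok=False),  # broken worker: nothing is pulled
    run_pilot(queue, "Linux"),
    run_pilot(queue, "Windows"),
    run_pilot(queue, "Linux"),
    run_pilot(queue, "Linux"),                   # queue is empty by now
]
print(results)
# → [None, 'user-analysis', 'win-analysis', 'mc-production', None]
```

Because matching happens only when a pilot asks for work, priorities are applied at the latest possible moment, and a failing resource wastes a pilot slot but never a queued job.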
The main WMS characteristics are:
- Ability to work with various batch systems, grids and standalone PCs with different flavors of operating systems (Linux, Windows, …);
- Job prioritization in the central Task Queue allows for an effective implementation of the community policies;
- Flexible workload optimization with Pilot Agents allows a single DIRAC WMS instance to manage both massive data production and user analysis activities.

See S. Paterson et al. [174], [176], G. Castellani et al. [340], Y. Y. Li et al. [296]

[Diagram labels: NorduGrid, Administrator, Batch Systems, Windows Compute Cluster, Data Manager, LHCb Detector]

The DIRAC project includes a versatile Data Management System (DMS) which unites both native and third-party components. The DMS features are:
- Automated data distribution along the entire data processing path from the LHCb detector to the final analysis;
- Reliable data transfers at all stages of the processing due to multiple failover and retry mechanisms;
- Multiple data consistency checks to ensure integrity and recover from data losses.

See A. C. Smith et al. [194], [195], M. Bargiotti et al. [168]

[Diagram labels: Automated Data Distribution System, Jobs, FTS, Production Jobs, Reliable Data Transfer Layer, SRM, Replica Manager, Production Manager, Data Distribution System, Processing Database, Production definitions, Data to process, Production Agent, DIRAC File Catalog, DIRAC WMS, xxxFTP, VO-box, LFC, Proc DB, DIRAC SE, Production Workflow Editor, Logging System, Storages, Catalogs, DIRAC FC]

The DIRAC Production Management System is built on top of the Workload and Data Management services. It can sustain the production load of the LHCb experiment, which amounts to many thousands of jobs per day.
The Production Management System comprises the following functionalities:
- Definition of complex workflows of the LHCb production jobs;
- Automatic data-driven submission of processing jobs;
- Automatic software installation at production sites;
- Site sanity checks with results made available to the site managers and service providers.

See J. Closier et al. [232]

The DIRAC System is a complete Community Grid solution. It has all the necessary components to build Workload and Data Management systems of varying complexity. During the LHCb Data Challenge 2006:
- 1.5 million jobs were executed.
- Up to 10K jobs were running simultaneously.
- More than 120 sites were involved.

See R. Nandakumar et al. [150]

The LHCb DIRAC distributed user analysis platform has been stable for 2 years now. The DIRAC project scales to the requirements of the LHCb experiment in exploiting Grid Computing Resources. DIRAC offers a powerful Grid solution for other user communities.

The DIRAC team: A. Tsaregorodtsev (CPPM, Marseille), M. Bargiotti (CERN), N. Brook (H. H. Wills Physics Laboratory, Bristol), A. Casajus Ramo (University of Barcelona), G. Castellani (CERN), Ph. Charpentier (CERN), C. Cioffi (Oxford University), J. Closier (CERN), R. Graciani Diaz (University of Barcelona), G. Kuznetsov (Rutherford Appleton Laboratory), Y. Y. Li (University of Cambridge), R. Nandakumar (Rutherford Appleton Laboratory), S. Paterson (CERN), R. Santinelli (CERN), A. C. Smith (CERN), M. Seco Miguelez (University of Santiago de Compostela), S. Gomez Jimenez (University Rovira i Virgili, Tarragona)

A. Tsaregorodtsev (CPPM) on behalf of the LHCb DIRAC team
