
- Number of slides: 44

A Code Layout Framework for Embedded Processors with Configurable Memory Hierarchy

Kaushal Sanghai, David Kaeli

ECE Department, Northeastern University, Boston, MA

Outline

- Motivation and goals
- Blackfin 53x memory architecture
- L1 code memory configurations
- Code layout algorithm
- PGO linker tool
- Methodology
- Results
- Conclusions and future work
- References

Motivation

- Blackfin processor cores provide highly configurable memory subsystems to better match application-specific workload characteristics
- Spatial and temporal locality present in applications should be exploited to produce efficient layouts
- Code and data layout can be optimized by profile guidance

Motivation

- Most developers rely on hand-tuning the layout, which not only increases the time-to-market of embedded products but also results in an inefficient memory mapping
- Program optimization techniques that automatically optimize the memory layout for such memory subsystems are therefore needed

Goals

- Develop a complete code-mapping framework that provides automatic code layout for the range of L1 memory configurations available on Blackfin
- Create tools that enable fast and easy design space exploration across the range of L1 memory configurations
- Utilize execution profiles to tune code layout
- Evaluate the performance of the code mapping algorithms on the available L1 memory configurations for embedded multimedia applications

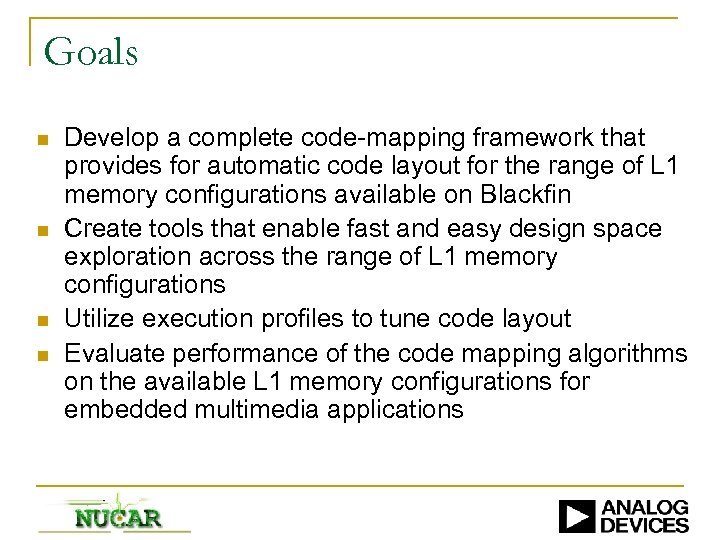

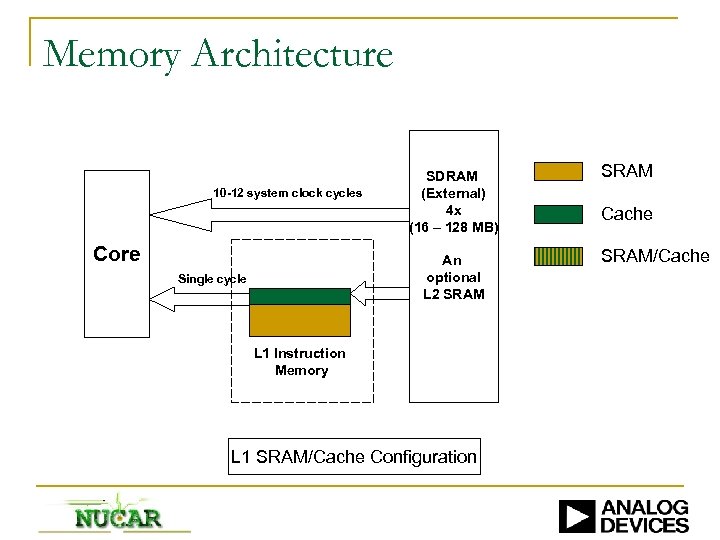

Memory Architecture

- Core
- L1 instruction memory: single-cycle access; configurable as SRAM, cache, or SRAM/cache
- An optional L2 SRAM
- External SDRAM, 4 x (16–128 MB): 10-12 system clock cycles

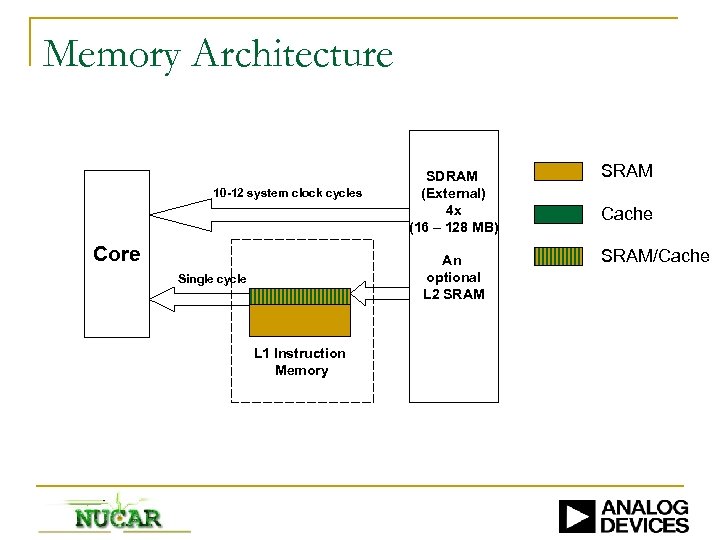

Memory Architecture: L1 SRAM Configuration

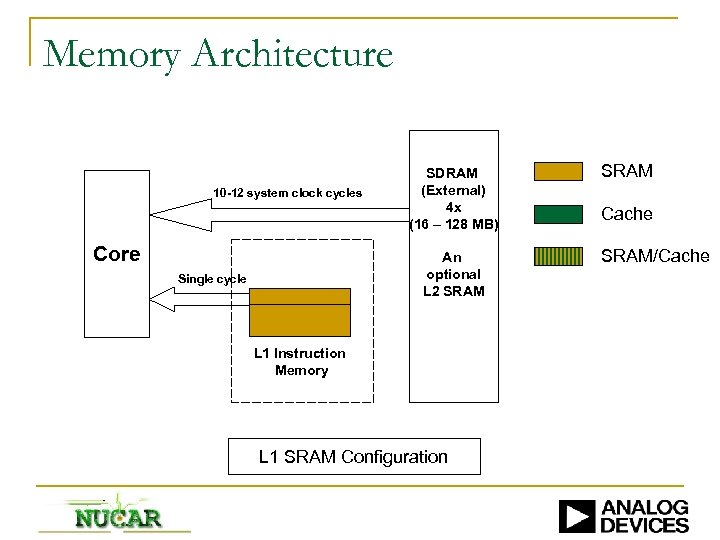

Memory Architecture: L1 Cache Configuration

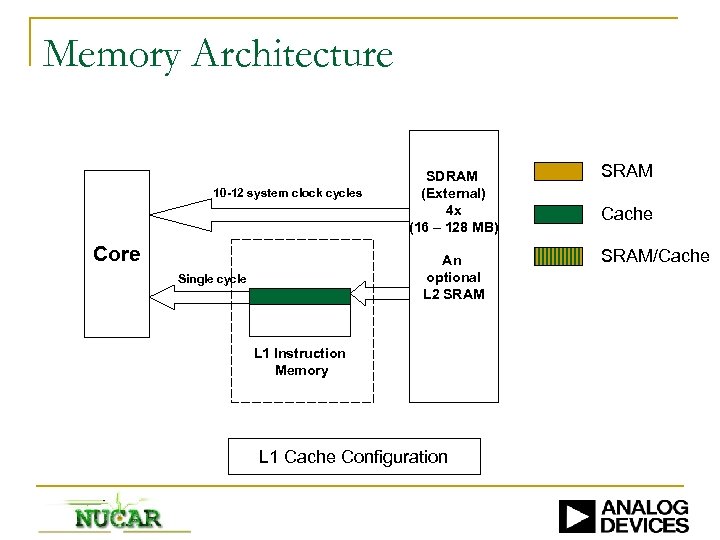

Memory Architecture: L1 SRAM/Cache Configuration

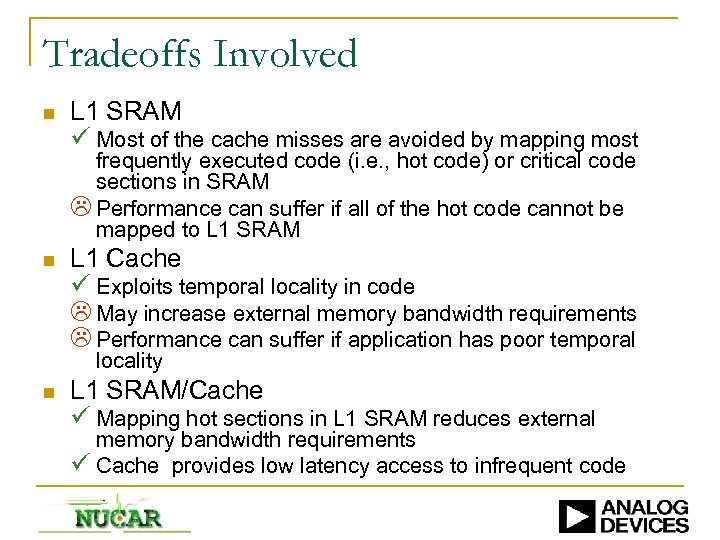

Tradeoffs Involved

- L1 SRAM
  - Advantage: most cache misses are avoided by mapping the most frequently executed code (i.e., hot code) or critical code sections in SRAM
  - Drawback: performance can suffer if all of the hot code cannot be mapped to L1 SRAM
- L1 Cache
  - Advantage: exploits temporal locality in code
  - Drawback: may increase external memory bandwidth requirements
  - Drawback: performance can suffer if the application has poor temporal locality
- L1 SRAM/Cache
  - Advantage: mapping hot sections in L1 SRAM reduces external memory bandwidth requirements
  - Advantage: the cache provides low-latency access to infrequently executed code

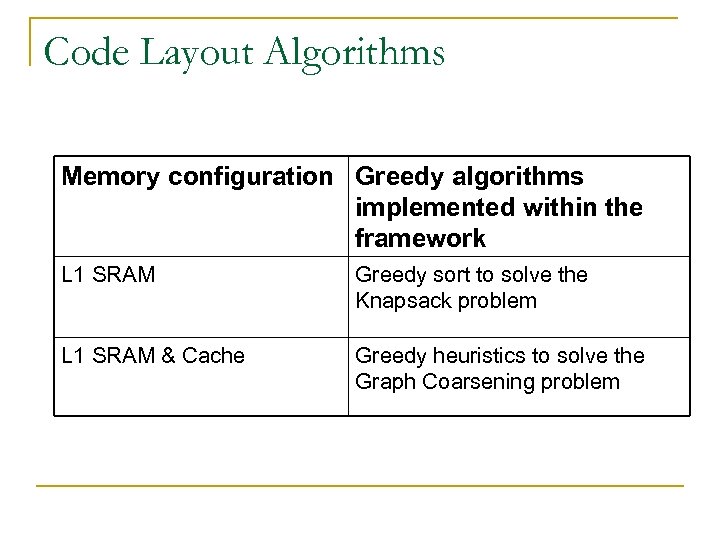

Code Layout Algorithms

| Memory configuration | Greedy algorithms implemented within the framework |
|---|---|
| L1 SRAM | Greedy sort to solve the Knapsack problem |
| L1 SRAM & Cache | Greedy heuristics to solve the Graph Coarsening problem |

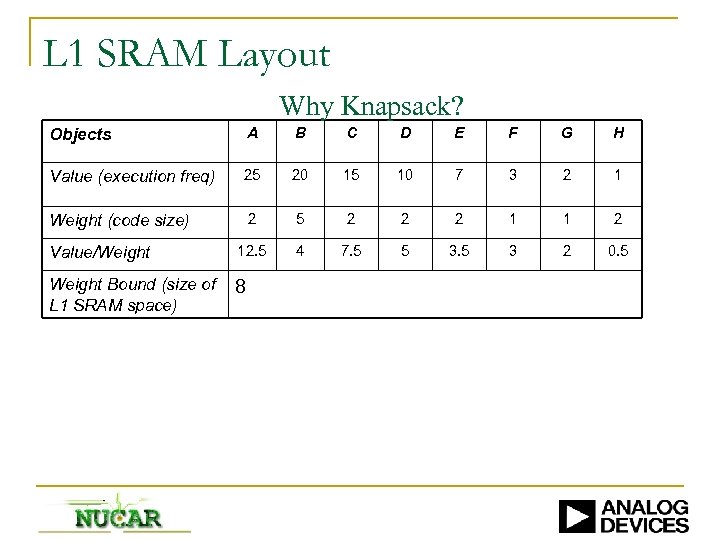

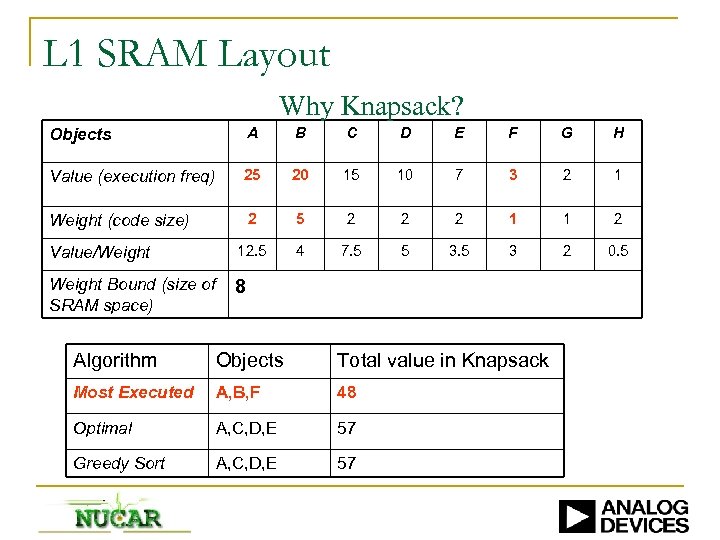

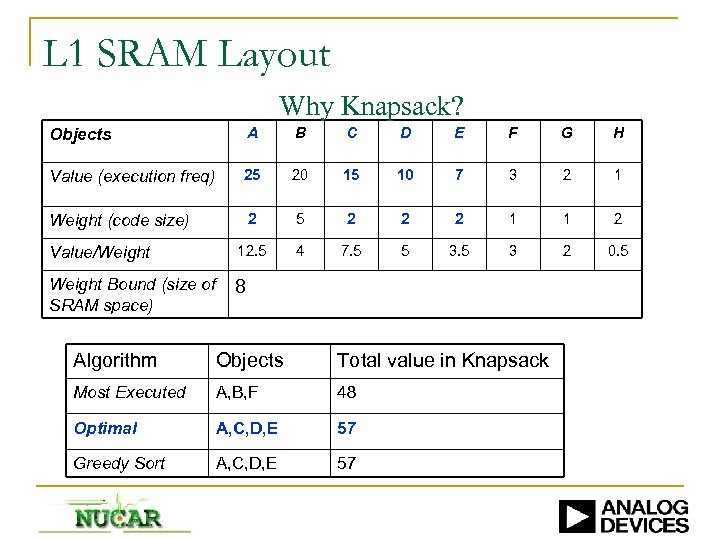

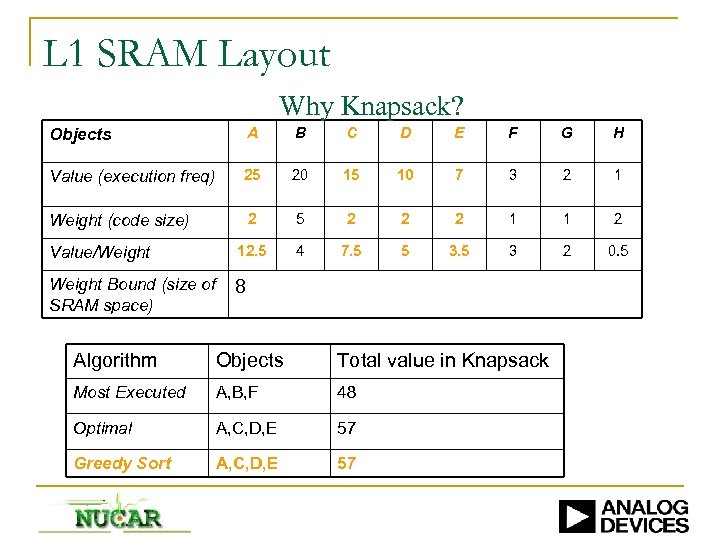

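L1 SRAM Layout: Why Knapsack?

| Objects | A | B | C | D | E | F | G | H |
|---|---|---|---|---|---|---|---|---|
| Value (execution freq) | 25 | 20 | 15 | 10 | 7 | 3 | 2 | 1 |
| Weight (code size) | 2 | 5 | 2 | 2 | 2 | 1 | 1 | 2 |
| Value/Weight | 12.5 | 4 | 7.5 | 5 | 3.5 | 3 | 2 | 0.5 |

Weight bound (size of L1 SRAM space): 8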

With a weight bound (size of SRAM space) of 8, the algorithms select:

| Algorithm | Objects | Total value in knapsack |
|---|---|---|
| Most executed | A, B, F | 48 |
| Optimal | A, C, D, E | 57 |
| Greedy sort | A, C, D, E | 57 |

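The comparison on this slide can be reproduced with a few lines of Python. This is a minimal sketch, not the framework's code: the function names (`most_executed`, `greedy_sort`, `optimal`) are chosen here for illustration, and the "optimal" answer is found by brute force, which is only practical for a toy example of this size.

```python
from itertools import combinations

# Example data from the slide: name -> (execution frequency, code size).
FUNCS = {
    "A": (25, 2), "B": (20, 5), "C": (15, 2), "D": (10, 2),
    "E": (7, 2), "F": (3, 1), "G": (2, 1), "H": (1, 2),
}
CAPACITY = 8  # weight bound: size of the L1 SRAM space


def total_value(names, funcs=FUNCS):
    """Sum of execution frequencies of the selected functions."""
    return sum(funcs[n][0] for n in names)


def fill(order, funcs=FUNCS, capacity=CAPACITY):
    """Walk `order` and keep every function that still fits."""
    chosen, used = [], 0
    for name in order:
        size = funcs[name][1]
        if used + size <= capacity:
            chosen.append(name)
            used += size
    return sorted(chosen)


def most_executed(funcs=FUNCS, capacity=CAPACITY):
    """Pick by raw execution frequency, ignoring code size."""
    order = sorted(funcs, key=lambda n: funcs[n][0], reverse=True)
    return fill(order, funcs, capacity)


def greedy_sort(funcs=FUNCS, capacity=CAPACITY):
    """Pick by value/weight ratio (frequency per unit of code size)."""
    order = sorted(funcs, key=lambda n: funcs[n][0] / funcs[n][1], reverse=True)
    return fill(order, funcs, capacity)


def optimal(funcs=FUNCS, capacity=CAPACITY):
    """Brute-force search over all subsets (exponential; toy sizes only)."""
    best = ()
    for r in range(len(funcs) + 1):
        for subset in combinations(funcs, r):
            fits = sum(funcs[n][1] for n in subset) <= capacity
            if fits and total_value(subset, funcs) > total_value(best, funcs):
                best = subset
    return sorted(best)
```

On this data the ratio-based greedy sort happens to match the optimum; in general it is a heuristic and carries no such guarantee.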

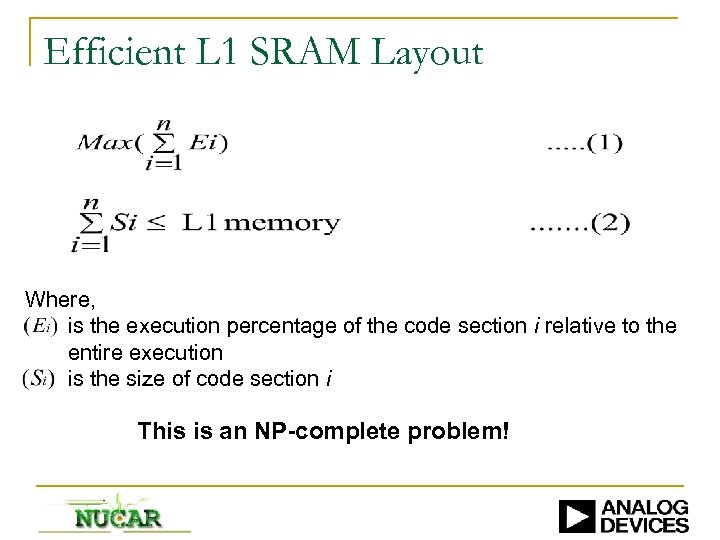

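Efficient L1 SRAM Layout

Select the code sections to place in L1 SRAM so that their combined execution percentage is maximized while their combined size fits within the L1 SRAM space. Here, the execution percentage of code section i is measured relative to the entire execution, and the size is the size of code section i.

This is an NP-complete problem!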
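One standard way to write this selection is as a 0/1 knapsack program, sketched below. The symbols are chosen here for illustration and are not taken from the slides: $e_i$ is the execution percentage of code section $i$, $s_i$ is its size, $S$ is the size of the L1 SRAM space, and $x_i$ indicates whether section $i$ is placed in L1 SRAM.

```latex
\max_{x_1,\dots,x_n} \; \sum_{i=1}^{n} e_i \, x_i
\quad \text{subject to} \quad
\sum_{i=1}^{n} s_i \, x_i \le S,
\qquad x_i \in \{0, 1\}
```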

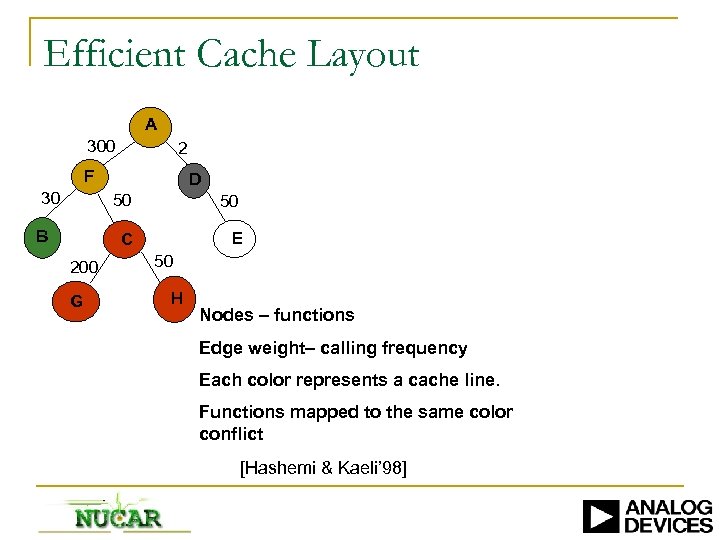

Efficient Cache Layout

[Figure: weighted call graph over functions A to H, with edge weights 300, 200, 50, 50, 50, 30 and 2.]

- Nodes: functions
- Edge weight: calling frequency
- Each color represents a cache line. Functions mapped to the same color conflict

[Hashemi & Kaeli '98]

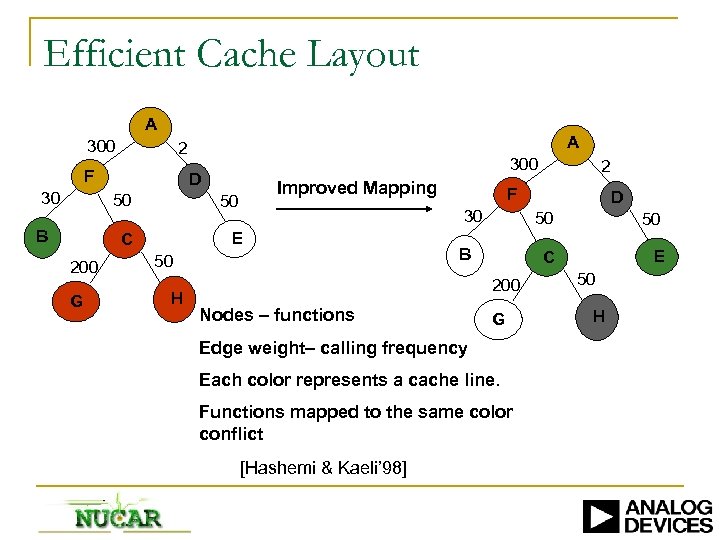

Efficient Cache Layout

[Figure: the same weighted call graph over functions A to H, shown before and after an improved mapping.]

- Nodes: functions
- Edge weight: calling frequency
- Each color represents a cache line. Functions mapped to the same color conflict

[Hashemi & Kaeli '98]
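To make "functions mapped to the same color conflict" concrete, the sketch below computes which cache sets a function occupies from its start address and size, and reports a conflict when two functions share a set. The cache geometry (direct-mapped, 32-byte lines, 128 sets) and the helper names `cache_sets` and `conflict` are assumptions made for this example, not parameters taken from the slides.

```python
def cache_sets(start, size, line_size=32, num_sets=128):
    """Return the set indices touched by code at [start, start + size).

    Assumes a direct-mapped cache; line_size and num_sets are
    illustrative defaults (32 B x 128 sets = 4 KB).
    """
    first_line = start // line_size
    last_line = (start + size - 1) // line_size
    return {line % num_sets for line in range(first_line, last_line + 1)}


def conflict(f, g, **cache):
    """True if functions f and g, given as (start, size), share a cache set."""
    return bool(cache_sets(*f, **cache) & cache_sets(*g, **cache))


# Hypothetical placements: (start address, size in bytes).
caller = (0, 64)          # occupies sets 0 and 1
callee_far = (4096, 32)   # one cache size away: wraps onto set 0
callee_near = (64, 32)    # placed right after the caller: set 2
```

Placing a frequently called callee directly after its caller, as in `callee_near`, keeps the pair in distinct sets, which is the intuition behind the improved mapping.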

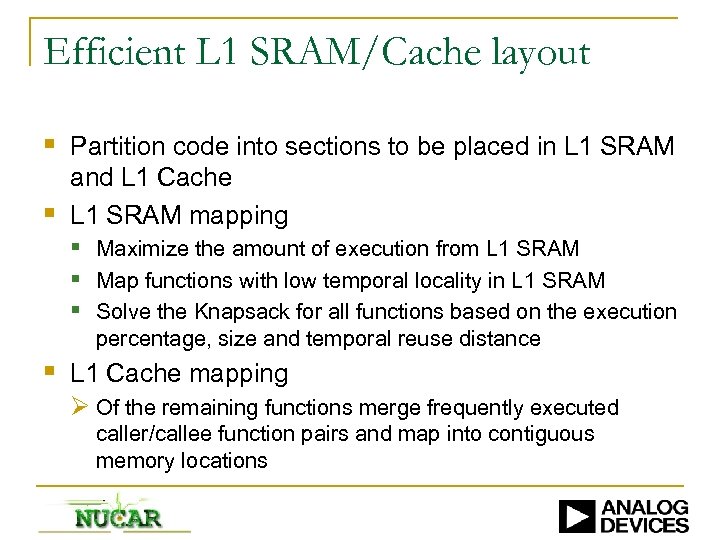

Efficient L1 SRAM/Cache Layout

- Partition code into sections to be placed in L1 SRAM and L1 cache
- L1 SRAM mapping
  - Maximize the amount of execution from L1 SRAM
  - Map functions with low temporal locality in L1 SRAM
  - Solve the Knapsack problem for all functions based on the execution percentage, size and temporal reuse distance
- L1 cache mapping
  - Of the remaining functions, merge frequently executed caller/callee function pairs and map them into contiguous memory locations

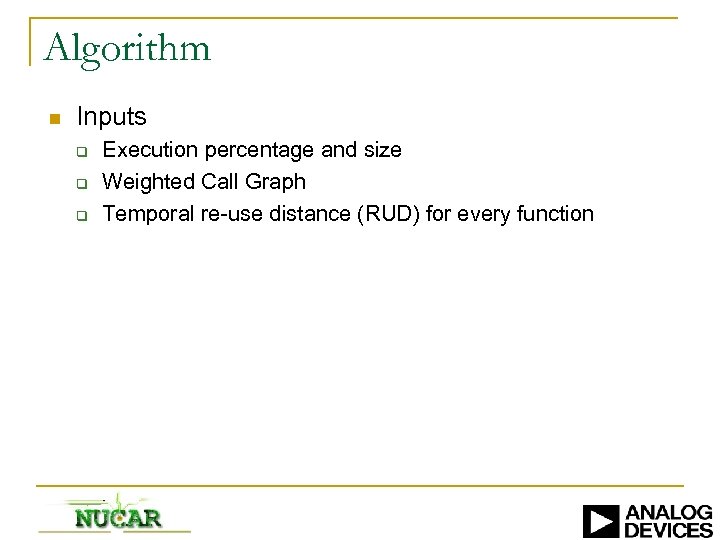

Algorithm

- Inputs
  - Execution percentage and size
  - Weighted call graph
  - Temporal reuse distance (RUD) for every function

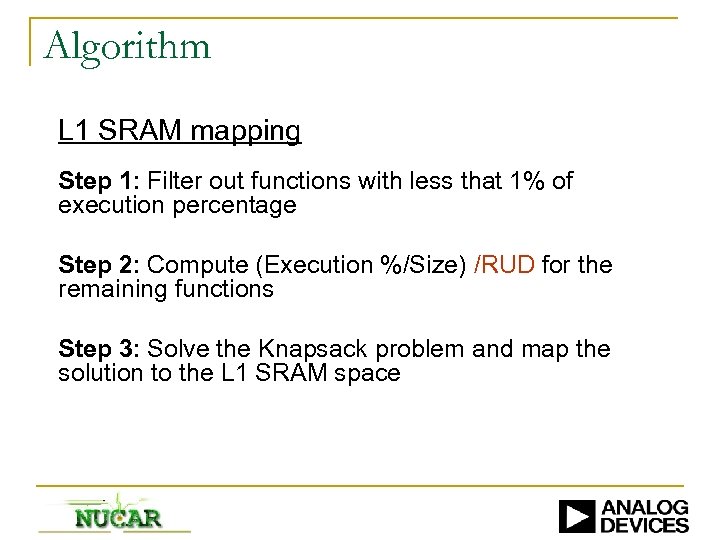

Algorithm: L1 SRAM mapping

- Step 1: Filter out functions with less than 1% of the execution percentage
- Step 2: Compute (Execution % / Size) / RUD for the remaining functions
- Step 3: Solve the Knapsack problem and map the solution to the L1 SRAM space
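Steps 1 to 3 can be sketched as follows. This is an illustration under stated assumptions rather than the framework's implementation: the `Func` record, the function names, the byte units and the use of a greedy sort for Step 3 (as suggested by the earlier "Code Layout Algorithms" slide) are all choices made for this example, and the profile numbers are invented.

```python
from typing import NamedTuple


class Func(NamedTuple):
    name: str
    exec_pct: float  # execution percentage of the function
    size: int        # code size in bytes (unit is an assumption)
    rud: float       # temporal reuse distance (unit is an assumption)


def map_to_l1_sram(funcs, sram_bytes, min_exec_pct=1.0):
    """Return (names placed in L1 SRAM, bytes used)."""
    # Step 1: filter out functions below the execution threshold.
    hot = [f for f in funcs if f.exec_pct >= min_exec_pct]
    # Step 2: score each function as (execution % / size) / RUD.
    ranked = sorted(hot, key=lambda f: (f.exec_pct / f.size) / f.rud,
                    reverse=True)
    # Step 3: greedy knapsack fill in score order.
    placed, used = [], 0
    for f in ranked:
        if used + f.size <= sram_bytes:
            placed.append(f.name)
            used += f.size
    return placed, used


# Hypothetical profile data, for illustration only.
profile = [
    Func("dct", 40.0, 2048, 2.0),
    Func("quant", 30.0, 4096, 1.0),
    Func("huff", 20.0, 6144, 4.0),
    Func("init", 0.5, 512, 1.0),
    Func("io", 9.5, 1024, 8.0),
]
placed, used = map_to_l1_sram(profile, sram_bytes=8192)
```

Functions that are not placed here are the "remaining functions" handed to the L1 cache mapping steps.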

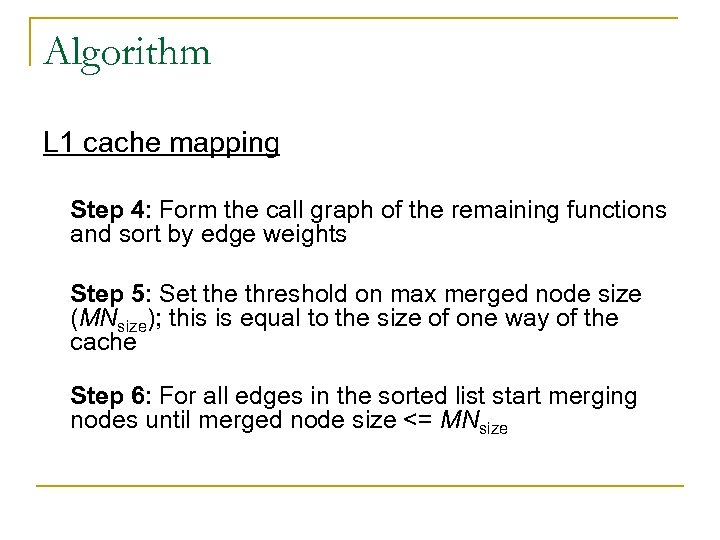

Algorithm: L1 cache mapping

- Step 4: Form the call graph of the remaining functions and sort the edges by weight
- Step 5: Set the threshold on the maximum merged node size (MNsize); this is equal to the size of one way of the cache
- Step 6: For each edge in the sorted list, merge the nodes it connects as long as the merged node size stays <= MNsize

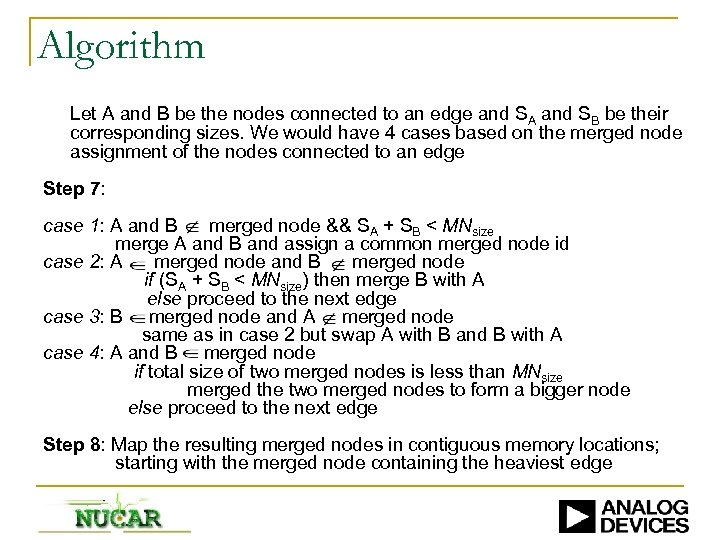

Algorithm

Let A and B be the nodes connected by an edge, and S_A and S_B their corresponding sizes. There are four cases, depending on whether each node already belongs to a merged node.

- Step 7:
  - Case 1: neither A nor B belongs to a merged node. If S_A + S_B < MNsize, merge A and B and assign them a common merged node id
  - Case 2: A belongs to a merged node and B does not. If S_A + S_B < MNsize, merge B with A; otherwise proceed to the next edge
  - Case 3: B belongs to a merged node and A does not. Same as case 2 with A and B swapped
  - Case 4: A and B both belong to merged nodes. If the total size of the two merged nodes is less than MNsize, merge them to form a bigger node; otherwise proceed to the next edge
- Step 8: Map the resulting merged nodes into contiguous memory locations, starting with the merged node containing the heaviest edge
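The merging procedure of Steps 4 to 8 can be sketched in Python as below. It is a minimal illustration, not the authors' code: the function name `merge_for_cache`, the input shapes and the choice to append unmerged functions at the end of the layout are assumptions. The slides write the size test both as `<` and `<=`; `<=` is used here.

```python
def merge_for_cache(sizes, edges, mn_size):
    """Greedy caller/callee merging.

    sizes:   {function name: code size}
    edges:   [(caller, callee, call frequency)]
    mn_size: maximum merged node size (one cache way)
    Returns the functions in layout order.
    """
    group_of = {}  # function -> merged node id
    members = {}   # merged node id -> functions, in merge order
    total = {}     # merged node id -> combined size
    next_id = 0

    def add(fn, gid):
        group_of[fn] = gid
        members[gid].append(fn)
        total[gid] += sizes[fn]

    # Step 4 and Step 6: visit edges from heaviest to lightest.
    for a, b, _weight in sorted(edges, key=lambda e: e[2], reverse=True):
        ga, gb = group_of.get(a), group_of.get(b)
        if a == b or (ga is not None and ga == gb):
            continue  # self call, or already in the same merged node
        if ga is None and gb is None:            # case 1
            if sizes[a] + sizes[b] <= mn_size:
                members[next_id], total[next_id] = [], 0
                add(a, next_id)
                add(b, next_id)
                next_id += 1
        elif gb is None:                          # case 2
            if total[ga] + sizes[b] <= mn_size:
                add(b, ga)
        elif ga is None:                          # case 3
            if total[gb] + sizes[a] <= mn_size:
                add(a, gb)
        elif total[ga] + total[gb] <= mn_size:    # case 4
            keep, drop = min(ga, gb), max(ga, gb)
            for fn in members.pop(drop):
                add(fn, keep)
            del total[drop]

    # Step 8: merged nodes in creation order (the earliest one holds the
    # heaviest merged edge), then any functions that were never merged.
    layout = [fn for gid in sorted(members) for fn in members[gid]]
    layout += [fn for fn in sizes if fn not in group_of]
    return layout
```

For example, with hypothetical sizes `{"E": 500, "F": 5000, "D": 3000, "C": 800, "B": 1500, "A": 1000}`, edges A-B (300), B-C (200), C-D (50), D-E (30), A-E (2), E-F (1) and a 4096-byte way, the sketch groups A, B, C together, then D, E, and leaves F unmerged.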

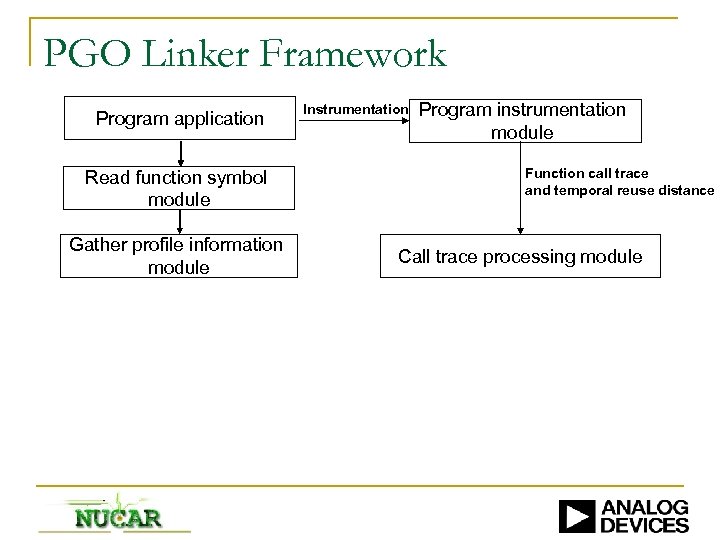

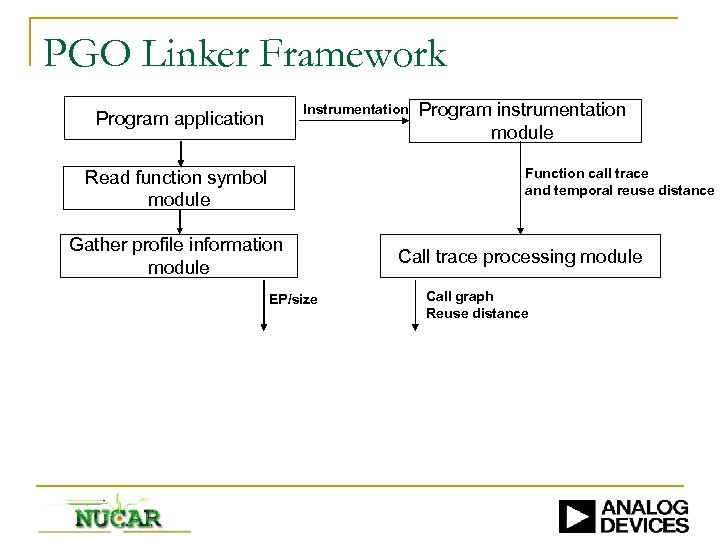

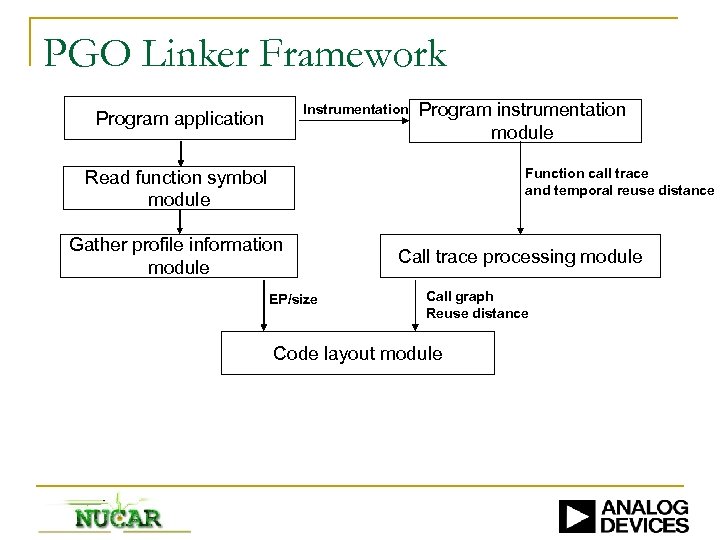

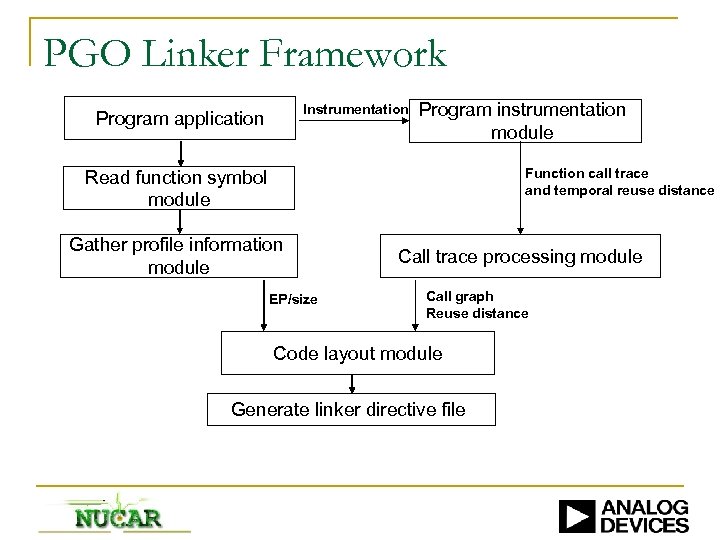

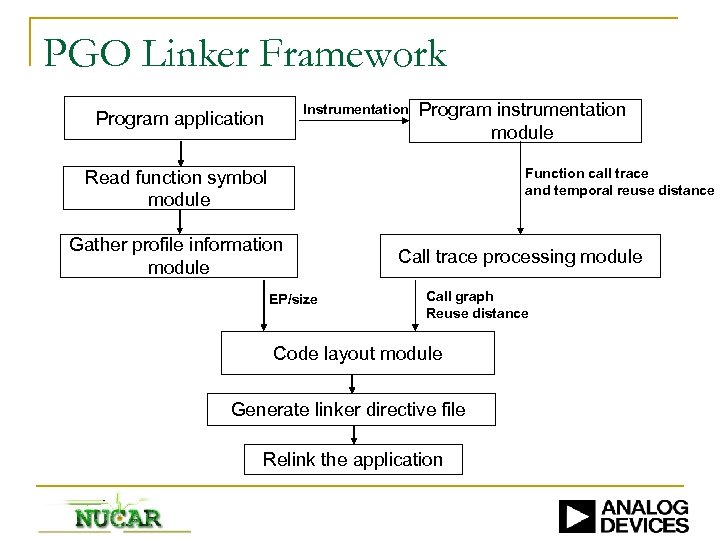

PGO Linker Framework Program application

PGO Linker Framework Program application Read function symbol module

PGO Linker Framework Program application Read function symbol module Gather profile information module

PGO Linker Framework Program application Read function symbol module Gather profile information module Instrumentation

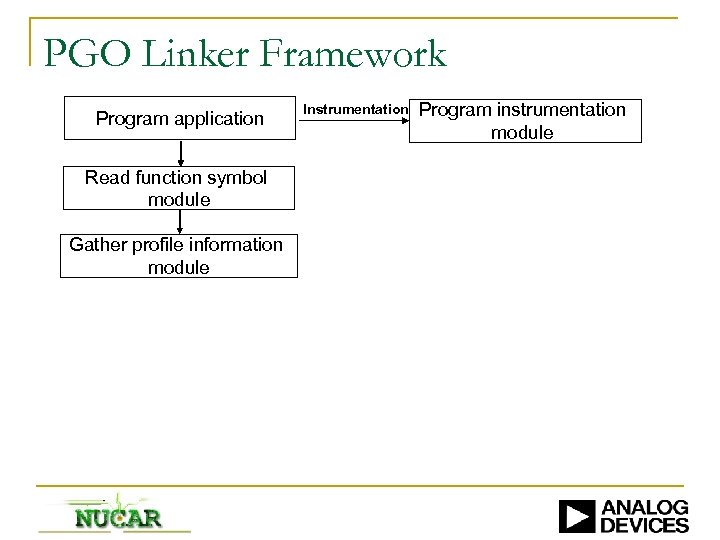

PGO Linker Framework Program application Read function symbol module Gather profile information module Instrumentation Program instrumentation module

PGO Linker Framework Program application Read function symbol module Gather profile information module Instrumentation Program instrumentation module Function call trace and temporal reuse distance Call trace processing module

PGO Linker Framework Instrumentation Program application Program instrumentation module Function call trace and temporal reuse distance Read function symbol module Gather profile information module EP/size Call trace processing module Call graph Reuse distance

PGO Linker Framework Instrumentation Program application Program instrumentation module Function call trace and temporal reuse distance Read function symbol module Gather profile information module EP/size Call trace processing module Call graph Reuse distance Code layout module

PGO Linker Framework Instrumentation Program application Program instrumentation module Function call trace and temporal reuse distance Read function symbol module Gather profile information module EP/size Call trace processing module Call graph Reuse distance Code layout module Generate linker directive file

PGO Linker Framework Instrumentation Program application Program instrumentation module Function call trace and temporal reuse distance Read function symbol module Gather profile information module EP/size Call trace processing module Call graph Reuse distance Code layout module Generate linker directive file Relink the application

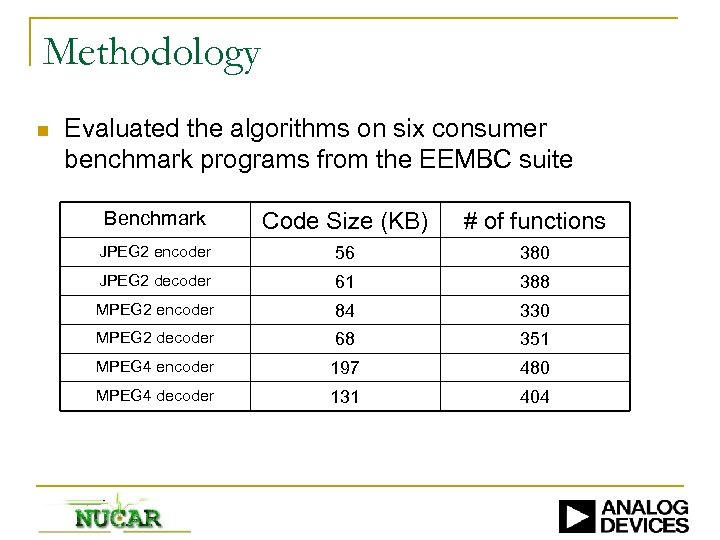

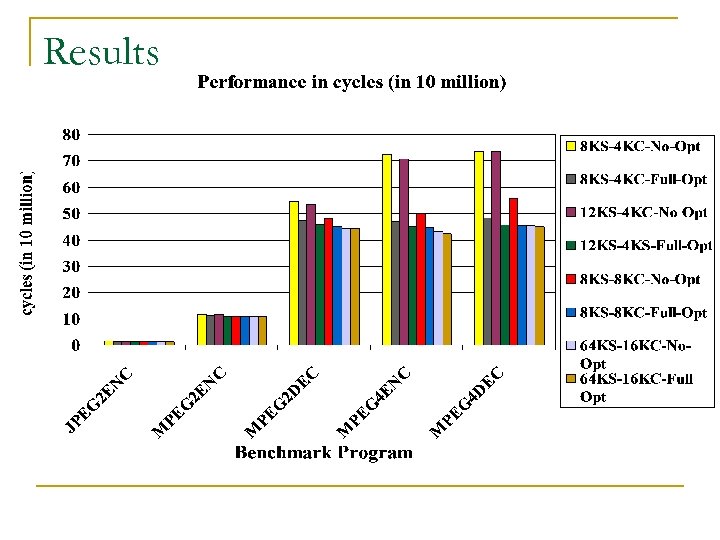

Methodology

- Evaluated the algorithms on six consumer benchmark programs from the EEMBC suite

| Benchmark | Code size (KB) | # of functions |
|---|---|---|
| JPEG2 encoder | 56 | 380 |
| JPEG2 decoder | 61 | 388 |
| MPEG2 encoder | 84 | 330 |
| MPEG2 decoder | 68 | 351 |
| MPEG4 encoder | 197 | 480 |
| MPEG4 decoder | 131 | 404 |

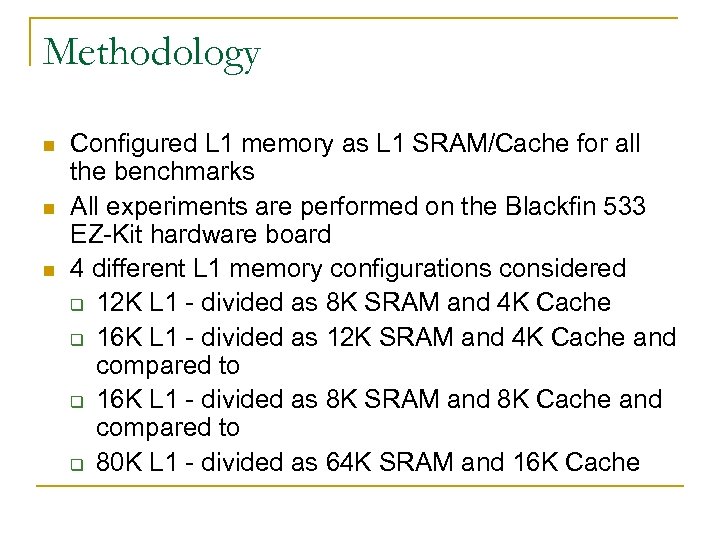

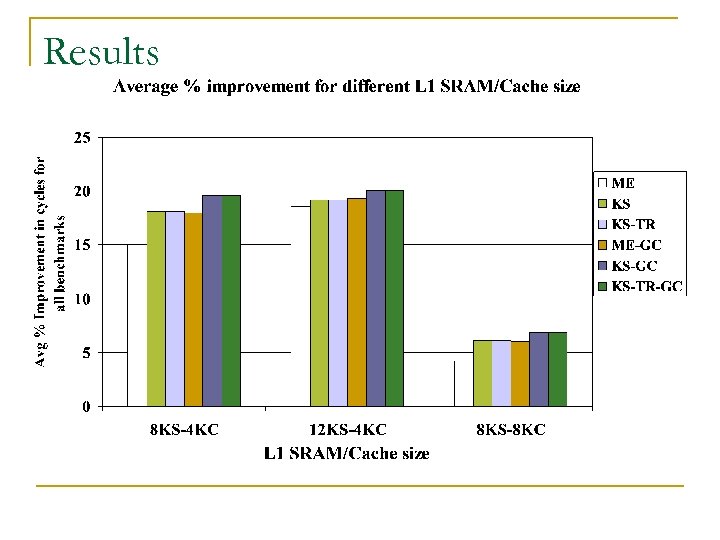

Methodology

- Configured L1 memory as L1 SRAM/Cache for all the benchmarks
- All experiments are performed on the Blackfin 533 EZ-Kit hardware board
- 4 different L1 memory configurations considered
  - 12K L1, divided as 8K SRAM and 4K cache
  - 16K L1, divided as 12K SRAM and 4K cache, and compared to
  - 16K L1, divided as 8K SRAM and 8K cache, and compared to
  - 80K L1, divided as 64K SRAM and 16K cache

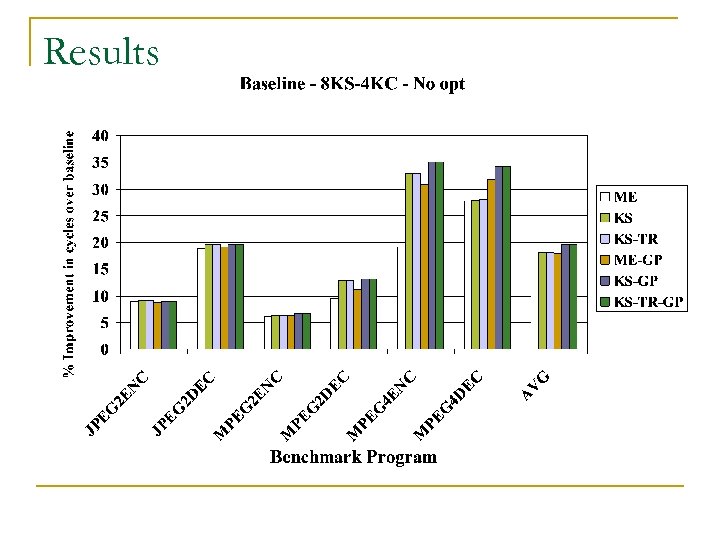

Results


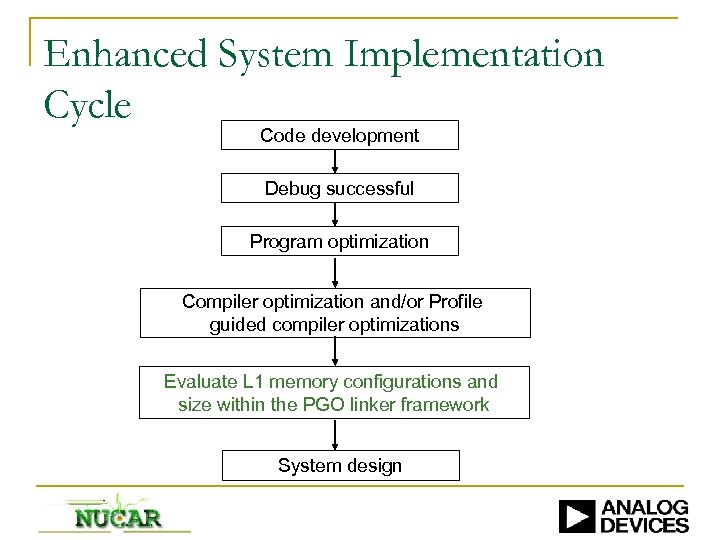

Enhanced System Implementation Cycle

- Code development
- Debug successful
- Program optimization: compiler optimization and/or profile-guided compiler optimizations
- Evaluate L1 memory configurations and size within the PGO linker framework
- System design

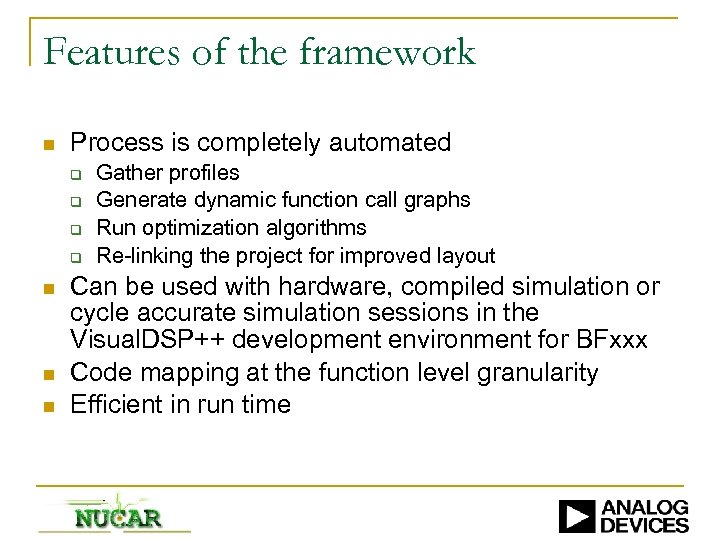

Features of the framework

- Process is completely automated
  - Gather profiles
  - Generate dynamic function call graphs
  - Run optimization algorithms
  - Re-link the project for improved layout
- Can be used with hardware, compiled simulation or cycle-accurate simulation sessions in the VisualDSP++ development environment for BFxxx
- Code mapping at function-level granularity
- Efficient in run time

Conclusion

- We have developed a completely automated and efficient code layout framework for the configurable L1 code memory supported by the BFxxx
- We show a performance improvement ranging from 3% to 33% (20% on average) for the six benchmark programs with a 12K L1 memory
- We show that, by efficiently mapping code, a 16K L1 memory achieves performance similar to that of an 80K L1 memory

Future work

- The mapping can be extended to basic block granularity
- Code mapping to avoid external memory bank contention (SDRAM) can be incorporated
- Code layout techniques for multi-core architectures can be developed, considering shared memory accesses
- The framework can be extended to data layout techniques

References

- Kaushal Sanghai, David Kaeli and Richard Gentile, “Code and Data Partitioning on Blackfin for partitioned multimedia benchmark programs”, in Proceedings of the 2005 Workshop on Optimizations for DSP and Embedded Systems, Mar. 2005
- Kaushal Sanghai, David Kaeli, Alex Raikman and Ken Butler, “A Code Layout Framework for Configurable Memory Systems in Embedded processors”, General Technical Conference, Analog Devices Inc., Jun. 2006

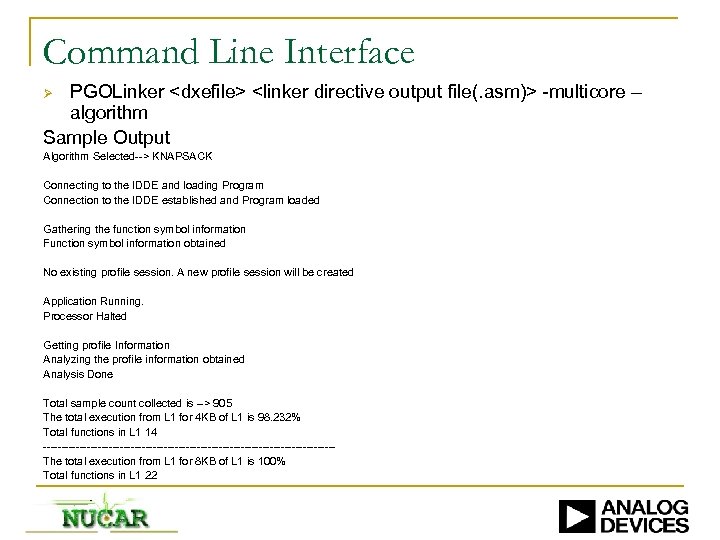

Command Line Interface

    PGOLinker <dxefile> <linker directive output file (.asm)> -multicore -algorithm

Sample Output

    Algorithm Selected--> KNAPSACK
    Connecting to the IDDE and loading Program
    Connection to the IDDE established and Program loaded
    Gathering the function symbol information
    Function symbol information obtained
    No existing profile session. A new profile session will be created
    Application Running.
    Processor Halted
    Getting profile Information
    Analyzing the profile information obtained
    Analysis Done
    Total sample count collected is --> 905
    The total execution from L1 for 4 KB of L1 is 98.232%
    Total functions in L1 14
    ----------------------------------------
    The total execution from L1 for 8 KB of L1 is 100%
    Total functions in L1 22
