- Number of slides: 73

A Century Of Progress On Information Integration: A Mid-Term Report

William W. Cohen, Center for Automated Learning and Discovery (CALD), Carnegie Mellon University

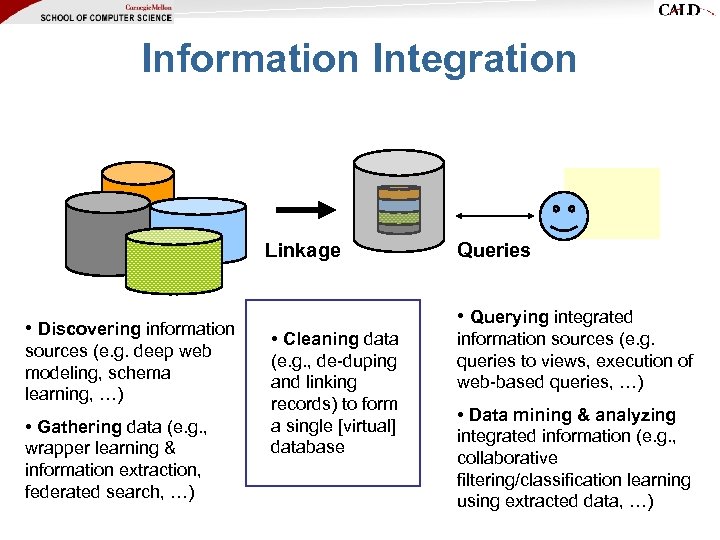

Information Integration

- Discovering information sources (e.g., deep web modeling, schema learning, …)
- Gathering data (e.g., wrapper learning & information extraction, federated search, …)
- Cleaning data (e.g., de-duping and linking records) to form a single [virtual] database
- Querying integrated information sources (e.g., queries to views, execution of web-based queries, …)
- Data mining & analyzing integrated information (e.g., collaborative filtering/classification learning using extracted data, …)

Diagram labels: Linkage, Queries

[Science 1959] Record linkage: bringing together of two or more separately recorded pieces of information concerning a particular individual or family (Dunn, 1946; Marshall, 1947).

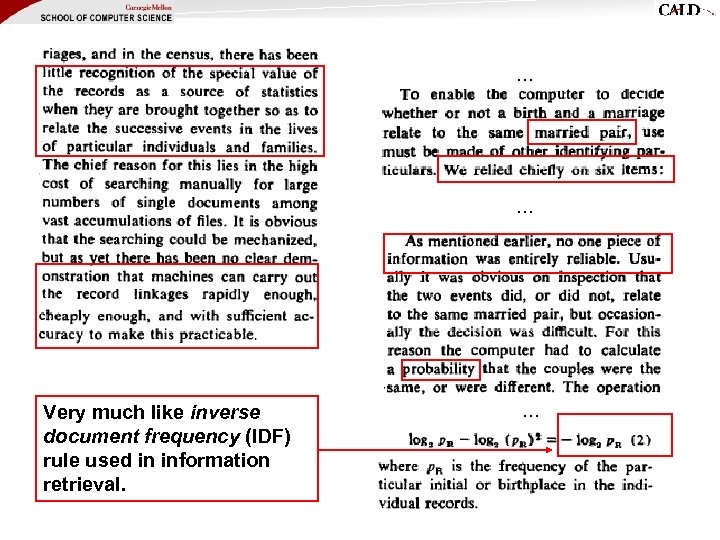

… … Very much like inverse document frequency (IDF) rule used in information retrieval. …

Motivations for Record Linkage c. 1959

Record linkage is motivated by certain problems faced by a small number of scientists doing data analysis for obscure reasons.

In 1954, Popular Mechanics showed its readers what a home computer might look like in 2004 …

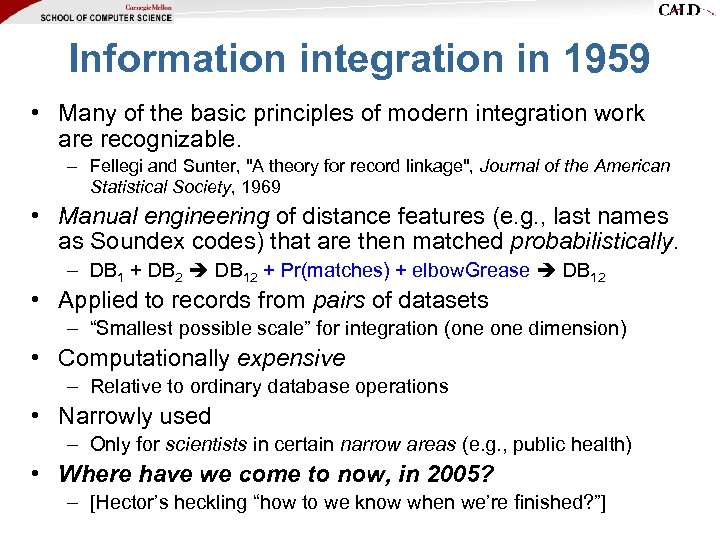

Information integration in 1959

- Many of the basic principles of modern integration work are recognizable.
  - Fellegi and Sunter, "A theory for record linkage", Journal of the American Statistical Association, 1969
- Manual engineering of distance features (e.g., last names as Soundex codes) that are then matched probabilistically.
  - DB1 + DB2 → DB12 + Pr(matches) + elbowGrease → DB12
- Applied to records from pairs of datasets
  - “Smallest possible scale” for integration (one dimension)
- Computationally expensive
  - Relative to ordinary database operations
- Narrowly used
  - Only for scientists in certain narrow areas (e.g., public health)
- Where have we come to now, in 2005?
  - [Hector’s heckling: “how do we know when we’re finished?”]
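To make the 1959-style recipe concrete, here is a minimal sketch of the two ingredients the slide names: a hand-engineered feature (the standard American Soundex code of a surname) and a probabilistic match score in the Fellegi-Sunter style, where each field contributes log2(m/u) on agreement and log2((1-m)/(1-u)) on disagreement. The field names and the m/u probabilities below are made up for illustration and do not come from the talk.

```python
import math

# Standard American Soundex letter groups.
_CODES = {ch: d
          for letters, d in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                             ("L", "4"), ("MN", "5"), ("R", "6"))
          for ch in letters}

def soundex(name):
    """Return the 4-character Soundex code of a name ('' if no letters)."""
    letters = [c for c in name.upper() if c.isalpha()]
    if not letters:
        return ""
    result = letters[0]
    prev = _CODES.get(letters[0], "")
    for ch in letters[1:]:
        code = _CODES.get(ch, "")
        if code and code != prev:
            result += code
        if ch not in "HW":  # H and W do not separate letters with the same code
            prev = code
    return (result + "000")[:4]

def match_weight(agreements, m, u):
    """Sum of per-field log-likelihood-ratio weights (fields assumed independent).

    m[f] = Pr(field f agrees | records match)
    u[f] = Pr(field f agrees | records do not match)
    """
    total = 0.0
    for field, agrees in agreements.items():
        if agrees:
            total += math.log2(m[field] / u[field])
        else:
            total += math.log2((1 - m[field]) / (1 - u[field]))
    return total

# Hypothetical m/u values, chosen only to illustrate the arithmetic.
m = {"surname_soundex": 0.95, "birth_year": 0.90}
u = {"surname_soundex": 0.01, "birth_year": 0.05}
pair = {"surname_soundex": soundex("Cohen") == soundex("Cohn"), "birth_year": False}
print(soundex("Cohen"), soundex("Cohn"), round(match_weight(pair, m, u), 2))
```

A pair whose total weight clears an upper threshold would be declared a match, one below a lower threshold a non-match, and the rest left for manual review (the "elbow grease").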

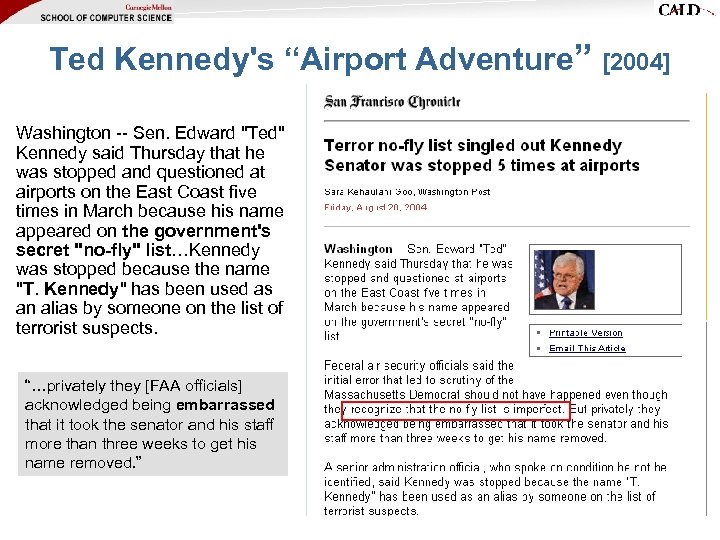

Ted Kennedy's “Airport Adventure”

Washington -- Sen. Edward "Ted" Kennedy said Thursday that he was stopped and questioned at airports on the East Coast five times in March because his name appeared on the government's secret "no-fly" list… Kennedy was stopped because the name "T. Kennedy" has been used as an alias by someone on the list of terrorist suspects.

“…privately they [FAA officials] acknowledged being embarrassed that it took the senator and his staff more than three weeks to get his name removed.” [2004]

Florida Felon List [2000, 2004]

The purge of felons from voter rolls has been a thorny issue since the 2000 presidential election. A private company hired to identify ineligible voters before the election produced a list with scores of errors, and elections supervisors used it to remove voters without verifying its accuracy…

The glitch in a state that President Bush won by just 537 votes could have been significant – because of the state's sizable Cuban population, Hispanics in Florida have tended to vote Republican… The list had about 28,000 Democrats and around 9,500 Republicans…

The new list … contained few people identified as Hispanic; of the nearly 48,000 people on the list created by the Florida Department of Law Enforcement, only 61 were classified as Hispanics. Gov. Bush said the mistake occurred because two databases that were merged to form the disputed list were incompatible. … when voters register in Florida, they can identify themselves as Hispanic. But the potential felons database has no Hispanic category…

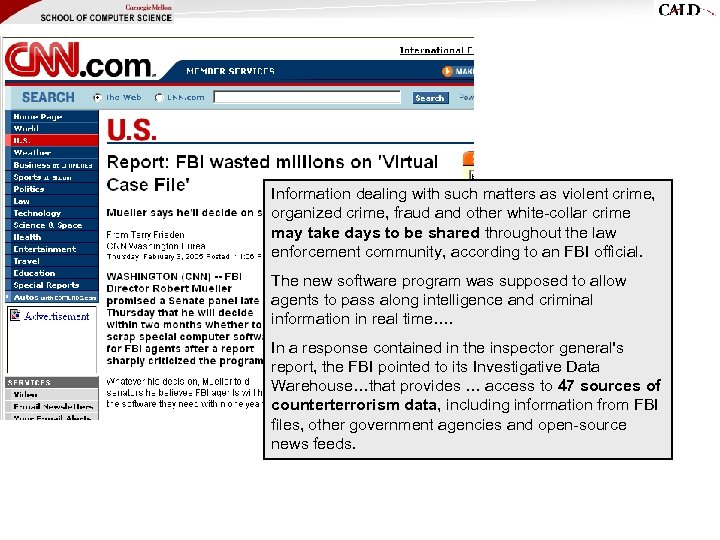

Information dealing with such matters as violent crime, organized crime, fraud and other white-collar crime may take days to be shared throughout the law enforcement community, according to an FBI official. The new software program was supposed to allow agents to pass along intelligence and criminal information in real time…. In a response contained in the inspector general's report, the FBI pointed to its Investigative Data Warehouse…that provides … access to 47 sources of counterterrorism data, including information from FBI files, other government agencies and open-source news feeds.

… counter asymmetric threats by achieving total information awareness…

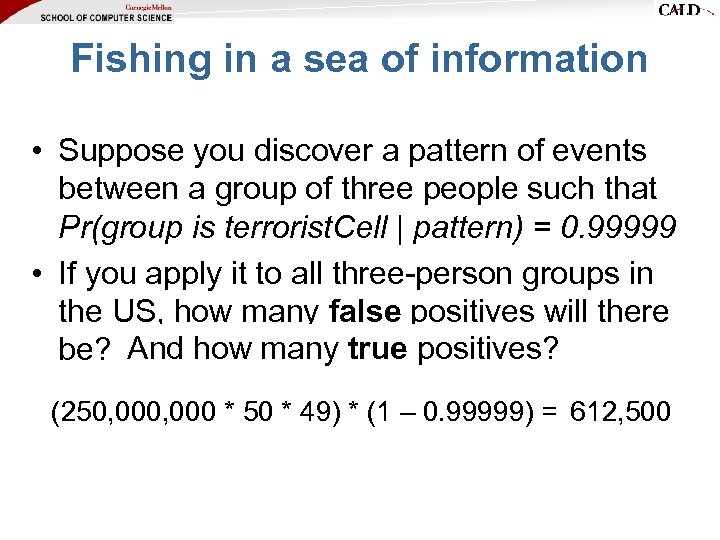

Fishing in a sea of information

- Suppose you discover a pattern of events between a group of three people such that Pr(group is terroristCell | pattern) = 0.99999
- If you apply it to all three-person groups in the US, how many false positives will there be? And how many true positives?

(250,000 * 50 * 49) * (1 – 0.99999) = 612,500
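The back-of-the-envelope estimate can be checked directly. The sketch below evaluates the expression exactly as it is printed on the slide, using exact fractions to avoid floating-point noise in 1 – 0.99999. Evaluated that way, the product comes to 6,125 rather than the 612,500 shown, so one of the inputs (the population figure or the probability) was probably garbled in extraction. The code does not try to guess which.

```python
from fractions import Fraction

# Number of candidate groups, exactly as written on the slide.
groups = 250_000 * 50 * 49

# Fraction of pattern matches that are NOT terrorist cells.
error_rate = 1 - Fraction("0.99999")

expected_false_positives = groups * error_rate
print(f"groups considered:        {groups:,}")
print(f"expected false positives: {float(expected_false_positives):,.0f}")
```

Whichever inputs were intended, the point of the slide survives: even a pattern that is right 99.999% of the time produces thousands of false alarms when applied to hundreds of millions of candidate groups.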

Chinese Embassy Bombing [1999]

- May 7, 1999: NATO bombs the Chinese Embassy in Belgrade with five precision-guided bombs (sent to the wrong address), killing three.

“The Chinese embassy was mistaken for the intended target…located just 200 yards from the embassy. Reliance on an outdated map, aerial photos, and the extrapolation of the address of the federal directorate from number patterns on surrounding streets were cited … as causing the tragic error…despite the elaborate system of checks built-into the targeting protocol, the coordinates did not trigger an alarm because three databases used in the process all had the old address.” [US-China Policy Foundation summary of the investigation]

“BEIJING, June 17 -- China today publicly rejected the U.S. explanation … [and] said the U.S. report ‘does not hold water.’” [Washington Post]

“The Chinese embassy was clearly marked on tourist maps that are on sale internationally, including in the English language. … Its address is listed in the Belgrade telephone directory…. For the CIA to have made such an elementary blunder is simply not plausible.” [World Socialist Web Site]

“Many observers believe that the bombing was deliberate…if you believe that the bombing was an accident, you already believe in the far-fetched” [disinfo.com, July 2002].

Information integration in 2005

- Apparently, we still have work to do.
- We fail to integrate information correctly
  - “Ted Kennedy (D-MA)” ≠ “T. Kennedy, (T-IRA)”
- Crucial decisions are affected by these errors
  - Who can/can’t vote (felon list)
  - Where bombs are sent (Chinese embassy)
- Storing, linking, and analyzing information is a double-edged sword:
  - Loss of privacy and “fishing expeditions”

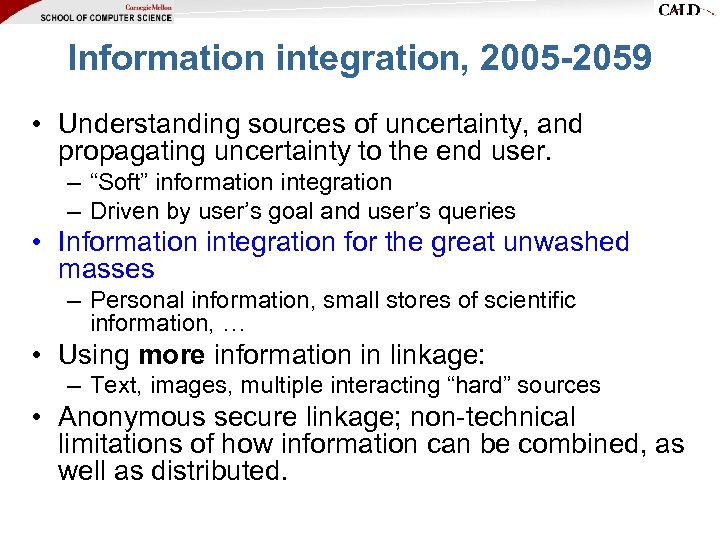

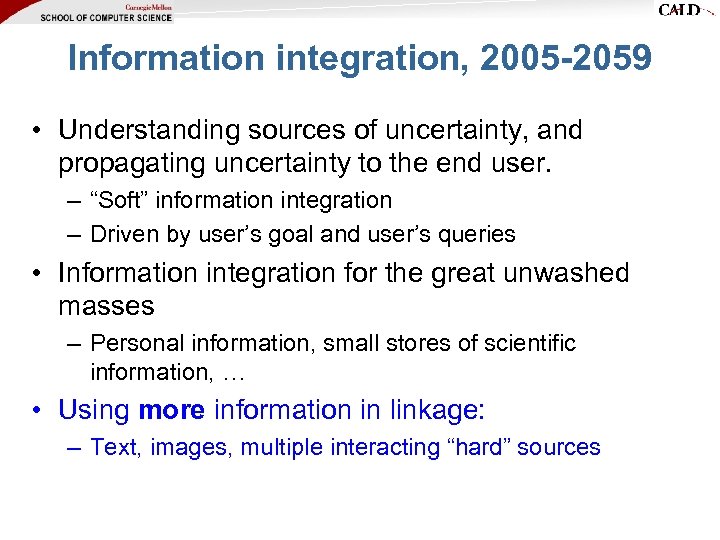

Information integration, 2005-2059

- Understanding sources of uncertainty, and propagating uncertainty to the end user.
  - “Soft” information integration
  - Driven by user’s goal and user’s queries
- Information integration for the great unwashed masses
  - Personal information, small stores of scientific information, …
- Using more information in linkage:
  - Text, images, multiple interacting “hard” sources
- Anonymous secure linkage; non-technical limitations of how information can be combined, as well as distributed.

Information integration, 2005-2059

- Understanding sources of uncertainty, and propagating uncertainty to the end user.
  - “Soft” information integration
  - Driven by user’s goal and user’s queries
  - When does a particular user believe that “X is the same thing as Y”? Does “the same thing” always mean the same thing?
  - Is “X is the same entity as Y” always transitive?

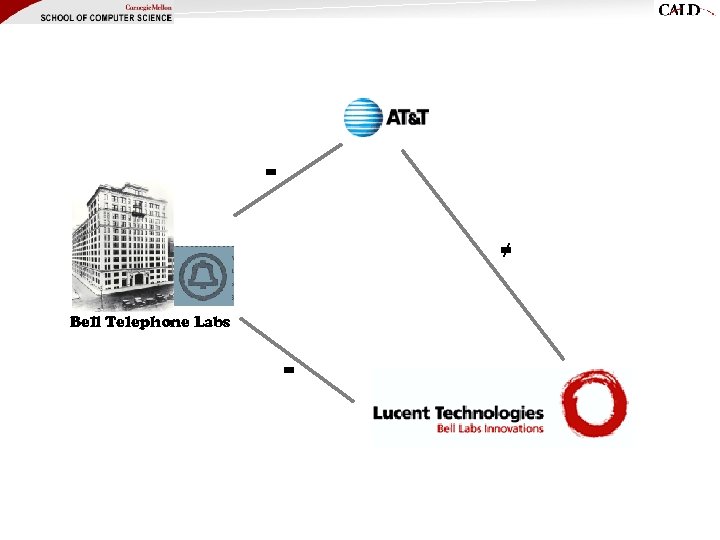

When are two entities the same?

- Bell Labs [1925]
- Bell Telephone Labs
- AT&T Bell Labs
- A&T Labs
- AT&T Labs—Research
- AT&T Labs Research, Shannon Laboratory
- Shannon Labs
- Bell Labs Innovations
- Lucent Technologies/Bell Labs Innovations

History of Innovation: From 1925 to today, AT&T has attracted some of the world's greatest scientists, engineers and developers…. [www.research.att.com]

Bell Labs Facts: Bell Laboratories, the research and development arm of Lucent Technologies, has been operating continuously since 1925… [bell-labs.com]

= ≠ Bell Telephone Labs =

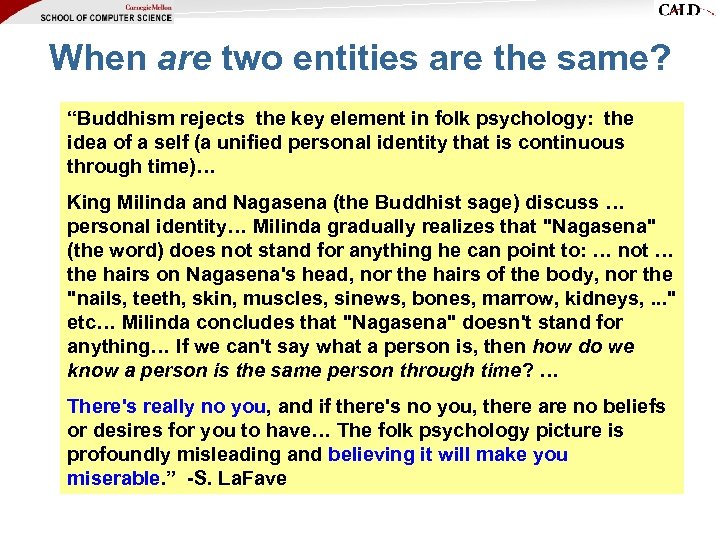

When are two entities the same?

“Buddhism rejects the key element in folk psychology: the idea of a self (a unified personal identity that is continuous through time)… King Milinda and Nagasena (the Buddhist sage) discuss … personal identity… Milinda gradually realizes that "Nagasena" (the word) does not stand for anything he can point to: … not … the hairs on Nagasena's head, nor the hairs of the body, nor the "nails, teeth, skin, muscles, sinews, bones, marrow, kidneys, ..." etc… Milinda concludes that "Nagasena" doesn't stand for anything… If we can't say what a person is, then how do we know a person is the same person through time? … There's really no you, and if there's no you, there are no beliefs or desires for you to have… The folk psychology picture is profoundly misleading and believing it will make you miserable.” - S. LaFave

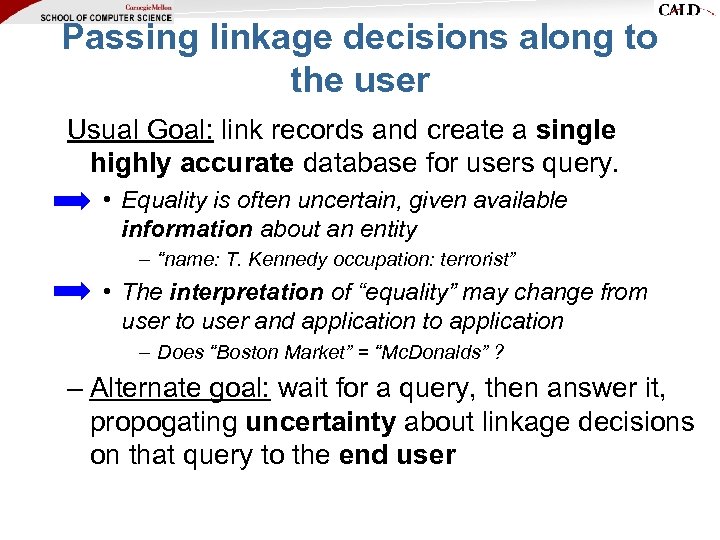

Passing linkage decisions along to the user

Usual goal: link records and create a single highly accurate database for users to query.

- Equality is often uncertain, given available information about an entity
  - “name: T. Kennedy occupation: terrorist”
- The interpretation of “equality” may change from user to user and application to application
  - Does “Boston Market” = “McDonalds”?
- Alternate goal: wait for a query, then answer it, propagating uncertainty about linkage decisions on that query to the end user

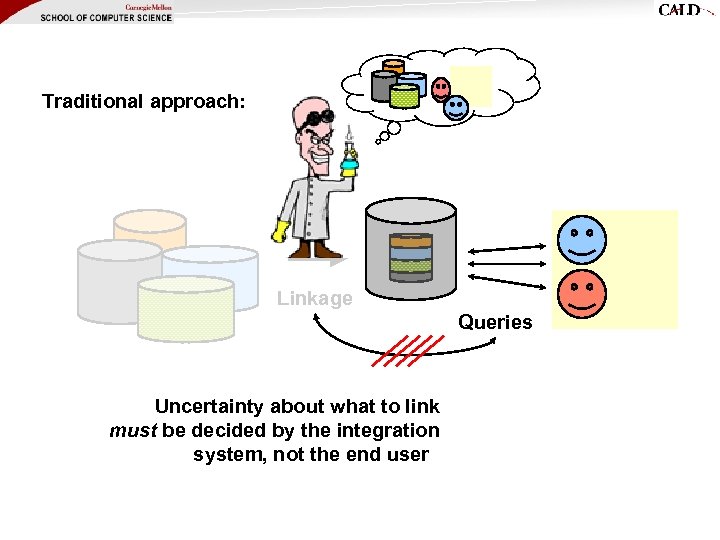

Traditional approach:

Diagram labels: Linkage, Queries

Uncertainty about what to link must be decided by the integration system, not the end user

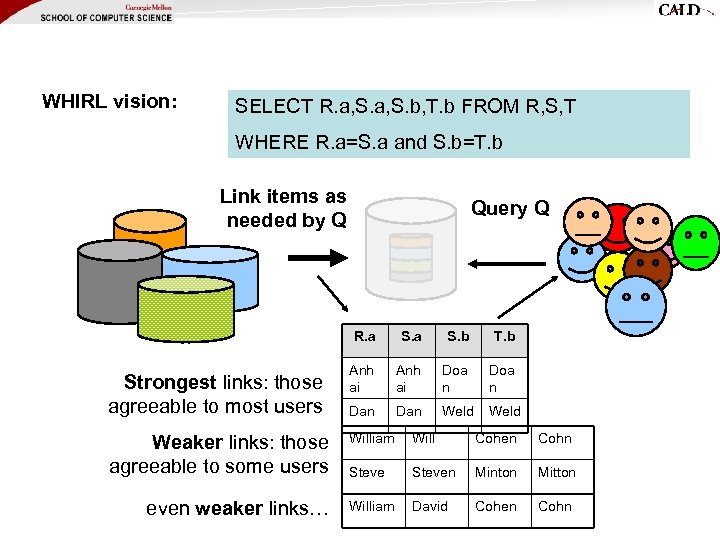

WHIRL vision:

`SELECT R.a, S.b, T.b FROM R, S, T WHERE R.a=S.a and S.b=T.b`

- Link items as needed by query Q
- Strongest links: those agreeable to most users
- Weaker links: those agreeable to some users
- Even weaker links…

Example values from the diagram (columns R.a, S.a, S.b, T.b), strongest links first: Anhai, Doan; Dan, Weld; William / Will, Cohen / Cohn; Steven, Minton / Mitton; William / David, Cohen / Cohn

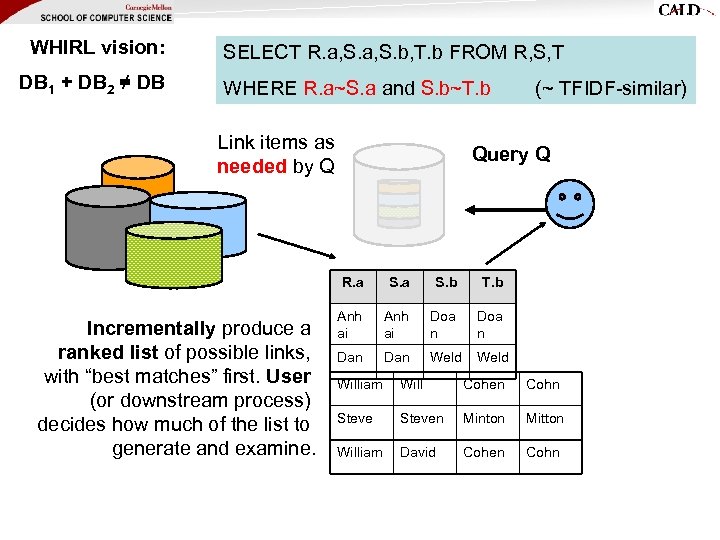

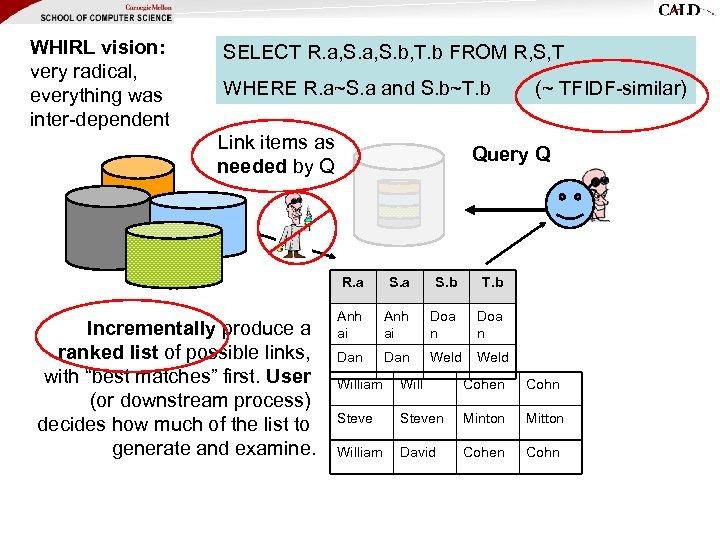

WHIRL vision: DB1 + DB2 ≠ DB

`SELECT R.a, S.b, T.b FROM R, S, T WHERE R.a~S.a and S.b~T.b` (~ means TFIDF-similar)

- Link items as needed by query Q
- Incrementally produce a ranked list of possible links, with “best matches” first. User (or downstream process) decides how much of the list to generate and examine.

Example values from the diagram (columns R.a, S.a, S.b, T.b): Anhai, Doan; Dan, Weld; William / Will, Cohen / Cohn; Steven, Minton / Mitton; William / David, Cohen / Cohn
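The idea of answering a query with a ranked list of soft links, rather than linking the databases first, can be sketched by brute force. The code below is an illustration only. It assumes a conjunction is scored by the product of its similarity scores, it uses character-bigram Jaccard overlap as a stand-in for TFIDF similarity, and it enumerates every combination instead of searching incrementally. The relation contents are hypothetical values in the spirit of the slide's example.

```python
import heapq
from itertools import product

def bigrams(s):
    """Set of lowercase character bigrams of a string."""
    s = s.lower()
    return {s[i:i + 2] for i in range(len(s) - 1)}

def sim(a, b):
    # Stand-in similarity (NOT TFIDF): Jaccard overlap of character bigrams.
    x, y = bigrams(a), bigrams(b)
    return len(x & y) / len(x | y) if x | y else 0.0

def soft_join(R, S, T, k=3):
    """Top-k answers to: SELECT R.a, S.b, T.b WHERE R.a~S.a AND S.b~T.b."""
    candidates = (
        (sim(r_a, s_a) * sim(s_b, t_b), (r_a, s_b, t_b))
        for r_a, (s_a, s_b), t_b in product(R, S, T)
    )
    scored = (c for c in candidates if c[0] > 0)
    # Best matches first; the caller chooses how far down the list to look.
    return heapq.nlargest(k, scored, key=lambda c: c[0])

R = ["William", "Steven"]                        # R.a
S = [("Will", "Cohen"), ("Steven", "Minton")]    # (S.a, S.b)
T = ["Cohn", "Mitton"]                           # T.b

for score, row in soft_join(R, S, T):
    print(round(score, 3), row)
```

Raising `k` corresponds to the user asking for more of the ranked list; no hard decision about which names are "equal" is ever stored.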

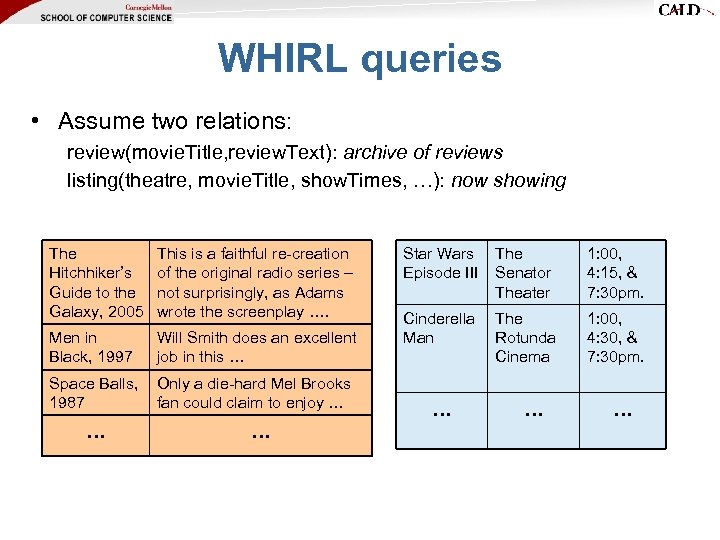

WHIRL queries

- Assume two relations:
  - review(movieTitle, reviewText): archive of reviews
  - listing(theatre, movieTitle, showTimes, …): now showing

review:

| movieTitle | reviewText |
| --- | --- |
| The Hitchhiker’s Guide to the Galaxy, 2005 | This is a faithful re-creation of the original radio series – not surprisingly, as Adams wrote the screenplay … |
| Men in Black, 1997 | Will Smith does an excellent job in this … |
| Space Balls, 1987 | Only a die-hard Mel Brooks fan could claim to enjoy … |
| … | … |

listing:

| movieTitle | theatre | showTimes |
| --- | --- | --- |
| Star Wars Episode III | The Senator Theater | 1:00, 4:15, & 7:30 pm. |
| Cinderella Man | The Rotunda Cinema | 1:00, 4:30, & 7:30 pm. |
| … | … | … |

WHIRL queries

- “Find reviews of sci-fi comedies” [movie domain]

  `SELECT * FROM review as r WHERE r.text~'sci fi comedy'`

  (like standard ranked retrieval of “sci-fi comedy”)

- “Where is [that sci-fi comedy] playing?”

  `SELECT * FROM review as r, listing as s WHERE r.title~s.title and r.text~'sci fi comedy'`

  (best answers: titles are similar to each other, e.g., “Hitchhiker’s Guide to the Galaxy” and “The Hitchhiker’s Guide to the Galaxy, 2005”, and the review text is similar to “sci-fi comedy”)

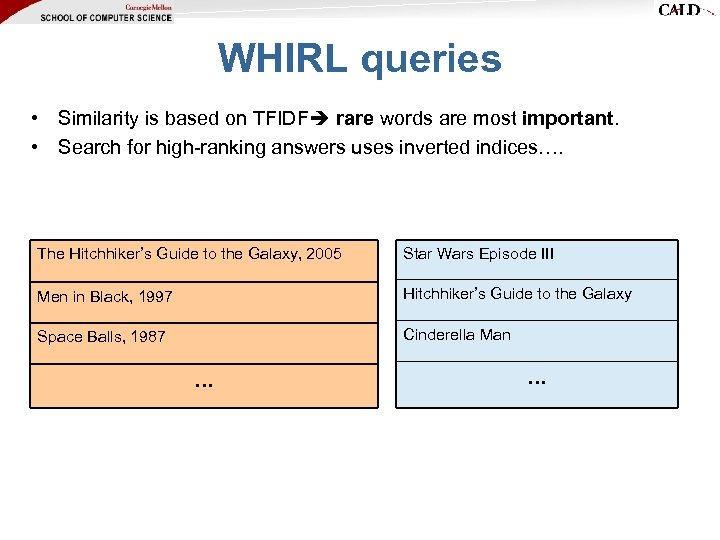

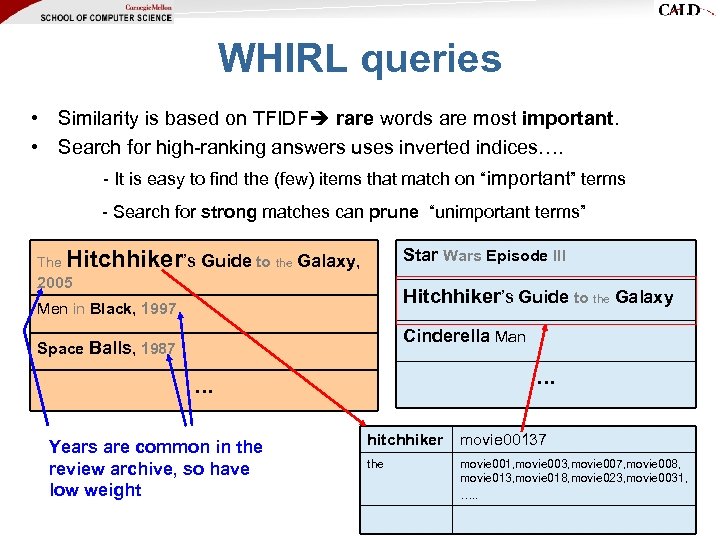

WHIRL queries

- Similarity is based on TFIDF: rare words are most important.
- Search for high-ranking answers uses inverted indices…

| review titles | listing titles |
| --- | --- |
| The Hitchhiker’s Guide to the Galaxy, 2005 | Star Wars Episode III |
| Men in Black, 1997 | Hitchhiker’s Guide to the Galaxy |
| Space Balls, 1987 | Cinderella Man |
| … | … |

WHIRL queries

- Similarity is based on TFIDF: rare words are most important.
- Search for high-ranking answers uses inverted indices…
  - It is easy to find the (few) items that match on “important” terms
  - Search for strong matches can prune “unimportant terms”

| review titles | listing titles |
| --- | --- |
| The Hitchhiker’s Guide to the Galaxy, 2005 | Star Wars Episode III |
| Men in Black, 1997 | Hitchhiker’s Guide to the Galaxy |
| Space Balls, 1987 | Cinderella Man |
| … | … |

Years are common in the review archive, so have low weight.

Inverted index:

- hitchhiker → movie00137
- the → movie001, movie003, movie007, movie008, movie013, movie018, movie023, movie0031, …
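A small sketch shows why an inverted index makes this kind of search cheap: a query touches only the postings of its own terms, so documents that share nothing with it are never scored. This is an illustration, not WHIRL's implementation. The class name `TfidfIndex`, the smoothed weight log(1 + N/df), and the tokenizer are assumptions made for the example.

```python
import math
import re
from collections import Counter, defaultdict

def tokens(text):
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

class TfidfIndex:
    def __init__(self, docs):
        self.docs = docs
        n = len(docs)
        df = Counter(t for d in docs for t in set(tokens(d)))
        # Smoothed IDF (an assumption): rare terms get large weights.
        self.idf = {t: math.log(1 + n / c) for t, c in df.items()}
        self.postings = defaultdict(list)  # term -> [(doc_id, weight)]
        for doc_id, d in enumerate(docs):
            for t, w in self.vector(d).items():
                self.postings[t].append((doc_id, w))

    def vector(self, text):
        """Unit-length TFIDF vector; terms unseen in the corpus are dropped."""
        tf = Counter(t for t in tokens(text) if t in self.idf)
        raw = {t: c * self.idf[t] for t, c in tf.items()}
        norm = math.sqrt(sum(w * w for w in raw.values()))
        return {t: w / norm for t, w in raw.items()} if norm else {}

    def search(self, query, k=3):
        """Cosine-rank documents, visiting only postings of the query's terms."""
        scores = defaultdict(float)
        for t, qw in self.vector(query).items():
            for doc_id, dw in self.postings[t]:
                scores[doc_id] += qw * dw
        ranked = sorted(scores.items(), key=lambda kv: -kv[1])[:k]
        return [(self.docs[i], s) for i, s in ranked]

reviews = [
    "The Hitchhiker's Guide to the Galaxy, 2005",
    "Men in Black, 1997",
    "Space Balls, 1987",
]
index = TfidfIndex(reviews)
for title, score in index.search("Hitchhiker's Guide to the Galaxy"):
    print(round(score, 3), title)
```

In a realistic archive a term like "the" would have a long posting list and a small weight, which is what lets a search for strong matches skip it.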

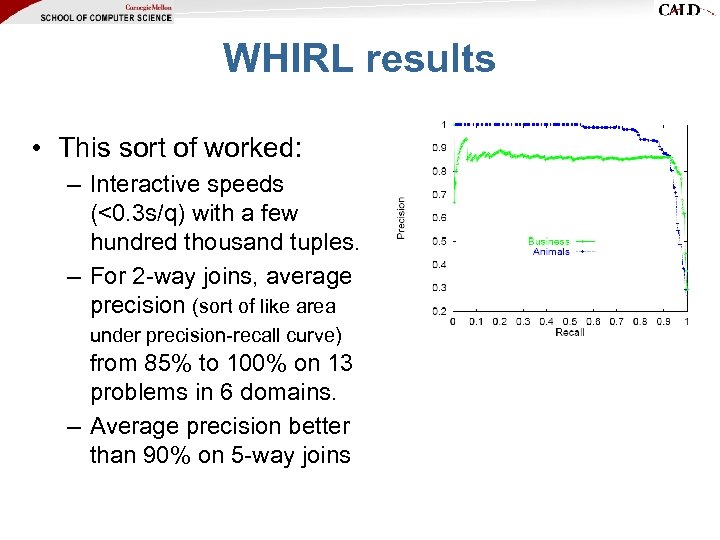

WHIRL results

- This sort of worked:
  - Interactive speeds (<0.3 s/q) with a few hundred thousand tuples.
  - For 2-way joins, average precision (sort of like area under precision-recall curve) from 85% to 100% on 13 problems in 6 domains.
  - Average precision better than 90% on 5-way joins
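For readers unfamiliar with the measure, here is a minimal sketch of average precision over a ranked list of proposed links. It assumes the common non-interpolated definition (the mean of the precision values at each rank where a correct link appears). The slides do not say which variant was used in the experiments.

```python
def average_precision(ranked_is_correct, total_relevant=None):
    """Non-interpolated average precision of a ranked list.

    ranked_is_correct: booleans, best-ranked answer first.
    total_relevant: number of correct answers that exist; defaults to the
    number found in the list.
    """
    hits = 0
    precision_sum = 0.0
    for rank, correct in enumerate(ranked_is_correct, start=1):
        if correct:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    denom = total_relevant if total_relevant is not None else hits
    return precision_sum / denom if denom else 0.0

# Correct links at ranks 1 and 3: (1/1 + 2/3) / 2
print(average_precision([True, False, True]))
```

A ranking that puts every correct link ahead of every incorrect one scores 1.0, which is why the measure behaves much like area under the precision-recall curve.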

WHIRL and soft integration

- WHIRL worked for a number of web-based demo applications.
  - e.g., integrating data from 30-50 smallish web DBs with <1 FTE labor
- WHIRL could link many data types reasonably well, without engineering
- WHIRL generated numerous papers (Sigmod 98, KDD 98, Agents 99, AAAI 99, TOIS 2000, AIJ 2000, ICML 2000, JAIR 2001)
- WHIRL was relational
  - But see ELIXIR (SIGIR 2001)
- WHIRL users need to know schema of source DBs
- WHIRL’s query-time linkage worked only for TFIDF, token-based distance metrics
  - Text fields with few misspellings
- WHIRL was memory-based
  - all data must be centrally stored; no federated data.
  - small datasets only

WHIRL vision: very radical, everything was inter-dependent

`SELECT R.a, S.b, T.b FROM R, S, T WHERE R.a~S.a and S.b~T.b` (~ means TFIDF-similar)

- Link items as needed by query Q
- Incrementally produce a ranked list of possible links, with “best matches” first. User (or downstream process) decides how much of the list to generate and examine.

Example values from the diagram (columns R.a, S.a, S.b, T.b): Anhai, Doan; Dan, Weld; William / Will, Cohen / Cohn; Steven, Minton / Mitton; William / David, Cohen / Cohn

Information integration, 2005-2059

- Understanding sources of uncertainty, and propagating uncertainty to the end user.
  - “Soft” information integration
  - Driven by user’s goal and user’s queries
- Information integration for the great unwashed masses
  - Personal information, small stores of scientific information, …
- Using more information in linkage:
  - Text, images, multiple interacting “hard” sources
- Anonymous secure linkage; non-technical limitations of how information can be combined, as well as distributed.

Information integration, 2005-2059

- Understanding sources of uncertainty, and propagating uncertainty to the end user.
  - “Soft” information integration
  - Driven by user’s goal and user’s queries
- Information integration for the great unwashed masses
  - Personal information, small stores of scientific information, …
- Needed:
  - Robust distance metrics that work “out of the box”
  - Methods to tune and combine these metrics

Robust distance metrics for strings

- Kinds of distances between s and t:
  - Edit-distance based (Levenshtein, Smith-Waterman, …): distance is cost of cheapest sequence of edits that transform s to t.
  - Term-based (TFIDF, Jaccard, DICE, …): distance based on set of words in s and t, usually weighting “important” words
- Which methods work best when?
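The two families behave quite differently, which a pair of minimal implementations makes visible. The sketch below uses unit-cost Levenshtein distance for the edit-distance family and unweighted word-set Jaccard similarity for the term-based family. Both are the plainest members of their families, chosen here for brevity.

```python
def levenshtein(s, t):
    """Minimum number of single-character inserts, deletes and substitutions."""
    prev = list(range(len(t) + 1))
    for i, cs in enumerate(s, start=1):
        cur = [i]
        for j, ct in enumerate(t, start=1):
            cur.append(min(prev[j] + 1,                # delete cs
                           cur[j - 1] + 1,             # insert ct
                           prev[j - 1] + (cs != ct)))  # substitute or match
        prev = cur
    return prev[-1]

def jaccard(s, t):
    """Overlap of the two strings' word sets (1.0 means identical sets)."""
    a, b = set(s.lower().split()), set(t.lower().split())
    return len(a & b) / len(a | b) if a | b else 1.0

# Misspelling: small edit distance, but zero word overlap.
print(levenshtein("Minton", "Mitton"), jaccard("Minton", "Mitton"))
# Reordered words: full word overlap, but a large edit distance.
print(levenshtein("Bell Labs", "Labs Bell"), jaccard("Bell Labs", "Labs Bell"))
```

Edit distance tolerates typos but is thrown off by reordering, while term-based measures are the reverse, which is the motivation for asking which method works best when.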

Robust distance metrics for strings

SecondString (Cohen, Ravikumar, Fienberg, IIWeb 2003):

- Java toolkit of string-matching methods from AI, Statistics, IR and DB communities
- Tools for evaluating performance on test data
- Used to experimentally compare a number of metrics

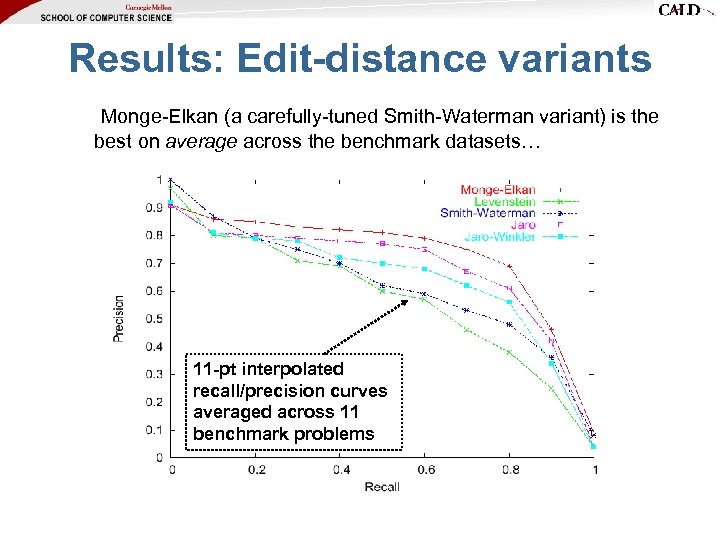

Results: Edit-distance variants
Monge-Elkan (a carefully tuned Smith-Waterman variant) is the best on average across the benchmark datasets…
11-pt interpolated recall/precision curves averaged across 11 benchmark problems
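For readers unfamiliar with the evaluation measure, the sketch below computes an 11-point interpolated precision curve from a ranked list of candidate pairs. It follows the usual IR definition (interpolated precision at recall level r is the best precision achieved at any recall of at least r); the function name and input format are assumptions, and `total_relevant` is assumed to be positive.

```python
def eleven_point_interpolated_precision(ranked_labels, total_relevant):
    """ranked_labels: True/False per candidate pair, sorted by decreasing similarity.
    total_relevant: number of true matches in the benchmark (assumed > 0)."""
    points = []                      # (recall, precision) after each rank
    hits = 0
    for rank, is_match in enumerate(ranked_labels, start=1):
        if is_match:
            hits += 1
        points.append((hits / total_relevant, hits / rank))

    curve = []
    for k in range(11):              # recall levels 0.0, 0.1, ..., 1.0
        level = k / 10
        reachable = [p for r, p in points if r >= level - 1e-12]
        curve.append(max(reachable) if reachable else 0.0)
    return curve
```

Averaging such curves point by point over several benchmark problems gives a single curve per metric.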

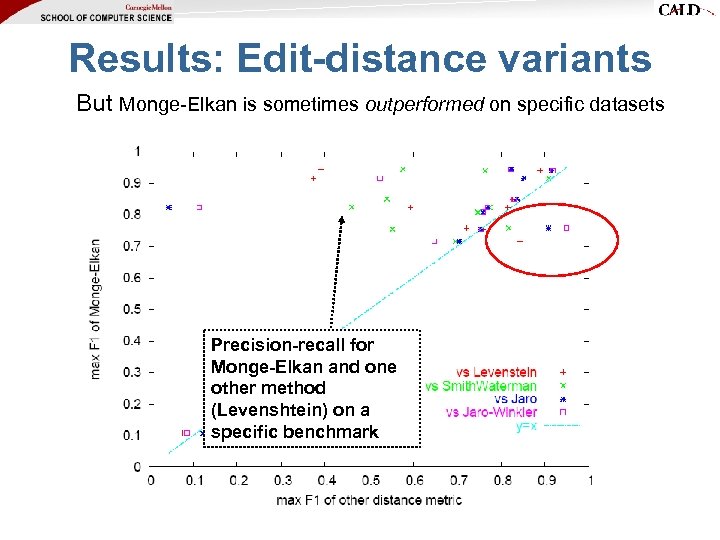

Results: Edit-distance variants
But Monge-Elkan is sometimes outperformed on specific datasets.
Precision-recall for Monge-Elkan and one other method (Levenshtein) on a specific benchmark

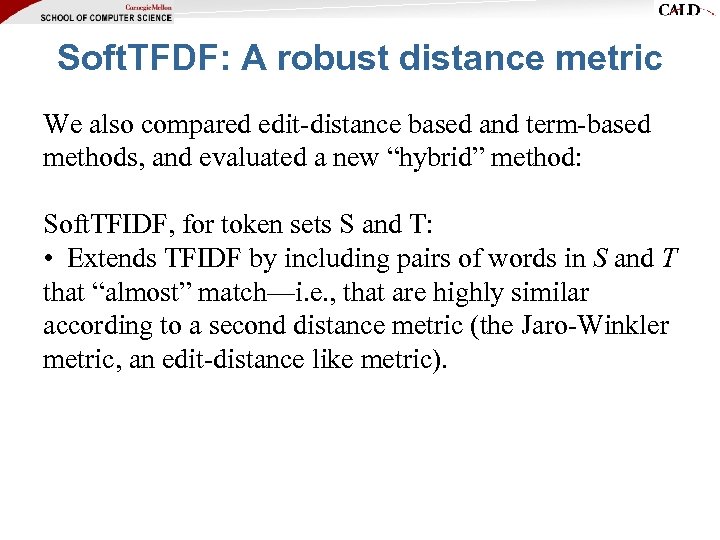

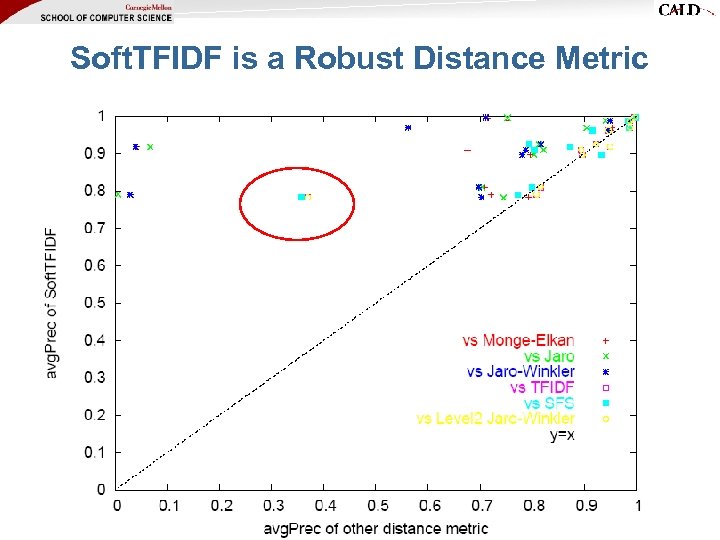

SoftTFIDF: A robust distance metric
We also compared edit-distance based and term-based methods, and evaluated a new “hybrid” method, SoftTFIDF. For token sets S and T:
• Extends TFIDF by including pairs of words in S and T that “almost” match, i.e., that are highly similar according to a second distance metric (the Jaro-Winkler metric, an edit-distance-like metric).
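The idea can be sketched as follows. This is an illustration under stated assumptions, not the SecondString implementation: `difflib.SequenceMatcher.ratio()` stands in for Jaro-Winkler as the secondary metric, the smoothed IDF formula is a choice made here, and `doc_freq`, `n_docs` and `theta` are hypothetical parameter names.

```python
import math
from collections import Counter
from difflib import SequenceMatcher


def secondary_sim(a, b):
    # Stand-in for Jaro-Winkler (assumption): difflib's ratio, in [0, 1].
    return SequenceMatcher(None, a, b).ratio()


def tfidf_weights(tokens, doc_freq, n_docs):
    """Unit-length TFIDF vector; unseen tokens are treated as df = 1."""
    tf = Counter(tokens)
    raw = {w: math.log(tf[w] + 1) * math.log(1 + n_docs / doc_freq.get(w, 1))
           for w in tf}
    norm = math.sqrt(sum(x * x for x in raw.values())) or 1.0
    return {w: x / norm for w, x in raw.items()}


def soft_tfidf(s_tokens, t_tokens, doc_freq, n_docs, theta=0.9):
    vs = tfidf_weights(s_tokens, doc_freq, n_docs)
    vt = tfidf_weights(t_tokens, doc_freq, n_docs)
    score = 0.0
    for w in vs:
        # Closest token in T under the secondary metric.
        best_v, best_sim = None, 0.0
        for v in vt:
            sim = secondary_sim(w, v)
            if sim > best_sim:
                best_v, best_sim = v, sim
        # Count the pair only if it "almost" matches (similarity >= theta).
        if best_v is not None and best_sim >= theta:
            score += vs[w] * vt[best_v] * best_sim
    return score
```

With `theta=1.0` only exact token matches contribute, which reduces the score to plain TFIDF cosine similarity; lowering `theta` lets misspelled tokens such as "Willliam" still contribute.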

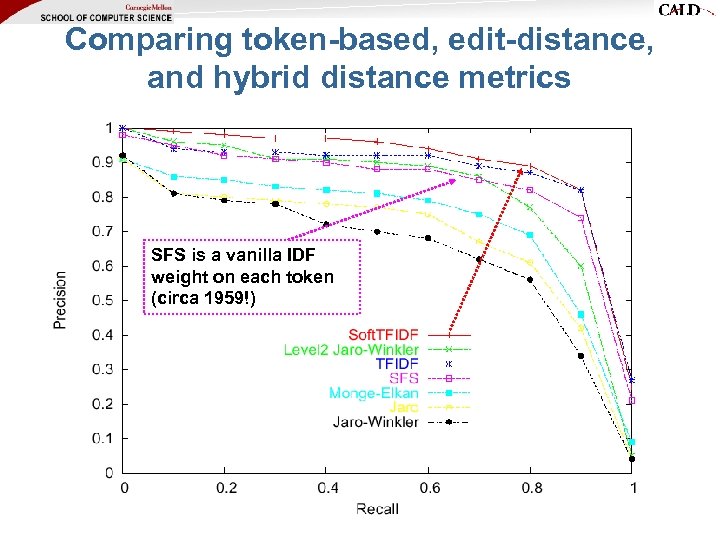

Comparing token-based, edit-distance, and hybrid distance metrics
SFS is a vanilla IDF weight on each token (circa 1959!)

SoftTFIDF is a Robust Distance Metric

Information integration, 2005-2059
• Understanding sources of uncertainty, and propagating uncertainty to the end user.
  – “Soft” information integration
  – Driven by user’s goal and user’s queries
• Information integration for the great unwashed masses
  – Personal information, small stores of scientific information, …
• Needed:
  – Robust distance metrics that work “out of the box”
  – Methods to tune and combine these metrics

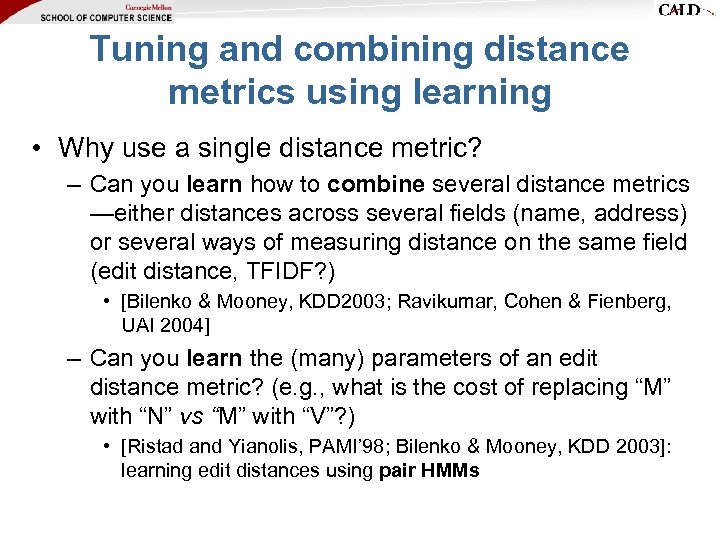

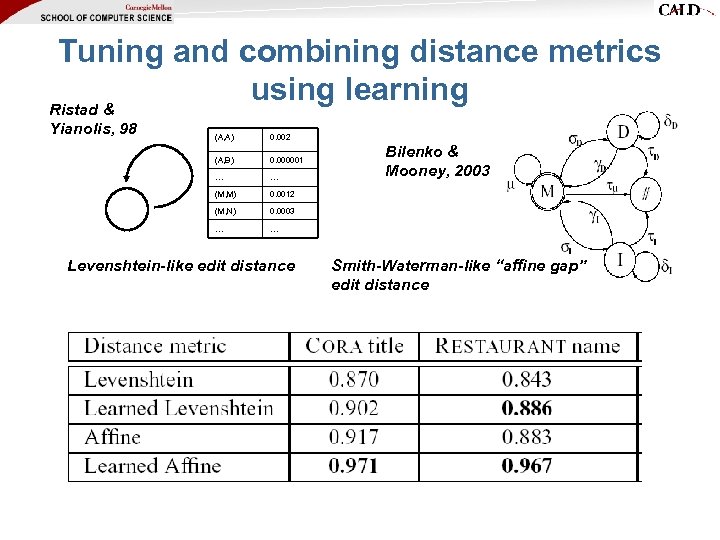

Tuning and combining distance metrics using learning
• Why use a single distance metric?
  – Can you learn how to combine several distance metrics, either distances across several fields (name, address) or several ways of measuring distance on the same field (edit distance, TFIDF)?
    • [Bilenko & Mooney, KDD 2003; Ravikumar, Cohen & Fienberg, UAI 2004]
  – Can you learn the (many) parameters of an edit distance metric (e.g., what is the cost of replacing “M” with “N” vs. “M” with “V”)?
    • [Ristad & Yianilos, PAMI ’98; Bilenko & Mooney, KDD 2003]: learning edit distances using pair HMMs

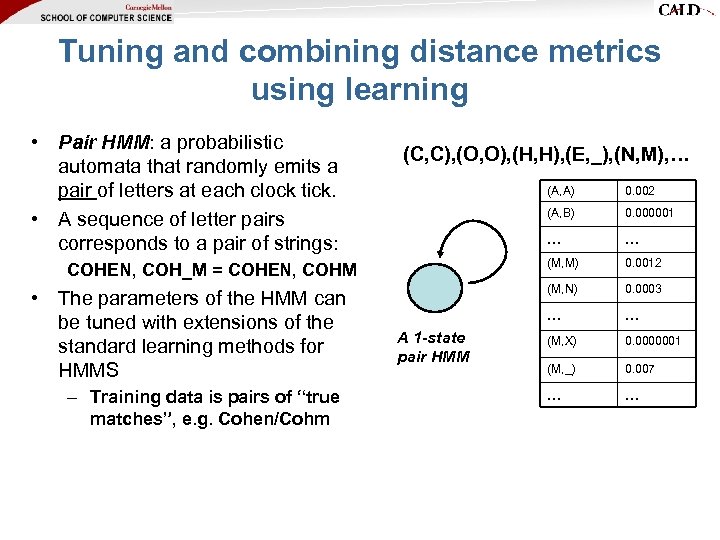

Tuning and combining distance metrics using learning
• Pair HMM: a probabilistic automaton that randomly emits a pair of letters at each clock tick.
• A sequence of letter pairs corresponds to a pair of strings:
  (C, C), (O, O), (H, H), (E, _), (N, M), … corresponds to COHEN, COH_M = COHEN, COHM
• The parameters of the HMM can be tuned with extensions of the standard learning methods for HMMs.
  – Training data is pairs of “true matches”, e.g. Cohen/Cohm

A 1-state pair HMM, emission probabilities (excerpt):
  (A, A) 0.002
  (A, B) 0.000001
  …
  (M, M) 0.0012
  (M, N) 0.0003
  …
  (M, X) 0.0000001
  (M, _) 0.007
  …
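A minimal sketch of how a 1-state pair HMM scores a string pair: one function multiplies the emission probabilities along a given alignment, and a forward-style dynamic program sums over all alignments. The emission values in `emit` are hypothetical toy numbers, not the learned values from the slide, and the stop probability is omitted for brevity.

```python
GAP = "_"


def emit(a, b, p_match=0.03, p_sub=0.0002, p_gap=0.0015):
    """Hypothetical emission probability for the letter pair (a, b)."""
    if a == GAP or b == GAP:
        return p_gap
    return p_match if a == b else p_sub


def alignment_prob(pairs):
    """Probability of emitting one specific sequence of letter pairs."""
    prob = 1.0
    for a, b in pairs:
        prob *= emit(a, b)
    return prob


def pair_prob(s, t):
    """Sum over all alignments of s and t (forward algorithm, one state)."""
    f = [[0.0] * (len(t) + 1) for _ in range(len(s) + 1)]
    f[0][0] = 1.0
    for i in range(len(s) + 1):
        for j in range(len(t) + 1):
            if i > 0 and j > 0:
                f[i][j] += f[i - 1][j - 1] * emit(s[i - 1], t[j - 1])
            if i > 0:
                f[i][j] += f[i - 1][j] * emit(s[i - 1], GAP)   # letter of s vs. gap
            if j > 0:
                f[i][j] += f[i][j - 1] * emit(GAP, t[j - 1])   # gap vs. letter of t
    return f[len(s)][len(t)]
```

Learning replaces the fixed numbers in `emit` with parameters re-estimated from true matched pairs; the same table of forward values is what an EM-style update would reuse.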

Tuning and combining distance metrics using learning
• Ristad & Yianilos, ’98: Levenshtein-like edit distance
• Bilenko & Mooney, 2003: Smith-Waterman-like “affine gap” edit distance
Emission probabilities (excerpt): (A, A) 0.002; (A, B) 0.000001; …; (M, M) 0.0012; (M, N) 0.0003; …

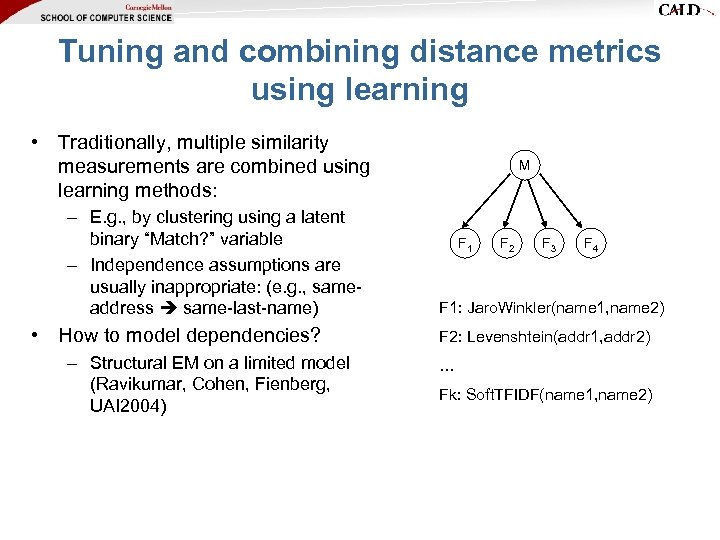

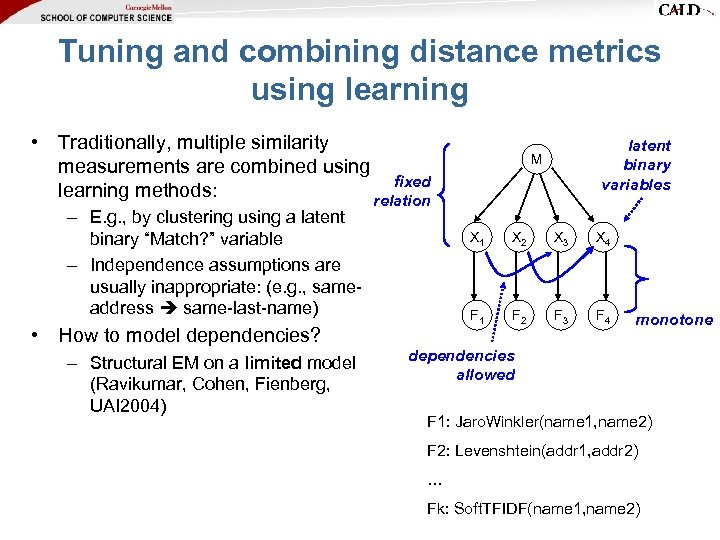

Tuning and combining distance metrics using learning
• Traditionally, multiple similarity measurements are combined using learning methods:
  – E.g., by clustering using a latent binary “Match?” variable
  – Independence assumptions are usually inappropriate (e.g., same-address and same-last-name)
• How to model dependencies?
  – Structural EM on a limited model (Ravikumar, Cohen, Fienberg, UAI 2004)
Diagram: latent variable M with observed features F1, F2, F3, F4
  F1: JaroWinkler(name1, name2)
  F2: Levenshtein(addr1, addr2)
  …
  Fk: SoftTFIDF(name1, name2)
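The traditional baseline mentioned above, clustering with a latent binary “Match?” variable under the independence assumption, can be sketched as EM for a two-component mixture of independent binary features. This illustrates only the baseline, not the structural EM model of Ravikumar et al.; thresholding similarities into 0/1 agreement features, the initial values, and all names are assumptions.

```python
def fit_match_model(pairs, n_iter=50, eps=1e-6):
    """pairs: list of equal-length 0/1 tuples, one per candidate record pair.
    Returns (posteriors P(match | features), P(match), m, u) where
    m[i] = P(feature i agrees | match), u[i] = P(feature i agrees | non-match)."""
    n, k = len(pairs), len(pairs[0])
    p_match = 0.2                     # asymmetric start so "match" = agreeing cluster
    m, u = [0.8] * k, [0.2] * k
    post = []
    for _ in range(n_iter):
        # E-step: posterior of the latent Match variable, features independent.
        post = []
        for x in pairs:
            pm, pu = p_match, 1.0 - p_match
            for xi, mi, ui in zip(x, m, u):
                pm *= mi if xi else 1.0 - mi
                pu *= ui if xi else 1.0 - ui
            post.append(pm / (pm + pu))
        # M-step: re-estimate parameters from the soft assignments.
        total = min(max(sum(post), eps), n - eps)
        p_match = total / n
        for i in range(k):
            mi = sum(g * x[i] for g, x in zip(post, pairs)) / total
            ui = sum((1.0 - g) * x[i] for g, x in zip(post, pairs)) / (n - total)
            m[i] = min(max(mi, eps), 1.0 - eps)
            u[i] = min(max(ui, eps), 1.0 - eps)
    return post, p_match, m, u
```

Because each feature contributes an independent factor, two correlated features (such as same-address and same-last-name) are effectively counted twice, which is the weakness the slide points out.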

Tuning and combining distance metrics using learning
• Traditionally, multiple similarity measurements are combined using learning methods:
  – E.g., by clustering using a latent binary “Match?” variable
  – Independence assumptions are usually inappropriate (e.g., same-address and same-last-name)
• How to model dependencies?
  – Structural EM on a limited model (Ravikumar, Cohen, Fienberg, UAI 2004)
Diagram labels: M; latent binary variables X1, X2, X3, X4; features F1, F2, F3, F4; “fixed relation”; “monotone dependencies allowed”
  F1: JaroWinkler(name1, name2)
  F2: Levenshtein(addr1, addr2)
  …
  Fk: SoftTFIDF(name1, name2)

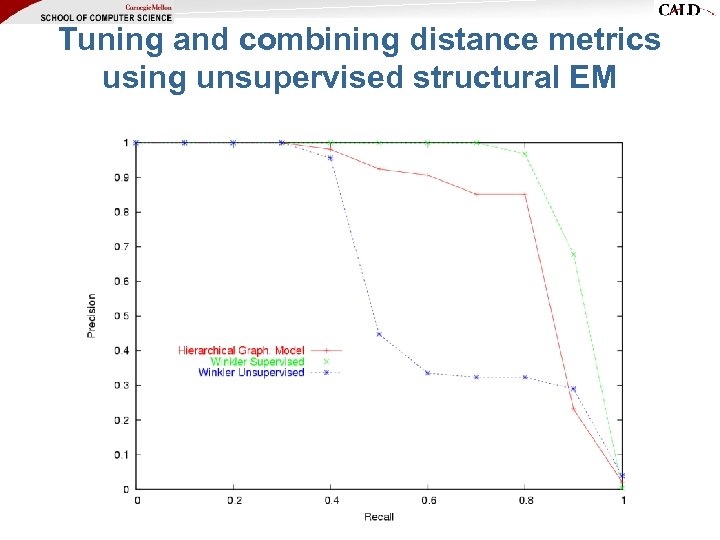

Tuning and combining distance metrics using unsupervised structural EM


Robust distance metrics, learnable using generative models (semi-/unsupervised learning)
• Summary:
  – None of these methods has been evaluated on as many integration problems as one would like
  – The structural EM method of Ravikumar et al. works well, but is computationally expensive
  – The pair-HMM methods of Ristad & Yianilos and Bilenko & Mooney work well, but require “true matched pairs”
  – Claim: these could be combined by using pair HMMs in the inner loop of structural EM
    • Practical well before 2059
Diagram labels: M; latent binary variables X1, X2, X3, X4; features F1, F2, F3, F4; “fixed relation”; “dependencies allowed”

Information integration, 2005-2059
• Understanding sources of uncertainty, and propagating uncertainty to the end user.
  – “Soft” information integration
  – Driven by user’s goal and user’s queries
• Information integration for the great unwashed masses
  – Personal information, small stores of scientific information, …
• Needed:
  – Robust distance metrics that work “out of the box”
  – Methods to tune and combine these metrics
  – Ways to rapidly integrate new information sources with unknown schemata

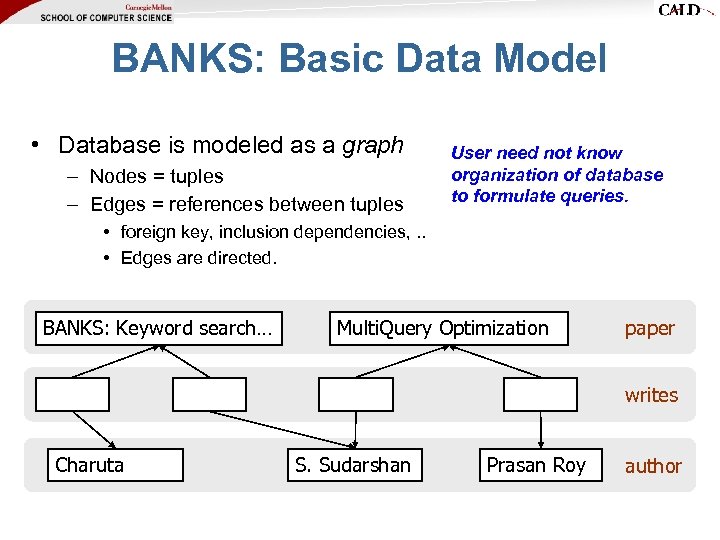

BANKS: Basic Data Model
• Database is modeled as a graph
  – Nodes = tuples
  – Edges = references between tuples
    • foreign key, inclusion dependencies, …
    • Edges are directed.
User need not know the organization of the database to formulate queries.
Example graph (node labels): papers “BANKS: Keyword search…” and “MultiQuery Optimization”; “writes” tuples; authors Charuta, S. Sudarshan, Prasan Roy

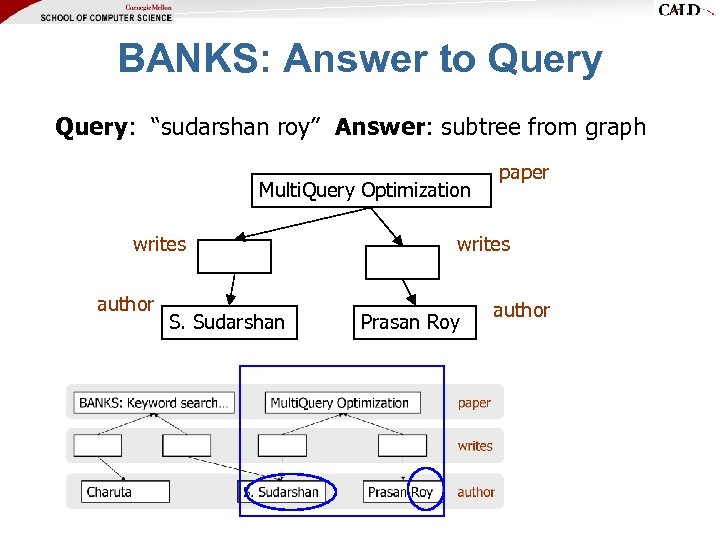

BANKS: Answer to Query
Query: “sudarshan roy”
Answer: subtree from graph
Example answer: the paper “MultiQuery Optimization”, connected through two “writes” tuples to the authors S. Sudarshan and Prasan Roy
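A simplified sketch of this style of keyword search: treat tuples as graph nodes, run one breadth-first search per keyword from the nodes whose text contains it, and pick as the answer root the node that is closest to all keywords. This is not the BANKS algorithm itself (which, among other things, weights edges and nodes); ignoring edge direction, the tie-breaking rule, the node identifiers, and the authorship edges in the usage example are all assumptions.

```python
from collections import deque


def bfs(adj, sources):
    """Multi-source BFS. Returns {node: (distance, parent towards a source)}."""
    info = {s: (0, None) for s in sources}
    queue = deque(sources)
    while queue:
        node = queue.popleft()
        for nb in adj.get(node, []):
            if nb not in info:
                info[nb] = (info[node][0] + 1, node)
                queue.append(nb)
    return info


def keyword_search(labels, edges, keywords):
    """labels: {node_id: text}; edges: (from, to) references between tuples."""
    adj = {}
    for a, b in edges:                       # direction ignored (simplification)
        adj.setdefault(a, []).append(b)
        adj.setdefault(b, []).append(a)
    searches = []
    for kw in keywords:
        hits = sorted(n for n, text in labels.items() if kw.lower() in text.lower())
        if not hits:
            return None
        searches.append(bfs(adj, hits))
    roots = [n for n in labels if all(n in s for s in searches)]
    if not roots:
        return None
    # Prefer the node whose farthest keyword is nearest, then smallest total distance.
    root = min(roots, key=lambda n: (max(s[n][0] for s in searches),
                                     sum(s[n][0] for s in searches), n))
    tree = set()
    for s in searches:                       # follow parents from root to each keyword
        node = root
        while s[node][1] is not None:
            tree.add((node, s[node][1]))
            node = s[node][1]
    return root, tree
```

On a toy version of the example graph (with assumed authorship edges), the query “sudarshan roy” returns the paper node as root with one branch per author:

```python
labels = {"p1": "MultiQuery Optimization", "p2": "BANKS: Keyword search",
          "a1": "S. Sudarshan", "a2": "Prasan Roy", "a3": "Charuta",
          "w1": "writes", "w2": "writes", "w3": "writes", "w4": "writes"}
edges = [("w1", "p1"), ("w1", "a1"), ("w2", "p1"), ("w2", "a2"),
         ("w3", "p2"), ("w3", "a1"), ("w4", "p2"), ("w4", "a3")]
root, tree = keyword_search(labels, edges, ["sudarshan", "roy"])
```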

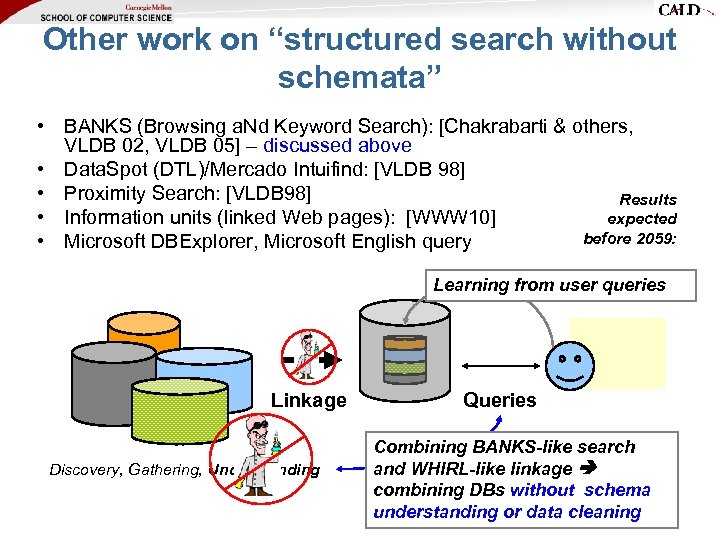

Other work on “structured search without schemata”
• BANKS (Browsing ANd Keyword Search): [Chakrabarti & others, VLDB 02, VLDB 05] – discussed above
• DataSpot (DTL)/Mercado Intuifind: [VLDB 98]
• Proximity Search: [VLDB 98]
• Information units (linked Web pages): [WWW 10]
• Microsoft DBExplorer, Microsoft English Query
Results expected before 2059:
• Learning from user queries
• Combining BANKS-like search and WHIRL-like linkage: combining DBs without schema understanding or data cleaning
Diagram labels: Linkage; Discovery, Gathering, Understanding; Queries

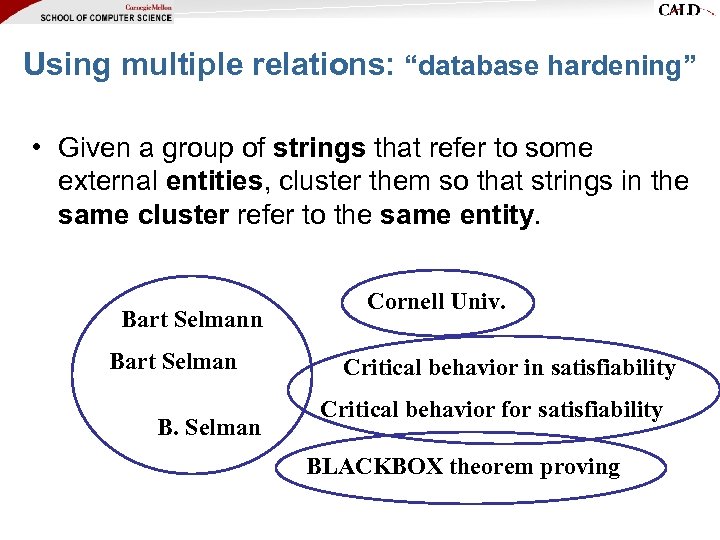

Using multiple relations: “database hardening”
• Given a group of strings that refer to some external entities, cluster them so that strings in the same cluster refer to the same entity.
Example strings: “Bart Selmann”, “Bart Selman”, “B. Selman”, “Cornell Univ.”, “Critical behavior in satisfiability”, “Critical behavior for satisfiability”, “BLACKBOX theorem proving”

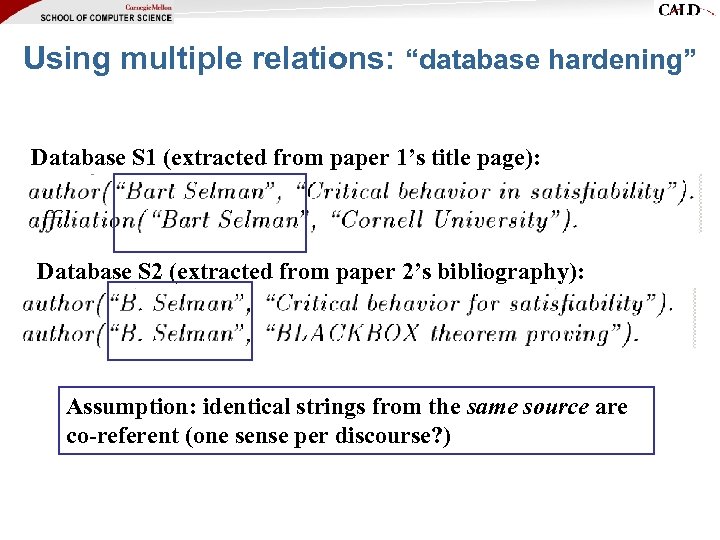

Using multiple relations: “database hardening”
Database S1 (extracted from paper 1’s title page):
Database S2 (extracted from paper 2’s bibliography):
Assumption: identical strings from the same source are co-referent (one sense per discourse?)

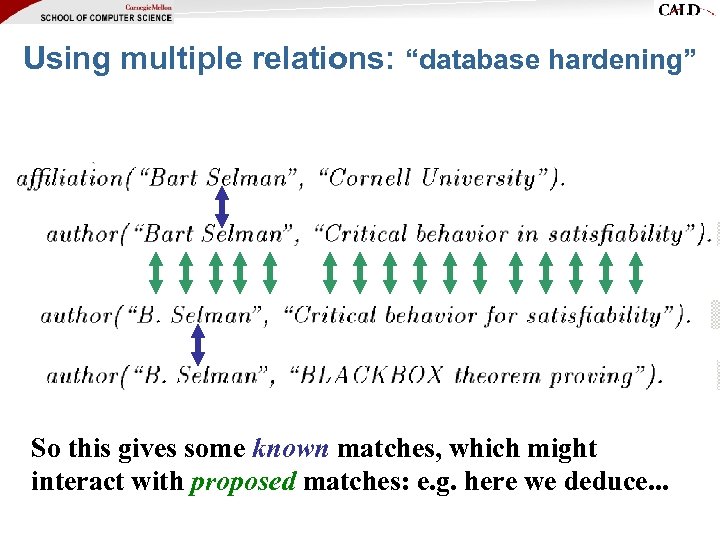

Using multiple relations: “database hardening”
So this gives some known matches, which might interact with proposed matches: e.g., here we deduce…

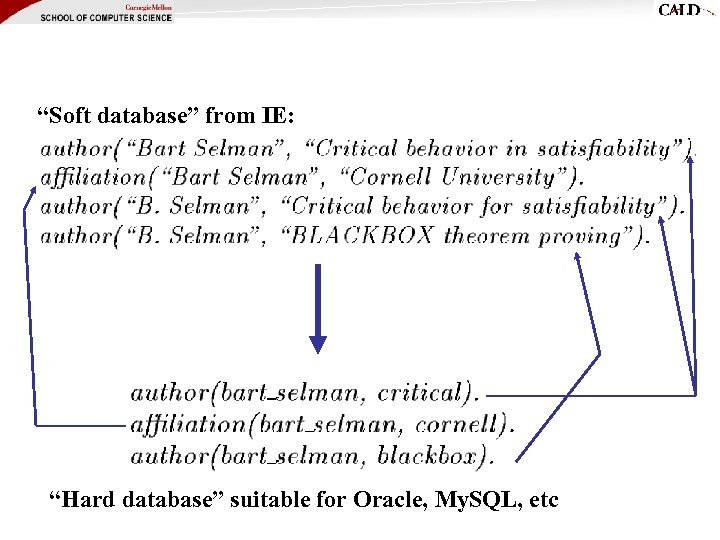

“Soft database” from IE
“Hard database” suitable for Oracle, MySQL, etc.

Using multiple relations: “database hardening”
• (McAllester et al., KDD 2000) Defined “hardening”:
  – Find an “interpretation” (a map variant -> name) that produces a compact version of the “soft” database S.
  – Probabilistic interpretation of hardening:
    • Original “soft” data S is a version of latent “hard” data H.
    • Hardening finds the maximum-likelihood H.
  – Hardening is hard!
    • Optimal hardening is NP-hard.
  – Greedy algorithm:
    • naive implementation is quadratic in |S|
    • clever data structures make it O(n log n), where n = |S|d
• Other related work:
  – Pasula et al., NIPS 2002: more explicit generative Bayesian formulation and MCMC method, experimental support
  – Wellner & McCallum 2004, Parag & Domingos 2004, Culotta & McCallum 2005: discriminative models for multiple-relation linkage

Information integration, 2005-2059
• Understanding sources of uncertainty, and propagating uncertainty to the end user.
  – “Soft” information integration
  – Driven by user’s goal and user’s queries
• Information integration for the great unwashed masses
  – Personal information, small stores of scientific information, …
• Using more information in linkage:
  – Text, images, multiple interacting “hard” sources

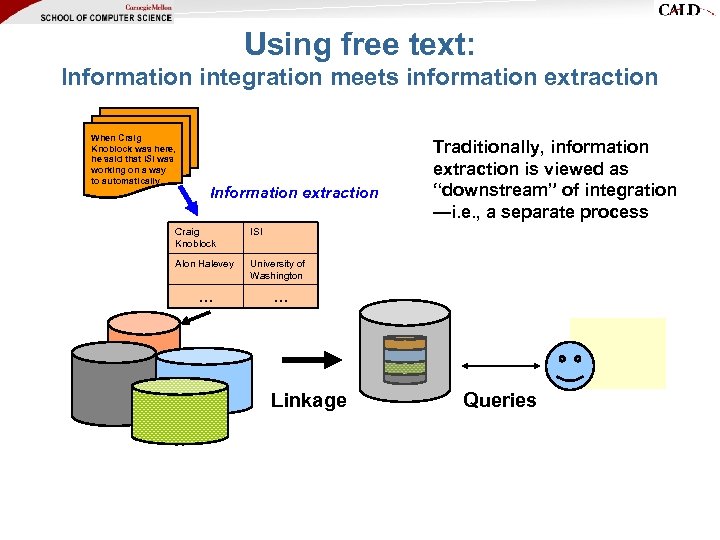

Using free text: Information integration meets information extraction
Text: “When Craig Knoblock was here, he said that ISI was working on a way to automatically…”
Information extraction yields:
  Craig Knoblock, ISI
  Alon Halevy, University of Washington
  …
Traditionally, information extraction is viewed as “downstream” of integration, i.e., a separate process.
Diagram labels: Linkage; Queries

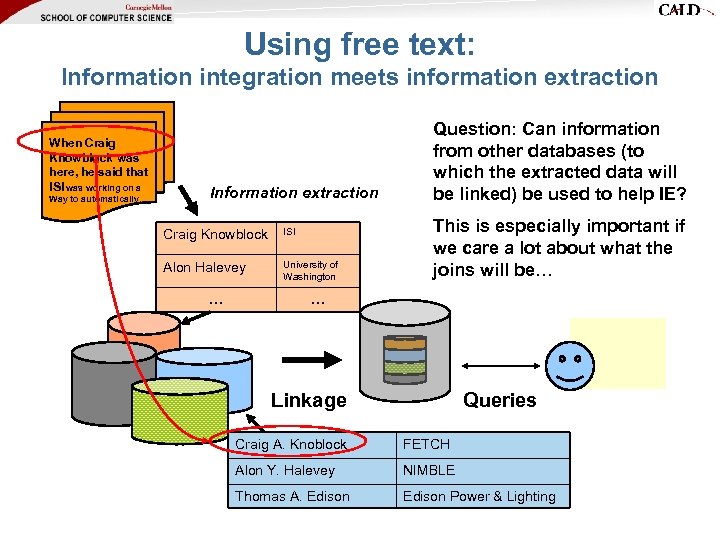

Using free text: Information integration meets information extraction
Text: “When Craig Knowblock was here, he said that ISI was working on a way to automatically…”
Information extraction yields:
  Craig Knowblock, ISI
  Alon Halevy, University of Washington
  …
Database to be linked:
  Craig A. Knoblock, FETCH
  Alon Y. Halevy, NIMBLE
  Thomas A. Edison, Power & Lighting
Question: Can information from other databases (to which the extracted data will be linked) be used to help IE? This is especially important if we care a lot about what the joins will be…
Diagram labels: Linkage; Queries

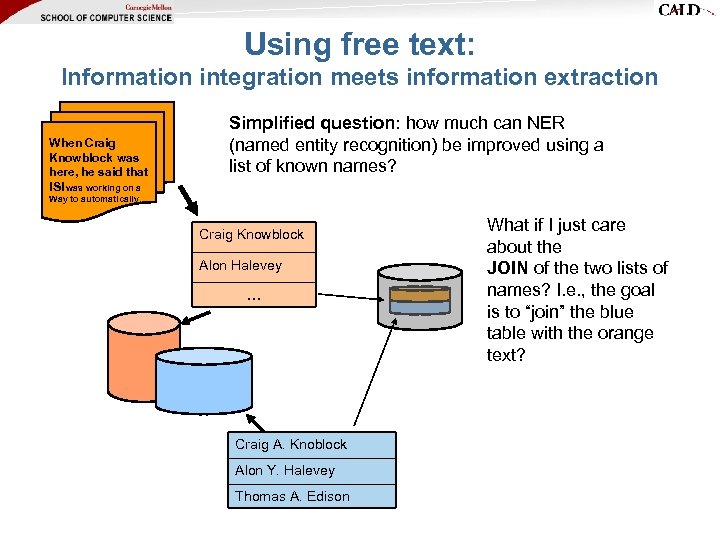

Using free text: Information integration meets information extraction
Text: “When Craig Knowblock was here, he said that ISI was working on a way to automatically…”
Names in the text: Craig Knowblock, Alon Halevy, …
List of known names: Craig A. Knoblock, Alon Y. Halevy, Thomas A. Edison
Simplified question: how much can NER (named entity recognition) be improved using a list of known names?
What if I just care about the JOIN of the two lists of names? I.e., the goal is to “join” the blue table with the orange text?

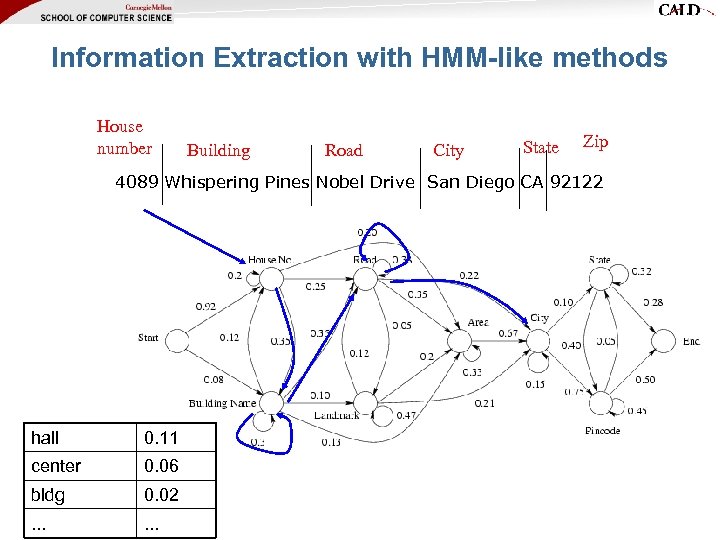

Information Extraction with HMM-like methods
States: House number, Building, Road, City, State, Zip
Example address: 4089 Whispering Pines Nobel Drive San Diego CA 92122
Emission probabilities for one state (excerpt): hall 0.11, center 0.06, bldg 0.02, …

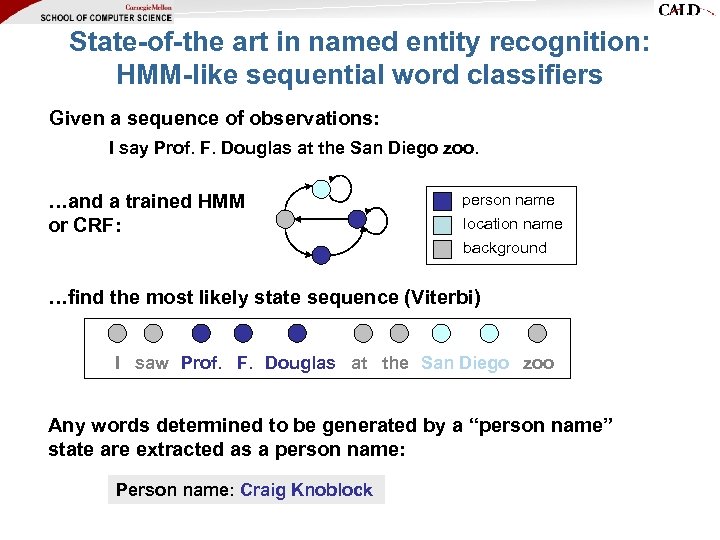

State of the art in named entity recognition: HMM-like sequential word classifiers
Given a sequence of observations:
  I saw Prof. F. Douglas at the San Diego zoo.
…and a trained HMM or CRF with states: person name, location name, background
…find the most likely state sequence (Viterbi):
  I saw Prof. F. Douglas at the San Diego zoo
Any words determined to be generated by a “person name” state are extracted as a person name:
  Person name: Craig Knoblock
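The decoding step can be sketched with a toy three-state HMM. All probabilities and vocabularies below are hypothetical stand-ins for what a trained HMM or CRF would supply; only the Viterbi recursion itself is the standard algorithm. The input is assumed to be non-empty.

```python
import math

STATES = ["person", "location", "background"]
# Hypothetical toy vocabularies; a trained model would supply real scores.
VOCAB = {
    "person": {"Prof.", "F.", "Douglas"},
    "location": {"San", "Diego", "zoo"},
    "background": {"I", "saw", "at", "the"},
}


def log_emit(state, word):
    return math.log(0.3 if word in VOCAB[state] else 0.001)


def log_trans(prev, state):
    return math.log(0.8 if prev == state else 0.1)


def viterbi(words):
    """Most likely state sequence for a non-empty list of words."""
    best = [{s: math.log(1 / len(STATES)) + log_emit(s, words[0]) for s in STATES}]
    back = []
    for word in words[1:]:
        scores, ptr = {}, {}
        for s in STATES:
            prev = max(STATES, key=lambda p: best[-1][p] + log_trans(p, s))
            scores[s] = best[-1][prev] + log_trans(prev, s) + log_emit(s, word)
            ptr[s] = prev
        best.append(scores)
        back.append(ptr)
    state = max(STATES, key=lambda s: best[-1][s])
    path = [state]
    for ptr in reversed(back):               # follow back-pointers to the start
        state = ptr[state]
        path.append(state)
    return path[::-1]


words = "I saw Prof. F. Douglas at the San Diego zoo".split()
path = viterbi(words)
person = " ".join(w for w, s in zip(words, path) if s == "person")
```

Here `person` collects the words assigned to the person-name state, which is the extraction rule described on the slide.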

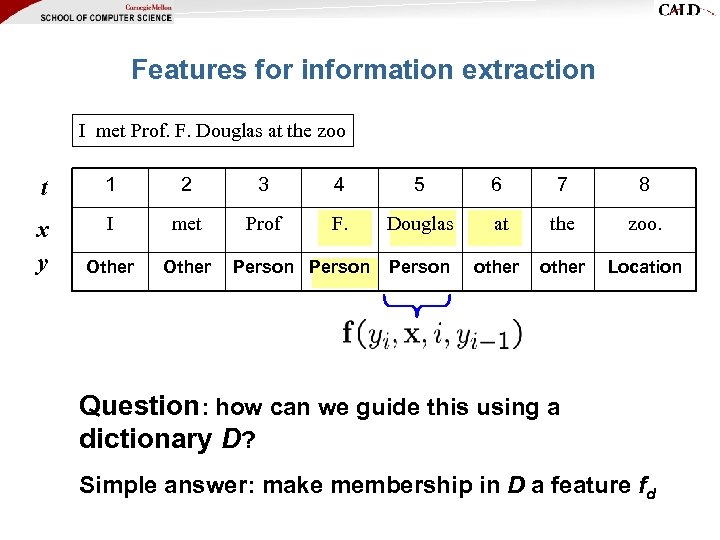

Features for information extraction
Sentence: I met Prof. F. Douglas at the zoo.
  t: 1 2 3 4 5 6 7 8
  x: I met Prof. F. Douglas at the zoo.
  y: Other Other Person Person Person other other Location
Question: how can we guide this using a dictionary D?
Simple answer: make membership in D a feature fd

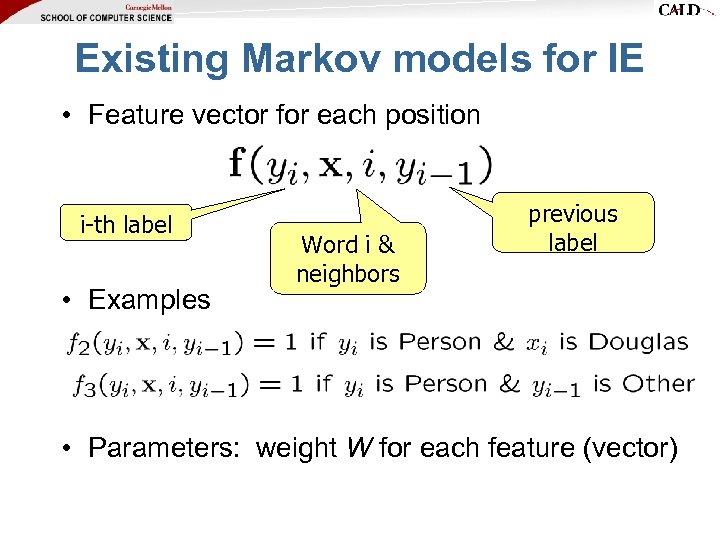

Existing Markov models for IE
• Feature vector for each position (annotated on the slide with: i-th label, word i & neighbors, previous label)
• Examples
• Parameters: weight W for each feature (vector)

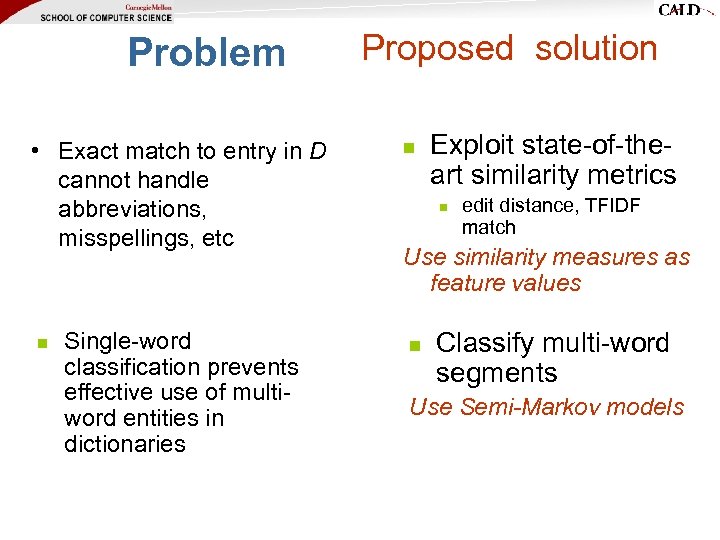

Problem
• Exact match to an entry in D cannot handle abbreviations, misspellings, etc.
• Single-word classification prevents effective use of multi-word entities in dictionaries
Proposed solution
• Exploit state-of-the-art similarity metrics (edit distance, TFIDF match): use similarity measures as feature values
• Classify multi-word segments: use semi-Markov models
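The difference between the exact-match feature and a similarity-valued feature can be sketched as below. The feature names are hypothetical, and `difflib.SequenceMatcher.ratio()` is used only as a convenient stand-in for the edit-distance and TFIDF metrics named on the slide.

```python
from difflib import SequenceMatcher


def dictionary_features(segment, dictionary):
    """Features for one candidate segment, given a list of known names."""
    exact = 1.0 if segment in dictionary else 0.0
    # Similarity to the closest dictionary entry (stand-in metric, assumption).
    best = max((SequenceMatcher(None, segment.lower(), entry.lower()).ratio()
                for entry in dictionary), default=0.0)
    return {"in_dict": exact, "max_sim": best}


known = ["Craig A. Knoblock", "Alon Y. Halevy", "Thomas A. Edison"]
feats = dictionary_features("Craig Knowblock", known)
```

For the misspelled “Craig Knowblock”, `in_dict` is 0 while `max_sim` stays high, so the classifier still receives a signal from the dictionary. Note that the feature is computed over a whole multi-word segment, which is why segment-level classification is needed.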

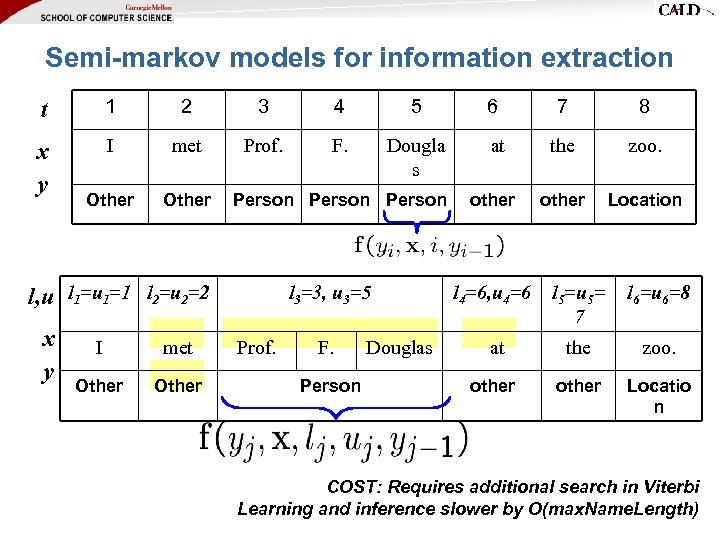

Semi-Markov models for information extraction
Word-level labeling:
  t: 1 2 3 4 5 6 7 8
  x: I met Prof. F. Douglas at the zoo.
  y: Other Other Person Person Person other other Location
Segment-level labeling (l = start of segment, u = end of segment):
  l1=u1=1: “I” (Other)
  l2=u2=2: “met” (Other)
  l3=3, u3=5: “Prof. F. Douglas” (Person)
  l4=6, u4=6: “at” (other)
  l5=u5=7: “the” (other)
  l6=u6=8: “zoo.” (Location)
COST: Requires additional search in Viterbi. Learning and inference are slower by O(maxNameLength).
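The additional search can be sketched as a segment-level Viterbi: for every end position the decoder also tries every segment length up to a maximum, which is where the extra O(maxNameLength) factor comes from. The scoring function and dictionaries below are hypothetical toy stand-ins for a learned weighted feature sum; positions are 0-based with exclusive ends, unlike the 1-based l/u indices on the slide.

```python
PERSON_DICT = {"Prof. F. Douglas"}      # hypothetical dictionaries
LOCATION_DICT = {"zoo."}
LABELS = ["Other", "Person", "Location"]


def segment_score(words, start, end, label, prev_label):
    """Toy score for labeling words[start:end] as one segment."""
    text = " ".join(words[start:end])
    if label == "Person":
        return 3.0 if text in PERSON_DICT else -2.0
    if label == "Location":
        return 2.0 if text in LOCATION_DICT else -2.0
    return 0.5 if end - start == 1 else -2.0    # "Other" covers single words


def semi_markov_viterbi(words, max_len):
    n = len(words)
    # best[i][y]: best score of a segmentation of words[:i] ending in label y
    best = [{y: float("-inf") for y in LABELS} for _ in range(n + 1)]
    back = [{y: None for y in LABELS} for _ in range(n + 1)]
    for end in range(1, n + 1):
        for start in range(max(0, end - max_len), end):   # extra loop vs. Viterbi
            for y in LABELS:
                if start == 0:
                    cands = [(segment_score(words, 0, end, y, None), None)]
                else:
                    cands = [(best[start][p] + segment_score(words, start, end, y, p), p)
                             for p in LABELS]
                score, prev = max(cands, key=lambda c: c[0])
                if score > best[end][y]:
                    best[end][y] = score
                    back[end][y] = (start, prev)
    label = max(LABELS, key=lambda y: best[n][y])
    end, segments = n, []
    while end > 0:                                         # trace segments back
        start, prev = back[end][label]
        segments.append((start, end, label))
        end, label = start, prev
    return segments[::-1]


words = ["I", "met", "Prof.", "F.", "Douglas", "at", "the", "zoo."]
segments = semi_markov_viterbi(words, max_len=3)
```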

Features for Semi-Markov models
Feature vector for each segment Sj (annotated on the slide with: j-th label, start of Sj, end of Sj, previous label)

Internal dictionary: formed from training examples
External dictionary: from some column of an external DB

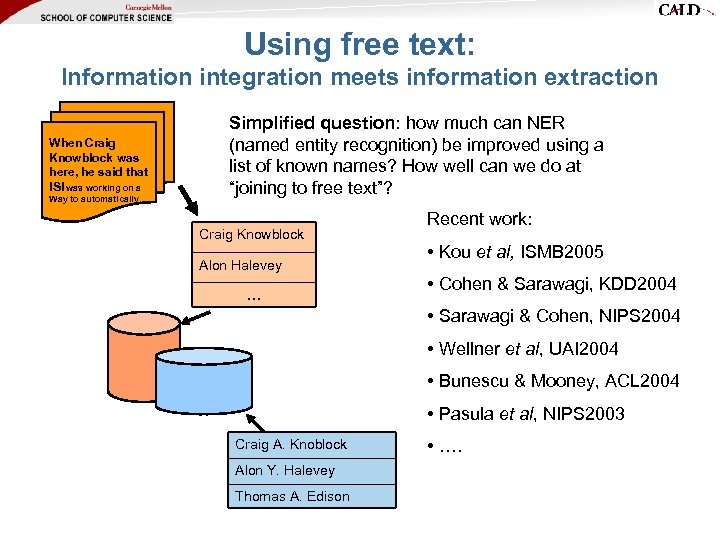

Using free text: Information integration meets information extraction
Text: “When Craig Knowblock was here, he said that ISI was working on a way to automatically…”
Names in the text: Craig Knowblock, Alon Halevy, …
List of known names: Craig A. Knoblock, Alon Y. Halevy, Thomas A. Edison
Simplified question: how much can NER (named entity recognition) be improved using a list of known names?
How well can we do at “joining to free text”?
Recent work:
• Kou et al, ISMB 2005
• Cohen & Sarawagi, KDD 2004
• Sarawagi & Cohen, NIPS 2004
• Wellner et al, UAI 2004
• Bunescu & Mooney, ACL 2004
• Pasula et al, NIPS 2003
• …

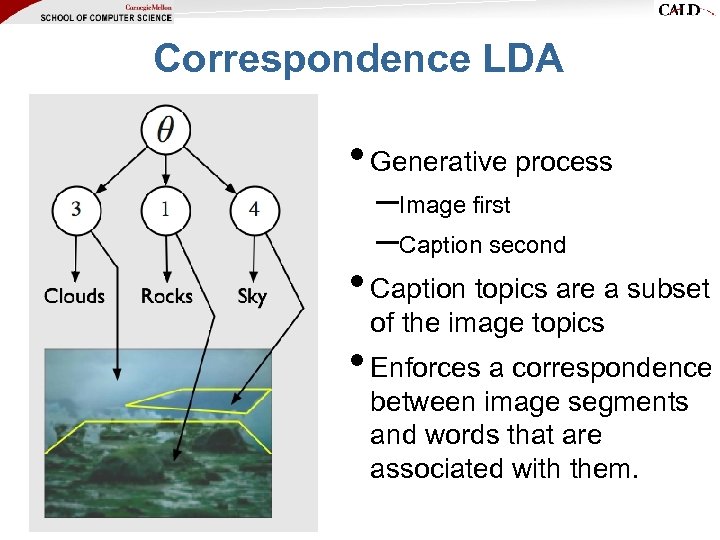

Correspondence LDA
• Generative process
  – Image first
  – Caption second
• Caption topics are a subset of the image topics
• Enforces a correspondence between image segments and words that are associated with them.

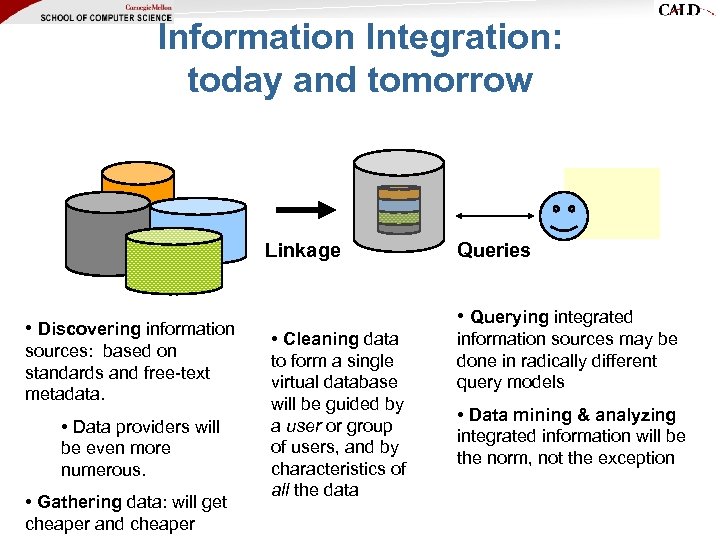

Information Integration: today and tomorrow
• Discovering information sources: based on standards and free-text metadata.
• Data providers will be even more numerous.
• Gathering data: will get cheaper and cheaper
• Cleaning data to form a single virtual database will be guided by a user or group of users, and by characteristics of all the data
• Querying integrated information sources may be done in radically different query models
• Data mining & analyzing integrated information will be the norm, not the exception
Diagram labels: Linkage; Queries